Abstract

Objective: We present a study evaluating the effects of visual and haptic feedback on human performance in a needle insertion task.

Materials and Methods: A one-degree-of-freedom needle insertion simulator with a three-layer tissue model (skin, fat and muscle) was used in perceptual experiments. The task of the 14 subjects was to detect the puncture of each tissue layer using varying haptic and visual cues. Performance was measured by overshoot error, defined as the distance traveled by the virtual needle after puncture.

Results: Without force feedback, real-time visual feedback reduced error by at least 87% in comparison to static image overlay. Force feedback, in comparison to no force feedback, reduced puncture overshoot by at least 52% when visual feedback was absent or limited to static image overlay. A combination of force and visual feedback improved performance, especially for tissues with low stiffness, by at least 43% with visual display of the needle position, and by at least 67% with visual display of layer deflection.

Conclusion: Real-time image overlay significantly enhances controlled puncture during needle insertion. Force feedback may not be necessary except in circumstances where visual feedback is limited.

Introduction

Current minimally invasive medical practice relies heavily on the manual skill of medical personnel. Minimally invasive cardiac bypass surgery, vitreoretinal microsurgery, and brachytherapy are examples of procedures where human accuracy, precision and dexterity are challenged. While visual information is used whenever possible, clinicians traditionally rely on manual haptic (force and tactile) feedback in visually obscured areas. Robotic and imaging technologies have become important aids for enhancing performance of minimally invasive tasks. For example, Fichtinger et al. have identified a need for increased precision in aiming and delivery of needles in nerve block and facet joint injection procedures, and have proposed robot-assisted mechanisms with CT guidance [1]. Chinzei et al. have suggested a surgical assistant robot for use with intraoperative MRI (which offers exceptional soft tissue discrimination) for instrument insertion in procedures such as prostate tumor biopsy [2]. Masamune et al. presented a needle insertion system for use with MRI-guided neurosurgery [3]. There are also general skill-enhancing minimally invasive surgical systems such as the da Vinci™ Surgical System (Intuitive Surgical, Inc., Sunnyvale, CA). However, commercially available teleoperated surgical systems currently do not provide haptic feedback to the operator, which could improve performance.

In this paper we assess the value of visual and force feedback for improving performance of medical tasks, specifically needle insertion. We developed a needle insertion simulator and an experiment to determine what advantages, if any, enhanced feedback provides. In related work, Kontarinis and Howe performed a teleoperation experiment in which subjects used a needle held by a slave manipulator to penetrate a thin membrane, while receiving combinations of visual (manipulator exposed or hidden), filtered force, and vibratory feedback at the master manipulator [4]. They found that combined force and vibration feedback reduced reaction time in puncture tasks compared with a visually guided approach. However, the effect of these feedback combinations on detecting transitions between tissue boundaries was not investigated.

For our study, we developed a simple one-degree-of-freedom needle insertion simulator. More sophisticated simulators have been developed using multi-degree-of-freedom haptic devices and more complex tissue models [5–7]. In some simulators, force models are generated from qualitative or quantitative analysis of needle insertion for particular procedures, such as lumbar puncture. DiMaio and Salcudean [8] applied the finite element method to quantify force distribution and tissue behavior for needle insertion into soft tissue. Other approaches use force data acquired during ex vivo needle insertions into animal models [9] and MR imaging to determine layer anatomy [10]. However, there are currently no validated models for insertion of a needle through multiple layers of tissue, so our experiments use a simplified model and assume that the measured effectiveness of various feedback methods is applicable to real needle insertions.

Our results can be applied to many tasks involving needle insertion and may also be relevant to other minimally invasive procedures. In general, there are three types of visual feedback available to the operator during a medical procedure. First, there may be no “internal” images available, as in procedures such as lumbar puncture. In this case, the physician relies completely on haptic (and sometimes audio) feedback and a priori knowledge of anatomy. Second, there may be high-quality static preoperative images, but no or only low-quality real-time images. In prostate brachytherapy, for example, high-quality CT images are acquired in advance to identify targets for implantation of radioactive seeds, but the visualization provided to the surgeon during the procedure typically consists of low-quality ultrasound images. Third, new systems are in development that allow real-time high-quality imaging during needle insertion procedures, such as robot-assisted therapy delivery under MRI guidance [11]. In any needle insertion procedure performed manually, the physician directly senses insertion forces. Telerobotic systems for minimally invasive surgery also have the potential to provide force feedback to the operator. However, if an autonomous robot or teleoperated needle insertion system does not sense forces applied to tissue, then the operator will not receive any force feedback.

Materials and methods

Needle insertion simulator

For the purpose of this study, we created a simple needle insertion simulator. The position of the simulated needle is controlled by the single vertical degree of freedom (DOF) of the Impulse Engine® haptic interface (Immersion Corp., San Jose, CA). Although this device is not kinematically or dynamically equivalent to a needle, it has lower inertia than many other haptic devices used in needle insertion simulators and is sufficient for the purpose of testing the effects of different feedback modes.

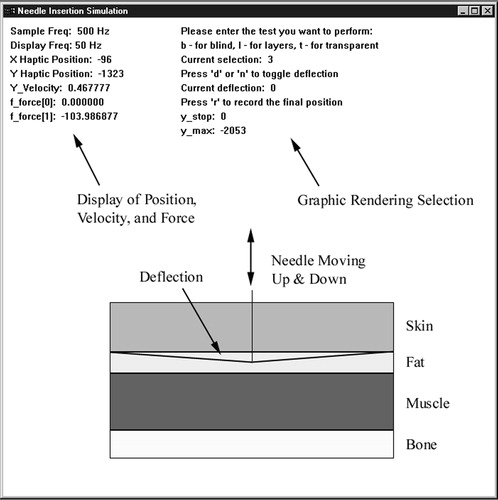

We created an interactive multi-layer tissue model that can be displayed as a static or dynamic image, with or without haptic feedback. A screenshot of the graphical user interface is shown in Figure 1. Four tissue layers (skin, fat, muscle and bone) were simulated with spring-damper models, although only the first three layers were used in the experiment. Force feedback to the user was based on linear stiffness and tissue deformation before puncture, and on damping force during needle translation after puncture. As the needle penetrates deeper, the user feels the total damping force accumulated from the tissue layers above the needle tip. Each tissue was assigned nominal stiffness and damping coefficients, as shown in Table I: the stiffness coefficients reflect differences in tissue density, and the damping coefficients reflect friction between the tissue and the needle shaft. We modeled bone as a solid structure that cannot be punctured, with very high stiffness and no viscosity. We implemented the following force model:

Skin:

Fat:

Muscle:

Bone:

Figure 1. Needle insertion simulator graphical user interface showing layer deflection for visual feedback.

Table I Simulation parameters.
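A minimal sketch of a layered spring-damper force model consistent with the description above: a linear spring on the boundary deflection before puncture, damping accumulated from every punctured layer afterward, and bone that cannot be punctured and has no viscosity. The puncture criterion (a per-layer force threshold), the class and field names, and all numeric values are hypothetical placeholders, not the parameters of Table I.

```python
import math
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    boundary_mm: float       # rest depth of the layer's upper boundary (hypothetical)
    k_n_per_mm: float        # stiffness before puncture (hypothetical)
    b_n_s_per_mm: float      # damping after puncture (hypothetical)
    puncture_force_n: float  # assumed force threshold for puncture (hypothetical)


class LayeredTissueModel:
    """1-DOF needle/tissue model: springs before puncture, accumulated damping after."""

    def __init__(self, layers):
        self.layers = layers
        self.punctured = [False] * len(layers)

    def force(self, x_mm, v_mm_s):
        """Resistive force in N (positive = pushing the needle back out).

        x_mm: needle tip depth (positive into tissue); v_mm_s: needle velocity.
        """
        total = 0.0
        for i, layer in enumerate(self.layers):
            if x_mm <= layer.boundary_mm:
                break  # tip has not reached this layer's boundary
            if not self.punctured[i]:
                spring = layer.k_n_per_mm * (x_mm - layer.boundary_mm)
                if spring < layer.puncture_force_n:
                    total += spring  # boundary deflects; deeper layers unreachable
                    break
                self.punctured[i] = True  # sudden force drop at puncture
            total += layer.b_n_s_per_mm * v_mm_s  # friction along the needle shaft
        return total


# Hypothetical parameters; bone cannot be punctured and has no viscosity.
LAYERS = [
    Layer("skin", 0.0, 0.5, 0.010, 2.0),
    Layer("fat", 5.0, 0.1, 0.005, 0.5),
    Layer("muscle", 15.0, 0.4, 0.020, 2.5),
    Layer("bone", 30.0, 10.0, 0.0, math.inf),
]
```

With these placeholder values, pushing 4 mm into the skin reaches the 2 N threshold, the spring force vanishes, and only the skin's damping term remains, which reproduces the sharp force drop the subjects had to detect.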

The simulation was written in Microsoft Visual C++ 6.0 with the Impulse Engine® Standard Development Kit 4.2 and ran on a Pentium III-based system under Windows NT. The servo loop ran at 500 Hz, and the graphics were updated at 50 Hz. The haptic device has a position resolution of 0.031 mm and a maximum instantaneous force output of 8.9 N. The velocity of the needle was computed with a backward-difference formula.
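A minimal sketch of the servo-rate velocity estimate, assuming the stated 0.031 mm resolution corresponds to one encoder count and that the first-order backward difference v[n] = (x[n] - x[n-1]) / dt is used. The constant, class and function names are hypothetical.

```python
SERVO_RATE_HZ = 500.0        # servo loop rate stated in the text
GRAPHICS_RATE_HZ = 50.0      # graphics update rate stated in the text
MM_PER_COUNT = 0.031         # stated resolution, assumed to be mm per encoder count
DT_S = 1.0 / SERVO_RATE_HZ   # 2 ms servo period

# Redraw the scene once every this many servo ticks (10 for 500 Hz / 50 Hz).
GRAPHICS_DECIMATION = round(SERVO_RATE_HZ / GRAPHICS_RATE_HZ)


def counts_to_mm(counts):
    """Convert encoder counts to millimeters."""
    return counts * MM_PER_COUNT


class BackwardDifferenceVelocity:
    """First-order backward difference: v[n] = (x[n] - x[n-1]) / dt."""

    def __init__(self, dt_s=DT_S):
        self.dt_s = dt_s
        self.prev_mm = None

    def update(self, x_mm):
        """Return the velocity estimate in mm/s for the newest position sample."""
        v = 0.0 if self.prev_mm is None else (x_mm - self.prev_mm) / self.dt_s
        self.prev_mm = x_mm
        return v
```

A single count of motion in one 2 ms period corresponds to 0.031 mm / 0.002 s = 15.5 mm/s, so a raw backward difference is coarsely quantized at slow insertion speeds. Smoothing the estimate over several ticks is a common remedy, although the text does not state whether any filtering was applied.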

Experimental method

Perceptual experiments were conducted with two groups of subjects. Subjects in the first group had moderate prior exposure to haptic interfaces and virtual environments and limited exposure to actual needle insertion. A total of 10 volunteers aged 21–31 (8 male, 2 female) were included. The second group consisted of 4 male volunteers aged 26–40 who had extensive experience with needle insertion through medical training or research, but no experience with haptic devices. At the beginning of the experiment, subjects completed a training simulation to familiarize them with the mechanics of the Impulse Engine®, introduce different insertion scenarios, and allow them to find a comfortable position for joystick operation. By the end of the training period, all users appeared comfortable with the mechanics of the experiment. The experiment was not designed to measure learning in needle insertion. Thus, the results should not be interpreted as a measure of user adaptability, but rather as an evaluation of the immediate impact of different feedback methods.

The experiment consisted of three trials of nine tests each. Within each trial, the tests were presented in random order to minimize the effect of learning on the results. The goal of each test was to detect each transition between layers using haptic cues, visual cues, or both. In each test, the subject inserted the virtual needle into the skin, advanced it from skin into fat and from fat into muscle, and then withdrew it completely from the tissue. Subjects were instructed to stop the needle as soon as they perceived puncture of each layer, so that the experimenter could record the penetration depth relative to the layer boundary.
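A minimal sketch of the randomized presentation order: three trials, each an independent shuffle of tests 1 to 9. Seeding one generator per subject is an assumption made here for reproducibility; the text does not say how the orders were generated.

```python
import random

N_TESTS = 9
N_TRIALS = 3


def make_schedule(seed=None):
    """Return one randomized ordering of tests 1..9 for each of the three trials."""
    rng = random.Random(seed)  # hypothetical: one seed per subject
    tests = list(range(1, N_TESTS + 1))
    return [rng.sample(tests, k=len(tests)) for _ in range(N_TRIALS)]
```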

Each of the nine tests simulated a different needle insertion scenario. The conditions for each test are listed in Table II. “Deflection” refers to graphical deflection of the layer boundary by the needle prior to puncture. Tissue deflection (D) and needle tracking (N) represent real-time image overlay, whereas the rendering of the tissue layers (L) simulates a static image that would be obtained prior to the procedure. In test 8, subjects could see the outermost tissue boundary but none of the internal boundaries, whereas in test 9, the subjects were blindfolded and relied completely on force feedback. Tests 4 to 7 required some anatomical knowledge on the part of the user, since they mimicked situations in which the imaging either is real-time but does not capture the needle in the imaging plane, or is not real-time.

Table II Test conditions.

Results

To evaluate subject performance, we calculated, for each test, the error between the penetration depth and the layer boundary for skin, fat and muscle. We then averaged the error over the three trials for each subject, and averaged the resulting values over all subjects for each layer and test. All values were converted from haptic device encoder counts to millimeters. Finally, the results were grouped as follows:

Tests where force feedback was always present and visual cues differed. This quantifies the effect of visual feedback on manual insertion or teleoperated tasks (with force feedback).

Tests where force feedback was always absent and visual cues differed. This quantifies the effect of visual feedback on teleoperated tasks without force feedback.

Tests where visual feedback was limited and force feedback differed. This quantifies the effect of force feedback on insertion tasks with limited or no visual feedback.
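A minimal sketch of the averaging procedure, assuming overshoot is the penetration depth minus the boundary depth, both in encoder counts, and that the stated 0.031 mm resolution is the mm-per-count factor. Function names are hypothetical.

```python
from statistics import mean

MM_PER_COUNT = 0.031  # stated device resolution, assumed to be mm per encoder count


def overshoot_counts(depth_counts, boundary_counts):
    """Penetration depth beyond the layer boundary where the subject stopped."""
    return depth_counts - boundary_counts


def group_mean_error_mm(errors_counts):
    """errors_counts[s][t]: overshoot of subject s on trial t for one test and layer.

    Averages over trials for each subject, then over subjects, then converts to mm.
    """
    per_subject = [mean(trials) for trials in errors_counts]
    return mean(per_subject) * MM_PER_COUNT
```

Because every subject completes the same three trials, averaging per subject first gives the same group mean as pooling all trials; the per-subject values are still what a subject-level statistical test would use.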

The statistical significance of differences between conditions was evaluated with pairwise t-tests (t and p values are reported). The notation ND, WD, NN, WN (“No Deflection”, “With Deflection”, “No Needle”, and “With Needle,” respectively) is used henceforth to indicate the different visual cues provided by the simulation. The meaning of each visual cue is described in the Experimental method section above.
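The reported degrees of freedom (18 for Group I with 10 subjects, 6 for Group II with 4 subjects) equal 2n - 2, which is what a two-sample Student's t-test with pooled variance gives; a paired test on the same n subjects would have n - 1. A minimal standard-library sketch of that statistic follows, under the assumption that this is the test used. For p-values, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=True, alternative="less")` computes the same statistic with a one-sided p-value.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(a, b):
    """Two-sample Student's t statistic with pooled variance; returns (t, df)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, df
```

A negative t means the first sample (for example, per-subject errors with added feedback) has the smaller mean error.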

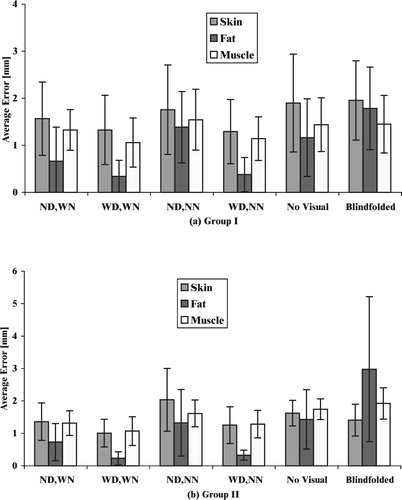

Force feedback with varying visual feedback

Figure 2 shows the results of experiments in which the subjects received force feedback but the visual feedback differed in each test. These correspond to tests 2, 3 and 6–9 in Table II, labeled “ND, WN”, “WD, WN”, “ND, NN”, “WD, NN”, “No Visual”, and “Blindfolded”, respectively, in Figure 2. In tests 2, 3, 6 and 7, the tissue boundary layers were present. The average errors for these tests are shown in Table III. Compared with the case in which no visual feedback was present, subjects in Group I, who had minimal prior needle insertion experience, showed a 43% reduction in error (t18=1.365, p<0.09) for fat layer puncture when only the needle was rendered (case “ND,WN”), and a 67% reduction in error (t18=2.586, p<0.01) when only the deflection was rendered (case “WD,NN”). Group II (subjects with needle insertion experience) showed at least a 45% reduction in error (t6=0.889, p<0.21) when only the needle was rendered, and a 75% reduction in error (t6=1.671, p<0.08) when only the deflection was rendered. Skin and muscle trials did not show a significant reduction in error. This indicates that in scenarios with low force levels (e.g., fat), users benefit greatly from visual feedback based on real-time tracking of tissue boundaries, whereas in high-force interactions (e.g., skin, muscle), force rendering is sufficient. The two groups showed similar performance and error reduction, suggesting that performance with force feedback does not depend strongly on prior needle insertion experience.

Figure 2. Average error in mm when force feedback is present, with different types of visual feedback, for a) Group I, and b) Group II. ND=no deflection, WD=with deflection, NN=no needle, and WN=with needle.

Table III Average overshoot error for skin, fat and muscle puncture for Group I (subjects with limited needle insertion experience) and Group II (subjects with needle insertion experience). The visual and force feedback cues correspond to the tests described in Table II.

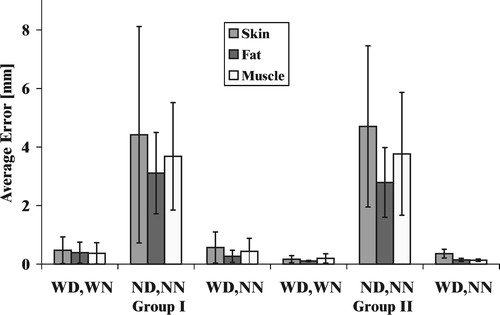

No force feedback with varying visual feedback

Figure 3 shows the effect of visual feedback on the performance of the subjects when force feedback is absent. This corresponds to tests 1, 4 and 5 described in Table II, labeled “WD,WN”, “ND,NN” and “WD,NN”, respectively, in Figure 3. The layer boundaries were rendered in all cases, which is equivalent to overlaying pre-operative image data.

Figure 3. Average error in mm when force feedback is absent, with different types of visual feedback. ND=no deflection, WD=with deflection, NN=no needle, and WN=with needle.

When deflection of the boundary layer and rendering of the needle were both absent (test 4), the subjects performed poorly for all tissue types, as shown by the average errors in Table III. Without force feedback or needle rendering, the subject has to guess the location of the needle from an abstract spatial model of the needle relative to the boundaries (anatomical knowledge). For the fat layer, compared with the condition in which the needle and layer deflection were absent, subjects in Group I showed a 91% reduction in error (t18=5.683, p<0.0001) and Group II a 96% reduction (t6=3.9, p<0.004) when deflection rendering was added (test 5). With both deflection and needle rendering (test 1), Group I showed an 87% reduction in error (t18=6.061, p<0.0001) and Group II a 95% reduction (t6=3.839, p<0.004) relative to the same condition. The skin and muscle layers also showed significant reductions in error whenever any additional visual feedback was present, compared with the condition in which both needle and deflection rendering were absent. Overall, any type of additional visual feedback improved performance by at least 87%. Because deflection rendering alone (test 5) reduced error at least as much as deflection combined with needle rendering (test 1), boundary layer deflection appears to be a sufficient aid, possibly more important than visibility of the needle. This indicates that real-time image overlay improves the accuracy of insertion depth.
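The text does not state the formula behind its percentages. A minimal sketch, assuming the usual relative reduction with respect to a baseline condition (here, test 4) and that an "at least X%" claim is the minimum over layers or groups; the function names and the example values in the usage note are hypothetical.

```python
def percent_reduction(baseline_mm, condition_mm):
    """Relative error reduction of a condition with respect to a baseline, in percent."""
    return 100.0 * (baseline_mm - condition_mm) / baseline_mm


def minimum_reduction(baseline, condition):
    """Smallest reduction across matching keys (layers or groups)."""
    return min(percent_reduction(baseline[k], condition[k]) for k in baseline)
```

For instance, a made-up baseline error of 10.0 mm that drops to 1.3 mm gives an 87% reduction; if another layer dropped only from 20.0 mm to 4.0 mm, the "at least" figure across both would be 80%.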

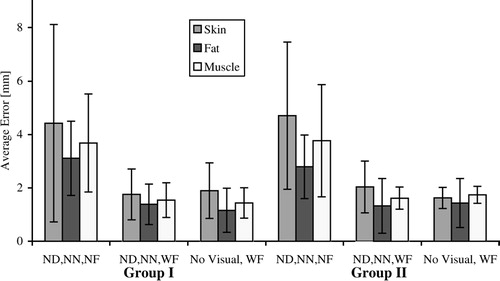

Limited visual feedback with varying force feedback

In Figure 4, visual feedback was limited to tissue boundaries (no deflection and no needle) in each experiment (tests 4, 6 and 8 in Table II), labeled “ND,NN,NF”, “ND,NN,WF”, and “No visual, WF”, respectively. Adding force feedback (test 6) significantly reduced the error in Group I compared with no force feedback (test 4), with t18=2.093 (p<0.025), t18=3.257 (p<0.004), and t18=2.093 (p<0.025) for the skin, fat and muscle layers, respectively. For Group II, force feedback also decreased the error substantially for all layers (t6=1.583, p<0.08; t6=1.613, p<0.07; and t6=1.472, p<0.06 for the skin, fat and muscle layers, respectively). Adding force feedback improved layer transition detection by at least 52% for subjects in both groups. Test 6 did not show a significant improvement in error over test 8; that is, once force feedback was provided, the static display of internal tissue layers had little effect on performance and is not necessary. These results suggest that adding force feedback to telesurgical systems could improve surgical performance in situations where vision is limited or occluded.

Discussion

We created a simple virtual needle insertion simulator with visual and force feedback and performed a set of perceptual experiments to evaluate performance of a needle insertion task. Users experienced different combinations of visual and haptic feedback, mimicking manual or teleoperated scenarios with and without image overlay (real-time or pre-operative). Adding force feedback to systems that obstruct the field of view reduced error in detecting transitions between tissue layers by at least 52%. Adding real-time visual feedback (image overlay) improved user performance by at least 87% in scenarios without force feedback. Where feedback is most needed, such as the transition through the fat layer, combining force and visual feedback improved performance by at least 43% compared with force feedback alone.

In general, the results demonstrate that visual feedback alone is a more effective aid than haptic feedback alone. While a teleoperated procedure (where no force feedback is provided) could benefit from the addition of force feedback, better performance can be obtained from real-time image overlay for manual or teleoperated needle insertions to assist in task visualization. Our experimental results show that graphical display of the deflection of tissue boundaries has a dominant influence on performance. However, the importance of force feedback increases as the quality of visual feedback degrades. On average, tests with force feedback showed larger overshoot, likely because of the slow human reaction to the sudden release of spring force after puncture. When the user pushes against a boundary that is suddenly removed, he or she cannot stop immediately because of both reaction time and arm impedance. Force feedback nevertheless provides an important haptic cue that is used in actual needle insertion procedures to detect layer transitions. To minimize this overshoot effect for a teleoperated robot, control parameters should be selected carefully to provide an appropriate amount of force feedback.
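The reaction-time explanation can be illustrated with a toy point-mass model that was not part of the study: until the reaction time elapses, the hand keeps pushing with the force that was balancing the tissue spring, and afterwards it applies a constant braking force, with a damping term standing in for arm impedance. The function name and every parameter are hypothetical; the motion is integrated with semi-implicit Euler steps until the needle stops.

```python
def simulate_overshoot_mm(push_force_n, mass_kg, reaction_time_s,
                          damping_n_s_per_m, brake_force_n, dt_s=1e-4):
    """Toy estimate of needle travel after a sudden force drop at puncture (mm)."""
    if brake_force_n <= 0:
        raise ValueError("a positive braking force is needed for the needle to stop")
    x_m = v_m_s = t_s = 0.0  # start at the puncture point, initially at rest
    while True:
        if t_s < reaction_time_s:
            f = push_force_n - damping_n_s_per_m * v_m_s   # still pushing
        else:
            f = -brake_force_n - damping_n_s_per_m * v_m_s  # braking
        v_next = v_m_s + (f / mass_kg) * dt_s
        if t_s >= reaction_time_s and v_next <= 0.0:
            break  # needle has come to rest
        v_m_s = v_next
        x_m += v_m_s * dt_s  # semi-implicit Euler: position uses the updated velocity
        t_s += dt_s
    return x_m * 1000.0
```

In this toy model the overshoot is zero when the reaction time is zero and grows with the force that was being applied at puncture, which matches the qualitative argument above; stiffer layers release more force at puncture.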

We note that every clinical needle insertion procedure has different forces within tissue layers and during transitions between tissue layers. Our work applies to needle insertion procedures that include sharp force drops after puncture when transitioning between tissue layers. We selected a very generic set of layer models that approximate (within an order of magnitude) the forces and deflections of many common needle insertion procedures. Although user performance may vary somewhat with tissue parameters, we believe that the impact of various feedback methods will apply generically.

In the case of surgeries with pre-operative planning (e.g., many X-ray-, fluoroscopy-, MR- or CT-guided procedures), the surgical team may only have static images or 3D reconstructions representing the patient. During the procedure, the team must make decisions based on a combination of these static images and training/experience. Because our study shows the importance of real-time visual feedback, we propose that when real-time imaging is of low quality or not possible, an accurate mechanical model could be used to provide simulated visual feedback. Accurate mechanical models [8], [12] could present graphical feedback reflecting the estimated state of the tissues impacted by needle insertion. One application of such visualization would be needle insertion for therapeutic delivery to the prostate (i.e., brachytherapy), where it might be difficult to identify the target exactly in real-time ultrasound images. Having a proper tissue model would enable the image-guided system to help the surgeon identify the “deformed” location of a target. Alterovitz et al. [13] have developed a method for pre-operative planning with tissue deformation determined by a mechanical model, but further work is required to obtain models with sufficient patient-specific accuracy.

References

- Fichtinger G, Masamune K, Patriciu A, Tanacs A, Anderson J H, DeWeese T L, Taylor R H, Stoianovici D. Robotically assisted percutaneous local therapy and biopsy. Proceedings of 10th International Conference on Advanced Robotics, Budapest, Hungary, August, 2001, 133–51

- Chinzei K, Hata N, Jolesz A, Kikinis R. Surgical assist robot for the active navigation in the intraoperative MRI: hardware design issues. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Takamatsu, Japan, October, 2000, 1: 727–32

- Masamune K, Kobayashi E, Masutani Y, Suzuki M, Dohi T, Iseki H, Takakuro K. Development of an MRI-compatible needle insertion manipulator for stereotactic neurosurgery. J Image Guided Surgery 1995; 1(4): 242–8

- Kontarinis D A, Howe R D. Tactile display of vibratory information in teleoperation and virtual environments. Presence 1995; 4: 387–402

- Dang T, Annaswamy T M, Srinivasan M A. Development and evaluation of an epidural injection simulator with force feedback for medical training. Studies in Health Technology and Informatics 1996; 29: 564–79

- Kwon D S, Kyung K U, Kwon S M, Ra J B, Park H W, Kang H S, Zeng J, Cleary K R. Realistic force reflection in a spine biopsy simulator. Proceedings of IEEE International Conference on Robotics and Automation, Seoul, Korea, May, 2001, 1358–63

- Raja J B, Kwon S M, Kim J K, Yi J, Kim K H, Park H W, Kyung K U, Kwon D S, Kang H S, Jiang L, Cleary K R, Zeng J, Mun S K. A visually guided spine biopsy simulator with force feedback. Proceedings of SPIE International Conference on Medical Imaging. 2001, 36–45

- DiMaio S P, Salcudean S E. Needle insertion modeling and simulation. IEEE Trans Robotics Automation 2003; 19(5): 864–75

- Brett P N, Harrison A J, Thomas T A. Schemes for the identification of tissue types and boundaries at the tool point for surgical needles. IEEE Trans Inform Technol Biomed 2000; 4(1): 30–6

- Hiemenz L L. Force models for needle insertion created from measured needle puncture data. Proceedings of Medicine Meets Virtual Reality 2001, J D Westwood, H M Hoffman, G T Mogel, D Stredney. IOS Press, Amsterdam 2001; 180–6

- Susil R C, Krieger A, Derbyshire J A, Tanacs A, Whitcomb L L, Fichtinger G, Atalar E. System for MRI guided prostate interventions: canine study. Radiology 2003; 228(3): 886–94

- Crouch J R, Pizer S M, Chaney E L, Zaider M. Medially based meshing with finite element analysis of prostate deformation. Proceedings of 6th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2003), Montréal, Canada, November, 2003, R E Ellis, T M Peters. Springer, Berlin, 108–15

- Alterovitz R, Goldberg K, Pouliot J, Taschereau R, Hsu I-C. Sensorless planning for medical needle insertion procedures. Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, October, 2003, 3337–43