Abstract

This paper describes a novel image-guided system for precise automatic targeting in minimally invasive keyhole neurosurgery. The system consists of the MARS miniature robot fitted with a mechanical guide for needle, probe or catheter insertion. Intraoperatively, the robot is directly affixed to a head clamp or to the patient's skull. It automatically positions itself with respect to predefined targets in a preoperative CT/MRI image following an anatomical registration with an intraoperative 3D surface scan of the patient's facial features and registration jig. We present the system architecture, surgical protocol, custom hardware (targeting and registration jig), and software modules (preoperative planning, intraoperative execution, 3D surface scan processing, and three-way registration). We also describe a prototype implementation of the system and in vitro registration experiments. Our results indicate a system-wide target registration error of 1.7 mm (standard deviation = 0.7 mm), which is close to the required 1.0–1.5 mm clinical accuracy in many keyhole neurosurgical procedures.

Introduction

Precise targeting of tumors, lesions, and anatomical structures with a probe, needle, catheter or electrode inside the brain based on preoperative CT/MRI images is the standard of care in many keyhole neurosurgical procedures. These procedures include tumor biopsies, treatment of hydrocephalus, aspiration and evacuation of deep-brain hematomas, Ommaya catheter insertion, Deep Brain Stimulation, and minimal access craniotomies, among others. In all cases, misplacement of the surgical instrument may result in non-diagnostic tissue or catheter misplacement, as well as hemorrhage and severe neurological complications. These minimally invasive procedures are difficult to perform without the help of support systems that enhance the accuracy and steadiness of the surgical gestures.

All these procedures have four important common properties: 1) they are minimally invasive surgeries (MIS), performed via a keyhole of 3–30 mm diameter opened on the skull dura; 2) they require precise targeting and mechanical guidance support; 3) the targets and entry points are determined preoperatively in a CT/MRI image; and 4) it is assumed that little or no brain shift occurs due to the MIS approach. All the procedures follow a similar protocol, shown in Table I, which we use to compare existing solutions and present our own.

Table I. Surgical protocol for minimally invasive keyhole neurosurgeries.

Four types of support systems for minimally invasive keyhole neurosurgery are currently in use: 1) stereotactic frames; 2) navigation systems; 3) robotic systems; and 4) interventional imaging systems. Tables II and III summarize the advantages and disadvantages of the existing systems.

Table II. Characteristics of support techniques for minimally invasive keyhole neurosurgery according to the steps in Table I. Steps 1(b) and 2(a) are common to all; + and − indicate a relative advantage/disadvantage.

Table III. Characteristics of support techniques for minimally invasive keyhole neurosurgery (+++ indicates the most advantageous, + the least). Criteria assessed are as follows (from left to right): clinical accuracy, range of applicability, ease of use in the OR, intraoperative adaptability of preoperative plan, bulk (size and weight), patient morbidity, and cost.

Stereotactic frames provide precise positioning with a manually adjustable frame rigidly attached to the patient's skull. The advantages of these frames are that 1) they have been extensively used for a long time and are the current standard of care; 2) they are relatively accurate (within ≤ 2 mm of the target) and provide rigid support and guidance for needle insertion; and 3) they are relatively inexpensive (USD 50K) compared to other systems. Their disadvantages are that 1) they require preoperative implantation of the head screws under local anesthesia; 2) they cause discomfort to the patient before and during surgery; 3) they are bulky, cumbersome, and require manual adjustment during surgery; 4) they require immobilization of the patient's head during surgery; 5) selecting new target points during surgery requires new manual computations for frame coordinates; and 6) they do not provide real-time feedback or validation of the needle position.

Navigation systems show in real time the location of hand-held tools on the preoperative image on which targets have been defined Citation[4–6]. The registration between the preoperative data and the patient is performed via skin markers affixed to the patient's skull before scanning, or by acquiring points on the patient's face with a laser probe or by direct contact. Augmented with a manually positioned tracked passive arm (e.g., Philips EasyTaxis™ or Image-Guided Neurologics Navigus™ Citation[12]), they also provide mechanical guidance for targeting. As nearly all navigation systems use optical tracking, careful camera positioning and maintenance of a direct line of sight between the camera and tracked instruments are required at all times. The main advantages of navigation systems are that 1) they provide continuous, real-time surgical tool location information with respect to the defined target; 2) they allow the selection of new target points during surgery; and 3) introduced in the 1990s, they are quickly gaining wide clinical acceptance. Their disadvantages are 1) cost (≥USD 200K); 2) the requirement for head immobilization; 3) the requirement to maintain the line of sight; 4) the requirement for manual passive arm positioning, which can be time-consuming and error-prone; and 5) the requirement for intraoperative registration, the accuracy of which depends on the positional stability of the skin.

Robotic systems provide frameless stereotaxy with a robotic arm that automatically positions itself with respect to a target defined in the preoperative image Citation[7–11]. They have the potential to address steps 2b, 2c, and 2d in Table I with a single system. Two floor-standing commercial robots are NeuroMate™ (Integrated Surgical Systems, Davis, CA) and PathFinder™ (Armstrong HealthCare Ltd., High Wycombe, UK). Their advantages are that 1) they provide a frameless integrated solution; 2) they allow for intraoperative plan adjustment; and 3) they are rigid and accurate. Their disadvantages are that 1) they are bulky and cumbersome due to their size and weight, thus posing a potential safety risk; 2) they require head immobilization or real-time tracking; 3) they are costly (USD 300–500K); and 4) they are not commonly used.

Interventional imaging systems produce images showing the actual needle/probe position with respect to the brain anatomy and target Citation[1–3]. Several experimental systems also incorporate real-time tracking (e.g., that developed by Stereotaxis, Inc., St. Louis, MO) and robotic positioning devices. The main advantage is that these systems provide real-time, up-to-date images that account for brain shift (a secondary issue in the procedures under consideration) and needle bending. Their main drawbacks are 1) limited availability; 2) cumbersome and time-consuming intraoperative image acquisition; 3) high nominal and operational costs; and 4) the requirement for room shielding with intraoperative MRI.

To date, only a few clinical studies have been performed comparing the clinical accuracy of these systems. These studies compared frameless navigation with frame-based stereotaxy Citation[13], and frameless robotics with frame-based stereotaxy Citation[10], Citation[14], Citation[15]. The desired Target Registration Error (TRE) is 1–2 mm, and this is critically dependent on the registration accuracy.

Motivation and goals

Our motivation for developing a new system for precise targeting in minimally invasive keyhole neurosurgery is two-fold. First, precise targeting based on CT/MRI is a basic surgical task in an increasingly large number of procedures. Moreover, additional procedures, such as tissue and tumor DNA sampling, which cannot be performed using anatomical imaging, are rapidly gaining acceptance. Second, existing support systems do not provide a fully satisfactory solution for all minimally invasive keyhole neurosurgeries. Stereotactic frames entail patient morbidity, require head immobilization and manual adjustment of the frame, and do not allow intraoperative plan changes. Navigators are frameless but require maintenance of the line of sight between the position sensor and the tracked instruments, and time-consuming manual positioning of a mechanical guiding arm. Existing robotic systems can perform limited automatic targeting and mechanical guidance, but are cumbersome, expensive, difficult to use, and require head immobilization. Interventional imaging systems do not incorporate preoperative planning, have limited availability, are time-consuming, and incur high costs.

We are developing a novel image-guided system for precise automatic targeting of structures inside the brain that combines the advantages of the stereotactic frame (accuracy, relatively low cost, and mechanical support) and robotic systems (reduced patient morbidity, automatic positioning, and intraoperative plan adaptation) with small system bulk and optional head immobilization Citation[16], Citation[17]. Our goal is to develop a safe and easy-to-use system based on a miniature robot, with a clinical accuracy of 1–2 mm Citation[23]. The system is based on the same assumptions regarding brain shift and needle bending as with stereotactic frames, navigation systems, and available robotic systems.

Materials and methods

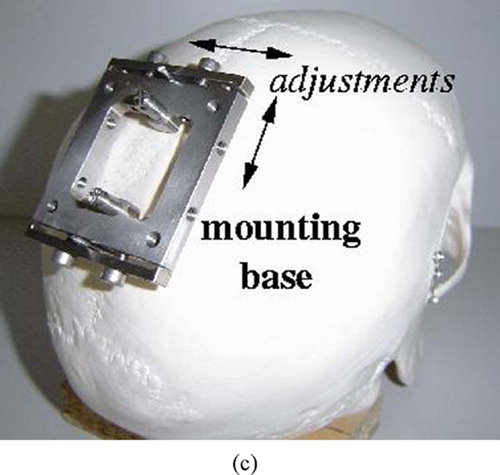

Our system concept is to automatically position a mechanical guide to support keyhole drilling and insertion of a needle, probe or catheter based on predefined entry point and target locations in a preoperative CT/MRI image. The system incorporates the miniature MARS robot Citation[18] mounted on the head immobilization clamp or directly on the patient's skull via pins (Figure 1). (MARS was originally developed by co-author Prof. Shoham for spinal pedicle screw insertion and is currently commercialized for this application in the SpineAssist system by Mazor Surgical Technologies, Caesarea, Israel.)

Figure 1. System concept: (a) The MARS robot mounted on the skull. (b) Skull-mounted pins. (c) The robot mounting base. [colour version available online.]

![Figure 1. System concept: (a) The MARS robot mounted on the skull. (b) Skull-mounted pins. (c) The robot mounting base. [colour version available online.]](/cms/asset/d7bebd34-33d5-486c-b7cd-905b60d1fae2/icsu_a_190854_f0001_b.jpg)

Registration to establish a common reference frame between the preoperative CT/MRI image, the intraoperative location of the patient's head, and the location of the robot is based on intraoperative surface scanning. It is accomplished by acquiring intraoperative 3D surface scans of the patient's upper facial features (eyes and forehead) or ear Citation[20] and a custom registration jig, and matching the scans to their respective preoperative geometric models. Once this registration is performed, the transformation that aligns the planned and actual robot targeting guide locations is computed. The robot is then automatically positioned and locked in place so that its targeting guide axis coincides with the entry point/target axis.

The system hardware consists of 1) the MARS robot and its controller; 2) a custom robot mounting base, targeting guide, and registration jig; 3) an off-the-shelf 3D surface scanner; 4) a standard digital video camera; and 5) a standard PC. The adjustable robot mounting jig attaches the robot base to either the head immobilization frame or to skull-implanted pins. The system software modules are 1) preoperative planning; 2) intraoperative robot positioning; 3) 3D surface scan processing; and 4) three-way registration. We next describe the MARS robot, the surgical work-flow, and the system modules.

The MARS robot

MARS is a miniature parallel structure with six degrees of freedom that can be directly mounted on the patient's bony structure or on a fixed stand near the surgical site. It consists of a fixed platform that attaches to the bone or fixed stand and a moving platform connected in parallel by six independent linear actuators. The moving platform is fitted with a custom targeting guide. The robot dimensions are 5 × 8 × 8 cm, its weight is 250 g, its work volume is approximately 15 cm³, and its positional accuracy is 0.1 mm, which is more than sufficient for clinical applications and better than that of stereotactic frames and commercial tracking systems.

The robot is designed to operate in semi-active mode: it positions and orients the targeting guide to a predefined location, and locks itself there, becoming a rigid structure. It can withstand lateral forces of 10 N Citation[19]. The robot is placed in a fully sterilizable cover and is operated via a single cable from a controller housed in a separate unit.

As mentioned above, MARS is part of SpineAssist, a commercial FDA-approved system for supporting pedicle screw insertion in spinal fusion Citation[21], Citation[22]. In this system, MARS is directly mounted on the vertebra spinous process or bridge into which the pedicle screws will be inserted. Using a few intraoperative fluoroscopic X-rays, the robot location is registered to the preoperative CT images on which the desired pedicle screw locations have been defined. The robot then positions itself so that its targeting guide axis coincides with that of the planned pedicle screw axis. The surgeon then inserts the drill into the guiding sleeve held by the robot and drills the pilot hole. This operation is repeated for each pedicle and vertebra. Clinical validation studies report an accuracy of 1 ± 0.5 mm. A similar application was developed for distal locking in intramedullary nailing Citation[23].

MARS is best viewed as a precise fine-positioning device Citation[18]. Its advantages stem from its small size and design: 1) it can be mounted directly on the patient's bone, and thus requires no tracking or head immobilization; 2) it is intrinsically safe, as its power and motion range are restricted; 3) it is unobtrusive; 4) it is covered by a sterilizable sleeve; and 5) it costs much less (approximately USD 50K) than larger robots. Its disadvantage is that its work volume and range of motion are small, so it requires compensation with a coarse positioning method.

The similarities in technical approach between the orthopaedic procedures for which MARS was developed and the keyhole neurosurgeries considered in this paper are 1) the requirement for precise targeting with mechanical guidance; 2) the small working volume of the surgery; and 3) the need for preoperative image planning. The main differences are 1) the software used for preoperative planning; 2) the registration basis and procedure; 3) the robot fixation and targeting guides; and 4) the need to place the robot in the vicinity of the entry point (coarse positioning).

Surgical protocol

The surgical protocol of the new system is as follows. A preoperative markerless and frameless volume CT/MRI image of the patient is acquired. Next, using the preoperative planning module, the surgeon defines on the image the entry points and target locations, and determines the type of robot mounting (head clamp or skull, depending on clinical criteria) and the desired location of the robot.

Intraoperatively, under general or local anesthesia and following sterile draping of the scalp, guided by a video-based intraoperative module, the surgeon places the robot approximately in its planned location. When the robot is mounted on the head frame, the robot base is attached to an adjustable mechanical arm affixed to the head clamp. When mounted on the skull, two 4-mm pins are screwed into the skull under local anesthesia and the robot mounting base is attached to them. Next, the registration jig is placed on the robot mounting base and a surface scan is acquired that includes both the patient's forehead or ear (frontal or lateral scan, depending on the robot's location) and the registration jig. The registration jig is then replaced by the robot fitted with the targeting guide, and the registration module automatically computes the offset between the actual and desired targeting guide orientations. It then positions and locks the robot so that the actual targeting guide axis coincides with the planned needle insertion trajectory. The surgeon can then manually insert the needle, probe or catheter to the desired depth and have MARS make small translational adjustments (±10 mm) of the needle along the insertion axis. On surgeon demand, the system automatically positions the robot for each of the predefined trajectories. The intraoperative plan can be adjusted during the surgery by deleting and adding new target points. The system automatically computes the trajectories and the corresponding robot positions.

Preoperative planning

The preoperative planning module inputs the CT/MRI image and geometric models of the robot, its work volume, and the targeting guide. It automatically builds from the CT/MRI the skull and forehead/ear surfaces and extracts four landmarks (eyes or ear) for use in coarse registration. The module allows interactive visualization of the CT/MRI slices (axial, cranial and neurosurgical views) and the 3D skull surface, and enables the surgeon to define entry and target points, visualize the resulting needle trajectories, and make spatial distance measurements (Figure 2a).

Figure 2. Preoperative planning module screens. (a) Entry and target point selection. (b) Robot base location and range. [colour version available online.]

![Figure 2. Preoperative planning module screens. (a) Entry and target point selection. (b) Robot base location and range. [colour version available online.]](/cms/asset/95e93487-2ae1-413f-b2ee-57e6392c075a/icsu_a_190854_f0002_b.jpg)

The skull and forehead/ear surface models are constructed in two steps. First, the CT/MRI images are segmented with a low-intensity threshold to separate the skull pixels from the air pixels. The ear tunnel pixels are then identified and filled. Next, the surfaces are reconstructed with an enhanced Marching Cubes surface reconstruction algorithm. The four eye/ear landmarks are computed by identifying the areas of maximum curvature as described in reference Citation[24].

Based on the surgeon-defined entry and target points, and the robot mounting mode (skull or head clamp), the module automatically computes the optimal placement of the robot base and its range. The optimal robot base placement is such that the planned needle trajectory is at the center of the robot's work volume. Other placements are assigned a score based on their distance from this work volume center. The results are graphically shown to the surgeon (Figure 2b), who can then select the actual position that also satisfies clinical criteria, such as not being near the cranial sinuses, temporal muscle, or emissary vein. The output includes the surgical plan (entry and target points), the planned robot base placement, and the face surface mesh and landmarks.

Referring to Figure 3, the goal is to compute the transformation that aligns the planned trajectory, defined by the entry and target points in image coordinates, to the targeting guide axis with the robot in home position, in robot coordinates. The location of the guide along the planned trajectory axis is determined by placing the tip of the robot guide in its home position onto the closest point on the planned trajectory. The location of the robot guide tip in the home position with respect to the robot base origin is known. The transformation is such that the robot base origin and Z axis coincide with the desired robot placement in image coordinates and its outward normal. When the robot is skull-mounted, this is the normal to the skull surface at that point; otherwise, it is simply the upward normal at the point. The X axis of the robot coincides with the projection of the axis from the robot base origin to the entry point onto the robot base plane, which is defined by the robot base origin and the Z axis defined above. The Y axis is perpendicular to the Z and X axes.
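The frame construction described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `robot_base_frame` and its argument names are hypothetical, and the sketch assumes that the Y axis is chosen to complete a right-handed frame (the text only states that it is perpendicular to Z and X).

```python
import math

def sub(a, b):
    return [a[i] - b[i] for i in range(3)]

def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(v):
    n = math.sqrt(dot(v, v))
    if n < 1e-12:
        raise ValueError("zero-length vector")
    return [c / n for c in v]

def robot_base_frame(origin, outward_normal, entry_point):
    """Return (x_axis, y_axis, z_axis) of the robot base frame in image coordinates.

    Hypothetical helper: Z is the outward normal at the planned placement,
    X is the projection of the origin-to-entry direction onto the base plane,
    Y completes a right-handed frame (an assumption of this sketch).
    """
    z_axis = unit(outward_normal)
    to_entry = sub(entry_point, origin)
    along_z = dot(to_entry, z_axis)
    # Remove the component along Z to project onto the robot base plane.
    in_plane = [to_entry[i] - along_z * z_axis[i] for i in range(3)]
    x_axis = unit(in_plane)  # raises if the entry point lies exactly on the normal
    y_axis = cross(z_axis, x_axis)
    return x_axis, y_axis, z_axis
```

For example, with the base origin at the coordinate origin, an outward normal along +Z, and an entry point at (3, 0, 4), the X axis points along +X and the Y axis along +Y.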

The method for computing the optimal robot placement is as follows. First, the transformation that aligns the robot and image coordinate frames is computed by matching three points, one along each of the X, Y, and Z axes at unit distance from the robot origin, with Horn's closed-form solution Citation[25]. Then, the planned trajectory is computed in robot coordinates, and the point on it closest to the robot guide tip is obtained by orthogonal projection of the tip onto the trajectory line. The translational vector of the transformation is obtained directly from this closest point. The rotation matrix of the transformation is obtained from the angle between the planned trajectory axis and the current robot guide axis with Rodrigues' rotation formula.
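The two geometric steps of this computation, finding the point on the planned trajectory closest to the guide tip and building a rotation from the angle between two axes with Rodrigues' rotation formula, can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the function names (`closest_point_on_line`, `rodrigues`, `rotation_between`) are hypothetical, and the handling of parallel and antiparallel axes is a choice made for this sketch.

```python
import math

def sub(a, b):
    return [a[i] - b[i] for i in range(3)]

def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(v):
    n = math.sqrt(dot(v, v))
    if n < 1e-12:
        raise ValueError("zero-length vector")
    return [c / n for c in v]

def closest_point_on_line(entry, target, tip):
    """Orthogonal projection of the guide tip onto the entry-target line."""
    d = unit(sub(target, entry))
    t = dot(sub(tip, entry), d)
    return [entry[i] + t * d[i] for i in range(3)]

def rodrigues(axis, angle):
    """Rotation matrix R = cos(a) I + sin(a) K + (1 - cos(a)) k k^T."""
    k = unit(axis)
    c, s = math.cos(angle), math.sin(angle)
    K = [[0.0, -k[2], k[1]],
         [k[2], 0.0, -k[0]],
         [-k[1], k[0], 0.0]]
    return [[c * (1.0 if i == j else 0.0) + s * K[i][j] + (1.0 - c) * k[i] * k[j]
             for j in range(3)] for i in range(3)]

def rotation_between(a, b):
    """Rotation that takes direction a onto direction b."""
    a, b = unit(a), unit(b)
    axis = cross(a, b)
    s = math.sqrt(dot(axis, axis))
    c = dot(a, b)
    if s < 1e-12:
        if c > 0:
            return rodrigues([1.0, 0.0, 0.0], 0.0)  # already aligned: identity
        # Antiparallel axes: rotate by pi about any axis perpendicular to a.
        helper = [1.0, 0.0, 0.0] if abs(a[0]) < 0.9 else [0.0, 1.0, 0.0]
        return rodrigues(cross(a, helper), math.pi)
    return rodrigues(axis, math.atan2(s, c))

def mat_vec(R, v):
    return [dot(R[i], v) for i in range(3)]
```

In this sketch, the translation would be taken as the offset from the guide tip to the closest point, and the rotation as `rotation_between(guide_axis, trajectory_axis)`.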

As the optimal placement might not be clinically feasible, the module also computes the transformations of alternative robot placements on a uniform 5 × 5 mm grid over the skull (head-mounted) or cube near the skull (frame-mounted) and scores them against the optimal one. The scoring is as follows. For each axis i, consider the current and the maximum robot rotations and translations. The ratio between the current move and the maximum possible move is computed for each rotation and each translation. From these six ratios, a 6-dimensional vector is constructed. Its norm, divided by √6 (each component is normalized), yields a normalized score in which zero represents no robot movement and one represents large robot movements.
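The normalized score can be written out as a short sketch. The function and parameter names are hypothetical, and the sketch assumes that the ratios use the absolute value of each current move; the rotation and translation limits passed in the usage example below are illustrative values, not the robot's actual limits.

```python
import math

def placement_score(rotations, translations, max_rotations, max_translations):
    """Normalized placement score in [0, 1] for one candidate robot placement.

    rotations / translations: current robot moves about / along X, Y, Z.
    max_rotations / max_translations: corresponding maximum possible moves.
    Names and the use of absolute values are assumptions of this sketch.
    """
    current = list(rotations) + list(translations)
    limits = list(max_rotations) + list(max_translations)
    # One ratio per rotation and translation axis: six components in total.
    ratios = [abs(c) / m for c, m in zip(current, limits)]
    norm = math.sqrt(sum(r * r for r in ratios))
    return norm / math.sqrt(6)

# Illustrative limits only: 20 degrees of rotation and 10 mm of translation per axis.
score = placement_score([5.0, 0.0, 0.0], [2.0, 1.0, 0.0],
                        [20.0, 20.0, 20.0], [10.0, 10.0, 10.0])
```

A placement that requires no robot motion scores zero, and a placement that requires the maximum move on every axis scores one, so lower scores indicate placements closer to the optimal one.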

Intraoperative robot positioning

The intraoperative robot positioning module helps the surgeon place the robot base close (within 5 mm) to its planned position for both skull and frame-mounted cases. Given the small robot work volume and the lack of anatomical landmarks on the skull, this coarse positioning is necessary to avoid deviations of 10 mm or more from the planned position. These deviations can severely restrict or invalidate altogether the preoperative plan.

The module shows the surgeon a real-time, augmented reality image consisting of a video image of the actual patient skull and a positioning jig, and, superimposed on it, a virtual image of the same jig indicating the robot base in its desired location (Figure 4). The surgeon can then adjust the position and orientation of the positioning jig until it matches the planned location. The inputs are the preoperative plan, the geometric models of the robot base and the patient's face, the real-time video images, and a face/ear scan.

Figure 4. Intraoperative robot positioning augmented reality images. (a) Starting position. (b) Middle position. (c) Final position. [colour version available online.]

![Figure 4. Intraoperative robot positioning augmented reality images. (a) Starting position. (b) Middle position. (c) Final position. [colour version available online.]](/cms/asset/141b923f-a7e3-4d97-9b5e-80a5e2d02311/icsu_a_190854_f0004_b.jpg)

The goal is to compute the planned robot base position with respect to the video camera image so that the robot base model can be projected on the video image at its desired planned position (Figure 5). The video camera is directly mounted on the 3D surface scanner and is pre-calibrated, so that the transformation between the two coordinate systems is known in advance. A 3D surface scan of the face is acquired and matched to the geometric face model with the same method used for three-way registration described below. This establishes the transformation between the preoperative plan and the scanner. By composing the two transformations, we obtain the transformation between the preoperative plan and the video.
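The composition of the plan-to-scanner and scanner-to-video transformations can be illustrated with 4 × 4 homogeneous matrices. This is a minimal sketch, not the authors' code: the names `T_scanner_from_plan` and `T_video_from_scanner` and the numerical values are hypothetical, and the projection of the 3D model onto the 2D video image (which requires the camera intrinsics) is not shown.

```python
def compose(A, B):
    """Return the 4x4 product A * B, i.e. apply B first, then A."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def apply_transform(T, p):
    """Apply a 4x4 rigid transformation to a 3D point."""
    return [T[r][0] * p[0] + T[r][1] * p[1] + T[r][2] * p[2] + T[r][3]
            for r in range(3)]

# Hypothetical example values: a pure translation from plan to scanner
# coordinates, and a 90-degree rotation about Z plus a shift from scanner
# to video camera coordinates (known in advance from pre-calibration).
T_scanner_from_plan = [[1, 0, 0, 1],
                       [0, 1, 0, 2],
                       [0, 0, 1, 3],
                       [0, 0, 0, 1]]
T_video_from_scanner = [[0, -1, 0, 0],
                        [1, 0, 0, 0],
                        [0, 0, 1, 10],
                        [0, 0, 0, 1]]

# Plan -> video: first plan -> scanner, then scanner -> video.
T_video_from_plan = compose(T_video_from_scanner, T_scanner_from_plan)

planned_base_point = [1, 0, 0]
in_video = apply_transform(T_video_from_plan, planned_base_point)
```

Note the order of multiplication: the transformation applied first appears on the right of the product.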

Surface scan processing

The surface scan processing module automatically extracts three sets of points from the intraoperative 3D surface scan: 1) the forehead (frontal scan) or ear (lateral scan) cloud of points; 2) four eye or ear landmark points; and 3) the registration jig cloud of points (when the jig is present in the scan). The forehead/ear cloud of points is computed by first isolating the corresponding areas and removing outliers. The landmark points are extracted by fitting a triangular mesh and identifying the areas of maximum curvature, as in the CT/MRI images. The jig cloud of points is computed by isolating the remaining points.

Three-way registration

The three-way registration module computes the transformation that establishes a common reference frame between the preoperative plan and the intraoperative locations of the robot mounting base and patient's head. Two transformations are computed to this end:

- preoperative plan to the intraoperative patient face/ear scan data; and

- robot mounting base to scanner.

The inputs are the preoperative forehead/ear surface and landmark points (preoperative planning module) and the intraoperative face/ear cloud of points, the intraoperative landmark points, and the registration jig cloud of points (surface scan processing module).

The first transformation (preoperative plan to scan) is computed by first finding a coarse alignment of the four pairs of preoperative/intraoperative landmark points with Horn's closed-form solution. The transformation is then refined with robust Iterative Closest Point (ICP) registration Citation[26], which is performed between a small (1,000–3,000) subset of the surface scan points and the CT/MRI points on the face/ear surface. Figure 6 shows an example of this registration.

Figure 6. Registration between intraoperative surface scan and preoperative surface model from CT/MRI. (a) Before registration. (b) After registration. [colour version available online.]

![Figure 6. Registration between intraoperative surface scan and preoperative surface model from CT/MRI. (a) Before registration. (b) After registration. [colour version available online.]](/cms/asset/3cc983d4-7629-4320-8277-5ba77c071b63/icsu_a_190854_f0006_b.jpg)

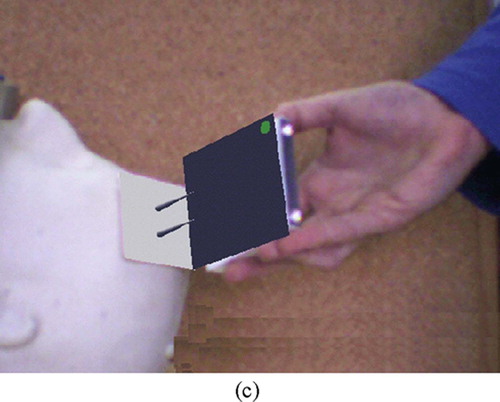

The second transformation (robot mounting base to scanner) is obtained from the custom design of the registration jig. The registration jig is a 75 × 75 × 6 mm aluminum base with a wide-angled tetrahedron of 9 mm height that is placed on the robot mounting base (Figure 7). It is designed so that all four planes can be seen from a wide range of scanning viewpoints, with sufficient area for adequate scan sampling. To facilitate plane identification, all pairwise plane angles are different.

Figure 7. The registration jig. (a) Photograph. (b) A scan showing the four vertex points. [colour version available online.]

![Figure 7. The registration jig. (a) Photograph. (b) A scan showing the four vertex points. [colour version available online.]](/cms/asset/ad81392a-1868-4194-aa52-71d921fa65c6/icsu_a_190854_f0007_b.jpg)

The registration jig model is matched to the surface scanner data as follows. First, a Delaunay triangulation of the registration jig cloud of points is computed. Next, the normals of each mesh triangle vertex are computed and classified into five groups based on their value: four groups correspond to each of the planes of the registration jig, and one to noise. For each group, a plane is then fitted to the points. The four points at the intersection between any three planes are the registration jig vertices. The affine transformation between these four points and the corresponding ones in the model is then computed. Finally, an ICP rigid registration on the plane points is computed to further reduce the error. The actual robot mounting base location with respect to the preoperative plan is determined from this transformation, and from it and the robot characteristics the targeting guide location is found.
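The step that recovers the jig vertices from the fitted planes can be sketched as follows. The sketch assumes each fitted plane is represented by a normal n and an offset d with n · x = d (a representation chosen here for illustration), and solves each three-plane intersection with Cramer's rule. The function names are hypothetical, and the plane fitting, normal classification, and ICP refinement steps are not shown.

```python
from itertools import combinations

def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def intersect_three_planes(planes):
    """Intersection point of three planes given as (normal, offset) pairs,
    where each plane satisfies normal . x = offset."""
    normals = [list(n) for n, _ in planes]
    offsets = [d for _, d in planes]
    det = det3(normals)
    if abs(det) < 1e-12:
        raise ValueError("planes do not meet in a single point")
    point = []
    for col in range(3):
        # Cramer's rule: replace one column of the normal matrix by the offsets.
        m = [row[:] for row in normals]
        for r in range(3):
            m[r][col] = offsets[r]
        point.append(det3(m) / det)
    return point

def jig_vertices(four_planes):
    """The four vertices of the tetrahedron: one per triple of planes."""
    return [intersect_three_planes(trio) for trio in combinations(four_planes, 3)]

# Toy tetrahedron (not the real jig geometry): x = 0, y = 0, z = 0, x + y + z = 1.
toy_planes = [([1.0, 0.0, 0.0], 0.0),
              ([0.0, 1.0, 0.0], 0.0),
              ([0.0, 0.0, 1.0], 0.0),
              ([1.0, 1.0, 1.0], 1.0)]
vertices = jig_vertices(toy_planes)
```

Because the jig is designed so that all pairwise plane angles differ, the recovered vertices can be matched unambiguously to the corresponding vertices of the jig model before the transformation is computed.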

Results

We have implemented a complete prototype of the proposed system and have conducted experiments to validate it and quantify its accuracy. The first set of experiments quantifies the accuracy of the MRI to surface scan registration. The second set of experiments quantifies the in vitro registration error of the entire system.

MRI to surface scan registration

This set of experiments determines the accuracy of the MRI/surface scan registration. In the first experiment, we obtained two sets of clinical MRI and CT data for the same patient and used the face surface CT data to simulate the surface scans. The MRI scans are 256 × 256 × 80 pixels with voxel size of 1.09 × 1.09 × 2.0 mm, from which 300,000 face surface points are extracted. The CT scans are 512 × 512 × 30 pixels with voxel size of 0.68 × 0.68 × 1.0 mm, from which 15,600 points from the forehead were uniformly sampled. The surface registration error, defined as the average RMS distance between the MRI and CT surface data, is 0.98 mm (standard deviation = 0.84 mm), computed in 5.6 seconds.

In the second experiment, we measured the accuracy of the MRI/surface scan registration by acquiring 19 pairs of MRI/3D surface scans of the student authors with different facial expressions – worried or relaxed, eyes open or closed. The MRI scans are 256 × 256 × 200 pixels with voxel size of 0.93 × 0.93 × 0.5 mm, from which 100,000–150,000 face surface points are extracted. The surface scans were obtained with a laser scanner (Konica Minolta Vivid 910 – accuracy of 0.1 mm or better – with a normal lens (f = 14 mm) in the recommended 0.6–1.2 m range). The 35,000–50,000 surface scan points were uniformly downsampled to 1,600–3,000 points. Table IV summarizes the results. The registration RMS error was 1.0 mm (standard deviation = 0.95 mm), computed in 2 seconds. No reduction in error was obtained with data sets of 3,000 points or more. These results show an adequate error and compare very favorably with those obtained by Lueth et al. Citation[20].

Table IV. MRI/laser scan registration error for 19 data-set pairs of two patients. Columns indicate MRI scans and patient attitude (worried or relaxed, eyes closed or open). Rows indicate surface scans, attitude (worried or relaxed, eyes closed in all cases) and the distance between the surface scanner and the patient's face. Each entry shows the mean (standard deviation) surface registration error in millimetres. The overall RMS error is 1 mm (SD = 0.95 mm) computed in 2 seconds.

In vitro system-wide experiments

The second set of experiments aims at testing the in vitro registration accuracy of the entire system. For this purpose, we manufactured the registration jig, a precise stereolithographic phantom replica of the outer head surface of one of the authors (M. Freiman), from an MRI dataset (Figure 8) and a positionable robot mounting base (Figure 9). Both the phantom and the registration jig include fiducials at known locations for contact-based registration. In addition, the phantom includes fiducials inside the skull that simulate targets inside the brain. The phantom is attached to a base with a rail onto which slides a manually adjustable robot mounting base. The goal is to measure the Fiducial and Target Registration Errors (FRE and TRE).

Figure 8. Two views of the phantom model created from MRI data, augmented with registration fiducials and target fiducials inside the skull. The detail shows the geometry of the fiducial. (a) Side view. (b) Top view. [colour version available online.]

![Figure 8. Two views of the phantom model created from MRI data, augmented with registration fiducials and target fiducials inside the skull. The detail shows the geometry of the fiducial. (a) Side view. (b) Top view. [colour version available online.]](/cms/asset/7560def2-c2d4-4f44-9c42-0720f257f403/icsu_a_190854_f0008_b.jpg)

Figure 9. In vitro experimental setup. (a) Front view, with registration jig. (b) Side view with robot. [colour version available online.]

![Figure 9. In vitro experimental setup. (a) Front view, with registration jig. (b) Side view with robot. [colour version available online.]](/cms/asset/4fb82c1b-60dc-490e-816d-2ef8276e284f/icsu_a_190854_f0009_b.jpg)

We used an optical tracking system (Polaris, Northern Digital Inc., Waterloo, Ontario, Canada; 0.3 mm accuracy) as a precise coordinate measuring machine to obtain the ground-truth relative locations of the phantom and registration jig. Their spatial location is determined by touching the phantom and registration jig fiducials with a calibrated tracked pointer. The positional error of the tracked pointer at the tip is estimated at 0.5 mm. The phantom and the registration jig were scanned with a video scanning system (Optigo200, CogniTens, Ramat Hasharon, Israel; 0.03 mm accuracy). The phantom manufacturing error with respect to the MRI model is 0.15 mm, as measured by the Optigo200.

To determine the accuracy of the three-way registration, we compute two registration chains (Figure 10). The first defines the transformation from the robot base to the phantom coordinates computed with the 3D surface scanner, T1, as described in the three-way registration section. The second defines the same transformation, this time computed with the optical tracker, T2. The phantom/tracker and robot/tracker transformations are obtained by matching the phantom and registration jig fiducial locations in the model to those obtained by contact with the optical tracker. The disparity between the transformations T1 and T2 at different points (robot base fiducials, phantom fiducials, targets) determines the different types of error.

Figure 10. Registration chains for the in vitro experiment. Each box corresponds to an independent coordinate system. The location of the phantom targets with respect to the robot base origin is computed once via the surface scanner (phantom/scanner and robot/scanner transformations) using the face and registration jig surfaces, and once via the optical tracker (phantom/tracker and robot/tracker transformations) using the registration jig and the face fiducials. By construction, the phantom and the MRI are in the same coordinate system.
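The comparison of the two chains can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: the 4×4 homogeneous matrices, the function names (`invert_rigid`, `compose_chain`, `chain_disparity`), and the convention that each measured matrix maps a device frame (scanner or tracker) into phantom or robot-base coordinates are all hypothetical. T1 is taken as the scanner chain and T2 as the tracker chain.

```python
import numpy as np

def invert_rigid(T):
    """Invert a 4x4 rigid transformation (rotation plus translation)."""
    R, t = T[:3, :3], T[:3, 3]
    Ti = np.eye(4)
    Ti[:3, :3] = R.T
    Ti[:3, 3] = -R.T @ t
    return Ti

def compose_chain(T_device_to_phantom, T_device_to_robot):
    """Robot-base -> phantom transformation through a shared device frame.

    Assumed convention: both inputs map device coordinates (scanner or
    tracker) into the phantom and robot-base frames, respectively.
    """
    return T_device_to_phantom @ invert_rigid(T_device_to_robot)

def chain_disparity(T1, T2, points_robot):
    """Per-point distance between the images of the same robot-frame
    points under the two chains T1 and T2."""
    P = np.c_[points_robot, np.ones(len(points_robot))]  # homogeneous coords
    diff = (P @ T1.T)[:, :3] - (P @ T2.T)[:, :3]
    return np.linalg.norm(diff, axis=1)
```

Evaluating `chain_disparity` at the robot base fiducials, the phantom fiducials, or the targets would give the corresponding error type; summarizing the returned distances by mean and standard deviation matches the way the errors are reported in the text.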

The first experiment quantifies the accuracy of the three-way registration algorithm at the robot mounting base. First, we measured the accuracy of the registration between the real faces and the CogniTens scans, taken several months apart, as in the earlier experiment. The RMS error is 0.7 mm (SD = 0.25 mm), which shows that registration based on facial features is accurate and stable over time. Second, we measured the accuracy and repeatability of the mechanical interface between the robot base and registration jig mounting with the optical tracker by mounting and removing the registration jig 10 times and measuring the fiducial offset location. The FRE is 0.36 mm (SD = 0.12 mm), which is within the measuring error of the optical tracker. Next, we computed all the transformations in the registration chains, quantified their accuracy, and computed TRE and FRE for points on the registration jig. Table V shows the results of five runs. The TRE is 1.7 mm (SD = 0.7 mm) for three targets on the registration jig.

Table V. In vitro registration results (in mm) of five experiments. The second and third columns are the surface scanner phantom and robot base surface registration errors. The fourth and fifth columns are the fiducial tracker phantom and registration jig registration errors (both FRE – Fiducial Registration Error – and TRE – Target Registration Error) for a target approximately 150 mm from the mounting base. The last column is the error between the target scanner and tracker fiducial locations.
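For readers unfamiliar with how FRE and TRE are obtained from contact-based fiducial matching, the following sketch may help. It is an illustration only: it uses the SVD-based closed-form solution for the least-squares rigid transformation rather than the quaternion formulation, and the function names `rigid_register` and `rms_error` are hypothetical.

```python
import numpy as np

def rigid_register(src, dst):
    """Least-squares rigid transform (R, t) such that dst ~ R @ src + t.

    SVD-based closed-form solution. src and dst are (N, 3) arrays of
    corresponding points, with N >= 3 and the points not collinear.
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)        # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T    # d = -1 corrects a reflection
    t = dst_c - R @ src_c
    return R, t

def rms_error(R, t, src, dst):
    """RMS distance between the transformed src points and dst points."""
    residual = (src @ R.T + t) - dst
    return np.sqrt(np.mean(np.sum(residual ** 2, axis=1)))
```

With this sketch, FRE would be `rms_error` evaluated on the fiducials used to compute `(R, t)`, and TRE would be the same quantity evaluated on target points that were not used in the registration.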

The second experiment quantifies the accuracy of the three-way registration algorithm, this time for several targets inside the skull. In each trial, we performed three-way registration with the registration jig, and for each of the targets labeled A to F the robot was moved so that the needle guide axis coincided with the planned target axis. We inserted the optically tracked needle into the needle guide and recorded the points on its trajectory to the target. We then computed the best-fit, least-squares line equation of these points, the shortest Euclidean distance between the planned and actual entry and target points, and the relative angle between the axes. Table VI shows the results for four runs. The TRE is 1.74 mm (SD = 0.97 mm) at the entry point, 1.57 mm (SD = 1.68 mm) at the target point, and 1.60° (SD = 0.58°) for the axis orientation.

Table VI. In vitro registration results (in mm) of four trial experiments. The first column shows the run number. The second column shows the surface scanner registration error with respect to the phantom. The third column shows the target name inside the brain. The fourth, fifth, and sixth columns show needle errors at the entry point and target, and the trajectory angular error. The bottom row shows the average and standard deviation over all 22 trials and targets.
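The needle trajectory analysis described above can be sketched as follows. This is a hedged illustration, not the authors' code: the function names are hypothetical, and the "shortest Euclidean distance" is interpreted here as the distance from a planned entry or target point to the fitted needle axis, which is an assumption about the measurement.

```python
import numpy as np

def fit_line(points):
    """Least-squares 3D line through points.

    Returns (centroid, unit direction). The direction is the first right
    singular vector of the centered points (sign is arbitrary).
    """
    c = points.mean(axis=0)
    _, _, Vt = np.linalg.svd(points - c)
    return c, Vt[0]

def point_to_line_distance(p, c, d):
    """Shortest Euclidean distance from point p to the line c + s*d, |d| = 1."""
    v = p - c
    return np.linalg.norm(v - (v @ d) * d)

def axis_angle_deg(d1, d2):
    """Angle in degrees between two axes, ignoring the direction sign."""
    cosang = abs(d1 @ d2) / (np.linalg.norm(d1) * np.linalg.norm(d2))
    return np.degrees(np.arccos(np.clip(cosang, 0.0, 1.0)))
```

Given the recorded needle points, `fit_line` yields the actual axis; `point_to_line_distance` applied to the planned entry and target points gives the two positional errors, and `axis_angle_deg` applied to the planned and fitted directions gives the angular error.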

Discussion

Our in vitro experimental results suggest that the proposed concept has the potential to be clinically feasible. They establish the viability of the surface scan concept and show a location error of 1.7 mm for the actual phantom targets with respect to their MRI locations, which is close to the required 1.0–1.5 mm clinical accuracy in many keyhole neurosurgical procedures.

A closer examination of the experimental results helps us identify the sources of error and devise ways to address them. The first observation is that the ground truth is itself a source of error: the tip of the optically tracked pointer has an intrinsic accuracy of 0.5 mm. Replacing it with a more accurate coordinate measuring device will very likely decrease the error by a few tenths of a millimetre. The second observation is that a spatial accuracy of 0.3 mm or less, with several tens of thousands of points for the 3D surface scanner, is sufficient. This is because most of the error comes from the inaccuracy of the MRI data set and the motion of the facial features (RMS error of 0.7 mm). Thus, a less expensive video-based scanner can be used. Finally, since our experiments, the MARS robot has been upgraded and improved by Mazor Surgical Technologies, the manufacturer, and now has an RMS positional accuracy of 0.1 mm irrespective of the robot's orientation.

Conclusion and future work

We have described a system for automatic precise targeting in minimally invasive keyhole neurosurgery that aims to overcome the limitations of existing solutions. The system, which incorporates the miniature parallel robot MARS, will eliminate the morbidity and head immobilization requirements associated with stereotactic frames, eliminate the line-of-sight and tracking requirements of navigation systems, and provide steady and rigid mechanical guidance without the bulk and cost of large robots. The system-wide in vitro experimental results suggest that the desired clinical accuracy could be within reach.

Further work is, of course, necessary and is currently under way. The first issue is to quantify the clinical accuracy of the registration of preoperative MRI to intraoperative 3D surface scan data. We are currently conducting a study on a dozen patients scheduled for neurosurgery for whom preoperative MRI studies are prescribed. Intraoperatively, before the surgery begins, we acquire several frontal and lateral 3D surface scans of the patients' heads. In the laboratory, we register the two data sets and compare them as described in the Results section. We study both frontal and lateral matching, and determine their accuracy, variability, and robustness in the presence of intubation and nasal cannulation. Our preliminary results indicate a mean registration error of 1 mm.

In case the accuracy of the preoperative MRI to intraoperative 3D surface scan registration is not satisfactory, we have devised a fallback plan using skin markers similar to those used for navigation-based neurosurgery. Preoperatively, the skin markers are placed on the patient's forehead and skull, and an MRI dataset is acquired. In the planning step, the fiducials are automatically identified and their locations computed. Intraoperatively, before the surgery starts, a 3D surface scan is acquired in which most or all of the skin fiducials are visible to the scanner, and the fiducial locations are computed (this is the equivalent of touching the skin markers with a tracked pointer). A closed-form registration between the two sets of skin marker locations is then performed. The accuracy of this procedure is expected to be at least as good as that of the optical system used for navigation, since the accuracy of the 3D surface scanner is higher than that of the optical tracking system. Note that a hybrid approach, in which both a subset of surface points and skin markers are used, is also viable. This has the advantage of using all the data available.

Further validation will be undertaken with respect to clinical viability of the intraoperative robot positioning, system performance, assumptions validation, ergonomy, surgical workflow, and other metrics.

Acknowledgements

This research is supported in part by a Magneton grant from the Israel Ministry of Industry and Trade. We thank Arik Degani from Mabat 3D Technologies Ltd. and Dr. Tamir Shalom from CogniTens for their generous assistance in acquiring the 3D surface scans, and Haim Yeffet for manufacturing the experimental setup platform.
