Abstract

Background: The neurologic examination is a challenging component of the physical examination for medical students. In response, primarily based on expert consensus, medical schools have supplemented their curricula with standardized patient (SP) sessions that are focused on the neurologic examination. Hypothesis-driven quantitative data are needed to justify the further use of this resource-intensive educational modality, specifically regarding whether using SPs to teach the neurological examination effects a long-term benefit on the application of neurological examination skills.

Methods: This study is a cross-sectional analysis of prospectively collected data from medical students at Weill Cornell Medical College. The control group (n=129) received the standard curriculum. The intervention group (n=58) received the standard curriculum and an additional SP session focused on the neurologic examination during the second year of medical school. Student performance on the neurologic examination was assessed in the control and intervention groups via an OSCE administered during the fourth year of medical school. A Neurologic Physical Exam (NPE) score of 0.0 to 6.0 was calculated for each student based on a neurologic examination checklist completed by the SPs during the OSCE. Composite NPE scores in the control and intervention groups were compared with the unpaired t-test.

Results: In the fourth year OSCE, composite NPE scores in the intervention group (3.5±1.1) were statistically significantly greater than those in the control group (2.2±1.1) (p<0.0001).

Conclusions: SP sessions are an effective tool for teaching the neurologic examination. We determined that a single, structured SP session conducted as an adjunct to our traditional lectures and small groups is associated with a statistically significant improvement in student performance measured 2 years after the session.

Neurologic conditions account for a large proportion of the global burden of medical illness and are a leading contributor to hospital admissions Citation1. To be effective physicians in any area of clinical practice, medical students must become proficient in the performance of the neurologic examination. Despite its importance, it is a skill that is being lost with the decline in bedside teaching and neurology training at medical schools Citation2 Citation3. Any curricular change to counter this trend requires a significant investment in faculty participation and financial resources. An evidence-based approach to selecting the most effective teaching methods is needed.

The neurologic examination is traditionally taught in small group and lecture format. Standardized patients (SPs) are a modality widely used for teaching and assessing clinical skills Citation4 Citation5. Medical schools have also been implementing SP sessions dedicated specifically to the neurologic examination based on the opinion of authorities, such as the pioneering educator, Howard S. Barrows; the Consortium of Neurology Clerkship Directors; and the Undergraduate Education Subcommittee of the American Academy of Neurology Citation1 Citation6. Seventy-five percent of US neurology clerkship directors also report that their medical schools have a clinical skills laboratory, and 68% would use this laboratory to teach the neurological examination. Additionally, they report that 88% of their medical schools have a third or fourth year OSCE to assess student clinical skills Citation7. Despite the expanding use of this modality in teaching and assessing the neurological examination, the majority of the evidence supporting the use of SP sessions is derived from other areas of medicine Citation8 Citation9 Citation10 Citation11. There exist some limited published data on the short-term effectiveness of an SP session as a tool for enhancing medical student competence with regard to a specific portion of the neurologic examination Citation12. To our knowledge, no published data exist on the long-term effectiveness of an SP session as a tool for enhancing performance on any portion of the neurological examination or on the complete neurological examination.

We hypothesized that the addition of an SP session dedicated to providing structured practice and feedback on the neurologic examination during the second year of a medical school curriculum would be associated with an improvement in the performance of the neurologic examination as measured by a fourth year objective structured clinical examination (OSCE).

Methods

Study design

The Standardized Patient Outcomes Trial (SPOT) in neurology is a cross-sectional analysis of prospectively collected data from the graduating classes of 2008, 2009, and 2010 at Weill Cornell Medical College (WCMC). The neurologic examination was taught to three consecutive classes in small group and lecture format by the neurology faculty at WCMC as a non-graded component of ‘Brain and Mind,’ a second year preclinical course that integrates neuroscience, psychopathology, clinical neurology, and neuroanatomy. Students in the class of 2010 took part in an additional SP session focused solely on the neurologic examination at the WCMC Margaret and Ian Smith Clinical Skills Center during Brain and Mind. No other pertinent changes were made to the curriculum, allowing the classes of 2008 and 2009 to serve as a control group to the class that received the intervention, the class of 2010. The course directors for the Brain and Mind course did not change over the study period, nor was there a change to the pedagogical methods in the course overall.

The SP session was designed to provide structured practice and feedback on the neurologic examination. The students assigned to the intervention were told that this session was part of their formal course curriculum and that they would be assessed by the SP based on a checklist, but that it was a non-graded exercise. Prior to the encounter, they were provided with the checklist that covers the components of a standard neurologic examination as agreed upon by the course leadership. This checklist was peer-reviewed by faculty in the WCMC Department of Neurology and Neuroscience and included the examination of mental status, cranial nerves, motor function, sensation, cerebellar function, reflexes, and gait. All SPs who participated in this session were trained using this checklist by the same neurologist and clinical skills center staff.

During the session, the students assigned to the intervention were presented with an SP and instructed to perform a complete neurologic examination. Once the examination was complete, the students received 10 min of individualized feedback from the SP regarding completion of the checklist items. The students then received 15 min of immediate group feedback from the neurologist who observed the students during the SP encounters.

The control and intervention groups’ ability to perform a neurologic examination was compared by analyzing the results of an OSCE administered during the fourth year of medical school at the Clinical Skills Center. Students in both groups were informed that although this OSCE was part of their formal curriculum, their performance would not be graded since this session was primarily designed for feedback purposes. During the OSCE, students rotated through 10 case stations. At each station, students were allotted 15 min to elicit a focused history and perform a focused physical examination on the SPs.

For each OSCE case, members of the faculty developed a scripted medical history and physical examination for the SP, as well as a checklist that outlines relevant history and physical examination items for the case. The checklist is completed by the SP after each student encounter. Faculty members train the SPs by reviewing the script and checklist of the cases and by role-playing; these training sessions are typically 2–3 h in duration. One of the 10 cases on the OSCE was specifically designed to assess the students’ ability to perform the neurologic examination. The SP at that station presented a scripted history of a transient ischemic attack (hemiparesis and aphasia that resolved prior to being brought to the hospital) that was expected to trigger the performance of a neurologic examination. Following the encounter, the SP scored the student's performance using a 20-question checklist on the physical examination. This scoring methodology has been extensively studied and validated Citation13 Citation14 Citation15 Citation16. There were no meaningful changes in the script or checklist used by the SP to evaluate the intervention and control groups. The same OSCE case script and checklist were used for all three classes, and a consistent, limited pool of SPs was trained by the same faculty member and staff. The SPs were blinded to both the intervention and study.

Based on the OSCE checklist data, a composite Neurologic Physical Exam (NPE) score was developed that took into account the cranial nerve, motor strength, sensory, reflex, coordination, and gait examination. Each of these components is worth 1 point. The checklist contains 10 items that assess the cranial nerve exam, 2 items that assess the reflex exam, and 1 item for each remaining category. These checklist items were weighted to generate the 1 point per component for a total possible 6 points (Table 1). This score was designed prior to evaluation of the data by a neurologist and educators from the Margaret and Ian Smith Clinical Skills Center.

Table 1. Calculation of NPE score
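The weighting described above can be illustrated with a short sketch. It assumes that items within a component are weighted equally, so that a component's contribution is the fraction of its items the student completed; the text states only that items were weighted to give 1 point per component. The function name `npe_score`, the component keys, and the example student are hypothetical and are not taken from the study.

```python
from typing import Dict, List

# Number of checklist items per component, as described in the text.
ITEMS_PER_COMPONENT: Dict[str, int] = {
    "cranial_nerves": 10,
    "reflexes": 2,
    "motor": 1,
    "sensory": 1,
    "coordination": 1,
    "gait": 1,
}


def npe_score(checklist: Dict[str, List[bool]]) -> float:
    """Return a composite score from 0.0 to 6.0.

    Assumption: each component contributes the fraction of its items
    that were completed, so every component is worth at most 1 point.
    """
    score = 0.0
    for component, n_items in ITEMS_PER_COMPONENT.items():
        items = checklist[component]
        if len(items) != n_items:
            raise ValueError(f"{component}: expected {n_items} items, got {len(items)}")
        score += sum(items) / n_items
    return score


# Hypothetical student: half of the cranial nerve items, one of two
# reflex items, motor and coordination done, sensory and gait omitted.
example = {
    "cranial_nerves": [True] * 5 + [False] * 5,
    "reflexes": [True, False],
    "motor": [True],
    "sensory": [False],
    "coordination": [True],
    "gait": [False],
}
print(npe_score(example))
```

Under this assumption, omitting the single gait item costs a full point, whereas omitting one cranial nerve item costs a tenth of a point.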

Participants

Within the class of 2010, 58 students participated continuously in the standard 4-year schedule from matriculation to graduation; in the classes of 2008 and 2009, 129 students followed the standard 4-year schedule. The control and intervention groups were divided into two subgroups for further analysis: a subgroup that had not taken the neurology clerkship at the time of the OSCE and a subgroup that had started or completed the neurology clerkship at the time of the OSCE. Within the class of 2010, 23 students were excluded from the intervention group because they had taken an academic leave of absence and completed the curriculum in 5 years. These students did not receive the intervention because they completed their preclinical years with the class of 2009. Because they completed their final year during the 2009–2010 school year, their performance on the OSCE was scored by the same SPs during the same sessions as the intervention group and, thus, served as an ‘OSCE control’ group.

Statistical analysis

The primary analysis was the comparison of the composite NPE score in the intervention group versus the control group. Secondary analyses included stratified analysis according to timing of the neurology clinical clerkship and analysis of composite NPE scores in the OSCE control group.

The unpaired t-test was used to compare composite NPE scores. Assuming a two-sided alpha of 0.05 and a standard deviation of 1.1 points based on data from the classes of 2008 and 2009, with 129 students in the control group and 63 anticipated in the intervention group, we expected to have 80% power to detect a difference of 0.64 points on the composite NPE score in the primary analysis. Statistical analysis was performed using Excel 2007 (Microsoft Corporation).
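For readers who wish to check the primary comparison from the published summary values, the following is a minimal sketch of an unpaired t statistic computed from group means, standard deviations, and sample sizes. It assumes the pooled-variance (Student's) form of the test; the text specifies only "unpaired t-test". The function name `pooled_t_from_summary` is hypothetical, and the inputs are the rounded values reported in the abstract.

```python
import math
from typing import Tuple


def pooled_t_from_summary(
    mean1: float, sd1: float, n1: int,
    mean2: float, sd2: float, n2: int,
) -> Tuple[float, int]:
    """Equal-variance unpaired t statistic and its degrees of freedom."""
    df = n1 + n2 - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    # Standard error of the difference between the two group means.
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Rounded summary values: intervention (n=58) vs. control (n=129).
t_stat, df = pooled_t_from_summary(3.5, 1.1, 58, 2.2, 1.1, 129)
print(f"t = {t_stat:.2f}, df = {df}")
```

Because the inputs are rounded to one decimal place, the statistic obtained this way is only approximate and need not match the reported value exactly. Converting it to a p-value requires the cumulative distribution function of the t distribution, which is available in standard statistical packages.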

Standard protocol approvals

The study received approval from the Institutional Review Board of WCMC.

Results

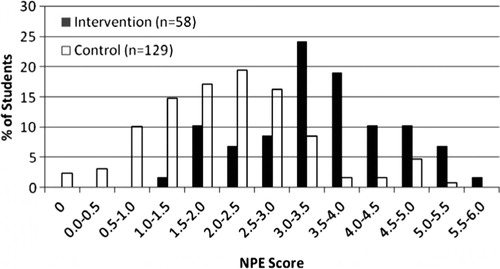

The intervention and control groups have normally distributed NPE scores. The intervention group has a mean score of 3.5 and the control group has a mean score of 2.2. By the unpaired t-test, this constitutes a statistically significant difference (3.5 vs. 2.2, t=7.9, p<0.0001) (Table 2).

Table 2. Mean NPE scores in each group and comparisons via a two-tailed unpaired t-test

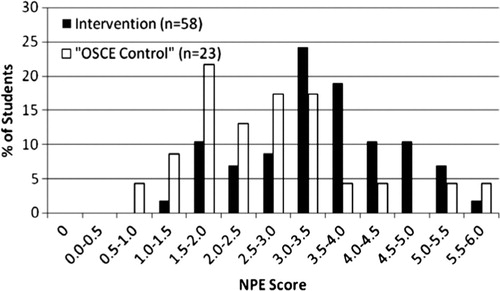

The intervention group's mean score of 3.5 is significantly higher than the OSCE control group's mean score of 2.7 (3.5 vs. 2.7, t=2.9, p=0.004). This difference achieved significance even though the OSCE control and intervention groups were evaluated by the same SPs during the same OSCE sessions. The difference between the OSCE control and control groups was also significant (2.7 vs. 2.2, t=2.2, p=0.03) even though these two groups received the same standard preclinical curriculum, which did not include the intervention.

On subgroup analysis, the students who had not yet taken the neurology clerkship at the time of the OSCE had a lower mean NPE score than the students who had started or completed the clerkship by the time of the OSCE. The difference in mean scores was small. In the control group, the mean NPE score was significantly higher in students who had started or completed the neurology clinical clerkship prior to the OSCE than in those who had not (2.5 vs. 1.9, t=3.0, p=0.004). In the intervention group, the mean NPE score was also higher in students who had started or completed the neurology clinical clerkship prior to the OSCE than in those who had not, but this difference did not achieve statistical significance (3.8 vs. 3.4, t=1.5, p=0.1) (Table 3).

Table 3. Mean NPE scores stratified by clerkship timing

The intervention group outperformed the control group on the neurologic examination in the subgroup that took the OSCE before the clerkship (3.4 vs. 1.9, t=7.2, p<0.0001) as well as in the subgroup that took the OSCE after the clerkship (3.8 vs. 2.5, t=4.5, p<0.0001), demonstrating a difference between the intervention and control groups regardless of neurology clerkship timing relative to the OSCE (Table 3).

Discussion

The students who received the intervention performed significantly better on the neurologic examination than the control group. The SP session may have been effective at improving performance on the neurologic examination because it reinforced knowledge acquired from the small group and lecture sessions during Brain and Mind. Before the encounter, participants reviewed the examination using the peer-reviewed checklist that outlined the components of a standard neurologic examination. Afterward, they received immediate individualized feedback from the SP on completion of checklist items, followed by group feedback from a faculty member based on observation of the SP encounter.

The students in the intervention group also outperformed the OSCE control group. The OSCE control group was a subset of the class of 2010 that did not receive the intervention because they followed a 5-year schedule from matriculation to graduation. The OSCE control group performed significantly worse even though they were scored by the same blinded SPs during the same OSCE sessions. OSCEs have been shown to retain their validity when SPs score students according to a checklist, and this scoring methodology is used by the NBME for credentialing physicians Citation5.

Of interest, the mean NPE score of the OSCE control group was significantly higher than the control group's mean NPE score. The reason for the difference in performance between the two groups is not entirely clear. One possibility is that the OSCE control group differs from other students in that it is a self-selected group of individuals who have elected to take 1 year off from medical school to focus in more depth on research or other academic disciplines.

Besides differences in scoring, another potential confounding variable is the neurology clerkship. At WCMC, medical students are required to take a 4-week clerkship in neurology during their third or fourth year of medical school. After they are taught the neurologic examination as second year students, the neurology clerkship is their main opportunity to practice and receive feedback on their examination performance. Since medical students at WCMC are able to take the neurology clerkship at any time during their third or fourth year, timing of the clerkship relative to the OSCE could potentially influence the result.

Within the subgroup that took the OSCE before the clerkship, a significant difference was detected between the intervention and control groups. In a similar manner, a significant difference was detected between the intervention and control groups within the subgroup that took the OSCE after the clerkship. Hence, the difference in performance between the control and intervention groups cannot be attributed to the clerkship. The actual impact of the clerkship on student performance is relatively small. The subgroup that participated in the OSCE after the clerkship within both the intervention and control groups had slightly higher average scores than the subgroup that participated in the OSCE before the clerkship. This difference achieved statistical significance in the control group but not the intervention group for two possible reasons. The clerkship may have resulted in a greater improvement in the control group because they started from a lower baseline. Alternatively, the difference may be attributed to power since the intervention group has a smaller sample size. In either case, the improvement that results from the neurology clerkship is small compared to the improvement that resulted from the SP session. The benefits of the SP session remain significant after controlling for the effects of the neurology clerkship.

This study is limited by the single-center design. It is not known if the use of the SPs to teach the neurological examination would be effective at other medical schools. A multicenter study would be required to determine whether the results can be generalized. The study is also limited by the relatively small sample size of the intervention group, although there was enough power to detect a significant difference. Another limitation of the study is the varied range of student exposure to neurology at the time of the OSCE. This was partially accounted for in the results by analyzing the effect of the neurology clerkship specifically, but certainly students have variable exposure to patients with neurologic illnesses in other clerkships such as medicine, pediatrics, and primary care. Finally, this study is limited by the use of an OSCE itself to evaluate neurological examination skills. The OSCE is a more controlled setting, and student performance of the neurological examination on a real patient in an actual clinical setting could potentially differ.

In conclusion, students who received the SP neurologic examination session in the second year of medical school demonstrated superior performance of the neurologic examination in a simulated patient care setting during the fourth year of medical school. This difference, which was demonstrated to be statistically significant 2 years after the intervention, cannot be attributed to a difference in clerkship timing between the two groups, and is unlikely to represent a change in grading patterns by the SPs. SP sessions can be effective as a tool for teaching the neurologic examination, an important and challenging component of the physical examination. Additional research is needed to document the impact of SP sessions on neurologic examination skills in an actual clinical setting. Further research is also needed to determine their effectiveness at teaching specialized physical examination techniques within neurology and in other areas of medicine.

Conflict of interest and funding

The authors have not received any funding or benefits from industry or elsewhere to conduct this study.

References

- Gelb DJ, Gunderson CH, Henry KA, Kirshner HS, Jozefowicz RF. The neurology clerkship core curriculum. Neurology. 2002; 58: 849–52.

- Frank SA, Jozefowicz RF. The challenges of residents teaching neurology. The Neurologist. 2004; 10: 216–20.

- Charles PD, Scherokman B, Jozefowicz RF. How much neurology should a medical student learn? A Position Statement of the AAN Undergraduate Education Subcommittee. Acad Med. 1999; 74: 23–26.

- Howley LD, Gliva-McConvey G, Thornton J. Standardized patient practices: initial report on the survey of US and Canadian medical schools. Med Educ Online. 2009; 14: 7. Available from: http://med-ed-online.net/index.php/meo/article/view/4513 [cited 4 November 2010].

- USMLE Step 2CS Examination Guide. (2010). Available from: http://www.usmle.org/Examinations/step2/step2cs_content.html [cited 1 November 2010].

- Barrows HS. An overview of the uses of standardized patients for teaching and evaluating clinical skills. AAMC. Acad Med. 1993; 68: 443–51.

- Consortium of Neurology Clerkship Directors. (2006). Neurology clerkship directors survey. Am Acad Neurol. Available from: http://www.aan.com/go/education/clerkship/consortium [cited 26 October 2010].

- Fletcher KE, Stern DT, White C, Gruppen LD, Oh MS, Cimmino VM. The physical examination of patients with abdominal pain: the long-term effect of adding standardized patients and small-group feedback to a lecture presentation. Teaching Learn Med. 2004; 16: 171–4.

- Hasle JL, Anderson DS, Szerlip HM. Analysis of the costs and benefits of using standardized patients to help teach physical diagnosis. Acad Med. 1994; 69: 567–70.

- Sachdeva AK, Wolfson PJ, Blair PG, Gillum DR, Gracely EJ, Friedman M. Impact of a standardized patient intervention to teach breast and abdominal examination skills to third-year medical students at two institutions. Am J Surg. 1997; 173: 320–5.

- Elman D, Hooks R, Tabak D, Regehr G, Freeman R. The effectiveness of unannounced standardized patients in the clinical setting as a teaching intervention. Med Educ. 2004; 38: 969–73.

- Fox R, Dacre J, McLure C. The impact of formal instruction in clinical examination skills on medical student performance – the example of the peripheral nervous system examination. Med Educ. 2001; 35: 371–3.

- Swartz MH, Colliver JA, Bardes CL, Charon R, Fried ED, Moroff S. Validating the standardized-patient assessment administered to medical students in the New York City consortium. Acad Med. 1997; 72: 619–26.

- MacRae HM, Vu NV, Graham B, Word-Sims M, Colliver JA, Robbs RS. Comparing checklists and databases with physicians’ ratings as measures of students’ history and physical-examination skills. Acad Med. 1995; 70: 313–7.

- De Champlain AF, Margolis MJ, King A, Klass DJ. Standardized patients’ accuracy in recording examinees’ behaviors using checklists. Acad Med. 1997; 72: S85–7.

- Vu NV, Marcy MM, Colliver JA, Verhulst SJ, Travis TA, Barrows HS. Standardized (simulated) patients’ accuracy in recording clinical performance check-list items. Med Educ. 1992; 26: 99–104.