Abstract

Introduction

The purpose of this study was to compare students’ performance in the different clinical skills (CSs) assessed in the objective structured clinical examination.

Methods

Data for this study were obtained from the OSCE scores of final-year medical students in their exit examination (n=185). A retrospective analysis was conducted using SPSS. Mean scores for the six CSs assessed across the 16 stations were computed and compared using repeated measures ANOVA.

Results

Means (±SD) for history taking, physical examination, communication skills, clinical reasoning skills (CRSs), procedural skills (PSs), and professionalism were 6.25±1.29, 6.39±1.36, 6.34±0.98, 5.86±0.99, 6.59±1.08, and 6.28±1.02, respectively. Repeated measures ANOVA with Greenhouse–Geisser correction showed a significant difference among the means of the six CSs assessed [F(2.980, 548.332)=20.253, p<0.001]. Bonferroni-corrected pairwise comparisons revealed significant differences (p<0.05) between the means of eight of the 15 pairs of CSs.

Conclusions

Among the six CSs assessed, students appeared weakest in CRSs and strongest in PSs. Students’ unsatisfactory performance in CRS needs to be addressed, as CRS is one of the core competencies in medical education and a critical skill for medical students to acquire before entering the workplace. Despite its challenges, students must learn the skills of clinical reasoning, and clinical teachers should facilitate the clinical reasoning process and guide students’ clinical reasoning development.

Introduction

In medical education, the use of the objective structured clinical examination (OSCE) to assess clinical competence has become widespread since it was first described by Harden and Gleeson (Citation1).

Although the OSCE assesses clinical skills (CSs), the concept of ‘CS’ is not clearly defined in the literature. CS seems to mean different things to different people, and there is a lack of clarity about what is and what is not a CS (Citation2). Different authors (Citation2–Citation5) include different domains in their definitions of CS. Michels and colleagues (Citation2) include physical examination skills, communication skills, practical skills, treatment skills, and clinical reasoning or diagnostic skills as CSs. By contrast, Junger and colleagues (Citation3) restrict CS to physical examination skills, whereas Kurtz and colleagues (Citation4) treat communication skills as a CS. The Institute for International Medical Education (Citation5) adopts a broader perspective in which history taking, physical examination, practical skills, interpretation of results, and patient management all come under the heading of CSs.

According to Michels and colleagues (Citation2), acquiring a CS involves learning how to perform the skill (procedural knowledge), the rationale for performing it (underlying basic science knowledge), and how to interpret the findings (clinical reasoning). Without these three components, a CS is merely a mechanical performance with limited clinical application. However, clinicians are often unaware of the complex interplay between the different components of the CSs they practise and consequently do not teach all of these aspects to students (Citation2).

One of the core competencies in medical education is clinical reasoning (Citation6), which plays a major role in doctors’ ability to make a diagnosis and reach treatment decisions. According to Rencic (Citation7), clinical reasoning is one of the most critical skills to teach medical students. Case presentation is widely used in clinical teaching settings. Although a key role of clinical teachers is to facilitate and evaluate case presentations and to suggest improvements (Citation8), clinical teachers rarely receive adequate training in how to teach clinical reasoning skills (CRSs) (Citation7). Consequently, learners often receive only vague coaching on the clinical reasoning process (Citation9).

Clinical reasoning has been a topic of research for several decades, yet there is still no clear consensus on what clinical reasoning entails and how it might best be taught and assessed (Citation10). Durning and colleagues (Citation10) suggested that this lack of consensus may stem from contrasting epistemological views of clinical reasoning and the different theoretical frameworks held by medical educators.

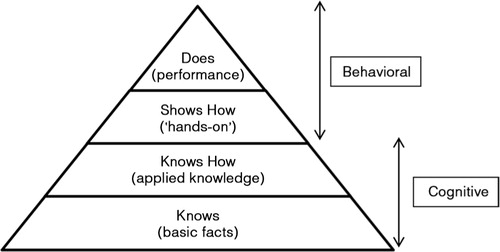

This study adopted Miller’s pyramid of clinical competence (Citation11) as the conceptual basis for the assessment of CSs. The pyramid conceptualises the essential facets of clinical competence and outlines the issues involved in analysing the validity of their assessment. It describes clinical competence at four levels: ‘knows’, ‘knows how’, ‘shows how’, and ‘does’ (Fig. 1). A candidate first ‘knows’ before progressing to ‘knows how’. ‘Knows’ corresponds to factual knowledge, whereas ‘knows how’ corresponds to concept building and understanding. At a higher level, a candidate ‘shows how’, that is, develops the competence to perform. At the highest level, the candidate ‘does’, that is, actually carries out the tasks competently in real-life situations.

Fig. 1. Miller's pyramid of clinical competence.

Adapted from Ref. (Citation11).

Although there are numerous studies on OSCEs, most have focused on their validity, reliability, objectivity, and standard setting (Citation12–Citation16). In this study, we sought to examine and compare students’ performance in the six CSs assessed in the OSCE. Rather than interpreting OSCE stations as a whole, this approach can reveal students’ specific strengths and weaknesses in the different CSs assessed. The research question was: ‘Is there a significant difference in students’ performance among the six CSs assessed in the OSCE?’

In this study, the six CSs were history taking, physical examination, communication skills, CRS, procedural skills (PSs), and professionalism. These six skills were included in the OSCE because the authors’ institutional OSCE and curriculum committee considered them core competencies that candidates for the Bachelor of Medicine and Bachelor of Surgery (MBBS) degree can reasonably be required to attain by graduation in the Malaysian context.

Methods

This is a retrospective study analysing secondary data. The study population comprised 185 final year medical students who took the OSCE in their exit examination.

Institutional setting

The MBBS is a 5-year programme divided into three phases: Phase 1 (1 yr), Phase 2 (1 yr), and Phase 3 (3 yrs). Phase 3 (the clinical years) is further divided into Phase 3A and Phase 3B of 1½ yrs each. In each phase, course assessment consists of continuous assessment and professional examinations. Phase 3B students take the final MBBS Examination, which comprises four components: Component A (end-of-posting tests), Component B (two theory papers), Component C (one long case and three short cases), and Component D (OSCE).

The OSCE

The OSCE comprised 16 work stations and 1 rest station. Each station was allocated 5 min, with a 1-min gap between stations; hence, each OSCE session took approximately 100 min. The examination was run in three parallel circuits of 17 stations each and was conducted over four rounds from morning until late afternoon. Of the 16 work stations, nine were interactive and seven were non-interactive. Table 1 provides a summary of the OSCE stations.
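The session length and daily capacity implied by these figures can be checked with simple arithmetic. The sketch below is illustrative only and assumes (this is not stated in the text) that each of the 17 stations, including the rest station, holds exactly one candidate per rotation; the function names are hypothetical.

```python
# Illustrative check of the OSCE timing and capacity figures quoted above.
# ASSUMPTION (not stated in the source): each station, including the rest
# station, holds exactly one candidate per rotation of a circuit.

STATION_MIN = 5    # minutes allocated per station
GAP_MIN = 1        # changeover gap between stations
N_STATIONS = 17    # 16 work stations + 1 rest station
N_CIRCUITS = 3     # parallel circuits
N_ROUNDS = 4       # rounds run from morning until late afternoon


def rotation_minutes(n_stations: int, station_min: int, gap_min: int) -> int:
    """Minutes for every candidate in a circuit to visit every station."""
    return n_stations * (station_min + gap_min)


def candidate_capacity(n_stations: int, n_circuits: int, n_rounds: int) -> int:
    """Maximum number of candidates that can be examined in one day."""
    return n_stations * n_circuits * n_rounds


if __name__ == "__main__":
    print(rotation_minutes(N_STATIONS, STATION_MIN, GAP_MIN))    # 102
    print(candidate_capacity(N_STATIONS, N_CIRCUITS, N_ROUNDS))  # 204
```

Under this assumption, one rotation takes 102 min, consistent with the approximately 100 min quoted, and three circuits over four rounds provide 204 candidate slots, enough for the 185 candidates.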

Table 1 The OSCE stations

Each station’s score sheet contained a detailed checklist of the items examined (total=10 marks). A global rating was also included for the examiner to record an overall judgement of the candidate’s performance at the station. Interactive stations were scored using both checklists and global ratings. Non-interactive stations, which involved data interpretation, had no examiner present and were scored using checklists only.

Validity and reliability of the OSCE

Various measures were taken to ensure high validity and reliability of the OSCE.

Content validity is determined by how well the test content maps onto the learning objectives of the course (Citation17). The content validity of the OSCE was established by blueprinting, which ensured adequate sampling across subject areas and skills, both in the number of stations covering each skill and in the spread over the course content being tested. For quality assurance, the OSCE stations were vetted at both the department and the faculty level.

Station materials were written well in advance of the examination date. For each station, these comprised clear instructions for candidates, notes for examiners, a list of required equipment, personnel requirements, a scenario for standardised patients, and a marking schedule. The stations were reviewed and field-tested prior to the actual examination.

Consistency of marking among examiners contributes to reliability. Consistent performance of standardised patients ensures each candidate is presented with the same challenge. To ensure consistency and fairness of scores, training of examiners and standardised patients was conducted by the Faculty of Medicine OSCE Team. To further enhance reliability, structured marking schedules allowed for more consistent scoring by examiners according to predetermined criteria on the checklists.

To increase reliability, it is better to have more stations with one examiner per station than fewer stations with two examiners per station (Citation18). As candidates have to perform a number of different tasks across different stations, this wider sampling across different cases and skills results in a more reliable picture of a candidate's overall competence. Furthermore, as the candidates move through all the stations, each is examined by a number of different examiners. Multiple independent observations are collated while individual examiner bias is attenuated.
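The claim that more stations yield a more reliable result can be made concrete with the Spearman–Brown prophecy formula, a standard psychometric result that was not part of this study’s analysis. It predicts the reliability of a test lengthened by a factor k from the reliability of the original test, assuming the added stations behave like the existing ones. The sketch below uses the Cronbach’s alpha of 0.68 reported in the Results purely as an illustrative input; the function names are hypothetical.

```python
import math


def spearman_brown(reliability: float, k: float) -> float:
    """Predicted reliability when test length is multiplied by k.

    Assumes the added stations are parallel to the existing ones
    (same variance and same average inter-station correlation).
    """
    return k * reliability / (1 + (k - 1) * reliability)


def length_factor_for(reliability: float, target: float) -> float:
    """Length multiplier needed to raise `reliability` to `target`."""
    return target * (1 - reliability) / (reliability * (1 - target))


if __name__ == "__main__":
    alpha_16 = 0.68  # illustrative input: alpha for a 16-station OSCE
    # Predicted reliability if the OSCE were doubled to 32 stations.
    print(round(spearman_brown(alpha_16, 2), 3))  # 0.81
    # Number of comparable stations needed to reach a reliability of 0.80.
    print(math.ceil(16 * length_factor_for(alpha_16, 0.80)))  # 31
```

Under these idealised assumptions, doubling to 32 stations would raise the predicted reliability from 0.68 to about 0.81, and about 31 comparable stations would be needed to reach 0.80. In practice, added stations may not behave exactly like the existing ones, and longer OSCEs carry logistical costs.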

In this study, the 16 OSCE stations, drawn from 11 clinical departments, allowed wide sampling of content. Furthermore, most stations assessed multiple skills (Table 1). Both features were intended to enhance the validity and reliability of the examination.

Data collection and data analysis

After approval for the study was obtained from the Faculty of Medicine, University of Malaya, raw scores on each of the 16 stations for all 185 candidates were obtained from the examination section of the Faculty of Medicine. A retrospective analysis was then conducted using IBM SPSS version 22. An alpha level of 0.05 was set for all statistical tests.

Statistical analysis

To check for internal consistency of the OSCE, Cronbach's alpha was computed across the 16 stations for all the candidates (n=185).
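Cronbach’s alpha compares the sum of the per-station score variances with the variance of candidates’ total scores: α = k/(k − 1) × (1 − Σσ²ᵢ/σ²total), where k is the number of stations. The pure-Python sketch below implements that formula for a hypothetical candidates × stations score matrix. It is not the authors’ SPSS procedure, although SPSS’s reliability analysis computes the same coefficient.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a candidates x stations score matrix.

    `scores` holds one row per candidate and one column per station
    (hypothetical layout; in this study k = 16 stations and n = 185).
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least 2 stations and 2 candidates")
    # Sample variance of each station's scores across candidates.
    station_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of candidates' total scores.
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(station_vars) / total_var)


if __name__ == "__main__":
    toy = [[2, 4], [4, 2], [3, 3], [5, 5]]  # 4 candidates x 2 stations
    print(round(cronbach_alpha(toy), 3))  # 0.333
```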

Because each OSCE station assessed one or more skills (Table 1), a mean score for each of the six CSs was computed across the stations that assessed it. For example, PS was assessed at stations S03, S09, S11, S14, S15, and S16, so the mean score for PS was the mean of these six station means: (7.60+7.00+7.69+5.27+5.92+6.08)/6=6.59.
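The aggregation step can be sketched as follows. The PS station means are those quoted above; the per-candidate data layout and the function names are hypothetical illustrations. Because the mean is linear, averaging each candidate’s PS station scores and then averaging across candidates gives the same value as averaging the station means, provided no scores are missing.

```python
from statistics import mean

# Station mean scores (out of 10) for the six PS stations, as quoted above.
PS_STATION_MEANS = {
    "S03": 7.60, "S09": 7.00, "S11": 7.69,
    "S14": 5.27, "S15": 5.92, "S16": 6.08,
}


def skill_mean(station_means: dict[str, float], stations: list[str]) -> float:
    """Unweighted mean of the station means for the stations assessing a skill."""
    return mean(station_means[s] for s in stations)


def candidate_skill_scores(scores: list[dict[str, float]],
                           stations: list[str]) -> list[float]:
    """Per-candidate skill score: mean of that candidate's scores on the skill's stations.

    `scores` is a hypothetical layout with one dict (station -> score) per
    candidate; values like these are what a within-subjects comparison uses.
    """
    return [mean(c[s] for s in stations) for c in scores]


if __name__ == "__main__":
    ps_stations = list(PS_STATION_MEANS)
    print(round(skill_mean(PS_STATION_MEANS, ps_stations), 2))  # 6.59
```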

Because the 185 candidates constituted a single group, the means for the six CSs were compared using repeated measures (within-subjects) ANOVA (Citation19). Repeated measures ANOVA is the counterpart of one-way ANOVA for related rather than independent groups, and is an extension of the paired-samples t-test (Citation19–Citation21). In this study, the independent variable (factor) was CS, with six levels (history taking, physical examination, communication skills, CRS, PS, and professionalism). The dependent variable was the mean score for each CS.
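The partitioning behind the repeated measures F statistic can be sketched in a few lines: the total sum of squares is split into a between-skills component, a between-candidates component (which is removed from the error term), and a residual error component. The sketch assumes complete data in a hypothetical candidates × skills matrix and returns the uncorrected F and degrees of freedom; a p-value would additionally require the F distribution (for example `scipy.stats.f.sf(F, df1, df2)`), which is not used here.

```python
def rm_anova(data: list[list[float]]) -> tuple[float, int, int]:
    """One-way repeated measures ANOVA (no sphericity correction).

    data[i][j] is the score of subject i under condition j
    (here: candidate i, clinical skill j). Returns (F, df1, df2).
    """
    n, k = len(data), len(data[0])
    grand = sum(map(sum, data)) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Residual: variation left after removing skill and candidate effects.
    ss_error = ss_total - ss_cond - ss_subj

    df1, df2 = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df1) / (ss_error / df2)
    return f_stat, df1, df2


if __name__ == "__main__":
    toy = [[1, 2, 3], [2, 2, 5], [3, 5, 4]]  # 3 candidates x 3 skills
    print(rm_anova(toy))  # (3.0, 2, 4)
```

With six skills and 185 candidates, the uncorrected degrees of freedom are 5 and 920.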

Repeated measures ANOVA assumes sphericity, that is, that the variances of the differences between all pairs of levels of the factor are equal. This assumption plays a role analogous to the homogeneity-of-variance assumption in one-way ANOVA, which is checked with Levene’s test; sphericity is checked with Mauchly’s test. As in one-way ANOVA, the test statistic is F. If the F value was significant, post-hoc tests were conducted using pairwise multiple comparisons with Bonferroni correction for type I error (Citation19).
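With six skills there are C(6, 2) = 15 pairwise comparisons, so a Bonferroni correction multiplies each unadjusted p-value by 15 (capping it at 1), which is equivalent to testing each pair at α = 0.05/15 ≈ 0.0033. The sketch below enumerates the pairs, computes a paired-samples t statistic for two skills, and applies the adjustment. The skill labels follow the text; the function names and the p-values in the example are hypothetical, and converting t to a p-value would require the t distribution, which the Python standard library does not provide.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, stdev

SKILLS = ["history taking", "physical examination", "communication",
          "CRS", "PS", "professionalism"]


def paired_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic and df for two skills measured on the same candidates."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    return mean(d) / (stdev(d) / sqrt(n)), n - 1


def bonferroni(p_values: list[float], m: int) -> list[float]:
    """Bonferroni-adjusted p-values: p * m, capped at 1."""
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    pairs = list(combinations(SKILLS, 2))
    print(len(pairs))  # 15
    adjusted = bonferroni([0.001, 0.02, 0.5], m=len(pairs))
    print([round(p, 4) for p in adjusted])  # [0.015, 0.3, 1.0]
```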

In this study, a repeated measures design was used because 1) measuring the same subjects under every condition allows the researcher to exclude the effects of individual differences that could occur between independent groups (Citation22); 2) the sample is not divided between groups, so inferential testing is more powerful; 3) the design is economical when sample members are difficult to recruit, because each member is measured under all conditions (Citation22); and 4) the results can be compared directly, which can be problematic with independent measures (Citation22).

Results

Reliability analysis across the 16 stations yielded a Cronbach’s alpha of 0.68, indicating that the OSCE had moderate reliability.

Mean scores for all six CSs fell below 7 out of a possible 10, indicating that the CSs of these students (n=185) were only at a satisfactory level. Means and standard deviations for history taking, physical examination, communication skills, CRS, PS, and professionalism were 6.25±1.29, 6.39±1.36, 6.34±0.98, 5.86±0.99, 6.59±1.08, and 6.28±1.02, respectively. Among the six CSs assessed, the mean for PS was the highest and the mean for CRS the lowest.

Results of the repeated measures ANOVA are shown in Tables 2–4. Table 2 shows the results of Mauchly’s test of sphericity, Table 3 displays the results of the main ANOVA test, and Table 4 summarises the results of multiple comparisons between each pair of CSs assessed.

Table 2 Mauchly’s test of sphericity

Table 3 Tests of within-subjects effects

Table 4 Multiple comparisons (pairwise) of mean scores for the clinical skills assessed

Mauchly’s test of sphericity was significant [χ²(14)=546.824, p<0.001], indicating that the assumption of sphericity had been violated (Table 2). The degrees of freedom (df) for the test of within-subjects effects therefore had to be adjusted. Because epsilon was below 0.75, the Greenhouse–Geisser correction factor (ε=0.596) was used to compute the new df (Citation23): the uncorrected df of 5 and 920 were each multiplied by ε, giving new df1=2.980 and new df2=548.332 (Table 3).
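The df adjustment is simple arithmetic once epsilon is known. With k = 6 skills and n = 185 candidates, the uncorrected df are k − 1 = 5 and (k − 1)(n − 1) = 920, and Mauchly’s test has k(k − 1)/2 − 1 = 14 df, matching the χ²(14) above. The corrected values reported (2.980 and 548.332) correspond to an unrounded epsilon of about 0.596013. The sketch below also estimates the Greenhouse–Geisser epsilon from a sample covariance matrix of skill scores using the double-centring formula; it is an illustrative implementation with hypothetical function names, not SPSS’s code.

```python
def covariance_matrix(data: list[list[float]]) -> list[list[float]]:
    """Sample (n - 1) covariance matrix of the columns of data (subjects x conditions)."""
    n, k = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(k)]
    return [[sum((row[a] - means[a]) * (row[b] - means[b]) for row in data) / (n - 1)
             for b in range(k)] for a in range(k)]


def gg_epsilon(cov: list[list[float]]) -> float:
    """Greenhouse-Geisser epsilon from a k x k covariance matrix.

    Double-centre the matrix (subtract row and column means, add the grand
    mean), then epsilon = trace^2 / ((k - 1) * sum of squared entries).
    It ranges from 1/(k - 1) (maximal violation) to 1 (sphericity holds).
    """
    k = len(cov)
    row_means = [sum(r) / k for r in cov]
    grand = sum(row_means) / k
    centred = [[cov[i][j] - row_means[i] - row_means[j] + grand
                for j in range(k)] for i in range(k)]
    trace = sum(centred[i][i] for i in range(k))
    sum_sq = sum(v * v for r in centred for v in r)
    return trace ** 2 / ((k - 1) * sum_sq)


def corrected_df(eps: float, k: int, n: int) -> tuple[float, float]:
    """Multiply the repeated measures df (k - 1, (k - 1)(n - 1)) by epsilon."""
    return eps * (k - 1), eps * (k - 1) * (n - 1)


def mauchly_df(k: int) -> int:
    """Degrees of freedom of the chi-square approximation in Mauchly's test."""
    return k * (k - 1) // 2 - 1


if __name__ == "__main__":
    df1, df2 = corrected_df(0.596013, k=6, n=185)
    print(round(df1, 3), round(df2, 3))  # 2.98 548.332
    print(mauchly_df(6))  # 14
```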

Based on the new df, repeated measures ANOVA still showed a significant difference in the mean scores of the six CSs assessed [F(2.980, 548.332)=20.253, p<0.001] (Table 3).

Post-hoc tests were subsequently conducted to determine which pairs of means differed significantly. Pairwise multiple comparisons with Bonferroni correction revealed significant differences (p<0.05) between the means of eight of the 15 pairs of CSs (Table 4).

Discussion

The findings suggest that students were weakest in CRS. CRS (mean=5.86) is probably the most demanding of the six CSs assessed (Citation24–Citation27). Clinical reasoning is a complex process that requires cognition and metacognition, as well as case- and content-specific knowledge, to gather and analyse patient information, evaluate the significance of the findings, and consider alternative actions (Citation24). According to Dennis (Citation25), CRS may in some cases require the student to integrate findings from the enquiry plan (which involves history taking, physical examination, and investigations) into a clinical hypothesis and to consider these findings in the overall context of the patient. In this OSCE, CRS covered the ability to integrate history and physical examination findings into an accurate and comprehensive problem list and a logical list of differential diagnoses (Station S08); to interpret clinical data (Stations S02, S06, and S17); to recognise common emergency situations and demonstrate knowledge of an appropriate response (Station S04); and to plan patient management (Station S07) (Table 1). Qiao and colleagues (Citation26) describe clinical reasoning as a high-level, complex cognitive skill. Because clinical reasoning involves synthesising details of patient information into a concise yet accurate clinical assessment, it is a higher order thinking skill (Citation27). In the revised Bloom’s taxonomy described by Krathwohl (Citation27), synthesis falls under ‘Create’, the highest category of the cognitive process dimension. Given that each station was allocated only 5 min, CRS stations may have placed particularly high cognitive demands on candidates in a high-stakes setting such as an OSCE in the exit examination.

In this study, the low mean score for CRS could be due to candidates’ inadequate knowledge of basic and clinical sciences (BCS), their inability to apply this knowledge appropriately and reflectively in a clinical setting, or both. This explanation assumes that clinical reasoning depends heavily on a relevant knowledge base. In Miller’s pyramid of clinical competence (Fig. 1) (Citation11, Citation16), an OSCE assesses the ‘shows how’ level, which is mainly behavioural (Citation11). To perform at the ‘shows how’ level, students need a strong knowledge base at the cognitive levels of ‘knows’ and ‘knows how’. Knowledge of BCS must therefore be available and must be activated and retrieved before the student can demonstrate the skill. Students need to be able to apply their knowledge of BCS to better understand patients’ problems. Further studies are needed to collect empirical data to verify these assumptions.

A longitudinal study at the authors’ institution revealed that students in the clinical years had difficulty recalling the knowledge of BCS they had learned in the preclinical years (Citation28). Together with the present findings, this suggests that the clinical-year curriculum should provide more opportunities for students to revisit the BCS learned during the preclinical years. Information processing theory (Citation29) suggests that learners need to retrieve and rehearse learned knowledge regularly to retain it in long-term memory. Formal lectures could be an appropriate platform for such rehearsal (Citation30), in addition to existing modes of teaching in which students clerk patients, write case summaries, and present cases.

Meanwhile, CRS is acquired primarily through dealing with many patients over time. Such patient–doctor interaction facilitates the availability and retrieval of conceptual knowledge through repetitive, deliberate practice (Citation31). Exposure to multiple cases is crucial because clinical reasoning is not a generic skill; it is case or content specific (Citation32). At the authors’ institution, students are mainly observers who are neither directly involved in the actual diagnosis of real patients nor explicitly required to practise CRS to fulfil their logbook requirements. How CRS is taught is therefore left to individual clinical teachers. We suggest that during clerkships, ward rounds, and bedside teaching, clinical teachers emphasise the clinical reasoning processes by which a clinician arrives at a particular diagnostic or treatment decision, to help students understand how clinical decisions are made (Citation25). More emphasis should be placed on diagnosis and management than on basic mechanisms to prepare students for the workplace.

In addition, students should be given more hands-on experience of the clinical reasoning process under the guidance and supervision of their clinical teachers. Although Gigante (Citation9) pointed out that deliberate teaching of clinical reasoning may appear overwhelming and at times impossible, he explored how the clinical reasoning process can be taught to students in a stepwise fashion. Fleming and colleagues (Citation33) used the concepts of problem representation, semantic qualifiers, and illness scripts to show how clinical teachers can guide students’ clinical reasoning development. Nonetheless, these authors cautioned that although clinical teachers can maximise students’ clinical exposure and experience, they cannot build illness scripts for them: students must construct their own illness scripts from the real patients they have seen.

Several limitations of this study should be considered. The 5-min duration of each OSCE station may be relatively short compared with station durations at other medical schools. In addition, the data were obtained from a single cohort of final-year medical students at one institution, which may limit the generalisability of the findings. The findings should therefore be interpreted with caution when applied to other institutional settings.

Conclusions

The final year of undergraduate medical education is crucial in transforming medical students into competent and reflective practitioners. Students’ unsatisfactory performance in CRS needs to be addressed as it is a core competency in medical education and a critical skill to be acquired by medical students before entering the workplace as health care practitioners. Despite its challenges, students must learn the skills of clinical reasoning for better patient care. Clinical teachers should facilitate the clinical reasoning process and guide students’ clinical reasoning development. Relying on time and experience to develop these skills is inadequate.

Research on students’ educational needs for learning clinical reasoning during clerkships is limited (Citation34), and this is an area for future studies to explore.

Conflict of interest and funding

The authors report no conflicts of interest.

Acknowledgements

This research was funded by Bantuan Kecil Penyelidikan (BK010/2014), University of Malaya.

Notes

Content related to this manuscript was presented orally by the first author at the International Medical Education Conference, held at the International Medical University, Malaysia, on 13 March 2014.

References

- Harden RM, Gleeson FA. ASME medical educational booklet no 8: assessment of medical competence using an objective structured clinical examination (OSCE). J Med Educ. 1979; 13: 41–54.

- Michels ME, Evans DE, Blok GA. What is a clinical skill? Searching for order in chaos through a modified Delphi process. Med Teach. 2012; 34: e573–81.

- Junger J, Schafer S, Roth C, Schellberg D, Friedman Ben-David M, Nikendei C. Effects of basic clinical skills training on objective structured clinical examination performance. Med Educ. 2005; 39: 1015–20.

- Kurtz SM, Silverman J, Draper J. Teaching and learning communication skills in medicine. 1998; Abingdon: Radcliffe Medical Press.

- IIME. Global minimum essential requirements in medical education. Med Teach. 2002; 24: 130–5.

- Smith CS. A developmental approach to evaluating competence in clinical reasoning. J Vet Med Educ. 2008; 35: 375–81. doi: 10.3131/jvme.35.3.375.

- Rencic J. Twelve tips for teaching expertise in clinical reasoning. Med Teach. 2011; 33: 887–95.

- Onishi H. Role of case presentation for teaching and learning activities. Kaohsiung J Med Sci. 2008; 24: 356–60.

- Gigante J. Teaching clinical reasoning skills to help your learners ‘Get’ the diagnosis. Pediatr Therapeut. 2013; 3: e121. doi: 10.4172/2161-0665.1000e121.

- Durning SJ, Artino AR, Schuwirth L, van der Vleuten C. Clarifying assumptions to enhance our understanding and assessment of clinical reasoning. Acad Med. 2013; 88: 442–8.

- Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990; 65: S63–7.

- Barman A. Critiques on the objective structured clinical examination. Ann Acad Med. 2005; 34: 478–82.

- Gupta P, Dewan P, Singh T. Objective structured clinical examination (OSCE) revisited. Indian Pediatr. 2010; 47: 911–20.

- Pell G, Fuller R, Homer M, Roberts T. How to measure the quality of the OSCE: a review of metrics – AMEE guide no. 49. Med Teach. 2010; 32: 802–11.

- Turner JL, Dankoski ME. Objective structured clinical exams: a critical review. Fam Med. 2008; 40: 574–8.

- Wass V, van der Vleuten C, Shatzer J, Jones R. Assessment of clinical competence. Lancet. 2001; 357: 945–9.

- Downing SM. Validity: on the meaningful interpretation of assessment data. Med Educ. 2003; 37: 830–7.

- van der Vleuten CPM, Swanson DB. Assessment of clinical skills with standardised patients: state of the art. Teach Learn Med. 1990; 2: 58–76.

- Field A. Discovering statistics using SPSS: and sex and drugs and rock ‘n’ roll. 3rd ed. 2009; Los Angeles, CA: Sage.

- Repeated measures ANOVA – Laerd statistics; 2013. Available from: https://statistics.laerd.com/statistical-guides/repeated-measures-anova-statistical-guide.php [cited 28 January 2014].

- Sullivan LM. Repeated measures. Circulation. 2008; 117: 1238–43. doi: 10.1161/CIRCULATION.107.654350.

- Howitt D, Cramer D. Introduction to research methods in psychology. 3rd ed. 2011; Harlow: Pearson Education.

- Girden ER. ANOVA: repeated measures. Sage University paper series on quantitative applications in the social sciences. 1992; Thousand Oaks, CA: Sage.

- Simmons B. Clinical reasoning: concept analysis. J Adv Nursing. 2010; 66: 1151–8.

- Dennis C. Stage 3 handbook (version 1). Sydney Medical School, University of Sydney. 2013. Available from: http://smp.sydney.edu.au/compass/block/list [cited 28 January 2014].

- Qiao YQ, Shen J, Liang X, Ding S, Chen FY, Shao L, et al. Using cognitive theory to facilitate medical education. BMC Med Educ. 2014; 14: 79. doi: 10.1186/1472-6920-14-79.

- Krathwohl DR. A revision of Bloom's taxonomy: an overview. 2002; 212–18. Available from: http://www.unco.edu/cetl/sir/stating_outcome/documents/Krathwohl.pdf [cited 28 November 2014].

- Foong CC, Lee SS, Daniel EGS, Vadivelu J, Tan NH. Graduating medical students' confidence in their professional skills: a longitudinal study. Int Med J (in press).

- Atkinson RC, Shiffrin RM. Human memory: a proposed system and its control processes. In: Spence KW, Spence JT, eds. The psychology of learning and motivation. Vol. 2. 1968; New York: Academic Press. pp. 89–195.

- Jeffries WB. Large group teaching. In: Huggett K, Jeffries WB, eds. An introduction to medical teaching. 2014; New York: Springer. pp. 11–26.

- Norman G. Research in clinical reasoning: past history and current trends. Med Educ. 2005; 39: 418–27.

- Amin Z, Chong YS, Khoo HE. Practical guide to medical student assessment. 2012; Singapore: World Scientific.

- Fleming A, Cutrer W, Reimschisel T, Gigante J. You too can teach clinical reasoning! Pediatrics. 2012; 130: 795–7.

- Wingelaar TT, Wagter JM, Arnold AE. Students’ educational needs for clinical reasoning in first clerkships. Perspect Med Educ. 2012; 1: 56–66.