Abstract

This paper studies the role of sparse regularisation in a properly chosen basis for variational data assimilation (VDA) problems. Specifically, it focuses on data assimilation of noisy and down-sampled observations when the state variable of interest exhibits sparsity in the real or a transform domain. We show that, in the presence of sparsity, $\ell_1$-norm regularisation produces more accurate and stable solutions than the classic VDA methods. We recast the VDA problem under $\ell_1$-norm regularisation as a constrained quadratic programming problem and propose an efficient gradient-based approach, suitable for large-dimensional systems. The proof of concept is examined via assimilation experiments in the wavelet and spectral domains using the linear advection–diffusion equation.

1. Introduction

Environmental prediction models are initial value problems, and their forecast skill depends strongly on the quality of their initialisation. Data assimilation (DA) seeks the best estimate of the initial condition of a (numerical) model, given observations and physical constraints coming from the underlying dynamics (see Daley, 1993; Kalnay, 2003). This important problem is typically addressed by two major classes of methodologies, namely sequential and variational methods (Ide et al., 1997; Law and Stuart, 2012). The sequential methods are typically built on the theory of mathematical filtering and recursive weighted least squares (WLS) (Ghil et al., 1981; Ghil, 1989; Ghil and Malanotte-Rizzoli, 1991; Evensen, 1994a; Anderson, 2001; Moradkhani et al., 2005; Zhou et al., 2006; Van Leeuwen, 2010, among others), while the variational methods are mainly rooted in the theories of constrained mathematical optimisation and batch-mode WLS (e.g. Sasaki, 1970; Lorenc, 1986, 1988; Courtier and Talagrand, 1990; Zupanski, 1993, among others).

Although sequential methods have recently received a great deal of attention, variational methods are still central to operational weather forecasting systems. The classic formulation of variational data assimilation (VDA) typically amounts to defining a (constrained) WLS problem whose optimal solution is the best estimate of the initial condition, the so-called analysis state. The WLS penalty function typically encodes the weighted sum of the costs associated with the distance of the unknown true state to the available observations and to the previous model forecast, the so-called background state. In effect, the penalty function forces the solution to be close enough to both the observations and the background state in the weighted mean squared sense, where the weights are characterised by the observation and background error covariance matrices. The constraints, on the other hand, typically force the analysis to follow the underlying prognostic equations in a weak or strong sense (see Sasaki, 1970; Daley, 1993, p. 369). When the analysis is constrained only to the available observations and the background state at each analysis time separately, the VDA problem is called 3D-Var (e.g. Lorenc, 1986; Parrish and Derber, 1992; Lorenc et al., 2000; Kleist et al., 2009). In contrast, when the analysis is also constrained to the underlying dynamics and the available observations over a window of time, the problem is called 4D-Var (e.g. Zupanski, 1993; Rabier et al., 2000; Rawlins et al., 2007).

Inspired by the theories of smoothing splines and Kriging interpolation in geostatistics, the first signs of using regularisation in VDA trace back to the work of Wahba and Wendelberger (1980) and Lorenc (1986), where the motivation was to impose smoothness over the class of twice-differentiable analysis states. More recently, Johnson et al. (2005b) argued that, in the classic VDA problem, the squared $\ell_2$-norm of the weighted background error resembles Tikhonov regularisation (Tikhonov et al., 1977). Specifically, through the well-known connections between Tikhonov regularisation and spectral filtering via the singular value decomposition (SVD) (e.g. see Hansen, 1998; Golub et al., 1999; Hansen et al., 2006), new insight was provided into the interpretation and stabilising role of the background state in the solution of the classic VDA problem (see Johnson et al., 2005a). Instead of using the $\ell_2$-norm of the background error, Freitag et al. (2010) and Budd et al. (2011) suggested modifying the classic VDA cost function using the sum of the absolute values, or $\ell_1$-norm, of the weighted background error. This choice statistically presumes that the background error is heavy tailed and can be well approximated by the family of Laplace densities (e.g. Tibshirani, 1996; Lewicki and Sejnowski, 2000). For DA of sharp atmospheric fronts, Freitag et al. (2012) kept the classic VDA cost function while further proposing to regularise the analysis state by constraining the $\ell_1$-norm of its first-order derivative coefficients.

In this study, inspired by our previous evidence on the sparsity of rainfall fields (Ebtehaj and Foufoula-Georgiou, 2011; Ebtehaj et al., 2012), we extend previous studies (e.g. Freitag et al., 2012; Ebtehaj and Foufoula-Georgiou, 2013) in regularised variational data assimilation (RVDA) by: (a) proposing a generalised regularisation framework for assimilating low-resolution and noisy observations when the initial state of interest exhibits a sparse representation in an appropriately chosen basis; (b) demonstrating the promise of the methodology in assimilation test problems using advection–diffusion dynamics with different error structures; and (c) proposing an efficient solution method for large-scale DA problems.

The concept of sparsity plays a central role in this paper. By definition, a state of interest is sparse in a pre-selected basis if the number of non-zero elements of its expansion coefficients in that basis (e.g. wavelet coefficients) is significantly smaller than the overall dimension of the state in the observational space. Here, we show that if sparsity in a pre-selected basis holds, this prior information can serve to improve the accuracy and stability of DA problems. To this end, using prototype studies, we select different initial conditions that are sparse under the wavelet transform and the spectral discrete cosine transform (DCT). The promise of $\ell_1$-norm RVDA is demonstrated by assimilating down-sampled and noisy observations in a 4D-Var setting, strongly constraining the solution to the governing advection–diffusion equation. In a broader context, we delineate a roadmap and explain how sparsity may be exploited when the underlying dynamics and observation operator are nonlinear. Particular attention is given to Monte Carlo-driven approaches that can incorporate a sparse prior in the context of ensemble DA.

Section 2 reviews the classic VDA problem. In Section 3, we discuss the concept of sparsity and its relationship with $\ell_1$-norm regularisation in the context of VDA problems. Results of the proposed framework and comparisons with classic methods are presented in Section 4. Section 5 is devoted to conclusions and ideas for future research, mainly focusing on the use of ensemble-based approaches to address sparsity-promoting VDA in nonlinear dynamics. Algorithmic details and derivations are presented in the Appendix.

2. Classic VDA

At the time of model initialisation $t_0$, the goal of DA can be stated as that of obtaining the analysis state as the best estimate of the true initial state, given noisy and low-resolution observations and an erroneous background state, while the analysis needs to be consistent with the underlying model dynamics. The background state in VDA is often taken to be the previous-time forecast provided by the prognostic model. Once the VDA problem is solved, the analysis is used as the initial condition of the underlying model to forecast the next time step, and so on. In the following, we assume that the unknown true state of interest at the initial time $t_0$ is an $m$-element column vector in discrete space denoted by $\mathbf{x}_0^{t} \in \mathbb{R}^{m}$, and that the noisy and low-resolution observations in the time interval $[t_0, t_k]$ are $\mathbf{y}_i \in \mathbb{R}^{n}$, $i = 0, \ldots, k$, where $n \le m$. Suppose that the observations are related to the true states by the following observation model:

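$$\mathbf{y}_i = \mathcal{H}(\mathbf{x}_i^{t}) + \mathbf{v}_i, \quad i = 0, \ldots, k, \tag{1}$$

where $\mathbf{x}_i^{t}$ is the true state at time $t_i$, $\mathcal{H}: \mathbb{R}^{m} \rightarrow \mathbb{R}^{n}$ denotes the nonlinear observation operator that maps the state space into the observation space, and $\mathbf{v}_i \sim \mathcal{N}(\mathbf{0}, \mathbf{R}_i)$ is the Gaussian observation error with zero mean and covariance $\mathbf{R}_i$.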

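Taking into account the sequence of available observations $\mathbf{y}_i$, $i = 0, \ldots, k$, and denoting the background state and its error covariance by $\mathbf{x}_b \in \mathbb{R}^{m}$ and $\mathbf{B} \in \mathbb{R}^{m \times m}$, the 4D-Var problem amounts to obtaining the analysis $\mathbf{x}_0^{a}$ at the initial time as the minimiser of the following cost function:

$$\mathcal{J}(\mathbf{x}_0) = \frac{1}{2}\left\|\mathbf{x}_0 - \mathbf{x}_b\right\|_{\mathbf{B}^{-1}}^{2} + \frac{1}{2}\sum_{i=0}^{k}\left\|\mathbf{y}_i - \mathcal{H}(\mathbf{x}_i)\right\|_{\mathbf{R}_i^{-1}}^{2}, \tag{2}$$

while the solution is constrained to the underlying model equation,

$$\mathbf{x}_i = \mathcal{M}_{0,i}(\mathbf{x}_0), \quad i = 0, \ldots, k. \tag{3}$$

Here, $\|\mathbf{x}\|_{\mathbf{A}}^{2} = \mathbf{x}^{T}\mathbf{A}\mathbf{x}$ denotes the quadratic norm, where $\mathbf{A}$ is a positive definite matrix, and the function $\mathcal{M}_{0,i}: \mathbb{R}^{m} \rightarrow \mathbb{R}^{m}$ is a nonlinear model operator that evolves the initial state in time from $t_0$ to $t_i$.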

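Let us define $\mathbf{M}_{0,i}$ to be the Jacobian of $\mathcal{M}_{0,i}$ and restrict our consideration to a linear observation operator, that is $\mathcal{H}(\mathbf{x}) = \mathbf{H}\mathbf{x}$; the 4D-Var cost function then reduces to

$$\mathcal{J}(\mathbf{x}_0) = \frac{1}{2}\left\|\mathbf{x}_0 - \mathbf{x}_b\right\|_{\mathbf{B}^{-1}}^{2} + \frac{1}{2}\sum_{i=0}^{k}\left\|\mathbf{y}_i - \mathbf{H}\mathbf{M}_{0,i}\mathbf{x}_0\right\|_{\mathbf{R}_i^{-1}}^{2}. \tag{4}$$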

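By defining the stacked quantities $\underline{\mathbf{y}}$, $\underline{\mathbf{H}}$ and $\underline{\mathbf{R}}$, where $\underline{\mathbf{y}} = [\mathbf{y}_0^{T}, \ldots, \mathbf{y}_k^{T}]^{T}$, $\underline{\mathbf{H}} = [(\mathbf{H}\mathbf{M}_{0,0})^{T}, \ldots, (\mathbf{H}\mathbf{M}_{0,k})^{T}]^{T}$, and $\underline{\mathbf{R}} = \mathrm{diag}(\mathbf{R}_0, \ldots, \mathbf{R}_k)$, the 4D-Var problem (4) further reduces to minimisation of the following cost function:

$$\mathcal{J}(\mathbf{x}_0) = \frac{1}{2}\left\|\mathbf{x}_0 - \mathbf{x}_b\right\|_{\mathbf{B}^{-1}}^{2} + \frac{1}{2}\left\|\underline{\mathbf{y}} - \underline{\mathbf{H}}\mathbf{x}_0\right\|_{\underline{\mathbf{R}}^{-1}}^{2}. \tag{5}$$

Clearly, eq. (5) is a smooth quadratic function of the initial state of interest $\mathbf{x}_0$. Therefore, setting its gradient to zero yields the following analytic minimiser as the analysis state:

$$\mathbf{x}_0^{a} = \left(\mathbf{B}^{-1} + \underline{\mathbf{H}}^{T}\underline{\mathbf{R}}^{-1}\underline{\mathbf{H}}\right)^{-1}\left(\mathbf{B}^{-1}\mathbf{x}_b + \underline{\mathbf{H}}^{T}\underline{\mathbf{R}}^{-1}\underline{\mathbf{y}}\right). \tag{6}$$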

Throughout this study, we used the Matlab built-in function pcg.m (preconditioned conjugate gradients), described by Bai et al. (1987), to obtain classic solutions of the linear 4D-Var in eq. (6).
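To illustrate this step without Matlab, the snippet below solves the normal equations of eq. (6) with a plain, unpreconditioned conjugate gradient loop in pure Python. It is a dependency-free sketch of the idea, not the authors' code. The toy operator `H` (pairwise averaging), the weights `B_inv` and `R_inv`, and the data `y` are hypothetical values, chosen so that the result can be checked by hand.

```python
def matvec(A, x):
    """Dense matrix-vector product for lists of lists."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def conjugate_gradient(A, b, tol=1e-12, max_iter=None):
    """Solve A x = b for symmetric positive definite A (no preconditioner)."""
    n = len(b)
    max_iter = max_iter or 10 * n
    x = [0.0] * n
    r = list(b)                      # residual b - A x for the initial guess x = 0
    p = list(r)
    rs_old = sum(ri * ri for ri in r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break
        Ap = matvec(A, p)
        alpha = rs_old / sum(pi * api for pi, api in zip(p, Ap))
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = sum(ri * ri for ri in r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


def classic_analysis(B_inv, H, R_inv, x_b, y):
    """Eq. (6): solve (B^-1 + H^T R^-1 H) x = B^-1 x_b + H^T R^-1 y."""
    HtRinv = matmul(transpose(H), R_inv)
    hessian = [[b + h for b, h in zip(brow, hrow)]
               for brow, hrow in zip(B_inv, matmul(HtRinv, H))]
    rhs = [u + v for u, v in zip(matvec(B_inv, x_b), matvec(HtRinv, y))]
    return conjugate_gradient(hessian, rhs)


# Hypothetical toy problem: m = 4 state points, n = 2 pairwise-averaged observations.
B_inv = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
H = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]]
R_inv = [[4.0, 0.0], [0.0, 4.0]]
x_b = [0.0, 0.0, 0.0, 0.0]
y = [1.0, 2.0]
x_a = classic_analysis(B_inv, H, R_inv, x_b, y)   # approx. [2/3, 2/3, 4/3, 4/3]
```

For large problems the Hessian is never formed explicitly. Only its action on a vector is needed, through model and adjoint runs, which is why a Krylov method such as CG fits this setting.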

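Accordingly, it is easy to see (e.g. Daley, 1993, p. 39) that the analysis error covariance $\mathbf{P}^{a}$ is the inverse of the Hessian of eq. (5):

$$\mathbf{P}^{a} = \left(\mathbf{B}^{-1} + \underline{\mathbf{H}}^{T}\underline{\mathbf{R}}^{-1}\underline{\mathbf{H}}\right)^{-1}. \tag{7}$$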

It can be shown that, under the linear Gaussian assumptions above, the analysis in the classic 4D-Var is the conditional expectation of the true state given the observations and the background state. In other words, the analysis of the classic 4D-Var problem is the unbiased minimum mean squared error (MMSE) estimator of the true state (Levy, 2008, chap. 4).

3. Regularised variational data assimilation

3.1. Background

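As is evident, when the Hessian (i.e. $\mathbf{B}^{-1} + \underline{\mathbf{H}}^{T}\underline{\mathbf{R}}^{-1}\underline{\mathbf{H}}$) of the classic VDA cost function in eq. (5) is ill-conditioned, the VDA solution is likely to be unstable, with large estimation uncertainty. To study the stabilising role of the background error, motivated by the well-known relationship between Tikhonov regularisation and spectral filtering (e.g. Golub et al., 1999), Johnson et al. (2005a, b) proposed to reformulate the classic VDA problem analogously to the standard form of Tikhonov regularisation (Tikhonov et al., 1977). Accordingly, using the change of variable $\mathbf{z} = \mathbf{C}_B^{-1/2}(\mathbf{x}_0 - \mathbf{x}_b)$ and letting $\mathbf{B} = \sigma_b^{2}\mathbf{C}_B$ and $\underline{\mathbf{R}} = \sigma_r^{2}\mathbf{C}_R$, where $\mathbf{C}_B$ and $\mathbf{C}_R$ are the correlation matrices, the classic variational cost function was reformulated as follows:

$$\mathcal{J}(\mathbf{z}) = \left\|\mathbf{f} - \mathbf{G}\mathbf{z}\right\|_2^{2} + \mu^{2}\left\|\mathbf{z}\right\|_2^{2}, \tag{8}$$

where the $\ell_2$-norm is $\|\mathbf{x}\|_2 = \left(\sum_i x_i^{2}\right)^{1/2}$, $\mu^{2} = \sigma_r^{2}/\sigma_b^{2}$, $\mathbf{f} = \mathbf{C}_R^{-1/2}(\underline{\mathbf{y}} - \underline{\mathbf{H}}\mathbf{x}_b)$, and $\mathbf{G} = \mathbf{C}_R^{-1/2}\underline{\mathbf{H}}\mathbf{C}_B^{1/2}$. Hence, by solving $\hat{\mathbf{z}} = \arg\min_{\mathbf{z}} \mathcal{J}(\mathbf{z})$, the analysis can be obtained as $\mathbf{x}_0^{a} = \mathbf{x}_b + \mathbf{C}_B^{1/2}\hat{\mathbf{z}}$. With this reformulated problem, Johnson et al. (2005a) provided new insights into the role of the background error covariance matrix in improving the condition number of the Hessian in eq. (5), that is, the ratio between its largest and smallest singular values, and thus the stability of the classic VDA problem.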

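To tackle DA of sharp fronts, following the above reformulation, Freitag et al. (2012) suggested adding an $\ell_1$-norm regularisation term as follows:

$$\mathcal{J}(\mathbf{z}) = \left\|\mathbf{f} - \mathbf{G}\mathbf{z}\right\|_2^{2} + \mu^{2}\left\|\mathbf{z}\right\|_2^{2} + \lambda\left\|\boldsymbol{\Phi}\left(\mathbf{x}_b + \mathbf{C}_B^{1/2}\mathbf{z}\right)\right\|_1, \tag{9}$$

where the $\ell_1$-norm is $\|\mathbf{x}\|_1 = \sum_i |x_i|$, the non-negative $\lambda$ is called the regularisation parameter, and $\boldsymbol{\Phi}$ is an approximate first-order derivative (finite-difference) operator of the form

$$\boldsymbol{\Phi} = \begin{bmatrix} -1 & 1 & & \\ & \ddots & \ddots & \\ & & -1 & 1 \end{bmatrix}.$$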

Notice that eq. (9) is a non-smooth optimisation problem, as the $\ell_1$ term is not differentiable wherever an element of its argument is zero (e.g. at the origin). Freitag et al. (2012) recast this problem as a quadratic programming (QP) problem with both equality and inequality constraints, where the dimension of the QP is three times larger than that of the original problem. By quadratic programming we refer to the minimisation or maximisation of a quadratic function subject to linear constraints. It is also worth noting that the reformulations in eqs. (8) and (9) assume that the error covariance matrices are stationary (i.e. $\mathbf{B} = \sigma_b^{2}\mathbf{C}_B$, $\underline{\mathbf{R}} = \sigma_r^{2}\mathbf{C}_R$), so that the error variance is distributed uniformly across all problem dimensions. In general, however, a covariance matrix $\boldsymbol{\Sigma}$ can be decomposed as $\boldsymbol{\Sigma} = \mathrm{diag}(\mathbf{s})\,\mathbf{C}\,\mathrm{diag}(\mathbf{s})$, where $\mathbf{C}$ is a correlation matrix and $\mathbf{s}$ is the vector of standard deviations (Barnard et al., 2000). Therefore, while eqs. (8) and (9) may offer an advantage in computational stability, the stationarity assumption and the computation of the square roots of the error correlation matrices might be restrictive in practice.
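The decomposition of a covariance into standard deviations and correlations takes only a few lines to compute. In the sketch below, the 2×2 covariance is a hypothetical example with unequal variances, i.e. non-stationary. The standard deviations are the square roots of its diagonal, and the correlations are its entries rescaled by them. The stationary case assumed in eqs. (8) and (9) corresponds to all entries of `s` being equal.

```python
import math


def split_covariance(Sigma):
    """Return (s, C) such that Sigma = diag(s) @ C @ diag(s)."""
    m = len(Sigma)
    s = [math.sqrt(Sigma[i][i]) for i in range(m)]          # standard deviations
    C = [[Sigma[i][j] / (s[i] * s[j]) for j in range(m)]    # correlation matrix
         for i in range(m)]
    return s, C


def join_covariance(s, C):
    """Inverse operation: rebuild Sigma from standard deviations and correlations."""
    m = len(s)
    return [[s[i] * C[i][j] * s[j] for j in range(m)] for i in range(m)]


Sigma = [[4.0, 1.2], [1.2, 9.0]]      # hypothetical non-stationary covariance
s, C = split_covariance(Sigma)        # s = [2.0, 3.0], C[0][1] = 0.2 up to rounding
Sigma_back = join_covariance(s, C)
```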

In the subsequent sections, going beyond regularisation of the first-order derivative coefficients, we present a generalised framework to regularise the VDA problem in a properly chosen transform domain or basis (e.g. wavelet, Fourier, DCT). The presented formulation includes smoothing $\ell_2$-norm and $\ell_1$-norm regularisation as two special cases and does not require any explicit assumption about the stationarity of the error covariance matrices. We recast the $\ell_1$-norm RVDA as a QP with lower dimension and simpler constraints than the formulation presented by Freitag et al. (2012). Furthermore, we introduce an efficient gradient-based optimisation method, suitable for large-scale DA problems. Results are presented for assimilating low-resolution and noisy observations into the linear advection–diffusion equation in a 4D-Var setting.

3.2. A generalised framework to regularise variational data assimilation in transform domains

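In a more general setting, to regularise the solution of the classic VDA problem, one may constrain the magnitude of the analysis in the norm sense as follows:

$$\mathbf{x}_0^{a} = \arg\min_{\mathbf{x}_0} \mathcal{J}(\mathbf{x}_0) \quad \text{subject to} \quad \left\|\boldsymbol{\Phi}\mathbf{x}_0\right\|_p^{p} \le c, \tag{10}$$

where $\mathcal{J}(\mathbf{x}_0)$ is the classic cost function in eq. (5), $c > 0$, $\boldsymbol{\Phi}$ is any appropriately chosen linear transformation, and the $\ell_p$-norm is $\|\mathbf{x}\|_p = \left(\sum_i |x_i|^{p}\right)^{1/p}$ with $p > 0$ (strictly a quasi-norm for $p < 1$). By constraining the $\ell_p$-norm of the analysis, we implicitly make the solution more stable. In other words, we bound the magnitude of the analysis state and reduce the instability of the solution due to the potential ill-conditioning of the classic cost function. Using the theory of Lagrange multipliers, the above constrained problem can be turned into the following unconstrained one:

$$\mathbf{x}_0^{a} = \arg\min_{\mathbf{x}_0}\left\{\frac{1}{2}\left\|\mathbf{x}_0 - \mathbf{x}_b\right\|_{\mathbf{B}^{-1}}^{2} + \frac{1}{2}\left\|\underline{\mathbf{y}} - \underline{\mathbf{H}}\mathbf{x}_0\right\|_{\underline{\mathbf{R}}^{-1}}^{2} + \lambda\left\|\boldsymbol{\Phi}\mathbf{x}_0\right\|_p^{p}\right\}, \tag{11}$$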

where the non-negative $\lambda$ is the Lagrange multiplier or regularisation parameter. As is evident, when $\lambda$ tends to zero, the regularised analysis tends to the classic analysis in eq. (6), while larger values are expected to produce more stable solutions but with less fidelity to the observations and the background state. Therefore, in eq. (11), the regularisation parameter $\lambda$ plays an important trade-off role: it ensures that the magnitude of the analysis is constrained in the norm sense while keeping the analysis sufficiently close to the observations and the background state. Although in special cases there are heuristic approaches to find an optimal regularisation parameter (e.g. Hansen and O'Leary, 1993; Johnson et al., 2005b), this parameter is typically selected empirically via statistical cross-validation for the problem at hand.

It is important to note that, from a probabilistic point of view, the regularised problem in eq. (11) can be viewed as a maximum a posteriori (MAP) Bayesian estimator. Indeed, the regularisation term encodes prior knowledge about the probability distribution of the state, $p(\mathbf{x}_0) \propto \exp\left(-\lambda\|\boldsymbol{\Phi}\mathbf{x}_0\|_p^{p}\right)$. In other words, we implicitly assume that, under the chosen transformation $\boldsymbol{\Phi}$, the state of interest can be well explained by the family of multivariate generalised Gaussian densities (e.g. Nadarajah, 2005), which includes the multivariate Gaussian ($p = 2$) and Laplace ($p = 1$) densities as special cases. Because the prior term is in general not Gaussian, the posterior density of this estimator does not remain Gaussian, and thus characterisation of the a posteriori covariance is not straightforward in this case.
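The MAP reading can be spelled out as follows. Assume a Gaussian likelihood, a Gaussian background term and a generalised Gaussian factor on $\boldsymbol{\Phi}\mathbf{x}_0$. Treating the background and the regulariser as two separate prior factors is our interpretation, made for illustration. Under these assumptions, the negative log-posterior reproduces the objective of eq. (11) up to an additive constant:

$$
\begin{aligned}
p(\mathbf{x}_0 \mid \underline{\mathbf{y}}) &\propto \exp\!\left(-\tfrac{1}{2}\|\underline{\mathbf{y}}-\underline{\mathbf{H}}\mathbf{x}_0\|^{2}_{\underline{\mathbf{R}}^{-1}}\right)\exp\!\left(-\tfrac{1}{2}\|\mathbf{x}_0-\mathbf{x}_b\|^{2}_{\mathbf{B}^{-1}}\right)\exp\!\left(-\lambda\|\boldsymbol{\Phi}\mathbf{x}_0\|_p^{p}\right),\\
-\log p(\mathbf{x}_0 \mid \underline{\mathbf{y}}) &= \tfrac{1}{2}\|\mathbf{x}_0-\mathbf{x}_b\|^{2}_{\mathbf{B}^{-1}} + \tfrac{1}{2}\|\underline{\mathbf{y}}-\underline{\mathbf{H}}\mathbf{x}_0\|^{2}_{\underline{\mathbf{R}}^{-1}} + \lambda\|\boldsymbol{\Phi}\mathbf{x}_0\|_p^{p} + \text{const},\\
\mathbf{x}_0^{\mathrm{MAP}} &= \arg\max_{\mathbf{x}_0} p(\mathbf{x}_0 \mid \underline{\mathbf{y}}) = \arg\min_{\mathbf{x}_0}\left[-\log p(\mathbf{x}_0 \mid \underline{\mathbf{y}})\right].
\end{aligned}
$$

With $p = 1$, each transform coefficient $c_j$ of $\boldsymbol{\Phi}\mathbf{x}_0$ carries a Laplace factor $\exp(-\lambda|c_j|)$. The cusp of this factor at zero is what makes exact zeros likely at the posterior mode.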

From an optimisation viewpoint, the above RVDA problem is convex, with a unique global solution (analysis), when $p \ge 1$; otherwise, it may suffer from multiple local minima. For the special case of the Gaussian prior ($p = 2$), the problem is smooth and resembles the well-known smoothing-norm Tikhonov regularisation (Tikhonov et al., 1977; Hansen, 2010). For the Laplace prior ($p = 1$), however, the problem is non-smooth; this case has received a great deal of attention in recent years for solving sparse ill-posed inverse problems (see Elad, 2010, and references therein). It turns out that $\ell_1$-norm regularisation promotes sparsity in the solution. In other words, with this regularisation, the number of non-zero elements of $\boldsymbol{\Phi}\mathbf{x}_0^{a}$ is expected to be significantly smaller than the observational dimension. Therefore, if we know a priori that a specific $\boldsymbol{\Phi}$ projects a large number of elements of the state variable of interest onto (near-)zero values, the $\ell_1$-norm is a proper choice of regularisation term and can yield improved estimates of the analysis state (e.g. Chen et al., 1998, 2001; Candes and Tao, 2006; Elad, 2010).
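The sparsity-promoting effect is easiest to see in the simplest separable case. Assume, only for illustration, an orthonormal $\boldsymbol{\Phi}$ and an identity data term, so that each transform coefficient solves its own scalar problem. The $\ell_1$ penalty then yields the soft-thresholding rule, which sets small coefficients exactly to zero. A squared $\ell_2$ penalty only rescales them. The coefficient values and `lam` below are hypothetical.

```python
def soft_threshold(y, lam):
    """Minimiser of 0.5*(x - y)**2 + lam*|x| (scalar l1 penalty)."""
    if y > lam:
        return y - lam
    if y < -lam:
        return y + lam
    return 0.0


def ridge_shrink(y, lam):
    """Minimiser of 0.5*(x - y)**2 + lam*x**2 (scalar squared l2 penalty)."""
    return y / (1.0 + 2.0 * lam)


coeffs = [3.0, -0.2, 0.05, -2.5, 0.1, 0.0, 0.3, -0.15]   # hypothetical noisy coefficients
lam = 0.4
l1_solution = [soft_threshold(c, lam) for c in coeffs]
l2_solution = [ridge_shrink(c, lam) for c in coeffs]
nnz_l1 = sum(1 for v in l1_solution if v != 0.0)   # 2 non-zeros survive
nnz_l2 = sum(1 for v in l2_solution if v != 0.0)   # 7: only the exact zero stays zero
```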

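In the subsequent sections, we focus on the 4D-Var problem under $\ell_1$-norm regularisation, as follows:

$$\mathbf{x}_0^{a} = \arg\min_{\mathbf{x}_0}\left\{\frac{1}{2}\left\|\mathbf{x}_0 - \mathbf{x}_b\right\|_{\mathbf{B}^{-1}}^{2} + \frac{1}{2}\left\|\underline{\mathbf{y}} - \underline{\mathbf{H}}\mathbf{x}_0\right\|_{\underline{\mathbf{R}}^{-1}}^{2} + \lambda\left\|\boldsymbol{\Phi}\mathbf{x}_0\right\|_1\right\}. \tag{12}$$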

It is important to note that the formulation in eq. (12) shares the same solution as the problem in eq. (9) (for a correspondingly rescaled $\lambda$), while, in a more general setting, it can handle non-stationary error covariance matrices and does not require the additional computational cost of obtaining their square roots. It is also worth noting that the $\ell_1$-norm regularised 4D-Var in eq. (12) may alternatively be recast into the following form:

$$\mathbf{x}_0^{a} = \arg\min_{\mathbf{x}_0}\left\|\boldsymbol{\Phi}\mathbf{x}_0\right\|_1 \quad \text{subject to} \quad \left\|\mathbf{x}_0 - \mathbf{x}_b\right\|_{\mathbf{B}^{-1}}^{2} + \left\|\underline{\mathbf{y}} - \underline{\mathbf{H}}\mathbf{x}_0\right\|_{\underline{\mathbf{R}}^{-1}}^{2} \le \epsilon, \tag{13}$$

where $\epsilon > 0$ is a prescribed tolerance. This problem is a quadratically constrained linear programming problem and is closely related to the original formulation of the well-known basis pursuit approach of Chen et al. (1998). In this formulation, the $\ell_1$-norm cost function ensures that we seek an analysis with a sparse projection onto the subspace spanned by the chosen basis, while the constraint forces the analysis to be sufficiently close to the available observations and the background state.

Conceptually, by adding relevant regularisation terms, we improve the stability of the VDA problem and force the analysis state to follow a certain regularity. The improved stability of the regularised solution comes from the fact that the regularisation term constrains the solution magnitude and prevents it from blowing up due to possible ill-conditioning of the VDA problem. In ill-conditioned classic VDA problems, it is easy to see that the inverse of the Hessian in eq. (7) may contain very large elements, which can spoil the analysis. However, by adding a proper regularisation term and making the problem well-posed, we shrink the size of the elements of the error covariance matrix and reduce the estimation error. According to the bias–variance trade-off, this improvement in the analysis error covariance naturally comes at the cost of introducing a bias in the solution, whose magnitude can be kept small by proper selection of the regularisation parameter $\lambda$ (e.g. Neumaier, 1998; Hansen, 2010). Regularisation may also impose a certain degree of smoothness or regularity on the analysis state. For instance, if we think of $\boldsymbol{\Phi}$ as a first-order derivative operator, then with smoothing $\ell_2$-norm regularisation ($p = 2$) we force the energy of the analysis increments (the differences between neighbouring grid values) to be minimal, which naturally reduces the analysis variability and makes it smoother. Therefore, smoothing $\ell_2$-norm regularisation in a derivative space is naturally suited to continuous and sufficiently smooth state variables. On the other hand, for piecewise-smooth states with isolated singularities and jumps, the use of $\ell_1$-norm regularisation ($p = 1$) in a derivative space turns out to be very advantageous. Using this norm in a derivative space, we implicitly constrain the total variation of the solution, which prevents imposing extra smoothness over edges and jump discontinuities.
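A tiny worked comparison makes the last point concrete. Take $\boldsymbol{\Phi}$ as the forward-difference operator, and compare a sharp step with a linear ramp that has the same total rise. Both have the same $\ell_1$-norm of differences (total variation). The squared $\ell_2$-norm, however, charges the step five times more than the ramp, so an $\ell_2$ penalty pushes the analysis towards smeared edges. The two six-point signals are hypothetical.

```python
def differences(x):
    """First-order forward differences, i.e. Phi x for a difference operator Phi."""
    return [b - a for a, b in zip(x, x[1:])]


def l1_norm(v):
    return sum(abs(t) for t in v)


def l2_norm_sq(v):
    return sum(t * t for t in v)


step = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]   # hypothetical sharp jump
ramp = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]   # hypothetical smooth ramp, same total rise

tv_step, tv_ramp = l1_norm(differences(step)), l1_norm(differences(ramp))        # both ~1.0
en_step, en_ramp = l2_norm_sq(differences(step)), l2_norm_sq(differences(ramp))  # 1.0 vs ~0.2
```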

3.2.1. Solution method via QP.

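Due to the separability of the $\ell_1$-norm, one of the most well-known methods, often called basis pursuit (see Chen et al., 1998; Figueiredo et al., 2007), can be used to recast the $\ell_1$-norm RVDA problem in eq. (12) as a constrained quadratic programming problem. Here, let us assume that $\mathbf{c}_0 = \boldsymbol{\Phi}\mathbf{x}_0$ with an invertible $\boldsymbol{\Phi}$, where $\mathbf{x}_0$ and $\mathbf{c}_0$ are in $\mathbb{R}^{m}$, and split $\mathbf{c}_0$ into its positive part $\mathbf{u}_0 = \max(\mathbf{c}_0, \mathbf{0})$ and negative part $\mathbf{v}_0 = \max(-\mathbf{c}_0, \mathbf{0})$, such that $\mathbf{c}_0 = \mathbf{u}_0 - \mathbf{v}_0$. With this notation, we can express the $\ell_1$-norm via a linear inner product as $\|\mathbf{c}_0\|_1 = \mathbf{1}_{2m}^{T}\mathbf{w}_0$, where $\mathbf{w}_0 = [\mathbf{u}_0^{T}, \mathbf{v}_0^{T}]^{T}$ and $\mathbf{1}_{2m} = [1, \ldots, 1]^{T} \in \mathbb{R}^{2m}$. Thus, eq. (12) can be recast as a smooth constrained QP problem on the non-negative orthant as follows:

$$\hat{\mathbf{w}}_0 = \arg\min_{\mathbf{w}_0}\left\{\frac{1}{2}\mathbf{w}_0^{T}\mathbf{Q}\mathbf{w}_0 + \left(\lambda\mathbf{1}_{2m} + \mathbf{b}\right)^{T}\mathbf{w}_0\right\} \quad \text{subject to} \quad \mathbf{w}_0 \succeq \mathbf{0}, \tag{14}$$

where $\mathbf{Q} = \begin{bmatrix} \boldsymbol{\Theta} & -\boldsymbol{\Theta} \\ -\boldsymbol{\Theta} & \boldsymbol{\Theta} \end{bmatrix}$ with $\boldsymbol{\Theta} = \boldsymbol{\Phi}^{-T}\left(\mathbf{B}^{-1} + \underline{\mathbf{H}}^{T}\underline{\mathbf{R}}^{-1}\underline{\mathbf{H}}\right)\boldsymbol{\Phi}^{-1}$, $\mathbf{b} = \begin{bmatrix} -\boldsymbol{\Phi}^{-T}\mathbf{d} \\ \boldsymbol{\Phi}^{-T}\mathbf{d} \end{bmatrix}$ with $\mathbf{d} = \mathbf{B}^{-1}\mathbf{x}_b + \underline{\mathbf{H}}^{T}\underline{\mathbf{R}}^{-1}\underline{\mathbf{y}}$, and $\succeq$ denotes element-wise inequality.

Clearly, given the solution $\hat{\mathbf{w}}_0 = [\hat{\mathbf{u}}_0^{T}, \hat{\mathbf{v}}_0^{T}]^{T}$ of eq. (14), one can easily retrieve $\hat{\mathbf{c}}_0 = \hat{\mathbf{u}}_0 - \hat{\mathbf{v}}_0$, and thus the analysis state is $\mathbf{x}_0^{a} = \boldsymbol{\Phi}^{-1}\hat{\mathbf{c}}_0$.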
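The positive/negative split can be checked directly on a hypothetical coefficient vector. The stacked vector $[\mathbf{u}_0^{T}, \mathbf{v}_0^{T}]^{T}$ is non-negative, and $\mathbf{u}_0 - \mathbf{v}_0$ recovers $\mathbf{c}_0$. The sum of the stacked entries, i.e. its inner product with a vector of ones, equals $\|\mathbf{c}_0\|_1$.

```python
def split_pos_neg(c):
    """Split c into u = max(c, 0) and v = max(-c, 0), element-wise, so that c = u - v."""
    u = [max(ci, 0.0) for ci in c]
    v = [max(-ci, 0.0) for ci in c]
    return u, v


c0 = [1.5, -0.5, 0.0, 2.0, -3.0]      # hypothetical transform coefficients
u0, v0 = split_pos_neg(c0)
w0 = u0 + v0                           # stacked vector [u0; v0] of length 2m
l1_from_w = sum(w0)                    # inner product of w0 with a vector of ones
l1_direct = sum(abs(ci) for ci in c0)  # ||c0||_1 = 7.0
```

In the QP itself, $\mathbf{u}_0$ and $\mathbf{v}_0$ are free non-negative variables rather than computed from a known $\mathbf{c}_0$. For $\lambda > 0$, keeping both entries of a pair positive only adds cost, so at the optimum at least one entry of each pair is zero.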

Euclidean projection onto the constraint set of the QP problem in eq. (14) is simpler than for the formulation suggested by Freitag et al. (2012) and allows us to use efficient and convergent gradient projection methods (e.g. Bertsekas, 1976; Serafini et al., 2005; Figueiredo et al., 2007), suitable for large-scale VDA problems. The dimension of the above problem appears to be twice that of the original problem; however, because of the symmetry in this formulation, the computational burden remains of the same order as that of the original classic problem (see Appendix). Another important observation is that choosing an orthogonal transformation (e.g. orthogonal wavelet, DCT, Fourier) for $\boldsymbol{\Phi}$ is very advantageous computationally, as in this case $\boldsymbol{\Phi}^{-1} = \boldsymbol{\Phi}^{T}$.
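Below is a minimal projected-gradient sketch for eq. (14) in pure Python. It assumes the objective $\tfrac{1}{2}(\mathbf{u}-\mathbf{v})^{T}\boldsymbol{\Theta}(\mathbf{u}-\mathbf{v}) - \boldsymbol{\beta}^{T}(\mathbf{u}-\mathbf{v}) + \lambda\sum(\mathbf{u}+\mathbf{v})$ over $\mathbf{u}, \mathbf{v} \succeq \mathbf{0}$. Here $\boldsymbol{\Theta} = \boldsymbol{\Phi}^{-T}\mathbf{A}\boldsymbol{\Phi}^{-1}$, $\mathbf{A}$ is the Hessian of eq. (5), and $\boldsymbol{\beta} = \boldsymbol{\Phi}^{-T}\mathbf{d}$, with $\mathbf{d}$ the right-hand side of eq. (6). Projection onto the non-negative orthant is just `max(0, .)`. The gradient involves $\boldsymbol{\Theta}$ only through $\boldsymbol{\Theta}(\mathbf{u}-\mathbf{v})$, so each iteration needs a single product with $\boldsymbol{\Theta}$, which reflects the symmetry mentioned above. This is a fixed-step variant, with step $1/L$ and $L$ bounded via Gershgorin's theorem. It is not necessarily the gradient projection scheme used in the paper. `Theta`, `beta` and `lam` are hypothetical toy values.

```python
def theta_matvec(Theta, c):
    """Dense product Theta @ c."""
    return [sum(t * ci for t, ci in zip(row, c)) for row in Theta]


def gradient_projection_l1(Theta, beta, lam, max_iter=5000, tol=1e-12):
    """Fixed-step projected gradient for eq. (14) over w = [u; v] >= 0.

    Objective: 0.5 (u - v)^T Theta (u - v) - beta^T (u - v) + lam * sum(u + v).
    Returns c = u - v, the transform-domain analysis.
    """
    m = len(beta)
    # Gershgorin bound on lambda_max(Theta); the quadratic term in w has twice that curvature.
    bound = max(sum(abs(t) for t in row) for row in Theta)
    step = 1.0 / (2.0 * bound)
    u, v = [0.0] * m, [0.0] * m
    for _ in range(max_iter):
        tc = theta_matvec(Theta, [ui - vi for ui, vi in zip(u, v)])  # single Theta product
        grad_u = [t - b + lam for t, b in zip(tc, beta)]
        grad_v = [-t + b + lam for t, b in zip(tc, beta)]
        # Gradient step followed by Euclidean projection onto the non-negative orthant.
        u_new = [max(0.0, ui - step * g) for ui, g in zip(u, grad_u)]
        v_new = [max(0.0, vi - step * g) for vi, g in zip(v, grad_v)]
        change = max(abs(a - b) for a, b in zip(u_new + v_new, u + v))
        u, v = u_new, v_new
        if change < tol:
            break
    return [ui - vi for ui, vi in zip(u, v)]


Theta = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical transformed Hessian (SPD)
beta = [3.0, 0.5]                   # hypothetical transformed right-hand side
c_hat = gradient_projection_l1(Theta, beta, lam=0.5)   # about [1.25, 0.0]
# For an orthogonal Phi, the analysis would then be x0 = Phi^T c_hat.
```

In this toy case, the second coefficient is driven exactly to zero. The optimality condition holds there because $|0.5 \cdot 1.25 - 0.5| = 0.125 \le \lambda$. Accelerated or Barzilai–Borwein step rules usually converge much faster than this fixed step.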

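It is important to note that, for the $\ell_1$-norm regularisation in eq. (14), it is easy to show that the regularisation parameter is bounded as

$$0 \le \lambda < \left\|\boldsymbol{\Phi}^{-T}\left(\mathbf{B}^{-1}\mathbf{x}_b + \underline{\mathbf{H}}^{T}\underline{\mathbf{R}}^{-1}\underline{\mathbf{y}}\right)\right\|_{\infty},$$

where the infinity-norm is $\|\mathbf{x}\|_{\infty} = \max_i |x_i|$. For values of $\lambda$ equal to or greater than the upper bound, the solution of eq. (14) is the zero vector, and so is the analysis state, which then has maximum sparsity (see Appendix).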
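The bound follows from the optimality (KKT) conditions of eq. (14) at the origin. Write $\boldsymbol{\beta} = \boldsymbol{\Phi}^{-T}\mathbf{d}$, where $\mathbf{d}$ is the right-hand side of eq. (6). Because the problem is convex, the zero vector is optimal exactly when the gradient there, $\lambda\mathbf{1} + [-\boldsymbol{\beta}; \boldsymbol{\beta}]$, has no negative entry, i.e. when $\lambda \ge \|\boldsymbol{\beta}\|_\infty$. The sketch below checks this on a hypothetical $\boldsymbol{\beta}$.

```python
def lambda_upper_bound(beta):
    """||beta||_inf with beta = Phi^{-T}(B^-1 x_b + H^T R^-1 y)."""
    return max(abs(b) for b in beta)


def zero_is_optimal(beta, lam):
    """KKT test at w = 0 for eq. (14): the gradient lam*1 + [-beta; beta] must be >= 0."""
    grad_at_zero = [lam - b for b in beta] + [lam + b for b in beta]
    return all(g >= 0.0 for g in grad_at_zero)


beta = [3.0, 0.5, -1.2]                # hypothetical transformed right-hand side
lam_bound = lambda_upper_bound(beta)   # 3.0
```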

4. Examples on linear advection–diffusion equation

4.1. Problem statement

The advection–diffusion equation is a parabolic partial differential equation with a drift term and has fundamental applications in various areas of applied science and engineering. This equation is a simplified version of the general Navier–Stokes equation for a divergence-free and incompressible Newtonian fluid where the pressure gradient is negligible. In general form, this equation for a quantity $x(s, t)$ is

$$\frac{\partial x(s,t)}{\partial t} + a(s,t)\frac{\partial x(s,t)}{\partial s} = \varepsilon\frac{\partial^{2} x(s,t)}{\partial s^{2}}, \tag{15}$$

where $a(s, t)$ represents the velocity and $\varepsilon \ge 0$ denotes the viscosity constant.

The linear ($a$ = const.) and inviscid ($\varepsilon = 0$) form of eq. (15) has been the subject of modelling, numerical simulation, and DA studies of advective atmospheric and oceanic flows and fluxes. For example, Lin et al. (1998) argued that the mechanism of rain-cell regeneration can be well explained by pure advection, and Jochum and Murtugudde (2006) found that Tropical Instability Waves (TIWs) need to be modelled by horizontal advection without involving any temperature mixing length. The nonlinear inviscid form (e.g. Burgers' equation) has been used in the context of the shallow water equations and has been the subject of oceanic and tidal DA studies (e.g. Bennett and McIntosh, 1982; Evensen, 1994b). The linear and viscid form ($\varepsilon > 0$) has fundamental applications in modelling atmospheric and oceanic mixing (e.g. Lanser and Verwer, 1999; Jochum and Murtugudde, 2006; Smith and Marshall, 2009, chap. 6), land-surface moisture and heat transport (e.g. Afshar and Marino, 1978; Hu and Islam, 1995; Peters-Lidard et al., 1997; Liang et al., 1999), surface water quality (e.g. Chapra, 2008, chap. 8), and subsurface mass and heat transfer (e.g. Fetter, 1994).

Here, we restrict our consideration to the linear form and present a series of test problems to demonstrate the effectiveness of $\ell_1$-norm RVDA in a 4D-Var setting. It is well understood that the general solution of the linear viscid form of eq. (15) relies on the principle of superposition of linear advection and diffusion. In other words, the solution at time $t$ is obtained by shifting the initial condition by $at$, followed by a convolution with the fundamental Gaussian kernel:

$$x(s,t) = x(s - at, 0) * \frac{1}{\sqrt{2\pi}\,\sigma_t}\exp\left(-\frac{s^{2}}{2\sigma_t^{2}}\right), \tag{16}$$

where $*$ denotes convolution in $s$ and the standard deviation is $\sigma_t = \sqrt{2\varepsilon t}$. The linear shift of size $at$ also amounts to the convolution of the initial condition with a shifted Dirac delta function (a Kronecker delta in discrete space):

$$x(s - at, 0) = x(s, 0) * \delta(s - at). \tag{17}$$

4.2. Assimilation set-up and results

4.2.1. Prognostic equation and observation model.

It is well understood that (circular) convolution in discrete space can be constructed as a (circulant) Toeplitz matrix–vector product (e.g. Chan and Jin, 2007). Therefore, in the context of a discrete advection–diffusion model, the diffusion over time and the spatial linear shift of the initial condition can be expressed in matrix form by $\mathbf{D}_{0,i}$ and $\mathbf{A}_{0,i}$, respectively. In effect, $\mathbf{D}_{0,i}$ is a Toeplitz matrix whose rows are filled with discrete samples of the Gaussian kernel in eq. (16), while each row of $\mathbf{A}_{0,i}$ contains a properly positioned Kronecker delta function.

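Thus, for our case, the underlying prognostic equation, i.e. $\mathbf{x}_i = \mathbf{M}_{0,i}\mathbf{x}_0$, may be expressed as follows:

$$\mathbf{x}_i = \mathbf{D}_{0,i}\mathbf{A}_{0,i}\mathbf{x}_0. \tag{18}$$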
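Below is a small sketch of eq. (18) on a periodic grid. Circulant matrices implement circular convolution. A circulant with a single unit entry plays the role of the shift $\mathbf{A}_{0,i}$, and a circulant built from a sampled, normalised Gaussian plays the role of the diffusion $\mathbf{D}_{0,i}$. All parameters are hypothetical: the grid size; the integer shift `k`, which stands in for the advected distance divided by the grid spacing; and `sigma`, which stands in for the standard deviation of the Gaussian kernel in eq. (16), in grid units. Because circulant matrices commute, the order of shifting and diffusing does not matter. The normalised kernel also conserves total mass.

```python
import math


def circulant(first_col):
    """Circulant matrix C[i][j] = first_col[(i - j) % m]; C @ x is a circular convolution."""
    m = len(first_col)
    return [[first_col[(i - j) % m] for j in range(m)] for i in range(m)]


def shift_matrix(m, k):
    """A_{0,i}: circular shift by k grid points (a Kronecker delta at lag k)."""
    delta = [0.0] * m
    delta[k % m] = 1.0
    return circulant(delta)


def gaussian_matrix(m, sigma):
    """D_{0,i}: circular convolution with a sampled Gaussian kernel normalised to unit sum."""
    kernel = [math.exp(-0.5 * (min(i, m - i) / sigma) ** 2) for i in range(m)]
    total = sum(kernel)
    return circulant([w / total for w in kernel])


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


m, k, sigma = 8, 2, 1.0                         # hypothetical grid size, shift and kernel width
x0 = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]   # small flat top-hat
A = shift_matrix(m, k)
D = gaussian_matrix(m, sigma)
shifted = matvec(A, x0)                          # pure advection
x_i = matvec(D, shifted)                         # eq. (18): x_i = D A x0
x_i_other_order = matvec(A, matvec(D, x0))       # same result: circulants commute
```

At realistic grid sizes, these products would be computed with FFTs rather than dense matrices, since circulant matrices are diagonalised by the discrete Fourier transform.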

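In this study, the low-resolution constraints of the sensing system are modelled using a linear smoothing filter followed by a down-sampling operation. Specifically, we consider the following time-invariant linear measurement operator:

$$\mathbf{H} = \frac{1}{4}\begin{bmatrix} 1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 & \cdots & 0 \\ 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 & \cdots & 0 \\ \vdots & & & & & & & & \ddots & \vdots \\ 0 & \cdots & & & & 0 & 1 & 1 & 1 & 1 \end{bmatrix}, \tag{19}$$

which maps the higher-dimensional state to a lower-dimensional observation space. In effect, each observation point is an averaged and noisy representation of four adjacent points of the true state.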
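Below is a sketch of the measurement operator. It assumes one reading of the description above: non-overlapping blocks of four adjacent grid points, i.e. averaging followed by down-sampling by a factor of four. The helper name and the toy state are hypothetical. The snippet is noise-free; the actual observations follow as $\mathbf{y}_i = \mathbf{H}\mathbf{x}_i + \mathbf{v}_i$.

```python
def block_average_operator(m, factor=4):
    """Hypothetical H: average `factor` adjacent points, keep one value per block."""
    if m % factor:
        raise ValueError("m must be a multiple of the down-sampling factor")
    n = m // factor
    return [[1.0 / factor if i * factor <= j < (i + 1) * factor else 0.0
             for j in range(m)]
            for i in range(n)]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


H = block_average_operator(8)                            # 2 x 8 operator
y = matvec(H, [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])  # [2.5, 6.5]
```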

4.2.2. Initial states.

To demonstrate the effectiveness of the proposed $\ell_1$-norm regularisation in eq. (12), we consider four different initial conditions that exhibit sparse representation in the wavelet and DCT domains (Fig. 1). In particular, we consider: (a) a flat top-hat (FTH), which is a composition of zero-order polynomials and can theoretically be sparsified using the first-order Daubechies wavelet (DB01), i.e. the Haar basis; (b) a quadratic top-hat (QTH), which is a composition of zero- and second-order polynomials and can theoretically be well sparsified by wavelets with vanishing moments of order greater than three (Mallat, 2009, p. 284); (c) a window sinusoid (WS); and (d) a squared-exponential (SE) function; the last two exhibit nearly sparse behaviour in the DCT basis. All of the initial states are assumed to be in $\mathbb{R}^{m}$ and are evolved in time with a viscosity coefficient $\varepsilon$ and velocity $a = 1$ [L/T]. The assimilation interval is between 0 and $T = 500$ [T], and the observations are sparsely available over this interval every 125 [T] time steps (Figs. 1 and 2).
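The sketch below shows what sparsity means for the flat top-hat. It applies a pure-Python orthonormal Haar (DB01) transform to a hypothetical 8-point top-hat. Only 3 of the 8 coefficients are non-zero. The transform also preserves energy and inverts exactly, consistent with $\boldsymbol{\Phi}\boldsymbol{\Phi}^{T} = \mathbf{I}$ for an orthogonal wavelet. The coarse-to-fine coefficient ordering is a convention chosen here.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_forward(x):
    """Orthonormal Haar transform; len(x) must be a power of two.

    Returns coefficients ordered coarse to fine: [a_J, d_J, ..., d_1].
    """
    approx, details = list(x), []
    while len(approx) > 1:
        half = len(approx) // 2
        a = [(approx[2 * k] + approx[2 * k + 1]) / SQRT2 for k in range(half)]
        d = [(approx[2 * k] - approx[2 * k + 1]) / SQRT2 for k in range(half)]
        details = d + details          # finer details end up at the back
        approx = a
    return approx + details


def haar_inverse(c):
    """Inverse of haar_forward."""
    approx, pos = c[:1], 1
    while pos < len(c):
        d = c[pos:pos + len(approx)]
        nxt = []
        for a_k, d_k in zip(approx, d):
            nxt += [(a_k + d_k) / SQRT2, (a_k - d_k) / SQRT2]
        pos += len(approx)
        approx = nxt
    return approx


x = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0]   # hypothetical 8-point flat top-hat
c = haar_forward(x)                            # about [1.414, 0, -1, 1, 0, 0, 0, 0]
nnz = sum(1 for v in c if abs(v) > 1e-12)      # 3 non-zero coefficients
x_rec = haar_inverse(c)
```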

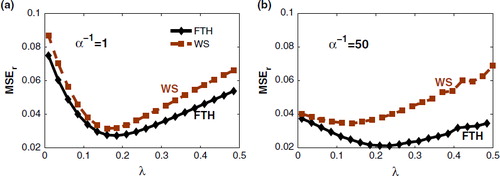

Fig. 1 Initial conditions and their evolution under the linear advection–diffusion equation: (a) flat top-hat (FTH), (b) quadratic top-hat (QTH), (c) window sinusoid (WS), and (d) squared-exponential (SE). The first two initial conditions (a, b) exhibit sparse representation in the wavelet domain, while the other two (c, d) show nearly sparse representation in the discrete cosine transform (DCT) domain. Initial conditions are evolved under the linear advection–diffusion eq. (15) with viscosity coefficient $\varepsilon$ and $a = 1$ [L/T]. The broken lines show the time instants at which the low-resolution and noisy observations are available in the assimilation interval.

![Fig. 1](/cms/asset/ca6be971-31d4-4186-abf2-ce8011886556/zela_a_11817030_f0001_ob.jpg)

Fig. 2 A sample representation of the available low-resolution (solid lines) and noisy observations (broken lines with circles) every 125 [T] time steps in the assimilation window for the flat top-hat (FTH) initial condition. Here, the observation error covariance is set to $\mathbf{R}_i = \sigma_r^{2}\mathbf{I}$ with $\sigma_r = 0.08$.

![Fig. 2](/cms/asset/65f4cb3c-90c1-4278-9b02-13be7863f32d/zela_a_11817030_f0002_ob.jpg)

4.2.3. Observation and background error.

The observation and background errors are important components of a DA system that determine the quality and information content of the analysis. Clearly, the nature and behaviour of the errors are problem-dependent and need to be carefully investigated case by case. It should be stressed that, from a probabilistic point of view, the presented formulation of $\ell_1$-norm RVDA assumes that both error components are unimodal and can be well explained by the class of Gaussian covariance models. Here, for the observation error, we only consider a stationary white Gaussian distribution, $\mathbf{v}_i \sim \mathcal{N}(\mathbf{0}, \mathbf{R}_i)$, where $\mathbf{R}_i = \sigma_r^{2}\mathbf{I}$ (Fig. 2).

However, as discussed by Gaspari and Cohn (1999), the background error can often exhibit a correlation structure. In this study, first- and second-order auto-regressive (AR) Gaussian Markov processes are considered to simulate a possible spatial correlation in the background error; see Gaspari and Cohn (1999) for a detailed discussion of error covariance models for DA studies.

The AR(1), also known as the Ornestein–Ulenbeck process in infinite dimension, has an exponential covariance function . In this covariance function, τ denotes the lag either in space or time, and the parameter α determines the decay rate of the correlation. The inverse of the correlation decay rate l

c

=1/α is often called the characteristic correlation length of the process. The covariance function of the AR(1) model has been studied very well in the context of stochastic process (e.g. Durrett, Citation1999) and estimation theory (e.g. Levy, Citation2008). For example, it is shown by Levy (Citation2008, p. 298) that the eigenvalues are monotonically decreasing which may give rise to a very ill-conditioned covariance matrix in the discrete space, especially for small α or large characteristic correlation lengths. The covariance function of the AR(2) is more complicated than the AR(1); however, it has been shown that in special cases, its covariance function can be explained by

(Gaspari and Cohn, Citation1999; Stein, Citation1999, p. 31). Note that, both of these covariance models are stationary and also isotropic as they are only a function of the magnitude of the correlation lag (Rasmussen and Williams, Citation2006, p. 82). Consequently, the discrete background error covariance is a Hermitian Toeplitz matrix and can be decomposed into a scalar standard deviation and a correlation matrix as

, where

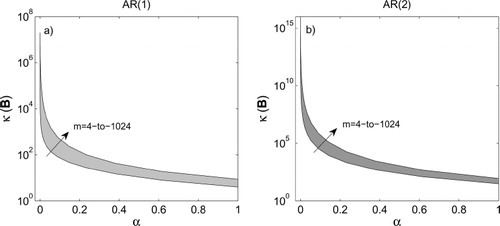

For the same value of $\alpha$, the AR(2) correlation function clearly decays more slowly than that of the AR(1). Fig. 3 shows empirical estimates of the condition numbers of the reconstructed correlation matrices for dimensions ranging from $m = 4$ to 1024. As is evident, the error covariance of the AR(2) model has a larger condition number than that of the AR(1) model for the same value of the parameter $\alpha$. Because the background error plays a very important role in the overall condition number of the Hessian of the cost function in eq. (5), an ill-conditioned background error covariance makes the solution more unstable, with larger uncertainty around the obtained analysis.
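The two correlation models, and the symmetric Toeplitz matrices they generate, are easy to tabulate. The sketch below is pure Python; `alpha` and the matrix size are hypothetical. It also checks the 2×2 case, where the eigenvalues $1 \pm \rho(1)$ are known in closed form, and confirms that the slower AR(2) decay gives a larger condition number there. Larger $m$ would require a numerical eigenvalue routine.

```python
import math


def ar1_corr(tau, alpha):
    """AR(1) (exponential) correlation: exp(-alpha |tau|)."""
    return math.exp(-alpha * abs(tau))


def ar2_corr(tau, alpha):
    """AR(2)-type correlation: (1 + alpha |tau|) exp(-alpha |tau|)."""
    return (1.0 + alpha * abs(tau)) * math.exp(-alpha * abs(tau))


def toeplitz_corr(m, alpha, rho):
    """Correlation matrix C_B with entries rho(|i - j|): symmetric Toeplitz, unit diagonal."""
    return [[rho(i - j, alpha) for j in range(m)] for i in range(m)]


def cond_2x2(r):
    """Condition number of [[1, r], [r, 1]] for 0 <= r < 1 (eigenvalues 1 + r and 1 - r)."""
    return (1.0 + r) / (1.0 - r)


alpha = 0.5                                   # hypothetical decay rate
C1 = toeplitz_corr(6, alpha, ar1_corr)
C2 = toeplitz_corr(6, alpha, ar2_corr)
kappa1 = cond_2x2(ar1_corr(1, alpha))         # about 4.1
kappa2 = cond_2x2(ar2_corr(1, alpha))         # about 21
```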

Fig. 3 Empirical condition numbers of the background error covariance matrices as a function of the parameter α and the problem dimension (m) for the AR(1) in (a) and the AR(2) in (b). The parameter α varies along the x-axis, and each curve corresponds to a value of m between 4 and 1024. Recall that the condition number $\kappa(\mathbf{B})$ is the ratio between the largest and smallest singular values of B. In (a) the covariance matrix is built from the AR(1) correlation function $\exp(-\alpha|\tau|)$ and in (b) from the AR(2) correlation function $(1+\alpha|\tau|)\exp(-\alpha|\tau|)$. The condition numbers of the AR(2) model are significantly larger than those of the AR(1) model for the same values of the parameter α.

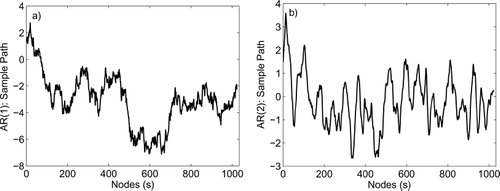
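The trend in Fig. 3 can be reproduced qualitatively with a few lines of NumPy. This is a minimal sketch, not the authors' code: it assumes unit grid spacing (so the lag between grid points i and j is |i − j|), the AR(1) correlation $\exp(-\alpha|\tau|)$ and the special-case AR(2) correlation $(1+\alpha|\tau|)\exp(-\alpha|\tau|)$; the function names are hypothetical.

```python
import numpy as np

def correlation_matrix(m, alpha, model="ar1"):
    """Toeplitz correlation matrix on a unit-spaced 1-D grid (assumed spacing)."""
    idx = np.arange(m)
    lag = np.abs(np.subtract.outer(idx, idx)).astype(float)   # |i - j|
    if model == "ar1":
        return np.exp(-alpha * lag)
    if model == "ar2":
        # special-case AR(2) form assumed here: (1 + alpha|tau|) exp(-alpha|tau|)
        return (1.0 + alpha * lag) * np.exp(-alpha * lag)
    raise ValueError(f"unknown model: {model!r}")

def condition_number(C):
    """2-norm condition number: largest over smallest singular value."""
    return np.linalg.cond(C)

m = 256
for alpha in (1 / 5, 1 / 25):
    k1 = condition_number(correlation_matrix(m, alpha, "ar1"))
    k2 = condition_number(correlation_matrix(m, alpha, "ar2"))
    print(f"alpha^-1 = {1 / alpha:4.0f}: kappa(AR1) = {k1:.1e}, kappa(AR2) = {k2:.1e}")
```

For both values of α the AR(2) matrix comes out markedly worse conditioned than the AR(1) matrix, and both worsen as the correlation length grows; `np.linalg.cond` uses the 2-norm by default, i.e. the ratio of the extreme singular values.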

Fig. 4 shows sample paths of the chosen background error models. Generally speaking, a correlated error contains large-scale (low-frequency) components that can corrupt the main spectral components of the true state in the same frequency range. Therefore, this type of error can be superimposed on the large-scale characteristic features of the initial state, and it is naturally more difficult to remove via DA than white error.

Fig. 4 Sample paths of the correlated background errors used: (a) a sample path for the AR(1) covariance matrix with $\alpha^{-1}=150$, and (b) a sample path for the AR(2) covariance matrix with $\alpha^{-1}=25$. The paths are generated by left-multiplying a standard white Gaussian noise vector by the lower-triangular matrix L, obtained by Cholesky factorisation of the background error covariance matrix, that is, $\mathbf{B}=\mathbf{L}\mathbf{L}^{\mathrm{T}}$. For small α, the sample paths exhibit large-scale oscillatory behaviour that can potentially corrupt low-frequency components of the underlying state.
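The Cholesky construction described in the Fig. 4 caption can be sketched as below. The grid size m = 512, the scale σ_b = 0.10, unit grid spacing and the AR(2) form $(1+\alpha|\tau|)\exp(-\alpha|\tau|)$ are illustrative assumptions; `sample_correlated_error` is a hypothetical helper name.

```python
import numpy as np

def sample_correlated_error(B, rng):
    """Draw one sample e ~ N(0, B) as e = L z, where B = L L^T and z ~ N(0, I)."""
    L = np.linalg.cholesky(B)            # lower-triangular Cholesky factor
    z = rng.standard_normal(B.shape[0])  # standard white Gaussian noise
    return L @ z                         # left-multiply the noise by L

m, sigma_b = 512, 0.10                   # illustrative values (assumed)
lag = np.abs(np.subtract.outer(np.arange(m), np.arange(m))).astype(float)
B_ar1 = sigma_b**2 * np.exp(-lag / 150.0)                        # alpha^-1 = 150
B_ar2 = sigma_b**2 * (1.0 + lag / 25.0) * np.exp(-lag / 25.0)    # alpha^-1 = 25

rng = np.random.default_rng(0)
e_ar1 = sample_correlated_error(B_ar1, rng)
e_ar2 = sample_correlated_error(B_ar2, rng)
```

Plotting `e_ar1` and `e_ar2` against the grid index should show the large-scale oscillations described above, in contrast to a white-noise path drawn with `rng.standard_normal(m)`.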

4.3. Results of assimilation experiments

In this subsection, we present the results of the proposed regularised DA as expressed in eq. (12). We first present the results for the white background error and then discuss the correlated error scenarios. As previously explained, the first two initial conditions exhibit sharp transitions and are naturally sparse in the wavelet domain. For those initial states (a, b), we used the classic orthogonal wavelet transform of Mallat (1989). In this case, the rows of Φ contain the chosen wavelet basis, which allows us to decompose the initial state of interest into its wavelet representation coefficients as $\mathbf{c}=\boldsymbol{\Phi}\mathbf{x}$ (forward wavelet transform). Conversely, owing to the orthogonality of the chosen wavelet, $\boldsymbol{\Phi}\boldsymbol{\Phi}^{\mathrm{T}}=\mathbf{I}$, and the columns of $\boldsymbol{\Phi}^{\mathrm{T}}$ contain the wavelet basis that allows us to reconstruct the initial state from its wavelet representation coefficients, that is, $\mathbf{x}=\boldsymbol{\Phi}^{\mathrm{T}}\mathbf{c}$ (inverse wavelet transform). We used a full level of decomposition, without any truncation of the wavelet decomposition levels, to produce a fully sparse representation of the initial state. For example, in our case, where $m=1024=2^{10}$, we used 10 levels of decomposition.
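To illustrate why a full-level decomposition yields a very sparse representation of a piecewise-constant state, the sketch below builds an orthonormal Haar analysis matrix Φ explicitly. The Haar wavelet is our choice for simplicity (this passage does not name the wavelet family), and the top-hat bounds are arbitrary; `haar_matrix` is a hypothetical helper.

```python
import numpy as np

def haar_matrix(m):
    """Orthonormal Haar analysis matrix Phi (rows are basis functions).

    Each recursion level applies one averaging/differencing step, so for
    m = 2**J it performs a full J-level decomposition. m must be a power of 2.
    """
    if m == 1:
        return np.array([[1.0]])
    coarse = haar_matrix(m // 2)
    averages = np.kron(coarse, np.array([[1.0, 1.0]]))          # coarser scales
    details = np.kron(np.eye(m // 2), np.array([[1.0, -1.0]]))   # finest-scale details
    return np.vstack([averages, details]) / np.sqrt(2.0)

m = 1024                                 # 2**10, i.e. 10 decomposition levels
Phi = haar_matrix(m)
x = np.zeros(m)
x[300:700] = 1.0                         # a flat top-hat with two sharp jumps

c = Phi @ x                              # forward transform, c = Phi x
x_rec = Phi.T @ c                        # inverse transform, x = Phi^T c
n_nonzero = np.count_nonzero(np.abs(c) > 1e-10)
print(f"{n_nonzero} of {m} wavelet coefficients are nonzero")
```

Only coefficients whose support straddles one of the two jumps survive, so at most two detail coefficients per level plus the single scaling coefficient are nonzero.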

For the last two initial states (c, d), we used the discrete cosine transform (DCT; e.g. Rao and Yip, 1990), which expresses the state of interest as a linear combination of oscillatory cosine functions at different frequencies. This basis is well known for its strong energy compaction: it captures the energy content of sufficiently smooth states and represents them sparsely with a few elementary cosine waveforms. Note that this transform is also orthogonal ($\boldsymbol{\Phi}\boldsymbol{\Phi}^{\mathrm{T}}=\mathbf{I}$) and, unlike the Fourier transform, its expansion coefficients are real.
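The energy compaction of the DCT for smooth states can be seen with an explicitly constructed orthonormal DCT-II matrix. This is a minimal sketch: the DCT-II variant, the Gaussian test state and the choice of 20 retained coefficients are our illustrative assumptions, not the configuration used in the experiments.

```python
import numpy as np

def dct_matrix(m):
    """Orthonormal DCT-II analysis matrix: Phi[k, n] = s_k cos(pi k (n + 1/2) / m)."""
    k = np.arange(m)[:, None]
    n = np.arange(m)[None, :]
    Phi = np.sqrt(2.0 / m) * np.cos(np.pi * k * (n + 0.5) / m)
    Phi[0, :] /= np.sqrt(2.0)            # s_0 = sqrt(1/m), s_k = sqrt(2/m) otherwise
    return Phi

m = 256
Phi = dct_matrix(m)
grid = np.arange(m)
x = np.exp(-((grid - (m - 1) / 2) / 20.0) ** 2)   # a smooth, localised state
c = Phi @ x                                       # real-valued expansion coefficients

energy = np.sort(c ** 2)[::-1]
frac_top20 = energy[:20].sum() / energy.sum()
print(f"fraction of energy in the 20 largest DCT coefficients: {frac_top20:.6f}")
```

Because Φ is orthonormal, `energy.sum()` equals the squared norm of x (Parseval), so `frac_top20` measures how much of the state a 20-term cosine expansion retains.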

4.3.1. White background error.

For the white background and observation error covariance matrices ($\mathbf{B}=\sigma_b^2\mathbf{I}$ and $\mathbf{R}=\sigma_r^2\mathbf{I}$), we considered $\sigma_b=0.10$ and $\sigma_r=0.08$, respectively. Some results for the selected initial conditions are shown in Fig. 5. The $\ell_1$-norm regularised solution clearly and markedly outperforms the classic 4D-Var solutions in terms of the selected metrics. Indeed, in the regularised analysis the error is sufficiently suppressed and filtered, while the characteristic features of the initial state are well preserved. In contrast, the classic solutions typically overfit and follow the background state rather than recovering the true state. As a result, we can argue that, for the white error covariance, the classic 4D-Var has a very weak filtering effect, even though filtering is an essential component of an ideal DA scheme. This overfitting may be due to the redundant (over-determined) formulation of the classic 4D-Var; see Hawkins (2004) for a general explanation of overfitting in statistical estimators, and also Daley (1993, p. 41).

Fig. 5 Results of the classic 4D-Var (left panel) versus the $\ell_1$-norm R4D-Var (right panel) for the tested initial conditions in a white Gaussian error environment. The solid lines are the true initial conditions and the crosses represent the recovered initial states, that is, the analysis. In general, the classic 4D-Var results suffer from overfitting, whereas in the regularised analysis the background and observation errors are suppressed and the sharp transitions and peaks are effectively recovered.

The average results over 30 independent runs are reported in Table 1.

Table 1. Expected values of the $\mathrm{MSE}_r$, $\mathrm{MAE}_r$ and $\mathrm{BIAS}_r$, defined in eq. (20), for 30 independent runs

Three lumped quality metrics, defined in eq. (20), are examined: the relative mean squared error ($\mathrm{MSE}_r$), the relative mean absolute error ($\mathrm{MAE}_r$) and the relative bias ($\mathrm{BIAS}_r$). Eq. (20) compares the true initial condition with the analysis, and the overbar denotes the expected value. Based on these metrics, the $\ell_1$-norm R4D-Var significantly outperforms the classic 4D-Var. In general, the $\mathrm{MAE}_r$ metric improves more than the $\mathrm{MSE}_r$ metric in the presented experiments. The largest improvement is obtained for the FTH initial condition, which is much sparser than the other initial conditions: the $\mathrm{MSE}_r$ improves by almost three orders of magnitude, while the improvement in $\mathrm{MAE}_r$ reaches up to six orders of magnitude. Note that although trigonometric functions can be sparsely represented in the DCT domain, here we used a window sinusoid (WS), which has discontinuities at its edges and therefore cannot be perfectly sparsified in the DCT domain. Nevertheless, even under this weaker sparsity, the results of the $\ell_1$-norm R4D-Var are still much better than the classic solution.
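Eq. (20) itself is not reproduced in this section. As a rough guide only, the sketch below computes relative metrics of the kind listed, assuming the common relative-norm forms $\mathrm{MSE}_r=\|\hat{\mathbf{x}}-\mathbf{x}\|_2^2/\|\mathbf{x}\|_2^2$, $\mathrm{MAE}_r=\|\hat{\mathbf{x}}-\mathbf{x}\|_1/\|\mathbf{x}\|_1$ and a bias normalised by the mean of the true state; these forms and the function names are our assumptions and may differ from eq. (20).

```python
import numpy as np

def relative_metrics(x_true, x_hat):
    """Assumed relative metrics; eq. (20) may define them differently."""
    err = x_hat - x_true
    mse_r = np.sum(err**2) / np.sum(x_true**2)                # relative squared l2 error
    mae_r = np.sum(np.abs(err)) / np.sum(np.abs(x_true))      # relative l1 error
    bias_r = np.abs(np.mean(err)) / np.abs(np.mean(x_true))   # bias relative to the state mean
    return np.array([mse_r, mae_r, bias_r])

def expected_metrics(x_true, analyses):
    """Average the metrics over independent runs (one analysis per row)."""
    return np.mean([relative_metrics(x_true, xa) for xa in analyses], axis=0)

x_true = np.array([1.0, 2.0, 3.0, 4.0])
print(relative_metrics(x_true, x_true + 0.1))   # approx. [0.00133, 0.04, 0.04]
```

`expected_metrics` plays the role of the overbar: it averages the per-run metrics, here over whatever set of analyses is passed in (30 runs in the experiments above).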

4.3.2. Correlated background error.

In this part, the background error is considered to be correlated. As previously discussed, a longer correlation length typically makes the background error covariance matrix ill-conditioned and the problem less stable. On the other hand, a correlated background error covariance imposes smoothness on the analysis (see Gaspari and Cohn, 1999), improves the filtering effect, and makes the classic solution less prone to overfitting. In this subsection, we examine the effect of the correlation length on the DA solution and compare the results of the sparsity-promoting R4D-Var with those of the classic 4D-Var. Here, we do not apply any preconditioning, as the goal is to emphasise the stabilising role of the $\ell_1$-norm regularisation in the presented formulation. In addition, for brevity, the results are only reported for the top-hat (FTH) and WS initial conditions, which are solved in the wavelet and DCT domains, respectively.

a) Results for the AR(1) background error

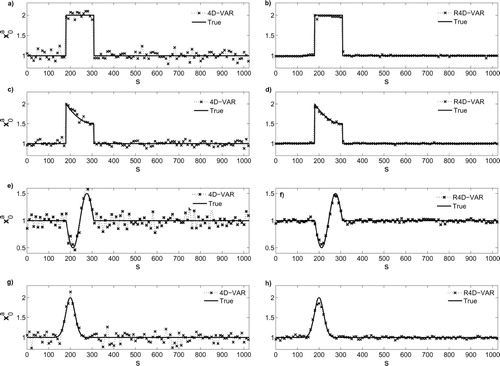

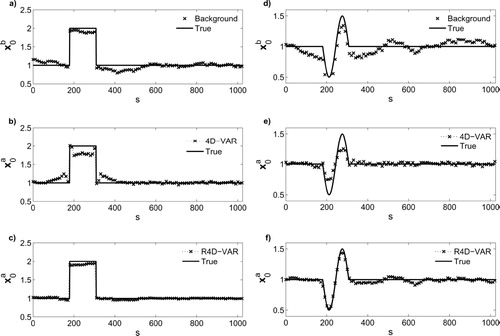

In this case, the background state is defined by adding AR(1) correlated error to the true state (Fig. 6a, d), which is known in these experimental studies. Fig. 6 demonstrates that, with correlated error, the classic 4D-Var is less prone to overfitting than with the uncorrelated error in Fig. 5. In particular, for the FTH initial condition with sharp transitions, the classic solution fails to capture the sharp jumps and is spoiled around those discontinuities (Fig. 6b). For the trigonometric initial condition (WS), the classic solution is overly smooth and cannot capture the peaks (Fig. 6e). These deficiencies of the classic solutions typically become more pronounced for larger correlation lengths and thus more ill-conditioned problems. In contrast, the $\ell_1$-norm R4D-Var markedly outperforms the classic method by improving the recovery of the sharp transitions in FTH and the peaks in WS (Fig. 6c, f).

Fig. 6 Comparison of the results of the classic 4D-Var (b, e) and the $\ell_1$-norm R4D-Var (c, f) for the top-hat (left panel) and window sinusoid (right panel) initial conditions. The background states in (a) and (d) are defined by adding correlated errors generated with an AR(1) covariance model with α=1/250. The results show that the $\ell_1$-norm R4D-Var improves the recovery of sharp jumps and peaks and yields a more stable solution than the classic 4D-Var; see Fig. 7 for quantitative results.

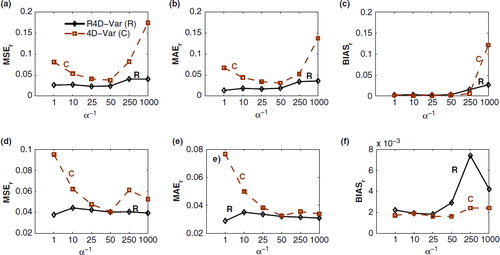

We examined a relatively wide range of applicable correlation lengths ($\alpha^{-1}$), which correspond to condition numbers $\kappa(\mathbf{B})$ of the background error covariance matrices ranging from $10^{1}$ to $10^{6}$ (see Fig. 3a). The assimilation results for the different correlation lengths are shown in Fig. 7. To draw robust conclusions when comparing the proposed R4D-Var with the classic 4D-Var, the plots in this figure show the expected values of the quality metrics over 30 independent runs.

Fig. 7 Comparison of the results of the proposed $\ell_1$-norm R4D-Var (solid lines) and the classic 4D-Var (broken lines) under the AR(1) background error for different characteristic correlation lengths ($\alpha^{-1}$). Top panel: (a–c) the chosen quality metrics for the top-hat initial condition (FTH); bottom panel: (d–f) the metrics for the window sinusoid initial condition (WS). These results, averaged over 30 independent runs, demonstrate significant improvements in the recovered analysis state by the proposed $\ell_1$-norm R4D-Var compared to the classic 4D-Var.

For small error correlation lengths, the improvement of the R4D-Var is very significant, while in the medium range the classic solution becomes more competitive and closer to the regularised analysis. As previously mentioned, this improvement of the classic solutions is mainly due to the smoothing effect of the background error covariance matrix. However, for larger correlation lengths, the differences between the two methods are more drastic, as the classic solutions become more unstable and fail to capture the underlying structure of the initial state of interest. In general, the $\mathrm{MSE}_r$ and $\mathrm{MAE}_r$ metrics are improved for all examined background error correlation lengths. As expected, the regularised solutions are slightly more biased than the classic solutions; however, the magnitude of the bias is not significant compared to the mean value of the initial state (see Fig. 7). Fig. 7 also shows a very important outcome of regularisation: the R4D-Var is almost insensitive to the studied range of correlation lengths, and thus to the condition number of the problem. This confirms the stabilising role of regularisation, which needs to be further studied for large-scale and operational DA problems. Another important observation is that, for an extremely correlated background error, the classic 4D-Var may produce an analysis with a larger bias than the proposed R4D-Var (Fig. 7c). This unexpected result might be due to a spurious bias in the background state caused by the strongly correlated error. In other words, a strongly correlated error may shift the mean value of the background state significantly and create a large bias in the solution of the classic 4D-Var. In this case, the improved performance of the R4D-Var may be due to its stronger stability and filtering properties.

b) Results for the AR(2) background error

The AR(2) model is suitable for errors with a higher-order Markovian structure than the AR(1) model. As seen in Fig. 3, the condition number of the AR(2) covariance matrix is much larger than that of the AR(1) for the same values of the parameter α. Here, we limited our experiments to fewer characteristic correlation lengths, because for larger values (slower correlation decay rates) the condition number of B exceeds $10^{8}$ and both methods generally failed to obtain the analysis without any preconditioning effort.

In our case study, for the smaller characteristic correlation lengths, with correspondingly smaller condition numbers, the proposed R4D-Var outperforms the 4D-Var, similar to what has been explained for the AR(1) error in the previous subsection. However, we found that for the larger correlation lengths, with correspondingly larger condition numbers, the conjugate gradient algorithm used fails to obtain the 4D-Var analysis state without proper preconditioning (Table 2). In contrast, owing to the proposed regularisation, the R4D-Var remains sufficiently stable, although its effectiveness deteriorates compared to the cases with lower condition numbers. This observation is consistent with the known role of regularisation in improving the conditioning of the VDA problem.

Table 2. Expected values of the $\mathrm{MSE}_r$, $\mathrm{MAE}_r$ and $\mathrm{BIAS}_r$, defined in eq. (20), for 30 independent runs

4.3.3. Selection of the regularisation parameters.

As previously explained, the regularisation parameter λ plays a very important role in making the analysis sufficiently faithful to the observations and background state while preserving the underlying regularity of the analysis. To the best of our knowledge, no general methodology exists that produces an exact, closed-form choice of this parameter, especially for the proposed $\ell_1$-norm regularisation (see Hansen, 2010, chap. 5). Here, we chose the regularisation parameter λ by trial and error based on a minimum mean squared error (MMSE) criterion. As a rule of thumb, we found that in general

yields reasonable results. We also found that, for a similar signal-to-noise ratio, the choice of λ depends on several important factors, such as the pre-selected basis, the degree of ill-conditioning of the problem and, more importantly, the ratio between the dominant frequency components of the state and those of the error.
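The trial-and-error MMSE selection can be illustrated on a toy problem in which the Hessian of the quadratic term is the identity, so that the $\ell_1$ problem is solved exactly by soft-thresholding. This is a simplified stand-in for eq. (12), not the experiment reported here; the sparsity level, the noise level σ = 0.5 and the λ grid are assumptions. As in the synthetic experiments above, the MMSE criterion requires a known true state.

```python
import numpy as np

def soft_threshold(v, lam):
    """Exact minimiser of 0.5*||c - v||^2 + lam*||c||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

rng = np.random.default_rng(42)
m, k, sigma = 1024, 20, 0.5                          # illustrative sizes and noise level
c_true = np.zeros(m)
support = rng.choice(m, size=k, replace=False)
c_true[support] = 5.0 * rng.choice([-1.0, 1.0], size=k)
c_noisy = c_true + sigma * rng.standard_normal(m)    # noisy coefficients in the chosen basis

lams = np.linspace(0.0, 3.0, 31)                     # candidate lambda values
mse = np.array([np.mean((soft_threshold(c_noisy, lam) - c_true) ** 2) for lam in lams])
lam_best = lams[np.argmin(mse)]
print(f"MMSE choice: lambda = {lam_best:.1f}, MSE = {mse.min():.4f} (lambda = 0: {mse[0]:.4f})")
```

λ = 0 simply returns the noisy coefficients, so the sweep shows directly how much a well-chosen λ filters; in a real 4D-Var setting each grid point would require a full R4D-Var solve instead of a closed-form shrinkage.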

5. Summary and discussion

We have discussed the concept of sparse regularisation in VDA and examined a simple but important application of the proposed problem formulation to the advection–diffusion equation. In particular, we extended the classic formulations by leveraging sparsity to solve DA problems in the wavelet and spectral domains. The basic claim is that, if the underlying state of interest exhibits sparsity in a pre-selected basis, this prior information can serve to further constrain and improve the quality of the analysis cycle and thus the forecast skill. We demonstrated that the RVDA not only shows better interpolation properties but also exhibits improved filtering attributes, by effectively removing small-scale noisy features that may not satisfy the underlying governing physical laws. Furthermore, we argued that the $\ell_1$-norm RVDA is more robust to possible ill-conditioning of the DA problem and leads to a more stable analysis than the classic methods.

We explained that, from a statistical point of view, this prior knowledge reflects the intrinsic non-Gaussian spatial structure of the state variable of interest, which can be well parameterised and modelled in a properly chosen basis. We discussed that the selection of the sparsifying basis can be seen as a statistical model selection problem, which can be guided by studying the distribution of the representation coefficients.

Note that the initial conditions examined in this study were selected to be strictly sparse in the pre-selected basis, a property that may be compromised under realistic conditions. Additional research is required to characterise the sparsity of geophysical signals in the strict and weak senses. From a theoretical perspective, further research needs to be devoted to developing methodologies to: (a) characterise the analysis covariance, especially using ensemble-based approaches; (b) automate the selection of the regularisation parameter and study its impact on various applications of DA problems; (c) apply the methodology in an incremental setting to tackle non-linear observation operators (Courtier et al., 1994); and (d) study the role of preconditioning of the background error covariance for very ill-conditioned DA problems in RVDA settings.

Furthermore, a promising area of future research is to develop and test the $\ell_1$-norm RVDA for non-linear measurement and model equations in a variational–ensemble DA setting. A crude framework can be cast as follows: (1) given the analysis and its covariance at the previous time step, generate an ensemble of analysis states; (2) propagate the analysis ensemble through the model equation to generate forecast (background) ensembles, and compute the background ensemble mean and covariance; (3) given the background ensemble, obtain observation ensembles via the observation equation and compute the ensemble observation covariance; (4) solve an $\ell_1$-norm RVDA problem similar to that of eq. (12) for each ensemble member to obtain the ensemble analysis states at the present time; (5) compute the ensemble analysis mean and covariance and use them to forecast the next time step; and (6) repeat the recursion.

6. Acknowledgements

This work was mainly supported by a NASA Earth and Space Science Fellowship (NESSF-NNX12AN45H), a Doctoral Dissertation Fellowship (DDF) of the University of Minnesota Graduate School to the first author, and the NASA Global Precipitation Measurement award (NNX07AD33G). Partial support by an NSF award (DMS-09-56072) to the third author is also gratefully acknowledged. Special thanks also go to Arthur Hou and Sara Zhang at NASA-Goddard Space Flight Center for their support and insightful discussions.

References

- Afshar A, Marino M. A. Model for simulating soil-water content considering evapotranspiration. J. Hydrol. 1978; 37: 309–322.

- Anderson J. L. An ensemble adjustment Kalman filter for data assimilation. Mon. Weather Rev. 2001; 129: 2884–2903.

- Bai Z, Demmel J, Dongarra J, Ruhe A, Van Der Vorst H. Templates for the Solution of Algebraic Eigenvalue Problems: A Practical Guide. 1987; 11. Philadelphia: SIAM.

- Barnard J, McCulloch R, Meng X. Modeling covariance matrices in terms of standard deviations and correlations, with application to shrinkage. Stat. Sin. 2000; 10: 1281–1312.

- Bennett A. F, McIntosh P. C. Open ocean modeling as an inverse problem: tidal theory. J. Phys. Oceanogr. 1982; 12: 1004–1018.

- Bertsekas D. On the Goldstein-Levitin-Polyak gradient projection method. IEEE Trans. Automat. Contr. 1976; 21: 174–184.

- Bertsekas D. P. Nonlinear Programming. 2nd ed. 1999; Belmont, MA: Athena Scientific. 794.

- Boyd S, Vandenberghe L. Convex Optimization. 2004; New York: Cambridge University Press. 716.

- Budd C, Freitag M, Nichols N. Regularization techniques for ill-posed inverse problems in data assimilation. Comput. Fluids. 2011; 46: 168–173.

- Candes E, Tao T. Near-optimal signal recovery from random projections: universal encoding strategies? IEEE Trans. Inform. Theor. 2006; 52: 5406–5425.

- Chan R. H.-F, Jin X.-Q. An Introduction to Iterative Toeplitz Solvers. 2007; Philadelphia: SIAM.

- Chapra S. C. Surface Water Quality Modeling. 2008; Long Grove, IL, USA: Waveland Press, Inc.

- Chen S, Donoho D, Saunders M. Atomic decomposition by basis pursuit. SIAM Rev. 2001; 43: 129–159.

- Chen S. S, Donoho D, Saunders M. Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 1998; 20: 33–61.

- Courtier P, Talagrand O. Variational assimilation of meteorological observations with the direct and adjoint shallow-water equations. Tellus A. 1990; 42: 531–549.

- Courtier P, Thépaut J.-N, Hollingsworth A. A strategy for operational implementation of 4D-VAR, using an incremental approach. Q. J. Roy. Meteorol. Soc. 1994; 120: 1367–1387.

- Daley R. Atmospheric Data Analysis. 1993; New York, NY, USA: Cambridge University Press. 472.

- Durrett R. Essentials of Stochastic Processes. 1999; New York, NY, USA: Springer-Verlag.

- Ebtehaj A. M, Foufoula-Georgiou E. Statistics of precipitation reflectivity images and cascade of Gaussian-scale mixtures in the wavelet domain: a formalism for reproducing extremes and coherent multiscale structures. J. Geophys. Res. 2011; 116: D14110.

- Ebtehaj A. M, Foufoula-Georgiou E. On variational downscaling, fusion and assimilation of hydro-meteorological states: a unified framework via regularization. 2013; 49: 5944–5963.

- Ebtehaj A. M, Foufoula-Georgiou E, Lerman G. Sparse regularization for precipitation downscaling. J. Geophys. Res. 2012; 116: D22110.

- Elad M. Sparse and Redundant Representations: From Theory to Applications in Signal and Image Processing. 2010; New York, NY, USA: Springer-Verlag. 376.

- Evensen G. Sequential data assimilation with a nonlinear quasi-geostrophic model using Monte Carlo methods to forecast error statistics. J. Geophys. Res. 1994a; 99: 10143–10162.

- Evensen G. Inverse methods and data assimilation in nonlinear ocean models. Physica D. 1994b; 77: 108–129.

- Fetter C. Applied Hydrogeology. 4th ed. 1994; New Jersey: Prentice Hall.

- Figueiredo M, Nowak R, Wright S. Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Topics Signal Process. 2007; 1: 586–597.

- Freitag M. A, Nichols N. K, Budd C. J. L1-regularisation for ill-posed problems in variational data assimilation. PAMM. 2010; 10: 665–668.

- Freitag M. A, Nichols N. K, Budd C. J. Resolution of sharp fronts in the presence of model error in variational data assimilation. Q. J. Roy. Meteorol. Soc. 2012; 139: 742–757.

- Gaspari G, Cohn S. E. Construction of correlation functions in two and three dimensions. Q. J. Roy. Meteorol. Soc. 1999; 125: 723–757.

- Ghil M. Meteorological data assimilation for oceanographers. Part I: description and theoretical framework. Dynam. Atmos. Oceans. 1989; 13: 171–218.

- Ghil M, Cohn S, Tavantzis J, Bube K, Isaacson E, Bengtsson L, Ghil M, Källén E. Applications of estimation theory to numerical weather prediction. Dynamic Meteorology: Data Assimilation Methods, Applied Mathematical Sciences, Vol. 36. 1981; New York: Springer. 139–224.

- Ghil M, Malanotte-Rizzoli P. Data Assimilation in Meteorology and Oceanography. 1991; Amsterdam, Netherlands: Elsevier Science B.V. 141–266.

- Golub G, Hansen P, O'Leary D. Tikhonov regularization and total least squares. SIAM J. Matrix Anal. Appl. 1999; 21: 185–194.

- Hansen P. Rank-Deficient and Discrete Ill-Posed Problems: Numerical Aspects of Linear Inversion. 1998; 4. Philadelphia: Society for Industrial and Applied Mathematics (SIAM).

- Hansen P. Discrete Inverse Problems: Insight and Algorithms, Vol. 7. 2010; Philadelphia, PA: Society for Industrial and Applied Mathematics (SIAM).

- Hansen P, O'Leary D. The use of the L-curve in the regularization of discrete ill-posed problems. SIAM J. Sci. Comput. 1993; 14: 1487–1503.

- Hansen P, Nagy J, O'Leary D. Deblurring Images: Matrices, Spectra, and Filtering, Vol. 3. 2006; Philadelphia, PA: Society for Industrial and Applied Mathematics (SIAM).

- Hawkins D. M. The problem of overfitting. J. Chem. Inf. Comput. Sci. 2004; 44: 1–12.

- Hu Z, Islam S. Prediction of ground surface temperature and soil moisture content by the force–restore method. Water Resour. Res. 1995; 31: 2531–2539.

- Ide K, Courtier P, Ghil M, Lorenc A. Unified notation for data assimilation: operational, sequential, and variational. J. Met. Soc. Japan. 1997; 75: 181–189.

- Jochum M, Murtugudde R. Temperature advection by tropical instability waves. J. Phys. Oceanogr. 2006; 36: 592–605.

- Johnson C, Hoskins B. J, Nichols N. K. A singular vector perspective of 4D-Var: filtering and interpolation. Q. J. Roy. Meteorol. Soc. 2005a; 131: 1–19.

- Johnson C, Nichols N. K, Hoskins B. J. Very large inverse problems in atmosphere and ocean modelling. Int. J. Numer. Meth. Fluids. 2005b; 47: 759–771.

- Kalnay E. Atmospheric Modeling, Data Assimilation, and Predictability. 2003; New York: Cambridge University Press. 341.

- Kim S.-J, Koh K, Lustig M, Boyd S, Gorinevsky D. An interior-point method for large-scale l1-regularized least squares. IEEE J. Sel. Topics Signal Process. 2007; 1: 606–617.

- Kleist D. T, Parrish D. F, Derber J. C, Treadon R, Wu W. S, co-authors. Introduction of the GSI into the NCEP global data assimilation system. Weather Forecast. 2009; 24: 1691–1705.

- Lanser D, Verwer J. Analysis of operator splitting for advection–diffusion–reaction problems from air pollution modelling. J. Comput. Appl. Math. 1999; 111: 201–216.

- Law K. J. H, Stuart A. M. Evaluating data assimilation algorithms. Mon. Weather Rev. 2012; 140(11): 3757–3782.

- Levy B. C. Principles of Signal Detection and Parameter Estimation. 1st ed. 2008; New York: Springer Publishing Company. 639.

- Lewicki M, Sejnowski T. Learning overcomplete representations. Neural Comput. 2000; 12: 337–365.

- Liang X, Wood E. F, Lettenmaier D. P. Modeling ground heat flux in land surface parameterization schemes. J. Geophys. Res. 1999; 104: 9581–9600.

- Lin Y.-L, Deal R. L, Kulie M. S. Mechanisms of cell regeneration, development, and propagation within a two-dimensional multicell storm. J. Atmos. Sci. 1998; 55: 1867–1886.

- Lorenc A. Optimal nonlinear objective analysis. Q. J. Roy. Meteorol. Soc. 1988; 114: 205–240.

- Lorenc A. C. Analysis methods for numerical weather prediction. Q. J. Roy. Meteorol. Soc. 1986; 112: 1177–1194.

- Lorenc A. C, Ballard S. P, Bell R. S, Ingleby N. B, Andrews P. L. F, co-authors. The Met. Office global three-dimensional variational data assimilation scheme. Q. J. Roy. Meteorol. Soc. 2000; 126: 2991–3012.

- Mallat S. A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989; 11: 674–693.

- Mallat S. A Wavelet Tour of Signal Processing: The Sparse Way. 3rd ed. 2009; Elsevier. 805.

- Moradkhani H, Hsu K.-L, Gupta H, Sorooshian S. Uncertainty assessment of hydrologic model states and parameters: sequential data assimilation using the particle filter. Water Resour. Res. 2005; 41: W05012.

- Nadarajah S. A generalized normal distribution. J. Appl. Stat. 2005; 32: 685–694.

- Neumaier A. Solving ill-conditioned and singular linear systems: a tutorial on regularization. SIAM Rev. 1998; 40: 636–666.

- Parrish D. F, Derber J. C. The National Meteorological Center's spectral statistical-interpolation analysis system. Mon. Weather Rev. 1992; 120: 1747–1763.

- Peters-Lidard C. D, Zion M. S, Wood E. F. A soil–vegetation–atmosphere transfer scheme for modeling spatially variable water and energy balance processes. J. Geophys. Res. 1997; 102: 4303–4324.

- Rabier F, Järvinen H, Klinker E, Mahfouf J.-F, Simmons A. The ECMWF operational implementation of four-dimensional variational assimilation. I: experimental results with simplified physics. Q. J. Roy. Meteorol. Soc. 2000; 126: 1143–1170.

- Rao K, Yip P. Discrete Cosine Transform: Algorithms, Advantages, Applications. 1990; Boston: Academic Press.

- Rasmussen C, Williams C. Gaussian Processes for Machine Learning, Vol. 1. 2006; Cambridge, MA: MIT Press.

- Rawlins F, Ballard S. P, Bovis K. J, Clayton A. M, Li D, co-authors. The Met Office global four-dimensional variational data assimilation scheme. Q. J. Roy. Meteorol. Soc. 2007; 133: 347–362.

- Sasaki Y. Some basic formalisms in numerical variational analysis. Mon. Weather Rev. 1970; 98: 875–883.

- Serafini T, Zanghirati G, Zanni L. Gradient projection methods for quadratic programs and applications in training support vector machines. Optim. Methods Softw. 2005; 20: 353–378.

- Smith K. S, Marshall J. Evidence for enhanced eddy mixing at middepth in the Southern Ocean. J. Phys. Oceanogr. 2009; 39: 50–69.

- Stein M. L. Interpolation of Spatial Data. 1999; New York: Springer-Verlag.

- Tibshirani R. Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 1996; 58: 267–288.

- Tikhonov A, Arsenin V, John F. Solutions of Ill-Posed Problems. 1977; Washington, DC: Winston & Sons.

- Van Leeuwen P. J. Nonlinear data assimilation in geosciences: an extremely efficient particle filter. Q. J. Roy. Meteorol. Soc. 2010; 136: 1991–1999.

- Wahba G, Wendelberger J. Some new mathematical methods for variational objective analysis using splines and cross validation. Mon. Weather Rev. 1980; 108: 1122–1143.

- Zhou Y, McLaughlin D, Entekhabi D. Assessing the performance of the ensemble Kalman filter for land surface data assimilation. Mon. Weather Rev. 2006; 134: 2128–2142.

- Zupanski M. Regional four-dimensional variational data assimilation in a quasi-operational forecasting environment. Mon. Weather Rev. 1993; 121: 2396–2408.

7. Appendix

A.1 Quadratic Programming form of the $\ell_1$-norm RVDA

To obtain the quadratic programming (QP) form presented in eq. (14), we follow the general strategy proposed in the seminal work of Chen et al. (2001). To this end, let us expand the $\ell_1$-norm regularised variational data assimilation ($\ell_1$-RVDA) problem in eq. (12) as follows:

A.1

Assuming $\mathbf{c}_0=\boldsymbol{\Phi}\mathbf{x}_0$, the above problem can be rewritten as

A.2

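where $\ldots$ and $\mathbf{b}=-\boldsymbol{\Phi}^{-\mathrm{T}}(\ldots)$. Writing $\mathbf{c}_0=\mathbf{u}_0-\mathbf{v}_0$, where $\mathbf{u}_0=\max(\mathbf{c}_0,\mathbf{0})$ and $\mathbf{v}_0=\max(-\mathbf{c}_0,\mathbf{0})$ (element-wise) encode the positive and negative components of $\mathbf{c}_0$, problem (A.2) can be represented as follows: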

A.3

Stacking $\mathbf{u}_0$ and $\mathbf{v}_0$ in $\mathbf{w}_0=[\mathbf{u}_0^{\mathrm{T}},\,\mathbf{v}_0^{\mathrm{T}}]^{\mathrm{T}}$, the more standard QP formulation of the problem follows immediately:

A.4

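Having obtained $\hat{\mathbf{w}}_0=[\hat{\mathbf{u}}_0^{\mathrm{T}},\,\hat{\mathbf{v}}_0^{\mathrm{T}}]^{\mathrm{T}}$ as the solution of (A.4), one can easily recover $\hat{\mathbf{c}}_0=\hat{\mathbf{u}}_0-\hat{\mathbf{v}}_0$ and thus the initial state of interest $\hat{\mathbf{x}}_0=\boldsymbol{\Phi}^{-1}\hat{\mathbf{c}}_0$.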

The dimension of the QP representation (A.4) is twice that of the original $\ell_1$-RVDA problem (A.1). However, with iterative first-order gradient-based methods, which are often the only practical option for large-scale data assimilation problems, the effect of this enlargement on the overall computational cost is minor: evaluating the gradient of the cost function in (A.4) only requires the product of the Hessian of the quadratic term in (A.2) with $\mathbf{u}_0-\mathbf{v}_0$, which is a matrix–vector multiplication in the original $m$-dimensional space (e.g. Figueiredo et al., 2007).
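The bookkeeping of this split can be sketched in NumPy, assuming (our notation, not necessarily that of eq. (14)) that (A.2) has the generic form $\tfrac12\mathbf{c}_0^{\mathrm{T}}\mathbf{Q}\mathbf{c}_0+\mathbf{b}^{\mathrm{T}}\mathbf{c}_0+\lambda\|\mathbf{c}_0\|_1$ with a symmetric positive definite matrix Q. The doubled QP is built explicitly here only to check that the gradient needs a single product with Q; the helper names and numbers are hypothetical.

```python
import numpy as np

def qp_from_l1(Q, b, lam):
    """Explicit data (H, g) of the QP  min 0.5 w^T H w + g^T w  s.t. w >= 0, w = [u; v]."""
    m = Q.shape[0]
    H = np.block([[Q, -Q], [-Q, Q]])                 # 2m x 2m, built here only for checking
    g = lam * np.ones(2 * m) + np.concatenate([b, -b])
    return H, g

def qp_gradient(w, Q, b, lam):
    """Gradient H w + g computed with a single m x m product Q (u - v)."""
    m = Q.shape[0]
    Qc = Q @ (w[:m] - w[m:])
    return np.concatenate([Qc + b, -Qc - b]) + lam

rng = np.random.default_rng(0)
A = rng.standard_normal((5, 5))
Q = A @ A.T + 5.0 * np.eye(5)                        # stand-in symmetric positive definite Q
b = rng.standard_normal(5)
lam = 0.3
H, g = qp_from_l1(Q, b, lam)
w = rng.random(10)                                   # any non-negative point
print(np.allclose(qp_gradient(w, Q, b, lam), H @ w + g))   # True
```

When u and v are complementary (u_i v_i = 0 for every i), the linear term λΣw equals λ‖c₀‖₁, which is why the QP and the original non-smooth problem share the same minimiser.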

A.2 Upper Bound of the Regularisation Parameter

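Here, to derive the upper bound of the regularisation parameter in the $\ell_1$-RVDA problem, we follow an approach similar to that suggested, for example, by Kim et al. (2007). Let us refer back to problem (A.2), which is convex but not differentiable at the origin. Denoting its cost function by $\mathcal{J}(\mathbf{c}_0)$, convexity implies that $\hat{\mathbf{c}}_0=\mathbf{0}$ is a minimiser if and only if

$$\mathbf{0}\in\partial\mathcal{J}(\mathbf{0}),$$

where $\partial\mathcal{J}(\cdot)$ denotes the subdifferential set at the solution point, that is, at the analysis coefficients in the selected basis. Given that the subdifferential of $\|\mathbf{c}_0\|_1$ at the origin is $[-1,1]^m$ and that the gradient of the quadratic part of $\mathcal{J}$ at the origin is $\mathbf{b}$, we have

$$\partial\mathcal{J}(\mathbf{0})=\mathbf{b}+\lambda\,[-1,1]^m,$$

and thus the condition $\mathbf{0}\in\partial\mathcal{J}(\mathbf{0})$ yields the vector inequality

$$-\lambda\mathbf{1}\le\mathbf{b}\le\lambda\mathbf{1},$$

which implies that $\lambda\ge\|\mathbf{b}\|_\infty$. Therefore λ must be less than $\|\mathbf{b}\|_\infty$ to obtain nonzero analysis coefficients in problem (A.2) and thus (A.1).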
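Under the assumed generic form $\tfrac12\mathbf{c}_0^{\mathrm{T}}\mathbf{Q}\mathbf{c}_0+\mathbf{b}^{\mathrm{T}}\mathbf{c}_0+\lambda\|\mathbf{c}_0\|_1$ for (A.2), the bound is easy to check numerically when Q is diagonal, because the problem then separates into scalar soft-thresholding problems with a closed-form solution. A minimal sketch with hypothetical names and made-up numbers:

```python
import numpy as np

def lambda_max(b):
    """Smallest lambda for which c0 = 0 solves 0.5 c^T Q c + b^T c + lam*||c||_1."""
    return np.max(np.abs(b))

def l1_solution_diagonal(q, b, lam):
    """Exact minimiser for a diagonal Hessian Q = diag(q), q > 0 (separable problem)."""
    return -np.sign(b) * np.maximum(np.abs(b) - lam, 0.0) / q

q = np.array([1.0, 2.0, 0.5])
b = np.array([0.3, -1.2, 0.7])
lam_up = lambda_max(b)                                   # 1.2
print(l1_solution_diagonal(q, b, lam_up))                # all zeros
print(l1_solution_diagonal(q, b, 0.9 * lam_up))          # first nonzero coefficient appears
```

Just below the bound, only the coefficient with the largest |b_i| becomes active, which matches the subdifferential argument above.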

A.3 Gradient Projection Method

The gradient projection (GP) method is an efficient and convergent method for solving convex optimisation problems over convex sets (see Bertsekas, 1999, p. 228). It is of particular interest when the constraints form a convex set with a simple projection operator. The cost function $\mathcal{F}(\mathbf{w}_0)$ in eq. (14) is a quadratic function that needs to be minimised on the non-negative orthant $\mathbb{R}^{2m}_{+}$ as follows:

$$\min_{\mathbf{w}_0}\ \mathcal{F}(\mathbf{w}_0)\quad\text{subject to}\quad\mathbf{w}_0\ge\mathbf{0}. \tag{A.5}$$

For this particular problem, the GP method amounts to finding the following fixed point:

$$\mathbf{w}_0=\mathcal{P}\big(\mathbf{w}_0-\beta\,\nabla\mathcal{F}(\mathbf{w}_0)\big), \tag{A.6}$$

where β is a step size along the descent direction and, for every element of $\mathbf{w}_0$,

$$[\mathcal{P}(\mathbf{w}_0)]_i=\max\big([\mathbf{w}_0]_i,\,0\big) \tag{A.7}$$

denotes the Euclidean projection operator onto the non-negative orthant. The fixed point can then be obtained iteratively as

$$\mathbf{w}_0^{k+1}=\mathcal{P}\big(\mathbf{w}_0^{k}-\beta_k\,\nabla\mathcal{F}(\mathbf{w}_0^{k})\big). \tag{A.8}$$

Thus, if the descent step at iteration k is feasible, that is, $\mathbf{w}_0^{k}-\beta_k\nabla\mathcal{F}(\mathbf{w}_0^{k})\ge\mathbf{0}$, the GP iteration reduces to an ordinary unconstrained steepest descent step; otherwise, the result is mapped back onto the feasible set by the projection operator in (A.7). In effect, each GP iteration takes a steepest descent step and then moves to the closest feasible point in the constraint set.

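In our study, the step size $\beta_k$ was selected using the Armijo rule, also known as backtracking line search, which is a convergent and very effective step-size rule. This rule depends on two constants, $\sigma\in(0,1)$ and $\rho\in(0,1)$, and sets $\beta_k=\rho^{m_k}$, where $m_k$ is the smallest non-negative integer for which

$$\mathcal{F}(\mathbf{w}_0^{k})-\mathcal{F}\big(\mathcal{P}(\mathbf{w}_0^{k}-\rho^{m_k}\nabla\mathcal{F}(\mathbf{w}_0^{k}))\big)\ \ge\ \sigma\,\nabla\mathcal{F}(\mathbf{w}_0^{k})^{\mathrm{T}}\big(\mathbf{w}_0^{k}-\mathcal{P}(\mathbf{w}_0^{k}-\rho^{m_k}\nabla\mathcal{F}(\mathbf{w}_0^{k}))\big). \tag{A.9}$$

This line search begins with a unit step size in the direction of the negative gradient and reduces it by the factor ρ until the sufficient-decrease condition in (A.9) is met. In our experiments, the backtracking parameters are set to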

and

(see Boyd and Vandenberghe, 2004, p. 464, for further explanation). In our implementation, the iterations terminate if

or if the number of iterations exceeds 100.
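The projected-gradient iteration (A.8) with Armijo backtracking along the projection arc (A.9) can be sketched as follows, again assuming the generic form $\tfrac12\mathbf{c}^{\mathrm{T}}\mathbf{Q}\mathbf{c}+\mathbf{b}^{\mathrm{T}}\mathbf{c}+\lambda\|\mathbf{c}\|_1$ for (A.2). The constants σ = 1e-4 and ρ = 0.5 and the relative-change stopping test are illustrative assumptions, not the values used in the experiments; only the default cap of 100 iterations follows the text.

```python
import numpy as np

def gradient_projection(Q, b, lam, rho=0.5, sigma=1e-4, tol=1e-8, max_iter=100):
    """Minimise F(w) = 0.5 c^T Q c + b^T c + lam * sum(w), c = u - v, over w = [u; v] >= 0."""
    m = Q.shape[0]

    def F(w):
        c = w[:m] - w[m:]
        return 0.5 * c @ Q @ c + b @ c + lam * np.sum(w)

    def grad(w):
        Qc = Q @ (w[:m] - w[m:])               # single product with Q per gradient
        return np.concatenate([Qc + b, -Qc - b]) + lam

    w = np.zeros(2 * m)                         # feasible starting point
    for _ in range(max_iter):
        g, Fw = grad(w), F(w)
        step = 1.0                              # start from a unit step
        while True:
            w_new = np.maximum(w - step * g, 0.0)      # projection onto w >= 0, as in (A.7)
            if Fw - F(w_new) >= sigma * (g @ (w - w_new)) or step < 1e-12:
                break                           # sufficient decrease, as in (A.9)
            step *= rho                         # backtrack
        done = np.linalg.norm(w_new - w) <= tol * max(1.0, np.linalg.norm(w))
        w = w_new
        if done:
            break
    return w[:m] - w[m:]                        # recover c = u - v

# Check against the closed-form solution for a diagonal Q
q = np.linspace(1.0, 2.0, 8)
b = np.random.default_rng(3).standard_normal(8)
c_gp = gradient_projection(np.diag(q), b, lam=0.5)
c_exact = -np.sign(b) * np.maximum(np.abs(b) - 0.5, 0.0) / q
print(np.max(np.abs(c_gp - c_exact)))
```

Starting from w = 0 keeps every iterate feasible, and because the projection is a simple element-wise maximum, each iteration costs essentially one product with Q plus a few function evaluations inside the backtracking loop.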