?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Many researchers have tried to model how environmental knowledge is learned by the brain and used in the form of cognitive maps. However, previous work was limited in various important ways: there was little consensus on how these cognitive maps were formed and represented, the planning mechanism was inherently limited to performing relatively simple tasks, and there was little consideration of how these mechanisms would scale up. This paper makes several significant advances. Firstly, the planning mechanism used by the majority of previous work propagates a decaying signal through the network to create a gradient that points towards the goal. However, this decaying signal limited the scale and complexity of tasks that can be solved in this manner. Here we propose several ways in which a network can can self-organize a novel planning mechanism that does not require decaying activity. We also extend this model with a hierarchical planning mechanism: a layer of cells that identify frequently-used sequences of actions and reuse them to significantly increase the efficiency of planning. We speculate that our results may explain the apparent ability of humans and animals to perform model-based planning on both small and large scales without a noticeable loss of efficiency.

Introduction

Statement of research question

How might a biologically plausible neural network based on the architecture and operational principles of the brain learn to perform hierarchical model-based action selection as it explores a sensory environment?

Broad rationale for research question

The experiments of Tolman (Tolman Citation1938, Citation1948; Tolman et al. Citation1946) suggested that rats attain an internal model of their environment, called a cognitive map. Furthermore, they suggested that this cognitive map was necessary to respond quickly to changes in the reward available in an environment or to changes in the structure of the environment.Footnote1 Examples of these are latent learning tasks and detour tasks, described more fully in Section 1.3.

Extensive modelling efforts (Dolan and Dayan Citation2013) have validated this claim. Despite the development of sophisticated “model-free” algorithms it has thus far been impossible to replicate certain observed behaviours without using a cognitive map representation (Russek et al. Citation2016; Fakhari et al. Citation2018).

Several neural network models have studied the formation and use of cognitive maps.Footnote2 However, many of the major questions around map-based planning have not yet been fully answered. How are spatial and non-spatial cognitive maps formed, stored, retrieved and used at the neural level? Furthermore, how are these processes affected by scale? It is uncertain how the storage and usage of cognitive maps differs between the small-scale mazes used in rat-based experiments and the large-scale maps of, say, a town, that a human would use to navigate. These problems are considered in Sections 3 and 5.

There is some evidence that map-based planning can take place on very short timescales but also on much longer timescales, frequently abstracted from much detail about individual muscle movements (Botvinick et al. Citation2009; Ramkumar et al. Citation2016). Behavioural research is currently investigating such “high-level” or “hierarchical” environment representations and map-based planning, and the results of these investigations are detailed in Sec. 1.5. Furthermore, a considerable amount of work in artificial intelligence research has been dedicated to investigating different forms of hierarchical planning in the context of Markov Decision Processes (MDPs) and Reinforcement Learning (RL); this research is discussed further in Sec. 1.6.

We believe that by modelling hierarchical, map-based planning in a biologically plausible neural network architecture – one which relies on local Hebbian learning to model synaptic plasticity and which self-organizes its connectivity from sensory and motor inputs as the simulated agent explores its environment – we can begin to produce predictions of the neural and behavioural correlates that might accompany this form of planning. In particular, to produce predictions about how an unsupervised neural architecture could learn to represent useful sections of previously experienced trajectories and use these to plan at larger scales with greater efficiency, yet still in a biologically plausible fashion. Furthermore, by investigating the constraints of such a model, we can predict the features that a planning model needs to have in order to begin planning hierarchically, therefore constraining the space of possible action selection models.

Neurological investigation of cognitive maps

Although Tolman’s experiments were not conclusive, the cognitive map hypothesis has been greatly strengthened by further experimental evidence since it was originally proposed. In particular, the discovery of place cells in the hippocampus (O’Keefe and Nadel Citation1978) gave a neural substrate in which the cognitive map might be stored. The later discovery of grid cells in the entorhinal cortex (Hafting et al. Citation2005) gave rise to theories that combinations of grid cell inputs might give rise to place cell responses (Rolls et al. Citation2006; McNaughton et al. Citation2006) and so about how the cognitive map might arise. A little later still, the work of Johnson and Redish 2007 (Johnson and Redish Citation2007) showed apparent neural correlates of map-based planning in place cells: rats at a choice point sent waves of activity along the place cells that represented various future paths, apparently selecting between them in a process dubbed “mental time-travel”.Footnote3

Several experiments have provided indications that cognitive maps also exist for non-spatial domains. In particular, Kurth-Nelson et al. (Kurth-Nelson et al. Citation2016) analysed whole-brain magneto-encephalographic (MEG) data while subjects performed a non-spatial navigation taskFootnote4 and found that (a) the current state could be reliably decoded from MEG data and that (b) once the task had been learned, spontaneous MEG activity encoded legal paths through the environment. These paths appeared as reverse sequences of up to four states.

An experiment by Aronov et al (Aronov et al. Citation2017) has also shown the existence of “place cell” representations for non-spatial state-spaces. Mice were given the ability to alter auditory stimuli along a continuous frequency axis and cells developed in the hippocampal CA1 and the medial entorhinal cortex that have discrete firing fields along particular areas of the frequency axis. These cells overlapped with spatial place cells and grid cells but not in any organized fashion, which suggested that some spatial cells were being randomly repurposed for this new representation. Interestingly, these neurons appeared to be task-selective; when Aronov et. al. presented the same mice with the same stimuli (sweeps of the same frequency and duration) outside the context of the task, they found very little activity in the cells that had earlier fired reliably at different parts of the same axis.

Algorithms for stimulus-response and cognitive-map based planning

Historically, two kinds of animal behaviour tasks have been presented as arguments against purely model-free theories of planning in the brain: revaluation tasks, which examine whether animals adjust their behaviour appropriately following changes in the reward function, and contingency change tasks, which examine whether animals change their behaviour appropriately following changes in the transition structure of the environment (for example, a blocked or opened passageway in a maze) (Tolman Citation1948; Russek et al. Citation2016). Model-free reinforcement learners perform poorly in these tasks because they cache the cumulative expected rewards that they expect from different state-action combinations and – without a transition model of the environment – have no way of updating these cached values post-manipulation except to relearn its Q-function. In comparison, model-based reinforcement learners can immediately update their value-functions and policies, continuing to produce adaptive behaviour in the face of environmental changes or altered rewards (Russek et al. Citation2016). Russek et al. 2016 found that a model-free approach, even augmented with a successor representation (Russek et al. Citation2016), could not solve contingency change tasks. These tasks, in particular a variant of the Tolman detour task described later in this paper, required an explicitly model-based reinforcement learning approach.

The role of hierarchical representations in planning behaviour

An important objective of this paper is to investigate the production and use of hierarchical map elements in a biologically plausible neural network model. Accordingly, this section will discuss the nature of these hierarchical elements in vivo as far as it can be deduced from behavioural studies.

Extensive evidence shows that humans represent space in a regional and hierarchical fashion. The regional representation of spaces affects the ability of participants to judge the spatial relationship between locations in different regions (Hirtle and Jonides Citation1985) and their behaviour when searching for a specified location (Hölscher et al. Citation2008). Regional effects seem to occur even in spaces whose hierarchical structure is not explicitly defined (Hirtle and Jonides Citation1985).

Experimental work has also indicated that humans plan routes at different levels of the hierarchical representation. When giving route directions, either in advance or during travel, participants reliably produce high-level route segments in order to optimize information-to-memory-load (Klippel et al. Citation2003). Furthermore, participants reference elements of a city in order from the most general to highly specific local references (Tomko et al. Citation2008), signifying that most people store hierarchical spatial information in the same way and are able to predict what level of information other people are likely to recognize. Timpf et. al. (2003) break down highway navigation into three levels of abstraction: route planning operates on a very high level, finding routes given certain constraints; driving is performed at a fine level; and people produce driving instructions at a level partway between the two, dividing the route into segments that amalgamate a certain amount of driving activity (Timpf and Kuhn Citation2003). Wiener & Mallot (2003) similarly show that humans appear to use a fine-to-coarse planning heuristic when navigating: a route plan contains fine-space information for the close surroundings and coarse space information for the rest of the route (Wiener and Mallot Citation2003). There is also evidence that route familiarity is an important element in human navigation (Payyanadan Citation2018).

We believe that the scale on which high-level route segments are generated and used depends on the size of the task in question. People give directions of a roughly similar length for routes whose lengths differ by orders of magnitude, provided that route fragments of the appropriate scale exist, indicating that the existence of high-level actions (driving instructions) allows complex routes to be compressed into a practical length. At the same time, planning tasks performed at the same level of abstraction show planning times are partially proportional to absolute path length (Howard et al. Citation2014).

We believe that a model of map-based planning should explain both the generation of high-level path fragments as well as how planning operates at any given level of abstraction.

Hierarchical representations in markov decision processes

If we consider MDP solving – and behavioural planning more generally – as the process of searching for a nearly optimal solution to a problem in a very large space of potential actions, it becomes clear that as that space grows, the process of searching for a solution becomes more difficult, until the problem becomes computationally intractable for a naive planner.

For an algorithm to successfully obtain its goals within a very large state space, it must either find a way to shrink that space or to search through that space more efficiently. An example of the former is the set of algorithms that try to abstract away irrelevant state attributes to describe the task in the minimum number of states (Ebitz et al. Citation2018). An example of the latter is the option framework, defined by Sutton and Barto in their 1999 paper “Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning” (Sutton et al. Citation1999). The original formulation of the option framework speeds up the planning process in an MDP through the provision of Options, little chunks of policy that can be predefined by the coder or learned/imported from previous tasks. Each option consists of an initiation set (states where the option may be used), a termination set (states where the option ends and the agent starts choosing actions normally again) and a policy (a set of state-action combinations that moves the agent towards a state in the termination set). Hierarchical Reinforcement Learning, as seen in Botvinick 2009 (Botvinick et al. Citation2009), implements options in order to allow the agent to explore the environment in a faster and more rewarding fashion.

Options can be specified by modellers, as in Botvinick 2009, but ideally an agent using the option framework should be able to discover useful options on its own. The two main approaches can be summed up as sub-goal based or policy based.Footnote5 Subgoal-based option discovery uses various techniques to identify useful states as subgoals. These states are usually either bottlenecks – states that connect together two otherwise-isolated sets of states – or frequently visited states. Having identified these subgoals, a secondary reinforcement learning process is used to create a policy for reaching those subgoals, rewarding the agent with a pseudo-reward when it reaches those subgoals (Botvinick et al. Citation2009; Taghizadeh and Beigy Citation2013; Kulkarni et al. Citation2016; Tessler et al. Citation2016; Vezhnevets et al. Citation2017).

While subgoal-based option discovery focuses on the termination of an option, identifying a useful sub-goal and then learning an option policy that can lead the agent to that subgoal, policy-based option discovery ignores subgoals and tries to identify useful sub-policies directly. One common approach is to search many solutions for common policy elements (Pickett and Barto Citation2002; Girgin et al. Citation2010). Florensa et al. (Florensa et al. Citation2017) have produced a hierarchical deep reinforcement learner along policy-based lines by producing a framework for learning skills and applying them in different tasks. Their framework consists of an unsupervised procedure to learn a repertoire of skills using proxy rewards, as well as a hierarchical structure for reusing those skills in future tasks. Skills appear to be represented as policies, similar to other approaches in this subsection.

Literature review

Representing an environment within a cognitive map

There are various ways for a cognitive map to encodes the topology of an environment. One potential representation is the use of state (place) cells. These place cells are connected together by recurrent synaptic connectivity in such a way that each state is connected to its neighbouring states. This is probably the most simple form of cognitive map, and Matsumoto 2011 (Matsumoto et al. Citation2011) shows that it is possible to use this map to plan (using a mechanism described below). However, this form of map does not encode any information on the actions that are required to move the agent from one state to another. Planning in this context can therefore only mean assigning value to different states, marking some as more desirable and some as less desirable. This form of cognitive map therefore implicitly predicts that some other, unmodelled, mechanism must exist that knows how to move the agent from one state to another. In other words, this form of cognitive map does not reproduce an extremely important part of the planning mechanism.

Another form of cognitive map, used by Friedrich 2016 (Friedrich and Lengyel Citation2016), is based on state-action cells. These cells encode a combination of a particular state with a particular action taken in that state. They are connected together with recurrent synaptic connectivity that encodes a causal relationship. If a cell encodes a state-action combination that produces a transition to a particular successor state, that cell will be synaptically connected to other cells encoding the successor state. This means that a state-action map can encode not only how different states are connected but also what actions an agent should take to move between these states.

A variation on the state-action map is the transition map used by Cuperlier 2007 (Cuperlier et al. Citation2007), which uses several different kinds of transition cells to encode the cognitive map. Some of these cells encode a unique transition between two states, without encoding the action that produces that transition, and these cells effectively work in the same way as state cells. Other cells encode a combination of a given transition and a motor action that produces that transition and so end up resembling state-action cells. A problem with this form of map representation is that it does not seem likely to generalize well to nondeterministic environments. In certain environments, a single state-action combination may produce many state transitions. In a state-action model, these transitions may all be represented using recurrent synaptic weights. By contrast, in a transition cell model each transition requires its own cell. As the ratio of transitions to state-action combinations increases, the ratio of required transition cells to required state-action cells increases.

More complex cognitive maps (Hasselmo 2005, Martinet 2011 (Hasselmo Citation2005; Martinet et al. Citation2011)) still fundamentally use a state-action encoding for the cognitive map but incorporate these cells within complex minicolumn structures designed to facilitate various elements of the planning process. Functionally, minicolumns allow for much more detailed circuitry to be easily modelled and iterated across the cognitive map representation. This makes minicolumn models more powerful than models using other representations but also more complex and less clear.

The form of an agent’s cognitive map will inevitably affect the mechanisms that are required to plan using this map. From this perspective, the most important distinction between the various forms of maps is between those maps that incorporate actions implicitly (a pure state cell map) and those that incorporate actions explicitly within the cognitive map (all others). A pure state cell map inherently predicts that planning takes place at the state level, and that some other mechanism exists which encodes the causal knowledge of how to move from one state to another and that outputs actions to do so. In other words, a pure state model predicts that planning and acting are two fundamentally separate processes, which output a set of desired transitions and a set of actions respectively. By contrast, a state-action representation predicts that these two processes are inherently interlinked. This claim could be explored experimentally by looking for cases (if they exist) where people appear to be able to plan a solution to a problem (i.e. to give a set of transitions between states) but are not able to give a set of actions which would perform those transitions. This would demonstrate that it is possible to disrupt the action-production process without disrupting the planning process. The existence or non-existence of such cases might support a state-based or state-action based account.

Another open question is how these representations should scale. Of the models cited, all of them except for Matsumoto 2011 and Friedrich 2016 are discrete: they assume that states and actions are separable from one another. This has two consequences. The first is that in many cases the associated cognitive maps encode an artificial separation between different states and different actions. Either they are described purely in the context of tasks with discrete state spaces (such as the grid word tasks used throughout this paper, where you are either in one grid square or another grid square), or the models designate certain points in a continuous state space as “states” and ignores all of the points in between. The same is true of actions, where a limited set of discrete actions is made available for the agent to choose between. The second consequence of using a discrete state space – at least in the reviewed models – is that they do not handle scaling well. As the size of the environment increases, either the number of discrete states must increase or the ability of the map to encode the state space must decrease. For this reason, the models reviewed in Sec. 2 all have small state spaces.

The alternative is a continuous representation, where each input neuron represents some form of function in the joint space of states and/or actions (e.g. a place cell with a gaussian receptive field). In this case states and/or actions can be represented as a unique combination of distributed input neuron firing. Two models deal with such continuous representations: Matsumoto 2011 and Friedrich 2016 (Martinet et al. Citation2011; Friedrich and Lengyel Citation2016), but these models are also the most limited in terms of explaining the mechanisms around the formation and use of these maps: Matsumoto 2011 does not address the encoding of causal relationships between states, Friedrich does not address the formation of the cognitive map, and neither of them model the process of reading out the results of the planning process using neural mechanisms. It seems likely that the complexity of modelling continuous representations is responsible for the more limited scope of these models.

Encoding of the cognitive map in recurrent connectivity

All of the reviewed networks encode the cognitive map as a set of synapses that connect the cellular substrate (state/place cell, state-action cells or neocortical minicolumns). These synapses can be assigned algorithmically or they can be self-organized using a variety of methods.

In Cuperlier 2007, Martinet 2011 and Friedrich 2016 (Cuperlier et al. Citation2007; Martinet et al. Citation2011; Friedrich and Lengyel Citation2016), the connectivity is not self-organized using biological processes. Instead, as the agent explores its environment, the appropriate cells are connected together algorithmically. In the case of Cuperlier 2007 (Cuperlier et al. Citation2007), which uses transition cells (see above) this means setting the synapses between the current and previous transition cell to an arbitrary positive value after a state transition. In Martinet 2011 and Friedrich 2016 (Friedrich and Lengyel Citation2016), it means doing the same with state-action cells.

Erdem & Hasselmo 2012 (Erdem and Hasselmo Citation2012) model a mechanism which is slightly more consistent with the known neurobiology. In this model, the topology cells (which represent the topology of the environment) are essentially place cells, but are connected together using a learning rule which does not incorporate any firing information related to the topology cells that are being connected together. Instead, the equation used implicitly combines activation, competition and recency cell activity to connect topology cells together as a function of a “recency signal”. This implicit modelling seems to presuppose that recency cell activity would activate the appropriate topology cells in certain ways, that certain forms of competition could be applied to the topology cells such that their firing came to encode the desired function of their inputs, and that Hebbian learning could then connect the still-firing topology cells together in such a way as to obtain the desired connectivity. Essentially, the learning rule implicitly describes an extended sequence of mechanisms that are not explicitly modelled. Since these mechanisms are not explicitly simulated it cannot be assumed without reservation that they would operate as hypothesized and without unforeseen side-effects. The possibility that – if these mechanisms were implemented explicitly – Erdem & Hasselmo 2012 might be unable to learn a cognitive map is a limitation in this work.

Hasselmo 2005 (Hasselmo Citation2005) uses a Hebbian local learning rule that implements a “memory buffer” which holds activity from the input state, thus allowing self-organization of the synapses between the minicolumns that encode the cognitive map. The actual self-organization is highly complex but appears to be Hebbian.

Finally, Matsumoto 2011 (Matsumoto et al. Citation2011), which encodes the cognitive map between place cells with large, overlapping fields, is able to encode the relationship between states using a simple Hebbian rule. Each location in the state space is signified by the combined activation of multiple state cells. As the agent moves through the environment, it will move from one receptive field to another, and as it moves between the two receptive fields both place cells will be firing simultaneously. A simple Hebbian learning rule is therefore able to connect neighbouring place cells.

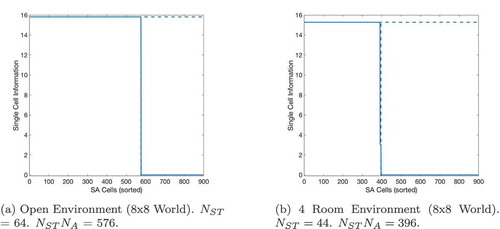

Planning mechanisms

Most current models of biologically plausible cognitive map-based planning (Cuperlier et al. Citation2007; Martinet et al. Citation2011; Friedrich and Lengyel Citation2016) are based on the principle of propagating activity from a goal representation through the synaptically encoded cognitive map.

The basic paradigm is that of propagation with decaying activation. In this paradigm, the cells encoding the cognitive map are stimulated at one location, or several locations, encoding the goal state that the agent is required to navigate to using its cognitive map. This activity propagates through the cells encoding the cognitive map, such that cells close to the goal receive high activity and fire at a high rate, while cells further from the goal receive much less activity and fire at a much lower rate. In effect, a gradient of activity is created with the cells that represent the goal state(s) having the highest firing rate and the state cells or state-action cells that are far away in the map (separated by many transitions) having very low or no firing.

If the map is made up of state cells, as in (Matsumoto et al. Citation2011), this gradient effectively encodes a value-function: states represented by high-firing cells are highly valuable, states represented by low-firing cells are not valuable.

If the map is made up of state-action cells, as in (Friedrich and Lengyel Citation2016), this gradient effectively encodes a Q-function: state-action combinations represented by high-firing cells are valuable, state-action combinations represented by low-firing cells are not so valuable.

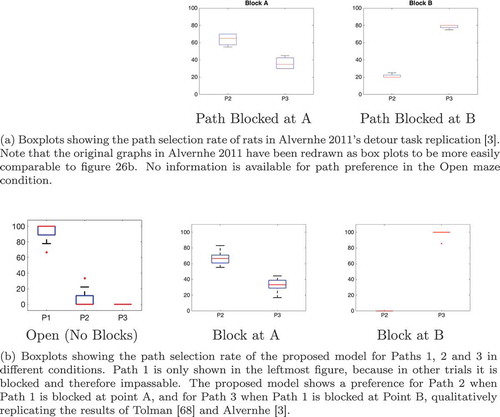

The decaying-activation paradigm allows successful planning. In particular, Martinet 2011 show that a decaying-activation model is able to reproduce the characteristic behaviour of rats performing a Tolman detour task (Martinet et al. Citation2011; Alvernhe et al. Citation2011). It also reproduces the latent learning phenomenon, in which rats which have previously been allowed to explore a maze in the absence of explicit external reward are able to successfully navigate to a reward in that maze much faster than rats which lack this experience (Tolman Citation1948). Various refinements and extensions have been proposed that allow decaying-activation models to memorize the path to previous goals (Matsumoto et al. Citation2011), take shortcuts (Erdem and Hasselmo Citation2012) and store environmental maps at different resolutions (Martinet et al. Citation2011). However, although these extensions can produce interesting effects under certain conditions, they tend to compromise either the biological plausibility of the model or its robustness. For example, the linear probe mechanism proposed by Erdem & Hasselmo 2012 (Erdem and Hasselmo Citation2012) allows agents to take shortcuts through unexplored space but compromises the model’s ability to navigate around obstacles.

Although a decaying-activation planner can reproduce basic planning behaviour, as well as replicate seminal tasks such as the Tolman detour task, the behaviour of a decaying-activation planner does not appear to match experimental observations in certain ways. The first and most important of these is that if the goal is sufficiently distant, and a sufficiently large number of actions are required to reach it, then the decaying activity will decay to zero before it reaches the agent’s current state. Since it is (the gradient of) this activity that defines either a value function or a q-function for each state, if no activity reaches a state or set of states then the agent has no value information available about those states. The agent will therefore not be able to produce a useful action. A decaying-activation planner will therefore fail to produce appropriate actions if the distance to the goal is too great. This does not seem to be consistent with evidence that animals can plan and navigate in large-scale environments (Geva-Sagiv et al. Citation2015).

A second issue with planning using decaying activation is that it may be vulnerable to noise. If the gradient of decaying activity spreads over a large number of states, then the relative firing rates of cells representing different states (or different actions within the same state) are likely to be similar. The firing rates of these cells are used to indicate the relative values of the associated states or state-actions; if they are very similar then small changes due to noise may make a big difference to their relative values and cause very different actions to be selected. A decaying-activation mechanism may take a relatively long time to output actions. Cuperlier 2007 (Cuperlier et al. Citation2007) reads out an action only when the activity in the network is considered “stable”, which presumably requires either a set period of stabilization or a mechanism that judges the stability of the activity dynamics. Hasselmo 2005 waits for a specific period of time before reading out an action and thereby risks reading out too early (before activity has reached the right part of the map) or too late (when the situation has changed or time has been wasted).

Hierarchical behaviour

In general, current models of biologically plausible map-based planning do not consider how cognitive map representations and map-based planning should scale. As observed above, these models use a decaying-activation mechanism and so are unlikely to produce appropriate scaling because the activity is likely to decay too much in larger maps. To our knowledge, the only exception at present is work by Martinet 2011 (Martinet et al. Citation2011), which introduces an extra map at a lower resolution. This mechanism uses proprioceptive feedback to merge states that are considered to be functional aliases of each other, extending the range over which their model can plan before the decaying activation disappears completely. This could potentially explain the relationship between planning time and distance described above. In this paper, we propose an alternative explanation: that over time, useful sequences of small-scale state-action combinations are encoded as functional behaviours or skills, allowing an agent to produce faster but more stereotyped behaviour at larger spatial scales.

A hardwired model for producing hierarchical behaviour using sequence cells

Hypotheses

We hypothesize that the hierarchical route representations observed experimentally (Klippel et al. Citation2003; Timpf and Kuhn Citation2003; Wiener and Mallot Citation2003) could be produced by using behaviourally significant sequences of state-action combinations to represent certain elements of the route more concisely and abstractly. We further hypothesize that these sequences could subsequently be implemented during planning as “mental shortcuts”. In this case, the agent would be able to plan partially at a more abstract level; it would select sequences rather than individual state-action combinations. By representing a long route as 3 sequences rather than 500 individual state-action combinations, the agent would greatly reduce the number of choices required to plan that route. In effect, these sequences would function as high-level actions or blocks of policy that move the agent from one state to a far-off state, allowing the agent to plan across large sections of the state-action space concisely and efficiently.

This section will discuss how these sequences can be encoded within the network and how they can be used to plan faster and more efficiently. As such, this section will not discuss the process responsible for learning sequence representations. Section 5 is dedicated to the process of learning these sequences.

Model overview

The proposed neural architecture is described by . Essentially, the agent’s current state is input by the state cells and the agent’s goal is input by the goal cells. The state-action cells and the sequence cells co-operate to produce an action that will move the agent towards its goal. The gating cells read out the planned action and propagate it to the action cells, which produce movement.

Figure 1. The figure shows the architecture of the proposed hierarchical neural network model. The current state of the agent is input by the state cells (State) and the goal of the agent is input by the goal cells (Goal). Actions that moves the agent towards its goal are produced by the co-operation of the state-action cells (State Action) and the sequence cells (Sequence). Finally, the planned action is read out by the gating cells (Gate) and propagated to the action cells (Action), which produce movement

Map representation – State-Action (SA) cells

We hypothesize that, for a cognitive map to be useful for the production of actions, it should record how the actions of an agent in different states cause the agent to move between states. In Markovian terms, a cognitive map should encode the state transition probabilities within the environment.

For the sake of simplicity, we initially assume that transition probabilities are always 100%. Sec. 4.4 demonstrates planning when this is not true.

We therefore hypothesize that the proposed model encodes this information in terms of state-action combinations and it follows that the network should encode information using a neural substrate of SA cells, each of which encodes a unique combination of one state and one action.Footnote6

If SA cells respond to a combination of state and action information, they are therefore most likely to occur in an area which receives high-level sensory and motor feedback. The prefrontal cortex is located in the right part of the sensorimotor pathway and prefrontal cells are activated by stimuli from all sensory modalities, before and during a variety of actions, and in anticipation of expected events and behavioural consequences (Wallis et al. Citation2001). They are also modulated by motivational state (Wallis et al. Citation2001). Prefrontal neurons also output to the premotor cortex, which is known to represent and perform high-level actions (Tanji and Hoshi Citation2008).

In general, the prefrontal cortex is considered to be responsible for executive function at the top of the motor hierarchy (Fuster Citation2001). Lesions of lateral prefrontal cortex produces an inability to generate novel or complex sequences of behaviour, and patients find it difficult even to consciously represent such sequences, indicating that the lateral prefrontal cortex is at least necessary for generating such sequences and may contain the substrate which represents them (Fuster Citation2001).

More specifically, single-neuron recordings have shown that over the course of a task, cells in the primate prefrontal cortex come to represent and respond to combinations of sensory cues and motor actions by a process of associative learning (Asaad et al. Citation1998). Later work has strongly suggested that abstract and hidden states are represented in the orbitofrontal cortex (Wilson et al. Citation2014; Schuck et al. Citation2016) and recent work has found that cells in the rat orbitofrontal cortex encode a mixture of stimulus and choice information about the rat’s previous decision (Nogueira et al. Citation2017).

Map representation – minicolumns

We further hypothesize that these state-action cells are likely to be organized into minicolumns. These are stereotyped structures commonly found throughout the neocortex, usually with approximately 80–100 neurons, and are subject to an array of cortical inhibitory interneurons (Buxhoeveden Citation2002). This allows a variety of inhibition to occur within and between minicolumns, with very different levels of specificity and suppressive effect. We propose that such inhibition plays an important part in the formation of SA cells (see Sec. 4) and allows activity to be controlled and sustained during planning (see below). In this paper, the term “column” refers to a minicolumn unless otherwise stated.

In the proposed model, the state-action cells are structured into state columns. Each of these state columns contain several state-action cells which respond to the same state but to different actions (e.g. movement north, south, east and west) taken within that state. Section 4 shows how the state column structures can naturally self-organize using lateral inhibition within columns.

Map representation – encoding backward causal models using recurrent connectivity

One way that a layer of state-action cells can encode the topology of an environment is in the recurrent connectivity between them. If each state-action cell encodes a certain state-action combination possible in the environment, transitions can therefore be encoded in the recurrent weights between cells encoding a state-action and cells encoding the resultant successor state. If this recurrent connectivity is extensive enough, the network as a whole would encode the topology of the state space. Furthermore, activity propagating through the layer of state-action cells would do so according to the pattern of recurrent connectivity and so would be influenced by its topology, allowing planning.

In the model described below, the recurrent connectivity between these state-action cells encodes a backwards causal model of the environment; such a model encodes how each state can be reached as the result of performing particular state-action combinations. This is in contrast to a forward causal model that encodes the reverse information: the predicted results of performing certain state-action combinations. Another way of thinking about this is that a forward model records how causes produce effects, and therefore models the causal relationship forward in time, while a backward model records how effects are produced by causes, and therefore models the causal relationship backwards in time.

This distinction is significant because it determines what kind of information the model stores and is able to output. A backward causal model is able to give a set of state-action combinations that will lead to a desired state, but cannot output predictions about the result of performing any given state-action combinations. A forward model is the opposite.

A navigating model receives a desired end goal at the beginning of a task and is then required to output actions in order to move an agent from its current state to the desired state. The format of a backwards causal model is much more suited to this task and so we hypothesize that the planning mechanism in the brain uses a backward causal model.

As stated previously, we have hypothesized that the model encodes causal relations between states by means of synaptic connectivity between state-action cells. Because we have also hypothesized that these causal relations are encoded using a backwards causal model, the hypothesized connectivity must reflect that. A state-action cell that moves an agent into a new state

therefore receives an excitatory synapse from any state-action cell that encodes that new state, so that activity can propagate backwards from the desired state to identify suitable state-action combinations to bring about that state. We have illustrated the proposed connectivity between state-action cells in .

Figure 2. The pattern of connectivity between SA Cells encoding a reverse causal model. All SA cells within a given column encode the same state, and each SA cell encodes a different state-action combination. We hypothesize that each SA cell should receive a set of afferent synapses from all of the SA cells encoding its predicted successor state, allowing cells in a successor state to activate the SA cell responsible for entering that successor state. Likewise, each SA cell sends an efferent synapse to any SA cells that will result in the SA cell’s own encoded state

Map-based planning

A cognitive map is encoded by the strengthened recurrent connections within the layer of state-action cells. The proposed network feeds goal signals into the state-action layer at the goal location and allows these signals to propagate outwards through the topological connectivity of the cognitive map.

This propagation through the cognitive map is the primary element of the planning process. The quickest path to take from the agent’s current state to the goal location is that requiring fewest state transitions. Since each state-action cell represents, in effect, a state transition, the path requiring fewest state transitions will also be the path that activates fewest state-action cells between the agent’s current state and the goal location.

A common mechanism in the literature is to use decaying propagation, so that some activity is lost every time activity propagates between state-action cells. Consider a goal signal propagating through the recurrent connections towards the state-action cells associated with the current state of the agent. In this decaying-activation paradigm, the optimal state-action cell for each current state is connected to the goal location via the fewest state transitions and so receives the least-decayed activation; in other words the most active state-action cell for each state will move the agent towards the goal fastest. However, this mechanism has the important limitation that the activity will eventually decay to a negligible level and so this mechanism (in a naïve form) is unable to plan over a sufficiently long distance.

The proposed model uses an alternative mechanism to identify the fastest path to the goal. This mechanism plans using the timing of goal-based activity propagation through cells encoding the cognitive map, rather than how much activation those cells receive. We do not hypothesize that activity decays as it propagates through the state-action layer. Instead, we hypothesize that activity will propagate along the shortest path to the goal fastest, because that path involves the fewest state transitions and so the activity does not have to transfer through very many state-action cells. The relative values of different actions in each state – the speed with which those actions move the agent towards the goal – are therefore determined by the temporal order in which those SA cells receive activation from the goal and become active.

The first SA cell to receive activation in each state now represents the optimal action to take in each state, and the level of activation is not relevant. This allows the level of activation to be kept at a constant level as the wave propagates through the map, allowing it to plan over longer distances. This mechanism also does not require time for activity to “settle” in the layer, or for cells to integrate activity.

This mechanism owes inspiration to work by Ponulak & Hopfield 2013 (Ponulak and Hopfield Citation2013). Their paper described a model in which a wave of activity propagates through a 2D layer of topologically organized pure state cells (neighbouring state cells were recurrently connected) and showed that this wave carried information about the direction of the goal. Specifically, if the goal is east of a state cell, the wavefront will “hit” the state cell from that direction. Ponulak showed that an anti-STDP mechanism could record this information in the recurrent synaptic connectivity of the layer by strengthening the synapses between cells to indicate the direction from which they were “hit” by the wave.

The mechanism that we have proposed retains the insight that the propagation of a wavefront can carry information about the direction of a goal, but uses this information in a rather different way. Rather than use a propagating wavefront to adjust the synapses between pure state cells and thus produce a synaptic vector field, we have proposed that a propagating wavefront can identify and output the most valuable state-action combination for a given state.

The proposed mechanism is therefore able to improve on the mechanism described by Ponulak & Hopfield in several key ways. Firstly, because the proposed planning mechanism is able to work in a state-action map, it is able to output explicit actions that move the agent towards the goal (see Output below). Secondly, because the proposed planning mechanism is able to perform planning without requiring a period of synaptic plasticity, it seems likely that an agent using this mechanism would be able to plan more quickly, and would not have to “undo” the new synaptic weights if there is a change in the goal state or the transition structure of the environment. Thirdly, because the proposed planning mechanism is able to plan without altering the synaptic weights that encode the cognitive map, we can store information in these weights. We show in this section that the proposed planning mechanism is able to interface with a mechanism for producing hierarchical behaviour.

Output

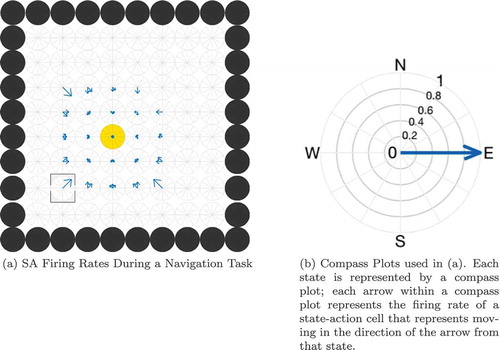

The proposed network is able to read off the optimal action at the current state from the planning process using biologically plausible neural mechanisms. An action read-out mechanism must perform two important roles. It must read out the recommended action for the current state whilst not reading out anything associated with any other states, a problem illustrated in . In the case of the proposed network shown in , this is effected by a set of gating cells that propagate activity from the appropriate section of the cognitive map (that which represents the current location of the agent) to the action cells, where it produces motor output. The gating cells are under heavy inhibition such that they can only produce firing if they are receiving both SA and state input, meaning that they only pass on activity from an SA cell when that SA cell matches the current state.

Figure 3. Illustration of the problem of reading out the correct action for the current state. (a) shows the firing rates of a layer of SA cells partway through a planning task. Each compass plot in (a) represents the firing rate of all SA cells which encode a particular state as illustrated in (b). The golden state is the goal location and the boxed state is the agent’s current state. The planning wavefront has activated a large number of SA cells, most of which encode an action (NW, SW, W) that will not move the agent towards the goal. The model cannot therefore simply sum or average the activity in the SA layer but has to gate this activity by the current state of the agent before it is passed to the action output layer. Furthermore, this read-out must happen at a specific time

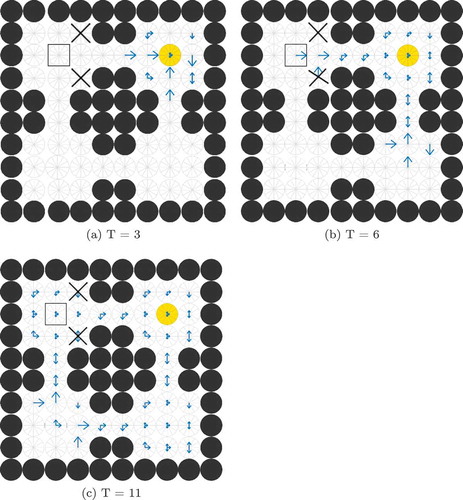

Figure 4. The Gating Problem. This figure shows compass plots of state-action activity during planning at three different times T = 3, 6 and 11. If the agent is occupying the state marked by the square, then the model should only read out activity from the SA cells marked by the square, and not (for example) those marked by crosses. Also, the agent should not read out activity from the marked SA cells at t = 3 (when the wave of activity has not yet reached the agent’s state) or at t = 11 (when the wave of activity has passed the agent’s state) but only at t = 6

Hierarchy

As explained above, the wave-propagation planning mechanism used by the proposed model relies exclusively on the timing of goal-based activity propagation through cells encoding the cognitive map, rather than how much activation those cells receive. The first SA cell to receive activation in each state represents the optimal action to take in that state, and the level of activation is not relevant. Since the model does not require time for the activity to “settle”, or for cells to integrate activity, the main factor limiting planning time is the speed of wavefront propagation. By increasing the speed at which activity propagates through the network, the planning process can be made much faster, provided that the spread of activity still produces viable plans. We hypothesize that frequently-used sequences of state-action combinations can act as shortcuts through state space, allowing activation to spread faster and further without sacrificing planning power.

Such a hierarchical planning mechanism would influence the model’s choice of actions during planning. As explained above, the speed of activity propagation through the recurrent connections of the SA layer is used to determine optimal routes during planning. If frequently-used sequences of state-action combinations are used to speed up the propagation of activity, these familiar sequences may be selected in preference to an optimal route. The preference for known routes has been observed in experimental studies (Brunyé et al. Citation2017; Payyanadan Citation2018). The model might also display this habitual behaviour in non-navigation tasks.

The use of “shortcuts” through the SA layer might also make planning more robust to noise, since transmission of activity between neurons is a primary source of noise and the use of shortcuts is intended to significantly reduce the number of transmissions of activity between SA cells. However, since the activity of SA cells in active columns is kept constant, and since planning in the model is dependent on the timing of activation propagation rather than on the precise activation value of SA cells, the effect of the hierarchical mechanism on planning in noisy conditions would likely be relatively small. Please note that the term “column” refers to cortical minicolumns (Buxhoeveden Citation2002) unless otherwise specfied.

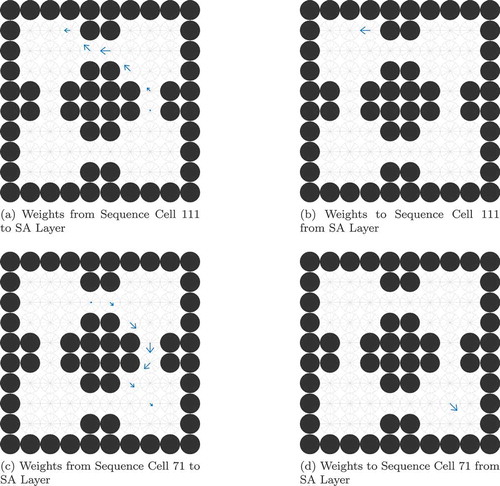

We hypothesize that this hierarchical planning mechanism can be implemented by an extra layer of cells, called sequence cells, () whose connectivity to the state-action layer allows them to represent frequently used sequences of actions. These sequences encode behaviourally useful sequences which provide relatively direct routes through the state space. In determining the most likely form of sequence representation, there are two primary concerns:

A sequence cell must represent a useful sequence of state-action combinations. To do this, it must be linked to a particular set of state-action cells that represent a behaviourally useful sequence.

The planning mechanism implemented by the state-action cell layer should be able to call up and use sequences during the planning process. In other words, state-action cells must be able to activate sequence cells at an appropriate time – via the synapses from state-action cells to sequence cells – and sequence cells must be able to influence the activity of state action cells, via the synapses from sequence cells to state-action cells.

We hypothesize that a sequence cell (representing a sequence of state-actions) should be activated when the propagating goal-centred wavefront reaches the end of that sequenceFootnote7 and should in turn activate the rest of the SA cells in that sequence immediately. This means that the wave can propagate through a sequence of state-actions (which would normally take

timesteps) in 2 timesteps: one timestep for activity to propagate to the sequence cell, causing it to fire, and another timestep for that sequence cell to activate all of the

SA cells that it is connected to.

As explained above, the time taken to output an action is dependent on how quickly the SA wavefront propagates from the goal to the agent’s current position. Put briefly, since the optimal action for each state is encoded by the state-action cell linked to the goal location by fewest transitions (and so fewest synapses), a wave of activity beginning at the goal and propagating at a constant speed will reach the optimal state-action cell before the rest of the state-action cells linked to that same state. The gating cells detect this activation and express the appropriate action to the action cells, which produce motor output.

As such, we hypothesize that incorporating sequence cells into the network model should enable the agent to plan a route of a given length in less time. Furthermore, because the speed at which activity propagates through a sequence does not depend on the length of the sequence, we hypothesize that the agent should receive larger gains in larger and more complex routes, where the path lengths are longer and thus the required planning time (without sequences) is longer.

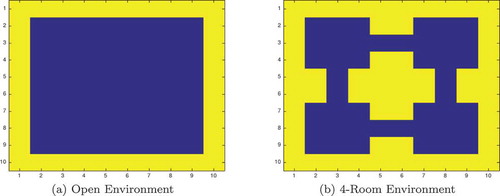

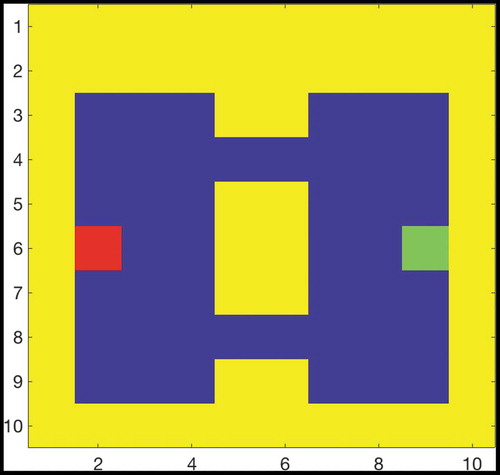

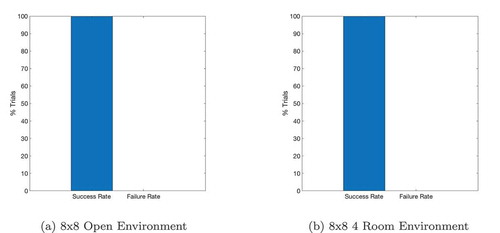

Task

The agent is placed at a random position in one of four grid worlds. Two of these worlds are the small (10x10) open and maze worlds depicted by . The other two worlds are the same but scaled by 2:1, so that they are 20 × 20. The agent can move one space at a time in eight compass directionsFootnote8 or stay still, giving nine possible actions.

Figure 5. The 2-dimensional grid-world state space used to test the simulated network agents. The agent can move between blue squares, which are free states, and cannot move into yellow squares, which are walls. Each state has a unique index value which is used to fire a specific state cell

A random state is designated as the goal and the agent is required to navigate to this state to complete the task. If the agent reaches the goal location then the task has been been completed successfully. The number of timesteps required to reach the goal is recorded, as is the number of physical actions.

A set of 100 tasks is performed in each of the four environments, by two agents. The first agent has access to a set of sequence cells that encode various trajectories through the environment. The second agent has no access to sequence cells.

Model equations

The proposed model’s architecture is depicted in and contains:

State cells

Goal cells

State-action (SA) cells

Gating cells

Action cells

Sequence cells

Algorithm 1 Planning in the Hardwired Model with Sequence Cells. This describes the network’s operations during one timestep in the planning process. Steps in brackets only occur if there is activity in the action cell layer (signifying that an action has been selected for the agent’s current state).

Cell Firing State Cells & Goal Cells Fire

Activity Propagation SA Cells to Sequence Cells (1)

Activity Propagation SA Cells, Goal Cells & Sequence Cells to SA Cells (3)

Inhibition SA Cells: Rescale SA Activity in All Active States (4)

Activity Propagation State Cells & SA Cells to Gating Cells (5)

Inhibition Gating Cells: Threshold (6)

Activity Propagation Gating Cells to Action Cells (7)

Inhibition Action Cells: Winner-Take-All (8)

(Agent) Agent Moves to Successor State

(Reset) All Cells Reset to Zero Activation

Algorithm 1 describes one timestep of the planning process. The state cells fire, encoding the current location of the agent. These cells are one-hot: each state cell uniquely represents a single state and only one state cell fires at a time, denoting the current state. These cells are stimulated automatically, and are considered to be the output of unmodelled sensory processes. At the same time, the goal cells fire, encoding the desired state to be navigated to (i.e. the goal).

Activity spreads out from the goal location through the SA layer as a propagating wave. During this process (at every timestep) there is back-and-forth propagation of activation from the SA layer to the sequence layer (EquationEquation 1(1)

(1) ) and back to the SA network (EquationEquation 3

(3)

(3) ). The sequence cell sends synapses to all SA cells in the sequence, but only receives synapses from the last SA cell in the sequence.

Sequence cells receive activation propagated from the SA cell layer as follows:

where is the firing rate of SA cell

and

is the synaptic weight from that SA cell to a sequence cell

, and these sequence cells – when active – pass activation back to the SA cells according to EquationEquation 3

(3)

(3) .

SA cells are stimulated by a combination of goal input, recurrent SA activity and input from sequence cells as follows:

where is the input received from the goal cells,

is the recurrent SA input, and

is the input from the sequence cells (see below). SA cells experience divisive inhibition, rescaling the activity of SA columns so that each currently active column is rescaled to sum to 1, and each inactive column remains inactive (with a sum of 0):

where is the sum of the activations of all SA cells in a given column.

A layer of gating cells has been hardwired such that each gating cell receives afferent synapses from one state cell and one state-action cell, and sends synapses to one action cell.Footnote9 Activity propagates to the gating cells as follows:

The gating cells are under heavy inhibition such that they can only produce firing if they are receiving both SA and state input:

where is a thresholding constant.

Activity propagates from the gating cells to the action cells as follows:

where is the activation of an action cell

,

is the weight of a synapse from a gating cell

to that action cell,

is the firing rate of gating cell

and

is the final firing rate of the action cell after thresholding inhibition using the constant

.

The thresholding inhibition (EquationEquation 8(8)

(8) ) has the effect of preventing any action cell from firing unless it is strongly stimulated by a gating cell. If any action cell begins to fire despite this inhibition, winner-take-all inhibition is applied to the action cell layer to select a single action. The agent takes the action represented by the winning action cell (i.e. it moves one space in the specified direction) and updates the world. The firing rate of all cells is then reset to zero.

This section is focused on investigating the mechanism of planning and the role that sequence cells may play in the planning process. We therefore do not discuss how the synapses in this model self-organize: the self-organization of the basic network is covered by Section 4, while the following Section 5 will discuss the self-organization of connectivity between the state-action layer and the sequence cell layer. The diagram in depicts the overall architecture of the model, and gives relevant model parameters.

Table 1. Table of parameters for the hardwired model with sequence cells described in Section 3

Results

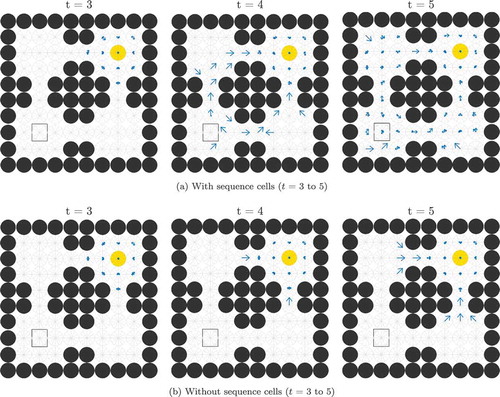

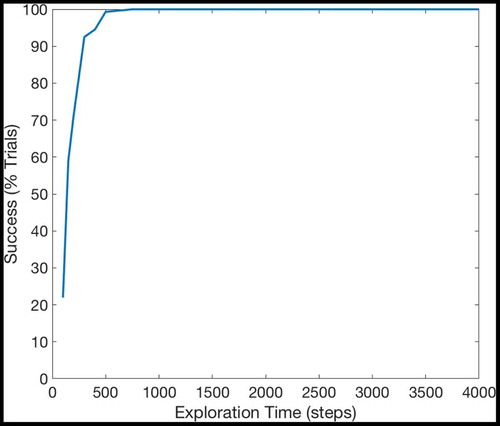

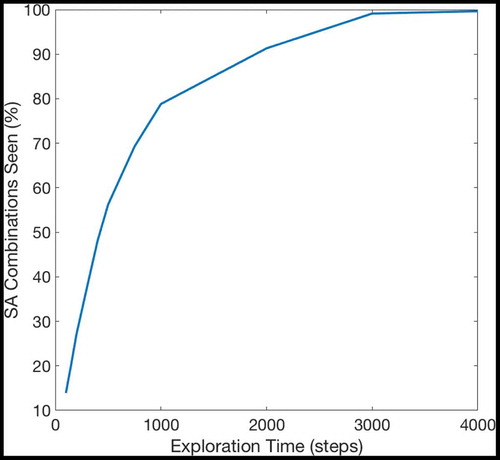

If a given sequence cell projects efferent synapses to all of the SA cells in a sequence but only receives an afferent synapse from the last SA cell in the sequence, that sequence cell will become active if the last SA cell in the sequence that it encodes becomes active. It will then immediately activate all of the other SA cells in that sequence. In effect, it allows the propagating wave to “skip” those cells, propagating through the entire sequence at once. This allows the propagating wave to travel faster through certain areas of state-action space, an effect demonstrated in , which shows the same navigation task with and without connectivity between the SA and sequence cells. In effect, the sequence cells have produced a two-level hierarchical planning mechanism, where planning happens on the level of individual state-actions but also on the level of larger state-action sequences.

Figure 6. This figure shows the propagation of activity from the goal location through the SA layer during a simulated navigation task in the four-room environment shown in ). Results are shown for simulations with and without sequence cells. Each state is represented by a compass plot which depicts the activity of the SA cells representing that state (see )). The golden state represents the goal, and the boxed state represents the agent’s current location. The agent moves once activity reaches its current state. We see that activity propagates considerably faster when sequence cells are available – compare (a) vs (b) – and that the propagating activity therefore reaches considerably more states after five timesteps

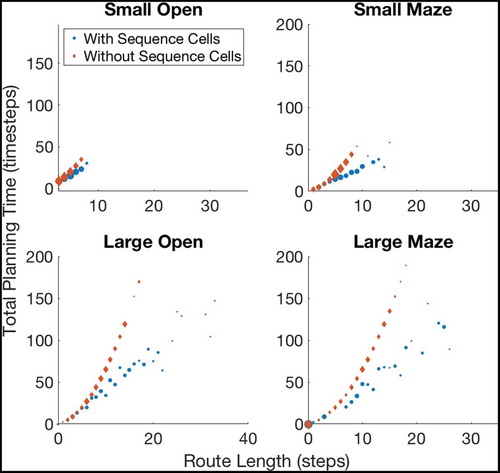

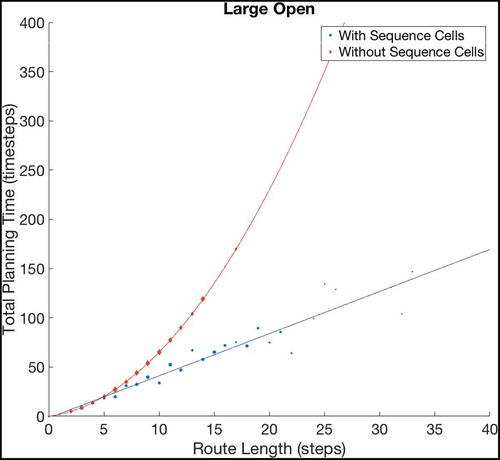

shows the effect of using or not using sequences on tasks with different path lengths. We see that use of the sequence cell mechanism has relatively little effect on short paths, but provides a significant (~40–60%) decrease in total planning time on path lengths of ~10 steps. These gains continue to increase as path length and environment complexity increase. shows that the total planning time of the model seems to grow quadratically without the sequence mechanism and linearly when augmented with sequence cells.

Figure 7. A figure showing how the total planning time varies with navigation tasks whose solutions are of different lengths. Results are shown for open environments as illustrated in ) (left column) and 4-room mazes illustrated in ) (right column). This demonstrates that use of sequences in planning produces low efficiency benefits during short tasks but increasingly large benefits as the required route length increases

Figure 8. Fitting curves to the results of navigating within Large Open environments. The basic model, without using sequences, produces planning times that increase quadratically with the route length, while the augmented model, using sequences, plans linearly with the route length. Extrapolating these fits predicts that the gains should grow very large as the size and complexity of environments increase, although we cannot currently verify this claim as the discrete state-action representation we use does not scale well beyond 20 × 20 states (discussed further in Sec. 6.1). To check the accuracy of both fits, we have calculated the Adjusted- values for each fit. The Adjusted-

is a statistical measure of how close the data are to the fitted lines, adjusted for the number of terms that describe the fitted line. These values are 1 for the model without sequence cells and 0.9243 for the model with sequence cells. The value of Adjusted-

must be between 0 and 1, and so these values show that both lines fit the data extremely well

The kind of state spaces experienced by a mammalian intelligence (especially a primate one) are very much more complicated and thus larger than the state spaces used in this experiment, and problems in such large state spaces are difficult to solve using naive Markovian decision processes because the computation requirements in such environments are too large. The relationship between efficiency gains and environment size & complexity suggest that the efficiency gains would be much larger in real-life state spaces.

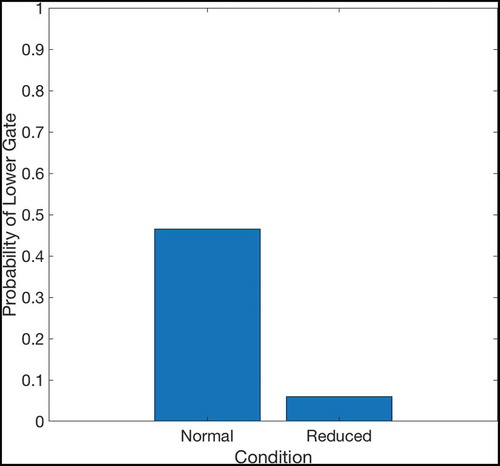

shows that if two paths of equal length exist from the agent’s starting position to its goal, then the hierarchical model incorporating sequence cells will tend to prefer familiar routes (those that have associated sequences) over other routes of equal distance. The model was trained in an 8 × 8 two-gate environment () and given a set of sequence cells encoding routes through the lower gate but not the upper gate. We see that the model is considerably more likely to choose the lower gate if it has access to these sequences. Note that the agent used probabilistic propagation (described more fully in sec. 4.4) for this planning task; this adds a nondeterministic element such that activity propagates from one cell to another with a given probability. Since the use of sequences to encode a route reduces the number of cells that activity must propagate through in order to choose that route, such a route has a higher probability of being chosen.

Figure 9. Two Gate Environment (8x8). As in , the agent can move between blue squares, which are free states, and cannot move into yellow states, which are walls. The green square represents the goal, and the red square represents the agent’s starting state. The agent therefore starts equidistant from both gates, and can reach the goal from either gate in the same number of timesteps

Discussion

The hierarchical mechanism should be extensible to an arbitrary degree by adding more layers of sequence cells, such that higher layers learn sequences of sequences. This explains the observation that people plan on different scales and levels of abstraction (Klippel et al. Citation2003; Timpf and Kuhn Citation2003; Wiener and Mallot Citation2003) and are able to navigate over distances orders of magnitude apart, from short room-to-room movements within a house or workplace to long drives between cities and/or countries. These abilities have also been observed in bats, one of the few mammals whose large-scale navigation has been extensively studied. Bats have been observed to recall the three-dimensional position of objects with an accuracy of 1–2 cm but can also navigate reliably and regularly to targets dozens or thousands of kilometres away (Geva-Sagiv et al. Citation2015). Wild rodents have a similar range of navigational capabilities (Geva-Sagiv et al. Citation2015). We believe that although planning-time-to-path-length correlations seem to exist for problems of the same scale (Ward and Allport Citation1997; Howard et al. Citation2014), they do not seem to apply strongly to problems of different scales. For example, taxi drivers report being able to produce routes through London almost instantly (Spiers and Maguire Citation2008) even though these routes may be several kilometres in length.

The properties of the sequence cells depend on their connectivity with the state-action layer. The sequence cell should receive activity from the SA cell that occurs at the end of that sequence, so that the sequence cell will become active as soon as the activity wavefront reaches the end of the SA sequence. Likewise the sequence cell should be able to propagate activity to all SA cells in the sequence, so that it can quickly stimulate all of the SA cells encoding the sequence and thereby produce the maximal efficiency in planning. Self-Organizing mechanisms for learning this connectivity are described in Sec. 5. The sequences of actions that they encode are very similar to the high-level route segments described by Klippel et. al. (Klippel et al. Citation2003), and the action of the hierarchical mechanism utilizing sequence cells reproduces the fine-to-coarse planning heuristic described by Wiener and Mallot 2003 (Wiener and Mallot Citation2003). This hierarchical mechanism also appears to produce a preference for familiar routes – those for which the agent has available sequences – as seen in . This preference has been experimentally demonstrated in human navigation (Brunyé et al. Citation2017; Payyanadan Citation2018). It also suggests that, in more complex tasks and environments, the hierarchical mechanism may produce a form of habitual behaviour, where inefficient but familiar solutions are preferred over optimal but planning-intensive solutions.

Figure 10. This figure compares the probability of an agent occupying various states in a two-gate environment () over the course of 100 planning trials. (a) shows these occupancy probabilities when the model has access to sequence cells that encode a route through the lower gate. (b) shows the same model without access to these cells. The agent is required to move from position (2,6) to (9,6), which can be achieved in the same number of actions by passing through either gate. We see that the existence of these sequences biases the agent to use the familiar lower gate rather than the higher gate in (a). By contrast, when the agent does not have access to these sequences it uses both gates with approximately equal probability (b)

Sequence cells in pre-SMA

Sequence-selective cells have been found in the supplementary motor area (SMA) and pre-supplementary motor area (pre-SMA). These cells fire before a particular (previously learned) sequence is performed but do not fire during the motor performance of that sequence (Shima and Tanji Citation2000). The pre-SMA is known to be connected to the prefrontal cortex (Luppino et al. Citation1993), allowing interactions between sequence cells in the pre-SMA and state-action cells in PFC to occur as seen in the model.

shows a simulated sequence cell firing during a modified version of the navigation task, and compares it to experimental recordings performed by Shima et. al (Shima and Tanji Citation2000). As in other navigation tasks, the agent must navigate from its starting location to the goal. In this case, however, the starting location and goal are chosen manually to ensure that the only route to the goal included the sequence encoded by the sequence cell. The figure shows the recorded firing rates of this sequence cell before, during and after the sequence of actions encoded by the sequence cell.

Figure 11. Comparison of a simulated sequence cell to a recorded pre-SMA cell. In both the simulated and recorded data, we see that the cell has strong firing before but not during a specific sequence of actions (a dashed line marks the onset of this sequence). However, the simulated data displays periodicity, unlike the recorded data. This is discussed in the main text. The proposed model is rate-coded and so the raster plot (a) was generated from recorded firing rates using a Poisson distribution

As explained in Section 3.1, the network plans by propagating goal-based activation through the SA layer and recording which of the state-action cells responsible for the current state receive activation first. Sequence cells function as “shortcuts” for the propagation of this wave. For this reason, the sequence cell will be strongly active before the agent performs the relevant sequence of actions. The propagating wave activates the sequence cell on its way to the agent’s current state, so that it becomes active early and remains active until the propagating wave reaches the agent’s current state. The activity then activates a gating cell and action cell as described by Sec. 3.3, and the agent takes an action that moves it towards the goal. The activity in the SA layer resets and the activity begins propagating again from the goal state.

This cycle of activity propagation and movement repeats until the agent reaches the first state that is involved in the sequence described by the connectivity of the sequence cell. Previously, the sequence cell was active for an extensive period of time while activity propagated further through the SA layer, beyond the sequence encoded by the sequence cells. However, SA cells responsible for the agent’s current location are now within the encoded sequence and so can be stimulated directly by the sequence cell without requiring further time for activity propagation. This means that as soon as the sequence cell becomes active, so do the relevant SA cells, gating cells, and action cells. The sequence cell is therefore only active very briefly when the agent is performing the sequence which the sequence cell encodes.

To put it another way, the sequence cell is only active after the propagating activity reaches the sequence cell’s preferred sequence and until that activity reaches the SA cell encoding the agent’s current state. All SA cells in the sequence become active simultaneously, so if the agent’s current state is in the encoded sequence, the sequence cell will only be active for a very short period of time because activity is not propagating further through the SA layer.

) shows the simulated firing rate of the sequence cell, and ) shows a spiking raster plot with the spikes generated according to the firing rates by a poisson distribution for comparison to the experimentally recorded data in ). We can see that the sequence cells in the proposed model have similar properties to the pre-SMA cells recorded in this figure (Shima and Tanji Citation2000). Not only do they both encode a particular sequence of actions (Shima and Tanji Citation2000), but they become active immediately before the encoded sequence is carried out and then cease to fire as the sequence is performed. Furthermore, impairing either SMA or pre-SMA inhibits the ability of monkeys to perform the learned sequences of actions when cued, even though they retain the ability to perform any of those actions individually (Shima and Tanji Citation2000).

The main difference between the simulated data and recorded data is the periodicity seen in . This periodicity arises from the fact that the simulated agent took several steps before the onset of the sequence, and so several “cycles” of activity occured as the activity spread out from the goal state to the agent’s location to plan the next action. There are several possible explanations for this difference.

Firstly, the model artificially resets activity in the SA layer after each single action during a movement sequence. This is a result of implementing a simplified discrete model. Further work with models that operate in continuous time may eliminate such periodic behaviour in the sequence cells.

Secondly, the recordings took place under different task conditions. The pre-SMA recording in ) was taken during a task in which monkeys were trained to perform three different movements, separated by short waiting times, in four or six different orders. The monkeys then reproduced one of these sequences several times based on visual cues. In other words, the sequences were not initiated as part of a goal-directed set of free movements, but as a cue-induced sequence. The difference in cell behaviour may therefore be partly task-based, accessing the same representations through a slightly different mechanism.

Self-organization of the basic network model without hierarchical planning

The previous experimental section of this paper described a neural network model for planning solutions to a navigation task in a simple grid environment. However, the synaptic connections in the above model simulations were pre-wired, with no explanations of how these connections might be embedded in the brain through learning. The question of how such a network should self-organize its synapses is not trivial. We break the process down into several parts: the self-organization of the SA cells in order to encode unique combinations of state and action, the self-organization of the recurrent connectivity between these SA cells in order to encode the structure of the environment, and finally the self-organization of the gating cells that output the results of planning to the action cells. We will discuss self-organization of the connectivity to and from the sequence cells (the hierarchical mechanism) in Section 5.

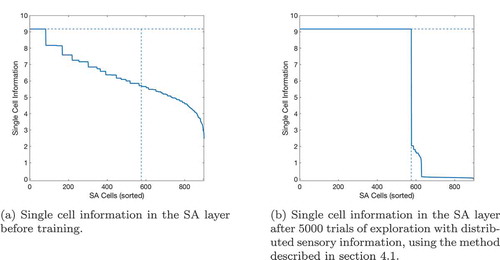

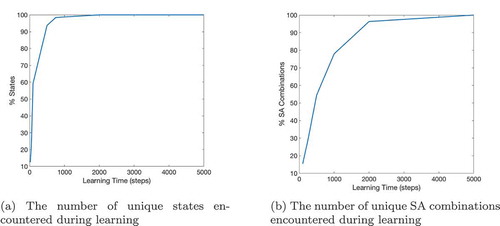

Formation of SA cells

During learning, the SA cells must form afferent connections from the state cells and action cells such that each SA cell learns to respond to a unique combination of state and action. Moreover, all possible combinations of state and action must be represented by an even distribution of SA cells. We hypothesized that the required connectivity may be set up by competitive learning. In this scenario, a layer of SA cells receive afferent connections from a mixture of state cells and action cells. These connections are modified by associative Hebbian learning as the network agent explores its sensory training environment. During this process, the layer of SA cells is put under heavy mutual inhibition, such that very few cells can fire at the same time. This inhibition means that cells’ receptive fields become distributed evenly across the input space, because each cell can only fire if it learns to respond to part of the input space that has not been “claimed” by other cells.

An agent’s state is usually defined by several pieces of (partially) independent information. In this case the neural representation of the state will be distributed, with multiple state cells active simultaneously. Moreover individual state cells will be active for multiple different states. For example, when making coffee, the amount and position of the coffee grinds are an important indicator of your state, but so is the position of the cup, the kettle, etc. It is therefore important to see whether the model can learn to represent states that are defined in this fashion. A simple way to do so is to replace the single one-hot state representation with an xy-coordinates representation, thus defining the state with two independent pieces of information.

If the agent’s current state is and it then moves west, encountering the state

for the first time, it will need to learn a new SA cell or state column to represent this new state. If there were only one piece of information, as in the previous sections, this would not be a problem: none of the existing SA cells have a connection to this new state, so the unused SA cells will compete to represent it. One of them will win that competition and thereafter will represent this new state. However, when the distributed xy-coordinate representation is used, there is already an SA cell that is partly associated with the state

because that state shares the same y-coordinate as the SA cell’s true state: