Abstract

Despite the increasing emphasis on interprofessional education (IPE) in curricula and the potential benefits for student learning, there appears to be a lack of evidence directing authentic and accurate assessment of student-learning outcomes and translation of assessment data into scores and grades. Given the increasing importance of reflection and simulation in IPE, the purpose of this systematic review was to identify, appraise, and synthesize published literature using reflection and simulation as summative assessment tools to evaluate student outcomes following IPE activities. The Crowe Critical Appraisal Tool was used to appraise the included articles for quality. This systematic review yielded only five studies of marginal quality, highlighting the limited rigorous use of either reflection or simulation for summative assessment purposes. This review has identified a need for summative IPE assessment alongside formative assessments. Furthermore, training needs to be offered both to faculty assessors, to ensure they are competent and results are reproducible, and to students, to equip them with the knowledge, critical thinking skills, and attitude for becoming reflective practitioners who are able to practice interprofessionally. The assessment of IPE remains a challenge, and there is a clear gap in the literature where research needs to grow.

Introduction

Interprofessional education (IPE) is of great significance for health professionals’ training programs worldwide (Buring et al., 2009). Accreditation agencies for many professional programs now mandate IPE as a core curricular component (Ascione et al., 2021; Grymonpre et al., 2021; Zorek & Raehl, 2013). Institutions and departments are also incorporating IPE development, implementation, and evaluation into strategic and operational plans. The impact of IPE on student learning and the adoption of collaborative care is yet to be fully captured. Still, available data suggest that IPE experiences facilitate the development of various team-related competencies, including professional accountability, communication, shared decision-making, and person-centered care (Brashers et al., 2016). Students also generally enjoy IPE and positively perceive it as a preferred learning modality with other healthcare students (Fox et al., 2018). IPE learning outcomes are usually based on one of the existing IPE shared competency or capability-based frameworks (e.g., Brewer et al., 2012; Interprofessional Education Collaborative Expert Panel, 2011; Orchard et al., 2010). Despite the increasing emphasis on IPE in curricula and the potential benefits for student learning, there appears to be a lack of evidence directing how to authentically and accurately assess student-learning outcomes and translate assessment data into scores and grades, including pass-fail grades.

Although formal assessment of IPE is encouraged and promoted, summative assessment of IPE competencies is not well documented within the literature and is one of the most significant challenges for designing an IPE program or event (Reeves et al., 2015; Stone, 2010). Despite a wealth of knowledge about student and faculty attitudes and perceptions about IPE and satisfaction with specific events or programs, there is a paucity of data available to inform robust assessment of student learning based on IPE activities (Rogers et al., 2017). The articles and studies available that describe assessment procedures primarily focus on formative feedback and do not assign grades or summative scores to student work or performance. Reasons for this phenomenon include difficulty standardizing assessment weighting across professional groups and/or difficulty targeting IPE competencies within usual assessment practices (Reeves et al., 2015; Rogers et al., 2017). Examining the impact of IPE on student performance has been recommended (Kahaleh et al., 2015). As IPE evolves and becomes embedded within different training programs, research-supported assessment systems must be built to ensure students are meeting the target outcomes and competencies of IPE activities (Dagenais et al., 2018; Rogers et al., 2017).

Two approaches that are known to be useful in IPE assessment are simulation and reflection (Cullen et al., 2003; Domac et al., 2015). On the one hand, simulation, a performance-based assessment that allows students to demonstrate what they would do in a given scenario, may be useful for assessing IPE competencies relating to communication, shared decision-making, or teamwork. Objective Structured Clinical Examinations (OSCEs) and Team Objective Structured Clinical Examinations (TOSCEs) are common forms of simulation for the assessment of these competencies (Murray-Davis et al., 2013; Simmons et al., 2011). On the other hand, reflection may be better suited to assess students’ self-awareness and critical insight into competencies relating to professional roles and values/ethics (Roy et al., 2016). There may be overlap between these assessment methods, and others, when designing competency-based assessment programs. Despite the increased use of both simulation and reflection within IPE activities, there appears to be very limited information regarding student outcomes assessment. In particular, there is a need to know how scores or grades can be assigned to these assessments, using well-defined rubrics, to grade the learning of the individual student or the team (Reeves, 2012; Rogers et al., 2017).

Given the increasing importance of reflection and simulation in IPE training programs, yet the lack of evidence on how these are used to assess student outcomes, the purpose of this systematic review was to identify, appraise, and synthesize published literature using reflection and simulation as summative assessment tools to evaluate student outcomes following IPE activities. The research questions for this systematic review were:

What is the nature of IPE activities associated with using reflection and simulation as summative assessment tools?

What grading rubrics for summative assessment have been used following the reflection and simulation IPE activity?

Which of the IPE shared competencies were assessed with the use of reflection and simulation as summative assessment tools?

Method

Data sources and search strategy

A detailed literature search was conducted to select potentially eligible original studies addressing the use of simulation and/or reflection in IPE assessment. We searched six databases and search engines for eligible articles: MEDLINE/PubMed, EMBASE, CINAHL, Scopus, Cochrane Library, and Google Scholar. The Journal of Interprofessional Care and BMC Medical Education were selected as journals focusing on IPE and manually screened. Reference lists of included articles were manually screened for additional studies. Two independent authors searched each database (AE & MJ, KJ & KW). The time frame for eligible articles was from 2002 until 1st April 2021. The starting period is the year IPE was officially defined and described in the literature by the UK Centre for the Advancement of Interprofessional Education as giving two or more health professions the opportunity to “learn with, from and about each other to improve collaboration and the quality of care” (Centre for the Advancement of Interprofessional Education, 2002).

The following search terms were used to identify relevant papers and were combined using appropriate Boolean connectors (AND/OR):

Category A (population): Search of student, undergraduate, university, and pre-licensure in the text

Category B (intervention): Search of IPE, interprofessional, interdisciplin*, inter-disciplin*, multidisciplinary, multi-disciplin*, cross-disciplinary, multiprofession*, multi-profession*, Interprofession*, and inter-profession* in the title and abstract

Category C: Search of reflect*, debriefing, simulation, and Objective Structured Clinical Exam* in the title and abstract

Category D (assessment): Search of assessment, evaluation, grade, exam, examination, assignment, and test in the title and abstract

The only limit applied to the search was language: English.
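As an illustration only, the four term categories above can be assembled into a single Boolean string. The sketch below assumes that terms within a category are joined with OR and that the categories are joined with AND; the text states only that "appropriate Boolean connectors (AND/OR)" were used, so this grouping is an assumption. The names `CATEGORIES`, `or_block`, and `build_query` are hypothetical, field restrictions (full text versus title and abstract) are omitted, and real databases differ in how they handle truncation and quoted phrases.

```python
# Illustrative sketch: term lists are copied from Categories A-D above.
# The OR-within / AND-between grouping is an assumption, not the authors'
# documented search string.
CATEGORIES = {
    "A": ["student", "undergraduate", "university", "pre-licensure"],
    "B": ["IPE", "interprofessional", "interdisciplin*", "inter-disciplin*",
          "multidisciplinary", "multi-disciplin*", "cross-disciplinary",
          "multiprofession*", "multi-profession*", "Interprofession*",
          "inter-profession*"],
    "C": ["reflect*", "debriefing", "simulation",
          "Objective Structured Clinical Exam*"],
    "D": ["assessment", "evaluation", "grade", "exam", "examination",
          "assignment", "test"],
}


def quote(term):
    """Wrap multi-word terms in double quotes so they are kept as a phrase."""
    return f'"{term}"' if " " in term else term


def or_block(terms):
    """Join the terms of one category with OR inside parentheses."""
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def build_query(categories):
    """Join the per-category OR blocks with AND."""
    return " AND ".join(or_block(terms) for terms in categories.values())


query = build_query(CATEGORIES)
print(query)
```

A string built this way would still need to be adapted to each database's own syntax and field tags before use.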

Study selection and inclusion criteria

Primary studies evaluating undergraduate students from different health professions attending IPE sessions were included if the study described the evaluation methodology used and focused on reflection and/or simulation. Articles were also included if the IPE activity took place as part of a course in which undergraduate students were enrolled and the assessment form was summative in nature. Results identified from the search strategy were divided among the authors and reviewed by at least two authors for the title and abstract, then full text. Any discrepancies were resolved through team discussion. Articles were excluded for the following reasons: inclusion of practicing healthcare professionals, evaluation of students’ attitude/behaviour/change/perceptions/readiness/experience with IPE rather than summative assessment, and qualitative research if it focused on students’/faculty perceptions and/or was not part of a summative assessment. Unpublished studies and conference proceedings were not included.

Data extraction and synthesis

Two authors (AE & MJ) independently reviewed and recorded study data using a data extraction template. The extracted data encompassed author(s), publication year, country, setting, definition of IPE, primary objectives, study design, sample recruitment method, inclusion criteria, exclusion criteria, observation period, method of analysis, number of professions included, type of professions included, number of participating students, student year/level, IPE topic covered, a brief description of the course, competencies assessed, the assessment used, assessment grading, key findings, conclusion, limitations, funding, and quality assessment.

Study quality

The Crowe Critical Appraisal Tool (CCAT) version 1.4 was used to assess the quality of the included articles (Crowe, 2013). This tool was developed to systematically review the quality of different research articles regardless of study design and leads to a final comparative score. The tool has eight main categories (preliminaries, introduction, design, sampling, data collection, ethical matters, results, and discussion); these are divided into 22 items, which are further divided into 98 item descriptors. A user guide is provided with the tool to ensure reliability and validity of scoring. Each of the eight categories is allocated a discrete score from zero to five. The total score (out of 40) was then converted to its corresponding percentage, where a higher score indicates better quality. Two reviewers (AE & MJ) independently evaluated the quality of the included articles, and the mean score was calculated. A discrepancy of 20% in quality score required a third reviewer to assess the quality of the paper, with the average of the nearest scores then taken.
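The scoring arithmetic described above can be sketched as follows. This is a minimal illustration, not the CCAT's own software: the function names and the example scores are hypothetical, and the 20% discrepancy rule is interpreted here as a difference of at least 20 percentage points between the two reviewers, which is an assumption about the intended threshold.

```python
from statistics import mean

CCAT_CATEGORIES = (
    "preliminaries", "introduction", "design", "sampling",
    "data collection", "ethical matters", "results", "discussion",
)
MAX_PER_CATEGORY = 5  # each category is scored 0-5, so the maximum total is 40


def ccat_percentage(scores):
    """Convert eight category scores (0-5 each) into a percentage of 40."""
    if set(scores) != set(CCAT_CATEGORIES):
        raise ValueError("scores must cover exactly the eight CCAT categories")
    for name, value in scores.items():
        if not isinstance(value, int) or not 0 <= value <= MAX_PER_CATEGORY:
            raise ValueError(f"{name}: score must be an integer from 0 to 5")
    total = sum(scores.values())
    return 100 * total / (len(CCAT_CATEGORIES) * MAX_PER_CATEGORY)


def appraise(reviewer_a, reviewer_b, threshold=20.0):
    """Return the mean percentage and whether a third reviewer is needed.

    Assumption: the rule is read as a gap of at least `threshold`
    percentage points between the two reviewers.
    """
    pct_a = ccat_percentage(reviewer_a)
    pct_b = ccat_percentage(reviewer_b)
    needs_third_reviewer = abs(pct_a - pct_b) >= threshold
    return mean([pct_a, pct_b]), needs_third_reviewer


# Made-up scores for illustration; they do not come from any included study.
reviewer_a = {name: 3 for name in CCAT_CATEGORIES}         # total 24 of 40
reviewer_b = {**reviewer_a, "design": 2, "sampling": 2}    # total 22 of 40
mean_pct, needs_third = appraise(reviewer_a, reviewer_b)
print(mean_pct, needs_third)
```

With these made-up scores the two reviewers differ by five percentage points, so no third review would be triggered under this reading of the rule.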

Results

Characteristics of included articles

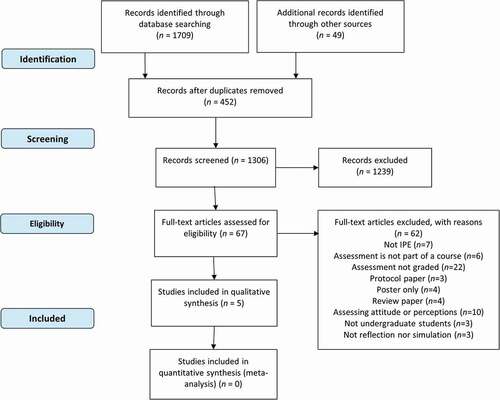

A total of 1,758 articles were identified from the searched databases. After removing duplicates (n = 452), 1,306 were further screened by title and abstract. Five articles were finally included after two rounds of full-text screening (n = 67) by all authors. Common reasons for exclusion included providing an ungraded assessment or assessing only students’ perceptions and attitude change (n = 32).
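The screening counts reported above can be checked with simple arithmetic. The sketch below uses only the numbers stated in the text; the variable names are hypothetical, and it assumes that the 67 full-text articles were drawn from the 1,306 records screened by title and abstract.

```python
# Counts as reported in the text.
identified = 1758
duplicates = 452
full_text_assessed = 67
included = 5

# Derived counts (assumes the 67 full texts come from the screened records).
screened = identified - duplicates
excluded_title_abstract = screened - full_text_assessed
excluded_full_text = full_text_assessed - included

print(screened, excluded_title_abstract, excluded_full_text)
```

Under that assumption, the derived figures are consistent with the 1,306 screened records reported in the text.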

The studies identified were conducted in the UK, USA, Malaysia, and Canada. They collectively involved 939 students from 10 professions. Three of the included studies (Machin & Jones, 2014; Root & Waterfield, 2015; Tan et al., 2014) characterized IPE and used the definition of the UK Centre for the Advancement of Interprofessional Education (CAIPE); the rest did not. The number of participating health professions ranged from two to eight. Included studies described IPE activities conducted for students as early as the first year and as late as the final pre-licensure year. Undergraduate students from medicine, occupational therapy, pharmacy, and physiotherapy were part of at least three of the included studies. Two studies described student IPE assessment through simulation activities; three did so through post-IPE reflection assignments. Although scores from these IPE activity assessments contributed to an overall course grade, only one study indicated its weight (Tan et al., 2014). The overall quality of the included studies ranged from 28% to 78%, with a mean score of 57.4%.

Table 1. Characteristics of included studies.

IPE activity assessment

The IPE activities of the included studies comprised virtual simulation of a patient’s home environment (Sabus et al., 2011), implementation of a Plan-Do-Study-Act (PDSA) service improvement cycle in a practice setting (Machin & Jones, 2014), interprofessional visits to patients’ homes and related community-based organizations (Tan et al., 2014), interprofessional case-based discussion (Root & Waterfield, 2015), and managing a simulated patient with stroke (MacKenzie et al., 2017). IPE activities were short and episodic (e.g., one for 90 minutes) or longitudinal (e.g., 16 weeks). One of the included articles in this review considered the curriculum context amongst the different healthcare professions and variability in practice placements in terms of length and timing (Machin & Jones, 2014). In another study, the activity was compulsory for all second-year medical students and elective for third-year pharmacy students (Tan et al., 2014).

One of the included articles referred to the Canadian Interprofessional Health Collaborative (CIHC) interprofessional competency framework (MacKenzie et al., 2017; Orchard et al., 2010). These competencies include interprofessional communication, team functioning, role clarification, collaborative patient/family-centered approach, conflict management/resolution, and collaborative leadership. The remaining articles assessed some of these competencies without reference to an existing shared IPE competency framework.

Three articles described their assessment instrument for the IPE activity. One study used a rubric to grade an assignment provided following a virtual simulation activity (Sabus et al., 2011). The rubric was developed by the research team and encompassed four criteria arising from the IPE activity learning objectives: patient-centered environmental recommendations, interprofessional collaborative effort, consideration of contextual factors, and appreciation of unintended consequences. Each learning objective was graded using a 3-point grading rubric completed independently by two course coordinators (physical therapy and occupational therapy). Another study similarly used a rubric to grade student reflections after two 3-hour IPE activities involving team-building exercises (Root & Waterfield, 2015). Three of the seven grading rubric items were directly related to reflective practice: the student's analysis and evaluation of the IPE activity, identification of personal learning, and a devised action plan for personal development. These outcomes were rated on a 4-point grading rubric classified as Beginning, Developing, Competent, or Proficient. Overall, the reflective piece of work was then marked as Pass or Fail. However, for many students, the writing was more descriptive than reflective, which could be attributed to ambiguity about what was being assessed in terms of learning outcomes (Root & Waterfield, 2015).

On the other hand, MacKenzie et al. (2017) used a rubric to grade team care plans that students submitted after a simulation activity related to stroke. The rubric assessed the best practice recommendations provided and their application across individual and interprofessional practice competencies. These were assessed on a 5-point grading scale classified as excellent, very good, good, satisfactory, and fail. However, no definitions of the levels were provided. This IPE activity was part of a skills-based course, but the authors did not describe the course content or objectives.

Student outcomes

Three studies used reflections after an IPE activity (Machin & Jones, 2014; Root & Waterfield, 2015; Tan et al., 2014). However, one study did not indicate how well the students had performed in the assessment but conducted a content analysis of the reflections, including several IPE competencies (Machin & Jones, 2014). Although Root and Waterfield indicated that 89% (n = 147) of the students had passed the assignment, the authors said that students were competent at analyzing the situation and describing the event but struggled to link the activity to their personal development (Root & Waterfield, 2015). The authors of another study demonstrated that about 35% of the students scored high in reflections (20 or above out of 25; Tan et al., 2014). These included 26% of pharmacy students and 38% of medical students (both from the uniprofessional and interprofessional groups). However, the authors of the included articles conducted thematic analysis of the reflection content and did not indicate where the students were struggling or where they did best. Nonetheless, the authors highlighted that these reflective journals generally represent good quality reflections that go beyond descriptive writing and contain richer data.

Two studies used an assignment related to the IPE simulation activity (MacKenzie et al., 2017; Sabus et al., 2011). In one study, students completed an assignment and did well in meeting the assignment objectives, with only a few groups struggling with patient-centered recommendations and interprofessional collaboration (Sabus et al., 2011). The authors did not provide more details regarding the students’ performance in this assignment. Finally, one of the studies provided the grade distribution of the care plans, which was 5% (n = 3) excellent, 18% (n = 10) very good, 67% (n = 37) good, and 9% (n = 5) satisfactory. Nonetheless, the authors did not indicate where the students struggled most (MacKenzie et al., 2017).
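As a quick consistency check on the care plan grade distribution reported above, the percentages can be recomputed from the counts. This sketch assumes that the denominator is the sum of the four reported counts, since the text gives no separate total and no count for the fail level; the variable names are hypothetical.

```python
# Counts per grade level as reported in the text (no count is given for "fail").
grade_counts = {"excellent": 3, "very good": 10, "good": 37, "satisfactory": 5}

# Assumption: the denominator is the sum of the reported counts.
total = sum(grade_counts.values())

# Percentages rounded to whole numbers, as in the text.
percentages = {grade: round(100 * n / total) for grade, n in grade_counts.items()}

print(total, percentages)
```

Because each share is rounded separately, the rounded percentages need not sum to exactly 100.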

Discussion

The purpose of this systematic review was to characterize the published literature evaluating student outcomes summatively following IPE activities using reflection and simulation. Although a wealth of literature is available on various assessment tools for IPE, our findings indicate minimal rigorous use of either reflection or simulation for summative assessment purposes. Most of the excluded articles focused on assessing students’ perceptions and attitude change rather than summative assessment. This systematic review yielded only five studies with variable overall quality scores, ranging from 28% to 78% with an average score of 57.4%, highlighting the insufficient evidence available on IPE assessments and the rubrics used.

Crucial to the success of IPE implementation is assessment integration of IPE activities, as it is widely accepted that assessment drives learning (Ferris & O’Flynn, 2015; Wormald et al., 2009). Formative assessment for IPE has been encouraged due to the interactive nature of IPE and to enable the integration of supportive feedback to provide the students with a learning opportunity to reflect with their instructors and peers on strengths, weaknesses, and areas for improvement (Morison & Stewart, 2005). However, CAIPE urged a cultural change with both formative and summative assessment utilized and integrated into existing curricula and aligned to IPE teaching and delivery for greater student accountability (Barr et al., 2016). Furthermore, they added that both students and faculty may value assessment more when it is summative in nature (Barr et al., 2016). The weight of an assessment assigned toward a final course grade has a profound effect on students’ motivation to learn (Wormald et al., 2009). Ongoing reliance on formative assessment to evaluate student outcomes perpetuates views that IPE is optional with limited significance to the learner (Stone, 2010).

A summative approach to assessment may be neglected by health professional programs developing and administering IPE activities for many reasons. IPE brings together different health professions, so it is difficult to develop shared assignments for use in profession-specific courses. Other factors to consider are the curriculum context, the level of previous IPE exposure or previous content exposure and proficiency, and unequal distribution in the number of students from the different professions. Equitable IPE learning is an essential factor in planning for shared IPE assessment, especially when it is related to experiential learning (Machin & Jones, 2014). The potential value of summative assessment in IPE cannot be ignored considering the dedicated resources needed for the development and implementation of IPE programs. In addition, there is a growing need for equitable interprofessional-based competency assessment across all professions to ensure fair exposure to IPE by healthcare students (Barr et al., 2016). An IPE assessment committee has been recommended, with members from all involved professions collaborating to formalize an overall IPE assessment plan of student competencies, ensure commitment and delivery across the different professions, and validate the necessity of IPE within health programs (Kahaleh et al., 2015; Stone, 2010). The committee could look at the overall plan to avoid overassessment and underassessment of IPE competencies (Kahaleh et al., 2015).

Summative reflection needs to be part of a continuous process of reflecting, planning, applying, and evaluating and not an endpoint to an individual IPE activity (Root & Waterfield, 2015). Although a summative reflective portfolio has been shown to foster the development of reflective practice capabilities (O’Sullivan et al., 2012), rubrics for reflective writing have been viewed as a limitation to the essence of the reflection process, as students tend to categorize their reflection according to the rubric domain rather than reflecting on their learning and linking their concepts together (Root & Waterfield, 2015). Some argue that assessed reflection may lead to student dishonesty, fabrication, and exaggeration about their experiences to achieve the assessment’s objective and satisfy the assessor (Genua, 2021; Maloney et al., 2013). A key factor influencing students’ engagement in honest reflection is whether students have been educated to develop their critical thinking skills to promote student reflection and understand these activities’ relevance to becoming reflective practitioners. Additionally, the assessment approach (i.e., summative vs. formative) can also have an influence on students’ reflective ability (Maloney et al., 2013). Targeted strategies considering these factors are essential to developing reflective practitioners who are able to practice interprofessionally with an appreciation of their own roles and the role of other team members within the healthcare team (Zarezadeh et al., 2009). Changing the assessment from having a score or a percentage to a grade of complete/incomplete or pass/fail has been recommended (Genua, 2021; Maloney et al., 2013).

It has been suggested that IPE assignments need to be aligned with the IPE shared core competencies and the intended learning outcomes of the IPE program to assess students’ interprofessional capabilities (Kahaleh et al., 2015). These can include interprofessional communication, role clarification, collaborative decision making, team functioning, reflexivity, interprofessional values, and ethics (Rogers et al., 2017). Competencies need to be readily observed or detected in writing to be graded to document that student performance meets expected predetermined standards. Students need to be oriented on the format of IPE assessments. In one of the included studies, students were oriented on reflective writing through a series of lectures and workshops. However, student reflections were primarily descriptive with limited focus on teamwork. More clarity around expected learning outcomes from the reflection would enhance student reflective ability (Root & Waterfield, 2015). An appropriate remedial action plan is required for students who do not pass these assessments.

When planning IPE assessments, faculty members will need to consider whether the assessment is individual or team-based and whether the grade is given to the individual student or to the team (Thistlethwaite & Vlasses, 2017). For example, simulation through OSCE is commonly used in various health professions’ education, and hence it is appropriate to use to assess IPE competencies (Morison & Stewart, 2005). However, OSCE scoring criteria need to be valid, reliable, and reproducible by different assessors in measuring intended knowledge, skills, and competencies, which can be challenging due to the inherent variability and low generalizability of team scores on assessing the team (Lie et al., 2017; Oermann et al., 2016). Furthermore, some simulations include complex clinical content focusing on appropriate intervention rather than interprofessional work (Anderson et al., 2016). In addition to the logistical challenges of coordinating IPE simulation activity, TOSCEs have been suggested to prepare for summative evaluation (Lie et al., 2017). This is in accordance with the findings of this systematic review, where a graded written reflection or team-based collaborative plan followed IPE simulation activities (MacKenzie et al., 2017; Sabus et al., 2011). Furthermore, class time can be reserved for teamwork to enhance student acceptability of group assignments and successful collaboration amongst students (Sabus et al., 2011). Other factors to consider for IPE summative assessments include the weighting in terms of allocated grades or pass/fail, timing, professions to be involved, uniprofessional or interprofessional assessors, the format of faculty development needed, and the impact of these assessments (Thistlethwaite & Vlasses, 2017).

Moving away from traditional assessment tools such as examinations with multiple-choice, true/false, and short-answer questions, which are based on memorizing and discourage collaboration, to tools that encourage collaboration has been suggested to ensure deep learning and understanding within the IPE context (Stone, 2010). An example included in this review was the development of a team-based collaborative care plan that was assessed following the IPE simulation (MacKenzie et al., 2017).

An international consensus statement on the assessment of interprofessional learning outcomes highlighted the following gaps in need of future exploration: the impact of IPE assessment and its connection to patient-centered care, the minimum number of required IPE assessments, faculty development, and strategies for optimal feedback (Rogers et al., 2017). Future researchers need to document assessed IPE activities and share the rubrics used to provide more clarity on the assessment method. There is a need to study, investigate, and develop different approaches for IPE assessments that are valid and reliable, with cultural adaptation and validation within an interprofessional learning environment, and that assessors can use with confidence. This will determine whether students have met the intended competencies and ensure graduates have the needed capabilities to practice collaboratively and improve patient outcomes (Rogers et al., 2017). There is a need to longitudinally assess and evaluate the learner’s knowledge, skills, behavior, and performance related to IPE during their journey in the program (Roberts et al., 2019; Stone, 2010).

There are several limitations of this systematic review. A strict selection process was followed to answer the review-specific questions, and hence a very limited number of articles were included. Studies where the assessment tool was not part of the summative assessment were excluded. The number of articles included in this systematic review was limited to only five; some IPE programs may utilize reflection and simulation for summative assessment but have not published this information and merely used it for internal evaluation. Other assessment approaches in which IPE was graded by means other than reflection or simulation may also exist. Furthermore, authors prefer or tend to publish innovations in the delivery of IPE content with a focus on readiness and attitude rather than assessment of student performance or IPE competencies acquired.

Conclusion

The assessment of interprofessionalism remains a challenge, and there is a clear gap in the literature about the use of reflection or simulation for summative assessment purposes where research needs to grow. This review identified a need for both formative and summative IPE assessment. However, these assessments need to be valid, reliable, mapped to IPE shared competencies, and part of an overall IPE assessment plan to ensure commitment and delivery across the different participating professions. Furthermore, training needs to be offered to both faculty assessors, to ensure they are competent and results are reproducible, and to students, to equip them with the knowledge, critical thinking skills, and attitude for becoming reflective practitioners who are able to practice interprofessionally. Future researchers should give priority to including information about the rubric used and longitudinal evaluation. It is time to raise the bar for innovative IPE assessment approaches.

Acknowledgments

Open Access funding provided by the Qatar National Library.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Anderson, E., Smith, R., & Hammick, M. (2016). Evaluating an interprofessional education curriculum: A theory-informed approach. Medical Teacher, 38(4), 385–394. https://doi.org/10.3109/0142159X.2015.1047756

- Ascione, F. J., Najjar, G., Barnett, S. G., Benkert, R. A., Ludwig, D. A., Doll, J., Gallimore, C. E., Pandey, J., & Zorek, J. A. (2021). A preliminary exploration of the impact of accreditation on interprofessional education using a modified Delphi analysis. Journal of Interprofessional Education & Practice, 25, 100466. https://doi.org/10.1016/j.xjep.2021.100466

- Barr, H., Gray, R., Helme, M., Low, H., & Reeves, S. (2016). Interprofessional education guidelines 2016. Centre for the Advancement of Interprofessional Education. https://www.caipe.org/resources/publications/caipe-publications/barr-h-gray-r-helme-m-low-h-reeves-s-2016-interprofessional-education-guidelines

- Brashers, V., Erickson, J. M., Blackhall, L., Owen, J. A., Thomas, S. M., & Conaway, M. R. (2016). Measuring the impact of clinically relevant interprofessional education on undergraduate medical and nursing student competencies: A longitudinal mixed methods approach. Journal of Interprofessional Care, 30(4), 448–457. https://doi.org/10.3109/13561820.2016.1162139

- Brewer, M., Gribble, N., Lloyd, A., Robinson, P., & White, S. (2012). Interprofessional capability assessment tool (ICAT). http://healthsciences.curtin.edu.au/wpcontent/uploads/sites/6/2015/10/M4_Interprofessional_Capability_Assessment_Tool_2014.pdf

- Buring, S. M., Bhushan, A., Broeseker, A., Conway, S., Duncan-Hewitt, W., Hansen, L., & Westberg, S. (2009). Interprofessional education: Definitions, student competencies, and guidelines for implementation. American Journal of Pharmaceutical Education, 73(4), 59. https://doi.org/10.5688/aj730459

- Centre for the Advancement of Interprofessional Education. (2002). Interprofessional education – A definition. Retrieved April 25, 2015, from http://www.caipe.org.uk/resources/

- Crowe, M. (2013). Crowe critical appraisal tool (CCAT) user guide. Conchra House.

- Cullen, L., Fraser, D., & Symonds, I. (2003). Strategies for interprofessional education: The interprofessional team objective structured clinical examination for midwifery and medical students. Nurse Education Today, 23(6), 427–433. https://doi.org/10.1016/S0260-6917(03)00049-2

- Dagenais, R., Pawluk, S. A., Rainkie, D. C., & Wilby, K. (2018). Team based decision making in an objective structured clinical examination (OSCE). Innovations in Pharmacy, 9(3), 14. https://doi.org/10.24926/iip.v9i3.1255

- Domac, S., Anderson, L., O’Reilly, M., & Smith, R. (2015). Assessing interprofessional competence using a prospective reflective portfolio. Journal of Interprofessional Care, 29(3), 179–187. https://doi.org/10.3109/13561820.2014.983593

- Ferris, H., & O’Flynn, D. (2015). Assessment in medical education: What are we trying to achieve? International Journal of Higher Education, 4(2), 139–144. https://doi.org/10.5430/ijhe.v4n2p139

- Fox, L., Onders, R., Hermansen-Kobulnicky, C. J., Nguyen, T.-N., Myran, L., Linn, B., & Hornecker, J. (2018). Teaching interprofessional teamwork skills to health professional students: A scoping review. Journal of Interprofessional Care, 32(2), 127–135. https://doi.org/10.1080/13561820.2017.1399868

- Genua, J. A. (2021). The relationship between the grading of reflective journals and student honesty in reflective journal writing. Nursing Education Perspectives, 42(4), 227–231. https://doi.org/10.1097/01.NEP.0000000000000826

- Grymonpre, R. E., Bainbridge, L., Nasmith, L., & Baker, C. (2021). Development of accreditation standards for interprofessional education: A Canadian case study. Human Resources for Health, 19(1), 12. https://doi.org/10.1186/s12960-020-00551-2

- Interprofessional Education Collaborative Expert Panel. (2011). Core competencies for interprofessional collaborative practice: Report of an expert panel. https://ipec.memberclicks.net/assets/2016-Update.pdf

- Kahaleh, A. A., Danielson, J., Franson, K. L., Nuffer, W. A., & Umland, E. M. (2015). An interprofessional education panel on development, implementation, and assessment strategies. American Journal of Pharmaceutical Education, 79(6), 78. https://doi.org/10.5688/ajpe79678

- Lie, D. A., Richter-Lagha, R., Forest, C. P., Walsh, A., & Lohenry, K. (2017). When less is more: Validating a brief scale to rate interprofessional team competencies. Medical Education Online, 22(1), 1314751. https://doi.org/10.1080/10872981.2017.1314751

- Machin, A. I., & Jones, D. (2014). Interprofessional service improvement learning and patient safety: A content analysis of pre-registration students’ assessments. Nurse Education Today, 34(2), 218–224. https://doi.org/10.1016/j.nedt.2013.06.022

- MacKenzie, D., Creaser, G., Sponagle, K., Gubitz, G., MacDougall, P., Blacquiere, D., Miller, S., & Sarty, G. (2017). Best practice interprofessional stroke care collaboration and simulation: The student perspective. Journal of Interprofessional Care, 31(6), 793–796. https://doi.org/10.1080/13561820.2017.1356272

- Maloney, S., Tai, J. H.-M., Lo, K., Molloy, E., & Ilic, D. (2013). Honesty in critically reflective essays: An analysis of student practice. Advances in Health Sciences Education, 18(4), 617–626. https://doi.org/10.1007/s10459-012-9399-3

- Morison, S. L., & Stewart, M. C. (2005). Developing interprofessional assessment. Learning in Health and Social Care, 4(4), 192–202. https://doi.org/10.1111/j.1473-6861.2005.00103.x

- Murray-Davis, B., Solomon, P., Malott, A., Marshall, D., Mueller, V., Shaw, E., Dore, K., & Burns, S. (2013). A team observed structured clinical encounter (TOSCE) for pre-licensure learners in maternity care: A short report on the development of an assessment tool for collaboration. Journal of Research in Interprofessional Practice and Education, 3(1). https://doi.org/10.22230/jripe.2013v3n1a89

- O’Sullivan, A. J., Howe, A. C., Miles, S., Harris, P., Hughes, C. S., Jones, P., Scicluna, H., & Leinster, S. J. (2012). Does a summative portfolio foster the development of capabilities such as reflective practice and understanding ethics? An evaluation from two medical schools. Medical Teacher, 34(1), e21–e28. https://doi.org/10.3109/0142159X.2012.638009

- Oermann, M. H., Kardong-Edgren, S., & Rizzolo, M. A. (2016). Summative simulation-based assessment in nursing programs. Journal of Nursing Education, 55(6), 323–328. https://doi.org/10.3928/01484834-20160516-04

- Orchard, C., Bainbridge, L., Bassendowski, S., Stevenson, K., Wagner, S. J., Weinberg, L., Curran, V., Di Loreto, L., & Sawatsky-Girling, B. (2010). A national interprofessional competency framework. Canadian Interprofessional Health Collaborative. https://ipcontherun.ca/wp-content/uploads/2014/06/National-Framework.pdf

- Reeves, S., Boet, S., Zierler, B., & Kitto, S. (2015). Interprofessional education and practice guide No. 3: Evaluating interprofessional education. Journal of Interprofessional Care, 29(4), 305–312. https://doi.org/10.3109/13561820.2014.1003637

- Reeves, S. (2012). The rise and rise of interprofessional competence. Journal of Interprofessional Care, 26(4), 253–255. https://doi.org/10.3109/13561820.2012.695542

- Roberts, S. D., Lindsey, P., & Limon, J. (2019). Assessing students’ and health professionals’ competency learning from interprofessional education collaborative workshops. Journal of Interprofessional Care, 33(1), 38–46. https://doi.org/10.1080/13561820.2018.1513915

- Rogers, G. D., Thistlethwaite, J. E., Anderson, E. S., Abrandt Dahlgren, M., Grymonpre, R. E., Moran, M., & Samarasekera, D. D. (2017). International consensus statement on the assessment of interprofessional learning outcomes. Medical Teacher, 39(4), 347–359. https://doi.org/10.1080/0142159X.2017.1270441

- Root, H., & Waterfield, J. (2015). An evaluation of a reflective writing assessment within the MPharm programme. Pharmacy Education, 15(1), 142–144.

- Roy, V., Collins, L. G., Sokas, C. M., Lim, E., Umland, E., Speakman, E., Koeuth, S., & Jerpbak, C. M. (2016). Student reflections on interprofessional education: Moving from concepts to collaboration. Journal of Allied Health, 45(2), 109–112.

- Sabus, C., Sabata, D., & Antonacci, D. (2011). Use of a virtual environment to facilitate instruction of an interprofessional home assessment. Journal of Allied Health, 40(4), 199–205.

- Simmons, B., Egan-Lee, E., Wagner, S. J., Esdaile, M., Baker, L., & Reeves, S. (2011). Assessment of interprofessional learning: The design of an interprofessional objective structured clinical examination (iOSCE) approach. Journal of Interprofessional Care, 25(1), 73–74. https://doi.org/10.3109/13561820.2010.483746

- Stone, J. (2010). Moving interprofessional learning forward through formal assessment. Medical Education, 44(4), 396–403. https://doi.org/10.1111/j.1365-2923.2009.03607.x

- Tan, C.-E., Jaffar, A., Tong, S.-F., Hamzah, M. S., & Mohamad, N. (2014). Comprehensive healthcare module: Medical and pharmacy students’ shared learning experiences. Medical Education Online, 19(1), 25605. https://doi.org/10.3402/meo.v19.25605

- Thistlethwaite, J. E., & Vlasses, P. H. (2017). Interprofessional education. In J. A. Dent, R. M. Harden, & D. Hunt (Eds.), A practical guide for medical teachers (5th ed., pp. 128–133). Elsevier.

- Wormald, B. W., Schoeman, S., Somasunderam, A., & Penn, M. (2009). Assessment drives learning: An unavoidable truth? Anatomical Sciences Education, 2(5), 199–204. https://doi.org/10.1002/ase.102

- Zarezadeh, Y., Pearson, P., & Dickinson, C. (2009). A model for using reflection to enhance interprofessional education. International Journal of Education, 1(1). https://doi.org/10.5296/ije.v1i1.191

- Zorek, J., & Raehl, C. (2013). Interprofessional education accreditation standards in the USA: A comparative analysis. Journal of Interprofessional Care, 27(2), 123–130. https://doi.org/10.3109/13561820.2012.718295