ABSTRACT

Many have observed that the rise of GenAI is causing an ‘erosion of trust’ between students and teachers in higher education. Such distrust mainly stems from concerns about student cheating, which is believed to be massively facilitated by recent technological breakthroughs in GenAI. Despite anecdotal discussions, little empirical research has explored students’ experiences with trust in the GenAI age. This study engaged university students in concept mapping activities followed by interviews to investigate how they navigate trust-building with teachers in this AI-mediated assessment landscape. The findings highlight an absence of ‘two-way transparency’ – while students are required to declare their AI use and even submit chat records, the same level of transparency is often not observed from teachers (e.g. ambiguities around how teachers grade AI-mediated work). The transparency issue reinforces teacher-student power imbalances and top-down surveillance mechanisms, resulting in a low-trust environment where students feel unsafe to freely explore GenAI use.

Introduction

Trusting relationships between teachers and students are crucial in developing effective teaching, learning and assessment (Felten, Forsyth, and Sutherland 2023). However, teacher-student relationships have come under increasing pressure in higher education in recent times. For example, the scaling of higher education makes it harder for teachers to connect with students on a personal level whereas more individualised approaches are needed to address the increasingly diverse student body. The intensified focus on research performance metrics (e.g. the number of external grants, h-index) could also deemphasise the relational aspect of university teachers’ work.

The recent rise of generative AI (GenAI) adds another layer of complexity to teacher-student relationships in higher education. A recent report released by Turnitin found that one in 10 university essays is partly AI-written (Williams 2023). Although similar forms of cheating (e.g. outsourcing one’s work to ghostwriters) can be traced back long before the introduction of ChatGPT to the public, technological breakthroughs in GenAI have massively reduced the cost and effort required for students to engage in cheating behaviour (Lodge, Thompson, and Corrin 2023). Unlike cases of plagiarism where a source document can be identified, the absence of a referenceable source for AI cheating often ‘leaves the door for teacher bias to creep in’ (Fowler 2023).

In this context, there is discernible concern among the higher education community that GenAI is causing an ‘erosion of trust’ between students and their teachers (Plé 2023; Gratiot 2023). Sokol (2023) commented that ‘it is too easy to falsely accuse a student of using AI’ and that students are constantly judged in the shadow of suspicion. In July 2023, Times Higher Education reported the story of Emily – an undergraduate law student who was falsely accused of using ChatGPT to write her essay (Sokol 2023). It was not easy for Emily to prove her innocence, and the whole process took an emotional toll on her. As more and more universities subscribe to AI-detection software, students may end up spending more time ensuring their work avoids being ‘flagged’ rather than productively engaging with GenAI for learning purposes. Fowler (2023) went further to argue that there is no way for students to prove their innocence unless ‘your teacher trusts you as a student’. However, due to increased class size and widening participation in higher education, it can be challenging to build trusting relationships between teachers and students.

At present, little is known about how students navigate trust-building with their teachers in this AI-mediated assessment landscape. For example, what strategies do students employ to establish trust with their teachers? What challenges or concerns do they experience? How do they perceive the role of emerging technologies (i.e. GenAI) in influencing teacher-student trust? Giving voice to students is important because how students perceive and experience trust will likely impact teacher-student relationships, their academic achievement and engagement with GenAI for learning purposes. This study will shed light on the above questions through concept mapping activities supplemented by in-depth interviews with 11 university students. With a focus on student experiences, this study is expected to inform the development of more supportive, inclusive and student-centred GenAI policies and practices in higher education.

Defining trust

Although trust and its importance have been extensively discussed across different disciplines (e.g. social psychology, business, education, marketing), it remains an elusive concept whose definition depends upon context. For this study, we follow Hoy and Tschannen-Moran’s (1999) well-cited definition of trust in the education context – i.e. ‘an individual’s or group’s willingness to be vulnerable to another party based on the confidence that the latter party is benevolent, reliable, competent, honest and open’ (189). This ‘willingness to be vulnerable’ can extend in both directions in, for example, teacher-student relationships (e.g. students being willing to admit mistakes to their teachers, and teachers showing willingness to seek constructive feedback from students without defensiveness). Carless (2013) believed that all five features mentioned by Hoy and Tschannen-Moran have relevance to establishing trust in higher education assessment, such as benevolence and competence in feedback delivery, reliability in judgement-making, honest communication of strengths and weaknesses, and openness about assessment processes and criteria. Hoy and Tschannen-Moran’s definition of trust fits well with the research goal as it highlights the interpersonal dimensions of trust and is applicable to the assessment context.

Trust between students and teachers in higher education

Trust is one of the most significant factors contributing to a healthy, positive, and mutually beneficial teacher-student relationship (Felten, Forsyth, and Sutherland 2023). To date, many studies have used quantitative methods to evidence that trust – as an important element of teacher-student relationships – has a major influence on students’ academic motivation and performance (Lee 2007; Adams 2014), perception of safety (Mitchell, Kensler, and Tschannen-Moran 2018), self-regulation (Adams 2014) and identification with school (Tschannen-Moran et al. 2013; Mitchell, Kensler, and Tschannen-Moran 2018). Teachers also benefit from a trusting relationship with students – teachers who have low trust in their students are found prone to burnout (Roffey 2012).

It should be noted that most of the above studies were conducted in the school setting rather than in higher education. Kovač and Kristiansen (2010) argued that trust between university teachers and students differs from that between teachers and younger pupils, in that it is primarily based on ‘knowledge trust’ (e.g. trust of teacher competence) as opposed to ‘personal trust’ (i.e. trust of teacher care and kindness). Kovač and Kristiansen’s argument underscores the need for more research to unveil what teacher-student trust looks like in higher education rather than simply extrapolating findings from school settings. Indeed, the massification of higher education results in less space for developing close interpersonal teacher-student relationships than in traditional schools (Carless 2013). Many university professors may regard research, rather than teaching, as their primary duty, and may place more emphasis on guiding students to learn independently (Macfarlane 2009).

At present, teacher-student trust in higher education is underexplored, with most research focusing on institutional trust (trust towards higher education institutions from neoliberal angles) (Macfarlane 2009; Kovač and Kristiansen 2010; Felten, Forsyth, and Sutherland 2023). A notable exception is Macfarlane (2009) who proposed 25 actions that undermine student trust in university teachers based on four principles of trust – i.e. benevolence, integrity, competence and predictability. Some other scholars developed conceptual frameworks to theorise teacher-initiated trust moves (Felten, Forsyth, and Sutherland 2023) and trust-building between underserved students and practitioners in online learning (Payne, Stone, and Bennett 2022).

Empirical research on trust between teachers and students is particularly scarce in higher education (Felten, Forsyth, and Sutherland 2023). One valuable example is Cavanagh and colleagues’ (2018) study which examined the correlation between physiology undergraduates’ trust towards their instructors and their commitment to active learning. However, similar to the reviewed studies in school settings, Cavanagh et al.’s study also relied heavily on self-report Likert scale surveys to measure trust. Such methodology is limited in that it can only capture surface-level perceptions of trust and tends to oversimplify trust-building into a linear process.

Trust, assessment and technology

Some of the most well-cited studies on trust in higher education assessment are authored by Carless (2009, 2013). Carless (2009, 2013) made a compelling argument that trust between teachers and students is a crucial factor impacting students’ involvement in learning-oriented assessment and their feedback uptake. This is because assessment and feedback experiences often engender strong emotions and trust contributes to an open, dialogic and supportive learning environment for students to proactively engage in these experiences.

With accelerated digitalisation in higher education assessment, some studies have started to explore how technology mediates teacher-student trust in the assessment context. In these studies, however, trust is often discussed in an anecdotal manner or as a secondary theme rather than recognised as a topic worthy of independent investigation (Carless 2013). There are a few exceptions. Ross and Macleod (2018) presented a theoretical discussion regarding the negative impacts of plagiarism detection software on teacher-student trust in the assessment of students’ work. Fawns and Schaepkens (2022) critically reflected on the trust relations produced through high-stakes online proctoring exams. Macfarlane (2022) wrote about the growing distrust towards university students, particularly in relation to the use of anti-plagiarism software and learning analytics to trace student engagement. While these studies contribute valuable insights, they remain conceptual and do not include student perspectives in their investigations. Students are often positioned as ‘trustees’ (i.e. whether they are trusted by teachers) rather than ‘trustors’ with the agency to navigate their trust-building experiences.

With the advent of GenAI, the trust dynamics between university teachers and students in assessment will arguably become more nuanced. A handful of discussion papers have voiced concerns about how GenAI may reinforce a relationship of distrust between university teachers and students (e.g. Kramm and McKenna 2023; Rudolph 2023), but very few empirical studies can be found on this topic. One example is Gorichanaz (2023) who reviewed 49 Reddit posts to understand how students respond to allegations of ChatGPT use in assessment. Although ‘trust’ is not a major focus in this study, the author reported that some students attempted to build trust with their teacher earlier in the semester so the teacher may ‘give them the benefit of doubt’ should suspected cases of AI cheating occur (191).

The current study

The literature review reveals a critical gap in researching trust-building between university teachers and students in an AI-mediated assessment landscape. This line of research will particularly benefit from (1) incorporating qualitative research designs to provide nuanced insights, and (2) ensuring the inclusion of student perspectives to better understand the dynamics of trust-building. With these two goals in mind, this study adopts qualitative research methods to explore:

How do university students navigate trust-building with their teachers in an AI-mediated assessment landscape?

Method

Participant recruitment

Recruitment posters with a brief introduction to the research goal and procedures were published on an online forum hosted by the author’s university. The study did not set specific requirements on the participants’ demographics (e.g. gender, programmes) except that they should have prior experiences with GenAI in their assignments.

Eleven students agreed to participate, and their demographics can be found in Table 1. Most of the recruited students were either studying to become teachers or planning to work in the education field because the university is a major teacher education provider in Asia. Despite limitations in sampling, the dual identity of these students – as university students and future teachers – provides nuanced perspectives as they navigate trust-building with teachers not only as students but also as individuals preparing to enter the education profession.

Table 1. Participant demographics.

GenAI policies at the research site

The University takes a liberal approach to regulating students’ use of different GenAI tools, as long as the use is considered ‘ethical and appropriate’. ChatGPT (GPT-3.5) service has been provided to all students at this university since September 2023 through its intranet and each user is given a monthly credit of 500,000 to use the service. The University has put in place an overarching framework to guide students’ GenAI use, but the framework remains general to allow for contextualisation from course teachers.

Data collection

The study used a three-pronged data collection technique. First, the participants entered a one-on-one interview with the researcher on Zoom to introduce their background information, such as year of study, programme, and prior experience with using GenAI to assist in their assessment.

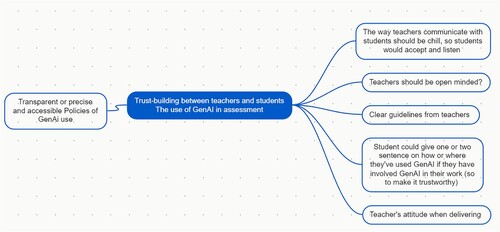

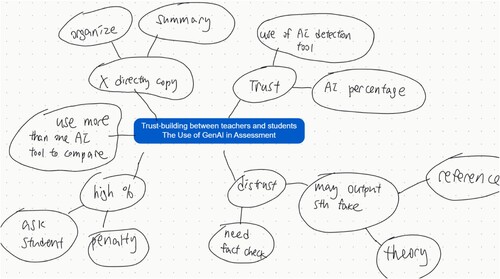

Second, participants were invited to draw a concept map based on the central theme (i.e. ‘Trust-building between Teachers and Students: The Use of GenAI in Assessment’) shown on Zoom’s whiteboard function. Participants were given 15 minutes to visualise their perceptions and experiences related to the theme (see Figures 1 and 2 for some examples). Although some scholars insist on a more restrictive definition of concept map (e.g. hierarchically organised map), this study followed Wheeldon and Faubert’s (2009) advice that concept maps can and should include a wide variety of visual representations to encourage the free expression of participants. Concept mapping was used in this study because it represents a ‘participant-centric’ and ‘user-generated’ data collection method which allows participants themselves to frame their past experiences and understanding of concepts (Legard, Keegan, and Ward 2003; Wheeldon and Faubert 2009, 75). Concept mapping has also been widely adopted in social sciences research and is regarded as useful in ‘gathering more unsolicited reflection [and] providing a visual snapshot of experience’ (Wheeldon and Faubert 2009, 79). Prior to the concept mapping, participants received instructions explaining the meaning and format of concept maps and were assured that there were no right or wrong answers. They were also informed that the wording did not need to be polished, as follow-up interviews would be conducted to gather additional information.

Third, follow-up interviews were conducted to clarify the contents represented in students’ concept maps. Participants were first invited to walk the researcher through their concept maps. Open-ended questions were then asked for a more elaborate exploration of their perceptions and experiences (e.g. Did you experience something similar to this? Can you elaborate? How is this related to trust and distrust?). The duration of the entire interview with concept mapping ranged from 30 minutes to one hour.

Data analysis

Each student’s data was treated as a separate case, in which the concept map and interview data were analysed in an integrated manner. This is because some nodes in the maps are vague and must be interpreted alongside the interviews; students’ background information provided at the start of the interviews also contributes to a more comprehensive understanding of students’ trust-building experiences.

In each student’s dataset, the concept map provided an initial framework for open coding, while the interviews offered contextual information and elaboration (Wheeldon and Faubert 2009). Following the open coding process for each student case, all the open codes were reviewed and categorised into broader themes to address the research question. Table 2 shows an example of how some open codes under one student’s data were categorised into broader themes. In sum, a within-case analysis was first conducted on each student’s map and interview, followed by cross-case analysis to compare the codes and identify the overall themes. The author conducted all the interviews and performed the data analysis. A trained research assistant independently coded one-third of the dataset (i.e. three student cases) and then reviewed all the coding results. The two researchers discussed discrepancies in coding until a consensus was reached.

Table 2. Example of coding.

Findings

The study identified four major themes related to how students navigate trust-building with their teachers (i.e. navigating the use of AI, navigating the acknowledgement of AI, navigating interpersonal relationship building, and navigating expectations of teachers). Four evocative student quotes were intentionally chosen as sub-section titles to convey the key messages delivered through these themes.

‘I’m scared, so I turned off all AI tools when I do my assignments’

All students expressed concerns about the use of GenAI in assessment and academic misconduct. They mentioned either personal experiences or anecdotes about students being (mistakenly) accused of incorporating AI-generated content into their work. Student LK, for example, talked about a student in her programme who received a high ‘AI score’ in Turnitin and was subsequently disciplined in the form of a six-month study deferral. Other students also shared cases they read online, in which students had to go through investigation hearings or oral viva to defend themselves.

In view of these consequences, it is found that some students’ preferences for trust-building are orientated towards ‘risk control’. This results in very self-defensive approaches where students intentionally refuse to use GenAI in their assignments.

They say when you are put on that investigation hearing, it’s not just about whether your teacher trusts you. There will be other university staff, even the faculty dean, and many others whom you may have never met before. […] I can’t take this risk, so I just did not use any AI tools to help with my assignments. (Student YY)

I’m a frequent ChatGPT user. […] But you know the policies are kind of vague – what is appropriate use? How much can we use? These courses and grades are very important to me. So every time I do my assignments, I stay away from ChatGPT. I even turn off Grammarly on my computer, just in case. (Student VL)

Others complained that although GenAI policies are available at the university level, they fail to provide enough practical guidance on how GenAI can/should be used in assignments. Without specific instructions from course teachers, students were unsure whether they had ‘stepped over the line’ and felt vulnerable to unintended academic misconduct. For example,

I use Quillbot and Grammarly to polish my writing, but it seems that they can be detected and regarded as AI cheating as well. I’ve been using them before we have ChatGPT, but now I’m more cautious. (Student HC)

I sometimes use ChatGPT to help me generate my essay structure and some basic ideas. But I don’t know if this will be calculated into my ‘AI score’ in Turnitin. The teacher wasn’t being specific on this matter too. (Student MZ)

‘If I acknowledge my use of AI, who knows I won’t be penalised in the marking?’

During the interviews, students mentioned the university’s requirement to submit an ‘AI declaration’ for each assignment. In this declaration, students need to explicitly report whether they have used GenAI to produce their work. One student also noted that a professor asks students to submit chat records with ChatGPT if it has been used in the assignment.

The study found that students’ experiences with AI declaration are nuanced and entangled with the level of trust they had in their professor, specifically in whether the professor is being honest and transparent about how such disclosure will be viewed and treated in the marking process. The quotes below present illustrative examples:

The professor said we can use ChatGPT for brainstorming, grammar, structuring and feedback purposes. […] I know these are allowed, but I still have concerns about whether to acknowledge this. What if the professor thinks I’ve used too much AI? Or will this put me at a disadvantage compared to those who did not use it? (Student JY)

(If you acknowledge AI use), the teacher may feel you are not independent enough, your language skills are poor, or even you’ve used AI to write most parts of the assignment. (Student YY)

Two students further noted that transparency into teachers’ marking process is not enough, on its own, for them to trust teachers and feel comfortable sharing details about their AI use. Specifically, Student JP emphasised the additional need to address stereotypical views some teachers may hold about GenAI and what it signifies for a student’s study behaviour or abilities.

Student JP: I think this requires a change of mindset. […] Most people see using AI as an easy way out, like we are not putting in any effort, which is not true.

Researcher: What leads to these mindsets, as you said, seeing the use of AI as an easy way out?

Student JP: The way the media portrays it; the earlier policies that ban its use. Too much focus on the bad sides! Teachers need to spread the idea that each software has its pros and cons.

If the Professor emphasises that students must acknowledge their AI use or else will face penalties for cheating, this will only make students even more concerned that AI use is inherently ‘bad’. This will make it more difficult for us to trust teachers that our use of AI won’t be penalised in some way. If teachers are chill about it, we will be chill about it.

‘There is no trust because the professor doesn’t know me as a person’

Students believed that personal connection plays a significant role in trust-building between teachers and students. One student said she intentionally stays after lectures to ask questions and participates actively in class. By doing so, she hopes that the professors can ‘get to know [her] better’ and potentially give her the benefit of the doubt if her assignment is flagged by the AI detection system.

Others were more pessimistic about building personal connections with teachers in higher education. Student MX described students as ‘anonymised’ in front of professors due to the size and arrangement of university courses. Student VL shared the same concern, saying this is the reason why she avoids using GenAI in assignments. As a pre-service schoolteacher, Student MX was less concerned about similar issues in her profession and believed the rise of GenAI ‘presents special challenges’ to teacher-student trust in higher education.

In school it’s different. You see the kids every day, and you know most of their character, their behaviour and motivation. […] University professors may not have a very deep understanding of each student; some barely know my name. It’s just easier for them (teachers) to assume the worst of us.

She said it was fine. But I can’t stop wondering if she really trusted what I said. I don’t think she knows who I am. It’s a big class. If I were a professor and I got the Turnitin AI score, of course I would believe the number.

‘I now have more expectations for the teacher’

Another prominent theme is that students have heightened expectations for professors to demonstrate AI literacy in the design and implementation of assessment practices. Students expect their teachers to demonstrate a sophisticated understanding of AI technologies and competence in guiding students through the many new complexities of the age of AI. Student JY’s quote illustrates well how these expectations are intertwined with students’ trust in teachers:

Professor X is very open to new technologies. In the first few months when ChatGPT came out, he would spare 15 min per session guiding us to ask questions to GPT and then reflect on its outputs. […] He reminds us that AI detection can be inaccurate. […] I feel more comfortable acknowledging my AI use in his class because I think he understands this is not necessarily cheating.

In relation to the comments about fairness, other students argued that university teachers should re-examine their assignments to ‘make sure they can’t be easily generated by AI’ (Student LM). Students also expect teachers to design assessment that addresses the opportunities brought by AI to education. For example, Student VL shared an example where a professor in early childhood studies guided students to develop AI chatbots that can offer advice on various aspects of parenthood.

Still others pointed out that teachers should be role models in the ethical use of AI. Student LM found one of her courses’ outlines was mostly AI-generated after running it through an AI detector. She called it ‘hypocrisy’ – ‘the teacher said we cannot use AI, but she’s using it!’ Student LM believed that if teachers do not lead by example, it will severely undermine students’ trust in them. While Student LM raised an important point, she did not seem to realise that AI detection might be inaccurate.

Discussion

Students in this study were found to have a relatively low level of trust towards their teachers. While the finding is not generalisable, it highlights many nuances as students navigate trust-building with teachers in the age of GenAI. For example, the issue of fear appears to be particularly prominent in students’ trust-building experiences. As students recounted their assessment experiences with GenAI, many expressed concerns about being wrongly accused of AI cheating (e.g. ‘I’m scared, so I turned off all AI tools when I do my assignments.’) or potential penalties from teachers if they admitted to using GenAI assistance in their assignments (e.g. ‘If I acknowledge my use of AI, who knows I won’t be penalised in the marking?’). Some students feared that their teachers did not know them personally and hence would assume the worst of them. Students’ fear of negative outcomes created barriers to open communication and willingness to take risks, which are important in developing trust.

Arguably, this is due to a lack of ‘two-way transparency’ between teachers and students regarding the use of GenAI in assessment. Students in this study are required to openly acknowledge AI assistance in their work or even submit chat records for accountability purposes, but the same level of transparency is not observed from the teacher’s side. According to the student interviews, few professors have explicitly stated their GenAI policies or explained how students’ work, if mediated by GenAI, will be defined and judged in the grading process. The ‘AI score’ of students’ submitted assignments is only accessible to the teachers in this university. The one-way transparency may be interpreted as a surveillance mechanism that reinforces top-down control and a power imbalance between students and teachers. As transparency is mostly required from the student’s side, students may be ‘placed in disempowered surveilled positions’ and feel constantly monitored (Selwyn et al. 2021, 161). Ross and Macleod (2018) argued that being monitored is fundamentally not a neutral act because it is grounded in a relationship of distrust. The lack of transparency from teachers creates a low-trust environment where students do not feel safe in exploring or disclosing their use of GenAI. Carless’ (2013) argument from over a decade ago remains just as relevant today – to support the development of trust, students should be shown and helped to understand different aspects of assessment, including some of the more implicit assumptions around assessment criteria and moderation process.

In addition, the way teachers communicate GenAI policies to students may have further contributed to their fears, breeding an environment of distrust. For example, Student RW’s teacher warned students that failure to acknowledge AI use in their assignments would result in severe disciplinary action. Students were also warned that AI detection would be used to scan all submitted assignments and misbehaviour would be identified and caught. In a recent study that reviewed the GenAI policies of 20 leading universities worldwide, Luo (2024) also found that the policies have a strong focus on preventing student cheating. These discourses frame students as potential cheaters who are inherently untrustworthy and frame students’ work as a space of rampant dishonesty (Ross and Macleod 2018). According to the findings, when GenAI policies are communicated to students in a surveillance tone, students tend to adopt a self-defensive approach to avoid any perceived risk of wrongdoing. Such a punitive style of communication may also be interpreted by students as a lack of benevolence from their teachers, which undermines a fundamental component of trust (Hoy and Tschannen-Moran 1999). Under the current discourse, teachers could be ‘subjectified less as educators but more as gatekeepers to avoid academic misconduct’ (Luo 2024, 10).

Another prominent issue in students’ trust-building experiences concerns their expectations of teacher competence. Reina and Reina (2006) used ‘competence trust’ to describe the belief that another person has the necessary skills and competencies to perform a given role. According to the interviews, students who perceive their professor as open to AI technologies are more likely to feel comfortable and safe exploring GenAI for their learning. This echoes Carless’ (2013) previous observation that trust in a teacher’s competence will contribute to students’ willingness to share information and ideas.

While previous discussions of competence trust in education focused mainly on teachers’ subject knowledge (e.g. Macfarlane 2009; Zhou 2023), this study provides an expanded view of the ‘competence’ students expect from their teachers in an AI-mediated assessment landscape. With the rise of GenAI, students now also expect teachers to demonstrate AI literacy or, at the very least, a growth mindset towards continuously learning about emerging technologies (Chan 2023). Although professors are experts in their subject area, they may not necessarily be well-versed in AI-related matters. A recent survey study by Chan and Lee (2023) found that university students are more optimistic and enthusiastic about embracing GenAI in various aspects of life than their university teachers. As Chan and Lee (2023) noted, this generation of university students belongs to ‘Generation Z’ who grew up in a time of rapid technological development and hence may have developed more liberal attitudes towards GenAI use. While more research is required to ascertain the influences of generational context, students’ expectations and needs must be considered when designing professional development programmes for university teachers.

Implications

Building on the discussion, this section summarises several key points to inform future policies and practices. It is hoped that these implications can contribute to fostering trust between teachers and students to co-navigate this AI-mediated assessment landscape.

Teachers need to be transparent about their beliefs, requirements, and expectations of GenAI use in their course assignments. This transparency includes but is not limited to clarifying how AI assistance in students’ work will factor into the grading process, the level of specificity required in students’ AI declaration, and types of AI assistance (e.g. grammar, essay structure) allowed in the assignments.

Building on prior calls to enhance teachers’ AI literacy through professional training (e.g. Chan Citation2023), this paper argues that teachers’ knowledge of and attitudes towards GenAI should be explicitly demonstrated and communicated to students during class discussions or lectures. By doing so, teachers can instil confidence in students that they are well equipped, or at least open-minded enough, to navigate the challenges presented by AI.

The study proposes that AI literacy training for students should familiarise them with the mechanisms underlying AI detection. This will help students reduce unwarranted fears and avoid an overly defensive approach to using GenAI. In cases of misidentification, students will feel more prepared to defend themselves rather than perceiving the detection result as infallible and beyond questioning.

The communication of GenAI policies and guidelines should move from a punitive approach to a more supportive, inclusive one (e.g. framing AI declarations as self-reflection opportunities rather than monitoring). It should be made clear to students that these measures do not aim to ‘catch them in misconduct’ but are important pathways towards achieving a consensus on ethical GenAI use.

In this evolving AI landscape, opportunities should be provided for teachers and students to collaborate on designing GenAI policies, assessment tasks and standards. For example, teachers can seek students’ input in the first class regarding how AI assistance should be accounted for in the marking. Following advice from previous research, assessment can include tasks that allow students to exercise and develop their evaluative judgement, particularly judgement of AI-generated outputs (Bearman and Ajjawi Citation2023; Luo and Chan Citation2023).

Limitations and future studies

One limitation is that this study mainly includes students from educational backgrounds. Compared to non-education majors, these students may have more nuanced insights regarding teacher-student trust as they possess a dual identity. Future studies can supplement this research by exploring the experiences of students from different disciplines and geographical backgrounds.

Another limitation concerns our approach to and interpretation of trust in this study. Trust is a broad and multidimensional concept, and teacher-student trust constitutes only one important subset of trust in the AI age. Future studies can look into how GenAI influences trust-building between students (i.e. trust between peers) and investigate the factors that affect students’ trust in emerging technologies (i.e. trust in technology).

This study also provides multiple starting points for future research to pursue. For example, it will be interesting to compare our student-oriented findings with university teachers’ experiences with trust in this AI-mediated landscape. Building on the interview data, longitudinal and ethnographic research can be conducted to obtain a more comprehensive understanding of the trust dynamics between students and teachers. To elaborate on some of the concerns raised by students in our interviews (e.g. work being misjudged as AI-generated; confusion around ethical AI use), more research on how students actually use GenAI to assist their work will be illuminating.

Conclusions

The advent of powerful GenAI tools has necessitated the rethinking and renovation of many established practices, mindsets and relationships in higher education. At this early stage, teachers and students are navigating a somewhat ‘uncharted landscape’ where there are many grey areas without well-established guidelines or consensus. Navigating this landscape entails risks – for example, students who acknowledge their use of GenAI in assessment may be implicitly penalised in the marking process; teachers who adopt innovative assessment could face backlash as traditional assessment methods (e.g. exams, essays) are often better received by the public (Carless Citation2009). For teachers and students to be open to taking these risks, they need to trust that the other party has good intentions. A trusting relationship between teachers and students is important because trust indicates a mutual willingness to take risks and collaboratively explore this evolving AI landscape. However, despite a growing number of GenAI-related studies, little attention has been paid to the relational aspects of GenAI’s impact on higher education.

Therefore, this paper presents a novel contribution to understanding and navigating GenAI’s influences in higher education by focusing on the relational dynamics between teachers and students. As illustrated in the findings, GenAI does add a new layer of complexity to teacher-student relationships in universities – relationships that are already under strain given the massification and widening participation trends of higher education. On a practical level, the study highlights critical factors that mediate the level of trust students invest in their teachers in this new AI-mediated assessment landscape (e.g. two-way transparency, the communication of policies, teacher AI competence), thereby informing future policy-making, pedagogical practices and teacher professional development. On a knowledge level, the study contributes to broadening discourses around GenAI beyond a functional focus (e.g. seeing GenAI as an instrumental tool; overemphasis on the accuracy of AI-generated outputs) and paves the way for future research to adopt a more human-centred and socio-relational approach to exploring GenAI’s role in education. Overall, the study emphasises the importance of trust between key stakeholders in collaboratively navigating the many uncertainties and challenges brought by GenAI to higher education.

Ethics statement

This research was approved by the Human Research Ethics Committee at the Education University of Hong Kong (Reference number: 2023-2024-0027).

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Adams, C.M. 2014. Collective student trust: A social resource for urban elementary students. Educational Administration Quarterly 50, no. 1: 135–59.

- Bearman, M., and R. Ajjawi. 2023. Learning to work with the black box: Pedagogy for a world with artificial intelligence. British Journal of Educational Technology 54, no. 5: 1160–73.

- Carless, D. 2009. Trust, distrust and their impact on assessment reform. Assessment & Evaluation in Higher Education 34, no. 1: 79–89.

- Carless, D. 2013. Trust and its role in facilitating dialogic feedback. In Feedback in higher and professional education, edited by D. Boud and E. Molloy, 90–103. Oxford: Routledge.

- Cavanagh, A.J., X. Chen, M. Bathgate, J. Frederick, D.I. Hanauer, and M.J. Graham. 2018. Trust, growth mindset, and student commitment to active learning in a college science course. CBE—Life Sciences Education 17, no. 1: 1–8.

- Chan, C.K.Y. 2023. A comprehensive AI policy education framework for university teaching and learning. International Journal of Educational Technology in Higher Education 20, no. 1: 1–25.

- Chan, C.K.Y., and K.K.W. Lee. 2023. The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and millennial generation teachers? Smart Learning Environments 10, no. 1: 1–23.

- Fawns, T., and S. Schaepkens. 2022. A matter of trust: Online proctored exams and the integration of technologies of assessment in medical education. Teaching and Learning in Medicine 34, no. 4: 444–53.

- Felten, P., R. Forsyth, and K.A. Sutherland. 2023. Building trust in the classroom: A conceptual model for teachers, scholars, and academic developers in higher education. Teaching and Learning Inquiry 11: 1–9.

- Fowler, A.G. 2023, April 3. We tested a new ChatGPT-detector for teachers. It flagged an innocent student. The Washington Post. https://www.washingtonpost.com/technology/2023/04/01/chatgpt-cheating-detection-turnitin/.

- Gorichanaz, T. 2023. Accused: How students respond to allegations of using ChatGPT on assessments. Learning: Research and Practice 9, no. 2: 183–96. doi:10.1080/23735082.2023.2254787.

- Gratiot, C. (Host). 2023, April 26. AI and the erosion of trust in Higher Ed. [Podcast episode]. In The Bravery Media. https://bravery.co/podcast/ai-and-erosion-of-trust-in-higher-ed/.

- Hoy, W.K., and M. Tschannen-Moran. 1999. Five faces of trust: An empirical confirmation in urban elementary schools. Journal of School Leadership 9, no. 3: 184–208.

- Kovač, V.B., and A. Kristiansen. 2010. Trusting trust in the context of higher education: The potential limits of the trust concept. Power and Education 2, no. 3: 276–87.

- Kramm, N., and S. McKenna. 2023. AI amplifies the tough question: What is higher education really for? Teaching in Higher Education 28, no. 8: 2173–8.

- Lee, S.J. 2007. The relations between the student–teacher trust relationship and school success in the case of Korean middle schools. Educational Studies 33, no. 2: 209–16.

- Legard, R., J. Keegan, and K. Ward. 2003. In-depth interviews. In Qualitative research practice: A guide for social research students and researchers, edited by J. Ritchie and J. Lewis, 138–69. Thousand Oaks, CA: Sage.

- Lodge, J.M., K. Thompson, and L. Corrin. 2023. Mapping out a research agenda for generative artificial intelligence in tertiary education. Australasian Journal of Educational Technology 39, no. 1: 1–8.

- Luo, J. 2024. A critical review of GenAI policies in higher education assessment: A call to reconsider the “originality” of students’ work. Assessment & Evaluation in Higher Education, 1–14.

- Luo, J., and C.K.Y. Chan. 2023. Conceptualising evaluative judgement in the context of holistic competency development: Results of a Delphi study. Assessment & Evaluation in Higher Education 48, no. 4: 513–28.

- Macfarlane, B. 2009. A leap of faith: The role of trust in higher education teaching. Nagoya Journal of Higher Education 9, no. 14: 221–38.

- Macfarlane, B. 2022. The distrust of students as learners: Myths and realities. In Trusting in higher education: A multifaceted discussion of trust in and for higher education in Norway and the United Kingdom, edited by P. Gibbs and P. Maassen, 89–100. Cham: Springer International Publishing.

- Mitchell, R.M., L. Kensler, and M. Tschannen-Moran. 2018. Student trust in teachers and student perceptions of safety: Positive predictors of student identification with school. International Journal of Leadership in Education 21, no. 2: 135–54.

- Payne, A.L., C. Stone, and R. Bennett. 2022. Conceptualising and building trust to enhance the engagement and achievement of under-served students. The Journal of Continuing Higher Education 71, no. 2: 134–51.

- Plé, L. 2023, September 22. Should we trust students in the age of generative AI? Times Higher Education. https://www.timeshighereducation.com/campus/should-we-trust-students-age-generative-ai.

- Reina, D.S., and M.L. Reine. 2006. Trust and betrayal in the workplace: Building effective relationships in your organization. Oakland: Berrett-Koehler.

- Roffey, S. 2012. Pupil wellbeing—teacher wellbeing: Two sides of the same coin? Educational and Child Psychology 29, no. 4: 8–17.

- Ross, J., and H. Macleod. 2018. Surveillance, (dis)trust and teaching with plagiarism detection technology. In 11th international conference on networked learning, edited by M. Bajić, N. Dohn, M. de Laat, P. Jandrić, and T. Ryberg, 235–42. Zagreb: Zagreb University of Applied Sciences. https://www.networkedlearningconference.org.uk/abstracts/papers/ross_25.pdf.

- Rudolph, J. 2023. ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? Journal of Applied Learning & Teaching 6, no. 1: 1–22.

- Selwyn, N., C. O’Neill, G. Smith, M. Andrejevic, and X. Gu. 2021. A necessary evil? The rise of online exam proctoring in Australian universities. Media International Australia 186, no. 1: 149–64.

- Sokol, D. 2023, July 10. It is too easy to falsely accuse a student of using AI: A cautionary tale. Times Higher Education. https://www.timeshighereducation.com/blog/it-too-easy-falsely-accuse-student-using-ai-cautionary-tale.

- Tschannen-Moran, M., R.A. Bankole, R.M. Mitchell, and D.M. Moore. 2013. Student academic optimism: A confirmatory factor analysis. Journal of Educational Administration 51, no. 2: 150–75.

- Wheeldon, J., and J. Faubert. 2009. Framing experience: Concept maps, mind maps, and data collection in qualitative research. International Journal of Qualitative Methods 8, no. 3: 68–83.

- Williams, T. 2023, July 25. Turnitin says one in 10 university essays are partly AI-written. Times Higher Education. https://www.timeshighereducation.com/news/turnitin-says-one-10-university-essays-are-partly-ai-written.

- Zhou, Z. 2023. Towards a new definition of trust for teaching in higher education. International Journal for the Scholarship of Teaching and Learning 17, no. 2: 1–13.