Abstract

Objective: The current update of the Ease of Language Understanding (ELU) model evaluates the predictive and postdictive aspects of speech understanding and communication.

Design: The aspects scrutinised concern: (1) Signal distortion and working memory capacity (WMC), (2) WMC and early attention mechanisms, (3) WMC and use of phonological and semantic information, (4) hearing loss, WMC and long-term memory (LTM), (5) WMC and effort, and (6) the ELU model and sign language.

Study Samples: Relevant literature based on own or others’ data was used.

Results: Expectations 1–4 are supported whereas 5–6 are constrained by conceptual issues and empirical data. Further strands of research were addressed, focussing on WMC and contextual use, and on WMC deployment in relation to hearing status. A wider discussion of task demands, concerning, for example, inference-making and priming, is also introduced and related to the overarching ELU functions of prediction and postdiction. Finally, some new concepts and models that have been inspired by the ELU-framework are presented and discussed.

Conclusions: The ELU model has been productive in generating empirical predictions/expectations, the majority of which have been confirmed. Nevertheless, new insights and boundary conditions need to be experimentally tested to further shape the model.

Background

Over the last decade and a half the research community has increasingly acknowledged that successful rehabilitation of persons with hearing loss must be individualised and based on assessment of the impairment at all levels of the auditory system. Studying the system means taking into account impairments from cochlea to cortex, and combining this with details of individual differences in the cognitive capacities pertinent to language understanding. Several views on and models of this top-down–bottom-up interaction have been formulated (e.g. Holmer, Heimann, and Rudner Citation2016; Luce and Pisoni Citation1998; Marslen-Wilson and Tyler Citation1980; Pichora-Fuller et al. Citation2016; Signoret et al. Citation2018; Stenfelt and Rönnberg Citation2009; Wingfield, Amichetti, and Lash Citation2015).

The ELU model (Rönnberg Citation2003; Rönnberg et al. Citation2008, Citation2010, Citation2013, Citation2016) represents one such attempt. It provides a comprehensive account of mechanisms contributing to ease of language understanding. It has a focus on the everyday listening conditions that sometimes push the cognitive processing system to the limit and thus may reduce ease of language understanding (cf. Mattys et al. Citation2012; Wingfield, Amichetti, and Lash Citation2015). As such, it is intimately connected with the field of Cognitive Hearing Science (CHS), which studies the cognitive mechanisms that come into play when we listen to impoverished input signals, or when speech is masked by background noise, or some combination of the two. Other factors of interest are familiarity of accent and language, speed and flow of conversation as well as the phonological, semantic and grammatical coherence of the speech. The characteristics of the listener in terms of sensory and cognitive status are also important, along with his/her linguistic and semantic knowledge and access to social and technical support. Furthermore, in a recent account of listening effort (i.e. the Framework for Understanding Effortful Listening, FUEL), Pichora-Fuller et al. (Citation2016) show that it is also heuristically useful and important to consider an individual’s intention and drive to take part in listening activities to reach listener goals, in combination with demands on (perceptual and cognitive) capacity. In the ELU account, the first step has been to address some of the cognitive mechanisms that contribute to the ease with which we understand language.

Cognitive functions that have been discussed and researched in relation to ease of language understanding are primarily working memory (WM) and executive functions such as inhibition and updating (e.g. Mishra et al. Citation2013a, Citation2013b; Rönnberg et al. Citation2013). According to the ELU model, WM and executive functions come into play in language understanding when there is a mismatch between input and stored representations (Rönnberg Citation2003), i.e. when the input signal does not automatically resonate with phonological/lexical representations in semantic Long-Term Memory (LTM) during language processing. Mismatch is mediated in the RAMBPHO buffer whose task is the Rapid, Automatic, Multimodal Binding of PHOnology. When mismatch occurs, the success of language understanding becomes dependent on WM and executive functions. However, it is also important to note that when mismatch is extreme, cognitive resources and drive to reach goals may not be sufficient to ultimately achieve understanding (Ohlenforst et al. Citation2017).

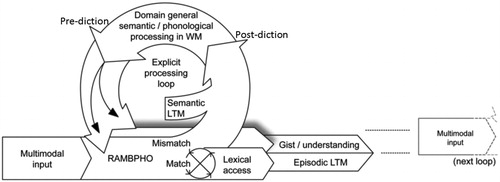

In some more detail, the RAMBPHO component is assumed to be an efficient phonological buffer that binds representations based on the integration of multimodal information (typically audiovisual) relating to syllables. These representations feed forward in rapid succession to semantic LTM (see ). If the RAMBPHO-delivered sub-lexical information matches a corresponding syllabic phonological representation in semantic LTM, then lexical access will be successful and understanding ensured. Conversely, lexical activation may be unsuccessful or inappropriate if there is a mismatch between the output of RAMBPHO and representations in semantic LTM. In this case, understanding is likely to be compromised unless sufficient context is available to allow disambiguation.

Figure 1. The Rönnberg et al. (2018) version of the ELU-model, based on Rönnberg et al. (Citation2013) in Frontiers in Systems Neuroscience.

The original observations made by Lunner (Citation2003), Lunner and Sundewall-Thorén (Citation2007) and Rudner et al. (Citation2008, Citation2009) showed that familiarisation during 9 weeks to a certain kind of signal processing (i.e. fast or slow wide dynamic range compression in this case), reduced dependence on WMC of speech recognition in noise with the same kind of signal processing. When speech-recognition-in-noise testing was done with the non-familiarized signal processing mode, however, dependence on WMC was substantial and significant. The interpretation given was in terms of phonological mismatch (Rudner et al. Citation2008, Citation2009). We have later generalised the mismatch notion to also include semantic attributes (due to joint storage functions), although we still assume that the bottle-neck of lexical retrieval depends on phonological attributes (Rönnberg et al. Citation2013). However, it should be noted that these results were found for matrix sentences.

Matrix sentences are based on a grammar which is simple and predictable but where the actual lexical name of e.g. an object can only be guessed if it has not been heard. For example: The Hagerman (Citation1982) (Hagerman and Kinnefors, Citation1995) and Dantale2 (Hernvig and Olsen, Citation2005) materials consist of five-word sentences comprising a proper noun, verb, numeral, adjective, and noun, presented in that order. Thus, they are similar as regards both form and to some extent the content. Similar grammatical structures apply e.g. the Oldenburg matrix test (Wagener, Brand, and Kollmeier Citation1999), and have been adopted in many other languages including English and Russian.

However, other kinds of sentence materials higher on contextual support (e.g. the Swedish Hint sentences, Hällgren, Larsby, and Arlinger Citation2006) or high on predictability (e.g. SPIN sentences, Wilson et al. Citation2012), deliver an initial support in sentence beginnings that facilitate predictions of final words. We have been able to show that the dependence on WMC decreases rather than increases in such a case (Rönnberg et al. Citation2016). With high lexical predictability and a tight context, the reliance on cognitive “repair” functions such as WM is reduced. In other words, intelligent guesswork and inference-making does not seem to be needed to the same extent as for matrix sentences (e.g. Rudner et al. Citation2009). The data in those studies show that the correlation with WMC was practically nil, regardless of familiarisation procedures (Rudner et al. Citation2009). This pattern of results was recently replicated in a large sample drawn from the so called the n200 data-base (Rönnberg et al. Citation2016). The pattern is further corroborated in gating studies from our lab, where we have compared gating of final words in predictable vs. less predictable sentences (for details see Moradi, Lidestam, Rönnberg Citation2013, Citation2016; Moradi, Lidestam, Saremi, et al. Citation2014; Moradi, Lidestam, Hällgren Citation2014). Again, the dependence on WMC is lower with predictable sentences.

Details regarding the number of phonological/semantic attributes needed for a successful match—i.e. the threshold at which the degree of overlap of attributes triggers lexical activation (Rönnberg et al. Citation2013)—is left open and can only be addressed by studies using well-controlled linguistic materials. In general, when the threshold(s) for triggering lexical access are not achieved, the basic prediction is that explicit, elaborative and relatively slower WM-based inference-making processes are invoked to aid the reconstruction of what was said but not heard. Also, at the general level, mismatches may be due to one or more variables, including but not limited to hearing status, hearing aid fittings, energetic and informational masking and speech rate (Rönnberg et al. Citation2013, Citation2016).

The collective evidence of the predicted association between speech recognition in noise performance under mismatching conditions and WMC has been obtained in a number of studies investigating speech recognition in noise in individuals with hearing loss (in our studies, Lunner Citation2003; Foo et al. Citation2007; Rudner et al. Citation2009; Rudner, Rönnberg, and Lunner Citation2011, as well as in other labs, Arehart et al. Citation2013, Citation2015; Souza and Arehart Citation2015; Souza and Sirow Citation2014; Souza et al. Citation2015) and this work has been seminal in the development of the field of Cognitive Hearing Science (CHS). Akeroyd (Citation2008) reviewed work showing an association between speech recognition in noise and cognition and reached the conclusion that WMC is a key predictor. Besser et al. (Citation2013) performed a more extensive review of the burgeoning body of work, showing that although WMC seems to be a consistent predictor of speech recognition in various listening conditions for individuals with hearing loss, other groups only show associations under certain conditions. And recently, an experimental study corroborated correlational findings by showing that simultaneous cognitive load interferes with speech recognition in noise (Hunter and Pisoni Citation2018, see also Gennari et al. Citation2018, for neural correlates of cognitive load on speech perception).

And, even more recent evidence by Michalek, Ash, and Schwartz (Citation2018) supports the view that systematic manipulation of the signal-to-noise ratio in babble noise backgrounds show increased dependence on WMC with more challenging SNRs, even for normal hearing individuals. It could be the case that for babble noise there is a more general group-independent role for WMC (Ng and Rönnberg Citation2018). It seems that this issue is forcing a more nuanced view of WMC-dependence.

There is also evidence that degradation of the language signal per se increases cognitive load, and hence, the probability of mismatch. Individuals with normal hearing show greater pupil dilation (Zekveld et al. Citation2018) and increasing alpha power (Obleser et al. Citation2012), both established indices of cognitive load, with increasing degradation of the speech signal. Individuals with hearing loss show a similar effect but when impairment was most severe and the task hardest, alpha power dropped, indicating a breakdown in the recruitment of task-related neural processes (Petersen et al. Citation2015).

Thus, there is evidence to suggest that explicit cognitive processing occurs in participants with both normal hearing and impaired hearing under challenging listening conditions. Below, we address the dual role played by WMC in speech understanding.

Prediction and postdiction

Rönnberg et al. (Citation2013) distinguished between two aspects of the role played by WMC under the ELU framework, viz. prediction and postdiction (see ). The postdictive role refers to the cognitive mechanism which is thought to pertain post factum when mismatch has already occurred. As a matter of fact, Rönnberg (Citation2003) postulated that the actual mismatch triggers a signal which in turn invokes explicit processing resources of WM. This signal is in principle produced every time the ratio of explicit over implicit processing is larger than 1 (standardized variables). The assumption of a match-mismatch mechanism was supported by the experimental data in the Foo et al. (Citation2007) study. Given a certain kind of signal processing (FAST or SLOW compression) that was applied in experimental hearing aids for an intervention and acclimatisation period of 9 weeks, and then testing in the other phonologically mismatching condition (e.g. SLOW-FAST) caused a higher dependence on WM than e.g. in the FAST-FAST matching condition.

Hence, observed correlations between measures of WM and speech recognition in noise are assumed to reflect triggering of explicit WM functions by a certain degree of phonological mismatch. WM is the mental resource where fragmentary information can be explicitly manipulated, pieced together and used for making inferences and decisions. This is important for postdiction purposes, where the individual may need to combine contextual information with clarification from the interlocutor to be able to reconstruct what was said but not heard to access meaning and continue with the dialogue. This postdictive function is assumed to be relatively slow and deliberate and operates on a scale of seconds (Stenfelt and Rönnberg Citation2009).

The predictive role of WM, on the other hand, is assumed to involve priming and pre-tuning of RAMBPHO as well as focussing of attention in goal pursuit. In contrast to the postdictive aspect of WM, the prediction function is fast, in many instances implicit and automatic, and presumably operates on a scale of tenths of seconds (Stenfelt and Rönnberg Citation2009). Both phonological and semantic information can be used for predictive purposes (Rönnberg et al. Citation2013; Signoret et al., Citation2018; Signoret & Rudner, Citation2018). Behavioural work provides evidence that the ability to use information predictively to perceive speech is related to WMC when the speech is degraded but not when it is clear (Hunter and Pisoni Citation2018; Zekveld, Kramer, and Festen Citation2011; Zekveld et al. Citation2012). Evidence of the role of WMC in prediction is also based on the findings that WMC and WM load are related to early attention processes in the brainstem (cf. Sörqvist, Stenfelt, and Rönnberg Citation2012; Anderson et al. Citation2013; Kraus, Parbery-Clark, and Strait Citation2012; cf. the early filter model by Marsh and Campbell Citation2016). This kind of WMC-modulated attention process seems also to propagate to the cortical level. At the cortical level, when attention is e.g. deployed to a visual WM task, the brain will (cross-modally) attenuate the temporal cortex responses involved in sound processing (Molloy et al. Citation2015; Sörqvist et al. Citation2016; Wild et al. Citation2012).

In general, postdiction and prediction are dynamically related on-line processes and presumably feed into one another during the course of a dialogue. Prediction—which is fast and implicit—is assumed to be nested under the relatively slower postdiction process, i.e. several predictions may occur before explicit inference-making takes place (Rönnberg et al. Citation2013; cf. Poeppel, Idsardi, and van Wassenhove Citation2008). Related to predictive and postdictive functions of WM, we (Rönnberg et al. Citation2013) offered a set of expectations, which we use as points of departure for some more detail on recent research related to the ELU-model (see ).

Follow-up on the Rönnberg et al. (Citation2013) summary expectations

First, based on the ELU model it is expected that if RAMBPHO output is of low quality the probability of achieving a match with phonological-lexical representations in semantic memory will also be low and WM resources will have to be deployed to achieve understanding during speech processing. This expectation can be tested with different manipulations of both the speech signal and the background noise, applies primarily to participants with hearing impairment, and is related to the postdictive function of the model.

One type of study that has addressed the quality of RAMBPHO output has dealt with different kinds of signal processing in hearing instruments (e.g. Arehart et al. Citation2013). Hearing aid signal processing is intended to simplify speech processing for persons with hearing impairment, but sometimes it also causes unwanted alterations or artefacts that distort the percept in a manner that is cognitively demanding. Souza et al. (Citation2015) reviewed studies which had examined the effects of signal processing and WMC. Three types were considered: fast-acting wide dynamic range compression (WDRC), digital noise reduction, and frequency compression. Souza et al. (Citation2015) found that for fast-acting WDRC, the evidence suggests that WMC (especially tapped by the reading span task) is important for speech in noise performance. The data they reviewed also suggest that persons with low WMC may not tolerate such fast signal alterations and may benefit from slower compression times (e.g. data from our own lab Foo et al. Citation2007; Lunner and Sundewall-Thorén Citation2007; Ohlenforst et al. Citation2017; Rudner et al. Citation2009; Rudner, Rönnberg, and Lunner Citation2011). The general conclusion drawn by Souza et al. (Citation2015) was that the dependence on WMC is high for individuals with hearing impairment when listening to speech-in-noise processed by fast-acting WDRC.

The effect of hearing aid signal processing interacts with both the nature of the target speech and the type of degradation (Lunner, Rudner, and Rönnberg Citation2009; see review by Rönnberg et al. Citation2010). Rudner, Rönnberg, and Lunner (Citation2011) showed that for individuals with mild to moderate sensorineural hearing loss who were experienced hearing aid users, speech recognition in noise with fast-acting WDRC was dependent on WMC in high levels of modulated noise but only when the speech materials were lexically unpredictable, i.e. for matrix-type sentences (Hagerman and Kinnefors Citation1995). WMC dependency was not found for the more ecologically valid Hearing in Noise Test (HINT) sentences, where the sentential context aids speech processing (Hällgren et al. Citation2006; see also Rönnberg et al. Citation2016). Note that there is always going to be a dynamic ratio between implicit and explicit processes (see original mathematical formulations in Rönnberg Citation2003), depending on the current conditions. When explicit processes dominate over implicit processes, then a mismatch signal is assumed to be triggered.

Other very recent data from the n200 study (Ng and Rönnberg Citation2018; Rönnberg et al. Citation2016), also using matrix-type sentences, suggest that the type of background noise is important, across different kinds of signal processing conditions. The name n200 refers to the number of participants in one group with HL who use hearing aids in daily life (age range: 37–77 years, Rönnberg et al. Citation2016). Ng and Rönnberg (Citation2018) compared 4-talker babble and stationary noise and found for the 180 hearing aid users included in that sub-study that the dependence on WMC was significantly higher for 4-talker babble than for stationary noise. This also holds true for another n200 study by Yumba (Citation2017), where babble noise seemed to draw on WMC, irrespective of signal processing conditions. In addition, the study by Ng and Rönnberg (Citation2018) demonstrates that dependence on WMC may persist for much longer periods of time (2 to 5 years) than expected on the basis of our previous work, i.e. Ng et al. (Citation2014; 6 months) and Rudner et al. (Citation2009; 9 weeks).

For other types of hearing aid signal processing such as noise reduction and frequency compression, WMC dependence is not as clear as for WDRC (Souza et al. Citation2015, but see Yumba Citation2017). However, there are certain kinds of exceptions. In the studies by Ng et al. (Citation2013, Citation2015) it was shown that implementation of binary masking noise reduction can lead to improved memory for HINT materials for experienced hearing aid users with mild to moderate hearing loss. This improvement was found even though there was no perceptual improvement at the favourable signal-to-noise ratios (SNRs) that characterise everyday listening situations. This shows an ecologically important aspect of postdiction that targets memory for spoken sentences and not only what can be directly perceived and understood.

Finally, we want to acknowledge the pioneering work by Gatehouse, Naylor, and Elberling (Citation2003, Citation2006), who demonstrated that there were important associations between cognitive function (i.e. the letter/digit monitoring test) and benefit obtained from fast WRDC. We can now summarise and conclude that these initial observations have been generalised in many subsequent cognitive studies involving the use of other WM tests such as the reading span test (Daneman & Merikle Citation1996).

In this follow-up, we have also seen that the degree of hearing loss plays a role as well as type of background noise, but the ELU prediction is most clearly borne out for fast WRDC of the signal processing algorithms investigated, while the evidence is mixed for other kinds of signal processing. Presumably, the ELU-model needs to be further developed with respect to which signal-noise processing combinations tend to mismatch more than others and why.

It should be noted that the studies reviewed concern hearing-impaired participants. Nevertheless, we know that also for normal hearing participants WMC dependence may increase when cognitive load is high or grammatical or sentence complexity is high (see under Task demands in speech processing: more on postdiction and prediction for a fuller discussion).

The second expectation is about how WMC actively modulates early attention mechanisms. As such, this expectation is related to the overall priming and prediction mechanism of the ELU model. More precisely, this expectation was based on a study by Sörqvist, Stenfelt, and Rönnberg (Citation2012) which showed that the auditory brain stem responses (ABR) of young individuals with normal hearing were dampened (linearly), as the working memory load in a concurrent visual task increased. This effect was modulated by individual differences in WMC such that in persons with higher WMC, the ABR was dampened even further. Sörqvist et al. (Citation2016) followed up the ABR study with an fMRI study with exactly the same paradigm and found that under high WM load critical auditory cortical areas responded less than under no concurrent visual WM load while the WMC-related attention system responded more. These results are in line with other work (Molloy et al. Citation2015; Wild et al. Citation2012) and show rapid and flexible allocation of neurocognitive resources between tasks.

The interpretation put forward in the Sörqvist et al. article (2016) was that WM plays a crucial role when the brain needs to concentrate on one thing at the time. In a similar vein, we also found that the amygdala reduced its activity during the high WM-load conditions (Sörqvist et al. Citation2016), which could be a means of lowering inner distractions. This reduction may prove to be an important prediction aspect of WM and crucial for persons who want to stay involved in communicative tasks without losing focus.

Third, in the ELU model WMC is assumed to mediate the use of phonological and semantic cues. Earlier work suggests that high WMC may help compensate for phonological imprecision in semantic LTM in persons with hearing impairment during visual rhyme judgment, especially when there is a conflict between orthography and phonology. The mechanism involved is a higher ability to keep items in mind to enable double-checking of spelling and sound (Classon, Rudner, and Rönnberg Citation2013). WMC is also related to the ability to use semantic cues during speech recognition in noise for young individuals with normal hearing (Zekveld, Kramer, and Festen Citation2011; Zekveld et al. Citation2012). In addition, it is related to the increase in perceptual clarity obtained from phonological and semantic cues when young individuals with normal hearing listen to degraded sentences (Signoret et al. Citation2018). However, although WMC has been shown to be related to the ability to resist the maladaptive priming offered by mismatching cues during speech recognition in noise in low intelligibility conditions by young individuals with normal hearing, it was not found to be related to the ability to resist maladaptive priming during verification of non-degraded auditory sentences, the so-called auditory semantic illusion effect (Rudner, Jönsson, et al. Citation2016). In addition, WMC predicts facilitation of encoding operations (and subsequent episodic LTM) in conditions of speech-in-speech maskers (Sörqvist and Rönnberg Citation2012). In Zekveld et al. (Citation2013) two effects were demonstrated related to WMC. First, it was shown that for speech maskers, high WMC was important for cue-benefit in terms of intelligibility gain. Second, word cues also enhanced delayed sentence recognition.

In short, participants with high WMC are expected to adapt better to different task demands than participants with low WMC, and hence are more versatile in their use of semantic and phonological coding and re-coding after mismatch.

Fourth, LTM but not WM (or STM) is assumed to be negatively affected by hearing loss. Rönnberg et al. (Citation2011) showed that while poorer hearing was associated with poorer LTM, no such association was found with WMC. The explanation for this finding was that of relative disuse between memory systems; when mismatches occur, fewer encodings into and therefore subsequent retrievals from episodic LTM will occur than when language understanding is rapid and automatic. Then, the episodic memory system is activated all the time. In particular, over the course of a day we expect that several hundreds of mismatches will occur, especially for individuals with hearing loss, and hence, that executive WM functions will be activated to process fragments of speech in relation to the contents of semantic LTM. However, in many cases, mismatch will not be satisfactorily resolved and thus, lexical access will not be achieved (i.e. disuse), leading to weakened encoding and fewer retrievals from episodic LTM. Relative disuse will then reduce the amount of practice that the episodic memory system gets. Therefore, episodic LTM will start to deteriorate, and presumably the quality of episodic representations as well. WM, on the other hand is fully occupied with resolving mismatches much of the time, and so this memory system will get sufficient practice to maintain its function.

Another interesting aspect that emerged from those data (Rönnberg et al. 2011) was that poorer episodic memory LTM function was observed despite the fact that all persons in the study were hearing aid users. Further, the decline in LTM was generalisable to non-auditory encoding of memory materials. As a matter of fact, in the best-fit Structural Equation Model (SEM), free verbal recall of Subject Performed Tasks (i.e. motor-encoded imperatives like “comb your hair”) loaded the highest among cognitive tasks on the episodic LTM construct. Hence, the potential argument that poorer episodic LTM performance with poorer hearing status was due to resource-demanding auditory encoding must be deemed as less likely. Instead it seemed that the effect of hearing loss struck at the memory system level and the ability to form episodic memories rather than being tightly coupled to a specific encoding modality.

In the follow-up study by Rönnberg, Hygge, Keidser and Rudner (Citation2014), we showed a similar pattern of data, viz that the association between poorer episodic LTM and functional hearing loss was stronger than the association between poorer visuospatial WM and hearing loss. Visual acuity did not add to the explanation. Recently, the pervasiveness of effects of untreated hearing loss on memory function was investigated by Jayakody et al. (Citation2018). They found significant negative effects with hearing thresholds for a WM test as well. This suggests that when mismatches occur too frequently or are too great, then there are too few perceived fragments to work with in WM. Hence, our additional hypothesis is that WM will also suffer from relative disuse to a larger extent for persons with untreated hearing loss than for persons with treated age-related hearing loss.

So, the new and general insight from these studies is that hearing loss has a negative effect on multi-modal memory systems, especially episodic LTM, but may be manifest also for other memory systems such as semantic memory and WM (Jayakody et al. Citation2018).There may also be neural correlates to these effects. There is evidence to indicate a loss of grey matter volume in the auditory cortex as a consequence of hearing loss (see review by Peelle and Wingfield Citation2016). These morphological changes may be related to right-hemisphere dependence of episodic retrieval (Habib, Nyberg, and Tulving Citation2003) and age-related episodic memory decline (Nyberg et al. Citation2012). In a recent study by Rudner et al. (Citation2018) based on imaging data from 8701 participants in the UK Biobank Resource, we revealed an association between poorer functional hearing and lower brain volume in auditory regions as well as cognitive processing regions used for understanding speech in challenging conditions (see Peelle and Wingfield Citation2016).

Furthermore, these morphological changes may be part of a mechanism explaining the association between hearing loss and dementia. In particular, recent evidence suggests such a link between hearing loss and the incidence of dementia (Lin et al. Citation2011, Citation2014). This notion chimes in with the general memory literature which shows that episodic LTM is typically more sensitive to aging and brain damage than semantic and procedural memory (e.g. Vargha-Khadem et al. Citation1997). The observed association between episodic LTM and hearing loss has opened up a whole new field of research (e.g. Hewitt Citation2017).

Fifth, based on the ELU model, we expect that the degree of explicit processing needed for speech understanding is positively related to effort. Since WM is one of the main cognitive functions that becomes involved when the demands on explicit processing is involved, we suggest that WMC also plays a role in the perception of effort during speech processing (Rönnberg, Rudner, and Lunner Citation2014).

One indication of this is that experienced hearing aid users’ higher WMC is associated with less listening effort (measured using ratings on a visual analogue scale) in both steady state noise and modulated noise (Rudner et al. Citation2012). McGarrigle et al. (Citation2014) wrote a “white paper” on effort and fatigue in persons with hearing impairment, wherein they used a dictionary definition of listening effort, as follows: “the mental exertion required to attend to, and understand, an auditory message”. In an invited reply to the McGarrigle et al. (Citation2014) article, we (Rönnberg, Rudner, and Lunner Citation2014) argued that the concept of effort must be related to a model of the underlying mechanisms rather than to dictionary definitions. One such mechanism is the degree to which a person must deploy explicit processing, e.g. in terms of use of WMC and executive functions (Rönnberg et al. Citation2013). Thus, the degree to which explicit processes are engaged, is generally assumed to reflect the amount of effort invested in the task. Persons with high WMC presumably have a larger spare capacity to deal with the communicative task at hand, resulting in less effortful WM processing. These notions are incorporated in the FUEL (Pichora-Fuller et al. Citation2016), which defines listening effort as “the deliberate allocation of mental resources to overcome obstacles in goal pursuit when carrying out a listening task”.

As commented on in the “white paper” (McGarrigle et al. Citation2014) pupil dilation has typically been accepted to index cognitive load and associated effort. Larger pupil dilation, indicating greater cognitive load, is found with lower speech intelligibility (Koelewijn, Zekveld, Festen, et al. Citation2012) and for single-talker compared to fluctuating or steady state maskers (Koelewijn Zekveld, Festen et al. Citation2012; Koelewijn, Zekveld, Rönnberg et al. Citation2012; Zekveld et al. Citation2018). However, pupil dilation amplitude is generally lower in older individuals (Zekveld, Kramer, and Festen Citation2011), making it a less sensitive load metric for this group. Interestingly, Koelewijn, Zekveld, Rönnberg et al. (Citation2012) found that a larger Size Comparison span (SiC span), which is a measure of WM with an inhibitory processing component (Sörqvist and Rönnberg Citation2012), was associated not only with higher speech recognition performance in a single-talker babble condition, but also with a larger pupil dilation. This pattern of findings was suggested to show that individuals with higher WMC invest more effort in listening (cf. Rönnberg, Rudner, and Lunner Citation2014; Zekveld, Kramer, and Festen Citation2011), which may seem contradictory to the above findings. However, the increase in pupil size may also reflect a more extensive/intensive use of brain networks (Koelewijn, Zekveld, Rönnberg, et al. Citation2012; see Grady Citation2012) rather than an increase in the actual load (cf. the FUEL, Pichora-Fuller et al. Citation2016). At the same time, there are other studies showing a larger pupil dilation in individuals with low WMC when performing the reading span task (e.g. Heitz et al. Citation2008), suggesting that the same cognitive task makes higher cognitive demands on low capacity individuals.

One solution to these conflicting interpretations could be that investing effort in performing a reading span task may in itself be qualitatively different from investing effort in understanding speech in adverse listening conditions (Mishra et al. Citation2013a, Citation2013b). Although a person with high WMC may show smaller pupil dilation than a person with low WMC during a WM task, the person with high WMC may show larger pupil dilation during a task that requires communication under adverse conditions, even if memory load is comparable, simply because WM resources can be more usefully deployed. This may be because there is no obvious upper limit to how adverse listening conditions can get before speech understanding breaks down. The brain probably makes the most of its available resources to understand speech (cf. Reuter-Lorenz and Cappell Citation2008). Nevertheless, recent data seems to suggest an inverted U-shaped brain function that defines the SNRs that generate maximum pupil size, given a certain intelligibility criterion (Ohlenforst et al. Citation2017). We have also shown in a recent study that concurrent memory load is more decisive than auditory characteristics of the signal in generating pupil dilation (Zekveld et al. Citation2018).

In all, the conceptual issue of what pupil size actually measures in different contexts makes the ELU expectation in terms of degree of explicit involvement (and concomitant WM processing) only an approximation of what might be a true effort-related mechanism. In addition, it seems to be hard to cross-validate effort by other physiological measures (McMahon et al. Citation2016), and certainly, other dimensions—such as task difficulty and motivation to participate in conversation—play a role as well (Pichora-Fuller et al. Citation2016).

Sixth, expectations relating to ease of language understanding are assumed to hold true for both sign and speech-based communication. One of the key features of the ELU model is its claim to multimodality. Already in RAMBPHO stage of processing, multimodal features of phonology are assumed to be bound together (Rönnberg et al. Citation2008). However, earlier work showed that there are modality-specific differences in WM for sign and speech that seem to be related to explicit processing (e.g. in bilateral parietal regions, Rönnberg, Rudner, and Ingvar Citation2004; Rudner et al. Citation2007, 2009; Rudner and Rönnberg Citation2008) and this was reflected in an early version of the ELU model (Rönnberg et al. Citation2008).

Sign languages are natural languages in the visuospatial domain and thus well suited to testing the modality generality of ELU predictions. In recent work, we have investigated how sign-related phonological and semantic representations that may be available to sign language users, but not non-signers, influence sign language processing (for a review, see Rudner Citation2018). We found no evidence that existing semantic representations influence the neural networks supporting either phoneme monitoring (Cardin et al. Citation2016) or WM (Cardin et al. Citation2017) even though we did find independent behavioural evidence that existing semantic representations do enhance WM for sign language (Rudner, Orfanidou, et al. Citation2016; see e.g. Hulme, Maughan, and Brown (Citation1991), for a similar effect for spoken language). Further, we found no effect of existing phonological representations on WM for sign language (Rudner et al. Citation2016). This was all the more intriguing as we established in a separate study that the brains of both signers and non-signers distinguished between phonologically legal signs and phonologically illegal non-signs (Cardin et al. Citation2016). However, deaf signers do have better sign-based WM performance than hearing non-signers, probably because of expertise developed through early engagement of visually based linguistic activity (Rudner and Holmer Citation2016), a notion which also explains better visuospatial WM in deaf than hearing individuals (Rudner et al. Citation2016). Furthermore, during WM processing deaf signers recruit superior temporal regions that in hearing individuals are reserved for auditory processing (Cardin et al. Citation2017; Ding et al. Citation2015).

Together, these results show, in line with other work (Andin et al. Citation2013) that although sign-based phonological information is perceptually available, even to non-signers, it does not seem to be capitalised on during WM processing. What does seem to be important in WM for sign language is modality-specific expertise supported by cross-modal plasticity. In terms of the ELU model, work relating to sign language demonstrates that phonology must be treated as a modality-specific phenomenon. This puts a spot-light on the importance of modality-specific expertise. While deaf individuals have visuospatial expertise that can be deployed during sign language processing, musical training may afford auditory expertise that can be deployed during speech processing (Good et al. Citation2017; Strait and Kraus Citation2014). Future work should continue to examine the role of modality-specific expertise on ease of language understanding.

We have started to investigate the effect of visual noise on sign language processing. Initial results show that for hearing non-signers (i.e. individuals with no existing sign-based representations) decreasing visual resolution of signed stimuli decreases WM performance and that this effect is greater when WM load is higher (Rudner et al. Citation2015). Future work should focus on visual noise manipulations and on semantic maskers to assess the role of WMC in understanding sign language under challenging conditions (Cardin et al. Citation2013; Rönnberg, Rudner, and Ingvar Citation2004; Rudner et al. Citation2007). By testing whether WMC is also invoked in conditions with visual noise, the analogous mechanism to mismatch in the spoken modality could be evaluated.

Summary of results related to the Rönnberg et al. (Citation2013) expectations

All six predictions have generated new research and new insights, which further shapes the ELU-model. With respect to the first prediction, not all kinds of hearing aid signal processing induce a dependence of WMC, but there is substantial evidence in favour of the “mismatch” assumption with respect to fast WDRC for low context speech materials. This is a postdictive aspect of the model.

Secondly, WMC also plays predictive roles, and our work on early effects on attention has proven to be robust at the cortical as well as the subcortical levels.

Third, the versatility in the use of phonological and semantic codes, has by now been investigated with several paradigms, and is the hallmark of high WMC for spoken stimuli, which may be less pronounced for phonological aspects of signed language.

Fourth, the effects of hearing loss on memory systems, especially episodic LTM, seem to be multi-modal in nature. This means that the effect of hearing loss is relatively independent of encoding and test modality. Furthermore, episodic memory decline due to hearing loss may prove to be an important link to an increased risk of dementia.

Fifth, WMC is part of the explicit function of the ELU-model, which means that explicit involvement of cognitive functions in language processing is related to subjectively experienced and objectively measured effort. We have also seen that physiological indices of effort partially measure different dimensions of effort. For pupil size, our claim is that high WMC involves an efficient brain that also coordinates and integrates several brain functions, presumably a higher intensity of brain work that also is reflected in larger pupil dilations.

Sixth and finally, sign language research has taught us several things. One aspect is that there is some modality specificity in representations of signs in the brain, which was made clear as an assumption in the Rönnberg et al (Citation2008) version of the model and important for formulating the developmental D-ELU model, with its implications for a learning mechanism (Holmer, Heimann, and Rudner 2016, see further under “Development of representations”) .

Task demands in speech processing: more on postdiction and prediction

Listening may involve a range of perceptual and cognitive demands irrespective of hearing status. There are several levels of perception and comprehension (Edwards Citation2016; Rönnberg et al. Citation2016). One key difference between perception and comprehension is that the latter, but not necessarily the former, involves explicitly inferring what a particular sentence means in the light of preceding sentences or other cues. Both functions are vital to conversation and turn-taking, especially under everyday communication conditions. Although not made explicit in previous publications on the ELU-model, the connection to task demands for prediction and postdiction processes is that prediction typically involves recall or recognition of heard items as such, while postdiction by its very nature implies additional inference-making and reconstruction of missing or misperceived information. Prediction processing that is facilitated by hypotheses or prior cues held in WM is by its implicit mechanism assumed to be direct and rapid, improving the phonological/semantic recognition of targets. Indirect, postdictive processing may involve piecing together or inferring new information that was not present during encoding, or that was sufficiently mismatching to trigger explicit WM-processing. Speech processing tasks may vary on a continuum from being very direct (template matching) to very indirect (generation of completely new information).

Postdiction

Related to the reasoning above, Hannon and Daneman (Citation2001) developed test materials to assess complex text-based, inference-making functions that are dependent on WMC. They showed that the ability to make inferences about new information, combined with the ability to integrate accessed knowledge with the new information, was dependent on WMC. It seems likely that versatility in deploying different kinds of inference-making will support continued listening under the most adverse listening conditions; the listener may e.g. have to rely on subtle ways of recombining information and may sometimes settle for getting the gist only. An analogous measure of inference-making ability, implemented for auditory conditions is now part of a more comprehensive test battery for testing both hard-of-hearing and normal hearing participants (see Rönnberg et al. Citation2016). These latter functions definitively represent a level of complexity beyond that of merely repeating or recalling words. This complexity is a prominent aspect of conversations in everyday life and calls for postdiction in all listeners, irrespective of hearing status.

Few studies in the area of speech understanding have addressed the complexity of the inference-making involved in postdiction. However, some studies have begun to take some first steps toward this angle to speech understanding. Keidser et al. (Citation2015) used an ecological version of a speech understanding test. Normal hearing participants listened to passages of text (2–4 min) and received 10 questions per passage. Here it was shown that WMC (measured by reading span) played a crucial role for task completion and for keeping things in mind while answering questions about the contents of the passage.

In another postdiction study, Sörqvist and Rönnberg (Citation2012) were able to show that episodic LTM for prose, encoded in competition with a background speech masker compared to a masker of rotated speech, was related to performance on a complex WM test called SiC span. In this task, the participant is asked to compare the size of objects (“is a dog larger than an elephant?”), after which an additional word from the same semantic category appears on the screen (the actual to-be remembered word). The SiC span test especially emphasises the participants’ ability to inhibit semantic intrusions from previous comparison items when recalling each list of to-be-remembered words. Translated to the episodic LTM task, this aspect of storage of target speech and inhibition of distracter speech, processed in WM, was thus predictive of episodic recall of facts in each story. An example of a question that had to be inferred rather than explicitly recalled verbatim was: “Why was the king angry with the messenger?” Typically, the answers were contained within a single sentence (e.g. “He refused to bow”) and were scored as correct if they contained a specific key word (e.g. “refused/bow”) or described the accurate meaning of the key words (e.g. “He did not want to bow”).

Moreover, Lin and Carlile (Citation2015) found that cognitive costs are associated with following a target conversation that shifts from one spatial location to another (in a multi-talker environment). In particular, recall of the final three words in any given sentence and comprehension of the conversation (multiple choice questions) both declined. Switching costs were significant for complex questions requiring multiple cognitive operations. Reading span scores were positively correlated with total words recalled, and negatively correlated with switching costs and word omissions. Thus, WM seems to be important for maintaining and keeping track of meaning during conversations in complex and dynamic multi-talker environments.

Independent but related work suggests that the plausibility of sentences is an important factor for postdiction. For example, Amichetti, White, and Wingfield (Citation2016) showed a stronger association between the ability to recognise spoken sentences in noise and reading span performance when the sentences were less plausible. Observe that in the reading span test, semantic plausibility judgements of “absurdity”, “abnormality” or “plausibility” of the to-be-recalled sentences (initial or final words) have been used in many studies (examples:” The train sang a song”, or the more plausible sentence, “The girl brushed her teeth”). The common denominator is a kind of semantic judgement that demands more explicit semantic processing and effort to be comprehended. As a hypothesis, the kind of semantic judgement involved in reading span is also involved in postdiction.

Generally, then, WMC is important for postdiction in speech understanding tasks that require recall and semantic processing, in particular inference-making. Since the reading span task has been designed to tap into recall and semantic processing, it is reasonable that it is positively associated with postdictive performance under these task demands (Daneman and Merikle Citation1996). The advantage of using the reading span and SiC span tasks is that their inherent complexity sometimes leads to stronger empirical associations than tasks like digit span which tap one component only (see e.g. Hannon and Daneman Citation2001). Therefore, it seems that the generality and usability of such tests outweighs “process-pure” tests in applications to real-life conversation.

Prediction

If postdiction is mainly concerned with filling-in, piecing together, or inferring what has been misperceived, prediction is more concerned with preparing the participant via prior cues or primes for what kind of materials to expect. Common to prediction studies is that semantically associated or phonologically related primes have a fast, direct and implicit framing effect on speech understanding. In one semantic priming study by Zekveld et al. (Citation2013), participants used prior word cues that were related (or not) to each sentence to-be-perceived in noise. Compared to a control condition with no cues provided, WMC was strongly correlated with cue benefit at SRT50% (in dB SNR) with a single talker distractor. Thus, in this case WMC presumably served a predictive and integrative function, whereby semantic cues were used to distinguish the semantically related linguistic content of the target voice from the unrelated content of the distracter voice. More precisely, fast priming (via cues or context held in WM) affects RAMBPHO and constrains phonological combinations (as in sentence gating), and in that sense lowers the probability of mismatch. However, given a mismatch, the explicit processes feedback to (“primes”) RAMBPHO, and onwards.

In a prediction task like final word-gating in sentences (i.e. where participants have listened to a sentence and are to guess the final word from a successively larger (gated) portion of the word, it is reasonable to assume that a predictive, semantic context unloads WM (Moradi, Lidestam, Hällgren, et al. Citation2014; Moradi, Lidestam, Saremi, et al. Citation2014; Wingfield, Amichetti, and Lash Citation2015). In the extremely predictable case (for example, “Lisa went to the library to borrow a book” compared to a low predictable sentence, for example, “In the suburb there is a fantastic valley”), we only have to rely to a small extent on the signal to achieve sentence completion and comprehension (Moradi, Lidestam, Saremi, et al. Citation2014; Moradi, Lidestam, Hällgren, et al. Citation2014).

In a recent experimental study (Signoret et al. Citation2018) we showed that predictive effects of both plausibility and text primes on the perceptual clarity of noise-vocoded speech were to some extent dependent on WMC, although at low sound quality levels the predictive effect of text primes persisted even when individual differences in WMC were controlled for. This suggests that text primes have a useful role to play even for individuals with low WMC.

Still other work on prediction, using eye movements and the visual-world paradigm (Hadar et al. Citation2016), show that WM is involved. The task in that study consists of a voice saying “point at that xxx”, and the participant has to point at one of four depicted objects on a screen (unrelated or phonologically related to the target). Eye movements toward the target object are co-registered. Hadar et al. (Citation2016) were able to show that increasing the WM load by a four-digit load delayed the time point of visual discrimination between the spoken target word and its phonological competitor. This is just another example where WMC proves to be important for ideal listening conditions even for normal hearing individuals, and is contrary to claims from the correlational review study by Füllgrabe and Rosen (Citation2016).

In all, WMC serves both predictive and postdictive purposes (cf. Rönnberg et al. Citation2013). Predictive aspects are represented by mechanisms associated with inhibition of distracting semantic information and pre-processing of facilitating semantic information (e.g. cues). When sentence predictability is high, WM is less important. However, even if a sentence is perceived and understood, the postdictive dependence on WMC may still be of a reconstructive character, demanding WMC for recall of sentences or for more complex inference-making and comprehension (see Rönnberg et al. Citation2016 test battery).

In real-life turn-taking in conversation, prediction and postdiction feed into each other. However, we offer as a speculation that the degree to which prediction/postdiction functions dominate may also be part of the motivation by an individual to engage in certain types of conversations (cf. Pichora-Fuller et al. Citation2016). Turn-taking may for example be much more effortful in a context that demands more explicit inference-making and postdiction. On the other hand, predictive expectations of a delightful conversation may either make listening less effortful or spur the listener to devote the effort necessary to achieve successful listening.

In sum, we submit that WMC plays an attention-steering and priming role for prediction by means of “zooming” in on or preloading relevant phonological/lexical representations in LTM. For postdiction, high WMC is important for inference-making in the absence of context. Prediction is dependent on storage of information necessary for priming, whereas postdictive processes are dependent on both storage and processing functions.

Recent ELU-model definitions and issues

Stream segregation

There have been several recent attempts at refining the component concepts of the ELU-model. The ELU-model has generated several important predictions and ways of investigating and testing them. In an interesting article by Edwards (Citation2016), he suggests that just before RAMBPHPO processing takes place, a process is needed that accomplishes early perceptual segregation of the auditory object from the background, so called Auditory Scene Analysis (ASA, Dolležal et al. Citation2014). His discussion is based on the Rönnberg et al. (Citation2008) version of the ELU model, where RAMBPHO processing focuses on how different streams of sensory information are integrated and bound into a phonological representation (see also Stenfelt and Rönnberg Citation2009).

However, closer scrutiny of the Rönnberg et al. (Citation2013) version reveals a mechanism that allows for such ASA processes to occur as well. In the explicit processing loop there is (1) room for explicit feedback to lower levels of e.g. syllabic processing in RAMBPHO, and (2) attentional steering and priming of RAMBPHO to earlier levels of feature extraction (cf. Marsh and Campbell Citation2016; Sörqvist, Stenfelt, and Rönnberg Citation2012; Sörqvist et al. Citation2016). Both these processes (i.e. postdiction and prediction, respectively) are mediated by WM and executive functions. Thus, a separate ASA component in the Rönnberg et al. (Citation2013) version of the ELU model is only needed when task demands do not gear explicit attention and prediction mechanisms toward early features of the auditory stream. Even simple stream segregation between tones presented in an ABA format appears to be driven by predictability (Dolležal et al. Citation2014). This implies that even at very early levels of the auditory system, there is an element of prediction that affects stream segregation, and hence, the formation of auditory and linguistic objects in RAMBPHO. It is likely that stream predictability is reduced when the signal is degraded or the listener is hard of hearing, resulting in poorer stream segregation and thus poorer quality input to RAMBPHO. This is what the ELU model is about. The additional preattentive or automatic ASA processing component suggested by Edwards (Citation2016) may therefore not be key to the ELU model and the proposed function of such a component is at least partially accounted for by Rönnberg et al. (Citation2013). Functions for coping with ambiguity and mismatch (i.e. postdiction processes) are crucial features of the model. These postdictive processes provide explicit feedback from WMC and WMC-related mechanisms, which in turn feed into RAMBPHO, continuously and dynamically, during the course of a dialogue.

Development of representations

Sign language may be able to shed light on the way in which the representations that are the key to ease of language understanding come to be established. We have shown that deaf and hard of hearing (DHH) signing children are more precise than hearing non-signing children in imitating manual gestures, but only after having had the opportunity to practice imitation and thus establish item-specific representations (Holmer, Heimann, and Rudner 2016, cf. Mann et al. Citation2010). For both groups, precision of imitation after practice was related to language comprehension skill, but in the DHH group, language modality specific phonological skill was also implicated (Holmer Heimann, and Rudner 2016). This suggests that for the establishment of functional representations, both domain general, likely to involve semantically mediated postdictive processes, and language-modality specific processing are required, and this has led to the proposal of a Developmental ELU model (D-ELU, Holmer Heimann, and Rudner 2016. This notion is corroborated by work showing that it is the reduced language experience sometimes experienced by DHH children rather than auditory deprivation as such that may compromise the development of WM (Marshall et al. Citation2015; Rudner and Holmer Citation2016). Further, studies on word learning in hearing children (e.g. Hoover, Storkel, and Hogan Citation2010) and adults (e.g. Storkel , Ambrüster, and Hogan Citation2006 ) reveal an important role of LTM representations in development. Investigating interactions between WMC and LTM representations in developing ease of language understanding, both during “noisy” and normal conditions, will be an important future task in relation to the general ELU framework.

RAMBPHO and LTM

RAMBPHO processing is also important for the build-up of phonological and semantic representations in LTM. This is in line with an exemplar-based model view of word encoding and learning (e.g. Schmidtke Citation2016), which assumes that every encounter with a word leaves a memory trace which strengthens the connection(s) to the representation of the meaning of the word. Individual vocabulary size is the number of encountered words (including phonological neighbors/variants) encoded in LTM. Speed of access to lower frequency words (Brysbaert et al. Citation2016; Goh et al. Citation2009) is likely to be slower if this account is correct (Caroll et al. Citation2016). According to Schmidtke (Citation2016), because bilinguals are exposed to each of their two languages less often than monolinguals are exposed to their single language, they also encounter each individual word less frequently. This may lead to poorer phonetic representations of all words compared to monolinguals (Schmidtke Citation2016).

If input to the RAMBPHO mechanism is compromised due to hearing impairment, then phonological representations may become more fuzzy. According to the D-ELU model (Holmer, Heimann, and Rudner 2016), a mismatch condition that is not solved by postdictive processes (i.e. the output of RAMBPHO cannot find an exact match to a stored representation) pushes the system towards change, i.e. restructuring of existing or adding novel phonological representations. If hearing impairment is progressive and not compensated for, the change to the system at time n might not help at time n + 1 since the input to RAMBPHO is continuously changing, over time and across context. Phonological exemplars in LTM will morph but might not become fixed as exemplars that can be used for efficient processing, i.e. the summation over many attempted word encoding events during the course of days and months results in less distinct LTM representations (Classon, Rudner, and Rönnberg Citation2013). Fitting of hearing aids might interfere with this process, and help the system to develop useful representations after acclimatisation (Ng and Rönnberg Citation2018). However, it seems to be a matter of 2–5 years rather than the usual recommendations for acclimatisation to hearing aids (Ng and Rönnberg Citation2018). Many assembled fuzzy traces may even obscure the phonemic contrasts that support LTM representation of word meaning. This can be a reason why hearing impairment has such a decisive effect on the match/mismatch function, which reflects the relationship between language understanding and WMC (Füllgrabe and Rosen Citation2016; Rönnberg et al. Citation2016). With less distinct or fuzzy LTM representations, the probability of mismatch during listening in everyday conditions will increase, and with it dependence on WMC. Empirically, additional predictors of ease of language understanding for hearing-impaired participants are the degree of preservation of hearing sensitivity and temporal fine structure (Rönnberg et al. Citation2016). This applies especially when contextual support is low and explicit processing becomes more prominent.

Temporal fine structure information is important for pitch perception and for the ability to perceive speech in the dips of fluctuating background noise (Moore Citation2008; Qin and Oxenham Citation2003). Perceiving speech in fluctuating noise is also being modulated by WMC (e.g. Lunner and Sundewall-Thorén Citation2007; Rönnberg et al. Citation2010; Füllgrabe, Moore, and Stone Citation2015). Thus, one important role of preserved temporal fine structure may according to the ELU model be accomplished by the fast, implicit and predictive processes of WM—from the brainstem (Sörqvist, Stenfelt, and Rönnberg Citation2012) and upwards to the cortical level—where hippocampus serves a binding function in the evolution of auditory scenes as well as for WM (i.e. Yonelinas Citation2013). Recent evidence also suggest that it is possible to dissociate the effects of age and hearing impairment on neural processing of temporal fine structure (Vercammen et al. Citation2018). For a fuller discussion on the topic of WM and temporal fine structure, see Rönnberg et al. (Citation2016, pp. 14–15.)

The multimodality aspect

The talker’s face provides information that is supplementary to the auditory signal. Thus, it is not surprising that it enhances speech perception, especially when the auditory signal is degraded. A set of interesting gating studies by Moradi et al. (Citation2013, 2014a, Citation2014b, Citation2016) supports two major conclusions: (1) that seeing the talker’s face reduces dependence on WMC during perception of gated phonemes and words, and (2) that individuals who perform an audiovisual gating task subsequently show improved auditory speech recognition immediately and after 1 month, an effect not found with audio-only gating. We have named this latter phenomenon, perceptual “doping”(see Moradi et al. in press; Moradi et al., Citation2017).

With respect to the phenomenon, some of the important details were: The participants underwent gated speech identification tasks comprising Swedish consonants and words presented at 65 dB sound pressure level with a 0 dB signal-to-noise ratio, in audiovisual or auditory-only training conditions. A Swedish HINT test was employed to measure the participants’ sentence comprehension in noise before and after training. The results show that audiovisual gating training confers a benefit not seen after auditory only gating training such that auditory HINT performance improved, even at follow-up a month later. The interpretation of this finding is based on a change of the cortical maps/representations. These representations are more accessible (doped or enriched maps) and are maintained after the (gated) audiovisual speech training, which subsequently facilitates auditory route mapping to those phonological and lexical representations in the mental lexicon. It is probably the case that the specific task demands of the gating task reinforces the fine-grained phonological analysis which we know is facilitated by the visual component of speech (Moradi et al. Citation2013). Another way of phrasing this effect is to state that the visual component helps RAMBPHO processing, which also is a reason why the first version of the ELU model (Rönnberg Citation2003) stressed the multimodality aspect of speech and the fact that phonological information comes in different modes in the natural communication situation.

Although seeing the talker’s face generally enhances speech perception in adverse conditions, we showed in a set of studies (Mishra et al. Citation2014) that it does not always enhance higher level processing of speech. Indeed, in two separate studies (Mishra et al. Citation2013a, Citation2013b), we showed that the presence of congruent visual information actually reduced the ability of young adult participants with normal hearing to perform a cognitive spare capacity task (CSCT). In the CSCT, series of spoken digits are presented either audiovisually or in the auditory mode only, and the participant is instructed to retain the two digits that meet certain criteria (e.g. highest value spoken by each of two speakers). This task requires memory (retention of two digits) and executive function (updating in the given example). We reasoned that when the auditory signal is fully intelligible, the visual component provides no additional information to support cognitive processing and instead acts as a distractor. However, for older adults with hearing impairment, seeing the talker’s face did provide a benefit in terms of CSCT performance (where executive functioning rather than maintenance per se is a key, Mishra et al. Citation2014) but not in terms of immediate recall (where it is maintenance per se rather than executive functioning that is the key, Rudner, Mishra et al. Citation2016b). We explained this discrepancy in terms of differences in task demands and their interaction with, on the one hand, different aspects of audiovisual integration and, on the other hand, individual differences in executive skills. Consequently, we propose that the benefit of seeing the talker’s face during speech processing is contingent on the specific cognitive demands of the task as well as the cognitive abilities of the listener and the quality of the signal. It could even be the case that non-linguistic social functions are triggered by the face, which in turn might modulate the executive function processes. Most importantly, there is no straightforward explanation of the role of visual information in ease of language understanding.

Hearing status

One important aspect of the ELU-model and moreover one of the driving forces behind its inception in Rönnberg (Citation2003) is that the probability of mismatches increases with severity of hearing impairment. For normal hearing listeners, it seems less likely that postdictive functions need to be invoked as a function of mismatch. However, depending on the difficulty and context of the speech understanding task, predictive and postdictive functions may still be important for persons with normal hearing. Nonetheless, one potentially important constraint on the ELU model is hearing status.

This constraint has been addressed by Füllgrabe and Rosen (Citation2016) in a meta-analysis of their own and some other correlational studies in the area. In particular, they focussed on the role played by WM and they found for the studies—where data had been shared—that in normal hearing listeners only a few percent of the variance in the speech understanding/recognition criterion is accounted for by WMC. Other independent published data support the ELU prediction that WMC is important for listeners with hearing impairment, especially those who are older (e.g. Füllgrabe and Rosen Citation2016; Rönnberg et al. Citation2016; Smith and Pichora-Fuller Citation2015). This is in keeping with the original intentions, but was (perhaps prematurely) generalised to the case of all participants, as long as adversity was severe enough (Rönnberg et al. Citation2013).

Nevertheless, in a recent article by Gordon-Salant and Cole (Citation2016), convincing evidence has been adduced regarding the importance of WMC—even for participants with age-appropriate hearing—concerning speech perception in noise. Obtained correlations between WMC and speech in noise thresholds (at 50%) are very similar to those obtained in work by e.g. Foo et al. (Citation2007) and Lunner (2003), using hearing-impaired participants. Gordon-Salant and Cole (Citation2016) also subdivided their normal hearing participants into high and low WMC subgroups, holding age constant between the two subgroups for each of two general age groups (older listeners =68 years vs. younger listeners =20 years). Both age and WMC caused main effects for perception of context-free, single word tests in noise, whereas there was an additional and interesting interaction between the two variables for sentence materials (with limited context) in noise (e.g. “The birch canoe slid on the smooth planks”), revealing that high WMC facilitated efficient use of sentence cues more for older than for younger participants (Gordon-Salant and Cole Citation2016).

WMC and context interactions

Although the ELU model has been less specific about the more precise role of context (Wingfield, Amichetti, and Lash Citation2015), the model can account for data patterns (e.g. Gordon-Salant and Cole Citation2016; Rönnberg et al. Citation2013), showing that a noisy speech signal accompanied by semantic cues is easier to resolve for a person with higher WMC. This phenomenon is likely to be especially prominent in older listeners who often have preserved semantic memory despite declining episodic LTM and executive skills (see Gordon-Salant and Fitzgibbons Citation1997; Peelle and Wingfield Citation2016; Sheldon, Pichora-Fuller, and Schneider Citation2008), but it has also been observed for participants with normal hearing (Michalek, Ash, and Schwartz Citation2018; Signoret et al. Citation2018).

Focusing on contextual demands rather on hearing status, we (Rönnberg et al. Citation2016) predicted and found a data pattern analogous to the pattern reported by Gordon-Salant and Cole (Citation2016). Based on the n200 data-base, we (Rönnberg et al. Citation2016) have now documented in exploratory factor analyses—for 200 participants with an average age of 61 and mild to moderate hearing loss—that WMC (with a high loading on a cognition factor, statistically derived from a set of cognitive tests)—is substantially associated with outcome conditions in which context plays a lesser role (i.e. the no context factor constituted by e.g. Hagerman matrix sentences, Hagerman and Kinnefors Citation1995). This replicates earlier studies from our lab (Foo et al. Citation2007; Rudner et al. Citation2011), which suggest a dissociation in the dependence on WMC: no context outcomes depend on WMC, whereas context outcome conditions (e.g. Swedish HINT sentences with naturalistic context, example: “She got shampoo in her eyes”, Hällgren, Larsby, and Arlinger Citation2006) depend less on WMC.

Context presumably helps the listener unload WM, as predictions are facilitated by the context of the sentence. This may at first glance, seem contradictory to the Gordon-Salant and Cole (Citation2016) data, but the similarity lies in the fact that the no context conditions in Rönnberg et al. (Citation2016) were primarily based on the Hagerman matrix sentences (i.e. Hagerman and Kinnefors Citation1995), which consists of stereotypical sentences (e.g. “Ann had five red boxes”). Albeit being different in terms of surface structure, the Hagerman and the Gordon-Salant and Cole sentences share the property of low redundancy, which means there is relatively little help in terms of prediction of sentence content.

Thus, we argue that the results in relation to context or no context in the Gordon-Salant and Cole (Citation2016)/Rönnberg et al. (Citation2016) studies are not necessarily conflicting. It seems mainly to be a matter of labelling of tests (and their contrasting relations to other tests in the respective test batteries) rather than a difference in actual task demands. Generally speaking, both sentence types depend on the internal sentence context (if any), which is why we argue here that the observed correlations replicate each other, across participant populations, keeping the type of outcome test and contextual support constant. Based on this reasoning, a case can be made for a further, seventh ELU–prediction about contextual use by WM.

Further support for this reasoning comes from a recent study by Meister et al. (Citation2016), who used low context matrix sentences (Oldenburg sentence materials, OLSA) to test speech perception in noise in participants with hearing impairment. A post hoc subdivision of participants into high and low WMC shows exactly the predicted pattern indicated above: high WMC participants could compensate for their hearing loss in low context conditions (Meister et al. Citation2016, see also Amichetti, White, and Wingfield Citation2016, for plausibility).

In the Rönnberg et al. (Citation2016) study, the context outcome factor tests that loaded the highest were the Swedish HINT (Hällgren, Larsby, and Arlinger Citation2006) and the Samuelsson and Rönnberg sentences (1993). The HINT sentences are naturalistic sentences and the Samuelsson and Rönnberg sentences have previously been used for lip-reading research. Lip-reading is hard without contextual cues. Participants listened to the sentences in noise with contextual cues selected from common everyday scripts and provided before the actual sentence (e.g. being at a “restaurant”, “Can we pay for our dinner with a credit card?”). For these kinds of speech-in-noise sentences, the Rönnberg et al. (Citation2016) study thus concludes that the correlation with WM is reduced relative to perception of Hagerman matrix sentences (but still significant, even after partialling out for age) because predictability is higher.

Likewise, in the case of auditory gating studies from our laboratory, highly predictive sentences do not necessitate the explicit involvement of WM, i.e. showing a lack of correlation between WMC and the amount of time required for the correct identification of sentence-final words. This result holds true for both hearing-impaired (Moradi et al. Citation2014a) and normal hearing participants (Moradi et al. Citation2014b). However, when it comes to gating of consonants and words, no supportive sentence context is present, and in this case, WMC comes into play for both participant groups. In a very recent study based on the n200 sample, Moradi et al. (Citation2017) replicated the gating results for consonants and vowels, showing significant correlations with WMC in those stimulus conditions. Finally, if we accept the data pattern concerning contextual variability and associated deployment of WMC, the key challenge seems to be how to conceptualise the mechanism of exact interplay between the two, given that e.g. sensitivity and temporal fine structure in part account for aging and hearing impairment effects (e.g. Gordon-Salant and Cole Citation2016; Rönnberg et al. Citation2016).

In summarising the argument for a seventh prediction by the ELU model, we use the original ELU formulation (Rönnberg Citation2003) as one point of departure to generally characterise the conditions under which WMC plays a role. In that theoretical article it was argued that for intermediate levels of phonological precision and lexical access speed (depending on the actual perceptual input or the phonological/lexical abilities of the listener), there is supposedly a larger opportunity for contextual use due to WMC. Thus, with either a precise perceptual and contextual input, or with a very poor input, the modulation by means of WMC was not assumed to be critical: in the former case the perceptual platform is sufficiently stable and precise, and hence, there is no additional explicit processing necessary; in the latter case, the input is too poor to instigate meaningful processing, leaving the cognitive machinery without input.

Concluding discussion