ABSTRACT

This article sheds light on criticism of the increasing degree of formalisation in collating and synthesising research findings in the systematic review format in education. A textual analysis of two systematic reviews produced by the Swedish Institute for Educational Research unpacks the significance of interaction between formalisation and professional judgement. The article also shows that there is a mismatch between the “front-stage” and “back-stage” of the review process. Interestingly, criticism is aimed at the ideal image of scholarly practice rather than the actual review process.

Introduction

The Swedish Institute for Educational Research (SIER) was established in 2015 with the mandate to synthesise research that can provide knowledge support for professionals at various organisational levels, such as classroom teaching, or at the policy level (footnote 1). SIER is a Swedish equivalent of organisations such as the What Works Clearinghouse in the US, the London-based Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre), and the Norwegian Knowledge Centre for Education. The remit to synthesise and disseminate research within the Swedish education system is not new: before the advent of SIER, it was handled by other government agencies and educational organisations, and it still is. What differentiates SIER from other Swedish agencies publishing research reviews is the use of the systematic review format.

The key characteristic of systematic reviews is their degree of formalisation: the rigorous scientific techniques adopted allegedly vouch for the reliability and replicability of the findings produced (Cooper & Hedges, 1994; Glass et al., 1982). The method of meta-analysis, which was subsequently developed into the systematic review format, arose within psychology and educational research, with the aim of managing and combining findings from quantitative studies. The method was subsequently picked up in clinical medicine, where there was a need to synthesise large numbers of quantitative studies in order to evaluate the efficacy of various medical interventions. In medicine and education alike, the aim of meta-analyses and systematic reviews is often to answer the question, “What works?”

In education, organisations with the main task of producing systematic reviews have been operating since the early 2000s, and in Scandinavian countries since 2006. This type of organisation has been subjected to criticism from the outset. The main criticism is that, methodologically, these organisations favour an “aggregative logic” in synthesising primary studies (Levinsson & Prøitz, 2017, p. 224), i.e., a logic based on aggregating data from primary studies and answering “what works” questions; this is often referred to as the conventional method of reviewing (Levinsson & Prøitz, 2017, p. 209). Reviews of this kind, it is argued, are not suitable for the field of education due to its complexity, contextuality and local variation (Biesta, 2007). A related criticism, focusing on the systematic review methodology with its formalised stages, has been levelled specifically at SIER (Adolfsson et al., 2019). Critics of the use of systematic reviews in education typically emphasise, moreover, that the methodology has been adopted from medicine (e.g., Levinsson, 2013, pp. 24–25). The fact that it was originally developed by social scientists is not widely known (Bohlin, 2010, p. 168).

To analyse the way systematic reviews are conducted in the Swedish education sector, this paper draws on concepts from the interdisciplinary research field of science and technology studies (STS). STS regards science as a complex and socially constructed activity. The field investigates how scientific knowledge is shaped and how technological artefacts are created (Sismondo, 2010, p. 11). This perspective challenges the traditional view of science as a formal activity developed through systematic methods in a linear fashion (Kuhn, 1970, p. 139). While STS scholars have long addressed objectivity, formalisation and professional judgement in research practice, little attention has been paid to the significance of those concepts to the systematic review format. Studies of systematic reviewing carried out within STS, all with a focus on healthcare, include Moreira (2007), Bohlin (2012), and Sager and Pistone (2019).

Methodologically, this article is based on a close reading of two systematic reviews conducted by SIER, Digital learning resources in mathematics education and Classroom dialogue in mathematics education. Our aim is to contribute to a deeper understanding of the interplay of formalisation and professional judgement in reviewing practices, and to analyse the importance of this for current critiques of systematic reviews in educational research. To the best of our knowledge, no study with this focus has previously appeared in the STS literature, nor have reviewing practices been examined at this level of detail in the field of educational research before (but see MacLure, 2005).

The article begins with a brief account of the emergence of the systematic review format and the establishment of SIER. Our analytical framework follows, grounding the article in STS theory and providing a theoretical background to the different logics of synthesis found in the review process. This is followed by a presentation of the empirical material on which our discussion and conclusion are based.

Background

Evidence-based medicine (EBM) was introduced in the early 1990s as a new concept in the healthcare sector. The aim of EBM is to ensure the quality of health care provision, based on the best available knowledge. The fundamental idea behind EBM is to systematically collate all relevant available literature within a selected area of knowledge and to draw conclusions based on that synthesis as a basis for decisions. The concept of evidence-based practice rapidly spread to several segments of healthcare including nursing, dentistry, physiotherapy, psychotherapy, and from there to social work, criminal justice, and education (Bohlin, 2010, p. 165). The diffusion of the concept from one area to another is often referred to as the evidence movement.

Systematic reviews serve a crucial function in evidence-based practices and are the dominant format in the specialty known as research synthesis. This specialty originated from meta-analysis, which comprises a set of formalised procedures, such as defined search strategies and data extraction (Bohlin, 2012, p. 299). The concept of systematic review, which grew out of meta-analysis, includes methods for the synthesis of qualitative as well as quantitative research, while meta-analysis is currently used to refer both to the statistical techniques frequently employed in systematic reviews and to a rigorously quantitative type of review. At the opposite end of the spectrum of synthesis formats, the approach of meta-ethnography is used to develop theoretical concepts employed in qualitative research. Introduced in response to what was taken to be a failed attempt at combining by meta-analysis the results of a series of ethnographic studies in school settings, meta-ethnography is a technique for synthesising results of qualitative studies in general (Noblit & Hare, 1988). Meta-ethnography is aimed at comparing and interpreting study findings, translating them in terms of concepts used and explanations offered in other studies, while meta-analysis is designed to aggregate data and calculate average effects.
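
To make the aggregative end of this spectrum concrete, the following is a minimal sketch, in Python, of how a meta-analysis might "aggregate data and calculate average effects" by means of a fixed-effect, inverse-variance weighted average. The function name pooled_effect and the effect sizes and variances are hypothetical illustrations, not taken from any of the reviews discussed here, and the fixed-effect model is only one of several pooling models used in practice.

```python
import math


def pooled_effect(effects, variances):
    """Return the fixed-effect (inverse-variance) pooled estimate and its standard error.

    effects   -- per-study effect sizes (hypothetical values in the example below)
    variances -- per-study sampling variances, in the same order as effects
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("effects and variances must be non-empty and of equal length")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    # Each study is weighted by the inverse of its variance,
    # so more precise studies count for more.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)

    mean = sum(w * e for w, e in zip(weights, effects)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)
    return mean, standard_error


# Hypothetical standardised mean differences from three studies (assumed data).
example_effects = [0.2, 0.5, 0.8]
example_variances = [0.04, 0.04, 0.04]
mean, se = pooled_effect(example_effects, example_variances)
print(f"pooled effect: {mean:.3f}, standard error: {se:.3f}")
```

When all variances are equal, as in this example, the pooled estimate reduces to the simple mean of the study effects; with unequal variances, more precise studies receive more weight. A configurative synthesis such as meta-ethnography has no comparable formula, which is part of the contrast drawn above.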

The production of systematic reviews of educational research in the Scandinavian countries has increased (Forsberg & Sundberg, 2019, p. 37). Internationally, there is a longer tradition of systematic reviews in educational research and an accompanying intense debate on their strengths and limitations (Bohlin, 2018, 2010). The format was not introduced in Sweden until a few years ago, after a government inquiry report had recommended the creation of a new government agency responsible for synthesising and disseminating research (U2014:02S, 2014). SIER was set up in 2015, with the mandate to “enable those who work in the Swedish school system to plan, carry out and evaluate teaching on the basis of research-based methods and procedures” (footnote 2). This objective is supposed to be achieved by “carrying out systematic reviews and other research summaries of high scientific quality and presenting the results in a way that is useful to those who work in the school system” (footnote 3).

The concept of systematic review entails the use of protocols specifying predetermined steps. At SIER, an internal document, comprising a long sequence of formal steps, is aimed at guiding the institute’s reviewing projects. The steps described are aimed at “ensuring scientific quality and relevance” (SIER, 2017a, 2017b, 2017c, p. VII) while simultaneously displaying transparency. A proviso is included:

All projects differ and the descriptions in this document thus need not be followed mechanically, but departures should always be carefully considered and justified. (SIER, 2018, p. 1)

After the prestudy has been completed, two (or more) external researchers, within fields relevant to the topic of the review, are recruited (SIER, 2018, p. 6). The assignments for the external researchers include carrying out relevance and quality assessments of the studies found, carrying out data extraction from the included studies, and in some cases, assisting with writing text for the final report (ibid., p. 19). This is an interesting feature of SIER’s protocol: it is a formal requirement to include discipline-specific experts by virtue of their capacity to exercise professional discretion going beyond any formalisation, as such discretion cannot in itself be fully formalised. We will return to this issue in a later section.

The origins of systematic reviews within quantitative research mean that the review format is associated with the logic of quantitative studies. The format thus represents an established understanding, based on assumptions on objectivity, transparency, and reproducibility, of what characterises scientific rigour. These assumptions are all linked to the high value commonly placed on formalisation (Bohlin, 2010, p. 169). The formalisation of reviewing practices is not universally embraced, however. In the context of educational research, criticism has been levelled at SIER as well as at comparable organisations in other countries. A common criticism of this type of organisation is that “they operate within the frame of the conventional methodology” (Levinsson & Prøitz, 2017, p. 224). Levinsson (2018) more specifically argues that SIER’s systematic reviews ought not to follow the conventional methodology, and that “pluralism is preferable when it comes to the matter of what should be considered valuable research for teachers” (p. 17).

Other critics of SIER have complained that, in line with “a strict delegation principal [sic]”, the review process used thus far has led to a situation where “researchers become technicians or methodological experts” (Adolfsson et al., 2019, p. 109). The same critics suggest that SIER’s systematic review process reproduces practices adopted in clinical research, and that their “restricted review questions, evidence, generalisations and effectiveness outweigh conceptualisations, values, contextualisation and understanding” (Adolfsson et al., 2019, p. 109). Outside the Swedish context, a similar critique of systematic reviews of educational research has been put forth by Hammersley (2001), who argues that systematic reviews are based on the assumption that “studies can be assessed in purely procedural terms, rather than on the basis of judgements which necessarily rely on broader, and often tacit, knowledge of a whole range of methodological and substantive matters” (p. 548). Along similar lines, MacLure (2005) criticises EPPI-Centre’s rhetoric in connection with the review process, arguing that certain elements of the review process, specifically “interpretation, argument and analysis”, remain tacit while others, “scanning, screening, mapping, data extraction and synthesis” (p. 397), are emphasised. According to MacLure (2005, p. 407), this tends to make reviews not more, but less, transparent.

The criticism directed at SIER and comparable organisations specifically addresses efforts to formalise procedures, suggesting that the emphasis on formal stages entails a dismissive attitude towards the researcher’s craft. This position is indicative of the controversy prevalent in the field: positions on methods of synthesising educational research are highly polarised, and the debate rarely assesses the strengths and limitations of different methodologies in answering different types of question.

Theoretical framework

Scientific rigour or quality is widely defined in terms of strictly formalised procedures. Formal tools are assumed to minimise judgement and hence bias. Within STS this idealised “algorithmical model” of scientific practice, the notion that explicit rules remove the need for professional judgement, has been called into question by a number of scholars (cf. Sundqvist et al., 2015), most notably Harry Collins (1992, p. 57). Collins (1992) argues that scientific knowledge, on closer inspection, is advanced by communities of experts in accordance with what he has dubbed the “enculturation model” (p. 159). This analytic model claims that professional competence encompasses a set of skills developed within small scholarly communities. This competence cannot be replaced by a defined set of rules (Collins, 1998, pp. 497, 513) since the rules must be interpreted (Sismondo, 2010, p. 143). Sometimes formalisation and professional judgement come into conflict, since formalised knowledge tends to be considered of value in public arenas while professional judgement is valued locally (Sismondo, 2010, p. 142).

To understand why there is such a strong link in many areas between the pursuit of objectivity and the prevalence of formal procedures, Porter (1995) uses various historical case studies to offer a broad conceptual framework for understanding how allegedly objective knowledge is attained in various areas. In what Porter (1995) has dubbed “mechanical” objectivity, the significance of the expertise of researchers is minimised through rule following (cf. Megill, 1994; Sismondo, 2010, p. 139). According to Porter (1995), the reason mechanical objectivity has become established in numerous areas is linked to rising external pressure, to which the response is a reference to formal rules and procedures so as to legitimate the decisions made. The credibility of groups of experts then shifts from trust in expertise to trust in rule-following (Porter, 1995, p. 214).

The conventional method of systematic review may be regarded as an expression of mechanical objectivity. A belief that objective decisions are free of subjectivity, such as the researcher’s personal opinions, and free of external influence, such as politics, is created by following predetermined procedures (Porter, 1995, p. 8). The strength of mechanical objectivity is that it can elevate the status of a profession while reducing arbitrary opinions within it (Berg et al., 2000, pp. 777–778; Bohlin & Sager, 2011, p. 220). The drawback is that this effort to eliminate the subjective can create rigid formal steps that can generate difficulties for the user. This in turn can make the work more difficult and ultimately influence the recipient perspective (Berg et al., 2000, p. 781).

A major reason for introducing systematic reviews and formalised steps in educational research reviews was to correct a lack of transparency related to the role of experts in the review process. According to Gough et al. (2017, pp. 5–6), a standard work on research synthesis methods, trust in experts is associated with several risks. Unless the theoretical perspective of the expert is made explicit in the review process, they argue, the basis on which quality is assessed, relevance appraised, and different pieces of evidence synthesised is not clear. Even so, however, Gough et al. (2017) suggest that there is no avoiding expert judgements, as interpretation of data and categories is a necessary and unavoidable component of all forms of systematic review.

Gough et al. (2017) present a division applicable to two types of questions when conducting a systematic review, a division that can support the choice of synthesis method: “questions that assume particular theories and concepts and … questions that are more concerned with developing and generating concepts” (p. 62). According to Gough et al. (2017), this dimension of existing differences in relation to which type of question is asked is present throughout the review process. Based on the choice of question, the synthesis process relies on either an aggregative or a configurative logic. These logics predominate to different extents in the synthesis of different types of primary studies. An aggregative logic predominates in the synthesis of quantitative studies, while a configurative logic predominates in the synthesis of qualitative studies (Voils et al., 2008, pp. 14–15). To a greater extent than aggregative syntheses, moreover, configurative syntheses are based on the reviewers’ familiarity with the subject addressed and their judgement and creativity, as the synthesis itself requires constant interpretation (Gough & Thomas, 2017, p. 65). This is why configurative syntheses are less amenable to formalisation than aggregative syntheses. Though the term configuration does not appear in Noblit and Hare (1988), the approach they introduced, meta-ethnography, is widely regarded as the archetypal configurative reviewing format.

There is, however, no synthesis method that can be entirely categorised under either logic, as the process of aggregation is invariably preceded and followed by certain configurative steps. The distinction between aggregation and configuration is nevertheless a very useful tool for understanding how syntheses are structured. While the distinction between formalisation and professional judgement derives from STS, with affinities to scholarship on mechanical objectivity, the distinction between aggregative and configurative logics pertains more closely to actual orientations, self-understanding and practices within systematic reviewing in education.

In this study, the congruity between a configurative logic and professional judgement, on the one hand, and between an aggregative logic and formalisation, on the other, is a key theoretical resource. These congruities allow us to link our analysis of the interplay of formalisation and professional judgement in the systematic reviews we have examined to Porter’s concept of mechanical objectivity (see Table 1).

Table 1. The key theoretical resources.

Method and materials

In order to explore the interplay of formalisation and professional judgement in reviews of educational research, we selected two “critical cases” (Flyvbjerg, 2001, p. 78) as our empirical material. A critical case has “strategic importance to the general problem” (Flyvbjerg, 2001, p. 78), in this case, the role of formalisation in systematic reviews. The first review, on digital learning resources in mathematics education (SIER, 2017a, 2017b), exemplifies the most aggregative and formalised ambitions seen so far at SIER. The second review, summarising research on classroom dialogue in mathematics education (SIER, 2017c), is very similar to most of the SIER reviews subsequently produced. The two cases cannot be assumed to represent every systematic review in education, of course, but this selection of material allows us to conduct a careful empirical examination of the claim that formalisation excludes professional judgement.

We offer an abductive textual analysis based on repeated close readings allowing us to interrogate the empirical material by drawing on the theoretical resources described above. The abductive process in this case comprises three steps: (1) revisiting the review texts repeatedly and conducting a word-by-word analysis; leading to (2), defamiliarising the text for the reader and analyst; and finally (3), seeing the text in an alternative casing by relating it to other cases and theoretical formulations (Timmermans & Tavory, 2012, p. 176 ff.). This process, which is itself configurative and iterative, preferably “ends with models of relationships [between a set of seemingly unrelated findings]” (Sandelowski et al., 2012, p. 326). The relationships are established by juxtaposing articulations within and between texts and linking them together by using notions that are not necessarily made explicit in these texts and are often brought in from other theoretical contexts. In our case, these notions were derived from scholarship on mechanical objectivity, formalisation and professional judgment, as well as the closely related distinction between configurative and aggregative logics presented in the previous section.

More specifically, step 1 was pursued by the first and second authors in an open-ended manner, the aim being to understand the purposes, methods, content and conclusions of the reports. Having fully mapped these aspects, in step 2 we deployed two conceptual distinctions to categorise the material: primarily the one between configurative and aggregative logics and, secondarily, the one between formalisation and professional judgment. The first and second authors coded all passages with relevance for the two distinctions. On the basis of the congruity pointed out in the theoretical section between the two distinctions, signs of an aggregative logic in the presentation prompted analyses of the degree of formalisation potentially involved in these manifest articulations while, conversely, signs of a configurative logic in the presentation led us to examine the degree of professional judgment likely to have informed the reviewing process (see Table 2).

Table 2. The theoretical congruity between aggregation/configuration, formalisation/professional judgment, and the levels of analytic progress and abductive steps.

Usually, signs of a configurative or an aggregative logic were more manifest in the texts, while the reliance on formalisation or professional judgment was more latent, often derived through interpretation. For instance, questions or results can be categorised as primarily configurative or aggregative in kind without expressly stating the need for professional judgment. The reliance on professional judgment follows from the occurrence of configurative elements in the analysed texts. (See step 2 in Table 3.)

Table 3. The abductive process.

Based on these codings, all three authors, in step 3, iteratively sought to understand the interplay of formalisation and professional judgment, and to analyse the implications of this for current critiques of systematic reviews in educational research. It was in this final phase that the model of relationship presented in the Conclusion was shaped. See below for a simplified representation of this process.

Results

The review (1) Digital learning resources in mathematics education (SIER, 2017a, 2017b) covers, after the relevance and quality assessment, a total of 85 controlled experimental studies and is divided into two parts, one report on preschool that includes 10 studies and one on primary and secondary school that includes 75 studies. As a whole, the review is intended to answer the following two questions:

What are the effects on learner achievement of teaching mathematics with the support of digital learning resources?

What might explain whether teaching with the support of digital learning resources has, or fails to have, an effect on learner achievement in mathematics?

In the review (2) Classroom dialogue in mathematics education (SIER, 2017c), the aim is to summarise the results of research describing various patterns in the interaction between teachers and pupils during the teaching of mathematics. Such knowledge could help teachers recognise and understand these patterns and give them tools for guiding the interaction in a way that strengthens pupils’ prerequisites for participation and learning. Following the relevance and quality assessment, the review includes 18 studies. All studies included are based on classroom observations and are thus qualitative. The review is supposed to answer the following question:

What characterises classroom dialogues where pupils engage in mathematical reasoning and where their individual qualities are respected, and what marks teacher guidance of such dialogues?

Digital learning resources in mathematics education (preschool and primary/secondary school)

Neither the report on preschool nor the one on primary/secondary school states any specific synthesis method for summarising the studies that the review’s questions are supposed to address. However, numerous references to meta-analysis in the methods section, which is the same in both reports, are indications of an aggregative logic. In this section, the authors state that they have decided to

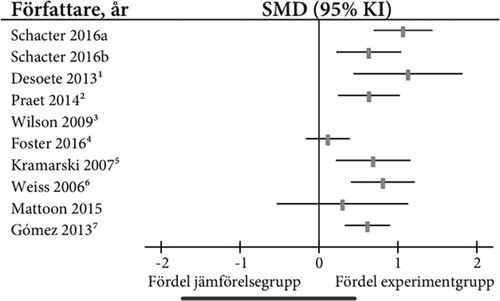

perform meta-analyses and present the study results on knowledge development in mathematics in forest plot diagrams, but without reporting aggregate effect sizes (see ). (SIER, Citation2017a, p. 46, 2017b, p. 102)

Figure 2. SIER’s example of a forest plot diagram, which differs from conventional forest plot diagrams in that no aggregated effect size is reported (SIER, Citation2017a, p. 16). The picture is reproduced from SIER, with permission.

arriving at an aggregated effect size for the studies included is not the sole purpose of a meta-analysis. [Meta-analysis] is also a tool for analysing and presenting the results of the studies. Do all the studies point in the same direction? Are there large variations among the studies? Are there any studies that clearly stand out from the rest? Is there reason to seek explanations for the differences in results between different studies? The meta-analysis, especially when combined with an illustrative diagram, can thus also be regarded as an analytical and pedagogical instrument. (SIER, 2017a, p. 46, 2017b, p. 100)

find apparent patterns in how the effect results can be explained by comparing how the various studies and their results relate to each other. (SIER, 2017a, p. 9, 2017b, p. 11)

by repeatedly and cyclically analysing the reported results of the studies in relation to the research design, information about the intrinsic characteristics of the digital learning resources, and how they have been used in teaching. (SIER, 2017a, p. 48, 2017b, p. 104)

This configurative logic can also be identified in other parts of the report. This is especially apparent in how support for the results is presented and how the results were arrived at. In both sub-reports, there is transparency regarding the support found for each finding, as the results section is presented at three levels. At the first level, the conclusions that have emerged as answers to the two research questions of the review are offered. At level two, brief quotations that support the conclusions drawn at level one are presented. Finally, at level three, each individual study is described, based on the author(s), content, mathematical capacity, design, and effect size.

A configurative logic is apparent in several of the central findings in both sub-reports. Providing a possible answer to question number two, concerning what might explain whether teaching with the support of digital learning resources has an effect on learner achievement in mathematics, the conclusion in the preschool report identifies a key factor. The key factor concerns the extent to which a certain initiative invites conversation. Rather than being directly linked to any characteristic of the digital learning resource itself, this factor pertains to whether and how the resource supports a particular working method:

… it is difficult to draw any clear conclusions from the material about the characteristics that may distinguish well-designed digital learning resources in mathematics for children in preschool. But it seems to be beneficial if the working method encourages conversation between children and with the educators. … A key factor is that the mathematics tasks that the children are working with have the character of inviting conversation about actual tasks. (SIER, 2017a, p. 12)

that it is always a matter of finding a balance between giving the children an opportunity to talk and ensuring that the talk is not occurring at the expense of focus on solving the mathematical problems. (SIER, 2017a, p. 15)

In addition, an assumption is made concerning the significance of the key factor for the body of material included in the review. Exploring links between individual studies and drawing conclusions on this basis instantiates a configurative logic. The fact that the detailed account of each individual study provides a marginally deeper understanding of the conclusions offered is further evidence that, to a certain extent, a configurative logic was adopted in the reviewing process. The conclusions are thus not apparent in the individual studies, but only become so when the studies are translated into each other; patterns that were not apparent individually emerge as the studies are combined.

The conclusions for primary/secondary school are more complex, and their number far exceeds those offered in the preschool report, but there are clearly configurative elements here as well, both in the nuanced interpretations and in the seeking of general principles rather than effective methods. The report provides a relatively favourable picture of the benefits of using digital learning resources for teaching mathematics in primary and secondary schools, and the first general conclusion argues

that it is clearly possible to design digital learning resources that can be used to develop various mathematical skills, especially if they are used in an otherwise rich teaching environment. (SIER, 2017b, p. XIII)

that equally effective teaching could not be designed in other ways, without digital learning resources. (SIER, 2017b, p. 16)

in such a way that the pupils perceive that they are doing maths. Several studies in the material can be interpreted as supporting this. (SIER, 2017b, p. 54)

It may be, therefore, that these studies combined are heading towards a general principle, namely, that what is good is the combination of digital learning resources that focus on conceptual aspects of mathematical content with teaching that contains a component in which the mathematics experience is discussed and problematised. (SIER, 2017b, p. 37)

Classroom dialogue in mathematics education

Unlike the review on digital learning resources, whose object is to provide answers about the effect of a certain intervention, this review seeks to determine what characterises fruitful classroom dialogue in mathematics education. The review is thus based on qualitative studies, and its methodology is described as being based on a configurative logic “inspired by meta-ethnography” (SIER, 2017c, p. 55). In accordance with SIER’s protocol, two researchers affiliated with Swedish universities were recruited to the project team, and they conducted much of the extraction of results as well as much of the synthesis itself. The researchers are said to have written “summaries of the results of each study”. After having discussed their interpretations of the studies with the other members of the project team, the researchers were responsible for working out a possible “structure” for the synthesis, upon which the subsequent work was based:

The summaries and suggestions for collation by the outside researchers then guided the results text written by the project leader in collaboration with the project team. The outside researchers read and responded to text outlines on two occasions. (SIER, 2017c, p. 55)

A tripartite distinction between types of classroom dialogue is made: “disputational talk” is distinguished from “cumulative talk” and “exploratory talk” (SIER, 2017c, p. 9). This distinction is later used as the basis for the entire argument presented; by stressing the contrast to disputational and cumulative talk, exploratory talk is suggested to be critical to pupils’ engagement and learning. The term “exploratory talk” is found in two of the studies included in the review; a footnote explains why the term was given overarching significance in the analysis:

The studies use different terms for this type of dialogue, but as we understand it, there are great similarities in what they are talking about in all cases, and the term “exploratory talk” captures the main elements of what is described. We have therefore decided to use this term. (SIER, 2017c, p. 9)

The question addressed in this review differs from those asked in the review on digital learning resources. Despite this, a configurative logic clearly informs both reviews, though in the review on digital learning resources it is combined with an aggregative logic. The weight attached, in practice, to configuration in these syntheses means that the degree of professional judgement required to conduct them has been high; without a thorough understanding of the research areas covered, the project teams would hardly have been able either to describe and summarise the studies selected with the precision required, or to explore links between them in an appropriate manner. This is entirely consonant with SIER’s formal requirement to involve external researchers for the purpose of exercising discipline-specific discretion in analysing the studies included in any review. It also contradicts the claim that formalised procedures of the kind adopted by SIER reduce reviewers to technicians or methodological experts (Adolfsson et al., 2019, p. 109).

To date, SIER has published fourteen systematic reviews. These reviews ask different questions and the empirical material on which they are based differs. Space does not allow us to go into detail, but one may note that there are common features in how SIER has methodologically handled the synthesis of the primary studies included. A common feature of all these reviews is the significant role of outside researchers in the review process as a whole, and in the synthesis process in particular, as well as the predominant status of a configurative logic in the synthesis of individual studies, which is used as the basis for the conclusions drawn.

Conclusion

It is evident from this examination of SIER’s efforts to summarise primary research that, by taking a highly formalised approach in describing their internal project process, SIER is attempting to benefit from the status that the systematic review format has acquired in other fields. The project process consists of a list of a priori steps that the project team is expected to follow because this, presumably, facilitates transparency and assures quality. This perspective on the structure of knowledge is based on a mechanical view of objectivity, aimed at eliminating subjective elements from the process. However, the connection between a high degree of formalisation and a low degree of discretionary decision-making is far from that simple.

The close reading reported here of the first two systematic reviews carried out by SIER indicates a mismatch: the actual composition of the reviews has not been as mechanical as the project process suggests. This mismatch, namely, that the outline of the process given front-stage is more formalised than the actual process, is common in scientific practice in general. One reason for the mismatch may be the pressure and external expectations on scientific practice that few disciplines can live up to. Within STS, scholars have demonstrated the importance of informal judgement in scientific practice (Collins, 1992). The close reading of the two systematic reviews, Digital learning resources in mathematics education and Classroom dialogue in mathematics education, similarly shows that configuration and judgements are constantly present in the review process, in contrast to the façade of formalisation.

This picture of systematic reviewing practices as unproblematic in rhetoric and presentation depends on what Collins (1992, p. 145) sums up in the phrase “distance lends enchantment”: the further away one gets from the construction of knowledge in both time and space, the more simplified one’s view becomes of how the knowledge was actually constructed. The distance from practice to finished product lends the finished object, in this case the systematic review, an enchanted status. This status blurs the nuances that exist in practice, which MacLure (2005) describes in her criticism of EPPI-Centre reviews. Parts of the process remain invisible, to the benefit of other parts of the process that are considered more scientific. This chase after formalisation is based on a view of knowledge production in accordance with Collins’s (1992, p. 159) algorithmical model.

What is highly interesting is that, in some areas, this way of seeking to bolster legitimacy by eliminating nuance may produce a contrary response and generate resistance. The criticism that formalised syntheses of educational research frequently provoke resonates with objections to the widespread, idealised image of scientific practice. Similarly, an idealised image of systematic reviewing practices engenders denunciation. The presentation of scientific practice as unproblematic and polished creates a de facto problem: the front-stage work, erected to offer protection, is exactly what attracts criticism (e.g., Adolfsson et al., 2019; Levinsson, 2018; Levinsson & Prøitz, 2017). Even though reviewers themselves are likely to be well aware of the importance of professional judgement as an unavoidable component of many stages of the process, professional judgement may need to be brought to light.

Our findings, however, do not suggest that professional judgement is exercised in an isolated or self-sufficient manner. In line with Collins’s enculturational model of scientific practice, two central aspects are evident in our analysis of SIER’s review practices: first, the necessary interaction between formal tools and professional judgement; and second, the relationship between a certain level of formalisation and the circumstances that apply in a specific situation. With respect to the conduct of systematic reviews, this implies that non-formalised procedures may be necessary to uphold the formal standards adopted. In contrast to current critiques of systematic reviews, then, formalisation does not necessarily involve a dismissive attitude towards professional discretion. The issue is not one of choosing between two alternatives, but rather one of balance and interaction.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 In addition, SIER allocates funds for research projects.

2 Web: https://www.skolfi.se/om-oss/styrdokument/ (retrieved 22 August 2022, 14:30)

3 Web: https://www.skolfi.se/om-oss/styrdokument/ (retrieved 22 August 2022, 14:30)

References

- Adolfsson, C.-H., Sundberg, D., & Forsberg, E. (2019). Evidently, the broker appears as the New Whizz-kid on the educational agora. In D. Pettersson & C. E. Mølstad (Eds.), New practices of comparison, quantification and expertise in education: Conducting empirically based research. Routledge. https://doi.org/10.4324/9780429464904

- Berg, M., Horstman, K., Plass, S., & Van Heusden, M. (2000). Guidelines, professionals and the production of objectivity: Standardisation and the professionalism of insurance medicine. Sociology of Health & Illness, 22(6), 765–791. https://doi.org/10.1111/1467-9566.00230

- Biesta, G. (2007). Why “what works” won’t work: Evidence-based practice and the democratic deficit in educational research. Educational Theory, 57(1), 1–22. https://doi.org/10.1111/j.1741-5446.2006.00241.x

- Bohlin, I. (2010). Systematiska översikter, vetenskaplig kumulativitet och evidensbaserad pedagogik. Pedagogisk Forskning I Sverige, 15(2–3), 164–186.

- Bohlin, I. (2012). Formalizing syntheses of medical knowledge: The rise of meta-analysis and systematic reviews. Perspectives on Science, 20(3), 273–309. https://doi.org/10.1162/POSC_a_00075

- Bohlin, I. (2018). Från begreppet evidence till metoder för syntes av kvalitativ utbildningsvetenskaplig kunskap: Formalisering, integration, strategier för generalisering. In D. Alvunger & N. Wahlström (Eds.), Den Evidensbaserade Skolan: Svensk Skola I Skärningspunkten Mellan Forskning Och Praktik (pp. 171–205).

- Bohlin, I., & Sager, M. (2011). Evidensens många ansikten: Evidensbaserad praktik i praktiken. Arkiv.

- Collins, H. M. (1992). Changing order: Replication and induction in scientific practice (2nd ed., with a new afterword). University of Chicago Press.

- Collins, H. M. (1998). Socialness and the undersocialized conception of society. Science, Technology and Human Values, 23(4), 494–516. https://doi.org/10.1177/016224399802300408

- Cooper, H., & Hedges, L. V. (1994). Research synthesis as a scientific enterprise. In H. Cooper & L. V. Hedges (Eds.), The handbook of research synthesis (pp. 3–14). Russell Sage Foundation.

- Flyvbjerg, B. (2001). Making social science matter: Why social inquiry fails and how it can succeed again. Cambridge University Press.

- Forsberg, E., & Sundberg, D. (2019). Att utveckla undervisningen – en fråga om evidens eller professionellt omdöme. In Y. Ståhle, M. Waermö, & V. Lindberg (Eds.), Att Utveckla Forskningsbaserad Undervisning: Analyser, Utmaningar Och Exempel (pp. 29–50). Natur & Kultur.

- Glass, G., Cahen, L. S., Smith, M. L., & Filby, N. N. (1982). School class size: Research and policy. Sage.

- Gough, D., Oliver, S., & Thomas, J. (2017). An introduction to systematic reviews (2nd ed.). SAGE.

- Gough, D., & Thomas, J. (2017). Commonality and diversity in reviews. In D. Gough, S. Oliver, & J. Thomas, An introduction to systematic reviews (2nd ed., pp. 35–65). SAGE.

- Hammersley, M. (2001). On ‘systematic’ reviews of research literatures: A ‘narrative’ response to Evans & Benefield. British Educational Research Journal, 27(5), 543–554. https://doi.org/10.1080/01411920120095726

- Kuhn, T. S. (1970). The structure of scientific revolutions (2nd ed., enl.). International encyclopedia of unified science: Foundations of the unity of science, Vol. 2, No. 2. University of Chicago Press.

- Levinsson, M. (2013). Evidens och existens: Evidensbaserad undervisning i ljuset av lärares erfarenheter [Doctoral dissertation (summary), Göteborgs universitet].

- Levinsson, M. (2018). Skolforskningsinstitutet, konfigurativa översikter och undervisningens komplexitet. Pedagogisk Forskning I Sverige, 24, 5–24. https://doi.org/10.15626/pfs24.1.02

- Levinsson, M., & Prøitz, T. S. (2017). The (Non-)use of configurative reviews in education. Education Inquiry, 8(3), 209–231. https://doi.org/10.1080/20004508.2017.1297004

- MacLure, M. (2005). ‘Clarity bordering on stupidity’: Where’s the quality in systematic review? Journal of Education Policy, 20(4), 393–416. https://doi.org/10.1080/02680930500131801

- Megill, A. (1994). Rethinking objectivity. Duke University Press.

- Moreira, T. (2007). Entangled evidence: Knowledge making in systematic reviews in healthcare. Sociology of Health & Illness, 29(2), 180–197. https://doi.org/10.1111/j.1467-9566.2007.00531.x

- Noblit, G. W., & Hare, R. D. (1988). Meta-ethnography: Synthesizing qualitative studies. Sage Publications.

- Porter, T. M. (1995). Trust in numbers: The pursuit of objectivity in science and public life. Princeton University Press.

- Sager, M., & Pistone, I. (2019). Mismatches in the production of a scoping review: Highlighting the interplay of (in)formalities. Journal of Evaluation in Clinical Practice, 25(6), 930–937. https://doi.org/10.1111/jep.13251

- Sandelowski, M., Voils, C. I., Leeman, J., & Crandlee, J. (2012). Mapping the mixed methods–mixed research synthesis terrain. Journal of Mixed Methods Research, 6(4), 317–331. https://doi.org/10.1177/1558689811427913

- SIER. (2017a). Digitala lärresurser i matematikundervisningen: Delrapport förskola (Systematisk översikt 2017:02, 2/2). Skolforskningsinstitutet.

- SIER. (2017b). Digitala lärresurser i matematikundervisningen: Delrapport skola (Systematisk översikt 2017:02, 1/2). Skolforskningsinstitutet.

- SIER. (2017c). Klassrumsdialog i matematikundervisningen – matematiska samtal i helklass i grundskolan (Systematisk översikt 2017:01). Skolforskningsinstitutet.

- SIER. (2018). Projektprocess systematiska översikter, Dnr: Skolfi 2018/8. Skolforskningsinstitutet.

- Sismondo, S. (2010). An introduction to science and technology studies (2nd ed.). John Wiley & Sons, Ltd.

- Sundqvist, G., Bohlin, I., Hermansen, E. A., & Yearley, S. (2015). Formalization and separation: A systematic basis for interpreting approaches to summarizing science for climate policy. Social Studies of Science, 45(3), 416–440. https://doi.org/10.1177/0306312715583737

- Timmermans, S., & Tavory, I. (2012). Theory construction in qualitative research: From grounded theory to abductive analysis. Sociological Theory, 30(3), 167–186. https://doi.org/10.1177/0735275112457914

- U2014:02. Slutrapport från Utredningen om inrättande av ett skolforskningsinstitut. Utbildningsdepartementet.

- Voils, C. I., Sandelowski, M., Barroso, J., & Hasselblad, V. (2008). Making sense of qualitative and quantitative findings in mixed research synthesis studies. Field Methods, 20(1), 3–25. https://doi.org/10.1177/1525822X07307463