ABSTRACT

This cross-national study captures student perceptions of the effectiveness of remote learning and assessment in two related engineering disciplines, mechanical and industrial engineering, during the COVID-19 pandemic. A structured questionnaire with 24 items on a 5-point Likert scale was used. Parallel and exploratory factor analyses identified three primary subscales. The links between student perceptions and assessment outcomes were also studied. There was a clear preference for face-to-face teaching, strongest for laboratories. Remote live lectures were preferred over recorded lectures. Although students found the switch to remote learning helpful, group work and communication were highlighted as areas of concern. Mean scores on the subscales indicate a low preference for remote learning (2.23), modest delivery effectiveness (3.05) and effective digital delivery tools (3.61). Gender effects were significant on all subscales, along with significant interactions with university and year group. Preference for remote delivery of design-based modules was significantly higher than for other module types.

1. Introduction

The education sector was massively impacted by the COVID-19 pandemic. Many universities were shut down or forced to conduct classes remotely. Higher education institutions (HEIs) rapidly adopted measures to comply with governmental restrictions and to deliver study programmes with minimal detriment to student morale, learning, assessment and accommodation. The HEIs involved in this study closed between 12 and 25 March 2020. Given the wide range of learning activities that had to be reoriented, certain measures fell short of expectations (Smalley 2020). While some learning activities, such as lectures, could be delivered using video conferencing software, others, such as tutorials and laboratories, were difficult to organise.

One advantage many HEIs worldwide have today is that they have already adopted virtual learning environments (VLEs) supported by a learning management system (LMS). Despite this, the transition was challenging for staff and students who lacked devices with the required specifications or an internet connection with sufficient bandwidth or data. A study by Gonzales, McCrory Calarco, and Lynch (2018) indicates that about 20% of students lack access to laptops/PCs and high-speed internet. The internal operations of universities also moved online, requiring staff of varying ages and levels of computer literacy to adjust to online meetings with limited scope for open discussion.

The challenges have varied from discipline to discipline. For instance, science, technology, engineering and mathematics (STEM) disciplines are often delivered through a judicious combination of lectures, tutorials, laboratory exercises, group work and fieldwork. One STEM area of particular interest is mechanical and industrial engineering (M&IE), where students learn through 3D visualisation and where interaction with real machines and manufacturing processes is crucial. Some elements of M&IE, such as computer-aided design, engineering and manufacturing (CAD/CAE/CAM), already rely on virtual tools and are therefore suitable for remote learning (Fernandes et al. 2020).

Multiple stakeholders play a role in the success or failure of remote learning delivered through electronic means (e-learning) (Choudhury and Pattnaik 2020). Key challenges experienced by lecturers include working with LMS functionalities, adapting the curriculum to modes such as blended/dual-mode delivery, and limited technical support (Mathrani, Mathrani, and Khatun 2020). Using a detailed survey, Al-Fraihat, Joy, and Sinclair (2020) identified factors such as system quality, information quality, service quality, satisfaction and usefulness in assessing e-learning success under non-pandemic conditions. These factors draw on research into information systems success modelling, most prominently the DeLone and McLean model, which has been updated roughly every ten years (DeLone and McLean 1992, 2003; Petter, DeLone, and McLean 2013). The simplified conceptual model presented by Al-Fraihat, Joy, and Sinclair (2020) proposes three intermediate factors: satisfaction, usefulness and usage. These factors provide the theoretical underpinnings for the research hypotheses.

The incorporation of computer-mediated communication during the pandemic required students to express themselves in writing: articulating ideas, contrasting viewpoints and putting forward opinions. While this can improve their self-reflection (Hawkes 2001), critical thinking (Garrison, Anderson, and Archer 2001) and writing skills (Winkelmann 1995), the benefits depend on students' perceptions of remote delivery, which are shaped by their attitudes and by the pedagogical needs of their discipline (Ozkan and Koseler 2009). These perceptions, in turn, are closely correlated with students' satisfaction with the two delivery modes, i.e. face-to-face and remote delivery; satisfaction is one of the three intermediate factors in e-learning success models (Choudhury and Pattnaik 2020). Therefore, the first research hypothesis is informed by the need to assess student perceptions of different modes of remote delivery of M&IE modules.

Early studies evaluating e-learning effectiveness during the pandemic have now emerged. Aboagye, Yawson, and Appiah (2020) found accessibility issues to be the most important factor in determining effectiveness. A study of Malaysian universities found gender to be a significant factor (Shahzad et al. 2020). Alqahtani and Rajkhan (2020) identified success factors such as student awareness and motivation, management support and the skill levels of instructors and students. Bawa (2020) reported learners' performance and perceptions in a study of 397 students; a positive picture emerged, with no significant effect on learners' grades. While these studies outline the need to assess delivery effectiveness during the pandemic, they are limited in geography and student demographics, and in their treatment of interactions between variables such as year of study, discipline, gender and university location. This motivated the second research hypothesis, which looks closely at the effectiveness of the transition, with specific insights into M&IE disciplines. This aligns with the intermediate factor of usefulness in the success model.

The intermediate factor of usage of digital delivery tools informs the third research question, as students' use of the various aspects of VLEs has a direct impact on their educational outcomes (Al-Fraihat, Joy, and Sinclair 2020). Given the numerous adaptations required during the pandemic, this study investigates the effects of these transformations to provide insights into:

how these changes affected students in terms of their learning experience,

how their experiences can inform future preparedness,

what specific issues arose in moving to remote learning for M&IE disciplines, and

what innovations are needed to facilitate education in emergency/pandemic conditions.

To perform this investigation, a survey based on the research hypotheses was administered online to students at five universities. Additional data were collected through university groups and survey forums to further test the interpretations emerging from the dataset, and these were correlated with historical and current assessment outcomes. This paper reports the results of these studies and uses them to provide indicators and guidelines for future preparedness.

2. Background

2.1. E-learning and remote assessment

Teaching has systematically moved from traditional blackboard-based lectures to increased use of e-learning (Patkar 2009). Initially, instructors created dedicated web pages as repositories for digital learning content; these have since been overtaken by integrated VLEs (Weller, Pegler, and Mason 2005). Some VLEs are developed in-house at universities, while others are procured from digital-learning solution vendors (e.g. Moodle, Canvas and Blackboard). Smartphones have enabled mobile learning (m-learning), letting students participate interactively from remote locations and access content on the move (Keegan 2002). Roschelle (2003) argued that such devices would enhance learning owing to their low cost and communication features. Another advantage is the ability to exploit location information using pure connection, pure mobility or a combination of both (Asabere 2012). However, phone models and applications such as browsers impose design constraints (Haag 2011). This may be remedied as users adopt newer models with capabilities such as live streaming.

More recently, blended learning has emerged; the term can denote combinations of (i) instructional modalities and delivery media, (ii) instructional methods, and (iii) online and face-to-face instruction (Graham 2006). In practice, it usually means text-based asynchronous e-learning combined with face-to-face learning (Garrison and Kanuka 2004). Despite the adoption of advanced video conferencing tools in remote teaching, a fundamental limitation is the inability to read facial expressions and body language, together with the lack of the intimacy and immediacy required for effective teaching (Perry, Findon, and Cordingley 2021). Another critical issue is maintaining students' motivation, as they miss social contact with other students and their lecturers (Rahiem 2021).

Assessment is one of the fundamental expectations in higher education (Sadler 2005). It is central to students' experiences and their opportunities after graduation (Struyven, Dochy, and Janssens 2005), to bureaucratic requirements for universities (Barnett 2007) and to the teaching practice of educators (Boud 2007). A primary requirement is that assessments effectively test key learning outcomes. Past work has examined efficacy in terms of desired and unintended outcomes (Hay et al. 2013). Kane (2001) identified several characteristics that determine assessment validity and found criterion-based models less effective than construct models.

McLoughlin and Luca (2002) report that online assessments can foster collaborative learning by facilitating analysis, communication and higher-order thinking. In the early days of online assessment, tasks given to learners were of a surface-assessment type, leading to a focus on objectivist knowledge (Northcote 2003). A balanced approach is needed to evaluate a range of learning outcomes; this is possible if educators consider their own epistemologies and understand the pedagogical implications. A significant issue relates to 'test fairness' (Camilli 2006): it is not enough to compare statistical measures of an assessment given to two groups and conclude that it is fair. Other factors affecting fairness include gender, test accommodations and linguistic diversity.

While VLEs provided by software vendors usually have integrated assessment tools, some universities depend on dedicated assessment software. For example, QuestionMark Perception is used in many fields (Velan et al. 2002). Using web-based or automated tools reduces marking loads for lecturers, freeing time for didactic work. Smartphone-based apps such as Socrative are also increasingly used to improve engagement (Dervan 2014).

2.2. Digital delivery of M&IE programmes

The disciplines of M&IE are broad, covering diverse topics with differing pedagogical requirements. Mechanical engineering students study mechanics, design/manufacturing, thermodynamics, fluid mechanics, robotics, etc. (Tryggvason et al. 2001). In contrast, industrial engineering degree programmes often include some mechanical modules alongside mathematics-based modules such as operations research, production planning, simulation and logistics. As such, digital delivery of these wide-ranging modules requires different presentation methods and styles.

Learners often need to use engineering software packages (Schonning and Cox 2005). Examples are computer-aided design tools (e.g. SolidWorks, NX, Autodesk Inventor), finite element analysis tools (e.g. ABAQUS, ANSYS), numerical computing tools (e.g. MATLAB, Mathematica), system design platforms (e.g. LabVIEW, STELLA) and statistical tools (e.g. R, SPSS). These tools often require expensive licenses (Nixon and Dwolatzky 2002). While some vendors offer network or student licenses, students often rely on physical university computers.

While some universities use conventional VLEs, bespoke solutions have also emerged. Ivanova, Ivanov, and Radkov (2019) developed a 3D VLE for a cutting tools module that simulates laboratory equipment. Sievers et al. (2020) built a mixed reality environment for collaborative robotics. Kikuchi, Kenjo, and Fukuda (2001) set up a client-server system for brushless DC motor laboratories, in which remote students conducted experiments through a client machine. Remon and Scott (2007) delivered content for design engineering modules through webcasts of graded difficulty, helping students gain expertise in CAE. A technical equipment provider has created a versatile data acquisition system (VDAS) that allows students to participate remotely in laboratory experiments (Ltd. 2021). Despite these efforts, engineering involves practice, and merely grasping theory cannot ensure success (Feisel and Rosa 2005). The importance of hands-on laboratory work cannot be overstated, and virtual delivery can only go so far.

2.3. COVID-19 pandemic and efforts at modifying education delivery

During the pandemic, the effectiveness of remote delivery came under scrutiny, with special support needed for digitally disadvantaged students. Efforts to change education delivery varied across the countries involved in this study and are summarised below.

2.3.1. United Kingdom

UK higher education responded with measures under the guidance of Universities UK (Universities UK 2020). The initial response concerned campus cases requiring deep cleaning of residence halls and other facilities (Bristol Live 2020). The University of Cambridge suspended examinations (BBC 2020). Laboratories working in the national interest were kept open. Oxford University provided accommodation for students unable to return (Oxford University 2020). Durham University decided to provide 'online-only' degrees (The Guardian 2020). Staff members not in essential roles were asked to work from home. Several universities adopted a 'no detriment' policy, meaning that students would not be disadvantaged but still had to pass their modules (Barradale 2020). Many universities relaxed the durations of online examinations and made the admissions process for the new academic year more flexible, so that applicants who could not sit school examinations were assessed using other metrics (University of Leeds 2020).

2.3.2. Portugal

In Portugal, the national scientific computing authority, FCCN, made professional Zoom videoconferencing licenses available to instructors. From early March, universities drew up contingency plans, such as moving classes to digital platforms and dropping mandatory attendance (New University of Lisbon 2020; Portuguese Catholic University 2020; University of Coimbra 2020). The University of Aveiro set up helplines to provide psychological support (University of Aveiro 2020), and a campus agreement with Microsoft gave access to Office365 and cloud storage. Instituto Superior Técnico adopted teleworking arrangements (Instituto Superior Técnico 2020). Following guidance from the Higher Education General Office (DGES) (DGES Higher Education General Office 2020), graduate laboratory activities resumed from May 2020.

2.3.3. Romania

In Romania, measures were taken by the National Committee of Urgent Affairs and the Ministry of Education and Research. The Politehnica University of Bucharest founded an emergency council (Polytechnic University of Bucharest 2020). At Alexandru Ioan Cuza University of Iași, the first case of a student testing positive occurred on 17 March (Alexandru Ioan Cuza University of Iași 2020); following this, all teaching activities there were conducted using Zoom. The dormitories at the Technical University of Cluj-Napoca remained open for international students (Technical University of Cluj Napoca 2020). At the Lucian Blaga University of Sibiu (Sibiu 2020), online lectures were initially delivered through the university web platform and later moved to Google Classroom.

2.3.4. United States of America

US universities responded under the guidance of the Centers for Disease Control and Prevention. The University of Washington (University of Washington 2020) became the first major university to cancel in-person classes. Within a couple of weeks, more than 1100 HEIs had switched to remote instruction (Smalley 2020). Many eased admissions requirements (University of California 2020) and leveraged existing distance-learning platforms for regular degree programmes. Some used in-person instruction for 2021–2022, while others adopted a limited reopening with blended learning (Anderson 2020).

2.3.5. Brazil

In Brazil, many universities promoted digital inclusion programmes and distributed internet kits (UFRJ 2020). In southern Brazil, federal universities interrupted teaching activities in March and restarted them in August (UFGRS 2020; UFSC 2020). Measures taken at many HEIs included supplying masks and laser thermometers, comprehensive signage and occupancy limits (PUCRS 2020). Face-to-face entrance exams were replaced by online ones (PUCPR 2020). Private HEIs used candidates' scores in the ENEM (National High School Exam) and letters of interest for admission (INEP 2020).

3. Methods

3.1. Research design

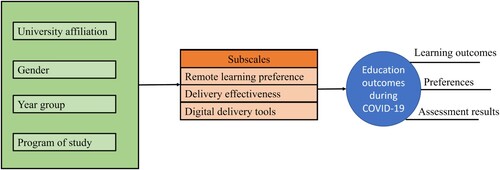

The aim of the current study was to evaluate remote learning and assessment outcomes for M&IE programmes of study during the COVID-19 pandemic for various groups of students, based on their university affiliation, gender, year group and programme of study. Figure 1 illustrates the research methodology design. In developing this design, it was noted that educational outcomes depend on whether or not students prefer different modes of remote learning (Corter et al. 2004). Their preferences may influence their usage of these learning modes, and this usage, in turn, correlates with their learning outcomes and assessment results. Secondly, the adoption of digital delivery tools also affects remote learning outcomes (Condie and Livingston 2007). Thirdly, mere adoption does not guarantee effective educational outcomes unless it is accompanied by effective delivery (Leung 2003). Based on these theoretical constructs, the specific research questions explored were:

RQ1: How does preference for different modes of face-to-face and remote instruction vary amongst students studying M&IE modules?

RQ2: How effective was the transition to remote education in M&IE during the pandemic, as perceived by the students? Did this perception depend on factors such as gender, university location, year group and programme?

RQ3: What steps can be taken to improve the effectiveness of remote delivery of M&IE programmes? Are there specific subject areas for which remote instruction is perceived to be better suited, and others for which face-to-face instruction is better suited?

RQ4: How did student preferences for remote learning correlate with assessment outcomes during the pandemic?

Figure 1. Research methodology design: the scale on educational outcomes was determined by three subscales, which were dependent on four key variables and their interactions.

It was hypothesised that face-to-face instruction would be preferred, and that this preference would be stronger for laboratories than for lectures and tutorials. This hypothesis was based on the typical M&IE curriculum, which places a strong emphasis on interaction with machines, lab equipment, 3D visualisation and mathematics, and is consistent with several studies (Nowak et al. 2004; Vaidyanathan and Rochford 1998).

On the second research question, it was hypothesised that the transition to remote teaching would receive mixed reactions. While most students would have appreciated continuity in learning, adapting to new teaching modes posed several challenges, as is well supported by recent studies (Daniel 2020; Perets et al. 2020).

On the third research question, it was hypothesised that specific modules, such as product design and manufacturing, would suffer more from remote instruction, while others involving computer simulation and numerical analysis could be delivered remotely with high quality. This hypothesis is supported by studies highlighting the successful transition to remote learning in simulation courses (Ertugrul 1998; Vaidyanathan and Rochford 1998).

On the fourth research question, it was hypothesised that, owing to competing factors, assessment scores would stay the same or be marginally higher under remote assessment. While the sudden transition to remote learning during a global pandemic would likely lower student performance, grade leniency and the use of open-book examinations could offset this effect, leading to nominal scores similar to those before the pandemic, or even higher, as observed by Scoular et al. (2021).

3.2. Sample and data collection

Earlier, Behera (2019) conducted a study on the effectiveness and appreciation of m-learning and blended learning tools in two mechanical engineering modules, in which students filled out detailed questionnaires under non-COVID conditions. The student survey for the current study built on the questions and inferences from that earlier work. A descriptive open-ended questionnaire was distributed among the co-authors, who served as an expert panel. The survey was then designed by combining questions from the earlier study with the responses provided by the panel.

The research hypotheses were tested by conducting this survey on students enrolled in M&IE modules at five universities. Details of the universities involved in this study are provided in Appendix 1. The survey comprised 34 multiple-choice questions and 7 open-ended descriptive questions; 24 of the multiple-choice questions, which related to the hypotheses, used a 5-point Likert scale. Students were recruited primarily through their module instructors and secondarily through departmental emails. The programmes are accredited in their respective countries and, as such, their teaching content is similar to that at other universities in the same country. A minority of responses were also obtained from outside these universities through external sites such as SurveyCircle. In total, 408 responses were analysed (31.9% female, 67.1% male, 1% not specified) (Behera et al. 2021). The sample size at each university as a proportion of total enrolled students varied from 0.14% to 4.84%, as detailed in Appendix 2, together with the gender ratios. 2 also provides details of study participants and the modules they were studying. The gender ratio on the engineering modules was skewed, with more males than females at all the HEIs, although this does not reflect the overall student population at these HEIs. The gender ratio follows the trends observed in STEM subjects (Legewie and DiPrete 2014).

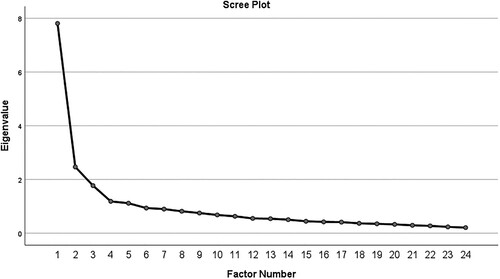

Exploratory factor analyses (EFAs) were conducted on the survey data, mapping the 24 Likert items to three related characteristics, or subscales, of educational outcomes, shown in . These subscales are henceforth denoted as subscale 1 (remote learning preference), subscale 2 (delivery effectiveness) and subscale 3 (digital delivery tools). EFA allows highly correlated questions to be linked to the same underlying factor and misfit questions to be removed (Decoster 1998). Parallel analysis was carried out as part of the EFAs to determine the number of subscales (Osborne 2007). Maximum likelihood extraction and varimax rotation with Kaiser normalisation were used for all EFAs. shows the scree plot of eigenvalues that helped set the number of subscales to three. Factor loadings of 0.3 or higher were sought in interpreting the EFAs, as recommended by Rietveld and Roeland (1993). For items with lower factor loadings than desired, a reliability analysis was carried out, and an item was retained if deleting it did not improve Cronbach's alpha. This ensured that the subscales had a coherent logic despite EFAs being blind to the underlying structure caused by latent variables (Costello and Osborne 2005). This explains the even split of the 24 items into three subscales of eight items each.
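Parallel analysis (Horn's method) retains a factor only if its observed eigenvalue exceeds the eigenvalue expected from uncorrelated random data of the same size. The Python sketch below illustrates the idea under stated assumptions. It uses eigenvalues of the Pearson correlation matrix, a principal-components variant, and takes the mean random eigenvalue as the threshold. This differs from the maximum likelihood extraction run in SPSS for this study. All function names are hypothetical. Eigenvalues come from a small cyclic Jacobi routine so that only the standard library is needed.

```python
import math
import random


def correlation_matrix(data):
    """Pearson correlation matrix of the columns of `data` (rows are respondents)."""
    n, p = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(p)]
    centred = [[row[j] - means[j] for j in range(p)] for row in data]
    norms = [math.sqrt(sum(r[j] ** 2 for r in centred)) for j in range(p)]
    return [[sum(r[i] * r[j] for r in centred) / (norms[i] * norms[j])
             for j in range(p)] for i in range(p)]


def jacobi_eigenvalues(matrix, tol=1e-12, max_sweeps=100):
    """Eigenvalues of a symmetric matrix by cyclic Jacobi rotations, largest first."""
    a = [row[:] for row in matrix]
    size = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(size) for j in range(size) if i != j)
        if off < tol:
            break
        for p in range(size - 1):
            for q in range(p + 1, size):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta ** 2 + 1))
                c = 1 / math.sqrt(t ** 2 + 1)
                s = t * c
                for k in range(size):  # A <- A J (update columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(size):  # A <- J^T A (update rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((a[i][i] for i in range(size)), reverse=True)


def parallel_analysis(data, n_iter=20, seed=0):
    """Keep leading factors whose observed eigenvalue exceeds the mean eigenvalue
    of uncorrelated normal data with the same number of rows and columns."""
    n, p = len(data), len(data[0])
    observed = jacobi_eigenvalues(correlation_matrix(data))
    rng = random.Random(seed)
    totals = [0.0] * p
    for _ in range(n_iter):
        noise = [[rng.gauss(0, 1) for _ in range(p)] for _ in range(n)]
        for k, ev in enumerate(jacobi_eigenvalues(correlation_matrix(noise))):
            totals[k] += ev
    random_means = [t / n_iter for t in totals]
    n_factors = 0
    for obs, rnd in zip(observed, random_means):
        if obs <= rnd:
            break
        n_factors += 1
    return n_factors, observed, random_means
```

Consider hypothetical data in which six items are driven by two latent factors, three items each, with item noise well below the factor signal. The function should retain two factors for such data. Some authors prefer the 95th percentile of the random eigenvalues to the mean, which gives a more conservative threshold.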

The items, with their maximum rotated factor loadings and assigned codes, are listed in Appendix 3. While 23 of the 24 items loaded onto the three factors with loadings greater than 0.3, item S2-5 loaded only marginally (0.27). Removing it from subscale 2 would reduce Cronbach's alpha from 0.717 to 0.708, so it was retained. Regression analyses were run to complement the EFAs, following procedures for refining psychological scales (Egede and Ellis 2010). The mean score for each subscale, together with its standard deviation, provided an overall measure of the construct.
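The retention rule for weakly loading items can be expressed with Cronbach's alpha, α = k/(k − 1) × (1 − Σσᵢ²/σ_T²). Here k is the number of items, σᵢ² is the variance of item i and σ_T² is the variance of respondents' total scores. The minimal Python sketch below uses the standard library only; its function names and column-per-item data layout are assumptions. It computes alpha, flags items loading below 0.3, and keeps a flagged item unless deleting it would raise alpha. This mirrors the reasoning applied to S2-5, where alpha was 0.717 with the item and 0.708 without it. The sketch also summarises a subscale as the mean and standard deviation of respondents' average item scores, which is an assumed reading of "mean score for each subscale".

```python
from statistics import mean, stdev, variance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of scores per item (same respondents)."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def review_weak_items(items, loadings, threshold=0.3):
    """Keep items loading at or above `threshold`; for weaker items, keep them
    unless deleting the item would raise the subscale's alpha."""
    full_alpha = cronbach_alpha(items)
    decisions = {}
    for i, loading in enumerate(loadings):
        if abs(loading) >= threshold:
            decisions[i] = "keep"
            continue
        alpha_without = cronbach_alpha(items[:i] + items[i + 1:])
        decisions[i] = "drop" if alpha_without > full_alpha else "keep"
    return full_alpha, decisions


def subscale_summary(items):
    """Mean and SD across respondents of each respondent's average item score."""
    per_respondent = [mean(scores) for scores in zip(*items)]
    return mean(per_respondent), stdev(per_respondent)
```

For example, consider a hypothetical reverse-scored item with a weak loading in an otherwise consistent three-item set. It is dropped because removing it raises alpha. A weak item whose removal lowers alpha, as with S2-5, is kept.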

3.3. Reliability and validity of the instrument

Because the questionnaire was designed specifically for an evolving pandemic, it was not feasible to obtain reliability coefficients from prior work. Cronbach's alpha was therefore computed to quantify internal consistency between items in the survey. The three subscales had reliability coefficients of 0.865, 0.717 and 0.822, respectively. As these exceed 0.7, the questionnaire can be inferred to be internally consistent (Cortina 1993). Validity is another important consideration in developing new surveys. To assess validity, correlations between the mean scores of the subscales were computed. The subscale for preference for remote teaching was positively correlated with the subscales for use and appreciation of digital delivery tools (r(407) = 0.440, p < 0.01) and effectiveness of digital delivery mechanisms (r(407) = 0.615, p < 0.01). Likewise, the subscale for use and appreciation of digital delivery tools was positively correlated with the effectiveness of digital delivery mechanisms (r(407) = 0.568, p < 0.01).
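The validity check correlates respondents' subscale scores. The minimal sketch below assumes one mean score per respondent per subscale. It computes Pearson's r and the t statistic for testing zero correlation, t = r × √(df/(1 − r²)), with the conventional df = n − 2. The p-value helper is an assumption: it uses a normal approximation via `math.erfc`, which is reasonable only for large samples such as n = 408. Exact p-values need the t distribution from statistical software. Function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_t(r, n):
    """t statistic for H0: rho = 0, with the conventional n - 2 degrees of freedom."""
    df = n - 2
    return r * math.sqrt(df / (1 - r ** 2)), df


def approx_two_tailed_p(t):
    """Normal approximation to the two-tailed p-value; adequate only for large df."""
    return math.erfc(abs(t) / math.sqrt(2))


# Reported correlation between subscale 1 and subscale 3, with the full sample size.
t, df = correlation_t(0.440, 408)
print(f"t({df}) = {t:.2f}, approximate p = {approx_two_tailed_p(t):.1e}")
```

With r = 0.440 and n = 408, t is roughly 9.9 on 406 degrees of freedom. This is far beyond the two-tailed critical value of about 2.59 for p = 0.01, consistent with the reported significance.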

3.4. Data analysis

The analysis was carried out in three phases. First, an overall analysis of the entire survey sample was performed. Next, comparisons were made between universities, genders, year groups and study programmes. Finally, interactions between these factors were investigated. Sample sizes varied across universities because cohort sizes differ and the investigators taught different modules. Although the investigators are all affiliated with M&IE departments, the modules they taught included students from other disciplines; hence, the effect of the enrolled programme on survey outcomes was analysed. The Likert items were scored from 1 (Strongly disagree) to 5 (Strongly agree). Two-way analysis of variance (ANOVA) was used to compare scores on each individual Likert item and each subscale. The assumptions of ANOVA were met: random and independent samples, equal variances between samples, and approximately normal distribution of the data, as assessed from skewness, kurtosis and Q-Q plots. The two-sample pooled t-test was considered but not chosen, as the population standard deviation is better estimated by the mean squared error in ANOVA (Ott and Longnecker 2015). Interactions were studied using multivariate analysis of variance (MANOVA). SPSS was used for all analyses.
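To make the two-way ANOVA concrete, the sketch below partitions the total sum of squares into four components. These are the two main effects (for example, gender and university), their interaction, and within-cell error. F ratios are then formed against the mean squared error. The sketch assumes a balanced design with equal observations per cell, which the survey data in this study were not. For unbalanced samples, SPSS by default uses Type III sums of squares, which this sketch does not reproduce. Factor labels and data are hypothetical, and p-values would still need the F distribution from statistical software.

```python
from itertools import product


def two_way_anova(cells):
    """Balanced two-way ANOVA with replication.

    `cells` maps (level_a, level_b) to a list of scores; every combination of
    levels must be present with the same number of observations."""
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    reps = len(next(iter(cells.values())))
    for key in product(a_levels, b_levels):
        if len(cells.get(key, [])) != reps:
            raise ValueError("this sketch needs a balanced, fully crossed design")

    def avg(values):
        return sum(values) / len(values)

    cell_mean = {key: avg(scores) for key, scores in cells.items()}
    grand = avg(list(cell_mean.values()))  # equals the overall mean only when balanced
    mean_a = {a: avg([cell_mean[(a, b)] for b in b_levels]) for a in a_levels}
    mean_b = {b: avg([cell_mean[(a, b)] for a in a_levels]) for b in b_levels}

    na, nb = len(a_levels), len(b_levels)
    ss_a = nb * reps * sum((mean_a[a] - grand) ** 2 for a in a_levels)
    ss_b = na * reps * sum((mean_b[b] - grand) ** 2 for b in b_levels)
    ss_ab = reps * sum((cell_mean[(a, b)] - mean_a[a] - mean_b[b] + grand) ** 2
                       for a, b in product(a_levels, b_levels))
    ss_err = sum((x - cell_mean[key]) ** 2 for key, scores in cells.items() for x in scores)

    df = {"A": na - 1, "B": nb - 1, "AxB": (na - 1) * (nb - 1), "error": na * nb * (reps - 1)}
    ss = {"A": ss_a, "B": ss_b, "AxB": ss_ab, "error": ss_err}
    ms_err = ss_err / df["error"]
    f = {effect: (ss[effect] / df[effect]) / ms_err for effect in ("A", "B", "AxB")}
    return ss, df, f
```

Consider a small hypothetical 2 × 2 design with two scores per cell: {("F", "U1"): [1, 3], ("F", "U2"): [3, 5], ("M", "U1"): [2, 4], ("M", "U2"): [4, 6]}. The sums of squares are 2, 8, 0 and 8 for the first factor, second factor, interaction and error. This gives F = 1.0 and 4.0 for the main effects and 0 for the interaction.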

4. Results

4.1. Overall analysis

Analysis of the first subscale, on remote learning preference, indicates a strong preference for face-to-face teaching. Detailed frequency distributions, as well as means, standard deviations and modes, are tabulated in Appendix 4. Among the instruction modes, the preference for face-to-face delivery was highest for laboratories (mean (M) = 4.42). The preference for face-to-face lectures was similar whether they were compared with recorded lectures or with remote live lectures. Among module types, design-based modules scored significantly higher (M = 2.68) on suitability for replacement with remote content than science, mathematics and mechanics modules, albeit with small effect sizes (Table 1).
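Appendix 4 reports each Likert item's mean, standard deviation and mode, all of which can be recovered directly from its frequency distribution. The minimal Python sketch below makes three assumptions. Counts are given for response levels 1 (Strongly disagree) to 5 (Strongly agree). The sample standard deviation, with an n − 1 denominator as SPSS reports, is wanted. Ties for the mode are all returned. The function name is hypothetical.

```python
import math


def likert_summary(counts):
    """Mean, sample standard deviation and mode(s) from counts of responses.

    counts[0] is the number of 1s (Strongly disagree), counts[4] the number of 5s."""
    levels = range(1, len(counts) + 1)
    n = sum(counts)
    mean = sum(level * c for level, c in zip(levels, counts)) / n
    ss = sum(c * (level - mean) ** 2 for level, c in zip(levels, counts))
    sd = math.sqrt(ss / (n - 1))  # n - 1 denominator: sample SD
    top = max(counts)
    modes = [level for level, c in zip(levels, counts) if c == top]
    return mean, sd, modes
```

Calling `likert_summary` with the five counts for an item returns the three statistics tabulated for that item.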

Table 1. Pairwise comparison of preferences for remote delivery of different module types.

The subscale on delivery effectiveness revealed that remote live lectures were preferred over recorded lectures (M = 3.57). This indicates that students value interaction with instructors and the motivation that comes from attending class at the same time as their classmates, even virtually. A majority found the switch to remote learning quite helpful (M = 3.54). However, they did not think remote teaching was as effective as face-to-face teaching (lectures: M = 2.9; tutorials: M = 3.05). Most felt that remote group work was difficult (M = 3.53). There was a slight preference for remote assessments over traditional sit-in exams (M = 3.18). Most felt that remote teaching was not equally effective for all modules (M = 2.66), which suggests that specific modules could be selected for remote delivery. Students were ambivalent about the ability to ask questions remotely (M = 3.04). This is linked to students switching off their video and microphones and lacking the intent or courage to interrupt a video conference, as observed by academics at all the HEIs.

The subscale on digital delivery tools showed positive feedback on most items, except for communication between learners (M = 3.15). Analysis of non-Likert questions indicated that a majority did not face issues in accessing online learning content (55.4%) or had issues that were resolved quickly (35.3%); together, these make up 90.7% of the survey sample. Likewise, a majority indicated that they had unlimited high-speed broadband (67.6%), unlimited dial-up (12.3%) or pay-as-you-use high-speed internet access (13%). Only 1.7% indicated that they had a computer with no internet, while 1% had no computer at all. Taken together, these results indicate that remote learning preference was not largely determined by digital access issues.

Prior to the COVID-19 lockdowns, a larger fraction accessed VLEs from home (47.6%) than from the university campus (34.1%). The remainder (18.3%) split their time between off-campus and on-campus access. Most had access to a smartphone, either with limited data (34.1%), without data but using Wi-Fi (30.1%), or with unlimited data (33.8%). As a significant fraction had limited data, livestreaming mobile content involving heavy data usage may not be useful. The majority (73.3%) used smartphones to access module content. VLEs were accessed regularly, with 44.9% indicating daily access and 40.7% indicating access a few times every week.

4.2. Comparative analysis for sub-groups of dataset

4.2.1. Results across universities

Preference for face-to-face teaching was significantly higher at the UK and US universities than at those in Romania, Brazil and Portugal, which had means below 4 for lectures and tutorials (Appendix 4). Table 2 records p-values for comparisons with the overall means. The stronger preference for face-to-face laboratories than for lectures and tutorials is apparent at all universities.

Table 2. Comparison of preference at universities for face-to-face teaching.

On the preference for specific modules for remote teaching, means between 2 and 3 were obtained for all modules across all universities. This indicates a clear preference for face-to-face teaching, in line with the results for the generic questions in subscale 1 (remote learning preference). Students at the UK-HEI favoured mathematics-based modules for remote teaching (M = 2.52). In Romania and Brazil, the highest preference was for design-based modules (Romania: M = 2.72; Brazil: M = 2.83), while in Portugal, students preferred science-based modules (M = 2.75). In the USA, students favoured mechanics-based modules (M = 2.73).

The means for the comparison between remote live lectures and recorded lectures were between 3 and 4 for all universities. The Brazil-HEI had the highest preference for remote live lectures (M = 3.75), while the Portugal-HEI had the highest preference for recorded lectures (M = 3.22). Students at all universities felt the switch to remote learning was helpful, with means between 3 and 4 and the highest mean at the Brazil-HEI (3.94). Students were ambivalent about whether remote lectures were as effective as face-to-face lectures, with means ranging from 2.68 (UK-HEI) to 3.20 (Brazil-HEI). Responses for remote tutorials followed similar trends, albeit with slightly higher means at four universities. On the difficulty of remote group work, the means ranged from 3.19 (Brazil-HEI) to 3.86 (Portugal-HEI). The means for remote assessments varied within a narrow range, between 3.05 (Portugal-HEI) and 3.37 (USA-HEI). The means for whether remote teaching was equally effective for all modules ranged from 2.39 (Portugal-HEI) to 2.84 (Romania-HEI). The means for an enhanced ability to ask questions ranged from 2.64 (UK-HEI) to 3.22 (Romania-HEI).

On the subscale for digital delivery tools, the means for individual Likert items varied within a narrow range. For the UK-HEI, the highest mean was for the ability to revise using the VLE (M = 4.36). For the Romania-HEI, three items shared the highest mean of 3.71. The highest means for the Brazil, Portugal and USA-HEIs were 4.25, 4.18 and 3.98, respectively, all for the item on the variety of resources on the VLE.

4.2.2. Influence of study programme

As shown in Appendix 5, industrial engineering (IE) students had the highest preference for face-to-face lectures and tutorials (lectures: M = 4.24; tutorials: M = 4.20). They also had a high preference for face-to-face laboratories (M = 4.47). The means of mechanical engineering (ME) students for face-to-face interaction were lower than those of economics students. IE students also had the highest preference for face-to-face lectures over recorded lectures (M = 4.11). On the remaining questions in subscale 1 (remote learning preference), the means were comparable for all disciplines, lying between 2 and 3 and showing a preference for face-to-face interaction.

On subscale 2 (delivery effectiveness), ME students were the most appreciative of the switch to remote learning and had the highest means on whether remote lectures and tutorials were as effective as face-to-face teaching (lectures: M = 3.01; tutorials: M = 3.25). The means for the other questions were comparable across disciplines.

On subscale 3 (digital delivery tools), the means for ‘other’ disciplines were the highest for 5 of the 8 questions, indicating that these students were more satisfied than ME, IE and economics students. Overall, the means were mostly comparable across disciplines, indicating that digital delivery tools were used well across all disciplines. Economics students were the most supportive of mobile apps (M = 3.88) and of using the VLE to communicate with other learners (M = 3.31).

4.2.3. Gender influence

Clear trends by gender emerged on the different subscales (Appendix 4). On subscale 1 (remote learning preference), females had a higher preference for face-to-face teaching than males for all three teaching modes. The p-values for the comparison of means were 0.028, 0.030 and 0.086 for lectures, tutorials and laboratories, respectively, indicating significance at p < .05 for lectures and tutorials only. For all module types (design, mechanics, science and mathematics), males had a higher preference for remote live lectures than females. On subscales 2 (delivery effectiveness) and 3 (digital delivery tools), the means for most questions were close for both genders. The one question with a significant difference was the preference for remote assessments over sit-in examinations (males: M = 3.32; females: M = 2.94).

4.2.4. Influence of year group

On subscale 1 (remote learning preference), the highest preference for face-to-face instruction was shown by second-year undergraduates (lectures: 4.24, tutorials: 4.48, laboratories: 4.59) and PhD students (lectures: 4.31, tutorials: 4.44, laboratories: 4.56) (Appendix 6). These two groups shared the highest mean for face-to-face vs. recorded lectures (4.38). Third-year undergraduates had the highest preference for remote teaching of design-based modules (M = 2.82), while Master’s students had the highest preference for remote teaching of mechanics (M = 2.73), science (M = 2.74) and mathematics-based modules (M = 2.66).

On subscale 2 (delivery effectiveness), the highest preference for remote live lectures over recorded lectures was observed in the unclassified group (M = 4.1), followed by 2nd-year undergraduates (M = 3.93). The 4th-year undergraduates were the most positive about the switch to remote learning (M = 3.70) and had the highest means for the effectiveness of remote lectures (M = 3.03) and remote tutorials (M = 3.32). The means for the difficulty of remote group work lay between 3 and 4 for all year groups, with the highest mean for 2nd-year undergraduates (M = 3.83). Master’s students had the highest preference for remote assessments (M = 3.31). The 1st-year undergraduates had the highest mean on whether remote live instruction was equally effective for all modules (M = 2.85); as all year groups had means below 3, the general view was that effectiveness varied between modules. The 1st-year undergraduates also had the highest mean for being able to ask questions in remote lectures (M = 3.21).

On subscale 3 (digital delivery tools), the means varied within a narrow range. The 4th-year undergraduates had the highest means for (i) the presence of a variety of resources (M = 4.03), (ii) studying effectively (M = 3.48) and (iii) preference for mobile apps (M = 3.7). The 3rd-year undergraduates scored highest on being able to read before lectures (M = 3.66) and being able to revise at convenient places and times (M = 3.87). The 1st-year undergraduates had the highest mean for communicating with other learners (M = 3.39). The unclassified students recorded the highest mean for being able to revise after lectures (M = 4.20), while PhD students had the highest mean for announcements on the VLE (M = 3.81).

4.3. Interactions between factors

The interaction effects of the independent variables on the subscales and individual Likert items were studied using multivariate analysis of variance (MANOVA). Subscale scores were first computed by averaging the scores of the individual Likert items, reverse-coding items S1-1, S1-2, S1-3, S1-4 and S2-5 where necessary so that all items within a subscale are interpreted in the same direction. Statistics for the subscales are summarised in Table 3. The mean scores indicate an overall low preference for remote learning (2.23), modest delivery effectiveness (3.05) and effective digital delivery tools (3.61).
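As a minimal sketch of the scoring step described above (not the authors’ analysis script), the Python below reverse-codes the flagged items on the 5-point scale (a score x becomes 6 − x) and averages one respondent’s items into a subscale score. Only the five reverse-coded item IDs come from the text; the item list for subscale 1, the extra item name `S1-5` and the respondent’s answers are hypothetical.

```python
from statistics import mean

LIKERT_MAX = 5  # 5-point scale: 1 = strongly disagree ... 5 = strongly agree


def reverse_code(score: int, scale_max: int = LIKERT_MAX) -> int:
    """Map 1<->5, 2<->4 and leave 3 unchanged on a 1..scale_max Likert scale."""
    if not 1 <= score <= scale_max:
        raise ValueError(f"score {score} outside 1..{scale_max}")
    return scale_max + 1 - score


def subscale_score(responses: dict[str, int], items: list[str], reversed_items: set[str]) -> float:
    """Average one respondent's item scores, reverse-coding the flagged items first."""
    scores = [
        reverse_code(responses[item]) if item in reversed_items else responses[item]
        for item in items
    ]
    return mean(scores)


# Reverse-coded items named in the text
REVERSED = {"S1-1", "S1-2", "S1-3", "S1-4", "S2-5"}

# Hypothetical respondent and hypothetical subscale-1 item list ("S1-5" is illustrative only)
respondent = {"S1-1": 5, "S1-2": 4, "S1-3": 5, "S1-4": 4, "S1-5": 2}
print(subscale_score(respondent, ["S1-1", "S1-2", "S1-3", "S1-4", "S1-5"], REVERSED))
```

Here a respondent who strongly prefers face-to-face teaching ends up with a low remote-learning-preference score (1.6), which is the direction the subscale mean of 2.23 is read in.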

Table 3. Distribution statistics for various subscales.

There was a significant difference between the genders when all subscales were considered jointly, Wilks’ Λ = 0.952, F(6, 634) = 2.650, p = .015, partial η² = .024. Two interactions involving gender were significant: (i) university by gender (Wilks’ Λ = 0.906, F(18, 897) = 1.772, p = .024, partial η² = .032) and (ii) gender by year of study (Wilks’ Λ = 0.903, F(21, 910.8) = 1.573, p = .049, partial η² = .034).
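The effect sizes reported above can be cross-checked against one common definition of multivariate partial η² for Wilks’ Λ: partial η² = 1 − Λ^(1/s), with s = min(p, df_effect), where p is the number of dependent variables. The sketch below assumes p = 3 (the three subscales) and infers df_effect from the reported numerator degrees of freedom (p × df_effect); both are assumptions rather than details stated by the authors. Under these assumptions it gives about .024 and .032 for the first two effects and .033 for the third, which matches the reported .034 only to within rounding of Λ.

```python
def partial_eta_sq_wilks(wilks_lambda: float, n_dvs: int, df_effect: int) -> float:
    """Multivariate partial eta squared from Wilks' lambda: 1 - lambda**(1/s), s = min(p, df_h)."""
    if not 0.0 < wilks_lambda <= 1.0:
        raise ValueError("Wilks' lambda must lie in (0, 1]")
    s = min(n_dvs, df_effect)
    return 1.0 - wilks_lambda ** (1.0 / s)


# Assumption: three subscales as dependent variables (p = 3); df_effect inferred
# from the reported numerator df = p * df_effect, i.e. 6 -> 2, 18 -> 6, 21 -> 7.
effects = {
    "gender": (0.952, 2),
    "university x gender": (0.906, 6),
    "gender x year": (0.903, 7),
}
for name, (lam, df_h) in effects.items():
    print(f"{name}: partial eta^2 = {partial_eta_sq_wilks(lam, 3, df_h):.3f}")
```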

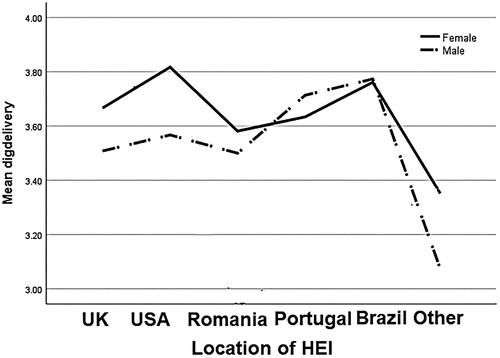

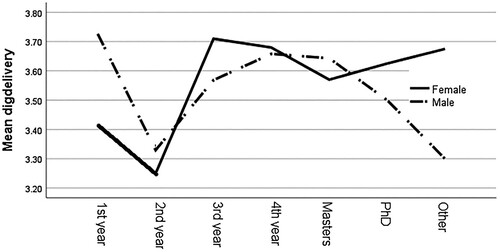

Supplement 1 shows the interaction effects on the subscales. Using Bonferroni-adjusted significance levels, two interactions remained significant: university by gender for subscale 3 (F(6, 319) = 3.153, p = .005, partial η² = .056) and gender by year of study for subscale 3 (digital delivery tools) (F(7, 319) = 2.887, p = .006, partial η² = .06). At three of the five universities, females scored higher on subscale 3, while at the Portugal and Brazil-HEIs, males scored higher; this is illustrated in Figure 3. Males in the 1st-year UG, 2nd-year UG and Master’s groups scored higher than females, whereas females in the 3rd-year UG, 4th-year UG and PhD groups scored higher than males; this is illustrated in Figure 4.
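A Bonferroni adjustment divides the overall significance level by the number of tests in the family. The text does not state the family size, so the sketch below assumes α = .05 split across the three subscales (threshold ≈ .0167) and checks the two reported p-values against it.

```python
ALPHA = 0.05


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance level that keeps the family-wise error rate at or below alpha."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


# Assumption: the family is the three subscales, each tested as a separate univariate follow-up.
threshold = bonferroni_threshold(ALPHA, 3)
reported = {"university x gender (subscale 3)": 0.005, "gender x year (subscale 3)": 0.006}
for name, p in reported.items():
    verdict = "significant" if p < threshold else "not significant"
    print(f"{name}: p = {p} -> {verdict} at {threshold:.4f}")
```

A larger family, such as all individual Likert items, would shrink the threshold proportionally, which is why only very small p-values survive at item level.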

Figure 3. Interaction between university and gender for subscale 3.

Note: error bars are not shown for ease of interpretation.

Figure 4. Interaction between year of study and gender for subscale 3.

Note: error bars are not shown for ease of interpretation.

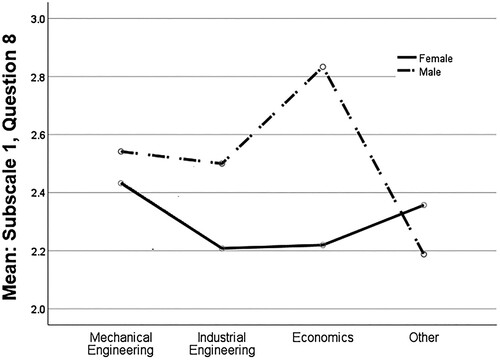

Supplement 2 shows the interaction effects for individual Likert items. Again using Bonferroni-adjusted levels, a significant interaction was identified. For the question on student preference for remote live lectures on mathematics-based modules (subscale 1, remote learning preference), the gender by programme interaction was significant (F(3, 319) = 4.916, p = .002, partial η² = .044). Figure 5 shows this interaction effect. While the mean for males is higher for all three disciplines, the gap between males and females is considerably larger for economics than for the remaining two disciplines.

Figure 5. Interaction effect between study programme and gender for question 8 of subscale 1.

Note: error bars are not shown for ease of interpretation.

Interaction effects were studied for all combinations of levels of the four key independent variables, resulting in 745 comparisons for which effect sizes were calculated. The most important comparisons for each subscale are reported in Supplements 3–5, where red lines separate the comparison groups within each subscale.

4.4. Assessment outcomes

The assessment outcomes of the pandemic years (2019–2020 and 2020–2021) were compared with those of the non-pandemic year 2018–2019 (see Appendices 7–9). Appendix 10 provides assessment outcomes for the UK-HEI over the six years from 2015 to 2021. Seven modules saw increases in class averages from 2018–2019 to 2019–2020, while three saw decreases. Most changes were statistically non-significant, the exception being the advanced statics module at the UK-HEI, where the class average jumped from 55% to 83%. Pass percentages increased for six modules, decreased for three and stayed the same for one. The pass percentage for the metal forming module at the Romania-HEI rose significantly from 66% to 76.3%, while for the finite element method module it fell from 92.9% to 84.9%.

At the USA-HEI, assessment outcomes did not vary much during the pandemic. This could be because the module is a postgraduate module and its students were better able to adapt to the changes and challenges of online learning, and hence scored well. Moreover, the university and the instructor tried to minimise the impact by offering live lectures and office hours. Although student preferences were strongly in favour of face-to-face teaching and the USA-HEI had the highest preference for remote assessments (M = 3.37), these did not change the assessment outcomes.

At the UK-HEI, for the advanced statics module, there was a significant increase in the means for individual components as well as the overall mean. This can be attributed to the change in assessment mode and a high preference for remote assessments (M = 3.32). Instead of the usual face-to-face closed-book exam, students attempted a take-home exam and then had to present their solutions to the examination questions. This increased their accountability: they had to put in the effort to be able to stand up to the oral viva, which potentially improved the accuracy of their solutions. The overall mean for the second pandemic year was similar to that of the year before the pandemic, as the assessment did not include an oral viva, although it was still open book. Some students also resorted to academic malpractice in the second year, which brought down the overall mean. The lower means on the laboratory exams can potentially be linked to students’ high preference for face-to-face laboratories. For the advanced dynamics module, similar effects were observed, with means increasing in the first pandemic year and falling back to normal in the second. Students at the UK-HEI had the strongest preference for mechanics-based modules to be taught face-to-face, which might explain the mean of 40.87 for the written exams in the second pandemic year.

At the Brazil-HEI, on the module ‘Introduction to Engineering’, a modest increase in the overall mean was observed during the second pandemic year, driven by higher scores on the laboratory exams, where the mean rose by more than 20%. On the CAD/CAM module, a modest increase in the overall mean was observed during the first pandemic year, owing to higher scores on the written exams, where the mean rose by more than 8%. During the second pandemic year, the means were substantially lower, by nearly 20%, on all components. This could be linked to the stronger preference for face-to-face laboratories (M = 4.7) compared to face-to-face lectures (M = 3.8) and tutorials (M = 3.66). On the welding module, the overall means were higher by ∼8% and ∼5% in the two pandemic years, largely due to increases in the written exam means.

At the Romania-HEI, on the finite element method (FEM) module for mechatronics students, the overall and component means during the second pandemic year were significantly lower than in the non-pandemic year. On the FEM module for IE students, there was a modest increase in the means (overall and components) during the first pandemic year, but marks returned to normal during the second year. On the metal forming module, the mean for the written exam dropped in both pandemic years. These outcomes at the Romania-HEI cannot be coherently linked to student preferences and appear to be random variations depending on the cohorts and the examinations set each year.

The only significant change in assessment outcomes at the Portugal-HEI was in the written exam mean during the second pandemic year (an increase from 46.1% in 2018–2019 to 61.2% in 2020–2021). Since the first lockdown was implemented in emergency mode, study materials had to be prepared quickly, whereas for the second pandemic year there was ample time to prepare both asynchronous and synchronous sessions. Students had also adapted to the new remote teaching paradigm. So, despite Portuguese students having the lowest preference for remote assessments among all the HEIs, the assessment outcomes otherwise did not vary significantly.

5. Discussion: indicators and guidance for future preparedness

There is a preference for face-to-face instruction across all the sub-groups studied. This is understandable, given that these students had enrolled for face-to-face education, unlike students attending an open university. Of the three types of instruction investigated, the preference for face-to-face laboratories was the highest. This can be explained by laboratories being key to understanding engineering concepts and requiring physical presence, except for work that is primarily software-based. As this preference was in line with expectations, it provided confidence in the answers given by the survey population.

Students at the UK and USA-HEIs preferred face-to-face teaching more than the others. A review of the answers to free-text questions reveals that this is linked to high tuition fees in the UK and USA, which leave students feeling that they are not getting value from their education when it is delivered remotely. Wilkins, Shams, and Huisman (2013) pointed to anxiety among UK students due to the fee changes introduced following the Browne review in 2010. Besides, students in the UK tended to enrol at universities far from their parental homes, with an expectation of face-to-face teaching (McCaig 2011). In the remaining three countries, university education is highly subsidised by the government. The Portugal-HEI had the highest preference for recorded lectures. This may be explained by the length of the recorded videos, which was shortest in Portugal at ∼25 min; longer recorded lectures tend to be disliked by students.

There was also a clear preference for design-based modules to be moved online. This can potentially be attributed to the long mathematical derivations in other modules, which students followed better face-to-face, where they could ask questions. Likewise, IE students had a higher preference for face-to-face teaching than students in other disciplines. While this variation could partly be attributed to different sample sizes, one possible explanation is that industrial engineering is a more mathematical discipline, requiring rigorous derivations in modules such as operations research. However, this merits further investigation.

While students generally felt that moving teaching online was helpful, the effectiveness of the education delivered merits discussion. The ambivalent responses (means centred around ‘neither agree nor disagree’) show that the transition was not smooth. Students missed out on valuable instruction of the kind available to earlier and later cohorts unaffected by the pandemic. Of particular concern is remote group work, which became difficult due to the pandemic; this is another area that needs planning to enhance teaching delivery.

The survey also revealed one key shortcoming of current VLEs: communication between students. This item scored lowest on the delivery tools subscale (M = 3.15), and it is one area where e-learning companies and universities can work together to improve.

Gender was a strong source of variation. This is not surprising, as previous studies have reported significant differences between males and females, such as a more stable attitude in males and more stable participation by females (Rizvi, Rienties, and Khoja 2019). Diep et al. (2016) also report that gender influences online interactions between learners. The results from this study indicate that females preferred face-to-face teaching more than males. In addition, gender showed significant interactions with university, year group and programme.

The interactions observed indicate specific variations within subgroups where action is merited. In particular, at the UK and Romania-HEIs, VLE-based digital delivery has scope for improvement, while at the USA-HEI, the gender gap in VLE-based delivery needs to be addressed so that males are able to use VLEs as effectively as females. Likewise, for mathematics-based modules, females prefer face-to-face teaching more, and this can be addressed by conducting such classes in person, particularly for female students studying IE. Also, the 2nd-year undergraduates have been most affected by the transition and need further attention in coming academic years to strengthen educational outcomes.

The assessment outcomes showed different patterns across the two pandemic years at different HEIs. Some of these are linked to student preferences and others to changes in assessment style (e.g. from closed book to open book). The variation for advanced statics at the UK-HEI could be explained by a new assessment regime in which students were given time to draft solutions and then present them orally. At the Brazil-HEI, there was a drop in scores in 2020–2021 that could be linked to student preference for face-to-face teaching. At the Romania, USA and Portugal-HEIs, no such links could be established.

Many universities that operate primarily in face-to-face mode have moved to a blended learning approach. However, this comes with several challenges. Condie and Livingston (2007) point out that this leads academics to feel a loss of identity and control, and uncertainty about intervention. Further, academics perceive this as a threat to supporting students engaged in the self-learning parts of the curriculum. Besides, the online part of the blended learning approach does not suit all learners, as self-motivation and maturity are required for success. Engineering educators and administrators need to ask critical questions about how to address the challenges identified. Based on the survey analysis, the authors’ experience and exposure, and current remote learning trends, a set of tools was identified (Table 4) that can be used for future preparedness.

Table 4. Tools for remote learning future preparedness.

6. Limitations of this study

This study used a cross-sectional design, which limits some conclusions. For instance, it is not feasible to establish causal links between the length of exposure to online learning and preferences for remote teaching. While the 1st-year undergraduates had limited exposure to face-to-face university education, the later year groups had learnt primarily face-to-face. As the pandemic continues and online teaching persists, student preferences may change after a sustained period. The inability to measure persistence over time and to carry out psycho-social analysis of individual cases is a common limitation of cross-sectional study designs (Hazari et al. 2010).

The study did not evaluate responses based on attributes that affect student perception (Rizvi, Rienties, and Khoja 2019), such as age, region of origin, poverty level, previous educational outcomes, variations in learning design at the different universities, cultural differences and interactions between learners. This study was conducted during a once-in-a-century pandemic, with different governments and universities applying different measures. This could affect some study outcomes, as it is hard to link government and university policies to student preferences. These aspects can be explored in future studies.

Although the study was conducted in M&IE departments, there was a sizeable number of students from other disciplines (notably economics (N = 59) and a combination of other disciplines (N = 75)) who were taking some of the interdisciplinary modules. The overall trends for each subscale remain intact, and since a discipline-wise analysis is presented, the results can be used by others in future. Practitioners from other disciplines who want a more generic picture could use the means for individual Likert items under the classification ‘Other’. However, the survey sample was targeted and cross-sectional.

Likert scales suffer from limitations such as central tendency bias, acquiescence bias and social desirability bias (Nadler, Weston, and Voyles 2015). As a 5-point scale was used, central tendency bias could not be completely avoided. However, only 5 of the 24 questions had a mode of ‘3’, so central tendency bias was not pervasive across the survey, which gives some confidence in the responses. The survey also included free-text questions so that responses could be checked for acquiescence bias. As an online, anonymous survey was used, social desirability bias was largely mitigated.
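The central tendency check described above amounts to finding each item’s modal response and counting the items whose mode is the scale midpoint. A minimal sketch with hypothetical responses follows; treating a tie that includes 3 as a midpoint mode is a design choice of this sketch, not something the text specifies.

```python
from collections import Counter


def modal_responses(item_scores: list[int]) -> list[int]:
    """Return all most-frequent values (ties included) for one Likert item."""
    counts = Counter(item_scores)
    top = max(counts.values())
    return sorted(value for value, c in counts.items() if c == top)


def items_with_midpoint_mode(survey: dict[str, list[int]], midpoint: int = 3) -> list[str]:
    """Names of items whose modal response includes the scale midpoint."""
    return [name for name, scores in survey.items() if midpoint in modal_responses(scores)]


# Hypothetical data: three items answered by five respondents
survey = {
    "Q1": [5, 5, 4, 5, 3],
    "Q2": [3, 3, 2, 4, 3],
    "Q3": [2, 3, 2, 3, 1],  # tie between 2 and 3
}
print(items_with_midpoint_mode(survey))
```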

The intention of this study was to evaluate student preferences during the pandemic to provide guidance for modifying future delivery. While expert panel evaluations could have yielded further insights, they would have had limited capacity for data collection (Cash, Stanković, and Štorga 2016). Threats to the internal validity of the survey were reduced by using random selection within the same sampling time frame at all institutions. The results indicate that, despite any missing causal links, clear trends emerged from the survey that can be used to modify M&IE teaching delivery for the benefit of academic communities.

7. Implications for future studies

The study can be further improved by including the missing attributes identified in Section 6. The survey questions could also refer to a specific list of modules rather than groupings such as ‘mathematics-based’, ‘mechanics-based’ or ‘design-based’. However, this needs careful work, as curricula vary by university and geography.

The Brazil-HEI had the highest appreciation for digital delivery tools. This suggests one or both of two possibilities: (i) VLEs were used more efficiently; (ii) students were concerned that face-to-face instruction was not safe (either because Brazil is a developing country or due to governmental policies). The exact cause could not be ascertained from the survey, as this result was not one of the hypotheses and became evident only after analysis. However, the cause merits investigation.

The questionnaire length also poses some issues for gathering reliable data. A longer questionnaire can reduce students’ willingness to answer all questions carefully and can cause mental fatigue. Hence, the questionnaire could be broken down into sections administered separately at different times, each with a limited number of questions, which can elicit more honest responses. As Cronbach’s alpha values were well over 0.7, more items are not necessarily needed. Indeed, the survey could be trimmed by removing questions that yield similar outcomes, such as questions in subscale 3 (delivery tools).
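For reference, Cronbach’s alpha for k items is α = k/(k − 1) × (1 − Σ var(item) / var(total score)). The sketch below computes it from a respondents × items matrix using sample variances throughout; the four-respondent, three-item data set is hypothetical and is not drawn from the survey.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (rows = respondents)."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents
    item_vars = [variance([row[j] for row in responses]) for j in range(n_items)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical subscale: four respondents answering three Likert items
data = [
    [4, 5, 4],
    [2, 2, 3],
    [3, 3, 3],
    [5, 4, 5],
]
print(round(cronbach_alpha(data), 3))
```

For this toy matrix the function returns about 0.916. Because alpha rises with the number of items as well as with inter-item correlation, a subscale that is already well above 0.7 can often lose a redundant item without falling below that threshold.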

8. Conclusions

The results from this study provide key trends and guidance for universities reorganising their online teaching delivery in M&IE to cope with this pandemic and future disruptions. Both quantitative and qualitative answers to the research questions were obtained, and these are summarised below:

The preference for face-to-face teaching in all instruction modes was evident, although ERT was acceptable to most students given the nature of the pandemic, which made it unavoidable. Laboratories were the most preferred for face-to-face delivery. Remote live lectures were preferred over recorded lectures. These results are significant in that the trends were clear across all HEIs, and they are the first clear results establishing these student preferences in M&IE disciplines.

One key challenge was ensuring continuity of learning in a discipline dominated by practice, where insights gained through hands-on learning are key to emerging as a skilled engineer valued by industry and society. The students echoed that need through their stronger preference for face-to-face laboratories. Past work has highlighted that online laboratories are not satisfactory solutions for engineering disciplines (Hyder, Thames, and Schaefer 2009). The delivery effectiveness score (3.05) indicated that while the transition to ERT was not a complete failure, there was still significant room for improvement. One key problem area was group work and communication between students, which could be improved through digital innovations.

The uptake and usage of digital delivery tools were promising, with a score of 3.61. However, there is still room for improvement, as the scores for this and the other subscales were below 4. There was a higher preference for design-based modules to be moved online; this is a novel finding that could be used by HEIs.

The study highlighted several factors that influence online teaching delivery in M&IE: university, gender, programme and year of study. These factors and their interactions correlated with the preference for remote delivery, delivery effectiveness and the impact of digital delivery tools. Although the survey targeted M&IE students, the general trends remain intact for other disciplines. The perceptions of economics students taking engineering modules give an indication of the underlying factors for students from other disciplines studying M&IE modules.

The progression of assessment scores with the evolution of the pandemic indicated links with student preferences, assessment modes (open book vs. closed book) and gradual adaptation from the first pandemic year to the next.

The factors underlying student perceptions identified in this research need to be accounted for when responding to disaster scenarios and building resilient remote engineering education infrastructure globally. The outcome of the study is important, as the pandemic is yet to be contained in many countries, with new waves of infection appearing. The importance of tailored remote learning in engineering education has never been greater, and it may become the new normal in the coming years.

Supplemental Material

Acknowledgements

The authors would like to thank the Research Ethics Committee of the Faculty of Science and Engineering at the University of Chester for processing the ethics clearance for the survey used in this paper in a timely manner (Reference: 158/20/AB/ME). The research participants provided informed consent for this survey (conducted using Google Forms) at the start of the survey, before answering any questions. An information sheet with full details of the survey was provided, and it stated the following: By taking part in this survey, you agree to the following: (1) I confirm that I have read, or had read to me, and understand the information sheet dated 08/05/2020 for the above study. I have had the opportunity to ask questions and these have been answered fully. (2) I understand that my participation is voluntary and I am free to withdraw at any time, without giving any reason and without my legal rights being affected. (3) I understand the study is being conducted by researchers from five universities and that my personal information will be held securely on their university premises and handled in accordance with the provisions of the Data Protection Act 2018. (4) I understand that data collected as part of this study may be looked at by authorised individuals from the universities and regulatory authorities where it is relevant to my taking part in this research. I give permission for these individuals to have access to this information. (5) I agree to take part in the above study.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

Data openly available in a public repository that issues datasets with DOIs.

The data that support the findings of this study are openly available in ReShare (UK Data Service) at https://reshare.ukdataservice.ac.uk/855089/ or https://dx.doi.org/10.5255/UKDA-SN-855089 [Reference Number: 855089].

Full citation for dataset

Behera, Amar Kumar and Alves de Sousa, Ricardo and Oleksik, Valentin and Dong, Jingyan and Fritzen, Daniel (2021). Multi-metric Evaluation of the Effectiveness of Remote Learning in Mechanical and Industrial Engineering During the COVID-19 Pandemic: Indicators and Guidance for Future Preparedness, 2020. [Data Collection]. Colchester, Essex: UK Data Service. https://dx.doi.org/10.5255/UKDA-SN-855089.

Additional information

Notes on contributors

Amar Kumar Behera

Amar Kumar Behera is a Senior Lecturer and Program Lead in Mechanical Engineering at the University of Chester. He holds a BTech in Manufacturing Science and Engineering, MTech in Industrial Engineering and Management and Minor in Electronics & Electrical Communication Engineering from Indian Institute of Technology, Kharagpur. He also has MS in Mechanical Engineering from the University of Illinois at Urbana-Champaign, a PhD from Katholieke Universiteit Leuven, Belgium and a Post Graduate Certificate in Higher Education Teaching from Queen's University Belfast. He is also a Fellow of the Higher Education Academy. His past work in engineering education has focussed on active learning using robotics systems, peer assisted learning, e-learning, m-learning and blended learning tools.

Ricardo Alves de Sousa

Ricardo Alves de Sousa is a tenured Professor at the University of Aveiro, Portugal. He obtained BSc and MSc degrees in Mechanical Engineering from the University of Porto in 2000 and 2003, respectively. In 2006 and 2017, he obtained the PhD and Habilitation degrees in Mechanical Engineering from the University of Aveiro, Portugal. In 2011, he received the international scientific ESAFORM (European Association for Material Forming) prize for outstanding contributions in the field and, in 2013, the innovation prize from APCOR (Portuguese Association of Cork Material), Portugal. He presides over the Portuguese Association of Forensic Biomechanics. He is the author of more than 100 works in peer-reviewed international journals.

Valentin Oleksik

Valentin Oleksik is a full-time Professor at the Faculty of Engineering of Lucian Blaga University of Sibiu, Romania. He received the B.S. degree in Industrial Engineering in 1995 and the M.S. degree in 1997 from Lucian Blaga University of Sibiu, Romania. In 2005 he obtained his Ph.D. and in 2016 his Habilitation degree in Industrial Engineering at the same university. Since 1995, he has been a Faculty Member in the Department of Industrial Machinery and Equipment, and since 2012 he has been Vice-Dean for Scientific Research and Doctoral Studies in the Faculty of Engineering. His current research fields include metal forming technologies, numerical simulations using the finite element method, and biomechanics.

Jingyan Dong

Jingyan Dong received the B.S. degree in automatic control from the University of Science and Technology of China in 1998, the M.S. degree from the Chinese Academy of Sciences, Beijing, in 2001, and the Ph.D. degree in mechanical engineering from the University of Illinois, Urbana-Champaign, in 2006. Since 2008, he has been a Faculty Member in the Department of Industrial and Systems Engineering, North Carolina State University. His current research interests include micro/nanomanufacturing, mechatronics, and flexible and stretchable devices. In the engineering education area.

Daniel Fritzen

Daniel Fritzen is a Professor at the UNISATC University Center, Criciúma, Brazil. He obtained a BSc degree in Industrial Automation Technology from UNESC in 2005. In 2012 and 2016, he received M.S. and Ph.D. degrees, respectively, in metallurgical engineering, both from the Federal University of Rio Grande do Sul (UFRGS). He is the coordinator of the Laboratory of Prototyping and New Technologies Oriented to 3D (PRONTO 3D), and his interests include the development of new processes/tools that assist in higher education, the use of Active Learning Methodologies, and the use of rapid prototyping technologies in teaching and research.

References

- Aboagye, E., J. A. Yawson, and K. N. Appiah. 2020. “COVID-19 and E-Learning: The Challenges of Students in Tertiary Institutions.” Social Education Research 2 (1): 1–8. https://doi.org/10.37256/ser.212021422

- Al-Fraihat, D., M. Joy, and J. Sinclair. 2020. “Evaluating E-Learning Systems Success: An Empirical Study.” Computers in Human Behavior 102: 67–86.

- Alexandru Ioan Cuza University of Iași. 2020. Press Review. March 18. https://www.uaic.ro/revista_presei/revista-presei-18-martie-2020/.

- Alqahtani, A. Y., and A. A. Rajkhan. 2020. “E-Learning Critical Success Factors During the Covid-19 Pandemic: A Comprehensive Analysis of E-Learning Managerial Perspectives.” Education Sciences 10 (9): 216.

- Anderson, N. 2020. “A Tuition Break, Half-Empty Campuses and Home-Testing Kits: More Top Colleges Announce Fall Plans.” https://www.washingtonpost.com/education/2020/07/06/harvard-reopen-with-fewer-than-half-undergrads-campus-because-coronavirus/.

- Asabere, N. 2012. “Towards a Perspective of Information and Communication Technology (ICT) in Education: Migrating from Electronic Learning (E-Learning) to Mobile Learning (M-Learning).” International Journal of Information and Communication Technology Research 2 (8). http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.301.3049&rep=rep1&type=pdf

- Barnett, R. 2007. “Assessment in Higher Education: An Impossible Mission?” In Rethinking Assessment in Higher Education, edited by David Boud and Nancy Falchikov, 39–50. Oxon and New York: Routledge.

- Barradale, G. 2020. “Compare How Your Uni Is Changing Exams and Assessments to Cope with Coronavirus.” https://thetab.com/uk/2020/03/30/compare-how-your-uni-is-changing-exams-and-assessments-to-cope-with-coronavirus-149959.

- Bawa, P. 2020. “Learning in the Age of SARS-CoV-2: A Quantitative Study of Learners’ Performance in the Age of Emergency Remote Teaching.” Computers and Education Open 1: 100016.

- BBC. 2020. “Coronavirus: Cambridge University Urges Students to ‘Return Home’.” https://www.bbc.co.uk/news/uk-england-cambridgeshire-51950678

- Behera, A. 2019. Effectiveness and Appreciation of M-Learning and Blended Learning Tools in Engineering Education. Research Report: Queen's University Belfast.

- Behera, A. K., R. A. de Sousa, V. Oleksik, J. Dong, and D. Fritzen. 2021. “Multi-Metric Evaluation of the Effectiveness of Remote Learning in Mechanical and Industrial Engineering During the COVID-19 Pandemic: Indicators and Guidance for Future Preparedness, 2020 [Data Collection].” Colchester, Essex: UK Data Service. doi:10.5255/UKDA-SN-855089.

- Boud, D. 2007. “Reframing Assessment as if Learning Were Important.” In Rethinking Assessment in Higher Education, edited by David Boud and Nancy Falchikov, 24–36. Oxon and New York: Routledge.

- Brazilian Institute of Geography and Statistics. 2020. “Projection of the Population of Brazil and the Federation Units.” https://www.ibge.gov.br/apps/populacao/projecao/index.html.

- Bristol Live. 2020. “‘Deep Clean’ at University Halls after Student Tests Positive for Coronavirus.” https://www.bristolpost.co.uk/news/bristol-news/deep-clean-university-halls-after-3949851.

- Camilli, G. 2006. “Test Fairness.” Educational Measurement 4: 221–256.

- Cash, P., T. Stanković, and M. Štorga. 2016. Experimental Design Research. Cham: Springer International Publishing.

- Choudhury, S., and S. Pattnaik. 2020. “Emerging Themes in E-Learning: A Review from the Stakeholders’ Perspective.” Computers & Education 144: 103657.

- Condie, R., and K. Livingston. 2007. “Blending Online Learning with Traditional Approaches: Changing Practices.” British Journal of Educational Technology 38 (2): 337–348. doi:10.1111/j.1467-8535.2006.00630.x.

- Corter, J. E., J. V. Nickerson, S. K. Esche, and C. Chassapis. 2004. “Remote Versus Hands-on Labs: A Comparative Study.” Paper Presented at the 34th Annual Frontiers in Education, 2004. FIE 2004, Savannah, GA.

- Cortina, J. M. 1993. “What Is Coefficient Alpha? An Examination of Theory and Applications.” Journal of Applied Psychology 78 (1): 98.

- Costello, A. B., and J. Osborne. 2005. “Best Practices in Exploratory Factor Analysis: Four Recommendations for Getting the Most from Your Analysis.” Practical Assessment, Research, and Evaluation 10 (1): 7.

- Daniel, S. J. 2020. “Education and the COVID-19 Pandemic.” Prospects 49: 91–96.

- DeCoster, J. 1998. “Overview of Factor Analysis.” http://www.stat-help.com/factor.pdf.

- DeLone, W. H., and E. R. McLean. 1992. “Information Systems Success: The Quest for the Dependent Variable.” Information Systems Research 3 (1): 60–95.

- DeLone, W. H., and E. R. McLean. 2003. “The DeLone and McLean Model of Information Systems Success: A Ten-Year Update.” Journal of Management Information Systems 19 (4): 9–30.

- Dervan, P. 2014. “Increasing In-Class Student Engagement Using Socrative (an Online Student Response System).” AISHE-J: The All Ireland Journal of Teaching and Learning in Higher Education 6: 3.

- DGES Higher Education General Office. 2020. “Skills for Post-COVID – Skills for the Future.” https://www.dges.gov.pt/pt/content/skills-4-pos-covid-competencias-para-o-futuro.

- Diep, N. A., C. Cocquyt, C. Zhu, and T. Vanwing. 2016. “Predicting Adult Learners’ Online Participation: Effects of Altruism, Performance Expectancy, and Social Capital.” Computers & Education 101: 84–101.

- Egede, L. E., and C. Ellis. 2010. “Development and Psychometric Properties of the 12-Item Diabetes Fatalism Scale.” Journal of General Internal Medicine 25 (1): 61–66.

- Ertugrul, N. 1998. “New Era in Engineering Experiments: An Integrated and Interactive Teaching/Learning Approach, and Real-Time Visualisations.” International Journal of Engineering Education 14 (5): 344–355.

- Feisel, L. D., and A. J. Rosa. 2005. “The Role of the Laboratory in Undergraduate Engineering Education.” Journal of Engineering Education 94 (1): 121–130.

- Fernandes, F. A., N. Fuchter Júnior, A. Daleffe, D. Fritzen, and R. J. Alves de Sousa. 2020. “Integrating CAD/CAE/CAM in Engineering Curricula: A Project-Based Learning Approach.” Education Sciences 10 (5): 125.

- Garrison, D. R., T. Anderson, and W. Archer. 2001. “Critical Thinking, Cognitive Presence, and Computer Conferencing in Distance Education.” American Journal of Distance Education 15 (1): 7–23.

- Garrison, D. R., and H. Kanuka. 2004. “Blended Learning: Uncovering Its Transformative Potential in Higher Education.” The Internet and Higher Education 7 (2): 95–105.

- Gonzales, A. L., J. McCrory Calarco, and T. Lynch. 2018. “Technology Problems and Student Achievement Gaps: A Validation and Extension of the Technology Maintenance Construct.” Communication Research. doi:10.1177/0093650218796366.

- Graham, C. R. 2006. “Blended Learning Systems.” In The Handbook of Blended Learning: Global Perspectives, Local Designs, edited by Curtis J. Bonk and Charles R. Graham, 3–21. San Francisco: Pfeiffer.

- Haag, J. 2011. “From eLearning to mLearning: The Effectiveness of Mobile Course Delivery.” Paper Presented at the Interservice/Industry Training, Simulation & Education Conference (I/ITSEC), Orlando, FL.

- Hawkes, M. 2001. “Variables of Interest in Exploring the Reflective Outcomes of Network-Based Communication.” Journal of Research on Computing in Education 33 (3): 299–315.

- Hay, P. J., C. Engstrom, A. Green, P. Friis, S. Dickens, and D. Macdonald. 2013. “Promoting Assessment Efficacy Through an Integrated System for Online Clinical Assessment of Practical Skills.” Assessment & Evaluation in Higher Education 38 (5): 520–535.

- Hazari, Z., G. Sonnert, P. M. Sadler, and M. C. Shanahan. 2010. “Connecting High School Physics Experiences, Outcome Expectations, Physics Identity, and Physics Career Choice: A Gender Study.” Journal of Research in Science Teaching 47 (8): 978–1003.

- Hyder, A. C., J. L. Thames, and D. Schaefer. 2009. “Enhancing Mechanical Engineering Distance Education Through IT-Enabled Remote Laboratories.” Paper Presented at the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, San Diego, California, USA.

- INEP. 2020. “ENEM Exam Dates.” https://enem.inep.gov.br/.

- Instituto Superior Técnico. 2020. “Coronavirus (COVID-19).” http://tecnico.ulisboa.pt/coronavirus/.

- Ivanova, G., A. Ivanov, and M. Radkov. 2019. “3D Virtual Learning and Measuring Environment for Mechanical Engineering Education.” Paper Presented at the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO).

- Kane, M. T. 2001. “Current Concerns in Validity Theory.” Journal of Educational Measurement 38 (4): 319–342.

- Keegan, D. 2002. The Future of Learning: From eLearning to mLearning.

- Kikuchi, T., T. Kenjo, and S. Fukuda. 2001. “Remote Laboratory for a Brushless DC Motor.” IEEE Transactions on Education 44 (2): 12.

- Legewie, J., and T. A. DiPrete. 2014. “The High School Environment and the Gender Gap in Science and Engineering.” Sociology of Education 87 (4): 259–280.

- Leung, H. K. 2003. “Evaluating the Effectiveness of E-Learning.” Computer Science Education 13 (2): 123–136.

- TecQuipment Ltd. 2021. “V-DAS E-Lab Demonstrator.” https://www.tecquipment.com/vdas/vdas-e-lab.

- Mathrani, S., A. Mathrani, and M. Khatun. 2020. “Exogenous and Endogenous Knowledge Structures in Dual-Mode Course Deliveries.” Computers and Education Open 1: 100018.

- McCaig, C. 2011. “Trajectories of Higher Education System Differentiation: Structural Policy-Making and the Impact of Tuition Fees in England and Australia.” Journal of Education and Work 24 (1–2): 7–25. doi:10.1080/13639080.2010.534772.

- McLoughlin, C., and J. Luca. 2002. “A Learner-Centred Approach to Developing Team Skills Through Web-Based Learning and Assessment.” British Journal of Educational Technology 33 (5): 571–582.

- Nadler, J. T., R. Weston, and E. C. Voyles. 2015. “Stuck in the Middle: The Use and Interpretation of Mid-Points in Items on Questionnaires.” The Journal of General Psychology 142 (2): 71–89.

- National Institute of Statistics. 2020a. Resident Population on January 1, 2020 Decreased by 96.5 Thousand People. https://insse.ro/cms/sites/default/files/com_presa/com_pdf/poprez_ian2020r.pdf.

- National Institute of Statistics. 2020b. Special Highlight Statistics Portugal COVID-19. https://www.ine.pt/xportal/xmain?xpid=INE&xpgid=ine_main&xlang=en.

- New University of Lisbon. 2020. Information on COVID-19. https://www.unl.pt/en/news/general/march-10-contingency-measures-covid-19.

- Nixon, K., and B. Dwolatzky. 2002. “Computer Laboratory Infrastructure in Engineering Education – a Case Study at Wits.” Paper Presented at the IEEE AFRICON. 6th AFRICON Conference in Africa, George, South Africa.

- Northcote, M. 2003. “Online Assessment in Higher Education: The Influence of Pedagogy on the Construction of Students’ Epistemologies.” Issues in Educational Research 13 (1): 66–84.

- Nowak, K. L., J. Watt, J. B. Walther, C. Pascal, S. Hill, and M. Lynch. 2004. “Contrasting Time Mode and Sensory Modality in the Performance of Computer Mediated Groups Using Asynchronous Videoconferencing.” Paper Presented at Proceedings of the 37th Annual Hawaii International Conference on System Sciences, 2004.

- Office for National Statistics. 2019. “Population Estimates for the UK, England and Wales, Scotland and Northern Ireland: Mid-2019.” https://www.ons.gov.uk/peoplepopulationandcommunity/populationandmigration/populationestimates/bulletins/annualmidyearpopulationestimates/mid2019estimates.

- Osborne, J. W. 2007. Best Practices in Quantitative Methods. Los Angeles, CA: Sage.

- Ott, R. L., and M. T. Longnecker. 2015. An Introduction to Statistical Methods and Data Analysis. Boston, MA: Cengage Learning.

- Oxford University. 2020. “Coronavirus (COVID-19): Advice and Updates.” https://www.ox.ac.uk/coronavirus/advice?wssl=1#content-tab--7.

- Ozkan, S., and R. Koseler. 2009. “Multi-Dimensional Students’ Evaluation of E-Learning Systems in the Higher Education Context: An Empirical Investigation.” Computers & Education 53 (4): 1285–1296.