ABSTRACT

This study explored the way in which detailed data about how time is spent on classroom activities, generated by the FILL+ tool (Framework for Interactive Learning in Lectures), can stimulate professional conversations about teaching practices and aid reflection for STEMM lecturers. The lecturers felt that personalised data provided an unbiased view of the lecture, overcoming the difficulty of relying on their memory alone. They indicated that this approach would help them to reflect on their teaching, particularly when the data was surprising, and many felt that it would encourage them to make changes to their teaching practice.

Introduction

Reflection is widely touted as being an important part of teaching practice (Beauchamp, 2015) and is seen as particularly vital if the demand for high quality teaching in Higher Education is to be met (Mälkki & Lindblom-Ylänne, 2012). Reflective practice is often described as a prerequisite for high quality teaching (Bell, 2001; Kreber & Castleden, 2009) and is associated with more experienced teachers (Kane et al., 2004).

Despite the widespread use of reflective practice in academic development programmes, there have been criticisms raised in the literature. For example, there is a lack of clarity surrounding what exactly is meant by reflection (Moon, 2000) and Cornford (2002, p. 226) concludes that the concept of reflection is ‘too general and vague, for effective, real world application’. It is therefore not surprising to see evidence that teachers overestimate how much actual reflection they are doing (Kreber & Castleden, 2009). Even when reflection does take place, researchers have raised concerns that it may not lead to action that will be of benefit to students’ learning (Akbari, 2007; Fendler, 2003; Mälkki & Lindblom-Ylänne, 2012). In Science, Technology, Engineering, Mathematics and Medicine (STEMM) fields, reflection is particularly problematic as STEMM graduates often have less training in this type of thinking, potentially driving educators to consider reflection as a tick-box exercise (MacKay et al., in press).

In order to improve reflective practice, Walsh and Mann (2015, p. 10) have called for an approach that is more ‘data-led, collaborative and dialogic’. They argue that not only is it easier to reflect when in possession of some kind of evidence, but that ‘teachers are more engaged when they use data from their own context and experience’ (Walsh & Mann, 2015, p. 4). Furthermore, traditional approaches to reflective practice rely either on teachers’ recollection about what has taken place, or on reports from others (e.g. students), both of which are notoriously unreliable.

In this paper we address this issue by providing teachers with accurate and detailed data about their teaching practices. We used the Framework for Interactive Learning in Lectures, FILL+ (Kinnear et al., 2021; Wood et al., 2016) to generate objective data about the time spent on different classroom activities. Data is presented in the form of three graphs. We propose that such concrete data can provide a vehicle for discussions about teaching, thus enabling collaborative, dialogic conversations. This study aligns with other work such as Reisner et al. (2020) who created a guide to help lecturers interpret data from a different observation protocol (The Classroom Observation Protocol for Undergraduate STEM). It extends this work by enabling not only a data-informed approach, but also a dialogic exercise through introducing collegial conversation into the process. We expect that our approach may be particularly useful for practitioners of STEMM teaching, as there is evidence that STEMM faculty may not be comfortable with theoretical approaches to academic development (Chadha, 2021), particularly where it takes a realist perspective which may be at odds with their positivist scientific epistemologies (Staller, 2013).

Walsh and Mann (2015) also highlight that while details about reflective practices tend to be sparse in the literature they commonly involve writing tasks such as reflective journals. As such, reflection is often seen as an activity that is undertaken by individuals rather than in collaboration with others. However, there has been growing interest in the role of conversations about teaching to aid reflection as evidenced by the recent special issue of this journal (Pleschová et al., 2021). Such ‘significant conversations’ (Roxå & Mårtensson, 2009) between teachers and/or academic developers can help teachers to reflect on their identity as teachers, on their teaching practices and ultimately support them to become more effective teachers (Thomson, 2015).

In order to generate a dialogic approach we follow the approach of Mooney and Miller-Young (2021), in the use of ‘educational development interviews’, guided conversations about teaching approaches in a safe environment with a non-disciplinary colleague which provide the opportunity to talk about teaching and to reflect on classroom practices. In this way the interviews become a ‘site of interpretive practice’ (Holstein & Gubrium, 2011) where knowledge is co-constructed between teacher and interviewer. As suggested by Mooney and Miller-Young (2021), such interviews have a dual purpose, acting as both the opportunity for reflective discussion with teachers while also being a semi-structured research interview through which qualitative research data are collected. In this study, interviews were conducted with seventeen participants from across STEMM disciplines guided by the following research questions:

In what ways is it beneficial (if at all) for teachers to see graphical representations of their classroom practices data?

In what ways does discussing the data help teachers to reflect on their teaching practices and to develop a reflective practice?

Which forms of data do teachers find the most useful?

Reflective practice

Much of the literature on reflection builds on the seminal work of Dewey (1938) who saw the act of reflection as central to human learning. At a similar time, Kolb (1984) also developed ideas about reflection, but where Kolb was focussed on experience as being the starting point for reflection, Dewey (1989) believed that for reflection to be triggered a person must be perplexed, experience a discrepancy, or notice a disturbance.

More recent interest in reflection for teaching stems from the work of Schön (1979) who highlighted how reflection can help professionals learn about and improve their practices. Schön proposed two types of cognitive behaviours: reflection-in-action and reflection-on-action. The first refers to the change in thinking that takes place during an event (e.g. while giving a lecture) and the second is reflection that takes place after the event and is more purposeful. Russell (2005, p. 2), reflecting on Schön’s ideas, noted that it is likely to be a ‘puzzling or surprising event during teaching’ that causes teachers to think differently about what they were doing, and subsequently change to a different approach. Similar to Dewey’s (1989) approach, this highlights the idea that reflection is triggered by something unusual or unexpected.

Much of the early discussion has highlighted the importance of reflection as a ‘vehicle for turning experience into learning’ (McAlpine & Weston, 2002, p. 63). More recently it has been recognised that learning in itself is not sufficient and that the ultimate aim must be for reflection and learning to be enacted. In other words, the reflection should lead to meaningful change. Nilsen et al. (2012, p. 404) describe reflection as ‘a mechanism … to draw conclusions to enable better choices or responses in the future’. For Finlay (2002, p. 209) these changes only happen when reflection becomes reflexivity, which they describe as ‘explicit self-aware meta-analysis’. Only through this intentional thinking can there be changes in ‘practice, expectations, and beliefs’ (Mooney & Miller-Young, 2021, p. 2).

Although reflective practice is often considered primarily an individual process (Finlayson, 2015), there is a growing move towards developing reflective practices which involve dialogic and collaborative discussions (Thomson, 2015; Walsh & Mann, 2015). Dialogue has long been considered an effective way to catalyse deeper critical understanding of actions, beliefs, and knowledge (Bray et al., 2000). Peer observation activities are one way to support reflective practice that includes dialogic processes. One approach, known as ‘stimulated recall’ (Lyle, 2003), involves watching a video of a teaching event followed by a discussion with a critical friend (Tripp & Rich, 2012; Wright, 2008). Wright (2008) found that using videos in this way led to reflection that was meaningful and overcame long lasting barriers such as lack of time and support. Bell (2001) proposed a more structured and supported peer observation programme comprising written feedback, reflection and discussion, which helped academic staff to improve their teaching practice and feel more confident. Similarly, O’Keeffe et al. (in press) explored cross-institutional peer observation of teaching as a way to create safe and supportive professional conversations.

While it has been shown that academics typically engage in professional conversations within small groups of trusted colleagues (Roxå & Mårtensson, 2009), such significant conversations can also take place with someone outside the discipline. Mooney and Miller-Young (2021, p. 4) have proposed the ‘educational development interview’ which they describe as a conversation ‘where reflexive practice is intentionally prompted through guiding and probing questions and in conversations that elicit the interviewee’s beliefs, blind spots, and stories of experience in teaching and curricular practice’. These interviews are facilitated between a teacher and someone who is not familiar with the discipline or teaching practice of the participant, thus giving important space and neutrality to the conversation. Taking together the value of dialogic processes in teachers’ professional learning and peer observation, this study introduces a reflective interview to expand on the FILL+ tool. It does so in order to examine the use of the tool through conversation to aid reflection on teaching practice.

Methodology

Classroom practices analysis

In 2016 the first author developed the Framework for Interactive Learning in Lectures (FILL), a peer observation tool which is used to accurately characterise the activities that take place in lectures (Wood et al., 2016). The analysis focused on what both the lecturer and the students are actually (assumed to be) doing during the lecture, for example the lecturer talking, students asking and answering questions and students thinking about problems. In addition to the primary coding, each activity was assigned one of three levels of interactivity: interactive (e.g. students discussing in small groups), non-interactive (e.g. lecturer talking) and vicarious interactive (e.g. questions to and from individual students). This final category describes activities where there is some interaction between students and the lecturer, such as questions to and from the lecturer, which feel substantially different to listening to a monologue, but as most students are not themselves engaged in the interaction it does not fall into the fully interactive category. More recently the FILL+ framework was developed by Kinnear et al. (2021) as an extension of the FILL framework. The FILL+ protocol was used on recorded lectures and demonstrated that minimal training of coders was required to produce reproducible characterisations of lecture behaviour. The codes used for FILL+ analysis, adapted from Smith et al. (2020), are shown in Table 1.

Table 1. Overview of all FILL+ codes.

Data presentation

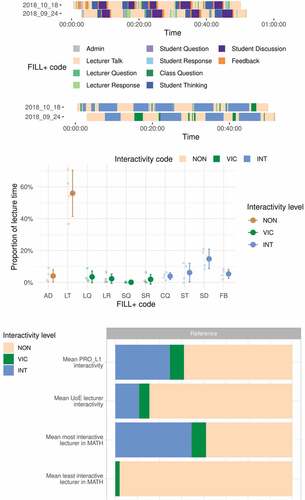

Three types of graphs (see Figure 1) generated from FILL+ data were developed to illustrate the classroom practices of each lecturer: 1) Timelines for each lecture, 2) Activity as a Proportion of Lecture Time Graph and 3) Intra-subject Comparisons allowing the participant to contextualise their lecture behaviour with others in the same field.

Figure 1. The three graphs created from FILL+ data. In all graphs colours denote type of interactivity: non-interactive (pink), vicarious interactive (green) and interactive (blue). 1) Timelines of classroom activities a) with all 10 codes and b) simplified version, 2) Proportion of the lecture spent on each of the different activities showing each lecture (small dots), mean and standard error (larger dots and bars), 3) Comparison graphs showing the average time spent in each of the three interactivity levels across all lectures from (a) the participant, (b) our entire sample of all STEMM subjects, (c) the most interactive lecturer from the participant’s subject area, (d) the least interactive lecturer from the participant’s subject area.

The timelines show a temporal representation of activities as they take place during the lecture. Two different versions were developed for this project. The first uses the full data set of 10 codes while the second is a simplified version which groups the codes into one of three categories (interactive, vicarious interactive and non-interactive), which we felt would be easier to make sense of during the interview. The proportionality graph shows the proportion of the lecture spent on each of the different activities. Small dots represent each lecture while the larger dots and bars show the mean and standard error.
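As a rough illustration of how the simplified timeline relates to the proportionality graph, the following Python sketch turns coded segments into per-lecture proportions and then into a mean and standard error across lectures. The segment format `(start_seconds, end_seconds, level)`, the function names and the two example lectures are assumptions made for this sketch; they are not the FILL+ data format or the authors' analysis code.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev

# The three interactivity levels named in the text.
LEVELS = ("non-interactive", "vicarious interactive", "interactive")


def level_proportions(timeline):
    """Return the fraction of coded lecture time spent at each level.

    `timeline` is a list of (start_seconds, end_seconds, level) segments
    (a hypothetical format chosen for this sketch).
    """
    totals = defaultdict(float)
    for start, end, level in timeline:
        totals[level] += end - start
    lecture_length = sum(totals.values())
    return {level: totals[level] / lecture_length for level in LEVELS}


def mean_and_standard_error(values):
    """Mean and standard error of the mean (sample SD divided by sqrt(n))."""
    n = len(values)
    se = stdev(values) / sqrt(n) if n > 1 else 0.0
    return mean(values), se


# Two made-up 50-minute lectures, for illustration only.
lecture_a = [
    (0, 1500, "non-interactive"),
    (1500, 1800, "vicarious interactive"),
    (1800, 3000, "interactive"),
]
lecture_b = [
    (0, 2400, "non-interactive"),
    (2400, 3000, "interactive"),
]

# One dictionary per lecture corresponds to the small dots on the graph.
per_lecture = [level_proportions(t) for t in (lecture_a, lecture_b)]

# Mean and standard error per level correspond to the larger dots and bars.
summary = {
    level: mean_and_standard_error([p[level] for p in per_lecture])
    for level in LEVELS
}
```

The same calculation could be applied to the full set of 10 codes by replacing the level labels with code labels.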

The comparison graphs show the average time spent in each of the three interactivity levels across all lectures from (a) the participant, (b) our entire sample of all STEMM subjects, (c) the most interactive lecturer from the participant’s subject area, (d) the least interactive lecturer from the participant’s subject area. This enables participants to compare their level of interactivity with that of their colleagues.
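The four rows of a comparison graph could be assembled along the following lines. This is a minimal Python sketch under stated assumptions: the data layout, the function names and the example values are hypothetical, the whole-sample row is assumed to average over all lectures, and the 'most' and 'least' interactive lecturers are assumed to be ranked by their mean share of interactive time. The source does not state how these were actually determined.

```python
from statistics import mean

LEVELS = ("non-interactive", "vicarious interactive", "interactive")


def average_profile(lectures):
    """Average the per-lecture proportions at each interactivity level."""
    return {level: mean(lec[level] for lec in lectures) for level in LEVELS}


def comparison_rows(data, participant):
    """Build rows (a) to (d) of a comparison graph for one participant.

    `data` maps a lecturer ID to {"subject": str, "lectures": [dict, ...]},
    where each lecture dict holds a proportion per interactivity level.
    """
    profiles = {lid: average_profile(rec["lectures"]) for lid, rec in data.items()}
    subject = data[participant]["subject"]
    peers = [lid for lid, rec in data.items() if rec["subject"] == subject]
    # Assumption: rank lecturers by their mean share of interactive time.
    most = max(peers, key=lambda lid: profiles[lid]["interactive"])
    least = min(peers, key=lambda lid: profiles[lid]["interactive"])
    # Assumption: the whole-sample row averages over every lecture.
    all_lectures = [lec for rec in data.values() for lec in rec["lectures"]]
    return {
        "participant": profiles[participant],
        "whole sample": average_profile(all_lectures),
        "most interactive in subject": profiles[most],
        "least interactive in subject": profiles[least],
    }


def prop(non, vicarious, interactive):
    """Helper to build one lecture's proportions (illustrative only)."""
    return dict(zip(LEVELS, (non, vicarious, interactive)))


# Made-up lecturers and proportions, for illustration only.
data = {
    "A": {"subject": "Physics", "lectures": [prop(0.5, 0.1, 0.4), prop(0.7, 0.1, 0.2)]},
    "B": {"subject": "Physics", "lectures": [prop(0.9, 0.1, 0.0)]},
    "C": {"subject": "Biology", "lectures": [prop(0.6, 0.2, 0.2)]},
}
rows = comparison_rows(data, "B")
```

In this sketch a participant can appear as their own subject's most or least interactive lecturer, as lecturer B does here.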

Data collection

From a previous project we had access to FILL+ data from 247 lectures from 45 lecturers (Kinnear et al., 2021). Seventeen participants for the current project were recruited from that cohort with the aim of achieving participants from across the STEMM disciplines: Mathematics (8), Physics (3), Veterinary Medicine (3), Biology (2), and Chemistry (1). The teaching approaches varied from those whose lectures took a primarily didactic approach to those who spent around 50% of the lecture time on active learning strategies such as Peer Instruction (Mazur, 1999).

Approach to interviews

Interviews lasting approximately 50 minutes were carried out by the first author using MS Teams and were in two parts. In the first half, participants were asked about their approaches to teaching, course design and reflective practices. In the second half, which formed the ‘educational development interview’ section, participants were presented with their personal FILL+ graphs as described above and asked about their reaction to the data, whether they felt it was useful, and in what ways, if at all, it would help them to reflect on their teaching. Central to our approach was that the analysis and conversations were non-judgemental, particularly in relation to the teaching approach used and that negative views were welcome.

Results

We present the results in three sections which reflect our research questions: 1) General responses to the data, 2) Aiding reflective practice, and 3) Data preferences.

General responses to the data

Valuing the data

All but two of the participants felt that there was intrinsic value in seeing the data and almost all the participants said that they would recommend having their data analysed in this way. For example L7 said that the data ‘make[s] you more aware about when you’re doing things’ and L15 commented that ‘it gives a picture of how the lecture goes’. L14 agreed, pointing out that the value in the data was that it was unbiased and gave ‘rather fine grained information’ which helped them to be aware of activities that were happening in their lecture.

Accurate data

Participants valued the detailed and accurate nature of the data. They commented that it was very hard to judge what had taken place in the lecture when giving the lecture yourself. For example, L17 said that there was no time ‘to think about it as you’re doing it’ because they needed to concentrate on what they were saying on the next slide. This was particularly true when student-centred activities were taking place. For example L14 observed ‘time goes on in your own mind a lot longer than it maybe really does’, and similarly L6 commented that when ‘you ask questions and you pause for a moment, it looks and feels like an eternity’.

Reassurance

The overview of the data provided reassurance for participants that they were broadly achieving the type of teaching that they aimed to provide. L3 commented that ‘I can recognise from what I have in my head on the graph so that’s reassuring’. Similarly L9 (who used Peer Instruction in their lectures) agreed that it ‘provide[d] some evidence that we are actually implementing what we say we implement’.

Comparing to others

Participants noted that the data made them aware of different ways of approaching teaching that they had not previously considered. L16 commented ‘It’s more helpful to see how that compares to people that are in a similar situation to yourself’.

Others felt the data was useful because there were not many opportunities for finding out how colleagues taught: ‘it’s useful … you can compare to what other people are doing cause we don’t really see [that] much’. [L13]

Negative responses to the data

Not everyone found the data or the discussion about teaching helpful. L8 commented that they took part ‘just for people like you to amuse yourself’ and went on to say ‘I didn’t really think of it as a terribly useful exercise’. L16 felt that the data was not surprising and therefore not useful: ‘it tells me something I already know’. It is worth noting that those who were more negative about the process tended to have lower levels of interactivity – both L8 and L16 for example spent over 90% of the lecture talking.

However, a more common response was for participants to feel that the data was interesting but that they either would not or could not make changes to their teaching. For example, L2 felt that the data was ‘thought provoking’ but that they would not change their teaching approach. Others noted a number of barriers to change, for example that the type of content in the lecture course constrained their teaching, or that the demands of a large volume of content gave no space for more interactive teaching even when that would have been their preferred approach.

Aiding reflective practice

While most of the participants found it was useful to see their data, we also found evidence that it helped participants to be more reflective practitioners. For example L7 said ‘it makes you reflect a little bit about what you do and how you do it’, and L14 felt that seeing the data ‘is the first step towards improving the delivery’. Practical steps suggested by participants included reviewing their lecture notes: ‘I’d go back and look at, I have these one pager A4 lesson plans for my lectures’ [L1].

Indeed some participants even did so during the interview as the data had piqued their curiosity. This particularly happened when the data was surprising in some way and different to what they had expected.

Change to teaching

Another common practical step that participants discussed was increasing the amount of interactivity in their lectures. For example L5 commented that the data, ‘would prompt me to try and make sure I replace that with interactivity a little bit more in the lectures where there’s previously been a bit less of it’.

In these comments there was an indication that the data had triggered participants to think about how the class was experienced from the students’ perspective. For instance, L13 stated ‘I think that actually might convince me to try and do a bit more of the student thinking part’ and other participants found that the data prompted them to think about the placement of activities throughout the lecture. L11 noted that ‘it might be better to think about where does it actually fall in the time that I have in terms of breaking up activity a bit’.

Data preferences

One aim of the research was to understand which forms of data participants felt were most useful. Most participants preferred the timelines since they showed ‘not just the proportion but actually how the time is distributed’ [L11].

Some participants commented that the simplified version was ‘much more straightforward’ [L1], as it was easier to ‘remember what the three colours indicate’ [L11] but that the detailed version would be useful to take away and look at in their own time. As noted above, a few participants found it helpful to compare themselves to others; the comparison graph was therefore useful to see ‘where you stand compared to other people’ [L6].

Discussion

In combining detailed data about classroom practices with dialogue, as proposed by Walsh and Mann (2015), we have demonstrated a powerful new approach to reflective practice on teaching. Three attributes of the data contributed to its success in supporting reflection: it was personalised, objective, and numerical. Providing personalised data helped the participants to develop self-awareness of their teaching approaches, an important pre-requisite for turning reflection into reflexivity (Finlay, 2002). The timeline graphs in particular triggered a shift in the participants’ thinking from ‘teacher centred’ (focussed on what they were doing during the class) to one that was ‘student centred’ (awareness of how the students were experiencing the class).

Secondly, teachers noted that the data was objective and it gave a more accurate picture than could be obtained by relying on their own memory of events. They observed that the data showed the pattern of classroom activities that they expected which reassured them and gave them confidence that the data was trustworthy. Having trust in the data is likely to increase participants’ willingness to engage with the data more fully, which is important as reflective practice requires ‘serious, active and persistent engagement’ (Dewey, 1933, p. 1). We also observed that the numerical nature of the data was familiar to STEMM lecturers from their disciplinary practice, and therefore meant they felt comfortable engaging with the data. MacKay et al. (in press) found that veterinary educators were often ‘put off’ reflection because of the perceived subjectivity of this type of professional development activity. This may be because STEMM lecturers tend to have positivist scientific epistemologies (Staller, 2013) whereas academic development takes a realist perspective (Chadha, 2021).

Reflection was triggered when the teachers found the data surprising or unexpected, for example when they noticed that they spent a large chunk of time talking without any interactions. The importance of something causing a disruption to the normal way of thinking for initiating reflection has been theorised by Dewey (1989), who discussed the need for a doubt or puzzle, and by Schön (1979), who talks about a surprising event triggering reflection. However, reflection should be embedded into practice, not limited to a single event or discussion. We found evidence that presenting both a detailed and a simplified form of the data could help to foster long-term reflection. Participants noted that the simplified graph was helpful during the educational development interview, but the detailed version was one they could use to reflect on at a later date.

However, there is no guarantee that, even when reflection is triggered, it will lead to change (Mezirow, 1994) and even if it does, that change may not result in improved practice (Mälkki & Lindblom-Ylänne, 2012). We found evidence that our approach encouraged participants to think about how they might change their teaching in positive ways, for example, by increasing the amount of interactivity or by splitting up long periods of talking with more active approaches. Linking reflection to future action is a practice associated with teachers who successfully use reflection to change their teaching (McAlpine & Weston, 2002). We believe that the value in our approach is in the combination of the provision of personalised data about teaching practices together with the educational development interview.

Conversations about teaching have recently been shown to be an effective academic development strategy to aid reflection (Roxå & Mårtensson, 2009; Thomson, 2015; Walsh & Mann, 2015). Combined with personalised data which relates to teachers’ own experiences and contexts, talking allows teachers’ beliefs to be challenged in a supportive environment. As Mooney and Miller-Young (2021) suggest, we found that discussions about the data led participants to insights into teaching practices that they had not previously considered. These conversations may help teachers to develop ‘pedagogical discontentment’, a state of conflict when there is a mismatch between pedagogical goals and classroom practices which can lead to change (Southerland et al., 2011, p. 1).

That a minority of participants did not find the process beneficial highlights that only teachers who are willing to engage with the data are likely to gain benefits from it. For this reason we should guard against the instrumentalization of approaches like this, which could be used to evaluate or make judgements about teaching. This is particularly important as it was clear from the data and from the literature (e.g. McAlpine & Weston, 2002; Mälkki & Lindblom-Ylänne, 2012) that some teachers faced barriers to making changes to their teaching. For example, some teachers who wanted to use active learning felt that the quantity of the content or the type of subject matter restricted them to a transmission based approach. This was particularly the case in the School of Veterinary Sciences, where large volumes of content are required by professional bodies. Other lecturers worried about the time needed for more innovative teaching approaches, that it would hinder their career progression, and that they lacked the skills needed. For these reasons we believe that if we want to engender change it has to be done in a supportive and non-judgemental environment, and that using this tool to evaluate teachers will undermine its potential benefits.

The key limitation of this research is that our data is limited to teachers’ responses in a single conversation. We do not know if the reflection that was triggered was embedded into long-term practice or if any changes to teaching were made in response to the data. In future work we intend to conduct a follow-up interview to determine the long-term impact of our approach. Further limitations are the need for access to lecture captures and the time (and associated cost) required to analyse the lectures. However, our previous research found that undergraduate research assistants could carry out the analysis reliably after minimal training (Kinnear et al., 2021). Work is currently underway by the authors to explore issues around the practical implementation and sustainability of our approach in formal academic development settings.

Implications for academic practice

We propose a number of ways that our data-led and dialogic approach could be used in practice, such as for use in peer observation of teaching. Used in this way it could be beneficial to both the observer and the teacher being observed (Hendry & Oliver, 2012), particularly as some teachers intimated that they were not aware of what their colleagues were doing, or what was possible in their subject area. One tool designed explicitly for this purpose is REFLECT (Redesigning Education For Learning through Evidence and Collaborative Teaching) (Dillon et al., 2020) which encourages reflection and discussion about the dimensions that interest the teacher being observed. While this is likely to lead to deeper reflection about those dimensions it removes the opportunity for surprise from seeing data about other aspects of teaching, which we have found to be important for eliciting reflection and change. It also requires substantial time commitment, as pre-discussion, post-discussion and training in using the protocol are required. Although the data generated by our tool is more general, it is completely independent, eliminating worry about the subjectivity of peer observation (Bell, 2001). Furthermore, as data is supplied by lecture recording, there is no additional burden of being observed, normally faced in peer observation.

Our approach would also be ideally suited for use in continuous professional development courses and in PGCAP (Postgraduate Certificate in Academic Practice) courses for those new to teaching. Used in this way it could give a ‘before’ and ‘after’ comparison for those wishing to explore the impact of making changes to their teaching approach.

Conclusions

The ultimate goal of academic development is to support teachers to provide high quality learning opportunities for students. While reflection is important, reflective practice must have the potential to lead to effective change (McAlpine & Weston, 2002; Nilsen et al., 2012). In this study we explored the benefits of combining accurate and detailed data about classroom practices using a FILL+ analysis (Kinnear et al., 2021) with the opportunity to discuss the data in educational development interviews. We found that this approach can trigger reflection, particularly when the data was surprising, and, critically, had the potential to empower teachers to make changes to their teaching practice. Our study suggests that if reflective practice is to play an important role in academic development programmes it needs to be data-driven, supportive and dialogic.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Anna K. Wood

Anna Wood is an education researcher working in the School of Mathematics and has a PhD in Physics and an MSc in e-learning. Her research interests focus on the use of technology in large classes and the role of dialogue in learning.

Hazel Christie

Hazel Christie is the Head of the CPD Framework for Learning and Teaching and Programme Director for the PG Certificate in Academic Practice. She is based in the Institute for Academic Development at the University of Edinburgh.

Jill R. D. MacKay

Jill MacKay MSc, PhD, SFHEA is a Lecturer in Veterinary Education at the Royal (Dick) School of Veterinary Studies, University of Edinburgh. Specific interests include research methodology, digital education, and student experience.

George Kinnear

George Kinnear is a Lecturer in Technology Enhanced Mathematics Education in the School of Mathematics at the University of Edinburgh. He completed both his undergraduate degree in mathematics and PhD in pure mathematics at the University of Edinburgh. His research interests are in effective uses of technology to support undergraduate mathematics teaching.

References

- Akbari, R. (2007). Reflections on reflection: A critical appraisal of reflective practices in L2 teacher education. System, 35(2), 192–207. https://doi.org/10.1016/j.system.2006.12.008

- Beauchamp, C. (2015). Reflection in teacher education: Issues emerging from a review of current literature. Reflective Practice, 16(1), 123–141. https://doi.org/10.1080/14623943.2014.982525

- Bell, M. (2001). Supported reflective practice: A programme of peer observation and feedback for academic teaching development. International Journal for Academic Development, 6(1), 29–39. https://doi.org/10.1080/13601440110033643

- Bray, J. N., Lee, J., Smith, L. L., & Yorks, L. (2000). Collaborative inquiry in practice: Action, reflection, and making meaning. Sage.

- Cornford, I. R. (2002). Reflective teaching: Empirical research findings and some implications for teacher education. Journal of Vocational Education and Training, 54(2), 219–236. https://doi.org/10.1080/13636820200200196

- Chadha, D. (2021). Continual professional development for science lecturers: Using professional capital to explore lessons for academic development. Professional Development in Education. https://doi.org/10.1080/19415257.2021.1973076

- Dewey, J. (1933). How we think: A restatement of the relation of reflective thinking to the educative process. D.C. Heath.

- Dewey, J. (1938). Logic: The theory of inquiry. Holt, Rinehart and Winston.

- Dewey, J. (1989). The later works of John Dewey: 1929-1930, essays, the sources of a science of education, individualism, old and new, and construction of a criticism (J. A. Boydston, Ed.; Vol. 5). Southern Illinois University Press.

- Dillon, H., James, C., Prestholdt, T., Peterson, V., Salomone, S., & Anctil, E. (2020). Development of a formative peer observation protocol for STEM faculty reflection. Assessment & Evaluation in Higher Education, 45(3), 387–400. https://doi.org/10.1080/02602938.2019.1645091

- Fendler, L. (2003). Teacher reflection in a hall of mirrors: Historical influences and political reverberations. Educational Researcher, 32(3), 16–25. https://doi.org/10.3102/0013189X032003016

- Finlay, L. (2002). Negotiating the swamp: The opportunity and challenge of reflexivity in research practice. Qualitative Research, 2(2), 209–230. https://doi.org/10.1177/146879410200200205

- Finlayson, A. (2015). Reflective practice: Has it really changed over time? Reflective Practice, 16(6), 717–730. https://doi.org/10.1080/14623943.2015.1095723

- Hendry, G. D., & Oliver, G. R. (2012). Seeing is believing: The benefits of peer observation. Journal of University Teaching & Learning Practice, 9(1), 7. https://doi.org/10.53761/1.9.1.7

- Holstein, J. A., & Gubrium, J. F. (2011). Animating interview narratives. Qualitative Research, 3, 149–167. https://epublications.marquette.edu/socs_fac/48

- Kane, R., Sandretto, S., & Heath, C. (2004). An investigation into excellent tertiary teaching: Emphasising reflective practice. Higher Education, 47(3), 283–310. https://doi.org/10.1023/B:HIGH.0000016442.55338.24

- Kinnear, G., Smith, S., Anderson, R., et al. (2021). Developing the FILL+ tool to reliably classify classroom practices using lecture recordings. Journal for STEM Education Research, 4, 194–216. https://doi.org/10.1007/s41979-020-00047-7

- Kolb, D. (1984). Towards an applied theory of experiential learning. John Wiley & Sons.

- Kreber, C., & Castleden, H. (2009). Reflection on teaching and epistemological structure: Reflective and critically reflective processes in ‘pure/soft’ and ‘pure/hard’ fields. Higher Education, 57(4), 509–531. https://doi.org/10.1007/s10734-008-9158-9

- Lyle, J. (2003). Stimulated recall: A report on its use in naturalistic research. British Educational Research Journal, 29(6), 861–878. https://doi.org/10.1080/0141192032000137349

- MacKay, J., Salvesen, E., Rhind, S., Loads, D., & Turner, J. (in press). Development and evaluation of a faculty based accredited continued professional development route for teaching and learning. Journal of Veterinary Medical Education, e20210019. https://doi.org/10.3138/jvme-2021-0019

- Mälkki, K., & Lindblom-Ylänne, S. (2012). From reflection to action? Barriers and bridges between higher education teachers’ thoughts and actions. Studies in Higher Education, 37(1), 33–50. https://doi.org/10.1080/03075079.2010.492500

- Mann, S., & Walsh, S. (2013). RP or ‘RIP’: A critical perspective on reflective practice. Applied Linguistics Review, 4(2), 291–315. https://doi.org/10.1515/applirev-2013-0013

- Mazur, E. (1999). Peer instruction: A user’s manual. AAPT.

- McAlpine, L., & Weston, C. (2002). Reflection: Issues related to improving professors’ teaching and students’ learning. In N. Hativa & P. Goodyear (Eds.), Teacher thinking, beliefs and knowledge in higher education (pp. 59–78). Springer.

- Mezirow, J. (1994). Understanding transformative learning theory. Adult Education Quarterly, 44(4), 222–232. https://doi.org/10.1177/074171369404400403

- Moon, J. A. (2000). Reflection in learning and educational development. Kogan Page.

- Mooney, J. A., & Miller-Young, J. (2021). The educational development interview: A guided conversation supporting professional learning about teaching practice in higher education. International Journal for Academic Development, 26(3), 224–236. https://doi.org/10.1080/1360144X.2021.1934687

- Nilsen, P., Nordström, G., & Ellström, P.-E. (2012). Integrating research-based and practice-based knowledge through workplace reflection. Journal of Workplace Learning, 24(6), 403–415. https://doi.org/10.1108/13665621211250306

- O’Keeffe, M., Crehan, M., Munro, M., Logan, A., Farrell, A. M., Clarke, E., Flood, M., Ward, M., Andreeva, T., Van Egeraat, C., Heaney, F., Curran, D., & Clinton, E. (2021). Exploring the role of peer observation of teaching in facilitating cross-institutional professional conversations about teaching and learning. International Journal for Academic Development, 26(3), 266–278. https://doi.org/10.1080/1360144X.2021.1954524

- Pleschová, G., Roxå, T., Thomson, K. E., & Felten, P. (2021). Conversations that make meaningful change in teaching, teachers, and academic development. International Journal for Academic Development, 26(3), 201–209. https://doi.org/10.1080/1360144X.2021.1958446

- Reisner, B. A., Pate, C. L., Kinkaid, M. M., Paunovic, D. M., Pratt, J. M., Stewart, J. L., Raker, J. R., Bentley, A. K., Lin, S., & Smith, S. R. (2020). I’ve been given COPUS (Classroom Observation Protocol for Undergraduate STEM) data on my chemistry class … now what? Journal of Chemical Education, 97(4), 1181–1189. https://doi.org/10.1021/acs.jchemed.9b01066

- Roxå, T., & Mårtensson, K. (2009). Significant conversations and significant networks – exploring the backstage of the teaching arena. Studies in Higher Education, 34(5), 547–559. https://doi.org/10.1080/03075070802597200

- Russell, T. (2005). Can reflective practice be taught? Reflective Practice, 6(2), 199–204. https://doi.org/10.1080/14623940500105833

- Schon, D. A. (1979). The reflective practitioner. Temple Smith.

- Smith, S., Anderson, R., Grant, T., & George, K. (2020). FILL+ training manual. OSF Pre-Print. https://doi.org/10.17605/OSF.IO/27863

- Southerland, S. A., Sowell, S., Blanchard, M., & Granger, E. M. (2011). Exploring the construct of pedagogical discontentment: A tool to understand science teachers’ openness to reform. Research in Science Education, 41(3), 299–317. https://doi.org/10.1007/s11165-010-9166-5

- Staller, K. M. (2013). Epistemological boot camp: The politics of science and what every qualitative researcher needs to know to survive in the academy. Qualitative Social Work, 12(4), 395–413. https://doi.org/10.1080/03075079.2014.950954

- Thomson, K. (2015). Informal conversations about teaching and their relationship to a formal development program: Learning opportunities for novice and mid-career academics. International Journal for Academic Development, 20(2), 137–149. https://doi.org/10.1080/1360144X.2015.1028066

- Tripp, T., & Rich, P. (2012). Using video to analyze one’s own teaching. British Journal of Educational Technology, 43(4), 678–704. https://doi.org/10.1111/j.1467-8535.2011.01234.x

- Walsh, S., & Mann, S. (2015). Doing reflective practice: A data-led way forward. ELT Journal, 69(4), 351–362. https://doi.org/10.1093/elt/ccv018

- Wood, A. K., Galloway, R. K., Donnelly, R., & Hardy, J. (2016). Characterizing interactive engagement activities in a flipped introductory physics class. Physical Review Physics Education Research, 12(1), 010140. https://doi.org/10.1103/PhysRevPhysEducRes.12.010140

- Wright, G. A. (2008). How does video analysis impact teacher reflection-for-action? Brigham Young University.