ABSTRACT

The extraction of urban objects and the analysis of two- and three-dimensional (2D and 3D) morphological parameters and landscape metrics in urban environments are valuable for updating GIS databases and for civic applications, urban planning, disaster risk assessment, and climate and sustainability studies. However, few studies have reported the extraction of 3D buildings at different scales in urban areas or developed methods to evaluate the accuracy of such extraction. This study develops a method of multi-scale 3D building information extraction (MS3DB) to fill this research gap. Surface flatness and the variance of the normal direction were extracted from the point cloud data, and gray-level co-occurrence matrix (GLCM) homogeneity was extracted from the normalized digital surface model; these labeling features were fused in a graph-cut algorithm to label buildings. In addition, 2D and 3D building morphological parameters were extracted at the grid scale, and a set of 3D building landscape metrics was designed at the city-block scale. The accuracy of the extracted 3D building information was evaluated at the object, grid, and block scales. Using data from the northern part of Brooklyn, New York, USA, the model labeled buildings with high accuracy. The results show that the MS3DB method extracts building edges with limited accuracy, whereas other parameters (e.g., area, volume, and planar area index) were extracted with high accuracy at the grid scale (R2 > 0.92). The block-scale landscape analysis shows the advantages of integrating 2D and 3D features (e.g., differences in the vertical landscape) for characterizing the structure of urban buildings and achieves moderate accuracy (R2 > 0.79).

1 Introduction

Automatic extraction of urban buildings from imagery can provide basic information for urban planning (Tomljenovic et al. Citation2015; Deng et al. Citation2019), disaster risk assessment (Duarte et al. Citation2018), and Geographical Information System (GIS) database updates (Griffiths and Jan 2019; Cao et al. Citation2020). In addition, two- and three-dimensional (2D and 3D) building morphological parameters (e.g., planar area index and frontal area index) and 2D and 3D building landscape patterns (e.g., building area coverage and building height) significantly affect the local climate of urban areas (Adolphe Citation2001; Kanda, Kawai, and Nakagawa Citation2005a; Hung, James, and Hodgson Citation2018) and energy consumption trends (Yu et al. Citation2010; Yang et al. Citation2015). Because urban building analysis is conducted at different spatial scales, urban managers, planners, and urban data users need building information at each of these scales. Therefore, extracting building objects, 2D/3D morphological parameters, and landscape metrics at different spatial scales is of great value.

With the development of remote sensing technology, the automatic extraction of 3D building information using new sensors and advanced image processing techniques has become an important research topic (Rottensteiner et al. Citation2014). Acquiring full 3D building information requires both object heights and 2D labels. Height information is commonly extracted from two types of data sources: Light Detection and Ranging (LiDAR) data (Murakami et al. Citation1999; Pang et al. Citation2014; Hao et al. Citation2015) and very-high-resolution (VHR) stereo-paired images (Bouziani, Goïta, and He Citation2010; Tian et al. Citation2013; Tian, Cui, and Reinartz Citation2014; Qin, Tian, and Reinartz Citation2016). In urban areas, height information extracted from VHR stereo-paired images is often affected by differences in illumination, perspective variations, and increased spectral ambiguity (Baltsavias Citation1999). LiDAR remote sensing offers an alternative for detecting 3D building information in urban environments, because it measures the heights of ground objects accurately, reliably, and quickly.

In building extraction, building height data can improve accuracy because traditional 2D image classification methods may produce significant errors due to shadows and occlusions, especially in densely developed areas (Awrangjeb and Fraser Citation2014; Qin, Tian, and Reinartz Citation2016). Research on building labeling using height data can be divided into two categories according to the data source: 1) studies that use fused 2D-3D data; and 2) those that use only 3D data. In the first category, combining 2D and 3D information from images and LiDAR data, such as spectral, texture, and geometric information and indices (e.g., the normalized difference vegetation index and normalized difference water index), can improve automatic building extraction and the accuracy of building footprints (Gerke and Xiao Citation2014; Zarea and Mohammadzadeh Citation2016). However, fusing 2D and 3D information may introduce errors into building labeling (Liu et al. Citation2013). First, registration is a prerequisite for fusing LiDAR data and images, but automatic registration is difficult because the two data types differ in their features, i.e., spatial resolution and the information detected. The advantages of fusion can be fully exploited only when the registration error between the data sources is less than one pixel (Parmehr et al. Citation2014). Second, the spatial resolution of LiDAR data varies; thus, small structures (e.g., chimneys and gutters) that can be recognized in very high spatial resolution images cannot be captured in LiDAR data (Awrangjeb and Fraser Citation2014). Third, it is difficult to obtain LiDAR data and images of the same region at the same time. Therefore, building labeling from a single LiDAR data source is attractive in practical applications.

Methods for classifying urban buildings can be divided into two categories: supervised and unsupervised. Supervised classification relies on training samples and classification or machine learning algorithms. Zhou and Neumann (Citation2008) used different geometric features and an unbalanced support vector machine to classify and identify buildings, and showed that their algorithm extracted roof boundaries and generated building footprints efficiently and accurately. Niemeyer, Rottensteiner, and Soergel (Citation2014) combined a random forest classifier with a conditional random field framework to label buildings in LiDAR point clouds; they found that main buildings (larger than 50 m2) could be detected reliably, with a correctness of more than 96% and a completeness of 100%. Unsupervised classification methods segment the LiDAR data without requiring training samples. Meng, Wang, and Currit (Citation2009) removed non-building pixels using size, shape, height, building structure, and the height difference between the first and last returns of laser scanning, and showed that a single LiDAR data source can achieve an overall accuracy of 95.46%. Du et al. (Citation2017) used multiple features, such as flatness, variance of the normal direction, and gray-level co-occurrence matrix (GLCM) texture, together with the graph-cut algorithm to label buildings, achieving a completeness of 94.9% and a correctness of 92.2% at the per-area level. These studies suggest that a single LiDAR data source can achieve reasonable building labeling accuracy while avoiding the problems of automatic registration between LiDAR data and aerial images.

The extraction of building information goes beyond the acquisition of building labels and height data. 2D and 3D building morphological parameters at the grid scale and building landscape metrics at the city-block scale are valuable to researchers and urban planners (Huang et al. Citation2017). 2D and 3D building morphological parameters are essential for urban climate studies: previous studies report that 3D building morphology affects local winds (Kubota et al. Citation2008), the solar radiation received by buildings (Lam Citation2000; Robinson Citation2006), building temperature (Mills Citation1997), surface thermal conditions (Streutker Citation2003), and air pollutant diffusion (Qi et al. Citation2018). A large number of 2D landscape metrics have been developed and applied in urban ecology and landscape planning (Forman and Godron Citation1986; McGarigal and Marks Citation1995; Sundell-Turner and Rodewald Citation2008; Lausch et al. Citation2015). These studies demonstrated the importance of quantitative 2D landscape metrics for evaluating ecological patterns and understanding the underlying processes. However, these 2D metrics can be further improved by adding height information extracted from LiDAR data, because the lack of quantitative information in the vertical direction leads to inaccurate or indistinguishable descriptions of vertical heterogeneity and landscape patterns in urban areas (Liu, Hu, and Li Citation2017; Chen, Xu, and Devereux Citation2019). Only a few studies have explored 3D landscape analysis at the city-block scale.

In this study, a method of multi-scale 3D building information extraction (MS3DB) was developed for urban areas, and the northern part of Brooklyn, New York City, USA, was selected as the study area to evaluate the model's performance and accuracy. The specific objectives of this study are (1) to extract multiple features from a single LiDAR data source, including flatness, the variance of normal direction, and GLCM homogeneity of the normalized Digital Surface Model (nDSM), and to fuse these features in the graph-cut algorithm for building labeling; (2) to extract grid-based building morphological parameters and block-based 3D building landscape metrics; and (3) to develop a set of evaluation methods for 3D building information extraction at the object, grid, and block scales.

2 Study area and data

2.1 Study area

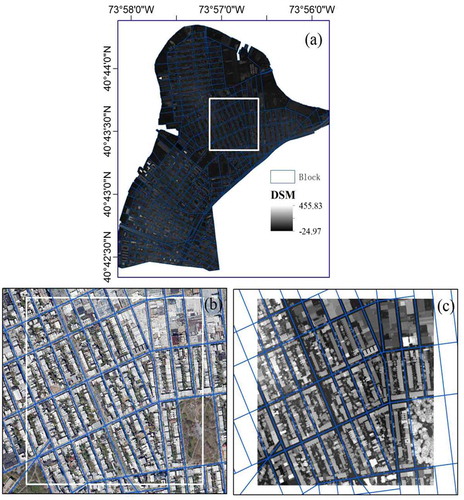

Figure 1 shows the study area, which is located in the northern part of Brooklyn, New York City, USA, and includes large commercial and residential areas. The study area covers 6.12 km2 of land and contains approximately 8,000 buildings, 8,146 parcels, and 493 blocks. The elevation of the area ranges from −23 to 384 m. The land cover types in the area include buildings, vegetation, roads, bare soil, and oil tanks; the eastern and northern parts of the area are along the East River. Buildings in the area vary considerably in size, texture, and shape.

2.2 Data

The 2017 LiDAR point cloud data covering the study area, provided by the New York City Department of Information Technology and Telecommunications (NYCDITT Citation2019), were used to extract the building label information, 2D and 3D building morphological parameters, and landscape metrics. The data were collected from 3 to 26 May 2017 using a Cessna 402C or Cessna Caravan 208B aircraft equipped with Leica ALS80 and Riegl VQ-880-G laser systems. The point density is 8.0 points/m2, and the vegetated and non-vegetated vertical accuracies are 15.8 and 6.4 cm, respectively, at the 95% confidence level (NYCDITT Citation2019). High-resolution orthophotos with red, green, blue, and near-infrared bands and a 0.3 m (1.0 ft) spatial resolution were obtained from the New York City Office of Information Technology Services (NYITS Citation2019) as a reference to verify the extraction results.

3 Methodology

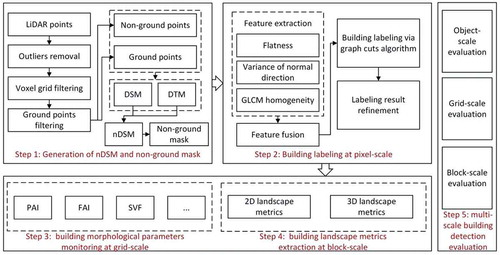

Figure 2 shows the workflow of the MS3DB method, which consists of five key steps: (1) generation of the normalized Digital Surface Model (nDSM) and non-ground mask; (2) building labeling at the pixel scale; (3) extraction of 2D and 3D building morphological parameters; (4) extraction of 2D and 3D building landscape metrics; and (5) evaluation of multi-scale 3D building information.

3.1 Generation of nDSM and non-ground points

The “StatisticalOutlierRemoval” filter of PCL 1.6 was applied to the point clouds to eliminate noise (Rusu et al. Citation2008). Then, the modified voxel grid filtering program (Du et al. Citation2017) was executed to reduce redundant points, with the voxel size set slightly lower than the density of the original point clouds. In each voxel, the original point nearest the voxel centroid was retained, rather than the centroid itself, to preserve the accuracy of the original point clouds.
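The two filtering steps above can be sketched in NumPy as follows. This is a minimal illustration, not the PCL or Du et al. (2017) code: the outlier test follows the idea behind PCL's StatisticalOutlierRemoval (a point is dropped when its mean distance to its k nearest neighbors exceeds the global mean of that statistic by more than a multiple of its standard deviation), and the values of `k` and `std_ratio` are assumptions. Because pairwise distances are computed by brute force, the sketch only suits small clouds.

```python
import numpy as np


def statistical_outlier_removal(points, k=8, std_ratio=1.0):
    """Drop points whose mean k-NN distance exceeds mean + std_ratio * std."""
    # Brute-force pairwise distances: fine for a sketch, too slow for real tiles.
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    d.sort(axis=1)
    mean_knn = d[:, 1:k + 1].mean(axis=1)  # column 0 is the point itself
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]


def voxel_nearest_to_centroid(points, voxel_size):
    """Keep, in every occupied voxel, the original point closest to the centroid
    of that voxel's points (so no synthetic coordinates are created)."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.ravel()
    kept = []
    for v in range(inverse.max() + 1):
        members = points[inverse == v]
        centroid = members.mean(axis=0)
        kept.append(members[np.argmin(np.linalg.norm(members - centroid, axis=1))])
    return np.array(kept)
```

Keeping a measured point instead of the voxel centroid trades a slightly less uniform spacing for coordinates that were actually observed by the scanner.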

The “lasground” filter of LAStools (Rapidlasso Citation2020) was used to separate the noise-free point clouds into ground and non-ground points. DSM and digital terrain model (DTM) data were then produced from all points and from ground points, respectively, using a binning interpolation approach. The binning approach assigns a value to each output cell from the points that fall within its extent and uses a void fill method to determine the values of cells that contain no LiDAR points (ESRI Citation2020). Here, the cell assignment and void fill methods were set to average and natural neighbor, respectively (Dong and Chen Citation2018). Finally, the nDSM was obtained by subtracting the DTM from the DSM, and a non-ground mask was generated from pixels with nDSM heights greater than zero.
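A minimal sketch of the binning approach, DSM/DTM differencing, and non-ground mask, assuming points are given as (x, y, z) arrays in a projected coordinate system with the raster's upper-left corner at (x0, y0). SciPy has no natural-neighbor interpolator, so this sketch substitutes linear interpolation (`scipy.interpolate.griddata`) with a nearest-neighbor fallback for void cells; the function names are hypothetical.

```python
import numpy as np
from scipy.interpolate import griddata


def bin_average(points, x0, y0, cell, nrows, ncols):
    """Average z of the points in each cell; NaN marks void (empty) cells."""
    col = np.floor((points[:, 0] - x0) / cell).astype(int)
    row = np.floor((y0 - points[:, 1]) / cell).astype(int)  # row 0 at top edge y0
    inside = (row >= 0) & (row < nrows) & (col >= 0) & (col < ncols)
    idx = row[inside] * ncols + col[inside]
    sums = np.bincount(idx, weights=points[inside, 2], minlength=nrows * ncols)
    counts = np.bincount(idx, minlength=nrows * ncols)
    with np.errstate(invalid="ignore", divide="ignore"):
        grid = sums / counts  # 0 / 0 -> NaN for empty cells
    return grid.reshape(nrows, ncols)


def fill_voids(grid):
    """Fill NaN cells by linear interpolation, falling back to nearest neighbor."""
    rows, cols = np.indices(grid.shape)
    valid = ~np.isnan(grid)
    known = np.column_stack([rows[valid], cols[valid]])
    targets = np.column_stack([rows[~valid], cols[~valid]])
    filled = grid.copy()
    if targets.size:
        vals = griddata(known, grid[valid], targets, method="linear")
        still = np.isnan(vals)  # outside the convex hull of known cells
        if still.any():
            vals[still] = griddata(known, grid[valid], targets[still], method="nearest")
        filled[~valid] = vals
    return filled


def ndsm_and_mask(all_points, ground_points, x0, y0, cell, nrows, ncols):
    """nDSM = DSM (all points) - DTM (ground points); mask = nDSM > 0."""
    dsm = fill_voids(bin_average(all_points, x0, y0, cell, nrows, ncols))
    dtm = fill_voids(bin_average(ground_points, x0, y0, cell, nrows, ncols))
    ndsm = dsm - dtm
    return ndsm, ndsm > 0
```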

3.2 Building labeling at the object scale

3.2.1 Multi-feature extraction

Table 1 provides the details of the multi-feature extraction method developed in this study. Three features, flatness, variance of normal direction (Vnd), and GLCM homogeneity of the nDSM, were selected for building labeling. Flatness was used because building roofs usually appear as planar surfaces, whereas vegetation has irregular surfaces. Vnd was used as a second geometric feature because the normal vectors of vegetation are scattered in many directions, whereas those of buildings are concentrated in a few directions. Lastly, in the nDSM height image, vegetation exhibits rich texture (low GLCM homogeneity), whereas buildings exhibit simple texture (high GLCM homogeneity).
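The per-point geometric features and the nDSM texture feature can be sketched as below. Table 1 is not reproduced here, so the formulas are assumptions: flatness is taken as the smallest covariance eigenvalue divided by the eigenvalue sum of the k nearest neighbors, Vnd as the trace of the covariance of the neighbors' unit normals (oriented upward), and GLCM homogeneity as Σ P(i, j)/(1 + (i − j)²) of a symmetric, normalized co-occurrence matrix at a one-pixel horizontal offset. The 16 gray levels and k = 10 are also assumptions.

```python
import numpy as np


def local_eigen_features(points, k=10):
    """Per-point flatness and variance of normal direction (assumed definitions)."""
    d = np.linalg.norm(points[:, None] - points[None], axis=-1)
    nbrs = np.argsort(d, axis=1)[:, :k]  # includes the point itself
    normals = np.empty_like(points)
    flatness = np.empty(len(points))
    for i, nb in enumerate(nbrs):
        evals, evecs = np.linalg.eigh(np.cov(points[nb].T))  # ascending order
        flatness[i] = evals[0] / evals.sum()  # ~0 on a plane
        n = evecs[:, 0]  # normal = eigenvector of the smallest eigenvalue
        normals[i] = n if n[2] >= 0 else -n
    # Spread of neighboring normals: ~0 on roofs, larger in tree crowns.
    vnd = np.array([np.trace(np.cov(normals[nb].T)) for nb in nbrs])
    return flatness, vnd


def glcm_homogeneity(window, levels=16, offset=(0, 1)):
    """Homogeneity of a symmetric, normalized GLCM of a quantized nDSM window."""
    lo, hi = window.min(), window.max()
    if hi == lo:
        return 1.0  # a perfectly flat window has a single gray level
    q = np.floor((window - lo) / (hi - lo) * (levels - 1) + 0.5).astype(int)
    dr, dc = offset  # non-negative pixel offset
    a = q[: q.shape[0] - dr, : q.shape[1] - dc]
    b = q[dr:, dc:]
    glcm = np.zeros((levels, levels))
    np.add.at(glcm, (a.ravel(), b.ravel()), 1)
    glcm = glcm + glcm.T
    glcm /= glcm.sum()
    i, j = np.indices(glcm.shape)
    return float((glcm / (1.0 + (i - j) ** 2)).sum())
```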

Table 1. Multiple features used for building labeling

3.2.2 Feature fusion

The features have different value ranges; thus, the logistic function was used to normalize each raw feature value x:

$$F(x) = \frac{1}{1 + e^{-k\,(x - t_0)}} \qquad (1)$$

where t0 is the feature threshold, and its value greatly affects the labeling result (see Section 4.1 for the t0 values for flatness, Vnd, and GLCM). k controls the steepness of the logistic function and has little effect on the labeling result. In this study, we set the values of k as −35.0, 2.0, and 0.2 for flatness, Vnd, and GLCM, respectively.
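A sketch of the normalization in Eq. (1), assuming the form F(x) = 1/(1 + exp(−k(x − t0))). The k values come from the text above and the t0 values from Section 4.1; under this assumed form, the negative k for flatness means that flatter surfaces (smaller flatness values) map closer to 1.

```python
import numpy as np

# (k, t0) per feature: k from Section 3.2.2, t0 from the optima in Section 4.1.
PARAMS = {"flatness": (-35.0, 0.06), "vnd": (2.0, 0.80), "glcm": (0.2, 18.00)}


def logistic_normalize(x, k, t0):
    """Map raw feature values onto (0, 1); x may be a scalar or an array."""
    return 1.0 / (1.0 + np.exp(-k * (np.asarray(x, dtype=float) - t0)))
```

For example, `logistic_normalize(flatness_raster, *PARAMS["flatness"])` would normalize a whole flatness raster in one call (`flatness_raster` is a hypothetical array).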

3.2.3 Graph-cut based building labeling

Flatness, Vnd, and GLCM homogeneity describe the characteristics of individual pixels and do not consider consistency with surrounding pixels. Therefore, the extracted features were fused into an energy-minimization framework, and the graph-cut algorithm (Boykov and Kolmogorov Citation2004) was used to perform building labeling. The algorithm assigns a label to each candidate pixel by minimizing the following energy function:

$$E(l) = \sum_{p} D_p(l_p) + \beta \sum_{(p,q) \in N} V_{p,q}(l_p, l_q) \qquad (2)$$

where the first term is the data cost and the second term is the smoothness cost; N is the set of neighboring pixel pairs; Dp(lp) measures how well label lp fits node p; and lp is either building or non-building. Dp(lp) is calculated as follows:

$$D_p(l_p) = \begin{cases} 1 - \left(\lambda_{F_{fl}} F_{fl} + \lambda_{F_{vnd}} F_{vnd} + \lambda_{F_{th}} F_{th}\right), & l_p = \text{building} \\ \lambda_{F_{fl}} F_{fl} + \lambda_{F_{vnd}} F_{vnd} + \lambda_{F_{th}} F_{th}, & l_p = \text{non-building} \end{cases} \qquad (3)$$

where Ffl, Fvnd, and Fth are the normalized values of flatness, Vnd, and GLCM, respectively, and λF_fl, λF_vnd, and λF_th are the weights of flatness, Vnd, and GLCM, respectively (see Section 4.1 for more details).

The second term in Eq. (2) represents the degree of consistency between a node and its neighboring pixels. DSM heights were used to measure this consistency because height differences within building areas are small, whereas those between building and non-building areas are often large. The smoothness term is calculated as follows:

$$V_{p,q}(l_p, l_q) = \begin{cases} \dfrac{1}{|h_p - h_q| + \varepsilon}, & l_p \neq l_q \\ 0, & l_p = l_q \end{cases} \qquad (4)$$

where hp and hq are the heights of pixels p and q, and the constant ε ensures that the denominator is greater than zero (Zarea and Mohammadzadeh Citation2016). Here, ε = 0.2 m (Awrangjeb and Fraser Citation2014).

Another parameter in Eq. (2), β, controls the weight of the smoothness term and depends on the urban environment: a higher β is set where buildings are dense and tall. In this study, the study area was divided into 21 zones of equal size (2,500 × 2,500 pixels), and each zone was assigned its own β value to improve labeling accuracy (see Table S1 for more details). In addition, zones 9 and 13 were used as experimental areas to determine the t0 values in Eq. (1) for flatness, Vnd, and GLCM, because they contain typical tall and small buildings, respectively. The graph-cut algorithm was run with different t0 values to examine the influence of t0 for each feature.
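The labeling step can be illustrated with a small s-t minimum cut. This sketch assumes the Eq. (2) energy on a 4-connected grid, with a smoothness penalty of β/(|hp − hq| + ε) charged only between neighbors that receive different labels. It uses the simple Edmonds-Karp max-flow algorithm in place of the Boykov-Kolmogorov algorithm cited above, so it is only practical for tiny rasters. The arrays `cost_bldg` and `cost_non` stand in for Dp(building) and Dp(non-building); all names are hypothetical.

```python
from collections import deque

import numpy as np


def _source_side_after_max_flow(cap, s, t):
    """Edmonds-Karp max flow on a dict-of-dicts residual graph. Returns the
    nodes still reachable from s, i.e. the source side of a minimum cut."""
    while True:
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return set(parent)
        # Walk back along the augmenting path and push its bottleneck flow.
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        push = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= push
            cap[v][u] = cap[v].get(u, 0.0) + push


def graph_cut_labels(cost_bldg, cost_non, height, beta=1.0, eps=0.2):
    """Boolean building labels minimizing data cost + beta * smoothness cost."""
    rows, cols = height.shape
    s, t = "source", "sink"
    cap = {s: {}, t: {}}
    cap.update({(r, c): {} for r in range(rows) for c in range(cols)})
    for r in range(rows):
        for c in range(cols):
            p = (r, c)
            cap[s][p] = float(cost_non[r, c])   # cut => p labeled non-building
            cap[p][t] = float(cost_bldg[r, c])  # cut => p labeled building
            for q in ((r + 1, c), (r, c + 1)):  # each 4-neighbor pair once
                if q[0] < rows and q[1] < cols:
                    w = beta / (abs(height[p] - height[q]) + eps)
                    cap[p][q] = cap[p].get(q, 0.0) + w
                    cap[q][p] = cap[q].get(p, 0.0) + w
    source_side = _source_side_after_max_flow(cap, s, t)
    return np.array([[(r, c) in source_side for c in range(cols)]
                     for r in range(rows)])
```

With β = 0, each pixel simply takes the label with the lower data cost; increasing β lets height-consistent neighbors override a pixel with weak evidence, which is how the smoothness term closes isolated gaps on roofs.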

3.2.4 Labeling refinement

Figure 3 shows the procedure for refining the building labels. First, height and area thresholds were used to remove irrelevant low buildings and small building patches, respectively (see Table S1 for details).

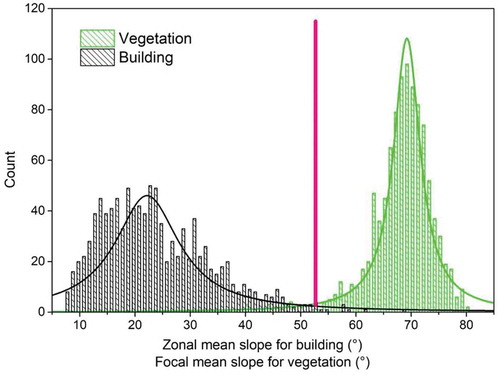

In addition, a zonal slope threshold was applied to remove small vegetation patches. The zonal mean slope threshold was determined from the difference between the slope histograms of vegetation and buildings. A total of 1,000 building footprints and 1,000 medium/high vegetation points were randomly selected, and their focal mean slopes and zonal mean slope values were calculated. The focal mean slope was calculated with a 21 × 21 pixel (10.5 m × 10.5 m) window. The maximum zonal mean slope of buildings was selected as the threshold; as shown by the slope histograms, it was set to 53° to identify and remove small vegetation patches.

Finally, because some holes remained on building surfaces after labeling, a morphological opening followed by a closing was applied to refine the labeling results, using a 3 × 3 structuring element. The refined building footprints were then used to extract the morphological parameters and landscape metrics.
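The refinement steps can be sketched with `scipy.ndimage`. The height and area thresholds are placeholders (the zone-specific values are in Table S1), and computing each patch's zonal value as the mean of the focal mean slope within the patch is an interpretation of the procedure described above, not a confirmed implementation detail.

```python
import numpy as np
from scipy import ndimage


def refine_labels(labels, ndsm, slope_deg, min_height=2.0, min_area_px=40,
                  slope_thresh=53.0, focal=21):
    """Refine a boolean building mask: height, area, and zonal-slope filters,
    then a 3 x 3 opening followed by a closing (thresholds are placeholders)."""
    mask = labels & (ndsm >= min_height)  # drop low objects
    patches, n = ndimage.label(mask)
    if n == 0:
        return mask
    idx = np.arange(1, n + 1)
    areas = np.bincount(patches.ravel(), minlength=n + 1)[1:]  # pixels per patch
    # Focal mean slope in a focal x focal window, then averaged within each patch.
    focal_slope = ndimage.uniform_filter(slope_deg.astype(float), size=focal)
    zonal = np.asarray(ndimage.mean(focal_slope, patches, idx))
    keep = (areas >= min_area_px) & (zonal <= slope_thresh)
    mask = np.isin(patches, idx[keep])
    se = np.ones((3, 3), dtype=bool)
    mask = ndimage.binary_opening(mask, structure=se)  # remove specks and spurs
    return ndimage.binary_closing(mask, structure=se)  # fill small holes
```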

3.3 Extraction of building morphological parameters at the grid scale

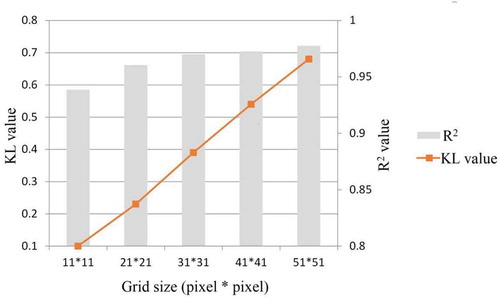

There is a trade-off between the accuracy of the extracted parameters and the loss of detail (Susaki, Kajimoto, and Kishimoto Citation2014; Huang et al. Citation2017). As the grid size increases, the accuracy of the extracted parameters gradually increases, but details are lost; once the grid is sufficiently large, the accuracy stabilizes (Susaki, Kajimoto, and Kishimoto Citation2014). The Pearson correlation coefficient between the morphological parameters extracted from the labeling results and those extracted from the ground-truth building data was computed to measure how accuracy changes with grid size. The Kullback-Leibler (KL) divergence was calculated to assess the loss of detail in the extracted parameters at different grid sizes:

$$KL(P \,\|\, Q) = \sum_{i} P(i)\,\log\frac{P(i)}{Q(i)} \qquad (5)$$

where KL(P||Q) denotes the KL value, and P and Q are the discrete probability distributions of the ground-truth building density at fine spatial resolution and of the extracted building density at coarse spatial resolution, respectively. Six grid sizes were considered: 7 × 7, 11 × 11, 21 × 21, 31 × 31, 41 × 41, and 51 × 51 pixels. For each grid size, the distribution of building density over [0%, 100%] in 1% intervals was compared with that at 7 × 7 pixels. Table 2 lists the 2D and 3D building morphological parameters selected for grid-scale extraction and their calculation methods.
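The grid-size analysis can be sketched as follows, assuming boolean building rasters at 0.5 m resolution. Building density is aggregated in non-overlapping cells, its distribution is histogrammed in 1% bins, and KL(P‖Q) = Σ P(i) log(P(i)/Q(i)) is computed with a small epsilon to avoid log(0); the epsilon and the helper names are assumptions. R2 is taken as the squared Pearson correlation.

```python
import numpy as np


def block_density(mask, size):
    """Building density (fraction of building pixels) in size x size cells."""
    r = mask.shape[0] // size * size  # drop incomplete edge cells
    c = mask.shape[1] // size * size
    m = mask[:r, :c].astype(float)
    return m.reshape(r // size, size, c // size, size).mean(axis=(1, 3))


def density_histogram(density):
    """Probability distribution of density over [0%, 100%] in 1% bins."""
    counts, _ = np.histogram(density * 100.0, bins=100, range=(0.0, 100.0))
    return counts / counts.sum()


def kl_divergence(p, q, eps=1e-10):
    """KL(P||Q); eps keeps empty bins from producing log(0) or division by 0."""
    p = np.asarray(p, float) + eps
    q = np.asarray(q, float) + eps
    p /= p.sum()
    q /= q.sum()
    return float(np.sum(p * np.log(p / q)))


def pearson_r2(x, y):
    """Squared Pearson correlation between two parameter rasters."""
    r = np.corrcoef(np.ravel(x), np.ravel(y))[0, 1]
    return float(r * r)
```

Usage for one candidate size: `kl_divergence(density_histogram(block_density(truth, 7)), density_histogram(block_density(pred, 31)))`, where `truth` and `pred` are hypothetical building masks.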

Table 2. Building 2D/3D morphological parameters used in this study

3.4 Extraction of building landscape metrics at the city-block level

Table 3 lists the 2D and 3D landscape metrics used in this study. The 2D landscape metrics include building area coverage (BAC) and mean building area (MBA). A set of 3D building landscape metrics for city blocks, including high building ratio (HBR), mean building height (MBH), mean building structure index (MBSI), and floor area ratio (FAR), was also integrated. Following the United States convention described by Craighead (Citation2009), high buildings are defined as those with a height of at least 22.5 m.
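A sketch of block-scale metrics for one city block, given per-building footprint areas and heights. Because Table 3 is not reproduced here, the definitions of HBR (share of buildings at least 22.5 m tall, counted by building) and FAR (floor count estimated as height divided by an assumed 3 m storey height) are assumptions; MBSI is omitted.

```python
import numpy as np

HIGH_BUILDING_M = 22.5  # high-building threshold used in the text


def block_metrics(areas_m2, heights_m, block_area_m2, floor_height_m=3.0):
    """BAC, MBA, HBR, MBH, and FAR for the buildings of one block (assumed forms)."""
    a = np.asarray(areas_m2, float)
    h = np.asarray(heights_m, float)
    floors = np.maximum(1, np.round(h / floor_height_m))  # assumed storey height
    return {
        "BAC": a.sum() / block_area_m2,               # building area coverage
        "MBA": a.mean(),                              # mean building area
        "HBR": float(np.mean(h >= HIGH_BUILDING_M)),  # high building ratio
        "MBH": h.mean(),                              # mean building height
        "FAR": (a * floors).sum() / block_area_m2,    # floor area ratio
    }
```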

Table 3. Building landscape metrics at the block-scale

3.5 Evaluation of multi-scale extraction of buildings

3.5.1 Object scale evaluation

Pixel-based, object-based, and area-normalized object-based methods were used to assess the accuracy of building labeling at the object scale.

(1) Pixel-based method

A true positive (TP) pixel is labeled “building” and corresponds to “building” in the reference; a false negative (FN) pixel is labeled “non-building” but corresponds to “building” in the reference; and a false positive (FP) pixel is labeled “building” but corresponds to “non-building” in the reference (Rutzinger, Rottensteiner, and Pfeifer Citation2009). Based on these definitions, three metrics were used to evaluate the accuracy of the results: completeness (Comp), correctness (Corr), and Quality:

$$\mathrm{Comp} = \frac{N_{TP}}{N_{TP} + N_{FN}}, \qquad \mathrm{Corr} = \frac{N_{TP}}{N_{TP} + N_{FP}}, \qquad \mathrm{Quality} = \frac{N_{TP}}{N_{TP} + N_{FN} + N_{FP}} \qquad (6)$$

where NTP represents the number of TP pixels; NFN represents the number of FN pixels; and NFP represents the number of FP pixels.
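The pixel-based metrics can be computed directly from two boolean label images. This minimal sketch assumes the prediction and reference are co-registered rasters of equal shape.

```python
import numpy as np


def pixel_metrics(pred, ref):
    """Completeness, correctness, and quality from boolean label images."""
    pred = np.asarray(pred, bool)
    ref = np.asarray(ref, bool)
    tp = np.sum(pred & ref)   # building in both
    fn = np.sum(~pred & ref)  # missed building
    fp = np.sum(pred & ~ref)  # false building
    return tp / (tp + fn), tp / (tp + fp), tp / (tp + fn + fp)
```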

(2) Object-based method

For each detected building, the percentage of its area that overlaps the reference label image was calculated, and a threshold of 70% was used to classify each detected building as either TPcorr or FP (Rutzinger, Rottensteiner, and Pfeifer Citation2009). Similarly, for each reference building, the percentage of its area that overlaps the detected label image was calculated, and the same threshold was used to classify it as either TPcomp or FN.

(3) Area-normalized object-based method

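In addition to the pixel-based and object-based methods, an area-normalized object-based method was employed for a more comprehensive accuracy assessment:

$$\mathrm{Comp}_{area} = \frac{\sum_{i=1}^{N_{tpcomp}} \mathrm{Area}_{tpcomp,i}}{\sum_{i=1}^{N_{tpcomp}} \mathrm{Area}_{tpcomp,i} + \sum_{i=1}^{N_{fn}} \mathrm{Area}_{fn,i}}, \qquad \mathrm{Corr}_{area} = \frac{\sum_{i=1}^{N_{tpcorr}} \mathrm{Area}_{tpcorr,i}}{\sum_{i=1}^{N_{tpcorr}} \mathrm{Area}_{tpcorr,i} + \sum_{i=1}^{N_{fp}} \mathrm{Area}_{fp,i}}$$

$$\mathrm{Quality}_{area} = \frac{\mathrm{Comp}_{area}\,\mathrm{Corr}_{area}}{\mathrm{Comp}_{area} + \mathrm{Corr}_{area} - \mathrm{Comp}_{area}\,\mathrm{Corr}_{area}} \qquad (7)$$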

where Comparea, Corrarea, and Qualityarea are the completeness, correctness, and quality metrics calculated via the object-based method normalized by area; Ntpcomp and Nfn are the number of TPcomp and FN buildings; Area_tpcompi is the area of TPcomp building i; Area_fni is the area of FN building i; Ntpcorr and Nfp are the number of TPcorr and FP buildings; Area_tpcorri is the area of TPcorr building i; and Area_fpi is the area of FP building i.
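A sketch of the object-based and area-normalized evaluation, assuming detected and reference buildings are given as integer-labeled rasters (0 = background) and using the 70% overlap threshold. The Quality is computed as Comp·Corr/(Comp + Corr − Comp·Corr). This is an assumption, but it reproduces the Quality values reported in Section 4.2 from the corresponding Comp and Corr values.

```python
import numpy as np


def _classify(objects, other_mask, thresh):
    """(area, passes) per labeled object; passes if >= thresh of its area
    overlaps other_mask."""
    result = []
    for obj in np.unique(objects):
        if obj == 0:
            continue  # background
        region = objects == obj
        area = int(region.sum())
        result.append((area, bool((region & other_mask).sum() / area >= thresh)))
    return result


def _quality(comp, corr):
    return comp * corr / (comp + corr - comp * corr)


def object_metrics(det_labels, ref_labels, thresh=0.7):
    """Object-based and area-normalized Comp, Corr, and Quality."""
    det = _classify(det_labels, ref_labels > 0, thresh)  # TPcorr vs FP
    ref = _classify(ref_labels, det_labels > 0, thresh)  # TPcomp vs FN
    comp = sum(ok for _, ok in ref) / len(ref)
    corr = sum(ok for _, ok in det) / len(det)
    a_tpcomp = sum(a for a, ok in ref if ok)
    a_fn = sum(a for a, ok in ref if not ok)
    a_tpcorr = sum(a for a, ok in det if ok)
    a_fp = sum(a for a, ok in det if not ok)
    comp_a = a_tpcomp / (a_tpcomp + a_fn)
    corr_a = a_tpcorr / (a_tpcorr + a_fp)
    return {"Comp": comp, "Corr": corr, "Quality": _quality(comp, corr),
            "Comp_area": comp_a, "Corr_area": corr_a,
            "Quality_area": _quality(comp_a, corr_a)}
```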

3.5.2 Grid- and block-scale evaluation

Grid- and block-scale results were compared with the results of visual interpretation. The Pearson correlation coefficient and the parameters of the regression equation in Eq. (8) were used to evaluate the grid- and block-scale results:

$$y = a\,x + b \qquad (8)$$

where x and y are the parameters/landscape metrics extracted using the MS3DB method and those obtained from visual interpretation, respectively, and a and b are the regression coefficients. Higher coefficients of determination (R2), together with a values closer to one and b values closer to zero, indicate greater similarity between the parameters/landscape metrics extracted using the MS3DB method and those obtained from visual interpretation.
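The agreement statistics can be computed with NumPy: `np.polyfit` with degree 1 returns the slope a and intercept b of Eq. (8), and R2 is the squared Pearson correlation. A minimal sketch:

```python
import numpy as np


def regression_agreement(x, y):
    """Fit y = a x + b and return (a, b, R2), with R2 = squared Pearson r."""
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    a, b = np.polyfit(x, y, 1)  # coefficients, highest power first
    r = np.corrcoef(x, y)[0, 1]
    return a, b, r ** 2
```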

4 Results

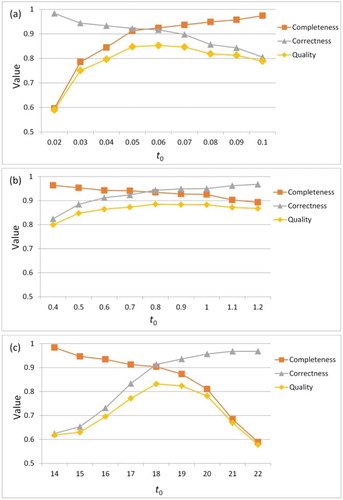

4.1 Determination of parameters

Several key parameters can affect the accuracy of the MS3DB method. For example, parameter t0 in Eq. (1) strongly affects the labeling results. Figure 5 shows the effect of t0 for flatness, Vnd, and GLCM on the labeling results. Comp, Corr, and Quality of the labeling results were computed with the pixel-based evaluation method for different t0 values, and the t0 value yielding the highest Quality was selected as optimal. The highest Quality values for flatness, Vnd, and GLCM were obtained with t0 = 0.06, 0.80, and 18.00, respectively.

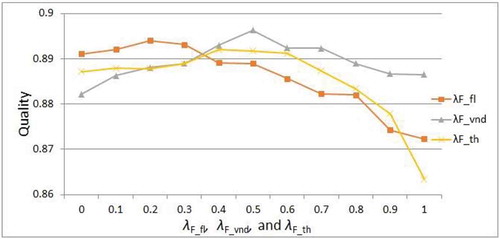

In addition to t0, the parameters λF_fl, λF_vnd, and λF_th in Eq. (3), which are the weights of Ffl, Fvnd, and Fth, respectively, also affect the labeling results. Using zones 9 and 13 as the experimental areas, we calculated the Quality values of the pixel-based evaluation method to analyze the effect of these weights on the labeling results. Each weight was varied from 0.1 to 1.0 in steps of 0.1; when one weight was set to a, the other two were set to (1 − a)/2 so that the three weights summed to 1. Figure 6 shows the results of this analysis. The highest Quality value was obtained when λF_vnd = 0.5; therefore, λF_fl, λF_vnd, and λF_th were set to 0.25, 0.50, and 0.25, respectively.
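The weight search described above can be sketched as a small grid search. `quality_fn` is a hypothetical callback that, in practice, would run the labeling with the given weights on zones 9 and 13 and return the pixel-based Quality.

```python
import numpy as np


def weight_triplets(step=0.1):
    """(l_fl, l_vnd, l_th) triplets: one weight a in 0.1..1.0, the others (1 - a) / 2."""
    triplets = []
    for a in np.round(np.arange(step, 1.0 + step / 2, step), 10):
        rest = (1.0 - a) / 2.0
        triplets += [(a, rest, rest), (rest, a, rest), (rest, rest, a)]
    return triplets


def best_weights(quality_fn, step=0.1):
    """Triplet with the highest score returned by quality_fn(l_fl, l_vnd, l_th)."""
    return max(weight_triplets(step), key=lambda w: quality_fn(*w))
```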

4.2 Building labeling at the object scale

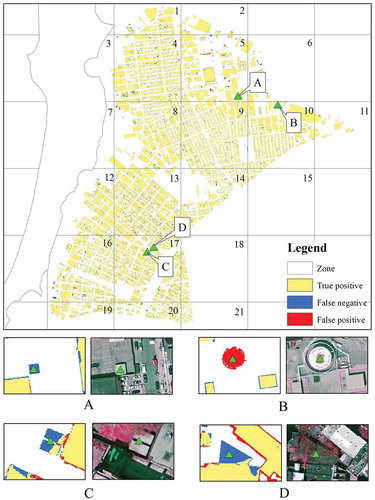

Table 4 shows the evaluation of the building labeling. The results of the pixel-based, object-based, and area-normalized object-based evaluation methods were consistent across the 21 zones. Comp, Corr, and Quality for the entire area were 94.49%, 95.54%, and 90.51% with the pixel-based method; 94.21%, 97.99%, and 92.42% with the object-based method; and 98.38%, 99.41%, and 97.81% with the area-normalized object-based method. Because the area-normalized scores are the highest, the remaining errors are concentrated in small buildings, and the MS3DB method achieved high accuracy for large buildings. Overall, these experiments support the feasibility of the method.

Table 4. Accuracy of building labeling

Figure 7 shows the refined labeling results extracted using the MS3DB method at the pixel scale. Labeling errors occurred in three situations: (1) some small buildings were missed, (2) some oil tanks were wrongly labeled as buildings, and (3) some buildings were wrongly labeled as non-buildings because of vegetation on their rooftops. These errors are expected because a single LiDAR data source provides only the height information of urban features, which makes it challenging to distinguish oil tanks from buildings and to identify vegetation on rooftops.

4.3 Extraction of morphological parameters at the grid scale

The spatial resolution of the building labeling results was 0.5 m × 0.5 m. For 2D and 3D building morphological parameters at the grid scale, the grid size should be chosen by considering both accuracy and loss of detail. Figure 8 shows the effect of different grid sizes on the extracted results. The R2 value stabilized at a grid size of 31 × 31 pixels, whereas the KL value continued to increase at that size. A smaller KL value indicates that the building density distribution at a given grid size is more similar to that at 7 × 7 pixels. Therefore, considering the trade-off between accuracy and loss of detail, 31 × 31 pixels (15.5 m × 15.5 m) was selected as the final grid size.

Figure 8. Comparison between the extraction accuracy (R2) and loss of detail (KL value) at different grid sizes

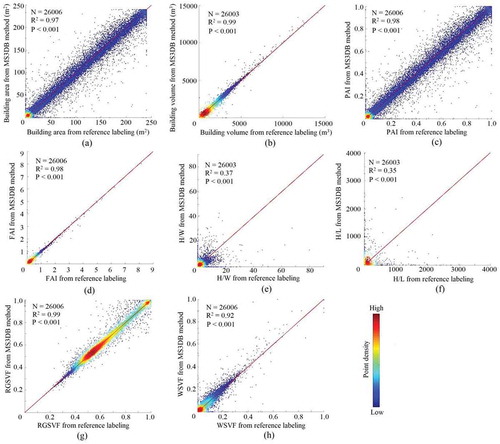

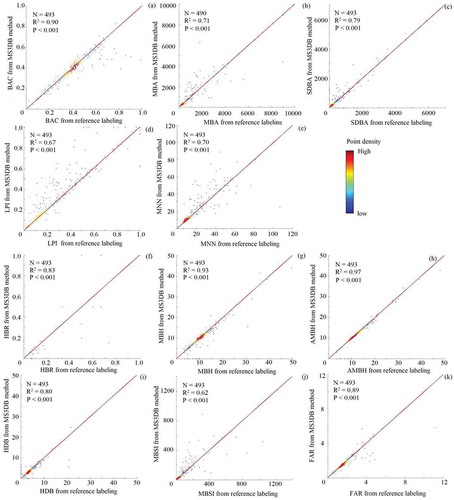

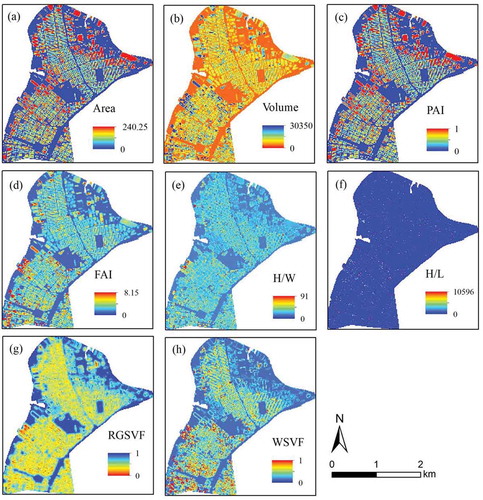

Figure 9 shows the grid-scale parameters extracted using the MS3DB method, e.g., area, volume, PAI, and FAI (see Table 2 for definitions). Figure 10 shows scatter plots of the 2D and 3D building morphological parameters extracted using the MS3DB method against those from visual interpretation, including building area, volume, PAI, FAI, RGSVF, and WSVF (see Table 2 for definitions). Most parameters yielded R2 values greater than 0.92; the exceptions were H/W and H/L, with R2 values of 0.37 and 0.35, respectively. These results imply that the MS3DB method extracted building edges with limited accuracy, owing to the “salt and pepper” noise in the grid-scale results.
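A sketch of per-cell building area, volume, and PAI for a building-height raster, with a deliberately simplified FAI. Table 2 is not reproduced here, so the FAI approximation (wind along the +x axis, frontal area summed from upward height steps between adjacent pixels) is an assumption, as are the defaults of 0.5 m pixels and a 31 × 31 pixel grid, which are taken from Section 4.3.

```python
import numpy as np


def grid_parameters(ndsm, bldg_mask, cell=0.5, grid=31):
    """Per-grid-cell building area (m2), volume (m3), PAI, and simplified FAI."""
    h = np.where(bldg_mask, ndsm, 0.0)  # building heights only
    r = h.shape[0] // grid * grid       # drop incomplete edge cells
    c = h.shape[1] // grid * grid
    h = h[:r, :c]
    m = bldg_mask[:r, :c]

    def per_cell(a):
        return a.reshape(r // grid, grid, c // grid, grid).sum(axis=(1, 3))

    px_area = cell * cell
    plot_area = (grid * cell) ** 2
    area = per_cell(m.astype(float)) * px_area
    volume = per_cell(h) * px_area
    pai = area / plot_area
    # Assumed FAI: upward height steps along +x (wind direction) times pixel width.
    rise = np.clip(np.diff(h, axis=1, prepend=0.0), 0.0, None)
    fai = per_cell(rise) * cell / plot_area
    return area, volume, pai, fai
```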

Figure 9. Extraction results of grid-scale building parameters: (a)–(h) refer to the area, volume, planar area index (PAI), frontal area index (FAI), ratio of street height and building width (H/W), ratio of street height and width (H/L), sky view factor of rooftop or ground (RGSVF), and sky view factor of vertical wall (WSVF), respectively

4.4 Extraction of building landscape metrics at the block scale

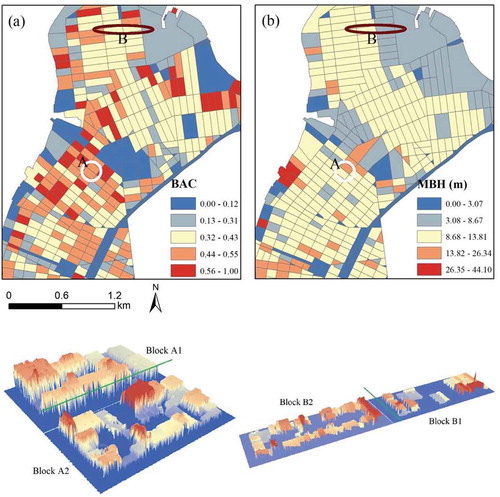

Figure 11 shows the block-scale results of BAC and MBH. BAC is the ratio of the total building area to the block area, and MBH is the mean building height in the block. The natural breaks method (Smith Citation1986) was used to divide each landscape metric into five classes. Blocks at locations A and B have the same BAC but very different MBH. The MS3DB method captured such block-scale differences in the vertical landscape, which shows the benefit of integrating 2D and 3D landscape metrics for understanding the structure of urban buildings.

Figure 11. Block-scale building landscape metrics: (a) building area coverage (BAC); and (b) mean building height (MBH)

A comparison of the landscape metrics extracted using the MS3DB method with those obtained by visual interpretation indicates that, overall, high extraction accuracy was achieved for most metrics. AMBH showed the highest accuracy (R2 = 0.97), whereas MBSI and LPI were less accurate than the other metrics, with R2 values of 0.62 and 0.67, respectively.

5 Discussion

In this study, a new 3D building detection method (MS3DB) was developed that operates at the pixel, grid, and block scales and derives both morphological parameters and landscape metrics. The method could help city managers address urban problems by providing accurate and reliable GIS data for urban analysis at different spatial scales, which may in turn reduce urban planning costs. It can also support urban climate and energy studies, because 2D/3D building morphological parameters and landscape patterns are important variables in urban climate and energy budget models (Kanda, Kawai, and Nakagawa Citation2005a; Yang et al. Citation2015; Zheng and Weng Citation2019).

We further evaluated the performance of the proposed method using the point cloud data of Areas 1–3 of the ISPRS Test Project on Urban Classification and 3D Building Reconstruction (Rottensteiner et al. Citation2014), which allows our method to be compared with existing methods on the same benchmark data. Table 5 compares the MS3DB method with existing methods in terms of accuracy, data sources, verification method, and spatial scale. The advantages of the MS3DB method can be summarized as follows. First, the MS3DB method produces more accurate results than most existing methods that use a single LiDAR data source. Larger buildings (area > 50 m2) were extracted with high accuracy: the Comp, Corr, and Quality values of the object-based method were all 100%, indicating that no building objects were missed or falsely extracted.

Table 5. Comparison between existing methods and the MS3DB method

Second, the accuracy of the extracted 3D building information was evaluated at the pixel, object, grid, and block scales. This information is essential for urban managers, planners, and urban data users because urban building analysis is often conducted at different spatial scales.

Third, several 3D building landscape metrics were integrated into MS3DB for block-scale detection. Adding height information extracted from the LiDAR data further improves 2D landscape metrics (Figure 11). Analysis at the city-block level is of practical importance for urban planners because city blocks are often the basic urban management units.

This study has several limitations. First, it focuses on 3D building information detection, but multi-scale urban analysis also requires other urban elements, e.g., tree object identification and canopy height estimation. Second, relying on a single LiDAR data source produced some errors in the labeling results (), e.g., missing small buildings and confusing buildings with oil tanks. Future research should therefore detect additional urban features and integrate multi-source remote sensing data, e.g., very-high-resolution (VHR) imagery, to exploit multi-modal data for more accurate building labeling.

6 Conclusions

In this study, a method of multi-scale 3D building information extraction (MS3DB) was developed for urban areas using a single LiDAR point cloud and tested in the northern part of Brooklyn, New York City, USA. The method extracted building labels at the object scale, 2D and 3D building morphological parameters at the grid scale, and 3D building landscape metrics at the city-block scale. Accuracy evaluation methods at the object, grid, and block scales were integrated into MS3DB. These findings add to existing knowledge on multi-scale 3D building information extraction. The major results can be summarized as follows:

Building labels were extracted with consistently high accuracy over the entire study area. The Comp, Corr, and Quality metrics of the object-based evaluation were 94.21%, 97.99%, and 92.42%, respectively. Most grid-scale parameters (building area, volume, PAI, FAI, RGSVF, and WSVF) were extracted with high accuracy (R² > 0.92). The exceptions were H/W and H/L, with R² values of 0.37 and 0.35, respectively, which suggests that the MS3DB method did not extract building edges accurately. Among the block-scale landscape metrics, AMBH achieved the highest accuracy (R² = 0.97), whereas MBSI and LPI were less accurate than the other metrics (R² = 0.62 and 0.67, respectively).
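The following sketch illustrates grid-scale evaluation with NumPy. It aggregates a pixel-level building mask into grid cells to obtain the plan area index (PAI, the building footprint fraction of each cell) and scores extracted against reference values with R². The function names, the equal-size square cells, and the use of R² = 1 − SS_res/SS_tot are assumptions. The paper may instead report the squared correlation from a fitted regression line, which generally gives a different value.

```python
import numpy as np


def plan_area_index(mask: np.ndarray, cell: int) -> np.ndarray:
    """PAI per grid cell: the fraction of pixels labeled as building.

    mask: 2D boolean raster (True = building). Its shape is assumed to be an
    exact multiple of `cell` (grid cell size in pixels) in both dimensions.
    """
    rows, cols = mask.shape
    # Split the raster into (row block, row within block, col block, col within block).
    blocks = mask.reshape(rows // cell, cell, cols // cell, cell)
    return blocks.mean(axis=(1, 3))


def r_squared(reference: np.ndarray, extracted: np.ndarray) -> float:
    """Coefficient of determination of extracted vs. reference values,
    computed as 1 - SS_res / SS_tot over all grid cells."""
    ref = np.ravel(reference).astype(float)
    ext = np.ravel(extracted).astype(float)
    ss_res = np.sum((ref - ext) ** 2)
    ss_tot = np.sum((ref - ref.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


# A 4 x 4 pixel mask aggregated into 2 x 2 pixel grid cells.
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :2] = True  # one fully built-up cell
mask[3, 3] = True    # one pixel of building in the bottom-right cell
pai = plan_area_index(mask, cell=2)  # [[1.0, 0.0], [0.0, 0.25]]
```

Other grid-scale parameters, such as building area and volume, can be aggregated with the same reshape pattern by summing pixel areas or pixel heights instead of averaging the mask.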

The main contributions of this study are as follows. First, it combines building objects, 2D/3D morphological parameters, and landscape metrics in a single holistic detection method, which is rarely reported in the literature. Second, MS3DB includes evaluation methods for building extraction at the object, grid, and block scales, which help determine the reliability of the results at each spatial scale. Finally, traditional methods extract only 2D landscape metrics, whereas MS3DB extracts both 2D and 3D landscape metrics.

Highlights

A multi-scale 3D building information extraction (MS3DB) method was proposed.

MS3DB extracts building labels, 2D/3D morphological parameters, and landscape metrics.

3D building landscape metrics were integrated into MS3DB for block-scale detection.

The accuracy of MS3DB was evaluated at the object, grid, and block scales.

Acknowledgements

The authors would like to thank the editors and anonymous reviewers for their valuable time and effort in reviewing this manuscript, and the New York City Department of Information Technology and Telecommunications for making the ALS data freely available for download.

Data availability statement

The data supporting this study are available within the article or its supplementary materials. The 2017 ALS data used in this study can be downloaded from http://gis.ny.gov/elevation/lidar-coverage.htm.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Adolphe, L. 2001. “A Simplified Model of Urban Morphology: Application to an Analysis of the Environmental Performance of Cities.” Environment and Planning B: Planning and Design 28 (2): 183–200. doi:10.1068/b2631.

- Awrangjeb, M., and C. S. Fraser. 2014. “Automatic Segmentation of Raw LiDAR Data for Extraction of Building Roofs.” Remote Sensing 6 (5): 3716–3751. doi:10.3390/rs6053716.

- Baltsavias, E. P. 1999. “A Comparison between Photogrammetry and Laser Scanning.” ISPRS Journal of Photogrammetry and Remote Sensing 54: 83–94. doi:10.1016/s0924-2716(99)00014-3.

- Bouziani, M., K. Goïta, and D. C. He. 2010. “Automatic Change Detection of Buildings in Urban Environment from Very High Spatial Resolution Images Using Existing Geodatabase and Prior Knowledge.” ISPRS Journal of Photogrammetry and Remote Sensing 65 (1): 143–153. doi:10.1016/j.isprsjprs.2009.10.002.

- Boykov, Y., and V. Kolmogorov. 2004. “An Experimental Comparison of Min-Cut/Max-Flow Algorithms for Energy Minimization in Vision.” IEEE Transactions on Pattern Analysis and Machine Intelligence 26 (9): 1124–1137. doi:10.1109/tpami.2004.60.

- Cao, S., M. Du, W. Zhao, Y. Hu, Y. Mo, S. Chen, Y. Cai, Z. Peng, and C. Zhang. 2020. “Multi-level Monitoring of Three-dimensional Building Changes for Megacities: Trajectory, Morphology, and Landscape.” ISPRS Journal of Photogrammetry and Remote Sensing 167: 54–70. doi:10.1016/j.isprsjprs.2020.06.020.

- Chen, Q., and M. Sun. 2020. “Brief Description of Building Detection from Airborne LiDAR Data Based on Extraction of Planar Structure.” Accessed 1 March 2020. http://ftp.ipi.uni-hannover.de/ISPRS_WGIII_website/ISPRSIII_4_Test_results/papers/Brief_description_Qi_Chen_WHU.pdf

- Chen, Z., B. Xu, and B. Devereux. 2019. “Urban Landscape Pattern Analysis Based on 3D Landscape Models.” Applied Geography 55: 82–91. doi:10.1016/j.apgeog.2014.09.006.

- Craighead, G. 2009. “Chapter 1 – High-Rise Building Definition, Development, and Use.” In High-Rise Security and Fire Life Safety. Oxford, UK: Elsevier’s Science & Technology Rights Department.

- Deng, L., Y. N. Yan, Y. He, Z. H. Mao, and J. Yu. 2019. “An Improved Building Detection Approach Using L-band PolSAR Two-dimensional Time-frequency Decomposition over Oriented Built-up Areas.” GIScience & Remote Sensing 56 (1–2): 1–21. doi:10.1080/15481603.2018.1484409.

- González-Aguilera, D., E. Crespo-Matellán, D. Hernández-López, and P. Rodríguez-Gonzálvez. 2013. “Automated Urban Analysis Based on LiDAR-Derived Building Models.” IEEE Transactions on Geoscience and Remote Sensing 51 (3): 1844–1851.

- Dong, P., and Q. Chen. 2018. LiDAR Remote Sensing and Applications. Boca Raton: CRC Press.

- Du, S., Y. Zhang, Z. Zou, S. Xu, X. He, and S. Chen. 2017. “Automatic Building Extraction from Lidar Data Fusion of Point and Grid-based Features.” ISPRS Journal of Photogrammetry and Remote Sensing 130: 294–307. doi:10.1016/j.isprsjprs.2017.06.005.

- Duarte, D., F. Nex, N. Kerle, and G. Vosselman. 2018. “Multi-Resolution Feature Fusion for Image Classification of Building Damages with Convolutional Neural Networks.” Remote Sensing 10: 1636. doi:10.3390/rs10101636.

- ESRI. 2020. “Las Dataset to Raster.” Accessed 1 March 2020. https://pro.arcgis.com/zh-cn/pro-app/tool-reference/conversion/las-dataset-to-raster.htm

- Feng, L. 2020. “Detection Building Point Clouds from Airborne LiDAR Data Using Roof Points’ Attributes.” Accessed 1 March 2020. http://ftp.ipi.uni-hannover.de/ISPRS_WGIII_website/ISPRSIII_4_Test_results/papers/DetectionBuildingfromAirborneLiDARDataUsingRoofpointsAttributes.pdf

- Forman, R. T. T., and M. Godron. 1986. Landscape Ecology. New York: Wiley.

- Gerke, M., and J. Xiao. 2014. “Fusion of Airborne Laser Scanning Point Clouds and Images for Supervised and Unsupervised Scene Classification.” ISPRS Journal of Photogrammetry and Remote Sensing 87: 78–92. doi:10.1016/j.isprsjprs.2013.10.011.

- Griffiths, D., and B. Jan. 2019. “Improving Public Data for Building Segmentation from Convolutional Neural Networks (CNNs) for Fused Airborne Lidar and Image Data Using Active Contours.” ISPRS Journal of Photogrammetry and Remote Sensing 154: 70–83. doi:10.1016/j.isprsjprs.2019.05.013.

- Hao, X., C. Liang, L. Manchun, C. Yanming, and Z. Lishan. 2015. “Using Octrees to Detect Changes to Buildings and Trees in the Urban Environment from Airborne LiDAR Data.” Remote Sensing 7 (8): 9682–9704. doi:10.3390/rs70809682.

- Haralick, R. M. 1979. “Statistical and Structural Approaches to Texture.” Proceedings of the IEEE 67 (5): 786–804. doi:10.1109/proc.1979.11328.

- Huang, X., D. Wen, J. Li, and R. Qin. 2017. “Multi-level Monitoring of Subtle Urban Changes for the Megacities of China Using High-resolution Multi-view Satellite Imagery.” Remote Sensing of Environment 196: 56–75. doi:10.1016/j.rse.2017.05.001.

- Hung, C., L. James, and M. Hodgson. 2018. “An Automated Algorithm for Mapping Building Impervious Areas from Airborne LiDAR Point-cloud Data for Flood Hydrology.” GIScience & Remote Sensing 55 (6): 793–816. doi:10.1080/15481603.2018.1452588.

- Kanda, M., T. Kawai, M. Kanega, R. Moriwaki, K. Narita, and A. Hagishima. 2005b. “A Simple Energy Balance Model for Regular Building Arrays.” Boundary-Layer Meteorology 116 (3): 423–443. doi:10.1007/s10546-004-7956-x.

- Kanda, M., T. Kawai, and K. Nakagawa. 2005a. “A Simple Theoretical Radiation Scheme for Regular Building Arrays.” Boundary-Layer Meteorology 114 (1): 71–90. doi:10.1007/s10546-004-8662-4.

- Kubota, T., M. Miura, Y. Tominaga, and A. Mochida. 2008. “Wind Tunnel Tests on the Relationship between Building Density and Pedestrian-level Wind Velocity: Development of Guidelines for Realizing Acceptable Wind Environment in Residential Neighborhoods.” Building and Environment 43 (10): 1699–1708. doi:10.1016/j.buildenv.2007.10.015.

- Lam, J. C. 2000. “Shading Effects Due to Nearby Buildings and Energy Implications.” Energy Conversion and Management 41 (7): 647–659. doi:10.1016/s0196-8904(99)00138-7.

- Lausch, A., T. Blaschke, D. Haase, F. Herzog, R.-U. Syrbe, L. Tischendorf, et al. 2015. “Understanding and Quantifying Landscape Structure – A Review on Relevant Process Characteristics, Data Models and Landscape Metrics.” Ecological Modelling 295: 31–41. doi:10.1016/j.ecolmodel.2014.08.018.

- Lin, C. H., J. Y. Chen, P. L. Su, and C. H. Chen. 2014. “Eigen-feature Analysis of Weighted Covariance Matrices for LiDAR Point Cloud Classification.” ISPRS Journal of Photogrammetry and Remote Sensing 94: 70–79. doi:10.1016/j.isprsjprs.2014.04.016.

- Liu, C., B. Shi, X. Yang, N. Li, and H. Wu. 2013. “Automatic Buildings Extraction from LiDAR Data in Urban Area by Neural Oscillator Network of Visual Cortex.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 6 (4): 2008–2019. doi:10.1109/jstars.2012.2234726.

- Liu, M., Y. M. Hu, and C. L. Li. 2017. “Landscape Metrics for Three-dimensional Urban Building Pattern Recognition.” Applied Geography 87: 66–72. doi:10.1016/j.apgeog.2017.07.011.

- McGarigal, K., and B. J. Marks. 1995. “Spatial Pattern Analysis Program for Quantifying Landscape Structure.” Gen. Tech. Rep. PNW-GTR-351. US Department of Agriculture, Forest Service, Pacific Northwest Research Station. doi:10.2737/pnw-gtr-351.

- Meng, X., L. Wang, and N. Currit. 2009. “Morphology-based Building Detection from Airborne LiDAR Data.” Photogrammetric Engineering & Remote Sensing 75 (4): 437–442. doi:10.14358/pers.75.4.437.

- Mills, G. 1997. “Building Density and Interior Building Temperatures: A Physical Modelling Experiment.” Physical Geography 18 (3): 195–214. doi:10.1080/02723646.1997.10642616.

- Murakami, H., K. Nakagawa, H. Hasegawa, T. Shibata, and E. Iwanami. 1999. “Change Detection of Buildings Using an Airborne Laser Scanner.” ISPRS Journal of Photogrammetry and Remote Sensing 54 (2): 148–152. doi:10.1016/s0924-2716(99)00006-4.

- Niemeyer, J., F. Rottensteiner, and U. Soergel. 2014. “Contextual Classification of LiDAR Data and Building Object Detection in Urban Areas.” ISPRS Journal of Photogrammetry and Remote Sensing 87: 152–165. doi:10.1016/j.isprsjprs.2013.11.001.

- NYCDITT. 2019. “2017 ALS data.” Accessed February 1. http://gis.ny.gov/elevation/lidar-coverage.htm

- NYITS. 2019. “High-resolution Orthophotos of Red, Green, Blue, and Near-infrared Bands.” Accessed 1 February 2020. http://gis.ny.gov/gateway/mg/metadata.cfm

- Pang, S., H. Xiangyun, W. Zizheng, and L. Yihui. 2014. “Object-based Analysis of Airborne Lidar Data for Building Change Detection.” Remote Sensing 6 (11): 10733–10749. doi:10.3390/rs61110733.

- Parmehr, E. G., C. S. Fraser, C. Zhang, and J. Leach. 2014. “Automatic Registration of Optical Imagery with 3D LiDAR Data Using Statistical Similarity.” ISPRS Journal of Photogrammetry and Remote Sensing 88: 28–40. doi:10.1016/j.isprsjprs.2013.11.015.

- Qi, M., L. Jiang, Y. Liu, Q. Xiong, C. Sun, X. Li, W. Zhao, and X. Yang. 2018. “Analysis of the Characteristics and Sources of Carbonaceous Aerosols in PM2.5 in the Beijing, Tianjin, and Langfang Region, China.” International Journal of Environmental Research and Public Health 15 (7): 1483. doi:10.3390/ijerph15071483.

- Qin, R., J. Tian, and P. Reinartz. 2016. “3D Change Detection: Approaches and Applications.” ISPRS Journal of Photogrammetry and Remote Sensing 122: 41–56. doi:10.5220/0007260607600767.

- Rapidlasso. 2020. “LAStools Introduction.” Accessed 1 March 2020. https://rapidlasso.com/lastools/lasground/

- Robinson, D. 2006. “Urban Morphology and Indicators of Radiation Availability.” Solar Energy 80 (12): 1643–1648. doi:10.1016/j.solener.2006.01.007.

- Rottensteiner, F., G. Sohn, M. Gerke, J. D. Wegner, U. Breitkopf, and J. Jung. 2014. “Results of the ISPRS Benchmark on Urban Object Detection and 3D Building Reconstruction.” ISPRS Journal of Photogrammetry and Remote Sensing 93: 256–271. doi:10.1016/j.isprsjprs.2013.10.004.

- Rusu, R. B., Z. C. Marton, N. Blodow, M. Dolha, and M. Beetz. 2008. “Towards 3D Point Cloud Based Object Maps for Household Environment.” Robotics and Autonomous Systems 56 (11): 927–941. doi:10.1016/j.robot.2008.08.005.

- Rutzinger, M., F. Rottensteiner, and N. Pfeifer. 2009. “A Comparison of Evaluation Techniques for Building Extraction from Airborne Laser Scanning.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2 (1): 11–20. doi:10.1109/JSTARS.2009.2012488.

- Siddiqui, F., S. Teng, G. Lu, and M. Awrangjeb. 2020. “Extraction of Buildings below the Height Threshold from Remotely Sensed Data.” Accessed 1 March 2020. http://ftp.ipi.uni-hannover.de/ISPRS_WGIII_website/ISPRSIII_4_Test_results/papers/MON4.pdf

- Smith, R. M. 1986. “Comparing Traditional Methods for Selecting Class Intervals on Choropleth Maps.” The Professional Geographer 38 (1): 62–67. doi:10.1111/j.0033-0124.1986.00062.x.

- Streutker, D. R. 2003. “Satellite-measured Growth of the Urban Heat Island of Houston, Texas.” Remote Sensing of Environment 85 (3): 282–289. doi:10.1016/S0034-4257(03)00007-5.

- Sundell-Turner, N. M., and A. D. Rodewald. 2008. “A Comparison of Landscape Metrics for Conservation Planning.” Landscape and Urban Planning 86: 219–225. doi:10.1016/j.landurbplan.2008.03.001.

- Susaki, J., M. Kajimoto, and M. Kishimoto. 2014. “Urban Density Mapping of Global Megacities from Polarimetric SAR Images.” Remote Sensing of Environment 155: 334–348. doi:10.1016/j.rse.2014.09.006.

- Tian, J., S. Cui, and P. Reinartz. 2014. “Building Change Detection Based on Satellite Stereo Imagery and Digital Surface Models.” IEEE Transactions on Geoscience and Remote Sensing 52 (1): 406–417. doi:10.1109/TGRS.2013.2240692.

- Tian, J., P. Reinartz, P. d’Angelo, and M. Ehlers. 2013. “Region-based Automatic Building and Forest Change Detection on Cartosat-1 Stereo Imagery.” ISPRS Journal of Photogrammetry and Remote Sensing 79: 226–239. doi:10.1016/j.isprsjprs.2013.02.017.

- Tomljenovic, I., B. Höfle, D. Tiede, and T. Blaschke. 2015. “Building Extraction from Airborne Laser Scanning Data: An Analysis of the State of the Art.” Remote Sensing 7 (4): 3826–3862. doi:10.3390/rs70403826.

- Yang, B. 2020. “Procedure of Building Extraction.” Accessed 1 March 2020. http://ftp.ipi.uni-hannover.de/ISPRS_WGIII_website/ISPRSIII_4_Test_results/papers/BuildingExtraction_WHU_YD.pdf

- Yang, B., W. Xu, and Z. Dong. 2013. “Automated Extraction of Building Outlines from Airborne Laser Scanning Point Clouds.” IEEE Geoscience and Remote Sensing Letters 10 (6): 1399–1403. doi:10.1109/LGRS.2013.2258887.

- Yang, J., M. S. Wong, M. Menenti, and J. Nichol. 2015. “Study of the Geometry Effect on Land Surface Temperature Retrieval in Urban Environment.” ISPRS Journal of Photogrammetry and Remote Sensing 109: 77–87. doi:10.1016/j.isprsjprs.2015.09.001.

- Yu, B., H. Liu, J. Wu, Y. Hu, and L. Zhang. 2010. “Automated Derivation of Urban Building Density Information Using Airborne Lidar Data and Object-based Method.” Landscape and Urban Planning 98 (3–4): 210–219. doi:10.1016/j.landurbplan.2010.08.004.

- Zakšek, K., K. Oštir, and Ž. Kokalj. 2011. “Sky-view Factor as a Relief Visualization Technique.” Remote Sensing 3 (2): 398–415. doi:10.3390/rs3020398.

- Zarea, A., and A. Mohammadzadeh. 2016. “A Novel Building and Tree Detection Method from LiDAR Data and Aerial Images.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 9 (5): 1864–1875. doi:10.1109/JSTARS.2015.2470547.

- Zheng, Y., and Q. Weng. 2019. “Modeling the Effect of Climate Change on Building Energy Demand in Los Angeles County by Using a GIS-based High Spatial- and Temporal-resolution Approach.” Energy 176: 641–655. doi:10.1016/j.energy.2019.04.052.

- Zhou, Q. Y., and U. Neumann. 2008. “Fast and Extensible Building Modeling from Airborne LiDAR Data.” In Proceedings of the 16th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems. ACM GIS 2008 (on CD-ROM), Irvine, CA, USA.