ABSTRACT

In this White Paper, we outline recommendations from the perspective of health psychology and behavioural science, addressing three research gaps: (1) What methods in the health psychology research toolkit can be best used for developing and evaluating digital health tools? (2) What are the most feasible strategies to reuse digital health tools across populations and settings? (3) What are the main advantages and challenges of sharing (openly publishing) data, code, intervention content and design features of digital health tools? We provide actionable suggestions for researchers joining the continuously growing Open Digital Health movement, which is poised to revolutionise health psychology research and practice in the coming years. This White Paper is situated in the current context of the COVID-19 pandemic and explores how digital health tools rapidly gained popularity in 2020–2022, when health promotion and treatment efforts worldwide shifted from face-to-face to remote delivery. This statement was written by the Directors of the not-for-profit Open Digital Health initiative (n = 6), experts attending the European Health Psychology Society Synergy Expert Meeting (n = 17), and the initiative consultant, following a two-day meeting (19–20 August 2021).

Introduction

Digital health tools, defined here as technology solutions (e.g., computers, phones, wearables, virtual reality) that deliver health interventions, are increasingly used to promote health and wellbeing across different populations and settings (World Health Organization, 2018). Digital health tools can improve disease prevention and healthcare delivery (Auerbach, 2019). The growing popularity of mobile and wearable devices adds to their potential to deliver effective health promotion interventions and to support health behaviour change (Arigo et al., 2019; Burchartz et al., 2020). The potential of digital health tools to revolutionise health promotion and healthcare delivery is widely recognised by healthcare providers across the globe, and national and international policy efforts focus on stimulating digital health innovation and regulation (e.g., the EU’s eHealth policies and the UK National Institute for Health and Care Excellence’s Evidence Standards Framework for Digital Health Technologies).

The increased use of digital health tools has been particularly notable during the COVID-19 pandemic (Scott et al., 2020), a period characterised by limited in-person healthcare interactions. The pandemic has further emphasised the urgency of a digital health revolution, with digital health tools acting as potentially powerful means to alleviate pressure on existing health and social care systems (Fagherazzi et al., 2020; Gunasekeran et al., 2021). However, the pandemic has also highlighted issues of health equity in general (Lyles et al., 2021), and digital health equity in particular (Crawford & Serhal, 2020).

Notwithstanding the potential of digital health tools, key challenges remain to their rapid evaluation and scalability. Although some tools are effective, their robust evaluation and successful implementation remain limited to specific geographical settings and disease areas (Gordon et al., 2020; Schreiweis et al., 2019). Consequently, digital health tools have yet to make a significant and sustained impact on health and wellbeing at a large scale, and have yet to be applied equitably across populations and settings with differing needs (Brewer et al., 2020; Rodriguez et al., 2020).

To achieve their full potential, digital health tools need to be evaluated using appropriate study designs, integrated into healthcare systems, and effectively scaled. A comprehensive platform is also needed to openly share evidence-based digital health tools and the associated evidence. This White Paper addresses three key gaps in the literature pertaining to digital health provision: (1) effective, fit-for-purpose evaluation of digital health tools is often lacking; (2) digital health tools are not effectively reused and applied across populations and settings, which results in wasted resources and in limited documentation and use of key learnings across users, providers and regulators; and (3) the main advantages and challenges of sharing data, code, intervention content, and design features of digital health tools need to be acknowledged when building an open digital health platform. In the following sections, we address these three core knowledge gaps and make suggestions for future improvements in each area. Open Digital Health (ODH) is defined as a movement to make digital health tools open, scalable and accessible to all. ODH is also a registered not-for-profit organisation that aims to encourage health scientists, practitioners, and technology developers to share evidence-based digital health tools.

(1) What methods in the health psychology research toolkit can be best used for developing and evaluating digital health tools?

Digital health tools are abundant on digital marketplaces; however, only a fraction of them have been rigorously evaluated in clinical trials (Zhao et al., 2016). Although early iterations of evidence standards frameworks for digital health tools are available from institutions such as the European Commission, the UK Medicines and Healthcare products Regulatory Agency, and the US Food and Drug Administration, relatively few digital health tools have undergone rigorous evaluation. To illustrate, in 2017, only 0.18% of 325,000 published health apps on consumer platforms (roughly 585 apps) had undergone formative scientific evaluation (IQVIA Institute, 2017). In addition, user engagement with health apps tends to be suboptimal: it has been estimated that 25% of health apps are used only once by each user and that fewer than 10% of users continue to engage seven days after download (Appboy, 2016).

First, conventional randomised controlled trials (RCTs) are regarded as the gold-standard evaluation method because they allow the efficacy and effectiveness of digital health tools to be assessed (van Beurden et al., 2019; Wunsch et al., 2020). However, RCTs are not always fit for purpose in the context of dynamically changing digital health tools, and alternative evaluation methods are needed (Ainsworth et al., 2021; Knox et al., 2021; Smit et al., 2021). The lengthy and costly nature of RCTs, along with their typical requirement that interventions remain static throughout a trial, often conflicts with the intrinsic qualities of digital health tools, including their rapid and iterative development and relatively fast real-world implementation. Also, a traditional RCT (intervention versus wait-list control) does not allow researchers to easily distil which components of a multicomponent intervention are causing the behaviour change (Peters et al., 2015). Therefore, we need to supplement and expand the toolbox of evaluation methods with approaches that can capture how digital health tools change over time. Additionally, we need to use and expand strategies that address the lengthy and costly nature of RCTs (Riley et al., 2013). Digital health tools can themselves help here, as they offer opportunities such as randomisation to different versions of a tool or rapid recruitment through channels outside healthcare and academia, including social media platforms.

Second, digital health tools are typically used by individuals but evaluated in between-group study designs (e.g., conventional parallel-arm RCTs). In such designs, researchers investigate average intervention effects in the group assigned to use the digital health tool compared with the group that was not. As digital health tools need to meet the needs of individuals, it is plausible that they need to be tailored to the individual to maximise effectiveness (Alqahtani & Orji, 2020), and that they need to account for individual differences in user preferences to promote acceptability and user engagement (König et al., 2021; Nurmi et al., 2020; Torous et al., 2018). Therefore, evaluating digital health tools mainly through between-group study designs that focus on average treatment effects is not always informative. Between-group effects cannot tell us for whom the tool is effective, what influences engagement with it, and, consequently, whether it succeeds in producing short-, medium- and long-term health improvements. In other words, alternative methods might be more useful for answering different research questions (e.g., about within-person changes or tool feasibility).

We therefore argue that several innovative frameworks, research designs and accompanying statistical methods are more closely aligned with the iterative nature of digital health tools. Some of these are beginning to be successfully applied in behavioural science, including the Multiphase Optimisation Strategy (MOST) framework (Collins, 2018; Guastaferro & Collins, 2019), Plan-Do-Check-Act (PDCA) cycles (Isniah et al., 2020), factorial trials (Kahan et al., 2020), Micro-Randomised Trials (MRTs) (Dimairo et al., 2020), N-of-1 trials (Kwasnicka et al., 2019; Vohra et al., 2015), Sequential Multiple Assignment Randomised Trials (SMARTs) (Almirall et al., 2014; Collins et al., 2007), and Rapid Optimisation Methods (Morton et al., 2021). However, these designs remain heavily underutilised (McCallum et al., 2018; Pham et al., 2016), mainly because only a limited number of digital health researchers have the necessary access, skills and understanding of when and how best to apply them. Encouragingly, accessible guidelines and training materials are becoming increasingly available (Dohle & Hofmann, 2018; Kwasnicka et al., 2019; Kwasnicka & Naughton, 2019; Liao et al., 2016; Wyrick et al., 2014). Nevertheless, key stakeholders (e.g., funders, reviewers, and regulators) often expect digital health tools to be evaluated in large-scale, parallel-arm RCTs, even though such trials are not always fit for purpose.
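As an illustration of one of the designs listed above, the following sketch allocates participants to the cells of a full 2×2×2 factorial experiment, as might be used in the screening phase of MOST to estimate the effect of each component of a multicomponent tool. The component names are hypothetical, and block randomisation with a fixed seed is one reasonable choice among several; it is not taken from the cited studies.

```python
import itertools
import random

# Hypothetical intervention components, each switched on (True) or off (False).
COMPONENTS = ("reminders", "goal_setting", "feedback")

# All 2^3 = 8 experimental conditions of the full factorial design.
CONDITIONS = [
    dict(zip(COMPONENTS, levels))
    for levels in itertools.product((False, True), repeat=len(COMPONENTS))
]


def allocate(participant_ids, seed=2021):
    """Block-randomise participants so that each block of 8 fills every condition once."""
    rng = random.Random(seed)  # fixed seed makes the allocation reproducible
    allocation = {}
    block = []
    for pid in participant_ids:
        if not block:
            # Start a new block: a shuffled copy of all conditions.
            block = CONDITIONS.copy()
            rng.shuffle(block)
        # Copy the dict so callers cannot accidentally mutate the shared template.
        allocation[pid] = dict(block.pop())
    return allocation
```

For example, `allocate([f"P{i:03d}" for i in range(16)])` assigns two participants to each of the eight conditions. Because every participant receives some combination of components, each component's main effect can be estimated by comparing everyone with that component switched on against everyone with it switched off, which is what makes factorial designs efficient for screening components.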

Recommendation 1: We recommend that digital health researchers use a variety of research methods to design, evaluate and support the effective implementation of digital health tools.

Recommendation 2: We also recommend that digital health tools are more frequently evaluated through within-person (as opposed to between-group) study designs in order to advance our understanding of how to best tailor them to individuals.
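To illustrate the kind of within-person design recommended above, the sketch below generates a randomised schedule for a single participant in an N-of-1 trial: the study is divided into pairs of periods, and within each pair the order of the intervention condition (A) and the comparison condition (B) is randomised. It then estimates that participant's own effect as the mean A minus B difference across pairs. The period structure, the outcome summary per period (e.g., mean daily step count) and the simple paired-difference estimate are assumptions for illustration; real N-of-1 analyses often also need to account for washout, carry-over and autocorrelation.

```python
import random
from statistics import mean


def n_of_1_schedule(n_pairs, seed=None):
    """Return a list of conditions ('A' or 'B'), randomising the order within each pair."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_pairs):
        pair = ["A", "B"]
        rng.shuffle(pair)
        schedule.extend(pair)
    return schedule


def within_person_effect(schedule, outcomes):
    """Mean of the (A - B) differences across pairs for one participant.

    `outcomes[i]` is the participant's outcome summary for period i.
    """
    if len(schedule) != len(outcomes) or len(schedule) % 2:
        raise ValueError("need one outcome per period and complete pairs")
    diffs = []
    for i in range(0, len(schedule), 2):
        # Map each condition in the pair to its observed outcome.
        pair = dict(zip(schedule[i:i + 2], outcomes[i:i + 2]))
        diffs.append(pair["A"] - pair["B"])
    return mean(diffs)
```

With the toy schedule A, B, B, A and illustrative period means of 10, 6, 5 and 9, both pairs give a difference of 4, so `within_person_effect` returns 4: this person scored 4 units higher, on average, in intervention periods.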

(2) What are the most feasible strategies to reuse digital health tools across populations and settings?

That digital health tools are typically not reused and reapplied across populations and settings drastically limits the health and societal impact of international digital health developments. In practice, duplicating efforts and developing new tools from scratch often wastes time and resources (e.g., re-creating feedback algorithms, persuasive design elements or tailored content). This duplication is particularly wasteful for digital health tools, because a key advantage of such tools is their potential for reuse, either as a whole or in part. They could be designed (but currently rarely are) to be modular in structure, with source code, content, and mechanisms that can be easily shared and modified under appropriate licensing provisions.

Despite their potential to be evaluated and implemented across different populations and settings, digital health tools – like most interventions – are still typically evaluated in specific contexts only (Mathews et al., 2019), i.e., in a specific country or region, within a specific healthcare system and/or with a small subgroup of patients. Additionally, none of the extant curated health app portals and repositories (e.g., the UK National Health Service’s (NHS) Apps Library) include direct links to the underlying evidence and/or well-defined descriptions of the contexts in which the tools were developed and evaluated (e.g., using the TIDieR checklist; Hoffmann et al., 2014). As a result, we lack an understanding of the generalisability or applicability of digital health tools to different contexts.

For developers, researchers, clinicians, funders, and users, it is therefore currently impossible to assess which of the myriad of available digital health tools is appropriate for a specific health need. Consequently, many developers and researchers choose to build a new tool from scratch, requiring further costly evaluation, thus resulting in a highly fragmented digital health ecosystem with poorly validated and often not scalable digital health tools.

To address these issues, the not-for-profit Open Digital Health initiative (www.opendigitalhealth.org) was established with the aim of giving digital health tools ‘a second life’ by promoting the reuse of existing evidence-based tools (or their components) and their replication and application across different populations and settings. We expect that this will ultimately help reduce costs and increase the reach of existing digital health tools. In doing so, we aim to systematise, better integrate and scale existing digital health tools nationally and internationally, and to create a community of researchers, developers and practitioners who advocate for, and benefit from, open digital health tools.

The Open Digital Health initiative aims to target issues associated with appropriate and systematic description, evaluation, reuse and scaling of digital health tools. To achieve these aims, the following underlying issues must be addressed: (1) establishing a shared language; (2) sharing the resources effectively; and (3) defining best practices.

(1) Establishing a shared language

We need a common language across the scientific disciplines involved in the development, evaluation and implementation of digital health tools to better describe the contexts in which they have been evaluated. Digital health requires synergy between healthcare providers and practitioners; health, behavioural, and Information and Communication Technology (ICT) scientists; national and international funders; public health experts and policy makers; industry/business partners; start-ups and non-governmental organisations (NGOs); and consumers/end-users (Tran Ngoc et al., 2018). We currently lack a common understanding and language (or ‘standardised descriptors’) for outlining the context in which evidence has been or needs to be generated, and few people have the skills and experience required to bridge these disciplines.

Recommendation 3: We recommend the development of shared definitions of context in which digital health tools were tested and applied.
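One way to make such shared definitions concrete is a structured, machine-readable context descriptor that accompanies each evaluated tool and can be searched by others. The sketch below is hypothetical: the field names are loosely inspired by reporting checklists such as TIDieR (where, how and for whom an intervention was delivered) but are not an agreed standard, which is precisely what Recommendation 3 calls for developing.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class ContextDescriptor:
    """Hypothetical record of the context in which a digital health tool was evaluated."""

    tool_name: str
    country: str
    healthcare_setting: str  # e.g. "primary", "secondary", "tertiary", "community"
    population: str          # e.g. "adults with type 2 diabetes"
    delivery_mode: str       # e.g. "smartphone app", "website", "wearable"
    languages: List[str] = field(default_factory=list)
    study_design: str = "unspecified"   # e.g. "parallel-arm RCT", "N-of-1", "MRT"
    evidence_doi: Optional[str] = None  # DOI of the peer-reviewed evaluation, if any

    def to_json(self) -> str:
        """Serialise the descriptor so it can be published alongside the tool."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


def matches(descriptor: ContextDescriptor, **criteria) -> bool:
    """True if every requested field has exactly the requested value."""
    return all(getattr(descriptor, key) == value for key, value in criteria.items())
```

A repository could then filter its catalogue with, for instance, `matches(d, country="NL", healthcare_setting="primary")` to find tools already evaluated in a comparable context before deciding to build a new one.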

(2) Sharing the resources effectively

Digital health tools are typically validated in specific contexts: in a specific geographic region, with a specific population and/or in a specific healthcare setting (e.g., primary, secondary or tertiary care). This is not a problem in itself and is often beneficial for addressing local issues, but the way in which data are currently generated and presented makes it difficult to predict how a digital health tool may perform in a new setting or population. Digital health tools serving the same purpose are continually reinvented in different contexts and settings. A more resource-efficient practice would be to build on each other’s successes and develop digital health solutions based on previously developed ones. We acknowledge that structural changes are needed (e.g., changes to incentive structures and a move away from the ‘publish or perish’ culture at universities and research institutes) to encourage the adoption and maintenance of open science practices, including the reuse of open digital health tools (O’Connor, 2021).

Recommendation 4: We recommend that, based on an in-depth understanding of behavioural science, appropriate digital health solutions be reused across populations and settings, and that their data, code, intervention content, and design features be made openly available and shared.

(3) Establishing a platform and best practices for sharing (and peer review) of evidence-based digital health tools

Dedicated platforms for sharing source code and data exist (e.g., the Open Science Framework, OSF [https://osf.io/], and GitHub [https://github.com/]), but they do not present these resources together with relevant (peer-reviewed) evidence and standardised context descriptors. Platforms such as Qeios (www.qeios.com) are built to facilitate this, but they are more general (i.e., a preprint server for scientific articles with open peer review and the ability to link to available descriptors). Condition-specific platforms also exist (e.g., www.onemindpsyberguide.org, which helps people navigate the myriad of available mental health apps), but they do not provide a comprehensive assessment of digital health tools across several mental and physical health domains.

In the UK, ‘app library’ repositories (e.g., ORCHA; O’Connor, 2021) include large bespoke reviews and evaluations of digital health tools. Through commercial collaborations with healthcare organisations, such repositories can support implementation. However, the specific outcomes on which evaluations are based vary substantially (including clinical assurance, data privacy, usability and regulatory approval), and the degree to which such evaluations validate clinical effectiveness can be unclear. Progress in this area is hampered by a lack of clear guidelines for users and healthcare professionals on how to select the most appropriate tools for varying implementation pathways, and for researchers and developers on how to establish intellectual property (IP) and licensing agreements.

Recommendation 5: We recommend the development and maintenance of an open platform that supports the sharing of digital health tools (together with relevant evidence).

Many issues related to interoperability (Crutzen, 2021; Lehne et al., 2019), privacy (Zegers et al., 2021), data protection (Crutzen et al., 2019) and security (Filkins et al., 2016) remain to be addressed. The Open Digital Health initiative does not aim to resolve all of these, as our main goal is to create a network of stakeholders who can benefit from sharing digital health tools and from applying and testing them across different populations and settings. To accelerate the digital revolution in health settings, the initiative aims to develop consensus on context-specific descriptors and evaluation methods, define quality standards for scalable validation, connect researchers, and establish means of sharing evidence-based components and tools for easy reuse and adaptation in new settings. The Open Digital Health initiative aims to set the standards and provide the methods and practices necessary for the next generation of digital health tools to build effectively on each other, thus increasing their quality and impact.

To be effective, this initiative will need to mobilise a critical mass and engender change by facilitating open innovation, open science and synergy among professionals across sectors (e.g., academia, healthcare, industry), disciplines (e.g., ICT, medicine, health sciences, behavioural sciences, psychology, public health), and countries. Stakeholders from 51 countries are currently involved in this initiative, with good representation of countries with diverse income levels and stages of digital health tool provision and use. We aim to co-create the principles and educate developers and researchers on how to effectively and reliably share their outputs (e.g., algorithms, source code, data, intervention content) so that they can be reused elsewhere. Rather than reinventing the wheel across the world, researchers will be able to identify trusted components that benefit their intervention and quickly translate these to their specific context, thus increasing the overall quality and decreasing the cost of digital health development and evaluation.

The incentives for scientists to join this initiative are to learn from each other, build on each other’s successes and failures, and save time and money by working collaboratively on scalable, evidence-based digital health tools. Three behavioural science societies are already affiliated with Open Digital Health, namely the European Health Psychology Society, the Society of Behavioural Medicine, and the International Society of Behavioural Nutrition and Physical Activity, and we aim to expand our affiliations and collaborations with relevant societies, academia, and industry. For commercial partners, the incentive is the opportunity to partner with highly skilled researchers who can help validate their tools and gather feedback on how to improve their products. For patients, consumers, practitioners and non-governmental organisations, the key incentive will be to help enact coordinated change in the digital health field, which will improve population health. Policy-makers, regulators and funders will benefit from systematic and coordinated efforts to describe, evaluate, optimise and scale digital health tools across different populations and settings.

Sharing (openly publishing) data, code, intervention content, and design features of digital health tools comes with both advantages and challenges. Below, we highlight the main advantages and challenges (and potential solutions) of sharing each of them, as discussed and elaborated on during the European Health Psychology Society Synergy Expert Meeting, hosted online in 2021. The meeting brought together the Directors of the not-for-profit Open Digital Health initiative (n = 6) and experts attending the meeting (behavioural scientists with a broad range of expertise and experience in digital health research; n = 17). Open Digital Health’s main mission is to share evidence-based digital health tools.

(3) What are the main advantages and barriers/challenges of sharing (openly publishing) data, code, intervention content and design features of digital health tools?

1. Openly publishing data – main advantages and barriers/challenges

When conducting an experimental or observational study, researchers collect individual-level data on important baseline characteristics (e.g., age, gender, country of residence, educational attainment, level of motivation) and time-varying outcomes such as user engagement (e.g., the number of logins, time spent on a digital health tool), symptoms (e.g., pain, fatigue), biomarkers (e.g., blood glucose levels) and behaviours (e.g., physical activity, cigarettes smoked). The key advantages of changing the status quo and publishing these data include error detection and scrutiny (Peters et al., 2012); encouraging accurate data collection and labelling practices (i.e., knowing that others will reuse the data encourages researchers to spend more time on labelling and recording variables); supporting the reproducibility of study results; and facilitating collaboration across research teams and data syntheses in meta-analyses. We also argue that data sharing is necessary for progressing behavioural science.

However, researchers and industry professionals typically do not publish datasets alongside peer reviewed articles or reports. Through discussion, we identified several important barriers to openly publishing data and suggestions for how to overcome these. First, the sharing of data requires time and effort up-front to ensure that studies are set up for this purpose at the outset. For example, researchers need to ensure that participant consent processes are in line with data sharing, and they need to spend time ensuring that data and meta-data are organised in such a way that others can easily reuse these without having to repeatedly contact the corresponding author/team lead. Furthermore, stakeholders such as ethics committees may not always be attuned to such requirements (although many institutions actively encourage the sharing of research data).
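As one illustration of organising data and meta-data for reuse, the sketch below writes a de-identified dataset to CSV together with a machine-readable codebook (a description and unit for each variable), so that others can interpret the variables without contacting the original team. The variable names and file layout are hypothetical, and such a script is no substitute for appropriate consent, ethics approval and anonymisation procedures.

```python
import csv
import json
from pathlib import Path

# Hypothetical codebook: one entry per variable in the shared dataset.
CODEBOOK = {
    "participant_id": {"description": "Pseudonymous study ID", "unit": None},
    "age": {"description": "Age at baseline", "unit": "years"},
    "logins_week1": {"description": "Number of app logins in week 1", "unit": "count"},
    "steps_day": {"description": "Mean daily step count", "unit": "steps/day"},
}


def export_dataset(rows, out_dir):
    """Write rows to data.csv and the codebook to codebook.json in out_dir.

    Raises ValueError if any row contains a variable missing from the codebook,
    so that undocumented variables are never shared by accident.
    """
    for row in rows:
        undocumented = set(row) - set(CODEBOOK)
        if undocumented:
            raise ValueError(f"undocumented variables: {sorted(undocumented)}")
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    with open(out / "data.csv", "w", newline="", encoding="utf-8") as f:
        # Column order follows the codebook; missing values are written as "".
        writer = csv.DictWriter(f, fieldnames=list(CODEBOOK))
        writer.writeheader()
        writer.writerows(rows)
    (out / "codebook.json").write_text(json.dumps(CODEBOOK, indent=2), encoding="utf-8")
    return out
```

Refusing to export undocumented variables is a deliberate design choice: it moves the documentation effort up front, which is where the text above locates the main cost of data sharing.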

Second, researchers may have questions about where to store data long-term (e.g., which platforms can safely be used?). Where possible, it may be useful to use an institutional repository (e.g., https://dataverse.nl/dataverse/tiu) to ensure longevity, as commercial services, even free ones, may change or be discontinued over time. There are also dedicated scientific journals for sharing datasets, e.g., Scientific Data (https://www.nature.com/sdata/) and Data in Brief (https://www.journals.elsevier.com/data-in-brief); however, the data and articles published there are often not open. Dataset owners need to be mindful of specific publishing and data licensing agreements, ensuring that the rights to the data remain with the author. Many of the challenges faced in sharing data can be addressed by making data FAIR, i.e., findable, accessible, interoperable, and reusable (Wilkinson et al., 2016).

Third, sharing data with an international community of researchers and practitioners highlights the importance of standardised questionnaires, as well as the language barriers that arise when translating them into different languages. For example, variables may need to be renamed if there is no equivalent in the language of interest, and researchers need to be wary that items may be interpreted differently depending on country or culture. Fourth, it may be particularly challenging to share data from in-depth qualitative work or N-of-1 studies, because the richness of the data makes anonymisation difficult.

Fifth, researchers and industry professionals may be worried about ‘scooping’ (i.e., other teams analysing the data and publishing findings) or about large industries, such as the tobacco or food industry, using the data to optimise marketing and sales. However, there are also clear benefits to multiple teams working collaboratively on the same dataset, as other teams are likely to bring expertise and complementary skills. Moreover, the reward structure for both scientists and participants needs to be revisited to reflect changing practices. For example, institutions, and possibly scientific societies and organisations, need to start rewarding open science practices so that researchers can prioritise them. Finally, we need to consider how to encourage data solidarity (i.e., sharing or donating one’s health-related data so that researchers and, eventually, other patients can benefit), and whether research participants should be appropriately rewarded for the continued use of their data (e.g., through additional remuneration for data reuse).

2. Openly publishing intervention content – main advantages and barriers/challenges

Each digital health tool has specific content that is delivered to the end user. This content (often delivered as text, pictures, audio or video) is typically used to change specific behavioural determinants (e.g., attitudes) or to prompt actions. Some of this content is freely available, whereas some can only be accessed after payment or registration. Placing digital health tool content behind such a barrier (a paywall or registration requirement) has both advantages and disadvantages.

The main motivations for sharing content are often altruistic, namely the greater good and full transparency. When content is openly shared, other researchers or developers (but also patients and clinicians) do not have to spend extra time and/or resources creating content that is already available and has been found to be functional and usable. This may aid future (direct) replications in different cultures and contexts, advancing the field further and faster (Hekler et al., 2016). Sharing content not only prompts developers to think more critically about the products they share, but also helps to establish which components, and which operationalisations of content, affect results.

However, sharing content is not always easy, and several barriers exist. Some scientists and developers are opposed to open sharing due to intellectual property concerns. Additionally, making content readable, understandable and usable by third parties is time-consuming, and even if content is shared perfectly, developers still run the risk that it is taken out of context, that competitive advantage (and with it, revenue) is lost, or that credit is not given (or is misappropriated). Therefore, to save time and to overcome ownership issues, we suggest standardised databases that offer open licence options (e.g., Creative Commons licences, which make the use of shared content more or less permissive or restrictive).

3. Openly publishing code – main advantages and barriers/challenges

There are now repositories available for developers of digital health tools to openly share their source code (e.g., https://github.com). Openly publishing the code of complex digital health tools can have many advantages, including minimising research (and resource) waste, working collaboratively, and personalisation of existing tools to new user groups and settings. An inventory of openly accessible source code provides designers with a useful starting point for developing their own digital health tools. Good documentation and instructions for future coders can further facilitate the reuse, adaptation or improvement of existing tools. This will help reduce development costs and enable more fine-grained optimisation of existing tools. Openly publishing source code can also facilitate interdisciplinary collaborations between behavioural experts, programmers and members of the public. For example, in the Netherlands, programmers openly shared the code for a national COVID-19 track and trace app (i.e., CoronaMelder). This project applied participatory design to involve members of the public in the design of the app. The project highlighted the need for a robust governing structure to monitor and approve any changes to the app. Lastly, open sharing of source code enables the adaptation of existing tools to new users and settings by providing other teams with a baseline from which they can advance the development of a tool.

Potential challenges to sharing code openly include the complexity of code and programming languages, questions of sustainability and maintainability, the risk of data breaches, and ethical and intellectual property concerns (including the potential need for industry partners to remain profitable in order to implement effective interventions within healthcare settings). The complexity of openly published source code is one of the major challenges to the reuse of digital health tools. For source code to be sustainable and reusable, it needs to be well documented both internally (describing what the code does) and with respect to deployment (how to run it). Digital health tool developers could also consider using customisable, modular app templates that enable future users of the code to make adaptations or additions.
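The idea of a customisable modular template can be sketched as a small registry of interchangeable intervention components that share one interface, so that a team reusing the code can add, remove or replace a component (e.g., a translated or culturally adapted message) without modifying the rest of the tool. All names here (`Component`, `REGISTRY`, `register` and the example components) are hypothetical and do not describe any existing app framework.

```python
from typing import Callable, Dict, List, Protocol


class Component(Protocol):
    """Interface that every intervention component implements."""

    def run(self, user: dict) -> str: ...


# Maps a configuration name to the class implementing that component.
REGISTRY: Dict[str, Callable[[], Component]] = {}


def register(name: str):
    """Class decorator that makes a component available under `name`."""
    def decorator(cls):
        REGISTRY[name] = cls  # re-registering a name replaces the earlier component
        return cls
    return decorator


@register("reminder")
class Reminder:
    def run(self, user: dict) -> str:
        return f"Hi {user['name']}, time for your daily walk."


@register("feedback")
class StepFeedback:
    def run(self, user: dict) -> str:
        goal = user.get("step_goal", 8000)  # illustrative default goal
        return f"You walked {user['steps']} of {goal} steps today."


def build_session(component_names: List[str], user: dict) -> List[str]:
    """Run the configured components in order; configuration, not code, selects them."""
    return [REGISTRY[name]().run(user) for name in component_names]
```

A team adapting the tool for a new setting could, for instance, register its own translated reminder under the name `"reminder"`; `build_session` and the other components would not need to change, which is the property that makes modular code easier to reuse.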

Openly sharing source code bears the risk of viruses or harmful code being introduced if anyone is allowed to contribute to the code repository. One way of preventing this is to institute a well-designed contribution and code review policy, whereby a core team of developers accepts or rejects any changes to the source code. Ethical challenges include the risk that adaptations to the original source code could transform a digital health tool into (or out of) a medical device, which falls under strict regulations. Other challenges relate to intellectual property and the commercialisation of openly accessible source code. Information on licensing can be obtained from universities’ technology transfer offices or from freely available resources (e.g., https://choosealicense.com/). Furthermore, academics can archive their source code and assign it a Digital Object Identifier (DOI) (e.g., https://about.zenodo.org), and make it citable (https://citation-file-format.github.io/). Research software practices are evolving rapidly; for example, the FAIR for Research Software (FAIR4RS) working group is investigating how to apply the FAIR principles to research software (Katz et al., 2021).

4. Openly publishing design features – main advantages and barriers/challenges

Design features of digital health tools include the overall layout (including the typography and presentation of text), graphics, illustrations, and animations, which are often created by a professional (e.g., a user experience (UX) designer, who aims to make the experience of individuals using websites or applications as efficient and pleasurable as possible). Such features aim to improve the user experience and make the tool more interesting and entertaining, which in turn can improve users’ engagement and adherence (Yardley et al., 2015). Openly sharing design features of digital health tools comes with advantages, but also challenges.

A key advantage is that many design features (e.g., an avatar accompanying the user’s journey) can easily be used in, and transferred to, other countries, cultures, and contexts, as they are non-verbal and require no translation into another language. This would greatly benefit research groups, especially those without funding for a UX designer. Another advantage is that such design features can be adapted and improved over time. For instance, a research group could adopt an openly shared design template, adapt it to their context, share it openly again, and report how and why they adapted it. Future research groups would benefit from these openly shared reports on design features and would cite the authors of the original design feature (a win-win situation), so that no one would need to ‘reinvent the wheel’. In this scenario, close attention must be paid to copyright and to correctly citing the source of each design feature.

A key challenge is that differences between software and operating systems limit the reproducibility of shared digital health tools. For instance, design features may look different on iOS and Android devices, and again across versions of each operating system, as these allow for different design options. If an openly shared digital health tool was built with expensive software, access and reuse could be impeded for groups without funding to buy that software. Another challenge is that the interpretation and acceptability of design features vary across target groups (e.g., whether the avatar is a man or a woman could affect acceptability in different cultures), which should be considered when adapting an openly shared design feature. Empirical evidence from other fields, such as human–computer interaction, could be consulted to learn which design features work for which target groups (e.g., depending on their visualisation literacy, when designing visual representations of data for feedback) and for which types of data (e.g., individual- vs. group-level data, continuous vs. discrete data) (Epskamp et al., 2012; Meloncon & Warner, 2017).

As a way forward, guidelines or a checklist on how to share and report design features could support the creation of future design features. Contracts with, for example, UX designers ought to be explicit about the transfer of copyright to the service procurer. As mentioned above, Creative Commons licences (https://creativecommons.org/share-your-work/), for example, are easy-to-use copyright licences that provide a simple, standardised way to grant permission to share and use creative work on conditions of the creator’s choice (e.g., regarding attribution, commercial use, and sharing requirements).

The Open Digital Health Initiative – ways forward

Within the Open Digital Health initiative, we intend to explore the possibility of establishing a matching system to facilitate collaboration between researchers and industry partners, and to enable consumers and patients to join the initiative as influential, active participants who help co-create new, improved, context-specific methods and practices. Within this initiative, we foresee the development of a strategic agenda for the scalability of digital health tools across populations and settings. Our ultimate aim is to develop a comprehensive scalability framework, consisting of:

- An improved classification system (‘standardised descriptors’) for digital health tools (e.g., systematising them by population, setting, behaviour of interest, type of technology, level of openness, type of intervention modules, and functions that the underlying code provides), including a proposal for a quality rating system. To achieve this, we will draw on consensus methodology and build upon existing checklists, e.g., TIDieR (Hoffmann et al., 2014) and the mERA checklist (Agarwal et al., 2016), which provide a useful basis but lack sufficient depth, as they do not capture specific details about the setting of intervention delivery and users’ characteristics (see Note 3).

- An accessible toolbox of validation methods suited to digital health tools, which recognises that different types of digital health tools have different validation needs (Mathews et al., 2019).

- A set of best-practice guidelines for the sharing, adaptation and optimisation of digital health tools, also addressing legal concerns around licensing, intellectual property, and protection of the economic interests of funders and developers (Sharon, 2018).

To realise this strategic scalability agenda, we will take the following steps:

- Create awareness of the wider issues associated with the lack of scalability of digital health tools.

- Connect researchers across diverse scientific disciplines and existing networks to share best practices, and provide equal networking opportunities for researchers, professionals and practitioners across the world.

- Establish governing principles for the generation and presentation of high-quality, context-specific evidence within digital health (Sharma et al., 2018).

- Establish consensus on standardised descriptors for the context in which evidence of effectiveness of digital health tools was generated, the resulting efficacy, how this efficacy could be generalised to other contexts, and which local parameters are expected to affect implementation and performance.

- Establish a searchable, living database of evidence-based digital health tools that includes context-specific descriptors for easy and trusted translation to new contexts and provides a platform for peer review of digital health tools and their scalable components.

- Provide guidance and tools for implementing digital health tools in local contexts.

- Generate design rules and standard operating procedures for the development of the next generation of high-quality, evidence-based digital health tools.

- Investigate and develop better methods to capture context-specific evidence for the optimisation of digital health tools, and promote their uptake.
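To make the idea of standardised descriptors and a searchable database of tools more concrete, the Python sketch below shows one possible shape for a descriptor record and a simple exact-match search over a catalogue. The fields loosely follow the dimensions named for the classification system (population, setting, behaviour of interest, type of technology, level of openness, intervention modules), but the `ToolDescriptor` class, the `search` function and the example entries are hypothetical illustrations under our own assumptions, not an agreed schema of the initiative.

```python
from dataclasses import dataclass, fields
from typing import List, Tuple


@dataclass(frozen=True)
class ToolDescriptor:
    """Hypothetical 'standardised descriptor' record for one digital health tool."""
    name: str
    population: str                # target population, e.g. "adults"
    setting: str                   # delivery setting, e.g. "primary care"
    behaviour: str                 # behaviour of interest, e.g. "physical activity"
    technology: str                # e.g. "smartphone app", "website", "wearable"
    openness: str                  # e.g. "closed", "open content", "open source"
    modules: Tuple[str, ...] = ()  # intervention modules the tool contains


def search(catalogue: List[ToolDescriptor], **criteria: str) -> List[ToolDescriptor]:
    """Return the tools whose descriptor fields exactly match every criterion."""
    valid = {f.name for f in fields(ToolDescriptor)}
    unknown = set(criteria) - valid
    if unknown:
        # Reject descriptors that are not part of the (hypothetical) schema.
        raise ValueError(f"Unknown descriptor(s): {sorted(unknown)}")
    return [
        tool
        for tool in catalogue
        if all(getattr(tool, key) == value for key, value in criteria.items())
    ]


# Hypothetical example entries (not real tools).
catalogue = [
    ToolDescriptor("Tool A", "adults", "primary care", "physical activity",
                   "smartphone app", "open source", ("goal setting", "feedback")),
    ToolDescriptor("Tool B", "adolescents", "school", "smoking",
                   "website", "closed"),
]

if __name__ == "__main__":
    matches = search(catalogue, setting="primary care", openness="open source")
    print([tool.name for tool in matches])  # ['Tool A']
```

Exact matching is the simplest possible design; a living database would more plausibly need controlled vocabularies and partial or hierarchical matching (e.g., a query for "adults" also retrieving tools for "older adults"), which is precisely where consensus on descriptors becomes important.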

This initiative will build a multidisciplinary, cross-domain network of key stakeholders covering seven distinct domains that are required to realise scalable digital health: (1) basic and translational research in medicine and health sciences; (2) basic and translational research in psychology and behaviour; (3) ICT research and development; (4) industry (including small and medium-sized enterprises and digital health incubators); (5) public health and funders; (6) legal and regulatory experts; and (7) methodological and statistical experts.

This initiative will bring together relevant stakeholders to create a critical mass, including (but not limited to) stakeholders who: (a) develop digital health tools; (b) use digital health tools; (c) prescribe (clinicians) and pay for digital health tools (reimbursement agencies, public health agencies) and devise policies; (d) understand economic incentives; (e) develop, investigate and endorse novel applications of existing methods for (context-specific) evidence generation; and (f) develop enabling software or algorithms, including data platforms. By connecting these different stakeholder groups, we plan to build a network that is uniquely placed to scale up evidence-based digital health tools across different contexts.

Discussion

Digital health has become increasingly popular over the past two decades and has shown unprecedented growth (Labrique et al., 2018; Petersen, 2018). Yet its potential has still to materialise for patients, providers and society (Arigo et al., 2019). This growth has come with great fragmentation, inefficiency, and varying quality (Bhatt et al., 2017; Safavi et al., 2019). Now is the time to consolidate what we have learned, reorganise, and prepare for a next generation of digital health in which quality, openness, sharing and scalability are intrinsic to the way digital health operates (Kwasnicka et al., 2020). Other (international) initiatives focus on digital health in general, on specific health issues (e.g., tobacco smoking or energy-balance-related behaviours), specific sub-populations (e.g., adolescents or adults), specific sectors (academic or industrial) or health sub-sectors (e.g., primary care, secondary care). Existing initiatives also address specific challenges such as interoperability (Lehne et al., 2019), privacy (Filkins et al., 2016), and data protection and security (e.g., differential user permissions, data control at patient level) (Nebeker et al., 2019), but none, as far as we are aware, focuses on open, evidence-based and context-specific evaluation, implementation and scalability in the way described here.

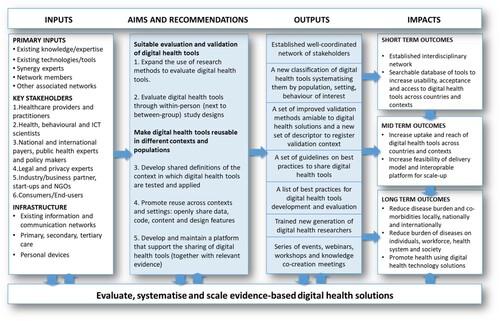

The key to realising the potentially sizeable societal impact of digital health lies in effective collaboration and in building upon each other’s successes (Hesse et al., 2021). This requires networking to mobilise a critical mass, and better means of sharing best practices in best-fitting contexts: ‘(i) when and where did a particular digital health intervention work, (ii) what was the context that it worked in and to what extent, and (iii) how did the particular context change efficacy?’. At present, we lack consensus on how to systematically describe that context. In the short term (1), the Open Digital Health initiative will build and strengthen the digital health community and create a platform for systematic indexing and peer review of available digital health tools. In the medium term (2), it will upskill researchers and professionals, and share knowledge across scientific disciplines and countries. In the long term (3), this initiative will develop joint agendas for the development and uptake of more effective and affordable validation methods that will further spur the impact of digital health at the population level (see the figure above).

Interdisciplinary work must be prioritised in real, tangible ways to effectively implement open digital health. Researchers should receive training and guidelines on how to connect with industry and how to form successful cross-sectoral partnerships between industry and academia (Austin et al., 2021). Many universities are gradually developing a culture of working with industry, and research partnership offices are being formed to enable science commercialisation and to help researchers network and form partnerships with relevant organisations. Open digital health will only be feasible if these partnerships are meaningful, maintained, and serve the shared goals of industry and academia.

The development of digital health tools need not be constrained by geographical boundaries. Yet digital health currently struggles to escape geographical and sectoral constraints, for the reasons described above. The involvement of a diverse network of partners (in terms of expertise, geography, culture, healthcare systems and innovation capacity) is crucial to developing an effective toolbox for the scalability of digital health tools. This works in two ways: first, our stakeholder groups will learn from best practices gathered from around the world, improving the quality of outputs; and second, through the direct involvement of international collaborators, we will ensure the outputs are relevant across a diverse range of countries, maximising further dissemination and scalability. Promoting and scaling open-source digital health tools has the potential to reduce health and social inequalities and to improve health internationally by building on each other’s successes and reusing effective digital health tools in new contexts, while ensuring that end-users are continuously involved and engaged in this work.

Socio-demographic characteristics such as age, education, income, perceived health and social isolation might also influence health literacy and access to digital health tools. However, the belief that such characteristics limit access, although commonly held, is not always accurate (Knox et al., 2022). Therefore, efforts to digitise health information and services and to popularise open digital health tools will need to consider the potential for a ‘digital divide’, and we strongly recommend investigating in each new context whether such a divide exists and requires a response. We need to acknowledge the variety of existing and potential users and their diverse, contextual needs (e.g., digital skills development) to enable people to use digital technology more effectively, especially in underserved and underprivileged communities (Ehrari et al., 2022; Estacio et al., 2019). The principles of digital inclusion and serving the underprivileged should also be reflected in future guidelines for technology developers and policymakers.

The appeal of digital health tools is evident: their relatively low cost allows for scalable, wide-reaching interventions, and their ubiquity creates the potential for widely accessible, preventative, personalised and data-driven solutions. Digital health tools are flexible and can be used wherever and whenever the patient needs them (Knox et al., 2021); they therefore have unique intervention potential compared with face-to-face programmes. This could increase the effectiveness of current interventions, make them more affordable and allow for new interventions. However, our scientific processes need to adapt to accommodate the fast-paced nature of digital health. To compete with industry-funded digital health solutions, the way digital health research is funded needs to change. As we have argued elsewhere, more funding should be assigned to the developmental stages of digital health research, which require iteration, with funding for testing the efficacy of tools in confirmatory trials allocated only when research indicates that key assumptions have been met (Kwasnicka et al., 2021).

In addition, digital health tools have the potential to mitigate health inequities by increasing access to healthcare for people who live remotely and for those who use traditional healthcare channels less often (or only in emergencies). Digital tools can help reduce long waiting lists for care (if people can be helped with online tools), and data-science approaches can improve prediction of which treatment will work for whom, allowing a more tailored approach. This could include options for special risk groups, such as patients from deprived communities. However, ethical and societal issues need to be considered during the development of digital tools. For example, we need to make sure that people with low digital literacy do not receive a lower standard of care.

Equally important, the convenience of digital health tools promotes patient engagement in the healthcare process and can hence help to improve adherence to behavioural or pharmacological treatments. Although there are still many challenges to overcome, digital health is expected to become a cornerstone of healthcare and can contribute to the sustainability of healthcare systems. Sharing digital health solutions openly will allow us to test and evaluate them across contexts and settings, continuously improving the science of behaviour change, testing theories, and generating new evidence in real life. The cost of this progress can also be minimised if it is based on the principles of collaboration and reuse.

Conclusions

In the longer term, digital health provides an opportunity to enhance health equity (Lyles et al., 2021). If digital health can indeed become scalable, the Open Digital Health initiative may help close existing gaps in health equity. Governments are attempting to systematise digital health efforts by looking for evidence-based tools that they can recommend to their healthcare providers and, subsequently, to patients. There is a need to provide digital health tools that facilitate health promotion and disease prevention; however, current efforts are fragmented and lack central coordination and multidisciplinary guidelines on best practices. What is missing is clear guidance on standards for these tools and on the best methods to evaluate their quality and effectiveness. The COVID-19 pandemic has further emphasised the need for a flexible and affordable healthcare system with a strong emphasis on prevention and remote healthcare delivery (Crawford & Serhal, 2020). Furthermore, most tools are developed and tested at a small scale and are not open source. Even though funding for evidence-based tools often comes from public sources, the resulting tools often do not benefit society at large. By addressing these issues, this initiative will help healthcare funders and governments realise the potential of their investments.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes

1 OSF is an online, free, open-source, public repository that connects and supports the research workflow, enabling researchers to collaborate, document, archive, share, and register research projects, materials, and data. OSF is the main product of the not-for-profit Center for Open Science.

2 GitHub is a website and cloud-based service that helps developers store and manage their code, and track and control changes to their code.

3 TIDieR (Hoffmann et al., 2014) is a template for intervention description and replication, and mERA (Agarwal et al., 2016) comprises a set of guidelines and a checklist for the reporting of mobile health interventions.

References

- Agarwal, S., LeFevre, A. E., Lee, J., L’Engle, K., Mehl, G., Sinha, C., & Labrique, A. (2016). Guidelines for reporting of health interventions using mobile phones: Mobile health (mHealth) evidence reporting and assessment (mERA) checklist. BMJ, 352, i1174. https://doi.org/10.1136/bmj.i1174

- Ainsworth, B., Miller, S., Denison-Day, J., Stuart, B., Groot, J., Rice, C., Bostock, J., Hu, X.-Y., Morton, K., Towler, L., Moore, M., Willcox, M., Chadborn, T., Gold, N., Amlôt, R., Little, P., & Yardley, L. (2021). Infection control behavior at home during the COVID-19 pandemic: Observational study of a web-based behavioral intervention (Germ Defence). Journal of Medical Internet Research, 23(2), e22197. https://doi.org/10.2196/22197

- Almirall, D., Nahum-Shani, I., Sherwood, N. E., & Murphy, S. A. (2014). Introduction to SMART designs for the development of adaptive interventions: With application to weight loss research. Translational Behavioral Medicine, 4(3), 260–274. https://doi.org/10.1007/s13142-014-0265-0

- Alqahtani, F., & Orji, R. (2020). Insights from user reviews to improve mental health apps. Health Informatics Journal, 26(3), 2042–2066. https://doi.org/10.1177/1460458219896492

- Appboy. (2016). Spring 2016 mobile customer retention report: An analysis of retention by day.

- Arigo, D., Jake-Schoffman, D. E., Wolin, K., Beckjord, E., Hekler, E. B., & Pagoto, S. L. (2019). The history and future of digital health in the field of behavioral medicine. Journal of Behavioral Medicine, 42(1), 67–83. https://doi.org/10.1007/s10865-018-9966-z

- Auerbach, A. D. (2019). Evaluating digital health tools—prospective, experimental, and real world. JAMA Internal Medicine, 179(6), 840–841. https://doi.org/10.1001/jamainternmed.2018.7229

- Austin, D., May, J., Andrade, J., & Jones, R. (2021). Delivering digital health: The barriers and facilitators to university-industry collaboration. Health Policy and Technology, 10(1), 104–110. https://doi.org/10.1016/j.hlpt.2020.10.003

- Bhatt, S., Evans, J., & Gupta, S. (2017). Barriers to scale of digital health systems for cancer care and control in last-mile settings. Journal of Global Oncology, 4, 1–3. https://doi.org/10.1200/jgo.2016.007179

- Brewer, L. C., Fortuna, K. L., Jones, C., Walker, R., Hayes, S. N., Patten, C. A., & Cooper, L. A. (2020). Back to the future: Achieving health equity through health informatics and digital health. JMIR mHealth and uHealth, 8(1), e14512. https://doi.org/10.2196/14512

- Burchartz, A., Anedda, B., Auerswald, T., Giurgiu, M., Hill, H., Ketelhut, S., Kolb, S., Mall, C., Manz, K., Nigg, C. R., Reichert, M., Sprengeler, O., Wunsch, K., & Matthews, C. E. (2020). Assessing physical behavior through accelerometry – state of the science, best practices and future directions. Psychology of Sport and Exercise, 49, 101703. https://doi.org/10.1016/j.psychsport.2020.101703

- Collins, L. M. (2018). Optimization of behavioral, biobehavioral, and biomedical interventions: The multiphase optimization strategy (MOST). Springer.

- Collins, L. M., Murphy, S. A., & Strecher, V. (2007). The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): New methods for more potent eHealth interventions. American Journal of Preventive Medicine, 32(5), S112–S118. https://doi.org/10.1016/j.amepre.2007.01.022

- Crawford, A., & Serhal, E. (2020). Digital health equity and COVID-19: The innovation curve cannot reinforce the social gradient of health. Journal of Medical Internet Research, 22(6), e19361. https://doi.org/10.2196/19361

- Crutzen, R. (2021). And justice for all? There is more to the interoperability of contact tracing apps than legal barriers. Comment on “COVID-19 contact tracing apps: A technologic tower of Babel and the gap for international pandemic control”. JMIR mHealth and uHealth, 9(5), e26218. https://doi.org/10.2196/26218

- Crutzen, R., Peters, G.-J. Y., & Mondschein, C. (2019). Why and how we should care about the general data protection regulation. Psychology & Health, 34(11), 1347–1357. https://doi.org/10.1080/08870446.2019.1606222

- Dimairo, M., Pallmann, P., Wason, J., Todd, S., Jaki, T., Julious, S. A., Mander, A. P., Weir, C. J., Koenig, F., Walton, M. K., Nicholl, J. P., Coates, E., Biggs, K., Hamasaki, T., Proschan, M. A., Scott, J. A., Ando, Y., Hind, D., & Altman, D. G. (2020). The Adaptive designs CONSORT Extension (ACE) statement: A checklist with explanation and elaboration guideline for reporting randomised trials that use an adaptive design. BMJ, 369, m115. https://doi.org/10.1136/bmj.m115

- Dohle, S., & Hofmann, W. (2018). Assessing self-control: The use and usefulness of the experience sampling method.

- Ehrari, H., Tordrup, L., & Müller, S. D. (2022). The digital divide in healthcare: A socio-cultural perspective of digital health literacy.

- Epskamp, S., Cramer, A. O., Waldorp, L. J., Schmittmann, V. D., & Borsboom, D. (2012). qgraph: Network visualizations of relationships in psychometric data. Journal of Statistical Software, 48(4), 1–18. https://doi.org/10.18637/jss.v048.i04

- Estacio, E. V., Whittle, R., & Protheroe, J. (2019). The digital divide: Examining socio-demographic factors associated with health literacy, access and use of internet to seek health information. Journal of Health Psychology, 24(12), 1668–1675. https://doi.org/10.1177/1359105317695429

- Fagherazzi, G., Goetzinger, C., Rashid, M. A., Aguayo, G. A., & Huiart, L. (2020). Digital health strategies to fight COVID-19 worldwide: Challenges, recommendations, and a call for papers. Journal of Medical Internet Research, 22(6), e19284. https://doi.org/10.2196/19284

- Filkins, B. L., Kim, J. Y., Roberts, B., Armstrong, W., Miller, M. A., Hultner, M. L., Castillo, A. P., Ducom, J.-C., Topol, E. J., & Steinhubl, S. R. (2016). Privacy and security in the era of digital health: What should translational researchers know and do about it? American Journal of Translational Research, 8(3), 1560. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4859641/

- Gordon, W. J., Landman, A., Zhang, H., & Bates, D. W. (2020). Beyond validation: Getting health apps into clinical practice. NPJ Digital Medicine, 3(1), 1–6. https://doi.org/10.1038/s41746-019-0212-z

- Guastaferro, K., & Collins, L. M. (2019). Achieving the goals of translational science in public health intervention research: The multiphase optimization strategy (MOST).

- Gunasekeran, D. V., Tseng, R. M. W. W., Tham, Y.-C., & Wong, T. Y. (2021). Applications of digital health for public health responses to COVID-19: A systematic scoping review of artificial intelligence, telehealth and related technologies. NPJ Digital Medicine, 4(1), 1–6. https://doi.org/10.1038/s41746-021-00412-9

- Hekler, E. B., Klasnja, P., Riley, W. T., Buman, M. P., Huberty, J., Rivera, D. E., & Martin, C. A. (2016). Agile science: Creating useful products for behavior change in the real world. Translational Behavioral Medicine, 6(2), 317–328. https://doi.org/10.1007/s13142-016-0395-7

- Hesse, B. W., Conroy, D. E., Kwaśnicka, D., Waring, M. E., Hekler, E., Andrus, S., Tercyak, K. P., King, A. C., & Diefenbach, M. A. (2021). We’re all in this together: Recommendations from the Society of Behavioral Medicine’s Open Science Working Group. Translational Behavioral Medicine, 11(3), 693–698. https://doi.org/10.1093/tbm/ibaa126

- Hoffmann, T. C., Glasziou, P. P., Boutron, I., Milne, R., Perera, R., Moher, D., Altman, D. G., Barbour, V., Macdonald, H., & Johnston, M. (2014). Better reporting of interventions: Template for intervention description and replication (TIDieR) checklist and guide. BMJ, 348, g1687. https://doi.org/10.1136/bmj.g1687

- IQVIA Institute. (2017). The growing value of digital health. https://www.iqvia.com/insights/the-iqvia-institute/reports/the-growing-value-of-digital-health

- Isniah, S., Purba, H. H., & Debora, F. (2020). Plan do check action (PDCA) method: Literature review and research issues. Jurnal Sistem dan Manajemen Industri, 4(1), 72–81. https://doi.org/10.30656/jsmi.v4i1

- Kahan, B. C., Tsui, M., Jairath, V., Scott, A. M., Altman, D. G., Beller, E., & Elbourne, D. (2020). Reporting of randomized factorial trials was frequently inadequate. Journal of Clinical Epidemiology, 117, 52–59. https://doi.org/10.1016/j.jclinepi.2019.09.018

- Katz, D. S., Gruenpeter, M., & Honeyman, T. (2021). Taking a fresh look at FAIR for research software. Patterns, 2(3), 100222. https://doi.org/10.1016/j.patter.2021.100222

- Knox, L., Gemine, R., Rees, S., Bowen, S., Groom, P., Taylor, D., Bond, I., Rosser, W., & Lewis, K. (2021). Using the technology acceptance model to conceptualise experiences of the usability and acceptability of a self-management app (COPD.Pal®) for chronic obstructive pulmonary disease. Health and Technology, 11(1), 111–117. https://doi.org/10.1007/s12553-020-00494-7

- Knox, L., McDermott, C., & Hobson, E. (2022). Telehealth in long-term neurological conditions: The potential, the challenges, and the key recommendations. Journal of Medical Engineering and Technology. Advance online publication. https://doi.org/10.1080/03091902.2022.2040625

- König, L. M., Attig, C., Franke, T., & Renner, B. (2021). Barriers to and facilitators for using nutrition apps: Systematic review and conceptual framework. JMIR mHealth and uHealth, 9(6), e20037. https://doi.org/10.2196/20037

- Kwasnicka, D., Inauen, J., Nieuwenboom, W., Nurmi, J., Schneider, A., Short, C. E., Dekkers, T., Williams, A. J., Bierbauer, W., Haukkala, A., Picariello, F., & Naughton, F. (2019). Challenges and solutions for N-of-1 design studies in health psychology. Health Psychology Review, 13(2), 163–178. https://doi.org/10.1080/17437199.2018.1564627

- Kwasnicka, D., & Naughton, F. (2019). N-of-1 methods: A practical guide to exploring trajectories of behaviour change and designing precision behaviour change interventions. Psychology of Sport and Exercise, 47, 101570. https://doi.org/10.1016/j.psychsport.2019.101570

- Kwasnicka, D., Ten Hoor, G. A., Hekler, E., Hagger, M. S., & Kok, G. (2021). Proposing a new approach to funding behavioural interventions using iterative methods. Psychology & Health, 36(7), 787–791. https://doi.org/10.1080/08870446.2021.1945061

- Kwasnicka, D., Ten Hoor, G. A., van Dongen, A., Gruszczyńska, E., Hagger, M. S., Hamilton, K., Hankonen, N., Heino, M. T. J., Kotzur, M., & Noone, C. (2020). Promoting scientific integrity through open science in health psychology: Results of the Synergy Expert Meeting of the European Health Psychology Society. Health Psychology Review, 15(3), 333–349. https://doi.org/10.1080/17437199.2020.1844037

- Labrique, A., Vasudevan, L., Mehl, G., Rosskam, E., & Hyder, A. A. (2018). Digital health and health systems of the future.

- Lehne, M., Sass, J., Essenwanger, A., Schepers, J., & Thun, S. (2019). Why digital medicine depends on interoperability. NPJ Digital Medicine, 2(1), 1–5. https://doi.org/10.1038/s41746-019-0158-1

- Liao, P., Klasnja, P., Tewari, A., & Murphy, S. A. (2016). Sample size calculations for micro-randomized trials in mHealth. Statistics in Medicine, 35(12), 1944–1971. https://doi.org/10.1002/sim.6847

- Lyles, C. R., Wachter, R. M., & Sarkar, U. (2021). Focusing on digital health equity. JAMA, 326(18), 1795–1796. https://doi.org/10.1001/jama.2021.18459

- Mathews, S. C., McShea, M. J., Hanley, C. L., Ravitz, A., Labrique, A. B., & Cohen, A. B. (2019). Digital health: A path to validation. NPJ Digital Medicine, 2(1), 1–9. https://doi.org/10.1038/s41746-018-0076-7

- McCallum, C., Rooksby, J., & Gray, C. M. (2018). Evaluating the impact of physical activity apps and wearables: Interdisciplinary review. JMIR mHealth and uHealth, 6(3), e9054. https://doi.org/10.2196/mhealth.9054

- Meloncon, L., & Warner, E. (2017). Data visualizations: A literature review and opportunities for technical and professional communication. 1–9.

- Morton, K., Ainsworth, B., Miller, S., Rice, C., Bostock, J., Denison-Day, J., Towler, L., Groot, J., Moore, M., Willcox, M., Chadborn, T., Amlôt, R., Gold, N., Little, P., & Yardley, L. (2021). Adapting behavioral interventions for a changing public health context: A worked example of implementing a digital intervention during a global pandemic using rapid optimisation methods. Frontiers in Public Health, 9, 668197. https://doi.org/10.3389/fpubh.2021.668197

- Nebeker, C., Torous, J., & Ellis, R. J. B. (2019). Building the case for actionable ethics in digital health research supported by artificial intelligence. BMC Medicine, 17(1), 1–7. https://doi.org/10.1186/s12916-019-1377-7

- Nurmi, J., Knittle, K., Ginchev, T., Khattak, F., Helf, C., Zwickl, P., Castellano-Tejedor, C., Lusilla-Palacios, P., Costa-Requena, J., Ravaja, N., & Haukkala, A. (2020). Engaging users in the behavior change process with digitalized motivational interviewing and gamification: Development and feasibility testing of the PRECIOUS app. JMIR mHealth and uHealth, 8(1), e12884. https://doi.org/10.2196/12884

- O’Connor, D. B. (2021). Leonardo da Vinci, preregistration and the architecture of science: Towards a more open and transparent research culture. Health Psychology Bulletin, 5(1), 39–45. https://doi.org/10.5334/hpb.30

- Peters, G.-J. Y., Abraham, C., & Crutzen, R. (2012). Full disclosure: Doing behavioural science necessitates sharing. The European Health Psychologist, 14(4), 77–84. https://doi.org/10.31234/osf.io/n7p5m

- Peters, G.-J. Y., De Bruin, M., & Crutzen, R. (2015). Everything should be as simple as possible, but no simpler: Towards a protocol for accumulating evidence regarding the active content of health behaviour change interventions. Health Psychology Review, 9(1), 1–14. https://doi.org/10.1080/17437199.2013.848409

- Petersen, A. (2018). Digital health and technological promise: A sociological inquiry. Routledge.

- Pham, Q., Wiljer, D., & Cafazzo, J. A. (2016). Beyond the randomized controlled trial: A review of alternatives in mHealth clinical trial methods. JMIR MHealth and UHealth, 4(3), e107. https://doi.org/10.2196/mhealth.5720

- Riley, W. T., Glasgow, R. E., Etheredge, L., & Abernethy, A. P. (2013). Rapid, responsive, relevant (R3) research: A call for a rapid learning health research enterprise. Clinical and Translational Medicine, 2(1), 1–6. https://doi.org/10.1186/2001-1326-2-10

- Rodriguez, J. A., Clark, C. R., & Bates, D. W. (2020). Digital health equity as a necessity in the 21st Century Cures Act era. JAMA, 323(23), 2381–2382. https://doi.org/10.1001/jama.2020.7858

- Safavi, K., Mathews, S. C., Bates, D. W., Dorsey, E. R., & Cohen, A. B. (2019). Top-funded digital health companies and their impact on high-burden, high-cost conditions. Health Affairs, 38(1), 115–123. https://doi.org/10.1377/hlthaff.2018.05081

- Schreiweis, B., Pobiruchin, M., Strotbaum, V., Suleder, J., Wiesner, M., & Bergh, B. (2019). Barriers and facilitators to the implementation of eHealth services: Systematic literature analysis. Journal of Medical Internet Research, 21(11), e14197. https://doi.org/10.2196/14197

- Scott, B. K., Miller, G. T., Fonda, S. J., Yeaw, R. E., Gaudaen, J. C., Pavliscsak, H. H., Quinn, M. T., & Pamplin, J. C. (2020). Advanced digital health technologies for COVID-19 and future emergencies. Telemedicine and e-Health, 26(10), 1226–1233. https://doi.org/10.1089/tmj.2020.0140

- Sharma, A., Harrington, R. A., McClellan, M. B., Turakhia, M. P., Eapen, Z. J., Steinhubl, S., Mault, J. R., Majmudar, M. D., Roessig, L., & Chandross, K. J. (2018). Using digital health technology to better generate evidence and deliver evidence-based care. Journal of the American College of Cardiology, 71(23), 2680–2690. https://doi.org/10.1016/j.jacc.2018.03.523

- Sharon, T. (2018). When digital health meets digital capitalism, how many common goods are at stake? Big Data & Society, 5(2), 2053951718819032. https://doi.org/10.1177/2053951718819032

- Smit, E. S., Meijers, M. H. C., & van der Laan, L. N. (2021). Using virtual reality to stimulate healthy and environmentally friendly food consumption among children: An interview study. International Journal of Environmental Research and Public Health, 18(3), 1088. https://doi.org/10.3390/ijerph18031088

- Torous, J., Nicholas, J., Larsen, M. E., Firth, J., & Christensen, H. (2018). Clinical review of user engagement with mental health smartphone apps: Evidence, theory and improvements. Evidence-Based Mental Health, 21(3), 116–119. https://doi.org/10.1136/eb-2018-102891

- Tran Ngoc, C., Bigirimana, N., Muneene, D., Bataringaya, J. E., Barango, P., Eskandar, H., Igiribambe, R., Sina-Odunsi, A., Condo, J. U., & Olu, O. (2018). Conclusions of the digital health hub of the Transform Africa Summit (2018): Strong government leadership and public-private-partnerships are key prerequisites for sustainable scale up of digital health in Africa. BMC Proceedings, 12(11), 1–7. https://doi.org/10.1186/s12919-018-0156-3

- van Beurden, S. B., Smith, J. R., Lawrence, N. S., Abraham, C., & Greaves, C. J. (2019). Feasibility randomized controlled trial of ImpulsePal: Smartphone app–based weight management intervention to reduce impulsive eating in overweight adults. JMIR Formative Research, 3(2), e11586. https://doi.org/10.2196/11586

- Vohra, S., Shamseer, L., Sampson, M., Bukutu, C., Schmid, C. H., Tate, R., Nikles, J., Zucker, D. R., Kravitz, R., & Guyatt, G. (2015). CONSORT extension for reporting N-of-1 trials (CENT) 2015 statement. BMJ, 350, h1738. https://doi.org/10.1136/bmj.h1738

- Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., Blomberg, N., Boiten, J.-W., da Silva Santos, L. B., & Bourne, P. E. (2016). The FAIR guiding principles for scientific data management and stewardship. Scientific Data, 3(1), 1–9. https://doi.org/10.1038/sdata.2016.18

- World Health Organization. (2018). Classification of digital health interventions v1.0: A shared language to describe the uses of digital technology for health. World Health Organization.

- Wunsch, K., Eckert, T., Fiedler, J., Cleven, L., Niermann, C., Reiterer, H., Renner, B., & Woll, A. (2020). Effects of a collective family-based mobile health intervention called “SMARTFAMILY” on promoting physical activity and healthy eating: Protocol for a randomized controlled trial. JMIR Research Protocols, 9(11), e20534. https://doi.org/10.2196/20534

- Wyrick, D. L., Rulison, K. L., Fearnow-Kenney, M., Milroy, J. J., & Collins, L. M. (2014). Moving beyond the treatment package approach to developing behavioral interventions: Addressing questions that arose during an application of the multiphase optimization strategy (MOST). Translational Behavioral Medicine, 4(3), 252–259. https://doi.org/10.1007/s13142-013-0247-7

- Yardley, L., Morrison, L., Bradbury, K., & Muller, I. (2015). The person-based approach to intervention development: Application to digital health-related behavior change interventions. Journal of Medical Internet Research, 17(1), e30. https://doi.org/10.2196/jmir.4055

- Zegers, C. M., Witteveen, A., Schulte, M. H., Henrich, J. F., Vermeij, A., Klever, B., & Dekker, A. (2021). Mind your data: Privacy and legal matters in eHealth. JMIR Formative Research, 5(3), e17456. https://doi.org/10.2196/17456

- Zhao, J., Freeman, B., & Li, M. (2016). Can mobile phone apps influence people’s health behavior change? An evidence review. Journal of Medical Internet Research, 18(11), e287. https://doi.org/10.2196/jmir.5692