ABSTRACT

This paper presents a methodology for acquiring traffic flow data using unmanned aerial vehicles (UAVs). The study focuses on determining the driving behavior parameters of road users and on reconstructing the traffic flow Origin/Destination matrix. The methodology integrates UAV flights with video image processing techniques and with the capability of geographic information systems to represent spatiotemporal phenomena. In particular, by analyzing different intersections, the authors focus on users’ gap acceptance under naturalistic driving conditions (drivers are not influenced by the presence of instruments and operators on the roadway) and on the reconstruction of vehicle paths. Drivers’ level of aggressiveness is determined by understanding how drivers decide that a gap is crossable and, consequently, how critical their behavior is in relation to a moving stream of traffic, with serious road safety implications. The results of these experiments highlight the usefulness of UAV technology, which, combined with video processing techniques, allows real traffic conditions to be captured with a good level of accuracy.

Introduction

Traffic control and management need to be performed with the aim of reducing the effects of increasing congestion levels on transportation infrastructures. Therefore, researchers and practitioners are focusing more and more on forecasting traffic congestion. To accomplish this goal, many studies rely on traffic simulation models as a low-cost means of analyzing traffic data and identifying the best solutions to apply.

Nevertheless, traffic simulation models provide reliable outputs only if their inputs are correctly defined. Although many of the microscopic simulation models currently used by technicians and researchers offer a wide range of analysis options, some gaps and limitations still affect their accuracy in reproducing real conditions. Their ability to reproduce real traffic operations and vehicle interactions depends on a good calibration stage that involves several model input parameters. Without calibration, the simulated traffic outputs are not verified against observed real-world conditions, and microsimulation models fail to give analysts accurate answers.

Furthermore, any traffic estimation model requires a stage in which traffic flow parameters are captured to determine the actual network traffic conditions. Vehicle tracking data are therefore a fundamental element of any study of complex mobility systems. However, acquiring vehicle tracking data requires costly traffic monitoring systems, including both infrastructure-based and noninfrastructure-based techniques.

In most cases, data acquired through detector technologies are aggregate and do not guarantee the acquisition of the real tracks of individual vehicles on the road network. This may limit the use of these data in analyzing individual driving behavior, implementing simulation models and performing specific studies on the road network.

Nevertheless, some nonintrusive techniques, such as video image acquisition technologies, represent a low-cost procedure for capturing individual vehicle operations over time, and provide a useful tool for obtaining observational data. Among these technologies, unmanned aerial vehicles (UAVs) have recently been improved for use in tracking vehicles’ trajectories and estimating traffic parameters. Indeed, the spread of drones, which are also used in urban areas, is improving the acquisition of accurate vehicle tracking profiles based on video inputs. However, because this technology has been developed only recently, it still suffers from unresolved problems. In particular, the accuracy of its results when applied to vehicular flow analysis has not been adequately tested under different road and traffic conditions.

The study described in this paper, which investigates new aspects of the work by Salvo, Caruso, Scordo, Guido, and Vitale (2014b), presents experimental results of a methodology for extracting traffic data from UAV-borne imagery. The proposed methodology is applied to case studies to test and demonstrate the usefulness of UAVs for acquiring reliable traffic data and providing useful information on driving behavior parameters for individual drivers (e.g. gap acceptance) and aggregate estimation of flow variables (e.g. Origin/Destination matrix).

The paper is organized as follows: the “Literature review” section describes the state of the art of techniques used to detect traffic flow characteristics. The “Equipment and methodology” section presents the applied methodology and the equipment used for the experimental stage. The “Case studies” section describes the case studies, and the “Data processing” section analyzes the results obtained from the previous stage. The paper concludes with some comments and practical recommendations in the “Conclusions” section.

Literature review

Traffic data have often been acquired with dedicated equipment, such as fixed sensors (i.e. inductive loops, magnetic detectors, piezoelectric sensors, microwave radar detectors and infrared detectors), characterized by high installation and maintenance costs (Leduc, 2008; Martin, Feng, & Wang, 2003). This kind of instrumentation may provide traffic data at certain sections of the road, but it fails to provide detailed information about vehicle trajectories. Its use is also subject to several limitations, especially in urban areas, where real-time traffic data are difficult to acquire because of the complexity of urban road networks.

Infrastructure-based techniques adopt intrusive detector technologies (Federal Highway Administration, 2006), such as inductive loops (Coifman, Dhoorjaty, & Lee, 2003; Sun & Ritchie, 1999), pneumatic road tubes (Federal Highway Administration, 2010; McGowen & Sanderson, 2011), magnetic detectors (Haoui, Kavaler, & Varaiya, 2008; Kwong, Kavaler, Rajagopal, & Varaiya, 2009) and piezoelectric sensors (Li & Yang, 2006).

Noninfrastructure-based techniques involve nonintrusive detector technologies, such as microwave radar detectors (Ho & Chung, 2016; Zwahlen, Russ, Oner, & Parthasarathy, 2005), passive acoustic detectors (Nooralahiyan, Kirby, & McKeown, 1998; Tyagi, Kalyanaraman, & Krishnapuram, 2012), infrared detectors (Grabner, Nguyen, Gruber, & Bischof, 2008; Hinza & Stilla, 2006), ultrasonic detectors (Kim, 1998; Song, Chen, & Huang, 2004) and beacons (Bai, Oh, & Jung, 2013; Sanguesa et al., 2013).

In recent years, probe vehicle data acquisition and video image processing, which are classified as noninfrastructure-based techniques, have been applied more and more frequently to capture traffic data. Probe vehicle data collection systems provide Lagrangian measurements of vehicles’ trajectories using onboard tracking devices; these systems can be grouped into five types: Automatic Vehicle Identification systems (Smalley, Hickman, & McCasland, 1996), Automatic Vehicle Location (Guido, Vitale, & Rogano, 2016; Polk & Pietrzyk, 1995), Ground-Based Radio Navigation (Vaidya, Higgins, & Turnbull, 1996), Cellular Geo-location based on cellular telephone call transmissions (Astarita, Bertini, d’Elia, & Guido, 2006; Sumner, Smith, Kennedy, & Robinson, 1994) and Global Positioning Systems (GPSs) (Choi & Chung, 2001; Yim & Cayford, 2001).

The widespread use of smartphones and other mobile devices equipped with GPS sensors allows the positions of moving objects to be acquired 24 h a day, in any type of weather. However, GPS technology does have some limitations (Zhang, Li, Dempster, & Rizos, 2010).

Herrera et al. (2010) demonstrated that onboard electronic devices can be used as an alternative traffic sensing infrastructure. Thanks to the wide coverage provided by the cellular network, the authors used GPS-enabled smartphones as a traffic monitoring system. Data obtained during their experiments were processed in real time and successfully broadcast on the Internet.

In the work by Guido et al. (2012), a procedure for extracting vehicle tracking data from smartphone sensors is introduced. The authors assessed the accuracy of vehicle tracking data obtained through onboard smartphone sensors by comparing them to high-resolution GPS tracking measurements. Two other studies by Guido et al. (2013, 2014) investigated the accuracy of speed measures obtained from smartphones. The authors demonstrated that onboard smartphones provide vehicles’ speed profiles within a 1 km/h margin of error.

On the other hand, video image processing represents a low-cost noninfrastructure-based technique for acquiring individual vehicle trajectories and provides a useful tool for obtaining observational data for traffic management and control.

In recent decades, several vehicle image processing techniques have been developed and applied to traffic flow analysis. Oh and Kim (2010) estimated rear-end crash potential using vehicle trajectory data obtained from a traffic surveillance system; they developed a statistical model to determine the probability of a lane change. In the work by Saunier and Sayed (2008), a vision-based vehicle tracking system is used to estimate the probability of vehicles’ collision at an intersection.

Traffic information for management and control can be obtained through several commercial systems based on loop detectors such as AutoScope (2014), Citilog (2014) and Traficon (2014), while other systems, such as PEEK Video Trak-IQ (2014) and NGSIM-Video (2014), using a vehicle tracking approach, need to be thoroughly calibrated prior to their application.

Although these noninfrastructure-based techniques are becoming popular, UAV image acquisition technologies have been developed in recent years to overcome some of their limitations. UAVs are quickly gaining popularity worldwide and are commonly employed in photogrammetry, where acquired images need to be georeferenced and combined with existing data in geographic information systems (GISs).

UAVs have many advantages over manned aircraft, including low purchase, management and operation costs. They can provide high-resolution images useful for traffic analysis through video image processing, but they may fail to reproduce real traffic data because of factors affecting their performance (e.g. weather conditions, technical instrumental problems, physical obstacles, regulatory issues).

The first works on vision applied to UAV position estimation date back to the nineties, when Amidi, Kanade, and Fujita (1999) proposed a vision-based odometer through which it was possible to derive the relative helicopter position and velocity in real time by means of stereo vision. They demonstrated that moving objects can be autonomously tracked by using only onboard processing power. In the work by Schell and Dickmanns (1994), a study on the applicability of vision for landing an airplane is presented. The BEAR project (Shakernia, Vidal, Sharp, Ma, & Sastry, 2002; Vidal, Sastry, Kim, Shakernia, & Shim, 2002) introduces a vision system for autonomous landing of UAVs that uses vision-based pose estimation relative to a planar landing target and vision-based landing of an aerial vehicle on a moving deck. Saripalli, Montgomery, and Sukhatme (2003) presented a vision-based technique for landing on a slow-moving helipad. In the work by Cesetti, Frontoni, Mancini, Zingaretti, and Longhi (2010), a multipurpose feature vision-based approach for guidance and safe landing of a UAV is discussed.

Research by Oh, Kim, Shin, Tsourdos, and White (2013); Remondino, Barazzetti, Nex, Scaioni, and Sarazzi (2011); and Salvo, Caruso, and Scordo (2014a) illustrates applications of UAV image acquisition technologies to the analysis of urban traffic conditions.

Recently, a new methodology for tracking moving vehicles from aerial video data acquired with a UAV has been presented (Apeltauer et al., 2015). The results suggest good accuracy in extracting vehicles’ trajectories and kinematic data useful for traffic analysis (the root-mean-square error (RMSE) of track position is about 1 m).

Equipment and methodology

Two types of equipment have been used to perform the experimental stages described in this paper: a probe vehicle equipped with a differential GPS and a remote-controlled UAV equipped with a video camera.

To track the probe vehicle, a differential GPS was used; it received corrections via Global System for Mobile Communication (GSM) from a network of permanent stations, yielding high accuracy (down to 5–10 cm). It should be emphasized that accuracy can decrease significantly (to some meters) without GSM coverage. The GPS acquisition interval was set to 1 s, using the “trajectory” function, and the data obtained were processed in a GIS (Salvo & Caruso, 2007).

The UAV used during the experimental stage has eight propellers and carries a video camera able to capture video at up to 4K resolution, with a frame rate of 23 fps, and still images with a resolution of 12 megapixels.

The methodology introduced in the work by Salvo et al. (2014a) has been applied to track the probe vehicle through the UAV, as discussed in the previous work (Salvo et al., 2014b), of which this paper represents an advancement. The trajectories of the probe vehicle yielded by the GPS were used to assess the accuracy of the traffic data extracted through the UAV application and to extend the proposed methodology to all the vehicles transiting the analyzed sites; details of these analyses are given in the study by Salvo et al. (2014a). The average RMSE between the onboard GPS and the UAV location outputs has been evaluated; it ranges from 10 to 20 cm.

This methodology is composed of three main steps: (1) the acquisition of a video recorded from a nadir point of view, (2) the video processing and (3) the identification of the vehicles’ trajectories.

During the first step, once the study area is identified, an appropriate number of ground control points (GCPs) needs to be defined before the flight to simplify the video processing stage. This is followed by flight planning and the setting of the sensor parameters.

The video processing step includes the following operations: removal of fish-eye effect, selection of significant parts of the video, extraction of frames and georeferencing of extracted frames.

The first operation reduces the curvature effect typical of videos recorded with a wide-angle lens. The second operation cuts the parts of the video that are not relevant to the study (takeoff and landing), while the third operation extracts frames from the video.

After these operations, each frame is georeferenced: this step consists of assigning the reference system of a base image to the frames, which have no geographic references, by means of points positioned on the ground (GCPs). GCPs must be visible in all frames and distributed homogeneously over the area of analysis. A regression equation is normally adopted to associate every pixel with real-world coordinates. In the simplest case, a minimum of three GCPs defines a linear (first-order) equation, which gives good results when image distortion is low but cannot correct photo-geometry distortion. For more complex distortion, better accuracy requires a second-order or third-order equation (with at least 6 or 10 GCPs, respectively).
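The regression described above can be sketched as a least-squares polynomial fit from pixel coordinates to map coordinates. The following is only an illustrative sketch, not the GIS implementation used in the study: the function names (`poly_terms`, `fit_georeference`, `pixel_to_world`) are hypothetical, and the normal-equation solver is a simple stand-in for what a GIS package does internally. It does show why 3, 6 and 10 GCPs are the minimum for first-, second- and third-order equations: these are the numbers of polynomial coefficients per coordinate axis.

```python
def poly_terms(x, y, order):
    """Monomials x**i * y**j with i + j <= order (3, 6 or 10 terms for order 1, 2, 3)."""
    return [x**i * y**j for i in range(order + 1) for j in range(order + 1 - i)]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_georeference(pixels, world, order=1):
    """Least-squares fit of the pixel -> world polynomials from paired GCPs.

    pixels: list of (column, row); world: list of (easting, northing).
    Returns one coefficient list per world axis.
    """
    rows = [poly_terms(px, py, order) for px, py in pixels]
    k = len(rows[0])
    if len(rows) < k:
        raise ValueError(f"order {order} needs at least {k} GCPs")
    # Normal equations (A^T A) c = A^T b; high orders would benefit from
    # normalising the coordinates first, which is omitted in this sketch.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    coeffs = []
    for axis in range(2):
        atb = [sum(r[i] * w[axis] for r, w in zip(rows, world)) for i in range(k)]
        coeffs.append(solve(ata, atb))
    return coeffs


def pixel_to_world(coeffs, px, py, order=1):
    """Apply the fitted polynomials to one pixel."""
    terms = poly_terms(px, py, order)
    return tuple(sum(c * t for c, t in zip(cs, terms)) for cs in coeffs)
```

With more GCPs than the minimum, the fit is overdetermined and the residuals at the GCPs give a direct accuracy check.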

Finally, a GIS analysis is performed to identify the trajectories of all the vehicles transiting on the analyzed site and to determine the Lagrangian measurements useful to analyze the individual behavior.
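As an illustration of the kind of Lagrangian measurement that can be derived from a georeferenced trajectory, the sketch below computes segment speeds from timestamped positions. It assumes positions in a projected metric reference system and timestamps in seconds; the function name `speeds_kmh` and the input layout are hypothetical and are not taken from the study.

```python
import math


def speeds_kmh(track):
    """Speed on each segment of a trajectory, in km/h.

    track: list of (t_seconds, easting_m, northing_m), sorted by time.
    """
    speeds = []
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Planar distance divided by elapsed time, converted from m/s to km/h.
        speeds.append(math.hypot(x1 - x0, y1 - y0) / dt * 3.6)
    return speeds
```

For example, a vehicle that moves 5 m between two positions taken 1 s apart has a segment speed of 18 km/h.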

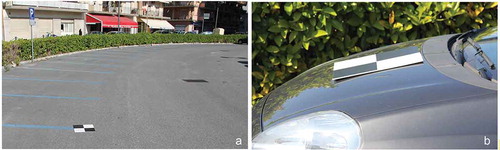

Figure 1 shows a probe vehicle with a differential GPS antenna on the roof of the car and a micro-UAV equipped with a video camera.

Figure 1. A probe vehicle with an antenna of differential GPS (a) and a micro-UAV equipped with a video camera (b).

Table 1 summarizes the main features of the UAV and the V-Box unit.

Table 1. Technical features of UAV and differential GPS.

Case studies

The described survey methodology was applied to the city of Milazzo, an urban center in the province of Messina (Sicily, Italy) with over 30,000 people and characterized by significant industrial and commercial activities.

The analysis has been conducted in two different contexts, in particular:

an unsignalized road intersection regulated by STOP signs, located in a residential area (case study 1);

a compact urban roundabout along the road SS 113, situated in a commercial area, which is located near the motorway exit (case study 2).

Eight flights with the remote-controlled drone were performed to capture vehicles’ trajectories for the two case studies; they made it possible to acquire nadir videos (full HD resolution) of the two areas of interest. During the experiments, weather conditions were good (sunny day) and the wind speed ranged from 0 to 3 m/s. The accompanying figure shows a view of the two areas of interest.

Before each survey, 10 GCPs were physically positioned on the ground to simplify the subsequent processing phases. An additional control point was located on the hood of the probe vehicle.

The corresponding figure shows the GCPs positioned on the ground (a) and on the hood of the probe vehicle (b).

Table 2 reports some information on the UAV surveys (i.e. total flight time, useful flight time, registered transits of the probe vehicle).

Table 2. UAV flights with indication of transits of the probe vehicle and total number of analyzed frames.

Data processing

The videos acquired by the UAV were processed with a semiautomatic procedure consisting of the following operations:

removal of fish-eye effect and selection of significant parts of the video; in the experiment, the GoPro Studio software was used for this purpose, but other commercial photo editing software could be used to achieve the same results;

extraction of frames (one frame every second);

georeferencing of extracted frames using an open-source GIS software (Quantum GIS). This process, which consists of assigning the reference system of a base image to frames without geographic references, was carried out using the ATA 2007–2008 orthophoto of the Sicilian Region, which is freely available online at http://www.sitr.regione.sicilia.it/geoportale and uses “WGS 84 / UTM zone 33N” as its reference system. Each frame was georeferenced through the use of 10 GCPs distributed along the investigated roads. The overall accuracy of this process can be evaluated through the average RMSE, that is, the standard deviation of the differences between the GCP positions acquired through the GPS device and their correct positions in the reference system. The average RMSE was always lower than 20 cm. This operation requires about 2 min per analyzed frame.
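The accuracy check described above can be illustrated with a short sketch. It assumes that each GCP has a measured position and a reference position in the same projected metric system, and it takes the RMSE as the root mean square of the planar distances between the two (which coincides with a standard deviation only when the mean error is zero). The function names `rmse` and `mean_rmse` are hypothetical.

```python
import math


def rmse(measured, reference):
    """Root-mean-square planar error between paired points, in the input units."""
    if not measured or len(measured) != len(reference):
        raise ValueError("need two non-empty point lists of equal length")
    squared = [
        (mx - rx) ** 2 + (my - ry) ** 2
        for (mx, my), (rx, ry) in zip(measured, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))


def mean_rmse(per_frame_rmse):
    """Average of the RMSE values obtained for the individual frames."""
    return sum(per_frame_rmse) / len(per_frame_rmse)
```

Computing `rmse` once per georeferenced frame and averaging with `mean_rmse` gives a single figure comparable with the 20 cm bound reported above.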

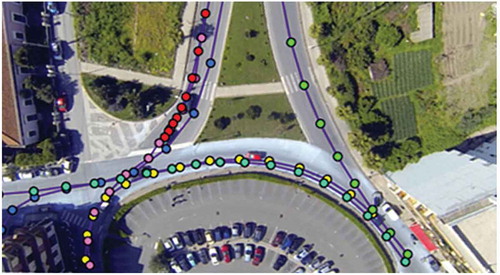

Once all the frames had been georeferenced, all the vehicles of the video-recorded traffic flow were identified. A total of 73 vehicle trajectories were analyzed.

The figure below shows the trajectories of some vehicles that transited during the survey.

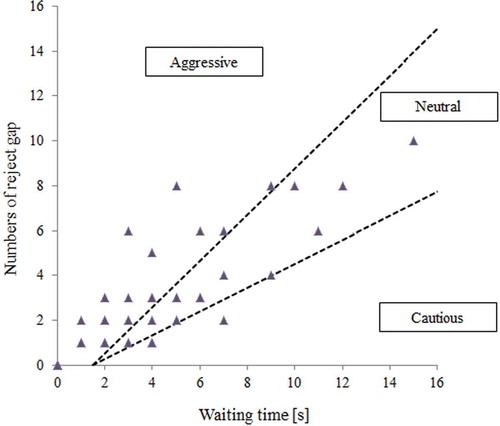

Two different surveys were carried out. In the first survey, driver behavior was investigated for case study 1 by analyzing the waiting time and the number of gaps rejected before completing the entry into the main traffic stream.

Results from this analysis are shown in two figures: the first refers to isolated or leader vehicles, while the second refers to follower vehicles. Three different driving styles have been identified:

aggressive, where the number of rejected gaps is greater than the waiting time;

neutral, where the waiting time is similar to the number of rejected gaps;

cautious, where the waiting time is much greater than the number of rejected gaps.
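The three classes above can be expressed as a simple rule. The sketch below is only an interpretation: the text does not quantify “similar” or “much greater”, so the `tolerance` parameter (a relative band around equality, set here to 20%) is an assumption introduced for illustration, as is the function name `driving_style`. Following the text, the waiting time (in seconds) and the number of rejected gaps are compared directly.

```python
def driving_style(waiting_time_s, rejected_gaps, tolerance=0.2):
    """Classify one entry maneuver as 'aggressive', 'neutral' or 'cautious'.

    tolerance is a hypothetical relative band within which the two
    quantities are treated as similar; it is not a value from the study.
    """
    if waiting_time_s < 0 or rejected_gaps < 0:
        raise ValueError("inputs must be non-negative")
    scale = max(waiting_time_s, rejected_gaps)
    if abs(waiting_time_s - rejected_gaps) <= tolerance * scale:
        return "neutral"
    # Outside the band: more rejected gaps than seconds waited is 'aggressive',
    # the opposite case is 'cautious'.
    return "aggressive" if rejected_gaps > waiting_time_s else "cautious"
```

A different threshold, or separate thresholds for the neutral and cautious classes, would change the class boundaries without changing the structure of the rule.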

In the second survey, the proposed methodology was applied to case study 2 to demonstrate its usefulness in acquiring reliable traffic data for the Origin/Destination matrix estimation and, consequently, for analyzing any critical points in the network links. The survey was carried out on a typical weekday between 2:30 and 3:30 pm (an off-peak hour). An hourly Origin/Destination matrix sample (Table 3) was then obtained by processing about 15 min of video from the useful flight time. With reference to panel (b) of the figure showing the two areas of interest, the four road sections entering the roundabout have been identified as follows:

A: road section of SS 113 between the motorway exit and the roundabout;

B: connection to the industrial and commercial activities;

C: road section of SS 113 between the roundabout and a stop-controlled intersection;

D: connection to a residential area.

Table 3. Hourly Origin/Destination matrix sample.
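One way to build such a matrix from the reconstructed vehicle paths is sketched below. Each trajectory is reduced to its entry and exit road section (A to D), the pairs are counted, and the counts are expanded to an hourly value. The linear expansion from the observed minutes to one hour is an assumption made for this sketch, since the text does not state how the hourly values were derived; the names `LEGS` and `od_matrix` are hypothetical.

```python
from collections import Counter

LEGS = ("A", "B", "C", "D")  # road sections entering the roundabout


def od_matrix(trips, observed_minutes=15.0):
    """Hourly Origin/Destination matrix from (origin, destination) pairs.

    trips: iterable of (origin_leg, destination_leg), one per vehicle path.
    Counts are scaled linearly from the observed period to 60 minutes
    (an assumption of this sketch, not a step documented in the study).
    """
    trips = list(trips)
    for origin, destination in trips:
        if origin not in LEGS or destination not in LEGS:
            raise ValueError(f"unknown road section in {(origin, destination)}")
    factor = 60.0 / observed_minutes
    counts = Counter(trips)
    return {o: {d: counts[(o, d)] * factor for d in LEGS} for o in LEGS}
```

In this representation, a U-turn at the roundabout appears on the diagonal of the matrix, for example as a trip from A to A.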

The results of this preliminary analysis show that the major flows are found on the SS 113 sections, without yet generating capacity problems, while a small number of vehicles was observed on the other two connections (road sections B and D), possibly because of the survey time (2:30–3:30 pm is an off-peak hour, especially for the commercial vehicles that normally serve these areas). Finally, only a few vehicles transiting along the SS 113 were found to use the roundabout to make a U-turn.

Conclusions

This study aims to verify the applicability of UAVs to the determination of vehicle trajectories and drivers’ behavior. The methodology combines UAV flights over road segments with video image processing techniques that make it possible to determine traffic flow parameters and vehicles’ maneuvers and paths. In particular, the proposed methodology was applied to monitor different road intersections in order to understand the complex dynamics that lead drivers to accept or reject a gap to cross the opposing stream and, consequently, how critical their behavior is in determining possible risky maneuvers. Moreover, the use of a UAV guaranteed naturalistic behavior of road users, who were not disturbed by any instrument mounted on the roadway. The experimental results demonstrated that UAVs are a valid instrument for road traffic monitoring. Combined with other research techniques, such as microsimulation, UAVs could be very useful for evaluating the operation and safety performance of road segments. However, their use is conditioned by some limiting factors, such as environmental factors (e.g. wind, rain, electromagnetic fields), the presence of physical obstructions (e.g. buildings, urban canyons), instrumental factors (e.g. limited battery autonomy, low payload) and legal factors (e.g. possible presence of “no-fly” zones). In future studies, attention will be focused on the possibility of calibrating simulation models with a high level of detail by using spatial information acquired from UAVs. It would also be interesting to explore the implementation of procedures for automatic and real-time video analysis and the integration of outputs from different traffic monitoring systems.

Acknowledgments

The authors gratefully acknowledge the company E. Lab s.r.l., Academic Spin-Off of University of Palermo, for their cooperation during data acquisition.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Amidi, O., Kanade, T., & Fujita, K. (1999). A visual odometer for autonomous helicopter flight. Robotics and Autonomous Systems, 28(2–3), 185–193.

- Apeltauer, J., Babinec, A., Herman, D., & Apeltauer, T. (2015). Automatic vehicle trajectory extraction for traffic analysis from aerial video data. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 43(W2), 9–351.

- Astarita, V., Bertini, R., d’Elia, S., & Guido, G. (2006). Motorway traffic parameter estimation from mobile phone counts. European Journal of Operational Research, 175(3), 1435–1446. doi:10.1016/j.ejor.2005.02.020

- Autoscope, Image Sensing Systems. (2014). Retrieved February 25, 2014, from http://www.autoscope.com

- Bai, S., Oh, J., & Jung, J. (2013). Context awareness beacon scheduling scheme for congestion control in vehicle to vehicle safety communication. Ad Hoc Networks, 11, 2049–2058. doi:10.1016/j.adhoc.2012.02.014

- Cesetti, A., Frontoni, E., Mancini, A., Zingaretti, P., & Longhi, S. (2010). A vision-based guidance system for UAV navigation and safe landing using natural landmarks. Journal of Intelligent and Robotic Systems, 57(1–4), 233–257. doi:10.1007/s10846-009-9373-3

- Choi, K., & Chung, Y. (2001). Travel time estimation algorithm using GPS probe and loop detectors data fusion. In Proceeding of the Transportation Research Board 80th Annual Meeting. Washington, DC.

- Citilog. (2014). Retrieved February 25, 2014, from http://www.citilog.fr

- Coifman, B., Dhoorjaty, S., & Lee, Z.H. (2003). Estimating median velocity instead of mean velocity at single loop detectors. Transportation Research Part C, 11C(3–4), 211–222. doi:10.1016/S0968-090X(03)00025-1

- Federal Highway Administration. (2006). Traffic detector handbook (3rd ed.). Volume I. FHWA-HRT-06-108. McLean, VA: US Department of Transportation.

- Federal Highway Administration. (2010). A new look at sensors. Public roads. US Department of Transportation, 71(3), 32–39.

- Grabner, H., Nguyen, T.T., Gruber, B., & Bischof, H. (2008). On-line boosting-based car detection from aerial images. ISPRS Journal of Photogrammetry and Remote Sensing, 63, 382–396. doi:10.1016/j.isprsjprs.2007.10.005

- Guido, G., Gallelli, V., Saccomanno, F.F., Vitale, A., Rogano, D., & Festa, D. (2014). Treating uncertainty in the estimation of speed from smartphone traffic probes. Transportation Research Part C: Emerging Technologies, 47(1), 100–112. doi:10.1016/j.trc.2014.07.003

- Guido, G., Vitale, A., Astarita, V., Saccomanno, F.F., Giofré, V.P., & Gallelli, V. (2012). Estimation of safety performance measures from smartphone sensors. Procedia - Social and Behavioral Sciences, 54, 1095–1103. doi:10.1016/j.sbspro.2012.09.824

- Guido, G., Vitale, A., & Rogano, D. (2016). A smartphone based DSS platform for assessing transit service attributes. Public Transport – Planning and Operations, 8, 315–340.

- Guido, G., Vitale, A., Saccomanno, F.F., Festa, D.C., Astarita, V., Rogano, D., & Gallelli, V. (2013). Using smartphones as a tool to capture road traffic attributes. Applied Mechanics and Materials, 432, 513–519. doi:10.4028/www.scientific.net/AMM.432

- Haoui, A., Kavaler, R., & Varaiya, P. (2008). Wireless magnetic sensors for traffic surveillance. Transportation Research Part C, 16(3), 294–306. doi:10.1016/j.trc.2007.10.004

- Herrera, J.C., Work, D.B., Herring, R., Ban, X., Jacobson, Q., & Bayen, A. (2010). Evaluation of traffic data obtained via GPS-enabled mobile phones: The Mobile Century field experiment. Transportation Research Part C, 18, 568–583. doi:10.1016/j.trc.2009.10.006

- Hinza, S., & Stilla, U. (2006). Car detection in aerial thermal images by local and global evidence accumulation. Pattern Recognition Letters, 27(4), 308–315. doi:10.1016/j.patrec.2005.08.013

- Ho, T.J., & Chung, M.J. (2016). Information-aided smart schemes for vehicle flow detection enhancements of traffic microwave radar detectors. Applied Sciences, 6(7), 196. doi:10.3390/app6070196

- Kim, S.W.E. (1998). Performance comparison of loop/piezo and ultrasonic sensor-based traffic detection systems for collecting individual vehicle information. In Proceedings of the 5th world congress on intelligent transport systems, 4083.

- Kwong, K., Kavaler, R., Rajagopal, R., & Varaiya, P. (2009). Arterial travel time estimation based on vehicle re-identification using wireless magnetic sensors. Transportation Research Part C, 17(6), 586–606. doi:10.1016/j.trc.2009.04.003

- Leduc, G. (2008). Road traffic data: Collection methods and applications. In Working papers on energy, transport and climate change. N.1. European Commission, Joint Research Centre, Institute for Prospective Technological Studies. Luxembourg: Office for Official Publications of the European Communities. Seville, Spain.

- Li, Z., & Yang, X. (2006). Application of cement-based piezoelectric sensors for monitoring traffic flows. Journal of Transportation Engineering, 132(7), 565–573. doi:10.1061/(ASCE)0733-947X(2006)132:7(565)

- Martin, P.T., Feng, Y., & Wang, X. (2003). Detector technology evaluation. In Technical report. Salt Lake City: University of Utah, University of Utah Traffic Lab.

- McGowen, P., & Sanderson, M. (2011). Accuracy of pneumatic road tube counters. In 2011 Western district annual meeting, institute of transportation engineers. Vol. 11. Anchorage, AK: Western Institute of Transportation Engineers.

- NGSIM, Next Generation Simulation Community. (2014). Retrieved February 25, 2014, from http://ngsim-community.org

- Nooralahiyan, A.Y., Kirby, H.R., & McKeown, D. (1998). Vehicle classification by acoustic signature. Mathematical and Computer Modelling, 27(9–11), 205–214. doi:10.1016/S0895-7177(98)00060-0

- Oh, C., & Kim, T. (2010). Estimation of rear-end crash potential using vehicle trajectory data. Accident Analysis & Prevention, 42, 1888–1893. doi:10.1016/j.aap.2010.05.009

- Oh, H., Kim, S., Shin, H., Tsourdos, A., & White, B.A. (2013). Behaviour recognition of ground vehicle using airborne monitoring of unmanned aerial vehicles. International Journal of Systems Science, 45(12), 2499–2514.

- PEEK Traffic. (2014). Retrieved February 25, 2014, from http://www.peek-traffic.com

- Polk, A.E., & Pietrzyk, M.C. (1995). The Miami method: Using Automatic Vehicle Location (AVL) for measurement of roadway level-of-service. In Proceedings of the annual meeting of ITS America volume 1. Washington, DC: Intelligent Transportation Society of America. University of South Florida, Center for Urban Transportation Research. Tampa, FL.

- Remondino, F., Barazzetti, L., Nex, F., Scaioni, M., & Sarazzi, D. (2011). UAV photogrammetry for mapping and 3D modeling: Current status and future perspectives. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, XXXVIII, 25–31.

- Salvo, G., & Caruso, L. (2007). L’utilizzo di sistemi di posizionamento GPS per la determinazione di curve di deflusso in ambito urbano. In Atti 11a conferenza nazionale ASITA (pp. 1–6). Torino: Federazione delle Associazioni Scientifiche per le Informazioni Territoriali e Ambientali.

- Salvo, G., Caruso, L., & Scordo, A. (2014a). Urban traffic analysis through an UAV. Procedia - Social and Behavioral Sciences, 111, 1083–1091. doi:10.1016/j.sbspro.2014.01.143

- Salvo, G., Caruso, L., Scordo, A., Guido, G., & Vitale, A. (2014b). Comparison between vehicle speed profiles acquired by differential GPS and UAV. In 17th meeting of the Euro Working Group on Transportation. Compendium of papers. EURO Working Group on Transportation. Seville, Spain.

- Sanguesa, J.A., Fogue, M., Garrido, P., Martinez, F.J., Cano, J.C., Calafate, C.T., & Manzoni, P. (2013). An infrastructureless approach to estimate vehicular density in urban environments. Sensors, 13(2), 2399–2418. doi:10.3390/s130202399

- Saripalli, S., Montgomery, J.F., & Sukhatme, G.S. (2003). Visually guided landing of an unmanned aerial vehicle. IEEE Transactions on Robotics and Automation, 19(3), 371–380. doi:10.1109/TRA.2003.810239

- Saunier, N., & Sayed, T. (2008). Probabilistic framework for automated analysis of exposure to road collisions. Transportation Research Record, 2083, 96–104. doi:10.3141/2083-11

- Schell, F.R., & Dickmanns, E.D. (1994). Autonomous landing of airplanes by dynamic machine vision. Machine Vision and Applications, 7(3), 127–134.

- Shakernia, O., Vidal, R., Sharp, C., Ma, Y., & Sastry, S. (2002). Multiple view motion estimation and control for landing an aerial vehicle. In Proceedings of the international conference on robotics and automation (Vol. 3, pp. 2793–2798). Washington, DC: ICRA, IEEE.

- Smalley, D.O., Hickman, D.R., & McCasland, W.R. (1996). Design and implementation of automatic vehicle identification technologies for traffic monitoring in Houston. In Texas, draft report TX-97-1958-2F. College Station: Texas Transport Institute.

- Song, K.T., Chen, C.H., & Huang, C.H.C. (2004). Design and experimental study of an ultrasonic sensor system for lateral collision avoidance at low speeds. In IEEE intelligent vehicles symposium (pp. 647–652). Washington, DC: Institute of Electrical and Electronics Engineers.

- Sumner, R., Smith, R., Kennedy, J., & Robinson, J. (1994). Cellular based traffic surveillance - The Washington, D.C. area operational test. In Proceeding of the IVHS America Annual Meeting Volume 2. Washington, DC: Intelligent Vehicle Highway Society of America.

- Sun, C., & Ritchie, S. (1999). Individual vehicle speed estimation using single loop inductive waveforms. Journal of Transportation Engineering, 125(6), 531–538. doi:10.1061/(ASCE)0733-947X(1999)125:6(531)

- Traficon. (2014). Traffic Video Detection. Retrieved February 25, 2014, from http://www.traficon.com

- Tyagi, V., Kalyanaraman, S., & Krishnapuram, R. (2012). Vehicular traffic density state estimation based on cumulative road acoustics. IEEE Transactions on Intelligent Transportation Systems, 13(3), 1156–1166. doi:10.1109/TITS.2012.2190509

- Vaidya, N., Higgins, L.L., & Turnbull, K.F. (1996). An evaluation of the accuracy of a radio trilateration automatic vehicle location system. In Proceedings of the annual meeting of ITS America. Washington, DC: Intelligent Transportation Society of America.

- Vidal, R., Sastry, S., Kim, J., Shakernia, O., & Shim, D. (2002). The Berkeley aerial robot project (BEAR). In Proceeding of the international conference on intelligent robots and systems (pp. 1–10). Washington, DC: IROS, IEEE/RSJ.

- Yim, Y.B., & Cayford, R. (2001). Investigation of vehicles as probes using global positioning system and cellular phone tracking: Field operational test. In California PATH working paper UCB-ITS-PWP-2001-9. Berkeley, CA: Institute of Transportation Studies, University of California.

- Zhang, J., Li, B., Dempster, A.G., & Rizos, C. (2010). Evaluation of high sensitivity GPS receivers. In Proceedings of 2010 international symposium on GPS/GNSS. Taipei: National Cheng Kung University.

- Zwahlen, H.T., Russ, A., Oner, E., & Parthasarathy, M. (2005). Evaluation of microwave radar trailers for nonintrusive traffic measurements. Transportation Research Record, 1917(1), 127–140. doi:10.3141/1917-15