Abstract

In this study, we examine the short-term effects of an intervention aimed at developing teachers’ responsive pedagogy in mathematics. The study analyses how an emphasis on responsive pedagogy in 9th grade classrooms over a period of seven months might strengthen students’ feedback, self-regulated learning, self-efficacy and achievement in mathematics. Nine schools participated as the intervention group (N = 40 classes; 1003 students). Eleven comparable schools (N = 37 classes; 896 students) were recruited as a control group. Students responded to a pre-post questionnaire and took a pre-post achievement test. Results show small, significant differences between the total scores for the pre- and post-measures in the intervention group for the variables elaboration, task value motivation, effort and persistence, self-efficacy and self-conception. Significant differences between the treatment and control group are found for the achievement emotions anxiety and enjoyment. No significant difference is found for achievement in mathematics.

PUBLIC INTEREST STATEMENT

Children of today, and citizens of the future, have to learn how to search for information and to be capable of taking control of their own learning to acquire relevant knowledge. An understanding of mathematics is central to preparedness for life in modern society. A growing proportion of problems and situations encountered in daily life, including in professional contexts, require some level of understanding of mathematics, mathematical reasoning and mathematical tools, before they can be fully understood and addressed. But we argue that children have to learn how to learn and believe in their own competence to develop. In this study, we examine the short-term effects of an intervention aimed at developing responsive pedagogy in mathematics. The study analyses how an emphasis on responsive pedagogy in 9th grade classrooms over a period of seven months might strengthen students’ achievement in mathematics, self-regulated learning, learning strategies, and self-efficacy beliefs in mathematics.

Potential conflict of interest

There are no conflicts of interest.

1. Introduction

The aim of this study is to examine the effects of an intervention aimed at developing teachers’ responsive pedagogy to strengthen student learning in mathematics in 9th grade (students aged 13–14). Responsive pedagogy is defined as a learning dialogue with feedback as a central driver to foster students’ self-regulatory processes and students’ beliefs in their abilities (Smith, Gamlem, Sandal, & Engelsen, 2016). Responsive pedagogy is conceptualised as the recursive dialogue between a learner’s internal feedback and external feedback provided by a significant other (e.g., teacher, peer). An example of such a dialogue is a cumulative dialogue between a teacher and a student where the teacher supports the student’s metacognitive processes by asking questions for critical reflections or suggesting strategies to support the student’s self-regulation of learning, whilst at the same time communicating high expectations and strengthening the student’s self-efficacy. Thus, in the instructional encounter internal and external feedback processes can be cyclically emphasised and strengthened between a student and a teacher.

Literature highlights the importance of building a mutual dialogue, in contrast to more typical classroom conversation in which teachers ask questions, students respond, and teachers ask follow-up questions (Mehan, 1979; Sinclair & Coulthard, 1975). Panadero, Andrade, and Brookhart (2018a) state that there is a need to examine the psychological and social effects of formative assessment to better understand how it affects self-regulated learning. “Only by understanding internal cognitive and affective processes, we can truly understand the power of formative assessment” (Panadero et al., 2018a, p. 28). Further, Black and Wiliam (2018) claim that the role of assessment needs to be part of the pedagogy.

A theoretical understanding of how to promote assessment-based learning and self-regulated learning has received extensive attention over the past decade (Panadero et al., 2018a; Panadero, Jonsson, & Botella, 2017; Zimmerman, 2001). Although self-regulated learning has only recently become an object of study, the concept is based on historical results from educational research (e.g. Bandura, 1989; Vygotsky, 1978). The idea that students should take responsibility for their own learning and play an active role in the learning process replaced instructional theories and assigned a proactive rather than a reactive role to the learner (Zimmerman, 2001). Based on this paradigm shift for student involvement, theories about assessment and self-regulated learning have evolved (Andrade, 2010; Dignath & Büttner, 2008). Over the last 20 years, theories have accounted for motivational and volitional components of learning and academic self-regulation (Boekaerts & Corno, 2005; Zimmerman, 2000a, 2001), and the relationships between self-assessment, self-regulated learning and self-efficacy have become the objects of empirical research (Panadero et al., 2018a, 2017).

There is evidence that cognitive strategies, particularly memory and monitoring processes, influence mathematical learning from primary school (Swanson & Jerman, 2006). The method underlying cognitive strategy instruction is explicit instruction, which incorporates research-based practices and procedures such as modelling, verbal rehearsal, cueing, and feedback (Montague, 2003). The work of Niss and his Danish colleagues (e.g. Niss & Jensen, 2002) has identified eight capabilities, referred to as “competencies” by Niss and the PISA framework (OECD, 2004), that are instrumental for mathematical behaviour. The PISA 2018 Mathematics framework uses a modified formulation and introduces seven capabilities, and further states that these cognitive capabilities are available to or learnable by students in order to understand and engage with the world in a mathematical way, or to solve problems (OECD, 2019). Mathematical literacy frequently requires devising strategies for solving problems mathematically, and it involves communication. The PISA 2018 Mathematics framework (OECD, 2019) presents capabilities (competencies) such as Communication and Devising strategies for solving problems. Reading, decoding and interpreting statements, questions, tasks or objects enables students to form a mental model of the situation, which is an important step in understanding, clarifying and formulating a problem. Later, once a solution has been found, the problem solver may need to present the solution, and perhaps an explanation or justification, to others (OECD, 2019, p. 80). Students’ capability to devise strategies for solving problems involves a set of critical control processes that guide a student to effectively recognise, formulate and solve problems. This skill is characterised as selecting or devising a plan or strategy to use mathematics to solve problems arising from a task or context, as well as guiding its implementation. This mathematical capability can be demanded at any of the stages of the problem-solving process (OECD, 2019, p. 81). To illustrate, for mathematical problem solving, students learn to read, analyse, evaluate, and verify math problems using comprehension processes such as paraphrasing, visualization, and planning as well as self-regulation strategies (Mevarech, Tabuk, & Sinai, 2006; OECD, 2019; Weinstein, Goetz, & Alexander, 1988). Further, self-regulation enhances learning by helping students to take control of their actions, seek feedback and move toward independence as they learn.

In line with these former studies, the current paper seeks to answer the following question: In what ways and to what extent does a seven-month instructional intervention with teachers based on responsive pedagogy yield short-term effects on student learning in mathematics?

2. Responsive pedagogy

As already mentioned, responsive pedagogy is defined as a learning dialogue (Smith et al., 2016). An essential part of responsive pedagogy is the explicit intention of the teacher to make learners believe in their own competence and their ability to successfully complete tasks and meet challenges, thereby strengthening students’ self-regulation and self-efficacy in relation to a specific domain or task and increasing their overall self-concept. Responsive pedagogy has an initial emphasis on the iterative process of cyclically engaging with students’ thoughts and reflections in relation to the information and comments provided by significant others.

Responsive pedagogy can be understood as a formative assessment condition where students’ self-regulated learning and self-efficacy beliefs are emphasised (Panadero et al., 2018a; Smith et al., 2016). Formative assessment is classroom assessment that provides information about students’ progress and then uses that information to support adjustments and revisions to both teaching and learning with the goal of enhancing student learning (Black & Wiliam, 1998; Hattie & Timperley, 2007). Formative assessment, done well, promotes students’ understanding of what they are trying to learn, how they will know they are learning and how they will move forward (Gardner, 2012; Panadero et al., 2018a). Further, self-regulatory strategies and metacognitive skills need to be trained during education as self-regulated learning programmes have proved to be effective even at primary levels of education (Dignath, Buettner, & Langfeldt, 2008).

Responsive pedagogy is centred around the feedback dialogue between a learner and a significant other (Smith et al., 2016), and is a response to a proposal of embedding formative assessment in pedagogy (Black & Wiliam, 2018). Feedback is an inherent catalyst for all self-regulated activities (Butler & Winne, 1995). Still, feedback can preclude or impede students’ learning (Hattie & Timperley, 2007; Kluger & DeNisi, 1996). For feedback to enhance learning, it should be an integral part of the teaching and learning process (Andrade, 2010; Black & Wiliam, 2009; Hattie & Gan, 2011; Hattie & Timperley, 2007; Sadler, 1998), it should encourage thinking (Black & Wiliam, 2009; Perrenoud, 1998) and be understandable to the receiver (Black & Wiliam, 1998; Sadler, 1989, 1998). To reach this goal, teachers will need to provide embedded feedback in learning activities and take advantage of the ‘moments of contingencies’ elicited for building students’ learning (Black & Wiliam, 1998, 2009). Further, students’ active participation in seeking and using feedback will be important for their self-regulation processes (Andrade, 2010; Butler & Winne, 1995).

Zimmerman (1998) claimed that self-regulated learning is an important factor for effective learning and the development of academic skills. According to a widely used definition, self-regulated learning is “self-generated thoughts, feelings, and actions that are planned and cyclically adapted to the attainment of personal goals” (Zimmerman, 2000b, p. 14). Self-regulated learners are thus understood as metacognitively, motivationally and behaviourally active participants in their own learning processes (Zimmerman, 1986) who self-regulate thoughts, feelings and actions to attain their learning goals (Zimmerman, 2001).

The cognitive and metacognitive strategies teachers use to facilitate and enhance students’ engagement in work with instructional content are important for student learning (Hafen et al., 2015; Wiliam & Leahy, 2007; Zimmerman, 2000b). Perels, Dignath, and Schmitz (2009, p. 20) explained that training which combines the teaching of strategies with mathematics mostly focuses on cognitive strategies and rarely on self-regulatory or metacognitive learning strategies. The capability to judge one’s own and others’ work, also referred to as ‘evaluative judgement’ (Panadero, Broadbent, Boud, & Lodge, 2018b), has been identified as a key component in fostering student self-regulation. Self-assessment has also been highlighted as vital in promoting self-regulated strategies and self-efficacy (Panadero et al., 2017).

Self-efficacy beliefs have been found to be sensitive to subtle changes in students’ performance context, to mediate students’ academic achievement and to interact with self-regulated learning processes (Panadero et al., 2017; Zimmerman, 2000a). Self-efficacy is a belief about the personal capabilities to perform a task and reach established goals (Bandura, 1997). These efficacy beliefs influence students’ thoughts, feelings, motivations and actions (Bandura, 1993; Eccles et al., 1993; Zimmerman, 2000a). Students’ beliefs in their own capacity to exercise control over their lives are essential to enhancing learning.

Emotions facilitate or impede students’ self-regulation of learning (Pekrun, Goetz, Titz, & Perry, 2002). Zimmerman (2000a) said that “self-efficacy beliefs have shown convergent validity in influencing such key indices of academic motivation as choice of activities, persistence, level of effort, and emotional reactions” (p. 86). A study by Bandura and Schunk (1981) found that students’ mathematical self-efficacy beliefs were predictive of their choice of engaging in subtraction problems rather than other tasks. The choice of arithmetic activity was in relation to the learners’ sense of efficacy; the higher the sense of efficacy, the more advanced the choice of the arithmetic activity.

Students’ beliefs about their efficacy to manage academic tasks can also influence students emotionally by decreasing anxiety, depression and stress (Bandura, 1997). Positive activating emotions such as enjoyment of learning may generally enhance academic motivation, whereas negative emotions may be detrimental (Pekrun et al., 2002, p. 97). Research has found that students are more willing to invest effort and time in mathematics if learning activities are enjoyable and interesting rather than anxiety-laden or boring (Frenzel, Pekrun, & Goetz, 2007; Villavicencio & Bernardo, 2013). Pekrun et al. (2002) explained that anger, anxiety and shame can be assumed to reduce intrinsic motivation because negative emotions tend to be incompatible with enjoyment as implied by interest and intrinsic motivation.

Self-efficacy has become one of the most important variables both in research on motivation and on self-regulated learning (e.g. Zimmerman, Bandura, & Martinez-Pons, 1992), and self-efficacy has therefore been integrated into self-regulated learning models (Panadero, 2017; Panadero et al., 2017).

The measurement of self-efficacy and self-regulated learning is a difficult area of research, as these concepts belong to an internal process that cannot be assessed directly, and researchers need to find ways to assess them (Boekaerts & Corno, 2005; Panadero et al., 2017). The change of approaches to understanding self-efficacy and self-regulated learning, from a trait-based to a process-based perspective, affects the type of measurement required to capture the phenomenon. Panadero et al. (2017) proposed that the research field of self-regulated learning is in a wave where a range of methods and instruments that combine different features promoting and measuring the progress of self-regulation are used. They further stated that the perspective that self-reporting can measure a static version of self-regulated learning has changed; self-reports are now used in more contextualised measures or in combination with other measures to triangulate the data (e.g. Panadero, Alonso-Tapia, & Huertas, 2012).

The position taken in this paper is that feedback, self-regulation and self-efficacy are core concepts of responsive pedagogy, and that responsive pedagogy can enhance student learning.

3. Interventions to enhance student learning

In the interest of making learning processes more efficient, multiple intervention studies aimed at fostering assessment and self-regulated learning have been conducted in schools (e.g. Boekaerts & Corno, 2005; Dignath & Büttner, 2008; Panadero, Klug, & Järvelä, 2016; Perels et al., 2009). Dignath and Büttner’s (2008) meta-analysis of 49 studies on primary schools and 35 on secondary schools found that the effect sizes for interventions fostering self-regulated learning in mathematics were higher among primary school students than for secondary school students.

Further, the effect sizes for mathematics performance at secondary school were found to be higher if the theoretical background of the intervention focused on motivational rather than metacognitive learning theories (Dignath & Büttner, 2008, p. 248). This aligns with Wigfield’s (1994) findings that students’ achievement beliefs in mathematics develop negatively as they get older. Still, teachers’ work in developing motivational strategies and metacognitive reflections is found to be effective in interventions that mainly focus on strengthening students’ cognitive strategies (Dignath & Büttner, 2008, p. 252). These findings are based on theories claiming that older students benefit more from elaborating on the application of metacognitive strategies that they might already possess to reach a more sophisticated level of strategy use (p. 253). Following Zimmerman’s (2000b, 2002) model of developing self-regulated learning, students start learning by modelling and imitating. Only at the higher developmental levels can students control and regulate their own learning processes independently of others. In these stages, metacognitive reflection about when and how to use which strategy takes place independently of the teacher, while students require support in earlier stages (Zimmerman, 2002). Still, it takes time to develop competency in self-regulated learning.

Kim (2005) conducted an experiment in Korea with 76 sixth graders in mathematics over nine weeks to study the effects of a constructivist approach on academic achievement, self-concept and learning strategies, and student preference. The study revealed that constructivist teaching was more effective than traditional teaching in terms of academic achievement. Further, the constructivist teaching was not effective in relation to self-concept and learning strategies, but had some effect upon motivation, anxiety towards learning and self-monitoring.

These former intervention studies show that an emphasis on self-regulated learning, achievement emotions and affective dimensions seems to be important for enhancing student learning in lower secondary school. Further, cognitive and metacognitive strategies seem to be effective for enhancing students’ learning processes.

4. Methodology

4.1. Sample—recruitment and participants

Principals from schools in western Norway were invited to an information meeting about the project. This was done in accordance with research emphasising a need for involvement by the schools’ leadership (Borko, 2004). Nine schools that expressed an interest in the project received a letter of invitation with information about the intervention study. Math teachers teaching grade 9 were invited by the principals at each school to participate in the project. The number of classes amounted to 40, the same number as participating teachers. The schools agreed to take part as experimental schools, and the involved teachers signed consent forms to participate in accordance with the Norwegian Centre for Research Data (NSD). The schools were both rural and urban and differed in size. It should also be noted that the school principals (or vice principals) were asked to participate in the intervention activities at the project level as well as at the regional and school levels.

In addition, 11 comparable schools were recruited as a control group. In both the experimental and the control group, the students responded to a pre-post questionnaire and took a pre-post achievement test. An overview of the number of participants who answered throughout the study is found in Table 1.

Table 1. Overview of participants throughout the study

4.2. The intervention on responsive pedagogy

In this study, an aim of the intervention was to develop the teachers’ responsive pedagogy to enhance student learning in mathematics through feedback dialogues, students’ self-regulatory processes, and by strengthening students’ beliefs in their abilities to master mathematics. The intervention period lasted for seven months and included three seminars hosted by the research team for all participating teachers. Each seminar focused on a central part of responsive pedagogy: feedback interactions, self-regulation and self-efficacy. In the first seminar, about feedback interactions, the participating teachers were introduced to theories on feedback dialogues (e.g. Gamlem, 2015; Hattie & Timperley, 2007) to enhance student learning, and some principles for feedback (e.g. timing). Further examples were shared and discussed among the participants. In the second seminar, theories about self-regulation (e.g. Zimmerman, 1986, 2001) were introduced to the teachers. How self-regulation can be implemented in the classroom was discussed among the participating teachers and researchers. In addition, Professor Anna Sfard (University of Haifa) held a lecture and discussed the topic: “How to develop mathematical discourses”. In the third seminar, the teachers worked with the concept of self-efficacy. This seminar started with a lecture by Associate Professor Karin S. Street (Oxford University). Further, the teachers discussed their understanding of this concept and shared experiences of how this might be seen in students’ behaviour in the classroom.

A core principle of the intervention was that the teachers should not be told by the research team how to provide, for example, feedback, but rather be empowered by enriching their present responsive pedagogy so that they would be able to develop practices addressing self-regulation and self-efficacy in dialogues with their students. By focusing on the individual professional development aspect, we recognise that the rigour of the study is reduced, as the teaching practices were not uniform. Support for this decision was found in Timperley’s work (Timperley, 2011; Timperley, Wilson, Barrar, & Fung, 2007) stating that by using established practice and meeting external expertise, teachers will be empowered to develop their own understanding and practice of responsive pedagogy, thereby enhancing affective, cognitive and metacognitive aspects of students’ learning of mathematics.

The intervention is thus a weak form of manipulation at the group level (Johnson, Russo, & Schoonenboom, 2017). The cause (responsive pedagogy) is manipulated in an experimental group at the individual level. Before the intervention, natural teacher-student interactions occur, and during the intervention, teachers emphasise responsive pedagogy in their interactions with their students to enhance student learning.

The average effect of the intervention will be obtained by comparing students with an emphasis on responsive pedagogy (the experimental group) to students who do not have a manipulation of responsive pedagogy (the control group). Johnson et al. (2017, p. 8) explained that “such cases have been called counterfactual causation, but they contain manipulation as well because the independent variable is said to be manipulated”.

4.3. Data collection

The research design was a non-equivalent control group pre-test/post-test design (see Table 2). In this study, data were collected through a self-report questionnaire for students in paper form and through a national achievement test in mathematics that was administered digitally (pre- and post-intervention). The achievement test was answered a few weeks prior to the questionnaire.

Table 2. Non-equivalent control group of pre-test/post-test design

The pre-measure questionnaire was administered in September 2016. The total response rate was 90% (N = 1899; intervention group, 94%, N = 1003; control group, 85%, N = 896).

The post-measure questionnaire was administered in April/May 2017, immediately after the completion of the intervention, with a total response rate of 81.3% for those participating in both the pre- and post-measures (n = 1612).

Based on an information letter from the research group, the teachers administered the questionnaire and achievement test in their mathematics classes. Confidentiality was maintained by placing the answered questionnaires in an anonymous envelope with an ID number, and the envelopes were returned to the research group.

4.3.1. Questionnaire

To measure constructs such as learning strategies and affective dimensions of self-regulation, items of the Norwegian version of the Cross-Curricular Competencies questionnaire (CCC) (Lie, Kjærnsli, Roe, & Turmo, 2001) were used. The research group contextualised the items into mathematics. Anxiety and enjoyment were measured by items from the Achievement Emotions Questionnaire–Mathematics (AEQ-M) (Pekrun, Goetz, & Frenzel, 2005). The anxiety scale consisted of six items, two items from each of the three contextual dimensions of anxiety (class-related, learning-related and test-related). The enjoyment scale was based on eight items from the same manual (Pekrun et al., 2005) and was composed of items from the different contextual dimensions.

A 4-point Likert scale with the responses strongly disagree (1), disagree (2), agree (3) and strongly agree (4) was used. Negatively worded items were reversed before calculating the total score for the scales. The students were given 45 minutes to answer the questionnaire.
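The reverse-scoring step described above can be sketched as follows. This is a minimal illustration, assuming the usual mirror transformation on a 1 to 4 scale; the function names, item names and example responses are hypothetical and are not taken from the study.

```python
def reverse_score(response, low=1, high=4):
    """Mirror a Likert response so that 1 <-> 4 and 2 <-> 3 on a 1-4 scale."""
    if not low <= response <= high:
        raise ValueError(f"response {response} is outside {low}-{high}")
    return low + high - response


def scale_total(responses, negatively_worded):
    """Sum item responses, reversing the items flagged as negatively worded.

    responses: dict mapping item name -> response (1-4)
    negatively_worded: set of item names that must be reversed first
    """
    return sum(
        reverse_score(value) if item in negatively_worded else value
        for item, value in responses.items()
    )


# Hypothetical three-item scale where item "q2" is negatively worded.
example = {"q1": 4, "q2": 1, "q3": 3}
total = scale_total(example, negatively_worded={"q2"})
```

Reversing before summing ensures that a high total always points in the same direction for every item in a scale.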

Table 3 shows item examples and Cronbach’s alpha for each subscale in the student questionnaire.

Table 3. Questionnaire, item examples and Cronbach’s alpha for outcome measures
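For readers unfamiliar with the reliability coefficient reported for each subscale, the sketch below computes Cronbach's alpha from item-level responses using the standard variance-based formula. The study itself used SPSS; the function name and the example data here are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items, each a list of scores
    given by the same respondents in the same order."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Total score per respondent across all items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical responses from four students to a two-item scale.
alpha = cronbach_alpha([[1, 2, 3, 4], [1, 2, 3, 4]])
```

Alpha approaches 1 when the items vary together across respondents, and falls as the items become less consistent with one another.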

4.3.2. Achievement test

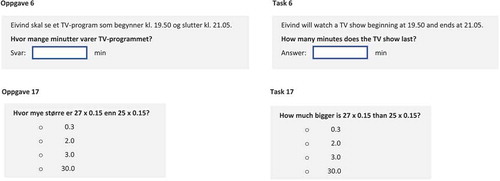

Achievement in mathematics was measured by the Norwegian national test in mathematics for 9th grade students. Two sample questions from the test are presented in Figure 1. The pre-test was the ordinary national test in mathematics organised digitally for all schools in Norway in September 2016. The participants had 90 minutes to answer each of the pre- and post-achievement tests. The post-measure test was organised digitally by the research group in June 2017 using the same test as for the pre-measure (baseline). To counteract the potential ceiling effect, five assignments at the upper level of difficulty were added in the last part of the test. These five assignments were suggested by the teachers involved in the intervention (N = 40). Both achievement tests were completed digitally and were manually paired with the questionnaire data in SPSS.

4.3.3. Intervention treatment-control variable

The questionnaire included an item pool of 32 items measuring different aspects of responsive pedagogy. Based on the content of responsive pedagogy in the intervention, the researchers marked the items that best reflected the intervention. Items marked by five or all six researchers were included in an intervention-control variable. Twelve items met this criterion, and these were used in a scale named the “Responsive pedagogy intervention index” (see Table 3 for an item example).

Only classes that showed improvement from pre- to post-measure or were stable on the responsive pedagogy intervention index were included in the analyses for the intervention group. Classes that had a negative development on the responsive pedagogy intervention index were excluded from further analysis. Therefore, the sample of the intervention group was reduced to 494 students (see Table 1).
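The class-level inclusion rule can be illustrated with a short sketch. The text does not specify how change on the index was computed or what counted as "stable", so this example assumes a comparison of class means and treats any non-negative change as eligible; the function name and data are hypothetical.

```python
from statistics import mean


def classes_to_keep(pre, post):
    """Return class ids whose mean index score did not decrease.

    pre, post: dicts mapping class id -> list of student index scores.
    Assumption: "stable" is read as a change of zero or more.
    """
    return sorted(
        class_id
        for class_id in pre
        if class_id in post and mean(post[class_id]) - mean(pre[class_id]) >= 0
    )


# Hypothetical data for three classes.
pre = {"A": [2.0, 3.0], "B": [3.0, 3.0], "C": [2.5, 2.5]}
post = {"A": [3.0, 3.0], "B": [2.0, 3.0], "C": [2.5, 2.5]}
kept = classes_to_keep(pre, post)
```

In this made-up example, class A improves and class C is unchanged, so both are kept, while class B declines and is dropped.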

4.4. Data analysis

Descriptive analyses, chi-square tests, independent samples t-tests and one-way ANCOVAs were conducted in IBM SPSS Statistics 24 for the pre- and post-questionnaire and the pre- and post-achievement test in mathematics.

4.4.1. Missing data

Analyses of missing data revealed that, in the questionnaire, the percentage of missing responses per item was between 5.6% and 11.8% (M = 7.5, SD = 1.13). One possible reason for the missing responses is that the questionnaires were optically scanned into a database, and that the students did not carefully fill in the answers within each box. This might indicate that the missing data are random. Missing data were replaced by a mean score at the single-item level, so no participants were excluded from the analysis. For the achievement test, only participants who had taken both the pre- and post-test were included in the analysis.
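Mean replacement at the single-item level, as described above, can be sketched as follows. This is an illustrative reading of the procedure and not the SPSS routine used in the study; the function name and data are hypothetical.

```python
from statistics import mean


def impute_item_means(rows):
    """Replace missing responses (None) with the mean of the observed
    responses to the same item.

    rows: list of respondents, each a list of item responses or None.
    """
    n_items = len(rows[0])
    item_means = []
    for j in range(n_items):
        observed = [row[j] for row in rows if row[j] is not None]
        item_means.append(mean(observed))
    return [
        [item_means[j] if value is None else value for j, value in enumerate(row)]
        for row in rows
    ]


# Hypothetical responses: the second student skipped the first item.
data = [[4, 2], [None, 3], [2, 1]]
completed = impute_item_means(data)
```

This keeps every respondent in the data set, at the cost of slightly reducing the variance of each imputed item.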

5. Results

5.1. Demographic and descriptive statistics

Table 4 displays the comparison of the intervention and control groups, revealing minor socio-cultural background and demographic differences between the groups.

Table 4. Comparison of the background variables of the intervention and control groups

Descriptive statistics for scales and variables used in the main analysis are shown in Table 5: learning strategies (rehearsal, elaboration, control strategies), affective dimensions (task value motivation, effort and persistence, self-efficacy, self-concept), achievement emotions (anxiety, enjoyment) and achievement (achievement in mathematics). These variables are presented at the pre-measure (t1) and post-measure (t2) for the intervention.

Table 5. Scales and variables in main analysis presented as pre- and post-measures of intervention

5.2. Correlation among the variables

The results showed significant correlations (p < .01) between all the variables, except for two correlations in the anxiety scale (see Table 6). The significant correlations at the .01 level ranged from r = .07 to r = .76. The highest significant correlations were between variables in the affective dimensions (self-conception and self-efficacy, r = .76, p < .01) and the achievement emotions (enjoyment and task value motivation, r = .74, p < .01). The responsive pedagogy intervention index (RPII) showed significant positive correlations with all variables (p < .01), except for the anxiety variable, where the correlation was negative (r = −.18, p < .01).

Table 6. Correlation matrix with the outcome-variables (N = 1165)

5.3. Inferential statistics, one-way ANCOVA

A series of 10 one-way between-groups analyses of covariance (ANCOVA) was conducted to investigate the effects of the intervention (see Table 7). The independent variable was the intervention, and the dependent variables were learning strategies (rehearsal, elaboration, control strategies), affective dimensions (self-efficacy, self-conception, task value motivation, effort and persistence), achievement emotions (anxiety, enjoyment) and achievement in mathematics. Students’ scores on the questionnaire and the national test in mathematics served as a pre-measure and were used as the covariate in the analysis. Preliminary checks revealed no violation of the assumptions of normality, linearity, homogeneity of variances or homogeneity of regression slopes.
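To make the logic of adjusting for the pre-measure concrete, the sketch below computes a covariate-adjusted difference in post-measure means for two groups, using the pooled within-group slope of a one-covariate ANCOVA. It does not reproduce the SPSS analysis or its significance tests; the function name and scores are hypothetical.

```python
from statistics import mean


def adjusted_difference(pre_a, post_a, pre_b, post_b):
    """Covariate-adjusted difference in post-measure means (group a - group b).

    Uses the pooled within-group slope of post on pre, as in a
    one-covariate ANCOVA with equal slopes assumed in both groups.
    """
    def sums(pre, post):
        mx, my = mean(pre), mean(post)
        sxx = sum((x - mx) ** 2 for x in pre)
        sxy = sum((x - mx) * (y - my) for x, y in zip(pre, post))
        return mx, my, sxx, sxy

    mx_a, my_a, sxx_a, sxy_a = sums(pre_a, post_a)
    mx_b, my_b, sxx_b, sxy_b = sums(pre_b, post_b)
    slope = (sxy_a + sxy_b) / (sxx_a + sxx_b)
    return (my_a - my_b) - slope * (mx_a - mx_b)


# Hypothetical scores: post = 2 * pre in the control group,
# post = 2 * pre + 1 in the intervention group.
diff = adjusted_difference(
    pre_a=[2, 3, 4], post_a=[5, 7, 9],   # intervention
    pre_b=[1, 2, 3], post_b=[2, 4, 6],   # control
)
```

The raw difference in post-measure means is partly due to the groups starting at different levels; subtracting the slope times the pre-measure gap removes that part and leaves the difference attributable to group membership under the model's assumptions.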

Table 7. Inferential statistics: Intervention outcomes (Main analysis, ANCOVA with pre-measure score as covariate)

After adjusting for pre-measure scores, there were significant differences between the intervention and control groups for seven of the dependent variables on post-measure scores. The corresponding effect sizes (Cohen’s d) were very small (see Table ).
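As an illustration of the effect size mentioned above, the following minimal sketch computes Cohen’s d for two independent groups. The article does not state which variant of d was used, so the pooled standard deviation form on raw scores is an assumption here; the function name `cohens_d` and the toy scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical post-measure scores, not taken from the study.
intervention_scores = [2, 4, 4, 6]
control_scores = [1, 3, 3, 5]
d = cohens_d(intervention_scores, control_scores)
```

By common convention, values of d around 0.2 are read as small, 0.5 as medium and 0.8 as large, which is the scale against which "very small" effects should be understood.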

5.4. Summary—non-equivalent control group of pre-test/post-test study

This study revealed small, significant short-term differences between the total scores for the pre- and post-measures in the intervention group for the variables elaboration, task value motivation, effort and persistence, self-efficacy and self-conception. Furthermore, we found significant differences between the intervention group and the control group for the two variables in the achievement emotions scale, anxiety and enjoyment. The results revealed no significant differences between the groups for the variables rehearsal, control strategies and achievement in mathematics.

6. Discussion

Small significant differences were found between the total scores for the pre- and post-measures for the intervention group in the cognitive scale learning strategies (elaboration), affective dimensions (task value motivation, effort and persistence, self-efficacy and self-concept) and achievement emotions (anxiety and enjoyment). These results align with earlier studies presented in this article (e.g. Frenzel et al., 2007; Hafen et al., 2015; Zimmerman et al., 1992). The results revealed no significant differences between the intervention and control groups for the variables rehearsal and control strategies. Montague (2003) and Swanson and Jerman (2006) claim that cognitive strategy instruction which incorporates research-based practices and procedures influences mathematical learning. Still, our analysis revealed no significant differences between the groups for achievement in mathematics. This indicates that responsive pedagogy does not necessarily strengthen students’ mathematical learning. It might be that responsive pedagogy yields better outcomes in problem solving and modelling (e.g. Montague, 2003; OECD, 2019) than in acquiring elementary algebraic skills. Since this intervention study aimed to strengthen teachers’ responsive pedagogy in order to improve students’ learning outcomes for feedback interactions, self-regulation and self-efficacy beliefs (explained as cognitive, meta-cognitive and affective dimensions), this result might be reasonable. Working with teachers’ assessment practice might thus not necessarily lead to higher student achievement in, for example, mathematics. Baird, Andrich, Hopfenbeck, and Stobart (2017) state that assessment practices to improve learning must be examined further, as the evidence for the learning effects of assessments is still inconclusive.
Their claim is supported by Bennett (2011), who suggested that the evidence of formative assessments having an impact on learning is not sufficiently convincing. Bennett argued that assessments for learning rooted in pedagogical skills might be insufficient and that they should be domain-specific. This might be a critique of our project, since responsive pedagogy was not deeply integrated in mathematics but was conceptualised as a more general pedagogical approach. Another reason for not finding an increase in student mathematics achievement might be that the effect was measured at the completion of the intervention, and there might be more evident long-term effects that the current study did not capture. Still, we notice a change in students’ attitude to learning mathematics, but this cannot be expected to have an immediate effect on their mathematics achievement.

Our study revealed small significant differences between the pre- and post-measures for the intervention group on the affective dimensions (task value motivation, effort and persistence, self-efficacy and self-concept) and achievement emotions (anxiety and enjoyment). This is interesting since self-efficacy beliefs have shown convergent validity in influencing key indices of academic motivation such as choice of activities, persistence, level of effort and emotional reactions (Zimmerman, 2000a).

Emotions also predict academic achievement and are closely intertwined with essential components of students’ self-regulated learning such as interest, motivation, strategies of learning and internal versus external control of regulation (Pekrun et al., 2002, p. 103). The small but significant improvement on the self-efficacy scale over the intervention period is promising, and outcome variables on affect, such as self-efficacy, have become important variables in research on self-regulated learning (e.g. Panadero, 2017; Panadero et al., 2017; Zimmerman et al., 1992). Dweck (2008) claims that if an aim is to change students’ attributions for success and failure, teachers should emphasise the importance of systematically using learning strategies appropriate to the task at hand. The way in which teachers give feedback to students about their use of learning strategies will probably influence their attribution for success or failure more than feedback regarding their abilities or effort.

Further, significant differences between the intervention group and the control group were found for the variables anxiety and enjoyment in the achievement emotions scale. We argue that this result is important for student learning outcomes, since positive activating emotions such as enjoyment of learning may enhance academic motivation (Pekrun et al., 2002). Students thus seem more willing to invest their effort and time in mathematics if the learning activities are enjoyable and interesting rather than anxiety-laden or boring, as earlier studies have also found (e.g. Frenzel et al., 2007; Villavicencio & Bernardo, 2013).

7. Summary and limitations

This study analysed how dimensions of responsive pedagogy in natural classroom settings over seven months might strengthen students’ self-regulation, self-efficacy and achievement in mathematics. We found effects on one cognitive dimension (the learning strategy elaboration) and on several affective dimensions, including self-efficacy, self-concept, task value motivation, and effort and persistence. We did not find any effects on metacognitive dimensions or mathematical achievement. Our contribution is therefore to show that work on students’ self-efficacy, self-regulation and feedback practices does not necessarily produce an immediate effect on learning achievement, and teachers, school leaders and, not least, policy makers should keep this in mind. However, this study revealed that changes in aspects of learning such as motivation, self-regulation and self-efficacy can be observed immediately after an intervention with teachers.

The analyses showed that a seven-month instructional intervention based on responsive pedagogy yielded significant short-term effects on student learning concerning students’ self-efficacy, motivation, overall self-concept and self-regulation. Further, significant differences were found between the intervention group and the control group for the two variables in the achievement emotions scale, anxiety and enjoyment. An emphasis on teachers’ practices in responsive pedagogy seemed to make learners believe in their own competence and ability to successfully complete tasks and meet challenges, thereby strengthening their self-efficacy in relation to mathematics and increasing their overall self-concept.

The findings of this study suggest that short-term effects can be observed in the affective dimensions (more enjoyment, reduced anxiety) of learning mathematics. For the cognitive dimensions of learning, specifically increased achievement in mathematics, the findings do not document any short-term effects. Further studies are needed to examine long-term achievement effects, for example twelve months after the intervention. Furthermore, this study provides explanations for how learning in responsive pedagogy can be seen in classrooms and how it triggers aspects of cognition, metacognition, affect and emotions.

Regarding the limitations of the study, we would like to note that the responsive pedagogy intervention index (RPII) was constructed as a part of this research project and has not been validated. Still, based on the theoretical foundation for this article, the RPII shows good convergent validity with constructs of learning strategies, the affective dimensions and achievement in mathematics (see Table ).

Further, we measured progress in learning by asking students to respond to statements such as: “When I am working on mathematics, I find out how the task fits into what I have already learned”. It is difficult to know whether the students understood these statements in the questionnaire, and this should therefore be regarded as a limitation.

Some of the sub-scales from the Cross-Curricular Competencies questionnaire (Lie et al., 2001) had a low number of items and showed insufficient Cronbach’s alpha reliability. However, in line with theoretical foundations and prior research, these sub-scales correlated as expected with other scales in the study (Lie et al., 2001), which supports the construct validity of these scales.
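For readers unfamiliar with the reliability coefficient referred to above, the following is a minimal sketch of Cronbach’s alpha computed from item-level scores. It is an illustration only, not the authors' code; the function name `cronbach_alpha` and the toy responses are hypothetical.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a sub-scale.

    item_scores: list of items, each a list with one score per respondent
    (all items answered by the same respondents, in the same order).
    """
    k = len(item_scores)
    # Total scale score per respondent: sum across items.
    totals = [sum(responses) for responses in zip(*item_scores)]
    item_variance_sum = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical responses of four students to a three-item sub-scale.
toy_items = [
    [1, 2, 3, 4],
    [2, 2, 3, 5],
    [1, 3, 3, 4],
]
alpha = cronbach_alpha(toy_items)
```

Because the factor k / (k − 1) and the ratio of summed item variances to total-score variance both depend on the number of items, sub-scales with very few items tend to yield lower alpha values even when the items are reasonably related.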

Demographic variables indicate that the control and intervention groups are similar; still, interpretations must be made with caution due to sample size and the quasi-experimental design. Future research should investigate the long-term effects of responsive pedagogy interventions in longitudinal designs.

Acknowledgements

We are grateful to the research assistants John Ivar Sunde and Jeanette Otterlei Holmar for their help in collecting data and administering the data collection. Further, we thank Professor John Gardner (University of Stirling), Professor Louise Hayward (University of Glasgow), Professor Reinhard Pekrun (Ludwig-Maximilians-Universität München) and Professor Mien Siegers (Maastricht University) for valuable feedback on the research project. We also want to thank all students, teachers and schools involved in this project for their willingness to participate and share practices and reflections.

Additional information

Notes on contributors

Siv M. Gamlem

Siv M. Gamlem is a professor of pedagogy at Volda University College, Norway. Her research interests are assessment, learning and systematic observation, and she has published widely in these areas.

Lars M. Kvinge

Lars M. Kvinge is an assistant professor at the Department of Teacher Education, Western Norway University of Applied Sciences, Stord, Norway.

Kari Smith

Kari Smith is a professor of education and Head of the National Research School in Teacher Education at the Norwegian University of Science and Technology, Trondheim, Norway. Her research interests are assessment, teacher education and professional learning, and she has published widely within these areas.

Knut Steinar Engelsen

Knut Steinar Engelsen is a professor of pedagogy and ICT in learning at Western Norway University of Applied Sciences, Stord, Norway. Engelsen has authored and co-authored several books, scientific articles and book chapters about assessment.

References

- Andrade, H. (2010). Students as the definitive source of formative assessment. In H. Andrade & G. Cizek (Eds.), Handbook of formative assessment (pp. 90–18). New York, NY: Routledge.

- Baird, J., Andrich, D., Hopfenbeck, T. N., & Stobart, G. (2017). Assessment and learning: Fields apart? Assessment in Education: Principles, Policy and Practice, 24(3), 317–350. doi:10.1080/0969594X.2017.1319337

- Bandura, A. (1989). Social cognitive theory. In R. Vasta (Ed.), Annals of child development: Vol. 6. Six theories of child development (pp. 1–60). Greenwich, CT: JAI.

- Bandura, A. (1993). Perceived self-efficacy in cognitive development and functioning. Educational Psychologist, 28(2), 117–148. doi:10.1207/s15326985ep2802_3

- Bandura, A. (1997). Self-efficacy: The exercise of control. New York, NY: W.H. Freeman and Company.

- Bandura, A., & Schunk, D. H. (1981). Cultivating competence, self-efficacy, and intrinsic interest through proximal self-motivation. Journal of Personality and Social Psychology, 41, 586–598. doi:10.1037/0022-3514.41.3.586

- Bennett, R. E. (2011). Formative assessment: A critical review. Assessment in Education: Principles, Policy & Practice, 18(1), 5–25.

- Black, P., & Wiliam, D. (1998). Inside the black box. Raising standards through classroom assessment. Phi Delta Kappan, 80(2), 139–148.

- Black, P., & Wiliam, D. (2009). Developing the theory of formative assessment. Educational Assessment, Evaluation and Accountability, 21(1), 5–31. doi:10.1007/s11092-008-9068-5

- Black, P., & Wiliam, D. (2018). Classroom assessment and pedagogy. Assessment in Education: Principles, Policy & Practice, 25(6), 551–575. doi:10.1080/0969594X.2018.1441807

- Boekaerts, M., & Corno, L. (2005). Self-regulation in the classroom: A perspective on assessment and intervention. Applied Psychology: An International Review, 54(2), 199–231. doi:10.1111/j.1464-0597.2005.00205.x

- Borko, H. (2004). Professional development and teacher learning: Mapping the terrain. Educational Researcher, 33(8), 3–15. doi:10.3102/0013189X033008003

- Butler, D. L., & Winne, P. H. (1995). Feedback and self-regulated learning: A theoretical synthesis. Review of Educational Research, 65(3), 245–281. doi:10.3102/00346543065003245

- Dignath, C., Buettner, G., & Langfeldt, H.-P. (2008). How can primary school students learn self-regulated learning strategies most effectively?: A meta-analysis on self-regulation training programmes. Educational Research Review, 3(2), 101–129. doi:10.1016/j.edurev.2008.02.003

- Dignath, C., & Büttner, G. (2008). Components of fostering self-regulated learning among students. A meta-analysis on intervention studies at primary and secondary school level. Metacognition and Learning, 3, 231–264. doi:10.1007/s11409-008-9029-x

- Dweck, C. S. (2008). Mindset. The new psychology of success. New York: Ballantine Books.

- Eccles, J. S., Midgley, C., Wigfield, A., Buchanan, C. M., Reuman, D., Flanagan, C., & Mac Iver, D. (1993). Development during adolescence: The impact of stage-environment fit on young adolescents’ experiences in schools and families. American Psychologist, 48(2), 90–101. doi:10.1037/0003-066X.48.2.90

- Frenzel, A. C., Pekrun, R., & Goetz, T. (2007). Perceived learning environment and students’ emotional experiences: A multilevel analysis of mathematics classrooms. Learning and Instruction, 17, 478–493. doi:10.1016/j.learninstruc.2007.09.001

- Gamlem, S. M. (2015). Tilbakemelding for læring og utvikling [Feedback for Learning and Development]. Oslo: Gyldendal Forlag.

- Gardner, J. (2012). Quality assessment practice. In J. Gardner (Ed.), Assessment and learning (2nd ed., pp. 103–122). London: Sage Publications.

- Hafen, C. A., Hamre, B. K., Allen, J. P., Bell, C. A., Gitomer, D. H., & Pianta, R. C. (2015). Teaching through interactions in secondary school classrooms: Revisiting the factor structure and practical application of the classroom assessment scoring system-secondary. The Journal of Early Adolescence, 35, 651–680. doi:10.1177/0272431614537117

- Hattie, J., & Gan, M. (2011). Instruction based on feedback. In R. E. Mayer & P. A. Alexander (Eds.), Handbook of research on learning and instruction (pp. 249–271). New York, NY: Routledge.

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112. doi:10.3102/003465430298487

- Johnson, B. R., Russo, F., & Schoonenboom, J. (2017). Causation in mixed methods research: The meeting of philosophy, science, and practice. Journal of Mixed Methods Research, 1–20. doi:10.1177/1558689817719610

- Kim, J. S. (2005). The effects of a constructivist teaching approach on student academic achievement, self-concept, and learning strategies. Asia Pacific Education Review, 6(1), 7–19.

- Kluger, A. N., & DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin, 119, 254–284. doi:10.1037/0033-2909.119.2.254

- Lie, S., Kjærnsli, M., Roe, A., & Turmo, A. (2001). Godt rustet for framtida? Norske 15-åringers kompetanse i lesing og realfag i et internasjonalt perspektiv. [Well prepared for future? Norwegian 15-year-olds in reading and science in an international perspective]. Oslo: University of Oslo, Acta Didactica (4/2001).

- Mehan, H. (1979). “What time is it, Denise?” Asking known information questions in classroom discourse. Theory into Practice, 18(4), 285–294. doi:10.1080/00405847909542846

- Mevarech, Z. R., Tabuk, A., & Sinai, O. (2006). Meta-cognitive instruction in mathematics classrooms: Effects on the solution of different kinds of problems. In A. Desoete & M. Veenman (Eds.), Meta-cognition in mathematics (pp. 70–78). Hauppauge, NY: Nova Science.

- Montague, M. (2003). Solve It! A mathematical problem-solving instructional program. Reston, VA: Exceptional Innovations.

- Niss, M., & Jensen, T. (2002). Kompetencer og matematiklaering Ideer og inspiration til udvikling af matematikundervisning i Danmark. [Skills and Mathematics Learning Ideas and inspiration for development of mathematics education in Denmark]. Copenhagen: Ministry of Education. Retrieved from http://static.uvm.dk/Publikationer/2002/kom/hel.pdf

- OECD. (2004). The PISA 2003 assessment framework: Mathematics, reading, science and problem solving knowledge and skills, PISA. Paris: Author. doi:10.1787/9789264101739-en

- OECD. (2019). PISA 2018 mathematics framework. In PISA 2018 assessment and analytical framework. Paris: Author. doi:10.1787/13c8a22c-en

- Panadero, E. (2017). A review of self-regulated learning: Six models and four directions for research. Frontiers in Psychology, 8(422). doi:10.3389/fpsyg.2017.00422

- Panadero, E., Alonso-Tapia, J. A., & Huertas, J. (2012). Rubrics and self-assessment scripts effects on self-regulation, learning and self-efficacy in secondary education. Learning and Individual Differences, 22(6), 806–813. doi:10.1016/j.lindif.2012.04.007

- Panadero, E., Andrade, H., & Brookhart, S. (2018a). Fusing self-regulated learning and formative assessment: A roadmap of where we are, how we got here, and where we are going. Australian Educational Researcher, 45, 13–31. doi:10.1007/s13384-018-0258-y

- Panadero, E., Broadbent, J., Boud, D., & Lodge, J. M. (2018b). Using formative assessment to influence self- and co-regulated learning: The role of evaluative judgement. European Journal of Psychology of Education. doi:10.1007/s10212-018-0407-8

- Panadero, E., Jonsson, A., & Botella, J. (2017). Effects of self-assessment on self-regulated learning and self-efficacy: Four meta-analyses. Educational Research Review, 22, 74–98. doi:10.1016/j.edurev.2017.08.004

- Panadero, E., Klug, J., & Järvelä, S. (2016). Third wave of measurement in the self-regulated learning field: When measurement and intervention come hand in hand. Scandinavian Journal of Educational Research, 60(6), 723–735. doi:10.1080/00313831.2015.1066436

- Pekrun, R., Goetz, T., & Frenzel, A. C. (2005). Achievement emotions questionnaire – Mathematics (AEQ-M) user’s manual. Munich, Germany: University of Munich, Department of Psychology.

- Pekrun, R., Goetz, T., Titz, W., & Perry, R. P. (2002). Academic emotions in students’ self-regulated learning and achievement: A program of qualitative and quantitative research. Educational Psychologist, 37, 91–105. doi:10.1207/S15326985EP3702_4

- Perels, F., Dignath, C., & Schmitz, B. (2009). Is it possible to improve mathematical achievement by means of self-regulation strategies? Evaluation of an intervention in regular math classes. European Journal of Psychology of Education, 24(1), 17–31. doi:10.1007/BF03173472

- Perrenoud, P. (1998). From formative evaluation to controlled regulation of learning processes: Towards a wider conceptual field. Assessment in Education: Principles, Policy & Practice, 5(1), 85–102. doi:10.1080/0969595980050105

- Sadler, R. (1989). Formative assessment and the design of instructional systems. Instructional Science, 18(2), 119–144. doi:10.1007/bf00117714

- Sadler, R. (1998). Formative assessment: Revisiting the territory. Assessment in Education: Principles, Policy & Practice, 5(1), 77–84. doi:10.1080/0969595980050104

- Sinclair, J. M., & Coulthard, R. M. (1975). Toward an analysis of discourse. The English used by teachers and pupils. London: Oxford University Press.

- Smith, K., Gamlem, S., Sandal, A. K., & Engelsen, K. S. (2016). Educating for the future: A conceptual framework of responsive pedagogy. Cogent Education, 3(1), 1227021. doi:10.1080/2331186X.2016.1227021

- Swanson, H. L., & Jerman, O. (2006). Math disabilities: A selective meta-analysis of the literature. Review of Educational Research, 76(2), 249–274. doi:10.3102/00346543076002249

- Timperley, H. (2011). Using student assessment for professional learning: Focusing on students’ outcomes to identify teachers’ needs (34). Melbourne, State of Victoria: Department of Education and Early Childhood Development.

- Timperley, H., Wilson, A., Barrar, H., & Fung, I. (2007). Teacher professional learning and development. Best evidence synthesis iteration. Education. Wellington, New Zealand: Ministry of Education. Retrieved from https://www.educationcounts.govt.nz/__data/assets/pdf_file/0017/16901/TPLandDBESentireWeb.pdf

- Villavicencio, F. T., & Bernardo, A. B. I. (2013). Positive academic emotions moderate the relationship between self-regulation and academic achievement. The British Journal of Educational Psychology, 83, 329–340. doi:10.1111/j.2044-8279.2012.02064.x

- Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Cambridge, MA: Harvard University Press.

- Weinstein, C. E., Goetz, E. T., & Alexander, P. A. (Eds.). (1988). Learning and study strategies: Issues in assessment, instruction, and evaluation. San Diego: Academic Press.

- Wigfield, A. (1994). Expectancy-value theory of achievement motivation: A developmental perspective. Educational Psychology Review, 6, 49–77. doi:10.1007/BF02209024

- Wiliam, D., & Leahy, S. (2007). A theoretical foundation for formative assessment. In J. McMillan (Ed.), Formative classroom assessment: Theory into practice (pp. 29–42). New York, NY: Teachers College Press.

- Zimmerman, B. J. (1986). Becoming a self-regulated learner: Which are the key subprocesses? Contemporary Educational Psychology, 11(4), 307–313. doi:10.1016/0361-476X(86)90027-5

- Zimmerman, B. J. (1998). Academic studying and the development of personal skill: A self-regulatory perspective. Educational Psychologist, 33(2), 73–86. doi:10.1080/00461520.1998.9653292

- Zimmerman, B. J. (2000a). Self-efficacy: An essential motive to learn. Contemporary Educational Psychology, 25(1), 82–91. doi:10.1006/ceps.1999.1016

- Zimmerman, B. J. (2000b). Attaining self-regulation: A social cognitive perspective. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self-regulation (pp. 13–40). San Diego, California: Academic Press.

- Zimmerman, B. J. (2001). Theories of self-regulated learning and academic achievement: An overview and analysis. In B. J. Zimmerman & D. H. Schunk (Eds.), Self-regulated learning and academic achievement: Theoretical perspectives (pp. 1–37). Mahwah, NJ: Erlbaum.

- Zimmerman, B. J. (2002). Achieving academic excellence: A self-regulatory perspective. In M. Ferrari (Ed.), The pursuit of excellence through education (pp. 85–110). Mahwah, NJ: Lawrence Erlbaum.

- Zimmerman, B. J., Bandura, A., & Martinez-Pons, M. (1992). Self-motivation for academic attainment: The role of self-efficacy beliefs and personal goal setting. American Educational Research Journal, 29(3), 663–676. doi:10.3102/00028312029003663