Abstract

We investigated whether our virtual reality (VR) task could detect extrapersonal neglect in patients with stroke, and we sought to determine which targets were easiest and most difficult for patients with unilateral spatial neglect to detect. Thirty-six participants completed the VR task (neglect [NEG] group, n = 10; elderly healthy [EH] group, n = 11; young healthy [YH] group, n = 15). The VR task consisted of 18 targets, each with a different combination of characteristics (front/side; right/left/both sides; static/dynamic/irregular). Participants verbally reported each target as they detected it. Detection percentages were significantly lower in the NEG group than in the EH and YH groups (p < 0.001). The targets most difficult for the NEG group to detect were a Signal (front/left side/static; detection percentage: NEG = 0%, EH = 100%), a Car (side/left side/static; detection percentage: NEG = 10.0%, EH = 100%), and Cars (side/both sides/static; detection percentage: NEG = 20.0%, EH = 100%). The easiest target for the NEG group to detect was a Human (front/left side/static; detection percentage: NEG = 80.0%, EH = 100%). In conclusion, our VR task can be used to confirm extrapersonal neglect in patients with stroke, and it revealed differences in detection difficulty across stimulus types.

PUBLIC INTEREST STATEMENT

Unilateral spatial neglect (USN) is a common brain dysfunction after stroke in which patients fail to attend to the left side of space or of the objects they look at. USN is a risk factor for accidents while driving a wheelchair and for falls while walking. In view of this risk, we developed a virtual reality (VR) task to detect USN in stroke patients. The VR system consisted of a PC, a head-mounted display, and a pin microphone. A total of 36 participants were enrolled and performed the VR task while seated in a chair. We compared the results of the VR task among three participant groups (a neglect group, an elderly healthy group, and a young healthy group). Our VR task successfully confirmed USN in stroke patients and revealed the degree of detection difficulty of different VR stimuli.

Competing interests

The authors report no conflict of interest.

1. Introduction

Unilateral spatial neglect (USN) refers to a condition characterised by an inability to report, respond to, or orient toward stimuli presented on the side contralateral to a cerebral lesion (Heilman & Valenstein, 1979). USN shows a distinct hemispheric asymmetry: neglect is more frequent, more severe, and more persistent after right-hemisphere lesions (Kerkhoff, 2001). Patients with USN show deterioration in activities of daily living (ADL) (Denes, Semenza, Stoppa, & Lis, 1982; Gialanella & Mattioli, 1992; Kalra, Perez, Gupta, & Wittink, 1997) and a reduced quality of life (Gillen, Tennen, & McKee, 2005; Jutai et al., 2003). However, typical assessments for USN cannot evaluate extrapersonal neglect. Recent studies (Kim, Chun, Yun, Song, & Young, 2011; Kim et al., 2007; Navarro, Lloréns, Noé, Ferri, & Alcañiz, 2013; Sedda et al., 2013) have reported that virtual reality (VR) presented on a TV monitor or display may have advantages over traditional pen-and-paper tests.

Typical assessments for USN include the Behavioural Inattention Test (BIT) (Halligan, Cockburn, & Wilson, 1991; Wilson, Cockburn, & Halligan, 1987) and the Catherine Bergego Scale (CBS) (Azouvi et al., 1996; Bergego et al., 1995). The BIT is composed of 15 subtests that assess different components of USN (body-centred, object-centred, or representational neglect). The CBS assesses USN in ADL; however, CBS scores can be inconsistent because real-life environments (e.g., traffic density or the arrangement of objects) differ on each occasion. Azouvi et al. (2003) reported that USN symptoms (presence or absence, and severity) differ between pen-and-paper test scores and observations of USN in ADL. USN in ADL is a risk factor for accidents during wheelchair use and for falls while walking.

Pen-and-paper tests are unlikely to fully reflect the spatial or dynamic elements of USN. Buxbaum et al. (2004) classified neglect by spatial distance into personal space (the body), peripersonal space (within arm’s reach), and extrapersonal space (beyond arm’s reach). Pen-and-paper tests can assess only peripersonal space and static elements. Extrapersonal neglect has been reported to be more frequent (Bisiach, Perani, Vallar, & Berti, 1986) and more severe than personal or peripersonal neglect (Butler, Eskes, & Vandorpe, 2004). In recent years, several authors have reported the use of VR systems for USN assessment and treatment (Kim et al., 2011, 2007; Navarro et al., 2013; Sedda et al., 2013). Kim et al. (2007) reported that the result of their VR task (deviation angle) was strongly correlated with the line crossing test score. According to Rose, Brooks, and Rizzo (2005), the advantages of VR tasks include their safety; the ability to assess and/or treat neglect in extrapersonal space; ease of adjustment to individual environmental settings; and the ability to assess the processing of dynamic targets, anticipate real-life risks, and measure reaction times and the right/left error ratio.

Despite the apparent advantages of VR, the qualitative characteristics that make targets easy or difficult to recognise remain unclear. We therefore developed a VR task capable of evaluating extrapersonal neglect in situations such as wheelchair use and walking. By improving the understanding of extrapersonal neglect, such a task should be useful for assessment and treatment. This study aimed to confirm the validity of our VR task as an assessment tool for extrapersonal neglect in patients with stroke and to identify the stimulus characteristics that affect detection.

2. Materials and methods

2.1. Participants

A total of 36 participants (20 male) were included in this study and divided into three groups: the neglect (NEG) group (n = 10), the elderly healthy (EH) group (n = 11), and the young healthy (YH) group (n = 15). Participants in the NEG group had left USN following right-hemisphere damage due to stroke, and the right-hemisphere lesions were confirmed on CT or MRI images. USN was diagnosed on the basis of the total CBS score, with a score > 0 indicating the presence of USN. All participants were right-handed, as assessed using the Edinburgh Handedness Inventory (Oldfield, 1971).

The inclusion criteria for the NEG group were as follows: 1) a single right-hemisphere lesion and left USN; 2) ability to sit on a chair for the duration of the evaluation; 3) no apparent cognitive impairment (Mini-Mental State Examination [MMSE] score of more than 23 points); 4) no apparent speech, hearing, or psychological disorder; and 5) normal neck rotation.

The inclusion criteria for the EH and YH groups were as follows: 1) no previous history of stroke; 2) ability to sit in a chair for the duration of the evaluation; 3) no apparent cognitive impairment; 4) no apparent speech, hearing, or psychological disorder; and 5) normal neck rotation. The characteristics of the three groups are summarised in Table 1. This study was approved by the Ethics Committee of Kobe University Graduate School of Health Sciences, and all participants provided informed consent before participating in the study.

Table 1. Participant characteristics for the neglect group (NEG, n = 10), elderly healthy group (EH, n = 11), and young healthy group (YH, n = 15)

2.2. Evaluations

We assessed USN in the NEG group using the BIT, CBS, and Far Space Line Cancellation Task (FSLCT). The BIT comprises 15 subtests that assess various aspects of USN and consists of two parts: conventional subtests and behavioural subtests (Halligan et al., 1991; Wilson et al., 1987). The conventional subtests are the following six traditional pen-and-paper tests: 1) line crossing, 2) letter cancellation, 3) star cancellation, 4) figure copying, 5) line bisection, and 6) representational drawing. USN was defined as a total conventional subtest score below 131 (maximum possible score, 146). The behavioural subtests are the following nine simulated daily living tasks: 1) picture scanning, 2) telephone dialling, 3) menu reading, 4) article reading, 5) setting the time, 6) coin sorting, 7) address and sentence copying, 8) map navigation, and 9) card sorting. USN was defined as a total behavioural subtest score below 68 (maximum possible score, 81).
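The BIT cut-off rule above reduces to one threshold per part. The following Python sketch is illustrative only: the dictionary and function names are ours, not the authors', and it simply encodes the cut-offs and maximum scores stated in this paragraph.

```python
# (cut-off, maximum possible score) for each BIT part, as stated in the text.
# A total score below the cut-off is taken to indicate USN.
BIT_CUTOFFS = {
    "conventional": (131, 146),
    "behavioural": (68, 81),
}


def bit_indicates_usn(part: str, score: int) -> bool:
    """Return True if a BIT part total falls below its USN cut-off."""
    cutoff, maximum = BIT_CUTOFFS[part]
    if not 0 <= score <= maximum:
        raise ValueError(f"{part} score must be between 0 and {maximum}")
    return score < cutoff


print(bit_indicates_usn("conventional", 118))  # True
print(bit_indicates_usn("behavioural", 68))    # False
```

Note that a score exactly at the cut-off (131 or 68) is classified as no USN, following the "less than" wording.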

The CBS detects the effect of USN on ADL and consists of an observation assessment and a self-assessment, the difference between which is used to quantify anosognosia (Azouvi et al., 1996; Bergego et al., 1995). The observation assessment includes the following 10 items concerning daily life or rehabilitation situations: 1) forgets to groom or shave the left part of his/her face; 2) experiences difficulty in adjusting his/her left sleeve or slipper; 3) forgets to eat food on the left side of his/her plate; 4) forgets to clean the left side of his/her mouth after eating; 5) experiences difficulty in looking toward the left; 6) forgets about a left part of his/her body; 7) has difficulty in paying attention to noise or people addressing him/her from the left; 8) collides with people or objects on the left side, such as doors or furniture (either while walking or while driving a wheelchair); 9) experiences difficulty in finding his/her way toward the left when travelling in familiar places or in the rehabilitation unit; and 10) experiences difficulty in finding his/her personal belongings in the room or bathroom when they are on the left side. Each item is rated from 0 to 3 points (0 = no neglect; 1 = mild neglect; 2 = moderate neglect; 3 = severe neglect). A higher total score indicates more severe USN (0 = no neglect; 1–10 = mild neglect; 11–20 = moderate neglect; 21–30 = severe neglect). The self-assessment includes the same 10 items, and patients rate their difficulty with each item on a 4-point scale (0 = no difficulty; 1 = mild difficulty; 2 = moderate difficulty; 3 = severe difficulty). We calculated anosognosia scores by subtracting self-assessment scores from observation assessment scores; a greater difference indicates more severe anosognosia. Of the assessments used in this study, the CBS is the only one that assesses performance in personal, peripersonal, and extrapersonal space.
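The CBS severity bands and the anosognosia difference can be sketched as below. The function names are hypothetical. We assume the anosognosia score is the observation total minus the self-assessment total, as in the original CBS description, so that patients who rate themselves as less impaired than the examiner does receive positive scores; this matches the positive mean anosognosia score reported in the Results.

```python
def cbs_severity(item_scores):
    """Total 10 CBS item ratings (each 0-3) and name the severity band."""
    if len(item_scores) != 10 or any(s not in (0, 1, 2, 3) for s in item_scores):
        raise ValueError("expected 10 item scores, each 0-3")
    total = sum(item_scores)
    if total == 0:
        band = "no neglect"
    elif total <= 10:
        band = "mild"
    elif total <= 20:
        band = "moderate"
    else:
        band = "severe"
    return total, band


def cbs_anosognosia(observation_items, self_items):
    """Observation total minus self-assessment total (assumed direction)."""
    observed, _ = cbs_severity(observation_items)
    self_rated, _ = cbs_severity(self_items)
    return observed - self_rated


# Illustrative ratings, not study data.
print(cbs_severity([2] * 6 + [0] * 4))                        # (12, 'moderate')
print(cbs_anosognosia([2] * 6 + [0] * 4, [1] * 5 + [0] * 5))  # 7
```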

We used the FSLCT (Aimola, Schindler, Simone, & Venneri, 2012; Butler, Lawrence, Eskes, & Klein, 2009) to assess extrapersonal neglect in the NEG group. Although the FSLCT has been used in previous studies, its results have not been compared with those of a VR task. In the standard presentation method used by Butler et al. (2009) and Aimola et al. (2012), the BIT line cancellation test is projected onto a wall using a PC and a projector. The test was presented 250 cm from the participant in Butler et al.’s study and 320 cm away in Aimola et al.’s study. In the present study, the viewing distance was 300 cm, and the projected image was approximately 105 cm high and 140 cm wide. Participants sat on a chair (seat height = 40 cm) and performed the FSLCT with a laser pointer held in the right hand.
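As a rough, non-authoritative check on how much of the visual field the FSLCT occupies, the full visual angle subtended by a flat extent s viewed from distance d is 2·arctan(s / 2d). The sketch below assumes the participant’s eye is centred on the projection, which the text does not state; the function name is ours.

```python
import math


def visual_angle_deg(extent_cm: float, distance_cm: float) -> float:
    """Full angle subtended by a flat extent viewed head-on from its centre."""
    return math.degrees(2 * math.atan(extent_cm / (2 * distance_cm)))


width_deg = visual_angle_deg(140, 300)   # about 26.3 degrees
height_deg = visual_angle_deg(105, 300)  # about 19.9 degrees
print(f"FSLCT projection: {width_deg:.1f} x {height_deg:.1f} degrees")
```

Under that assumption, the projection spans roughly 26° horizontally and 20° vertically, well below the 110° view angle given for the head-mounted display in Section 2.3 (the two figures are not strictly comparable, because the display figure is a nominal device specification).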

Visual field deficits (hemianopia), which could affect VR task results, were examined using the confrontation test. The MMSE (Folstein, Folstein, & McHugh, 1975) was used to assess cognitive function, with cognitive impairment defined as a score of 23 points or less out of a possible 30. The MMSE was used to verify that VR task results reflected USN rather than cognitive impairment.

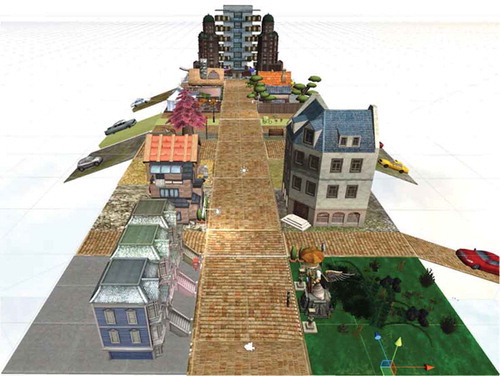

2.3. The VR task: the outdoor walking task

The equipment used for the VR task consisted of a PC and an Oculus Rift head-mounted display (Oculus VR, Irvine, CA, USA) (Figure 1). The VR task program was created in Unity (Unity Technologies, San Francisco, CA, USA) as a 3D environment, and the view angle was 110°. Responses during the VR task were collected with the free voice recognition software Julius (Kyoto University, Kyoto, Japan), using voice input from a pin microphone (ELECOM, Osaka, Japan). The pin microphone was attached to the Oculus Rift with adhesive tape so that neck motion did not affect recordings.

Figure 1. Photograph of a participant using the virtual reality apparatus and a scene in the virtual reality task.

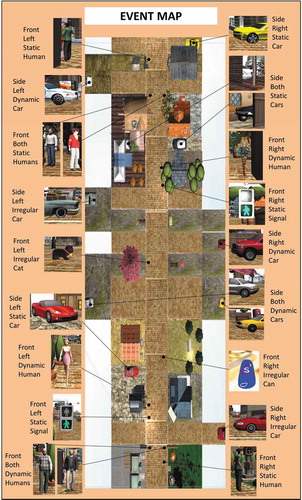

The VR task simulated outdoor walking (Figure ). The total walking distance in the VR environment was approximately 100 m, and participants moved straight ahead along a central main street. Five kinds of targets had to be recognised: a Signal (traffic light), a Human/Humans, a Car/Cars, a Cat, and a Can. The Signal was placed in the upper field of vision, the Human/Humans and Car/Cars were located at eye level, and the Cat and Can appeared suddenly in the lower field of vision. Each target was presented in the peripheral visual field. Targets were located at the side of the road (on the right, the left, or both sides) (Figure ) and were either static or dynamic; in addition, targets appeared either regularly or irregularly. Each target was characterised along three dimensions: 1) position (front or side); 2) side (right side, left side, or both sides); and 3) motion (static, dynamic, or irregular). There were 18 targets in total: seven on the right side, seven on the left side, and four on both sides. The 18 targets (Figure ) were presented in the following fixed (non-randomised) order: 1) a Human (front/right side/static); 2) Humans (front/both sides/dynamic); 3) a Car (side/right side/irregular); 4) a Signal (front/left side/static); 5) a Human (front/left side/dynamic); 6) a Car (side/left side/static); 7) a Can (front/right side/irregular); 8) Cars (side/both sides/dynamic); 9) a Cat (front/left side/irregular); 10) a Car (side/right side/dynamic); 11) a Car (side/left side/irregular); 12) a Signal (front/right side/static); 13) a Human (front/right side/dynamic); 14) Cars (side/both sides/static); 15) Humans (front/both sides/static); 16) a Car (side/left side/dynamic); 17) a Human (front/left side/static); and 18) a Car (side/right side/static). Scores on the VR task ranged from 0 to 18 points. Each correctly detected target scored 1 point and each missed target scored 0 points; when participants recognised only one side of a both-sides target, 0.5 points were assigned.
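The scoring rule for the VR task can be expressed compactly. In this Python sketch, the target list reproduces the 18 targets and their order from the paragraph above; representing each response as the set of sides on which a target was correctly detected is our own assumption (for the Signal, detection would correspond to stopping at the white line). Names such as `Target` and `total_score` are hypothetical, not the authors’ software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    name: str
    position: str  # "front" or "side"
    side: str      # "right", "left" or "both"
    motion: str    # "static", "dynamic" or "irregular"


# The 18 targets in their fixed presentation order.
TARGETS = [
    Target("Human", "front", "right", "static"),
    Target("Humans", "front", "both", "dynamic"),
    Target("Car", "side", "right", "irregular"),
    Target("Signal", "front", "left", "static"),
    Target("Human", "front", "left", "dynamic"),
    Target("Car", "side", "left", "static"),
    Target("Can", "front", "right", "irregular"),
    Target("Cars", "side", "both", "dynamic"),
    Target("Cat", "front", "left", "irregular"),
    Target("Car", "side", "right", "dynamic"),
    Target("Car", "side", "left", "irregular"),
    Target("Signal", "front", "right", "static"),
    Target("Human", "front", "right", "dynamic"),
    Target("Cars", "side", "both", "static"),
    Target("Humans", "front", "both", "static"),
    Target("Car", "side", "left", "dynamic"),
    Target("Human", "front", "left", "static"),
    Target("Car", "side", "right", "static"),
]


def target_score(target, detected_sides):
    """Score one target given the sides on which it was correctly detected."""
    detected = set(detected_sides) & {"left", "right"}
    if target.side == "both":
        # 1 point if both sides are recognised, 0.5 if only one side is.
        return 0.5 * len(detected)
    return 1.0 if target.side in detected else 0.0


def total_score(responses):
    """responses[i] holds the sides detected for TARGETS[i]; range 0-18."""
    if len(responses) != len(TARGETS):
        raise ValueError(f"expected {len(TARGETS)} responses")
    return sum(target_score(t, r) for t, r in zip(TARGETS, responses))


# A participant who detects only right-side items, including the right
# half of each both-sides target.
print(total_score([{"right"}] * len(TARGETS)))  # 9.0
```

Under this rule, detecting only right-side targets and the right half of every both-sides target yields 7 + 4 × 0.5 = 9 points.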

Participants sat on a chair (seat height: 40 cm) while performing the VR task and wore the Oculus Rift head-mounted display (Oculus VR) and the pin microphone (ELECOM). Pressing the up-arrow cursor key (↑) on a keyboard moved them forward in the VR scene. After we had explained the rules of the VR task, participants performed a practice task that resembled the VR task (walking down a street) but was set in a different context. The practice task contained three targets: 1) a Signal (front/right side/static), 2) Humans (front/both sides/static), and 3) a Car (side/right side/dynamic). After receiving instructions, each participant performed the practice task at least once and at most three times. The VR task began only when participants had understood the rules (i.e., when they recognised every practice target on the right side).

The rules of the VR task were as follows. When a traffic light (a Signal) was red, participants were instructed to stop in front of a white line (Figure ). Participants were asked to speak immediately when they detected any of the other four kinds of target (a Human/Humans, Car/Cars, Cat, or Can), stating the location (right side, left side, or both sides) and the object (e.g., “Right side, a Car”). These spoken responses were used to determine whether each target was correctly detected and recognised (qualitative characteristic). The task was recorded with a video camera. When participants panicked (e.g., when they suddenly detected a target), the voice recognition software scored the response as incorrect. Therefore, the final VR task score was determined by collating the score recorded on the PC with the score obtained from the video recording.

The following items in the VR task were measured: 1) total score (maximum of 18 points); 2) number of errors on the right or left side; 3) mean neck rotation angle (+: rightward bias; −: leftward bias); 4) maximum neck rotation angle on the right or left side; 5) total time of neck rotation (at an angle of more than 15°) to the right or left side; 6) time required to complete the task; and 7) correct detection percentage of each target.
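How the neck rotation measures were derived is not described. Assuming head yaw is logged from the head-mounted display at a fixed sampling interval `dt_s` (a hypothetical parameter), with positive angles for rightward rotation as in item 3, the measures in items 3–5 could be computed as in this Python sketch; the function name and sample trace are illustrative only.

```python
def neck_rotation_metrics(yaw_deg, dt_s, threshold_deg=15.0):
    """Summarise a head-yaw trace sampled every dt_s seconds (+ = rightward)."""
    if not yaw_deg:
        raise ValueError("empty trace")
    return {
        # With a fixed sampling interval, the sample mean is the time average.
        "mean_angle": sum(yaw_deg) / len(yaw_deg),
        # Largest excursion on each side, as a positive magnitude (0 if none).
        "max_right": max(0.0, max(yaw_deg)),
        "max_left": max(0.0, -min(yaw_deg)),
        # Time spent rotated beyond the threshold on each side.
        "time_right": dt_s * sum(1 for a in yaw_deg if a > threshold_deg),
        "time_left": dt_s * sum(1 for a in yaw_deg if a < -threshold_deg),
    }


print(neck_rotation_metrics([0, 20, 30, -20, 10], dt_s=0.5))
```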

2.4. Data analysis

Data were first tested for normality (Shapiro-Wilk test). Between-group comparisons of age and education were made using the Steel-Dwass multiple comparison test, and the MMSE scores between the NEG and EH groups were compared using the Mann-Whitney U test.

The statistical method for each variable was selected according to whether the data were normally distributed. Between-group comparisons of the total score and the number of errors on the right side were performed using the Steel-Dwass multiple comparison test. The maximum neck rotation angle on the right side and the time required to complete the task were compared using the Kruskal-Wallis test. The number of errors on the left side was compared using Tukey’s multiple comparison test, whereas the mean neck rotation angle and the total time of neck rotation on the right side were compared using the Games-Howell multiple comparison test. The maximum neck rotation angle on the left side was compared using Welch’s analysis of variance (ANOVA), and the total time of neck rotation on the left side was compared using a one-way ANOVA.
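The pairing of tests in the paragraph above follows a common decision rule, sketched below in Python. The text states only that selection depended on normality, so the equal-variance branch that separates one-way ANOVA and Tukey’s test from Welch’s ANOVA and the Games-Howell test is our assumption, not a reported step; the function name is hypothetical.

```python
def choose_tests(all_groups_normal: bool, equal_variances: bool) -> tuple[str, str]:
    """Return (omnibus test, multiple comparison test) for three groups."""
    if not all_groups_normal:
        # Rank-based methods when any group departs from normality.
        return ("Kruskal-Wallis test", "Steel-Dwass test")
    if equal_variances:
        return ("one-way ANOVA", "Tukey's test")
    # Normal data with unequal variances.
    return ("Welch's ANOVA", "Games-Howell test")


for normal in (True, False):
    for equal_var in (True, False):
        print(normal, equal_var, choose_tests(normal, equal_var))
```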

Within the NEG group, Spearman’s rank correlation coefficient was used to test the correlations between the total VR task score and USN test scores (BIT conventional subtests, BIT behavioural subtests, CBS observation assessment, and FSLCT). A kappa coefficient was used to analyse the agreement between targets in whether responses were correct in the NEG group. The chi-squared test was used to compare the correct detection percentage of each target between the NEG and EH groups. We compared the NEG and EH groups so that differences could be attributed to USN rather than to age. The YH group was used to exclude the effects of education on the VR score. We used R version 2.8.1 (CRAN; freeware) for all statistical analyses, and the level of significance was set at p < 0.05.
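Spearman’s rank correlation is the Pearson correlation computed on ranks, with tied values given the mean of the ranks they span. The following self-contained Python sketch is illustrative (the function names are ours) and computes only the coefficient, not a p-value; the analyses in this study were run in R.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson_r(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples of at least 2 values")
    return pearson_r(average_ranks(x), average_ranks(y))


# Illustrative values only, not study data.
print(spearman_rho([1, 2, 3, 4, 5], [2, 1, 4, 3, 5]))
```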

3. Results

Participant characteristics are shown in Table 1. Age, education, and MMSE scores did not differ significantly between the NEG and EH groups. In the NEG group (n = 10), the mean age was 67.8 ± 12.1 years; eight participants were male and two were female. In the EH group (n = 11), the mean age was 71.2 ± 4.0 years; three participants were male and eight were female. In the YH group (n = 15), the mean age was 27.9 ± 5.7 years; nine participants were male and six were female. In the NEG group, five patients had cerebral haemorrhage and five had cerebral infarction. Primary lesions were located in the frontal lobe (n = 6), parietal lobe (n = 8), temporal lobe (n = 4), occipital lobe (n = 2), thalamus (n = 3), and basal ganglia (n = 6). All patients had damage to multiple regions (2 to 5 of the following sites: frontal, parietal, temporal, or occipital lobe, thalamus, or basal ganglia). The time since stroke was 28.2 ± 40.8 months. The BIT conventional subtest score was 118.7 ± 18.0 points (cut-off score = 131), with eight patients below the cut-off, and the BIT behavioural subtest score was 64.5 ± 12.7 points (cut-off score = 68), with seven patients below the cut-off. The CBS observation score was 11.8 ± 3.6 points, and the anosognosia score was 7.1 ± 3.5 points. The FSLCT score was 31.1 ± 8.6, the total VR task score was 11.0 ± 3.4, and four patients had a left visual field defect (Table 2).

Table 2. Clinical demographics and the neglect group test results

The results of the VR task for all three groups are shown in Table 3. The total VR task score was significantly lower in the NEG group than in both the EH and YH groups (NEG, 11.0 ± 3.4; EH, 17.7 ± 0.5; YH, 17.9 ± 0.4; p < 0.001). The NEG group made significantly more errors on the left side (NEG, 6.8 ± 3.1; EH/YH, 0.0 ± 0.0; p < 0.001) and had a significantly greater mean neck rotation angle (NEG, 10.3 ± 10.2; EH, −1.1 ± 1.8; YH, −0.3 ± 2.0; p < 0.05) than the EH and YH groups. The NEG group also made significantly more errors on the right side than the YH group (NEG, 2.0 ± 1.9; YH, 0.4 ± 0.7; p < 0.01) and had a longer total time of neck rotation on the right side than the EH group (NEG, 170.0 ± 106.0; EH, 58.1 ± 36.7; p < 0.05).

Table 3. The virtual reality task results [neglect (NEG), elderly healthy (EH), and young healthy (YH) groups]

We found no correlations between the total VR task score and USN test scores (BIT conventional subtests, BIT behavioural subtests, CBS observation assessment, and FSLCT). Notably, Case 2 (Figure 4) had the highest total BIT score (221 points) in the NEG group but the lowest VR task score (5 points) (Table 2).

Figure 4. Lesion pattern of case 2 (magnetic resonance imaging fluid-attenuated inversion recovery).

We performed a correlation analysis to investigate the similarity of detection between targets. For correct versus incorrect detection and recognition of targets (qualitative characteristic) in the NEG group, the strongest correlation (r = 0.80) was between Cat (front/left side/irregular) and Humans (front/both sides/static). Correlations were also found between Cars (side/both sides/dynamic) and Cat (front/left side/irregular) (r = 0.78), between Cars (side/both sides/dynamic) and Cars (side/both sides/static) (r = 0.73), and between Signal (front/right side/static) and Human (front/left side/static) (r = 0.73).
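Section 2.4 states that agreement in correct versus incorrect responses was analysed with a kappa coefficient. Assuming this was Cohen’s kappa computed over the NEG patients’ 0/1 detection outcomes for a pair of targets (our reading; the kappa variant and pairing are not specified), a minimal Python sketch is given below. The function name is hypothetical and the example vectors are invented for illustration.

```python
def cohens_kappa(a, b):
    """Cohen's kappa for two equal-length 0/1 sequences (1 = correct detection)."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    p_a, p_b = sum(a) / n, sum(b) / n
    # Agreement expected by chance from each target's detection rate.
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Invented outcomes for 10 patients on two targets.
print(cohens_kappa([1, 1, 0, 0, 1, 0, 0, 1, 0, 0],
                   [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]))
```

Kappa is undefined when every patient detects (or misses) both targets, which can occur with ceiling-level detection such as that seen in the EH group.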

Table 4 shows the mean correct detection percentages for each left-side or right-side target in the VR task for the NEG and EH groups. Significant between-group differences were found for Signal (front/left side/static; p < 0.001), Car (side/left side/static; p < 0.001), Human (front/left side/dynamic; p < 0.01), Cat (front/left side/irregular; p < 0.01), Car (side/left side/dynamic; p < 0.01), Car (side/left side/irregular; p < 0.01), and Car (side/right side/irregular; p < 0.01). Table 5 shows the mean correct detection percentages for each both-sides target in the VR task for the NEG and EH groups. Significant between-group differences were found for Humans (front/both sides/dynamic; p < 0.001), Cars (side/both sides/static; p < 0.001), Humans (front/both sides/static; p < 0.01), and Cars (side/both sides/dynamic; p < 0.05). Within the NEG group, correct detection percentages were lower for left-side stimuli than for right-side stimuli (Table 4) and lower for both-sides stimuli (30% or less for 3 of the 4 targets) than for left-side stimuli (less than 30% for 2 of the 7 targets) (Tables 4 and 5). Among static targets presented at the front (a Human/Humans), the NEG group detected the both-sides stimulus less often (50.0%) than the left-side stimulus (80.0%). Among dynamic targets, correct detection percentages were lower for both-sides stimuli (front: 30.0%; side: 30.0%) than for left-side stimuli (front: 40.0%; side: 50.0%), both for targets presented at the front (a Human/Humans) and for those presented at the side (a Car/Cars). Furthermore, Table 6 shows the mean correct detection percentages for the right and left sides of both-sides stimuli within the NEG group. With both-sides stimuli, the NEG group showed marked visual extinction on the left side.

Table 4. Detection percentage of each target (left-side stimulation or right-side stimulation) in the virtual reality task [neglect (NEG) and elderly healthy (EH) groups]

Table 5. Detection percentage of each target (both-sides stimulation) in the virtual reality task [neglect (NEG) and elderly healthy (EH) groups]

Table 6. Detection percentage of both-sides stimulation in the virtual reality task (neglect group)

In the VR task, the targets most difficult for the NEG group to detect were a Signal (front/left side/static; correct detection percentage: NEG, 0%; EH, 100%; p < 0.001), a Car (side/left side/static; correct detection percentage: NEG, 10.0%; EH, 100%; p < 0.001), Cars (side/both sides/static; correct detection percentage: NEG, 20.0%; EH, 100%; p < 0.001), and Humans (front/both sides/dynamic; correct detection percentage: NEG, 30.0%; EH, 100%; p < 0.001). By contrast, the easiest target for the NEG group to detect was a Human (front/left side/static; correct detection percentage: NEG, 80.0%; EH, 100%; no significant difference).
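The NEG-versus-EH comparisons above rest on 2 × 2 tables of detected and missed counts. A minimal Python sketch of Pearson’s chi-squared test follows, assuming all 11 EH participants detected the target (matching the reported 100%) and no continuity correction. R’s `chisq.test` applies Yates’ correction to 2 × 2 tables by default, so the authors’ statistics may differ, and with expected counts this small Fisher’s exact test is a common alternative. The function name is hypothetical.

```python
import math


def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared test (1 df, no continuity correction).

    Table layout: [[a, b], [c, d]], rows = groups, columns = detected/missed.
    Returns (statistic, p_value).
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    stat = n * (a * d - b * c) ** 2 / denom
    # Survival function of chi-squared with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


# Signal (front/left side/static): NEG 0 of 10 detected, EH 11 of 11 detected.
stat, p = chi_squared_2x2(0, 10, 11, 0)
print(f"chi2 = {stat:.2f}, p = {p:.2g}")
```

For the Signal the statistic is exactly 21, with p well below 0.001; for the left-side static Human (8 of 10 versus 11 of 11), the same function gives p above 0.05, in line with the absence of a significant difference.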

4. Discussion

Typical assessments for USN (the BIT and CBS) cannot quantitatively evaluate extrapersonal neglect, so a new assessment tool is needed. The purpose of this study was to verify whether our VR task could detect extrapersonal neglect in patients with stroke and to identify the stimuli that patients with USN found most difficult and easiest to detect in the VR task. We focused on the effect of USN during locomotion (either while walking or while driving a wheelchair). Because our VR task may reflect the likelihood of accidents or falls in real life, identifying the most difficult and easiest stimuli for patients with USN may be useful for future therapeutic strategies. Furthermore, comparing detection across similar targets may reveal which details lead to omission.

4.1. Ability of the VR task to detect extrapersonal neglect in patients with stroke-related deficits

The total VR task score was significantly lower in the NEG group than in both the EH and YH groups. The NEG group made significantly more errors on the left side and had a significantly greater mean neck rotation angle than both the EH and YH groups. The NEG group also made significantly more errors on the right side than the YH group and had a longer total time of neck rotation on the right side than the EH group.

With respect to VR task results, Navarro et al. (2013) revealed that their USN group made significantly more errors than their non-neglect group. Buxbaum et al. (2008), Kim et al. (2010), and Kim et al. (2007) reported that patients with neglect had lower scores for left than for right targets. Our findings are consistent with these previous studies.

Pedroli, Serino, Cipresso, Pallavicini, and Riva (2015) reported that VR can be used to assess extrapersonal space in environments similar to real-life experiences. Rose et al. (2005) and Tsirlin, Dupierrix, Chokron, Coquillart, and Ohlmann (2009) reported that a VR environment is suitable for the diagnosis or treatment of extrapersonal neglect and can be used to identify potentially dangerous actions in the real world. Our VR task simulated real-life situations and can detect extrapersonal neglect during walking or wheelchair driving. Furthermore, it may help anticipate potentially dangerous situations in ADL. As in previous studies using VR environments, the NEG group had significantly lower total scores, more errors on the left side, a larger rightward bias in the mean neck rotation angle, and a longer total time of neck rotation on the right side than the EH group. Our VR task also assesses more parameters (e.g., score, errors, angle, time, and qualitative characteristics of omissions) than those used in previous studies. Hence, our VR task appears to detect extrapersonal neglect effectively in patients with stroke.

We found no correlation between the total VR task score and the USN test scores (BIT conventional subtests, BIT behavioural subtests, CBS observation, and FSLCT) in the NEG group. Azouvi et al. (2003) reported that USN symptoms (presence or absence, and severity) differ between pen-and-paper test scores and observations of USN in ADL. Furthermore, extrapersonal neglect has been reported to be more frequent (Bisiach et al., 1986) and more severe than personal or peripersonal neglect (Butler et al., 2004). Buxbaum et al. (2004) and Heilman, Valenstein, and Watson (2000) reported that the results of different USN tests can dissociate within individual patients and that several subtypes of USN can occur in a single patient. Hence, our VR task appears to capture qualitative characteristics of USN that are not reflected in conventional test scores.

Despite having the highest total BIT score, Case 2 had the lowest total VR task score. Arene and Hillis (2007) and Redding and Wallace (2006) reported that patients with USN could not be classified as having a single type of USN because each patient showed a different combination of subtypes (i.e., individual results differed across USN tests). The BIT can assess peripersonal neglect, but no standard assessment for extrapersonal neglect has yet been established; future work should develop one.

4.2. Stimuli and target orientations associated with the highest degree of difficulty of detection

4.2.1. Comparison between front stimulation and side stimulation

The most difficult stimulus to detect was a Signal (front/left side/static), which was presented in the upper left field. Most other targets were positioned on the right or left side at eye level because we assumed that attention would be focused on the centre of the VR screen. In general, during ADL, humans gaze mostly at or below eye level rather than in the upper field. Karnath and Dieterich (2006) reported that healthy subjects gaze below eye level during visual search. By contrast, eye movements in patients with USN differ from those in healthy subjects: they are biased toward eye level on the right side and cover a narrower range. The second and third most difficult stimuli to detect were a Car and Cars located at the side. Regarding such omissions at the side, Butler et al. (2009) revealed that searches by patients with USN were biased significantly rightward of the starting points compared with those of both non-neglect and healthy controls, and that their neglect group had significantly shorter search sequences than both control groups. Our results indicate that evaluating extrapersonal neglect requires an assessment covering a wide spatial range, such as our VR task.

4.2.2. Comparison between left-side stimulation and both-sides stimulation

The most difficult stimulus to detect was a Signal (front/left side/static), and the second was a Car (side/left side/static); both were single stimuli on the left side. The third most difficult stimulus was Cars (side/both sides/static), for which visual extinction of the left side was confirmed in the NEG group. For targets with similar characteristics, both-sides stimuli were more difficult to detect than left-side stimuli. Gainotti, D’Erme, and Bartolomeo (1991) reported that, with both-sides stimulation, patients with left USN after right brain damage were drawn toward the right side, a phenomenon referred to as “magnetic gaze attraction.” Karnath, Himmelbach, and Küker (2003) and Vallar, Rusconi, Bignamini, Geminiani, and Perani (1994) revealed that visual extinction and USN are independent. The present results suggest that both-sides stimulation should be included in the assessment and treatment of USN.

4.2.3. Comparison between static and dynamic stimulations

The most difficult stimulation to detect was a Signal (front/left side/static), the second was a Car (side/left side/static), and the third was Cars (side/both sides/static); all three were static stimulations. These stimulations were far from the centre of the VR screen, so detecting them required a more spontaneous search. Detection difficulty therefore appeared to depend on the position of the stimulation. Kim et al. (2011) reported a VR task that used dynamic stimulations, but that study did not compare dynamic and static stimulations. Future task settings should allow static and dynamic stimulations to be compared, as our VR task does.

4.3. The easiest stimulation to detect (either front or side; either left side or both sides; either static or dynamic)

The easiest left-side stimulation to detect was a Human (front/left side/static). This may be because a human located in front and at eye level is easily recognised, and because the Human was presented on the left side only and was therefore not affected by visual extinction. Another explanation relates to presentation time: dynamic stimulations may leave the field of view before patients with neglect, who have only a limited time to detect them, can do so. By contrast, there was no time limit for detecting static stimulations, so patients with neglect could detect them at their own pace. Our results suggest that the easiest left-side stimulation to detect is a static stimulation presented in front and at eye level.

4.4. Dissociation between the FSLCT and the VR task scores (extrapersonal neglect) in the NEG group

Despite a high total FSLCT score, the NEG group had a low total VR task score (11.0 ± 3.4 points). Buxbaum et al. (2008) reported that patients with neglect scored lower on a complex VR task than on a simple one. Our VR task included several types of locations and stimulations (1: front or side; 2: right side, left side, or both sides; 3: static, dynamic, or irregular) and may therefore be more sensitive to deficits than the FSLCT. The FSLCT screen was approximately 105 cm in height and 140 cm in width, and the FSLCT uses only simple lines as stimuli. By contrast, the field employed in our VR task more closely simulates real life during wheelchair use or walking: participants were required to detect targets across a wider field, at greater distances, and under 18 different conditions. This could explain the difference between the VR task and FSLCT results. For extrapersonal space, Gamberini, Seraglia, and Priftis (2008) reported that assessment results in real environments were similar to those obtained in a VR environment. Taken together with previous studies (Pedroli et al., 2015; Tsirlin et al., 2009), our results indicate that the VR task can detect extrapersonal neglect relevant to ADL in patients with stroke. Our VR task can present stimulations (static, dynamic, or irregular; right side, left side, or both sides) within a space similar to that of a real-life environment.

4.5. Analysis from lesions and attention networks

In our study, six patients had damage to the right frontal or temporal lobe, and their detection in extrapersonal space was lower than that of the EH and YH groups. Furthermore, nine patients had damage to the dorsal or ventral attention network.

In a previous study on neglect symptoms and subtypes, Committeri et al. (2007) reported that awareness of extrapersonal space is significantly related to right-hemisphere activity in the frontal and temporal lobes. Corbetta and Shulman (2002) proposed that visual attention is controlled by two partially segregated neural systems. According to these authors and to Vossel, Geng, and Fink (2014), the two networks are the dorsal attention network, which involves functional connectivity between the frontal and parietal lobes and supports active attention, and the ventral attention network, which involves functional connectivity between the frontal and temporal lobes and supports passive attention. Vossel et al. (2014) reported that detection of static stimulations involves the dorsal attention network (active attention), whereas detection of dynamic or irregular stimulations involves the ventral attention network (passive attention). Damage to these networks in the NEG group may therefore explain why left-side stimulations in our VR task were less easily detected.

4.6. Advantages of our VR task

Previous studies (Kim et al., 2007, 2010, 2011) introduced VR tasks using dynamic stimulations for patients with USN. However, these studies did not compare static with dynamic stimulations, one-side with both-sides stimulation, or front with side stimulations in terms of target detection. To our knowledge, this is the first study to analyse the qualitative characteristics of stimuli and their effects on measurements of extrapersonal neglect. Our results indicate that our VR task is capable of detecting qualitative characteristics of extrapersonal neglect that may not be easily observed in ADL, because the environment (and hence the stimulations) is not constant during typical locomotion in real life. Our findings may prove useful in selecting treatment options in the future. For example, the task could be used to compare training with the easiest-to-detect left-side stimulation (errorless learning) against training with more difficult left-side stimulations (errorful learning), which may help identify the most effective approach.

4.7. Limitations

Some limitations should be noted. First, all participants were seated in a chair while performing the VR task, so the task differed from walking or wheelchair driving in ADL. Results obtained while sitting (static balance control) may differ from those obtained while walking or driving a wheelchair (dynamic balance control). Future studies could administer the VR task during walking or wheelchair driving to assess performance that is closer to actual ADL. Second, four patients in the NEG group had damage to both the dorsal and ventral attention networks, so our study could not determine which qualitative stimulus characteristics are associated with damage to each of these two networks. Future studies could compare the qualitative characteristics of VR task performance between patients with dorsal attention network damage and those with ventral attention network damage. Third, the sample size was small, and the ratio of males to females differed among the groups. Finally, the VR task did not present the various targets with equal frequency: for example, a Human/Humans, a Car/Cars, and a Signal were used frequently, whereas a Cat and a Can may have been used only once. In addition, the order in which the targets appeared was not randomised. These factors may have influenced the VR task results.

To our knowledge, this is the first study to analyse the qualitative characteristics of stimuli and their possible effects on measurements of extrapersonal neglect. In future work, we plan to develop our VR task further and verify its effects in the context of treatment. Specifically, we aim to compare training with the easiest-to-detect left-side stimulation (errorless learning) and training with more difficult left-side stimulations (errorful learning), for example by using visual or auditory feedback. In doing so, we hope to determine which approach, errorless or errorful learning, is more effective in the VR task.

5. Conclusions

Our VR task is capable of detecting extrapersonal neglect in patients with stroke. Furthermore, we identified the most difficult and easiest stimulations for patients with USN to detect. In the future, we will further develop our VR task and verify its potential for treatment.

Correction

This article has been republished with minor changes. These changes do not impact the academic content of the article.

Additional information

Funding

Notes on contributors

Masaki Tamura

Our group developed a method to assess unilateral spatial neglect (USN) in stroke patients using a virtual reality (VR) system. The VR task consisted of 18 identifiable targets, and participants were asked to respond verbally as soon as they detected each target. The system measured the total score; the number of errors on the right and left sides; the mean and maximum neck rotation angles to the right and left sides; the total time of neck rotation to the right and left sides; the time required to complete the task; and the detection percentage for each target. We verified the results of the VR task in patients with USN and identified the most difficult and the most easily detected targets. In the future, we hope to develop this VR system for the treatment of stroke.
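The measurements listed above could be derived from logged task data roughly as follows. This is a minimal Python sketch under stated assumptions, not the authors' software: the `TargetResult` record, the one-point-per-detected-target scoring rule, the sign convention for neck yaw (positive = rotation to the right) and the fixed sampling interval `dt_s` are all hypothetical. The detection percentage is simply the share of a group that detected a given target; with n = 10 in the NEG group, each patient contributes 10 percentage points, so the reported values of 0%, 10.0%, 20.0% and 80.0% correspond to 0, 1, 2 and 8 patients.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TargetResult:
    """Hypothetical per-target record for one participant."""
    name: str       # target label, e.g. "Signal", "Car", "Human"
    side: str       # "left", "right" or "both"
    detected: bool  # True if the participant reported the target verbally


def total_score(results):
    """Assumed scoring rule: one point per detected target."""
    return sum(r.detected for r in results)


def omissions_by_side(results):
    """Count missed targets separately for each presentation side."""
    counts = {"left": 0, "right": 0, "both": 0}
    for r in results:
        if not r.detected:
            counts[r.side] += 1
    return counts


def neck_rotation_summary(yaw_deg, dt_s):
    """Summarise neck rotation from yaw samples taken every dt_s seconds.

    Assumes positive yaw means rotation to the right and negative yaw
    rotation to the left; samples at exactly 0 degrees are ignored.
    """
    right = [a for a in yaw_deg if a > 0]
    left = [-a for a in yaw_deg if a < 0]  # store left angles as magnitudes

    def side_stats(angles):
        if not angles:
            return {"mean_deg": 0.0, "max_deg": 0.0, "time_s": 0.0}
        return {
            "mean_deg": mean(angles),
            "max_deg": max(angles),
            "time_s": len(angles) * dt_s,  # samples on that side x interval
        }

    return {"right": side_stats(right), "left": side_stats(left)}


def detection_percentage(group, target_index):
    """Percentage of participants in a group who detected one target.

    `group` is a list of participants, each a list of TargetResult
    indexed by target.
    """
    hits = sum(p[target_index].detected for p in group)
    return 100.0 * hits / len(group)


if __name__ == "__main__":
    # Illustrative only: 10 participants, of whom only the first detects the target.
    group = [[TargetResult("Car", "left", i == 0)] for i in range(10)]
    print(detection_percentage(group, 0))  # 10.0
```

Keeping omissions for both-sides targets in their own bucket, rather than folding them into the left count, leaves room to examine visual extinction separately from one-sided omissions.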

References

- Aimola, L., Schindler, I., Simone, A. M., & Venneri, A. (2012). Near and far space neglect: Task sensitivity and anatomical substrates. Neuropsychologia, 50, 1115–17. doi:10.1016/j.neuropsychologia.2012.01.022

- Arene, N. U., & Hillis, A. E. (2007). Rehabilitation of unilateral spatial neglect and neuroimaging. Europa Medicophysica, 43, 255–269.

- Azouvi, P., Marchal, F., Samuel, C., Morin, L., Renard, C., Louis-Dreyfus, A., … Pradat-Diehl, P. (1996). Functional consequences and awareness of unilateral neglect: Study of an evaluation scale. Neuropsychological Rehabilitation, 6, 133–150. doi:10.1080/713755501

- Azouvi, P., Olivier, S., de Montety, G., Samuel, C., Louis-Dreyfus, A., & Tesio, L. (2003). Behavioral assessment of unilateral neglect: Study of the psychometric properties of the Catherine Bergego Scale. Archives of Physical Medicine and Rehabilitation, 84, 51–57. doi:10.1053/apmr.2003.50062

- Bergego, C., Azouvi, P., Samuel, C., Marchal, F., Louis-Dreyfus, A., Jokic, C., … Deloche, G. (1995). Validation d’une échelle d’évaluation fonctionnelle de l’héminégligence dans la vie quotidienne: L’échelle CB [Functional consequences of unilateral neglect: Validation of an evaluation scale, the CB scale]. Annales de Réadaptation et de Médecine Physique, 38, 183–189. doi:10.1016/0168-6054(96)89317-2

- Bisiach, E., Perani, D., Vallar, G., & Berti, A. (1986). Unilateral neglect: Personal and extra-personal. Neuropsychologia, 24, 759–767. doi:10.1016/0028-3932(86)90075-8

- Butler, B. C., Eskes, G. A., & Vandorpe, R. A. (2004). Gradients of detection in neglect: Comparison of peripersonal and extrapersonal space. Neuropsychologia, 42, 346–358. doi:10.1016/j.neuropsychologia.2003.08.008

- Butler, B. C., Lawrence, M., Eskes, G. A., & Klein, R. (2009). Visual search patterns in neglect: Comparison of peripersonal and extrapersonal space. Neuropsychologia, 47, 869–878. doi:10.1016/j.neuropsychologia.2008.12.020

- Buxbaum, L. J., Ferraro, M. K., Veramonti, T., Farne, A., Whyte, J., Ladavas, E., … Coslett, H. B. (2004). Hemispatial neglect: Subtypes, neuroanatomy, and disability. Neurology, 62, 749–756. doi:10.1212/01.WNL.0000113730.73031.F4

- Buxbaum, L. J., Palermo, M. A., Mastrogiovanni, D., Read, M. S., Rosenberg-Pitonyak, E., Rizzo, A. A., & Coslett, H. B. (2008). Assessment of spatial attention and neglect with a virtual wheelchair navigation task. Journal of Clinical and Experimental Neuropsychology, 30, 650–660. doi:10.1080/13803390701625821

- Committeri, G., Pitzalis, S., Galati, G., Patria, F., Pelle, G., Sabatini, U., & Pizzamiglio, L. (2007). Neural bases of personal and extrapersonal neglect in humans. Brain: A Journal of Neurology, 130, 431–441. doi:10.1093/brain/awl265

- Corbetta, M., & Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews. Neuroscience, 3, 201–215. doi:10.1038/nrn755

- Denes, G., Semenza, C., Stoppa, E., & Lis, A. (1982). Unilateral spatial neglect and recovery from hemiplegia: A follow-up study. Brain: A Journal of Neurology, 105, 543–552. doi:10.1093/brain/105.3.543

- Folstein, M. F., Folstein, S. E., & McHugh, P. R. (1975). “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research, 12, 189–198. doi:10.1016/0022-3956(75)90026-6

- Gainotti, G., D’Erme, P., & Bartolomeo, P. (1991). Early orientation of attention toward the half space ipsilateral to the lesion in patients with unilateral brain damage. Journal of Neurology, Neurosurgery, and Psychiatry, 54, 1082–1089. doi:10.1136/jnnp.54.12.1082

- Gamberini, L., Seraglia, B., & Priftis, K. (2008). Processing of peripersonal and extrapersonal space using tools: Evidence from visual line bisection in real and virtual environments. Neuropsychologia, 46, 1298–1304. doi:10.1016/j.neuropsychologia.2007.12.016

- Gialanella, B., & Mattioli, F. (1992). Anosognosia and extrapersonal neglect as predictors of functional recovery following right hemisphere stroke. Neuropsychological Rehabilitation, 2, 169–178. doi:10.1080/09602019208401406

- Gillen, R., Tennen, H., & McKee, T. (2005). Unilateral spatial neglect: Relation to rehabilitation outcomes in patients with right hemisphere stroke. Archives of Physical Medicine and Rehabilitation, 86, 763–767. doi:10.1016/j.apmr.2004.10.029

- Halligan, P. W., Cockburn, J., & Wilson, B. A. (1991). The behavioural assessment of visual neglect. Neuropsychological Rehabilitation, 1, 5–32. doi:10.1080/09602019108401377

- Heilman, K. M., & Valenstein, E. (1979). Mechanisms underlying hemispatial neglect. Annals of Neurology, 5, 166–170.

- Heilman, K. M., Valenstein, E., & Watson, R. T. (2000). Neglect and related disorders. Seminars in Neurology, 20, 463–470. doi:10.1055/s-2000-13179

- Jutai, J. W., Bhogal, S. K., Foley, N. C., Bayley, M., Teasell, R. W., & Speechley, M. R. (2003). Treatment of visual perceptual disorders post stroke. Topics in Stroke Rehabilitation, 10, 77–106. doi:10.1310/07BE-5E1N-735J-1C6U

- Kalra, L., Perez, I., Gupta, S., & Wittink, M. (1997). The influence of visual neglect on stroke rehabilitation. Stroke, 28, 1386–1391. doi:10.1161/01.STR.28.7.1386

- Karnath, H.-O., & Dieterich, M. (2006). Spatial neglect–A vestibular disorder? Brain: A Journal of Neurology, 129, 293–305. doi:10.1093/brain/awh698

- Karnath, H.-O., Himmelbach, M., & Kuker, W. (2003). The cortical substrate of visual extinction. Neuroreport, 14, 437–442. doi:10.1097/00001756-200303030-00028

- Kerkhoff, G. (2001). Spatial hemineglect in humans. Progress in Neurobiology, 63, 1–27. doi:10.1016/S0301-0082(00)00028-9

- Kim, D. Y., Ku, J., Chang, W. H., Park, T. H., Lim, J. Y., Han, K., … Kim, S. I. (2010). Assessment of post-stroke extrapersonal neglect using a three-dimensional immersive virtual street crossing program. Acta Neurologica Scandinavica, 121, 171–177.

- Kim, J., Kim, K., Kim, D. Y., Chang, W. H., Park, C.-I., Ohn, S. H., … Kim, S. I. (2007). Virtual environment training system for rehabilitation of stroke patients with unilateral neglect: Crossing the virtual street. Cyberpsychology & Behavior: The Impact of the Internet, Multimedia and Virtual Reality on Behavior and Society, 10, 7–15. doi:10.1089/cpb.2006.9998

- Kim, Y. M., Chun, M. H., Yun, G. J., Song, Y. J., & Young, H. E. (2011). The effect of virtual reality training on unilateral spatial neglect in stroke patients. Annals of Rehabilitation Medicine, 35, 309–315. doi:10.5535/arm.2011.35.3.309

- Navarro, M. D., Lloréns, R., Noé, E., Ferri, J., & Alcañiz, M. (2013). Validation of a low-cost virtual reality system for training street-crossing. A comparative study in healthy, neglected and non-neglected stroke individuals. Neuropsychological Rehabilitation, 23, 597–618.

- Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia, 9, 97–113.

- Pedroli, E., Serino, S., Cipresso, P., Pallavicini, F., & Riva, G. (2015). Assessment and rehabilitation of neglect using virtual reality: A systematic review. Frontiers in Behavioral Neuroscience, 9, 226.

- Redding, G. M., & Wallace, B. (2006). Prism adaptation and unilateral neglect: Review and analysis. Neuropsychologia, 44, 1–20.

- Rose, F. D., Brooks, B. M., & Rizzo, A. A. (2005). Virtual reality in brain damage rehabilitation: Review. Cyberpsychology & Behavior: The Impact of the Internet, Multimedia and Virtual Reality on Behavior and Society, 8, 241–262.

- Sedda, A., Borghese, N. A., Ronchetti, M., Mainetti, R., Pasotti, F., Beretta, G., & Bottini, G. (2013). Using virtual reality to rehabilitate neglect. Behavioural Neurology, 26, 183–185.

- Tsirlin, I., Dupierrix, E., Chokron, S., Coquillart, S., & Ohlmann, T. (2009). Uses of virtual reality for diagnosis, rehabilitation and study of unilateral spatial neglect: Review and analysis. Cyberpsychology & Behavior: The Impact of the Internet, Multimedia and Virtual Reality on Behavior and Society, 12, 175–181.

- Vallar, G., Rusconi, M. L., Bignamini, L., Geminiani, G., & Perani, D. (1994). Anatomical correlates of visual and tactile extinction in humans: A clinical CT scan study. Journal of Neurology, Neurosurgery, and Psychiatry, 57, 464–470.

- Vossel, S., Geng, J. J., & Fink, G. R. (2014). Dorsal and ventral attention systems: Distinct neural circuits but collaborative roles. The Neuroscientist: A Review Journal Bringing Neurobiology, Neurology and Psychiatry, 20, 150–159.

- Wilson, B., Cockburn, J., & Halligan, P. (1987). Development of a behavioral test of visuospatial neglect. Archives of Physical Medicine and Rehabilitation, 68, 98–102.