ABSTRACT

This experimental mixed-methods study investigates the impact of shifting from a traditional laboratory model to a hybrid laboratory model. The hybrid model consisted of (1) online instructions and a pre-laboratory test, (2) compressed face-to-face laboratory time, and (3) post-laboratory data analysis. The study analyses whether student perceptions of a targeted intervention were correlated with a range of student performance indicators. Only a marginal improvement in student performance was observed, but the evidence suggests that the use of online content led to more frequent student interaction with the learning material. The pre-laboratory tests encouraged better preparation for the laboratory, and splitting the laboratory into different phases was generally perceived more favourably by students than the traditional format. The findings are expected to encourage course coordinators and developers to adopt concepts used in the delivery of hybrid solutions, which is important given the current emphasis on online models of instruction.

1. Introduction

An important feature of engineering training is authentic skill development through practical student experiences. Problem-based (Gurses, Açıkyıldız, Doğar, & Sozbilir, Citation2007) and authentic experiences provide students with opportunities to explore their learning capabilities. Feisel and Rosa (Citation2005) identify a range of fundamental learning objectives for engineering instructional laboratories and recommend developing a more thorough understanding of these objectives as a critical component of the undergraduate experience. Accrediting bodies for engineering education define a number of learning outcomes for laboratories that are expected to provide students with a range of skills, experiences and understanding. As such, students are expected to undertake laboratory exercises in order to develop effective skills through hands-on experience, an expectation underpinned by widely accepted learning theories such as those of Biggs and Tang (Citation2011) and Dale (Citation1969). Because of the nature and purpose of engineering as a discipline, face-to-face laboratories are often seen as the preferred mode of delivery. However, few studies of graduate competencies have given specific consideration to engineering laboratories, and most of those that have consider them only in terms of student perceptions of the ease of conducting experiments.

Fluid mechanics is regarded as one of the most challenging subjects in the undergraduate engineering syllabus, due to its high level of complexity and its mathematical rigour (Alam, Hao, & Tu, Citation2004; Rahman, Citation2016), and it has been observed that students often (i) lack the necessary mathematical background and (ii) have difficulty with the concepts involved (Rahman, Citation2016). As a consequence, engagement is often low, which in turn affects students’ attainment of the intended learning outcomes. In response, many university courses have introduced practical laboratories designed to develop competency in practical engineering problem solving (Gamez-Montero et al., Citation2015; Huilier, Citation2019; McClelland, Citation2013; Rahman, Citation2016; Webster, Majerich, & Luo, Citation2014). Laboratory practicals related to the characteristics and motion of fluids are instrumental to problem-based learning in large engineering classes (Feisel & Rosa, Citation2005; Lal et al., Citation2017).

The science of learning, or ‘pedagogy’, is rapidly evolving, and many university educators are adjusting their teaching practices accordingly. The move to online learning environments through Learning Management Systems (LMS) is one such adjustment. These systems are perceived as offering dynamic and interactive environments in which contemporary teaching and learning practices can be implemented using online and digital tools and resources. Today’s students have also developed an aptitude for using technological devices for learning, and the use of virtual learning platforms continues to expand. Online learning resources such as videos are developed not only for lectures but also for laboratory activities, and they have been used extensively in engineering (Coleman & Smith, Citation2019; Jaeger & Adair, Citation2014; Lal et al., Citation2017; Lindsay, Liu, Murray, & Lowe, Citation2007; Peña-Fernández et al., Citation2020; Vial, Nikolic, Ros, Stirling, & Doulai, Citation2015).

Traditional laboratories also face new challenges due to increasing class sizes and constraints on time, laboratory space and equipment use, among others (Dawes, Murray, & Rasmussen, Citation2005). This is especially true where large cohorts must be split into sub-groups of typically fewer than 10–15 students. Another challenge is the availability of suitably experienced laboratory instructors, usually PhD and Master’s students, whose turnover is generally high because they leave on completion of their studies. This creates a cycle of constant recruitment and training of new laboratory instructors and introduces further variability into how these laboratories are delivered. Some universities have already introduced contemporary pedagogical approaches to teaching fluid mechanics laboratories, and there is a clear need to update the laboratory structure to include both physical and online components in an effort to create a contemporary and engaging learning environment, in particular for practical-intensive classes in the engineering curriculum.

The importance of face-to-face interactions in the laboratory environment, however, cannot be overstated, and Lal et al. (Citation2017) argue that a range of interactions affect students’ attainment of laboratory learning outcomes. Interactions in the laboratory between students, their peers and the instructor support their understanding. Manipulating the equipment enhances students’ ability to report, reflect on and draw conclusions from the findings of the laboratory work. Knowledge of designing and conducting experiments comes from interactions with equipment and instructors. Although these interactions are vital to student success, traditional laboratory designs tend to make students more reliant on instructors.

For this study, we compared a traditional laboratory approach with a hybrid approach combining face-to-face laboratory teaching and online learning, in an effort to overcome the challenges faced in teaching large fluid mechanics courses. The aim of the hybrid approach was to enhance student outcomes and engagement through self-directed learning, thereby reducing student dependence on the instructor.

2. Background

According to Kolb (Citation1984), laboratory learning proceeds in a systematic cycle, known as Kolb’s cycle, in which knowledge is gained before the laboratory class and then manifested through the transformation of experience (Abdulwahed & Nagy, Citation2009; Kolb, Citation1984). In laboratory exercises, ‘knowledge gain is achieved when students attempt to develop concrete knowledge of a topic on the basis of abstract concepts underpinning that knowledge acquired prior to commencing the practical work’ (Lal et al., Citation2017). Learning acquisition can be split into a number of steps in which (i) students develop a tentative idea of what they are about to learn and observe in the laboratory, as well as what is expected of them, typically through the laboratory instructions provided before the laboratory (pre-laboratory work); (ii) learning is achieved through demonstrations by the laboratory instructor and by students conducting the experiment themselves; and, finally, (iii) the work is assessed and knowledge is gained. This last step transforms the concepts that students assimilated before the laboratory into a physical manifestation of that knowledge. Laboratories therefore offer multiple distinct learning outcomes, which are specified in the accreditation guidelines for engineering education (Feisel & Rosa, Citation2005; Felder & Brent, Citation2003) and include high-impact practices (HIPs; G. D. Kuh, Citation2008) such as timely and constructive feedback, opportunities to discover the relevance of learning through real-world applications, and (public) demonstration of competence, as outlined by G. Kuh, O’Donnell, and Schneider (Citation2017).

The contemporary laboratory can be reconceptualised using a hybrid approach, often referred to as ‘blended learning’, in which online content is supported by face-to-face instruction. In our study, active learning was incorporated into the hybrid approach through interactive lecture demonstrations (ILDs) that followed a ‘predict, observe, discuss, synthesise’ learning sequence (Sokoloff, Citation2006). ILDs have been shown to help students overcome conceptual difficulties and to lead to significant learning improvements in various areas of science and engineering (Meltzer & Manivannan, Citation2002; Sokoloff & Thornton, Citation1997; Thornton & Sokoloff, Citation1998).

The use of online quizzes as a teaching and assessment tool has become popular in education (Wallihan et al., Citation2018), particularly as a form of formative assessment (Cohen & Sasson, Citation2016). When integrated into learning activities, online quizzes have been shown to have a positive influence on students’ performance (Cheng, Lu, Du, & Lim, Citation2017; Salas-Morera, Arauzo-Azofra, & García-Hernández, Citation2012). Moodle quizzes implemented in a third-year engineering course (Gamage, Ayres, Behrend, & Smith, Citation2019) were associated with more positive student engagement. Moreover, the information gained from the quizzes helped instructors decide how to improve other assessment items and modify the teaching plan.

These studies, and others, describe a framework that supports the positive impact on student learning of online laboratories, used alone or combined with formative assessment and active learning elements. However, there is a scarcity of literature describing hybrid models that combine features such as pre-laboratory demonstration videos and pre-laboratory tests with face-to-face laboratories; this is especially true in the field of fluid mechanics. Effective teaching depends on engaging students through learning activities that achieve the intended learning outcomes (Biggs & Tang, Citation2011). It is therefore important to analyse how a hybrid learning experience affects students’ performance and to assess how well the intended objectives have been met (Feisel & Rosa, Citation2005). Transforming traditional engineering laboratories into engaging and meaningful learning activities is instrumental for contemporary engineering classrooms, in particular those with large classes. Implementing such learning activities is usually driven by the questions ‘how can the learning experience be achieved, sustained and improved?’ (Dawes et al., Citation2005) and ‘how can learning objectives be met and efficiencies assessed?’ (Feisel & Rosa, Citation2005).

AIM

The aim of this study was to create a more flexible learning environment for engineering laboratory classes by developing and testing a hybrid fluid mechanics laboratory prototype for undergraduate teaching that incorporated ideas from the multiple-step teaching approach outlined in many previous works (e.g. Chowdhury, Alam, & Mustary, Citation2019; Kolb, Citation1984; Lal et al., Citation2017; Thornton & Sokoloff, Citation1998). In particular, this study investigates the impact of a hybrid laboratory model in which online resources and technologies complement and assist the management of student laboratory experiences, reducing the face-to-face time required by a traditional laboratory approach. This aim is further broken down into two research questions (RQs):

RQ1: Does restructuring a traditional 3-hour laboratory exercise into smaller learning units with online learning material and streamlined face-to-face time lead to better student engagement?

RQ2: As a result of the restructured laboratory experience, what learning interventions have had a significant impact on the student learning experience?

3. The intervention

Course Design

The course Environmental Fluid Mechanics (CIVL2131) is taught as a compulsory second-year course to 150–200 students at the University of Queensland (UQ). The course includes 65 contact hours, roughly divided into 40 hours of lectures, 13 hours of tutorials and 12 hours of experimental work (four laboratories of 3 h each). The overall assessment for the course is a combination of a final exam (50%) and semester work (50%). The laboratory component of the coursework consists of four experiments, worth 30% of the overall assessment. The course is very similar to those in other engineering schools, covering topics such as fluid properties, fluid statics, the Bernoulli, energy and momentum equations, dimensional analysis, flow and friction in conduits, and fluid loading (drag and lift forces). The course includes various assessments and learning activities, each contributing a different proportion of the total grade (Table 1).
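As a quick consistency check on the course figures above, the short Python sketch below recomputes the contact-hour total and the assessment split. The variable names are our own, and the per-experiment weight assumes that the 30% laboratory component is shared equally across the four experiments, which the text does not state.

```python
# Consistency check of the CIVL2131 course figures quoted in the text.
LECTURE_HOURS = 40
TUTORIAL_HOURS = 13
N_LABS = 4
HOURS_PER_LAB = 3  # traditional face-to-face laboratory length

lab_hours = N_LABS * HOURS_PER_LAB
total_contact_hours = LECTURE_HOURS + TUTORIAL_HOURS + lab_hours

# Final grade split (percent of the overall grade).
FINAL_EXAM = 50
SEMESTER_WORK = 50
LAB_COMPONENT = 30  # the four experiments together

# Assumption (not stated in the text): the experiments are weighted equally.
per_experiment_weight = LAB_COMPONENT / N_LABS
# Fraction of the semester work that the laboratories account for.
lab_share_of_semester_work = LAB_COMPONENT / SEMESTER_WORK

print(f"lab hours: {lab_hours}, total contact hours: {total_contact_hours}")
print(f"exam + semester work: {FINAL_EXAM + SEMESTER_WORK}%")
print(f"per experiment (assumed equal): {per_experiment_weight}%")
print(f"labs as share of semester work: {lab_share_of_semester_work:.0%}")
```

Under these figures the laboratories make up most of the semester work, which is why changes to the laboratory model can plausibly affect the coursework mark.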

Table 1. Distribution of score proportions to total student mark. The online quiz for the Model 2 laboratories was on a pass/fail basis.

The Laboratory Practical Content

In Experiment 1 (EXP1), students examine several different mechanical flow meters, making use of the continuity principle, Bernoulli’s equation and Euler’s equations. Students use hydraulic benches with different pipes and constrictions (flow meters) and measure pressure differences along the pipes to calculate discharge and velocities.
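The flow-meter calculation in EXP1 combines continuity (Q = A₁V₁ = A₂V₂) with Bernoulli’s equation between the upstream section and the constriction, which gives V₂² − V₁² = 2gΔh. The sketch below assumes a Venturi-type meter, a measured piezometric head difference Δh in metres of water, and a user-supplied discharge coefficient; the function name and the pipe dimensions in the example are hypothetical, not those of the UQ rigs.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def venturi_discharge(d1: float, d2: float, dh: float, cd: float = 1.0) -> float:
    """Discharge (m^3/s) through a Venturi-type constriction.

    d1, d2: upstream and throat diameters (m)
    dh:     piezometric head difference between the two sections (m)
    cd:     discharge coefficient (1.0 gives the ideal, loss-free value)
    """
    a1 = math.pi * d1**2 / 4
    a2 = math.pi * d2**2 / 4
    # Bernoulli + continuity: V2^2 - V1^2 = 2 g dh, with V1 = V2 * a2 / a1
    v2 = math.sqrt(2 * G * dh / (1 - (a2 / a1) ** 2))
    return cd * a2 * v2


# Hypothetical example: 26 mm pipe, 16 mm throat, 100 mm head difference.
q = venturi_discharge(0.026, 0.016, 0.100)
print(f"Q = {q * 1000:.3f} L/s")
```

In practice the discharge coefficient would be calibrated against a volumetric measurement on the hydraulic bench rather than assumed.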

In Experiment 2 (EXP2), students consider the behaviour of water flowing under a sluice gate and the force exerted by the flowing water on the gate. This experiment is conducted in a flume tank and relates to the momentum equation, which students use to estimate the force exerted by the water on the sluice gate.
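For EXP2, applying the momentum equation to a control volume between the upstream section (depth y₁) and the vena contracta downstream of the gate (depth y₂) gives ½ρgb(y₁² − y₂²) − F = ρQ(V₂ − V₁), where F is the force of the gate on the water. The sketch below rearranges this for F, assuming a rectangular channel of width b, hydrostatic pressure at both sections and negligible bed friction; the flume values in the example are hypothetical.

```python
RHO = 1000.0  # density of water, kg/m^3
G = 9.81      # gravitational acceleration, m/s^2


def sluice_gate_force(q: float, b: float, y1: float, y2: float) -> float:
    """Horizontal force (N) exerted by the water on a sluice gate.

    q: discharge (m^3/s); b: channel width (m)
    y1: upstream depth (m); y2: depth at the vena contracta downstream (m)
    Assumes hydrostatic pressure at both sections, a rectangular channel
    and negligible bed friction between them.
    """
    v1 = q / (b * y1)
    v2 = q / (b * y2)
    hydrostatic = 0.5 * RHO * G * b * (y1**2 - y2**2)
    momentum_flux_change = RHO * q * (v2 - v1)
    return hydrostatic - momentum_flux_change


f = sluice_gate_force(q=0.01, b=0.3, y1=0.20, y2=0.02)  # hypothetical flume values
print(f"F = {f:.1f} N")
```

Comparing this momentum-based estimate with a purely hydrostatic calculation shows students how much of the pressure force is offset by the acceleration of the flow under the gate.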

In Experiment 3 (EXP3), students examine friction losses in pipe flow, i.e. the relationship between head loss and flow rate under both laminar and turbulent conditions. Pressure differences are measured to determine the friction losses in pipes. Here, students have to determine the magnitude of the Darcy-Weisbach friction coefficient (f) using the Moody diagram. Measurements are performed using the same hydraulic bench (and pipe system) as in EXP1.
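The EXP3 analysis inverts the Darcy-Weisbach equation, h_f = f (L/D) V²/(2g), to obtain f from a measured head loss, and uses the Reynolds number Re = VD/ν to identify the flow regime. The sketch below is a minimal illustration under stated assumptions: the water viscosity, the Re < 2000 laminar threshold and the pipe readings are our own choices, and in the turbulent range the resulting f would be compared with the Moody diagram rather than computed from a formula.

```python
import math

G = 9.81     # gravitational acceleration, m/s^2
NU = 1.0e-6  # kinematic viscosity of water near 20 °C, m^2/s (assumed)


def mean_velocity(q, d):
    """Mean velocity (m/s) for discharge q (m^3/s) in a pipe of diameter d (m)."""
    return q / (math.pi * d**2 / 4)


def darcy_friction_factor(hf, length, d, v):
    """Invert the Darcy-Weisbach equation h_f = f (L/D) V^2 / (2 g) for f."""
    return hf * d * 2 * G / (length * v**2)


def reynolds(v, d, nu=NU):
    return v * d / nu


def laminar_friction_factor(re):
    """Theoretical f = 64 / Re for fully developed laminar flow."""
    return 64.0 / re


# Hypothetical reading: 0.1 L/s in a 10 mm pipe, 0.25 m head loss over 1 m.
d, length, hf = 0.010, 1.0, 0.25
v = mean_velocity(1.0e-4, d)
re = reynolds(v, d)
f = darcy_friction_factor(hf, length, d, v)
# Re < 2000 is a commonly used (approximate) laminar threshold.
regime = "laminar" if re < 2000 else "transitional/turbulent"
print(f"V = {v:.3f} m/s, Re = {re:.0f} ({regime}), f = {f:.4f}")
if regime == "laminar":
    print(f"laminar theory: f = {laminar_friction_factor(re):.4f}")
```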

In Experiment 4 (EXP4), students investigate drag forces (fluid loading) on objects. Forces exerted by the flowing fluid on objects of different shapes (round and square cylinders, an aerofoil) are measured with a load cell at various flow velocities in a flume tank, and the drag coefficients (CD) are then determined from these measurements.
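The drag coefficient in EXP4 follows from the standard definition C_D = F / (½ρV²A), where F is the measured drag force, V the approach velocity and A the projected (frontal) area of the object. The sketch below assumes water at 1000 kg/m³; the cylinder dimensions and the load-cell reading are hypothetical.

```python
RHO = 1000.0  # water density, kg/m^3


def drag_coefficient(force: float, velocity: float, frontal_area: float,
                     rho: float = RHO) -> float:
    """C_D = F / (0.5 * rho * V^2 * A), with A the projected (frontal) area."""
    return force / (0.5 * rho * velocity**2 * frontal_area)


# Hypothetical reading for a round cylinder: 25 mm diameter, 100 mm immersed length.
area = 0.025 * 0.100
cd = drag_coefficient(force=0.24, velocity=0.4, frontal_area=area)
print(f"C_D = {cd:.2f}")
```

Repeating the calculation for each velocity and shape lets students compare how bluff bodies (cylinders) and streamlined bodies (the aerofoil) differ in drag.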

For the purposes of this study, EXP1 and EXP2 were run as the traditional model (Model 1, Figure 1a), whilst EXP3 and EXP4 were run as the hybrid model (Model 2), illustrated in Figure 1b.

Figure 1. Flowcharts of the two models compared in the study. a) Model 1: traditional laboratory in which students spend 3 h with the laboratory demonstrator. b) Model 2: pre-laboratory preparation can be done online, including a feedback loop, followed by a reduced face-to-face time of 1 h with the experimental rig and reflection on the laboratory in a report that can be handed in 2–3 days after the face-to-face session.

The Laboratories

The laboratories require students to conduct experiments in which they apply basic fluid mechanics concepts to common (everyday) fluid mechanics problems and solve them through critical thinking strategies. Through these experiments, students are expected to meet a range of intended learning outcomes (Table 2). Apart from strengthening their knowledge from lectures, students are required to learn to collaborate effectively with others, to work towards a common outcome, and to demonstrate their ability to collect, analyse and organise information and ideas and to convey those ideas clearly and fluently, in both written and spoken forms. On completion of each laboratory experiment, students are expected to deliver a laboratory report, which is assessed for the completeness of calculations and the accuracy of the data gathered. Thus, practical skill assessment is an essential part of the fluid mechanics laboratories (Lal et al., Citation2017), and the majority of learning objectives can be classified as high-impact practices (G. Kuh et al., Citation2017). With increasing reliance on distance education programs, comparing traditional laboratories with more contemporary laboratory settings through the evaluation of students will add to the understanding of critical components of the undergraduate experience (Feisel & Rosa, Citation2005).

Table 2. Intended Learning Outcomes for CIVL2131 students.

Model 1 (Traditional Model)

As depicted in Figure 1a, the laboratory practical is traditionally a three-hour face-to-face experiment (Model 1). This model incorporates conventional lectures and requires students to complete an in-class (paper-based) test before undertaking the laboratory (3 h face to face) and then to submit a laboratory report. In the traditional model, the first step required students to complete a pre-laboratory form at the beginning of the laboratory class, which took approximately 15 to 30 minutes. Students then took measurements either on the hydraulic bench (experiments 1 and 3) or in the flume tank (experiments 2 and 4). Finally, students prepared a laboratory report based on the measurements taken during the experiment. In the traditional approach (Model 1), students were prepared for the experiments by an in-class demonstration (1 hour per experiment) that incorporated a 3-minute demonstration video. This was followed by in-class quizzes (each worth 2.5% of the overall grade) given in weeks 4 and 9, prior to the experiments.

Model 2 (Hybrid Model)

Model 2 is a three-phase model. During Phase 1, students worked on pre-laboratory questions linked to lecture material, the textbook, worked examples and a video (the video was only available for EXP3) before being allowed to attend the laboratory session. Alongside these resources (lecture recordings, worked examples and the textbook), students had access to the instructions for the experiment and completed a five-question quiz as part of the pre-laboratory work. It should be noted that, with an online system, data and results from previous laboratories (e.g. those conducted in the previous year) can easily be used to inform the pre-laboratory process. The quiz served as a gatekeeper to the physical laboratory, so that only students who showed mastery of the concepts introduced in Phase 1 could schedule a session in the physical laboratory space (Phase 2).
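The gatekeeping rule can be expressed as a simple predicate over quiz attempts. The sketch below is hypothetical: the text says only that students had to show “mastery”, so the pass mark of five out of five, the possibility of multiple attempts (our reading of the feedback loop in Figure 1) and the names `QuizAttempt` and `may_book_lab` are all assumptions, not features of the UQ learning management system.

```python
from dataclasses import dataclass

QUIZ_LENGTH = 5
PASS_MARK = 5  # hypothetical: the text says only that students had to show "mastery"


@dataclass
class QuizAttempt:
    student_id: str
    correct: int  # questions answered correctly, out of QUIZ_LENGTH


def may_book_lab(attempts: list, student_id: str, pass_mark: int = PASS_MARK) -> bool:
    """Gate for Phase 2: True once any attempt by this student reaches the pass mark.

    Allowing repeated attempts is an assumption modelling the feedback loop.
    """
    return any(a.correct >= pass_mark for a in attempts if a.student_id == student_id)


attempts = [
    QuizAttempt("s1", 3), QuizAttempt("s1", 5),  # passes on a second attempt
    QuizAttempt("s2", 4),                        # has not yet passed
]
print(may_book_lab(attempts, "s1"), may_book_lab(attempts, "s2"))  # True False
```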

In Phase 2, experiments were conducted in the hydraulic laboratory and managed by experienced tutors/demonstrators, allowing students to collect the necessary data. Phase 2 was run very similarly to the traditional laboratory, meaning that the nature of the interaction between instructor and students remained relatively unchanged, apart from the more streamlined laboratory time (1 h). This enabled more efficient management of laboratory time and resources. In addition, the online material for EXP3 (friction losses in pipes) included a 3-minute laboratory demonstration video in which two tutors/demonstrators explained the experimental procedure and underlying theory. Due to time constraints, an equivalent instructional video was not available for EXP4. When students arrived in the physical laboratory space, tutors checked that they had passed the quiz, explained the experimental set-up and procedures, and assisted them in collecting data from the experiment.

This was followed by Phase 3, in which students further analysed the data, prepared a laboratory report and solved a specific problem relating the experimental data set to a real-world scenario. The report included activities that connected experimental data with real-world applications and provided evidence of student engagement in the laboratories. It had to be submitted online within 72 hours of completing the experiment in Phase 2. As an additional task in EXP3 and EXP4, students had to use the data sets they collected to solve a real-world problem, for example determining the pressure in a brewery’s pipe system (EXP3) and identifying best design practices to reduce fluid drag on a bridge crossing (EXP4).

The new hybrid model (Model 2, Figure 1b) provided a learning environment in which instructional resources, including lecture presentations and videos, were provided online. These were followed by an online pre-laboratory quiz, in which five questions related to the specific laboratory experiment were chosen randomly from a pool of 10 questions, then a reduced face-to-face laboratory practical (~1–1.5 h), and finally online submission of the laboratory report.
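Drawing five questions at random from a pool of ten is sampling without replacement. The sketch below uses Python’s `random.sample` with placeholder question identifiers; the seeded generator is only there to make the example reproducible, and nothing here reflects how the course’s quiz software actually performs the draw.

```python
import math
import random

POOL_SIZE = 10
QUIZ_LENGTH = 5


def draw_quiz(pool, k=QUIZ_LENGTH, rng=None):
    """Return k distinct questions drawn uniformly at random without replacement."""
    rng = rng if rng is not None else random.Random()
    return rng.sample(pool, k)


pool = [f"Q{i}" for i in range(1, POOL_SIZE + 1)]  # placeholder question IDs
quiz = draw_quiz(pool, rng=random.Random(2131))     # seeded for reproducibility
print(quiz)
print("distinct quizzes possible:", math.comb(POOL_SIZE, QUIZ_LENGTH))  # 252
```

With 252 possible question sets, two students are unlikely to receive exactly the same quiz, which limits the value of simply sharing answers.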

The hybrid model was developed to streamline laboratory assignments, give students more flexible time management and increase student engagement with the material. Significantly, students were required to complete the online pre-laboratory questions before being allowed to attend a laboratory session. By moving most of the teaching resources online and offering a more flexible ‘gating’ approach, considerable benefits were anticipated for both students and lecturers, including greater flexibility for students to engage with the course content and less overall time required by the lecturer to manage students’ access to the laboratories.

Methodology/Analysis of data

Students’ experience and performance under the two laboratory models were assessed through: (1) a comparison of student grades between Model 1 and Model 2; (2) student feedback obtained through a targeted in-class survey (see Table 3) after completion of both laboratory models; (3) the contribution of the different models to students’ overall performance in the closed-book final exam; (4) the end-of-semester Student Evaluation of Course and Teacher (SeCAT) reports for the course, which are conducted by the university; and (5) tutor/demonstrator feedback obtained through a questionnaire and round-table discussions.

Table 3. Questions in the student survey.

Forty students participated in the targeted survey outlined in Table 3. Each question in Table 3 was answered on a 5-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = undecided, 4 = agree, 5 = strongly agree). Questions 1–6 address RQ1, and Questions 7–12 target students’ satisfaction with the structure of the models (RQ2). An ANOVA (described below) was used to estimate the significance of the student responses. Sixty-six students also participated in the end-of-year survey (a general survey of the course). Responses to this survey were not given on a Likert scale; instead, students’ comments were used to support or contradict the hypothesis that Model 1 and/or Model 2 were successful.
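For a single Likert item, the two summaries used in the results (the mean score and a χ² goodness-of-fit test) can be computed as follows. This is a minimal sketch, assuming a uniform null hypothesis in which every response category is equally likely; the 40 responses are hypothetical and are not the study’s data. SciPy’s `scipy.stats.chisquare(observed)` returns the same statistic (it also defaults to uniform expected frequencies) together with a p-value.

```python
from collections import Counter

LIKERT_CATEGORIES = (1, 2, 3, 4, 5)  # strongly disagree ... strongly agree


def likert_summary(responses, categories=LIKERT_CATEGORIES):
    """Mean score and chi-square goodness-of-fit statistic against a uniform
    null hypothesis (every category equally likely)."""
    n = len(responses)
    counts = Counter(responses)
    observed = [counts.get(c, 0) for c in categories]
    expected = n / len(categories)
    chi2 = sum((o - expected) ** 2 / expected for o in observed)
    df = len(categories) - 1  # degrees of freedom = number of categories - 1
    return sum(responses) / n, observed, chi2, df


# Hypothetical answers from 40 students to one question (not the study's data).
responses = [5] * 10 + [4] * 20 + [3] * 6 + [2] * 4
mean, observed, chi2, df = likert_summary(responses)
print(f"mean = {mean:.2f}, observed = {observed}, chi2({df}) = {chi2:.2f}")
```

A large χ² indicates that responses cluster in some categories rather than being spread evenly, but it does not by itself say whether the clustering is towards agreement or disagreement; the mean score supplies that direction.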

The final exam at the end of semester was closed book, requiring students to have a good knowledge of the course content covered throughout the semester in order to solve the problems. The final exam score can be used as an indicator of how effectively the individual assessment tasks contributed to the student learning experience (Bjælde, Jørgensen, & Lindberg, Citation2017; Boud & Falchikov, Citation2007), as the exam included a question for each concept covered in the laboratory experiments (e.g. Q1 in the exam related to the fluid mechanics concepts applied in EXP1, Q2 to EXP2, and so forth). The scores obtained from each of the coursework assessment tasks (Table 1) and associated learning activities were standardised in SPSS. Exploratory statistics were run to identify outliers that might affect the results, while descriptive statistics and Pearson correlation coefficients were used to estimate, respectively, the mean score of each assessment task and the extent to which it correlated with the final exam score. An ANOVA was used to estimate the significance of the activities’ contribution to the final exam score, while the coefficients of the multiple linear regression were used to determine the contributing effect of each predictor variable (assessment and learning activities) on the final exam score. The adjusted R² expresses the strength of the relationship between the predictor variables and the dependent variable (final exam score) as a percentage between 0 and 100. For this study, R² > 60% was considered to indicate a strong relationship, while an R² between 30% and 59% was considered to indicate a moderate relationship explaining the variability within the model.
These thresholds rest on the premise that several other factors (including behavioural factors) not considered here could influence students’ performance in the assessment and learning activities (Hair, Ringle, & Sarstedt, Citation2013; Henseler, Ringle, & Sinkovics, Citation2009; Mooi & Sarstedt, Citation2014). The Beta (B) coefficient is the multiplier that describes the size of the effect of an independent variable on the dependent variable (Y). The t-statistic is the B coefficient divided by its standard error. The standard error is an estimate of the standard deviation of the coefficient and can be interpreted as a measure of the precision with which the regression coefficient is estimated. The Sig. value is the p-value, and the typical significance threshold is p < 0.05: assuming the statistical model is specified correctly, this means that an effect at least as large as the one observed would arise less than 5% of the time if the variable in question had no effect.
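The quantities described in the two paragraphs above (standardised scores, Pearson correlation, adjusted R², and the t-statistic with its p-value) can be illustrated with a short, dependency-free Python sketch. It is not the authors’ SPSS procedure: the marks are hypothetical, the function names are our own, and the p-value uses a normal approximation to the t distribution, whereas SPSS reports exact t-based values.

```python
import math
import statistics


def standardise(xs):
    """z-scores using the sample mean and sample standard deviation."""
    m, s = statistics.mean(xs), statistics.stdev(xs)
    return [(x - m) / s for x in xs]


def pearson_r(xs, ys):
    """Pearson product-moment correlation coefficient."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def adjusted_r2(r2, n, p):
    """Adjusted R^2 for n observations and p predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


def strength(adj_r2):
    """Bands used in the text: > 60 % strong, 30-59 % moderate."""
    pct = 100 * adj_r2
    if pct > 60:
        return "strong"
    if pct >= 30:
        return "moderate"
    return "below the moderate band"


def t_and_p(b, se):
    """t = B / SE and a two-sided p-value from the normal approximation,
    which is reasonable for large samples such as N = 167."""
    t = b / se
    p = math.erfc(abs(t) / math.sqrt(2))
    return t, p


exam = [55, 62, 70, 48, 81, 66]  # hypothetical final exam scores
exp3 = [14, 15, 17, 12, 19, 15]  # hypothetical EXP3 marks out of 20
print(round(pearson_r(exp3, exam), 3))
adj = adjusted_r2(0.40, n=167, p=4)
print(round(adj, 3), strength(adj))
print(t_and_p(0.5, 0.25))  # t = 2.0, p ≈ 0.0455
```

Because Pearson’s r is unchanged by linear rescaling, standardising the scores before correlating them does not alter r; standardisation matters mainly for comparing regression coefficients across tasks marked on different scales.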

The qualitative data were thematically analysed through data reduction, organisation and interpretation. By reading through all SeCAT responses, the researchers gained a good understanding of the ideas expressed and the patterns among them. This allowed the data to be reduced into emerging broad themes, which were further categorised into sub-themes, as shown in Table 4. The categorisations were indexed by selecting fragments of information from the emerging themes to summarise all the information from the scripts. By identifying patterns within and across the dataset, the data were then critically analysed to understand their implications for the study (Braun & Clarke, Citation2012).

Table 4. Thematic analysis of the general student feedback from end-of-year survey (SeCAT).

Feedback from the tutors/demonstrators was obtained via a focus group discussion at the end of the course. Four of the five demonstrators had taught this course on at least one prior occasion, whilst one was tutoring for the first time. Three of the five demonstrators were PhD students in the second year of their doctoral studies, whilst the other two were undergraduate students in the fourth year of their BEng degree, both of whom had taught this course before.

4. Results

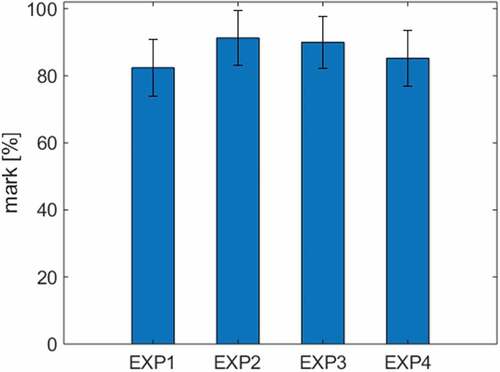

Figure 2 depicts the average student score (and variance) for the four experiments. EXP1 and EXP2 represent Model 1 and had averages of 82.4% and 83.2%, respectively. EXP3 and EXP4 were run with Model 2 and yielded the second and third highest scores. A direct comparison of grades therefore suggests that overall student performance was largely independent of the model used, with no clear trend in performance or learning.

Figure 2. Average student score (and variance) for the four experiments. Model 1 is represented by EXP1 and EXP2, and Model 2 by EXP3 and EXP4.

Tables 5 and 6 show the correlation and impact of the learning activities on students’ performance. It was assumed that all assessment and learning activities would contribute significantly to students’ final exam score. The Pearson correlation coefficients revealed that Critical Thinking Question 1 (CTQ1, r = 0.565), Critical Thinking Question 2 (CTQ2, r = 0.447), the Midterm Exam (r = 0.433) and Experiment 3 (EXP3, r = 0.357) were moderately correlated with the final exam score, whilst the remaining predictors were weakly correlated (r < 0.30). In other words, EXP1, EXP2 and EXP4 were only weakly associated with the final exam score, in contrast to EXP3. Non-significant predictors that were negatively correlated with the final exam score were isolated and eliminated from the model to better explain which predictors contributed to students’ final exam score. The full ANOVA results (see supplementary material), with an r-value of 0.398, revealed that all experiments (EXP1–EXP4), CTQs and assignment activities together contributed significantly to the final exam score (F(4, 163) = 28.58, p < 0.001). In terms of individual impact, the regression coefficients (Table 6) revealed that, of all assessment items, only the Midterm Exam, EXP3 and CTQ1 were significant contributors to students’ final exam score. Statistically, therefore, EXP3 was the only laboratory exercise that had an impact on performance. The association between the Midterm Exam and the final exam score can be explained by similar learning strategies, as studying for a midterm exam is often analogous to preparing for the final exam (studying tutorial problems). In previous years (not shown), CTQs have been the favoured assessment task in the course, as evidenced by student surveys. EXP3, representing laboratory Model 2, was the only other assessment task that had a significant correlation with the final exam score.
This can be attributed to several factors. First, EXP3 had the most fully developed online material (lecture material and recordings, online quiz, laboratory instruction video, laboratory report and real-world example). Second, EXP3 used the same hydraulic apparatus as EXP1, so students likely had some familiarity with the experimental set-up. EXP4’s weaker contribution could be explained by the lack of an instructional video, as its online material consisted only of lecture material and recordings, the online quiz and written laboratory instructions. EXP4 was also a new laboratory exercise compared with previous years. The omission of video instructions could therefore be a key factor in why its correlation was weaker than that of EXP3.

Table 5. Descriptive statistics and Pearson correlation for CIVL2131 (N = 167). The grade scale for the average values (Mean) column ranges from Fail (1) to High Distinction (7). The four experiments are marked out of 20; these marks were not converted in this table.

Table 6. Regression coefficients of assessment tasks and Pearson correlation (N = 167). The t-statistic is the coefficient divided by its standard error, r is the Pearson correlation coefficient and p indicates the statistical significance.

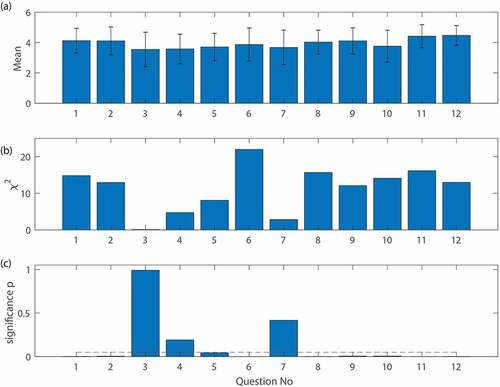

Results of the student survey comparing the two laboratory models reflected students’ perceptions of how instructional activities supported their learning. Figure 3a depicts the average score on the 5-point Likert scale. Half of the questions had an average score lower than 4 (‘agree’), and the lowest score of any question was for Q3 (‘I used the online/lecture material several times for the preparation’), at 3.55, placing the average answer between ‘undecided’ and ‘agree’. The χ2 goodness-of-fit test for each question is shown in Figure 3b, and the asymptotic significance test (Figure 3c) indicated that 75% of the students had perceptions that were statistically significant. Q1 to Q6 targeted students’ perceptions of the different models. Overall, students thought that the online/lecture materials prepared them for the practical experiments (χ2(3) = 14.84, p < .0002). They also indicated that they used the online lecture material for the practical laboratory sessions (χ2(3) = 12.95, p < .0005). The student feedback further demonstrates that the pre-laboratory activities both stimulated their curiosity and prepared them for the laboratory exercises. However, many students also indicated that they did not use the pre-laboratory activities more than once when preparing for the laboratory experiments. Responses on whether the online quizzes motivated students (stimulated their curiosity) to learn were not statistically significant (χ2(3) = 4.74, p < .192). The responses to Q7 to Q11 further demonstrate that splitting the laboratory into different phases made the learning experience more efficient (χ2(3) = 15.68, p < .0001) and that the structure of EXP3 and EXP4 was more appreciated than the traditional laboratory format (EXP1 and EXP2).
Submitting assignments digitally was perceived as more convenient than handing in a hard copy (χ2(4) = 14.11, p < .0007), and that generally, students prefer quick feedback on their laboratory report. Finally, Q12 revealed that linking EXP3 and EXP4 to real-world problems helped students to better understand the theory (χ2(2) = 13.00, p < .0002).

Figure 3. Graphical analysis of the student survey (see ) for the experiments: a) average score of each question, with the 5-point Likert scale on the y-axis; b) χ² goodness-of-fit statistic; c) asymptotic significance.
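The χ² goodness-of-fit statistics above compare the observed response counts for a question with an expected distribution. The Python sketch below assumes, since the expected frequencies are not stated here, that the expectation is uniform across the k response categories entered into the test, which gives k − 1 degrees of freedom. The p-value uses the closed-form upper-tail series of the chi-square distribution for integer degrees of freedom. The function names and the response counts are hypothetical. As a check on the implementation, a statistic of 4.74 on 3 degrees of freedom gives p ≈ 0.19, in line with the value reported for Q4.

```python
import math


def chi2_gof_uniform(counts):
    """Chi-square goodness-of-fit statistic against a uniform expectation
    (an assumption). Returns (statistic, degrees_of_freedom)."""
    k = len(counts)
    if k < 2 or sum(counts) == 0:
        raise ValueError("need at least two categories and some responses")
    expected = sum(counts) / k  # equal expected count in every category
    stat = sum((o - expected) ** 2 / expected for o in counts)
    return stat, k - 1


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with an
    integer number of degrees of freedom df >= 1 (closed-form series)."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) plus
    # sqrt(2/pi) * exp(-x/2) * sum_{i=1}^{(df-1)/2} x^(i - 1/2) / (2i - 1)!!
    tail = math.erfc(math.sqrt(half))
    term = math.sqrt(x) * math.sqrt(2.0 / math.pi) * math.exp(-half)
    total = 0.0
    for i in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * i + 1)
    return tail + total


# Hypothetical counts for one question across four response categories
stat, df = chi2_gof_uniform([5, 12, 40, 23])
print(f"chi2({df}) = {stat:.2f}, p = {chi2_sf(stat, df):.2g}")
print(f"p for chi2(3) = 4.74: {chi2_sf(4.74, 3):.3f}")
```

In practice a statistics package would normally be used (for example SciPy's `scipy.stats.chisquare`); the hand-written series is shown only to make the calculation transparent.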

Student comments from the end-of-semester survey (SeCAT) reflect generally positive feedback on the laboratory exercises (). Without distinguishing clearly between Model 1 and Model 2, students appreciated the link between practice and theory and felt that the concepts helped improve their understanding of the content and consolidated knowledge learnt in lectures. Generally, the laboratory models provided a visual representation of the learning content with clear instructions. However, students also reported that Model 2 had some advantages due to its structure. Common statements included ‘ … practical submission times should be after the practical like it was for EXP3 and EXP4 … I really appreciated having the extra time available for EXP3 and EXP4.’, ‘changing the laboratory layout for the third and fourth practicals which resulted in a more comprehensive understanding of the content.’ and ‘Much clearer instructions for Model 2.’ Students generally preferred Model 2 (‘the new addition of online feedback is a good move forward.’), whereas the 3 h duration of the experiment in Model 1 was less appreciated (‘Draining with rush to submit report in 3 hours’; ‘Practical were difficult to complete in 3 h’). Whilst the majority of students found it more convenient to submit a digital version of the laboratory report and to have extra time to reflect on the analysis, some students expressed concerns about the equity of contributions and workload within a group (e.g. ‘ … Inadequate support/marks unreflective contribution by group members’).

Feedback on the two models was also sought from the five laboratory demonstrators. Three were undecided or agreed that the online materials and pre-laboratory activities encouraged better engagement and time management. All laboratory demonstrators favoured the structure of Model 2 (EXP3 and EXP4) over Model 1 (EXP1 and EXP2), and they agreed that students were better prepared for EXP3 and EXP4 than for EXP1 and EXP2. However, the demonstrators were less enthusiastic about moving laboratory report submission to after the laboratory sessions, as they had to commit more time to marking. This contrasts with the students’ view, which was more appreciative of the post-laboratory online submission system.

5. Discussion

The benefits of laboratory-based learning in the engineering curriculum are well documented in the literature (Feisel & Rosa, 2005; Forster et al., 2017; Kolb, 1984), including in Fluid Mechanics (e.g. Gamez-Montero et al., 2015; Lal et al., 2017). Thus, the question is not whether laboratories are fundamental teaching interventions for large engineering classes, but whether restructuring and embedding laboratory experiences in an online learning environment benefits student engagement (RQ1), and whether certain elements of Model 2 are worth developing further (RQ2).

RQ1

The student grades from the two laboratory models were comparable, with no significant difference in student performance, and given the high scores it can be concluded that students in both models met the intended learning outcomes satisfactorily (see ILO, and ). These grades are consistent with those of previous years, supporting the efficacy of the laboratory exercises. However, statistical analysis suggests that Model 2 may provide a better learning experience when final exam grades are taken into consideration, indicating that the hybrid model achieved better alignment with the intended learning outcomes. Based on interactions with students during the face-to-face sessions, student performance and feedback, students were well engaged in all experiments but appear to have favoured Model 2 (EXP3 and EXP4) over Model 1 (EXP1 and EXP2). This is most likely due to the structure of the hybrid design in Model 2, in which students were more involved in problem-based activities that reinforced their learning. We argue that this occurred through several factors: first, students engaged with the theory through the online materials; second, they could apply that theory only after completing the quiz; and third, in applying these concepts to a real-life situation, they had to connect what they had learnt in the laboratory to solve a problem. We suggest that hands-on practical activities (taking measurements and observing fluid behaviour to internalise the theory) remain the most efficient way to teach this subject, and that students are more likely to develop the ability to apply concepts clearly to real-life situations when theory is properly linked to hands-on practical activities. This enables them to reflect on what they have learnt in the laboratory to solve problems, thereby improving their performance.

Notably, our analysis based on direct student feedback indicates that the structure of Model 2 gave students opportunities to interact more frequently with the lecture and course material. Model 2 increased students’ capacity to revisit recorded lectures and repeatedly view videos of laboratory instructions and experimental procedures. In addition, the online quizzes gave them the flexibility to interact with the learning material throughout the core unit and to prepare for the final exam.

RQ2

Students considered the online quizzes good preparation, but did not feel more motivated by the feedback they received from them, and the quizzes failed to stimulate curiosity (p = 0.192, Q4 in ). Requiring students to complete the gatekeeping quizzes before the laboratory meant that only well-prepared students undertook the practical, which streamlined the face-to-face time and made it more efficient. The benefits of this gatekeeping are considered important (Zimmerman, 2000). The online quizzes allowed students to self-identify learning gaps through the feedback provided and also increased student engagement, preparation for the face-to-face time and self-reflection (Landrum, 2020; León, Núñez, & Liew, 2015). In future, more emphasis could be placed on the experimental procedure in the pre-laboratory quiz to strengthen the connection between the theory and the laboratory exercises. Statistics available through the Learning Management System (Blackboard) indicate that approximately half of the students watched the material more than once, whilst the other half visited the instructions only once. This may indicate that the instructions were clear enough for students to feel confident taking the test before attending the face-to-face laboratory or, because the test could be taken as many times as required, that students developed a tolerance for risk by attempting the test after studying the material only once. Future work should therefore analyse the influence of gatekeeping settings, for example whether limited or unlimited attempts trigger different levels of engagement with the online learning material.
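The gatekeeping rule described above (only students who have completed the pre-laboratory quiz may enter the face-to-face session), together with the suggested comparison of limited and unlimited attempts, can be expressed as a small access check. This is a hypothetical sketch: the pass mark, the `max_attempts` setting and the `QuizRecord` structure are illustrative assumptions, not the Blackboard configuration or the card-reader system used in this study.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class QuizRecord:
    """Hypothetical record of one student's pre-laboratory quiz attempts."""
    student_id: str
    scores: List[float] = field(default_factory=list)  # percentage score per attempt, in order


def may_enter_lab(record: QuizRecord, pass_mark: float,
                  max_attempts: Optional[int] = None) -> bool:
    """Gatekeeping check: admit the student if a counted attempt reaches pass_mark.

    max_attempts=None models unlimited attempts; an integer counts only the
    first max_attempts attempts, modelling a limited-attempt setting.
    """
    if max_attempts is None:
        counted = record.scores
    else:
        counted = record.scores[:max_attempts]
    return any(score >= pass_mark for score in counted)


# Hypothetical student who reaches the pass mark on the third attempt
student = QuizRecord("s001", [60.0, 85.0, 100.0])
print(may_enter_lab(student, pass_mark=100.0))                  # True: unlimited attempts
print(may_enter_lab(student, pass_mark=100.0, max_attempts=2))  # False: limited to two attempts
```

Logging which setting each cohort ran under, alongside the existing Blackboard view statistics, would allow the limited-versus-unlimited comparison proposed above.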

The results also indicate more ambivalence towards the submission system for the final report, suggesting that the online learning environment in its current form still requires modification and that there is likely no ‘one-size-fits-all’ approach for large classes, given the diversity of student learning preferences. Students gave very positive feedback on EXP3 and EXP4 regarding the use of online materials and, in particular, the linking of experiments to real-world examples in the written report. This reflects a core engineering principle in which laboratory data are used to optimise a real-world design. It also provides an opportunity for students to reflect on the information gained from the laboratory exercise (Selwyn & Renaud-Assemat, 2020).

A final thought concerns the long-term impact and cost efficiency of hybridising laboratory experiments. A comparison of tutors’ time commitment and the resulting costs for Model 1 and Model 2 did not reveal a significant cost benefit, as tutors had to mark more extensive laboratory reports (for instance, those including the additional real-world scenario) and create some of the online material. Nonetheless, the hybrid model could become more cost-efficient, as online material can be reused and the marking process streamlined. We anticipate that a hybrid model will be more cost-efficient in the long term (for instance with respect to tutor attrition) and will provide other benefits, such as improved student engagement, that make the added expense a worthwhile investment. This study, conducted in 2019, was in effect a precursor to the changes induced by the COVID-19 pandemic, which resulted in a further shift to online laboratories globally. Assessing and evaluating online teaching has already become a key focus in (re)shaping contemporary teaching strategies (Bonfield, Salter, Longmuir, Benson, & Adachi, 2020), and the hybrid model could offer a compromise between limited laboratory space, the flipped classroom and much-needed hands-on laboratory training in a streamlined format.
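The long-term cost argument above can be made explicit with a simple amortisation model. This is an illustrative sketch only: the symbols are hypothetical and no cost figures from the study are implied. Let $C_0$ be the one-off cost of creating the online material, $M_1$ and $M_2$ the recurring per-offering tutor costs (supervision and marking) of Model 1 and Model 2, and $n$ the number of course offerings in which the material is reused. The average cost per offering of Model 2 then falls below that of Model 1 once

$$
\frac{C_0}{n} + M_2 < M_1 \quad\Longleftrightarrow\quad n > \frac{C_0}{M_1 - M_2}, \qquad \text{provided } M_2 < M_1 .
$$

If streamlined marking does not bring $M_2$ below $M_1$, no amount of reuse makes Model 2 cheaper on tutor costs alone, so in this simple model the long-term saving depends on reducing the recurring marking load. Benefits such as reduced tutor attrition or improved engagement lie outside the model.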

6. Conclusions

The hybrid laboratory design of Model 2, which combined online learning material and assessment with a face-to-face laboratory, provides valuable insight into the efficiency of such approaches and their benefits for student engagement. At present, there is a dissonance between the desire for hands-on laboratory exercises as a key component of engineering education and contemporary models of course delivery as universities shift online. Against this background, the findings of this study are expected to encourage course coordinators to adopt a hybrid approach that integrates best practice in face-to-face teaching with online learning delivery. The data analysis indicates that:

- Feedback on the use of a hybrid laboratory model is positive, and although there is no statistically significant impact on performance, improvements in student engagement are evident.

- The use of a quiz prior to the laboratory created greater interaction with the course material but did not stimulate curiosity. We suggest further analysis of gatekeeping routines and automated feedback loops (especially relevant for large classes).

- Using a hybrid structure, face-to-face time can be substantially reduced while maintaining or increasing student engagement and achieving the intended learning outcomes. This could be useful for laboratory classes already under pressure of time and space.

- Students showed no appreciation of the greater flexibility in time management provided; however, linking laboratory data to real-world applications helps students reflect on the learning objectives of the experimental work.

Ethics

This work has received ethics approval from the Human Ethics and the Research Ethics and Integrity Committee under the Ethics Application - 2021/2021/HE002337 at the University of Queensland.

Acknowledgments

This study was funded by a Teaching and Learning Grant from the Faculty of EAIT at the University of Queensland. We would like to thank all students of CIVL2131 who participated in the surveys. Discussions with Badin Gibbes, Liza O’Moore, and the ITaLI Team at UQ were also greatly appreciated. A special thanks goes to the laboratory technicians Mathew Stewart and Jason van der Gevel for support during the laboratory sessions. We also thank the EAIT Helpdesk for discussions around the student ID card reader, which was used to check completion of the pre-laboratory quiz before the laboratory.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abdulwahed, M., & Nagy, Z.K. (2009). Applying Kolb’s experiential learning cycle for laboratory education. Journal of Engineering Education, 98(3), 283–294. doi:10.1002/j.2168-9830.2009.tb01025.x

- Alam, F., Tang, H., & Tu, J. (2004). The development of an integrated experimental and computational virtual learning tool for thermal fluid science. World Transactions on Engineering and Technology Education, 3(2), 249–252.

- Biggs, J., & Tang, C. (2011). Teaching for quality learning at university. McGraw-Hill Education.

- Bjælde, O.E., Jørgensen, T.H., & Lindberg, A.B. (2017). Continuous assessment in higher education in Denmark: Early experiences from two science courses. Dansk Universitetspædagogisk Tidsskrift, 12(23), 1–19. doi:10.7146/dut.v12i23.25634

- Bonfield, C.A., Salter, M., Longmuir, A., Benson, M., & Adachi, C. (2020). Transformation or evolution?: Education 4.0, teaching and learning in the digital age. Higher Education Pedagogies, 5(1), 223–246. doi:10.1080/23752696.2020.1816847

- Boud, D., & Falchikov, N. (2007). Developing assessment for informing judgement. In Rethinking assessment in higher education (pp. 181–197). London: Routledge.

- Braun, V., & Clarke, V. (2012). Thematic analysis.

- Cheng, Y., Lu, S., Du, Y., & Lim, G. (2017). Introducing online quizzes into lab-based teaching in university. International Journal of Information and Education Technology, 7(8), 571. doi:10.18178/ijiet.2017.7.8.933

- Chowdhury, H., Alam, F., & Mustary, I. (2019). Development of an innovative technique for teaching and learning of laboratory experiments for engineering courses. Energy Procedia, 160, 806–811. doi:10.1016/j.egypro.2019.02.154

- Cohen, D., & Sasson, I. (2016). Online quizzes in a virtual learning environment as a tool for formative assessment. Journal of Technology and Science Education (JoTSE), 6(3), 21. doi:10.3926/jotse.217

- Coleman, S.K., & Smith, C.L. (2019). Evaluating the benefits of virtual training for bioscience students. Higher Education Pedagogies, 4(1), 287–299. doi:10.1080/23752696.2019.1599689

- Dale, E. (1969). Audiovisual methods in teaching (3rd ed.). Orlando, FL: Holt, Rinehart and Winston, Inc.

- Dawes, L., Murray, M., & Rasmussen, G. (2005). Student experiential learning. In D. Radcliffe & J. Humphries (Eds.), Proceedings 4th ASEE/AaeE Global Colloquium on Engineering Education (pp. 1–11). Australia: The University of Queensland.

- Feisel, L., & Rosa, A.J. (2005). The role of the laboratory in undergraduate engineering education. Journal of Engineering Education, 94(1), 121–130. doi:10.1002/j.2168-9830.2005.tb00833.x

- Felder, R.M., & Brent, R. (2003). Designing and teaching courses to satisfy the ABET engineering criteria. Journal of Engineering Education, 92(1), 7–25. doi:10.1002/j.2168-9830.2003.tb00734.x

- Forster, A.M., Pilcher, N., Tennant, S., Murray, M., Craig, N., & Copping, A. (2017). The fall and rise of experiential construction and engineering education: Decoupling and recoupling practice and theory. Higher Education Pedagogies, 2(1), 79–100. doi:10.1080/23752696.2017.1338530

- Gamage, S.H.P.W., Ayres, J.R., Behrend, M.B., & Smith, E.J. (2019). Optimising Moodle quizzes for online assessments. International Journal of STEM Education, 6(1), 27. doi:10.1186/s40594-019-0181-4

- Gamez-Montero, P.J., Raush, G., Domenech, L., Castilla, R., Garcia-Vilchez, M., Moreno, H., & Carbo, A. (2015). Methodology for developing teaching activities and materials for use in fluid mechanics courses in undergraduate engineering programs. Journal of Technology and Science Education (JoTSE), 5(1), 16. doi:10.3926/jotse.135

- Gurses, A., Açıkyıldız, M., Doğar, Ç., & Sozbilir, M. (2007). An investigation into the effectiveness of problem-based learning in a physical chemistry laboratory course. Research in Science & Technological Education, 25(1), 99–113. doi:10.1080/02635140601053641

- Hair, J., Ringle, C., & Sarstedt, M. (2013). Partial least squares structural equation modeling: Rigorous applications, better results and higher acceptance. Long Range Planning, 46(1–2), 1–12. doi:10.1016/j.lrp.2013.08.016

- Henseler, J., Ringle, C.M., & Sinkovics, R.R. (2009). The use of partial least squares path modeling in international marketing. In R.R. Sinkovics & P.N. Ghauri (Eds.), New Challenges to International Marketing (Advances in International Marketing) (Vol. 20, pp. 277–319). doi:10.1108/S1474-7979(2009)0000020014

- Huilier, D. (2019). Forty years’ experience in teaching fluid mechanics at Strasbourg university. Fluids, 4(4), 199. doi:10.3390/fluids4040199

- Jaeger, M., & Adair, D. (2014). The influence of students’ interest, ability and personal situation on students’ perception of a problem-based learning environment. European Journal of Engineering Education, 39(1), 84–96. doi:10.1080/03043797.2013.833172

- Kolb, D. (1984). Experiential learning: Experience as the source of learning and development. Prentice-Hall.

- Kuh, G.D. (2008). Excerpt from high-impact educational practices: What they are, who has access to them, and why they matter. Association of American Colleges and Universities, 14(3), 28–29.

- Kuh, G., O’Donnell, K., & Schneider, C.G. (2017). HIPs at ten. Change: The Magazine of Higher Learning, 49(5), 8–16. doi:10.1080/00091383.2017.1366805

- Lal, S., Lucey, A.D., Lindsay, E.D., Sarukkalige, P.R., Mocerino, M., Treagust, D.F., & Zadnik, M.G. (2017). An alternative approach to student assessment for engineering–laboratory learning. Australasian Journal of Engineering Education, 22(2), 81–94. doi:10.1080/22054952.2018.1435202

- Landrum, B. (2020). Examining students’ confidence to learn online, self-regulation skills and perceptions of satisfaction and usefulness of online classes. Online Learning, 24. doi:10.24059/olj.v24i3.2066

- León, J., Núñez, J.L., & Liew, J. (2015). Self-determination and STEM education: Effects of autonomy, motivation, and self-regulated learning on high school math achievement. Learning and Individual Differences, 43, 156–163. doi:10.1016/j.lindif.2015.08.017

- Lindsay, E., Liu, D., Murray, S., & Lowe, D. (2007). Remote laboratories in engineering education: Trends in students’ perceptions. In Proceedings of the 18th Conference of the Australasian Association for Engineering Education, Melbourne, Australia. Australasian Association for Engineering Education.

- McClelland, C.J. (2013, June). Flipping a large-enrollment fluid mechanics course–is it effective? In 2013 ASEE Annual Conference & Exposition, Atlanta, Georgia, US (pp. 23–607).

- Meltzer, D.E., & Manivannan, K. (2002). Transforming the lecture-hall environment: The fully interactive physics lecture. American Journal of Physics, 70(6), 639–654. doi:10.1119/1.1463739

- Mooi, E., & Sarstedt, M. (2014). A concise guide to market research. The process, data and methods using IBM SPSS statistics. Springer Texts in Business and Economics. doi:10.1007/978-3-642-53965-7

- Peña-Fernández, A., Acosta, L., Fenoy, S., Magnet, A., Izquierdo, F., Bornay, F.J., … Del Aguila, C. (2020). Evaluation of a novel digital environment for learning medical parasitology. Higher Education Pedagogies, 5(1), 1–18. doi:10.1080/23752696.2019.1710549

- Rahman, A. (2016). A blended learning approach to teach fluid mechanics in engineering. European Journal of Engineering Education, 42, 1–8. doi:10.1080/03043797.2016.1153044

- Salas-Morera, L., Arauzo-Azofra, A., & García-Hernández, L. (2012). Analysis of online quizzes as a teaching and assessment tool. Journal of Technology and Science Education (JoTSE), 2(1), 7. doi:10.3926/jotse.30

- Selwyn, R., & Renaud-Assemat, I. (2020). Developing technical report writing skills in first and second year engineering students: A case study using self-reflection. Higher Education Pedagogies, 5(1), 19–29. doi:10.1080/23752696.2019.1710550

- Sokoloff, D.R. (Ed.). (2006). Introduction. In Active learning in optics and photonics: Training manual (pp. 1–13). Paris: UNESCO.

- Sokoloff, D.R., & Thornton, R.K. (1997). Using interactive lecture demonstrations to create an active learning environment. The Physics Teacher, 35(6), 340–347. doi:10.1119/1.2344715

- Thornton, R.K., & Sokoloff, D.R. (1998). Assessing student learning of Newton’s laws: The force and motion conceptual evaluation and the evaluation of active learning laboratory and lecture curricula. American Journal of Physics, 66(4), 338–352. doi:10.1119/1.18863

- Vial, P.J., Nikolic, S., Ros, M., Stirling, D., & Doulai, P. (2015). Using online and multimedia resources to enhance the student learning experience in a telecommunications laboratory within an Australian university. Australasian Journal of Engineering Education, 20(1), 71–80. doi:10.7158/D13-006.2015.20.1

- Wallihan, R., Smith, K.G., Hormann, M.D., Donthi, R.R., Boland, K., & Mahan, J.D. (2018). Utility of intermittent online quizzes as an early warning for residents at risk of failing the pediatric board certification examination. BMC Medical Education, 18(1), 287. doi:10.1186/s12909-018-1366-0

- Webster, D.R., Majerich, D.M., & Luo, J. (2014). Flippin’ fluid mechanics: Quasi-experimental pre-test and post-test comparison using two groups. https://ui.adsabs.harvard.edu/abs/2014APS.DFDM33005W

- Zimmerman, B.J. (2000). Attaining self-regulation: A social cognitive perspective. In Handbook of self-regulation (pp. 13–39). Elsevier.