ABSTRACT

Prior work has noted changes in musical cue use between the Classical and Romantic periods. Here we complement and extend musicological findings by blending score-based analyses with perceptual evaluations to provide new insight into this important issue. Participants listened to excerpts from either Bach’s The Well-Tempered Clavier or Chopin’s 24 Preludes – historically important sets drawn from distinct musical eras, with 12 major and 12 minor key pieces each. Participants selected one of five categories for each piece, adapted from previous musical analyses exploring historical changes in music’s cues. Combining participant classifications with score-extracted cues offers a useful way to complement and extend previous work exploring changes in the function of mode across musical eras based only on notational information. In doing so, we find evidence that changing associations of cues in the Romantic era influence judgments of affective meaning. This study provides a useful step toward bridging the divide between traditional approaches to musicology, music theory, and music perception by combining perceptual evaluations with cues extracted from musical scores to shed light on changes in musical emotion across eras.

Introduction

A small but growing body of empirical research seeks to understand how musical structure has changed across eras (Daniele & Patel, 2013; Harasim, Moss, Ramirez, & Rohrmeier, 2021). Recent empirical musicology work has explored the shifting use and associations of the Western major and minor modes (characteristic musical scales). These studies have found that mode’s associations with cues such as timing and loudness changed between the 1600s and 1900s (Ladinig & Huron, 2010; Post & Huron, 2009; Turner & Huron, 2008). Although these findings likely have important implications for theories of music’s emotional meaning, perceptual explorations of the issue are needed to clarify their implications for music listening. The present research addresses this disconnect by exploring how structural differences influence the perceived affective connotations of excerpts composed by two highly acclaimed composers.

In a recent study, Horn and Huron (2015) used cluster analysis to investigate changing patterns in mode’s acoustic associations across history. The authors coded timing, articulation, dynamics, and mode in a large corpus of pieces composed between 1750 and 1900. To clarify these changes, they summarized expressive patterns from cluster analyses of music in three 50-year epochs. After the cluster analysis, they assigned each cluster a descriptive label representing the affective quality evoked by patterns in cue use (Table 1), discovering changes in the prevalence of these patterns across music history.

Table 1. Cluster cues

Here we build on past work analyzing scores by combining score-based approaches with a behavioral task where participants selected labels drawn from those Horn and Huron (2015) used to demarcate cue clusters. Specifically, we build upon our team’s existing methods (Battcock & Schutz, 2019), adding a classification task for excerpts from keyboard pieces by Bach and Chopin to compare participants’ affective appraisals to musical analyses of timing, loudness, and mode. This exploratory research is a valuable step toward investigating the implications of important musicological findings within the context of experimental research on music perception. Additionally, it raises interesting questions about how compositions from different eras are evaluated by modern listeners – who have undoubtedly heard a wide range of musical styles.

Methods

Participants

The 60 non-musician participants in this experiment received $10 CAD as compensation for completing the experiment online through Prolific Academic (an online platform for recruiting participants and running experiments). We gathered demographic information and musical sophistication scores following the Goldsmiths Musical Sophistication Index (Müllensiefen, Gingras, Musil, & Stewart, 2014). Half of these participants (30) listened to Bach excerpts (13 female, 16 male, 1 non-binary), ranging in age from 18–70 (M = 24.6, SD = 9.76) with a mean musical sophistication of 61.97 (SD = 16.4). The other half (30) listened to Chopin excerpts (12 female, 18 male), ranging in age from 18–48 (1 not reported, M = 24.7, SD = 7.30) with a mean musical sophistication of 63.57 (SD = 12.82). On average participants scored in the 17th and 19th percentiles of the General Sophistication scale in the Goldsmiths Musical Sophistication Index (Müllensiefen et al., 2014). All participants reported normal hearing, normal/corrected-to-normal vision and identified as non-musicians. The experiment complied with McMaster University’s Research Ethics Board standards.

Materials

Participants listened to eight-measure excerpts from Bach’s The Well-Tempered Clavier and Chopin’s Preludes. These sets provide a balanced comparison as they both contain one piece in each major and minor key (24 pieces per set). As important musical landmarks, both have been recorded frequently. Therefore, we developed a method for identifying suitable exemplars of highly regarded interpretations. To achieve this goal, we first identified the five most frequently occurring performances of the complete set of pieces within the Naxos Music Library (NAXOS) and Classical Music Archives (CMA) databases (Heymann, 2019; Schwob, 2019). Specifically, we selected the first five performers from the results of the CMA search function for the works specified. Using NAXOS’s features we ranked each album’s performers by their number of entries in the database. Comparing both databases, we selected the highest-ranked performer appearing in both lists. Vladimir Ashkenazy (Chopin/Ashkenazy, 1993) emerged as our performer of choice for Chopin’s Preludes. As The Well-Tempered Clavier is identified by 48 individual entries (e.g., BWV 846) in the CMA database, we were unable to assess authoritative performances of the set as a whole. Consequently, we selected Pietro De Maria (Bach/De Maria, 2015) as the primary performer using NAXOS alone (for analysis of how performer interpretation can affect emotional communications see Battcock & Schutz, in press). We selected performers in November of 2019, and performance numbers and library tools may have shifted since.

We prepared excerpts of all 24 Preludes from each set, adding a two-second fade-out from the onset of the first note in measure nine in Amadeus Lite (HairerSoft, 2019). The excerpts spanned eight measures of each piece (including anacrusis/“pick-up” measures where applicable), roughly corresponding to a complete phrase in Baroque and Classical era compositions and building upon previous work on similar musical sets by including anacrusis measures and a performance-derived measure of attack rate (Poon & Schutz, 2015). We removed any silence before the first note onset.

Additionally, we used exemplar pieces from Horn and Huron (2015) to familiarize participants with the associated meaning for each label. These exemplars lasted for 30 seconds, including a five-second fade-out. The pieces come from a subset of solo keyboard-instrument pieces from Horn and Huron (2015), each chosen to represent its category (Table 2).

Table 2. Exemplar pieces

Cue Quantification

To measure timing, we encoded performer attack rate as the number of unique attacks (in the notation) divided by the duration of the excerpt (ignoring the two-second fade-out). This modifies the original method our group used for analyzing notation-based attack rate in scores (Schutz, 2017) to measure the specific tempi of the performances used in this experiment (ranging between 0.3 and 12.8 attacks per second, M = 5.4, SD = 2.9). We annotated mode according to each piece’s nominal key (major or minor).
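As a minimal sketch of this calculation, the Python below counts unique attacks and divides by the analyzed duration. The function names, the representation of onsets as score positions, and the example numbers are illustrative assumptions, not the encoding procedure used in the study.

```python
def count_unique_attacks(onset_positions):
    """Count distinct onset positions, so simultaneous notes (chords) count once."""
    return len(set(onset_positions))


def performer_attack_rate(n_unique_attacks, excerpt_duration_s, fade_out_s=2.0):
    """Attacks per second over the excerpt, ignoring the fade-out."""
    analysed_s = excerpt_duration_s - fade_out_s
    if analysed_s <= 0:
        raise ValueError("excerpt must be longer than the fade-out")
    return n_unique_attacks / analysed_s


# Hypothetical excerpt: onsets in beats; positions 0 and 1 each carry a chord.
onsets = [0, 0, 0.5, 1, 1, 1.5]
n_attacks = count_unique_attacks(onsets)
# Hypothetical recording: 22 s in total, of which the last 2 s are fade-out.
rate = performer_attack_rate(n_attacks, excerpt_duration_s=22.0)
print(n_attacks, rate)
```

Because the attack count comes from the notation while the duration comes from the recording, the same score yields different rates for performers who choose different tempi.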

We collected information on musical dynamics/intensity using the root-mean-square (RMS) amplitude values from mono-converted excerpt files in Audacity (The Audacity Team, 2000). The RMS amplitude values represent the effective signal amplitude of the entire waveform, excluding the two-second fade-out (ranging between −42.9 dB and −17.4 dB, M = −29.7, SD = 5.5). We analyzed intensity directly from audio files to more accurately account for situations where performers deviate from the dynamic markings in a score. RMS amplitude better controls for performer interpretation by measuring the average sound level of stimuli presented to participants.
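The measurement can be illustrated with a short Python sketch. It assumes samples are floats scaled to the range −1 to 1, averages two channels to obtain mono, and reports RMS in decibels relative to full scale. These choices and the function names are assumptions made for illustration; Audacity's own measurement may differ in detail.

```python
import math


def to_mono(left, right):
    """Average two channels sample by sample (one common mono conversion)."""
    return [(l + r) / 2.0 for l, r in zip(left, right)]


def rms_db(samples, sample_rate, fade_out_s=2.0):
    """RMS level in dB re full scale, excluding the trailing fade-out."""
    n_keep = len(samples) - int(round(fade_out_s * sample_rate))
    if n_keep <= 0:
        raise ValueError("excerpt must be longer than the fade-out")
    mean_square = sum(x * x for x in samples[:n_keep]) / n_keep
    if mean_square == 0:
        return float("-inf")  # digital silence
    # 10 * log10(mean square) equals 20 * log10(RMS)
    return 10.0 * math.log10(mean_square)


# Hypothetical toy signal: 3 s at constant amplitude 0.1, then a 2 s tail,
# at an artificially low sample rate of 10 Hz to keep the example small.
toy = [0.1] * 30 + [0.0] * 20
level = rms_db(toy, sample_rate=10)
print(round(level, 2))
```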

Procedure

Participants first followed on-screen instructions to rate valence (defined as “positive/negative emotional quality evoked by a piece”) and arousal (“the energy or intensity of the emotion”), based on widely recognized models (Russell, 1980). As these dimensions are not analyzed here, we refer the reader to Battcock and Schutz (2019) for further details. These values were primarily collected for future research and for consistency with previous methods (Battcock & Schutz, 2019, 2021). We asked participants to rate each piece’s conveyed emotions ignoring any subjective felt emotion and encouraged participants to use the entire range of each scale.

Next, we instructed participants to classify each piece, after rating its valence and arousal, using one of the five most common expressive categories from Horn and Huron (2015): Light/Effervescent, Joyful, Tender/Lyrical, Passionate, or Sad/Relaxed. We provided common descriptions and synonyms for each category (available at https://maplelab.net/emotion-descriptions/). Following these instructions participants heard 25-second (including a 5-second fade-out) exemplar piano pieces from selected labels within Horn and Huron’s (2015) cluster analysis findings (Table 2).

Participants then completed four practice trials, rating four randomized pieces (two major and two minor) from each respective test set (either Bach or Chopin excerpts). They rated each excerpt using the valence and arousal scales and classified each piece using one of the five labels. Participants then reviewed the experiment instructions a second time.

Following all instructions, participants listened to each of the 24 prelude excerpts in a randomized order, providing emotion ratings and selecting a category for each. After the participants rated all 24 excerpts, they completed a survey including the self-report questionnaire from the Goldsmiths Musical Sophistication Index along with a brief demographic survey prior to being debriefed (Müllensiefen et al., 2014). The experiment was hosted on Pavlovia.org servers, operating on PsychoPy3 (Peirce et al., 2019).

Results

Our first analysis explored differences in structural cues between composers, based on the most frequently chosen category for each piece. To compare the structural cues to those of Horn and Huron (2015), we used a median split to dichotomize our continuous values for attack rate and RMS amplitude, using each piece’s nominal key to classify mode. To account for variations between recording environments and sound engineers, we measured intensity relative to each set using normalized RMS amplitude values (converted to z-scores).
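The two transformations can be sketched in a few lines of Python. The text does not specify how values exactly at the median were handled, whether the split was computed within each set or across both, or which standard deviation was used for normalization; the sketch assumes values at the median fall in the lower group and uses the sample standard deviation. The function names and input values are hypothetical.

```python
from statistics import mean, median, stdev


def median_split(values):
    """Label each value 'high' if it exceeds the median, otherwise 'low'."""
    cut = median(values)
    return ["high" if v > cut else "low" for v in values]


def z_scores(values):
    """Standardize values within one set (mean 0, sample SD 1)."""
    m = mean(values)
    s = stdev(values)
    return [(v - m) / s for v in values]


# Hypothetical attack rates (attacks per second) for four excerpts.
print(median_split([1.0, 2.0, 3.0, 4.0]))

# Hypothetical RMS levels (dB) for three excerpts from a single set.
print(z_scores([-30.0, -25.0, -20.0]))
```

Standardizing within each set means a piece counts as loud or quiet relative to the other pieces on the same recording, rather than relative to the other composer's recording.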

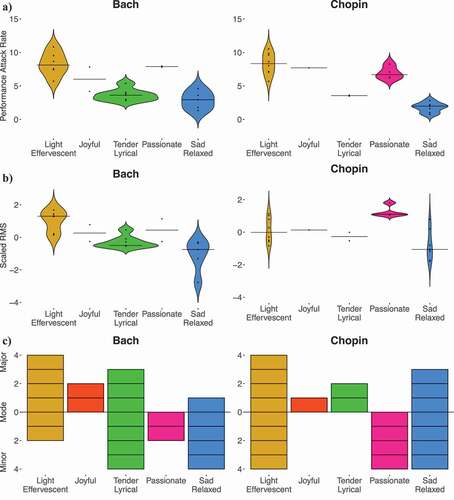

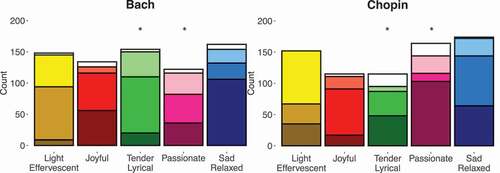

Because this method focuses on the most selected label for each piece, two pieces in each set produced ties. For Bach’s Eb-major prelude, participants selected Light/Effervescent, Tender/Lyrical, and Passionate labels with the same frequency (seven each). For Bach’s F-major prelude, participants selected Joyful and Passionate labels with the same frequency (nine each). For Chopin’s C-major prelude, participants selected Joyful and Tender/Lyrical equally (nine each). Finally, for Chopin’s Db-major prelude, participants selected Tender/Lyrical and Sad/Relaxed equally (11 each). As there is no clear way to establish the “best” category for a piece using the modal response when multiple categories yield the same number of ratings, we omitted these pieces from Figure 1, which assigns each piece to a single category (corresponding to the one most frequently chosen for it). We reintroduce them in Figure 2 and further analyses, which avoid “tie” scenarios by assessing category choices by participant, rather than by piece. Figure 1 highlights the cue information corresponding to each participant-selected category by summarizing the most representative pieces (44 of 48 total).
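The tie problem can be made concrete with a small Python sketch that returns every label sharing the highest count for a piece. The function name is hypothetical, and only the two tied counts of nine come from the text; the remaining twelve responses are invented filler so that the example totals 30 participants.

```python
from collections import Counter


def modal_labels(choices):
    """Return all labels tied for the highest count, sorted alphabetically."""
    counts = Counter(choices)
    top = max(counts.values())
    return sorted(label for label, n in counts.items() if n == top)


# Bach's F-major prelude: Joyful (J) and Passionate (P) were chosen nine times each.
# The split of the other 12 responses (LE, TL) is hypothetical.
f_major = ["J"] * 9 + ["P"] * 9 + ["LE"] * 7 + ["TL"] * 5
winners = modal_labels(f_major)
print(winners)                      # ['J', 'P']
print(len(winners) > 1)             # True: no single modal category
```

A piece with more than one entry in the returned list has no unique modal response, which is the condition under which pieces were left out of the by-piece summary.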

Figure 1. Comparing labeling and cues between composers

Figure 2. Comparing participant labeling between composers

Exploring Classifications

To explore connections with Horn and Huron (2015), we tallied how many of the dichotomized cues aligned between participants’ classifications and those authors’ descriptions of their acoustic characteristics. We called these Cue Alignment Values (CAVs). For example, participants hearing Chopin’s A-major prelude (slow, quiet, major) most frequently chose the label of Sad/Relaxed (SR). As two of the three analyzed acoustic features are consistent with the Horn and Huron (2015) labels (Table 1), we assign the piece a CAV of two. Possible values range from zero (no cues matching those authors’ descriptions) to three (all cues matching), distinguished by the color gradient of each column in the accompanying figure. A piece labeled as Joyful with a CAV of three would be dark red, whereas a CAV of zero is always represented by white.
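A CAV is simply a count of matching cues, as the following Python sketch shows. The profiles for Passionate and Tender/Lyrical follow the descriptions given in the Discussion; the Sad/Relaxed profile (slow, quiet, minor) is an assumption inferred from the worked example above, and the dictionary layout and function name are hypothetical.

```python
# Reference cue profiles per label. P and TL follow the text of this article;
# SR is an assumption inferred from the A-major example (CAV of two).
REFERENCE_PROFILES = {
    "P": {"tempo": "fast", "loudness": "loud", "mode": "minor"},
    "TL": {"tempo": "slow", "loudness": "quiet", "mode": "major"},
    "SR": {"tempo": "slow", "loudness": "quiet", "mode": "minor"},
}

CUES = ("tempo", "loudness", "mode")


def cue_alignment_value(piece_cues, label, profiles=REFERENCE_PROFILES):
    """Count how many of the three dichotomized cues match the label's profile."""
    reference = profiles[label]
    return sum(piece_cues[cue] == reference[cue] for cue in CUES)


# Chopin's A-major prelude as described in the text: slow, quiet, major.
a_major = {"tempo": "slow", "loudness": "quiet", "mode": "major"}
print(cue_alignment_value(a_major, "SR"))  # 2 (tempo and loudness match, mode does not)
```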

Chi-squared tests of independence comparing labeling between groups afford tests of differences in the selection of each category: Light/Effervescent (LE), Joyful (J), Tender/Lyrical (TL), Passionate (P), or Sad/Relaxed (SR). We applied this test to each participant’s classification of each piece (24 pieces × 30 participants = 720 ratings per set).

Assessing ratings across the labels chosen by each participant (1440 ratings in total) revealed a significant relationship between composer set and participant labeling, χ²(4, N = 60) = 13.75, p < .01. With these data, we compared labels directly using chi-squared tests with Yates’s continuity correction: LE, χ²(1, N = 60) = 0.04, p > .05; J, χ²(1, N = 60) = 1.57, p > .05; TL, χ²(1, N = 60) = 6.60, p < .05; P, χ²(1, N = 60) = 7.33, p < .05; and SR, χ²(1, N = 60) = 0.47, p > .05. We found that participants used the label Tender/Lyrical significantly more for Bach’s pieces (p < .05), and the label Passionate significantly more for Chopin’s pieces (p < .05).
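To illustrate the structure of these tests, the Python sketch below computes the chi-squared statistic for a composer-by-label table and then for a single label against all others, with an optional Yates continuity correction. The label counts are invented for illustration (only the row totals of 720 come from the text), so the printed statistics do not reproduce the values reported above. The function names are hypothetical and this is not the authors' analysis code.

```python
def chi_squared(table, yates=False):
    """Return (statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(observed - expected)
            if yates:
                # Continuity correction: shrink each deviation by 0.5, not below 0.
                diff = max(0.0, diff - 0.5)
            stat += diff * diff / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def one_vs_rest(table, col):
    """Collapse a table to 2 columns: the chosen label versus all other labels."""
    return [[row[col], sum(row) - row[col]] for row in table]


labels = ["LE", "J", "TL", "P", "SR"]
# Hypothetical counts; each row sums to 720 ratings (24 pieces x 30 participants).
counts = [
    [150, 140, 190, 110, 130],  # Bach
    [148, 155, 150, 150, 117],  # Chopin
]

overall_stat, overall_dof = chi_squared(counts)
print(overall_dof, round(overall_stat, 2))

for k, label in enumerate(labels):
    stat, dof = chi_squared(one_vs_rest(counts, k), yates=True)
    print(label, dof, round(stat, 2))
```

The overall test has four degrees of freedom (two composers by five labels), and each follow-up comparison has one, matching the degrees of freedom reported in the text.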

Discussion

Our findings expand recent interest in the similarities and differences in musical communication across history. Specifically, we connect the study of musical cues with their affective/expressive implications, providing insights available only through historically diverse corpora containing real music by highly regarded composers (rather than controlled stimuli created for research purposes). For example, we found the relationship between musical cues and classification categories to be broadly consistent between Bach and Chopin’s music. Furthermore, the relative medians of attack rate and RMS amplitude are very similar between Bach and Chopin for four of five semantic classifications (Joyful, Tender/Lyrical, Passionate, Sad/Relaxed), mainly differing in shape based on different frequencies of label usage. This implies that certain musical cues lead to similar perceptual consequences for evaluations by modern listeners, especially as individual interpretation can shape evaluations of this music (Battcock & Schutz, 2021). However, mode exerts a less consistent influence on participants’ classifications (e.g., Light/Effervescent).

Indications of Differences between Sets by Bach and Chopin

Participants listening to Chopin used more Passionate (P) labels, whereas those listening to Bach used more Tender/Lyrical (TL) labels. The differing prevalence of P and TL pieces may stem from their contrasting cue relationships: whereas P is characterized by loud dynamics, fast tempi, and the minor mode, TL is characterized by soft dynamics, slow tempi, and the major mode (Table 1). These diametrically opposed cue patterns offer a perceptual link to previous work indicating mode’s shifting expressive use over time (Horn & Huron, 2015; Schellenberg & von Scheve, 2012). Although both sets examined here contain equal proportions of major and minor pieces, our findings show a greater number of P classifications in Chopin’s set. We also see a difference in the prevalence of TL-classified pieces between composers, as well as in mode’s role in distinguishing the category (i.e., Bach’s TL-classified pieces are split between major and minor pieces whereas Chopin’s are exclusively major). These findings hint at differences in how the composers use mode – which appears to exert a stronger influence on participants’ classifications for Chopin. For example, in Chopin three of the five categories contain only a single mode (Joyful [J], TL, P), whereas in Bach only a single category (J) exhibits uniformity with respect to mode. This could indicate participants relied more consistently on mode when labeling Chopin’s pieces, suggesting cue patterns idiosyncratic to the Romantic era may be more familiar for modern listeners.

Participants’ classifications of the pieces also reflect mode’s changing importance in distinguishing categories between composers. Although both sets contained equal numbers of major and minor pieces, categories predominately containing major pieces, such as Light/Effervescent (LE), make up 60% of Bach’s set, but only 53% of Chopin’s set. This is consistent with evidence regarding a change in mode’s normative expressive function. Fast tempi and the major mode distinguish both J and LE pieces. However, in Chopin’s set participants perceive a greater proportion of minor pieces belonging to the latter category. In contrast, whereas the TL category predominately comprises minor pieces for Bach, for Chopin it exclusively comprises major pieces. This substitution of fast, loud major pieces (LE) for slow, soft ones (TL) in Chopin’s music is intriguing in light of previous findings suggesting an increase in the prevalence of fast/loud/minor and soft/slow/major expressive cue patterns in the Romantic era (Horn & Huron, 2015).

Differences in participants’ use of labels for Bach and Chopin also elucidate the perceptual consequence of mode’s shifting relationship to loudness and timing. For example, mode more clearly conveys “tenderness” and “passion” in Chopin’s music. Although we use a measure of musical intensity from commercially available recordings using modern instruments, we suspect these differences may reflect changes in instrument design during the Romantic era. Namely, innovations to the modern piano in the 1800s provided Chopin greater opportunity to compose softer and louder pieces (Burkholder, Grout, & Palisca, 2006). Consequently, the greater prevalence of the P category and mode’s clearer role in TL pieces suggest Chopin could take advantage of these affordances to target specific cues which convey nuanced affective meaning more clearly.

Comparisons to Previous Notational Analyses

When comparing category prevalence in the current work to Horn and Huron’s (2015) cluster descriptions, we find that the significant increase in P labels is consistent with the noted increase of P pieces between eras in their study. We also found that the attack rate values of each category align with their interpretation of each cluster. LE, J, and P have the highest attack rate values, whereas TL and Sad/Relaxed (SR) have the lowest, consistent with those authors’ findings on how tempo distinguishes clusters. We also observe differences from Horn and Huron’s (2015) findings. Tender/Lyrical (TL) (yielding a significant difference between composers) is less prominent in Chopin’s set than Bach’s. This differs from their findings, which indicate an increase in TL pieces during the Romantic era. Participants’ similar use of LE, J, and SR also contrasts with Horn and Huron (2015), which shows clear differences in each cluster’s prevalence between eras, potentially stemming from differences in statistical power relating to the much smaller sampling of composers and pieces in the present study.

Our findings also indicate some differences from other studies on mode’s changing expressive role between eras. Empirical musicologists note that major pieces become relatively quieter with slower tempi compared to their minor counterparts from the Classical era to the Romantic era (Ladinig & Huron, 2010; Post & Huron, 2009). In our work we find that Chopin’s predominately minor categories (P, SR) are neither louder nor faster than those which are predominately major (LE, J, TL), nor are they louder or faster than Bach’s major and minor categories. Other research finds minor pieces are generally associated with soft intensity and slow tempi, which we did not find in our study (Turner & Huron, 2008). Instead, it appears more subtle relationships between cues convey meaningful expressive differences across both modes (Anderson & Schutz, in press). Together, these differences may suggest the clear function of mode, timing and loudness observed in notation-based analyses may not capture how listeners derive meaning from the interplay of musical cues in real listening contexts.

Limitations and Future Directions

Using real compositions as stimuli provides more ecological validity yet comes with inherent challenges. Although we believe Bach and Chopin are suitable exemplars to estimate expressive differences between musical eras, they are clearly a small subset of the 330 composers considered by Horn and Huron (2015), and it remains to be seen whether our findings would generalize to other Baroque and Classical era composers. Nonetheless, the historical importance of these sets makes them strong candidates for this exploratory comparison, and future research building on this approach could offer better generalizability. Additionally, this approach of using excerpts of widely renowned compositions offers a useful complement to more traditional studies using stimuli created for research that provide fine-grained control, but lack a relationship to authentic music listening experiences (Dalla Bella, Peretz, Rousseau, & Gosselin, 2001; Kastner & Crowder, 1990). Combining different methods and approaches is necessary for developing a more comprehensive understanding of music’s historic differences. Finally, although we suspect many of the world’s musical traditions have changed over time, our data here are limited only to notated music within the Western tradition. Further research might explore stylistic shifts in music outside the Western tradition (preserved through notation or oral transmission) using suitable analytical techniques and expertise to advance an understanding of music’s historic changes at large.

In the future, it could be beneficial to explore other methods of classification as Horn and Huron (2015) derived this terminology from the groupings of cues in their cluster analysis. Our use of these terms in a perceptual experiment provides a starting point for building connections but can be improved upon in the future. Additionally, further exploration of the cue alignment values holds the potential for shedding more light on musical cues’ expressive roles. We may also expand this line of research to include musicians. Although this study focused on non-musicians for more generalizable findings about music’s emotional connotations, the effect of musicianship should be explored in future work (see Battcock & Schutz, 2021 for a summary of differing emotional connotations arising from musical training).

Conclusion

Our results provide a novel extension of previous work exploring differences in the classification of music from different historical eras. Specifically, we build on previous score-based analyses (focused on cues alone) by combining score-based analyses with perceptual classifications of emotion. Our results both connect and contrast with previous findings. For example, attack rate (tempo) and RMS amplitude (intensity) distinguished Bach and Chopin’s pieces similarly, suggesting that participants interpret specific cue patterns consistently between the sets. Our findings on how participants’ classifications relate to mode indicate the composers used mode to express emotion differently, and we discover valuable nuance in how participants interpret the major and minor modes between composers. Finally, the significant differences in participants’ use of Passionate and Tender/Lyrical labels between Bach and Chopin provide further insights into how participants use mode to classify pieces composed in different eras. These findings follow previous ideas of the compositional use of mode shifting throughout history (Horn & Huron, 2015).

Despite methodological and sample size differences between our study and Horn and Huron (2015), we find subtle but important connections to their work, including suggestions of perceptual classifications of emotion aligning with some of the same cue relationships reported previously. This holds important implications for experimental approaches to music cognition using stimuli constructed for research purposes, which often presuppose generalized, monolithic responses to cues. The present findings suggest even basic low-level cues might convey different emotional messages to listeners depending upon other contextual factors – such as stylistic nuances consistent with a particular musical era. They also provide perceptual context to previous research indicating broad structural shifts across musical eras (Daniele & Patel, 2013; Horn & Huron, 2015). Along with relationships found between participant classification and use of musical cues, the findings presented here help merge historical, notational, and perceptual studies of music – providing a valuable steppingstone toward understanding how music communicates meaning differently across eras.

Acknowledgements

We would like to thank Katelyn Horn and David Huron for sharing their dataset. We would also like to thank Max Delle Grazie for encoding timing information analyzed in the present work.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

References

- Anderson, C. J., & Schutz, M. (in press). Exploring Historic Changes in Musical Communication: Deconstructing Emotional Cues in Preludes by Bach and Chopin. Psychology of Music.

- Ashkenazy, V. (1993). Chopin, 24 preludes, Op.28. [CD]. London, UK: DECCA.

- The Audacity Team. (2000). Audacity.

- Battcock, A., & Schutz, M. (2019). Acoustically expressing affect. Music Perception, 37(1), 66–91.

- Battcock, A., & Schutz, M. (2021). Emotion and expertise: How listeners with formal music training use cues to perceive emotion. Psychological Research, 1–21. https://doi.org/10.1007/s00426-020-01467-1

- Battcock, A., & Schutz, M. (in press). Individualized interpretation: Exploring structural and interpretive effects on evaluations of emotional content in Bach’s Well-Tempered Clavier. Journal of New Music Research.

- Burkholder, J. P., Grout, D. J., & Palisca, C. V. (2006). A History of Western Music - International Student Edition (Tenth ed.). New York, NY: W.W. Norton & Company.

- Dalla Bella, S., Peretz, I., Rousseau, L., & Gosselin, N. (2001). A developmental study of the affective value of tempo and mode in music. Cognition, 80(3), B1–B10.

- Daniele, J. R., & Patel, A. D. (2013). An empirical study of historical patterns in musical rhythm: Analysis of German & Italian classical music using the nPVI equation. Music Perception: An Interdisciplinary Journal, 31(1), 10–18.

- De Maria, P. (2015). Bach, The Well-Tempered Clavier-Book 1 [CD]. London, UK: DECCA.

- HairerSoft. (2019). Amadeus Lite. London, UK: HairerSoft.

- Harasim, D., Moss, F. C., Ramirez, M., & Rohrmeier, M. (2021). Exploring the foundations of tonality: Statistical cognitive modeling of modes in the history of Western classical music. Humanities and Social Sciences Communications, 8(1), 1–11.

- Heymann, K. (2019). Naxos Music Library. Naxos Digital Services; Hong Kong. https://mcmaster-naxosmusiclibrary-com.libaccess.lib.mcmaster.ca/homepage.asp

- Horn, K., & Huron, D. (2015). On the changing use of the major and minor modes 1750–1900. Music Theory Online, 21(1), 1–11.

- Kastner, M. P., & Crowder, R. G. (1990). Perception of the major/minor distinction: IV. Emotional connotations in young children. Music Perception: An Interdisciplinary Journal, 8(2), 189–202.

- Ladinig, O., & Huron, D. (2010). Dynamic levels in classical and romantic keyboard music: Effect of musical mode. Empirical Musicology Review, 5(2), 51–56.

- Müllensiefen, D., Gingras, B., Musil, J., & Stewart, L. (2014). The musicality of non-musicians: An index for assessing musical sophistication in the general population. PLoS ONE, 9(2), 2.

- Peirce, J. W., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., … Lindeløv, J. K. (2019). PsychoPy2: Experiments in behavior made easy. Behavior Research Methods, 51(1), 195–203.

- Poon, M., & Schutz, M. (2015). Cueing musical emotions: An empirical analysis of 24-piece sets by Bach and Chopin documents parallels with emotional speech. Frontiers in Psychology, 6(November), 1–13.

- Post, O., & Huron, D. (2009). Western classical music in the minor mode is slower (except in the romantic period). Empirical Musicology Review, 4(1), 2–10.

- Russell, J. A. (1980). A Circumplex Model of Affect. Journal of Personality and Social Psychology, 39(6), 1161–1178.

- Schellenberg, E. G., & von Scheve, C. (2012). Emotional cues in American popular music: Five decades of the top 40. Psychology of Aesthetics, Creativity, and the Arts, 6(3), 196–204.

- Schutz, M. (2017). Acoustic constraints and musical consequences: Exploring composers’ use of cues for musical emotion. Frontiers in Psychology, 8, 1–10.

- Schwob, P. (2019). Classical Archives. Classical Archives LLC. https://www.classicalarchives.com/

- Turner, B., & Huron, D. (2008). A comparison of dynamics in major- and minor-key works. Empirical Musicology Review, 3(2), 64–68.