Abstract

Experience with simulated patients supports undergraduate learning of medical consultation skills. Adaptive simulations are being introduced into this environment. The authors investigate whether they can underpin valid and reliable assessment by conducting a generalizability analysis using IT data analytics from the interaction of medical students (in psychiatry) with adaptive simulations, in order to explore the feasibility of adaptive simulations for supporting automated learning and assessment. The generalizability (G) study focused on two clinically relevant variables: clinical decision points and communication skills. While the G study on the communication skills score yielded low levels of true score variance, the decision points, which indicate clinical decision-making and confirm user knowledge of the process of the Calgary–Cambridge model of consultation, produced reliability levels similar to what might be expected with rater-based scoring. The findings indicate that adaptive simulations have potential as a teaching and assessment tool for medical consultations.

Introduction

Communication is recognized as a core skill for doctors and is key to transforming clinical knowledge into practice; as such, it is an essential component of clinical competence.Citation1,Citation2 Yet, despite the knowledge that doctor–patient communication is pivotal in determining positive outcomes from consultations, the most common mistakes reported to the Irish Medical Council (Irish Medical Council Conference, 2012) and the American Medical Association are still ones of communication (rather than failures of knowledge). Consultation training serves to model the important paradigm shifts that need to be enacted by doctors as they develop approaches to patient-centered consultation.Citation1 Intensive experience is generally assumed to produce more favorable learning outcomes, but recent research suggests that assessment can be a more powerful driver of student learning, depending on instructional format.Citation3–Citation5 Knowledge-based instruction alone may not be sufficient for imparting communication skills in medical education, but when aligned with pedagogically sound teaching delivery, practice with simulated patients and contextualized assessment may have a higher impact on student performance.Citation6

Medical education during the past decade has witnessed a significant increase in the use of simulation technology for teaching and assessment.Citation7,Citation8 Adaptive simulations and personalized learning are becoming an innovative feature of international medical schools.Citation9 Adaptive simulation is a learning environment that more deeply explores and exploits the potential synergy between simulations and intelligent tutoring systems. In an adaptive simulation, the simulation environment is not fixed but rather can be modified (or adapted) by the lecturer for optimal pedagogical effect.Citation10 The simulations can also adapt to the student as they engage in real time with the tasks and scenario presented to them. The adaptive simulation consists of different pathways and triggers for personalized feedback to the student depending on their progress, self-assessment, and scores. The interactive nature of adaptive simulations puts an onus on the learner to take more ownership of their learning experience and develop their knowledge and skills through active engagement, thus modeling experiential learning through appropriate sequencing and repeated practice in a safe simulated environment. The situational contexts can be adapted to the relevant skills and learning required (eg, prior knowledge, level of complexity, anatomical system, and subject domain).Citation11

Adaptive simulation technology affords educators and instructional designers a powerful tool for sustaining knowledge retention and transfer.Citation12–Citation15 These are important learning activities for working memory, learning, and assessment.Citation16 The literature suggests that adaptive simulations may hold potential for supporting student learning and staff teaching of medical consultation skills.Citation16–Citation18 However, research is required to explore how best to design adaptive simulations that produce valid performance assessment data and provide useful feedback for informing medical student learning and educational applications.

Cognitive learning of communication skills is optimally coupled with reflection.Citation19 The simulated environment can provide safe consistent real-world scenarios for students to engage with experiential learning based on case histories.Citation20 Experiential learning afforded by adaptive simulations can provide the learner with a rich opportunity to construct a new schema and build upon prior knowledge. In addition, empirical evidence suggests that the use of simulations significantly enhances knowledge transfer in students compared with traditional classroom delivery methods in medical and other health professional curricula.Citation8,Citation21–Citation23 Technology-enhanced learning (TEL) may contribute added value due to the administration of a flexible (any time, any place) educational experience by increasing the number of medical students undertaking continuous assessment (undergraduates) and continuous professional development (postgraduates). Technology may also contribute to efficiency with automated scoring.Citation19 TEL and simulations appear to deliver a positive learning experience, but the literature is not so strong on whether simulation can deliver valid and reliable assessment.Citation16

The generalizability (G) study used in this research employed existing data to determine whether adaptive simulation can produce a valid and reliable measure of undergraduate medical students’ consultation skills. The G study tested two variables regarding their potential to support automated self-assessment and practice of undergraduate medical students’ consultation skills.

Two models that describe the ways that students can learn from experiences are single-loop and double-loop learning.Citation20,Citation21 Single-loop learning involves connecting a strategy for action with a result. Double-loop (deep) learning arises when the outcome not only alters students’ decisions within the context of existing frames but also feeds back to alter their mental models.Citation20 Therefore, it is crucial that the learning environment provides students with necessary scaffolding (eg, personalized feedback, expert coaching, and opportunities for reflection) to facilitate self-regulated learning (SRL) through triggers (eg, thinking prompts and appropriate learning hints at critical incidents) to augment their metacognitive strategies in the consultation process – this is afforded by adaptive simulations.Citation14

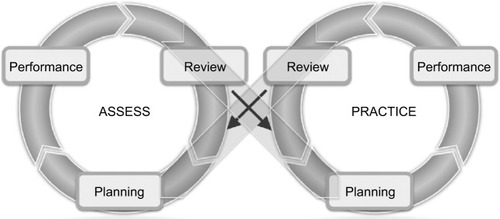

The adaptive simulation platform supports repeated iterations through linked assess–practice cycles by the student to facilitate their double-loop learning (Figure 1). During the assess loop, students are prompted to reflect on and review their performance through automated scores and personalized feedback. They are also prompted to plan how they will improve their performance through targeted practice. While in the “assess” mode, students can measure their own process, knowledge, skills, and behaviors in comparison to:

- Benchmarks set by lecturers

- Self-indicated confidence in their abilities

- Cumulative scores from their previous “assess” mode attempts.

Figure 1 Double-loop learning in the adaptive simulations platform.

During the practice loop, students review repeated iterations of practice performance, with triggers and feedback from a coach, whereupon they can adapt their practice and master their skills and behaviors before repeating the “assess” loop (and subsequent “practice” loops) until they achieve optimum performance.Citation21 Students can engage repeatedly to:

- Practice and improve targeted areas of the scenario and processes

- Gain broader knowledge of the scenario and knowledge domain

- Hone associated skills and behaviors.

Both modes are linked, and the study draws its quantitative data from the platform’s IT data analytics for these two modes. Repeated iterations of self-assessment and practice attempts help the learner to master the skills and behaviors required for their optimum performance.

Objectives

The adaptive simulation platform (called SkillSims) allowed the students to self-assess, practice, and master their skills through immersive video-based simulations using double-loop learning (Figure 1).Citation10,Citation24 The scenarios used were psychiatric consultations with two patients, portraying mania and depression, respectively. The student assumed the role of the doctor and could choose (at decision points) what the doctor should say and how they should say it, exhibiting various communication skills (optimal and not) and accumulating scores as they progressed. “Practice” mode allowed the students to freely explore the simulation with coaching interventions pointing out more accepted/“optimal” and more challenging/“sub-optimal” ways of handling different situations. Students received personalized feedback via the data analytics (tracking user performance) and a subject expert feedback rating, delivered in a personalized manner, that is, based on students’ individual performance scores, also using the data analytics.Citation18,Citation16 Students were scored in “assess” mode but not in “practice” mode.

“Assess” mode measured students’ performance in terms of IT data analytics. Each decision and ensuing path (ie, communication skills) made by the student was scored and accumulated. At the end of the session, the student and the expert rater could review the performance and associated feedback from a performance dashboard. The interactive nature of the adaptive simulations involved the student in the application of prior knowledge, thus stimulating recall. Continuous practice in the safe and controlled environment with real-time feedback and coaching allowed the student to practice, tune, and refine their skills and behaviors. Automated and individualized feedback was based on the responses and actions of the student in the simulation. The inclusion of rich multimedia (eg, video, audio, formative quizzes, and other multimedia features) appealed to multiple learning modalities and contributed to motivation and face validity of the learning experience.Citation18 By linking the self-assessment and practice activities, the students could measure, compare, and align their confidence with their competence, with the aim of becoming better self-regulated learners.

Calculating scores for expert raters

The expert rater of the psychiatry simulations used a scoring system that encompassed knowledge of the consultation process and communication skills in the students’ performance, as they pertain to the Calgary–Cambridge model of the consultation interview.Citation25 These scores for decision points and communication skills were examined as part of this G study.

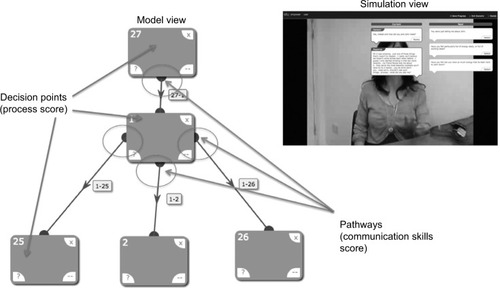

Students are presented with dialogue options, pre-scripted by the expert rater using an authoring tool within SkillSims™, which form multiple branching dialogue trees (). Each branch consists of a decision point and a pathway (between decision points). Both the decision point and the pathway were assigned a score, based on how well that branch demonstrated the communication skill competencies required (within the Calgary–Cambridge model) when compared with the other branching pathway options available.Citation16–Citation19,Citation25

A decision point consists of a statement/action (option the student can choose) and a response (the simulation reaction to the student choice) from the patient across the stages of the consultation process, and optionally a coach to give the student feedback in practice mode. This is the measurement of knowledge of the consultation process.

A pathway is defined as the connections (path) between the decision points. This is the assessment of communication skills that is possible in this adaptive simulation environment, expressed as the communication skill score.

Knowledge of the consultation process refers to the ability to correctly follow the steps of a predetermined framework for consultation, the Calgary–Cambridge Guide (CCG). For the purposes of this simulation, students were assessed on their steps through a decision pathway (ie, decision-making) and the requisite communication skills, which followed the CCG for medical consultation.Citation25 The stages of the consultation process were connected to decision points in the consultation as they pertained to the five stages of the CCG. For each stage in the simulation, decision points and communication skills were scored. Typically, a low score was produced if a student prematurely proceeded from one section to another or skipped a section entirely (according to expert judgment). The decision-making scores are cumulative, with the final score being presented to the student once they have finished the simulation.
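The branching structure described above can be illustrated with a minimal sketch. It is not the SkillSims implementation: the class names, stage labels, dialogue texts, and score values are all hypothetical, chosen only to show how a decision-point score and a pathway (communication skill) score could accumulate as a student moves through a dialogue tree, and how skipping a stage lowers the total.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Option:
    """One dialogue option at a decision point (all values are hypothetical)."""
    text: str
    decision_score: int        # credit for following the consultation process
    pathway_score: int         # credit for the communication skill on this path
    next_point: Optional[str]  # id of the next decision point, None ends the session


@dataclass
class DecisionPoint:
    """A decision point tied to an (illustrative) consultation stage."""
    stage: str
    options: List[Option] = field(default_factory=list)


def run_session(tree: Dict[str, DecisionPoint], start: str,
                choices: List[int]) -> Tuple[int, int]:
    """Follow the chosen option indices and accumulate both scores."""
    decision_total = 0
    pathway_total = 0
    point_id: Optional[str] = start
    for choice in choices:
        if point_id is None:  # the consultation has already ended
            break
        option = tree[point_id].options[choice]
        decision_total += option.decision_score
        pathway_total += option.pathway_score
        point_id = option.next_point
    return decision_total, pathway_total


# Hypothetical three-stage tree; a real scenario would cover every stage.
TREE: Dict[str, DecisionPoint] = {
    "initiate": DecisionPoint("initiating the session", [
        Option("Introduce yourself and ask an open question", 2, 2, "gather"),
        Option("Move straight to a management plan", 0, 0, "close"),
    ]),
    "gather": DecisionPoint("gathering information", [
        Option("Explore the patient's concerns", 2, 1, "close"),
        Option("Interrupt with closed questions", 1, 0, "close"),
    ]),
    "close": DecisionPoint("closing the session", [
        Option("Summarise and agree next steps", 2, 2, None),
    ]),
}

full_path = run_session(TREE, "initiate", [0, 0, 0])
skipped_stage = run_session(TREE, "initiate", [1, 0])
print("full path:", full_path, "skipped stage:", skipped_stage)
```

In this toy tree, the student who skips the information-gathering stage ends with lower totals on both scores than the student who follows every stage, which mirrors the low scores described for premature progression.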

Method

The objective of the research was to explore whether adaptive simulations can produce a valid and reliable measure of undergraduate medical students’ consultation skills. A generalizability analysis was conducted on the IT data analytics collected from the first use of the SkillSims software, obtained when undergraduate medical students (psychiatry module) at Trinity College Dublin interacted with two adaptive simulations as a mandatory part of communication skills training (using the Calgary–Cambridge model).Citation18

For the purposes of this study, we looked at the scoring mechanisms of these two adaptive simulations to investigate validity and reliability of scoring. The data analytics were provided by the research team who conducted an initial study in 2012 as part of an EU ImREAL project to carry out research and development in the field of experiential virtual training.Citation16,Citation24,Citation26 Their research on motivation and SRL indicated a positive effect on learning motivation and perceived performance with consistently good usability.Citation14,Citation16,Citation18,Citation26

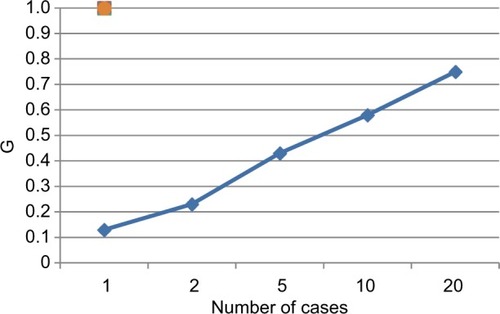

A simple person-by-case (P×C) G study using existing data from these two cases was used to examine variance components. The variance component estimates from the G study are presented in Table 1. The G study was conducted on a data set (n=129) for the score variable (y-axis in ), indicating knowledge of process and skills.

Table 1 Generalizability (G) study on “score” using a person-crossed-with-cases randomized model

Communication skill scores did not produce a reliable measure of communication ability for these students. However, a G study of the decision-point variable did perform reasonably well and provided evidence of substantial true score variance. The G coefficient for two cases was 0.23, which is consistent with other performance assessment results. In summary, the automated scores using the decision-point variable are similar to those obtained with rater-based scores. The results produced by the G study on the decision-point variable demonstrated that it could be used as an indicator of knowledge of the process of Calgary–Cambridge consultation skills.
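As an illustration of how variance components and a G coefficient are obtained in a fully crossed person-by-case design with one score per cell, the following sketch uses the standard expected-mean-squares estimators. The score matrix is invented for the example and is not the study data; the function names are assumptions, and negative variance estimates are truncated to zero, which is a common convention rather than something stated in the article.

```python
from typing import Dict, List


def g_study(scores: List[List[float]]) -> Dict[str, float]:
    """Estimate variance components for a persons x cases crossed design.

    `scores` holds one row per person and one column per case.
    """
    n_p = len(scores)
    n_c = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n_p * n_c)
    person_means = [sum(row) / n_c for row in scores]
    case_means = [sum(row[j] for row in scores) / n_p for j in range(n_c)]

    # Mean squares for persons, cases, and the residual (interaction + error).
    ms_p = n_c * sum((m - grand) ** 2 for m in person_means) / (n_p - 1)
    ms_c = n_p * sum((m - grand) ** 2 for m in case_means) / (n_c - 1)
    ss_res = sum(
        (scores[i][j] - person_means[i] - case_means[j] + grand) ** 2
        for i in range(n_p)
        for j in range(n_c)
    )
    ms_res = ss_res / ((n_p - 1) * (n_c - 1))

    # Solve the expected-mean-square equations; truncate negatives to zero.
    return {
        "person": max((ms_p - ms_res) / n_c, 0.0),
        "case": max((ms_c - ms_res) / n_p, 0.0),
        "residual": ms_res,
    }


def g_coefficient(components: Dict[str, float], n_cases: int) -> float:
    """Relative G coefficient for a score averaged over `n_cases` cases."""
    var_p = components["person"]
    denominator = var_p + components["residual"] / n_cases
    return var_p / denominator if denominator > 0 else 0.0


# Hypothetical scores: four students (rows) on two cases (columns).
example_scores = [[10, 12], [14, 15], [8, 11], [16, 18]]
components = g_study(example_scores)
g_two_cases = g_coefficient(components, n_cases=2)
print(components, round(g_two_cases, 3))
```

The person component is the true score variance referred to in the text; the G coefficient rises as that component grows relative to the residual divided by the number of cases.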

Discussion

Assessment in medical education addresses complex competencies and thus requires quantitative and qualitative information from different sources as well as professional judgment from an expert rater.

Despite recognition that assessment drives learning, this relationship is challenging to research, one reason being its strong contextual dependence. There is increasing interest in assessment for, rather than of, learning, which adaptive simulation exemplifies.Citation3

This research study was conducted by an interdisciplinary team (medical education, technology-enhanced learning, adaptive simulation, and psychometric measurement) to investigate whether it is possible to demonstrate comparable generalizability and performance to real-world raters. A key objective was to investigate the reliability between cases and explore a scoring method used in a self-scoring branching computer-based simulation (adaptive simulation) that aims to teach and assess the application of Calgary–Cambridge principles for undergraduate medical students. It provides an intermediate step in the learning process of conducting a patient interview. Specifically, it assesses the ability to recognize and apply Calgary–Cambridge concepts within the early stages of doctor–patient dialogue. A key objective of this study was to demonstrate the useful variance between subjects across cases.

These promising results from the analysis of initial data inform what we know about the scoring algorithm and prepare the next stage for a future study in the area of psychometric scoring in the assessment of adaptive simulations. Future research will require consideration around the ideal number of raters (n) to arrive at consensus of an accepted score (using the CCG approach) and the ideal number of different simulations to achieve adequate reliability.
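The question of how many simulations would be needed for adequate reliability is usually approached with a decision (D) study, which projects the G coefficient for different numbers of cases from the estimated variance components. The sketch below shows the arithmetic only; the variance components and the target value are hypothetical, not estimates from this study, and it does not address the number of raters.

```python
from typing import Optional


def projected_g(var_person: float, var_residual: float, n_cases: int) -> float:
    """Relative G coefficient projected for a score averaged over n_cases."""
    return var_person / (var_person + var_residual / n_cases)


def cases_needed(var_person: float, var_residual: float,
                 target: float = 0.8, max_cases: int = 1000) -> Optional[int]:
    """Smallest number of cases whose projected G reaches the target.

    Returns None if the target is not reached within max_cases.
    """
    if var_person <= 0:
        raise ValueError("person (true score) variance must be positive")
    for n_cases in range(1, max_cases + 1):
        if projected_g(var_person, var_residual, n_cases) >= target:
            return n_cases
    return None


# Hypothetical components: person variance 1.0, residual variance 2.5.
g_with_two = projected_g(1.0, 2.5, n_cases=2)
n_for_target = cases_needed(1.0, 2.5, target=0.75)
print(round(g_with_two, 3), n_for_target)
```

With these invented components, two cases give a modest coefficient and several more cases are required to reach the target, which is the kind of trade-off a follow-up study would need to quantify with real estimates.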

Conclusion

In this adaptive simulation of the medical consultation, used to assess students’ performance, the automated scoring of decision points produced results similar in robustness to those obtained with expert raters. This approach and scoring have potential for wider use in automated assessment of medical students.

The findings indicate that this approach to adaptive simulations has potential as a teaching and assessment tool for the medical consultation, which requires further development and research.

Acknowledgments

We thank Dr Fionn Kelly, Sen. Reg. (Expert rater), Prof. Ronan Conroy, DSc, Conor Gaffney, PhD, Declan Dagger, PhD, and Gordon Power, MSc, for their constructive comments on this article.

We would like to thank EmpowerTheUser (ETU Ltd) for allowing us access to these data.

Disclosure

The authors report no conflicts of interest in this work.

References

- Stewart M. Patient-Centered Medicine: Transforming the Clinical Method. Patient-centered care series. Abingdon: Radcliffe Medical Press; 2003.

- Tamblyn R, Abrahamowicz M, Dauphinee D. Physician scores on a national clinical skills examination as predictors of complaints to medical regulatory authorities. JAMA. 2007;298(9):993–1001. PMID: 17785644.

- Van der Vleuten CP, Schuwirth LW. Assessing professional competence: from methods to programmes. Med Educ. 2005;39(3):309–317. PMID: 15733167.

- Wood T. Assessment not only drives learning, it may also help learning. Med Educ. 2009;43(1):5–6. PMID: 19140992.

- Raupach T, Hanneforth N, Anders S, Pukrop T, ten Cate OThJ, Harendza S. Impact of teaching and assessment format on electrocardiogram interpretation skills. Med Educ. 2010;44(7):731–740. PMID: 20528994.

- Van den Eertwegh V, van der Vleuten C, Stalmeijer R, van Dalen J, Scherpbier A, van Dulmen S. Exploring residents’ communication learning process in the workplace: a five-phase model. PLoS One. 2015;10(5):e0125958. PMID: 26000767.

- Scalese RJ, Obeso VT, Issenberg SB. Simulation technology for skills training and competency assessment in medical education. J Gen Intern Med. 2007;23(Suppl 1):46–49.

- Okuda Y, Bryson EO, DeMaria S. The utility of simulation in medical education: what is the evidence? Mount Sinai J Med: A J Transl Personalized Med. 2009;76(4):330–343.

- Gaffney C, Dagger D, Wade V. Authoring and delivering personalised simulations – an innovative approach to adaptive eLearning for soft skills. J UCS. 2010;16(19):2780–2800.

- McGee E, Dagger D, Gaffney C, Power G. Empower the User SkillSims Support Documentation. Dublin, Ireland: Empower the User Limited; 2013. Available from: http://www.etu.ie/. Accessed November 14, 2016.

- Dagger D, Wade V, Conlan O. Developing Adaptive Pedagogy with the Adaptive Course Construction Toolkit (ACCT). Third International Conference, AH 2004; August 23–26, 2004; Eindhoven, The Netherlands.

- Dagger D, Wade V, Conlan O. Personalisation for all: Making adaptive course composition easy. J Educ Techno Soc. 2005;8(3):9–25.

- Issenberg SB, McGaghie WC, Petrusa ER, Gordon DL, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28. PMID: 16147767.

- Berthold M, Moore A, Steiner CM. An initial Evaluation of Metacognitive Scaffolding for Experiential Training Simulators. Proceedings of European Conference on Technology Enhanced Learning; 18–21 September 2012; Saarbrucken, Germany. Berlin Heidelberg: Springer; 2012.

- Steiner CM, Wesiak G, Moore A. An Investigation of Successful Self-Regulated-Learning in a Technology-Enhanced Learning Environment. Proceedings of International Conference on Artificial Intelligence in Education; 9–13 July 2012; Memphis, USA.

- Wesiak G, Steiner CM, Moore A. Iterative augmentation of a medical training simulator: Effects of affective metacognitive scaffolding. Comput Educ. 2014;76:13–29.

- Gaffney C, Dagger D, Wade V. Supporting Personalised Simulations: a Pedagogic Support Framework for Modelling and Composing Adaptive Dialectic Simulations. Proceedings of Summer Computer Simulation Conference; 15–18 July 2007; San Diego, CA, USA. p. 1175–1182.

- Kelly F, Dagger D, Gaffney G. Student attitudes to a novel personalised learning system teaching in medical interview communication skills. In: Marcus-Quinn A, Bruen C, Allen M, Dundon A, Diggins Y, editors. The Digital Learning Revolution in Ireland: Case studies in practice from NDLR. Cambridge, UK: Cambridge Scholarly Press; 2012:99–104.

- Jackson VA, Back AL. Teaching communication skills using role-play: an experience-based guide for educators. J Palliat Med. 2011;14(6):775–780. PMID: 21651366.

- Sokolowski J, Banks C. Principles of Modeling and Simulation. Hoboken, NJ: John Wiley and Sons; 2009:209–245.

- Argyris C. Teaching smart people how to learn. Harv Bus Rev. 2009;69(3):99–109.

- Coulthard GJ. A Review of the Educational Use and Learning Effectiveness of Simulations and Games [dissertation]. Purdue University; 2009.

- Nestel D, Tabak D, Tierney T. Key challenges in simulated patient programs: an international comparative case study. BMC Med Educ. 2011;11:69. PMID: 21943295.

- Wesiak G, Moore A, Steiner CM. Affective metacognitive scaffolding and enriched user modelling for experiential training simulators: a follow-up study. Scaling Up Learn Sustained Impact. 2013:396–409.

- Silverman J, Kurtz S, Draper J. Skills for Communicating with Patients. 3rd ed. London: Radcliffe Publishing; 2013.

- Moore A, Gaffney C, Dagger D. Preliminary content analysis of reflections from experiential training simulator support. Presented at: 4th Workshop on Self-Regulated Learning in Educational Technologies; ITS Conference; June 14–18, 2012; Crete, Greece.