Figures & data

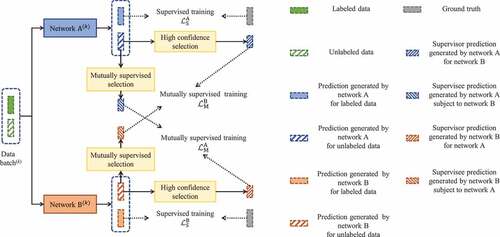

Figure 1. The overall paradigm of cross-supervised learning. In each training iteration, Networks A and B both update their parameters through supervised training on labeled data and mutually supervised training on unlabeled data.

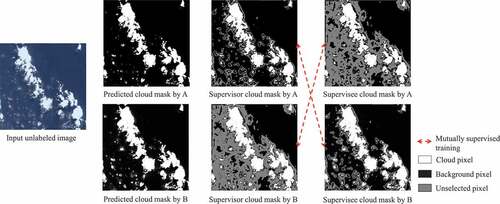

Figure 2. Cross-supervised learning at the th iteration. The high-confidence selection operation generates the supervisor prediction according to (12). The mutually supervised selection operation generates the supervisee prediction according to (13).

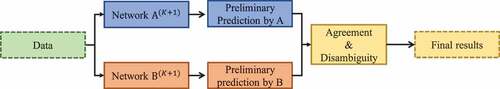

Figure 3. Agreement and disambiguity between the two base networks. The agreement and disambiguity operation generates the final results by considering the preliminary predictions of both Networks A and B.

Algorithm 1: Cross-supervised Learning

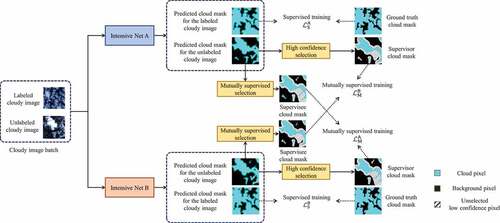

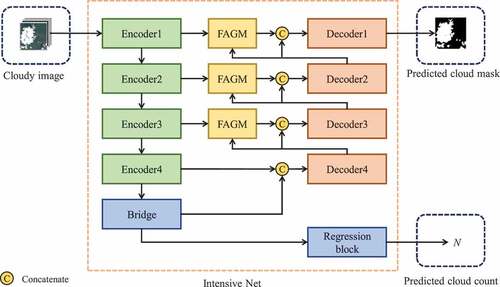

Figure 4. The framework of the In-extensive Nets. The In-extensive Nets take labeled and unlabeled cloudy images as inputs. The included Intensive Nets A and B perform supervised training on labeled cloudy images and mutually supervised training on unlabeled cloudy images.

Figure 5. The architecture of the Intensive Net. The focal attention guide module (FAGM) reweights the features of ambiguous regions from the encoders. The regression block regresses the cloud count of the cloud masks.

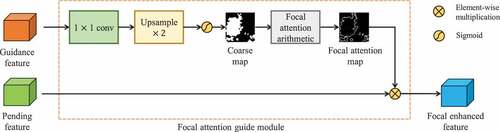

Figure 6. The architecture of the focal attention guide module (FAGM). The FAGM enhances the pending features by addressing the ambiguous regions identified by the guidance features.

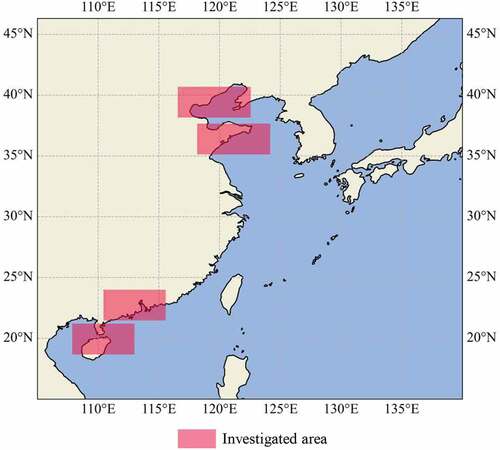

Figure 7. The investigated areas of the HY1C-UPC dataset. The main investigated areas are coastal zones around China.

Table 1. Ablation study of In-extensive Nets on the HY1C-UPC dataset.

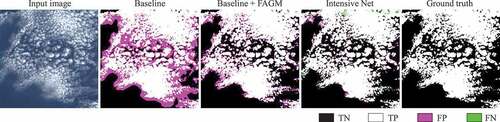

Figure 8. Visual comparison of the baseline, baseline + FAGM, and Intensive Net (baseline + FAGM + regression block) on the HY1C-UPC dataset in the ablation study.

Table 2. Accuracy assessment of PSPNet, DeepLab V3+, RS-Net, Cloud-Net, CDNet V2, and Intensive Net on the GF1-WHU, HY1C-UPC, and SPARCS datasets.

Table 3. Accuracy assessment of PSPNet, DeepLab V3+, RS-Net, Cloud-Net, CDNet V2, Intensive Net, Mean Teacher, SSCD-Net, and In-extensive Nets on the HY1C-UPC and SPARCS datasets.

Table 4. Accuracy assessment of PSPNet, DeepLab V3+, RS-Net, Cloud-Net, CDNet V2, Intensive Net, Mean Teacher, SSCD-Net, and In-extensive Nets at different labeled data rates on the GF1-WHU dataset.

Figure 9. Visual comparison of PSPNet, DeepLab V3+, RS-Net, Cloud-Net, CDNet V2, Intensive Net, Mean Teacher, SSCD-Net, and In-extensive Nets on the GF1-WHU dataset at a labeled data rate of 12.50%.

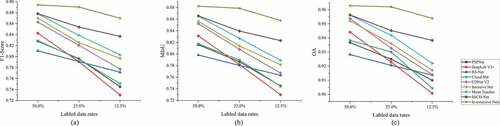

Figure 10. Comparison of the detection accuracy of different methods as the labeled data rate decreases on the GF1-WHU dataset. (a) F1-score, (b) MIoU, and (c) OA.
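
The three metrics named in this caption can be illustrated with a minimal sketch. It assumes the standard definitions for a binary cloud/clear mask: F1-score as the harmonic mean of precision and recall on the cloud class, MIoU as the mean of the per-class intersection over union, and OA as the fraction of correctly labeled pixels. The paper's exact formulas are not given in this section, so treating MIoU as a two-class average is an assumption, and the function name `cloud_metrics` is hypothetical.

```python
def cloud_metrics(pred, truth):
    """Compute F1-score, MIoU, and OA for flat binary masks (1 = cloud, 0 = clear).

    Assumption: standard textbook definitions; not taken from the paper.
    """
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1          # cloud predicted, cloud in reference
        elif p and not t:
            fp += 1          # cloud predicted, clear in reference
        elif t:
            fn += 1          # clear predicted, cloud in reference
        else:
            tn += 1          # clear predicted, clear in reference

    total = tp + fp + fn + tn
    oa = (tp + tn) / total if total else 0.0

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)

    # Per-class IoU, averaged over the cloud and clear classes (assumed).
    iou_cloud = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    iou_clear = tn / (tn + fp + fn) if (tn + fp + fn) else 0.0
    miou = (iou_cloud + iou_clear) / 2

    return {"f1": f1, "miou": miou, "oa": oa}


if __name__ == "__main__":
    predicted = [1, 1, 0, 0, 1, 0, 0, 0]
    reference = [1, 0, 0, 0, 1, 1, 0, 0]
    print(cloud_metrics(predicted, reference))
```

For a two-dimensional mask, flatten it to a single sequence of pixels before calling the function.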

Table 5. Accuracy assessment of In-extensive Nets with different threshold strategies on the HY1C-UPC dataset.

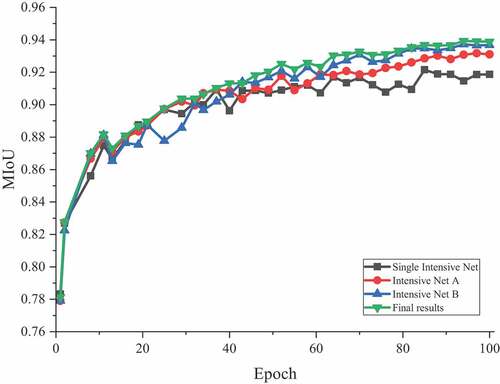

Figure 11. Accuracy assessment of a single Intensive Net, Intensive Net A, Intensive Net B, and the final results obtained via agreement and disambiguity at every epoch.

Figure 12. Intermediate results from the In-extensive Nets in one training iteration of the 20th training epoch.

Table 6. Parameter and computation comparisons of PSPNet, SegNet, RS-Net, Cloud-Net, CDNet V2, Intensive Net, Mean Teacher, SSCD-Net, and In-extensive Nets.

Data Availability Statement

The GF1-WFV data that support the findings of this study are openly available at https://doi.org/10.1016/j.rse.2017.01.026, reference number li2017multi. For the HY1C CZI data, raw data were collected from https://osdds.nsoas.org.cn/. The training and testing data with cloud masks of this study are available along with our code at https://gitee.com/kang_wu/in-extensive-nets.