Figures & data

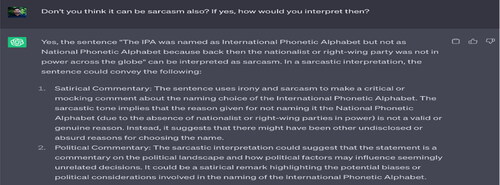

Example 2 “She wore a dress with pink dots and circles.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT was able to generate only one interpretation: that the dress had (a pattern of) pink dots and pink circles. The scope ambiguity (does the word “pink” apply only to “dots” or to “dots and circles”?) is not reflected in the interpretations provided by ChatGPT.

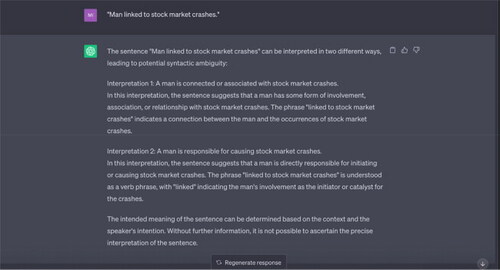

Example 3 “Man linked to stock market crashes.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT offers two interpretations, both of which are similar, since they treat “crashes” as modifying “stock market.” Another distinct interpretation is: “A man was linked to the stock market. This man has crashed.” ChatGPT’s two interpretations seem to revolve around semantic ambiguity in the word “linked,” rather than syntactic ambiguity relating to the word “crashes.”

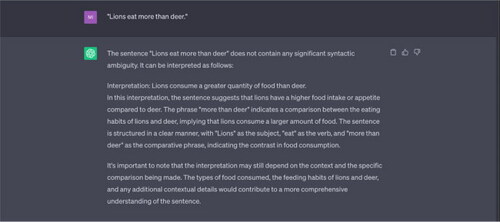

Example 4 “Lions eat more than deer.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT offers only one interpretation, that of a comparison between the amount eaten by lions and deer, and offers an explanation “that lions have a higher food intake or appetite compared to deer.” It does not consider that the sentence could be interpreted to mean that lions eat other animals, not just deer.

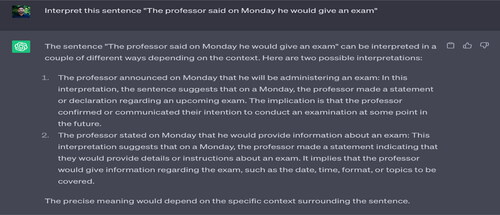

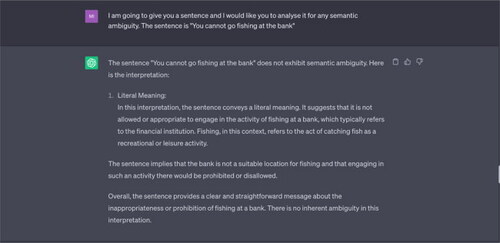

Example 5 “Irritating professors can cause problems for students.”

Source: Tested on ChatGPT on 17 May 2023.

Note: The word “irritating” here can be used as a verb or as an adjective. ChatGPT offers only one interpretation: it treats “irritating” as an adjective that forms part of the subject “irritating professors.” It doesn’t consider that the sentence might also mean: “[The act of] irritating professors can cause problems for students.”

In a previous input sentence, “Linguistics students are confusing people,” ChatGPT similarly offered only one interpretation (treating “confusing” as part of the verb phrase in the predicate, and ignoring the use of “confusing” as an adjective).

![Example 5 “Irritating professors can cause problems for students.”](/cms/asset/5f447e32-0731-4de0-ba73-1cc42cb81ef1/oaah_a_2353984_a0004_c.jpg)

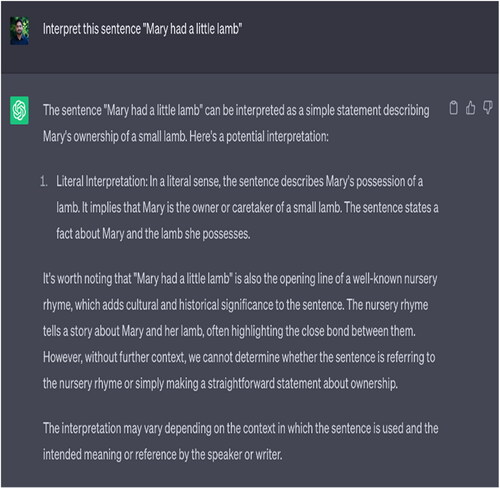

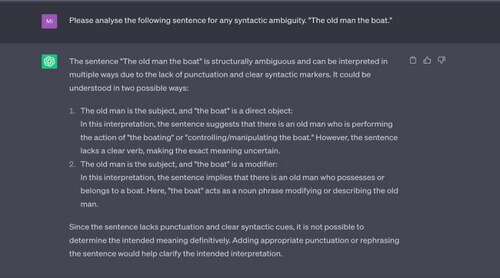

Example 6 “The old man the boat.”

Source: Tested on ChatGPT on 17 June 2023.

Note: This is quite a famous example of a syntactically ambiguous sentence, so it is surprising that ChatGPT was unable to parse it for ambiguity. The sentence only makes sense when “man” is treated as a verb form, giving us the meaning “The old (people) operate the boat.” However, ChatGPT is unable to identify and resolve this ambiguity, since it says the input sentence “lacks a clear verb,” “punctuation and clear syntactic clues.”

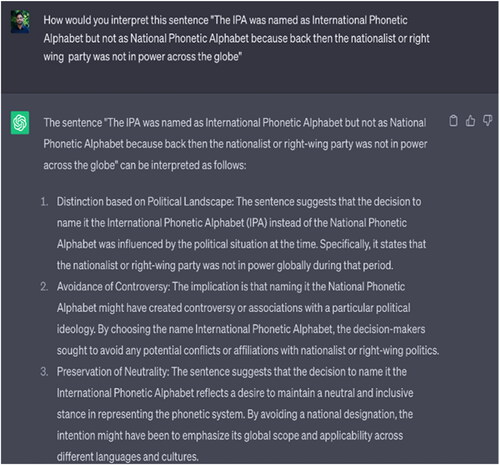

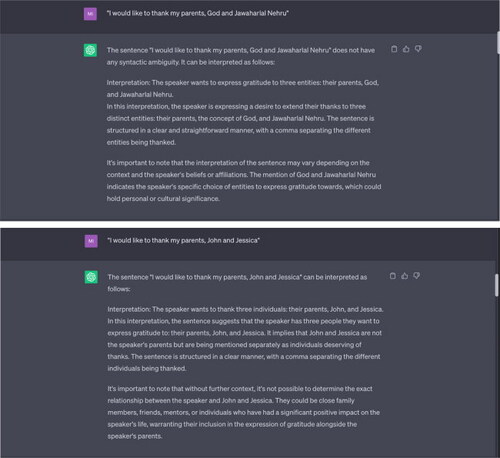

Example 7 “I would like to thank my parents, God and Jawaharlal Nehru/I would like to thank my parents, John and Jessica.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT only offers one interpretation of this sentence, which is that the speaker is thanking three separate entities: (their) parents, God, and Jawaharlal Nehru. It does not consider that the comma after “parents” might indicate that God and Jawaharlal Nehru (two separate entities) could be the “parents” referred to by the speaker. Even in the second example made simpler through the use of common Western names (John and Jessica) it does not interpret John and Jessica as the speaker’s parents but instead says the exact relationship between the speaker, John, and Jessica is unclear. It says the sentence is “structured in a clear manner, with a comma separating the different individuals being thanked.” However, there is no comma in the input sentences separating the last two entities (God and Jawaharlal Nehru; John and Jessica).

Example 8 “The teacher punished the student with a zero.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT does not consider the interpretation “The teacher punished the student who had scored a zero.” The phrase “with a zero” could indicate “the means or method of punishment used by the teacher,” as suggested by ChatGPT, or it could modify “the student,” describing the student who was punished. This latter interpretation is not offered by ChatGPT.

Example 9 “I’m glad I am healthy and so are you.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT offers only one interpretation, connecting the latter clause “so are you” to “healthy” rather than to “glad.” Connecting it to “glad” would give us another interpretation: “I am glad I am healthy and you are also glad that I am healthy.”

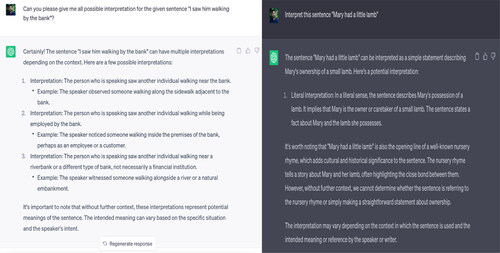

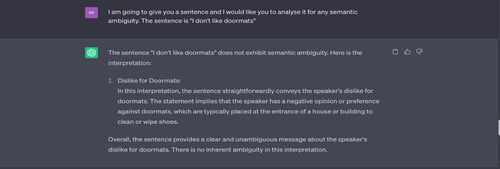

Example 1 “I don’t like doormats.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT offers only one interpretation of this sentence, treating “doormats” as meaning a mat typically placed near a door/entrance. It does not consider the alternative meaning, which refers to a submissive or unassertive person. The speaker might be declaring their dislike of meek or submissive people, rather than a mat placed near a door.

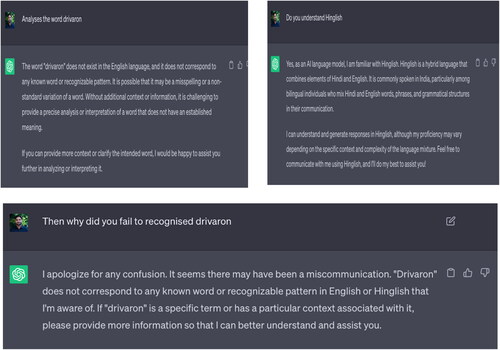

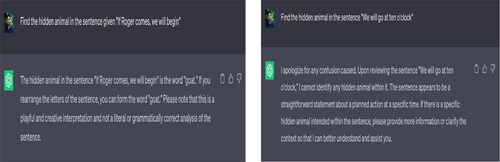

Example 2 “You cannot go fishing at the bank.”

Source: Tested on ChatGPT on 17 June 2023.

Note: On both occasions that this input sentence was tested, ChatGPT offered only one interpretation of “bank,” treating it as a “financial institution” during the first test [17 May], and as “edge of a body of water” during the second test [17 June]. Clearly, it is aware of both meanings, but strangely does not offer both interpretations and insists that “there is no inherent ambiguity” [17 May] and “the sentence does not possess semantic ambiguity” [17 June].

![Example 2 “You cannot go fishing at the bank.”](/cms/asset/8c436e0e-5487-4e3c-a9f3-8a75f7924fa1/oaah_a_2353984_a0011_c.jpg)

Example 3 “John and his licence both expired last week.”

Source: Tested on ChatGPT on 17 May 2023.

Note: Although ChatGPT correctly interprets the sentence, it fails to explain the zeugma, or the different ways in which the word “expired” applies to John and his licence.

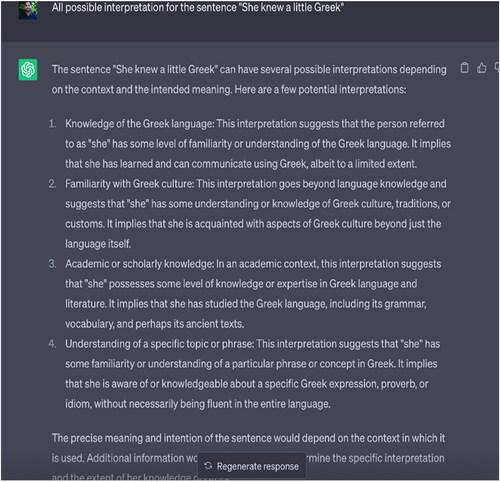

Example 4 “He got a new mouse yesterday.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT does not recognise any semantic ambiguity in the input sentence. It offers only one interpretation of the word “mouse.” It does not consider that the person described might have acquired an animal (a small rodent), for instance as a pet.

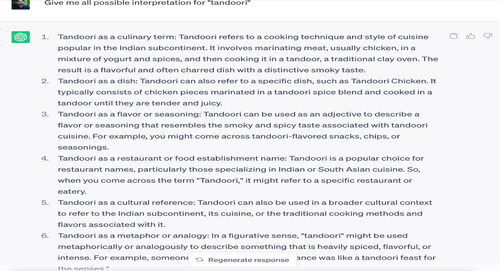

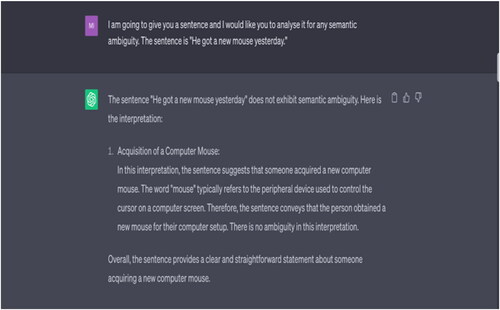

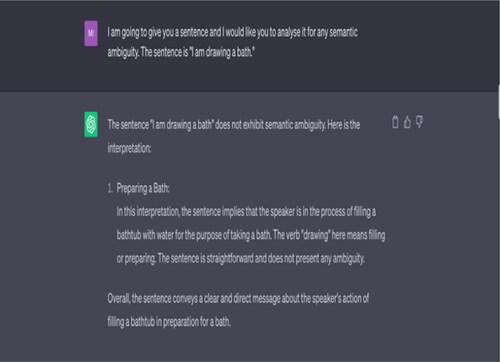

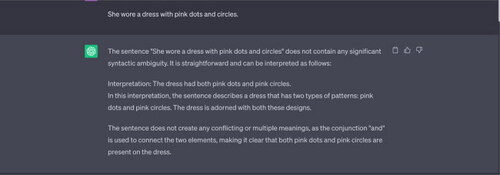

Example 5 “I am drawing a bath.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT does not recognise any semantic ambiguity in the input sentence. It offers only one interpretation of the word “drawing.” It does not consider that the speaker might be making a picture (“drawing”) of a bath. According to ChatGPT, “[t]he sentence is straightforward and does not present any ambiguity.”

![Example 5 “I am drawing a bath.”](/cms/asset/3657567a-856f-41e1-a815-911d649bc38a/oaah_a_2353984_a0014_c.jpg)

Example 6 “Fruit flies like bananas.”

Source: Tested on ChatGPT on 17 May 2023.

Note: This input sentence is part of a longer formulation: “Time flies like an arrow. Fruit flies like bananas.” ChatGPT does not offer the possibility of interpreting “flies” as the simple present tense of “fly” (to move through the air using wings). The resulting sentence, although syntactically correct, would be nonsensical, similar to “The car ate a sandwich for lunch” or “Colourless green ideas sleep furiously.” However, the purpose of this exercise is to test whether the LLM is able to generate these interpretations or not, not to see if these interpretations produce meaningful sentences.

Example 7 “The bat flew overhead.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT does not recognise any semantic ambiguity in the input sentence. It offers only one interpretation of the word “bat,” i.e. a small flying mammal. It does not consider that the speaker might be describing a wooden sporting instrument (such as a cricket bat) moving swiftly through the air above the speaker’s head.

Example 8 “I prefer this date to the others.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT considers only one interpretation of “date,” i.e. as referring to “a specific point in time or calendar day.” It does not consider the other meanings that might be inferred, such as a small, dark fruit, or a romantic engagement or the person with whom one has such an engagement.

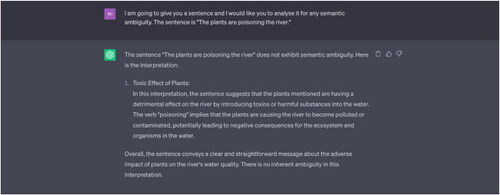

Example 9 “The plants are poisoning the river.”

Source: Tested on ChatGPT on 17 May 2023.

Note: ChatGPT does not provide a definite interpretation of the word “plants,” only stating that plants are “introducing toxins… into the water.” It is unclear if it reads this sentence as referring to polluting living organisms such as small trees or shrubs, or to industrial facilities.