Figures & data

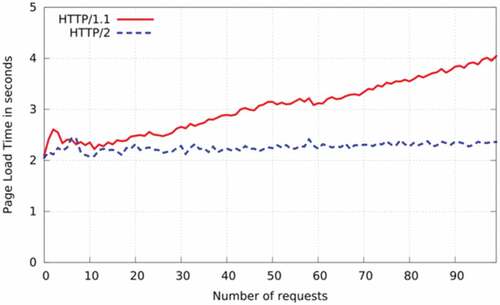

Figure 2. Comparing the loading time between HTTP/1.1 and HTTP/2 (De Saxcé, Oprescu, and Chen 2015).

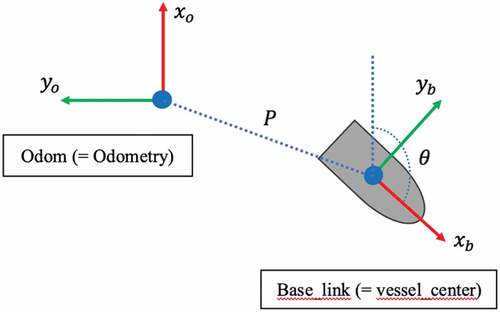

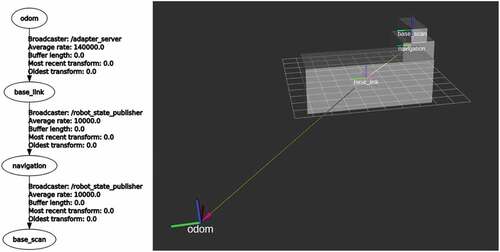

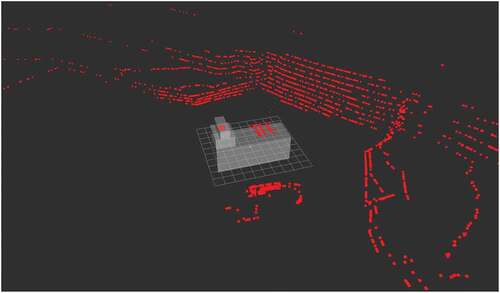

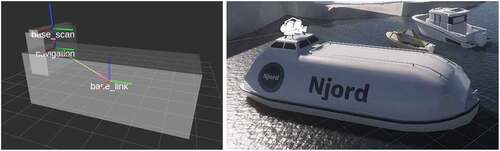

Figure 3. Vessel model with coordinate systems (base_link, navigation, base_scan) in ROS RViz and Unity (NJORD 2021).

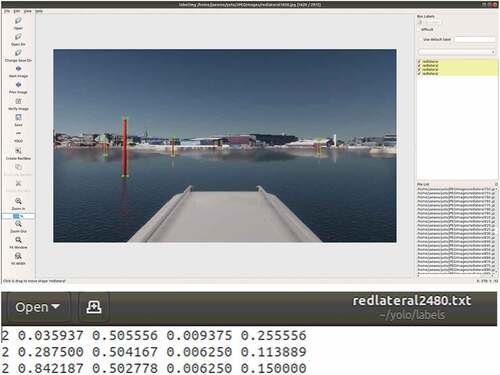

Figure 12. LabelImg program used for labelling, and the resulting label text file (each line giving class, x position, y position, width, height).
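
As a minimal sketch of how a label text file like the one in Figure 12 could be read, the following Python parses one line of the form `class x y width height` and converts it to pixel corners. It assumes the usual YOLO convention, which the caption does not state explicitly: x and y give the box centre, and all four values are normalized to the image size. The names `YoloBox`, `parse_label_line`, and `to_pixel_corners` are hypothetical and do not come from the article.

```python
from dataclasses import dataclass


@dataclass
class YoloBox:
    class_id: int
    x_center: float  # assumed normalized to image width (0..1)
    y_center: float  # assumed normalized to image height (0..1)
    width: float     # assumed normalized to image width (0..1)
    height: float    # assumed normalized to image height (0..1)


def parse_label_line(line: str) -> YoloBox:
    """Parse one 'class x y width height' line from a label text file."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    return YoloBox(int(parts[0]), *(float(p) for p in parts[1:]))


def to_pixel_corners(box: YoloBox, image_width: int, image_height: int):
    """Convert a normalized centre/size box to (left, top, right, bottom) in pixels."""
    half_w = box.width * image_width / 2
    half_h = box.height * image_height / 2
    cx = box.x_center * image_width
    cy = box.y_center * image_height
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)
```

For example, `to_pixel_corners(parse_label_line("0 0.5 0.5 0.25 0.5"), 640, 480)` gives a box centred in a 640 × 480 image. If the label file used absolute pixel values instead, the conversion step would not apply.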

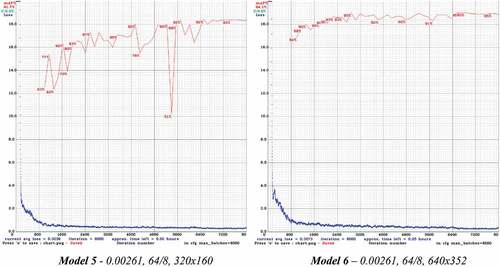

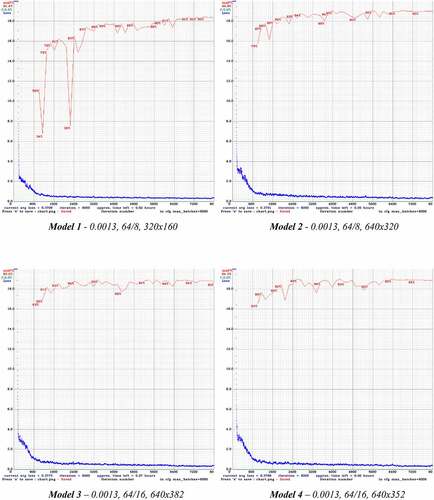

Figure 13. YOLOv4 models – training process (red line – mAP, blue line – loss).

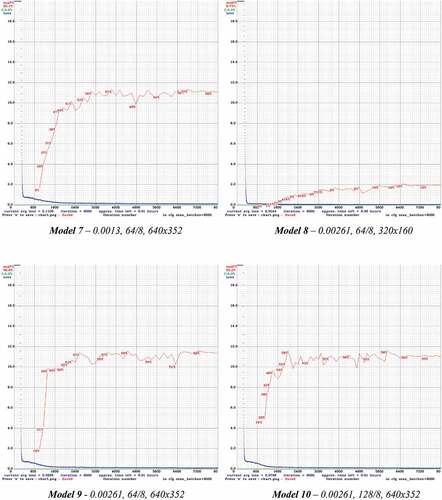

Figure 14. YOLOv4-tiny models – training process (red line – mAP, blue line – loss).

Table 1. Performance of YOLOv4 by hyperparameter.

Table 2. Performance of YOLOv4-tiny by hyperparameter.