?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Many optimal control tasks for engineering problems require the solution of nonconvex optimisation problems, which are rather hard to solve. This article presents a novel iterative optimisation procedure for the fast solution of such problems using successive convexification. The approach considers two types of nonconvexities. Firstly, equality constraints with possibly multiple univariate nonlinearities, which can arise for nonlinear system dynamics. Secondly, nonconvex sets comprised of convex subsets, which may occur for semi-active actuators. By introducing additional decision variables and constraints, the decision variable space is decomposed into affine segments yielding a convex subproblem which is solved efficiently in an iterative manner. Under certain conditions, the algorithm converges to a local optimum of a scalable, piecewise linear approximation of the original problem. Furthermore, the algorithm tolerates infeasible initial guesses. Using a single-mass oscillator application, the procedure is compared with a nonlinear programming algorithm, and the sensitivity regarding initial guesses is analysed.

1. Introduction

Many optimal control tasks for engineering problems require the solution of nonconvex optimisation problems (OPs), which are harder to solve than convex ones. The nonconvexity can have various causes. Restrictions in the operating range of actuators can result in nonconvex sets. Nonconvexity can also be caused by nonlinear system dynamics resulting in nonlinear and thus nonconvex equality constraints. Since a fast and robust computation of the optimum is always desirable and even essential for real-time control applications, convexification represents a fundamental field in optimal control. Convex OPs can be solved efficiently and robustly with elaborate solvers capable of computing the global optimum with high accuracy and generally short computation times. Various convexification methods have been proposed, which generally solve nonconvex problems by iteratively solving convex substitute problems.

1.1. Literature survey on convexification techniques

Lossless convexification (LC) transforms a special class of nonconvex sets into convex ones by lifting the optimisation space into a higher dimension using additional decision variables (Acikmese & Blackmore, Citation2011; Acikmese & Ploen, Citation2007). The approach is applicable to a special class of nonconvex sets: sets generated by removing a convex subset from a convex set. This has been used in aeronautic optimal control applications to successfully convexify annulus-shaped sets. An overview regarding the individual findings on LC is given in Raković and Levine (Citation2018, p.338).

Another often employed technique for convex approximation is linearisation since linear objectives and constraints are convex (Boyd & Vandenberghe, Citation2004). However, linearisation can introduce conservativeness and infeasibility since the resulting accuracy strongly depends on the nonlinearity as well as the linearisation point. Various linearisation techniques have been used to approximate nonlinear systems when applying optimal control methods. In the simplest case, the system is linearised at each time instant around the current operating point, exemplarily implemented in Falcone et al. (Citation2007). However, the model approximation is only sufficiently valid in a close neighbourhood around the linearisation point. With increasing distance to this point, the linearised OP can strongly differ from the original one. Thus, this approach can result in poor controller performance or even lead to infeasibility or instability.

In order to mitigate linearisation errors, the system can be linearised around predicted trajectories resulting in efficiently solvable quadratic programming (QP) problems (Diehl et al., Citation2005; Seki et al., Citation2004). One method using iterative linearisation around trajectories in a model predictive control (MPC) setup is the so-called real-time iteration approach (Diehl et al., Citation2005). The procedure assumes that at every time instant, the shifted solution from the preceding time instant represents a good initial guess close to the actual solution. Thus, a full Newton-step is taken, omitting underlying line-search routines to reduce computation time.

Another iterative scheme linearising around changing trajectories has been presented in Mao et al. (Citation2016). Starting from an initial trajectory, the nonlinear system equations are successively linearised around the solution computed in the preceding iteration. Adding virtual control inputs eliminates the artificial infeasibility introduced by the linearisation. Furthermore, including trust regions ensures that the solution does not deviate too much from the preceding succession and thus the linearisation represents a valid approximation. This avoids unboundedness of the linearised OP. Based on the ratio between actual objective change and linearised objective change, the algorithm adjusts the trust regions and terminates if the objectives coincide.

A linearise-and-project (LnP) approach has been used in Mao et al. (Citation2017) to resolve the nonconvexity due to nonlinear system equations and nonconvex constraints. The approach presumes convex functions for the right-hand side of the system differential equations. The satisfaction of the system dynamics is ensured by relaxing the corresponding equality constraints into inequality constraints and using exact penalty functions. In an iterative optimisation routine, the computed solution is projected onto the constraints in each iteration to gain adequate linearisation points.

Instead of linearising the system equations around trajectories, a linear input-to-state behaviour can be enforced via feedback-linearisation (Khalil, Citation2002, p. 505). An optimal control method can then be used to optimally control the linearised system in an outer control loop (Del Re et al., Citation1993). However, it is possible that the nonlinear mapping of the feedback linearisation transforms originally convex objective and constraint functions into nonconvex ones (Simon et al., Citation2013). Then, the exact satisfaction of these constraints requires a nonlinear programming (NLP) strategy or an iterative scheme which both eliminate the computational benefits of the feedback-linearisation (Kurtz & Henson, Citation1997). One possible solution to this problem is approximating these constraints by estimating future inputs based on the preceding time instant within an MPC scheme (Kurtz & Henson, Citation1997; Schnelle & Eberhard, Citation2015). The authors in Simon et al. (Citation2013) have proposed an alternative MPC approach that replaces such nonlinear constraints via dynamically generated local inner polytopic approximations rendering the OP convex.

Fuzzy MPC with models of Takagi-Sugeno (TS) type has been successfully used for nonlinear MPC. The TS modelling procedure enables an accurate approximation of nonlinear systems by using data combined with model knowledge (Tanaka & Wang, Citation2004). The modelling approach decomposes nonlinear models into several local approximations which are blended together via fuzzy rules. However, this requires the system matrices to be stored, thus occupying more memory. Although TS fuzzy models have been successfully used in nonlinear MPC, computation time can be strongly reduced by linearising the TS models around predicted trajectories (Mollov et al., Citation2004, Citation1998). The resulting convex QP problem can be solved efficiently while guaranteeing convergence via a line search mechanism.

Differential dynamic programming initially linearises the system equations around a nominal trajectory. Starting from the terminal state, a backward pass identifies the optimal controller gains of a linear quadratic regulator at each time step using the linearised system. Subsequently, a forward pass computes new nominal trajectories via numerical integration using the controller gains previously computed in the backward pass. This procedure is repeated until convergence. Iterative linear quadratic regulators are a variant of differential dynamic programming (Mayne, Citation1973). The main difference is that only first-order derivatives are used for the approximation of the system dynamics via Taylor expansion instead of second-order ones which decreases computation time (Tassa et al., Citation2012). Constrained versions of this optimisation technique have been presented in Tassa et al. (Citation2014), Xie et al. (Citation2017) and Chen et al. (Citation2017).

Lifting-based MPC methods represent another class of linearisation approaches used for solving optimal control problems (OCPs) with nonlinear systems. By lifting the nonlinear dynamics to a space of higher dimension, the evolution of the system can be represented in a bilinear or linear fashion via Carleman linearisation or the Koopman operator framework, respectively. Representing the nonlinear system equations via bilinear terms enables the analytical computation of sensitivity functions (Armaou & Ataei, Citation2014) and of solutions (Fang & Armaou, Citation2015) which speeds up computation time. The Koopman MPC approach employs a linear predictor of higher order, which is identified via data sets, to approximate the nonlinear system (Korda & Mezić, Citation2018). The resulting larger OCP is condensed by eliminating the state decision variables and then solved for the input decision variables using linear MPC. Hence, this method enables a fast solution of nonlinear OCPs. However, identifying the linear Koopman system involves some effort requiring an adequate selection of basis functions, non-recurrent data sets and the solution of convex OPs (Cibulka et al., Citation2019). Furthermore, the Koopman MPC approach does not always outperform the standard MPC method using local linearisations (Cibulka et al., Citation2020). Depending on the dimension of the lifted states, both approaches can require the storage of a great number of offline computed matrices.

Various local methods for solving nonconvex quadratically constrained quadratic programming (QCQP) problems have been summarised in d'Aspremont and Boyd (Citation2003) and Park and Boyd (Citation2017). A two-phase coordinate descent method first reduces the maximum constraint violation trying to find a feasible point. The second phase is restricted to feasible points only and searches in each iteration for a feasible point with a better objective function value. The convex-concave procedure (CCP) is a method for finding a local optimum for difference-of-convex programming problems, thus suitable for QCQP. The nonconvex part of the constraints is linearised rendering the constraints convex. In order to deal with infeasible initial guesses, penalty CCP relaxes the linearised constraints via slack variables and introduces a gradually increasing penalty objective for constraint violations. The alternating directions method of multipliers (ADMM) is a variant of the augmented Lagrangian method. It forms an equivalent OP by using auxiliary decision variables which must satisfy a consensus constraint. The objective function is augmented using switching indicator functions which penalise individual constraint violations. The augmented objective function terms are separated into two groups of decision variables. Instead of solving the proximal augmented Lagrangian function for both groups of decision variables simultaneously, the solution is computed using an alternating approach. First, the first group of decision variables is fixed and the problem is solved for the second group. Then, the solution of the second group is fixed and the problem is solved for the first one. The results are used for the dual variable update and the procedure is repeated. This alternating approach requires the solution of simplified QCQP problems enhancing computation time compared to simultaneously solving for both groups of decision variables.

Convexification measures for sequential quadratic programming (SQP) approaches on a more algorithmic level have been presented in Verschueren (Citation2018). Many QP solvers do not support an indefinite Hessian of the Lagrangian since then a descent direction is not guaranteed. The author has presented a structure-preserving convexification procedure for indefinite Hessians. The convexified Hessian can be fed to any structure-exploiting QP solver (Verschueren, Citation2018, p. 39). Furthermore, sequential convex quadratic programming is proposed which uses second-order derivatives of convex objective functions and convex constraint functions to construct positive definite Hessians enabling the sequential solution of convex QP problems (Verschueren, Citation2018, p. 70).

Using the notion of space decomposition for convexification, global optimisation (GO) techniques have similarities with the convexification approach presented in this article. In order to compute the global optimum of continuous, nonconvex OPs, spatial branch-and-bound (BnB) techniques iteratively divide the search space into increasingly smaller subdomains (Liberti, Citation2008; Liberti & Maculan, Citation2006; Ryoo & Sahinidis, Citation1995; Tawarmalani & Sahinidis, Citation2002). A convex relaxation of nonconvex functions is applied on each subdomain. The efficiently solvable relaxed problem provides a lower bound of the objective function for the corresponding subdomain. The comparison with an upper bound, which can be computed using local optimisation techniques, determines if a further space subdivision is necessary. Thus, this technique searches over a tree whose nodes correspond to individual relaxed problems which consider different subdomains. Comparing the solutions on the individual subdomains provides the global solution. Tree nodes are excluded on the fly based on the evaluation of the lower and upper bounds of the individual nodes eliminating the need to explore the entire domain.

1.2. Contribution

In this article, a novel successive convexification method called space splitting convexification (SSC) is proposed aiming at reducing the computation time required to solve nonconvex OPs. The key contribution of the approach is the space decomposition procedure which provides a piecewise linear approximation of the original, nonconvex problem by introducing auxiliary decision variables and absolute value constraints (AVCs). These AVCs possess a beneficial structure for linearisation and point projection onto constraints. The AVCs are transferred to the objective function using the concept of exact penalty functions and then iteratively linearised around changing points. The linearisation of the AVCs results in a binary decision: wrong or right sign of the AVC-linearisation. In each iteration, the computed solution is projected onto the AVCs and the sign is corrected if necessary enabling the linearisation errors to vanish in subsequent iterations. This concludes the iterative solution of a convex QP problem or even linear programming (LP) problem if the original objective is linear. The violation of the linearised AVCs serves as feedback if the correct sign was chosen which is used as an indicator for convergence. The approach is capable of considering two types of nonconvexities. Firstly, a class of nonconvex sets that can be split into convex subsets which are called zonally convex sets (ZCSs) in this article. Such sets arise for instance when semi-active actuators are used. Secondly, equality constraints with possibly multiple but univariate nonlinearities. As already mentioned, a common source for these nonlinear equality constraints are nonlinear system equations when applying optimal control methods. Requiring only simple mathematical operations, the AVC-projection is of linear computational complexity. Furthermore, the binary nature of the AVC-linearisation generally results in few superordinate iterations. Thus, the SSC algorithm greatly reduces computation time enabling an efficient solution in polynomial-time. Furthermore, robust initialisation properties are given since the initial guess is not required to be feasible. The algorithm converges under certain conditions to local optima of the piecewise linear substitute problem, which represents a scalable approximation of the original problem. However, being a local solution method, the computed local solution generally depends on the provided initial guess. For good initialisations, the algorithm computes solutions which are close to the global optimum.

1.3. Article structure

The article is organised as follows. The novel successive convexification method is presented in Section 2. Starting with the general formulation of optimal control problems, the original static OP for the numerical solution of the optimal control task is presented in Section 2.1. Afterwards, Section 2.2 derives the successive convexification algorithm and provides a proof of convergence. The specific application of the SSC algorithm to two-dimensional ZCSs and nonlinear equality constraints is illustrated in Section 2.3. Remarks on the computation time of the algorithm are given in Section 2.4. The comparison of the SSC approach with existing methods in Section 2.5 concludes the theoretical part of the article. Using a single mass oscillator (SMO) application as an example, the SSC algorithm is compared with the NLP solver IPOPT (Wächter & Biegler, Citation2006) in Section 3. The required NLP problem and QP problem are defined in Section 3.1 and the corresponding results are discussed in Section 3.2. Furthermore, the sensitivity regarding initial guesses is analysed in Section 3.3 and rotated space splitting is briefly addressed in Section 3.4. The article closes with concluding remarks in Section 4. In order to promote swift comprehension, a table of notation is included at the end of the article.

2. Space splitting convexification

Optimal control deals with computing the optimal input and state trajectories for a given system model. The motion of a nonlinear, continuous, time-invariant system is given by the differential equation system

(1)

(1) with states

and inputs

. An optimal control trajectory

for a system described by (Equation1

(1)

(1) ) and time interval

can be computed by solving a dynamic OP of following form:

(2a)

(2a)

(2b)

(2b)

(2c)

(2c)

(2d)

(2d) The performance index

in (Equation2a

(2a)

(2a) ) to be minimised is comprised of the terminal cost function

and the intermediate cost term ϕ. Equality constraint (Equation2b

(2b)

(2b) ) ensures that the system equations (Equation1

(1)

(1) ) are satisfied. General equality constraints

and inequality constraints

are included via (Equation2c

(2c)

(2c) ) and (Equation2d

(2d)

(2d) ), respectively. Solving the dynamic OP (2) analytically is generally only possible for simple problems. Thus, dynamic OPs are mostly solved numerically via direct or indirect methods (Sedlacek et al., Citation2020c). While direct methods solve the original OP, indirect methods deduce a boundary-value problem which is analytically derived from the optimality conditions. A discretisation of trajectories via collocation or shooting can be used to obtain a static OP. Collocation methods employ decision variables for the inputs and states at discrete time points and deduce the trajectory via polynomial interpolation between these points. Single shooting methods only employ decision variables for the inputs and compute the corresponding state trajectories via numerical integration. Multiple shooting methods divide the time interval into subintervals on which the numerical integration is performed. The states at the interval margins represent additional decision variables and continuity constraints are introduced to ensure that the boundary points of the individual integration segments coincide. A direct collocation method is employed in this article due to the simpler initialisation of direct methods and the increased sparsity of collocation methods.

2.1. Problem formulation

Using separated Hermite-Simpson collocation with a discretisation into segments yields

collocation points and thus the vector of decision variables

(3)

(3) The SSC procedure is applicable to NLP problems of the form

(4a)

(4a)

(4b)

(4b)

(4c)

(4c)

(4d)

(4d)

(4e)

(4e) with indices

and

representing the corresponding collocation segment and collocation point, respectively. Objective (Equation4a

(4a)

(4a) ) is assumed to be quadratic and convex in the decision variables with

,

and

. The positive semi-definiteness of

concludes the convexity of the objective. Collocation constraints (Equation4b

(4b)

(4b) ) ensure that the system equations (Equation1

(1)

(1) ) are satisfied. For Hermite-Simpson collocation, these equality constraints are given by

(5)

(5) with

and segment width

(Betts, Citation2010; Kelly, Citation2017). The SSC approach considers nonlinear right-hand sides of the form

(6)

(6) with

,

and

. Therein

represents a composition of univariate nonlinearities that can be depicted or approximated by piecewise linear curves. For simplicity, it is assumed that the remaining equality constraints (Equation4c

(4c)

(4c) ) are affine in the decision variables. The inequality constraints (Equation4d

(4d)

(4d) ) are required to be convex in the decision variables. However, aiming at constructing a QP problem, it is assumed below that the inequality constraints are even affine. This stricter assumption can be posed without loss of generality, since convex inequality constraints can be approximated via a polyhedron using multiple affine functions (Boyd & Vandenberghe, Citation2004, p. 32). The inequalities (Equation4e

(4e)

(4e) ) depict ZCSs, which are nonconvex sets that can be split into convex subsets.

2.2. Successive convexification procedure

Considering the standard form of convex OPs (Boyd & Vandenberghe, Citation2004, p. 136), all equality constraints must be affine in the decision variables to enable the convexity of the problem. Hence, a nonlinear right-hand side of the system equations (Equation1(1)

(1) ) results in nonlinear equality constraints (Equation5

(5)

(5) ), yielding a nonconvex OP. Furthermore, the nonconvex inequality constraints (Equation4e

(4e)

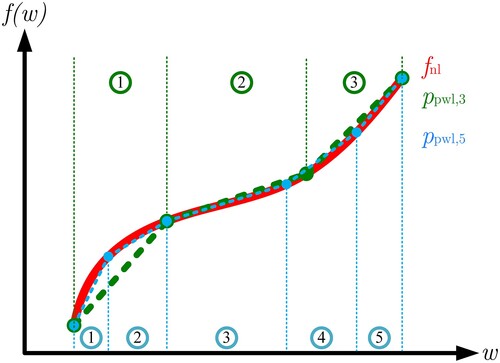

(4e) ) also prohibit a convex problem. The SSC algorithm iteratively solves a convex substitute problem which is derived from the original problem (4) as schematically illustrated in Figure . The core idea of this concept is considering the transformed problem (15) which represents a piecewise linear approximation of the original problem (4). This transformation is achieved by introducing space splitting constraints. These constraints are nonconvex but render the original constraints convex. Furthermore, they possess an advantageous structure which is exploited by the algorithm. In order to deal with the remaining nonconvexity, the nonconvex space splitting constraints are transferred to the objective following the theory of exact penalty functions. The resulting problem (19) possesses a convex feasible set but a nonconvex objective. Thus, the nonconvex part of the objective is iteratively linearised around points that are adjusted based on the previous iterate using an intermediate projection routine. This yields the convex problem (22), which is sequentially solved within the SSC algorithm. A smoothable absolute value function is introduced

(7)

(7) which recovers the true absolute value for

. The intermediate OPs (15)

and (19)

depicted in Figure apply

to provide continuous differentiability for the validity of the optimality conditions legitimising the application of exact penalty functions. Afterwards, this smoothing is removed since it represents an approximation which is not required in problem (22) for continuous differentiability. The subsequent sections describe the problem transformation process in more detail.

2.2.1. Space splitting constraints

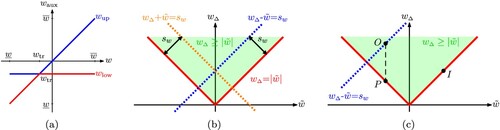

Before presenting the individual OPs, the basic principle for the space splitting of a decision variable is illustrated in this section providing graphical support. If collocation is used, the inputs as well as states represent decision variables. Thus, the input space and the state space can be split. The splitting procedure is presented using as a representative decision variable which is split at the transition point

. As illustrated in Figure (a), the auxiliary decision variables

and

are introduced aiming at satisfying the following relations:

(8)

(8)

Figure 1. Transformation of OP; Dotted and solid arrows represent approximated and exact transformations, respectively.

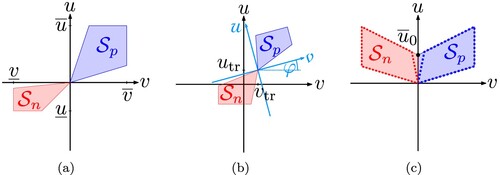

Figure 2. Space splitting. (a) Splitting of decision variable w into and

. (b) Absolute value constraint (Equation9b

(9b)

(9b) ) and its relaxed linearisation according to (Equation13a

(13a)

(13a) ). (c) Projection after optimisation with initially wrong sign; I: initial guess; O: optimum; P: projection of optimum.

Lemma 2.1

The splitting relations (Equation8(8)

(8) ) can be implemented via the splitting constraints

(9a)

(9a)

(9b)

(9b)

Proof.

Solving (Equation9a(9a)

(9a) ) for one of the auxiliary decision variables yields

(10a)

(10a) Inserting (Equation10a

(10a)

(10a) ) into (Equation9b

(9b)

(9b) ) results in

(10b)

(10b) Thus, constraints (9) ensure the desired splitting according to (Equation8

(8)

(8) ).

The AVC (Equation9b(9b)

(9b) ) is relaxed into a convex inequality constraint which can be depicted using two linear inequality constraints

(11a)

(11a) Furthermore, the linearisation of the AVC based on the result of the preceding iteration

is added as an additional objective term in form of an exact penalty function

(11b)

(11b)

(11c)

(11c)

with penalty parameter τ. Considering (Equation11b(11b)

(11b) ), the linearisation breaks down to choosing the sign

of the absolute value based on the previous solution

.Furthermore, the projection of the previous solution onto the constraints in (9) follows from (Equation8

(8)

(8) ) and provides the initial solution, marked as

, for the subsequent iteration:

(12)

(12) The projection step is depicted in Figure (c) for an initial guess with a different sign than the computed solution:

. For a graphical interpretation, the additional cost term (Equation11c

(11c)

(11c) ) can be replaced by an additional relaxed equality constraint with the slack variable

being minimised via a penalty objective:

(13a)

(13a)

(13b)

(13b) Since adding slack variables increases the number of decision variables, this is not desirable from an implementation standpoint; however, it fosters comprehension. The relaxation of constraint (Equation13a

(13a)

(13a) ) via the slack variable

is necessary to avoid infeasibility, which is illustrated in Figure (b): Since

holds, an unrelaxed constraint (Equation13a

(13a)

(13a) ) with

would only allow values satisfying

for

and only values

for

. However, the sign is based on the previous iteration and can thus be wrong. Then, a relaxation represented by the slack variable is necessary to allow values

of the opposite region and avoid infeasibility. The sign is corrected for the subsequent iteration step to enable eradicating the additional objective (Equation13b

(13b)

(13b) ) and thus the slack variable. This concludes that the initial solution does not have to represent a feasible solution, which results in robust initialisation behaviour. The relaxation via the slack variable

in (Equation13a

(13a)

(13a) ) corresponds to the violation of constraint

via (Equation11c

(11c)

(11c) ). The fact that the constraint violation will be large in case of a wrong sign is used as feedback for the algorithm. The algorithm will iterate until a feasible point satisfying

is reached. Then, the additional objective (Equation11c

(11c)

(11c) ) vanishes.

2.2.2. Piecewise linear approximation of optimisation problem

The first step towards a convex OP is the derivation of a piecewise linear approximation of the original problem (4). This is achieved by using the concept of space splitting presented in the previous section. Since multiple decision variables can be split, the formulation of the OP is posed using the augmented vector of decision variables

(14)

(14) with the auxiliary decision variables

. Therein some of the original states and inputs are excluded when they are depicted using affine transformations as illustrated in Section 2.3.1. The corresponding substitute problem is given for all

by

(15a)

(15a)

(15b)

(15b)

(15c)

(15c)

(15d)

(15d)

(15e)

(15e)

(15f)

(15f)

(15g)

(15g) Therein

represents a function which applies the smoothable absolute value function (Equation7

(7)

(7) ) to each vector component individually: No smoothing occurs for

. Furthermore, the right-hand side of the system equations (Equation6

(6)

(6) ) is depicted or approximated by the affine expression

(16)

(16) with

and

representing functions which are affine in the decision variables. Thus, (Equation16

(16)

(16) ) enables affine collocation constraints (Equation15b

(15b)

(15b) ). The transformation function

in problem (15) represents the accumulation of the affine transformations

and

with

yielding

(17)

(17) with

and

inserting the affine mapping (Equation17

(17)

(17) ) into the affine constraints (Equation4c

(4c)

(4c) ) and (Equation4d

(4d)

(4d) ) results in the affine constraints (Equation15c

(15c)

(15c) ) and (Equation15d

(15d)

(15d) ), respectively (Boyd & Vandenberghe, Citation2004, p. 79). Furthermore, the auxiliary variables are used to represent the ZCSs (Equation4e

(4e)

(4e) ) using convex functions

in (Equation15e

(15e)

(15e) ). In order to get a QP problem, these constraints are assumed to be affine or approximated by affine inequality constraints. The application of space splitting for the convexification of the original constraints will be shown in more detail in Section 2.3. The splitting constraints (Equation15f

(15f)

(15f) ) and (Equation15g

(15g)

(15g) ) represent the constraints (Equation9a

(9a)

(9a) ) and (Equation9b

(9b)

(9b) ), respectively. These constraints are necessary to ensure that problem (15) correctly represents the original problem (4). They implement a bisection of selected decision variables at predefined transition values which are stored in the vector

. The matrices

,

and

are selection matrices extracting the desired decision variables. It is assumed that

decision variables are split at each collocation point.

Depending on the individual approximations of the nonlinear constraints, the piecewise linear representation is subject to some error. The approximation error of this substitute problem is defined beforehand by the implemented number of space subdivisions. If the original problem is piecewise linear, this substitute problem can be an exact representation.

Remark 2.1

Let represent a nonlinearity within a constraint depending on the decision variable representative w, as depicted in Figure . Then, the total absolute error of the piecewise linear approximation

is given by the sum of absolute errors at each collocation point of the decision variable:

(18)

(18) An adequate number and position of knot points for the segmentation into piecewise linear parts achieve sufficiently small approximation errors. However, a higher number of splitting segments increases the size of the OP influencing the computation time.

2.2.3. Nonconvex optimisation problem with convex feasible set

The absolute values in equality constraint (Equation15g(15g)

(15g) ) still prohibit convexity. Deploying the theory of exact penalty functions (Di Pillo & Grippo, Citation1989; Han & Mangasarian, Citation1979), the absolute value equality constraints are moved to the objective without compromising optimality:

Theorem 2.1

Let be a stationary point of the OP

(19a)

(19a)

(19b)

(19b)

(19c)

(19c)

(19d)

(19d) with

representing the Lagrangian multipliers of the corresponding equality constraints. Since a

is an exact penalty function,

is a critical point of problem (15) for

and sufficiently large but finite penalty weights

.

Proof.

The prerequisite for the optima recovery is that the optimality conditions are admissible for problem (15) (Nocedal & Wright, Citation2006, p. 507). For this purpose, the linear independence constraint qualification (LICQ) (Nocedal & Wright, Citation2006, p. 320) is analysed subsequently. Since the transformation in (Equation17

(17)

(17) ) is affine, the gradients of the transformed basic constraints (Equation15c

(15c)

(15c) ) and (Equation15d

(15d)

(15d) ) remain linearly independent if the original constraints

and

are linearly independent. This can be verified for

and

via

(20a)

(20a)

(20b)

(20b) The gradients of the splitting constraints (Equation15f

(15f)

(15f) ) and (Equation15g

(15g)

(15g) ) are by definition linearly independent from each other and from the remaining constraints. Furthermore, it can be assumed without loss of generality that the gradients of the collocation constraints (Equation15b

(15b)

(15b) ), of the basic constraints (Equation15c

(15c)

(15c) )–(Equation15d

(15d)

(15d) ) and of the ZCS-constraints (Equation15e

(15e)

(15e) ) are linearly independent. Thus, if the LICQ holds for the original problem (4), then it is also satisfied by the problem (15). By choosing a sufficiently small but positive smoothing parameter

, the prerequisite of continuous differentiable objective and constraint functions is given while approximation errors are kept small. Thus, the optimal solution will satisfy the Karush–Kuhn–Tucker conditions (Nocedal & Wright, Citation2006, p. 321).

Constraints (Equation19d(19d)

(19d) ) are added to enable omitting the norm in the objective as illustrated in (Equation19a

(19a)

(19a) ). Since

is a vector of concave functions, the AVCs (Equation19d

(19d)

(19d) ) represent a convex set. Thus, the feasible set of OP (19) is convex.

2.2.4. Convex optimisation problem for iterative solution

From Theorem 2.1 follows that solving (19) provides the solution for problem (15) assuming . Unfortunately, the augmented objective (Equation19a

(19a)

(19a) ) is nonconvex, which will be addressed via linearisation. Subsequently, the smoothing is removed by setting

since it will not be required for continuous differentiability. Omitting the smoothing represents an approximation to the smoothed version of (19); however, it is beneficial from a practical standpoint since the splitting constraints are exact for

. Furthermore, it enables representing the AVCs (Equation19d

(19d)

(19d) ) by two affine inequalities which yields exclusively affine constraints required for an LP and QP problem. For a convex OP, the absolute value of the AVCs in the augmented objective is linearised around the points

yielding the linearised constraints

(21a)

(21a) which represent (Equation11b

(11b)

(11b) ), with the linearisation points being stored in matrix

(21b)

(21b) Comparing (Equation15g

(15g)

(15g) ) and (Equation21a

(21a)

(21a) ) shows that the linearisation breaks down to a binary decision: choosing the correct sign of the absolute value term

. The linearisation (Equation21a

(21a)

(21a) ) is affine in the decision variables. Since the sum of convex functions preserves convexity (Boyd & Vandenberghe, Citation2004, p. 79), the composite objective function is then convex in the decision variables. With all constraints being affine, using linearisation (Equation21a

(21a)

(21a) ) results in the convex QP problem

(22a)

(22a)

(22b)

(22b)

(22c)

(22c)

(22d)

(22d)

(22e)

(22e) For linear objectives

, the SSC approach results in a LP problem. The convex problem (22) is iteratively solved, whereas the linearisation points in

are updated based on the solution

of the preceding iteration yielding

(23)

(23) However, the question arises if replacing the constraint

in the objective with its linearisation

falsifies the solution. This motivates the following lemma:

Lemma 2.2

The satisfaction of and

concludes

, independently of the linearisation point

.

Proof.

This can be verified using Figure (b) as graphical support. For this purpose, the following equations are given in general notation and are compared with the equations from the illustrative example in Section 2.2.1 using the vector of decision variables . The nonsmoothed absolute value constraint

spans the following set of points:

(24a)

(24a) with

representing the jth row of matrix

. The admissible points for the AVC-linearisation

are given by

(24b)

(24b) representing the infinite extension of the positive and negative branch of the AVC for

and

, respectively. Then, the intersection with the admissible points of the inequality constraint

(24c)

(24c) provides the sets

(24d)

(24d)

(24e)

(24e) which represent the negative and positive branch of the AVC and are thus a subset:

.

This important lemma ensures that convergence of problem (22) to a solution which satisfies the linearised constraints also fulfils the original nonlinear constraints

in (Equation15g

(15g)

(15g) ).

2.2.5. Space splitting convexification algorithm

Subsequently, the SSC algorithm 1 is presented in detail and the proof of its convergence is provided. The algorithm inputs are described in line 1. An initial solution for the inputs and states

must be provided. The algorithm iteratively solves the convex problem (22) using the solution of the previous iterate to correct the signs of the linearisation if necessary. The algorithm terminates when all signs are chosen correctly or the maximum number of iterations

is reached. Thus, if the algorithm converges before reaching the maximum number of iterations

, the largest constraint violation is smaller than a small value

with

. As already mentioned, the violation of the linearised AVCs indicates that a wrong sign was chosen. The reason why the constraint violations are a suitable feedback for correct sign selection can be visualised using Figure (b). The signs and thus the linearised constraints

in the objective (Equation22a

(22a)

(22a) ) are fixed before optimisation. Then, running into the region of

with the opposite sign requires increasing

due to the constraints

, which in turn increases the linearised constraints in the objective. Based on the solution of the preceding iteration, the updating routine in line 7 adjusts the convex QP problem (22) to satisfy (Equation15f

(15f)

(15f) )–(Equation15g

(15g)

(15g) ) using the projection procedure (Equation12

(12)

(12) ): The update routine computes the projected initial solution

and the correct signs

for the linearisation of the AVCs. Afterwards, the optimiser is invoked in line 8 to solve the convex QP problem or LP problem if the main objective is linear. The theory of exact penalty functions states that the penalty parameter τ must be larger than the largest optimal dual variable of the corresponding constraints. Since estimating the limit value for the penalty parameter is a rather complicated task, Algorithm 1 pursues the straightforward approach in line 6 of gradually increasing the penalty parameter up to a high value. A reasonably high but finite upper bound on the penalty parameter

avoids numerical problems. Although this is not the best updating approach, it can work well in practice (Nocedal & Wright, Citation2006, p. 511) as it is the case for the examples considered in this article.

Finally, the following theorem verifies that the algorithm produces a sequence of solutions which eventually converges to a local solution satisfying the AVCs:

Theorem 2.2

Let the feasible set of problem (Equation22(22a)

(22a) ) be denoted with

(25)

(25) which is a convex set. Starting from a point

Algorithm 1 generates a sequence

according to

(26)

(26) with

being a convex function. This sequence eventually converges to a limit point

which satisfies

.

Proof.

Algorithm 1 iteratively solves problem (22) and gradually increases the penalty parameter up to a finite value . Let the condition

mentioned in Theorem 2.1 hold for

. Then, the theory of exact penalty functions states that

is satisfied for all

. As described in Lemma 2.2, this concludes that only solutions on one branch of the AVCs are admissible. Thus, no sign changes of

are possible for

. This concludes that the linearisation points of

and thus OP (22) remain unchanged for

. The convexity of the problem entails that only one optimum exists yielding

(27)

(27) This concludes that for every

, there is a

such that

(28)

(28) since (Equation28

(28)

(28) ) holds at the latest from the value

with

. This proves that (Equation26

(26)

(26) ) is a Cauchy sequence and thus converges (Zorich, Citation2016, p. 85). Hence, the solutions of the individual iterations can oscillate; however, this oscillation vanishes with an increasing number of iterations finally converging to a single point

. Due to Lemma 2.2,

concludes that this point satisfies

.

Theorem 2.2 concludes that the iterative solution of OP (Equation22(22a)

(22a) ) within the SSC algorithm basically solves the OP (Equation15

(15a)

(15a) ) with

: From convergence to

follows that the computed solution satisfies

and the augmented objective term vanishes. The remaining constraints of problems (Equation15

(15a)

(15a) ) and (Equation22

(22a)

(22a) ) are identical. Thus, the SSC algorithm computes a solution that is arbitrarily close, but not necessarily identical, to the solution of the piecewise linearly approximated problem (Equation15

(15a)

(15a) ). The potential discrepancy in solutions is rooted in the necessity to smoothen the problem (Equation15

(15a)

(15a) ) with

for the admissibility of the optimality conditions in Theorem 2.1. Since the linearisation removes the discontinuity, the smoothing is disregarded within the SSC algorithm. From a practical standpoint, the discrepancy is negligible since the smoothing parameter can be assumed arbitrarily small

making the impact of the smoothing numerically irrelevant. This procedure for the approximation of discontinuities in optimal control problems is common practice, see e.g. (Perantoni & Limebeer, Citation2014). In summary, the SSC approach chooses one branch of the discontinuity, resulting in the continuously differentiable problem (Equation22

(22a)

(22a) ) with

, and switches in the following iterations to the other branch if the wrong side of the discontinuity was selected.

2.3. Constraint convexification via space splitting

The previous sections derived the basic structure of the convex OP (Equation22a(22a)

(22a) ), which is solved in an iterative manner. However, the question of how the original constraints can be convexified via space splitting to gain the convex constraints (Equation15b

(15b)

(15b) )–(Equation15e

(15e)

(15e) ) is still open. The basic procedure of bisecting the space of a variable into two subdomains has been illustrated in Section 2.2.1. This can be employed to consider ZCSs and equality constraints with univariate nonlinearities in a convex manner, which is demonstrated in Sections 2.3.1 and 2.3.2, respectively. Subsequently, the index for the collocation discretisation

is omitted for readability.

2.3.1. Convexification of zonally convex sets

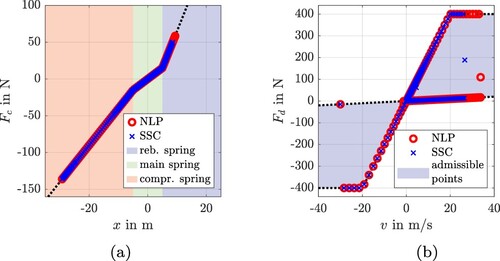

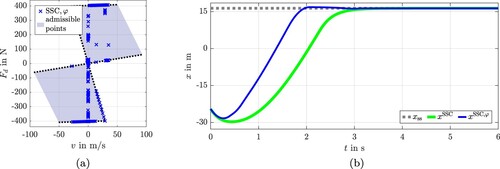

One prominent physical example of ZCSs are constraints ensuring semi-activity. Semi-active actuators like limited slip differentials and semi-active dampers are used in many engineering applications due to their good compromise between low energy consumption and high performance (Savaresi et al., Citation2010; Sedlacek et al., Citation2020a, Citation2020c). A typical ZCS for a semi-active damper is depicted in Figure (a) with damper force and damper velocity v. The nonconvexity of the set is apparent considering that it is easy to draw a line that contains non-admissible points and connects an admissible point in the first quadrant with an admissible point in the third quadrant. Note that simply linearising the inequality constraints depicting this nonconvex set results in a half-plane, which represents a poor approximation of the set. The main idea for the novel convexification approach is appropriately decomposing nonconvex sets, described in the original decision variables, into convex subsets using auxiliary decision variables. The applicability of the SSC method to the ZCS types depicted in Figure is proven in the subsequent paragraphs.

Figure 4. Space splitting convexification of zonally convex sets. (a) ZCS with a single transition point. (b) Shifted, rotated ZCS with a single transition point. (c) ZCS with shared line at transition.

The approach is initially illustrated using the ZCS depicted in Figure (a). Let the convex subset in the first and third quadrant be given by

(29a)

(29a)

(29b)

(29b) respectively. Therein v and u are original decision variables. Moreover,

and

are convex sets. The union of convex sets does not necessarily result in a convex set. As illustrated in Figure (a), the union

is assumed to be nonconvex in this article. Thus,

is a nonconvex set comprised of convex subsets: a ZCS. The velocity v is split at

by a vertical line following Lemma 2.1. The two auxiliary variables

and

are introduced aiming at implementing

(30)

(30)

Introducing analogous constraints to (Equation9a(9a)

(9a) ) and (Equation11a

(11a)

(11a) ) as well as additional objective terms in form of (Equation11c

(11c)

(11c) ) ensures the relations in (Equation30

(30)

(30) ). Furthermore, the auxiliary variables

and

are introduced for the individual subsets. Therein

represents the ordinate value of the common point, which lies on the origin in the current example depicted in Figure (a) resulting in

. However, the common point of the subsets is not required to lie on the abscissa or ordinate. The vector of auxiliary decision variables is given by

. While the original state variable v remains a decision variable in the problem, the input variable is replaced according to the transformation

(31)

(31) which is affine in the decision variables. The convex subsets in terms of the auxiliary decision variables are given by

(32a)

(32a)

(32b)

(32b) Hence, the convex subsets

and

are now defined for separate decision variables and will be linked via the affine mapping (Equation31

(31)

(31) ). This enables the formulation of convex constraints as depicted in problem (Equation15

(15a)

(15a) ). The proof that this procedure produces the same set as

will be given in Theorem 2.3.

For convexity, and

in (Equation32a

(32a)

(32a) ) and (Equation32b

(32b)

(32b) ) must be convex functions. As exemplarily illustrated in Figure (a), the convex subsets are assumed in this article to be representable by

affine inequality constraints

(33a)

(33a)

(33b)

(33b) with

,

,

and

. These constraints represent (Equation15e

(15e)

(15e) ) in the convex OP. Although nonlinear, convex functions for

and

would not prevent convexity, introducing such constraints would prohibit an LP or QP problem, which can be solved especially efficiently. Asymmetric subsets like the one illustrated in Figure (a) can be easily implemented by using varying coefficients in (Equation33a

(33a)

(33a) ) and (Equation33b

(33b)

(33b) ) or by using different domains for

and

.

Theorem 2.3

The subsets (Equation32a(32a)

(32a) ) and (Equation32b

(32b)

(32b) ) in combination with the affine mapping (Equation31

(31)

(31) ) depict the set

which is given by the union of the convex subsets (Equation29a

(29a)

(29a) ) and (Equation29b

(29b)

(29b) ).

Proof.

For proving that the splitting approach provides sets that depict the original one, the individual cases for the bisected variable space are considered subsequently.

Case 1:

The decision variable v is split at . Thus,

holds according to Lemma 2.1. This concludes

since the subsets converge to the common point

when leaving the subset towards the other subset. Lemma 2.1 also concludes

. Then, the affine mapping (Equation31

(31)

(31) ) is given by

. For

,

holds. Since

,

and

provide the same feasible set. The chain of conclusions for the remaining cases follows the same reasoning but is given below in equations for conciseness.

Case 2:

(34a)

(34a) Case 3:

(34b)

(34b)

Thus, inserting the affine mapping (Equation31(31)

(31) ) into the constraints while replacing the original set

with the sets (Equation32a

(32a)

(32a) ) and (Equation32b

(32b)

(32b) ) yields the same feasible set.

The presented proof requires a space splitting of the variable v which is represented by splitting the convex subsets via a vertical line. Thus, the SSC approach is capable of convexifying two-dimensional ZCSs, which can be divided into two convex subsets connected in a single point using any vertical line. Following theorem is added to show that this also holds for any splitting line which is straight but possibly rotated:

Theorem 2.4

Space splitting applied to a rotated, two-dimensional ZCS also results in convex constraints.

Proof.

This can be verified by applying the previously described procedure to a rotated coordinate frame, exemplarily depicted in Figure (b). The auxiliary decision variables need to split the rotated two-dimensional space spanned by the variables and

. Assuming the coordinate system is rotated by the angle φ with counter-clockwise rotations depicting positive rotations, following relations hold between the original coordinates v−u and the rotated coordinates

:

(35)

(35) The vector of auxiliary decision variables is chosen as

. For a concise notation, the equations below use the abbreviations

and

. Constraints (Equation33

(33a)

(33a) ) are analogously formulated on the rotated, auxiliary decision variables:

and

. However, an adjustment using (Equation35

(35)

(35) ) is necessary for the constraints (Equation9a

(9a)

(9a) ), (Equation11a

(11a)

(11a) ) and (Equation11b

(11b)

(11b) ) as well as the input mapping (Equation31

(31)

(31) ):

(36a)

(36a)

(36b)

(36b)

(36c)

(36c)

(36d)

(36d) Since the angle φ is constant, the trigonometric functions represent constant values. Thus, a rotated space splitting procedure also yields affine constraints and mappings enabling the formulation of a convex OP.

The previous theorems required the subsets to be connected in a single point. Following theorem states that the SSC approach can be used to approximate ZCSs which share a line of points:

Theorem 2.5

When a two-dimensional ZCSs is comprised of two convex subsets sharing a line of points, the SSC approach can only approximate the original set however with sufficiently small approximation error.

Proof.

Without loss of generality, this can be analysed considering Figure (c) as example. For the depicted case, the transition line lies on the ordinate . Analysing the individual cases shows that using the original subsets within the SSC approach would yield a larger set than the original set:

Case 1:

(37a)

(37a)

Case 2:

(37b)

(37b)

Case 3:

(37c)

(37c)

Thus, using the original subsets can render non-admissible points of the original problem admissible. When the input variable is replaced by the auxiliary inputs according to (Equation31(31)

(31) ), both sets must be adjusted at the transition line

. The simplest set adjustment is enforcing

at the transition axis, as illustrated in Figure by the dotted lines. Then, the subsets are connected in a single point enabling the application of Theorem 2.4. However, other set manipulations are possible and can be numerically better. Generally, such set approximations introduce conservativeness for the sake of feasible trajectories. However, the introduced error is negligible when the removed area is sufficiently small which can be implemented by choosing steep linear constraints at the transition between the subsets.

Remark 2.2

In this article, only two-dimensional ZCSs which can be split into two convex subsets have been considered. However, the SSC approach can be applied to certain two-dimensional ZCSs with more than two subsets. For instance, consider three convex subsets positioned horizontally to each other, whereas each subset is connected to the next subset in a single point on the abscissa. This can be verified using the procedures described previously in this section.

This section illustrated the convexification procedure for ZCSs in regards to inputs. This results in the depicted affine input transformation . Implementing the approach for a ZCS with state decision variables yields an affine transformation

. This procedure can be applied to multiple inputs and states. The corresponding vector functions for the affine transformations are given by

(38)

(38) with

and

representing the transformation for the individual input and state, respectively. If no transformation is necessary, a direct mapping is implemented according to

and

. The vectors of transformations (Equation38

(38)

(38) ) are used to generate

in (Equation17

(17)

(17) ) which is used for the convex depiction of the original constraints in (Equation15b

(15b)

(15b) )–(Equation15d

(15d)

(15d) ).

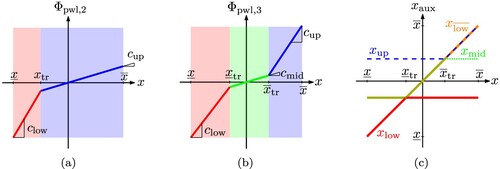

2.3.2. Convexification of nonlinear equality constraints

Some nonlinearities in physical systems are characterised by a univariate function. Examples are nonlinear springs, saturation, dead-zones or the tire force shape curve of vehicles. Since nonlinear system equations yield nonlinear thus nonconvex equality constraints, this section presents how such nonlinear univariate terms can be convexified. The nonlinear univariate curve is depicted using piecewise affine parts. Hence, if the nonlinearity is not a piecewise linear function, SSC introduces approximation errors. The subsequent paragraphs illustrate the application of the SSC algorithm for convexifying piecewise linear nonlinearities.

Theorem 2.6

The splitting constraints in (Equation9(9a)

(9a) ) can be used to convexify equality constraints by transforming a univariate nonlinearity with two piecewise linear segments into an expression which is affine in multiple decision variables.

Proof.

Proof is provided using the piecewise linear curve depicted in Figure (a) as example, which represents a typical function for piecewise linear springs. Assuming the position x is a state of the system, it represents a decision variable which will be split at the transition point . Thus, the auxiliary position variables

and

are introduced. By including analogous constraints to (Equation9a

(9a)

(9a) ) and (Equation11a

(11a)

(11a) ) as well as additional objective terms in form of (Equation11c

(11c)

(11c) ), following relations are ensured using Lemma 2.1:

(39)

(39) Hence, the piecewise linear function can be represented by the expression

(40)

(40) which is affine in the decision variables

. The relations in (Equation39

(39)

(39) ) yield following two segments for the piecewise linear function (Equation40

(40)

(40) ):

(41a)

(41a)

(41b)

(41b) Thus, (Equation41a

(41a)

(41a) ) and (Equation41b

(41b)

(41b) ) depict the upper and lower segment of the nonlinearity, respectively.

Since (Equation40(40)

(40) ) is an affine function in the decision variables, it can be inserted into the differential equations without preventing convexity. The subsequent theorem proves that this approach can be extended to univariate nonlinearities with more than two piecewise linear segments:

Figure 5. Space splitting convexification of piecewise linear equalities. (a) Nonlinearity with two linear segments. (b) Nonlinearity with three linear segments. (c) Splitting of state variable x for three segments.

Theorem 2.7

A repeated application of the space bisection approach using the splitting constraints in (Equation9(9a)

(9a) ) can be used to convexify equality constraints by transforming a univariate nonlinearity with more than two piecewise linear segments into an expression which is affine in multiple decision variables.

Proof.

The general procedure for this extension is illustrated using the piecewise linear curve depicted in Figure (b). The position x is split at the transition points and

. Thus, the auxiliary position variables

,

,

and

are employed. The space is repeatedly bisected following Lemma 2.1 to gain

(42a)

(42a)

(42b)

(42b) which is depicted in Figure . Thus, the position space is bisected into a region below and a region above

whereas the upper region is again bisected into a region below and above

. The piecewise linear function can then be represented by the expression

(43)

(43) which is affine in the decision variables

. The relations in (Equation42

(42a)

(42a) ) yield following three segments for the piecewise linear function (Equation43

(43)

(43) ):

(44a)

(44a)

(44b)

(44b)

(44c)

(44c) Hence, (Equation44a

(44a)

(44a) ), (Equation44b

(44b)

(44b) ) and (Equation44c

(44c)

(44c) ) depict the upper, mid and lower segment of the nonlinearity, respectively.

This repeated bisection procedure enables the generation of a characteristic curve with an arbitrary number of segments. However, an increasing number of segments also increases the number of auxiliary decision variables and additional constraints making the OP larger.

The approach presented in this section yields a scalar function which is affine in the decision variables. The procedure can be applied to multiple univariate, nonlinear terms yielding further scalar mapping functions. The affine vector-valued function in (Equation16

(16)

(16) ) is given by

(45)

(45) with

representing the individual affine mapping functions. For states that are not affected by the space splitting approach,

holds. The transformation (Equation45

(45)

(45) ) is used to generate the convex collocation constraints (Equation15b

(15b)

(15b) ).

2.4. Remarks on runtime

The application of optimisation methods within a real-time capable controller generally requires upper bounds on the necessary computation time. The SSC approach runs through a loop which is comprised of a projection step and an optimisation step resulting in the following theorem regarding computational complexity:

Theorem 2.8

The computational complexity of the SSC algorithm is polynomial.

Proof.

SSC solves a sequence of LP problems or convex QP problems, which are known to be solvable in polynomial time (Karmarkar, Citation1984; Kozlov et al., Citation1980). The projection step (Equation12(12)

(12) ) of the SSC algorithm uses minimum and maximum functions which possess linear time complexity. This concludes a polynomial time complexity of the SSC algorithm:

(46)

(46) Therein n can be interpreted as the number of operations required by the algorithm. The parameters in (Equation46

(46)

(46) ) depend on the utilised solver as well as the number of decision variables which is problem specific. Limiting the maximum number of superordinate iterations to

enables terminating the algorithm prematurely at suboptimal solutions, if necessary.

Theorem 2.8 provides valuable insight for the application in a real-time capable controller. Firstly, a worst-case bound on computation time can be experimentally identified for the specific OP and solver. Secondly, the computation time scales well with increasing problem size. Since the number of superordinate SSC iterations is rather low in practice, this results in a fast solution of optimal control problems. Finally, the influences on computation time are summarised below:

System and discretisation. The number of decision variables increases with rising number of inputs, states and collocation segments for both the original and the convexified OP. This increases computation time for NLP methods and the SSC algorithm. Due to the polynomial complexity, this increase in time will be generally less for SSC than for NLP.

Nonlinearity. The more complicated the nonlinearity, the more splitting segments are required to reduce approximation errors. However, the space splitting increases the problem size compared to the original problem, which influences the variable n in (Equation46

(46)

(46) ). This can put a practical bound on the number of space divisions via the SSC approach. Nevertheless, many physically motivated nonlinearities can be approximated sufficiently well using a moderate number of affine segments.

Initial guess. Being a local method, the solution computed by the SSC approach depends on the provided initial solution. Furthermore, this initial guess can influence the order of sign-corrections regarding the AVC-linearisations, which can impact the number of superordinate iterations and, therefore, the convergence rate. For MPC frameworks, the optimal solution of the preceding time step often represents a good initial guess (Gros et al., Citation2020) promising low computation times.

Penalty parameter update. Too small or too large penalty parameters can slow down convergence (Nocedal & Wright, Citation2006, p. 511). The straightforward approach of gradually increasing the penalty parameter is used in this article. However, more sophisticated routines have been proposed in Mayne and Maratos (Citation1979) and Maratos (Citation1978).

2.5. Comparison with existing methods

In order to highlight the novelty of the proposed algorithm, this section compares the SSC approach with the most related existing methods.

From an algorithmic standpoint, linearising only the nonconvex part of constraints combined with a relaxation and an increasing objective term penalising constraint violations corresponds to penalty CCP (Lipp & Boyd, Citation2016). CCP has the advantage over SQP and other linearisation techniques that it retains more problem information in each iterate: The information of the convex parts is kept and only the concave portions are linearised. Furthermore, CCP does not require to use line-search procedures or limit the progress in each iteration via trust-region methods. Inspired by Mangasarian (Citation2015), the SSC approach avoids additional slack variables by considering the linearised constraints directly in the penalty objective. This avoids further increasing the size of the OP as in penalty CCP. Moreover, SSC employs an intermediate projection to enhance convergence. While CCP linearises the concave part of all nonconvexities, SSC transforms the problem beforehand requiring only the linearisation of AVCs. This enables providing a feedback about the correctness of the selected linearisation points, which are subsequently adjusted. Thus, the linearisation error can vanish completely after the correct signs have been chosen. The binary nature of the linearisation facilitates a rapid convergence in a small number of superordinate iterations. Although the SSC method only computes solutions to the piecewise linear approximation of the original problem, the approximation can be designed to sufficient accuracy enabling small and known approximation errors. Especially the straightforward linearisation of ZCS-constraints yields very poor approximations, which can contain a large set of infeasible points. Thus, the vanishing AVC-linearisation errors result in an advantage of SSC regarding accuracy, also over further linearisation techniques like the ones presented in Mao et al. ( Citation2016, Citation2017). Generally, a scalable trade-off between accuracy and size of the OP, thus computation time, can be chosen. Opposed to Lipp and Boyd (Citation2016), this article provides a convergence proof, even if only converging to the solution of the approximated problem.

While LC possesses the advantage of avoiding approximation errors, it is only applicable to OPs with annulus-like, nonconvex sets resulting from excluding a convex subset from a convex set (Raković & Levine, Citation2018, p. 340). Opposed to that, SSC provides a method for the convexification of ZCS which cannot be considered via LC.

Similar to the LnP approach presented in Mao et al. (Citation2017), the SSC method is an iterative linearisation algorithm using intermediate projection steps. The updating routine for SSC is of linear computational complexity with few and simple mathematical operations. The projection step of the LnP approach generally requires the solution of a convex programming problem, which is of polynomial complexity at best. Thus, the projection step used by the SSC approach is computationally less costly, which is beneficial for splitting methods (Ferreau et al., Citation2017). Additionally, the binary nature of the AVC-linearisation provides the advantage that generally only few superordinate iterations are required, resulting in short computation times. Moreover, SSC is more robust regarding the initialisation since the LnP approach requires an additional lifting procedure to cope with arbitrary initialisations, which costs computation time (Mao et al., Citation2017). Furthermore, the LnP method requires the right-hand side of the system equations to be convex and the inequality constraints to be concave. However, nonconvex collision avoidance constraints can be easily considered via the LnP approach, whereas the SSC procedure requires further measures.

The ADMM is a splitting procedure utilising the augmented Lagrangian method instead of the exact penalty function method, which is employed by the SSC algorithm. Due to the alternating approach, the ADMM requires the solution of two QCQP problems in each iteration. The SSC approach only requires the solution of a single convex QP problem in each iteration, which is beneficial for computation time.

The spatial BnB method also uses space decomposition for the convexification of the optimisation problem. However, the SSC approach solves a piecewise linear approximation of the original problem, which is iteratively adjusted, on the full space domain of the decision variables. Opposed to that, the BnB method solves multiple OPs which represent convexly relaxed versions of the original problem on separate subdomains of the decision variables. BnB techniques determine upper bounds by evaluating the objective function at a feasible point. Since the problem is generally nonconvex, finding a feasible point can be difficult. This is often tackled by solving the nonconvex subproblem locally, which is computationally expensive (Liberti & Maculan, Citation2006, p. 253). Thus, the convergence rate of the SSC approach is most likely to be superior if sufficiently good initial guesses are provided. However, this depends on the nonlinearities of the OP, which influence the number of decision variables for SSC but also for BnB approaches which decompose factorable functions (Tawarmalani & Sahinidis, Citation2002, p. 125). Moreover, SSC only guarantees to find local optima of an approximation of the OP and is only applicable to certain classes of OPs. GO techniques cover a wide range of applicability and provide the global optimum.

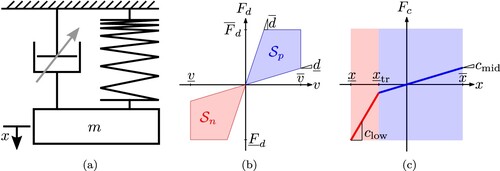

3. Applications

In this section, the proposed SSC algorithm is evaluated using a hanging SMO example with piecewise linear spring and semi-active damping as illustrated in Figure . Semi-active dampers can only generate a force in the opposite moving direction and cannot impose a force at steady-state complying with the passivity constraint (Savaresi et al., Citation2010, p. 16). Hence, the admissible set for semi-active dampers always contains a subset in the first quadrant and a subset in the third quadrant which are connected in a single point, namely, the origin. A performance-oriented OP is formulated and solved using NLP and SSC, respectively. The results of both algorithms are compared regarding accuracy and computation time. Both problems are posed using the modelling language JuMP (Dunning et al., Citation2017) for mathematical OPs. For a fair comparison, the necessary derivatives are computed beforehand via automatic differentiation. Furthermore, the tolerances for constraint feasibility and objective improvement are set to for both solvers. The utilised parameters are listed with description in Table .

Figure 6. Model characteristics for hanging SMO with semi-active damper and nonlinear spring. (a) Hanging SMO model. (b) ZCS for semi-active damper force. (c) Piecewise linear spring force characteristic.

Table 1. Parameters for SMO application.

The NLP problem is solved using the optimiser IPOPT(Wächter & Biegler, Citation2006), which employs an interior-point method: Using the concept of barrier functions, a sequence of relaxed problems is solved for decreasing values of barrier parameters. The barrier parameter relaxes the inequality constraints and hence represents the distance from the current iterate to the border of the admissible set. Thus, interior-point methods use a central path within the admissible set as opposed to SQP-methods which wander along the border. The resulting nonlinear equation system is solved via a damped Newton method. Furthermore, a filter line-search method, heuristics and further correction measures are employed to enhance convergence and robustness.

The novel SSC method transforms the OP into convex QP subproblems which are solved iteratively. In order to capitalise on this problem structure, the elaborate optimiser Gurobi (Gurobi Optimization, Citation2020) is selected.

3.1. Optimisation problems

The equations of motion for the state vector result in

(47)

(47) with x, v,

and

denoting the deflection position, deflection speed, damper force and spring force, respectively. The expression of these forces differs for the NLP method and the SSC approach. Starting from a specified initial state

with damper force

, the goal is to minimise the deviation from the steady-state position

while considering the limitations of the system. Applying Simpson quadrature (Betts, Citation2010; Kelly, Citation2017), the main objective penalises the steady-state position deviation

according to

(48)

(48) Considering the values listed in Table , the steady-state position can be computed using a root-finding approach like Newton's method: At steady-state position, the gravitational force must equal the spring force. For the considered nonlinear springs with two piecewise linear segments the value

is chosen and

if the spring with three segments is used. An uniform discretisation of the time grid

is employed yielding a constant segment width

. Both, the NLP approach and the SSC method, are initialised with zero vectors

and

. From

(49)

(49) follows that the Hessian of (Equation48

(48)

(48) ) is a diagonal matrix with only positive and zero diagonal entries and therefore positive semi-definite. Hence, the main objective (Equation48

(48)

(48) ) is a convex function.

3.1.1. Nonlinear nonconvex optimisation problem

For the solution of the OP via NLP, the decision variables are chosen as

(50a)

(50a)

(50b)

(50b) whereas the input represents the variable damping coefficient dk of the semi-active actuator. With

and

, the original OP is given by

(51a)

(51a)

(51b)

(51b)

(51c)

(51c)

(51d)

(51d)

(51e)

(51e) The nonconvex NLP problem (51) is comprised of following parts: objective function (Equation51a

(51a)

(51a) ), collocation constraints (51b), bounds for the decision variables (Equation51c

(51c)

(51c) ), damper force bounds (Equation51d

(51d)

(51d) ) and initial value condition (Equation51e

(51e)

(51e) ). The piecewise linear spring force is approximated in (51b) via the smoothed minimum-function

(52)

(52) using

to guarantee that the problem is twice continuously differentiable (Sedlacek et al., Citation2020b). Problem (51) is nonconvex due to the right-hand side of the differential equation system in the collocation constraints (51b) and due to the damper force bounds (Equation51d

(51d)

(51d) ).

3.1.2. Convex QP-problem for iterative solution

Following the SSC approach, auxiliary optimisation variables are introduced, which results in the subsequent decision variables:

(53a)

(53a)

(53b)

(53b)

(53c)

(53c)

(53d)

(53d) Opposed to the inputs (Equation50a

(50a)

(50a) ) of the NLP problem (Equation51a

(51a)

(51a) ), the inputs in (Equation53a

(53a)

(53a) ) represent the positive and negative part of the damper force.

As illustrated in Algorithm 1, a projection routine is executed in each iteration. Based on the preceding solution marked by values , this routine computes the projected initial solution

for

via

(54a)

(54a)

(54b)

(54b) and the correct signs according to

(54c)

(54c) For a concise notation in the OP, the decision variables are lumped together in

. With

and

, the convex QP problem, which is iteratively solved for updated values of τ,

,

and corresponding initial guesses, is given by

(55a)

(55a)

(55b)

(55b)

(55c)

(55c)

(55d)

(55d)

(55e)

(55e)

(55f)

(55f)

(55g)

(55g)

(55h)

(55h) The QP problem (Equation55

(55a)

(55a) ) is comprised of following parts: augmented objective function (Equation55a

(55a)

(55a) ), collocation constraints (Equation55c

(55c)

(55c) ), bounds for the decision variables (Equation55d

(55d)

(55d) ), ZCS-constraints (Equation55e

(55e)

(55e) ), the additional space splitting constraints (Equation55f

(55f)

(55f) )–(Equation55g

(55g)

(55g) ) and initial value condition (Equation55h

(55h)

(55h) ). Due to the splitting approach, the right-hand side of the differential equation system in (Equation55c

(55c)

(55c) ) is an affine function in the decision variables. Thus, all constraints are affine in the decision variables and therefore convex. The additional objectives with (Equation55b

(55b)

(55b) ) are affine in the decision variables representing convex functions. Hence, (Equation55

(55a)

(55a) ) represents a convex QP problem. Using (Equation55b

(55b)

(55b) ), the termination criterium in line 9 of Algorithm 1 is computed via

(56)

(56) As illustrated in line 6 of Algorithm 1, the penalty weight τ is linearly increased with a maximum iteration number

using the lower and upper bound

and

, respectively. Both OPs are now fully defined. The results generated by solving NLP problem (Equation51

(51a)

(51a) ) and by applying the SSC algorithm with QP problem (Equation55

(55a)

(55a) ) are compared in the following section.

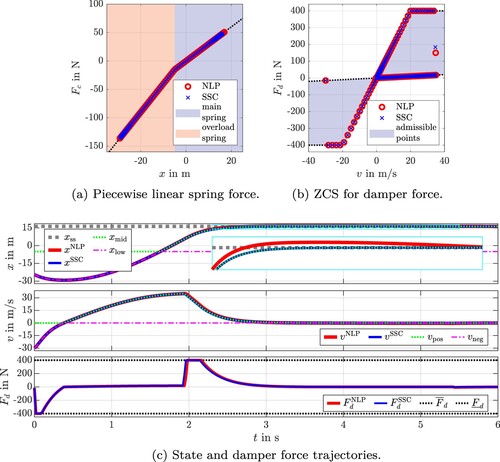

3.2. Results

In order to inspect the performance of the proposed SSC algorithm, 100 OPs are analysed in this section using a desktop computer with Intel CoreTM i7-9850H CPU to determine the solutions. The OPs vary in size and the initial value condition. The number of collocation segments is gradually increased with . For each problem size, the OPs (Equation51

(51a)

(51a) ) and (Equation55

(55a)

(55a) ) are solved using the 10 random, admissible initial values