?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

This paper derives the limit of the Kalman filter and smoother as the inverse of the process noise covariance tends to zero (the zero informational limit) in the case that there is direct feedthrough (of full column rank) of the process noise input to the measurements. Two forms of the filter in the limit are derived with the second being a standard Kalman filter without unknown inputs. The latter form is used to derive necessary and sufficient conditions for convergence and stability of the filter. These consist of a controllability condition and a minimum phase condition. The filter and smoother are applied to an automotive example to estimate an unknown road profile. The example illustrates the usefulness of the stability and convergence conditions to inform the choice of a suitable set of sensors.

1. Introduction

This paper is motivated by the need to estimate the inputs as well as the states in a dynamical system where no direct measurement of the exogenous inputs is convenient or feasible. This arises in a number of application domains, e.g. geophysical and environmental engineering, vehicle control and electrical networks. We wish to consider the case where there is direct feedthrough of the exogenous inputs to the measured outputs. This situation is typical in automotive engineering where important exogenous inputs (e.g. tyre forces, slips and road profile inputs) appear in the direct feedthrough term for physical sensors such as accelerometers. Our approach to this problem is to derive the limit of the Kalman filter and smoother as where Q is the process noise covariance (the zero informational limit). In particular, we show that these limits exist and are unique, and we will refer to the resulting recursions as the limit Kalman filter and the limit Kalman smoother respectively. In this paper, we do not address the question of whether the limit filter and smoother can be obtained directly in a stochastic estimation problem with completely unknown inputs. Nevertheless, we will study the convergence and stability of the limit Kalman filter and smoother using standard results from the literature. To facilitate this we will show that the limit filter recursions can be transformed into a standard form of a Kalman filter. For simplicity, the paper will confine attention to the case that the feedthrough matrix from the process noise to the measured output has full column rank. We illustrate the motivation and application of the limit filter and smoother by applying it to estimate a road profile from a vehicle's onboard measurements. Our results suggest that effective estimation is possible for this problem without the use of more sophisticated measurement techniques, e.g. the use of profilometers or surveying apparatus.

Previous work on filters and observers that estimate states as well as unknown inputs has involved a wide variety of problem formulations and assumptions: deterministic or stochastic, discrete or continuous time, whether there is feedthrough of inputs to outputs, and whether real-time estimates of the inputs are sought. Early work on the observability of systems with unknown inputs focused on continuous and deterministic systems without feedthrough of the inputs to the measurements, e.g. Basile and Marro (Citation1969). Procedures for the construction of full, reduced and minimal order observers were developed assuming that the first Markov parameter (CB in the notation of (Equation1(1)

(1) ), (Equation2

(2)

(2) ) for continuous-time systems) is full column rank, the latter being closely related to the use of the first derivative of the output for the estimation of the unknown inputs (see Darouach et al., Citation1994; Hou & Muller, Citation1992; Kudva et al., Citation1980; Wang et al., Citation1975). The approach was extended to the case where there is a non-zero feedthrough matrix in Hou and Muller (Citation1994) again assuming use of the first derivative of the measurement output. The rank condition assumed in Wang et al. (Citation1975), Kudva et al. (Citation1980), Hou and Muller (Citation1992) and Darouach et al. (Citation1994) is relaxed in Hou and Patton (Citation1998a) at the expense of using higher order derivatives of the measured output for input reconstruction.

An early stochastic treatment of unknown input and state estimation for discrete-time systems is Glover (Citation1969). An approach is outlined, and recursive filter equations are provided, for an input with no assumed prior, or by taking the limit in the Kalman filter as where Q is the input covariance (the zero informational limit), assuming no direct feedthrough of the input to the measurement, and assuming left invertibility of CB. Subsequent work explored different formulations of optimality, e.g. Kitanidis (Citation1987) who posed the problem as a constrained optimisation with a free gain matrix parameter. The work continued with Darouach et al. (Citation1995) that reformulated the problem as a state estimation of a singular stochastic system which is solved by employing a generalised least squares approach. Later, Darouach and Zasadzinski (Citation1997) show the optimality of the filter in Kitanidis (Citation1987) among the set of recursive filters and produce stability results, while Kerwin and Prince (Citation2000) verify optimality among the set of all linear filters. In Gillijns and De Moor (Citation2007a) the scope of the filter in Kitanidis (Citation1987) is expanded by simultaneously estimating both the state and unknown input. Bitmead et al. (Citation2019) return to the zero informational limit formulation of Glover (Citation1969) to provide a full derivation and to show that the resulting filter recursions coincide with those given in Kitanidis (Citation1987) and Gillijns and De Moor (Citation2007a).

In Hou and Patton (Citation1998b) the case of a rank-deficient feedthrough matrix is considered. The unknown input decoupling technique developed in Hou and Muller (Citation1994) to transform the original system is used to construct an optimal filter. In Hsieh and Chen (Citation1999) and Hsieh (Citation2000), the input is treated as a stochastic process with a wide-sense representation. The work of Li (Citation2013) considers the system in Kitanidis (Citation1987) with some additional partial and noise-free measurements of the inputs and the ensuing work by Su et al. (Citation2015) derives results on existence, optimality and asymptotic stability for the same system. In Keller and Darouach (Citation1999) a system is considered that includes constant biases in addition to unknown inputs. A solution is proposed based on a system augmentation and transformation.

In Gillijns and De Moor (Citation2007b), a discrete-time stochastic system with a full column rank input feedthrough matrix is considered. The input is estimated based on a generalised least squares approach and state estimation is posed as a constrained minimisation problem using Lagrange multipliers. The approach is reminiscent of Kitanidis (Citation1987) and others but applied to a different problem. The paper does not present any asymptotic stability results. The work of Cheng et al. (Citation2009), Yong et al. (Citation2015) and Yong et al. (Citation2016) is inspired by both Gillijns and De Moor (Citation2007b) and Darouach and Zasadzinski (Citation1997) and derives more general filters which do not require the input feedthrough matrix to be full column rank through the use of successive singular value decompositions.

Our problem formulation in this paper is similar to that of Glover (Citation1969) and Bitmead et al. (Citation2019) in that we derive a zero informational limit, though in contrast, we consider the case that there is direct feedthrough of the unknown inputs to the measurement vector through a matrix that is full column rank. We derive both the filtering and smoothing algorithms and express them in forms that allow the straightforward application of standard stability and convergence results. The filtering recursion takes an interesting form which closely relates to the filter of Gillijns and De Moor (Citation2007b); more precisely, the filters coincide if an additional process noise covariance is set to zero. It is a topic for a future paper to ask if there is a direct way to pose the filtering/smoothing problem with unknown input, either stochastically or deterministically. We mention that Gakis and Smith (Citation2022) provide a continuous time deterministic least squares approach to this problem. Important background on deterministic approaches to the standard Kalman filter/smoother is given in Markovsky and De Moor (Citation2005), Willems (Citation2004) and Buchstaller et al. (Citation2021).

The main contributions of the present paper are as follows:

To derive directly a (first) form of the Kalman filter in the zero informational limit on the process noise when the input feedthrough matrix is full column rank; to note that this limit filter is closely related to the recursive filter of Gillijns and De Moor (Citation2007b) and thereby to provide an alternative interpretation for that filter (Theorem 3.1).

To show that the limit filter equations can be transformed to an alternative (second) form which is a standard Kalman filter for a new system (Theorem 3.2).

To derive the recursive equations for the Kalman smoother in the zero informational limit on the process noise (Theorem 4.2).

To show that the second form of the limit filter allows necessary and sufficient conditions for the stability and convergence of the filter to be stated, which may be expressed as a controllability condition and a minimum phase condition in terms of the invariant zeros of the original system (Theorem 5.5).

To demonstrate the efficacy of the filter and smoother in an automotive example – the estimation of an unknown road profile – by simulation; and further to show how the necessary and sufficient conditions for convergence and stability of the filter can be used to inform the choice of sensors to carry out this task (Section 6).

2. Kalman filter with feedthrough

We consider the linear, finite-dimensional, stochastic, discrete-time system with the state-space description:

(1)

(1)

(2)

(2) where the subscript

(nonnegative natural numbers) is a discrete-time index,

is the system state,

is the vector of measurements,

is the process noise or input and

is the measurement noise, the system matrices

are assumed to be known. We assume that

and

are independent, zero mean, Gaussian white noise processes with covariances Q>0 and R>0, i.e.

,

and

for all

where

is the Kronecker delta and the initial state

is a Gaussian random variable with mean

and covariance

which is independent of all

and

. We denote the set of measurements

by

and introduce the notation for the conditional expectations:

,

,

,

,

.

The Kalman filter determines the conditional means ,

and

(which are also minimum variance estimates) in recursive fashion. The filter recursive equations for the system (Equation1

(1)

(1) )–(Equation2

(2)

(2) ) can be written in terms of an update step:

(3)

(3)

(4)

(4)

(5)

(5)

(6)

(6)

(7)

(7)

(8)

(8)

(9)

(9)

(10)

(10) and a propagation (prediction) step:

(11)

(11)

(12)

(12) See Deshpande (Citation2017) for a detailed derivation.

3. Zero informational limit of the Kalman filter

In this section, we derive the zero informational limit of the Kalman filter with feedthrough (Equation3(3)

(3) )–(Equation12

(12)

(12) ), namely the limit as the information matrix

, under the assumption that D has full column rank, i.e. the exogenous inputs instantaneously and independently influence the measurements. We take

,

and

as given and consider the limits of

,

,

,

,

,

and

as the information matrix

. We show that the limits exist for all k and in particular that the limit is independent of the manner in which

tends to zero. We introduce the notation:

and similarly for

,

, etc. We name the resulting recursive equations the limit filter. We first derive a form of the limit filter written directly in terms of the system matrices A, B, C, D.

Theorem 3.1

Let D have full column rank. Then ,

,

, etc. for all k have well-defined limits

,

,

, etc. as

given recursively by an update step:

(13)

(13)

(14)

(14)

(15)

(15)

(16)

(16)

(17)

(17)

(18)

(18)

(19)

(19)

(20)

(20) and a propagation (prediction) step:

(21)

(21)

(22)

(22) with

and

.

Proof.

See Appendix 1.

It is interesting to note the form of the recursions given in Theorem 3.1. As in the Kalman filter with direct feedthrough there is an estimate of the input as a function of the innovation given by (Equation14

(14)

(14) ) (cf. (Equation4

(4)

(4) )). If (Equation14

(14)

(14) ) is substituted into (Equation13

(13)

(13) ) we obtain the state update in the form:

which is reminiscent of the update in the standard Kalman filter, namely it is the prior state estimate plus a gain matrix times the innovation. Also, the updated state covariance is obtained by subtracting a symmetric matrix (which can be shown to be positive semi-definite) from the predicted covariance (see (Equation15

(15)

(15) )) as in the standard Kalman filter, though the expression is more involved. It turns out that with some further manipulations which are not entirely straightforward the filter equations can be reduced to the standard Kalman filter recursions for a new system with matrices

,

,

(as defined in (Equation30

(30)

(30) )–(Equation32

(32)

(32) )).This form, which is presented next, will be interesting in itself and also very convenient for the analysis of the convergence and stability of the filter.

Theorem 3.2

The filter recursions of Theorem 3.1 are equivalent to the state update:

(23)

(23)

(24)

(24) input update:

(25)

(25)

(26)

(26)

(27)

(27) and state propagation (prediction):

(28)

(28)

(29)

(29) where:

(30)

(30)

(31)

(31)

(32)

(32)

(33)

(33)

(34)

(34)

(35)

(35)

Proof.

See Appendix 2.

It may be noted that the system with matrices ,

,

,

arises naturally when inverting the system (Equation1

(1)

(1) )–(Equation2

(2)

(2) ) on pre-multiplying (Equation2

(2)

(2) ) by the left inverse

of D (which is different to the left inverse

in (Equation19

(19)

(19) )) and substituting for

in (Equation1

(1)

(1) ). This system features in the propagation steps (Equation28

(28)

(28) )–(Equation29

(29)

(29) ) and in the update (Equation25

(25)

(25) ). The matrix Π is a parallel projection onto the range space of D along the null space of

, and

is a left annihilator of D (see (EquationA17

(A17)

(A17) )), which means that

(see (EquationA18

(A18)

(A18) )). Hence, we may note that the component

of the measurement vector in the range space of D does not contribute to the state update (Equation23

(23)

(23) ), whereas the component

in the complement space does not contribute to the input update (Equation25

(25)

(25) ), so the two components of

play distinct roles in the recursion.

We can go further to understand the connection of Theorem 3.2 with system inversion. Substituting (Equation23(23)

(23) ) into (Equation28

(28)

(28) ) and (Equation25

(25)

(25) ) gives:

(36)

(36)

(37)

(37) It can be checked directly that (Equation36

(36)

(36) )–(Equation37

(37)

(37) ) is a left inverse of (Equation1

(1)

(1) )–(Equation2

(2)

(2) ) assuming

. We will make use of (Equation36

(36)

(36) )–(Equation37

(37)

(37) ) to consider asymptotic convergence in Section 5. We refer the reader to Gakis and Smith (Citation2022) for a further discussion of the connection with system inversion for the related continuous-time filter.

4. Zero informational limit of the Kalman smoother

In this section, we derive the zero informational limit of the Kalman smoother, which provides state and input estimates at all time steps in a fixed interval from k = 0 to N conditioned on all the measurements. Note this is an anti-causal process which is only suitable for offline computations. We consider again the case that there is a direct feedthrough matrix of the process noise to the measurements. We first provide the recursions explicitly for the case of a fixed Q matrix, since these are typically presented in the literature in the case where D = 0. See Kailath et al. (Citation2000, Chapter 10) for a tutorial summary on smoothed estimators and Mendel (Citation1977) for an early reference with input as well as state estimation.

Theorem 4.1

Consider the system (Equation1(1)

(1) )–(Equation2

(2)

(2) ) in the fixed interval from k = 0 to N, and let

be defined recursively by (Equation3

(3)

(3) )–(Equation12

(12)

(12) ) for k = 0 to N with initial conditions

. Assuming that

is invertible, the state and input means and variances conditioned on the measurements

are given by the backwards in time recursions:

with the terminal conditions

,

already determined.

Proof.

See Appendix 3.

We now find the zero informational limit of the recursions in Theorem 4.1. Again we will assume that D has full column rank.

Theorem 4.2

Consider the system (Equation1(1)

(1) )–(Equation2

(2)

(2) ) in the fixed interval from k = 0 to N and let D have full column rank. Let

be defined recursively as in Theorem 3.1 (or 3.2) for k = 0 to N. Assuming that

is invertible, then

,

,

and

for all k have well-defined limits as

given by the backwards in time recursions:

(38)

(38)

(39)

(39)

(40)

(40)

(41)

(41) with the terminal condition

,

already determined where we have defined:

Proof.

The claim follows by taking the limit as in the recursion of Theorem 4.1, substituting from (Equation27

(27)

(27) ), (Equation26

(26)

(26) ) and (Equation30

(30)

(30) ) and using an induction argument similar to the proof of Theorem 3.1.

Remark 4.1

It is interesting to note that the smoothed state and input trajectories satisfy the system dynamics (Equation1(1)

(1) ). To verify this substitute

and

from (Equation38

(38)

(38) ) and (Equation39

(39)

(39) ) into (Equation1

(1)

(1) ) and then use (Equation25

(25)

(25) ), (Equation28

(28)

(28) ) and (Equation29

(29)

(29) ) to simplify. In contrast, the filtered estimates

and

do not normally satisfy the system dynamics (to see this use (Equation23

(23)

(23) ), (Equation25

(25)

(25) ) and (Equation28

(28)

(28) )) with the noteworthy exception when D is square (since in that case

). The latter may also be seen from

by noting that the smoothed and filtered solutions coincide in this case.

Remark 4.2

It is interesting to note that and

for all k. This result is very intuitive as more measurements should improve estimates. The first inequality can be proved inductively backwards in time. Suppose that

and note that this is trivial when k + 1 = N. Using

(see (Equation24

(24)

(24) )) we get

and thus using (Equation40

(40)

(40) ) we get

which establishes the claim. The second inequality follows immediately from (Equation41

(41)

(41) ).

5. Asymptotic behaviour of the limit filter

We will now study the asymptotic behaviour of the limit filter. Substituting from (Equation24(24)

(24) ) into (Equation29

(29)

(29) ) gives the Riccati difference equation:

(42)

(42) If

converges to

as

, then

satisfies the algebraic Riccati equation:

(43)

(43) Sufficient conditions for such convergence are given in Lemma 5.2. A real symmetric nonnegative definite solution of (Equation43

(43)

(43) ) is said to be a strong solution if all the eigenvalues of

are on or inside the unit circle, where

is given by:

If all the eigenvalues are strictly inside the unit circle, the solution is said to be a stabilizing solution (Bitmead et al., Citation1985; Chan et al., Citation1984). The standard form taken by Theorem 3.2 and (Equation43

(43)

(43) ) allows well-known stability and convergence conditions to be stated in the next two lemmas for general

,

,

and

.

Lemma 5.1

Chan et al., Citation1984; De Souza et al., Citation1986

For the algebraic Riccati equation (Equation43(43)

(43) ):

the strong solution exists and is unique if and only if

is detectable,

the strong solution is the only nonnegative definite solution if and only if

is detectable and

has no uncontrollable mode outside the unit circle,

the strong solution coincides with the stabilising solution if and only if

is detectable and

has no uncontrollable mode on the unit circle,

the stabilising solution is positive definite if and only if

is detectable and

has no uncontrollable mode inside, or on the unit circle.

Proof.

See De Souza et al. (Citation1986, Theorem 3.2) and Chan et al. (Citation1984, Theorem 3.1).

Lemma 5.2

De Souza et al., Citation1986

Suppose:

have no uncontrollable modes on the unit circle,

be detectable,

or

be detectable,

Proof.

See De Souza et al. (Citation1986, Theorem 4.1, Theorem 4.2).

We will now express the convergence conditions in terms of the original system matrices A, B, C and D using Lemmas 5.3 and 5.4. We begin with the controllability of modes of .

Lemma 5.3

Let and

be given by (Equation30

(30)

(30) ) and (Equation31

(31)

(31) ) with D full column rank and

, then

is an uncontrollable mode of

if and only if it is an uncontrollable mode of

.

Proof.

If is an uncontrollable mode of

then there exists

such that

and

. Hence

and

. The converse follows since

is a left inverse of D.

We now turn to the detectability of . This turns out to be related to the invariant zeros of the system, namely the complex numbers z where the system matrix

has rank less than its normal rank (see Zhou et al., Citation1996, Definition 3.6).

Lemma 5.4

Zhou et al., Citation1996

Let and

be given by (Equation30

(30)

(30) ) and (Equation32

(32)

(32) ) with D full column rank and

, then

is detectable if and only if there are no invariant zeros on or outside the unit circle.

Proof.

The lemma and proof are the same as Zhou et al. (Citation1996, Lemma 13.9) except that modes on or outside the unit circle are considered (rather than the imaginary axis).

Theorem 5.5

Let ,

and

be given by (Equation30

(30)

(30) ), (Equation31

(31)

(31) ) and (Equation32

(32)

(32) ) with D full column rank and R>0, then

given by the Riccati difference equation (Equation42

(42)

(42) ) with

asymptotically converges to the unique stabilising solution

of the algebraic Riccati equation (Equation43

(43)

(43) ) as

, providing the system with realisation

has (1) no uncontrollable modes on the unit circle, and (2) no invariant zeros on or outside the unit circle.

Proof.

This follows from Lemmas 5.1, 5.2, 5.3 and 5.4.

Remark 5.1

Condition (2) in Theorem 5.5 that there are no invariant zeros on or outside the unit circle is a type of minimum phase condition.

Remark 5.2

From Lemma 5.4 we see that a necessary condition for to be detectable is that

is detectable, under the assumption that D is full column rank, though it is clearly not sufficient, e.g. A = −B = C = D = 1.

Remark 5.3

The assumption that D is full column rank requires that , i.e. the number of measurements is no less than the number of unknown inputs. It is interesting to note that the conditions of Theorem 5.5 may still hold even if p = m. In such a case

detectable implies that all eigenvalues of

are strictly within the unit circle. Also since D is square and invertible,

and the update gain

are zero and the filter of Theorem 3.2 reduces to the unique system inverse.

Remark 5.4

We mention that an essentially equivalent necessary and sufficient stability and convergence condition to that of Theorem 5.5 is given in Abooshahab et al. (Citation2022, Theorem 4) albeit with an assumption that is observable.

In light of the convergence results, it is interesting to consider the filter error using the recursions given in Theorem 3.2. Using (Equation36(36)

(36) )–(Equation37

(37)

(37) ) we find that:

Hence we note that the error dynamics converge under the stated conditions towards an exponentially stable time-invariant system, i.e. the eigenvalues of

are strictly within the unit circle when the system realisation

has (1) no uncontrollable modes on the unit circle, and (2) no invariant zeros on or outside the unit circle.

It is also interesting to note for the smoothing problem that:

when

is invertible. Hence under the conditions of Theorem 5.5,

converges to a matrix which is similar to

. This means that the smoothing recursions (Equation38

(38)

(38) ) and (Equation40

(40)

(40) ) are stable whenever the filtering ones are.

6. Illustrative example

6.1. Vehicle model

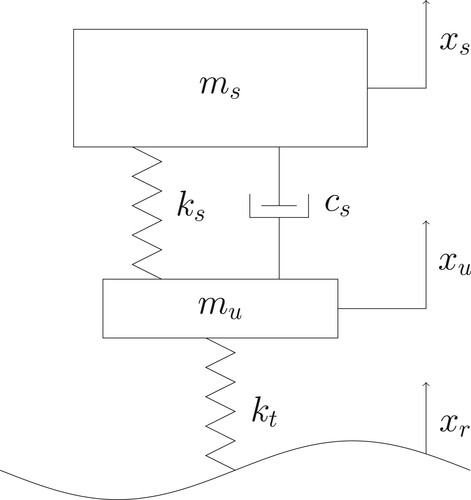

To illustrate the limit filter and smoother we present an automotive application. The problem considered is to construct both in real time and offline an accurate map of a road profile without the use of optical sensors (e.g. profilometer). Road profile measurement (estimation) is important for vehicle dynamics studies and vehicle development, driver training, and for road surface quality monitoring, which motivates the exploration of methods which do not require sophisticated sensing and instrumentation. In our example, a vehicle is assumed to be equipped with a global positioning system (GPS) sensor and basic suspension sensors. We consider the standard quarter-car suspension model of Figure with dynamical equations:

where

and

are sprung and unsprung masses,

and

are suspension spring and damper constants and

is a tyre stiffness constant, and the coordinates

,

and

represent displacements of

,

and road respectively. The road profile

is treated as an unknown system input. We begin by considering a sensor set consisting of accelerometers located on

and

, a suspension deflection sensor, and a GPS sensor located on

that measures

. We, therefore, select the state, input and measurement vectors as follows:

The model is discretised using Euler's method with a time-step

to give the state-space equations (Equation1

(1)

(1) )–(Equation2

(2)

(2) ) with system matrices:

6.2. Convergence and stability of the limit filter

We now investigate the convergence properties of the limit filter. Among the measurements in z it is the measurement of (namely the use of an unsprung mass accelerometer sensor) which ensures that D is full column rank. We now consider the system matrix of Lemma 5.4:

(44)

(44) and investigate if there are any values of z where it loses rank. Denoting by

the nth row of (Equation44

(44)

(44) ) and noting that elementary row operations preserve rank, we perform sequentially the following operations:

followed by reordering and rescaling to obtain a matrix whose first five rows are the identity matrix, which is obviously full column rank for all z. In a similar way, we can show that the matrix

(first 4 rows of (Equation44

(44)

(44) )) has full row rank for all z, hence

is controllable. It, therefore, follows that the stability and convergence results of Lemma 5.1, 5.2 and Theorem 5.5 hold. It can be verified that the condition of Lemma 5.4 holds even without measurement of

(suspension deflection) and

(sprung mass acceleration). However, the condition fails if the measurement of

(GPS sensor) is removed. The condition on

does not change if any of the measurements are removed.

A similar analysis to the above can be carried out for other choices of sensors. For example, a profilometer which measures could be considered. In this case, another non-zero entry appears in the D matrix. Thus the profilometer could be considered as an alternative to the measurement of

to ensure that D is full rank. Similar conclusions hold in this case, namely, the required detectability holds if

is measured but not if only measurements of

and

are available.

6.3. Simulation results and discussion

We apply the limit filter and smoother of Theorems 3.2 and 4.2 with an additional requirement that the GPS sampling period is longer than the time-step . Hence, the measurement vector is time varying with the row corresponding to the GPS replaced by zeros unless a GPS measurement is available. The filter and smoother recursions remain the same with the system matrices replaced by their time-varying counterparts. We demonstrate the performance of the filter and smoother on simulated data with a road profile (specified for convenience as a function of time) that consists of a square wave (period 0.5 s, amplitude 0.2 m), followed by a downward ramp (slope

m/s) and finally a Brownian motion (integrated white noise of standard deviation 0.05 m for the discretisation length

). The vehicle model parameters are

kg,

kg,

kN/m,

kNs/m,

kN/m and the discretisation length is

ms. The GPS measurements are available in regular intervals of 1 second. Simulated white noise with standard deviation as in Table is added to the four measurements to generate the vector

that is input to the limit filter, with standard deviations chosen to reflect the different accuracies expected from the sensors.

Table 1. Sensor noise parameters.

The same model parameters are used for the limit filter and smoother, and the measurement error covariance matrix is taken to be: . The initial state covariance is set to

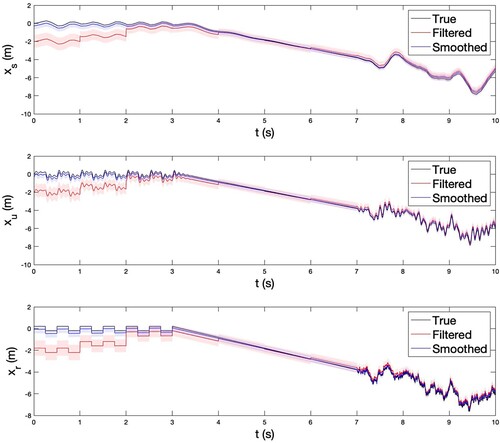

and the filter sprung and unsprung mass position states are initialised with an error of 2 m (equal to 2 standard deviations). The true, filtered and smoothed sprung and unsprung mass position states and road profile are plotted in Figure with the shaded regions representing the 1 standard deviation confidence intervals.

Figure 2. True, filtered and smoothed sprung and unsprung mass position states and road profile input.

It is observed that the filter reduces the large initialisation error over time and that the smoother improves on the filtered estimates over the entire simulation interval and especially at the start. The standard deviation of the smoothed estimates is smaller (or equal) than that of the filtered estimates (see Remark 4.2) and the difference is also most pronounced at the start of the simulation interval. Further note that the smoother generates a realistic state trajectory, which is because the smoothed state and input trajectories, unlike the filtered trajectories, satisfy the model dynamics (see Remark 4.1). Lastly, note that the limit filter is able to blend the different measurements to achieve good performance in contrasting situations. For example, rapid changes in are dealt with as well as slow drift. This is similar to the property in localisation/tracking filters that integrate inertial measurement unit (IMU) and GPS sensors to estimate a system state, whereas here the limit filter is used to estimate an unknown system input (road profile). Note also that we have avoided the difficulty with the standard Kalman filter (Equation3

(3)

(3) )–(Equation12

(12)

(12) ) of specifying a suitable mean and covariance for the exogenous input.

7. Conclusion

This paper has derived two forms for the zero informational limit of the Kalman filter with feedthrough of the process noise to the measurements. We have shown that the recursions in the first form are closely related to those of Gillijns and De Moor (Citation2007b) and hence we have provided an alternative interpretation for that recursive filter. The second form takes the form of the standard Kalman filter for a modified system (Theorem 3.2). This form is convenient to derive conditions for the asymptotic convergence of the limit filter to steady state form which can be conveniently expressed in terms of the original system matrices as a minimum phase and a controllability condition (Theorem 5.5). We further derive the fixed interval Kalman smoother with feedthrough of the process noise to the measurements (Theorem 4.1) and then find the zero informational limit with respect to the process noise (Theorem 4.2). Both the filter and smoother are applied successfully to a quarter-car model to accurately map road elevation using a GPS and vehicle suspension sensors in simulation.

An interesting question for future work is to ask if a stochastic filtering and smoothing problem can be posed and solved directly for a completely unknown exogenous input (without process noise) and whether the solution coincides with the limit filter and smoother of the present paper. In turn, it would be interesting to compare this with the problem of the limiting form of the Kalman filter when the measurement noise covariance tends to zero and the related singular filtering problem (Moylan, Citation1974; Shaked, Citation1985).

Acknowledgments

The first author would like to acknowledge McLaren Automotive Ltd for a CASE studentship awarded for doctoral research.

Additional information

Funding

References

- Abooshahab, M. A., Alyaseen, M. M., Bitmead, R. R., & Hovd, M. (2022). Simultaneous input & state estimation, singular filtering and stability. Automatica, 137, Article ID 110017. https://doi.org/10.1016/j.automatica.2021.110017

- Anderson, B. D., & Moore, J. B. (2012). Optimal filtering. Courier Corporation.

- Basile, G., & Marro, G. (1969). On the observability of linear, time-invariant systems with unknown inputs. Journal of Optimization Theory and Applications, 3(6), 410–415. https://doi.org/10.1007/BF00929356

- Bitmead, R. R., Gevers, M. R., Petersen, I. R., & Kaye, R. J. (1985). Monotonicity and stabilizability-properties of solutions of the Riccati difference equation: Propositions, lemmas, theorems, fallacious conjectures and counterexamples. Systems & Control Letters, 5(5), 309–315. https://doi.org/10.1016/0167-6911(85)90027-1

- Bitmead, R. R., Hovd, M., & Abooshahab, M. A. (2019). A Kalman-filtering derivation of simultaneous input and state estimation. Automatica, 108, Article ID 108478. https://doi.org/10.1016/j.automatica.2019.06.030

- Buchstaller, D., Liu, J., & French, M. (2021). The deterministic interpretation of the Kalman filter. International Journal of Control, 94(11), 3226–3236. https://doi.org/10.1080/00207179.2020.1755895

- Chan, S., Goodwin, G., & Sin, K. (1984). Convergence properties of the Riccati difference equation in optimal filtering of nonstabilizable systems. IEEE Transactions on Automatic Control, 29(2), 110–118. https://doi.org/10.1109/TAC.1984.1103465

- Cheng, Y., Ye, H., Wang, Y., & Zhou, D. (2009). Unbiased minimum-variance state estimation for linear systems with unknown input. Automatica, 45(2), 485–491. https://doi.org/10.1016/j.automatica.2008.08.009

- Darouach, M., & Zasadzinski, M. (1997). Unbiased minimum variance estimation for systems with unknown exogenous inputs. Automatica, 33(4), 717–719. https://doi.org/10.1016/S0005-1098(96)00217-8

- Darouach, M., Zasadzinski, M., Onana, A. B., & Nowakowski, S. (1995). Kalman filtering with unknown inputs via optimal state estimation of singular systems. International Journal of Systems Science, 26(10), 2015–2028. https://doi.org/10.1080/00207729508929152

- Darouach, M., Zasadzinski, M., & Xu, S. J. (1994). Full-order observers for linear systems with unknown inputs. IEEE Transactions on Automatic Control, 39(3), 606–609. https://doi.org/10.1109/9.280770

- Deshpande, A. S. (2017). Bridging a gap in applied Kalman filtering: Estimating outputs when measurements are correlated with the process noise. IEEE Control Systems Magazine, 37(3), 87–93. https://doi.org/10.1109/MCS.5488303

- De Souza, C., Gevers, M., & Goodwin, G. (1986). Riccati equations in optimal filtering of nonstabilizable systems having singular state transition matrices. IEEE Transactions on Automatic Control, 31(9), 831–838. https://doi.org/10.1109/TAC.1986.1104415

- Gakis, G., & Smith, M. C. (2022). A deterministic least squares approach for simultaneous input and state estimation. IEEE Transactions on Automatic Control. https://doi.org/10.1109/TAC.2022.3209415

- Gillijns, S., & De Moor, B. (2007a). Unbiased minimum-variance input and state estimation for linear discrete-time systems. Automatica, 43(1), 111–116. https://doi.org/10.1016/j.automatica.2006.08.002

- Gillijns, S., & De Moor, B. (2007b). Unbiased minimum-variance input and state estimation for linear discrete-time systems with direct feedthrough. Automatica, 43(5), 934–937. https://doi.org/10.1016/j.automatica.2006.11.016

- Glover, J. (1969). The linear estimation of completely unknown signals. IEEE Transactions on Automatic Control, 14(6), 766–767. https://doi.org/10.1109/TAC.1969.1099329

- Hou, M., & Muller, P. C. (1992). Design of observers for linear systems with unknown inputs. IEEE Transactions on Automatic Control, 37(6), 871–875. https://doi.org/10.1109/9.256351

- Hou, M., & Muller, P. C. (1994). Disturbance decoupled observer design: A unified viewpoint. IEEE Transactions on Automatic Control, 39(6), 1338–1341. https://doi.org/10.1109/9.293209

- Hou, M., & Patton, R. J. (1998a). Input observability and input reconstruction. Automatica, 34(6), 789–794. https://doi.org/10.1016/S0005-1098(98)00021-1

- Hou, M., & Patton, R. J. (1998b). Optimal filtering for systems with unknown inputs. IEEE Transactions on Automatic Control, 43(3), 445–449. https://doi.org/10.1109/9.661621

- Hsieh, C.-S. (2000). Robust two-stage Kalman filters for systems with unknown inputs. IEEE Transactions on Automatic Control, 45(12), 2374–2378. https://doi.org/10.1109/9.895577

- Hsieh, C.-S., & Chen, F.-C. (1999). Optimal solution of the two-stage Kalman estimator. IEEE Transactions on Automatic Control, 44(1), 194–199. https://doi.org/10.1109/9.739135

- Kailath, T., Sayed, A. H., & Hassibi, B. (2000). Linear estimation. Prentice Hall.

- Keller, J.-Y., & Darouach, M. (1999). Two-stage Kalman estimator with unknown exogenous inputs. Automatica, 35(2), 339–342. https://doi.org/10.1016/S0005-1098(98)00194-0

- Kerwin, W. S., & Prince, J. L. (2000). On the optimality of recursive unbiased state estimation with unknown inputs. Automatica, 36(9), 1381–1383. https://doi.org/10.1016/S0005-1098(00)00046-7

- Kitanidis, P. K. (1987). Unbiased minimum-variance linear state estimation. Automatica, 23(6), 775–778. https://doi.org/10.1016/0005-1098(87)90037-9

- Kudva, P., Viswanadham, N., & Ramakrishna, A. (1980). Observers for linear systems with unknown inputs. IEEE Transactions on Automatic Control, 25(1), 113–115. https://doi.org/10.1109/TAC.1980.1102245

- Li, B. (2013). State estimation with partially observed inputs: A unified Kalman filtering approach. Automatica, 49(3), 816–820. https://doi.org/10.1016/j.automatica.2012.12.007

- Markovsky, I., & De Moor, B. (2005). Linear dynamic filtering with noisy input and output. Automatica, 41(1), 167–171. https://doi.org/10.1016/j.automatica.2004.08.014

- Mendel, J. (1977). White-noise estimators for seismic data processing in oil exploration. IEEE Transactions on Automatic Control, 22(5), 694–706. https://doi.org/10.1109/TAC.1977.1101597

- Moylan, P. (1974). A note on Kalman-Bucy filters with zero measurement noise. IEEE Transactions on Automatic Control, 19(3), 263–264. https://doi.org/10.1109/TAC.1974.1100570

- Murphy, K. P. (2012). Machine learning: A probabilistic perspective. MIT Press.

- Shaked, U. (1985). Explicit solution to the singular discrete-time stationary linear filtering problem. IEEE Transactions on Automatic Control, 30(1), 34–47. https://doi.org/10.1109/TAC.1985.1103784

- Su, J., Li, B., & Chen, W.-H. (2015). On existence, optimality and asymptotic stability of the Kalman filter with partially observed inputs. Automatica, 53, 149–154. https://doi.org/10.1016/j.automatica.2014.12.044

- Wang, S. H., Wang, E., & Dorato, P. (1975). Observing the states of systems with unmeasurable disturbances. IEEE Transactions on Automatic Control, 20(5), 716–717. https://doi.org/10.1109/TAC.1975.1101076

- Willems, J. C. (2004). Deterministic least squares filtering. Journal of Econometrics, 118(1-2), 341–373. https://doi.org/10.1016/S0304-4076(03)00146-5

- Yong, S. Z., Zhu, M., & Frazzoli, E. (2015). Simultaneous input and state estimation with a delay. In 2015 54th IEEE Conference on Decision and Control (CDC) (pp. 468–475). IEEE.

- Yong, S. Z., Zhu, M., & Frazzoli, E. (2016). A unified filter for simultaneous input and state estimation of linear discrete-time stochastic systems. Automatica, 63, 321–329. https://doi.org/10.1016/j.automatica.2015.10.040

- Zhou, K., Doyle, J. C., & Glover, K. (1996). Robust and optimal control. Prentice Hall.

Appendices

Appendix 1. Proof of Theorem 3.1

For convenience, we state the matrix inversion lemma (see Anderson & Moore, Citation2012, Sec. 6.3) which is used in the proof.

Lemma A.1

Let ,

and H be arbitrary of compatible dimension. Then:

(A1)

(A1)

(A2)

(A2)

To prove Theorem 3.1 we will proceed inductively, first considering the recursive expressions (Equation3(3)

(3) )–(Equation12

(12)

(12) ) in the limit as

. Suppose for a given k,

and

tend to well-defined limits

and

as

. We will show that

,

,

,

,

,

,

have well-defined limits given by (Equation13

(13)

(13) )–(Equation22

(22)

(22) ). Substituting for

from (Equation10

(10)

(10) ) into (Equation9

(9)

(9) ) and using (EquationA2

(A2)

(A2) ) gives:

(A3)

(A3) where:

Taking the limit as

in (EquationA3

(A3)

(A3) ),

approaches

, where

is defined in (Equation20

(20)

(20) ). It follows that (Equation4

(4)

(4) ) has a well-defined limit given by (Equation14

(14)

(14) ). Now substitute for

in (Equation7

(7)

(7) ) and let

be defined as in (Equation18

(18)

(18) ). Then (Equation7

(7)

(7) ) has a well-defined limit given by (Equation17

(17)

(17) ). Applying (EquationA1

(A1)

(A1) ) to (Equation10

(10)

(10) ) gives:

(A4)

(A4) Substituting for (Equation8

(8)

(8) ) and then (EquationA4

(A4)

(A4) ) into (Equation3

(3)

(3) ) and taking the limit as

,

has a well-defined limit given by:

(A5)

(A5) Making use of the expressions for

and

in (EquationA5

(A5)

(A5) ) gives (Equation13

(13)

(13) ). Substituting for

from (Equation9

(9)

(9) ) and

from (Equation10

(10)

(10) ) into (Equation6

(6)

(6) ) and applying (EquationA1

(A1)

(A1) ) gives:

Taking the limit as

,

has a well-defined limit given by:

(A6)

(A6) This expression can be equivalently written in terms of

in the form (Equation16

(16)

(16) ). Substituting for

from (Equation8

(8)

(8) ) and

from (EquationA4

(A4)

(A4) ) into (Equation5

(5)

(5) ), taking the limit as

, and then using the expressions for

and

from (EquationA6

(A6)

(A6) ),

has a well-defined limit given by (Equation15

(15)

(15) ). Taking the limit as

in (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ),

and

have well-defined limits given by (Equation21

(21)

(21) ) and (Equation22

(22)

(22) ) respectively. The result follows by induction since, by definition,

and

.

Appendix 2

Proof of Theorem 3.2

We first establish the following lemma.

Lemma A.2

Suppose D has full column rank, and

of appropriate dimensions, then:

(A7)

(A7) where

as in (Equation33

(33)

(33) ).

Proof.

We first introduce the projection:

(A8)

(A8) We observe that Π and

are parallel projections onto the same space, namely the column space of D, but have different null spaces. Further note that

,

and:

(A9)

(A9)

(A10)

(A10)

(A11)

(A11)

(A12)

(A12) We first claim that:

(A13)

(A13) To see this note that:

using

. Therefore using (EquationA9

(A9)

(A9) ) and (EquationA12

(A12)

(A12) ):

from which (EquationA13

(A13)

(A13) ) follows. Further from (EquationA12

(A12)

(A12) ) and (EquationA13

(A13)

(A13) ):

(A14)

(A14) Hence, substituting

from (EquationA8

(A8)

(A8) ) into (EquationA13

(A13)

(A13) ) gives (EquationA7

(A7)

(A7) ).

We next establish several identities that are needed in the proof.

We claim that:

(A15)

(A15) To see this note that on substitution from (Equation18

(18)

(18) ) and (Equation19

(19)

(19) ) the right hand side of (EquationA15

(A15)

(A15) ) becomes:

which equals:

(A16)

(A16) after applying (EquationA7

(A7)

(A7) ) with

and noting that

from (Equation20

(20)

(20) ). Using the transpose of (EquationA14

(A14)

(A14) ), the

on the right in (EquationA16

(A16)

(A16) ) can be removed, which gives (Equation35

(35)

(35) ) on substituting from (Equation32

(32)

(32) ). This establishes (EquationA15

(A15)

(A15) ).

From (EquationA15

(A15)

(A15) ) it is easy to see that:

(A17)

(A17) since

. Hence:

(A18)

(A18)

We claim that:

(A19)

(A19) To see this note that on substitution from (Equation18

(18)

(18) ) and (EquationA6

(A6)

(A6) ) the right hand side of (EquationA19

(A19)

(A19) ) becomes:

which equals

using (EquationA15

(A15)

(A15) ). Applying (EquationA18

(A18)

(A18) ) establishes the claim.

We claim that:

(A20)

(A20) To see this consider:

where we have used (EquationA15

(A15)

(A15) ), (Equation18

(18)

(18) ), (Equation20

(20)

(20) ), (Equation34

(34)

(34) ) and (Equation19

(19)

(19) ).

To show (Equation27

(27)

(27) ) substitute from (Equation18

(18)

(18) ) and (EquationA20

(A20)

(A20) ) into (Equation17

(17)

(17) ) and then use (Equation24

(24)

(24) ) and (EquationA18

(A18)

(A18) ).

Lastly, we show (Equation26

(26)

(26) ). Substituting (Equation20

(20)

(20) ) and (EquationA20

(A20)

(A20) ) into (Equation16

(16)

(16) ) gives:

(A21)

(A21) Using (EquationA18

(A18)

(A18) ) note that:

from which it follows that:

Noting that

from (EquationA17

(A17)

(A17) ) the last two terms in (EquationA21

(A21)

(A21) ) are zero. The claim follows using (EquationA18

(A18)

(A18) ) and (Equation24

(24)

(24) ) on the first term.

Proof of expressions (Equation23 (23)

(23)  (23) )–(Equation25

(23) )–(Equation25 (25)

(25)  (25) )

(25) )

Substituting (Equation14(14)

(14) ) into (Equation13

(13)

(13) ) and using (EquationA15

(A15)

(A15) ) gives (Equation23

(23)

(23) ). Substituting (EquationA19

(A19)

(A19) ) into (Equation15

(15)

(15) ) gives (Equation24

(24)

(24) ). Substituting (EquationA20

(A20)

(A20) ) into (Equation14

(14)

(14) ) and using (Equation23

(23)

(23) ) gives (Equation25

(25)

(25) ). Substituting (Equation25

(25)

(25) ) into (Equation21

(21)

(21) ) gives (Equation28

(28)

(28) ). Substituting (Equation27

(27)

(27) ) and (Equation26

(26)

(26) ) into (Equation22

(22)

(22) ) gives an expansion of (Equation29

(29)

(29) ).

Appendix 3

Proof of Theorem 4.1

Our approach below follows the method of Murphy (Citation2012, Section 18.3). Consider the joint probability distribution of the state and input at time k and state at time k + 1 given measurements up to time k:

which follows by substituting equations (Equation1

(1)

(1) ) and (Equation11

(11)

(11) ) into the expectation and variance expressions (where

means normally distributed with mean a and variance b). Conditioning on the value of

gives the distribution on

and

:

where for convenience we have defined:

Applying the law of total expectation and variance then gives the smoothed mean and covariance at time k given knowledge at time k + 1:

The backwards in time recursion to find the smoothed state and input estimates follows directly.