ABSTRACT

We report on a series of task-based interviews in which nine mathematicians were asked to evaluate six mathematical arguments, purportedly produced either by fellow mathematicians or by undergraduate students. In this paper, we attend to the role of context in mathematicians’ responses, identifying four themes in their expectations when evaluating the proofs that they read. First, mathematicians’ evaluations of identical arguments were sensitive to the researchers’ manipulation of authorship: most accepted arguments purportedly produced by a colleague while taking a more critical view of the same argument when told it was produced by an undergraduate student. Our thematic analysis of interview responses led to three further context-based factors influencing mathematicians’ responses when evaluating student-produced texts: course goals, instructors’ expectations, and assessment type. In the final section, we consider implications for researchers focused on understanding common practice amongst mathematicians, as well as the pedagogic consequences of our findings for classroom practice.

1. Introduction

Proof is central to undergraduate mathematics and is a known challenge for many students. In particular, students’ proof writing practices are a pivotal aspect of their mathematical apprenticeship. As instructors, we spend a lot of time evaluating students’ mathematical arguments in both written assessments and during informal in-class discussions. Yet, despite its importance, we know very little about the factors influencing mathematicians’ grading practices.

In recent decades, it has become increasingly popular to talk of mathematical practice, and mathematical proof in particular, as a socially constructed entity (Dawkins & Weber, 2017; Sfard, 2008). This is a far cry from the historically privileged position of mathematics as the pinnacle of certainty, above the empirical sciences in its search for a priori knowledge. While this increasing awareness of the social dimension of mathematical proof has permeated many aspects of research in undergraduate mathematics education, we feel that it has gone under-examined with respect to mathematicians’ proof-reading practices and their grading of student-produced arguments.

We add to this understanding by focusing on mathematicians’ grading of six mathematical arguments that one might expect to find in an undergraduate ‘Introduction to Proof’ (ITP) course, covering topics including elementary logic, set theory, and mathematical argumentation. By choosing an instruction-based context for our research, we address the dual aims of understanding mathematicians’ epistemological beliefs about proof itself and their pedagogic beliefs about grading students’ work.

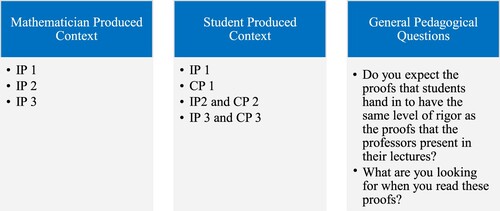

Participating mathematicians were first shown three arguments and told they were produced by a fellow mathematician teaching an ITP course. After being asked to evaluate the validity of each purported proof, mathematicians were told that the same text had been produced by an undergraduate student and asked whether this changed their perspective. Following this task-based interview, mathematicians participated in a more traditional semi-structured interview in which they were asked more general questions about their approaches to evaluating the proofs that they read and what they expect students to gain from their exposure to (writing) proofs in undergraduate courses.

We begin this paper by discussing the social dimension of proof and its manifestations in mathematicians’ engagement with arguments produced by others. We discuss the role of context in informing mathematicians’ evaluations of the proofs that they read, before turning to recent research on mathematicians’ grading of student-produced proofs. We then present our empirical research with mathematicians. We end with commentary on the implications of our work for understanding mathematicians’ grading practices and on directions for future work.

2. The social nature of mathematics and mathematical proof

A proof becomes a proof after the social act of ‘accepting it as a proof’

– Manin (1977).

Dawkins and Weber (2017) enumerated a series of values and norms held by the mathematical community. The authors note that students’ famed difficulties with mathematical proof can be at least partially accounted for by a lack of appreciation for these values and norms. In this way, researchers have positioned learners of mathematics as apprentices into the mathematical community (Cobb & Yackel, 2016; Dawkins & Weber, 2017; Sfard, 2008). Hence, the learning process centers on coming to understand and appreciate the values and norms of the community.

We endorse this ‘apprenticeship model’ of learning mathematics and mathematical proof. Moreover, we note its importance in understanding how mathematicians read and evaluate students’ proofs. That is, when mathematicians evaluate student-produced proofs, they act to uphold the values and norms of the community. When a student unknowingly violates a norm in their writing, that student identifies themselves as an outsider to the community. It is the role of the mathematician, or instructor, to identify and correct the error to aid the student’s apprenticeship and, ideally, to guide that student’s future proof production. Lew and Mejía-Ramos (2019, 2020) capitalized on this norm-correcting behavior through the use of ‘breaching experiments’, in which mathematicians were presented with a series of potential norm violations in an attempt to draw out the values and norms most important to the mathematicians in their study. We return to the findings of Lew and Mejía-Ramos later, having first discussed the consequences of the social dimension of mathematics for mathematicians’ reading practices more generally.

2.1. Mathematicians’ proof-reading practices

Mathematicians have frequently been observed to disagree on various tasks associated with proof (e.g., Inglis et al., 2013; Weber & Czocher, 2019). Weber and Mejía-Ramos (2011) identified three strategies used by mathematicians when evaluating the purported proofs of their peers: appealing to the authority of other mathematicians who have read the proof, line-by-line reading, and modular reading. Weber (2018) focused in particular on the authorial context, arguing that mathematicians attend to myriad factors, including the reputation of the author(s) and of the publication outlet, and the age and usage of the result at stake. This argument contradicts a common external conception of mathematics as an acontextual discipline in which ‘results are objective, infallible, and incorrigible’ (p. 239).

Weber’s theoretical assertions are supported in the literature, both on mathematicians’ research practice and on their evaluation of student-produced texts. In a series of task-based interviews, Inglis et al. (2013) found that mathematicians disagreed on the validity of several purported proofs; applied mathematicians were more likely to accept the validity of a given argument than their ‘pure’ colleagues. Similarly, Miller et al. (2018) found that, across multiple mathematical settings, mathematicians often awarded full marks to instructor-produced proofs but assigned different scores to the same proofs when they were purportedly produced by students. These findings were anticipated by Sfard (2008), who conjectured that mathematicians’ evaluations of the validity of given arguments ‘are probably the least uniform aspect[s] of mathematical discourses’ (p. 231).

On the other hand, Weber and Czocher (2019) found substantive agreement on a task similar to that of Inglis et al. (2013), noting that mathematicians appeared to disagree only on fringe examples such as computer-aided or diagrammatic proofs. Davies et al. (2020) found similar results in a comparative judgement-based study, reporting a high degree of reliability among mathematicians’ endorsements of student-produced texts.

The extent and locus of the disagreement on mathematicians’ proof-reading practices remain open questions. In some cases, it seems that mathematicians demonstrate a high degree of agreement on what constitutes a good mathematical proof. In others, the participating mathematicians diverge. In the following section, we focus on the role of context in an attempt to unpack this question.

2.2. Mathematicians’ proof-reading practices: the role of context

Beyond authorship, several authors have noted other contextual factors motivating mathematicians’ judgements. Weber and Czocher (2019) considered two contextual factors guiding mathematicians’ responses: purpose and audience. Concerning purpose, Weber and Czocher found that mathematicians were ‘less tolerant of a gap if the goal of the proof was to rigorously establish’ a new result (p. 4) than if a proof were presented for ‘recreational purposes’ in an expository journal (ibid). Concerning audience, the same authors referred to the knowledge base of the intended reader and the perception of whether that reader was ‘capable of bridging the gap’ (ibid).

Returning to mathematicians’ reading of student-produced proofs, both Moore (2016) and Lew and Mejía-Ramos (2020) raised contextual factors influencing mathematicians’ behavior. Moore discussed mathematicians’ focus on more than just the ‘decontextualized mathematical text’: they made frequent reference to students’ understanding and proof-production skills as entities both disconnected from the text at hand and relevant to the evaluation of that text. In a similar interview study of mathematicians’ reading, Lew and Mejía-Ramos (2020) noted variation in (mathematicians’ perceptions of) the linguistic conventions of proofs in textbooks, proofs written by instructors on blackboards, and student-produced proofs. Mathematicians held textbook proofs to higher standards of rigor than blackboard or student-produced proofs, and were more tolerant of poor grammar, mixed notation, and missing verbal connectives in blackboard proofs than in those produced by students.

3. Research questions

Having established that mathematicians’ readings of proofs are both highly variable and sensitive to at least some contextual features, it remains to understand the role of context in more detail. In light of the literature survey above, we pose three questions to guide the investigation that follows:

What can be learned about mathematicians’ epistemological beliefs about proof through the evaluation of (ostensibly) student-produced arguments?

How are mathematicians’ evaluations of proofs sensitive to information about the author of the proof?

How are mathematicians’ evaluations of proof influenced by the context in which the proof was produced?

4. Methods

The findings we present in this paper are based on a reanalysis of the dataset that formed the basis of Miller et al. (2018). Whereas Miller and colleagues focused primarily on the quantitative variance itself, in the present paper we focus on qualitative features associated with the role of context.

4.1. Participants

Nine research-active mathematicians were interviewed for this study. All participants were from the same university in the eastern United States, and all had taught at least one upper-level, proof-based mathematics course. Consistent with previous presentations of these data, we refer to the nine participants as M1 through M9 and use feminine pronouns for all of them.

4.2. Materials

We examined proofs of three propositions from number theory that might be proven in an ITP course. For each proposition, we generated two proofs. The Complete Proof (CP) included enough detail that we expected it to be judged correct and valid, at the level of an ITP course, without further elaboration. The Incomplete Proof (IP) was an abbreviated version of the Complete Proof that omitted some pieces of the proof, e.g., the definitions of newly introduced variables.

We present each proposition and its CP below, placing boxes around the text that we omitted from the Incomplete Proof.

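Proposition 1:

If $n^2 + 1$ is a prime number greater than 5, then the digit in the 1’s place of $n$ is 0, 4, or 6.

Proof 1:

Suppose that $n^2 + 1$ is a prime number greater than 5. Thus $n^2 + 1$ is odd and so $n$ is even. Then $n$ is of the form $10k$, $10k + 2$, $10k + 4$, $10k + 6$, or $10k + 8$. Then $n^2 + 1$ is of one of the following forms:

$$\begin{aligned}
(10k)^2 + 1 &= 100k^2 + 1,\\
(10k + 2)^2 + 1 &= 100k^2 + 40k + 5,\\
(10k + 4)^2 + 1 &= 100k^2 + 80k + 17,\\
(10k + 6)^2 + 1 &= 100k^2 + 120k + 37,\\
(10k + 8)^2 + 1 &= 100k^2 + 160k + 65.
\end{aligned}$$

Of these, $n = 10k + 2$ and $n = 10k + 8$ yield expressions for $n^2 + 1$ that are clearly divisible by 5 and thus not prime (since $n^2 + 1 > 5$). The only choices for $n$, then, are those numbers with 1’s digits of 0, 4, or 6.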
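As an informal check on the case analysis in Proof 1 (not part of the study materials), the Python sketch below computes the 1’s digit of $n^2 + 1$ for each even 1’s digit of $n$ and then searches a finite range of $n$. It assumes, as the proof’s case analysis indicates, that the hypothesis of Proposition 1 is that $n^2 + 1$ is a prime greater than 5; the function names are illustrative only, and a finite search is a sanity check rather than a proof.

```python
# Informal sanity check for Proposition 1 (illustrative; not from the study).
# Assumption: the hypothesis is "n^2 + 1 is a prime greater than 5".


def is_prime(m):
    """Trial-division primality test; adequate for the small values used here."""
    if m < 2:
        return False
    d = 2
    while d * d <= m:
        if m % d == 0:
            return False
        d += 1
    return True


def last_digits_when_prime(limit):
    """Return the 1's digits of n (0 <= n < limit) for which n^2 + 1 is a prime > 5."""
    digits = set()
    for n in range(limit):
        value = n * n + 1
        if value > 5 and is_prime(value):
            digits.add(n % 10)
    return digits


# (10k + r)^2 + 1 leaves the same remainder mod 10 as r^2 + 1, so the
# 1's digit of n^2 + 1 depends only on the 1's digit r of an even n.
residues = {r: (r * r + 1) % 10 for r in (0, 2, 4, 6, 8)}
print(residues)                              # {0: 1, 2: 5, 4: 7, 6: 7, 8: 5}
print(sorted(last_digits_when_prime(1000)))  # [0, 4, 6]
```

The digits 2 and 8 are exactly the cases in which $n^2 + 1$ ends in 5 and is therefore divisible by 5, matching the two cases eliminated in the proof.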

Proposition 2:

There exists a sequence of 100 consecutive integers, none of which is prime.

Proof 2:

We will show that the 100 consecutive integers 101! + 2, 101! + 3, 101! + 4, … , 101! + 101 contains no primes. Since both terms of 101! + 2 contain a factor of 2, 2 is a common factor of 101! + 2 and thus 101! + 2 is not prime. Since both terms of 101! + 3 contain a factor of 3, 3 is a common factor of 101! + 3 and thus 101! + 3 is not prime. Similarly, for any $2 \le k \le 101$, $k$ is a factor of $101! + k$ and thus $101! + k$ is not prime. Thus, the sequence of 100 consecutive integers given above contains no primes.
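The construction in Proof 2 can likewise be checked directly, because Python integers have arbitrary precision. The sketch below (illustrative only, not part of the study materials; the helper name is hypothetical) confirms that for each integer $k$ from 2 to 101, $k$ divides $101! + k$ with a quotient greater than 1, so none of the 100 integers is prime.

```python
import math

# Illustrative check of Proof 2: every integer 101! + k with 2 <= k <= 101
# has k as a proper factor, so none of these 100 consecutive integers is prime.
FACT_101 = math.factorial(101)


def has_proper_factor_k(k):
    """True if k divides 101! + k and the quotient exceeds 1 (so 101! + k is composite)."""
    value = FACT_101 + k
    return value % k == 0 and value // k > 1


checks = [has_proper_factor_k(k) for k in range(2, 102)]
print(len(checks), all(checks))  # 100 True
```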

Proposition 3:

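Given two angles $\alpha$ and $\beta$, we define that $\alpha \sim \beta$ iff $\sin^2\alpha + \cos^2\beta = 1$. Show that $\sim$ is an equivalence relation on $\mathbb{R}$.

Proof 3:

(Reflexive): Let $\alpha \in \mathbb{R}$. Since $\sin^2\alpha + \cos^2\alpha = 1$ by Pythagorean Theorem, $\alpha \sim \alpha$.

(Symmetric): Let $\alpha, \beta \in \mathbb{R}$ and $\alpha \sim \beta$. So $\sin^2\alpha + \cos^2\beta = 1$. Using Pythagorean Theorem on both $\alpha$ and $\beta$, we have

$$(1 - \cos^2\alpha) + (1 - \sin^2\beta) = 1,$$

which implies $\sin^2\beta + \cos^2\alpha = 1$ and hence $\beta \sim \alpha$.

(Transitive): Let $\alpha, \beta, \gamma \in \mathbb{R}$ with $\alpha \sim \beta$ and $\beta \sim \gamma$. So $\sin^2\alpha + \cos^2\beta = 1$ and $\sin^2\beta + \cos^2\gamma = 1$. Adding up these two equations we have

$$\sin^2\alpha + \cos^2\beta + \sin^2\beta + \cos^2\gamma = 2.$$

Rewriting we have $\sin^2\alpha + \cos^2\gamma + (\sin^2\beta + \cos^2\beta) = 2$. Thus $\sin^2\alpha + \cos^2\gamma + 1 = 2$ or $\sin^2\alpha + \cos^2\gamma = 1$ and hence $\alpha \sim \gamma$. Therefore $\sim$ is an equivalence relation.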

The omissions in these proofs are not necessarily large jumps in logic. In fact, each mathematician was able to understand and judge the validity of the work with the omissions. However, as we will see, the context in which the proof was provided had a significant influence on how the mathematician viewed the work.

4.3. Procedure

Each mathematician engaged in three phases of a task-based interview that was video recorded (see for a summary of these phases). First, the mathematicians were told that a colleague had presented IP 1 in lecture. They were asked if they thought the proof was correct and to comment on the pedagogical quality and appropriateness of the proof. This was repeated for IP 2 and IP 3.

Second, each participant was presented with IP 1 again, but this time told that a student had produced the work instead of a colleague. They were asked to determine whether the proof was correct and how many points they would assign on a ten-point scale. The mathematicians were then given CP 1 and asked the same questions. This process was repeated for Propositions 2 and 3, with the modification that the incomplete and complete proofs were presented together: the experience with Proposition 1 led participants to recognize immediately that a second, potentially better proof would follow, so they wanted to be able to compare the two from the beginning.

For the third and final stage, the mathematicians were asked a series of general open-ended interview questions about the role of proof in their undergraduate courses.

4.4. Analysis

All interviews were transcribed for analysis. As the interview tasks had been designed around a contrast between mathematician-produced and student-produced proofs, a deductive thematic approach was initially used (Braun & Clarke, 2006). We sought to identify how the author of the proof affected the mathematicians’ view of the proof. To this end, data were coded for instances of context that influenced the mathematicians’ views of the presented proof. During this process, some transcript excerpts were lightly edited for readability, but original meanings have been preserved in all cases.

As the mathematicians talked about how they would grade student work, it became evident that context was an important feature of how they would choose to assign points. The codes that were generated in relation to context gave rise to four broad categories of influence: authorial experience, course goals, expectations, and assessment type.

5. Results

The first of our four themes, authorial experience, explores the sensitivity of mathematicians’ evaluations to their understanding of the author. The latter three themes focus on evaluations of student-produced proofs only. The mathematicians who provided excerpts associated with each of the four themes are shown in .

Table 1. Categories of context for the grading of proofs.

5.1. Theme 1: authorial experience

Participants were initially presented with the Incomplete Proofs and told that they were authored by a colleague (to be presented in an ITP course). In this context, mathematicians generally agreed that the proofs were good, sometimes without casting a particularly critical eye over the text. For example, when told the proof was produced by a colleague, M1 ‘read it with an assumption that what [they were] reading was going to be correct and good.’

Further, five mathematicians noted explicitly that the gaps were not overly problematic in this context. As M4 noted: ‘… it is the kind of thing that if I saw in a textbook, I would say good. Good that they did not put it in there, so the student has to think, why does this work?’ M7 commented,

But the way [the proof] is written, it is not necessarily a bad thing because I also like when people start asking these questions. When you just skip a step, but you don’t think that it is that big of deal, and [students] say, well, can you explain that.

if you are telling me … this is something that a colleague presented … my default assumption is that [it] should be correct … It would be very, very surprising to me … I would wonder if maybe the student’s notes were incorrect or something like that … .

… sometimes we assume people mean what we think they mean rather than what they actually, what they write. So sometimes [the student] gets away with [gaps in the proof] when you wouldn’t otherwise. In an [ITP] course you probably want to hold them a little more accountable. [CP 2] is much more clearly explained.

5.2. Theme 2: Evaluating student-produced proofs

We now turn our attention to the mathematicians’ responses to the purportedly student-produced proofs. All nine mathematicians raised the expectations laid out by the instructor as a factor influencing their grading of proofs. We identified three overlapping subthemes within these expectations: expectations regarding course goals, technical requirements, and assessment types.

5.2.1. Theme 2a: Course goals

Five mathematicians commented on grading proofs based on the goals they held for the course for which the proof was produced. These comments most frequently referred to the level of rigor appropriate for the course, or to the appropriate use of notation and language.

Depending on the course, the details of a proof may be less important; in upper-division courses in particular, details seemed to matter less. For example, M2 explained that a primary goal for her Discrete Mathematics course (junior level) is for students to have the right idea of a proof, noting when speaking about mathematical induction, ‘First, I just take a look, I usually do not pay attention to the details and the smaller algebra stuff. First, I take a quick glance to make sure they have the right idea.’ In talking about her Complex Variables course (senior level), M7 stated, ‘I am … happy with proofs that have some serious gaps in them, but they show that they are going in the right direction, and they are using the right concept.’

For lower-level courses, such as Introduction to Proof, providing most, if not all, details was considered an important course goal. After commenting on the grading of both the incomplete and the complete proof, M7 was asked if she would go back and reassess IP 2 after assigning points to CP 2. She stated that she would not reassess, ‘unless this was part of the requirement for the course. I would say I need [to see] all the easiest of steps. I need [the gap in IP 2] explained, and if you don’t, you will lose points.’ Later, talking specifically about an ITP course, M7 noted, ‘I would like them to give me some crystal-clear arguments because this is the sole purpose of this [ITP] course.’

When grading Proposition 3, M9 remarked that IP 3 did not state the scope of its variables. She noted that ‘it would depend on if that was what I focused on [in class].’ In other words, if it were a course goal that students should be thorough when writing proofs, then she would expect them to state the scope of variables. Hence, the level of detail expected is heavily dependent on the instructor’s goals for the class.

For some, course goals can change as the semester progresses. In the words of M7,

There are times when you give them examples in class that have a certain level of rigor, but as time goes on and as the students become more mature in their own abilities to reason and argue … you can make statements and assumptions that they have an obligation to sit down and figure out what the details are or know what the implied statements are within that.

… it depends on context. [the author of CP 2] knows how to handle notation better. Conceptually there is no difference between [the two proofs]. I would give both full-credit unless we were trying to learn how to use notation, then this [CP 2] would get full credit and [IP 2] wouldn’t.

5.2.2. Theme 2b: Expectations regarding technical requirements

All of our mathematicians identified technical requirements and the level of detail as playing an important role in their evaluation of the student-produced proofs. That said, approaches to technical requirements varied substantially between mathematicians. At one end of the spectrum, M1 laid out her expectation of a polished proof and said she would take away credit when a student’s proof did not meet this standard. She noted that she would ‘deduct heavily if it is just a series of equations’ in a student’s proof of a mathematical statement; however,

a correct proof, presented well, has [complete] sentences and the logic flows. If it is not as elegant as it could be or as streamlined as it could be, I may only take off – somewhere in the range of maybe like 10 percent. A small kind of … a small little thing, just to say, hey I think you can do better.

Others commented explicitly on contextual variation in their expectations regarding technical detail. In contrast to M1, M2 used a graduated system in which expectations increased as the semester progressed. M2 stated:

The first couple of weeks, I was kind of generous in grading. But once [we have reached] the middle or end of the semester, I told my students that they would have to write great mathematics … I would be more strict [with grading].

When grading proofs, professors are sometimes concerned with students showing technical details to establish validity, but at other times the technical detail is not needed when it is clear that the proof shows student understanding.

5.2.3. Theme 2c: Expectations regarding assessment type

Three mathematicians indicated that their expectations changed based on the type of assessment they were grading. All three indicated that they would be less harsh in their grading in the context of an exam and stricter in the context of homework. The stress and time constraints of an exam allow students to leave some gaps without being penalized if the professor believes they can fill in those gaps.

M7 noted that she would be more critical in assessing a proof on homework or a take-home exam than on an in-class exam. She awarded IP 1 full marks and elaborated that she might be bothered if a student turned it in for homework or a take-home exam, but ‘if it is an in-class exam or something that clearly the student thought about it and did it by himself or herself, it would be a little too harsh to take the points off.’

M6 commented that her expectations for proofs of Proposition 2 would depend on whether students had enough time to think about what needs to be proved and convey it in a proof. When the interviewer asked her to confirm that she would be more lenient on an exam but would want students to provide the details on homework, she responded in the affirmative. M9 would accept IP 2 on an exam since she is looking for whether the student understood the relevant ideas. ‘If I was saying I just want [the student] to prove this on a test … then what I am looking for is could [they] make a convincing argument that somebody could read and be convinced by … ’ She continued, ‘[as the student], I would make sure I understood step-by-step what I was doing. I think [showing detail] is important for homework, and they should be near perfect.’

6. Discussion

In this study, we interviewed nine mathematicians about their evaluation of six proofs: three complete proofs and three incomplete proofs. Our analysis focused on the contextual features that influenced mathematicians’ evaluation processes.

We reported two major findings. First, the status of the proof’s author had a substantial impact on the way mathematicians approached their reading and evaluation. The importance of authorship has been highlighted in the literature on mathematicians’ motivations for reading mathematical proofs for their own research (e.g., Weber et al., 2014), but little was known about how mathematicians’ proof-reading practices differ when proofs are authored by students rather than by fellow mathematicians.

When speaking about a hypothetical lecturing context, several of our mathematicians asserted that they were not concerned about the technical accuracy of their colleagues’ proofs, noting that these proofs are most often shown to students to demonstrate the key ideas and may purposefully leave gaps for students to fill later, as they build a better understanding of the proof. Both of these sentiments were also reported in Weber’s (2012) similar study on mathematicians’ perspectives about pedagogical practice related to mathematical proof. In contrast, when evaluating ostensibly student-produced proofs, mathematicians were more concerned with technical detail and perceived gaps in the proof, citing the pedagogic necessity for students to articulate their understanding clearly throughout the proof.

Our second major finding is that context matters when assessing students’ proofs. That is, mathematicians reportedly evaluated student-produced proofs differently depending on the context in which they were grading. For example, three of our mathematicians reported assessing proofs differently in the context of homework, a quiz, or an examination. Five out of nine also commented on the role of the expectations that they had laid out in their classroom instruction. Several noted that if they had modeled using complete sentences, then they would expect the same from their students, especially in situations that were not time-constrained. In time-constrained situations, students are allowed more leeway in how they present their proofs.

These findings echo Lew and Mejía-Ramos (2019), who reported that mathematicians attended to the pedagogical context when deciding on appropriate standards of rigor and detail for student-produced proofs. Lew and Mejía-Ramos’ mathematicians noted that students are allowed to get away with things like purely symbolic mathematical phrases in an ITP course, but that such phrases would not be acceptable in their own professional communications (e.g., journal submissions).

6.1. Implications for research

Our mathematicians appeared to agree that the level of the course they are teaching affects what they expect students to be able to do and that the type of assessment determines what technical details are required. Yet, it is unclear how this interaction plays out, how these contextual factors govern students’ introduction to mathematical proof writing and the extent to which mathematicians agree on appropriate pedagogical practices with respect to proof writing.

In particular, there is disagreement on when students should be introduced to the linguistic conventions of proof writing. For some participants in our study, it seems that these can be left until later in the mathematical apprenticeship, and that the courses they discuss should focus on more substantive mathematical content. While this notion of ‘cutting some slack’ for lower-level students has appeared in the literature on grading proof (e.g., Davies et al., 2020), it is more common to talk of the linguistic conventions of proof writing as belonging to an introductory course in proof writing (David & Zazkis, 2019; Lew & Mejía-Ramos, 2019). David and Zazkis (2019) characterized ‘Introduction to Proof’ courses as providing ‘opportunities for students to develop skills necessary to read and write proofs’ (p. 388). From their analysis of 176 publicly available syllabi, David and Zazkis concluded that while ITP courses vary substantively in mathematical content, most ‘emphasize proof-writing and formal mathematical argumentation as a major goal of the course’ (p. 399).

It is unclear from our data how strongly our participants hold these beliefs or where, if anywhere, they feel an emphasis on proof writing would be more appropriate. However, our data suggest an important source of variation underlying the goal purportedly common to most ITP courses. That is, at what point do we expect students to make the transition to writing proofs consistent with the linguistic conventions of the mathematical community? While the extant literature suggests that the answer to this question lies simply in ITP courses, our analysis suggests that the issue may be more complicated, warranting more dedicated research, both on the beliefs of mathematicians/practitioners and on empirical best practices for students.

As practitioners and researchers ourselves, we align with Lew and Mejía-Ramos (2019), who asserted that students should engage in writing about mathematics more often and much earlier in their schooling. However, we acknowledge that this belief is based on professional judgement rather than empirical research.

To generate the relevant empirical evidence in support of our beliefs, researchers will need assessment tools to evaluate students’ use and understanding of linguistic conventions. To this end, Lew and Mejía-Ramos (2019) proposed a mode of oral assessment based on reading mathematical texts aloud. Through such a read-aloud protocol, researchers may gain access to students’ contextualized thinking and their moment-to-moment processing of the proof at hand. Further, in such real-time assessment, both assessors and students have the opportunity to ask clarification questions, minimizing the possibility that one elusive definition, theorem, or ancillary idea could result in an otherwise capable student scoring low on a task that they are mostly equipped to answer. We need to be aware of how context may be influencing what we read or hear, and to consider ways beyond written work to assess students’ interpretations of the mathematics.

6.2. Implications for practitioners

Having established the importance of contextual factors in grading, we advocate for a greater emphasis on communication between practitioners and students. In particular, our earlier analysis has highlighted the importance of instructors’ expectations in determining grading standards. It is important not only that practitioners be cognizant of their expectations, but also that these expectations be clearly communicated to students.

Prior research (Miller et al., 2018; Moore, 2016) has concentrated on assessing proofs under a traditional grading scheme (a score out of a total number of points for a particular proof), but mathematicians may want to consider alternative schemes such as standards-based grading for proofs (Cooper, 2020; Stange, 2018). With these grading schemes, mathematicians can focus on values and norms that are important to the mathematical community (Dawkins & Weber, 2017) and make the intentions behind the assessment of proof more explicit for students. Further, research on feedback suggests that students attend more closely to instructors' comments when points or grades are not given (Butler, 1988; Pulfrey et al., 2011), which may encourage them to use professors' feedback to revise their proofs. We believe that this is crucial in helping students gain a better understanding of mathematical proof.

Finally, we want to emphasize that a large part of what we want to accomplish in an Introduction to Proof course is helping students learn mathematicians' processes along with the discourse of the discipline. Often, all that students see from a mathematician is a final, polished product. As pointed out by, for example, Tanswell and Rittberg (2020) and Weber et al. (2014), mathematicians' proving practice is messy, fraught, and full of uncertainty, and it is pedagogically valuable for students to be exposed to this process early and often in their education. Hence, we call for structuring the course in a way that promotes multiple drafts and revision before students arrive at a final product (Jansen et al., 2017). Assessment practices should be aligned with this approach: students should receive frequent feedback in low-stakes settings as they work toward highly polished proofs, while high-stakes assessments should focus on logical reasoning, with less emphasis on polished presentation.

7. Final remarks

It is clear that context plays an important role when mathematicians assess proofs. In this study, we have discussed the role of authorship in this process and documented some of the contextual factors influencing evaluation. We do not intend our analysis to be an exhaustive taxonomy of such factors. Rather, we intend to promote a more intentional discourse about the role of context, and to advance the claim that both researchers and practitioners are better placed to serve students when context is at the forefront of their practice.

Conflicts of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

References

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101.

- Butler, R. (1988). Enhancing and undermining intrinsic motivation: The effects of task-involving and ego-involving evaluation on interest and performance. British Journal of Educational Psychology, 58(1), 1–14. doi:10.1111/j.2044-8279.1988.tb00874.x

- Cobb, P., & Yackel, E. (1996). Sociomathematical norms, argumentation, and autonomy in mathematics. Journal for Research in Mathematics Education, 27(4), 458–477.

- Cooper, A. (2020). Techniques grading: Mastery grading for proofs courses. PRIMUS, 30(8–10), 1071–1086. doi:10.1080/10511970.2020.1733151

- David, E. J., & Zazkis, D. (2019). Characterizing introduction to proof courses: A survey of U.S. R1 and R2 course syllabi. International Journal of Mathematical Education in Science and Technology, 51(3), 388–404. doi:10.1080/0020739X.2019.1574362

- Davies, B., Alcock, L., & Jones, I. (2020). Comparative judgement, proof summaries and proof comprehension. Educational Studies in Mathematics, 105(2), 181–197. doi:10.1007/s10649-020-09984-x

- Dawkins, P. C., & Weber, K. (2017). Values and norms of proof for mathematicians and students. Educational Studies in Mathematics, 95(2), 123–142. doi:10.1007/s10649-016-9740-5

- Hanna, G. (1989). More than formal proof. For the Learning of Mathematics, 9(1), 20–23.

- Hersh, R. (1997). Prove - Once More and Again. Philosophia Mathematica, 5(2), 153–165. doi:10.1093/philmat/5.2.153

- Inglis, M., Mejía-Ramos, J. P., Weber, K., & Alcock, L. (2013). On mathematicians’ different standards when evaluating elementary proofs. Topics in Cognitive Science, 5(2), 270–282. doi:10.1111/tops.12019

- Jansen, A., Cooper, B., Vascellaro, S., & Wandless, P. (2017). Rough-draft talk in mathematics classrooms. Mathematics Teaching in the Middle School, 22(5), 304–307. doi:10.5951/mathteacmiddscho.22.5.0304

- Larvor, B. (2012). How to think about informal proofs. Synthese, 187(2), 715–730. doi:10.1007/s11229-011-0007-5

- Lew, K., & Mejía-Ramos, J. P. (2019). Linguistic conventions of mathematical proof writing at the undergraduate level: Mathematicians’ and students’ perspectives. Journal for Research in Mathematics Education, 50(2), 121–155. doi:10.5951/jresematheduc.50.2.0121

- Lew, K., & Mejía-Ramos, J. P. (2020). Linguistic conventions of mathematical proof writing across pedagogical contexts. Educational Studies in Mathematics, 103(1), 43–62. doi:10.1007/s10649-019-09915-5

- Manin, Y. (1977). A course in mathematical logic for mathematicians. Springer-Verlag.

- Martin, U., & Pease, A. (2013). Mathematical practice, crowd-sourcing and social machines. In International Conference on Intelligent Computer Mathematics (pp. 98–119). Springer. doi:10.1007/978-3-642-39320-4_7

- Miller, D., Infante, N., & Weber, K. (2018). How mathematicians assign points to student proofs. Journal of Mathematical Behavior, 49, 24–34. doi:10.1016/j.jmathb.2017.03.002

- Moore, R. C. (2016). Mathematics professors' evaluation of students' proofs: A complex teaching practice. International Journal of Research in Undergraduate Mathematics Education, 2(2), 246–278. doi:10.1007/s40753-016-0029-y

- Pulfrey, C., Buchs, C., & Butera, F. (2011). Why grades engender performance-avoidance goals: The mediating role of autonomous motivation. Journal of Educational Psychology, 103(3), 683–700. doi:10.1037/a0023911

- Sfard, A. (2008). Thinking as communicating: Human development, the growth of discourses, and mathematizing. Cambridge University Press. doi:10.1017/CBO9780511499944

- Stange, K. (2018). Standards-based grading in an introduction to abstract mathematics course. PRIMUS, 28(9), 797–820. doi:10.1080/10511970.2017.1408044

- Tanswell, F. S., & Rittberg, C. J. (2020). Epistemic injustice in mathematics education. ZDM, 52(6), 1199–1210. doi:10.1007/s11858-020-01174-6

- Weber, K. (2018). The role of sourcing in mathematics. In J. Braasch, I. Bråten, & M. McCrudden (Eds.), Handbook of multiple source use (pp. 238–253). Routledge.

- Weber, K., & Czocher, J. (2019). On mathematicians’ disagreements on what constitutes a proof. Research in Mathematics Education, 21(3), 251–270. doi:10.1080/14794802.2019.1585936

- Weber, K., Inglis, M., & Mejía-Ramos, J. P. (2014). How mathematicians obtain conviction: Implications for mathematics instruction and research on epistemic cognition. Educational Psychologist, 49(1), 36–58. doi:10.1080/00461520.2013.865527

- Weber, K., & Mejía-Ramos, J. P. (2011). Why and how mathematicians read proofs: An exploratory study. Educational Studies in Mathematics, 76(3), 329–344. doi:10.1007/s10649-010-9292-z