Abstract

This study investigated upper secondary school students’ skills in evaluating the credibility and argumentative content of a blog text and a YouTube video. Both sources concerned child vaccination, the blog text opposing and the YouTube video supporting it. Students rated each source as credible, fairly credible or non-credible, justified their ratings, and analyzed the argumentation of both sources. Their justifications were analyzed for trustworthiness and expertise and their argument analyses for identification of the main position of the source and the reasons supporting it. Students’ justification skills proved fairly weak, and they also struggled with recognizing unbalanced argumentation. Students’ skill in analyzing the argumentation used in the sources also proved inadequate, especially in the blog text task. Overall academic achievement significantly predicted students’ credibility evaluation and argument analysis skills. The results suggest that greater emphasis should be placed on tasks involving the interpretation and analysis of online information.

Introduction

The internet offers a favorable environment for disseminating inaccurate and biased information, including misinformation and disinformation (Walsh, 2010). This is possible as anyone can publish freely on the internet without being subject to the quality control mechanisms (Zubiaga et al., 2018) or traditional gatekeeper models (Metzger et al., 2010) that have been instituted to uphold the credibility standards of online information. When defining bias, Walton (1991) focuses particularly on the argumentative content of the source. According to him, the basic idea of bias is a lack of appropriate balance or neutrality in argumentation, that is, a tendency to consistently and unduly favor one side over the other rather than weighing the arguments on both sides. Further, Walton stresses that bias also involves an absence of critical doubt, which appears in a tendency to push the viewpoint one supports, thereby implying a departure from reasoned argumentation and critical discussion. Another reason for bias may be that an arguer has ulterior intentions or something to gain based, e.g., on some ideological or commercial interest (Kammerer et al., 2015).

With the rapid growth of electronic commerce, for example, where purchasing decisions are increasingly made in online environments, lack of objectivity in the information given about merchandise can be misleading (Xiao & Benbasat, 2015), exposing shoppers to commercial manipulation. Inaccurate or biased information is also harmful to citizens and society as it threatens democratic political processes and the democratic values that shape public policies in such life domains as health, science, and finance (Broniatowski et al., 2018; Kahne & Bowyer, 2017). For example, internet searches are commonly made for health-related information (Chen et al., 2018) despite the fact that many health and nutrition websites are inaccurate or commercially biased (see Kammerer et al., 2016) and may even contain distorted information (Chen et al., 2018). Thus, important decisions pertaining to health issues may be more strongly affected by biased and flawed argumentation advanced by manufacturers than by rational argumentation and objective comparison of alternatives.

Because biased online information may have harmful effects, protecting people from deceitful information is important. As digitalization increasingly penetrates all areas of life, the role of schools in promoting the capacity of young people to use and identify accurate and useful information becomes increasingly important (Kahne & Bowyer, 2017). Promoting students’ skills in evaluating the credibility of online information and analyzing the quality of its argumentation is of particular importance in helping students discriminate between reliable and unreliable online sources. Indeed, research has shown analytical thinking and argumentation to be important for the critical evaluation of online information. Pennycook and Rand (2019) found that the ability to discern partisan fake news from real news was associated with the propensity to engage in analytical reasoning. Further, Bronstein et al. (2019) found that people over 18 with reduced analytic thinking were prone to believe in fake news.

The present study investigated upper secondary school students’ ability to evaluate the credibility and argumentative content of two online social media resources familiar to young people, namely a blog text and a YouTube video. The two sources represented opposite viewpoints on a current health-related topic, child vaccination. To find out to what extent students paid attention to source bias, both sources were selected to include flawed and unbalanced argumentation favoring only one side of the question of whether it is wise to vaccinate children or not.

Literature review

Evaluation of credibility and argumentation

Credibility can be defined as the believability of a source or message and has two primary dimensions: trustworthiness and expertise (Flanagin & Metzger, 2008). Tseng and Fogg (1999) characterize expertise in terms of being knowledgeable, experienced, and competent, and trustworthiness in terms of being well-intentioned, truthful, and unbiased.

When evaluating the credibility of online sources for expertise, various source information and source features (Bråten et al., 2018) are often of interest, including document type, when and why the document was published, whether the author’s or organization’s contact information is given, and the author’s affiliation and qualifications (Metzger, 2007; Paul et al., 2017). When evaluating trustworthiness, various content features of the website are important, e.g., how objective, up to date, and in-depth the information provided is (Metzger, 2007) and whether there are indications of source bias. Source bias may appear in unbalanced argumentation, when only one side of the issue is covered and favored over the other, or in hidden intentions or motives of the writer related, e.g., to political or commercial interests (Kiili et al., 2018; Walton, 1991).

Evaluating the argumentative quality of a text requires the reader to pay attention to its argumentative structure. Toulmin (1958) defines the following main argumentative elements for analyzing the structure of argumentative texts: a claim is the argument’s conclusion, data are the facts cited as premises for the claim, and a warrant is a general operating principle bridging the data and the claim (Fulkerson, 1996, p. 18; Nussbaum, 2011). In an argumentative text, a claim or a conclusion presents the writer’s main position or message. In a high-quality argumentative text, the main claim is clearly stated and supported by relevant and sufficient premises (Fulkerson, 1996).

Argumentation plays an important role when evaluating the trustworthiness of online sources, as one should be able to assess whether the premises underlying the views presented are relevant and sufficient (Fulkerson, 1996), whether the text harbors hidden motives (e.g., economic or ideological), and whether the premises for and against the claim receive equal weight in the source.

The terms grounds (Osborne et al., 2004), evidence (Forzani, 2016) and reason (Means & Voss, 1996) are also used when referring to elements of an argument justifying a claim or a conclusion. In this study, the term “reason” is used to refer to elements of arguments supporting the claim or conclusion, and “argumentation perspective” to refer to categories of reasons representing a specific perspective, such as a financial, ethical or health-related perspective. Xu and Yao (2015) state that the justifications or reasons representing various argumentation perspectives indicate the breadth of argumentation, a further necessary criterion for high-quality argumentation. Breadth of argumentation, or space of debate as van Amelsvoort et al. (2007) put it, is defined as the number of topics and subtopics mentioned that relate to the issue discussed: The higher the number of topics and subtopics related to the arguments used in the discussion, the higher the argumentative quality of the discussion.

Previous research on evaluation of online information

It is easily assumed that because young people are fluent in using the internet and social media, they are equally savvy about what they find there. However, recent research suggests otherwise. Coiro et al. (2015) found that 7th graders were unable to justify whether the author of an online source was an expert in the field the source represented. Further, the Stanford History Education Group (2016) showed that students at different educational levels had poor skills in evaluating information delivered through social media channels, and thus were at risk of being duped by disinformation, including flawed argumentation. Likewise, Miller and Bartlett (2012), in their survey (n = 509) of primary and secondary school teachers, reported that students commonly struggle to recognize biased information and may use information that has been deliberately produced to be misleading. Similarly, Kiili et al. (2018) found that only about half of their sample of 6th grade elementary school students questioned the credibility of a commercial online source and only about a fifth fully recognized commercial bias. Among adults, Fogg et al. (2003) asked regular web users (n = 2,684; average age 39.9) to comment on the credibility of preselected websites representing various content categories. Only 11.6% of the participants’ credibility comments referred to information bias.

Kiili, Laurinen, and Marttunen (2008) found that upper secondary school students seldom evaluated the credibility of online information and that when they did, they mainly attended to such aspects as the publisher, author or expert interviewed in the source. Cognitively demanding evaluation strategies, such as the evaluation of argumentation or comparison of texts, were rarely used. At college level, McClure and Clink (2009) examined English composition students’ research essays to determine the amount of attention students gave to analyzing and crediting online sources, particularly their timeliness, authority, and bias. They found that students not only struggled with understanding bias but also ignored it when it hampered their writing.

Previous research has shown that students' critical thinking has a positive association with their academic achievement (e.g., Fong et al., 2017; Ghanizadeh, 2017; Marin & Halpern, 2011). Similarly, university applicants' argumentative writing skills predicted their academic achievement in university (Preiss et al., 2013). These findings stress the importance of academic achievement in terms of students’ argumentation and critical thinking. In this study we explored this issue further by studying whether upper secondary school students' academic achievement is associated with their credibility evaluation and argument analysis skills.

Prior studies also suggest that girls perform better than boys in critical evaluation of online sources (Forzani, 2016; Taylor & Dalal, 2017), that prior knowledge on a topic enhances students' critical evaluation of sources on similar topics (Forzani, 2016), and that offline and online reading skills are intertwined, although their relationship remains unclear (Leu et al., 2013; Kiili et al., 2018). Further, prior knowledge occupies an important role in readers’ activities during offline reading comprehension (e.g., Means & Voss, 1985) but in online reading contexts its role is more complex (Coiro, 2011). To explore these issues further, this study investigated to what extent students’ performance in the critical and argumentative evaluation of online sources can be explained by their gender, prior knowledge on the topic, and offline reading fluency.

Aim and research questions

Many studies have shown that students commonly struggle in evaluating the credibility of online sources and recognizing bias. Deficiency in credibility evaluation is a serious problem as, e.g., bloggers and video bloggers have a lot of influence particularly on young people who often adopt their ideas despite the absence of guarantees on the trustworthiness, objectivity, and unbiased nature of information disseminated in social media. Thus, further knowledge is needed on how students evaluate online sources low in credibility.

Students’ recognition of bias in online information has been widely investigated. However, to our knowledge, no studies exist in which students have evaluated the credibility of argumentatively unbalanced online sources representing different modalities (text, video) and addressing the same topic from opposite viewpoints (for and against). Such a comparative study may further understanding of whether young people differ in how they deal with unreliable information presented through different social media and whether they are more likely to be misled by erroneous and unobjective information presented via one type of social media rather than another. Further, this information is needed in developing pedagogy to help future citizens recognize inaccurate information and fake news (Vosoughi et al., 2018).

This study investigated how students deal with two online social media sources frequently used by young people: a written blog and a multimodal video. Upper secondary school students were given a blog text and a YouTube video, one supporting and the other opposing the same currently contested topic (child vaccination). These online sources were deliberately selected for their unbalanced argumentation on the topic and hence questionable credibility. Both sources also lacked credibility in that neither was authored by an expert in the field (vaccination) and neither contained any references to support the arguments presented. Students’ skills in evaluating these online sources for argumentative content and credibility were explored. Also examined was whether students’ skills can be explained by gender, prior knowledge on the topic, reading fluency, and previous academic achievement. The research questions were:

1. How skilled are upper secondary school students in evaluating the credibility of unreliable online sources?

1.1 How do students rate the credibility of unreliable sources?

1.2 How do students justify the lack of credibility of unreliable sources?

1.3 Do students identify unbalanced argumentation?

2. How skilled are upper secondary school students in analyzing the argumentative content of unreliable online sources?

3. To what extent are students’ skills explained by gender, prior knowledge on the topic, reading fluency, and previous academic achievement?

Method

Subjects

Participants were 404 upper secondary school students (94.6% aged 17) from seven schools in Finland. There were 60.4% female students and 38.9% male students (missing information 0.7%). Most (79.2%) of the participants were students in three large urban upper secondary schools and the rest (20.8%) students in four small and middle-sized schools in semi-urban and rural areas.

Written consent to participate in the study was obtained from the parents of underage students. Our research was approved by all the participating upper secondary schools and involved only minimal risk for participants. According to the Finnish standard for institutional review board approvals, a statement by the Ethical Board of the University was not required.

Study context

This study is part of a larger research project on Finnish upper secondary school students’ argumentative online inquiry competencies. In the project, students completed an online task in which they composed an argumentative letter to imaginary Finnish members of parliament on the advantages and disadvantages of child vaccination, aimed at informing their legislative work on this issue. The online task followed an online research and comprehension framework (Leu et al., 2013), including sub-tasks on information search, evaluation, synthesis, and communication. This article reports the results of the analyses of a sub-task on students’ evaluations of the credibility and argumentative content of two online sources, corresponding to the evaluation component of the framework by Leu et al. (2013).

The two sources, a blog text and a multimodal YouTube video, viewed child vaccination from opposite standpoints. The Finnish blog text (3 pages, 967 words) presented several potential disadvantages of vaccination by appealing to various side effects of vaccines, the financial benefits of vaccination for the pharmaceutical industry, and emotional arguments by describing situations in which parents have reported harmful effects of vaccines on their children. The blog text concluded that children should not be vaccinated. In contrast, the YouTube video presented arguments for vaccination, concluding that it is wise to vaccinate children. In the video, two US comedians (Penn and Teller) argued for vaccination by means of humor, music, sound effects, and visual demonstrations such as gestures and motion. The comedians also cited statistics on both the effectiveness of vaccinations and their minimal injurious effects and described the potential negative effects of not vaccinating children. The video, in English with Finnish subtitles, lasted for 1.5 minutes.

Data collection

Prior knowledge and reading fluency

In this study, students’ prior knowledge of child vaccination was assessed with ten statements, three of which were correct and seven false. The statements were selected based on both current scientific knowledge and common misinformation disseminated by anti-vaccination movements. To strengthen content validity, two university lecturers in health sciences and a medical expert reviewed the statements. Students were asked to select the three statements they thought were correct. Students’ prior knowledge scores varied from 0 to 3 depending on the number of correct statements selected.

Reading fluency was measured by a word chain test (Holopainen et al., 2004). The task was to separate, within 90 seconds, as many as possible of a series of 100 words written in four-word clusters without spaces in between (Kiuru et al., 2011).

Credibility evaluation and argument analysis tasks

To evaluate the credibility of the online sources, the students completed two tasks, one for the blog text and one for the YouTube video. In both tasks, the students first rated the credibility of the source by answering a multiple-choice question (“How credible do you think the text/video is?”) with one of three response options (credible, fairly credible, not credible), and then justified their rating in writing in response to the prompt “Justify your choice: why do you think this?”

Students also completed two tasks on the argumentative content of the online sources, one for the blog and one for the video. In both tasks, the students wrote down the main position on child vaccination they identified in the source and the most important justifications for that position given in the source.

Data analysis

Students’ credibility evaluation skills

First, the frequencies of the students’ responses (n = 404) to the multiple-choice questions on the credibility of each online source were calculated. Next, the students’ justifications for their credibility ratings (n = 403) were analyzed for trustworthiness and expertise. On trustworthiness, students scored points when they appealed to content-related issues: use of citations, research-based information, and unbalanced argumentation (Tseng & Fogg, 1999; Metzger, 2007; Walton, 1991). On expertise, the students scored points when they cited external characteristics of the source, e.g., author attributes, form of the web source, and organization(s) affiliated with the web page (Metzger, 2007).

As both sources included unbalanced argumentation on the topic, their credibility was deemed questionable in principle. Consequently, students scored 0 points if they had not questioned the credibility of the source, i.e., had selected the response option “credible”. When they had questioned credibility, i.e., chosen the option “fairly credible” or “not credible”, the response was scored from 1 to 4 depending on how well the lack of credibility had been justified. A response with no justifications scored 1 point, and one or more justifications relating either to expertise or to trustworthiness scored 2 points. Identification of unbalanced argumentation was emphasized in the scoring. Thus, 3 points were awarded when lack of credibility had been justified by appealing to unbalanced argumentation. Identification of unbalanced argumentation is illustrated in the following two examples of students’ justifications (see Table 1): The text is based only on the disadvantages of vaccination and totally ignores its advantages (Student 428, Blog text); The issue was treated only from one side and the attitude for not using vaccines was initially negative (Student 174, YouTube video). Justifications including both expertise and trustworthiness, although without reference to unbalanced argumentation, also earned 3 points. The highest score (4 points) was given when the justifications included, at the least, both expertise and reference to unbalanced argumentation (Table 1).

Table 1. Examples of scoring students’ responses to the credibility evaluation tasks.
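The credibility scoring rules described above can be read as a small decision procedure. The following Python sketch is one possible encoding of those rules, not the authors' actual coding instrument. The function name and the three boolean flags are hypothetical, and the sketch assumes that each written justification has already been hand-coded for expertise, trustworthiness, and unbalanced argumentation.

```python
def credibility_score(rating, expertise, trustworthiness, unbalanced):
    """Return a 0-4 credibility evaluation score for one response.

    rating          -- "credible", "fairly credible", or "not credible"
    expertise       -- justification cites external source characteristics
    trustworthiness -- justification cites content-related issues
    unbalanced      -- justification identifies unbalanced argumentation
    All names are illustrative assumptions, not taken from the study.
    """
    if rating == "credible":
        return 0  # credibility was not questioned
    if expertise and unbalanced:
        return 4  # expertise plus unbalanced argumentation
    if unbalanced or (expertise and trustworthiness):
        return 3  # unbalanced argumentation, or both dimensions without it
    if expertise or trustworthiness:
        return 2  # justifications on one dimension only
    return 1      # credibility questioned but not justified
```

For instance, a response rating the blog as "not credible" and pointing only to its one-sided treatment of vaccination would receive 3 points under this encoding.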

To test the reliability of the analysis criteria of students’ justifications, 15% of the data were selected at random and analyzed by two independent raters, reaching 82% agreement (Cohen’s kappa, κ = .74).
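Cohen's kappa corrects the raw agreement rate for the agreement expected by chance. As a minimal sketch of the standard formula, κ = (p_o − p_e) / (1 − p_e), the following Python function computes kappa for two raters. The function name and the example scores are hypothetical and are not the study's data.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two equally long lists of category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical 0-4 scores given by two raters to ten responses.
scores_a = [0, 1, 2, 2, 3, 4, 1, 2, 3, 2]
scores_b = [0, 1, 2, 3, 3, 4, 1, 2, 2, 2]
kappa = cohen_kappa(scores_a, scores_b)
```

Under this formula, an observed agreement of 82% together with κ = .74 would correspond to a chance agreement of roughly .31.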

Students’ argument analysis skills

Analysis of students’ skills to analyze the argumentation of the blog text and the YouTube video proceeded in two phases. First, the researchers analyzed the argumentation of these two online sources. Next, the students’ responses to the argument analysis tasks were compared with the argumentation in the sources.

Argumentation of the online sources

First, all the reasons against vaccination in the blog text (42 reasons) and all those for vaccination in the video (5 reasons) were identified. The identified reasons were then classified into argumentation perspectives on a data-driven basis (Hsieh & Shannon, 2005).

For the blog text, the argumentation perspectives (an example of a reason in parentheses) were: 1) Consequences of vaccination (Vaccination results in serious, even fatal injury); 2) Effectiveness of vaccination (Going through things, one notices that vaccinations do not protect from diseases); 3) Consistency of vaccination (Viral vaccines can never be safe); and 4) Support in problematic situations (Children falling ill from vaccinations are given help by official bodies). Similarly, for the video, the argumentation perspectives were: 1) Consequences of vaccination (Unvaccinated people are more likely to contract diseases); and 2) Effectiveness of vaccination (Before Salk’s vaccine there were approximately 58,000 cases of polio).

Analyzing students’ responses

Students’ skills in analyzing the argumentation in the source were scored based on their responses to each task (397 for the blog and 403 for the video). Students who failed to correctly identify the position taken in the source scored 0 points. Students who correctly identified the source position scored from 1 to 4 depending on the quality of their identification (position quality) and how many argumentation perspectives they found to support that position.

Position quality was analyzed by investigating how well the position identified by a student corresponded to the source position, i.e., against vaccination in the blog and for vaccination in the video. Position quality indicated how well a student had understood (partially vs. fully) the main message of the source. In responses indicating partial understanding, the source position was correctly identified but either only implicitly embedded in the response or expressed in the form of a descriptive claim referring, for example, to specific harms or benefits of vaccines. Examples of descriptive claims were “Vaccines cause autism” (Student 416, Blog text) and “Vaccines protect people from severe diseases” (Student 95, YouTube video). Full understanding of the main message of the source was indicated by responses in which the source position was stated in the form of a correct conclusion drawn in the source. Examples of correct conclusions were “Children should not be vaccinated” (Student 335, Blog text) and “It is wise to vaccinate children” (Student 217, YouTube video).

The minimum requirement for a score of 1 in the blog task was that the student’s response included a position on child vaccination corresponding to that presented in the source (i.e., against vaccination; see Table 2). Students scored 2 when the source position (SP) was correctly identified and justified from two argumentation perspectives (AP), as illustrated in the following response by student 296 (analysis markings of the authors in parentheses): “The author is clearly against vaccinations (SP) and presents many justifications for her stand. She states that more children vaccinated against whooping cough contracted the disease than non-vaccinated children (AP 1, Effectiveness of vaccination). She also extensively describes the harm caused by vaccinations” (AP 2, Consequences of vaccination). Students also scored 2 when the response included a correct conclusion justified from one argumentation perspective. A score of 3 was given when the source position was correct and justified from three argumentation perspectives, or a correct conclusion was justified from two argumentation perspectives. A score of 4 was given when the response included a correct conclusion justified from at least three argumentation perspectives (Table 2).

Table 2. Examples of scoring of students’ responses to the blog text argumentation analysis task.

Students scored 1 on the YouTube video task when they correctly identified the source position taken on child vaccination (i.e., for vaccination; see Table 3). Students scored 2 when either the source position was correctly identified and justified from one argumentation perspective, or the conclusion was correct but lacked justifications. A score of 3 was given when the source position was correct and justified from two argumentation perspectives, or the conclusion (CO) was correct and justified from one argumentation perspective (AP). The latter option is illustrated in the response by student 354 (analysis markings of the authors in parentheses): “The main message is that vaccination is not useless (CO). The most important justification is the survival of vaccinated children” (AP, Effectiveness of vaccination). Students scored 4 when their response included a correct conclusion justified from both argumentation perspectives (Table 3).

Table 3. Examples of scoring of students’ responses to the YouTube video argumentation analysis task.
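The two argument analysis rubrics differ mainly in how many argumentation perspectives each source offers (four in the blog, two in the video). The Python sketch below is one hypothetical way to express both rubrics in a single function. The function and parameter names are illustrative, and two cases the text does not spell out are filled in by assumption and flagged in the comments: a blog response with a correct conclusion but no justification is scored 1, and a blog response with a partially understood position and four perspectives is scored 3.

```python
def argument_analysis_score(task, position_quality, n_perspectives):
    """Return a 0-4 argument analysis score for one response.

    task             -- "blog" or "video"
    position_quality -- None (position not identified), "position"
                        (partial understanding), or "conclusion" (full)
    n_perspectives   -- number of distinct argumentation perspectives
                        used to justify the position
    """
    if position_quality is None:
        return 0
    if position_quality not in ("position", "conclusion"):
        raise ValueError("unknown position quality")
    full = position_quality == "conclusion"
    if task == "blog":
        n = min(n_perspectives, 3)
        if full:
            # 1, 2, 3+ perspectives -> 2, 3, 4 points.
            # Assumption: a conclusion without justification scores 1.
            return n + 1
        # 0-1 perspectives -> 1 point; 2 -> 2; 3 -> 3.
        # Assumption: a fourth perspective does not raise the score.
        return max(1, n)
    if task == "video":
        n = min(n_perspectives, 2)
        # Position: 1, 2, 3 points; conclusion: 2, 3, 4 points.
        return 1 + n + (1 if full else 0)
    raise ValueError("task must be 'blog' or 'video'")
```

Under this encoding, the response by student 296 (source position with two perspectives, blog task) scores 2, and the response by student 354 (correct conclusion with one perspective, video task) scores 3, matching the examples given in the text.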

To test the reliability of the analysis criteria for both the blog and the video, two independent raters classified 15% of the data selected at random, reaching 87% agreement (Cohen’s kappa, κ = .79).

Variables used in the study

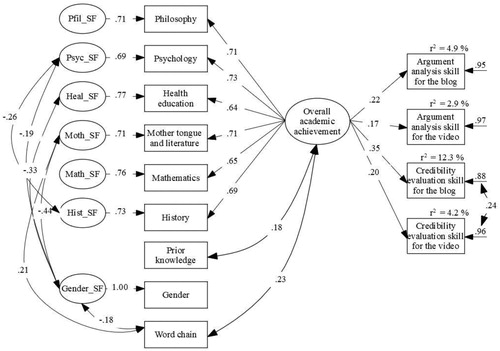

The dependent variables used in this study were formed based on the students’ credibility ratings, credibility evaluation, identification of unbalanced argumentation, and argument analysis for each source (Table 4).

Table 4. Variables used in the study.

Students’ most recent grades in various upper secondary school subjects, performance in the word chain test, prior knowledge on vaccination, and gender were used as independent variables (Table 4). In Finland, academic performance in various subjects in upper secondary schools is rated from 4 to 10. Moreover, in the Finnish course-based school system at upper secondary level (European Commission, 2019), both schools and students have considerable freedom in organizing studies. Hence, the number of courses taken in different subjects may vary considerably between students, as was the case here. In addition, some students did not consent to the use of their school grades for research purposes, which increased the amount of missing data. The proportion of missing data for the grades ranged from 15% to 32%.

Statistical analyses

To answer research questions (RQ) one and two, descriptive statistics on the level of students’ credibility evaluation and argument analysis skills were used. Additionally, Wilcoxon’s signed-rank test was applied to compare students’ credibility ratings for the blog text and video, and McNemar’s test was used to compare how students identified unbalanced argumentation in the sources (Davis, 2013). Furthermore, a paired-samples t-test was applied to compare the blog text and the video in terms of students’ overall performance in credibility evaluation and argument analysis (Davis, 2013).
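Two of the paired comparisons named above have simple closed forms. The following Python sketch shows the textbook McNemar chi-square, computed from the two discordant-pair counts, and the paired-samples t statistic, computed from per-student score differences. It is an illustration only: the function names and the example data are hypothetical, and whether the study applied a continuity correction to McNemar's test is not stated, so the uncorrected version is assumed here.

```python
import math
from statistics import mean, stdev


def mcnemar_chi2(only_first, only_second):
    """McNemar chi-square (1 df), assuming no continuity correction.

    only_first  -- students identifying the feature in the first source only
    only_second -- students identifying it in the second source only
    """
    discordant = only_first + only_second
    if discordant == 0:
        raise ValueError("no discordant pairs")
    return (only_first - only_second) ** 2 / discordant


def paired_t(scores_x, scores_y):
    """Paired-samples t statistic and its degrees of freedom."""
    diffs = [x - y for x, y in zip(scores_x, scores_y)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical scores of six students on the blog and video tasks.
blog = [3, 2, 4, 2, 1, 3]
video = [2, 2, 3, 1, 1, 3]
t_value, df = paired_t(blog, video)
chi2 = mcnemar_chi2(30, 10)  # hypothetical discordant counts
```

Both functions use only the paired structure of the data: concordant pairs drop out of McNemar's statistic, and the t statistic depends only on the within-student differences.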

To answer RQ 3, Structural Equation Modeling (SEM) was applied (Kline, 2011). All SEM analyses were conducted using Mplus 7.4. Maximum likelihood estimation with robust standard errors (MLR) was used as the estimation method, as the variables showed slight non-normality (skewness values from −.68 to .45 and kurtosis values from −.80 to 1.20).

In the analysis, Overall academic achievement, Prior knowledge of the topic, Reading fluency, and Gender were independent variables, and Argument analysis and Credibility evaluation skills were dependent variables. School grades served as indicators in a bi-factor model: Overall academic achievement was modeled as a general factor for which all the individual grades were indicators. To complement the general factor, specific factors for each individual grade were estimated to model variance not accounted for by the general factor.

The following fit indices and cutoff values for acceptable model fit were used: a non-significant χ2 test (p > .05), a Root Mean Square Error of Approximation (RMSEA) value under .06, and Tucker-Lewis Index (TLI) and Comparative Fit Index (CFI) values over .95 (Hu & Bentler, 1999). Effect sizes for the repeated measures tests were reported as Cohen’s d (Cooligan, 2017). For the non-parametric tests, the effect sizes were transformed to Cohen’s d. For the SEM analyses, R-squared values were calculated.
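To make the cutoff criteria concrete, the sketch below computes RMSEA from a chi-square value using a common textbook formula, sqrt(max(χ2 − df, 0) / (df (N − 1))), and checks a set of fit indices against the cutoffs listed above. The function names are hypothetical. The formula is an assumption about how the index can be approximated: SEM software may use N instead of N − 1 and, under MLR estimation, a scaled chi-square. The example values are those reported for the final model in the Results section, with N = 404 assumed as the sample size.

```python
import math


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from chi-square, df, and sample size."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def acceptable_fit(p_value, rmsea_value, cfi, tli):
    """Apply the cutoffs named in the text (Hu & Bentler criteria)."""
    return (p_value > 0.05 and rmsea_value < 0.06
            and cfi > 0.95 and tli > 0.95)


# Values reported for the model: chi2(51) = 66.31, p = .073,
# CFI = .98, TLI = .97; N = 404 is an assumption.
estimate = rmsea(66.31, 51, 404)
fits = acceptable_fit(0.073, estimate, 0.98, 0.97)
```

With these inputs the estimate rounds to .03, in line with the RMSEA value reported for the model.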

Results

Students’ credibility ratings of unreliable online sources

More than half of the students (59.1%) considered the blog text fairly credible, slightly more than one-third (37.5%) not credible, and only a few (3.5%) credible. Similarly, the majority (53.1%) judged the video to be fairly credible and 38.2% not credible. In contrast to the blog, nearly a tenth (8.7%) of the students considered the video credible. The difference between the students’ credibility ratings for the blog and the video was not statistically significant (Z = −1.20, p = .232).

Students’ skills in evaluating the credibility and analyzing the argumentative content of unreliable online sources

Students’ skill in evaluating the credibility of the online sources proved rather weak. Although the students commonly questioned the credibility of both sources, they justified the non-credible nature of the sources poorly. More than half of the students, 50.3% for the blog and 58.8% for the video, offered no justifications or appealed only to either expertise or trustworthiness (1-2 points, Table 5). Students’ performance on the blog (M = 2.4) and video (M = 2.1) tasks differed significantly (t = 4.68, df = 402, p < .001, d = .29).

Table 5. Level of credibility evaluation and argument analysis for the blog text and the YouTube video.

As in the credibility evaluation, students’ skill in analyzing the argumentation in the online sources was fairly weak. This was especially apparent in the blog text task. The majority (76.3%; 0-2 points in total) failed to identify the correct position taken in the text (0 points), identified it but with either no or one justification (1 point), or found at most two of the four argumentation perspectives (2 points; Table 5). Further, only 2.8% of students correctly identified the main conclusion and several justifications for it (4 points). Students performed somewhat better in the video task, where the corresponding proportions were 53.3% (0-2 points) and 10.1% (4 points). The difference between the blog (M = 1.9) and video (M = 2.3) was statistically significant (t = −7.14, df = 395, p < .001, d = .47).

Students’ identification of unbalanced argumentation

The study also investigated whether students had identified unbalanced argumentation as a justification for the non-credible nature of the sources. The results showed that this was mentioned by only slightly more than a third of the students (37.4%) in the blog text and about a fifth (21.0%) in the video. The difference between the blog and the video was significant (χ2(1) = 31.06, p < .001, d = .57).
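The article states that effect sizes for the non-parametric tests were transformed to Cohen’s d but does not give the formula. As a minimal sketch, the Python code below follows one common route, assuming r = |Z| / sqrt(N) for a Z statistic, r = sqrt(χ2 / N) for a 1-df chi-square statistic, and d = 2r / sqrt(1 − r^2). The function names and the sample size `N_HYPOTHETICAL` are illustrative assumptions, not procedures or values taken from the study.

```python
import math


def r_to_d(r: float) -> float:
    """Convert a correlation-type effect size r to Cohen's d."""
    return 2 * r / math.sqrt(1 - r ** 2)


def z_to_d(z: float, n: int) -> float:
    """Cohen's d from a Z statistic, via r = |Z| / sqrt(N)."""
    return r_to_d(abs(z) / math.sqrt(n))


def chi2_to_d(chi2: float, n: int) -> float:
    """Cohen's d from a 1-df chi-square statistic, via r = sqrt(chi2 / N)."""
    return r_to_d(math.sqrt(chi2 / n))


# Assumed sample size for illustration only; it is NOT taken from the article.
N_HYPOTHETICAL = 400

# Test statistics as reported in the Results section.
print(round(z_to_d(-1.20, N_HYPOTHETICAL), 2))
print(round(chi2_to_d(31.06, N_HYPOTHETICAL), 2))
```

Because the result depends on N, the output would reproduce the reported d values only if the assumed sample size and conversion route coincide with those the authors used.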

Factors explaining students’ skills in credibility evaluation and argument analysis

The structural equation model describing the factors explaining students’ argument analysis and credibility evaluation skills, and its parameter estimates, is presented in Figure 1. The fit indices of the model were: χ2(51) = 66.31, p = .073, RMSEA = .03, CFI = .98, TLI = .97. Furthermore, all school subjects had significant and reasonable loadings, ranging from .64 to .73, on the Overall academic achievement factor. Correlations between independent variables were estimated as suggested by the modification indices.
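The reported fit indices can be compared with the cutoffs named in the analysis section (Hu & Bentler, Citation1999). The following Python sketch does so and, as a plausibility check, recomputes the RMSEA point estimate from χ2 and df using the usual formula sqrt(max(χ2 − df, 0) / (df (N − 1))). The helper names and the sample size `N_HYPOTHETICAL` are assumptions made for illustration; some software uses N rather than N − 1 in the denominator.

```python
import math

# Cutoffs for acceptable fit as stated in the text (Hu & Bentler, 1999).
CUTOFFS = {"p_min": 0.05, "rmsea_max": 0.06, "cfi_min": 0.95, "tli_min": 0.95}


def rmsea_point_estimate(chi2: float, df: int, n: int) -> float:
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def acceptable_fit(p: float, rmsea: float, cfi: float, tli: float) -> dict:
    """Check each reported index against its cutoff."""
    return {
        "chi2_nonsignificant": p > CUTOFFS["p_min"],
        "rmsea": rmsea < CUTOFFS["rmsea_max"],
        "cfi": cfi > CUTOFFS["cfi_min"],
        "tli": tli > CUTOFFS["tli_min"],
    }


# Fit indices as reported for the structural equation model.
reported = acceptable_fit(p=0.073, rmsea=0.03, cfi=0.98, tli=0.97)
print(reported, all(reported.values()))

# Assumed sample size for illustration only; it is NOT taken from the article.
N_HYPOTHETICAL = 400
print(round(rmsea_point_estimate(66.31, 51, N_HYPOTHETICAL), 3))
```

All four reported indices meet the stated cutoffs, so the check returns `True` for each entry.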

Figure 1. Standardized parameter estimates (significant at the .05 level) for the structural equation model explaining students’ skills in argument analysis and credibility evaluation.

Note. Ovals to the left of the grades refer to the specific factors for each grade (e.g., Phil_SF = specific factor for philosophy grade).

The structural equation model (Figure 1) shows that, for both online sources, students’ overall academic achievement was the only significant predictor of their argument analysis and credibility evaluation skills. However, its explanatory power for the video was rather weak, as it explained only 2.9% to 4.2% of the variance in the argument analysis and credibility evaluation tasks. By contrast, overall academic achievement was a somewhat stronger predictor of students’ skill in evaluating the credibility of the blog text, explaining 12.3% of the variance. Finally, the scores for the credibility evaluation of the blog text correlated positively (.24) with the scores for the credibility evaluation of the video.

Discussion

Although the students in this study commonly questioned the credibility of the online sources, their justifications for the non-credible nature of the sources were fairly weak, especially in the case of the YouTube video. As many as 58.8% of students either presented no justifications or appealed only to either authority or trustworthiness. Students also seemed to struggle in identifying unbalanced argumentation, as only slightly more than a third for the blog text and about a fifth for the video mentioned unbalanced argumentation as a reason for the non-credibility of the source. This result supports previous findings on students’ difficulty in recognizing bias in online sources at the primary and secondary levels (Kiili et al., Citation2018; Miller & Bartlett, Citation2012), and at college level (McClure & Clink, Citation2009).

To understand the weakness of students’ justifications for the non-credibility of the YouTube video, it is important to note that the video dealt solely with the advantages of vaccination, and thus echoed the official stance and a positive public attitude toward vaccination (e.g., The National Institute for Health and Welfare, Citation2019). The students probably found it unnecessary to put forward reasons against this generally accepted stance despite the obviously one-sided nature of the information source. Another explanation may be myside bias (Greene et al., Citation2019; Perkins, Citation1989), that is, a tendency to prefer one’s own position on an issue and ignore evidence for the opposite position. van Strien et al. (Citation2016) found that students seem to prefer, and to evaluate as more credible, information consistent with their own attitudes. Furthermore, research on motivated reasoning (e.g., Ditto et al., Citation1998; Kunda, Citation1990) suggests that pro-attitudinal information will be evaluated less thoughtfully than information that runs contrary to one's beliefs and attitudes. This is especially true for socio-scientific issues such as vaccination (Sinatra et al., Citation2014). People also seem to evaluate information differently if it comes from entertainment media rather than news media; entertainment media facilitate fairly stable attitudes, such as stereotypical or ideology-based judgments (Kim & Vishak, Citation2008).

Students’ skill in analyzing the argumentation contained in the online sources also proved insufficient, especially in the blog text task. This result is in line with previous findings that students have difficulties in identifying and evaluating arguments in linear texts (e.g., Larson et al., Citation2004; Marttunen et al., Citation2005). Although the students’ analysis of the argumentation of the video was also weak, it was nevertheless better than that of the blog text. One reason for this may be that the argumentative structure of the video was simpler than that of the blog text: the video contained far fewer reasons than the blog and only two argumentation perspectives in support of its main position, in contrast to the four argumentation perspectives in the blog text. The multimodal nature of the video may also have affected the result. Whereas the argumentation in the blog was mainly expressed via linear text, that in the video comprised both verbal and nonverbal communication, such as visuals, motion, and voice, used simultaneously to express its content. The multimodality of the video may have helped the students to understand and analyze its content (see Lee & List, Citation2019). Tseronis (Citation2018) states that visuals and other nonverbal means can be seen as argumentatively relevant because they can simply depict the argument, or elements of the propositions that constitute the argument, and convey the meaning of the source in more diverse ways than are possible with plain text.

Students’ lack of skills in analyzing argumentation and the credibility of online sources may, on the other hand, mirror a ‘why bother’ attitude stemming from skeptical or even cynical views (Vraga & Tully, Citation2021) that much online information is somehow biased in any case. Skepticism toward, e.g., politics and media in general is, however, considered a ‘healthy’ trait as it includes doubt and a questioning of certainty that encourages participation in a democratic society (Vraga & Tully, Citation2021) and enhances critical discussion on societal issues. For example, Maksl et al. (Citation2015) found that highly news media literate teens were more skeptical of the media and more motivated to consume news than their less news literate counterparts. Therefore, one objective of education should be to enhance students’ ability to engage actively in society by teaching them to be sufficiently critical when searching, evaluating, and utilizing online information.

In educational settings, students often evaluate online information through argumentative writing. Wolfe and Britt (Citation2008) found that when students prepare themselves for essay writing, for example, by taking notes that fail to include information incongruent with their own opinions, their essays tend to be one-sided. Students seem to have misconceptions about good argumentation and argumentative writing, possibly thinking that sound argumentation involves generating arguments only in support of one’s own position. However, high-quality argumentation also requires analyzing information from the other side and combining arguments and counterarguments into a final position (Nussbaum & Schraw, Citation2007). For this reason, developing students’ understanding of more sophisticated and versatile argumentative thinking is an important educational goal that should be accorded more attention to prevent students from being misled by inaccurate and flawed information. Previous studies (Bronstein et al., Citation2019; Pennycook & Rand, Citation2019) have shown, for example, that deficiencies in analytical thinking and reasoning are related to believing in biased information such as fake news.

The results also showed that students’ previous performance in individual school subjects did not explain their skills in either evaluating the credibility or analyzing the argumentative content of the two online sources. However, students’ overall academic achievement was found to be significantly associated with these skills. This result is in line with the study by Utriainen et al. (Citation2017), who found that university applicants' overall performance in the matriculation examination predicted their argument analysis skills. The present finding that students’ overall academic achievement, but not their achievement in individual school subjects, prior knowledge of the task topic, or reading fluency, explained their evaluation and analysis skills (cf. Kiili et al., Citation2018; Leu et al., Citation2015) points to the generic nature of credibility evaluation and argument analysis skills. However, it must be noted that, as in the study by Utriainen et al. (Citation2017), the proportions of variance explained remained rather low.

Owing to the rapid increase in internet use both in and out of school, it is very likely that current teaching practices do not yet adequately support students’ learning of the skills they need when they work with internet-sourced knowledge. This concern is supported by the weak associations found in this study between students’ previous school achievement and their credibility evaluation and argument analysis skills with online sources. These findings suggest that tasks in which students interpret and analyze online information presented in various modes, both written and multimodal, should be better integrated into the instruction given in different school subjects.

Limitations

This study was carried out in an authentic context as a part of students’ normal schoolwork in upper secondary school classrooms. Hence, the participating schools were not randomly selected and the data are not necessarily representative of all Finnish upper secondary school students. Thus, the results cannot be generalized beyond the present sample. A further limitation is that, owing to the authentic study situation in schools, the arguments and information presented were not counterbalanced across the blog and the video. Repeating the same task, albeit in a different medium, might have diminished students’ motivation to complete it. The task used in this study was also part of a large and time-consuming online inquiry task, and thus we did not consider it feasible to increase the students’ workload further.

Further, it should be noted that the two online sources used in this study differed in mode, language, length, and argumentative content, which complicates their comparison. To facilitate comparison, the focus was only on the verbal argumentation included in the sources, and thus many multimodal arguments based on, e.g., visuals, sounds, and gestures in the video were not taken into account. The comparison of the sources should therefore be treated with caution. Nevertheless, the study opened up interesting topics for further research comparing the credibility and argumentation of multimodal and text-based online sources.

Conclusion

Limitations aside, this study showed that many students performed poorly in evaluating the credibility and analyzing the argumentative content of two online sources of a kind commonly used by young people on social media: a written blog text and a YouTube video. The fact that many major life decisions are made on the basis of information acquired from the internet (Horrigan & Rainie, Citation2006) exposes individuals to the risk of basing these decisions on inaccurate or even flawed information. Thus, critical evaluation of information should be increasingly emphasized in schools to promote students’ skills in differentiating reliable from inaccurate information and to protect future citizens from being misled by the disinformation increasingly encountered on the internet.

Disclosure statement

None of the authors have conflicts of interest to declare.

References

- Bråten, I., Stadtler, M., & Salmerón, L. (2018). The role of sourcing in discourse comprehension. In M. F. Schober, D. N. Rapp, & M. A. Britt (Eds.), The Routledge handbook of discourse processes (2nd ed., pp. 141–166). Routledge.

- Broniatowski, D. A., Jamison, A. M., Qi, S., AlKulaib, L., Chen, T., Benton, A., Quinn, S. C., & Dredze, M. (2018). Weaponized health communication: Twitter bots and Russian trolls amplify the vaccine debate. American Journal of Public Health, 108(10), 1378–1384. https://doi.org/10.2105/AJPH.2018.304567

- Bronstein, M. V., Pennycook, G., Bear, A., Rand, D. G., & Cannon, T. D. (2019). Belief in fake news is associated with delusionality, dogmatism, religious fundamentalism, and reduced analytic thinking. Journal of Applied Research in Memory and Cognition, 8(1), 108–117. https://doi.org/10.1016/j.jarmac.2018.09.005

- Chen, Y.-Y., Li, C.-M., Liang, J.-C., & Tsai, C.-C. (2018). Health information obtained from the Internet and changes in medical decision making: Questionnaire development and cross-sectional survey. Journal of Medical Internet Research, 20(2), e47. https://doi.org/10.2196/jmir.9370

- Coiro, J. (2011). Predicting reading comprehension on the Internet: Contributions of offline reading skills, online reading skills, and prior knowledge. Journal of Literacy Research, 43(4), 352–392. https://doi.org/10.1177/1086296X11421979

- Coiro, J., Coscarelli, C., Maykel, C., & Forzani, E. (2015). Investigating criteria that seventh graders use to evaluate the quality of online information. Journal of Adolescent & Adult Literacy, 59(3), 287–297. https://doi.org/10.1002/jaal.448

- Cooligan, H. (2017). Research methods and statistics in psychology (6th ed.). Psychology Press.

- Davis, C. (2013). SPSS for applied sciences: Basic statistical testing. CSIRO Publishing.

- Ditto, P. H., Scepansky, J. A., Munro, G. D., Apanovitch, A. M., & Lockhart, L. K. (1998). Motivated sensitivity to preference-inconsistent information. Journal of Personality and Social Psychology, 75(1), 53–69. https://doi.org/10.1037/0022-3514.75.1.53

- European Commission. (2019). Organisation of general upper secondary education. https://eacea.ec.europa.eu/national-policies/eurydice/content/organisation-general-upper-secondary-education-15_en

- Flanagin, A., & Metzger, M. (2008). Digital media and youth: Unparalleled opportunity and unprecedented responsibility. In M. J. Metzger, & A. J. Flanagin (Eds.), Digital media, youth, and credibility (pp. 5–28). The MIT Press, The John D. and Catherine T. MacArthur Foundation Series on Digital Media and Learning.

- Fogg, B. J., Soohoo, C., Danielson, D. R., Marable, L., Stanford, J., & Tauber, E. R. (2003). How do users evaluate the credibility of Web sites? A study with over 2,500 participants [Paper presentation]. The Proceedings of the Conference on Designing for User Experiences, San Francisco, CA, June 6-7 (pp. 1–15). https://doi.org/10.1145/997078.997097

- Fong, C. J., Kim, Y., Davis, C. W., Hoang, T., & Kim, Y. W. (2017). A meta-analysis on critical thinking and community college student achievement. Thinking Skills and Creativity, 26, 71–83. https://doi.org/10.1016/j.tsc.2017.06.002

- Forzani, E. (2016). Individual differences in evaluating the credibility of online information in science: Contributions of prior knowledge, gender, socioeconomic status, and offline reading ability [Doctoral dissertation]. University of Connecticut. https://www.researchgate.net/profile/ElenaForzani2/publication/311559938

- Fulkerson, R. (1996). Teaching the argument in writing. National Council of Teachers of English.

- Ghanizadeh, A. (2017). The interplay between reflective thinking, critical thinking, self-monitoring, and academic achievement in higher education. Higher Education, 74(1), 101–114. https://doi.org/10.1007/s10734-016-0031-y

- Greene, J. A., Cartiff, B. M., Duke, R. F., & Deekens, V. M. (2019). A nation of curators: Educating students to be critical consumers and users of online information. In P. Kendeou, D. H. Robinson, & M. T. McCrudden (Eds.), Misinformation and fake news in education (pp. 187–206). Information Age Publishing.

- Holopainen, L., Kairaluoma, L., Nevala, J., Ahonen, T., & Aro, M. (2004). Lukivaikeuksien seulontatesti nuorille ja aikuisille [Dyslexia screening test for youth and adults]. Niilo Mäki Institute.

- Horrigan, J., & Rainie, L. (2006). The internet’s growing role in life’s major moments. Pew Research Center Publication, Pew Internet and American Life Project. http://www.pewinternet.org/2006/04/19/the-internets-growing-role-in-lifes-major-moments/

- Hsieh, H.-F., & Shannon, S. E. (2005). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277–1288. https://doi.org/10.1177/1049732305276687

- Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55. https://doi.org/10.1080/10705519909540118

- Kahne, J., & Bowyer, B. (2017). Educating for democracy in a partisan age: Confronting the challenges of motivated reasoning and misinformation. American Educational Research Journal, 54(1), 3–34. https://doi.org/10.3102/0002831216679817

- Kammerer, Y., Amann, D., & Gerjets, P. (2015). When adults without university education search the Internet for health information: The roles of Internet-specific epistemic beliefs and a source evaluation intervention. Computers in Human Behavior, 48, 297–309. https://doi.org/10.1016/j.chb.2015.01.045

- Kammerer, Y., Kalbfell, E., & Gerjets, P. (2016). Is this information source commercially biased? How contradictions between web pages stimulate the consideration of source information. Discourse Processes, 53(5-6), 430–456. https://doi.org/10.1080/0163853X.2016.1169968

- Kiili, C., Laurinen, L., & Marttunen, M. (2008). Students evaluating Internet sources: From versatile evaluators to uncritical readers. Journal of Educational Computing Research, 39(1), 75–95. https://doi.org/10.2190/EC.39.1.e

- Kiili, C., Leu, D. J., Marttunen, M., Hautala, J., & Leppänen, P. H. T. (2018). Exploring early adolescents' evaluation of academic and commercial online resources related to health. Reading and Writing, 31(3), 533–557. https://doi.org/10.1007/s11145-017-9797-2

- Kim, Y. M., & Vishak, J. (2008). Just laugh! You don’t need to remember: The effects of entertainment media on political information acquisition and information processing in political judgment. Journal of Communication, 58(2), 338–360. https://doi.org/10.1111/j.1460-2466.2008.00388.x

- Kiuru, N., Haverinen, K., Salmela-Aro, K., Nurmi, J.-E., Savolainen, H., & Holopainen, L. (2011). Students with reading and spelling disabilities: Peer groups and educational attainment in secondary education. Journal of Learning Disabilities, 44(6), 556–569. https://doi.org/10.1177/0022219410392043

- Kline, R. B. (2011). Principles and practice of structural equation modeling. The Guilford Press.

- Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108(3), 480–498. https://doi.org/10.1037/0033-2909.108.3.480

- Larson, M., Britt, M. A., & Larson, A. A. (2004). Disfluencies in comprehending argumentative texts. Reading Psychology, 25(3), 205–224. https://doi.org/10.1080/02702710490489908

- Lee, H. Y., & List, A. (2019). Processing of texts and videos: A strategy-focused analysis. Journal of Computer Assisted Learning, 35(2), 268–282. https://doi.org/10.1111/jcal.12328

- Leu, D. J., Forzani, E., Rhoads, C., Maykel, C., Kennedy, C., & Timbrell, N. (2015). The new literacies of online research and comprehension: Rethinking the reading achievement gap. Reading Research Quarterly, 50(1), 37–59. https://doi.org/10.1002/rrq.85

- Leu, D. J., Kinzer, C. K., Coiro, J., Castek, J., & Henry, L. A. (2013). New literacies and the new literacies of online reading comprehension: A dual level theory. In N. Unrau, & D. Alvermann (Eds.), Theoretical models and process of reading (6th ed., pp. 1150–1181). International Reading Association.

- Maksl, A., Ashley, S., & Craft, S. (2015). Measuring news media literacy. Journal of Media Literacy Education, 6(3), 29–45. https://digitalcommons.uri.edu/jmle/vol6/iss3/3

- Marin, L. M., & Halpern, D. F. (2011). Pedagogy for developing critical thinking in adolescents: Explicit instruction produces greatest gains. Thinking Skills and Creativity, 6(1), 1–13. https://doi.org/10.1016/j.tsc.2010.08.002

- Marttunen, M., Laurinen, L., Litosseliti, L., & Lund, K. (2005). Argumentation skills as prerequisites for collaborative learning among Finnish, French, and English secondary school students. Educational Research and Evaluation, 11(4), 365–384. https://doi.org/10.1080/13803610500110588

- McClure, R., & Clink, K. (2009). How do you know that?: An investigation of student research practices in the digital age. Portal: Libraries and the Academy, 9(1), 115–132. https://doi.org/10.1353/pla.0.0033

- Means, M. L., & Voss, J. F. (1985). Star Wars: A developmental study of expert and novice knowledge structures. Journal of Memory and Language, 24(6), 746–757. https://doi.org/10.1016/0749-596X(85)90057-9

- Means, M. L., & Voss, J. F. (1996). Who reasons well? Two studies of informal reasoning among children of different grade, ability, and knowledge levels. Cognition and Instruction, 14(2), 139–178. https://doi.org/10.1207/s1532690xci1402_1

- Metzger, M. J. (2007). Making sense of credibility on the Web: Models for evaluating online information and recommendations for future research. Journal of the American Society for Information Science and Technology, 58(13), 2078–2091. https://doi.org/10.1002/asi.20672

- Metzger, M. J., Flanagin, A. J., & Medders, R. B. (2010). Social and heuristic approaches to credibility evaluation online. Journal of Communication, 60(3), 413–439. https://doi.org/10.1111/j.1460-2466.2010.01488.x

- Miller, C., & Bartlett, J. (2012). 'Digital fluency': Towards young people's critical use of the internet. Journal of Information Literacy, 6(2), 35–55. https://doi.org/10.11645/6.2.1714

- Nussbaum, E. M. (2011). Argumentation, dialogue theory, and probability modeling: Alternative frameworks for argumentation research in education. Educational Psychologist, 46(2), 84–106. https://doi.org/10.1080/00461520.2011.558816

- Nussbaum, E. M., & Schraw, G. (2007). Promoting argument–counterargument integration in students’ writing. The Journal of Experimental Education, 76(1), 59–92. https://doi.org/10.3200/JEXE.76.1.59-92

- Osborne, J., Erduran, S., & Simon, S. (2004). Enhancing the quality of argumentation in science classrooms. Journal of Research in Science Teaching, 41(10), 994–1020. https://doi.org/10.1002/tea.20035

- Paul, J., Macedo-Rouet, M., Rouet, J.-F., & Stadtler, M. (2017). Why attend to source information when reading online? The perspective of ninth grade students from two different countries. Computers & Education, 113, 339–354. https://doi.org/10.1016/j.compedu.2017.05.020

- Pennycook, G., & Rand, D. G. (2019). Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition, 188, 39–50. https://doi.org/10.1016/j.cognition.2018.06.011

- Perkins, D. N. (1989). Reasoning as it is and could be: An empirical perspective. In D. M. Topping, D. C. Crowell, & V. N. Kobayashi (Eds.), Thinking across cultures: The third international conference on thinking (pp. 175–195). Erlbaum.

- Preiss, D. D., Castillo, J. C., Flotts, P., & San Martín, E. (2013). Assessment of argumentative writing and critical thinking in higher education: Educational correlates and gender differences. Learning and Individual Differences, 28, 193–203. https://doi.org/10.1016/j.lindif.2013.06.004

- Sinatra, G. M., Kienhues, D., & Hofer, B. K. (2014). Addressing challenges to public understanding of science: Epistemic cognition, motivated reasoning, and conceptual change. Educational Psychologist, 49(2), 123–138. https://doi.org/10.1080/00461520.2014.916216

- Stanford History Education Group. (2016). Evaluating information: The cornerstone of civic responsibility. An executive summary. https://sheg.stanford.edu/upload/V3LessonPlans/Executive%20Summary%2011.21.16.pdf

- Taylor, A., & Dalal, H. A. (2017). Gender and information literacy: Evaluation of gender differences in a student survey of information sources. College & Research Libraries, 78(1), 90–113. https://doi.org/10.5860/crl.78.1.90

- The National Institute for Health and Welfare. (2019). Vaccination coverage. https://thl.fi/en/web/vaccination/vaccination-coverage

- Toulmin, S. E. (1958). The uses of argument. Cambridge University Press.

- Tseng, S., & Fogg, B. J. (1999). Credibility and computing technology. Communications of the ACM, 42(5), 39–44. https://doi.org/10.1145/301353.301402

- Tseronis, A. (2018). Multimodal argumentation: Beyond the verbal/visual divide. Semiotica, 2018(220), 41–67. https://doi.org/10.1515/sem-2015-0144

- Utriainen, J., Marttunen, M., Kallio, E., & Tynjälä, P. (2017). University applicants' critical thinking skills: The case of the Finnish educational sciences. Scandinavian Journal of Educational Research, 61(6), 629–649. https://doi.org/10.1080/00313831.2016.1173092

- van Amelsvoort, M., Andriessen, J., & Kanselaar, G. (2007). Representational tools in computer-supported collaborative argumentation-based learning: How dyads work with constructed and inspected argumentative diagrams. Journal of the Learning Sciences, 16(4), 485–521. https://doi.org/10.1080/10508400701524785

- van Strien, J. L., Kammerer, Y., Brand-Gruwel, S., & Boshuizen, H. P. (2016). How attitude strength biases information processing and evaluation on the web. Computers in Human Behavior, 60, 245–252. https://doi.org/10.1016/j.chb.2016.02.057

- Vosoughi, S., Roy, D., & Aral, S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151. https://doi.org/10.1126/science.aap9559

- Vraga, E. K., & Tully, M. (2021). News literacy, social media behaviors, and skepticism toward information on social media. Information, Communication & Society, 24(2), 150–166. https://doi.org/10.1080/1369118X.2019.1637445

- Walsh, J. (2010). Librarians and controlling disinformation: Is multi-literacy instruction the answer? Library Review, 59(7), 498–511. https://doi.org/10.1108/00242531011065091

- Walton, D. N. (1991). Bias, critical doubt and fallacies. Argumentation and Advocacy, 28(1), 1–22. https://doi.org/10.1080/00028533.1991.11951525

- Wolfe, C. R., & Britt, M. A. (2008). The locus of the myside bias in written argumentation. Thinking & Reasoning, 14(1), 1–27. https://doi.org/10.1080/13546780701527674

- Xiao, B., & Benbasat, I. (2015). Designing warning messages for detecting biased online product recommendations: An empirical investigation. Information Systems Research, 26(4), 793–811. https://doi.org/10.1287/isre.2015.0592

- Xu, X., & Yao, Z. (2015). Understanding the role of argument quality in the adoption of online reviews: An empirical study integrating value-based decision and needs theory. Online Information Review, 39(7), 885–902. https://doi.org/10.1108/OIR-05-2015-0149

- Zubiaga, A., Aker, A., Bontcheva, K., Liakata, M., & Procter, R. (2018). Detection and resolution of rumours in social media: A survey. ACM Computing Surveys, 51(2), 1–36. https://doi.org/10.1145/3161603