Abstract

Technological developments have changed the advertising landscape by extending the possibilities to collect, process, and share consumer data to optimize advertising. These developments have made data collection, and consequently dataveillance (the automated, continuous, and sometimes unspecific collection, storage, and processing of digital traces), central concepts for advertising scholarship and practice. Studying the impact of consumers’ perceptions of dataveillance is important because perceptions of data collection practices have been shown to diminish the effects of data-driven advertising. This article advances advertising theory by conceptualizing the impact of consumers’ perceptions of dataveillance in digital data-driven advertising and by applying long-standing advertising theories to this new phenomenon to provide an overarching framework for future research. The current work presents the dataveillance effects in advertising landscape (DEAL) framework, along with specific directions for future research. The framework has practical implications, as it shows how false or accurate beliefs about dataveillance affect consumer responses to digital data-driven advertising. Advertisers can use these insights to ensure that digital data-driven advertising does not result in backlash or raise ethical questions. Finally, the framework has implications for privacy regulation, as consumer understanding of data collection is a core issue in current regulatory approaches to dataveillance.

Over the years, technological developments have played a crucial role in the emergence and evolution of new forms of advertising. Advertising scholars and the industry have adapted to new technological advances by changing the definition of advertising and adjusting its practice (Dahlen and Rosengren 2016; Richards and Curran 2002). The same can be said about recent advances in machine-learning algorithms and artificial intelligence (AI), which are currently dominant forces in the advertising industry (Li 2019; Rodgers 2021). They have transformed the advertising ecosystem and have led to the rise of so-called digital data-driven advertising strategies, such as computational advertising and personalized advertising. One characteristic that distinguishes these types of advertising is the centrality of collecting and processing data on consumers’ behaviors and personal characteristics. Computational advertising, for instance, depends strongly on granular-level data collection, mining, and aggregation (Helberger et al. 2020). Hence, recent developments in advertising practice contribute to dataveillance, that is, the automated, continuous, and unspecific collection, storage, and processing of digital traces (Büchi, Festic, and Latzer 2022; Degli-Esposti 2014). However, a comprehensive overview of the impact of consumers’ perceptions of dataveillance on advertising effects is largely missing.

Table 1. Glossary of main concepts.

Consumers have been shown to feel uneasy that their data are so widely collected and processed for advertising purposes, as such harvesting poses a threat to their privacy (Segijn and Van Ooijen 2022) and autonomy (Solove 2007; Büchi, Festic, and Latzer 2022). The impact of consumers’ perceptions of dataveillance is important to study for advertising practice, because awareness of data collection practices and of privacy threats in general is known to diminish the effects of data-driven advertising by inducing cognitive, affective, and behavioral responses among consumers (e.g., Ham 2017). Hence, the aim of this article is to conceptualize the impact of consumers’ perceptions of dataveillance in digital data-driven advertising and to apply long-standing theories in advertising research to this new phenomenon, providing an overarching framework for future research on this topic.

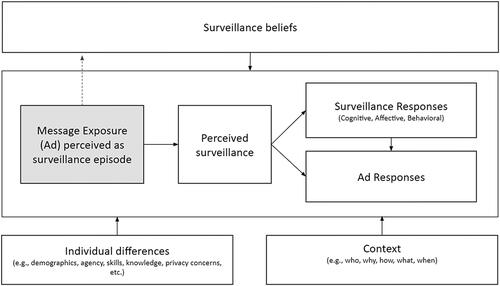

This article contributes to our understanding of digital data-driven advertising by theorizing the role of consumers’ perceptions of dataveillance in the advertising landscape. Based on existing advertising theories, most of which were developed before the digital advertising era, we build a framework that could drive future research on digital data-driven advertising. Given the omnipresence of data and their use for advertising (Huh and Malthouse 2020; Yun, Segijn, et al. 2020), dataveillance is expected to be central to the future of advertising practice. Therefore, we propose the dataveillance effects in advertising landscape (DEAL) framework, which we expect to guide the study of consumers’ perceptions of dataveillance in digital data-driven advertising in the years to come. This framework is patterned on the persuasion knowledge model (Friestad and Wright 1994) but differs from it in three ways: (1) the focus of DEAL is on a surveillance episode, (2) DEAL introduces the idea of surveillance beliefs, and (3) DEAL includes surveillance responses as a unique way of responding to digital data-driven advertising that is distinct from general advertising responses. We start by conceptualizing dataveillance and its role in the digital advertising landscape. Next, we discuss the DEAL framework, embedded in theory and research relevant to advertising scholarship. Finally, building on the DEAL framework, we propose an agenda for future research on the role of consumers’ perceptions of dataveillance in advertising.

The DEAL framework also has implications for advertising practitioners and regulators. For advertisers, it shows how false or accurate surveillance beliefs affect consumer responses to digital data-driven advertising and where these beliefs may stem from. This allows advertisers to correct false beliefs and to ensure that digital data-driven advertising has the intended positive effects rather than resulting in a backlash. It also allows advertisers to anticipate possible ethical implications of digital data-driven advertising. In addition, the DEAL framework has important implications for privacy regulation, because consumer understanding of data collection and processing practices is a core issue in current regulatory approaches to dataveillance.

Setting the Scene

Defining Dataveillance

Based on the definitions provided by Degli-Esposti (2014) and Büchi, Festic, and Latzer (2022), we define dataveillance as the automated, continuous, and (sometimes) unspecific collection, storage, and processing of digital traces from people or groups, by means of personal data systems, by state and corporate actors, to regulate or govern their behavior. Companies across various sectors have been collecting, using, and sometimes sharing information about prospects and consumers for decades. As a result, these companies have become the main sources of (continuous) data collection in the digital society (Christl 2017). Central to this definition is that the purpose of data collection is to regulate or govern behavior. This includes advertising and other organizational practices aimed at persuading consumers, which distinguishes dataveillance from simple online monitoring or analytics that focus on identifying relevant audiences or gaining insights about consumers (Andrejevic 2006). In addition, its volume, velocity, variety, and continuousness distinguish dataveillance from data collection in general.

Before digital developments, four main sources of consumer data existed. First, companies kept their own records on consumers (e.g., through loyalty programs). Second, in the direct marketing sector, lists of consumers with certain characteristics were commonly traded. Third, credit bureaus held detailed credit information on consumers. And fourth, records about individuals such as birth certificates and various licenses were available to companies (Christl 2017). Nowadays, data and analytics companies commonly combine information from all four of these streams, and digital tracking enables the collection of additional information about consumer behavior and characteristics that was not accessible in the past.

For years, tracking of online behavior has been widely used to collect information about people. Technologies such as cookies have enabled companies to track website visits and follow the online behavior of individuals (Smit, Van Noort, and Voorveld 2014). More recently, data collection has been extending from the digital context to the offline world (Christl 2017; Yun, Segijn, et al. 2020). Smartphones, wearables, and other smart devices that are equipped with sensors and connected to the Internet make today’s tracking and profiling landscape possible, adding another dimension to the extent of data collection (Christl 2017). This continuous collection of data about consumers’ online and offline behaviors through a multitude of devices contributes to the creation of dataveillance.

Dataveillance and Advertising

Digital data-driven advertising, which relies on processing consumer data to create and deliver advertising messages and to measure their effects (Huh and Malthouse 2020; Yun, Segijn, et al. 2020), is one of the factors contributing to the creation of dataveillance (Degli-Esposti 2014). In exchange for free services (e.g., web searches, social networks), organizations develop profiles of the consumers who use their services and use or sell this information to other companies, either in the form of data or as market segments tailored for ad placement. Based on combined data on (among others) past search behavior, past browsing behavior, products viewed online, articles read, and videos watched, ads can be adjusted to individual consumers across a network, with the aim of making them attractive and personally relevant to each individual (Yun, Segijn, et al. 2020). Due to the importance of data in the current advertising landscape, technology companies that create consumer profiles are now seen as part of the refined advertising industry, while data collection technology is seen as a crucial precondition for data-driven advertising (Helberger et al. 2020).

Data collection technology is expected to shape the future of digital data-driven advertising and drive its expansion (Helberger et al. 2020). Developments in automated content recognition techniques will make it possible to deliver personalized ads drawing on both offline and online data, such as TV viewing behaviors tracked through watermarking or IP-matching techniques (Segijn 2019) or website visits tracked by cookies or social media pixels (Van Gogh, Walrave, and Poels 2020). The same applies to indoor data collection, for example in stores, where Bluetooth beacons enable advertisers to monitor and react to consumers’ needs and demands (Kwet 2019). Information from smart cars, smart homes, and smart cities is expected to enable further adjustment of ads based on consumer data. Regarding the impact on consumers, past research shows that exposure to personalized advertising in public spaces (e.g., in-store advertising) elicits both positive and negative consumer reactions (Hess et al. 2020). Hence, these developments provide opportunities for advertisers but also raise important questions about how these technologies will affect consumer responses to digital data-driven ads.

Perceived Surveillance: Effects of Dataveillance on Consumers

From a societal perspective, dataveillance contributes to the creation of a so-called surveillance culture, which is driven by “corporate and state modes of surveillance, mediated by increasingly fast and powerful new technologies, tilted toward the incorporation of everyday life through information infrastructures and our increasing dependence on the digital in mundane relationships” (Lyon 2017, p. 826). Such a culture goes beyond the surveillance state, a notion commonly discussed in the 20th century in relation to governmental surveillance: corporations now take a central role in dataveillance as well. The consequence of this surveillance culture for individuals is that they, like all other actors in society, may play an active role in it through their perceptions and behaviors (Lyon 2017). Prior research has shown this active role in the self-monitoring behaviors of social media users, who adjust their behavior in anticipation of being monitored by others, such as the platforms themselves, family, and employers (Duffy and Chan 2019).

From an individual’s point of view, data collection and processing by corporations may trigger the perception of being surveilled (i.e., perceived surveillance), which can be defined as the feeling of being watched or listened to, or that one’s personal data are being recorded (Segijn et al. 2022). One example is the so-called surveillance effect: when interacting with smart devices, consumers worry that their devices are listening to them and that this is why ads related to their recent conversation topics are displayed to them online (Frick et al. 2021). Hence, automated and continuous data collection by corporations may lead consumers to perceive that they are being watched, regardless of whether this is true. Table 2 provides an overview of studies that examined perceived surveillance in relation to digital data-driven advertising.

Table 2. Overview of past research on perceived surveillance in digital data-driven advertising.

Perceived surveillance may affect individual behavior. When their data are being collected, consumers may play an active role in surveillance culture by trying to regulate what others can collect about them and what can be done with this knowledge (Lyon 2017). More specifically, they construct surveillance imaginaries, that is, a collective understanding of the extent and aim of surveillance and expectations about their own behavior (Duffy and Chan 2019). This in turn leads to surveillance practices, in other words, the disciplinary and reactive tactics developed as a consequence of feeling surveilled (Duffy and Chan 2019). Surveillance practices are thus conceptualized as responses by the person being surveilled, not as practices executed by the surveillant. To prevent confusion, we label them surveillance responses, which include consumers’ behavioral responses to perceived surveillance. Due to the central role of dataveillance within data-driven advertising and consumers’ perceptions thereof, surveillance responses may constitute a new type of response to advertising.

Introducing the Dataveillance Effects in Advertising Landscape (DEAL) Framework

To further our understanding of the role of consumers’ perceptions of dataveillance in the digital advertising landscape and to enable future research in this area, we propose the DEAL framework. Central to this framework is the concept of consumers’ perceptions of dataveillance. The DEAL framework explains how perceptions of dataveillance can be activated by ad exposure and subsequently affect surveillance responses (e.g., privacy protection) and advertising responses (e.g., brand attitudes, sales, resistance). The starting point of the DEAL framework is surveillance beliefs, which, similar to other cognitive beliefs such as persuasion knowledge (Friestad and Wright 1994; Ham and Nelson 2016), consumers develop over time through different sources. We propose that surveillance beliefs can be activated through a surveillance episode, that is, a directly observable instance of data collection. For example, exposure to an advertisement can lead to the perception of surveillance. This means that when surveillance beliefs are absent or not activated, consumers would not link ad exposure to data collection and thus would not perceive surveillance. Hence, activated surveillance beliefs are a precondition for perceived surveillance.

Formation of Surveillance Beliefs

In general, beliefs are ideas that a person holds to be true (Yzer 2012). Hence, we define surveillance beliefs as the ideas a person holds regarding the extent and aim of data collection. In contrast to the surveillance imaginaries conceptualized in past research, which are collective, beliefs are individual and may differ among consumers. Surveillance beliefs can be information driven (i.e., based on transparency of practice, education/literacy programs, or media) or experience driven (i.e., based on firsthand or secondhand experience). Building on past research on beliefs, we argue that they could be created through both accurate and false information (Lin and Xu 2021), and surveillance beliefs could therefore be a mix of accurate information and misinformation. However, regardless of whether consumers’ beliefs are based on accurate or inaccurate information, they may still be activated by ad exposure and affect subsequent perceptions, attitudes, and behaviors (similar to the impact of other false beliefs on perceptions and behavior; Stasson and Fishbein 1990).

Information-Driven Surveillance Beliefs

Information-driven surveillance beliefs can be created in at least three ways: through transparency of (ad) practice, education/literacy programs, and the media. First, we argue that surveillance beliefs may be created by transparency about data collection practices, such as disclosures of how corporations collect, process, or share personal data (Segijn, Strycharz, et al. 2021). In the context of digital data-driven advertising, such disclosure could take the form of detailed information about how personal data are collected and how long they are kept (Song et al. 2016), including disclosure statements on data collection methods (Van Noort, Smit, and Voorveld 2013; Van Ooijen 2022); information on personalization parameters, such as “Why am I seeing this ad?” explanations (Kim, Barasz, and John 2019); information on inferences made from personal data, such as “Your Interests” on Facebook (Büchi et al. 2021); or user agreements shown when installing a new app (McDonald and Cranor 2008). Transparency practices can be voluntary (in which case the data collector or processor decides what information is provided) or obligatory (in which case regulators set requirements for such practices, as in the European Union) (Degeling et al. 2019). Either way, the actor responsible for data collection, processing, or sharing is responsible for the transparency of these practices (Segijn, Strycharz, et al. 2021). Once consumers are exposed to a disclosure, it may contribute to their surveillance beliefs. Possible exceptions are when consumers avoid disclosures or user agreements, when they pay no attention to the disclosure (Van Noort, Smit, and Voorveld 2013), or when the disclosure is misunderstood or difficult to process (Van Ooijen and Vrabec 2019).

Second, similar to the formation of persuasion knowledge or advertising literacy (Hudders et al. 2017), surveillance beliefs could be created through literacy programs. In fact, it has been argued that consumer understanding of data collection practices is crucial in the current advertising landscape and that regulators and consumer advocates therefore need to take an active role in increasing consumer literacy (Helberger et al. 2020). Previous work on data-driven advertising strategies has shown that knowledge about how these tactics work is often limited (e.g., Segijn and Van Ooijen 2022; Smit, Van Noort, and Voorveld 2014; McDonald and Cranor 2010). However, past research has also shown that, even when consumers do not fully understand data collection, processing, and sharing, they are highly aware that their data are being collected, processed, and shared for advertising purposes, and they perceive these practices as a threat to their privacy (Boerman, Kruikemeier, and Zuiderveen Borgesius 2021; Strycharz et al. 2019, 2021). Hence, consumers may form surveillance beliefs without a detailed understanding of data collection practices.

Third, consumers can also learn about data collection practices through the news media. In their content analysis of German press coverage of Internet privacy, Von Pape, Trepte, and Mothes (2017) showed that informational privacy dominates media coverage of privacy- and data-collection-related issues. At the same time, they found a strong consensus in this coverage that consumers’ current level of privacy is low. Such coverage may shape consumers’ beliefs about the extent and level of dataveillance in the advertising landscape.

Experience-Driven Surveillance Beliefs

When consumers are exposed to an ad that builds on their personal information, this exposure could make them aware that their information has been collected and is being processed to target them with a specific product or service. This is an example of beliefs formed through firsthand experience (Friestad and Wright 1994). For example, Kim and Huh (2017) found that self-reported frequency of exposure to online advertising based on past behavior is negatively related to whether consumers would click on a specific ad: the more ads based on their browsing behavior consumers report seeing daily, the less likely they are to click on such an ad. In addition, consumers also acquire experience-driven beliefs through conversations with other consumers (secondhand experience) (Friestad and Wright 1994), which could also have consequences for advertising effectiveness.

People use folk theories to understand phenomena in everyday life, such as technological systems (DeVito, Gergle, and Birnholtz 2017), algorithms in the media (Ytre-Arne and Moe 2021), or algorithmic profiling (Büchi et al. 2021). Folk theories are not necessarily based on facts but are formed from people’s personal experiences (Gelman and Legare 2011). More specifically, they are rooted in experience rather than in more abstract explanations of how technology works (Toff and Nielsen 2018). They can be more or less explicit, purely speculative, based on personal experience, and/or based on secondhand sources (Rip 2019). An example of such a folk theory is the surveillance effect mentioned earlier (Frick et al. 2021). It has been argued that, due to selective attention to salient items (Klayman 1995), advertising for products one has recently talked about stands out to consumers. This reinforces folk theories because people generally look for evidence supporting their existing beliefs (Nickerson 1998). Therefore, we argue that whether folk theories are accurate does not matter for their impact on one’s surveillance beliefs and the subsequent surveillance and advertising responses.

Surveillance Responses

When consumers’ surveillance beliefs are activated, they may experience the feeling of being watched. For example, when seeing a personalized ad on their mobile device, consumers sometimes believe that the ad was shown because the device monitored their past conversations (Frick et al. 2021). This may create or activate the belief that “their phone is listening.” This surveillance belief (a cognitive belief, though not necessarily an accurate one) makes consumers feel watched (i.e., perceived surveillance). Hence, when consumers link an ad to data collection, their cognitive beliefs are activated, which may make them feel watched. Subsequently, perceived surveillance could affect surveillance and advertising responses. Surveillance responses are responsive practices that relate to being surveilled (Lyon 2017); they represent a way to respond to a situation of perceived surveillance. Surveillance responses can be cognitive (e.g., privacy calculus), affective (e.g., emotional responses), or behavioral (e.g., privacy protection measures).

First, regarding cognitive surveillance responses, privacy research shows the importance of cost-benefit calculus in situations of data collection and processing. Privacy calculus theory posits that when confronted with the collection and processing of their data, consumers weigh the associated benefits and costs (Laufer and Wolfe 1977). These benefits and costs then shape their behavioral reaction, for example, self-disclosure or taking privacy protection measures (Baruh, Secinti, and Cemalcilar 2017). Benefits include, for example, entertainment, relevant information, or monetary rewards, while costs include potential identity theft, reputational damage, or loss of control over data (Dinev and Hart 2006). A qualitative study showed that people can also have positive thoughts related to perceived surveillance, such as the idea that it leads to a better user experience and personal benefits, and admiration for what technology can do (Zhang et al. 2021). Regarding advertising, past research has shown that exposure to a personalized ad can indeed trigger considerations of benefits and costs (Bol et al. 2018).

Second, perceived surveillance can also lead to affective reactions among consumers. For example, Segijn and Van Ooijen (2022) found that respondents expressed negative affect or emotions in their qualitative answers as a response to a personalization scenario. They found personalized ads and tactics creepy, annoying, or unsettling. Furthermore, they expressed that they disliked, distrusted, or hated them, and that such tactics could make them upset or worried. Besides negative affect, some participants also expressed positive affect or emotions. For example, they mentioned that personalized advertising could make them happy or that it excited them.

Finally, perceptions of surveillance could also result in behavioral surveillance responses aimed directly at dataveillance (Lyon 2017). These include limiting the information consumers disclose and adopting privacy protection measures (Boerman, Kruikemeier, and Zuiderveen Borgesius 2021). Regarding limiting disclosures, consumers may choose to self-censor the information they share online in the case of voluntary online disclosure, or they may change their behavior to control what behavioral information can be collected about them, so-called chilling effects (Büchi, Festic, and Latzer 2022). Chilling effects can be described as individuals refraining from certain behaviors to keep their data from being collected (Solove 2007). In the context of advertising, chilling effects can include, for example, not visiting websites that collect consumer data or not using technology that is perceived as surveilling. By adopting protective measures, consumers actively limit the collection, processing, and sharing of their personal information, for example, by installing ad blockers, using incognito browsing when searching for flight tickets, declining or deleting cookies, and using a VPN or a “do not track” function (Boerman, Kruikemeier, and Zuiderveen Borgesius 2021). Furthermore, when consumers share data directly (e.g., when signing up for a service), they can purposely provide inaccurate information to mislead the data collector (Yun, Segijn, et al. 2020).

Advertising Responses

Perceived surveillance and surveillance responses could also lead to certain advertising responses (e.g., attention, brand memory, brand attitudes, sales, resistance). We argue that, although perceived surveillance is not directly related to the advertisement itself, it may affect advertising responses through spillover effects. Spillover refers to the way that information not mentioned in a message affects ad perceptions and beliefs (Ahluwalia, Unnava, and Burnkrant 2001). For example, Tan, Brown, and Pope (2019) concluded that perceptions of the website on which an ad is shown, such as respect toward the website, positively affected attitudes toward the advertised brand. Regarding digital data-driven advertising, one could expect that the data collection needed to create the ad, although unrelated to the ad’s focal information, may influence its effectiveness.

In addition, perceived surveillance could directly result in a form of advertising resistance, which is defined as “a motivational state, in which consumers have the goal to reduce attitudinal or behavioral change or to retain one’s current attitude” (Fransen et al. 2015, p. 7). According to reactance theory (Brehm and Brehm 1981), resistance is experienced when individuals perceive that their freedom is limited by persuasive attempts, such as advertising. Consumers can resist advertising through avoidance (e.g., changing channels, ignoring), contesting (e.g., counterarguing), and empowerment (e.g., attitude bolstering) (Fransen et al. 2015). Indeed, initial research has found that perceived surveillance increases perceived threat (Farman, Comello, and Edwards 2020), counterarguing (Farman, Comello, and Edwards 2020; Segijn, Kim, et al. 2021), and affective reactance (Farman, Comello, and Edwards 2020).

Finally, we propose a link between surveillance responses and advertising responses. For example, experiencing certain emotions (e.g., dislike, hate, distrust) could carry over to the evaluation of the ad message. In addition, taking certain actions (e.g., privacy protection measures) could create a false sense of control, which in turn could lead to more positive advertising responses as consumers let their guard down. In the context of online disclosure, Brandimarte, Acquisti, and Loewenstein (2013) introduced the notion of a control paradox: control over sharing private information increases the willingness to publish sensitive information. This paradox could also arise in the relation between privacy protection and advertising responses.

Boundary Conditions

How consumers respond to dataveillance may depend on individual characteristics and contextual factors. We discuss several individual characteristics that have often been examined in digital data-driven advertising research or linked to dataveillance. In addition, we discuss source, purpose, mechanism, data type, and timing as contextual factors.

Individual Differences

As data collection and processing for advertising purposes increase privacy risks (e.g., McDonald and Cranor 2010), privacy concerns have become a central factor in how consumers cope with data collection. Privacy concerns can be defined as “the degree to which a consumer is worried about the potential invasion of the right to prevent the disclosure of personal information to others” (Baek and Morimoto 2012, p. 63). Past research has shown that privacy concerns increase ad skepticism, generate avoidance of personalized advertising, and can lead to less positive attitudes toward such advertising (Kim and Huh 2017; Baek and Morimoto 2012).

Privacy cynicism is another individual difference that has recently been examined as potentially affecting how people cope with data collection and processing (Van Ooijen, Segijn, and Opree 2022). Privacy cynicism “represents a cognitive coping mechanism, allowing users to overcome or ignore privacy concerns and engage in online transactions, without ramping up privacy protection efforts” (Lutz, Hoffmann, and Ranzini 2020, p. 1173). Past research has shown that more cynical individuals put less effort into making privacy decisions and are thus less likely to protect their privacy, instead choosing to “do nothing” (Choi, Park, and Jung 2018).

In addition, conspiracy mentality might be relevant in this context. Conspiracy mentality is people’s susceptibility to explanations based on conspiracy theories (Bruder et al. 2013). Given that people who believe in one conspiracy theory are also more likely to believe in others (Swami, Chamorro‐Premuzic, and Furnham 2010), people who score high on conspiracy mentality scales may also be more likely to believe in, for example, the surveillance effect. Initial research on personalized advertising found that conspiracy mentality contributes to awareness of data collection, processing, and sharing (Boerman and Segijn 2022). Regarding advertising responses, one could thus expect that consumers high in conspiracy mentality are, for example, more likely to engage in surveillance responses when they perceive surveillance.

While concerns and perceptions about privacy may have a negative impact on consumers’ responses to advertising, a positive attitude toward receiving digital data-driven ads may mitigate the effect of perceived surveillance. Data-driven advertising offers consumers numerous benefits, such as ad informativeness, credibility, and entertainment (Kim and Han 2014). Privacy calculus theory, or the privacy trade-off, explains this mitigating effect: When the value of an object, person, or activity outweighs the costs of sharing personal data, consumers are more likely to share their data or accept data collection methods (Acquisti, John, and Loewenstein 2013; Dinev and Hart 2006).

Contextual Factors

In terms of contextual factors, Zhang et al. (2021) identified source, purpose, and mechanism as important factors in the discussion of dataveillance. First, the source (i.e., who) refers to the actor behind the data collection. In our context, this is the corporation that collects, processes, and shares the personal data of (potential) consumers. The type of corporation (e.g., sector, size, for-profit/nonprofit status) and how consumers perceive it may influence the effects of perceived surveillance. For example, Bleier and Eisenbeiss (2015) showed that highly trusted corporations can collect and process personal data for advertising purposes without eliciting increased reactance or privacy concerns, whereas less-trusted corporations cannot. In addition, the extent to which consumers perceive the source as credible could influence advertising effectiveness. According to the source credibility hypothesis, perceptions of a message depend on perceptions of its source (Hovland and Weiss 1951). Relationship strength with the company (e.g., brand loyalty) may also mitigate the effects of perceived surveillance.

Second, the perceived purpose (i.e., why) of data collection, processing, and sharing is an important contextual factor that could influence the relationship between perceived surveillance on the one hand and surveillance and advertising responses on the other. For example, consumers could believe that corporations collect their data for financial gain, advertising optimization, product development, manipulating them into certain behaviors (Zhang et al. 2021), security (Van Dijck 2014), or service improvement (Zhu et al. 2017). In line with privacy calculus theory (Dinev and Hart 2006), consumers might respond differently to perceived surveillance when they see benefits (e.g., service improvement, security) rather than costs (e.g., manipulation).

Third, the perceived mechanism (i.e., how) used to collect, process, or share the information could influence the relationship between perceived surveillance on the one hand and surveillance and advertising responses on the other. Segijn and Van Ooijen (2020) showed that different data collection methods result in varying levels of perceived surveillance. In their study, they examined consumers’ perceptions of online profiling, social media analytics, IP matching, geofencing, keywords, and watermarking. Although all of these methods increased perceived surveillance, they did so to different degrees. Moreover, the older the generation, the more surveilled its members felt. Regarding how data are collected, the transparency of this process could also affect consumer behavior (Segijn, Strycharz, et al. 2021). For example, when organizations collect and process data openly (so-called overt data collection), consumers react more positively to ads than when organizations do so covertly (Aguirre et al. 2015).

In addition, we include the type of data (i.e., what) that is collected, processed, or shared. Advertising can be personalized based on, among other factors, demographic information (e.g., De Keyzer, Dens, and De Pelsmacker 2015), online behaviors (Smit, Van Noort, and Voorveld 2014), or simultaneous media consumption (Segijn 2019). The type of personal data could affect advertising outcomes depending on how specific the information is (Boerman, Kruikemeier, and Zuiderveen Borgesius 2017; Bleier and Eisenbeiss 2015).

Finally, we add timing (i.e., when) to the list of contextual factors. This factor refers to the timing of data collection, processing, or sharing. Data collection could be seasonal, centered around big events (e.g., Super Bowl, Olympics, holidays), or tied to moments when consumers are in specific locations, such as a public or private setting (e.g., home). For example, Strycharz and Segijn (2021) investigated to what extent collecting data on individuals’ offline behavior in their homes led to more behavioral surveillance responses because it intruded on consumers’ private space. In addition, the impact of perceptions of dataveillance might differ in situations in which consumers are more vulnerable, for example, when they are tired and have less cognitive capacity to think critically and make informed decisions (Strycharz and Duivenvoorde 2021). The same may hold for crisis situations, such as the COVID-19 pandemic.

Future Research Agenda

Research on the impact of consumers’ perceptions of dataveillance in digital data-driven advertising is still in its infancy. Given the large quantities of consumer data available and the opportunities they offer the advertising industry, research focusing on dataveillance is expected to grow. Based on the DEAL framework described in the previous section, we propose three areas for future research (Table 3).

Table 3. Future research directions overview.

Research Direction 1: Surveillance Beliefs

Surveillance beliefs play an important role in the DEAL framework. Future research is necessary to further examine (1) how surveillance beliefs are developed or activated; (2) what the effects of these beliefs are; and (3) how to combat false beliefs (e.g., misinformation or conspiracy theories on dataveillance).

Development and Activation of Surveillance Beliefs

First, research should focus on how surveillance beliefs are developed or activated through transparency of practice, education and literacy programs, news media, and firsthand or secondhand experience. As with other types of beliefs (Yzer 2012), surveillance beliefs can be measured in two steps: a belief elicitation study first identifies salient beliefs in the target population, and a subsequent survey study links those beliefs to, for example, surveillance responses. Regarding transparency of practice, research could further investigate how best to inform consumers about data collection, processing, and sharing practices. The transparency-awareness-control framework (Segijn, Strycharz, et al. 2021) could be used as a starting point for such investigations. How effective transparency is in belief formation depends on how consumers are informed about the practices. Studying the characteristics of transparency messaging is thus a possible future research direction that would contribute to theory building on consumer empowerment through transparency. Research could also investigate how to effectively ask consumers about the collection, processing, and sharing of their information through user agreements that consumers will actually read and can easily comprehend.

Similarly, transparency of practice could be communicated through disclosures, which could activate persuasion knowledge (Boerman, Willemsen, and Van Der Aa 2017) and contribute to the development of surveillance beliefs. Future research could investigate how best to design such disclosures and where and when to place them. Scholars could build on the sponsorship (e.g., Boerman, Willemsen, and Van Der Aa 2017) or native advertising (e.g., Wojdynski and Evans 2016) literature and extend theories such as the covert advertising recognition and effects model (Wojdynski and Evans 2020) to the digital data-driven advertising context and to disclosures of data collection, processing, and sharing. Some work has already examined disclosures in personalized advertising. For example, Van Noort, Smit, and Voorveld (2013) examined a cookie icon used in the Netherlands to indicate online behavioral advertising. They found that awareness of and familiarity with this icon were low and that an additional explanation was needed for people to correctly identify its meaning. In addition, the effect of a disclosure might depend on the trustworthiness of the source, and disclosures could decrease advertising effectiveness for untrustworthy sources (Van Ooijen 2022). However, questions remain about whether and how this works for various types of data collection practices (see Segijn, Strycharz, et al. 2021 for an overview of practices with high and low transparency). From a practical perspective, studying the effectiveness of data collection disclosures will allow regulators to engage in evidence-based lawmaking and improve the implementation of current (e.g., General Data Protection Regulation) and future (e.g., Digital Services Act) regulations.

Furthermore, more research is needed to understand how to effectively inform consumers about data collection, processing, and sharing practices through education and literacy programs. Some initial studies have investigated different types of information on personalized communication and data collection practices and how these types of information affect consumers’ responses. For example, providing consumers with technical information on personalized communication was found to increase knowledge among people without prior knowledge of this strategy, compared with providing no information (Segijn, Kim, et al. 2021). Regarding legal knowledge, Strycharz et al. (2021) found that it improves consumers’ perceived self-efficacy in controlling data collection through cookies. However, this study and another (Strycharz et al. 2019) found that providing people with technical information on data collection practices led participants to perceive these practices as less severe and, consequently, made them less likely to use the opt-out function for data processing. Given that research on informing people about data collection for advertising purposes is at an early stage and its findings are mixed, more research is needed to examine what type of information works best to make consumers informed decision makers about their personal data.

In addition, research on the development of surveillance beliefs through first- or secondhand ad exposure is needed to understand when ad exposure contributes to and activates beliefs about data collection, processing, and sharing practices. One could argue that the more specific or personal the data used to personalize a message, the more likely consumers are to develop beliefs about how their data are used to create personalized ads for them. This would mean that exposure to more personalized ads could contribute to the development of surveillance beliefs and, at the same time, prompt consumers to think about advertisers collecting and processing their data (belief activation). More research is needed on the relationship between personalization level and surveillance beliefs. Such research would further our theoretical understanding of the effects of personalization on consumers and, at the same time, inform organizations that serve personalized ads about the point at which an ad becomes not only persuasive but also a surveillance episode. A challenge, however, is that asking about surveillance beliefs in research itself activates those beliefs.

Effects of Surveillance Beliefs

Future research should also focus on the effects of surveillance beliefs on perceived surveillance, surveillance responses, and advertising responses. According to the transparency-awareness-control framework (Segijn, Strycharz, et al. 2021), awareness of data collection and processing results in more control over personal data only when consumers have the ability and desire to exercise this control. Research is needed to examine the impact of surveillance beliefs on surveillance and advertising responses. It is also important to explore consumers’ desire and ability to make informed decisions about data disclosure for advertising based on accurate and relevant knowledge, as informed decision making is now one of the aims of the General Data Protection Regulation in Europe.

Combating False Beliefs

Finally, more research is needed into the development, activation, and combating of inaccurate beliefs, as well as their relationship to consumers’ confidence in these beliefs. Combating false beliefs and boosting confidence in accurate ones matters for the industry, which may suffer from disinformation about the extent of data collection, and for society, as disinformation is seen as a pressing and widespread societal issue that misleads consumers and undermines trust and democracy (De Cock Bunning 2018). In addition, research is needed to further understand how folk theories relate to subsequent perceptions of surveillance and decision making (e.g., behavioral surveillance responses). Also, given that folk theories could include misinformation or conspiracy theories about how data are collected, processed, or shared, future research is needed on how to combat this type of misinformation (see Note 1), as well as on the moderating role of conspiracy mentality in the relationship between perceived surveillance and advertising effects.

Research Direction 2: Perceived Surveillance

Future research is needed to further examine the dimensions of perceived surveillance, when it is likely to occur, and perhaps who is susceptible to it. As a second step, the relationship between perceived surveillance and surveillance and advertising responses could be examined to better understand how perceived surveillance affects advertising effectiveness. While the negative impact of other data-collection-related factors, such as privacy concerns, on advertising effectiveness is known (e.g., privacy concerns increase ad avoidance; Jung 2017), the exact role of perceived surveillance needs further investigation. Segijn et al. (2022) have validated a measure of perceived surveillance for survey and experimental research that can facilitate this. The measure consists of four items asking participants to rate the extent to which they feel a company/brand/person is (1) looking over your shoulder, (2) watching your every move, (3) entering your private space, and (4) checking up on you. Although this measure has been validated specifically for personalized advertising practices, it could potentially be used in other contexts as well, such as data collection through smart devices, on social media, or in stores.
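As a minimal sketch of how responses to the four perceived-surveillance items might be scored, the Python example below averages each respondent's ratings into a composite score and computes Cronbach's alpha as one common check of internal consistency. The mean-based scoring rule, the 1–7 rating range, the function names (`composite_score`, `cronbach_alpha`), and the sample ratings are all illustrative assumptions, not details taken from Segijn et al. (2022).

```python
from statistics import mean, pvariance

# Item wording paraphrased from the four perceived-surveillance items above.
# Scoring by averaging is an assumption made for this illustration only.
ITEMS = (
    "looking over your shoulder",
    "watching your every move",
    "entering your private space",
    "checking up on you",
)


def composite_score(ratings: list[float]) -> float:
    """Average one respondent's ratings across the four items (assumed scoring rule)."""
    if len(ratings) != len(ITEMS):
        raise ValueError(f"expected {len(ITEMS)} ratings, got {len(ratings)}")
    return mean(ratings)


def cronbach_alpha(data: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores).
    Population variances are used for both terms; the n vs. n - 1 choice cancels in the ratio.
    """
    k = len(data[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = [pvariance([row[i] for row in data]) for i in range(k)]
    total_variance = pvariance([sum(row) for row in data])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings from four respondents on an assumed 1-7 agreement scale.
sample = [
    [6, 7, 6, 5],
    [2, 1, 2, 2],
    [4, 4, 5, 4],
    [7, 6, 7, 7],
]

scores = [composite_score(row) for row in sample]
alpha = cronbach_alpha(sample)
print("composite scores:", scores)
print("Cronbach's alpha:", round(alpha, 2))
```

Under this assumed scoring rule, a higher composite score would indicate stronger perceived surveillance; alpha is only one of several reliability indices a validation study might report.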

Regarding the prevalence of perceived surveillance, Segijn and Van Ooijen (2020) described different data collection techniques and their relation to perceived surveillance, which could serve as a starting point to examine when perceived surveillance is more likely to occur. For example, research could examine how different data collection techniques result in different levels of perceived surveillance and, subsequently, in different surveillance and advertising responses. Studying the contexts in which the effects of perceived surveillance are strongest will help advertisers identify the tipping point at which digital data-driven ads have unintended negative effects.

Finally, more research is needed on individual differences in consumers’ susceptibility to perceived surveillance. Regarding personality, conspiracy mentality, which is known to contribute to awareness of data collection (Boerman and Segijn 2022), could also strengthen perceived surveillance. In the context of the surveillance effect and perceived surveillance of conversations, anxiety about new technologies and negative experiences with them strongly contribute to such perceptions (Frick et al. 2021). To what extent such negative feelings and experiences drive perceived surveillance in the context of digital data-driven ads is another potential research avenue.

Research Direction 3: Surveillance Responses

Research is needed to examine how perceived surveillance affects cognitive, affective, and behavioral surveillance responses. While past research has shown that perceived benefits and costs are affected by exposure to personalization (Bol et al. 2018), the extent to which digital data-driven advertising in general changes the cost-benefit calculation requires further research. Next, while data collection is known to elicit negative emotions among consumers (Segijn and Van Ooijen 2022), the extent to which data-driven ads also elicit these emotions remains understudied. Behavioral change due to perceived surveillance can involve taking privacy protection measures (Boerman, Kruikemeier, and Zuiderveen Borgesius 2021). However, it can also involve other behavioral adjustments aimed at limiting the extent of dataveillance and protecting personal data. Such chilling effects in response to data collection for advertising and commercial purposes remain understudied (Büchi, Festic, and Latzer 2022). Future research needs to examine which behavioral surveillance responses people undertake in reaction to data collection for advertising and what their main motivations are. Knowing which surveillance responses exposure to digital data-driven ads elicits will inform research and practice about potential unintended effects of digital data-driven advertising practices that go beyond traditional advertising responses.

To investigate which consumers are most likely to show surveillance responses, research on individual characteristics that contribute to these responses is needed. Boerman, Kruikemeier, and Zuiderveen Borgesius (2017) proposed a framework in which they described different consumer characteristics (e.g., privacy concerns, desire for privacy, online experience) and other consumer-controlled factors (e.g., knowledge and abilities) that could serve as a starting point for identifying consumers prone to undertaking surveillance responses.

Conclusion and Discussion

The digital technology revolution and the advent of big data have led to substantial developments in advertising. Corporate data collection practices aimed at gaining insight into consumers and their behaviors are not new in the advertising landscape. However, infrastructure and technological advancements have changed the possibilities for data collection and its extent (Huh and Malthouse 2020). For example, data collection can now extend to consumers’ offline behaviors and to places that are considered private (e.g., the living room) (Segijn 2019). Moreover, current data collection practices are automated and continuous, constantly monitoring consumer behavior (Büchi, Festic, and Latzer 2022; Degli-Esposti 2014). This makes dataveillance an important concept in the current and future advertising landscape. These developments raise new questions about the impact and effectiveness of advertising. The current research presents the DEAL framework and research directions designed to provide theoretical guidance for future research on this topic. We applied long-standing theories in advertising research to this new phenomenon. A conceptualization and theorization of the role of dataveillance in the advertising landscape is crucial given the central role consumer data play in current digital data-driven advertising and the ethical questions this centrality raises.

Beyond guiding future research on the impact of dataveillance in the advertising landscape on consumers, the DEAL framework also has practical implications for the advertising industry that applies digital data-driven advertising and for regulators responsible for the legal framework in which data collection for advertising takes place. First, the framework allows the advertising industry to better understand the impact of dataveillance on consumers. This can help advertisers anticipate the unintended effects that digital data-driven ads may have through the activation of surveillance beliefs. Spillover refers to the way in which perceptions and beliefs are affected by information that is not mentioned in the message (Ahluwalia, Unnava, and Burnkrant 2001); such spillover could potentially explain whether perceived surveillance affects other advertising effects. Because young users already find digital advertising irrelevant, useless, and untrustworthy (Lineup 2021), spillover effects could deepen this negative sentiment, which matters for an advertising industry trying to improve the image of digital data-driven advertising.

Second, consumer reactions to dataveillance could also affect the quality of advertising messages, which has implications for the advertising and tech industries. According to the computational advertising measurement system (Yun, Segijn, et al. 2020), data (e.g., consumer data, brand data) are used as input for advertising strategic planning and tactical execution. However, data could be polluted by consumers who provide fake information (e.g., name, e-mail address) as a privacy protection mechanism (Boerman, Kruikemeier, and Zuiderveen Borgesius 2021) or who adjust their behavior to avoid having their data collected (Büchi, Festic, and Latzer 2022). Given the importance of data as input for computational advertising (Yun, Segijn, et al. 2020), future research is needed on the relationship between surveillance responses and data pollution. Such research could explore how the data are polluted and inform the industry about how to prevent or account for this pollution in algorithms.

Finally, for regulators, the framework shows how consumers form beliefs about the data collection and processing practices of the industry. The description of belief formation highlights the role that transparency regulations and literacy programs play in this process and shows the potential regulators have to correct false beliefs and help form accurate ones. In addition, future research is needed to examine the DEAL framework in the context of different privacy regulations, as these may affect the extent to which consumers trust advertising companies to handle their data safely and the extent to which they engage in surveillance responses. Take, for example, the difference between the United States and Europe. Privacy regulations and the amount of protection from dataveillance offered by the law differ substantially between the United States and the European Union (Tushnet and Goldman 2020). A key goal of the General Data Protection Regulation in the European Union is to strengthen individual control in the face of online data collection by corporations (Tushnet and Goldman 2020). In contrast, privacy regulations in the United States are less specific about what data on individuals can be collected and impose fewer requirements on corporations to inform users about these practices. These differences may lead to different consumer perceptions of dataveillance (e.g., less transparency is required in the United States, resulting in less information-driven surveillance beliefs). They may also change how individuals react to surveillance episodes (e.g., one’s behavioral surveillance responses may depend on the possibilities the law provides). Moreover, cross-country and cross-cultural research could further our understanding of the DEAL framework and the impact of dataveillance in the advertising landscape on consumers, as consumer perceptions of dataveillance might depend on their cultural background and the surveillance culture in which they grew up.

In addition, we identify some methodological challenges in and prerequisites for studying consumer perceptions of and responses to dataveillance in advertising. For researchers who do not have access to the same data as the advertising industry, it is difficult to measure consumer interactions with digital data-driven advertising and their surveillance and advertising responses. Researchers will need to use digital analytics (e.g., social media analytics; see Yun, Duff, et al. 2020) to move beyond measuring motivations and intentions (Boerman, Kruikemeier, and Zuiderveen Borgesius 2017). To access consumer data, researchers can, for example, ask consumers to donate their digital trace data (Araujo et al. 2021). Such donations would make it possible to examine real-life data on this topic (see Liu-Thompkins and Malthouse 2017 for a practical guide). In addition, longitudinal designs are particularly important when it comes to perceived surveillance and surveillance responses. As the technology hype cycle shows, novel technologies move toward mainstream adoption over time (Dedehayir and Steinert 2016). This mainstream adoption changes the social norms surrounding a technology, which subsequently affects individuals’ acceptance of and attitudes toward it (see the technology acceptance model; Davis, Bagozzi, and Warshaw 1989). As different data collection techniques for digital data-driven advertising become commonly accepted, consumer perceptions of and responses to dataveillance may change.

The current article conceptualized consumer responses to dataveillance in digital data-driven advertising and applied long-standing theories in advertising research to this phenomenon. The extent of and infrastructure for data collection and processing that result in dataveillance raise new questions regarding consumer responses to digital data-driven advertising. The Dataveillance Effects in Advertising Landscape (DEAL) framework and the proposed research directions can provide theoretical guidance for future research on this topic.

Acknowledgments

The authors would like to thank the editors and anonymous reviewers for their constructive input that substantially helped to shape the manuscript.

Additional information

Notes on contributors

Joanna Strycharz

Joanna Strycharz (PhD, University of Amsterdam) is an assistant professor, Amsterdam School of Communication Research, University of Amsterdam.

Claire M. Segijn

Claire M. Segijn (PhD, University of Amsterdam) is an associate professor, Hubbard School of Journalism and Mass Communication, University of Minnesota.

Notes

1 See Vraga and Bode (2020) for a definition of misinformation and Swire-Thompson and Lazer (2020) for an example of misinformation in the online health context.

References

- Acquisti, A., L. Brandimarte, and G. Loewenstein. 2015. “Privacy and Human Behavior in the Age of Information.” Science 347 (6221):509–14. doi:10.1126/science.aaa1465

- Acquisti, A., L. K. John, and G. Loewenstein. 2013. “What Is Privacy Worth?” The Journal of Legal Studies 42 (2):249–74. doi:10.1086/671754

- Aguirre, E., D. Mahr, D. Grewal, K. De Ruyter, and M. Wetzels. 2015. “Unraveling the Personalization Paradox: The Effect of Information Collection and Trust-Building Strategies on Online Advertisement Effectiveness.” Journal of Retailing 91 (1):34–49. doi:10.1016/j.jretai.2014.09.005

- Ahluwalia, R., H. R. Unnava, and R. E. Burnkrant. 2001. “The Moderating Role of Commitment on the Spillover Effect of Marketing Communications.” Journal of Marketing Research 38 (4):458–70. doi:10.1509/jmkr.38.4.458.18903

- Andrejevic, M. 2006. “The Discipline of Watching: Detection, Risk, and Lateral Surveillance.” Critical Studies in Media Communication 23 (5):391–407. doi:10.1080/07393180601046147

- Araujo, T., J. Ausloos, W. van Atteveldt, F. Loecherbach, J. Moeller, J. Ohme, D. Trilling, B. van de Velde, C. de Vreese, and K. Welbers. 2021. OSD2F: An Open-Source Data Donation Framework. SocArXiv. doi:10.31235/osf.io/xjk6t

- Baek, T. H., and M. Morimoto. 2012. “Stay Away from Me.” Journal of Advertising 41 (1):59–76. doi:10.2753/JOA0091-3367410105

- Baruh, L., E. Secinti, and Z. Cemalcilar. 2017. “Online Privacy Concerns and Privacy Management: A Meta-Analytical Review.” Journal of Communication 67 (1):26–53. doi:10.1111/jcom.12276

- Bleier, A., and M. Eisenbeiss. 2015. “The Importance of Trust for Personalized Online Advertising.” Journal of Retailing 91 (3):390–409. doi:10.1016/j.jretai.2015.04.001

- Boerman, S. C., S. Kruikemeier, and F. J. Zuiderveen Borgesius. 2017. “Online Behavioral Advertising: A Literature Review and Research Agenda.” Journal of Advertising 46 (3):363–76. doi:10.1080/00913367.2017.1339368

- Boerman, S. C., S. Kruikemeier, and F. J. Zuiderveen Borgesius. 2021. “Exploring Motivations for Online Privacy Protection Behavior: Insights from Panel Data.” Communication Research 48 (7):953–77. doi:10.1177/0093650218800915

- Boerman, S. C., and C. M. Segijn. 2022. “Awareness and Perceived Appropriateness of Synced Advertising in Dutch Adults.” Journal of Interactive Advertising 22 (2):187–94. doi:10.1080/15252019.2022.2046216

- Boerman, S. C., L. M. Willemsen, and E. P. Van Der Aa. 2017. ““This Post Is Sponsored”: Effects of Sponsorship Disclosure on Persuasion Knowledge and Electronic Word of Mouth in the Context of Facebook.” Journal of Interactive Marketing 38:82–92. doi:10.1016/j.intmar.2016.12.002

- Bol, N., T. Dienlin, S. Kruikemeier, M. Sax, S. C. Boerman, J. Strycharz, N. Helberger, and C. H. de Vreese. 2018. “Understanding the Effects of Personalization as a Privacy Calculus: Analyzing Self-Disclosure across Health, News, and Commerce Contexts.” Journal of Computer-Mediated Communication 23 (6):370–88. doi:10.1093/jcmc/zmy020

- Brandimarte, L., A. Acquisti, and G. Loewenstein. 2013. “Misplaced Confidences: Privacy and the Control Paradox.” Social Psychological and Personality Science 4 (3):340–7. doi:10.1177/1948550612455931

- Brehm, S. S., and J. W. Brehm. 1981. Psychological Reactance: A Theory of Freedom and Control. San Diego, CA: Academic Press.

- Bruder, M., P. Haffke, N. Neave, N. Nouripanah, and R. Imhoff. 2013. “Measuring Individual Differences in Generic Beliefs in Conspiracy Theories across Cultures: Conspiracy Mentality Questionnaire.” Frontiers in Psychology 4:225. doi:10.3389/fpsyg.2013.00225

- Büchi, M., N. Festic, and M. Latzer. 2022. “The Chilling Effects of Digital Dataveillance: A Theoretical Model and an Empirical Research Agenda.” Big Data & Society 9 (1):205395172110653. doi:10.1177/20539517211065368

- Büchi, M., E. Fosch-Villaronga, C. Lutz, A. Tamò-Larrieux, and S. Velidi. 2021. “Making Sense of Algorithmic Profiling: User Perceptions on Facebook.” Information, Communication & Society, 1–17. doi:10.1080/1369118X.2021.1989011

- Campbell, J. E., and M. Carlson. 2002. “Panopticon.com: Online Surveillance and the Commodification of Privacy.” Journal of Broadcasting & Electronic Media 46 (4):586–606. doi:10.1207/s15506878jobem4604_6

- Choi, H., J. Park, and Y. Jung. 2018. “The Role of Privacy Fatigue in Online Privacy Behavior.” Computers in Human Behavior 81:42–51. doi:10.1016/j.chb.2017.12.001

- Christl, W. 2017. Corporate Surveillance in Everyday Life: How Companies Collect, Combine, Analyze, Trade, and Use Personal Data on Billions. Vienna: Cracked Labs.

- Dahlen, M., and S. Rosengren. 2016. “If Advertising Won't Die, What Will It Be? Toward a Working Definition of Advertising.” Journal of Advertising 45 (3):334–45. doi:10.1080/00913367.2016.1172387

- Davis, F. D., R. P. Bagozzi, and P. R. Warshaw. 1989. “User Acceptance of Computer Technology: A Comparison of Two Theoretical Models.” Management Science 35 (8):982–1003. doi:10.1287/mnsc.35.8.982

- De Cock Buning, M. 2018. A Multi-Dimensional Approach to Disinformation: Report of the Independent High Level Group on Fake News and Online Disinformation. Luxembourg: Publications Office of the European Union. https://hdl.handle.net/1814/70297

- Dedehayir, O., and M. Steinert. 2016. “The Hype Cycle Model: A Review and Future Directions.” Technological Forecasting and Social Change 108:28–41. doi:10.1016/j.techfore.2016.04.005

- Degeling, M., C. Utz, C. Lentzsch, H. Hosseini, F. Schaub, and T. Holz. 2019. “We Value Your Privacy … Now Take Some Cookies: Measuring the GDPR’s Impact on Web Privacy.” In Proceedings of the Network and Distributed System Security Symposium (NDSS 2019). doi:10.14722/ndss.2019.23xxx

- Degli-Esposti, S. 2014. “When Big Data Meets Dataveillance: The Hidden Side of Analytics.” Surveillance & Society 12 (2):209–25. doi:10.24908/ss.v12i2.5113

- De Keyzer, F., N. Dens, and P. De Pelsmacker. 2015. “Is This for Me? How Consumers Respond to Personalized Advertising on Social Network Sites.” Journal of Interactive Advertising 15 (2):124–34. doi:10.1080/15252019.2015.1082450

- DeVito, M. A., D. Gergle, and J. Birnholtz. 2017. “‘Algorithms Ruin Everything’: #RIPTwitter, Folk Theories, and Resistance to Algorithmic Change in Social Media.” In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, 3163–74.

- Dinev, T., and P. Hart. 2006. “An Extended Privacy Calculus Model for e-Commerce Transactions.” Information Systems Research 17 (1):61–80. doi:10.1287/isre.1060.0080

- Duffy, B. E., and N. K. Chan. 2019. “‘You Never Really Know Who’s Looking’: Imagined Surveillance across Social Media Platforms.” New Media & Society 21 (1):119–38. doi:10.1177/1461444818791318

- Farman, L., M. L. Comello, and J. R. Edwards. 2020. “Are Consumers Put off by Retargeted Ads on Social Media? Evidence for Perceptions of Marketing Surveillance and Decreased Ad Effectiveness.” Journal of Broadcasting & Electronic Media 64 (2):298–319. doi:10.1080/08838151.2020.1767292

- Fransen, M. L., P. W. Verlegh, A. Kirmani, and E. G. Smit. 2015. “A Typology of Consumer Strategies for Resisting Advertising, and a Review of Mechanisms for Countering Them.” International Journal of Advertising 34 (1):6–16. doi:10.1080/02650487.2014.995284

- Frick, N. R., K. L. Wilms, F. Brachten, T. Hetjens, S. Stieglitz, and B. Ross. 2021. “The Perceived Surveillance of Conversations through Smart Devices.” Electronic Commerce Research and Applications 47:101046. doi:10.1016/j.elerap.2021.101046

- Friestad, M., and P. Wright. 1994. “The Persuasion Knowledge Model: How People Cope with Persuasion Attempts.” Journal of Consumer Research 21 (1):1–31. doi:10.1086/209380

- Gelman, S. A., and C. H. Legare. 2011. “Concepts and Folk Theories.” Annual Review of Anthropology 40:379–98. doi:10.1146/annurev-anthro-081309-145822

- Ham, C. D. 2017. “Exploring How Consumers Cope with Online Behavioral Advertising.” International Journal of Advertising 36 (4):632–58. doi:10.1080/02650487.2016.1239878

- Ham, C. D., and M. R. Nelson. 2016. “The Role of Persuasion Knowledge, Assessment of Benefit and Harm, and Third-Person Perception in Coping with Online Behavioral Advertising.” Computers in Human Behavior 62:689–702. doi:10.1016/j.chb.2016.03.076

- Helberger, N., J. Huh, G. Milne, J. Strycharz, and H. Sundaram. 2020. “Macro and Exogenous Factors in Computational Advertising: Key Issues and New Research Directions.” Journal of Advertising 49 (4):377–93. doi:10.1080/00913367.2020.1811179

- Hess, N. J., C. M. Kelley, M. L. Scott, M. Mende, and J. H. Schumann. 2020. “Getting Personal in Public!? How Consumers Respond to Public Personalized Advertising in Retail Stores.” Journal of Retailing 96 (3):344–61. doi:10.1016/j.jretai.2019.11.005

- Hovland, C. I., and W. Weiss. 1951. “The Influence of Source Credibility on Communication Effectiveness.” Public Opinion Quarterly 15 (4):635–50. doi:10.1086/266350

- Hudders, L., P. De Pauw, V. Cauberghe, K. Panic, B. Zarouali, and E. Rozendaal. 2017. “Shedding New Light on How Advertising Literacy Can Affect Children's Processing of Embedded Advertising Formats: A Future Research Agenda.” Journal of Advertising 46 (2):333–49. doi:10.1080/00913367.2016.1269303

- Huh, J., and E. C. Malthouse. 2020. “Advancing Computational Advertising: Conceptualization of the Field and Future Directions.” Journal of Advertising 49 (4):367–76. doi:10.1080/00913367.2020.1795759

- Jung, A. R. 2017. “The Influence of Perceived Ad Relevance on Social Media Advertising: An Empirical Examination of a Mediating Role of Privacy Concern.” Computers in Human Behavior 70:303–9. doi:10.1016/j.chb.2017.01.008

- Kim, H., and J. Huh. 2017. “Perceived Relevance and Privacy Concern regarding Online Behavioral Advertising (OBA) and Their Role in Consumer Responses.” Journal of Current Issues & Research in Advertising 38 (1):92–105. doi:10.1080/10641734.2016.1233157

- Kim, T., K. Barasz, and L. K. John. 2019. “Why Am I Seeing This Ad? The Effect of Ad Transparency on Ad Effectiveness.” Journal of Consumer Research 45 (5):906–32. doi:10.1093/jcr/ucy039

- Kim, Y. J., and J. Han. 2014. “Why Smartphone Advertising Attracts Customers: A Model of Web Advertising, Flow, and Personalization.” Computers in Human Behavior 33:256–69. doi:10.1016/j.chb.2014.01.015

- Klayman, J. 1995. “Varieties of Confirmation Bias.” Psychology of Learning and Motivation 32:385–418. doi:10.1016/S0079-7421(08)60315-1

- Kwet, M. 2019. “In Stores, Secret Surveillance Tracks Your Every Move.” New York Times. https://www.nytimes.com/interactive/2019/06/14/opinion/bluetooth-wireless-tracking-privacy.html

- Laufer, R. S., and M. Wolfe. 1977. “Privacy as a Concept and a Social Issue: A Multidimensional Developmental Theory.” Journal of Social Issues 33 (3):22–42. doi:10.1111/j.1540-4560.1977.tb01880.x

- Lee, H., and C. H. Cho. 2020. “Digital Advertising: Present and Future Prospects.” International Journal of Advertising 39 (3):332–41. doi:10.1080/02650487.2019.1642015

- Li, H. 2019. “Special Section Introduction: Artificial Intelligence and Advertising.” Journal of Advertising 48 (4):333–7. doi:10.1080/00913367.2019.1654947

- Lin, C. A., and X. Xu. 2021. “Exploring Bottled Water Purchase Intention via Trust in Advertising, Product Knowledge, Consumer Beliefs and Theory of Reasoned Action.” Social Sciences 10 (8):295. doi:10.3390/socsci10080295

- Lineup. 2021. “Overcoming the Ad Blindness of Millennials and Gen Z.” https://lineup.com/blog/overcoming-ad-blindness/

- Liu-Thompkins, Y., and E. C. Malthouse. 2017. “A Primer on Using Behavioral Data for Testing Theories in Advertising Research.” Journal of Advertising 46 (1):213–25. doi:10.1080/00913367.2016.1252289

- Lutz, C., C. P. Hoffmann, and G. Ranzini. 2020. “Data Capitalism and the User: An Exploration of Privacy Cynicism in Germany.” New Media & Society 22 (7):1168–87. doi:10.1177/1461444820912544

- Lyon, D. 2017. “Surveillance Culture: Engagement, Exposure, and Ethics in Digital Modernity.” International Journal of Communication 11:824–42.

- McDonald, A. M., and L. F. Cranor. 2008. “The Cost of Reading Privacy Policies.” I/S: A Journal of Law and Policy for the Information Society 4:543–68.

- McDonald, A. M., and L. F. Cranor. 2010. “Americans' Attitudes about Internet Behavioral Advertising Practices.” In Proceedings of the 9th Annual ACM Workshop on Privacy in the Electronic Society, 63–72. Chicago, IL. doi:10.1145/1866919.1866929

- Nickerson, R. S. 1998. “Confirmation Bias: A Ubiquitous Phenomenon in Many Guises.” Review of General Psychology 2 (2):175–220. doi:10.1037/1089-2680.2.2.175

- Richards, J. I., and C. M. Curran. 2002. “Oracles on ‘Advertising’: Searching for a Definition.” Journal of Advertising 31 (2):63–77. doi:10.1080/00913367.2002.10673667

- Rip, A. 2019. “Folk Theories of Nanotechnologists.” In Nanotechnology and Its Governance, edited by A. Rip, 56–74. London: Routledge.

- Rodgers, S. 2021. “Themed Issue Introduction: Promises and Perils of Artificial Intelligence and Advertising.” Journal of Advertising 50 (1):1–10. doi:10.1080/00913367.2020.1868233

- Segijn, C. M. 2019. “A New Mobile Data Driven Message Strategy Called Synced Advertising: Conceptualization, Implications, and Future Directions.” Annals of the International Communication Association 43 (1):58–77. doi:10.1080/23808985.2019.1576020

- Segijn, C. M., E. Kim, A. Sifaoui, and S. C. Boerman. 2021. “When Realizing That Big Brother Is Watching You: The Empowerment of the Consumer through Synced Advertising Literacy.” Journal of Marketing Communications, 1–22. doi:10.1080/13527266.2021.2020149

- Segijn, C. M., S. J. Opree, and I. Van Ooijen. 2022. “The Validation of the Perceived Surveillance Scale.” Cyberpsychology: Journal of Psychosocial Research on Cyberspace 16 (3). doi:10.5817/CP2022-3-9

- Segijn, C. M., J. Strycharz, A. Riegelman, and C. Hennesy. 2021. “A Literature Review of Personalization Transparency and Control: Introducing the Transparency–Awareness–Control Framework.” Media and Communication 9 (4):120–33. doi:10.17645/mac.v9i4.4054

- Segijn, C. M., and I. Van Ooijen. 2020. “Perceptions of Techniques Used to Personalize Messages across Media in Real Time.” Cyberpsychology, Behavior, and Social Networking 23 (5):329–37. doi:10.1089/cyber.2019.0682

- Segijn, C. M., and I. Van Ooijen. 2022. “Differences in Consumer Knowledge and Perceptions of Personalized Advertising: Comparing Online Behavioural Advertising and Synced Advertising.” Journal of Marketing Communications 28 (2):207–20. doi:10.1080/13527266.2020.1857297

- Sifaoui, A. 2021. “‘We Know What You See, So Here’s an Ad!’ Online Behavioral Advertising and Surveillance on Social Media in an Era of Privacy Erosion.” PhD diss., University of Minnesota. https://hdl.handle.net/11299/224475

- Sifaoui, A., G. Lee, and C. M. Segijn. 2022. “Brand Match vs. Mismatch and Its Impact on Avoidance through Perceived Surveillance in the Context of Synced Advertising.” In Advances in Advertising Research, edited by A. Vignolles, Vol. XII. Wiesbaden: Springer Gabler.

- Smit, E. G., G. Van Noort, and H. A. Voorveld. 2014. “Understanding Online Behavioural Advertising: User Knowledge, Privacy Concerns and Online Coping Behaviour in Europe.” Computers in Human Behavior 32:15–22. doi:10.1016/j.chb.2013.11.008

- Solove, D. J. 2007. “I've Got Nothing to Hide and Other Misunderstandings of Privacy.” San Diego Law Review 44:745.

- Song, J. H., H. Y. Kim, S. Kim, S. W. Lee, and J. H. Lee. 2016. “Effects of Personalized e-Mail Messages on Privacy Risk: Moderating Roles of Control and Intimacy.” Marketing Letters 27 (1):89–101. doi:10.1007/s11002-014-9315-0

- Stasson, M., and M. Fishbein. 1990. “The Relation between Perceived Risk and Preventive Action: A within‐Subject Analysis of Perceived Driving Risk and Intentions to Wear Seatbelts.” Journal of Applied Social Psychology 20 (19):1541–57. doi:10.1111/j.1559-1816.1990.tb01492.x

- Strycharz, J., and B. Duivenvoorde. 2021. “The Exploitation of Vulnerability through Personalised Marketing Communication: Are Consumers Protected?” Internet Policy Review 10 (4):1–27. doi:10.14763/2021.4.1585

- Strycharz, J., and C. M. Segijn. 2021. “Personalized Advertising and Chilling Effects: Consumers’ Change in Media Diet in Response to Corporate Surveillance.” The International Conference on Research in Advertising, Virtual Conference.

- Strycharz, J., E. Smit, N. Helberger, and G. van Noort. 2021. “No to Cookies: Empowering Impact of Technical and Legal Knowledge on Rejecting Tracking Cookies.” Computers in Human Behavior 120:106750. doi:10.1016/j.chb.2021.106750

- Strycharz, J., G. Van Noort, E. Smit, and N. Helberger. 2019. “Protective Behavior against Personalized Ads: Motivation to Turn Personalization Off.” Cyberpsychology: Journal of Psychosocial Research on Cyberspace 13 (2). doi:10.5817/CP2019-2-1

- Swami, V., T. Chamorro‐Premuzic, and A. Furnham. 2010. “Unanswered Questions: A Preliminary Investigation of Personality and Individual Difference Predictors of 9/11 Conspiracist Beliefs.” Applied Cognitive Psychology 24 (6):749–61. doi:10.1002/acp.1583

- Swire-Thompson, B., and D. Lazer. 2020. “Public Health and Online Misinformation: Challenges and Recommendations.” Annual Review of Public Health 41:433–51. doi:10.1146/annurev-publhealth-040119-094127

- Tan, B. J., M. Brown, and N. Pope. 2019. “The Role of Respect in the Effects of Perceived Ad Interactivity and Intrusiveness on Brand and Site.” Journal of Marketing Communications 25 (3):288–306. doi:10.1080/13527266.2016.1270344

- Toff, B., and R. K. Nielsen. 2018. “‘I Just Google It’: Folk Theories of Distributed Discovery.” Journal of Communication 68 (3):636–57. doi:10.1093/joc/jqy009

- Tushnet, R., and E. Goldman. 2020. Advertising & Marketing Law: Cases & Materials. Santa Clara: Faculty Book Gallery.

- Van Dijck, J. 2014. “Datafication, Dataism and Dataveillance: Big Data between Scientific Paradigm and Ideology.” Surveillance & Society 12 (2):197–208. doi:10.24908/ss.v12i2.4776

- Van Gogh, R., M. Walrave, and K. Poels. 2020. “Personalization in Digital Marketing: Implementation Strategies and the Corresponding Ethical Issues.” In The SAGE Handbook of Marketing Ethics, edited by L. Eagle, S. Dahl, P. De Pelsmacker, and C. R. Taylor, 411–23. London: Sage.

- Van Noort, G., E. G. Smit, and H. A. Voorveld. 2013. “The Online Behavioural Advertising Icon: Two User Studies.” In Advances in Advertising Research, Vol. IV, 365–78. Wiesbaden: Springer Gabler.

- Van Ooijen, I. 2022. “When Disclosures Backfire: Aversive Source Effects for Personalization Disclosures on Less Trusted Platforms.” Journal of Interactive Marketing 57 (2):178–97. doi:10.1177/10949968221080499