Abstract

Purpose

Progress tests (PTs) assess applied knowledge, promote knowledge integration, and facilitate retention. Clinical attachments catalyse learning through an appropriate learning context. The relationship between PT results and clinical attachment sequence and performance is under-explored. Aims: (1) determine the effect of Year 4 general surgical attachment (GSA) completion and sequence on overall PT performance and on surgically coded items; (2) determine the association between PT results in the first 2 years and GSA assessment outcomes.

Materials and methods

All students enrolled in the medical programme who started Year 2 between January 2013 and January 2016 were included, with follow-up until December 2018. A linear mixed model was applied to study the effect of undertaking a GSA on subsequent PT results. Logistic regressions were used to explore the effect of past PT performance on the likelihood of a student receiving a distinction grade in the GSA.

Results

A total of 965 students were included, and 2191 PT items (363 surgical items) were analysed. Sequenced exposure to the GSA in Year 4 was associated with increased performance on surgically coded PT items, but not with overall PT performance, and the difference decreased over the year. PT performance in Years 2–3 was associated with an increased likelihood of being awarded a GSA distinction grade (OR 1.62, p < 0.001), with overall PT performance a better predictor than performance on surgically coded items.

Conclusions

Exposure to a surgical attachment improves results on surgically coded PT items, although the effect diminishes over time, implying that clinical exposure may accelerate subject-specific learning. Timing of the GSA did not influence end-of-year performance in the PT. There is some evidence that students who perform well on PTs in the preclinical years are more likely to receive a distinction grade in a surgical attachment than those with lower PT scores.

Introduction

The literature on the relationship between learning context and progress testing is sparse. It is presumed that clinical attachments contribute to learning for the PT. The limited data on relationships between PT performance and learning activities or other assessments may be because a common tenet of PT is that students cannot study specifically for the test, as it covers the whole breadth of learning in the programme (Wrigley et al. 2012; Dion et al. 2022). Students should study continually and are likely to focus on current attachments and on areas of weakness identified in previous PTs (Schuwirth and van der Vleuten 2010; Neeley et al. 2016). This study was undertaken for two reasons. First, anecdotal reports and student feedback indicated that some students were concerned about the ‘fairness’ of their clinical attachment rotation cycles in relation to the timing of our three annual PTs; students felt that starting with certain attachments gave them an advantage in the PTs. Second, our department of surgery wanted to know how its clinical attachments contributed to PT knowledge development, particularly for surgical items. Although comparison of PT performance with other undergraduate assessments may be part of institutional practice, it is rarely described in the literature. This study investigated whether the sequence of a clinical attachment (general surgery) affects performance on the PT and whether PT scores correlate with surgical attachment assessment outcomes.

Practice points

Students on surgical placement showed a transient performance improvement in surgical knowledge on progress tests.

This effect disappears once all students have completed the attachment.

Timing (sequence) of a surgical attachment does not ultimately advantage or disadvantage a student.

There was weaker evidence that students who performed well on progress tests in the early years were more likely to perform well in their later surgical attachment.

Background

Progress tests (PTs) have been used in medical education since the 1970s, with wider adoption since the 1990s, in both undergraduate and postgraduate medical training (Freeman et al. 2010; Wrigley et al. 2012; Albanese and Case 2016). PTs are designed to provide a longitudinal test of students’ applied knowledge and are an assessment both ‘of’ and ‘for’ learning (Schuwirth and van der Vleuten 2012; Norman et al. 2010). Key principles of progress testing are that all students in a programme of study sit the same test, drawn from a large question bank against a blueprint, several times a year, and that questions are set at graduating or completing level (Wrigley et al. 2012). Compared with one-off assessments, PTs improve acquisition of integrated, higher-order knowledge and facilitate long-term knowledge retention (Cecilio-Fernandes et al. 2016; van der Vleuten et al. 2018; Görlich and Friederichs 2021). This form of testing allows demonstration of knowledge growth as students progress, helps to identify knowledge gaps, and allows staff to apply targeted and timely remediation (Muijtjens et al. 2008; Norman et al. 2010; Neeley et al. 2016; Tio et al. 2016; Heeneman et al. 2017).

The PT literature has mainly focused on operational aspects (planning, implementation, collaboration, evaluation) and the metrics of testing (Wrigley et al. 2012; Neeley et al. 2016; Dion et al. 2022); in the terms of Cook et al. (2008), these are largely description and justification studies. Among clarification studies, a few correlational studies have explored the predictive value of PTs for future performance in postgraduate assessment (Willoughby et al. 1977; Pugh et al. 2016; Karay and Schauber 2018; Hamamoto Filho et al. 2019; Wang et al. 2021), and some have explored the relationship between PT performance and other assessments or learning activities among students (Van Diest et al. 2004; De Champlain et al. 2010; Schaap et al. 2012; Ali et al. 2018; Laird-Fick et al. 2018; Cecilio-Fernandes, Aalders, et al. 2018; Cecilio-Fernandes, Kerdijk, et al. 2018; Couto et al. 2019). Only two of these studies explored the effect of clinical attachment sequencing (Van Diest et al. 2004; De Champlain et al. 2010). The longitudinal nature of PTs fits within the paradigm of programmatic assessment (Pugh and Regehr 2016; Heeneman et al. 2017), in which some triangulation between assessments is desirable to confirm consistency of performance across a range of assessments. Ideally, each learning experience should contribute to overall learning in several domains.

Impact of timing/sequence of clinical attachments on PT performance

Two studies were found that explore this (Van Diest et al. 2004; De Champlain et al. 2010), together with one survey study indicating that medical and dental students found early patient contact more useful than textbook learning for the PT (Ali et al. 2018). De Champlain et al. (2010) considered the effect of the rotation order of two clinical blocks on PT performance in one academic year of their programme. Block 1 was a generalist block (family medicine, general medicine and surgery) and Block 2 comprised specialities (neurology, paediatrics, psychiatry, and obstetrics and gynaecology). Knowledge of all disciplines grew over four PTs, and clinical attachments in a particular discipline were associated with knowledge growth in that area. However, students who took Block 1 first performed better in all tests, and in all discipline areas in the final test. The authors hypothesised that medicine and surgery may provide the foundation for study in more specialised subjects. Van Diest et al. (2004) found a knowledge growth effect for psychiatry and behavioural sciences items in their PT across a 6-year programme, reaching a plateau in the last 2 years. Learning opportunities in these disciplinary areas were provided in problem-based learning sessions and a later psychiatric attachment, but the study did not look specifically at the relationship between timing of experiences and performance. Cecilio-Fernandes et al. compared the impact of curriculum design on oncology learning at their own institution and across four medical schools in the Netherlands (Cecilio-Fernandes, Kerdijk, et al. 2018; Cecilio-Fernandes, Aalders, et al. 2018), using the national PT as the measure of learning. In the single-site study, a block of teaching was more effective in the long term than learning spaced out across 3 years (Cecilio-Fernandes, Kerdijk, et al. 2018). In the multi-site study, contact hours, a focussed semester of learning, and pre-internship training were associated with better knowledge gain (Cecilio-Fernandes, Aalders, et al. 2018). Neither study was strictly about attachment sequencing.

Correlation between PT and performance in other assessments

Five studies assessed the correlation between PT scores during the medical programme and either other in-programme performance or subsequent postgraduate performance (Willoughby et al. 1977; Karay and Schauber 2018; Couto et al. 2019; Hamamoto Filho et al. 2019; Wang et al. 2021). Couto et al. (2019) correlated PT scores with formative assessment in small group learning (SGL) and with OSCE scores. PT scores correlated positively with SGL and with OSCE scores at all stages, and all three correlated most strongly at the 8th-semester timepoint (the latest point tested). In the other four studies, undergraduate PT performance predicted postgraduate performance in national licensing examinations or selection for training. In a sixth study, Pugh et al. (2016) developed an OSCE PT (a repeated OSCE based on PT philosophy) for postgraduate internal medicine trainees and compared performance in this test with performance in the disciplinary college OSCE. They found a correlation between OSCE PT performance and scores in both the written and practical college assessments; importantly, they were able to predict failure among candidates at the lower end of PT performance.

In our programme, each student has a pre-determined clinical rotation, shared with a small group of students. This allowed us to study rotation sequence and knowledge growth in one discipline area without an intervention, with the intention of addressing this gap in the literature. General surgery provided a good discipline marker, as it was the only dedicated surgical attachment in our Year 4. Unlike De Champlain et al. (2010), we rotated all disciplines separately and could therefore remove the potential effect of combined discipline blocks. However, we agree with their assertion that there is generic core learning in all clinical attachments.

Research positioning

In this study we have adopted a realist ontological stance. Therefore, a quantitative approach was taken, with a correlational focus, based on existing data and the rationales for the study. The stance is post-positivist, seeking some objective answers, but acknowledging the fact that there may be several explanations for the findings, and that biases and confounders exist in a complex educational system. Alongside this, the authors hold a cognitive and social constructionist worldview in relation to learning. While this analysis seeks correlations within empirical data, we recognise that students take responsibility for their learning and approach this in different ways. Teaching and assessment play important roles in that process, but students also engage in their own study practices around their experiential learning.

Therefore, using the surgical attachment as our marker, the aims of our study were summarised as two research questions, to determine: (1) whether there was any relationship between the sequence in which students undertook this clinical attachment and PT performance, in related disciplinary questions and/or overall (timing); and (2) whether there was any association between PT results (overall and in items coded to surgical topics) and assessment outcomes in the Year 4 general surgery attachment (correlation). Our hypothesis was that there would be an effect in both cases, but no specific effect on non-surgical PT items.

Materials and methods

This was a longitudinal observational study using a retrospective analysis of prospectively collected student assessment data. Ethics approval was granted by the University of Auckland Human Participants Ethics Committee (# 022281).

Setting

Students enter the MBChB programme at Year 2, selected either from a common health science first year or as graduates. Years 2 and 3 (Y2–3) are predominantly campus based and cover early learning for clinical practice (including simulation and some clinical workplace learning). In Years 4 and 5 (Y4–5), students rotate through a range of clinical attachments. Year 6 (Y6) is a preparation-for-practice year. The number of students per year group has been increased deliberately since 2013, with intakes ranging from 242 to 272 students in the current study.

Progress testing was introduced to the medical programme in 2013 (Lillis et al. 2014) and is a summative assessment for the ‘Applied Science for Medicine’ domain. The other four domains are ‘Clinical and Communication Skills’, ‘Personal and Professional Skills’, ‘Hauora Māori’ (Māori health), and ‘Population Health’.

Participants

The study included all students enrolled in the medical programme who started Year 2 between January 2013 and January 2016. PT performance was tracked to the end of 2018. Students who did not complete Y4 during the study period, had not completed the GSA, or did not sit all three PTs during Y4 were excluded. For students who repeated a year of the programme, only grades from their first full attempt at Y4 were included.

Context

All students take the PT three times a year, simultaneously, in every year of the programme. For Y2–5 the PT is norm-referenced, and for Y6 it is standards-based. Each test is unique and contains 125 single-best-answer questions (one correct option from five), set at graduate level. It is constructed against a blueprint, and questions that have been used are stood down for 3 years. The blueprint was adopted from our PT partner in the UK. Details of our PT have been published previously (Lillis et al. 2014). There is a ‘don’t know’ option, and formula scoring is used (1 mark for a correct answer, −0.25 marks for an incorrect answer, 0 for ‘don’t know’). For each PT, a small number (2–5) of questions are excluded through a moderation process based on item metrics, so most tests have slightly fewer than 125 contributing questions. The final item sets had good internal consistency for each PT, with test- and year-group-specific Cronbach’s alphas and McDonald’s omegas both ranging from 0.74 to 0.92.
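The formula-scoring rule above can be illustrated with a minimal Python sketch. It assumes a hypothetical encoding in which each response is an option letter or `"DK"` for ‘don’t know’, and that items removed at moderation are passed in by index; neither detail is taken from the programme’s actual scoring software. With five options, the −0.25 penalty gives blind guessing an expected score of zero (0.2 × 1 + 0.8 × −0.25 = 0), which is the usual rationale for this rule.

```python
"""Sketch of PT formula scoring: +1 correct, -0.25 incorrect, 0 for "don't know".

Assumptions (not from the source): responses are option letters "A"-"E" or
"DK" for "don't know", and moderated-out items are given as a set of indices.
"""

DONT_KNOW = "DK"
CORRECT_MARK = 1.0
INCORRECT_MARK = -0.25


def formula_score(responses, key, excluded=frozenset()):
    """Return one student's formula score on one test."""
    if len(responses) != len(key):
        raise ValueError("responses and key must be the same length")
    score = 0.0
    for index, (given, correct) in enumerate(zip(responses, key)):
        # Items removed at moderation and "don't know" answers contribute 0.
        if index in excluded or given == DONT_KNOW:
            continue
        score += CORRECT_MARK if given == correct else INCORRECT_MARK
    return score


if __name__ == "__main__":
    key = ["A", "C", "B", "E", "D"]
    responses = ["A", "C", "D", "E", DONT_KNOW]
    # 3 correct, 1 incorrect, 1 "don't know" -> 3 - 0.25 = 2.75
    print(formula_score(responses, key))
    # Expected mark per item for a blind guess among five options
    print(0.2 * CORRECT_MARK + 0.8 * INCORRECT_MARK)
```

Summing per-item marks keeps the rule transparent; a percentage score, if needed, would divide by the number of contributing (non-excluded) items.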

Year 4 of the programme is 44 weeks long, with 3 weeks of vacation and 4 weeks of non-attachment learning. The remaining time is divided between eight clinical discipline contexts (general medicine, geriatrics, specialty medicine, general practice/primary care, general surgery, emergency medicine, anaesthetics, and musculoskeletal), ranging from 1 to 6 weeks in duration. The GSA is 6 weeks long, and students may be assigned to general, paediatric or vascular surgery teams. There are three assessment components: two clinical supervisors’ reports (CSRs); a logbook (documenting history taking, clinical examination, and self-directed learning from cases); and a critical appraisal of a published study to answer a surgery-related question (CAT). Students receive a standards-based categorical grade for each component, and a rubric is then used to combine these into one of four outcomes (distinction, pass, borderline performance, fail).

Data extraction

Data on student age, gender, entry pathway (first year or graduate), PT results for Y2–6 and GSA results were extracted from our databases. For the purposes of the study, PTs were numbered sequentially as PT1–3 (Y2), PT4–6 (Y3) and PT7–9 (Y4). PT10–12 (Y5) and PT13–15 (Y6) are included for illustrative purposes, to demonstrate knowledge growth over the medical programme, but are not otherwise part of the analysis.
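The sequential numbering scheme above follows a simple rule (three tests per year, starting in Year 2). The Python sketch below makes that mapping explicit; the function names are hypothetical helpers, not part of the study’s data systems.

```python
"""Map the study's sequential PT numbers (PT1-PT15) to programme year and sitting.

Assumes, as described above, three PTs per year starting in Year 2.
Function names are illustrative only.
"""

FIRST_YEAR = 2
TESTS_PER_YEAR = 3
ANALYSED_PTS = range(1, 10)  # PT1-PT9 (Y2-Y4); PT10-PT15 are illustrative only


def _check(pt_number):
    if not 1 <= pt_number <= 15:
        raise ValueError("PT numbers run from 1 to 15")


def pt_year(pt_number):
    """Return the programme year (2-6) in which a given PT is sat."""
    _check(pt_number)
    return FIRST_YEAR + (pt_number - 1) // TESTS_PER_YEAR


def pt_sitting(pt_number):
    """Return the sitting within the year (1, 2 or 3)."""
    _check(pt_number)
    return (pt_number - 1) % TESTS_PER_YEAR + 1


if __name__ == "__main__":
    for pt in (1, 6, 7, 9, 15):
        print(f"PT{pt}: Year {pt_year(pt)}, sitting {pt_sitting(pt)}, "
              f"analysed={pt in ANALYSED_PTS}")
```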

Data processing

The PT blueprint does not include surgery as a named topic, as this was considered too broad a category when the PT was being designed. Therefore, for this study, all PT items from the study period were reviewed and coded as either surgical or non-surgical. The coding was initially completed by investigator BL, and items were independently reviewed by two other team members (LP, AW). Surgically coded items were defined with reference to the general surgery curriculum, and disagreements were resolved by consensus. Items related to other surgical specialty topics were coded as non-surgical for the purposes of this analysis, as those specialties have their own clinical attachments and some are covered by specific blueprint topics (ENT, eye, musculoskeletal, neurological, women’s health). Thus, two question sets were used in the analysis: the full set (all items) and the surgical set (items coded as general surgery). Because very few students received a borderline or fail result, surgical attachment assessment outcomes were dichotomised into distinction and non-distinction for analysis.
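The two processing steps above, building the full and surgical question sets and dichotomising GSA outcomes, can be sketched in a few lines of Python. The record layout (an `id` and a consensus `general_surgery` flag per item) and the outcome labels are hypothetical stand-ins for whatever the programme’s databases actually hold.

```python
"""Build the two question sets and the binary GSA outcome used in the analysis.

Hypothetical record layouts: each item carries a consensus flag
`general_surgery`; each GSA result is one of four outcome labels.
"""

GSA_OUTCOMES = {"distinction", "pass", "borderline", "fail"}


def question_sets(items):
    """Return (full_set, surgical_set) as lists of item ids."""
    full_set = [item["id"] for item in items]
    # Items from other surgical specialties (ENT, eye, etc.) are expected to
    # carry general_surgery=False, so they fall outside the surgical set.
    surgical_set = [item["id"] for item in items if item["general_surgery"]]
    return full_set, surgical_set


def is_distinction(outcome):
    """Dichotomise a GSA outcome: distinction (True) vs non-distinction (False)."""
    if outcome not in GSA_OUTCOMES:
        raise ValueError(f"unknown GSA outcome: {outcome!r}")
    return outcome == "distinction"


if __name__ == "__main__":
    items = [
        {"id": "Q1", "general_surgery": True},   # e.g. a general surgery item
        {"id": "Q2", "general_surgery": False},  # e.g. an ENT item
        {"id": "Q3", "general_surgery": False},
    ]
    full_set, surgical_set = question_sets(items)
    print(len(full_set), len(surgical_set))
    print([is_distinction(o) for o in ("distinction", "pass", "borderline", "fail")])
```

Validating the outcome label before dichotomising guards against silently treating a mistyped grade as non-distinction.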

Statistical analysis

Data were analysed using R 3.5.2 (64-bit) for Windows (R Foundation for Statistical Computing, Vienna, Austria) in RStudio Version 1.1.383 for Windows (RStudio Inc., Boston, MA). The glm function and the nlme4 package were used for logistic regression and linear mixed model analyses, respectively.

Logistic regression analyses were used to test whether past PT performance (full set and surgical set, PTs 1–6) was related to the likelihood of achieving a distinction grade in the GSA assessments. Each student’s score on each test was converted to a Z score using the test- and year-group-specific mean and standard deviation. The mean of a student’s Z scores from Y2–3 (PTs 1–6) was used as a measure of the student’s average PT performance level within their cohort during the preclinical years, and is referred to as ‘PT performance’. Gender and entry pathway were included as main effects in all models by forced entry. The mean of each student’s standardised past PT scores was made available for selection as part of a stepwise model selection procedure, as were the interactions between all variables (gender, entry pathway, PT performance).
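The within-cohort standardisation described above can be sketched in Python as follows. This is a minimal illustration, not the study’s R code: it assumes records of the form (student, cohort, PT number, score), treats grouping by (cohort, PT number) as equivalent to ‘test and year group’, and uses the sample standard deviation (`statistics.stdev`), since the source does not state which variant was used. The stepwise logistic regression itself is not reproduced here.

```python
"""Within-cohort Z scores and the mean 'PT performance' over PT1-PT6.

Assumptions: each record is (student, cohort, pt_number, score); grouping by
(cohort, pt_number) stands in for 'test and year group'; the sample standard
deviation (statistics.stdev) is used, although the source does not say which.
"""

from collections import defaultdict
from statistics import mean, stdev


def within_cohort_z(records):
    """Return {(student, pt_number): z} standardised within each test and cohort."""
    groups = defaultdict(list)
    for _student, cohort, pt, score in records:
        groups[(cohort, pt)].append(score)
    summary = {key: (mean(scores), stdev(scores)) for key, scores in groups.items()}

    z_scores = {}
    for student, cohort, pt, score in records:
        group_mean, group_sd = summary[(cohort, pt)]
        z_scores[(student, pt)] = (score - group_mean) / group_sd
    return z_scores


def pt_performance(z_scores, student, pts=range(1, 7)):
    """Mean of a student's Z scores over the preclinical PTs (PT1-PT6 by default)."""
    values = [z_scores[(student, pt)] for pt in pts if (student, pt) in z_scores]
    if not values:
        raise ValueError(f"no preclinical PT scores for {student!r}")
    return mean(values)


if __name__ == "__main__":
    # Hypothetical scores for three students in one cohort on two tests.
    records = [
        ("s1", 2014, 1, 10.0), ("s2", 2014, 1, 20.0), ("s3", 2014, 1, 30.0),
        ("s1", 2014, 2, 5.0), ("s2", 2014, 2, 15.0), ("s3", 2014, 2, 25.0),
    ]
    z = within_cohort_z(records)
    for s in ("s1", "s2", "s3"):
        print(s, pt_performance(z, s))  # s1 -1.0, s2 0.0, s3 1.0
```

Standardising within each test and cohort removes differences in test difficulty and year-group level, so the resulting mean reflects a student’s relative standing rather than raw score.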

Linear mixed models were used to test whether there was a relationship between having undertaken a Y4 surgical attachment and scores in PTs 6–9 (full set and surgical set). To give a meaningful measure of student performance across tests and within question sets, each student’s score on each test was standardised against the mean and standard deviation of the Y6 students sitting the same question set in the same test. Y6 students were used as the reference point because their scores are typically stable and represent the endpoint of the assessment. Main effects for PT timepoint, student gender (male vs. female) and graduate entry status (first year vs. graduate entry) were included by forced entry. Random intercepts were used for each student to account for underlying differences in student performance. The timepoint was entered as test sequential order, which was considered a surrogate for calendar time (i.e. PT7, PT8 and PT9 occur in order and are separated by roughly equal intervals). Stepwise model selection procedures were used to assess whether adding further fixed variables was warranted. The main variable of interest was whether a student had undertaken their GSA (started or completed) by the time of the PT, but interactions between any combination of the fixed variables were also made available for selection. Stepwise procedures were chosen for all analyses because no specific predictions were made about the likely contribution of any interactions; if the variables of interest did have an impact, they should be selected by the procedure without manual interference. The Akaike Information Criterion (AIC) was used for selection in all cases (Stoica and Selén 2004), and the AIC of each optimal model is quoted in the results tables.
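Two ingredients of this analysis, Y6-referenced standardisation and AIC-based comparison of candidate models, can be sketched in Python. This illustrates only the comparison step, not the full stepwise search or the mixed-model fitting done in R. The candidate models, log-likelihoods and parameter counts below are hypothetical, chosen only to show how the lowest AIC is selected; they are not results from this study.

```python
"""Y6-referenced standardisation and AIC-based model choice (illustrative only).

Assumptions: Y6 reference scores for the same test and question set are
available as a list; each candidate model is summarised by a maximised
log-likelihood and a parameter count. All numbers below are hypothetical.
"""

from statistics import mean, stdev


def standardise_to_y6(score, y6_scores):
    """Z distance of a score from the Y6 mean for the same test and item set."""
    return (score - mean(y6_scores)) / stdev(y6_scores)


def aic(log_likelihood, n_params):
    """Akaike Information Criterion: 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_likelihood


def select_by_aic(candidates):
    """Return the name of the candidate model with the lowest AIC.

    candidates: {name: (log_likelihood, n_params)}
    """
    return min(candidates, key=lambda name: aic(*candidates[name]))


if __name__ == "__main__":
    # A Y4 student scoring 40 when the Y6 reference scores are 50, 60 and 70
    print(standardise_to_y6(40.0, [50.0, 60.0, 70.0]))  # -2.0
    candidates = {  # hypothetical fits
        "base": (-1000.0, 6),
        "base + GSA": (-995.0, 7),
        "base + GSA + GSA x time": (-990.0, 8),
    }
    for name, (ll, k) in candidates.items():
        print(name, aic(ll, k))
    print("selected:", select_by_aic(candidates))
```

AIC rewards improved fit but charges 2 units per added parameter, so an extra term is retained only if it raises the log-likelihood by more than 1.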

Results

There were 1035 eligible students. Of these, 70 were excluded by the criteria outlined in the methods section. A total of 965 students were included. Their demographic details were: 519 females (53.8%) and 446 males (46.2%), 853 (88.4%) aged 25 or less, 724 (75.0%) via first year entry and 241 (25.0%) via graduate pathway.

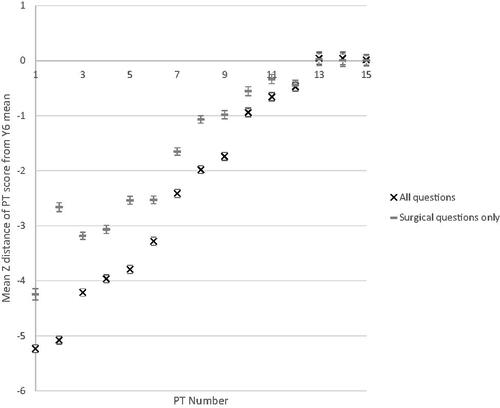

Overall knowledge growth

A total of 2191 PT items were included in the analysis (surgical n = 363; 16.6%). As shown in Figure 1, PT scores increased from Y2 to Y6. Across Y2–Y4, the range on which this study focused, there were statistically significant positive correlations for both the full set of items (r = .78, p < 0.001) and the surgical set (r = .63, p < 0.001), showing knowledge growth over time.

Association between prior PT performance and clinical attachment results

GSA assessment results are shown in Table 1. In the tables and text, ‘Performance’ refers to a student’s average standardised (Z score) PT performance within their cohort. The results of the logistic regression analyses are summarised in Table 2. The stepwise procedure selected Performance into the model in all cases. Performance was associated with an increased probability of receiving a distinction grade in all GSA assessments, and an overall distinction, regardless of which item set was used; the exception was the logbook model with the surgical question set. Excluding coefficients that did not reach statistical significance, a one-unit increase in Performance was associated with an increase in the odds of receiving a distinction grade by a factor of between 1.24 and 1.63, depending on the assessment and question set (Table 2). Based on the AIC, performance data from the full PT set were a better predictor of GSA results than those from the surgical set. Female gender and graduate entry were also associated with an overall distinction and with a distinction in some GSA components. There was no significant interaction between Performance and either gender or graduate entry. However, as the largest McFadden’s pseudo-R² observed was .032, each relationship appears weak and the results should be interpreted with caution.
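To make the odds ratios and pseudo-R² above more concrete, the Python sketch below converts an odds ratio into a change in probability and computes McFadden’s pseudo-R² from two log-likelihoods. The odds ratio of 1.62 is the value quoted in the abstract; the 20% baseline probability and the log-likelihoods are hypothetical, chosen only for illustration.

```python
"""Interpreting a logistic-regression odds ratio and McFadden's pseudo-R^2.

The odds ratio 1.62 is the value quoted in the abstract; the baseline
probability and log-likelihoods below are hypothetical.
"""

import math


def odds_ratio(coefficient):
    """Odds ratio per one-unit increase in a predictor: exp(beta)."""
    return math.exp(coefficient)


def shifted_probability(baseline_probability, odds_ratio_value, units=1.0):
    """Probability after moving `units` along a predictor with the given odds ratio."""
    baseline_odds = baseline_probability / (1.0 - baseline_probability)
    new_odds = baseline_odds * odds_ratio_value ** units
    return new_odds / (1.0 + new_odds)


def mcfadden_r2(log_likelihood_model, log_likelihood_null):
    """McFadden's pseudo-R^2: 1 - ln L(model) / ln L(null)."""
    return 1.0 - log_likelihood_model / log_likelihood_null


if __name__ == "__main__":
    # Hypothetical 20% baseline chance of a distinction, one unit higher
    # on Performance (mean Z score): odds 0.25 -> 0.405, probability ~0.288
    print(round(shifted_probability(0.20, 1.62), 3))  # 0.288
    print(round(odds_ratio(math.log(1.62)), 2))       # 1.62
    # Hypothetical log-likelihoods giving a pseudo-R^2 of about 0.032
    print(round(mcfadden_r2(-484.0, -500.0), 3))      # 0.032
```

The example shows why the authors urge caution: even a statistically significant odds ratio near 1.6 moves a 20% chance to under 29%, and a pseudo-R² of about 0.03 means the model improves only slightly on an intercept-only fit.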

Table 1. General surgical attachment (GSA) assessment results.

Table 2. Predictors of a distinction grade in each component of the surgical attachment assessment.

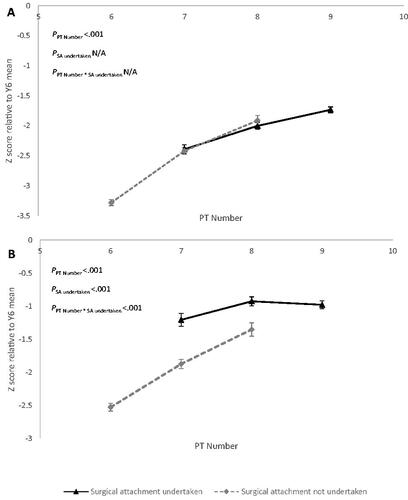

Effect of surgical attachment sequence on subsequent PT performance

The results of the linear mixed models are presented in Table 3. The stepwise procedure selected terms related to students undertaking a GSA when modelling standardised performance on the surgical PT set, but not when modelling standardised performance on the full PT set. For the surgical set, the selected model had statistically significant coefficients for test sequence, GSA, and the interaction between test sequence and GSA. Taken together (Figure 2), these show that performance on the surgical set improved over time regardless of exposure to a GSA. Performance initially increased more with exposure to a GSA, although the effect was reduced at the second time point in Y4 (PT8). The benefit at PT9 could not be measured, as all students had been exposed to a GSA by the final test of the year.
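The way a negative test-sequence × GSA interaction produces a shrinking GSA advantage can be illustrated with the fixed-effects part of a model of this form. The Python sketch below uses hypothetical coefficients and a hypothetical time coding (t = 0 and t = 1 for the first two Year 4 tests); they are chosen only to reproduce the qualitative pattern described above and are not the study’s estimates.

```python
"""How a negative time-by-GSA interaction makes the GSA advantage shrink.

Illustrative fixed-effects part of a random-intercept model:
    z = b0 + b_time * t + b_gsa * gsa + b_int * t * gsa
Coefficient values and the time coding are hypothetical, chosen only to show
the qualitative pattern; they are not the study's estimates.
"""

B0, B_TIME, B_GSA, B_INT = -1.5, 0.20, 0.30, -0.15


def predicted_z(t, gsa):
    """Predicted Y6-referenced Z score at test index t for gsa in {0, 1}."""
    return B0 + B_TIME * t + B_GSA * gsa + B_INT * t * gsa


def gsa_effect(t):
    """Difference between exposed and unexposed students at test index t."""
    return predicted_z(t, 1) - predicted_z(t, 0)  # equals B_GSA + B_INT * t


if __name__ == "__main__":
    # t = 0 and t = 1 stand for the first two Year 4 tests (hypothetical coding);
    # at the third test every student has had the GSA, so no contrast exists.
    for t in (0, 1):
        print(t, round(gsa_effect(t), 2))
```

Both groups still improve over time (B_TIME > 0), but the exposed group’s extra gain, B_GSA + B_INT × t, narrows with each test, which mirrors the pattern reported for the surgical set.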

Figure 2. Effect of undertaking a surgical attachment on subsequent Progress Test (PT) performance. A: All PT items. B: Surgical PT items. PT scores are calculated as the Z distance from the Year 6 mean, by PT number and by whether students had undertaken a surgical attachment. Year 4 PTs are numbered PT7–9.

Table 3. Effect on PT scores of completing a surgical attachment.

Discussion

This longitudinal observational study assessed the relationship between PT performance and both the timing of a general surgical attachment (GSA) and performance in its assessments. High prior performance in the PT was predictive of high performance in the GSA assessments. Timing was explored by looking for a sequencing effect, relating when students undertook the GSA to their performance in the three PTs across the year. Undertaking the GSA was associated with an increase in performance on the surgical question set but had no effect on performance on the complete question set (Table 3). Scores in the surgical PT set increased across Y4 regardless of GSA exposure, so the positive effect of the attachment diminished over time, and the advantage disappears once all students have completed the attachment. The gain irrespective of timing suggests that students acquire some surgical knowledge during the rest of the year from non-surgical attachments, other learning activities (e.g. online learning modules, lectures) and self-study.

All GSA clinical assessments showed a significant association with past PT performance when the scores were based on the full PT set, and all except the logbook showed a significant association with past PT performance for the surgical PT set. This association takes the form of an increased likelihood of students receiving a distinction grade in GSA assessments. However, performance on the surgical PT set was not a better predictor of GSA results than performance on the entire PT set; in fact, model selection indicated that results from the full set were preferable to those from the surgical set. This could be due to several factors: issues with coding of the surgical set; the full set being a larger and more stable data set; or general knowledge being more helpful than attachment-specific knowledge when entering an attachment. Additional research would be required to test these explanations, but the fact that exposure to a GSA was associated with an accelerated increase in performance on subsequent surgical PT items, but not on the entire PT set, provides initial evidence favouring the benefit of general PT knowledge/performance over potential issues with question coding. Taken overall, the findings for PT performance and GSA assessment performance indicate that students who perform well in the knowledge domain in Y2–3 are also more likely to perform well in the GSA assessments. This is interesting because the PT assesses applied knowledge, whereas the GSA assessments assess clinical skills, performance in the clinical environment and professionalism. The findings suggest that students are constructing learning and using knowledge across domains. This aligns with a constructivist view and sits well with the need for triangulation and multiple overlapping sampling in programmatic assessment (Pugh and Regehr 2016; Heeneman et al. 2017). Although Pugh et al. (2016) found a relationship between an OSCE PT and performance in postgraduate examinations, these were within the same domain.

Having undertaken a GSA was associated with higher performance on the surgical PT set, although this difference decreased by the second test and could not be distinguished at the final test, by which time all students had completed the attachment. This relationship appears specific to the surgical PT set and not to overall PT performance, which grows steadily. This finding aligns with those of both De Champlain et al. (2010) and Van Diest et al. (2004). However, De Champlain et al. (2010) grouped clinical attachments and considered only two variations (Block 1 then Block 2, or vice versa), whereas our study explored the timing of a single-discipline attachment across the year in multiple combinations. The loss of the benefit of exposure to a GSA by the end of the year was not initially predicted, as previous findings in psychiatry were that plateauing occurred over a much longer period (Van Diest et al. 2004). However, that study did not attempt to relate timing of learning formally to performance; it simply found that discipline-specific knowledge grew across the programme and noted that students had relevant learning experiences across the same period. The pattern observed here suggests that, as the year continues, knowledge related to general surgery may be developed within the wider learning environment and not just the attachment, and/or that students who completed a GSA earlier in the year lose the advantage they had, diluting the effect. The first scenario (gaining surgical knowledge elsewhere) is understandable given the student experience of clinical attachments: surgeons may be asked to consult on a case, patients may be transferred to surgery, students may share experiences, and other learning opportunities are available. This hypothesis aligns with that of De Champlain et al. (2010), who posited the value of core and general knowledge learning taking place in any attachment. The second scenario is more in line with a proximity/current-attachment argument and is an area worth considering in future research.

While not the primary focus of this research, associations with gender and/or graduate entry status were present but less consistent. Significant interactions between these variables were found for the overall GSA assessment and the CAT, and significant relationships with gender were present for the logbook and CSR assessments in the absence of significant interactions. Further investigation of these areas may be of interest. Gender differences in assessment performance have been identified previously (Kacprzyk et al. 2019), but in favour of male students. In this study, where there was an effect, it favoured female students in the GSA assessments.

Limitations

The study relied on coding of PT items to identify surgical items. Although coding was checked independently by three team members, it was undertaken specifically for this study (similar to Van Diest et al. (2004); coding appears to have been taken directly from the blueprint in Cecilio-Fernandes et al. (2018)). An alternative approach to exploring the relationship between PT performance and clinical attachment results would be to use an attachment for which PT items were specifically designed, such as paediatrics (although again there would be overlap with learning in general practice and women’s health). This study assessed the effect of having either started or completed a GSA, which means some students were classified as having GSA exposure without having gained the full benefit of their experiential learning; this may have weakened the effects observed. The study also used mean performance from the preclinical phase as a predictor of categorical GSA performance. While this returned significant results, the amount of variance explained was low. Future research could explore other ways of measuring student ability from PT data and/or use non-categorical measures of GSA performance. Unfortunately, in our case, meaningful GSA performance measures are only available in categorical form.

Conclusions

This study demonstrated a relationship between PT performance and GSA results. Specifically, students who score highly in Year 2–3 PTs, before clinical attachments begin, are more likely to receive a distinction grade during their GSA. Exposure to the GSA has a positive effect on performance in surgical PT items in Year 4, but the effect diminishes over the year and disappears by the final test. Further research is required to determine whether these results are generalisable to other clinical specialties, but it is likely that experiential learning during clinical attachments accelerates acquisition of discipline-specific knowledge and may also improve generalist knowledge. The findings are important for several reasons. First, students who perform well in knowledge tests at the start of the medical programme are likely to achieve at a high level in subsequent experiential learning. Second, experiential disciplinary learning increases discipline-specific knowledge. Finally, the timing of clinical attachments has a temporary advantageous effect on discipline-based knowledge performance, but this advantage disappears once all students in the cohort have undergone the attachment.

Acknowledgements

We thank Dr Leah Pointon (LP) who assisted with validation of coding of the surgical items.

Disclosure statement

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the article.

Additional information

Funding

Notes on contributors

Andy Wearn

Andy Wearn, MBChB, MMedSc, MRCGP, FRNZCGP, is an academic general practitioner and became Head of the Medical Programme during the study period.

Vanshay Bindra

Vanshay Bindra, MBChB, transitioned from medical student to junior doctor during the data analysis.

Bradley Patten

Bradley Patten, BSc, PhD, is a psychometrician with experience in educational assessment.

Benjamin P. T. Loveday

Benjamin P. T. Loveday, MBChB, PhD, FRACS, is an academic surgeon with an interest in surgical education.

References

- Albanese M, Case SM. 2016. Progress testing: critical analysis and suggested practices. Adv Health Sci Educ Theory Pract. 21(1):221–234.

- Ali K, Cockerill J, Zahra D, Tredwin C, Ferguson C. 2018. Impact of progress testing on the learning experiences of students in medicine, dentistry and dental therapy. BMC Med Educ. 18(1):1–11.

- Cecilio-Fernandes D, Kerdijk W, Jaarsma AC, Tio RA. 2016. Development of cognitive processing and judgments of knowledge in medical students: analysis of progress test results. Med Teach. 38(11):1125–1129.

- Cecilio-Fernandes D, Kerdijk W, Bremers AJ, Aalders W, Tio RA. 2018. Comparison of the level of cognitive processing between case-based items and non-case-based items on the Interuniversity Progress Test of Medicine in the Netherlands. J Educ Eval Health Prof. 15:28.

- Cecilio-Fernandes D, Aalders WS, Bremers AJ, Tio RA, de Vries J. 2018. The impact of curriculum design in the acquisition of knowledge of oncology: comparison among four medical schools. J Cancer Educ. 33(5):1110–1114.

- Cook DA, Bordage G, Schmidt HG. 2008. Description, justification and clarification: a framework for classifying the purposes of research in medical education. Med Educ. 42(2):128–133.

- Couto LB, Durand MT, Wolff AC, Restini CB, Faria M Jr, Romão GS, Bestetti RB. 2019. Formative assessment scores in tutorial sessions correlates with OSCE and progress testing scores in a PBL medical curriculum. Med Educ Online. 24(1):1560862.

- De Champlain AF, Cuddy MM, Scoles PV, Brown M, Swanson DB, Holtzman K, Butler A. 2010. Progress testing in clinical science education: results of a pilot project between the national board of medical examiners and a US medical school. Med Teach. 32(6):503–508.

- Dion V, St-Onge C, Bartman I, Touchie C, Pugh D. 2022. Written-based progress testing: a scoping review. Acad Med. 97(5):747–757.

- Freeman A, van der Vleuten C, Nouns Z, Ricketts C. 2010. Progress testing internationally. Med Teach. 32(6):451–455.

- Görlich D, Friederichs H. 2021. Using longitudinal progress test data to determine the effect size of learning in undergraduate medical education - a retrospective, single-center, mixed model analysis of progress testing results. Med Educ Online. 26(1):1972505.

- Hamamoto Filho PT, de Arruda Lourenção PLT, do Valle AP, Abbade JF, Bicudo AM. 2019. The correlation between students’ progress testing scores and their performance in a residency selection process. Med Sci Educ. 29(4):1071–1075.

- Heeneman S, Schut S, Donkers J, van der Vleuten C, Muijtjens A. 2017. Embedding of the progress test in an assessment program designed according to the principles of programmatic assessment. Med Teach. 39(1):44–52.

- Kacprzyk J, Parsons M, Maguire PB, Stewart GS. 2019. Examining gender effects in different types of undergraduate science assessment. Ir Educ Stud. 38(4):467–480.

- Karay Y, Schauber SK. 2018. A validity argument for progress testing: examining the relation between growth trajectories obtained by progress tests and national licensing examinations using a latent growth curve approach. Med Teach. 40(11):1123–1129.

- Laird-Fick HS, Solomon DJ, Parker CJ, Wang L. 2018. Attendance, engagement and performance in a medical school curriculum: early findings from competency-based progress testing in a new medical school curriculum. PeerJ. 6:e5283.

- Lillis S, Yielder J, Mogol V, O'Connor B, Bacal K, Booth R, Bagg W. 2014. Progress testing for medical students at the University of Auckland: results from the first year of assessments. J Med Educ Curricular Dev. 1:41–45.

- Muijtjens AM, Schuwirth LW, Cohen-Schotanus J, van der Vleuten CPM. 2008. Differences in knowledge development exposed by multi-curricular progress test data. Adv Health Sci Educ Theory Pract. 13(5):593–605.

- Neeley SM, Ulman CA, Sydelko BS, Borges NJ. 2016. The value of progress testing in undergraduate medical education: a systematic review of the literature. Med Sci Educ. 26(4):617–622.

- Norman G, Neville A, Blake JM, Mueller B. 2010. Assessment steers learning down the right road: impact of progress testing on licensing examination performance. Med Teach. 32(6):496–499.

- Pugh D, Bhanji F, Cole G, Dupre J, Hatala R, Humphrey-Murto S, Touchie C, Wood TJ. 2016. Do OSCE progress test scores predict performance in a national high-stakes examination? Med Educ. 50(3):351–358.

- Pugh D, Regehr G. 2016. Taking the sting out of assessment: is there a role for progress testing? Med Educ. 50(7):721–729.

- Schaap L, Schmidt HG, Verkoeijen PP. 2012. Assessing knowledge growth in a psychology curriculum: which students improve most? Assess Eval Higher Educ. 37(7):875–887.

- Schuwirth LW, van der Vleuten CPM. 2012. The use of progress testing. Perspect Med Educ. 1(1):24–30.

- Stoica P, Selén Y. 2004. Model order selection: a review of the AIC, GIC and BIC rules. IEEE Signal Process Mag. 21(4):36–47.

- Tio RA, Schutte B, Meiboom AA, Greidanus J, Dubois EA, Bremers AJA, Dutch Working Group of the Interuniversity Progress Test of Medicine. 2016. The progress test of medicine: the Dutch experience. Perspect Med Educ. 5(1):51–55.

- van der Vleuten C, Freeman A, Collares CF. 2018. Progress test utopia. Perspect Med Educ. 7(2):136–138.

- Van Diest R, Van Dalen J, Bak M, Schruers K, van der Vleuten C, Muijtjens A, Scherpbier A. 2004. Growth of knowledge in psychiatry and behavioural sciences in a problem-based learning curriculum. Med Educ. 38(12):1295–1301.

- Wang L, Laird-Fick H, Parker CJ, Solomon D. 2021. Using Markov chain model to evaluate medical students’ trajectory on progress tests and predict USMLE step 1 scores–a retrospective cohort study in one medical school. BMC Med Educ. 21(1):1–9.

- Willoughby LT, Dimond GE, Smull NW. 1977. Correlation of quarterly profile examination and national board of medical examiner scores. Educ Psychol Meas. 37(2):445–449.

- Wrigley W, van der Vleuten CPM, Freeman A, Muijtjens A. 2012. A systemic framework for the progress test: strengths, constraints and issues: AMEE guide no. 71. Med Teach. 34(9):683–697.