Abstract

To integrate humanoid robots into daily life, it is necessary to develop the robots that can understand human behaviors and generate human-like behaviors adaptive to their human partners. In previous robotics research, frameworks of encoding human behaviors into motion primitive models, recognizing observed human actions as the motion primitives, and synthesizing motions from the motion primitive models have been developed. The bidirectional process of motion recognition and motion synthesis can be helpful to establish intuitive behavioral interaction between humans and robots. This paper proposes a novel approach to realizing a robot intelligence for the human–robot interaction. This approach symbolizes perception and actions using hidden Markov models. The recursive process of motion recognition and synthesis based on the hidden Markov models enables a robot to understand that the human partner recognizes robot’s actions and to perform actions accordingly. The proposed framework was applied to an example of human–robot interactions where robot walks through a group of several partners. This application was tested on captured human behaviors, and the validity of this framework is demonstrated through the experiments.

Graphical Abstract

1. Introduction

In developmental psychology, Donald suggested the hypothesis of ‘mimesis’,[Citation1] which posits that intelligence in humans originated in the imitation of gestures. Conceivably, before developing language, early people would have memorized gestures performed by others, symbolized them, recognized behaviors and eventually synthesized behaviors of their own through imitation. This process would have developed into gesture communication. In neuroscience, Rizzolatti et al. discovered mirror neurons in the macaque.[Citation2] Those mirror neurons fire not only when a macaque observes another macaque performing a particular action, but also when the first macaque has just performed the same action. This mirror system is assumed to be related to various functions, such as simulating actions performed by other macaques in order to understand them. Thus, it may contribute to communication as it is located close to Broca’s area, which is relevant to language processing.[Citation3] Thus, bidirectional processes for recognition and synthesis of behaviors in the mirror system have attracted considerable attention in a variety of fields.

The mirror system and the mimesis hypothesis have inspired a wealth of robotics research. Many imitative learning frameworks have been proposed.[Citation4–Citation6] Motion data are encoded into sets of parameters of dynamical systems or stochastic models. These models abstract their corresponding motion patterns, and can be used for motion recognition or motion synthesis. The bidirectional process of the motion recognition and the motion synthesis leads to establishing the behavioral interaction between humans and robots. More specifically, the robot learns human behavioral response to the partners through observation, and understand the current interaction and then generate human-like response as learned during interacting with humans. In some situations, an ability of predicting the partner’s behaviors or looking into the partner’s internal state is crucial for the interaction. This paper describes a novel approach to designing a robot intelligence for human–robot interaction. The proposed concept is based on symbolization of perception and actions using hidden Markov models (HMMs). Symbolization enables robots to recognize human behaviors as well as to synthesize their own behaviors. The processes for recognition and synthesis are assembled in a hierarchical manner, and as a result the robot gains the ability to understand that its partner recognizes the robot’s actions and performs actions accordingly. The proposed framework is applied to an example of human–robot interactions, where a robot can predict that the partner will recognize the robot’s actions, decide which actions to perform, and walk through a group of several partners. The validity of the proposed framework is demonstrated through an experiment.

2. Related work

A lot of imitative learning frameworks have been developed for various robots. For instance, Haruno et al. have proposed an imitative learning framework named MOSAIC (MOdule Selection and Identification for Control).[Citation7] In this framework, a module consists of a pair of models: a forward dynamics model and an inverse dynamics model. Forward dynamics models predict motions and compute the error between perception and prediction. The current motion can be taken as the model with the smallest error. Inverse dynamics models are activated based on the error from the corresponding forward dynamics models and control the system. Thus, in a module, the forward and inverse dynamics models serve as a predictor and a controller, respectively. A single pair of models symbolizes a motion primitive. Neural networks have been used to represent sets of motion primitives. Specifically, Recurrent Neural Networks with Parametric Bias (RNNPBs) have been proposed.[Citation8, Citation9] An RNNPB is an extension of a recurrent neural network (RNN): a layer of bias parameters is added to a conventional RNN. The parameters in an RNNPB are optimized such that the network can predict the movement following the current one. The process also optimizes the bias parameters, and thus, the mapping between the bias parameters and motion primitives is self-organized. Estimating the bias parameters from perceived movement results in recognition, and setting the bias parameters results in switching from one motion primitive to another. Okada et al. have proposed another approach to representing motion primitives in a dynamical system, where a movement is represented by a polynomial of joint angles in vector space. The parameters of the polynomial are optimized such that the movement is attracted to the reference trajectory, and convergence toward the attractor results in the desired motion primitive. This approach has the advantage of achieving robust control. The movement is attracted toward the reference trajectory even if the movement is perturbed.[Citation10] These frameworks can encode motions into a set of parameters of a dynamical system, and encoded motions are reused for motion recognition or motion generation dependent on the current situation. However, researches discussed so far do not aim at establishing frameworks for robot–human or robot–robot interactions.

The stochastic approach has also been used extensively for learning motion primitives. HMMs are a versatile and powerful tool for representing spatiotemporal data, and have been adopted for learning human whole-body motion.[Citation11–Citation13] The parameters of an HMM are optimized such that the likelihood of generating the observed motion is maximized. An HMM that has learned a motion primitive is referred to as a motion symbol. An observed motion can be recognized as the HMM with the largest likelihood of generating that observed motion. Furthermore, motion data are also generated by an HMM to synthesize human-like motion. HMMs can represent high-dimensional data for human whole-body motion, and have been implemented on intelligent humanoid robots. This HMM-based approach has been extended to language processing by integrating an motion with a bigram-based natural language form. Such integration makes it possible for humanoid robots to interpret motions as sentences and to synthesize whole-body motion from sentences.[Citation14, Citation15] Moreover, this bidirectional process of recognition and synthesis of behaviors has been applied to models of human–robot behavioral interaction patterns hierarchically.[Citation16] The robot can recognize an action performed by a human partner, understand the current interaction, and synthesize its own action to maintain the interaction. However, the robot performs the action in response to the partner but cannot predict what action the partner will perform in response to the robot’s action. Premack et al. proposed the ‘theory of mind,’ which concerns the cognitive ability to attribute mental states (such as desires, intentions, knowledge, beliefs, and thoughts) to others in order to understand their mental states.[Citation17] Humans and some primates understand that others have their own mental states, and perform actions in response to those states. The mechanism of understanding others’ mental states and acting accordingly is necessary to enable robots to interact with human partners in a meaningful manner. More specifically, predicting what the partner is going to do is important for achieving suitable interactions. Our approach extends the stochastic framework to represent motions of two interacting partners. The extension makes it possible for a robot to recognize and predict the partner motion, and then to synthesize robot’s own motion accordingly.

We assume that the partners in an interaction act in response to our actions as well as in response to their own actions. Intelligent interaction requires a mechanism for understanding what the partner is thinking, and joint attention is one such mechanism.[Citation18] Various research on cognitive mechanisms for robots has been conducted based on joint attention.[Citation19, Citation20]. Such research is typified by a robot interacting with a caretaker and learning various actions in response to those performed by the caretaker, where the joint attention ability is explicitly given in advance. Nagai et al. pursued the development of emergent joint attention abilities based on a constructive approach using a robot.[Citation21] The robot turns its gaze on an object in the field of view of the human partner and perceives sensorimotor data. Correlation learning from many pairs of sensor and motor data results in the autonomous acquisition of joint attention by the robot. This framework is expected to find application in robots that perform actions in response to a human partner using autonomously acquired joint attention capabilities.

In research on interaction in multi-agent systems, strong emphasis has been placed on social functions. Pedica et al. proposed an approach to generating social behaviors in multi-agent systems based on human territoriality.[Citation22] This framework introduced degrees of spatial proximity (such as intimate, personal, social, and public), and motivation to action depends on the degree of proximity. Rodrigues et al. proposed a method for generating behaviors in groups of agents.[Citation23] The agents perceive other agents and obstacles, and search for possible movements toward a collision-free space. This method generates movements in groups that compete for free space, where the movements depend on geometric constraints only. In this framework, the agents do not assume that other agents’ movements are performed in response to their movements, and therefore, agents do not necessarily generate human-like movements.

The concept of the spatial proximity is widely used for controlling a robot to avoid collisions with other robots or obstacles. A novel approach based on the spatial proximity has been proposed to control the multiple mobile robots not to collide with each other based on the velocity obstacles. The velocity obstacle derives the collision-free area in the velocity space.[Citation24, Citation25] Tamura et al. have proposed a novel approach to tracks the positions of a pedestrian and estimate whether the pedestrian chooses a motion of avoiding the collision or not. This estimation allows the robot to avoid the collision with the pedestrian smoothly.[Citation26] These approach discussed so far can be applied to a robot moving through a group of humans, but the robot does not perform the human-like motion since the robot does not learn human walking trajectory during walking in the crowd. Shiomi et al. have developed the navigation system for a mobile robot that uses a social force model describing the pedestrian’s behavior. This navigation system was evaluated in the field test, and provided not only collision-free motions but also socially acceptable movements to the mobile robot.[Citation27] Ratsamee et al. have extended the social force model to deal with not only distance to the partner but also the partner’s body pose and face direction. The extended social force model enables the robot to accurately predict the partner’s motion and to perform an action of avoiding the collision. The body pose and face direction are manually chosen as key components for the prediction, and the social force model needs to be heuristically designed.[Citation28] Our proposed framework is based on learning the interactions between the two through observation, and therefore, the framework is able to generate human-like motions for the robot responsive to the partner’s behaviors. Moreover, this framework has no design parameters to be manually tuned, and represents various interaction patterns.

3. Representation of interactive behaviors

Interaction is assumed to be represented by a sequence of state vectors of two interacting partners. The state vector at time during the interaction is expressed by vector

, where vectors

and

are the states of the two interacting partners: the self and the other. The state vector sequence

is encoded into the parameters of an HMM,

. A compact notation

is used to represent an HMM.

is a set of initial node probabilities

,

is a set of node transition probabilities

, and

is a set of output distributions

. These parameters are optimized with the Baum–Welch algorithm such that the likelihood of generating the state sequence

by the HMM

is maximized.[Citation29] The HMM represents behavioral interaction in an abstract form, and each HMM is referred to as an ‘interaction primitive’, which is illustrated in Figure .

A set of interaction primitives can be acquired by observing many actual behavioral interactions. Given a sequence of state vectors

representing a behavioral interaction, the interaction can be recognized as the HMM that is most likely to generate the state vectors

. The HMM with the maximum likelihood can be derived from the set of interaction primitives as follows:

(1)

Here, the likelihood that the HMM

generates the state vectors

can be computed by the forward algorithm.

The HMM can also be used for a robot to synthesize its own behavior with respect to an interaction partner. The robot perceives its own states and observes the states

of the partner, where

is the length of the state window. State windows are concatenated into a sequence of state vectors,

. Given a sequence of state vectors, node

at time

in the HMM can be estimated by the Viterbi algorithm. Node

at time

can be sampled by the Monte Carlo method based on node

and the node transition probabilities

. Furthermore, states

and

can be generated from node

based on the output distributions

. State

represents the desired state that the robot moves to, and the robot predicts that the partner moves to state

.

4. Recursive recognition and generation for interaction

4.1. Recursive framework

In cognitive science, it is said that we humans try to understand what the other is thinking by utilizing a recursive process composed of several levels. At the simplest level (referred to as the 0th level), we decide our subsequent actions based on observation alone. At the first level, we predict what action the other will perform upon observing our action, and we decide our subsequent action based on our observation and prediction. At the second level, we predict what action the other will perform upon completing his or her first level of the recursive process, and we decide our subsequent action based on our observation and prediction. In a similar fashion, at the th level of the recursive process, we predict what action the other will perform upon completing his or her

th level of the recursive process, and we decide our own action based on observation and prediction. As the level is incremented, more levels of the behavior recognition and generation process are iterated recursively. This recursive process results in understanding the mind of the other.

Recursive processes are implemented in a hierarchical structure of the interaction primitives represented by HMMs. At the 0th level, a robot perceives its own states and identifies the current node

in the HMM which is most likely to generate the perceived state. The following nodes

are sampled based on the current node

and its node transition probabilities. The state sequence

is eventually generated from the sampled nodes

based on the output distributions. This process results in motion synthesis at the 0th level. At the first level, the robot simulates the execution of the other’s actions according to the 0th level and predicts his or her state sequence

. The sequence of states

of the other can be derived through observation and prediction. The robot perceives its own states

. Thus, the following sequence of state vectors

can be derived:

(2)

The Viterbi algorithm can find a sequence of nodes with the largest likelihood of generating the state vectors in the HMM. The node

at the next frame is estimated, and state

of the robot is generated from node

based on the output distribution

. This process results in motion synthesis at the first level. This computation is performed recursively in the same manner for deeper levels of the robot actions (Figure ).

4.2. Application to moving among several partners

We apply our proposed framework of recursive processes to Human–robot and robot–robot interaction, where a robot has to move through a group of several agents (humans or robots). In this application, we choose three different vectors (,

, and

) for the state vector

(Figure ). Vector

represents the velocity of the trunk in the trunk coordinate system at time

, vector

represents the joint angles of the whole body at time

, and vector

represents the relative position of the agent in the trunk coordinate system. The two vectors

and

define state

, and vector

defines state

. An interaction is expressed as a sequence of state vectors

. Sequences are encoded into their corresponding HMMs.

Figure 3. The state vector consists of three different vectors representing the velocity of the trunk, the joint angles of the whole body, and the relative position of the other.

During an interaction involving moving through a group of several agents, the robot pays attention to a single target agent and performs actions in response to that agent’s actions. Paying attention to a specific target makes it possible to apply our proposed framework to interaction among several agents, even if this framework deals with behavioral relations between two agents only. The target is identified by predicting the possibility of collision between the robot and other agents since collision avoidance is critical when moving among several other agents.

The robot predicts the path of each agent using HMMs. As mentioned above, the robot assumes that each agent initially performs actions based on the 0th level and predicts a sequence of velocity vectors of the

th agent. This sequence defines a path

of the agent. The relative positions of the agent can be estimated from the path, and the robot plans its own path

, which is generated by maintaining the current velocity. The robot’s path is expressed as a straight line. The robot can detect a collision region where these two paths cross by finding

and

that are closer than a given threshold. Finding the collision regions for all agents forms a set

of pairs of agent index

and collision time

. Since the closest collision region must be taken care of first, the agent with the closest collision region is identified as the target agent. In other words, the agent with the shortest collision time

is identified as the target. After identifying the target, the robot recursively recognizes the current interaction as an HMM, predicts the agent’s state, and performs actions in response to the agent’s actions in accordance with the recognized level.

5. Experiments

5.1. Interaction synthesis without recursive process

An experiment on the practical realization of behavioral interaction was conducted using our proposed framework, which iteratively recognizes interactions, predicts states of other agents, and synthesizes robot behaviors. We measured interaction patterns in a setup where two performers walked past each other. Figure shows four examples of captured interactions. The Cartesian coordinates of markers attached to the performers were acquired with an optical motion capture system consisting of 10 cameras at a sampling interval of 10 ms. Inverse kinematics was used to convert the measured position data into a 26-dimensional state vector , which consists of a three-dimensional velocity vector

of the first performer in his own trunk coordinate system, a 20-dimensional vector

of the joint angles of the first performer, and a three-dimensional position vector

of the other performer in the first performer’s coordinate system. We recorded 200 different interaction patterns by varying the experimental conditions, such as the performers’ velocities, the distance between the performers, and their relative orientation. The length of each interaction period was set to about 4 s. Each interaction data-set represented by a sequence of state vectors was encoded to a 30-state left-to-right HMM, and thus, 200 interaction primitives were acquired from the captured data. In this paper, the HMM is used not only for the recognizer for interactions, but also for the synthesizer of the behaviors. It is difficult for HMM trained by multiple interaction examples to synthesize the specific behavior. Therefore, each HMM learns its corresponding interaction sequence.

Figure 5. In case 1, one robot and two other agents are moving in the same space. The robot identifies the target agent, observes the target’s behavior, and synthesizes its own motion sequence using acquired interaction primitives represented by HMMs. The agents are programmed to move straight. The robot moves among two other agents without colliding with them. In case 2, two robots move in the same space and respond to each other’s actions. One robot swerves to the right and the other swerves to the left. These behaviors result in the collision of the two robots.

Figure 6. Five trajectories are displayed in case 1. The trajectories indicated by blue, red, and green circles represent the paths of the robot (A) and the two agents (B and C), respectively. The trajectories indicated by red and green solid circles represent the predicted paths of agents (B) and (C), respectively. Four trajectories are displayed in case 2. The trajectories indicated by blue and red circles represent the paths of robots (A) and (B), respectively, and the trajectories indicated by blue and red solid circles represent the predicted paths of robots (A) and (B), respectively.

We tested the interaction synthesis described in Section 2 using 200 HMMs. We set the length of the observation window to 100 frames, which was equivalent to an observation period of 1.0 s. The distance threshold for collision detection was set to 0.5 m. The capture region was inside a circle with a radius of about 2.5 m. We assumed that the depth sensor is mounted on the head of the humanoid robot and that the robot can observe other agents within a radius of 2.5 m in this test experiment. Note that the occlusion area was not taken into account. All the agents within a radius of 2.5 m could be measured. The robot did not predict the actions of other agents located further than 2.5 m for the purpose of interaction synthesis. The robot’s performance in terms of interaction synthesis was tested under the following two experimental conditions.

| Case 1 | We implemented the interaction primitives represented by the HMMs on robot (A). Agents (B) and (C) were programmed to move straight, where agent (B) crossed the trajectory of robot (A) in front of it while approaching from its left side. Agent (C) approached robot (A) from a distance from the front. | ||||

| Case 2 | We implemented the interaction primitives on both robot (A) and robot (B). | ||||

Under the conditions of case 1, robot (A) observes agent (B) crossing in front of itself and predicts the agent’s behavior. The robot changes its moving direction to cross the agent’s trajectory behind the agent’s back. This behavior results in the robot avoiding collision with agent (B). In the following phase, robot (A) finds another target, namely, agent (C). Robot (A) swerves to the right since it observes that agent (C) is moving straight toward itself, and predicts that not changing its own movement direction would lead to collision with agent (C). The left panel in Figure shows that robot (A) can predict the paths of agents (B) and (C) accurately. This accurate prediction enables robot (A) to select an appropriate behavior for collision avoidance.

Under the conditions of case 2, robot (A) observes that it is being approached by a robot and generates its movement behavior according to an interaction primitive representing the current interaction. Robot (A) predicts that robot (A) would swerve to the left and that robot (B) would move straight since the prediction is based on an interaction primitive of a memorized interaction example where one robot swerves to the left in response to the other robot moving straight. Therefore, robot (A) generates a behavior of swerving to the left. Furthermore, robot (B) predicts that robot (B) would swerve to the right and that robot (A) would move straight since the prediction is based on an interaction primitive of a memorized interaction example where one robot swerves to the right in response to the other moving straight. Thus, robot (B) generates a behavior of swerving to the right. Neither robot (A) nor robot (B) takes into account the interaction primitive used by the other robot in predicting each other’s behavior. This process leads to a wrong prediction of the partner’s behavior and results in the two robots colliding with each other. This experimental result implies that the robot needs to understand that other robots decide their actions according to their own interaction primitives.

Table 1. Results of movement pattern generation for each level: The number in each cell is the HMM number that generates the robot’s movement pattern. The number in parentheses is the HMM number that is searched to predict the partner’s motion. ‘s’ indicates straight motion.

5.2. Simulation of interactions based on recursive processes

We tested the concept of interaction synthesis based on the recursive framework of recognition and generation processes. We used the same interaction primitives represented by HMMs that were presented in Section 4.1. Two robots were programmed to move straight and to take actions based on recursive processes after identifying the target that is most likely to come nearby. The levels in the recursive processes are manually specified and range from zero to four. Table shows sets of four interaction primitives used in the experiment. Element corresponds to interaction where robot (A) behaves based on the

th level of the recursive process, and robot (B) behaves based on the

th level of the recursive process. The first index indicates the HMM used by the robot to generate its own behavior. The index in parentheses indicates the predicted interaction primitive. In other words, the robot predicts that the other robot is using its corresponding HMM, and ‘s’ indicates a motion of moving straight. ‘A : (15) 66’ in element

indicates that robot (A) predicts that the action of robot (B) would be generated according to the 15th HMM, and that robot (A) would generate its behavior using the 66th HMM. The 15th HMM represents a motion of moving straight, and the 66th HMM represents the motion of swerving to the left and avoiding the other robot. ‘B : (66) s’ in element

implies that robot (B) predicts that the action of robot (A) is based on the 66th HMM and that robot (B) would continue to move straight. At the 0th level, the robot does not predict the interaction primitive used by the interaction partner. Therefore, an index in parentheses is not shown for the corresponding prediction.

Four patterns of interaction primitives are shown in several interactions based on different levels. The first pattern (‘A: (15) 66’ and ‘B: 91 (67)’) can be seen in the interactions corresponding to elements ,

, and

. The second pattern (‘A: (66) s’ and ‘B: 91 (67)’) can also be found in the interactions corresponding to elements

,

, and

. Furthermore, the third pattern (‘A: (66) s’ and ‘B: 66 (s)’) can be found in the interactions corresponding to elements

,

, and

. These interaction patterns depend on whether the level number is odd or even. Given the first level of the recursive process for robot (A), robot (A) predicts that robot (B) would move straight toward robot (A), and swerves to avoid collision with robot (B). Given the second level of the recursive process for robot (A), robot (A) assumes that robot (B) behaves according to the first level of the recursive model, which predicts that the robot (A) moves straight toward robot (B). Robot (A) predicts that robot (B) swerves to avoid collision with robot (A) approaching robot (B). Finally, robot (A) moves straight, which does not result in collision with robot (B). Given the third level of the recursive process for robot (A), robot (A) assumes that robot (B) behaves based on the second level of the recursive process. Following the same process as described above, robot (A) predicts that robot (B) would move straight, and as a result robot (A) swerves. In this manner, whether the level is odd or even is critical for the success of the interaction pattern. Given the first level for robots (A) and (B), element

demonstrates that robot (A) swerves to the left and robot (B) swerves to the right. This interaction results in collision between the two robots. This collision is caused by robot (A) wrongly estimating the recursive level of robot (B), and robot (B) also wrongly estimating the recursive level of robot (A). Accordingly, the correct identification of the recursive level of the other robot (in other words, whether the level is 0 or 1) is important for the practical implementation of interaction involving robots moving among several agents.

Figure 7. Four pairs of recursive levels in two robots were tested in the interaction experiment. The upper left panel shows the paths of two robots, both of which use the 0th level of the recursive processes. These two robots move straight and collide with each other. The upper right panel shows the paths of robot (A) with the first level of the recursive process and robot (B) with the 0th level. Robot (A) swerves to the left and avoids collision with robot (B). The lower left panel shows the paths of the robots using the first level of the recursive process, where robot (A) swerves to the left and robot (B) swerves to the right. These actions result in the collision between the two robots. The lower right panel shows the paths of robot (A) using the second level of the recursive process and that for robot (B) using the first level of the recursive process, where robot (A) moves straight and robot (B) swerves to the right, thus successfully avoiding collision.

We used the following four sets of levels in the recursive process for the two robots:

| (0–0) | The recursive process levels of robot (A) and robot (B) are set to 0 and 0, respectively. | ||||

| (1–0) | The recursive process levels of robot (A) and robot (B) are set to 1 and 0, respectively. | ||||

| (1–1) | The recursive process levels of robot (A) and robot (B) are set to 1 and 1, respectively. | ||||

| (2–1) | The recursive process levels of robot (A) and robot (B) are set to 2 and 1, respectively. | ||||

5.3. Estimation of the recursive process level

Accurate estimation of the target agent’s recursive process level is required for robots to be able to move through a group of several agents. In this interaction, it is sufficient for robots to determine whether the recursive process level of a target agent is 0 or 1. The recursive process level is estimated by the following steps.

| Step 1 | The robot considers the velocity vectors | ||||

| Step 2 | The robot assumes that the target agent is moving according to the 0th level of the recursive process and predicts the path | ||||

| Step 3 | The robot observes the actual path | ||||

| Step 4 | The robot measures the error | ||||

Figure 8. Robot (A) estimates the recursive process level of the robot (B) as 0 and swerves to the left. Robot (B) estimates the recursive process level of robot (A) as 1 and moves straight. The resultant motions result in collision avoidance.

We tested the proposed estimation under the conditions of case 2, where the two robots collide with each other (Figure ). As shown in Figure 8, both robots were programmed to estimate the recursive process levels of the target agents. Robot (A) estimated the recursive process level of robot (B) as 0 by observing robot (B) moving straight, and then swerved to the left. In next phase, robot (B) observed robot (A) performing motion based on a process other than the 0th level, and estimated the recursive process level of robot (A) as 1. Robot (B) chose the motion of moving straight. These processes made it possible for robots to move past each other without collision.

We also tested the proposed framework in the following two situations.

| Case 1 | Six robots move among each other. Robot (A) and robot (B) estimate the recursive process levels of their target agents. Robots (C), (D), and (E) use the first level, and robot (F) uses the 0th level of the recursive process. This experiment aims to investigate the behaviors of robots (A) and (B). | ||||

| Case 2 | Six robots move among each other. All robots estimate the recursive process levels of their target agents and adjust their own recursive process levels accordingly. | ||||

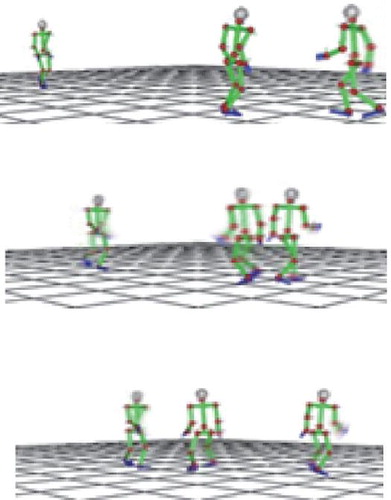

Figure 9. Snapshots of the experimental setting where six robots move among each other. Robots (A) and (B) estimate the respective recursive process level of the target agents, and adjust their own recursive process level accordingly. The other robots use fixed recursive process levels.

Figure shows the experimental results for case 1. Robot (B) identified robot (F) as the target, predicted robot (F)’s motion of moving straight, and then swerved to the right in order to avoid collision. In the same phase, robot (A) identified robot (C) as the target, predicted robot (C)’s motion of moving straight, and performed a motion of moving straight by confirming that this motion would not lead to collision. At the next phase, robot (D) swerved to the left by observing robot (A), and robot (A) stopped until robot (D) completed the motion of swerving. In the following phase, robot (E) swerved to the right to avoid collision with robot (B). Robot (B) estimated the recursive process level of robot (E) as 1 and generated a behavior based on its second level of the recursive process. The experiment demonstrated that robots (A) and (B) were capable of estimating the recursive process levels of their respective target agents, to predict the target paths, and to generate collision-free paths.

Figure 10. Snapshots of experimental setting where six robots estimate the recursive process levels of their respective targets, predict the targets’ corresponding behaviors, and perform motions accordingly.

Figure shows the experimental results for case 2. Robot (B) predicted that robot (F) would move straight, and as a result swerved to the right. Robot (F) estimated the recursive process level of robot (B) as 1 and continued to move straight. Robot (C) estimated the recursive process level of robot (A) and swerved to the left to avoid collision. At the next phase, robot (E) swerved to the right based on the first level of the recursive process. Robot (B) should have estimated the recursive process level of robot (E) as 1, but failed to obtain a correct estimate. Instead, robot (B) estimated the recursive process level of robot (E) as 0 and stopped moving for a moment, even though robot (E) did not move in front of robot (B). Despite the wrong estimation, all robots successfully moved among each other. The rate of the collision avoidance became 1.0 in these experiments, but the robot did not always estimate the recursive process level of its target correctly. The rate of the correct estimation became 0.82. These experiments demonstrate the validity of the proposed framework for interaction involving robots moving through a group of several moving agents.

In the experiments discussed so far, the agents generate their motions according to the interactive primitives acquired from observing human behaviors. All the generations of the motions are processed in the same framework, and all the agents perform human-like motions. This is not realistic since another walking model is implemented into some agents. Even if the motions of the agents are generated from the walking strategies different from our proposed framework, our framework can be applicable only if the generated motions of the partner agents are similar to human motions. If the generated motions of the partner agents are definitely different from the human motions or have not been learned as the interaction primitive, our framework only has to learn them as new interactions incrementally before it becomes adaptive to this situation. The incremental learning obtains behaviors of the two interacting agents, adds one HMM that the behaviors are encoded into. Moreover, the computational cost is critical to integrate our framework into the practical humanoid robot. Our framework required 1.1 s to estimate the recursive process level and generate the robot’s behavior using a computer with 2.0 GHz CPU. The selection of one HMM as current interaction can be processed in parallel, and then the computation time should be reduced.

6. Conclusion

The conclusions obtained in this research are as follows.

| (1) | We can represent behavioral interactions as sequences of state vectors of robots interacting with humans and/or other robots. The state sequences are encoded into sets of parameters of HMMs, which can be referred to as interaction primitives. The interaction primitives can be used by a robot to recognize the current interaction with its interaction partner and to synthesize its own behavior in response to the partner. The recognizer finds the HMM with the largest likelihood of generating the observed state vectors. The synthesizer identifies the node which is most likely to generate the current observation and synthesizes the robot’s movement based on the output of that node. | ||||

| (2) | In interacting with other agents, it is important that the robot be capable of understanding what the others are about to do. A novel approach to recursive assembly of interaction primitives has been proposed. This approach aims to enable robots to predict the behavior of their interaction partners and synthesize their own actions accordingly. This approach was applied to an interactive experiment with a robot moving through a group of several interaction partners. The state vector consisted of the velocity and the joint angles of the first agent and the relative position of the second agent. We recorded 200 interaction patterns where two persons moved in a suitable manner in order to avoid colliding with one another. These interaction patterns resulted in 200 motion primitives, which were tested on robots that moved among several agents. The experimental results showed that pairs of recursive process levels in two interacting robots can be grouped into four patterns, where the actions of both robots are based on the 0th level of the recursive process, the actions of one robot are based on the first level of the recursive process, and those of the other robot are based on the 0th level of the recursive process, the actions of both robots are based on the first level recursive process, or the actions of one robot are based on the second level of the recursive process and those of the other robot are based on the first level of the recursive process. | ||||

| (3) | The estimation of the recursive process levels used by the partners is crucial for realizing interaction involving robots moving among several agents. This paper assumes that it is sufficient to determine whether the recursive process is at level 0 or 1 in this interaction, and proposes a simple method for computing the error between prediction and observation to determine the level. This method was tested with two robots not using the proposed estimation method moving past one another, with two robots using the proposed estimation method and four robots using fixed recursive process levels moving among each other, and with six robots using the proposed estimation method moving among each other. These experiments demonstrate that the recursive process using the proposed estimation method can be useful for devising robots capable of moving through a group of several interaction partners. | ||||

Notes on contributors

Wataru Takanois an assistant professor at Department of Mechano-Informatics, School of Information Science and Technology, University of Tokyo. He was born in Kyoto, Japan, in 1976. He received hi BS and MS degrees from Kyoto University, Japan, in precision engineering in 1999 and 2001, PhD degree from Mechano-Informatics, the University of Tokyo, Japan, in 2006. He was a project assistant professor at the University of Tokyo from 2006 to 2007, and a researcher on project of Information Environment and Humans, Presto, Japan Science and Technology Agency from 2010. His field of research includes kinematics, dynamics, artificial intelligence of humanoid robots, and intelligent vehicles. He is a member of IEEE, Robotics Society of Japan, and Information Processing Society of Japan. He has been the chair of Technical Committee of Robot Learning, IEEE RAS.

Tatsuhiro Jodanreceived his BS degree and MS degrees from Mechano-Informatics, the University of Tokyo, Japan, in 2009 and 2011, respectively. His research interests are natural language processing, robot intelligence, and human–robot interaction.

Yoshihiko Nakamurais a professor at Department of Mechano-Informatics, School of Information Science and Technology, University of Tokyo. He was born in Osaka, Japan, in 1954. He received his BS, MS, and PhD degrees from Kyoto University, Japan, in precision engineering in 1977, 1978, and 1985, respectively. He was an assistant professor at the Automation Research Laboratory, Kyoto University, from 1982 to 1987. He joined the Department of Mechanical and Environmental Engineering, University of California, Santa Barbara, in 1987 as an assistant professor, and became an associate professor in 1990. He was also a co-director of the Center for Robotic Systems and Manufacturing at UCSB. He moved to University of Tokyo as an associate professor of Department of Mechano-Informatics, University of Tokyo, Japan, in 1991. His fields of research include the kinematics, dynamics, control and intelligence of robots – particularly, robots with non-holonomic constraints, computational brain information processing, humanoid robots, human-figure kinetics, and surgical robots. He is a member of IEEE, ASME, SICE, Robotics Society of Japan, the Institute of Systems, Control, and Information Engineers, and the Japan Society of Computer Aided Surgery. He was honored with a fellowship from the Japan Society of Mechanical Engineers. Since 2005, he has been the president of Japan IFToMM Congress. He is a foreign member of the Academy of Engineering in Serbia and Montenegro.

References

- Donald M. Origin of the modern mind. Cambridge: Harvard University Press; 1991.

- Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying the understanding and imitation of action. Nat. Rev. 2001;661–670.

- Gallese V, Goldman A. Mirror neuron and the simulation theory of mind-reading. Trends Cognit. Sci. 1998;2:493–501.

- Kuniyoshi Y, Inaba M, Inoue H. Learning by watching: extracting reusable task knowledge from visual observation of human performance. IEEE Trans. Robot. Autom. 1994;10:799–822.

- Morimoto J, Doya K. Hierarchical reinforcement learning for motion learning: learning “stand-up” trajectories. Adv. Robot. 1999;13:267–268.

- Mataric MJ. Getting humaniods to move and imitate. IEEE Intell. Syst. 2000;15:18–24.

- Haruno M, Wolpert D, Kawato M. Mosaic model for sensorimotor learning and control. Neural Comput. 2001;13:2201–2220.

- Tani J, Ito M. Self-organization of behavioral primitives as multiple attractor dynamics: a robot experiment. IEEE Trans. Syst. Man Cybern. Syst. 2003;33:481–488.

- Ogata T, Matsumoto S, Tani J, Komatani K, Okuno HG. Human-robot cooperation using quasi-symbols generated by RNNPB model. In: Proceedings of the IEEE International Conference on Robotics and Automation; 2007; Roma, Italy. p. 2156–2161.

- Okada M, Nakamura D, Nakamura Y. On-line and hierarchical design methods of dynamics based information processing system. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’03); 2003; Las Vegas, USA. p. 954–959.

- Inamura T, Toshima I, Tanie H, Nakamura Y. Embodied symbol emergence based on mimesis theory. Int. J. Robot. Res. 2004;23:363–377.

- Billard A, Siegwart R. Robot learning from demonstration. Robot. Autonom. Syst. 2004;47:65–67.

- Kulic D, Takano W, Nakamura Y. Representability of human motions by factorial hidden markov models. In: Proceedings of the IEEE/RSJ 2007 International Conference on Intelligent Robots and Systems; 2007; San Diego, USA. p. 2388–2393.

- Takano W, Nakamura Y. Integrating whole body motion primitives and natural language for humanoid robots. In: Proceedings of the IEEE-RAS International Conference on Humanoid Robots; 2008; Daejon, Korea. p. 708–713.

- Takano W, Nakamura Y. Statistically integrated semiotics that enables mutual inference between linguistic and behavioral symbols for humanoid robots. In: Proceedings of the IEEE International Conference on Robotics and Automation; 2009; Kobe, Japan. p. 646–652.

- Takano W, Yamane K, SugiharaT, Yamamoto K, Nakamura Y. Primitive communication based on motion recognition and generation with hierarchical mimesis model. In: Proceedings of the IEEE International Conference on Robotics and Automation; 2006; Orlando, USA. p. 3602–3609.

- Premack D, Woodruff G. Does the chimpanzee have a theory of mind? Behav. Brain Sci. 1978;1:515–526.

- Butterworth G, Jarrett N. What minds have in common is space: spatial mechanisms serving joint visual attention in infancy. Br. J. Dev. Psychol. 1991;9:55–72.

- Breazeal C, Scassellati B. Infant-like social interactions between a robot and a human caretaker. Adapt. Behav. 2000;8:49–74.

- Kozima H, Yano H. A robot that learns to communicate with human categivers. In: Proceeding of the First International Workshop on Epigenetic Robotics; 2001; Lund, Sweden.

- Nagai S, Hosoda K, Morita A, Asada M. Emergence of joint attention through bootstrap learning based on the mechanisms of visual attention and learning with self-evaluation. Jpn. Soc. Artif. Intell. 2004;19:10–19.

- Pedica C, Vilhjalmsson HH. Spontaneous avatar behavior for human territoriality. Appl. Artif. Intell. 2010;24:575–593.

- Rodrigues RA, Lima Bicho A, Paravisi M, Jung CR, Magalhães LP, Musse SR. Tree paths: a new model for steering behaviors. In: Proceedings of the 9th International Conference on Intelligent Virtual Agents; 2009; Amsterdam, Netherlands. p. 358–371.

- Snape J, Berg JVD, Guy SJ, Manocha D. The hybrid reciprocal velocity obstacle. IEEE Trans. Robot. 2011;27:696–706.

- Berg JVD, Guy SJ, Lin M, Manocha D. Reciprocal n-body collision avoidance. In: Proceedings of the 14th International Symposium on Robotics Research; 2011; Flagstaff, USA. p. 3–19.

- Tamura Y, Fukuzawa T, Asama H. Smooth collision avoidance in human-robot coexisting environment. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems; 2010; Taipei, Taiwan. p. 3887–3892.

- Shiomi M, Zanlungo F, Hayashi K, Kanda T. Towards a socially acceptable collision avoidance for a mobile robot navigating among pedestrians using a pedestrian model. Int. J. Soc. Robot. 2014;6:443–455.

- Ratsamee P, Mae Y, Ohara K, Takubo T, Arai T. Human-robot collision avoidance using a modified social force model with body pose and face orientation. Int. J. HR. 2013; 10: 1350008-1–1350008-24.

- Rabiner L, Juang BH. Fundamentals of speech recognition. Englewood Cliffs, NJ: PTR Prentice Hall; 1993.