Abstract

How can we best facilitate the learning of students who are most in need of support when they enter a challenging quantitative methods module at the start of their bachelor programme? In this empirical study of blended learning and the role of assessment for and as learning, we investigate the learning processes of students with different learning profiles. Specifically, we contrast the learning episodes of two profiles derived from cluster analysis: one profile more directed towards deep learning and self-regulation, the other more directed towards stepwise learning and external regulation. In a programme based on problem-based learning, where students are expected to be primarily self-directed, the first profile is regarded as adaptive and the second as less adaptive. Making use of a broad spectrum of learning and learner data, collected in the framework of a dispositional learning analytics application, we compare these profiles on learning dispositions such as learning emotions, motivation and engagement, on learning performance, and on trace variables collected from the digital learning environments. Outcomes suggest that the blended design of the module, with digital environments offering many opportunities for assessment of, for and as learning together with actionable learning feedback, is used more intensively by students with the less adaptive profile.

Introduction

In the influential JISC review on learning analytics (Sclater, Mullan, and Peasgood 2016), learning analytics is envisioned to contribute to four different areas: quality assurance and quality improvement; boosting retention rates; assessing and acting upon differential outcomes among the student population, by closely monitoring the engagement and progress of sub-groups of students such as black and minority ethnic students, students from low participation areas or underperforming groups; and enabling adaptive learning (p. 5).

An example of how underperforming students can be supported by learning analytics is provided by Pardo (2018). The crucial concept in supporting underperforming students is feedback. Learning analytics is synonymous with ‘data-rich learning experiences’ (Pardo 2018): since part or all of the learning takes place in technology-enhanced learning environments, the performance data available in any module are enriched with learning activity data, collected by systematically analysing trace variables from e-learning systems: the digital footprints of the learning process. Examples of such trace data are the number of problems the student has tried to solve in the digital learning environment, how successful these solutions were, how many and what type of scaffolds (hints, worked-out examples) the student has used in problem-solving, and the time on task. Based on these traces of learning activity, or the lack of it, learning analytics systems generate performance predictions to identify students in need of support and provide both instructors and learners with relevant feedback.

Learning analytics has also changed the way feedback is provided. It gave rise to dashboards that inform students and instructors about the progress made in learning, the study tactics used and how successfully these tactics have been applied. Whereas traditional learning feedback suffers from large class sizes, learning analytics-based feedback benefits from them, since large classes allow different profiles of learning patterns to be identified. Because learning analytics feedback is based on learning activity rather than learning performance, it is both more immediate and more actionable than feedback based on test performance: it points to concrete learning activities that call for more attention.

This contribution addresses how learning analytics can support underperforming students when cognitive, rather than ethnic, factors cause underachievement: students lacking appropriate prior schooling, or lacking the learning dispositions required for successful learning. The internationalisation of higher education, which brings together students from very different secondary educational systems with very different instructional methods, contributes to students having unequal chances in their first university modules. The challenging facet of this type of diversity is that it is strongly intertwined with the learning objectives of individual modules. Students whose mastery of crucial prior knowledge is incomplete because of different learning journeys not only need to make larger learning gains than fully prepared students; these gains also lie in areas that teaching materials typically omit because the prior knowledge is assumed. In such a context, underperforming students need adaptive learning materials that combine a wide range of practice opportunities with relevant learning feedback. In our contribution, we will argue that digital learning platforms based on the mastery learning concept, which integrate assessment as, for and of learning and provide both learners and teachers with detailed learning feedback, can play a key role in addressing the issue of unequal chances.

Our reasoning is empirically supported by an analysis of the learning processes of a large and diverse group of international students taking their first university module at a Dutch university, many of whom face major challenges in the transition to university. In the empirical part of this study, we make use of data generated by an application of dispositional learning analytics (Williams 2017). Dispositional learning analytics adds another source of information beyond performance data and traces of learning activity: students’ learning dispositions, collected through surveys. It aims to make feedback even more actionable, by connecting it with potential educational interventions, and to reveal the mechanisms through which learning analytics can support students of different profiles, including underperforming students.

Dispositional learning analytics

Dispositional learning analytics proposes a learning analytics infrastructure that combines learning data, generated in learning activities through the traces of technology-enhanced learning systems, with learner data: student dispositions, values and attitudes measured through self-report surveys (Buckingham Shum and Deakin Crick 2012). The unique feature of dispositional learning analytics is this combination of learning data with learner data: traces of learning activities, as in all learning analytics applications, together with self-report survey data, as in the tradition of educational research. In Buckingham Shum and Deakin Crick (2012) and Buckingham Shum and Ferguson (2012), the learner data come from a dedicated survey instrument specifically developed to identify learning power: the mix of dispositions, experiences, social relations, values and attitudes that influence engagement with learning. Sharing a similar systems approach, this study aims to operationalise dispositions with the help of instruments developed in contemporary educational research, so as to make the connection with educational theory as strong as possible. In doing so, we build upon previous research by the author on the impact of learning dispositions on students’ educational choices within blended or hybrid learning environments (Tempelaar, Rienties, and Giesbers 2015; Tempelaar et al. 2018; Tempelaar, Rienties, and Nguyen 2017, 2018).

Assessment as, for and of learning

A key aspect of actionable feedback is timeliness: feedback based on module performance might be highly predictive, but if it becomes available only late in the module, it does not allow any action to be taken in time (Tempelaar et al. 2015, 2018). The wish for timely feedback does not exclude the use of assessment data; previous research has shown early assessment data to be most predictive of final module performance (Tempelaar et al. 2015). The timeliest type of assessment data is learning activity data, when such learning is assessment-steered. This is the case with the many digital learning platforms that are based on the principle of mastery learning. These e-tutorials start a learning activity by posing a problem and inviting the student to solve it. If the student is able to solve the problem, her or his mastery level is adapted and a next problem is offered. If the student cannot solve it independently, scaffolds are offered, such as worked-out examples or hints for individual solution steps. Learning activity data derived from this type of learning, available from the very start of the module, thus include mastery data of the ‘assessment as learning’ type (Crisp 2012; Reimann and Sadler 2017; Rodríguez-Gómez, Quesada-Serra, and Soledad Ibarra-Sáiz 2016).
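The problem-and-scaffold cycle described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the logic of Sowiso or MyStatLab: the `Problem` and `LearnerState` classes, the fixed mastery increment `step`, and the rule of offering hints before the worked-out example are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Problem:
    """Hypothetical practice problem with its scaffolds."""
    prompt: str
    answer: str
    hints: list = field(default_factory=list)  # hints for individual solution steps
    worked_example: str = ""                    # full worked-out solution


@dataclass
class LearnerState:
    """Hypothetical per-student state; these counts are the kind of trace data logged."""
    mastery: float = 0.0
    attempts: int = 0
    solutions_viewed: int = 0


def practice_step(state: LearnerState, problem: Problem, response: str,
                  failures_so_far: int, step: float = 0.1) -> Optional[str]:
    """Assess one response (assessment as learning) and return the scaffold to show.

    Returns None when the problem is solved, signalling that a next problem can be offered.
    """
    state.attempts += 1
    if response.strip() == problem.answer:
        # Solved independently: raise the mastery level (capped at 1.0).
        state.mastery = min(1.0, state.mastery + step)
        return None
    if failures_so_far < len(problem.hints):
        # Not solved: first offer a hint for the next solution step.
        return problem.hints[failures_so_far]
    # Hints exhausted: offer the worked-out example and record that it was viewed.
    state.solutions_viewed += 1
    return problem.worked_example
```

Each call both assesses the response and updates the trace counts, which is why platforms of this kind can produce assessment-as-learning data from the first week of a module.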

In the context of our study, two such e-tutorial learning platforms based on mastery learning provide ‘assessment as learning’ data as well as ‘assessment for learning’ data, the latter from quizzes taken every 2 weeks of the module. When the aim is to signal early which students are at risk of failing the module, ‘assessment for learning’ data is a better predictor of performance in the final examination than ‘assessment as learning’ data. In other words, the advantage of ‘assessment for learning’ data is that it is more strongly related to ‘assessment of learning’ data than ‘assessment as learning’ data is, but its disadvantage is a longer time lag, which makes it less useful for timely interventions. The combination of all three types of assessment contributes best to actionable learning feedback.
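One way to make this trade-off concrete is to correlate each earlier indicator with the final examination score and set that against the week in which the indicator becomes available. The sketch below computes Pearson correlations in plain Python; the indicator names, availability weeks and function names are hypothetical, and correlation is only one possible measure of predictive strength.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of scores."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def compare_indicators(indicators, exam_scores):
    """indicators: {name: (week_available, scores)}.

    Returns (name, week_available, r) tuples ordered by availability, so the
    trade-off between timeliness and predictive strength can be read off directly.
    """
    rows = [(name, week, pearson_r(scores, exam_scores))
            for name, (week, scores) in indicators.items()]
    return sorted(rows, key=lambda row: row[1])
```

Under the pattern described above, an e-tutorial mastery indicator would appear earlier in the list but with a weaker correlation than a quiz score that only becomes available later.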

Which students are at risk?

The context of our study is a module in a blended or hybrid format, offered to students in an international programme. The face-to-face component of the blend is problem-based learning (Hmelo-Silver 2004). In problem-based learning, students are themselves largely responsible for shaping their own learning processes. Students transferring from high school systems based on similar student-centred principles may experience this as a smooth transition, but the majority of international students face huge challenges. Many of them were educated within teacher-centred pedagogies in both primary and secondary education, and in addition to moving to a new country and switching to a new language, they need to adapt to an educational system in which students and instructors have very different roles than they are accustomed to. For that reason, we operationalise underperforming groups or students at risk differently from the JISC review (Sclater et al. 2016): not by black and minority ethnic status or by coming from low participation areas, but by whether or not students’ preferred learning approaches are in line with problem-based learning principles. For the same reason, a blended design was chosen as the instructional format: to supplement the learning taking place in the problem-based learning context with learning constellations that build on student dispositions other than those required in problem-based learning.

Learning dispositions in this study

The learning dispositions applied in this study are based on the following consideration: what learning skills do students need to be successful learners in a programme applying problem-based learning? The skill of being a self-regulated learner is generally regarded as a key disposition for problem-based learning (Loyens et al. 2013). Therefore, we select students’ approaches to learning (Entwistle 1997) or learning patterns (Vermunt and Donche 2017) as the main learning disposition relevant to our context. The instrument related to that choice, the Inventory of Learning Styles (ILS; Vermunt 1996), not only includes metacognitive regulation of learning, such as self-regulation or external regulation, but extends to the cognitive processing strategies of deep versus surface learning, well known from the approaches to learning literature (Entwistle 1997). These cognitive dispositions are complemented with dispositions of affective and behavioural types: epistemic learning emotions (Pekrun and Linnenbrink-Garcia 2012), and motivation and engagement (Martin 2007). Epistemic emotions are learning emotions related to cognitive aspects of the task; prototypical epistemic emotions are curiosity and confusion. The ‘Motivation and Engagement Wheel’ framework (Martin 2007) includes both behaviours and thoughts or cognitions that play a role in learning, each subdivided into adaptive and maladaptive (or obstructive) forms. These two dispositions were chosen not only to cover the full a-b-c spectrum of affective, behavioural and cognitive dispositions, but also because they are of a stable, trait-like nature, which allows us to find out to what extent prior educational experiences determine success or failure at university.

Research questions

The notion that students enter the module with very different sets of dispositions suggests a number of research questions. Some of these sets of dispositions are hypothesised as being important assets for learning in a student-centred programme; others are rather at odds with it.

Is it possible to create profiles of students based on these approaches to learning-related dispositions, even before the module starts, in a manner that appears relevant for learning processes that unfold later on?

What do these profiles tell us about the other dispositions—learning emotions, motivation and engagement—and will we observe differences in learning choices students make that are associated with these profiles?

Are we able to ‘neutralise’ the large differences in dispositions of our students by providing all students a fair chance to pass the module?

In investigating these questions, it is impossible to separate the role of learning analytics from the role of blended learning, which encompasses two learning modes based on very different instructional principles. The very first step of the analysis, the profiling of students, is certainly of a learning analytics nature, but in all subsequent steps it is always the combination of learning analytics-based feedback to students and instructors, and the students’ use of the two learning modes in response to that feedback, that determines the outcomes. Only half of these learning decisions are observed: we can measure learning activity in the technology-enhanced mode, but activity in the face-to-face mode remains unobserved. The same issue of incomplete measurement applies to the learning feedback: we know what information is provided in the dashboards, but we cannot observe the private conversations tutors have with their students. Therefore, our empirical outcomes describe the combined effect of learning analytics and technology-enhanced learning, rather than that of learning analytics alone.

Research methods

The context of the empirical study

Our study takes place in a large-scale introductory mathematics and statistics module, a service or secondary topic for students whose prime focus is business studies or economics. The module applies an instructional system best described as ‘blended’ or ‘hybrid’: the combination of face-to-face instruction with technology-enhanced learning. The main component of this blend is face-to-face: problem-based learning in small groups (14 students), coached by a content expert tutor (Hmelo-Silver 2004). Participation in these tutorial groups is mandatory. The online component of the blend, the use of the two e-tutorials Sowiso and MyStatLab, is optional. This choice is based on the philosophy of student-centred education, which places the responsibility for making educational choices primarily on the student. However, although optional, the use of the e-tutorials and achieving good scores in their practice modes is encouraged by awarding bonus points for good performance in the quizzes. Quizzes are taken every 2 weeks and consist of items drawn from the same item pools as those used in the practice mode. We chose this particular constellation because it encourages students with limited prior knowledge to make intensive use of the e-tutorials. The bonus is capped at 20% of the maximum score in the final examination.
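The bonus rule can be sketched as a capped mapping from quiz performance to bonus points. Only the 20% ceiling comes from the text; the linear mapping, the equal weighting of quizzes and the function name `quiz_bonus` are assumptions made for illustration.

```python
def quiz_bonus(quiz_scores, quiz_max, exam_max, cap_share=0.20):
    """Hypothetical bonus: proportional to the average quiz score, capped at cap_share * exam_max."""
    if not quiz_scores:
        return 0.0
    # Average proportion of the maximum quiz score obtained, clipped to [0, 1].
    share = sum(quiz_scores) / (len(quiz_scores) * quiz_max)
    share = max(0.0, min(1.0, share))
    # A perfect quiz record earns the full ceiling of 20% of the examination maximum.
    return cap_share * exam_max * share
```

With a perfect quiz record and an examination out of 100 points, this sketch yields the maximum bonus of 20 points; half marks on every quiz would yield 10.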

The student-centred nature of the instructional design requires, first and foremost, adequate actionable feedback to students so that they can monitor their study progress and topic mastery. The digital platforms are crucial in this monitoring function: at any time, students can see their performance in the practice sessions and their progress in preparing for the next quiz, and they receive detailed feedback on their completed quizzes, both in absolute terms and relative to their peers.

The subjects of this study are the 2017/2018 cohort of first-year students who participated in learning activities in some way, i.e. were active in at least one digital platform: 1035 students in total. Of these students, 42% were female and 58% male; 21% had a Dutch high school diploma and 79% were international students. Amongst the international students, the neighbouring countries Germany (34%) and Belgium (20%) were well represented, as were other European countries; 5% of students were from outside Europe. High school systems in Europe differ strongly, both in general educational principles and particularly in the teaching of mathematics and statistics. Consequently, students differ greatly in what they know about mathematics and statistics and in the learning approaches they have developed through prior educational experience. It is therefore crucial that the first module offered to these students is flexible and allows for individual learning paths. In the investigated module, students work an average of 30 h in Sowiso and 22 h in MyStatLab, which is 25–40% of the 80 h available for learning each of the two topics.
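As a check on the reported range, assuming the 80 h refers to the learning time available for each of the two topics:

$$\frac{22\ \text{h}}{80\ \text{h}} = 27.5\%, \qquad \frac{30\ \text{h}}{80\ \text{h}} = 37.5\%,$$

both of which fall within the stated 25–40%.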

Instruments and procedure

Many learning disposition instruments distinguish between adaptive and maladaptive facets of learning. Since this study aims to investigate how a learning design based on blended learning, in which students can choose among alternative compositions of learning materials supported by learning feedback derived from learning analytics, facilitates less adaptive students, we opt for a selection of such instruments. Our main instrument distinguishes adaptive from maladaptive learning approaches: how do students tend to process and regulate their learning? Beyond this cognitive disposition, we apply two disposition instruments of affective and behavioural types that both allow us to distinguish adaptive from maladaptive facets: epistemic learning emotions, and motivation and engagement measures. All disposition instruments are administered at the start of the module and focus on stable facets of student learning: what do students bring with them from 6 years of high school education?

To facilitate students with diverse prior knowledge and diverse learning dispositions, the learning design applied incorporates three types of assessment. Assessment as learning takes shape in the use of the two e-tutorials, both based on test-steered learning. Assessment for learning is mainly through 2-weekly quizzes. Assessment of learning is traditional: a final written examination, though the quizzes also have a small weight in the final grade.

Trace and module performance measures

Assessment for learning is measured through the 2-weekly quizzes, aggregated into two topic scores: MathQuiz and StatsQuiz. Assessment of learning is measured with the final examination, which has the same composition: MathExam and StatsExam. Assessment as learning allows for a range of different indicators, all based on trace data. Although we have data from two e-tutorials, we focus on a single e-tutorial, Sowiso, for two reasons: more data and higher-quality data (connect-time data for the MyStatLab tutorial in particular are of low quality). Four different indicators of the intensity of using the Sowiso e-tutorial are:

time: aggregated time on task;

attempts: total number of attempts to solve the set of practice problems;

solutions: the number of worked-out examples called for;

mastery: mastery score as a proportion of successfully solved practice problems.
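The four indicators listed above could be derived from a raw event log roughly as follows. This sketch assumes a hypothetical, simplified event record (`Event`); actual Sowiso exports are structured differently, and defining mastery as the share of distinct practice problems solved correctly at least once is our assumption.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical trace record from the e-tutorial log."""
    student: str
    problem: str
    kind: str             # "attempt" (an answer submitted) or "solution" (worked example called for)
    seconds: float        # time on task attributed to this event
    correct: bool = False


def sowiso_indicators(events, n_problems):
    """Aggregate events into time (hours), attempts, solutions and mastery per student."""
    time_s = defaultdict(float)
    attempts = defaultdict(int)
    solutions = defaultdict(int)
    solved = defaultdict(set)
    for e in events:
        time_s[e.student] += e.seconds
        if e.kind == "attempt":
            attempts[e.student] += 1
            if e.correct:
                solved[e.student].add(e.problem)
        elif e.kind == "solution":
            solutions[e.student] += 1
    # Every event contributes time, so time_s covers every student seen in the log.
    return {
        s: {
            "time_h": time_s[s] / 3600,
            "attempts": attempts[s],
            "solutions": solutions[s],
            "mastery": len(solved[s]) / n_problems,
        }
        for s in time_s
    }
```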

Dispositions on learning approaches

Learning processing and regulation strategies shaping learning approaches (Entwistle 1997) or learning patterns (Vermunt and Donche 2017) are measured with the Inventory of Learning Styles (ILS) instrument (Vermunt 1996). In an extensive review of research on learning styles (Coffield et al. 2004), the ILS was found to be one of the few learning styles instruments of sufficient rigour for research applications. Our study focuses on the two domains of cognitive processing strategies and metacognitive regulation strategies. Each domain is composed of three scales. The deep processing strategy, in which students relate, structure and critically process the new knowledge they learn, is built from two sub-scales:

critical processing: students form their own opinions when learning,

relating and structuring: students look for connections, make a diagram.

The stepwise processing strategy (also called surface processing) refers to approaches such as memorising, rehearsing and analysing, and is again built from two sub-scales:

analysing: students investigate step by step,

memorising: students learn by heart.

The last and single-scale strategy relates to the tendency to apply new knowledge:

concrete processing: students focus on making new knowledge concrete, applying it.

Three metacognitive regulation strategies are self-regulation of learning, external regulation of learning and lack of learning regulation. The self-regulation scale is composed of:

self-regulation of processes: regulating one’s own learning processes through activities like planning learning activities, monitoring progress, diagnosing problems, testing one’s outcomes, adjusting and reflecting;

self-regulation of content: consulting literature and sources outside the syllabus.

The external regulation scale is composed of:

external regulation of processes: letting one’s own learning be regulated by external sources, such as learning objectives, questions or assignments of teachers or textbook authors;

external regulation of content: testing one’s learning outcomes by external means, such as the tests, assignments and questions provided.

The absence of regulation is measured by a single scale:

lack of regulation: students are unable to regulate their learning themselves, but also experience insufficient support from the external regulation provided by teachers and the general learning environment.
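The composition of the six ILS scales from their sub-scales, as listed above, can be written down as a small lookup structure. In this sketch the key names are hypothetical, and combining sub-scales by taking their mean is an assumption rather than the instrument’s documented scoring rule.

```python
from statistics import mean

# Scale composition as described above; sub-scale key names are hypothetical.
ILS_SCALES = {
    "deep_processing": ["critical_processing", "relating_structuring"],
    "stepwise_processing": ["analysing", "memorising"],
    "concrete_processing": ["concrete_processing"],
    "self_regulation": ["self_regulation_process", "self_regulation_content"],
    "external_regulation": ["external_regulation_process", "external_regulation_content"],
    "lack_of_regulation": ["lack_of_regulation"],
}


def ils_scale_scores(subscales):
    """Combine one student's sub-scale scores into the six scale scores.

    Averaging the sub-scales is an assumption; the instrument's own scoring may differ.
    """
    return {scale: mean([subscales[name] for name in parts])
            for scale, parts in ILS_SCALES.items()}
```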

Because of the timing of the survey, learning approach measurements relate to the typical learning patterns students take with them when transferring from high school to university, rather than learning approaches developed in the current module.

Dispositions on learning emotions

Learning emotions can have different triggers. Emotions fuelled by concrete learning tasks are called achievement emotions of the activity type (Pekrun and Linnenbrink-Garcia 2012). These learning emotions are context dependent: learning tasks that are easier or more difficult to master result in different emotional states. In contrast, epistemic emotions are trait-based versions of learning emotions: they measure the affects students have developed in learning certain academic disciplines. In this study, we wish to investigate which learning emotions students bring with them when entering university, and thus focus on the epistemic type of learning emotions. Measurements of epistemic learning emotions are based on the research by Pekrun et al. (2017). Epistemic emotions comprise the positive emotions curiosity and enjoyment, the negative emotions confusion, anxiety, frustration and boredom, and the neutral emotion surprise.

Dispositions on motivation and engagement

The Motivation and Engagement Wheel instrument (Martin 2007) breaks down learning cognitions and learning behaviours into four quadrants: adaptive versus maladaptive types, and cognitive (motivational) versus behavioural (engagement) types. Self-belief, valuing and learning focus shape the adaptive, cognitive factors or positive motivations. Planning, task management and persistence shape the adaptive, behavioural factors or positive engagement. The maladaptive, cognitive factors or negative motivations are anxiety, failure avoidance and uncertain control, while self-sabotage and disengagement are the maladaptive, behavioural factors or negative engagement.

Data analysis

The data analysis steps of this study are based on person-oriented modelling of learning dispositions, with the aim of distinguishing different profiles of learning approaches. The main step in the analysis is creating profiles of learning approaches through k-means cluster analysis of the learning approach data (Jones, Levine Laufgraben, and Morris 2006). Person-oriented methods differ from variable-oriented modelling methods in that they do not assume homogeneity of the sample. They allow for heterogeneous samples, and a crucial step in the analysis is finding out how to break down the full sample into a number of sub-samples that demonstrate similar behaviours: the profiles. We opted for k-means cluster analysis to be able to compare solutions with different numbers of clusters and to select the cluster solution in which all clusters are substantial in size (at least about 200 students) and the profiles allow for an educational interpretation.

The cluster analysis was followed by a comparison of cluster differences in trace variables such as the intensity of using the e-tutorials, in disposition variables and in performance variables. These comparisons were carried out with analyses of variance (ANOVAs). Although this second step of the analysis is inferential, we present the cluster differences descriptively. Because of the large sample sizes, the reported comparisons are, with a few exceptions, statistically significant beyond the 0.0005 level (p-levels reported as 0.000), so including these ANOVA outcomes adds little to the interpretation of the results. This does not apply to the performance differences, which are (with one exception) not statistically significant.
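The ANOVA comparison and an accompanying effect size can be sketched in plain Python. Reading the effect sizes reported in the results as eta squared is our assumption; for p-values, SciPy’s `scipy.stats.f_oneway` returns the F statistic together with its p-value. The identity F = (η²/(1 − η²)) · (df_within/df_between) shows why, with clusters of roughly 200 to 290 students, even modest effects yield very small p-values, which is the reason given above for reporting cluster differences descriptively.

```python
from statistics import mean


def one_way_anova(groups):
    """One-way ANOVA for k groups of scores: returns (F statistic, eta squared)."""
    scores = [x for g in groups for x in g]
    grand_mean = mean(scores)
    group_means = [mean(g) for g in groups]
    # Between-cluster and within-cluster sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    # Eta squared: share of total variance accounted for by cluster membership.
    eta_sq = ss_between / (ss_between + ss_within)
    return f_stat, eta_sq
```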

Results

The cluster analysis

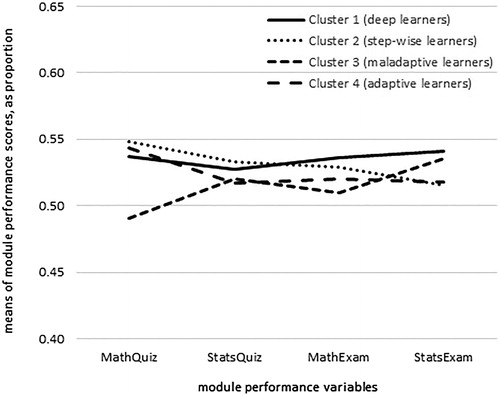

The five cognitive learning processing strategies and four metacognitive learning regulation strategies were used as inputs for a k-means cluster analysis. A four-cluster solution is preferred on the basis of both model fit and interpretability. Figure 1 describes the outcomes of that analysis, showing the four profiles of student approaches to learning as the cluster means of all four clusters on the nine learning approach variables.

Figure 1. Four learning approaches profiles of international students based on a four-cluster solution of nine scales related to learning processing and regulation strategies.

Cluster interpretations are based on these cluster means. The four clusters do not differ greatly in size: each contains about a quarter of the students. Cluster4 is the largest, with 289 students. This cluster is best described as that of adaptive learning approaches: these students score high on all processing and regulation strategies, suggesting that they can easily switch from one approach to another when the learning context requires it. Cluster3, with 233 students, is its antipode: the maladaptive learning approaches. These students score lower than students of the three other clusters on all facets of learning approaches except two: the external regulation of both learning process and learning content. We call this cluster maladaptive since these students seem to lack the skills to shape their own learning approach and depend on external sources such as teachers or peers to regulate their learning.

In this study, our analysis focuses mostly on the two intermediate clusters. Cluster1 students, 227 in number, are described as deep learners: they score much higher on critical processing and on relating and structuring, which shape deep learning, than on analysing and, most strongly, memorising, which shape stepwise learning. Regarding learning regulation, they seem to follow a hybrid approach: mainly self-regulation with regard to the learning process, but external regulation with regard to content. The last profile, that of Cluster2 with 196 students, largely reverses this pattern. Cluster2 students are stepwise learners: they score relatively low on the deep learning scales, relatively high on the stepwise learning scales (specifically memorising), relatively low on both self-regulation scales and relatively high on both external regulation scales.

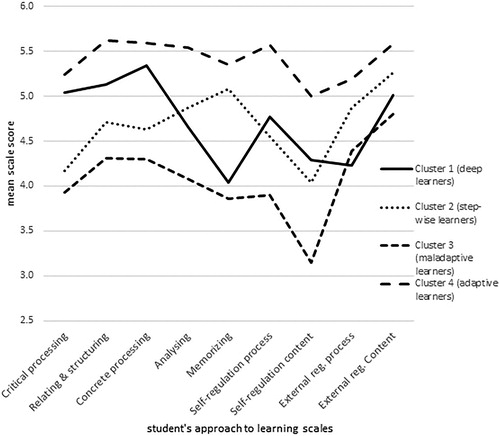

Differences in epistemic learning emotions

A second learning disposition measured at the start of the module is that of epistemic learning emotions. Applying the outcomes of the cluster analysis, we see clear differences in learning emotions between the four profiles: see Figure 2.

Figure 2. Profile differences in seven scales of epistemic learning emotions, profiles based on a four-cluster solution of learning processing and regulation strategies of international students.

Students with the adaptive profile (Cluster4) score highest on the two positive emotions curiosity and enjoyment, and low on the negative emotions confusion, frustration and boredom. The maladaptive profile students (Cluster3) take the opposite position: low on curiosity and enjoyment, high on frustration and boredom. Students with the deep learning profile (Cluster1) mainly stand out in their scores on two negative learning emotions: they have the lowest levels of confusion and anxiety. Exactly the same emotion facets stand out for students of the stepwise learning profile, but in the opposite direction: high scores on confusion and anxiety.

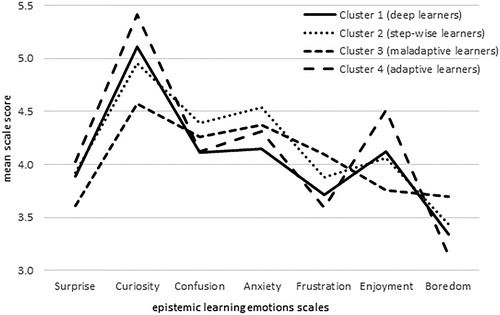

Differences in motivation and engagement

Generic dispositions towards learning at university, rather than module-specific ones, are measured with the ‘motivation and engagement wheel’. As Figure 3 depicts, there are substantial differences in cluster means.

Figure 3. Profile differences in eleven scales of motivation and engagement, profiles based on a four-cluster solution of learning processing and regulation strategies of international students.

The positive motivations self-belief, valuing and learning focus shape the adaptive cognitions. Students of Cluster4, the adaptive cluster, score highest; Cluster3 students, the maladaptive cluster, score lowest. That pattern repeats itself in the three positive engagement variables that follow: planning, task management and persistence, which shape positive behaviours or adaptive engagement. There is one salient detail: whilst the deep-learning Cluster1 students score higher than the stepwise-learning Cluster2 students on adaptive cognitions, they score slightly lower on adaptive behaviours. Cluster differences are not only clearly significant in a statistical sense (all significance levels below 0.0005), but effect sizes also lie between 10% and 20% (except for self-belief). For the maladaptive cognitions, the negative motivations anxiety, failure avoidance and uncertain control, cluster differences vanish. However, they appear again in the maladaptive behaviours or negative engagement: self-sabotage and disengagement. Here, the maladaptive Cluster3 students score highest and the adaptive Cluster4 students lowest, with intermediate positions for Cluster1 and Cluster2 students. Statistical significance is strong, but effect sizes are only 3–5%.

Differences in e-tutorial trace data: assessment as learning

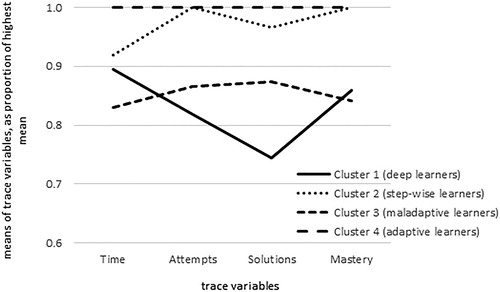

The large amount of available trace data, the digital footprints of the students’ learning processes, is reduced to four key variables aggregated over all 8 weeks of the module: learning time in the tool, number of attempts to solve exercises, number of solutions or worked examples called for, and final mastery level, that is, the number of correctly solved problems. To capture all these data in one graph (Figure 4), all scores are re-expressed as proportions of the highest score among the four clusters.
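The rescaling step can be sketched as follows, assuming the scores being rescaled are cluster means and that every variable has a positive maximum (true for times and counts). The function name, the dictionary layout and the variable keys are hypothetical; the point is only that each variable is divided by its own best cluster score, so the top cluster sits at 1.0 on every axis and the others read as fractions of it.

```python
def relative_to_best(
    cluster_means: dict[str, dict[str, float]],
) -> dict[str, dict[str, float]]:
    """Re-express each trace variable as a proportion of the highest cluster mean.

    cluster_means maps a (hypothetical) cluster name to {variable name: mean score}.
    Assumes all clusters share the same variables and each variable's maximum is > 0.
    """
    variables = list(next(iter(cluster_means.values())).keys())
    # Highest cluster mean per variable; this becomes the 1.0 reference point.
    best = {v: max(means[v] for means in cluster_means.values()) for v in variables}
    for v, top in best.items():
        if top <= 0:
            raise ValueError(f"variable {v!r} has no positive maximum to scale by")
    return {
        cluster: {v: means[v] / best[v] for v in variables}
        for cluster, means in cluster_means.items()
    }
```

A cluster that scores at about 85% of the adaptive profile on every criterion, as described for Cluster3 below, would show values near 0.85 for all four variables in this representation.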

Figure 4. Profile differences in four e-tutorial trace data measures, profiles based on a four-cluster solution of learning processing and regulation strategies of international students.

For all variables, the highest-scoring cluster is Cluster4, the students with the adaptive learning approach. The maladaptive Cluster3 students also exhibit a very stable pattern: they score at about 85% of the adaptive profile on every criterion. The remaining profiles show more diverse patterns. The deep learners, Cluster1, stand out for the low number of worked examples called for and the low number of attempts, combined with about average tool time. This suggests that their learning in the e-tutorial is less efficient than that of the stepwise learners (Cluster2), who use about the same amount of learning time but call for many more solutions (and thus make more attempts) and, in doing so, reach higher mastery levels.
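The efficiency comparison can be made concrete with a simple ratio. The section does not define efficiency; the sketch below assumes it is read as mastery level per hour of tool time, which is the quantity that rises when learning time is equal but mastery is higher. The function name and input layout are hypothetical.

```python
def efficiency_ranking(
    profiles: dict[str, tuple[float, float]],
) -> list[tuple[str, float]]:
    """Rank clusters by mastery per hour of e-tutorial time, highest first.

    Assumption: efficiency = mean mastery level / mean tool time (hours);
    the source does not define efficiency.
    profiles maps a (hypothetical) cluster name to (mean mastery, mean tool hours).
    """
    rates = []
    for cluster, (mastery, hours) in profiles.items():
        if hours <= 0:
            raise ValueError(f"{cluster}: tool time must be positive")
        rates.append((cluster, mastery / hours))
    # Sort so the most efficient cluster comes first.
    return sorted(rates, key=lambda pair: pair[1], reverse=True)
```

With equal tool time, the cluster with the higher mastery level necessarily ranks first, which is the comparison drawn between the stepwise learners and the deep learners.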

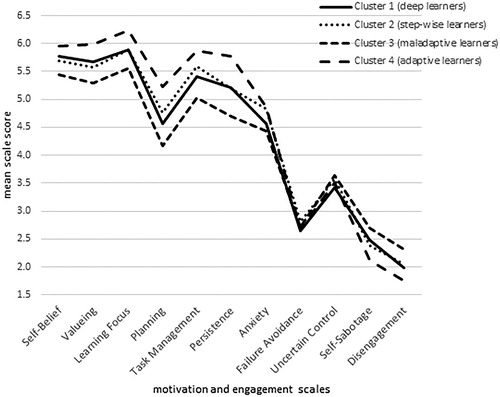

Performance differences

In contrast to dispositions and trace variables, performance variables show no cluster differences, with one exception: the MathQuiz, part of the assessment for learning (see Figure 5). For the other performance measures, significance levels of cluster differences exceed 0.2.

The absence of cluster differences implies that, although the learning paths of students in the four clusters are dominated by different learning approaches, in the end all paths seem to produce similar proficiency levels. Since the final module grade is a weighted average of the four performance measures, the absence of cluster differences carries over to the final module grade.
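The step from component measures to the final grade follows from the linearity of the average. Writing $P_{ik}$ for student $i$'s score on performance measure $k$ and $w_k$ for its weight (the weights are not given in this section and are left symbolic), the mean grade of a cluster $c$ with $n_c$ students is:

$$
G_i = \sum_{k=1}^{4} w_k P_{ik}, \qquad \sum_{k=1}^{4} w_k = 1,
\qquad
\bar{G}_c = \frac{1}{n_c} \sum_{i \in c} G_i = \sum_{k=1}^{4} w_k \bar{P}_{ck}.
$$

If the cluster means $\bar{P}_{ck}$ coincide for every measure $k$, the cluster means $\bar{G}_c$ coincide as well; a difference on a single component enters $\bar{G}_c$ only in proportion to that component's weight $w_k$.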

Discussion and conclusions

In this study, we used dispositions and trace data to understand how learners find their own learning paths in a technology-enhanced learning environment, and which learning approaches can be seen as antecedents of such choices. The intensity of e-tutorial use, and the choice of which learning scaffolds to use, differ between the clusters, although effect sizes are small. For example, students from the deep learning cluster are less inclined to use worked examples in their learning process and more often keep trying to solve the problems by themselves without any scaffold. That takes them more time to reach the proficiency level defined by the e-tutorial (the mastery level in the tool). However, we find no module performance differences between the four clusters. Apparently, students take different learning paths, but as far as module grades are concerned, all these paths lead to Rome: they end in the same result.

The possibility of choosing from several paths is created by the blended design of the module. The programme taken by the students in this study follows the instructional approach of problem-based learning. That is demanding: students are expected to take ownership of their learning process and to discuss and define learning goals in small, collaborative groups, with the teacher acting as a tutor who does no more than coach these tutorial groups. This context implies that, beyond ethnicity or socio-economic background, other factors are likely to affect the chances of success and to create unequal chances. In our study, we looked at learning approaches as a potential source of inequality. High school systems in Europe differ greatly, not only in the cognitive levels graduates attain but also in instructional methods. Some of these systems are student-centred, similar to the problem-based learning method (as in the Netherlands); others are strongly teacher-centred. These different backgrounds bring about different student learning approaches, certainly in the first semester. Being at risk in this module has multiple facets, one of which is not being well equipped to take responsibility for one's own learning. For students ill prepared for self-regulation of study, or with little experience of going beyond stepwise learning processes, the external regulation built into digital learning platforms is a welcome supplement in the regulation of their learning.

The obvious limitation of our study is that we observe only part of the learning process and only part of the relevant background students bring with them. With regard to the learning process, all learning that takes place through the two digital learning environments is traced in every detail. However, learning in tutorial groups, self-study to prepare for the sessions of these tutorial groups, and collaborative learning within small subgroups of members of one tutorial group, strongly stimulated by the tutors, all go unobserved. This might explain why Cluster3 students, the overall maladaptive cluster with low values on all types of learning regulation, achieve performance scores similar to those of the other clusters. Local students are strongly overrepresented in Cluster3. These local students benefit from several advantages: the introductory modules are (by law) aligned with the exit levels of Dutch secondary education; statistics is a substantial part of the high school mathematics programme of most local students (which explains why Cluster3 students perform better on the statistics topic of quizzes and the examination than on the mathematics topic); and they are better prepared to organise alternative constellations for studying, such as these subgroups. All these relevant factors, except nationality, are however beyond observation. The authentic learning setting of this study does not allow these factors to be included in the analysis, so they must be investigated in less authentic but better observable settings, such as laboratory studies. Findings from such studies in turn need corroboration in field studies, implying an interplay between field studies and laboratory studies.

The most meaningful comparison is probably that of Cluster1 versus Cluster2. Both clusters have the same composition of local and international students (20% local, 80% international). By construction, they differ in how they process and regulate their learning. Cluster1 students are deep learners who tend to self-regulate their learning process but accept external regulation with regard to content. These are the ideal students for a student-centred programme, feeling like a fish in water in our problem-based learning programme. Cluster2 students, however, face transfer problems: their learning tends to be stepwise and requires external regulation with regard to both process and content. It is the combination of assessment-steered learning technology and learning analytics-based learning feedback that seems key to their adaptation to a completely new learning context.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Dirk Tempelaar

Dirk Tempelaar is an associate professor at the Department of Quantitative Economics at the Maastricht University School of Business and Economics. His research interests focus on modelling student learning, and students’ achievements in learning, from an individual difference perspective. That includes dispositional learning analytics: ‘big data’ approaches to learning processes, to find predictive models for generating learning feedback, based on computer-generated trace data and learning dispositions.

References

- Buckingham Shum, S., and R. Deakin Crick. 2012. “Learning Dispositions and Transferable Competencies: Pedagogy, Modelling and Learning Analytics.” In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, edited by S. Buckingham Shum, D. Gasevic, and R. Ferguson, 92–101. New York, NY: ACM.

- Buckingham Shum, S., and R. Ferguson. 2012. “Social Learning Analytics.” Educational Technology and Society 15 (3): 3–26.

- Coffield, F., D. Moseley, E. Hall, and K. Ecclestone. 2004. Learning Styles and Pedagogy in Post-16 Learning: A Systematic and Critical Review. London: Learning and Skills Research Centre.

- Crisp, G. T. 2012. “Integrative Assessment: Reframing Assessment Practice for Current and Future Learning.” Assessment & Evaluation in Higher Education 37 (1): 33–43. doi:10.1080/02602938.2010.494234.

- Entwistle, N. J. 1997. “Reconstituting Approaches to Learning: A Response to Webb.” Higher Education 33 (2): 213–218.

- Hmelo-Silver, C. E. 2004. “Problem-Based Learning: What and How Do Students Learn?” Educational Psychology Review 16 (3): 235–266. doi:10.1023/B:EDPR.0000034022.16470.f3.

- Jones, J. R., J. Levine Laufgraben, and N. Morris. 2006. “Developing an Empirically Based Typology of Attitudes of Entering Students toward Participation in Learning Communities.” Assessment & Evaluation in Higher Education 31 (3): 249–265. doi:10.1080/02602930500352766.

- Loyens, S. M. M., D. Gijbels, L. Coertjens, and D. J. Côté. 2013. “Students’ Approaches to Learning in Problem-Based Learning: Taking into Account Professional Behavior in the Tutorial Groups, Self-Study Time, and Different Assessment Aspects.” Studies in Educational Evaluation 39 (1): 23–32. doi:10.1016/j.stueduc.2012.10.004.

- Martin, A. J. 2007. “Examining a Multidimensional Model of Student Motivation and Engagement Using a Construct Validation Approach.” British Journal of Educational Psychology 77 (2): 413–440. doi:10.1348/000709906X118036.

- Pardo, A. 2018. “A Feedback Model for Data-Rich Learning Experiences.” Assessment & Evaluation in Higher Education 43 (3): 428–438. doi:10.1080/02602938.2017.1356905.

- Pekrun, R., and L. Linnenbrink-Garcia. 2012. “Academic Emotions and Student Engagement.” In Handbook of Research on Student Engagement, edited by S. L. Christenson, A. L. Reschly, and C. Wylie, 259–282. New York, NY: Springer Verlag.

- Pekrun, R., E. Vogl, K. R. Muis, and G. M. Sinatra. 2017. “Measuring Emotions during Epistemic Activities: The Epistemically-Related Emotion Scales.” Cognition and Emotion 31 (6): 1268–1276. doi:10.1080/02699931.2016.1204989.

- Reimann, N., and I. Sadler. 2017. “Personal Understanding of Assessment and the Link to Assessment Practice: The Perspectives of Higher Education Staff.” Assessment & Evaluation in Higher Education 42 (5): 724–736. doi:10.1080/02602938.2016.1184225.

- Rodríguez-Gómez, G., V. Quesada-Serra, and M. Soledad Ibarra-Sáiz. 2016. “Learning-Oriented e-Assessment: The Effects of a Training and Guidance Programme on Lecturers’ Perceptions.” Assessment & Evaluation in Higher Education 41 (1): 35–52. doi:10.1080/02602938.2014.979132.

- Sclater, N., J. Mullan, and A. Peasgood. 2016. Learning Analytics in Higher Education: A Review of UK and International Practice. Bristol: JISC.

- Tempelaar, D., B. Rienties, and B. Giesbers. 2015. “In Search for the Most Informative Data for Feedback Generation: Learning Analytics in a Data-Rich Context.” Computers in Human Behavior 47: 157–167. doi:10.1016/j.chb.2014.05.038.

- Tempelaar, D., B. Rienties, J. Mittelmeier, and Q. Nguyen. 2018. “Student Profiling in a Dispositional Learning Analytics Application Using Formative Assessment.” Computers in Human Behavior 78: 408–420. doi:10.1016/j.chb.2017.08.010.

- Tempelaar, D., B. Rienties, and Q. Nguyen. 2017. “Towards Actionable Learning Analytics Using Dispositions.” IEEE Transactions on Learning Technologies 10 (1): 6–16. doi:10.1109/TLT.2017.2662679.

- Tempelaar, D., B. Rienties, and Q. Nguyen. 2018. “Investigating Learning Strategies in a Dispositional Learning Analytics Context: The Case of Worked Examples.” In Proceedings of the International Conference on Learning Analytics and Knowledge (LAK’18), Sydney, Australia, March 2018, 201–205.

- Vermunt, J. D. 1996. “Metacognitive, Cognitive and Affective Aspects of Learning Styles and Strategies: A Phenomenographic Analysis.” Higher Education 31 (1): 25–50. doi:10.1007/BF00129106.

- Vermunt, J. D., and V. Donche. 2017. “A Learning Patterns Perspective on Student Learning in Higher Education: State of the Art and Moving Forward.” Educational Psychology Review 29 (2): 269–299. doi:10.1007/s10648-017-9414-6.

- Williams, P. 2017. “Assessing Collaborative Learning: Big Data, Analytics and University Futures.” Assessment & Evaluation in Higher Education 42 (6): 978–989. doi:10.1080/02602938.2016.1216084.