Abstract

Properly selecting students is one of the core responsibilities of higher education institutions, which is done with selection criteria that predict student success. However, the student selection literature suffers from a dearth of research on non-cognitive selection criteria, which can lead to incorrect admission assessments. In contrast, personnel selection studies are heavily focused on non-cognitive selection criteria and, as such, can offer insights that can improve the student selection literature. We carried out a systematic literature review of both literature strands and looked for ways in which personnel selection literature could inform student selection literature. We found that non-cognitive selection criteria are better predictors of success in personnel selection than in student selection, implying that non-cognitive skills are more important for job success. We also identified promising selection criteria from the personnel selection literature that could lead to better student success assessment during the selection phase: personality tests, conscientiousness, person-organization-fit, core-self-evaluations and polychronicity.

Introduction

Recently, the number of student applications, especially for prestigious universities, has ballooned (Karabel 2005; State and Hudson 2019; CBS 2020; d’Hombres and Schnepf 2021). This growing stream of applicants, combined with a limited teaching capacity, increases universities’ responsibility to find and implement fair and valid selection criteria, preventing students from being wrongly rejected from a university program (Pitman 2016; van Ooijen-van der Linden et al. 2017).

There is a large body of literature on the predictive validity of student selection criteria. The most commonly studied selection criteria, grades and standardized tests, measure cognitive skills (Kuncel, Credé, and Thomas 2005; Steenman 2018), often with positive results (Vulperhorst et al. 2018; Nagy and Molontay 2021). Cognitive skills refer to an applicant’s ability to acquire, process and utilize knowledge (De Visser et al. 2018, 189). Less attention has been paid to selection criteria that measure non-cognitive skills (Thomas, Kuncel, and Credé 2007; Eva et al. 2009; Niessen and Meijer 2017). Such skills include teamwork, empathy, curiosity, confidence and communication (Wood et al. 1990). This relative lack of attention can lead to incorrect student selections.

The lack of insight into non-cognitive selection criteria can be partially mitigated by looking at personnel selection literature. Employees are often hired based on non-cognitive, social skills, measured by criteria also used in student selection, such as interviews, résumés and motivational letters (Tett and Christiansen 2007). The personnel selection literature also looks at criteria currently unused in student selection literature, like personality tests (Behling 1998; Wiersma and Kappe 2017). Although students and employees are selected based on different skills, and student success and job performance are measured differently, research regarding personnel selection could provide valuable insights about student selection for several reasons. First, the personnel selection literature has a rich history of studying the ability of selection criteria to predict future job performance based on non-cognitive skills (Schmidt, Ones, and Hunter 1992; Schmidt and Hunter 1998). Second, one role of educational programs is to prepare students for their future jobs, where they have to become successful personnel (Brennan 1985; Moore and Morton 2017; Clarke 2018; Suleman 2018). Student success is becoming less dependent on just intellectual prowess and cognitive abilities and more dependent on other skills that are highly appreciated in the job market, such as teamwork and communication (Te Wierik, Beishuizen, and van Os 2015; Jackson and Bridgstock 2019). In addition, students receive more attention to career guidance and post-educational employment during their time at university (Sun and Yuen 2012; Hughes, Law, and Meijers 2017). Knowledge from the personnel selection literature could improve the practice of student selection, especially with regard to non-cognitive selection criteria.
This paper aims to answer the following research question: to what extent can the personnel selection literature complement the literature on student selection?

We first systematically reviewed the student and personnel selection literatures. Based on this, we identified promising avenues where personnel selection literature could complement student selection literature. We aimed to establish links between these two strands of literature and searched for complementary knowledge to improve our understanding of student selection criteria. We used these insights to formulate a research agenda for the student selection literature. This will allow further selection research to dive into these understudied areas and improve the internal validity of the student selection literature. Relevant knowledge from personnel selection literature could be implemented into selection procedures for university students.

Conceptual background

The success of students and the performance of personnel are different concepts, each with its own dimensions; thus, there are, by definition, differences in how they are measured. Moreover, student success and job performance are far from unidimensional concepts in their own right. Both can be, and are, conceptualized and measured in a variety of ways.

Student success

Student success is defined as ‘academic achievement, engagement in educationally purposeful activities, satisfaction, acquisition of desired knowledge, skills, and competencies, persistence, and attainment of educational objectives’ (Kuh et al. 2007, 7). The most common association with student success is academic success, which is the achievement of desired learning outcomes (Kuh et al. 2011). Academic success is commonly measured using students’ attained grades or grade-point average (GPA; van der Zanden et al. 2018), as well as student retention and degree attainment (Kuh et al. 2007; Trapmann et al. 2007; Crisp and Cruz 2009; York et al. 2015). Within studies on student success, there is a strong focus on success in the first year because most students who drop out do so then (Credé and Niehorster 2012; Fokkens-Bruinsma et al. 2020). A limitation many studies have when measuring academic success is that they only look at the single study program the student entered after application (Jones-White et al. 2010). Most studies do not take into account the success of students who transfer mid-curriculum. This means that in the representation of student success, early curriculum success is overrepresented.

However, student success does not consist solely of academic success. Multiple scholars have called for a more expansive view of student success because improving less quantifiable skills of students is a core objective of higher education institutes (York et al. 2015; Niessen and Meijer 2017; van der Zanden et al. 2018; Alyahyan and Düştegör 2020). In recent years, notions of student success have expanded to accommodate students’ personal backgrounds, situations and goals, and to acknowledge their development more broadly (Kahu 2013). More holistic conceptualizations of student success include their critical thinking abilities and social and emotional well-being (van der Zanden et al. 2018). Medical students have a noteworthy position in this regard as medical programs are the most common context of student selection studies. Within medical selection studies, a holistic view of student selection is relatively prominent, considering that medical students’ success is often evaluated based on their clinical performance (or performance in the medical ward), where non-cognitive skills are highly necessary. Expanding the conceptualization of student success is important because students who score well on more traditional, cognitive measurements of success do not necessarily score well on non-cognitive measures (van der Zanden et al. 2018). We distinguish academic from student success and consider academic success to be one of the aspects of student success.

Job performance

Job performance is one of the most important outcomes in work contexts (Ohme and Zacher 2015). It relates to an employee’s ability to reach a goal or set of goals within their job, role or organization (Campbell 1990). Job performance influences the employee’s salary, promotion and training program decisions, which makes studying its predictors of major relevance for both organizational scholars and practitioners (Ohme and Zacher 2015). Just like student success, job performance is a multidimensional construct, as job performance can be conceptualized in various ways depending on the goals and objectives of the organization and individual (Jackson and Frame 2018). Jackson and Frame (2018) distinguish three dimensions of job performance: task, contextual and adaptive performance. Task performance involves behaviour that converts resources into goods or services provided by the organization. Contextual performance involves furthering the organization’s goals by positively contributing to its climate and culture (Johnson 2001). Adaptive performance relates to the employee’s ability to cope with, react to and support changes in such a way that they contribute to the organization’s goals in times of uncertainty (Griffin, Neal, and Parker 2007). When comparing the dimensions of job performance to the dimensions of student success, we argue that there are similarities between contextual job performance and the holistic view on student success because both dimensions require the possession of similar non-cognitive skills, like the ability to work in teams (O’Connell et al. 2007). The selection criteria covered in our results will, therefore, mostly focus on contextual performance, as we expect articles studying this kind of performance to yield useful insights for non-cognitive student selection.

Given the multidimensional nature of job performance, it comes as no surprise that there is a wide variety of measurements for job performance, which depend on the type of job and the organization. However, the distinction between how the various dimensions of job performance are measured is less clear than it is in student selection literature. For most personnel, job performance is measured through a supervisor rating (Viswesvaran, Ones, and Schmidt 1996; Morin and Renaud 2009). Other measurements are salary growth or promotions. For personnel in the medical sector, job performance can be measured by their clinical performance. For academic personnel, job performance is often measured by looking at their ability to win grants or their citation records (Lehmann, Jackson, and Lautrup 2008; Costas, van Leeuwen, and Bordons 2010).

Methods

We conducted our literature review following the steps described by Mayring (2000): material collection, descriptive analysis of the material, selection of the main conceptual categories, and evaluation of the material pertaining to these categories.

Material collection

We compiled a database of relevant articles on student and employee selection via queries in Elsevier’s Scopus database. As the aim was to combine different strands of literature, we initially used two separate queries to identify relevant articles on student selection and personnel selection, which are found in Table 1. The queries were designed to return empirical articles within the domains of student selection and personnel selection literature. Both queries consisted of four terms: the type of applicant, such as a student or employee; the organization the applicant applies to; articles on selection or recruitment; and what the articles should study, namely the performance or hiring of the applicant. Multiple iterations of the queries were tested. Each iteration was assessed to evaluate each query’s face validity, ensuring the queries returned relevant articles to be analysed. Only articles published in English in academic journals since 1990 were considered because of an increase in published articles on student selection at that time. This increase was observed during data collection in Scopus.

Table 1. Search queries used in this study.

During the assessment of query 2, the authors found a relatively small number of empirical studies focusing on the prediction of employee performance during the application process. Many studies were not empirical, and many empirical studies focused on the job success of employees already working at their organization. As such, the authors used a third query in an attempt to gather more empirical studies that predict job performance. This gave us a better overview of the state of the art in this field and increased the quality of our review.

Descriptive analysis and classification

The total article count using the queries was 4,754, with nine overlapping articles between the search results, leading to 4,745 unique articles. Next, based on their titles and abstracts, the authors classified the articles as either relevant or irrelevant for this study. Articles were considered relevant if they studied the predictive validity of a criterion for student or employee success. This step brought the number of articles down to 834. Of these articles, 575 (69%) were from the literature strand on student selection. The remaining 259 articles (31%) were on personnel selection. Note that these numbers do not match the final numbers provided by Table 1, as articles on student selection as well as personnel selection were found through all three queries.
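
The counts in this step can be checked with simple arithmetic. The following Python sketch is only an illustration; it uses the figures reported above, and the variable and function names are our own assumptions rather than part of the review procedure.

```python
# Figures reported in the text; all names are illustrative assumptions.
total_hits = 4754          # articles returned by all queries combined
overlapping = 9            # articles returned by more than one query
unique_articles = total_hits - overlapping

relevant_student = 575     # relevant articles on student selection
relevant_personnel = 259   # relevant articles on personnel selection
relevant_total = relevant_student + relevant_personnel


def share(part: int, whole: int) -> int:
    """Return part/whole as a percentage rounded to the nearest integer."""
    return round(100 * part / whole)


student_share = share(relevant_student, relevant_total)
personnel_share = share(relevant_personnel, relevant_total)

print(unique_articles, relevant_total, student_share, personnel_share)
```

Running the sketch reproduces the 4,745 unique articles, the 834 relevant articles and the 69%/31% split between the two strands.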

Descriptive results

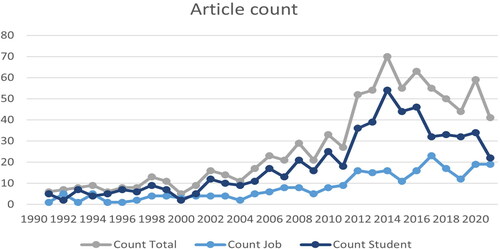

Figure 1 gives an overview of articles published per year: the total number of articles and the number of articles in the fields of personnel and student selection.

Figure 1 highlights the dominance of research on student selection, particularly medical students (see Table 2). This is because medical studies are among the most popular and selective programs. However, this does leave knowledge gaps in the selection of non-medical students. Selection criteria that are common and valid for the selection of medical students may not be valid for other students. The overrepresentation of medical students causes bias, making the results less generalizable for the entire student population.

Table 2. Most frequently used journals in the dataset for studies on student selection (2a: top) and personnel selection (2b: bottom).

Selection of main conceptual categories

Finally, all remaining articles were coded. For each article, we coded whether it focused on the selection of students or personnel. The jobs found in our sample are quite diverse; examples include personnel at firms, governments, or non-governmental organisations, Ph.D. candidates (classified as personnel because they are paid), medical residents, postdocs, and professors.

For all articles in the final sample, the authors coded the selection criteria studied and the direction of their relationship with performance (if any). In line with other systematic literature reviews (Pittaway and Cope 2007; Thoemmes and Kim 2011; Connolly et al. 2012; Berne et al. 2013; Perkmann et al. 2013), we categorically coded the effect of the selection criteria. Our categories were positive, negative, or no effect, the last of which was used when a study found no clear, consistent results. When articles studied multiple selection criteria, these were coded separately. Therefore, the total number of effects studied in our analysis differs from the number of articles. Only the variables found at least twice in the sample were used in our final analysis, so as to increase the generalizability and internal validity of the results. This cut-off resulted in the 22 selection criteria in Table 3, which explains what these variables entail and how they are commonly measured. For each variable, we looked at the occurrence of different codes. Some articles have more nuanced outcomes that are not easily captured by categorical coding. For these, we made notes, which we qualitatively assessed and discussed in the results.
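
The coding and cut-off described above amount to a simple tally. The Python sketch below illustrates one way such a tally could be organised; the records, the criterion labels and the function name are hypothetical and are not taken from our dataset or coding scheme.

```python
from collections import Counter, defaultdict

# Hypothetical coded effects: (article_id, strand, criterion, effect).
# These rows are invented for illustration and are not from the dataset.
coded_effects = [
    ("a1", "student", "grades", "positive"),
    ("a1", "student", "interview", "none"),
    ("a2", "personnel", "interview", "positive"),
    ("a3", "student", "grades", "negative"),
    ("a4", "personnel", "criterion_x", "none"),  # occurs once, so it is dropped
]

MIN_OCCURRENCES = 2  # cut-off described in the text


def tally(effects, min_occurrences=MIN_OCCURRENCES):
    """Count effect codes per criterion, keeping only criteria that
    occur at least min_occurrences times in the sample."""
    occurrences = Counter(criterion for _, _, criterion, _ in effects)
    counts = defaultdict(Counter)
    for _, _, criterion, effect in effects:
        if occurrences[criterion] >= min_occurrences:
            counts[criterion][effect] += 1
    return {criterion: dict(codes) for criterion, codes in counts.items()}


result = tally(coded_effects)
print(result)
```

Because one article can contribute several rows, the number of rows (effects) exceeds the number of articles, which is why the two totals differ in the analysis.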

Table 3. Explanations of selection criteria.

Results

We begin by discussing the predictive validity of each of the 22 selection criteria for student success and job performance. These criteria assess candidates based on various skills and characteristics, which are found in Table 3. We found 714 positive effects, 41 negative effects, and 174 cases with no effects. This might indicate a potential positivity bias in the data, as there is a widely reported publication bias in favour of studies that report statistically significant and/or positive results, meaning that studies with negative or insignificant results are less often published (Begg and Mazumdar 1994; Thornton and Lee 2000; Duval and Tweedie 2000). In particular, we find few studies that report negative results. The 22 criteria were divided into criteria that test cognitive skills and criteria that test non-cognitive skills. For each criterion, we provide four columns with information. The first column lists the total number of studies conducted on a particular criterion for both students and staff. The second column lists the absolute number of studies that reported a positive effect of a certain selection criterion on performance. We also provide the percentage of positive cases compared to the total number of times a criterion was studied. The same information is provided for the number of studies that found a negative effect or that found no effect on performance in the last two columns.
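
To make the imbalance between the three codes concrete, the shares can be computed directly from the counts reported above. The following Python sketch is an illustration only; the dictionary keys and variable names are assumptions of ours.

```python
# Effect counts reported in the text; names are illustrative assumptions.
effects = {"positive": 714, "negative": 41, "none": 174}
total_effects = sum(effects.values())

# Share of each effect category, as a percentage with one decimal.
shares = {code: round(100 * n / total_effects, 1) for code, n in effects.items()}

print(total_effects, shares)
```

The total of 929 coded effects equals the sum of the 503 cognitive and 426 non-cognitive effects reported in the following sections.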

Table 4. Occurrences of selection criteria on performance.

Predicting performance

Cognitive criteria

Cognitive criteria are studied 503 times in our dataset. Of these effects, 461 were measured in student selection and 42 in personnel selection. In Table 4, grades and standardized tests, both cognitive criteria, are the most commonly studied selection criteria with 229 and 228 occurrences, respectively, the majority of which come from student selection literature. When looking at the predictive validity of grades, we see that this is 87% in student selection, which is the highest of the cognitive criteria. For personnel selection, the predictive validity is lower, at 75%. That grades are more often a better predictor of student performance than standardized tests is further supported by numerous studies on grades and standardized tests, which display the positive effects of both but confirm grades as generally having a higher positive predictive validity (Rhodes, Bullough, and Fulton 1994; Hoffman and Lowitzki 2005; Cerdeira et al. 2018). One explanation for this is that grades reflect students’ self-regulatory competencies better than standardized tests, and these are needed to be a successful student (Galla et al. 2019). Another explanation is that while standardized tests form a snapshot of an applicant’s cognitive skills at the moment of testing, grades are acquired over a longer period and provide more consistent insight into the applicant’s cognitive skills.

There are also several important nuances concerning the predictive validity of grades. Grades from previous education lose their predictive validity in later years of study (Sladek et al. 2016; Vulperhorst et al. 2018). This supports the notion that high school and the first year of the bachelor’s program require mostly lower-order knowledge, whereas the third year of the bachelor’s program requires higher-order knowledge (Steenman 2018). This also explains why grades are worse at predicting job performance than they are at predicting student success. When looking at grades on the level of individual courses, we see that grades for mathematics are often found to be a better predictor than average grades for other subjects (Botha, Owen, and McCrindle 2003; Anderton, Hine, and Joyce 2017; Conn et al. 2018).

When looking at the predictive validity of standardized tests, the results show that the predictive validity in student and personnel selection is identical (both 74%). An important note to make is that standardized tests often comprise various elements, for example, a writing element, reading element and logical reasoning element. Several studies have found that the predictive validity differs between these elements (Glaser et al. 1992; Goodyear and Lampe 2004; McManus et al. 2011). The predictive validity of the entire test can therefore differ immensely from the predictive validity of the individual components, with logical reasoning often being the best predictor of performance. Finally, while grades and standardized tests are accurate predictors of student success, a combination of both is often found to be an even more accurate predictor (Cope et al. 2001; Madigan 2006).

Another predictor of cognitive skills is the mastery of a language, which is almost always English. This is studied far less and has a lower predictive validity for student success compared to standardized tests and grades, at 71%. In personnel selection, language was only studied twice: one study reported a positive effect and the other a negative effect. The fourth cognitive predictor we found was admission tests. For students, admission tests have a lower predictive validity than normal standardized tests (64% vs. 74%). This is surprising since admission tests test for study-related knowledge, which should make them a more valid selection criterion because of their clear alignment with the contents of the study program (Steenman 2018). For personnel selection, we found only one study on admission tests, which reported a positive effect on job performance. As the final cognitive criterion, we have general mental ability (GMA) with eight occurrences, all of them in personnel selection and all of them with a positive effect on job performance.

Non-cognitive criteria

In total, non-cognitive criteria are studied 426 times in our dataset. Of these effects, 247 were studies on student selection, and 179 were on personnel selection. This means that studies on non-cognitive criteria form a larger share of state-of-the-art knowledge regarding personnel selection compared with student selection. These results show that the predictive validity of almost all criteria on non-cognitive skills is higher for personnel selection than for student selection.

Procedural non-cognitive criteria

Interviews were the most common non-cognitive selection criterion with 80 occurrences. Interviews have a positive effect on performance in 63% of studies on student selection and 79% of studies on personnel selection. Therefore, interviews seem to be a more suitable selection criterion in personnel selection. This could be attributed to non-cognitive and interpersonal skills being more important in a professional environment. While interviews might be a less effective predictor of student success, the students do need the skills tested in interviews during their later careers. However, while interviews have a positive effect, unstructured one-on-one interviews have a low predictive validity for both students and personnel. The majority of studies reporting a positive effect studied specific types of interviews, such as the multiple mini-interview or the structured situational interview (Campion, Campion, and Hudson 1994; Reiter et al. 2007; Eva et al. 2012; Husbands and Dowell 2013).

Special admission procedures are exclusively found in articles on student selection. In 56% of the articles, these procedures positively affect performance; in 8%, a negative effect is found; and in 36%, no effect is found. This means that if higher education institutes and human resource departments want to increase diversity in their organization using a special admission procedure, there is a substantial risk that they end up selecting applicants with lower performance.

Recommendation letters receive far less attention in academic literature, as they are only studied nine times. This is somewhat surprising, given that universities consider them an important selection criterion (Steenman 2018). In terms of predictive validity, the results are mixed. Recommendation letters are seen three times in student selection and six times in personnel selection. In both student and personnel selection, the percentage of positive cases was 67%.

Demographic criteria

Gender is the second most studied non-cognitive criterion. Women tend to perform better in both student and personnel selection. However, the percentage of positive studies on student selection is much lower than that on personnel selection (66% versus 100%). In the field of student selection, we also found seven studies (15%) that report men as performing better and an additional nine studies (19%) that report no significant effect. The field of study does not have a consistent impact on the success of either female or male students.

Students from underprivileged backgrounds were studied 34 times, whereas personnel from underprivileged backgrounds were not studied. Of these 34 studies, 38% reported a positive effect on performance, 35% reported a negative effect, and 27% saw no effect. This means that there is little consensus about the effect of the background of the applicant on performance.

Finally, with regard to the age of applicants, we see that the older applicants performed much better in both student and personnel selections.

Personality-related criteria

Personality tests were studied a total of 48 times, 40 of which were in the field of personnel selection. This makes it the most commonly studied non-cognitive criterion in the field of personnel selection. Ninety percent of studies in personnel selection report a positive effect on performance, compared to 62.5% in student selection, a sizeable difference. The most commonly used personality test, the Big Five, consists of conscientiousness, extraversion, openness, agreeableness and neuroticism. As with standardized tests, the predictive validity of the entire test can differ greatly from the individual dimensions’ predictive validity. For these tests, conscientiousness was often a very good predictor of student success, and neuroticism was often a negative predictor of student success. Conscientiousness was also studied as a separate criterion in 13 studies, 12 of them in the personnel selection literature. All of these studies report a positive predictive validity. Motivation is studied 4 times in terms of student selection and 10 times in personnel selection; the predictive validity is 50% and 100%, respectively. However, given the small number of cases, more work is necessary. We end with a selection criterion that we find exclusively in personnel selection literature, and where all studies report a positive predictive validity: core-self-evaluation, with five occurrences.

Experience of the applicant

Previous experience through an internship, a previous job or a previous degree is generally a good predictor of performance. This is especially true regarding personnel selection, where this criterion has a positive predictive validity in 89% of articles; in student selection, this percentage is 76%. This could be attributed to students (especially undergraduates) requiring less specialized knowledge, which makes previous experience less important. Person-organization fit (PO-fit) is also a positive predictor of performance, especially in personnel selection, where it has a 100% predictive validity. A notable limitation of the robustness of this variable is that fit and observed employee performance are often expressed through supervisor ratings, which have a risk of bias.

Skills and capabilities

Finally, we discuss the non-cognitive selection criteria that measure the applicant’s skills and capabilities. These include psychomotor skills, non-cognitive skills, emotional intelligence (EI), critical thinking and polychronicity.

Psychomotor skills are measured 17 times and are often used as a selection criterion for medical students and personnel. Of all studies on student selection, 55% report a positive effect on performance, compared to 86% in personnel selection, making this yet another non-cognitive criterion with a higher predictive validity in personnel versus student selection. Generic non-cognitive skills were studied 14 times in student selection, where they have a positive effect on performance in 93% of studies. With regard to personnel selection, generic non-cognitive skills were only studied twice, but both studies reported a positive effect on performance. Emotional intelligence was studied four times in student selection, with one positive study, one negative study and two studies that reported no effect. In personnel selection, EI was studied 24 times, with 23 studies (96%) reporting a positive effect on performance. The next criterion, critical thinking, has a predictive validity of 100% in personnel selection; however, this is based on a single study. Regarding student selection, we found eight studies, 75% of which reported a positive effect on performance. Polychronicity was studied four times in our dataset. All of these were in personnel selection and reported a positive effect on job performance.

Criteria that can improve student selection

Which insights from the personnel selection literature can add to the student selection literature? For a selection criterion to offer meaningful lessons, it must be scarcely studied in the student selection literature and often studied in the personnel selection literature. Moreover, it must have positive predictive validity. Five criteria fit this description: personality tests, conscientiousness, PO-fit, core-self-evaluation and polychronicity. GMA may also fit, but we expect this criterion to have little added value over other cognitive criteria as both measure the applicant’s cognitive abilities, and cognitive criteria have already been widely studied in student selection literature.

Personality tests could help universities select students who possess the traits needed in the educational program of their choice or in their future careers. For some students, conscientiousness could be a highly necessary personality trait; for others, extraversion might be essential. However, this requires a careful analysis by universities regarding which personality traits are needed to succeed in the study program; otherwise, the risk of wrongful implementation increases. Furthermore, personality tests can be subject to fraud and faking (Connelly and Ones 2010). Applicants might know what the desired outcomes of such tests are and answer accordingly. This risk is high in many personality tests because they ask applicants to rate their own personality traits, which can lead to socially desirable answering and a false representation of an applicant’s personality. Accordingly, several meta-analyses have found that observer ratings of personality traits have higher predictive validity than self-reported scores (Connelly and Ones 2010; Oh, Wang, and Mount 2011). However, forming a reliable and truthful observer rating of a student requires time and intense personal contact. This strengthens the case for careful implementation of personality tests, should universities decide to use them. At the same time, we found strong evidence that conscientiousness, when used as a separate criterion, is an effective predictor of job performance. It may prove useful for universities to expand the possibilities for selecting students based partly on their conscientiousness, without submitting students to an entire personality test.

The insights from the personnel selection literature on PO-fit could prove a useful addition to the field of student selection because this criterion has not yet been studied among students in our dataset and has a 100% positive score in the personnel selection studies. Prior research has found that students flourish in academic environments that match their personality (Rocconi, Liu, and Pike 2020). PO-fit for students can be tested by having applicants participate in a day of ‘onboarding’ to ascertain whether applicants fit in well with the organization and whether the organization matches the preferences of the applicants. Onboarding trials have already been conducted at universities, often with positive results (Niessen, Meijer, and Tendeiro 2016).

Finally, core-self-evaluation remains a potentially fruitful selection criterion for students. For this criterion to work, universities must find ways of allowing students to critically reflect on their self-efficacy, self-esteem and locus of control. This could be done in structured interviews, but written documents such as motivational letters can also be used for this purpose. A noteworthy challenge is that self-esteem and self-efficacy are far from static. Scholars have argued that these concepts can change rapidly, perhaps even over the course of a few minutes (Judge and Bono 2001). This is because external events, such as peer feedback or job rewards, profoundly impact the outcome of a core-self-evaluation. Despite these flaws, core-self-evaluations measured at a single moment are still positive predictors of job performance, which makes them at least interesting to include in the student selection process. One explanation for the absence of these criteria in the student selection literature could be that the non-cognitive skills they test are more critical for personnel. However, reflective skills and multi-tasking are also needed by successful students (Taub et al. 2014).

Research agenda

Going beyond these promising non-cognitive criteria, we propose further points for a research agenda on non-cognitive student selection. Few studies examine the predictive validity of motivation letters and personal statements in either student or personnel selection. Given that applicants to both jobs and universities are often required to write such a letter, more knowledge on the predictive validity of these letters is needed. Current research on motivation letters often measures letter quality through observer ratings (Salvatori 2001). However, the inter-rater reliability of these ratings is often low. For future research, we suggest the use of methodologies from the computational sciences, such as text mining and natural language processing (Blei, Ng, and Jordan 2003; Chowdhury 2005). Such measures can yield a more robust depiction of the quality of motivation letters, as was shown by Pennebaker et al. (2014). Structuring motivation letters could also help: structured interviews have higher predictive validity, as shown by several empirical studies (Reiter et al. 2007), and the same approach may raise the predictive validity of motivation letters.
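
To illustrate the text-mining suggestion above, the following is a minimal sketch of how a motivation letter could be turned into a few reproducible lexical features. It is not the method of any study cited here. The function name `letter_features` and the two tiny word lists are hypothetical and chosen only for illustration; a real study would rely on a validated lexicon and a far richer feature set. The point is simply that the same text always yields the same scores, which sidesteps the inter-rater reliability problem of human ratings.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny word lists (assumption, for illustration only).
ARTICLES = {"a", "an", "the"}
PRONOUNS = {"i", "me", "my", "we", "our", "you", "he", "she", "they", "it"}


def letter_features(text: str) -> dict:
    """Return simple lexical features for one motivation letter."""
    # Lowercase the text and keep alphabetic tokens (apostrophes allowed).
    tokens = re.findall(r"[a-z']+", text.lower())
    n = len(tokens)
    if n == 0:
        # An empty letter gets neutral scores instead of a division by zero.
        return {"n_words": 0, "article_rate": 0.0,
                "pronoun_rate": 0.0, "type_token_ratio": 0.0}
    counts = Counter(tokens)
    return {
        "n_words": n,
        # Share of tokens that are articles or pronouns, respectively.
        "article_rate": sum(counts[w] for w in ARTICLES) / n,
        "pronoun_rate": sum(counts[w] for w in PRONOUNS) / n,
        # Distinct words divided by total words: a crude lexical diversity measure.
        "type_token_ratio": len(counts) / n,
    }


if __name__ == "__main__":
    sample = "I want the degree because the program fits my goals"
    print(letter_features(sample))
```

Features of this kind could then be related to later student success in the same way as any other selection criterion; whether they have predictive validity remains an empirical question.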

For some selection criteria, we found only a relatively small number of empirical articles. We hope that by making explicit which selection criteria are understudied, we may encourage scholars to help fill the remaining knowledge gaps. Our results also show that the student selection literature is dominated by research into undergraduate medical students. This is problematic since these students require different knowledge from students in other fields and are selected using different selection criteria. Therefore, we suggest more research into graduate students in other fields.

Discussion and conclusions

Limitations

One limitation of this study is the uneven distribution of empirical studies across the two literature strands. Studies on student selection are more common in our dataset than studies on personnel selection; there are several possible explanations for this. One explanation is that universities are under more intense scrutiny to ensure that their selection is evidence-based. Another explanation is that empirical studies on selection are easier to execute for students. Data are easily accessible, and there are many ready-to-go success measures; this is more complex for personnel.

Assessing promising selection criteria and research agenda

This literature review answers the following research question: to what extent can the personnel selection literature complement the state-of-the-art literature regarding student selection? We expected the added value of the personnel selection literature to lie in more knowledge on selecting based on non-cognitive criteria, and we find that personality tests, conscientiousness, PO-fit, core-self-evaluation and polychronicity are indeed promising non-cognitive criteria for student selection because of their high predictive validity in personnel selection. Implementing these criteria mitigates the scarcity of well-studied non-cognitive selection criteria in student selection. Including these criteria can lead to a more accurate assessment of students’ non-cognitive skills, which are becoming more important in higher education. The overall quality of selection improves when non-cognitive skills are better assessed during selection, leading to higher student success. However, for this to happen, careful implementation of these selection criteria is important because they are not without risks or drawbacks. An important facet of a valid implementation is that higher education institutes should carefully assess which skills and knowledge applicants need to be successful in the study program. These skills need to be measured accurately by the selection criterion. In other words, there needs to be alignment between the knowledge and skills needed in the study program and the knowledge and skills as measured by the criterion (Steenman 2018).

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Timon de Boer

References

- Alyahyan, E., and D. Düştegör. 2020. “Predicting Academic Success in Higher Education: Literature Review and Best Practices.” International Journal of Educational Technology in Higher Education 17 (1): 3. doi:https://doi.org/10.1186/s41239-020-0177-7.

- Anderton, R., G. Hine, and C. Joyce. 2017. “Secondary School Mathematics and Science Matters: Academic Performance for Secondary Students Transitioning into University Allied Health and Science Courses.” International Journal of Innovation in Science and Mathematics Education 25. https://researchonline.nd.edu.au/health_article/169.

- Begg, C. B., and M. Mazumdar. 1994. “Operating Characteristics of a Rank Correlation Test for Publication Bias.” Biometrics 50 (4): 1088–1101. https://www.jstor.org/stable/2533446. doi:https://doi.org/10.2307/2533446.

- Behling, O. 1998. “Employee Selection: Will Intelligence and Conscientiousness Do the Job?” Academy of Management Perspectives 12 (1): 77–86. doi:https://doi.org/10.5465/ame.1998.254980.

- Berne, S., A. Frisén, A. Schultze-Krumbholz, H. Scheithauer, K. Naruskov, P. Luik, C. Katzer, R. Erentaite, and R. Zukauskiene. 2013. “Cyberbullying Assessment Instruments: A Systematic Review.” Aggression and Violent Behavior 18 (2): 320–334. doi:https://doi.org/10.1016/j.avb.2012.11.022.

- Blei, D. M., A. Y. Ng, and M. I. Jordan. 2003. “Latent Dirichlet Allocation.” Journal of Machine Learning Research 3.

- Botha, A. E., J. H. Owen, and C. M. E. McCrindle. 2003. “Mathematics at Matriculation Level as an Indicator of Success or Failure in the 1st Year of the Veterinary Nursing Diploma at the Faculty of Veterinary Science, University of Pretoria.” Journal of the South African Veterinary Association 74 (4): 132–134. https://www.ingentaconnect.com/content/sabinet/savet/2003/00000074/00000004/art00007. doi:https://doi.org/10.4102/jsava.v74i4.526.

- Brennan, J. 1985. “Preparing Students for Employment.” Studies in Higher Education 10 (2): 151–162. doi:https://doi.org/10.1080/03075078512331378569.

- Campbell, J. P. 1990. “Modeling the Performance Prediction Problem in Industrial and Organizational Psychology.” In Handbook of Industrial and Organizational Psychology.

- Campion, M. A., J. E. Campion, and J. P. Hudson. 1994. “Structured Interviewing: A Note on Incremental Validity and Alternative Question Types.” Journal of Applied Psychology 79 (6): 998–1002. doi:https://doi.org/10.1037/0021-9010.79.6.998.

- CBS. 2020. “Statline.” Cbs.Nl.

- Cerdeira, J. M., L. C. Nunes, A. B. Reis, and C. Seabra. 2018. “Predictors of Student Success in Higher Education: Secondary School Internal Scores versus National Exams.” Higher Education Quarterly 72 (4): 304–313. doi:https://doi.org/10.1111/hequ.12158.

- Chowdhury, G. G. 2005. “Natural Language Processing.” Annual Review of Information Science and Technology 37 (1): 51–89. doi:https://doi.org/10.1002/aris.1440370103.

- Clarke, M. 2018. “Rethinking Graduate Employability: The Role of Capital, Individual Attributes and Context.” Studies in Higher Education 43 (11): 1923–1937. doi:https://doi.org/10.1080/03075079.2017.1294152.

- Conn, K. M., C. Birnie, D. McCaffrey, and J. Brown. 2018. “The Relationship between Prior Experiences in Mathematics and Pharmacy School Success.” American Journal of Pharmaceutical Education 82 (4): 6257. doi:https://doi.org/10.5688/ajpe6257.

- Connelly, B. S., and D. S. Ones. 2010. “An Other Perspective on Personality: Meta-Analytic Integration of Observers’ Accuracy and Predictive Validity.” Psychological Bulletin 136 (6): 1092–1122. doi:https://doi.org/10.1037/a0021212.

- Connolly, T. M., E. A. Boyle, E. MacArthur, T. Hainey, and J. M. Boyle. 2012. “A Systematic Literature Review of Empirical Evidence on Computer Games and Serious Games.” Computers & Education 59 (2): 661–686. doi:https://doi.org/10.1016/j.compedu.2012.03.004.

- Cope, M. K., H. H. Baker, R. Fisk, J. N. Gorby, and R. W. Foster. 2001. “Prediction of Student Performance on the Comprehensive Osteopathic Medical Licensing Examination Level I Based on Admission Data and Course Performance.” The Journal of the American Osteopathic Association 101 (2): 84–90. https://www.semanticscholar.org/paper/Prediction-of-student-performance-on-the-Medical-I-Cope-Baker/590a66d698188fcc48673ba4a4ead278e812abee.

- Costas, R., T. N. van Leeuwen, and M. Bordons. 2010. “A Bibliometric Classificatory Approach for the Study and Assessment of Research Performance at the Individual Level: The Effects of Age on Productivity and Impact.” Journal of the American Society for Information Science and Technology 61 (8): n/a–n/a. doi:https://doi.org/10.1002/asi.21348.

- Credé, M., and S. Niehorster. 2012. “Adjustment to College as Measured by the Student Adaptation to College Questionnaire: A Quantitative Review of Its Structure and Relationships with Correlates and Consequences.” Educational Psychology Review 24 (1): 133–165. doi:https://doi.org/10.1007/s10648-011-9184-5.

- Crisp, G., and I. Cruz. 2009. “Mentoring College Students: A Critical Review of the Literature Between 1990 and 2007.” Research in Higher Education 50 (6): 525–545. doi:https://doi.org/10.1007/sl.

- d’Hombres, B., and S. V. Schnepf. 2021. “International Mobility of Students in Italy and the UK: Does It Pay off and for Whom?” Higher Education: 1–22. doi:https://doi.org/10.1007/s10734-020-00631-1.

- Da Silva, N., J. Hutcheson, and G. D. Wahl. 2010. “Organizational Strategy and Employee Outcomes: A Person-Organization Fit Perspective.” The Journal of Psychology 144 (2): 145–161. doi:https://doi.org/10.1080/00223980903472185.

- De Visser, M., C. Fluit, J. Cohen-Schotanus, and R. Laan. 2018. “The Effects of a Non-Cognitive versus Cognitive Admission Procedure within Cohorts in One Medical School.” Advances in Health Sciences Education 23 (1): 187–200. doi:https://doi.org/10.1007/s10459-017-9782-1.

- Debusscher, J., J. Hofmans, and F. De Fruyt. 2015. “The Effect of State Core Self-Evaluations on Task Performance, Organizational Citizenship Behaviour, and Counterproductive Work Behaviour.” European Journal of Work and Organizational Psychology. doi:https://doi.org/10.1080/1359432X.2015.1063486.

- Duval, S., and R. Tweedie. 2000. “A Nonparametric “Trim and Fill” Method of Accounting for Publication Bias in Meta-Analysis.” Journal of the American Statistical Association 95 (449): 89–98. doi:https://doi.org/10.1080/01621459.2000.10473905.

- Eva, K. W., H. I. Reiter, J. Rosenfeld, K. Trinh, T. J. Wood, and G. R. Norman. 2012. “Association between a Medical School Admission Process Using the Multiple Mini-Interview and National Licensing Examination Scores.” JAMA 308 (21): 2233–2240. doi:https://doi.org/10.1001/jama.2012.36914.

- Eva, K. W., H. I. Reiter, K. Trinh, P. Wasi, J. Rosenfeld, and G. R. Norman. 2009. “Predictive Validity of the Multiple Mini-Interview for Selecting Medical Trainees.” Medical Education 43 (8): 767–775. doi:https://doi.org/10.1111/j.1365-2923.2009.03407.x.

- Fokkens-Bruinsma, M., C. Vermue, J.-F. Deinum, and E. van Rooij. 2020. “First-Year Academic Achievement: The Role of Academic Self-Efficacy, Self-Regulated Learning and beyond Classroom Engagement.” Assessment & Evaluation in Higher Education: 1–12. doi:https://doi.org/10.1080/02602938.2020.1845606.

- Galla, B. M., E. P. Shulman, B. D. Plummer, M. Gardner, S. J. Hutt, J. P. Goyer, S. K. D’Mello, A. S. Finn, and A. L. Duckworth. 2019. “Why High School Grades Are Better Predictors of on-Time College Graduation than Are Admissions Test Scores: The Roles of Self-Regulation and Cognitive Ability.” American Educational Research Journal 56 (6): 2077–2115. doi:https://doi.org/10.3102/0002831219843292.

- Glaser, K., M. Hojat, J. J. Veloski, R. S. Blacklow, and C. E. Goepp. 1992. “Science, Verbal, or Quantitative Skills: Which is the Most Important Predictor of Physician Competence?” Educational and Psychological Measurement 52 (2): 395–406. doi:https://doi.org/10.1177/0013164492052002015.

- Goodyear, N., and M. F. Lampe. 2004. “Standardized Test Scores as an Admission Requirement.” Clinical Laboratory Science: Journal of the American Society for Medical Technology 17 (1): 19–24. http://www.ncbi.nlm.nih.gov/pubmed/15011976.

- Griffin, M. A., A. Neal, and S. K. Parker. 2007. “A New Model of Work Role Performance: Positive Behavior in Uncertain and Interdependent Contexts.” Academy of Management Journal 50 (2): 327–347. doi:https://doi.org/10.5465/amj.2007.24634438.

- Hamp-Lyons, L. 1998. “Ethical Test Preparation Practice: The Case of the TOEFL.” TESOL Quarterly 32 (2): 329. doi:https://doi.org/10.2307/3587587.

- Hoffman, J. L., and K. E. Lowitzki. 2005. “Predicting College Success with High School Grades and Test Scores: Limitations for Minority Students.” The Review of Higher Education 28 (4): 455–474. doi:https://doi.org/10.1353/rhe.2005.0042.

- Hogan, R., J. Hogan, and B. W. Roberts. 1996. “Personality Measurement and Employment Decisions.” American Psychologist 51 (5): 469–477. doi:https://doi.org/10.1037/0003-066X.51.5.469.

- Hughes, D., B. Law, and F. Meijers. 2017. “New School for the Old School: Career Guidance and Counselling in Education.” British Journal of Guidance & Counselling. doi:https://doi.org/10.1080/03069885.2017.1294863.

- Husbands, A., and J. Dowell. 2013. “Predictive Validity of the Dundee Multiple Mini-Interview.” Medical Education 47 (7): 717–725. doi:https://doi.org/10.1111/medu.12193.

- Jackson, D., and R. Bridgstock. 2019. “Evidencing Student Success and Career Outcomes among Business and Creative Industries Graduates.” Journal of Higher Education Policy and Management 41 (5): 451–467. doi:https://doi.org/10.1080/1360080X.2019.1646377.

- Jackson, A. T., and M. C. Frame. 2018. “Stress, Health, and Job Performance: What Do we Know?” Journal of Applied Biobehavioral Research 23 (4): e12147. doi:https://doi.org/10.1111/jabr.12147.

- Johnson, J. W. 2001. “The Relative Importance of Task and Contextual Performance Dimensions to Supervisor Judgments of Overall Performance.” The Journal of Applied Psychology 86 (5): 984–996. doi:https://doi.org/10.1037/0021-9010.86.5.984.

- Jones-White, D. R., P. M. Radcliffe, R. L. Huesman, and J. P. Kellogg. 2010. “Redefining Student Success: Applying Different Multinomial Regression Techniques for the Study of Student Graduation across Institutions of Higher Education.” Research in Higher Education 51 (2): 154–174. doi:https://doi.org/10.1007/sl.

- Judge, T. A., and J. E. Bono. 2001. “Relationship of Core Self-Evaluations Traits-Self-Esteem, Generalized Self-Efficacy, Locus of Control, and Emotional Stability-with Job Satisfaction and Job Performance: A Meta-Analysis.” The Journal of Applied Psychology 86 (1): 80–92. doi:https://doi.org/10.1037/0021-9010.86.1.80.

- Kahu, E. R. 2013. “Framing Student Engagement in Higher Education.” Studies in Higher Education 38 (5): 758–773. doi:https://doi.org/10.1080/03075079.2011.598505.

- Kantrowitz, T. M., D. M. Grelle, J. C. Beaty, and M. B. Wolf. 2012. “Time is Money: Polychronicity as a Predictor of Performance across Job Levels.” Human Performance 25 (2): 114–137. doi:https://doi.org/10.1080/08959285.2012.658926.

- Karabel, J. 2005. The Chosen: The Hidden History of Admission and Exclusion at Harvard, Yale and Princeton. Houghton Mifflin Company.

- Kuh, G. D., J. Kinzie, J. H. Schuh, and E. J. Whitt. 2011. “Fostering Student Success in Hard Times.” Change: The Magazine of Higher Learning 43 (4): 13–19. doi:https://doi.org/10.1080/00091383.2011.585311.

- Kuh, G. D., J. Kinzie, J. A. Buckley, B. K. Bridges, and J. C. Hayek. 2007. Piecing Together the Student Success Puzzle: Research, Propositions, and …. https://books.google.nl/books?hl=en&lr=&id=E2Y15q5bpCoC&oi=fnd&pg=PR7&dq=student+success+defined&ots=ZxINljxrKN&sig=rvxsWCBH_MWjvBh4p4-oRMMQuwc#v=onepage&q=studentsuccessdefined&f=false

- Kuncel, N. R., M. Credé, and L. L. Thomas. 2005. “The Validity of Self-Reported Grade Point Averages, Class Ranks, and Test Scores: A Meta-Analysis and Review of the Literature.” Review of Educational Research 75 (1): 63–82. doi:https://doi.org/10.3102/00346543075001063.

- Kuncel, N. R., S. A. Hezlett, and D. S. Ones. 2001. “A Comprehensive Meta-Analysis of the Predictive Validity of the Graduate Record Examinations: Implications for Graduate Student Selection and Performance.” Psychological Bulletin 127 (1): 162–181. doi:https://doi.org/10.1037/0033-2909.127.1.162.

- Lehmann, S., A. D. Jackson, and B. E. Lautrup. 2008. “A Quantitative Analysis of Indicators of Scientific Performance.” Scientometrics 76 (2): 369–390. doi:https://doi.org/10.1007/s11192-007-1868-8.

- Madigan, V. 2006. “Predicting Prehospital Care Students’ First-Year Academic Performance.” Prehospital Emergency Care: Official Journal of the National Association of EMS Physicians and the National Association of State EMS Directors 10 (1): 81–88. doi:https://doi.org/10.1080/10903120500366037.

- Mayring, P. 2000. “Qualitative Content Analysis.” Forum Qualitative Sozialforschung/Forum: Qualitative Social Research 1 (2). http://www.qualitative-research.net/index.php/fqs/article/view/1089/2385.

- McManus, I. C., E. Ferguson, R. Wakeford, D. Powis, and D. James. 2011. “Predictive Validity of the Biomedical Admissions Test: An Evaluation and Case Study.” Medical Teacher 33 (1): 53–57. doi:https://doi.org/10.3109/0142159X.2010.525267.

- Meyers, C. 1986. Teaching Students to Think Critically. San Francisco, CA: Jossey-Bass. https://eric.ed.gov/?id=ED280362

- Moore, T., and J. Morton. 2017. “The Myth of Job Readiness? Written Communication, Employability, and the ‘Skills Gap’ in Higher Education.” Studies in Higher Education 42 (3): 591–609. doi:https://doi.org/10.1080/03075079.2015.1067602.

- Morin, L., and S. Renaud. 2009. “Participation in Corporate University Training: Its Effect on Individual Job Performance.” Canadian Journal of Administrative Sciences/Revue Canadienne Des Sciences de L’Administration 21 (4): 295–306. doi:https://doi.org/10.1111/j.1936-4490.2004.tb00346.x.

- Nagy, M., and R. Molontay. 2021. “Comprehensive Analysis of the Predictive Validity of the University Entrance Score in Hungary.” Assessment & Evaluation in Higher Education: 1–19. doi:https://doi.org/10.1080/02602938.2021.1871725.

- Nicholls, D., L. Sweet, and J. Hyett. 2014. “Psychomotor Skills in Medical Ultrasound Imaging: An Analysis of the Core Skill Set.” Journal of Ultrasound in Medicine: Official Journal of the American Institute of Ultrasound in Medicine 33 (8): 1349–1352. doi:https://doi.org/10.7863/ultra.33.8.1349.

- Niessen, A. S. M., and R. R. Meijer. 2017. “On the Use of Broadened Admission Criteria in Higher Education.” Perspectives on Psychological Science: A Journal of the Association for Psychological Science 12 (3): 436–448. doi:https://doi.org/10.1177/1745691616683050.

- Niessen, A. S. M., R. R. Meijer, and J. N. Tendeiro. 2016. “Predicting Performance in Higher Education Using Proximal Predictors.” PLoS ONE 11 (4): e0153663. doi:https://doi.org/10.1371/journal.pone.0153663.

- O’Connell, M. S., N. S. Hartman, M. A. McDaniel, W. L. Grubb, and A. Lawrence. 2007. “Incremental Validity of Situational Judgment Tests for Task and Contextual Job Performance.” International Journal of Selection and Assessment 15 (1): 19–29. doi:https://doi.org/10.1111/j.1468-2389.2007.00364.x.

- Oh, I. S., G. Wang, and M. K. Mount. 2011. “Validity of Observer Ratings of the Five-Factor Model of Personality Traits: A Meta-Analysis.” Journal of Applied Psychology 96 (4): 762–773. doi:https://doi.org/10.1037/a0021832.

- Ohme, M., and H. Zacher. 2015. “Job Performance Ratings: The Relative Importance of Mental Ability, Conscientiousness, and Career Adaptability.” Journal of Vocational Behavior. doi:https://doi.org/10.1016/j.jvb.2015.01.003.

- Pennebaker, J. W., C. K. Chung, J. Frazee, G. M. Lavergne, and D. I. Beaver. 2014. “When Small Words Foretell Academic Success: The Case of College Admissions Essays.” PLoS ONE 9 (12): e115844. doi:https://doi.org/10.1371/journal.pone.0115844.

- Perkmann, M., V. Tartari, M. Mckelvey, E. Autio, A. Broström, P. D’este, R. Fini, et al. 2013. “Academic Engagement and Commercialisation: A Review of the Literature on University-Industry Relations.” Research Policy 42 (2): 423–442. doi:https://doi.org/10.1016/j.respol.2012.09.007.

- Pitman, T. 2016. “Understanding ‘Fairness’ in Student Selection: Are There Differences and Does It Make a Difference Anyway?” Studies in Higher Education 41 (7): 1203–1216. doi:https://doi.org/10.1080/03075079.2014.968545.

- Pittaway, L., and J. Cope. 2007. “Entrepreneurship Education: A Systematic Review of the Evidence.” International Small Business Journal: Researching Entrepreneurship 25 (5): 479–510. doi:https://doi.org/10.1177/0266242607080656.

- Reiter, H. I., K. W. Eva, J. Rosenfeld, and G. R. Norman. 2007. “Multiple Mini-Interviews Predict Clerkship and Licensing Examination Performance.” Medical Education 41 (4): 378–384. doi:https://doi.org/10.1111/j.1365-2929.2007.02709.x.

- Rhodes, M. L., B. Bullough, and J. Fulton. 1994. “The Graduate Record Examination as an Admission Requirement for the Graduate Nursing Program.” Journal of Professional Nursing: Official Journal of the American Association of Colleges of Nursing 10 (5): 289–296. doi:https://doi.org/10.1016/8755-7223(94)90054-X.

- Roberts, B. W., J. J. Jackson, J. V. Fayard, G. Edmonds, and J. Meints. 2009. “Conscientiousness.” In Handbook of Individual Differences in Social Behavior, edited by M. R. Leary and R. H. Hoyle.

- Rocconi, L. M., X. Liu, and G. R. Pike. 2020. “The Impact of Person-Environment Fit on Grades, Perceived Gains, and Satisfaction: An Application of Holland’s Theory.” Higher Education 80 (5): 857–874. doi:https://doi.org/10.1007/s10734-020-00519-0.

- Salovey, P., and J. D. Mayer. 1990. “Emotional Intelligence.” Imagination, Cognition and Personality 9 (3): 185–211. doi:https://doi.org/10.2190/DUGG-P24E-52WK-6CDG.

- Salvatori, P. 2001. “Reliability and Validity of Admissions Tools Used to Select Students for the Health Professions.” Advances in Health Sciences Education 6 (2): 159–175. https://link.springer.com/content/pdf/10.1023%2FA%3A1011489618208.pdf. doi:https://doi.org/10.1023/A:1011489618208.

- Schmidt, F. L., and J. E. Hunter. 1998. “The Validity and Utility of Selection Methods in Personnel Psychology: Practical and Theoretical Implications of 85 Years of Research Findings.” Psychological Bulletin 124 (2): 262–274. doi:https://doi.org/10.1037/0033-2909.124.2.262.

- Schmidt, F. L., D. S. Ones, and J. E. Hunter. 1992. “Personnel Selection.” Annual Review of Psychology 43. www.annualreviews.org.

- Sladek, R. M., M. J. Bond, L. K. Frost, and K. N. Prior. 2016. “Predicting Success in Medical School: A Longitudinal Study of Common Australian Student Selection Tools.” BMC Medical Education 16 (1): 187. doi:https://doi.org/10.1186/s12909-016-0692-3.

- State, K., and M. Hudson. 2019. “Accessibility and Pressures of a Growing Student Population on Urban Green Spaces.” Meliora: International Journal of Student Sustainability Research 2 (1). doi:https://doi.org/10.22493/Meliora.2.1.0016.

- Steenman, S. 2018. Alignment of Admission: An Exploration and Analysis of the Links between Learning Objectives and Selective Admission to Programmes in Higher Education. Wilco BV.

- Suleman, F. 2018. “The Employability Skills of Higher Education Graduates: Insights into Conceptual Frameworks and Methodological Options.” Higher Education 76 (2): 263–278. doi:https://doi.org/10.1007/s10734-017-0207-0.

- Sun, V. J., and M. Yuen. 2012. “Career Guidance and Counseling for University Students in China.” International Journal for the Advancement of Counselling 34 (3): 202–210. doi:https://doi.org/10.1007/s10447-012-9151-y.

- Taub, M., R. Azevedo, F. Bouchet, and B. Khosravifar. 2014. “Can the Use of Cognitive and Metacognitive Self-Regulated Learning Strategies Be Predicted by Learners’ Levels of Prior Knowledge in Hypermedia-Learning Environments?” Computers in Human Behavior 39: 356–367. doi:https://doi.org/10.1016/j.chb.2014.07.018.

- Te Wierik, M. L. J., J. Beishuizen, and W. van Os. 2015. “Career Guidance and Student Success in Dutch Higher Vocational Education.” Studies in Higher Education 40 (10): 1947–1961. doi:https://doi.org/10.1080/03075079.2014.914905.

- Tett, R. P., and N. D. Christiansen. 2007. “Personality Tests at the Crossroads: A Response to Morgeson, Campion, Dipboye, Hollenbeck, Murphy, and Schmitt (2007).” Personnel Psychology 60 (4): 967–993. doi:https://doi.org/10.1111/j.1744-6570.2007.00098.x.

- Thoemmes, F. J., and E. S. Kim. 2011. “A Systematic Review of Propensity Score Methods in the Social Sciences.” Multivariate Behavioral Research 46 (1): 90–118. doi:https://doi.org/10.1080/00273171.2011.540475.

- Thomas, L. L., N. R. Kuncel, and M. Credé. 2007. “Noncognitive Variables in College Admissions the Case of the Non-Cognitive Questionnaire.” Educational and Psychological Measurement 67 (4): 635–657. doi:https://doi.org/10.1177/0013164406292074.

- Thornton, A., and P. Lee. 2000. “Publication Bias in Meta-Analysis: Its Causes and Consequences.” Journal of Clinical Epidemiology 53 (2): 207–216. doi:https://doi.org/10.1016/S0895-4356(99)00161-4.

- Trapmann, S., B. Hell, J. O. W. Hirn, and H. Schuler. 2007. “Meta-Analysis of the Relationship between the Big Five and Academic Success at University.” Zeitschrift Für Psychologie/Journal of Psychology 215 (2): 132–151. doi:https://doi.org/10.1027/0044-3409.215.2.132.

- van der Zanden, P. J. A. C., E. Denessen, A. H. Cillessen, and P. C. Meijer. 2018. “Domains and Predictors of First-Year Student Success: A Systematic Review.” Educational Research Review 23: 57–77. doi:https://doi.org/10.1016/j.edurev.2018.01.001.

- van Ooijen-van der Linden, L., M. J. van der Smagt, L. Woertman, and S. F. Te Pas. 2017. “Signal Detection Theory as a Tool for Successful Student Selection.” Assessment and Evaluation in Higher Education 42 (8). doi:https://doi.org/10.1080/02602938.2016.1241860.

- Viswesvaran, C., and D. S. Ones. 2002. “Agreements and Disagreements on the Role of General Mental Ability (GMA) in Industrial.” Human Performance 15 (1-2): 211–231. doi:https://doi.org/10.1080/08959285.2002.9668092.

- Viswesvaran, C., D. S. Ones, and F. L. Schmidt. 1996. “Comparative Analysis of the Reliability of Job Performance Ratings.” Journal of Applied Psychology 81 (5): 557–574. doi:https://doi.org/10.1037/0021-9010.81.5.557.

- Vulperhorst, J., C. Lutz, R. de Kleijn, and J. van Tartwijk. 2018. “Disentangling the Predictive Validity of High School Grades for Academic Success in University.” Assessment & Evaluation in Higher Education 43 (3): 399–414. doi:https://doi.org/10.1080/02602938.2017.1353586.

- Wiersma, U. J., and R. Kappe. 2017. “Selecting for Extroversion but Rewarding for Conscientiousness.” European Journal of Work and Organizational Psychology 26 (2): 314–323. doi:https://doi.org/10.1080/1359432X.2016.1266340.

- Wood, P. S., W. L. Smith, E. M. Altmaier, V. S. Tarico, and E. A. Franken. 1990. “A Prospective Study of Cognitive and Noncognitive Selection Criteria as Predictors of Resident Performance.” Investigative Radiology 25 (7): 855–859. http://www.ncbi.nlm.nih.gov/pubmed/2391201. doi:https://doi.org/10.1097/00004424-199007000-00018.

- York, T. T., C. Gibson, and S. Rankin. 2015. “Defining and Measuring Academic Success.” Practical Assessment, Research, and Evaluation 20. doi:https://doi.org/10.7275/hz5x-tx03.