Abstract

Several times each year, the teaching performance of academics at higher education institutions is evaluated through anonymous, online student evaluation of teaching (SET) surveys. Universities use SETs to inform decisions about staff promotion and tenure, but low student participation levels make the surveys impractical for this use. This scoping review aims to explore student motivations, perceptions and opinions regarding SET survey completion. Five EBSCO® databases were searched using keywords. Thematic analysis of a meta-synthesis of qualitative findings derived from 21 papers identified five themes: (i) the value students place on SET, (ii) the knowledge that SET responses are acted upon to improve teaching, (iii) assurance of survey confidentiality and anonymity, (iv) incentives for completing SET, and (v) survey design and timing of survey release. Perceptions, knowledge and attitudes about the value of SET are essential factors in motivating students to engage with and complete SETs, particularly if surveys are easy to interpret, the time spent completing them is incentivised and responses are valued.

Most higher education institutions rely on anonymous, online student evaluation of teaching (SET) surveys to assess teaching staff performance and appraise the quality of teaching and learning (Cook, Jones, and Al-Twal 2022; Heffernan 2022). Researchers and academics have challenged the validity of anonymous SETs and recommend caution when using them to evaluate teaching quality (Fenn 2015; Lee et al. 2021; Kreitzer and Sweet-Cushman 2022). In the past, SET surveys have produced significantly biased and prejudicial responses towards women and marginalised groups (Kreitzer and Sweet-Cushman 2022). Some have suggested that the relationship between SET ratings and teaching quality is tenuous at best, and that there is generally a poor correlation between SET responses and student learning (Uttl, White, and Gonzalez 2017; Chen 2023).

Anonymous SET surveys enable students to make personalised, prejudicial and offensive comments about teachers and courses with impunity (Clayson 2022; Kreitzer and Sweet-Cushman 2022; Hutchinson et al. 2023). Such commentary can adversely affect the health and wellbeing of teaching staff and lead to accommodations to appease students, which may negatively affect teaching quality (Lakeman et al. 2022a; Lee et al. 2022). The validity of SET survey responses is highly contingent on students being scrupulously honest and insightful, and on their possessing the requisite skills and self-awareness to make constructive comments about the teaching and learning experience (Heffernan and Bosetti 2021).

For at least a century, the teaching provided by higher education academic staff has been assessed using SET (Freyd 1923). Early tools designed to evaluate academic performance overtly included subjective criteria for evaluating personal qualities such as a ‘sense of humour’ (Freyd 1923, p. 434) and ‘personal appearance’ (Smalzreid and Remmers 1943, p. 366). Such superficial issues continue to influence how many students appraise academic performance when providing evaluations of teaching (Read, Rama, and Raghunandan 2001; Chen and Hoshower 2003; Riniolo et al. 2006; Boring, Ottoboni, and Stark 2016). Characteristics such as physical attractiveness (Riniolo et al. 2006; Chen 2023), the perceived humour of the academic and perceived grade leniency influence the aggregated results of SET, and the opportunity to commend or castigate teachers for these attributes may motivate students to participate in SET surveys (Martin 1998; Gump 2007; Chen 2023).

Some students elect not to participate in SET processes for various reasons, including the perception that it will not make any difference to them. Hoel and Dahl (2019) surveyed 689 Norwegian higher education students and found that 30% chose not to participate if the survey was estimated to take longer than five minutes to complete. This effect is likely compounded if students must complete the survey in what they perceive as their own time. El Hassan (2009) surveyed 605 students in Lebanon and found that only 50% believed changes would result from their feedback. Approximately 60% of students in a study conducted across 20 higher education institutions in the USA (n = 597) believed their feedback was unlikely to be read (Kite, Subedi, and Bryant-Lees 2015). These findings offer insight into why some students choose not to participate in SET surveys and why some may be unconcerned about providing constructive comments.

Altruistic motivators of participation in SET, such as wishing to improve learning for future students or recognising academic teaching skills, are well established (Hattie and Timperley 2007; Kite, Subedi, and Bryant-Lees 2015). Participation rates in SET differ depending on whether survey responses are anonymous and whether the mode of delivery is online or face-to-face (Dommeyer et al. 2004). Hollerbach, Sarnecki, and Bechtoldt (2021) suggest anonymity may reverse the perceived balance of power between students and teachers. As a result, different individuals may respond to online surveys, and they may provide different responses. The characteristics of survey respondents may differ considerably from those of the student body in general (Richardson 2005).

Anonymously delivered online surveys increase the opportunity for individuals to engage in trolling-like behaviours, in which students unleash frustrations in unprofessional, non-constructive and offensive ways (Lakeman et al. 2022). Ching (2019) asserts that students use SET to reward or punish academic staff. Fear of retribution or disapproval from students may lead to practices that diminish the quality of the teaching and learning experience. These phenomena may exacerbate the occupational stress experienced by teaching staff and affect their self-esteem and wellbeing (Lee et al. 2022).

Few researchers have examined students’ views regarding participation in SET, and the studies that have investigated this area are inconclusive. Some have found that students generally hold positive opinions and take the process of evaluation seriously (Heine and Maddox 2009; Kite, Subedi, and Bryant-Lees 2015). Others have argued that the emotionally charged comments left by some students breach the trust afforded to them when they are invited to participate (Heffernan 2022). Understanding why students participate in online SETs will deepen understanding of the factors that promote student engagement in the process. It will also support more meaningful construction of SET questions and interpretation of the resulting data, so that informed changes to teaching and learning strategies can be implemented. This scoping review explores student motivations, perceptions and opinions of SET. Additionally, the findings suggest alternative approaches to engaging students in teaching and learning evaluation strategies.

Methodology

A systematic scoping review protocol was registered with the Open Science Framework (https://osf.io/fm98u/). The scoping review was conducted using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR; Tricco et al. 2018). Scoping reviews are a valuable tool for exploring the evidence on a topic that has not previously been systematically explored (Levac, Colquhoun, and O’Brien 2010). In a scoping review, studies with diverse methodologies are included and analysed to collate the current knowledge base, develop best practice processes and identify knowledge gaps. Scoping reviews are helpful for a comprehensive and broad analysis of the literature when exploring an under-examined area of research (Arksey and O’Malley 2005). Thus, a scoping review was identified as the most appropriate methodological approach. The review followed the framework of Arksey and O’Malley (2005), which includes six steps: (i) identifying a question, (ii) identifying relevant studies, (iii) study selection, (iv) data charting and collating, (v) summarising, and (vi) reporting the results. This structured framework ensured transparency in the methodological and analytical decisions undertaken throughout the review.

Stage one: identifying the question

Broad questions, including appropriate key terms, are essential in framing a scoping review, and we aimed for breadth of coverage. We therefore developed the broad research aim: to systematically scope the literature to explore student motivations, perceptions and opinions of SET.

Stage two: identifying the relevant studies

In consultation with a university librarian, two researchers conducted the search independently. The following keywords and search terms were used in the systematic literature search: (‘student evaluation of teaching’ OR ‘student evaluation*’ OR ‘student rating*’ OR ‘student satisfaction’ OR ‘teach* evaluation’ OR ‘teach* effective*’ OR ‘teach* performance’ OR ‘student feedback*’ OR ‘student survey’) AND (‘higher*education’ OR ‘university’ OR ‘college’ OR ‘tertiary’).

Five EBSCO databases were searched: Academic Search Premier, Teacher Reference Centre, Education Research Complete, ERIC and PsycINFO, which were chosen because they comprehensively cover educational and psychological research. The systematic search was conducted in November 2022 for English-language scholarly studies published after 2010, when anonymous online SET surveys became more commonly employed than the paper-based alternative (Baruch and Tal 2019). All articles were screened by title, abstract and full text against the following inclusion criteria: (i) qualitative, quantitative or mixed-methods primary research, (ii) published in English, (iii) reporting the motivations, perceptions or opinions of higher education students eligible to complete SETs, and (iv) focused on anonymous, online SETs. Papers were excluded if they: (i) were a secondary resource (other reviews or meta-analyses), (ii) included only academics’ motivations, perceptions and opinions of SETs, (iii) focused on paper-based or non-anonymised SETs, or (iv) were not related to the higher education sector (see supplementary material for the full table of excluded studies with reasons).

Stage three: study selection

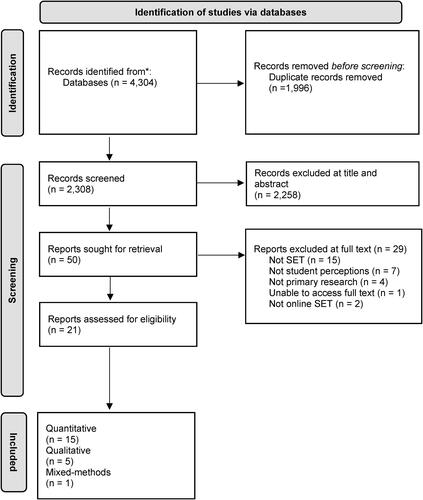

The search retrieved 4,304 articles. Of these, 1,996 were duplicates and were removed, leaving 2,308 articles screened by title and abstract. At this stage, 2,258 articles did not meet the inclusion criteria, and the full texts of the remaining 50 articles were read. Of these, 29 were excluded because they met the exclusion criteria, leaving 21 papers that were included in the review. Figure 1 presents the complete screening and selection process as a PRISMA flow diagram.
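The screening arithmetic reported above can be checked with a short Python sketch. It re-enters only the counts stated in the text; the function name `prisma_flow` is a hypothetical helper introduced for illustration and is not part of any PRISMA tool or template.

```python
# Counts as reported in "Stage three: study selection".
records_identified = 4304
duplicates_removed = 1996
excluded_title_abstract = 2258
excluded_full_text = 29


def prisma_flow(identified, duplicates, excluded_ta, excluded_ft):
    """Hypothetical helper: records remaining after each screening stage."""
    screened = identified - duplicates   # read by title and abstract
    full_text = screened - excluded_ta   # read in full
    included = full_text - excluded_ft   # included in the review
    return {"screened": screened, "full_text": full_text, "included": included}


flow = prisma_flow(records_identified, duplicates_removed,
                   excluded_title_abstract, excluded_full_text)
for stage, n in flow.items():
    print(f"{stage}: {n}")
# screened: 2308
# full_text: 50
# included: 21
```

Each stage subtracts only the records removed at that stage, so the final figure matches the 21 papers included in the review.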

Stage four: charting the data

A data extraction table was developed based on the preliminary scoping phase (see supplementary material). Scoping reviews differ from other types of systematic review in that they do not require a critical appraisal of the reviewed studies. This is generally due to the heterogeneous nature of the study types and methodologies that a scoping review will uncover (Tricco et al. 2018). However, the limitations of each paper were included in the data extraction table so that the reader could identify any apparent lack of quality across the included papers. Data extraction included: (i) author, year and country; (ii) research aim; (iii) study type; (iv) research design; (v) participant characteristics; (vi) institution type; (vii) findings; and (viii) limitations.

Stage five: collating, summarising and reporting the results

In keeping with the scoping review methodology, we extracted and charted the data into predefined meaningful categories, which included study characteristics and the key identified themes. After data extraction, reflexive thematic analysis (Braun and Clarke 2006) was conducted to explore the identified themes within the papers and compare findings across studies. All authors reviewed the themes and agreed on the structure of the findings. The data were collated, summarised and presented as a narrative synthesis.

Results

Study characteristics

Across the 21 studies, 16,561 students were represented. There were 14 cross-sectional studies (Balam and Shannon 2010; Patrick 2011; Backer 2012; Fetzner 2013; Asassfeh et al. 2014; Nasrollahi et al. 2014; Kite, Subedi, and Bryant-Lees 2015; Spooren and Christiaens 2017; McClain, Gulbis, and Hays 2018; Thielsch, Brinkmöller, and Forthmann 2018; Alsmadi 2019; Hoel and Dahl 2019; Cox, Rickard, and Lowery 2022; Suárez Monzón, Gómez Suárez, and Lara Paredes 2022), three focus group studies (Kinash, Knight, and Hives 2011; Ernst 2014; Gupta et al. 2020), and one each of a qualitative Delphi study (Cone et al. 2018), a mixed methods study (Stein et al. 2021), a natural experiment (Cho, Baek, and Cho 2015) and a qualitative longitudinal study (Pettit et al. 2015). Ten studies were conducted in the United States (Balam and Shannon 2010; Patrick 2011; Fetzner 2013; Ernst 2014; Kite, Subedi, and Bryant-Lees 2015; Pettit et al. 2015; Cone et al. 2018; McClain, Gulbis, and Hays 2018; Gupta et al. 2020; Cox, Rickard, and Lowery 2022), two each in Australia (Kinash, Knight, and Hives 2011; Backer 2012) and Jordan (Asassfeh et al. 2014; Alsmadi 2019), and one each in Ecuador (Suárez Monzón, Gómez Suárez, and Lara Paredes 2022), New Zealand (Stein et al. 2021), Iran (Nasrollahi et al. 2014), Korea (Cho, Baek, and Cho 2015), Norway (Hoel and Dahl 2019), Germany (Thielsch, Brinkmöller, and Forthmann 2018) and Belgium (Spooren and Christiaens 2017).
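As a consistency check, the study-design and country breakdowns reported above can each be tallied to confirm that they account for all 21 included studies. This Python sketch simply re-enters the counts from the text; the variable names are illustrative assumptions, not part of the review's data extraction table.

```python
from collections import Counter

# Counts re-entered from "Study characteristics" (illustrative variable names).
designs = Counter({
    "cross-sectional": 14,
    "focus group": 3,
    "qualitative Delphi": 1,
    "mixed methods": 1,
    "natural experiment": 1,
    "qualitative longitudinal": 1,
})
countries = Counter({
    "United States": 10, "Australia": 2, "Jordan": 2, "Ecuador": 1,
    "New Zealand": 1, "Iran": 1, "Korea": 1, "Norway": 1,
    "Germany": 1, "Belgium": 1,
})

TOTAL_STUDIES = 21

# Each breakdown should cover every included study exactly once.
print(sum(designs.values()), sum(countries.values()))  # 21 21
# Share of included studies conducted in the United States.
print(f"{countries['United States'] / TOTAL_STUDIES:.1%}")  # 47.6%
```

The second line makes explicit that just under half of the included studies come from a single country, which is relevant when weighing how far the themes generalise.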

Themes

Five themes were identified that captured the main influences on students’ motivations, perceptions and opinions regarding completing SET surveys: (i) the value students place on SET, (ii) the knowledge that SET responses are acted upon to improve teaching, (iii) assurance of survey confidentiality and anonymity, (iv) the promise of incentives for completing SET, and (v) survey design and timing of survey release.

Theme one: the value students place on SETs

Students’ perceptions, knowledge and attitudes about SET were identified as factors influencing their decisions to participate in SET processes. Students commented that their lack of knowledge about the SET process, and about how SETs were used to improve teaching performance and course content, was a barrier to completing SET surveys (Cone et al. 2018; Hoel and Dahl 2019; Gupta et al. 2020; Stein et al. 2021). Students acknowledged that SET was important for improving teaching and evaluating academic performance, so that student voices were heard (Balam and Shannon 2010; Kinash, Knight, and Hives 2011; Backer 2012; Kite, Subedi, and Bryant-Lees 2015; Pettit et al. 2015; Spooren and Christiaens 2017; Thielsch, Brinkmöller, and Forthmann 2018; Alsmadi 2019; Gupta et al. 2020; Suárez Monzón, Gómez Suárez, and Lara Paredes 2022). A qualitative longitudinal study of 193 fourth-year medical students explored what students value in SET by asking them to design their ideal SET survey (Pettit et al. 2015). Thirty-six of the surveys were included in a content analysis, which identified four factors that students value in SET: (i) content, (ii) environment, (iii) teaching methods and (iv) teacher personal attributes.

The studies also showed that students perceived themselves as good judges of teaching performance, believing they are qualified to assess the teaching of academic staff (Nasrollahi et al. 2014; Suárez Monzón, Gómez Suárez, and Lara Paredes 2022). A cross-sectional survey of 251 health science students in Iran reported that students rated themselves more highly than academics on the ability to assess teaching performance reliably (Nasrollahi et al. 2014).

Not all students provided honest feedback on SET. Students admitted that they were sometimes dishonest in their evaluation of teaching performance (Backer 2012; Asassfeh et al. 2014; Kite, Subedi, and Bryant-Lees 2015; McClain, Gulbis, and Hays 2018; Cox, Rickard, and Lowery 2022). In a cross-sectional study of 235 students in Australia, 30% believed that students give low SET scores to punish their teacher for awarding them low grades (Backer 2012).

Researchers suggest that academics who award better grades are more likely to receive better SET scores, while academics who are stricter in grading receive poorer SET scores (Patrick 2011; Backer 2012; Fetzner 2013; Cho, Baek, and Cho 2015; Suárez Monzón, Gómez Suárez, and Lara Paredes 2022). A natural experiment arose when a system-related technical error affecting 5,135 Korean students created two comparison groups: group one was accidentally informed of their grades in class before completing the SET, whereas group two was not (Cho, Baek, and Cho 2015). Students who received better grades than expected rated teaching performance highly, while those who received poorer grades rated it lower.

The likability of an academic also influences SET scores. Ernst (2014) found that students were strongly motivated to participate in SET if they believed the evaluation could positively or negatively affect academics’ tenure, salary or promotional opportunities. Academics with personality traits deemed likable scored better in SET than those considered less likable (Balam and Shannon 2010; Patrick 2011; Spooren and Christiaens 2017; Suárez Monzón, Gómez Suárez, and Lara Paredes 2022). A cross-sectional study of 974 students in six disciplines in Belgium found that gender, seniority and academic discipline influenced student views of SET (Spooren and Christiaens 2017). In the USA, 978 students in a cross-sectional study believed that academics with better publication records were better teachers and deserved better SET scores (Balam and Shannon 2010).

Theme two: the knowledge that SET responses are acted upon to improve teaching

Students appeared motivated to complete SET surveys at the end of the study period if they perceived their responses would be acted on and their future learning enhanced. Students were more motivated to engage with SET if they could see that previous SET responses had resulted in changes in teaching performance or course development (Asassfeh et al. 2014; Ernst 2014; Cone et al. 2018; Hoel and Dahl 2019; Gupta et al. 2020; Stein et al. 2021). A mixed methods study of 1,161 multidisciplinary undergraduate students at two New Zealand universities used surveys and focus groups to explore student perceptions of SET (Stein et al. 2021). The students reported they were happy to complete SET surveys but were more likely to do so if they were confident the teaching staff would use their responses to improve teaching performance and course content. A similar sentiment was reflected in studies conducted in the United States (Ernst 2014; Cone et al. 2018; Gupta et al. 2020), Norway (Hoel and Dahl 2019) and Jordan (Asassfeh et al. 2014). Although these studies differ widely in cultural context, academic discipline and study design, all demonstrated students’ willingness to participate if the time they invested in completing the SET resulted in change.

Theme three: assurance of survey confidentiality and anonymity

Traditionally, students have completed SETs anonymously (Lakeman et al. 2022b). Students are more likely to complete SET surveys if they can be assured of their confidentiality and that teaching staff cannot identify them from their responses (Kinash, Knight, and Hives 2011; Ernst 2014; Stein et al. 2021). Kinash, Knight, and Hives (2011) reported that students regarded anonymity as critical because of fears of reprisal by academic staff and potential adverse effects on their grades if they were identifiable. This fear was magnified if students believed they would have the same academic in subsequent courses.

Beyond anonymity, Stein et al. (2021) found that students who were uncomfortable with constructing feedback found surveys a helpful way to provide it. However, in a study of five focus groups, each with six postgraduate students, Ernst (2014) observed that preserving students’ confidentiality was at odds with incentivising students to participate in SET. Ernst found a strong correlation between students’ need to retain anonymity and their perception of the potential consequences their feedback could have for them.

Theme four: the promise of incentives for completing SET

Another motivator for engaging in SET acknowledged in the literature is the use of various incentives (Ernst 2014; Cone et al. 2018; Hoel and Dahl 2019; Gupta et al. 2020). When no incentive was offered, students were less likely to see the value in completing SET surveys. Ernst (2014) found a strong positive correlation between the likelihood of providing feedback and the reward of having grades released. Students in two studies from the USA indicated that a financial incentive for completing the SET would increase their participation (Cone et al. 2018; Gupta et al. 2020), while a Norwegian cross-sectional survey of 689 students indicated that entry into a prize draw increased the number of students who completed the SET (Hoel and Dahl 2019). However, a qualitative Delphi study of 36 pharmacy students in the USA noted that negative incentives, such as withholding student grades until the SET was complete, could threaten the academic-student alliance (Cone et al. 2018).

Theme five: survey design and timing of survey release

The design of SET surveys was identified in the literature as a predictor of students engaging with and completing SETs. Shorter surveys with easy-to-interpret rating scales were more likely to be completed than longer surveys with confusing rating scales (Kinash, Knight, and Hives 2011; Asassfeh et al. 2014; Cone et al. 2018; Gupta et al. 2020). A cross-sectional study of 620 undergraduate students in Jordan found that students preferred online delivery of SET surveys to traditional paper-based surveys (Asassfeh et al. 2014). These findings were mirrored in a focus group study exploring the perceptions of 2,487 undergraduates in Australia (Kinash, Knight, and Hives 2011).

Student evaluation of teaching surveys have traditionally been released at the busiest time of students’ study periods, when final assessments and examinations are due (Lakeman et al. 2022a). Releasing SETs at quieter times during the study period was a predictive factor in motivating students to complete them (Kinash, Knight, and Hives 2011; Cone et al. 2018; Gupta et al. 2020). In a focus group study, Ernst (2014) found that ‘time investment’ was an essential part of students’ decision-making when deciding whether to participate in SETs, further reinforcing the potential for skewed results. Ernst suggested that students with moderate views are less likely to participate in SET in their own time, meaning that those who do participate are likely to be either very happy or very unhappy; students in one group stated that anonymous SET was their only opportunity for retribution.

Discussion

In this scoping review, we aimed to scope the literature and explore student motivations, perceptions and opinions of SET. Student evaluations of teaching are the most common tool for assessing teaching in contemporary higher education (Spooren and Christiaens 2017). Indeed, SET is also often a required reported measure among universities’ key performance indicators and is used to judge the quality of the university (Suárez Monzón, Gómez Suárez, and Lara Paredes 2022). The scoping review found that students’ perceptions and opinions of SET were important motivators for participation when SET was used to (i) improve teaching quality, (ii) inform tenure/promotion decisions, and (iii) demonstrate an institution’s accountability (Kember, Leung, and Kwan 2002; Chen and Hoshower 2003). Clearly, SETs are essential tools for students, academics and universities. Despite the importance of SET, students do not always engage with it (Chen and Hoshower 2003; Cone et al. 2018), and the reasons for this are poorly understood. Therefore, this review’s findings offer important insights.

We identified studies from several countries, highlighting that SET is an essential international quality improvement activity undertaken by universities. Whilst SET is important and should provide a valid and reliable measure of academic performance (Oermann et al. 2018), it often falls short of this objective (Heffernan 2022). Ende (1983) recognised the role emotion plays when students give feedback, which means that feedback left by students may be purposefully or unintentionally emotionally charged (Guess and Bowling 2014; Heffernan 2022; Lakeman et al. 2022). Given the widespread use of SET by universities and the overwhelming evidence of its poor validity (El Hassan 2009; Patrick 2011; Uttl, White, and Gonzalez 2017; Cook, Jones, and Al-Twal 2022), a reliable measure of sound evaluation practice that recognises the imperfect nature of feedback in the human sciences should be pursued.

An important finding of this scoping review was that students do not believe they benefit from changes resulting from their feedback once a course is completed. Consequently, students often perceive the process as altruistic and time-consuming, which can become a significant barrier to their engagement (Gupta et al. 2020). Evidence shows that evaluations offered to students earlier provide richer information than those collected later, because respondents are more engaged (Estelami 2015). We therefore suggest providing the opportunity for feedback during teaching periods rather than near the end of teaching, when assessment and examination deadlines are imminent for students. This change will likely improve engagement and enhance the validity of the data.

Concerns about the anonymous nature of SET have been raised in the literature (Lakeman et al. 2022, 2022a, 2022b). SET provides a vehicle for retribution against and damage to academics, which negatively affects the recruitment and retention of the academic workforce (Clayson 2022; Lee et al. 2022). Students are rarely given instructions on how to give constructive feedback or how to complete SET. Despite this, students report feeling qualified to provide objective and valid feedback on the quality of teaching (Suárez Monzón, Gómez Suárez, and Lara Paredes 2022). Therefore, we recommend changes to the anonymity afforded to students when participating in SET. Students’ right to preserve their anonymity must be balanced against academics’ right to a safe working environment free from harassment and abuse.

Knowledge gaps and potential solutions

Previous research has focused on the impact of SET on teaching and learning and on how to improve the system from an academic point of view (Gupta et al. 2020; Cook, Jones, and Al-Twal 2022; Lloyd and Wright-Brough 2022), but no research to date has explored students’ ideas about how the SET system could be improved for all stakeholders. Many of the recommendations offered in this scoping review, such as balancing anonymity against protecting staff from abuse or improving the timing of feedback collection, may already be addressed in research focused on academics’ solutions to the issue. Such solutions may therefore be absent from this review because the search terminology was limited to student perspectives. Future research could address these gaps by gauging students’ opinions on how the system could be improved and integrating them with current solution-focused SET research.

Strengths and limitations

This scoping review has provided a systematic and replicable overview of a broad sample of the SET literature. This has enabled the capture of a wide range of data from diverse designs and methodologies to explore student motivations, perceptions and opinions of SET. Limitations of the study include the lack of a formalised tool to appraise studies. However, the limitations of each paper were identified and included in the data extraction table so that the reader can judge the quality of data across the included papers. Students who are more likely to participate in research are also those most likely to complete SET. All the included studies may therefore miss the voices of the students least likely to complete SET.

Conclusions

This scoping review identified a range of research on the motivations, perceptions and opinions of students who provide feedback on the teaching ability of academic staff. This offers direction and suggestions for academics and institutions to refine processes and systems for collecting meaningful SET data. Perceptions, knowledge and attitudes about the value of SET were identified as essential factors in motivating students to engage with and complete SETs, particularly if surveys were easy to interpret, the time spent completing them was incentivised, and students believed their responses would be valued. However, a lack of knowledge about the SET process hindered engagement with SET. Opportunities to complete SET at quieter times of the study period motivated students to engage with the process. Students also felt protected by the confidentiality of the SET process. Small changes to how SETs are distributed may help improve student participation levels and reduce unconstructive negative commentary aimed at academic staff.

Supplemental Material

Data availability

The review protocol was registered with the Open Science Framework (https://osf.io/fm98u/).

Disclosure statement

The authors declare no conflict of interest.

Notes on contributors

Daniel Sullivan is a casual academic and PhD student at Southern Cross University. Daniel’s research focuses on reducing social stigma and improving psychiatric care for young males experiencing psychotic symptoms.

Richard Lakeman is an Associate Professor at Southern Cross University and Coordinator of SCU Online Mental Health Programmes. He is a Mental Health Nurse, psychotherapist and fellow of the Australian College of Mental Health Nursing.

Professor Debbie Massey at Edith Cowan University is an intensive care nurse whose research focuses on patient safety, patient deterioration, and teaching and learning.

Dima Nasrawi is Lecturer in Nursing at Southern Cross University and a PhD student at Griffith University. She is a cardiac nurse and a member of the Australian Cardiac Rehabilitation Association.

Associate Professor Marion Tower has an established career in teaching and learning, and leadership experience in the higher education sector. She is also a Board Director and Deputy Chair for Metro South Hospital and Health Services and chairs the Metro South Safety & Quality committee.

Dr Megan Lee is a Senior Teaching Fellow at Bond University and adjunct senior lecturer at Southern Cross University. Dr Lee’s research focuses on nutritional psychiatry and occupational stress in academic populations.

Additional information

Funding

References

- *Alsmadi, A. A. 2019. “Assessment of the Relationship between Level of Achievement and Perceived Importance of Teaching Evaluation.” Dirasat: Educational Sciences 46 (1): 771–777. https://archives.ju.edu.jo/index.php/edu/article/view/14632/9682.

- Arksey, H., and L. O’Malley. 2005. “Scoping Studies: Towards a Methodological Framework.” International Journal of Social Research Methodology 8 (1): 19–32. doi:10.1080/1364557032000119616.

- *Asassfeh, S., H. Al-Ebous, F. Khwaileh, and Z. Al-Zoubi. 2014. “Student Faculty Evaluation (SFE) at Jordanian Universities: A Student Perspective.” Educational Studies 40 (2): 121–143. doi:10.1080/03055698.2013.833084.

- *Backer, E. 2012. “Burnt at the Student Evaluation Stake - the Penalty for Failing Students.” E-Journal of Business Education & Scholarship of Teaching 6 (1): 1–13.

- *Balam, E. M., and D. M. Shannon. 2010. “Student Ratings of College Teaching: A Comparison of Faculty and Their Students.” Assessment & Evaluation in Higher Education 35 (2): 209–221. doi:10.1080/02602930902795901.

- Baruch, A. F., and H. M. Tal. 2019. “Mobile Technologies in Educational Organizations.” Advances in Educational Technologies and Instructional Design (AETID) Book Series. Hershey, PA: IGI Global. doi:10.4018/978-1-5225-8106-2.

- Boring, A., K. Ottoboni, and P. Stark. 2016. “Student Evaluations of Teaching (Mostly) Do Not Measure Teaching Effectiveness.” ScienceOpen Research 0 (0): 1–11. doi:10.14293/S2199-1006.1.SOR-EDU.AETBZC.v1.

- Braun, V., and V. Clarke. 2006. “Using Thematic Analysis in Psychology.” Qualitative Research in Psychology 3 (2): 77–101. doi:10.1191/1478088706qp063oa.

- Chen, Y. 2023. “Does Students’ Evaluation of Teaching Improve Teaching Quality? Improvement versus the Reversal Effect.” Assessment & Evaluation in Higher Education. doi:10.1080/02602938.2023.2177252.

- Chen, Y., and L. B. Hoshower. 2003. “Student Evaluation of Teaching Effectiveness: An Assessment of Student Perception and Motivation.” Assessment & Evaluation in Higher Education 28 (1): 71–88. doi:10.1080/02602930301683.

- Ching, G. 2019. “A Literature Review on the Student Evaluation of Teaching: An Examination of the Search, Experience, and Credence Qualities of SET.” Higher Education Evaluation and Development 12 (2): 63–84. doi:10.1108/HEED-04-2018-0009.

- *Cho, D., W. Baek, and J. Cho. 2015. “Why Do Good Performing Students Highly Rate Their Instructors? Evidence from a Natural Experiment.” Economics of Education Review 49: 172–179. doi:10.1016/j.econedurev.2015.10.001.

- Clayson, D. 2022. “The Student Evaluation of Teaching and Likability: What the Evaluations Actually Measure.” Assessment & Evaluation in Higher Education 47 (2): 313–326. doi:10.1080/02602938.2021.1909702.

- *Cone, C., V. Viswesh, V. Gupta, and E. Unni. 2018. “Motivators, Barriers, and Strategies to Improve Response Rate to Student Evaluation of Teaching.” Currents in Pharmacy Teaching & Learning 10 (12): 1543–1549. doi:10.1016/j.cptl.2018.08.020.

- Cook, C., J. Jones, and A. Al-Twal. 2022. “Validity and Fairness of Utilising Student Evaluation of Teaching (SET) as a Primary Performance Measure.” Journal of Further and Higher Education 46 (2): 172–184. doi:10.1080/0309877X.2021.1895093.

- *Cox, S. R., M. K. Rickard, and C. M. Lowery. 2022. “The Student Evaluation of Teaching: Let’s Be Honest - Who is Telling the Truth?” Marketing Education Review 32 (1): 82–93. doi:10.1080/10528008.2021.1922924.

- Dommeyer, C. J., P. Baum, R. W. Hanna, and K. S. Chapman. 2004. “Gathering Faculty Teaching Evaluations by in-Class and Online Surveys: Their Effects on Response Rates and Evaluations.” Assessment & Evaluation in Higher Education 29 (5): 611–623. doi:10.1080/02602930410001689171.

- El Hassan, K. 2009. “Investigating Substantive and Consequential Validity of Student Ratings of Instruction.” Higher Education Research & Development 28 (3): 319–333. doi:10.1080/07294360902839917.

- Ende, J. 1983. “Feedback in Clinical Medical Education.” JAMA: The Journal of the American Medical Association 250 (6): 777. https://jamanetwork.com/journals/jama/fullarticle/387652. doi:10.1001/jama.1983.03340060055026.

- *Ernst, D. 2014. “Expectancy Theory Outcomes and Student Evaluations of Teaching.” Educational Research and Evaluation 20 (7-8): 536–556. doi:10.1080/13803611.2014.997138.

- Estelami, H. 2015. “The Effects of Survey Timing on Student Evaluation of Teaching Measures Obtained Using Online Surveys.” Journal of Marketing Education 37 (1): 54–64. doi:10.1177/0273475314552324.

- Fenn, A. J. 2015. “Student Evaluation Based Indicators of Teaching Excellence from a Highly Selective Liberal Arts College.” International Review of Economics Education 18: 11–24. doi:10.1016/j.iree.2014.11.001.

- *Fetzner, M. 2013. “What Do Unsuccessful Online Students Want Us to Know?” Journal of Asynchronous Learning Networks 17 (1): 13–27. https://files.eric.ed.gov/fulltext/EJ1011376.pdf.

- Freyd, M. 1923. “A Graphic Rating Scale for Teachers.” The Journal of Educational Research 8 (5): 433–439. doi:10.1080/00220671.1923.10879421.

- Guess, P., and S. Bowling. 2014. “Students’ Perceptions of Teachers: Implications for Classroom Practices for Supporting Students’ Success.” Preventing School Failure: Alternative Education for Children and Youth 58 (4): 201–206. doi:10.1080/1045988X.2013.792764.

- Gump, S. E. 2007. “Student Evaluations of Teaching Effectiveness and the Leniency Hypothesis: A Literature Review.” Educational Research Quarterly 30 (3): 56–69. https://search.ebscohost.com/login.aspx?direct=true&db=eric&AN=EJ787711&site=ehost-live.

- *Gupta, V., V. Viswesh, C. Cone, and E. Unni. 2020. “Qualitative Analysis of the Impact of Changes to the Student Evaluation of Teaching Process.” American Journal of Pharmaceutical Education 84 (1): 7110. doi:10.5688/ajpe7110.

- Hattie, J., and H. Timperley. 2007. “The Power of Feedback.” Review of Educational Research 77 (1): 81–112. doi:10.3102/003465430298487.

- Heffernan, T. 2022. “Sexism, Racism, Prejudice, and Bias: A Literature Review and Synthesis of Research Surrounding Student Evaluations of Courses and Teaching.” Assessment & Evaluation in Higher Education 47 (1): 144–154. doi:10.1080/02602938.2021.1888075.

- Heffernan, T., and L. Bosetti. 2021. “Incivility: The New Type of Bullying in Higher Education.” Cambridge Journal of Education 51 (5): 641–652. doi:10.1080/0305764X.2021.1897524.

- Heine, P., and N. Maddox. 2009. “Student Perceptions of the Faculty Course Evaluation Process: An Exploratory Study of Gender and Class Differences.” Research in Higher Education Journal 3 (1): 1–10. https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=25ce08745715bed8d6e4f4c26b624d98c3632ea2.

- *Hoel, A., and T. I. Dahl. 2019. “Why Bother? Student Motivation to Participate in Student Evaluations of Teaching.” Assessment & Evaluation in Higher Education 44 (3): 361–378. doi:10.1080/02602938.2018.1511969.

- Hollerbach, S., A. Sarnecki, and M. Bechtoldt. 2021. “The Wolf in Sheep’s Clothing.” Academy of Management Proceedings 2021 (1): 12368. doi:10.5465/AMBPP.2021.12368abstract.

- Hutchinson, M., R. Coutts, D. Massey, D. Nasrawi, J. Fielden, M. Lee, and R. Lakeman. 2023. “Student Evaluation of Teaching: Reactions of Australian Academics to Anonymous Non-Constructive Student Commentary.” Assessment & Evaluation in Higher Education. doi:10.1080/02602938.2023.2195598.

- Kember, D., D. Y. P. Leung, and K. P. Kwan. 2002. “Does the Use of Student Feedback Questionnaires Improve the Overall Quality of Teaching?” Assessment & Evaluation in Higher Education 27 (5): 411–425. doi:10.1080/0260293022000009294.

- *Kinash, S., D. Knight, and L. Hives. 2011. “Student Perspective on Electronic Evaluation of Teaching.” Studies in Learning, Evaluation, Innovation & Development 8 (1): 86–97. http://eprints.usq.edu.au/32981/1/Kinash_2011_PV.pdf.

- *Kite, M. E., P. C. Subedi, and K. B. Bryant-Lees. 2015. “Students’ Perceptions of the Teaching Evaluation Process.” Teaching of Psychology 42 (4): 307–314. doi:10.1177/0098628315603062.

- Kreitzer, R. J., and J. Sweet-Cushman. 2022. “Evaluating Student Evaluations of Teaching: A Review of Measurement and Equity Bias in SETs and Recommendations for Ethical Reform.” Journal of Academic Ethics 20 (1): 73–84. doi:10.1007/s10805-021-09400-w.

- Lakeman, R., R. Coutts, M. Hutchinson, M. Lee, D. Massey, D. Nasrawi, and J. Fielden. 2022. “Appearance, Insults, Allegations, Blame and Threats: An Analysis of Anonymous Non-Constructive Student Evaluation of Teaching in Australia.” Assessment & Evaluation in Higher Education 47 (8): 1245–1258. doi:10.1080/02602938.2021.2012643.

- Lakeman, R., R. Coutts, M. Hutchinson, D. Massey, D. Nasrawi, J. Fielden, and M. Lee. 2022a. “Playing the SET Game: How Teachers View the Impact of Student Evaluation on the Experience of Teaching and Learning.” Assessment & Evaluation in Higher Education. doi:10.1080/02602938.2022.2126430.

- Lakeman, R., R. Coutts, M. Hutchinson, D. Massey, D. Nasrawi, J. Fielden, and M. Lee. 2022b. “Stress, Distress, Disorder and Coping: The Impact of Anonymous Student Evaluation of Teaching on the Health of Higher Education Teachers.” Assessment & Evaluation in Higher Education 47 (8): 1489–1500. doi:10.1080/02602938.2022.2060936.

- Lee, M., R. Coutts, J. Fielden, M. Hutchinson, R. Lakeman, B. Mathisen, D. Nasrawi, and N. Phillips. 2022. “Occupational Stress in University Academics in Australia and New Zealand.” Journal of Higher Education Policy and Management 44 (1): 57–71. doi:10.1080/1360080X.2021.1934246.

- Lee, M., D. Nasrawi, M. Hutchinson, and R. Lakeman. 2021. “Our Uni Teachers Were Already among the World’s Most Stressed. COVID and Student Feedback Have Just Made Things Worse.” The Conversation. https://theconversation.com/our-uni-teachers-were-already-among-the-worlds-most-stressed-covid-and-student-feedback-have-just-made-things-worse-162612.

- Levac, D., H. Colquhoun, and K. K. O’Brien. 2010. “Scoping Studies: Advancing the Methodology.” Implementation Science 5: 69. doi:10.1186/1748-5908-5-69.

- Lloyd, M., and F. Wright-Brough. 2022. “Setting out SET: A Situational Mapping of Student Evaluation of Teaching in Australian Higher Education.” Assessment & Evaluation in Higher Education. doi:10.1080/02602938.2022.2130169.

- Martin, J. R. 1998. “Evaluating Faculty Based on Student Opinions: Problems, Implications and Recommendations from Deming’s Theory of Management Perspective.” Issues in Accounting Education 13 (4): 1079–1094. https://maaw.info/ArticleSummaries/ArtSumMartinSet98.htm.

- *McClain, L., A. Gulbis, and D. Hays. 2018. “Honesty on Student Evaluations of Teaching: Effectiveness, Purpose, and Timing Matter!” Assessment & Evaluation in Higher Education 43 (3): 369–385. doi:10.1080/02602938.2017.1350828.

- *Nasrollahi, A., A. Mirzaei, M. Shamsizad, Z. Mirzaei, A. Azar-Abdar, R. Vafaee, and N. Vazifeshenas. 2014. “Students and Faculty Viewpoint of Ilam University of Medical Sciences about the Students’ Evaluation System of the Instruction Quality.” Journal of Paramedical Sciences 5 (2): 51–58. https://journals.sbmu.ac.ir/aab/article/view/5918/5102.

- Oermann, M. H., J. L. Conklin, S. Rushton, and M. A. Bush. 2018. “Student Evaluations of Teaching (SET): Guidelines for Their Use.” Nursing Forum 53 (3): 280–285. doi:10.1111/nuf.12249.

- *Patrick, C. L. 2011. “Student Evaluations of Teaching: Effects of the Big Five Personality Traits, Grades and the Validity Hypothesis.” Assessment & Evaluation in Higher Education 36 (2): 239–249. doi:10.1080/02602930903308258.

- *Pettit, J. E., R. D. Axelson, K. J. Ferguson, and M. E. Rosenbaum. 2015. “Assessing Effective Teaching: What Medical Students Value When Developing Evaluation Instruments.” Academic Medicine: Journal of the Association of American Medical Colleges 90 (1): 94–99. doi:10.1097/ACM.0000000000000447.

- Read, W. J., D. V. Rama, and K. Raghunandan. 2001. “The Relationship between Student Evaluations of Teaching and Faculty Evaluations.” Journal of Education for Business 76 (4): 189–192. doi:10.1080/08832320109601309.

- Richardson, J. T. 2005. “Instruments for Obtaining Student Feedback: A Review of the Literature.” Assessment & Evaluation in Higher Education 30 (4): 387–415. doi:10.1080/02602930500099193.

- Riniolo, T. C., K. C. Johnson, T. R. Sherman, and J. A. Misso. 2006. “Hot or Not: Do Professors Perceived as Physically Attractive Receive Higher Student Evaluations?” The Journal of General Psychology 133 (1): 19–35. doi:10.3200/GENP.133.1.19-35.

- Smalzried, N. T., and H. H. Remmers. 1943. “A Factor Analysis of the Purdue Rating Scale for Instructors.” Journal of Educational Psychology 34 (6): 363–367. doi:10.1037/h0060532.

- *Spooren, P., and W. Christiaens. 2017. “I Liked Your Course Because I Believe in (the Power of) Student Evaluations of Teaching (SET). Students’ Perceptions of a Teaching Evaluation Process and Their Relationships with SET Scores.” Studies in Educational Evaluation 54: 43–49. http://files.eric.ed.gov/fulltext/EJ960975.pdf. doi:10.1016/j.stueduc.2016.12.003.

- *Stein, S. J., A. Goodchild, A. Moskal, S. Terry, and J. McDonald. 2021. “Student Perceptions of Student Evaluations: Enabling Student Voice and Meaningful Engagement.” Assessment & Evaluation in Higher Education 46 (6): 837–851. doi:10.1080/02602938.2020.1824266.

- *Suárez Monzón, N., V. Gómez Suárez, and D. G. Lara Paredes. 2022. “Is My Opinion Important in Evaluating Lecturers? Students’ Perceptions of Student Evaluations of Teaching (SET) and Their Relationship to SET Scores.” Educational Research and Evaluation 27 (1-2): 117–140. doi:10.1080/13803611.2021.2022318.

- *Thielsch, M. T., B. Brinkmöller, and B. Forthmann. 2018. “Reasons for Responding in Student Evaluation of Teaching.” Studies in Educational Evaluation 56: 189–196. doi:10.1016/j.stueduc.2017.11.008.

- Tricco, A. C., E. Lillie, W. Zarin, K. K. O’Brien, H. Colquhoun, D. Levac, D. Moher, et al. 2018. “PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation.” Annals of Internal Medicine 169 (7): 467–473. doi:10.7326/M18-0850.

- Uttl, B., C. A. White, and D. W. Gonzalez. 2017. “Meta-Analysis of Faculty’s Teaching Effectiveness: Student Evaluation of Teaching Ratings and Student Learning Are Not Related.” Studies in Educational Evaluation 54: 22–42. doi:10.1016/j.stueduc.2016.08.007.