ABSTRACT

Integration of technology in schools rests on effective teacher education programmes that help teachers create new teaching and learning methods and adopt them for classroom use. Social learning processes play a key role in this, but there is a lack of understanding of their role in technology adoption and in evidencing them in teacher education programmes. Using the knowledge appropriation model, we propose a self-report questionnaire instrument to evidence knowledge creation and learning practices during training. With a sample of N = 109 in-service teachers participating in the Teacher Innovation Laboratory, a teacher professional development programme that is built around school−university co-creation partnerships, we demonstrate the instrument to be reliable and to differentiate between groups who completed different programmes. The instrument predicted intended adoption of technology-enhanced learning methods beyond individual level constructs, highlighting the important role that social practices play for the eventual adoption of technologies in the classroom.

Introduction

Introducing technologies into the classroom is a challenging endeavour and, despite interest and investments, technologies are often not used productively in schools on a larger scale. Such limitations become especially important in today’s reality where more distance and blended learning practices are promoted in schools. Therefore, the preparation of teachers to combine technological, pedagogical and subject knowledge is becoming more crucial in teacher education. Studies conducted during the distance learning period following school lockdowns highlight that teachers with previous experience of digital technologies experienced online teaching more positively than they had expected (Van Der Spoel et al. 2020). In a recent editorial, Swennen calls this experience a ‘giant and collaborative experience in experiential learning’ (Swennen 2020, 657), and predicts it would become the ‘new normal’ of how we prepare teachers for these new challenges.

In this paper we aim to contribute to this new normal by offering a systematic approach to evidencing and supporting important collaborative processes in experiential learning that should lead to a more meaningful integration of technology into the teaching and learning process at school. Based on the knowledge appropriation model, we have created an approach to teacher education that is embedded into school–university partnerships and implemented it with over 100 teachers seeking to integrate technology into their teaching practices. In the current paper, we focus especially on evidencing the social practices crucial for professional learning and eventual adoption of new teaching practices in the classroom.

Adoption of technology in teaching and learning

It has been evident, and not only since the pandemic in 2020, that knowledge about how to integrate technology in teaching and learning needs to be embedded in content-level learning processes (Harris, Mishra, and Koehler 2009). However, previous studies have shown that adoption of innovative teaching practices is challenging for teachers (e.g. Webb and Cox 2004), hindering the deployment of educational innovation. Several studies have found that teachers who have access to different technologies use them to support existing teaching practices (Sheffield 2011), which tend to be teacher-led and designed to transfer knowledge. However, when used in a pedagogically meaningful way, technology has good potential to promote self-directed or contextualised learning and the learning of transversal skills, like problem solving or collaboration.

As an example, educational robots have shown the potential to support the development of students’ understanding of abstract concepts, to motivate students and to enhance their interest in STEM fields (Eguchi 2014). Pepin et al. (2015) have shown that digital textbooks in maths could provide transformative learning experiences and offer new possibilities for teacher and student agency. Still, teachers’ limited technology-supported pedagogical knowledge and skills are the main barrier to implementing student-centred learning practices in their instruction.

One of the realities contributing to the lack of meaningful integration of technology into teaching practices is the lack of professional development programmes addressing the needs and challenges of effective integration of technology and pedagogy (Zhao 2007). New types of pedagogies around the use of technologies need to be established and tested, and teachers trained in their use, which requires change in teaching and learning practices. Until now, teacher training programmes for supporting the adoption of technology-enhanced teaching strategies have usually focused on teachers’ individual skills and beliefs (Twining et al. 2013). At the basis of these training models lies a ‘linear knowledge transmission model’ that assumes innovative teaching practices are invented at the university and then transferred into practice. Clearly these are not effective means to promote innovation adoption or professional learning.

In an attempt to understand the underlying causes of why certain technologies are adopted in the classroom, research has been similarly dominated by models of ‘technology acceptance’ that take an individualistic stance towards adoption by modelling individual decisions based on considerations of personal costs and benefits. For example, the Unified Theory of Acceptance and Use of Technology (UTAUT) by Venkatesh et al. (2003), which integrates a number of models on technology acceptance, has found broad applications in modelling teacher readiness to adopt technological innovations in schools (e.g. Teo 2011). UTAUT suggests the following important factors: Effort Expectancy (Ease of Use), Performance Expectancy (Perceived Usefulness), Social Influence (e.g. Social and Management support), and Facilitating Conditions (Compatibility with previous practices). The model has been applied to study adoption of a number of different learning technologies by teachers, including online learning environments and learning management systems (Wang and Wang 2009; Radovan and Kristl 2017), interactive whiteboards (Šumak and Šorgo 2016), educational robots (Fridin and Belokopytov 2014) and mobile technology (Hu, Laxman, and Lee 2020).

Many of these studies have confirmed the important role that social factors play for intention to use technology in the classroom. For example, in research reported by Radovan and Kristl (2017) on the use of virtual classrooms, the construct ‘social influence’ appeared as a central variable influencing many of the other constructs. Similarly, the study by Wang and Wang (2009) on the adoption of web-based learning systems found ‘subjective norm’ to be the main predictor of intended use, a construct that is often understood to describe the ‘social pressure’ for the use of technology. These results already point to the possibility that social dynamics may play a crucial and more complex role than originally hypothesised in UTAUT and other adoption models.

Co-creation and innovation adoption in school−university partnerships

Instead of focusing on individual teacher attitudes and skills, we propose that implementation of content-specific professional development programmes may support schools and teachers to adopt novel technologies and integrate them more effectively into teaching and learning (Doering et al. 2014). To support this approach, there is growing interest in co-creation methodologies for adoption of novel pedagogical approaches, which means that practitioners are integrated early into the research process (Durall Gazulla et al. 2020). Recent research has shown that co-creation of knowledge and appropriate practices (Alderman 2018) could lead to more efficient adoption of innovations in the classroom. For instance, Spiteri and Chang Rundgren (2017) demonstrate that teachers benefit from the opportunity to practise with technology collaboratively and to reflect and receive feedback from each other, because collaborative efforts encourage them to support each other and make them more willing to take risks when integrating technology. In our research, we expand such collaboration to multi-professional teams of teachers, school leaders, researchers and other actors. In education, such practices have long been studied under the term ‘school−university partnerships’ (SUPs), which are aimed at bringing together research in the field of education and everyday school practice (Arhar et al. 2013; Coburn and Penuel 2016).

Close relationships between research and practice must be built to change and improve the practice of teachers, because researchers provide research-based knowledge concerning effective educational practices, and together with practitioners they identify problems to be solved (Qvortrup 2016). In this way, SUPs bring educational research and classroom practice closer together.

Models of technology acceptance as described in the previous section are not appropriate to model the social dynamics that ensue as a result of such co-creation activities. Also, UTAUT and its variants treat the ‘technology’ as if it were an object that is either adopted or not. They neglect the fact that, especially in early phases of adoption, the new method or approach as such is still socially constructed and adapted, as new practices are being created in a social process of knowledge creation (Hakkarainen 2009). Recent research on teacher professional learning based on socio-cultural theories highlights the importance of co-creation of the knowledge and adoption practices (Voogt et al. 2015).

A theoretical perspective that has been applied to the co-creation in multi-professional teams is that of boundary crossing (Akkerman and Bruining 2016). Participants cross boundaries between practices of teaching, teacher education, and educational research, and these boundaries are perceived as inconsistencies between different practices, beliefs and worldviews. At the same time, boundaries are potential resources for learning, and provide a possibility for communities to develop further by developing new practices (Wenger 1998) or transforming existing practices (Engeström 2001). Snoek et al. (2017) identified three key elements that determine the effectiveness of boundary crossing activities in SUP: members of the community need to develop ownership of the activity, the activity is perceived to be meaningful for members of the community, and it facilitates dialogue between members of different communities (e.g. teachers and researchers). Boundary crossing activities are facilitated by the creation of boundary objects. In our case, these can be lesson plans or exercises that the teachers create to conduct lessons employing new teaching and learning methods. We argue here that the collaborative creation of these boundary objects is key in the learning process to adopt the shared knowledge.

While current research on SUPs has mainly focused on their effectiveness for professional teacher education, co-creation in SUPs should lead to meaningful and evidence-based innovation that addresses problems of practice and improves educational outcomes. A model that is focused on these co-creation practices is the Knowledge Appropriation Model (KAM) (Ley et al. 2020). Similar to the conceptions of boundary crossing, the model is informed by social and situated models of learning and is an attempt to better integrate models of knowledge creation with professional learning. In doing so, KAM (Figure 1) distinguishes learning and knowledge practices in three areas:

Figure 1. Knowledge Appropriation Model (Ley et al. 2020). Figure reused under the Creative Commons Attribution 4.0 License (http://creativecommons.org/licenses/by/4.0/)

Knowledge maturation (the left section of Figure 1) describes the practices of knowledge creation, namely how an individual experience becomes shared knowledge in communities, and how it is further transformed into more mature knowledge that is available for formal knowledge management processes of organisations. Specifically, this part describes how knowledge, for example, materials for new teaching and learning methods, is created, shared and refined.

Knowledge scaffolding (the right section of Figure 1) explains how professionals learn and are supported when applying the newly created knowledge in real-life settings.

Knowledge appropriation (the middle section of Figure 1) brings these two perspectives together and explains how knowledge is applied and validated in concrete work settings. It should therefore have a main effect to ensure successful, sustained and scaled adoption of innovation.

There is some qualitative evidence for the importance of these practices in co-creation activities in teacher professional education programmes (Leoste, Tammets and Ley 2019). Social practices of knowledge sharing and co-design were important for teachers who participated in those programmes as were scaffolding practices that supported adoption of new teaching and learning methods in practice. Moreover, an analysis of the co-creation processes in tens of thousands of learning designs created in an online teacher community conducted by Rodriguez-Triana et al. (2020) found practices of knowledge maturation, scaffolding and appropriation (evidenced through sharing, collaboratively creating and reusing learning designs) to be important for eventual classroom adoption.

Based on this overview, it is evident that there is a need for professional development models and training programmes that bring together research and practice and consider social dynamics and co-creation. Such an approach could promote adoption of new teaching and learning methods in practice. However, there is a gap in the current understanding of how to evidence those social practices and their impact on teachers’ innovation adoption.

Aim of the current research

The main aim of this research is to understand the impact of collaborative knowledge creation and learning practices for the adoption of technology-based innovations for teaching and learning. We implement the current research in a SUP context where teachers co-create and learn together with university didactics and educational technology researchers and apply their knowledge in their own practice.

While several models, like boundary crossing (Snoek et al. 2017) and KAM, suggest practices for professional learning, there is not yet an instrument that could be applied in SUPs or teacher education contexts to evidence co-creation processes and their effect in practice. In the current paper, we therefore report on the construction and validation of a questionnaire instrument that can evidence those social practices in larger samples of teachers involved in co-creation activities. We use the instrument to predict the eventual adoption of technology-enhanced teaching practices by teachers participating in the programme.

We devise the following research questions:

RQ1: How to measure knowledge appropriation practices in a larger sample of teachers participating in SUP programmes?

RQ2: Do the created measurement scales differentiate between different types of SUP programmes?

RQ3: Do the KAM constructs predict adoption of technology-enhanced teaching practices beyond individual level variables?

Materials and methods

Research context: The Teacher Innovation Laboratory (TIL)

The main context for this research is the Teacher Innovation Laboratory (TIL), a 6–12 months long teacher development programme developed at Tallinn University (Leoste, Tammets and Ley 2019). The idea of the TIL programme is to provide a suitable context for teachers and researchers to co-design new teaching and learning methods, develop ways to implement them in the classroom and study their effectiveness. TIL should encourage different social learning processes to occur, such as knowledge maturation, scaffolding and the appropriation of new knowledge. The programme should therefore help an educational innovation initiative to survive in a real classroom environment and find long-term and sustained adoption.

During the programme, multi-professional teams hold joint co-creation sessions that take place approximately once a month. The teams consist of teachers as practitioners, subject didactics specialists (e.g. for mathematics), and university researchers in the fields of technology-enhanced learning, educational psychology and teacher professionalisation. A typical co-creation session lasts 6–8 academic hours and includes several components: learning about novel student-centred teaching practices, co-creating innovative teaching and learning methods and materials, gathering knowledge about action research and field research methods, and group reflection. In between those sessions, teachers engage in collaborative field research in their own classrooms. This enables them to pilot co-created learning scenarios, monitor the process to collect evidence about what happened in the classroom and analyse the collected data to understand the effectiveness of the intervention.

Participants

All participants were in-service teachers who attended different programmes organised as partnerships between the university and schools to enhance innovation adoption in practice. In total, 109 participants filled in the KAM questionnaire, corresponding to three different groups (see Table 1).

Table 1. Participants of the study across three different types of SUP.

Teacher innovation laboratory

Cases 1–5 followed the TIL model that has been described in the previous section. Case 1 (Robomathematics) aimed to co-create lesson plans integrating educational robots to enhance the learning in mathematics in middle school. A pilot study (Leoste and Heidmets 2018) had shown that use of educational robots was challenging for teachers, as most of them had never programmed before. Additionally, it was difficult for the teachers to integrate the subject knowledge with the affordances of the educational robots and to adopt new teaching methods, like co-creation, self-regulation, peer tutoring, co-teaching, and teamwork. Case 2 involved the same teachers, but measures were taken after the training programme had ended. This data was used to check for stability of the instrument (test–retest reliability).

Case 3 was a TIL programme about Educational Robots in pre-school and included 15 pre-school teachers to co-create teaching and learning activities for kindergarten. Case 4 focused on supporting 14 teachers to co-create student-centred teaching practices by integrating technology, arts and natural sciences education (STEAM) with the usage of sensor kits. Case 5 involved 21 secondary maths teachers to co-create digital learning tasks and learning designs to activate students’ mathematical thinking skills and pilot them in maths classes.

Short-term school-based programmes

Cases 6 (21 teachers) and 7 (10 teachers) followed different approaches to co-create new practices around use of educational robots. These were short training courses, initiated by the school management members with the aim to train more school teachers to integrate educational robots into their teaching. Some of the teachers had been previously part of Case 2 and the aim was to scale the innovation across the school. In contrast to TIL, these programmes were short term, involving teachers in a several hours-long workshop format.

Long-term school development programme

Case 8 (‘Future School’) was designed as a school improvement programme where the focus was on co-creating new change management practices and adopting them at a school level. The programme shared some characteristics with TIL in that it was also long-term (10 months) and included some elements of validation and reflection. While the other cases focused on specific technologies and subjects, the main focus of Case 8 was on different school-level interventions based on the schools’ needs, such as enhancing teachers’ collaboration, improving students’ learning to learn skills, or raising students’ digital competences. In the context of this study, we aimed to understand how 20 teachers from 5 schools adopted the new knowledge created in multi-professional teams, although the focus in each school was different.

Instruments

Maturation, scaffolding and knowledge appropriation practices

As no measurement instrument was available for measuring constructs of the knowledge appropriation model in the context of SUPs and technology adoption in schools, we created a questionnaire-based instrument. The instrument was constructed and initially validated through the present study and the construction process, expert validation and reliability and validity analyses will be reported in the results section.

The instrument has been theoretically derived from the constructs of the knowledge appropriation model. For each of the practices (maturation, scaffolding and knowledge appropriation), one item was formulated that would describe typical activities undertaken for the particular practice. For example, for ‘sharing knowledge’ (part of knowledge maturation), the item was ‘I have often shared my own experiences and materials with other participants inside and outside the trainings’. The final questionnaire is composed of 13 items divided into three subscales, covering the three constructs of maturation, scaffolding and appropriation. Answer scales are Likert-style and provide five response options, ranging from ‘completely disagree’ to ‘completely agree’.

The instrument can be used with teachers involved in co-creation and professional development activities targeted at new teaching and learning methods. The items were initially formulated in English and later translated to Estonian for the use with teachers in our sample. The questionnaire is included in Appendix A.

Innovation adoption

For RQ3, there was a need to measure the likelihood that the innovative teaching and learning practices would be adopted by the teachers after the end of the intervention. We borrowed from models of technology acceptance to establish the behavioural intention to adopt the innovation after the end of the intervention. Venkatesh et al. (2003) assume that behavioural intention is a good predictor of the actual adoption of the technology, with actual adoption then depending mainly on facilitating conditions. In total, 4 items were used that focused on intended use of the method (e.g. ‘I am certain I will use the [method, innovation] after the training has ended in my own teaching’), as well as scalability and sustainability of the use of the method.

Individual motivational constructs: psychological ownership, self-efficacy and belongingness

For RQ3, we were intending to establish the contribution of the knowledge appropriation constructs to the prediction of adoption in comparison to some other established (individual level) constructs. So, scales for the following constructs were included in the questionnaire filled by the teachers:

Ownership: Inclusion of this construct was motivated through research on boundary crossing in teacher professional development. It is assumed that through successful cross-professional boundary crossing activities teachers develop personal ownership for the developed intervention (Snoek et al. 2017). For creating the scale, we drew on the concept of psychological ownership, which has been described as a cognitive-affective construct that defines an individual’s state of feelings as though the target of ownership is theirs (Avey et al. 2009). We adapted two of the items of the subscale of ‘Accountability’ suggested by Avey et al. (2009) for our purposes. A sample item was ‘I feel the need to defend the [method, innovation] if it would be criticised’.

Self-efficacy: One key outcome of a training intervention is that participants develop confidence about the application of the new knowledge and skills they learned. This is especially important if participants should implement interventions in their own work practice. The construct of self-efficacy has been found important to measure success of training (Hall and Trespalacios 2019) and for intentions to adopt an innovation (Venkatesh et al. 2003), and it has been included as a subscale in the construct of ‘psychological ownership’ suggested by Avey et al. (2009). Two items were included to measure self-efficacy, e.g. ‘I feel confident to carry out the [method, innovation] in my classroom’.

Belongingness: Any type of professional development activity involves social contacts with other members of the training group. Usually, these contacts are thought to contribute to the success of training interventions. It should be noted, however, that social practices of knowledge appropriation as we have defined them here should go much beyond simple feelings of being part of a group or community. So, the construct of belongingness was added to the item pool to differentiate social practices of knowledge construction from a mere feeling of belonging to the community. Avey et al. (2009) have included a subscale of belongingness as part of the construct of psychological ownership. One of the two items we used was ‘I feel I am a part of the learning community consisting of teachers and researchers who work on the [method, innovation].’

In addition to these, items for several other constructs were included (such as individual motivation). However, since the questionnaire used in different groups slightly differed, and some of the items were updated over time, we include here only those scales that were used in similar ways across all groups. All items were contextualised to the particular training setting, so ‘the [method/innovation]’ was replaced e.g. by ‘the Robomathematics method’ in the questionnaires sent to the teachers.

Procedure

For the TIL cases, as well as for the long-term school development cases, common measurement times were defined as depicted in Table 2: At the beginning of the intervention (T1), a baseline of intended adoption and individual motivational scales was taken. Results of these measurements are not included in the current analysis. Because questions about knowledge appropriation practices were only sensible once participants had already taken part in the programme for some time, measures of the Knowledge Appropriation scales were obtained in the middle of the intervention (T2). At the end of the intervention (T3), measures from all scales were obtained. In some individual cases, measures of intended adoption and individual motivation were also obtained at T2.

Table 2. Data collection procedure.

The questionnaires were sent in electronic format to all participants taking part in the programme. Participants were usually given time to fill the questionnaire at the end of a training session when they were still on site. Participants used their own devices to fill the questionnaire (using their personal laptop, tablet or smartphone). Data was collected in an anonymised form. In some cases, participants were asked to provide a code so that answers across measurement times could be matched without identifying the person. Data was analysed using SPSS employing different quantitative data analysis procedures that will be presented in more detail in the results section.

Results

Results will be reported according to the three research questions:

we report the development of a self-report questionnaire instrument and establish its content validity and reliability (RQ1)

we test whether there are group differences in responding to the scales between participants from different types of implementation of SUPs (construct validation) (RQ2), and

we test whether the scales predict intended adoption beyond individual level constructs (such as self-efficacy and ownership) (predictive validation) (RQ3).

Construction and initial validation of the measurement instrument (RQ1)

This section reports the results of the construction and initial validation of the three subscales of the knowledge appropriation questionnaire. We started with generation of items and content validation by expert judgements. We then report different measures of reliability of the constructed scales as well as initial validation in the sample described above.

Item generation and initial content validation

The item generation was done in a theory-guided way. We initially formulated one item for each of the 12 practices given in the knowledge appropriation model, describing typical activities that would be undertaken by the participants during the training. We then submitted a preliminary draft of the questionnaire items to expert judgement (Lawshe 1975). A panel of 10 content experts from fields like psychology and education were asked to review the relevance and the content of each item on a 4-point Likert scale. Then, for each item, the proportion of relevance agreements was calculated (Content Validity Index for individual items, I-CVI), before calculating the Content Validity Index for the scale (S-CVI). The I-CVI for 11 items was 1.0.

Four of the twelve items obtained absolute agreement, four items relative agreement, three average agreement, and one item low agreement. After the analysis, four items were changed (for the practices ‘adaptation’ in knowledge appropriation, ‘sharing’ and ‘standardise’ in maturation, and ‘guide’ in scaffolding). The item with the low score was completely modified according to experts’ suggestions, 10 items were slightly reworded and 1 new item was added as suggested by the experts (practice ‘guide’ in construct Scaffolding ‘I offer guidance and help to my colleagues about [method, innovation]’). The final number of 13 items can be found in Appendix A.
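The content validity indices described above can be illustrated with a minimal sketch. It assumes the common convention that a rating of 3 or 4 on the 4-point scale counts as ‘relevant’ and that the S-CVI is obtained by averaging the I-CVI values; neither detail is stated in the text, and the ratings and item names below are hypothetical.

```python
def item_cvi(ratings, relevant_from=3):
    """I-CVI: proportion of experts who rated the item as relevant.

    Assumption: ratings of 3 or 4 on the 4-point scale count as relevant.
    """
    relevant = sum(1 for r in ratings if r >= relevant_from)
    return relevant / len(ratings)


def scale_cvi_average(ratings_by_item):
    """S-CVI (averaging approach, an assumption): mean of all I-CVI values."""
    values = [item_cvi(r) for r in ratings_by_item.values()]
    return sum(values) / len(values)


# Hypothetical ratings from a panel of 10 experts for two hypothetical items.
example_ratings = {
    "item_a": [4, 4, 3, 4, 3, 4, 4, 3, 4, 4],  # every expert rates 3 or 4
    "item_b": [4, 2, 3, 4, 1, 4, 3, 3, 2, 4],  # three experts rate below 3
}

for name, ratings in example_ratings.items():
    print(name, item_cvi(ratings))
print("S-CVI (average):", scale_cvi_average(example_ratings))
```

With a panel of 10 experts, an I-CVI of 1.0 means that all ten judged the item to be relevant.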

Reliability: internal consistency

Following this iterative process of refinement, a preliminary reliability test on the items of the knowledge appropriation subscales was done by means of Cronbach’s alpha, which measures the internal consistency of the scales. In Table 3, we present these values. The overall reliability of α = .873 (N = 109) of the composite KAM scale (averaging over all 13 items) seems sufficient. The three subscales vary in their consistency, with the knowledge appropriation subscale having lower reliability than the others.

Table 3. Internal consistency of the items.
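As an illustration of how an internal consistency coefficient of this kind is obtained, the following sketch computes Cronbach’s alpha from item-level responses using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the sum score). The analyses reported here were run in SPSS; the function name and the response data below are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the respondents' sum scores.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical answers of five respondents to three Likert items (1-5).
hypothetical_responses = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
print(round(cronbach_alpha(hypothetical_responses), 3))
```

Alpha approaches 1 when items vary together across respondents and drops when item variances are large relative to the variance of the sum score.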

The item statistics show very high ratings on a five-point scale, with means around and medians around 4 on a 5-point scale. This is potentially problematic as it indicates a ceiling effect. The reason for these high ratings can be seen in the sample that was involved in this validation. All of the respondents had been taking part in some sort of intervention programme. Therefore, it can be expected that ratings of agreement to the practices should be higher than in a sample where no intervention had taken place.

As a result of this emerging ceiling effect, the formulation of the items was slightly changed to make them more extreme. For example, the item on intended adoption was rephrased from ‘I will use the [method, innovation] after the training has ended in my own teaching’ to ‘I am certain I will use the [method, innovation] after the training has ended in my own teaching’.

Reliability: stability over time

Besides internal consistency, it was possible to measure the stability of the KAM scales over time. For this, we used a subset of the data where repeated measures from participants of the Robomathematics TIL (denoted with case 1 and 2 in Table 1 above) had been taken in the middle and at the end of the programme (N = 14 at both T2 and T3). We used a test–retest measurement to check absolute agreement with a two-way mixed-effects model. The resulting Intraclass Correlation Coefficient (ICC) of 0.837 indicates ‘good’ test–retest reliability for the KAM scales at the 95% confidence interval, p < .001 (Koo and Li 2016).
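The following sketch shows one way an absolute-agreement ICC can be computed from a two-way layout (participants by measurement occasions) and then labelled with the thresholds given by Koo and Li (2016). It assumes the single-measures form of the coefficient; the text does not state whether single or average measures were used in SPSS, and the function names and scores are hypothetical.

```python
def icc_absolute_single(scores):
    """Two-way, absolute-agreement, single-measures ICC.

    scores: list of participants, each a list of k repeated measurements.
    Assumption: single-measures form; the source does not specify this.
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error)
    )


def koo_li_label(icc):
    """Verbal label following the thresholds in Koo and Li (2016)."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.9:
        return "good"
    return "excellent"


# Hypothetical composite scores of four participants at T2 and T3.
hypothetical_scores = [[4.1, 4.0], [3.5, 3.8], [4.6, 4.4], [3.0, 3.2]]
value = icc_absolute_single(hypothetical_scores)
print(round(value, 3), koo_li_label(value))
```

Because the coefficient measures absolute agreement, a systematic shift between the two measurement times lowers it even when the rank order of participants is unchanged.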

Group difference between different implementations of SUP programmes (RQ2)

After having ensured a sufficient level of reliability of the scales, the validity of the scales was explored. Despite the fact that all teacher groups participating in the study had undergone some form of intervention drawing on ideas of SUP, the types of intervention were quite different. While some of the groups participated in the Teacher Innovation Laboratory (TIL), a long-term professional development programme dedicated to the co-creation of classroom innovations (Robomath, STEAM, Kindergarten Robotics, Digimath), others had only participated in one-off school-based workshops with teachers having varying levels of motivation (School 1, School 2), and another group had participated in a yearlong school development programme called ‘Future School’.

It was therefore expected that the KAM questionnaire we constructed should show differences between those groups in terms of the KAM scales as well as in terms of the intended adoption. This would contribute to the construct validation of the KAM instrument because it would relate the construct to be measured (knowledge appropriation) to other related constructs (SUP programme). Such hypothesis testing can be considered a construct validation technique (Smith 2005).

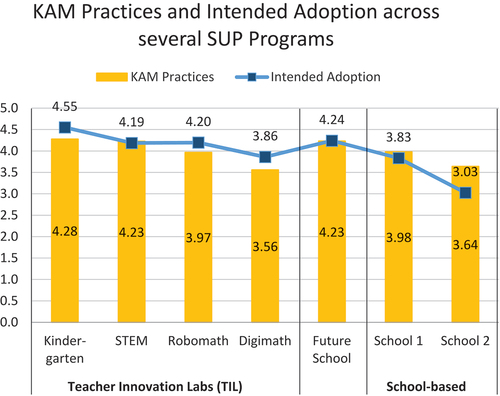

We first looked at a composite measure of the KAM scales (mean of maturation, scaffolding and appropriation practices), as well as ‘Intended Adoption’. As expected, the figure above reveals some descriptive differences between the groups. Both the TIL groups and the Future School group have slightly higher ratings than the two short-term school-based interventions. School 2 in particular scores considerably lower on both the composite KAM scale and Intended Adoption.

A one-way multivariate analysis of variance (MANOVA) was run to determine whether the type of SUP intervention (TIL, N = 58; Future School, N = 20; short-term school-based training programmes, N = 31) had an effect on the composite KAM scale or Intended Adoption. Two out of ten assumptions for the test were violated (including homogeneity of variance for the ‘Intended Adoption’ scale), but we decided the violations were not serious enough to affect the results (Laerd Statistics 2017). In addition to the one-way MANOVA, we conducted univariate ANOVAs to follow up individual effects and then used a Welch ANOVA, which is not sensitive to unequal variances and sample sizes. Results for both are reported below; a more detailed description of the assumptions checked is given in Appendix B.
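For readers unfamiliar with the Welch ANOVA, the following sketch shows how its F statistic and degrees of freedom are formed from group sizes, means and variances. It uses the textbook Welch formulas and only the Python standard library, so it stops short of a p-value (which requires the F distribution). The function name and the three groups of ratings are invented for illustration and are not the study data.

```python
from statistics import mean, variance


def welch_anova(groups):
    """Welch's one-way ANOVA: returns (F, df1, df2) for a list of samples."""
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    # Each group is weighted by n / s^2, so noisy groups count less
    weights = [n / variance(g) for n, g in zip(sizes, groups)]
    w_total = sum(weights)
    weighted_mean = sum(w * m for w, m in zip(weights, means)) / w_total

    numerator = sum(
        w * (m - weighted_mean) ** 2 for w, m in zip(weights, means)
    ) / (k - 1)
    lam = sum((1 - w / w_total) ** 2 / (n - 1) for w, n in zip(weights, sizes))
    denominator = 1 + 2 * (k - 2) * lam / (k ** 2 - 1)

    f_stat = numerator / denominator
    df1 = k - 1
    df2 = (k ** 2 - 1) / (3 * lam)  # usually not an integer
    return f_stat, df1, df2


# Hypothetical adoption ratings for three intervention types
til = [4.2, 4.5, 3.9, 4.8, 4.1]
future_school = [4.4, 4.0, 4.6, 4.3]
school_based = [3.0, 4.5, 2.5, 3.8, 4.9, 3.1]
f_stat, df1, df2 = welch_anova([til, future_school, school_based])
print(f"Welch F({df1}, {df2:.3f}) = {f_stat:.3f}")
```

The fractional error degrees of freedom explain why a Welch result is reported in a form such as F(2, 51.693) rather than with the integer error term of the standard ANOVA.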

Overall, teachers in the Future School programme scored higher in Intended Adoption (4.24 ± .40; see Note 1) and the composite KAM scale (4.23 ± .52) than teachers in TIL (4.19 ± .57 and 4.00 ± .52, respectively) and in the school-based trainings (3.57 ± .89 and 3.87 ± .60, respectively). The difference between groups on the combined dependent variables was statistically significant, F(4, 210) = 6.489, p < .0005; Wilks’ Λ = .792; partial η2 = .110, using a Bonferroni-adjusted α level of .025.

Follow-up univariate ANOVAs showed that the difference in the composite KAM scale between the groups was not statistically significant, F(2, 106) = 2.621, p = .077; partial η2 = .047. On the other hand, there was a statistically significant difference in Intended Adoption between the groups, F(2, 106) = 10.375, p < .001; partial η2 = .164. As the assumption of homogeneity of variances was violated for Intended Adoption, we additionally conducted a Welch ANOVA, which confirmed the difference (Welch’s F(2, 51.693) = 7.046, p < .001). A Games-Howell post-hoc analysis revealed that both teachers participating in TIL (diffmean = .626, p < .0005) and teachers in the Future School programme (diffmean = .667, p < .0005) had significantly higher mean scores for Intended Adoption than teachers from school-based interventions.
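The effect sizes reported for this analysis can be cross-checked from the test statistics themselves. The sketch below assumes the usual conversions: partial η2 = F·df1 / (F·df1 + df2) for a univariate F test, and partial η2 = 1 − Λ^(1/s) for Wilks’ Λ, where s is the smaller of the number of dependent variables and the effect degrees of freedom (here s = 2). The function names are illustrative only.

```python
def partial_eta_squared_from_f(f_value, df_effect, df_error):
    """Partial eta squared recovered from a univariate F test."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def partial_eta_squared_from_wilks(wilks_lambda, s):
    """Partial eta squared recovered from Wilks' lambda.

    `s` is min(number of dependent variables, effect degrees of freedom).
    """
    return 1 - wilks_lambda ** (1 / s)


# Values as reported in the text for the first MANOVA and its follow-ups
eta_manova = partial_eta_squared_from_wilks(0.792, s=2)
eta_kam = partial_eta_squared_from_f(2.621, 2, 106)
eta_adoption = partial_eta_squared_from_f(10.375, 2, 106)

print(f"MANOVA:            {eta_manova:.3f}")
print(f"Composite KAM:     {eta_kam:.3f}")
print(f"Intended Adoption: {eta_adoption:.3f}")
```

Under these assumptions the recomputed values agree with the reported .110, .047 and .164 to three decimals.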

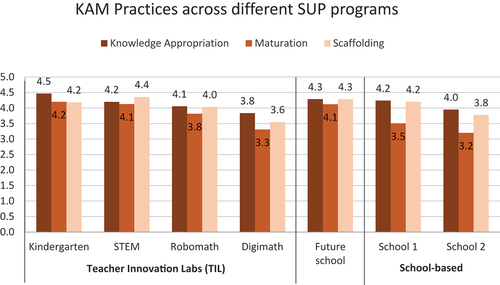

Although the composite KAM measure did not show a difference among interventions, we conducted a further analysis to compare effects on all three KAM subscales across the 7 teacher groups (N = 109). The figure above shows that while ‘knowledge appropriation’ and ‘scaffolding’ seem to be high for the school-based programmes as well, it is especially the knowledge maturation scale where low scores were obtained (3.50 ± .77 and 3.20 ± .57 for School 1 and 2, respectively).

A one-way multivariate analysis of variance was run to determine the effect of the type of SUP intervention (TIL vs. Future School vs. School-based) on knowledge appropriation, maturation and scaffolding practices. One out of ten assumptions for the test was violated (see Appendix B for details), but we decided that this violation did not affect the results (Laerd Statistics 2017), and so we present the results of the one-way MANOVA below.

The difference between the settings on the combined dependent variables was statistically significant, F(6, 208) = 3.933, p < .001; Wilks’ Λ = .807; partial η2 = .102. Future School teachers scored higher in knowledge appropriation (4.28 ± .45), maturation (4.12 ± .67) and scaffolding (4.28 ± .60) than TIL teachers (4.14 ± .50, 3.85 ± .64 and 4.02 ± .64, respectively) and teachers in school-based training (4.08 ± .65, 3.40 ± .71 and 4.06 ± .78, respectively).

Follow-up univariate ANOVAs showed that knowledge maturation scores differed significantly between the participants from different settings (F(2, 106) = 7.765, p < .001; partial η2 = .128), using a Bonferroni-adjusted α level of .025. Tukey post-hoc tests showed that, for Knowledge Maturation, participants from TIL settings had significantly higher mean scores than participants from School-based settings (diffmean = .45, p < .001), and participants from School-based settings had significantly lower mean scores than participants from ‘Future School’ settings (diffmean = −.713, p < .0005). There was no statistically significant difference between participants from TIL and Future School (diffmean = .26, p = .294).

We conclude from these results that the instrument was generally sensitive to differences between intervention groups. In particular, the ‘Intended Adoption’ scale showed differences between the two longer-term intervention programmes and the school-based ones. In terms of the KAM scales, differences were statistically significant for Knowledge Maturation. It seems that long-term programmes offer more possibility for co-creation and formalisation than short-term ones do. These results give a first indication that the KAM scales measure meaningful differences in the perception of SUP programmes. Furthermore, they indicate that there might be a relationship between knowledge maturation practices and the intended adoption of an innovation. This will be explored in more detail in the next section.

Prediction of intended adoption (RQ3)

While the previous section explored what can be considered construct validity, RQ3 deals with predictive validity. We intended to explore whether KAM practices (knowledge maturation, appropriation and scaffolding) during SUP activities would predict Intended Adoption. We calculated a multiple linear regression with the composite KAM scale as a predictor and intended adoption as the dependent variable. To compare the contribution of KAM practices to this prediction, we also included several individual-level motivational variables in the multiple regression model (see Table 4), namely self-efficacy, belongingness and psychological ownership. The dataset met 7 out of 8 assumptions for the multiple linear regression (such as linearity and independence of residuals), hence we decided to run the test for the purposes of this paper. A more in-depth presentation of the assumptions tested is given in Appendix B.

Table 4. Scales included in the multiple linear regression model.

The multiple linear regression model significantly predicted Adoption, F(4, 73) = 25.632, p < .001. The effect was strong with R2 = 0.584. The predictors Self-efficacy, Ownership and the KAM scale added statistically significantly to the prediction (p < .05). Regression coefficients and standard errors can be found in Table 5. Ownership contributed the most to the prediction of the model with a beta weight of .389, while the KAM practices were next with beta = .258.
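The reported F statistic and R2 are linked by a standard identity for the overall test of a regression with k predictors and n cases. As a worked check, using k = 4 and the error degrees of freedom n − k − 1 = 73 (which implies that n = 78 complete cases entered the model, an inference from the reported degrees of freedom rather than a figure stated in the text):

```latex
F = \frac{R^2 / k}{(1 - R^2) / (n - k - 1)}
  = \frac{0.584 / 4}{0.416 / 73}
  \approx 25.6
```

This agrees with the reported F(4, 73) = 25.632 up to rounding of R2.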

Table 5. Results of the multiple linear regression model predicting intended adoption.

In summary, the KAM scale showed some power in predicting the intended adoption of an educational innovation in a SUP context. KAM practices implemented during interventions seem to be important prerequisites for teachers to adopt the innovation in their classroom practices. These add significantly to the prediction beyond several individual motivational variables, particularly self-efficacy and psychological ownership. Moreover, the effect on intended adoption goes beyond a mere feeling of belonging to the social community (as indicated by the non-significant beta weight for the factor Belongingness). KAM practices instead seem to create possibilities for collaborative creation of practices and their appropriation.

Discussion

With this research we intended to evidence the important role that social practices play in the adoption of classroom-level technology-enhanced innovations for teaching and learning. We focused on practices of knowledge maturation, scaffolding and appropriation that can be observed in different interventions of school−university partnership (SUP) programmes where the aim is to co-create innovative teaching and learning methods with teachers and researchers, implement them in the classroom and reflect on the evidence obtained in their implementation. We will now discuss the two main contributions of this paper, namely the systematic construction and validation of a measurement scale for the KAM model as well as the contribution of social practices to the adoption of educational innovations. We close the discussion with limitations and future work.

Measuring knowledge appropriation in school−university partnerships

In SUP programmes, new knowledge is created in multi−professional teams and applied in the context of work. Teachers become aware of technologies to be used in the classroom, develop new teaching methods, learn from other participants and apply the knowledge in their professional practice. Individual learning is embedded into these knowledge creation processes. As no measurement instrument was available that would allow evidencing of these practices, we constructed and validated a self-report questionnaire instrument consisting of three subscales (knowledge maturation, scaffolding and appropriation) derived from the Knowledge Appropriation Model. After an initial expert review focusing on content validity, the measurement instrument was subjected to different reliability and validity analyses using a sample of over 100 teachers participating in different SUP programmes. The three subscales were found to be of sufficient internal consistency, as evidenced through Cronbach’s alpha, and stability, as evidenced through an analysis of test–retest reliability using one subsample of teachers.

The created measurement instrument was then validated using construct and predictive validation techniques. The instrument differentiated between SUP programme groups, especially showing differences in knowledge maturation between the long-term and the short-term programmes. These programmes were: (a) the Teacher Innovation Laboratory (TIL) on adopting educational technology innovation in teaching practices (6–10 months long); (b) Future School, a whole-school level school improvement programme aiming to adopt school-level changes (10 months long); and (c) school-based training courses to adopt certain educational technology innovations in the school (one-off workshops and courses). Teachers participating in the TIL and Future School programmes had higher ratings in knowledge maturation than those teachers participating in the school-based programmes.

The KAM scales also predicted intended adoption of the classroom-level innovations, as evidenced by a multiple linear regression where the KAM composite measure explained significant amounts of variance beyond other constructs like self-efficacy, belongingness or ownership. This shows that the KAM scales are a useful measurement device when evaluating cross-professional boundary crossing activities of teachers beyond personal skills and ownership. In our view, KAM practices describe the shared learning that happens in boundary crossing (Akkerman and Bakker 2011), specifying what Snoek et al. (2017) have called ‘dialogue’ and ‘meaning’ across different activity systems.

The importance of social practices for professional development

This last result underlines the important role that social co-creation practices have in the professional development of teachers when new teaching and learning practices using novel technologies are adopted. This supports our argument that teacher education programmes should be a longer systematic co-creation process, which enhances the integration of content knowledge with technological and didactical methodological approaches. Co-creating shared understanding, knowledge and practices, piloting and reflecting the experiences together with teachers from the same field and with experts from the university, could be seen as an important factor in the adoption process. School-based interventions include important elements like school level support, which is an important factor to adopt innovations. However, in our cases, probably teachers’ internal motivation to participate in the training and to improve their own practice played an important role in enhancing teacher leadership and agency, which then promoted knowledge appropriation.

The Teacher Innovation Laboratory programme that we followed can be seen as a suitable format to promote these processes (Leoste, Tammets and Ley 2019). The multiple linear regression we reported here gives evidence that activities aimed at co-creation of innovations function as important predictors for intended adoption. In fact, the KAM practices we evidenced had a more important effect than a mere feeling of social belonging, and were as important as feelings of self-efficacy created through the training.

Implications and limitations

It is evident that different educational technologies will be increasingly used by teachers, and the need to adopt pedagogically meaningful student-centred learning practices that integrate technologies is more important than ever. If we want teachers to adopt those innovative classroom practices, then the findings of this research are more than clear: promoting social practices through professional development programmes has an important effect, especially if the programmes focus on teachers co-creating knowledge in school–university partnerships, formalising it and applying it in the work context. We have proposed a measurement instrument based on the Knowledge Appropriation Model that can evidence those practices and shown its application in the context of several SUPs, including the Teacher Innovation Laboratory where these practices could be observed.

A possible limitation of this research lies in the fact that the measurement instrument was created at the same time as it was applied. It could be argued that a more appropriate methodology would have validated the instrument in a large sample and only then applied it in a SUP. It should be noted, however, that the application of the instrument presupposes that respondents are involved in quite complex processes of knowledge creation, adoption and boundary crossing. Creation of the instrument in an independent sample is therefore an unrealistic endeavour. We have tried to still follow an iterative process of scale construction and validation which included important steps of construct and predictive validation. We have appended the final questionnaire items in Appendix A and hope to thereby encourage further validation of the instrument in different contexts.

Through this, we would like to encourage testing of the generalisability of the instrument. Although the instrument was applied in a large sample of teachers covering all stages of basic education, it is important to consider that generalisability may still be restricted due to the common focus of our interventions on the introduction of innovative teaching methods. Also, by drawing largely on teachers recruited through the TIL programme, the context of innovation adoption was similar for all of them and the instrument would need to be adapted to different implementations of SUP.

In this sense, it is a limitation of more quantitatively focused research as reported here that it cannot readily uncover differences in SUP formats and how KAM practices were applied differently in different multi-professional teams. We have additionally tested the KAM model in qualitative research, analysing different cases of TIL applications (Leoste, Tammets and Ley 2019). Rodríguez-Triana et al. (2020) have recently applied the model in a large-scale online teacher community.

Also, a more in-depth evaluation of the TIL format needs to be undertaken as future work. We suggest that after having collected qualitative evidence and quantitative evidence in separate studies, a longitudinal mixed-methods study should be conducted in which concurrent or integrated triangulation from different sources could be applied, and we could evidence the longer-term learnings and developments that teachers experience in this setting.

Acknowledgments

This research was funded by the European Union’s Horizon 2020 research and innovation programme, grant agreement No. 669074 (CEITER, http://ceiter.tlu.ee).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Tobias Ley

Tobias Ley is a Professor of Learning Analytics and Educational Innovation at Tallinn University where he leads the Center of Excellence in Educational Innovation. His research interests are in technology-enhanced learning and learning analytics, adaptive and collaborative learning technology and educational innovation. His research has been published in over 100 scientific articles and has won several awards, including the state science award of the Estonian Academy of Sciences in 2020, and the European Research Excellence Award for Vocational Education and Training in 2018. Tobias has acted as general chair of the European Conference on Technology-enhanced Learning (EC-TEL) and was a member of the editorial board of the IEEE Transactions on Learning Technologies. Tobias holds a PhD in Psychology and Knowledge Management from the University of Graz.

Kairit Tammets

Kairit Tammets is the head of the Centre for Educational Technology (CET) and a senior researcher of educational technology. She holds a PhD in Educational Sciences from Tallinn University (Estonia). Her research focus has been on teachers’ professional learning in school-university partnerships to support the adoption of technology-enhanced learning practices. Recently Kairit has been contributing to the development of teacher inquiry tools to support teachers’ evidence-informed instructional design. Kairit won the state science award of the Estonian Academy of Sciences in 2020.

Edna Milena Sarmiento-Márquez

Edna Milena Sarmiento-Márquez is a Ph.D. candidate at the School of Educational Sciences at Tallinn University where she studies educational innovations through the lenses of school-university partnerships and living labs in education. Her research interests include educational innovations, R&D, sustainability of School-University Partnerships, evaluation of educational change, and organisational learning. Her current work focuses on evaluating changes in teaching and learning practices in School-University Partnerships to support the sustainability of innovations.

Janika Leoste

Janika Leoste is a PhD candidate at the School of Educational Sciences of Tallinn University (Estonia). Since 2021 she has also been a Business Collaboration and Innovation Chief Specialist and Junior Researcher in Educational Robotics at the School of Educational Sciences at Tallinn University. Her research includes the sustainability of technology-enhanced educational innovations and the didactics of STEAM teaching in all stages of education, including the use of robot-integrated learning in early childhood and primary education.

Maarja Hallik

Maarja Hallik is a recent graduate from the MA in Educational Innovation and Leadership programme at Tallinn University and a project coordinator at the School of Educational Sciences. She has been involved with several initiatives related to educational innovation at Tallinn University, including the EDULabs, and is currently running a programme called the Glocally Transformative Learning Lab (Proovikivi) that focuses on ‘transformative’ community-embedded project-based learning, sustainable development and active citizenship.

Katrin Poom-Valickis

Katrin Poom-Valickis is a professor of Teacher Education at the School of Educational Sciences at Tallinn University. Her main research interest is teachers’ professional development, more precisely how to support future teachers’ learning and development during their studies and first years of work. She has been a project leader and co-coordinator in several projects related to the development of Teacher Education and a member of the expert group that prepared the implementation of the induction year for novice teachers in Estonia.

Notes

1. Data are expressed as mean ± standard deviation.

References

- Akkerman, S., and T. Bruining. 2016. “Multilevel Boundary Crossing in a Professional Development School Partnership.” Journal of the Learning Sciences 25 (2): 240–284. doi:10.1080/10508406.2016.1147448.

- Akkerman, S. F., and A. Bakker. 2011. “Boundary Crossing and Boundary Objects.” Review of Educational Research 81 (2): 132–169. doi:10.3102/0034654311404435.

- Alderman, M. K. 2018. Motivation for Achievement: Possibilities for Teaching and Learning. 3rd ed. New York: Routledge.

- Arhar, J., T. Niesz, J. Brossmann, S. Koebley, K. O’Brien, D. Loe, and F. Black. 2013. “Creating a ‘Third Space’ in the Context of a University–school Partnership: Supporting Teacher Action Research and the Research Preparation of Doctoral Students.” Educational Action Research 21 (2): 218–236. doi:10.1080/09650792.2013.789719.

- Avey, J. B., B. J. Avolio, C. D. Crossley, and F. Luthans. 2009. “Psychological Ownership: Theoretical Extensions, Measurement and Relation to Work Outcomes.” Journal of Organizational Behavior 30 (2): 173–191. doi:10.1002/job.583.

- Coburn, C. E., and W. R. Penuel. 2016. “Research–Practice Partnerships in Education: Outcomes, Dynamics, and Open Questions.” Educational Researcher 45 (1): 48–54. doi:10.3102/0013189X16631750.

- Doering, A., S. Koseoglu, C. Scharber, J. Henrickson, and D. Lanegran. 2014. “Technology Integration in K–12 Geography Education Using TPACK as a Conceptual Model.” Journal of Geography 113 (6): 223–237. doi:10.1080/00221341.2014.896393.

- Durall Gazulla, E., M. Bauters, I. Hietala, T. Leinonen, and E. Kapros. 2020. “Co-creation and Co-design in Technology-enhanced Learning: Innovating Science Learning outside the Classroom.” IxD&A Interaction Design & Architecture(s) 42: 202–226.

- Eguchi, A. 2014. “Educational Robotics Theories and Practice: Tips for How to Do It Right.” In Robotics: Concepts, Methodologies, Tools, and Applications, Vol. 3, edited by Information Resources Management Association, 193–223. Hershey, PA: IGI Global. doi:10.4018/978-1-4666-4607-0.ch011.

- Engeström, Y. 2001. “Expansive Learning at Work: Toward an Activity Theoretical Reconceptualization.” Journal of Education and Work 14 (1): 133–156. doi:10.1080/13639080020028747.

- Fridin, M., and M. Belokopytov. 2014. “Acceptance of Socially Assistive Humanoid Robot by Preschool and Elementary School Teachers.” Computers in Human Behavior 33 (April): 23–31. doi:10.1016/j.chb.2013.12.016.

- Hakkarainen, K. 2009. “A Knowledge-practice Perspective on Technology-mediated Learning.” International Journal of Computer-Supported Collaborative Learning 4 (2): 213–231. doi:10.1007/s11412-009-9064-x.

- Hall, A. B., and J. Trespalacios. 2019. “Personalized Professional Learning and Teacher Self-Efficacy for Integrating Technology in K–12 Classrooms.” Journal of Digital Learning in Teacher Education 35 (4): 221–235. doi:10.1080/21532974.2019.1647579.

- Harris, J. B., P. Mishra, and M. Koehler. 2009. “Teachers’ Technological Pedagogical Content Knowledge and Learning Activity Types.” Journal of Research on Technology in Education 41 (4): 393–416. doi:10.1080/15391523.2009.10782536.

- Hu, S., K. Laxman, and K. Lee. 2020. “Exploring Factors Affecting Academics’ Adoption of Emerging Mobile Technologies-an Extended UTAUT Perspective.” Education and Information Technologies 25 (5): 4615–4635. doi:10.1007/s10639-020-10171-x.

- Koo, T. K., and M. Y. Li. 2016. “A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research.” Journal of Chiropractic Medicine 15 (2): 155–163. doi:10.1016/j.jcm.2016.02.012.

- Lawshe, C. H. 1975. “A Quantitative Approach to Content Validity.” Personnel Psychology 28 (4): 563–575. doi:10.1111/j.1744-6570.1975.tb01393.x.

- Leoste, J., and M. Heidmets. 2018. “The Impact of Educational Robots as Learning Tools on Mathematics Learning Outcomes in Basic Education.” In Digital Turn in Schools—Research, Policy, Practice, edited by T. Väljataga and M. Laanpere, 203–217, Lecture Notes in Educational Technology, Heidelberg: Springer.

- Leoste, J., K. Tammets, and T. Ley. 2019. “Co-Creating Learning Designs in Professional Teacher Education: Knowledge Appropriation in the Teacher’s Innovation Laboratory.” Interaction Design and Architecture(s) Journal 42: 131–163. http://www.mifav.uniroma2.it/inevent/events/idea2010/doc/42_7.pdf

- Ley, T., R. Maier, S. Thalmann, L. Waizenegger, K. Pata, and A. Ruiz-Calleja. 2020. “A Knowledge Appropriation Model to Connect Scaffolded Learning and Knowledge Maturation in Workplace Learning Settings.” Vocations and Learning 13 (1): 91–112. doi:10.1007/s12186-019-09231-2.

- Pepin, B., G. Gueudet, M. Yerushalmy, L. Trouche, and D. Chazan. 2015. “E-textbooks In/for Teaching and Learning Mathematics: A Disruptive and Potentially Transformative Educational Technology.” In Handbook of International Research in Mathematics Education, edited by L. English and D. Kirshner, 636–661. 3rd ed. New York: Taylor & Francis.

- Qvortrup, L. 2016. “Capacity Building: Data- and Research-informed Development of Schools and Teaching Practices in Denmark and Norway.” European Journal of Teacher Education 39 (5): 564–576. doi:10.1080/02619768.2016.1253675.

- Radovan, M., and N. Kristl. 2017. “Acceptance of Technology and Its Impact on Teacher’s Activities in Virtual Classroom: Integrating UTAUT and CoI into a Combined Model.” Turkish Online Journal of Educational Technology 16 (3): 11–22.

- Rodríguez-Triana, M. J., L. P. Prieto, T. Ley, T. De Jong, and D. Gillet. 2020. “Social Practices in Teacher Knowledge Creation and Innovation Adoption: A Large-scale Study in an Online Instructional Design Community for Inquiry Learning.” International Journal of Computer-Supported Collaborative Learning 15 (4): 445–67. doi:10.1007/s11412-020-09331-5.

- Sheffield, C. C. 2011. “Navigating Access and Maintaining Established Practice: Social Studies Teachers’ Technology Integration at Three Florida Middle Schools.” Contemporary Issues in Technology and Teacher Education 11: 3.

- Smith, G. T. 2005. “On Construct Validity: Issues of Method and Measurement.” Psychological Assessment 17 (4): 396–408. doi:10.1037/1040-3590.17.4.396.

- Snoek, M., J. Bekebrede, F. Hanna, T. Creton, and H. Edzes. 2017. “The Contribution of Graduation Research to School Development: Graduation Research as a Boundary Practice.” European Journal of Teacher Education 40 (3): 361–378. doi:10.1080/02619768.2017.1315400.

- Spiteri, M., and S. N. Chang Rundgren. 2017. “Maltese Primary Teachers’ Digital Competence: Implications for Continuing Professional Development.” European Journal of Teacher Education 40 (4): 521–534. doi:10.1080/02619768.2017.1342242.

- Laerd Statistics. 2017. “One-way ANOVA Using SPSS Statistics. Statistical Tutorials and Software Guides.” Retrieved from https://statistics.laerd.com/

- Šumak, B., and A. Šorgo. 2016. “The Acceptance and Use of Interactive Whiteboards among Teachers: Differences in UTAUT Determinants between Pre- and Post-Adopters.” Computers in Human Behavior 64 (November): 602–620. doi:10.1016/j.chb.2016.07.037.

- Swennen, A. 2020. “Experiential Learning as the ‘New Normal’ in Teacher Education.” European Journal of Teacher Education 43 (5): 657–659. doi:10.1080/02619768.2020.1836599.

- Teo, T. 2011. “Factors Influencing Teachers’ Intention to Use Technology: Model Development and Test.” Computers & Education 57 (4): 2432–2440. doi:10.1016/j.compedu.2011.06.008.

- Twining, P., J. Raffaghelli, P. Albion, and D. Knezek. 2013. “Moving Education into the Digital Age: The Contribution of Teachers’ Professional Development.” Journal of Computer Assisted Learning 29 (5): 426–437. doi:10.1111/jcal.12031.

- Van Der Spoel, I., O. Noroozi, E. Schuurink, and S. Van Ginkel. 2020. “Teachers’ Online Teaching Expectations and Experiences during the Covid19-pandemic in the Netherlands.” European Journal of Teacher Education 43 (4): 623–638. doi:10.1080/02619768.2020.1821185.

- Venkatesh, V., M. G. Morris, G. B. Davis, and F. D. Davis. 2003. “User Acceptance of Information Technology: Toward a Unified View.” MIS Quarterly: Management Information Systems 27 (3): 425–478. doi:10.2307/30036540.

- Voogt, J., T. Laferrière, A. Breuleux, R. C. Itow, D. T. Hickey, and S. McKenney. 2015. “Collaborative Design as a Form of Professional Development.” Instructional Science 43 (2): 259–282. doi:10.1007/s11251-014-9340-7.

- Wang, W. T., and C. C. Wang. 2009. “An Empirical Study of Instructor Adoption of Web-based Learning Systems.” Computers & Education 53 (3): 761–774. doi:10.1016/j.compedu.2009.02.021.

- Webb, M., and M. Cox. 2004. “A Review of Pedagogy Related to Information and Communications Technology.” Technology, Pedagogy and Education 13 (3): 235–286. doi:10.1080/14759390400200183.

- Wenger, E. 1998. Communities of Practice: Learning, Meaning, and Identity. Cambridge: Cambridge University Press.

- Zhao, Y. 2007. “Social Studies Teachers’ Perspectives of Technology Integration.” Journal of Technology and Teacher Education 15 (3): 311–333.

Appendix A

Appendix B

Assumptions checked for the MANOVA (KAM composite and Intended Adoption) reported for RQ2:

Preliminary assumption checking revealed that data was normally distributed for each group in the case of S3 but not in Adoption scores, as assessed by Shapiro–Wilk test (p > .05). Despite the violations to the normality assumption, we decided to run the test regardless because the one-way MANOVA is fairly ‘robust’ to deviations from normality.

There were no multivariate outliers, as assessed by Mahalanobis distance (p > .001). There were, however, univariate outliers in the Adoption scores, as assessed by inspection of a boxplot for values greater than 1.5 box-lengths from the edge of the box. We decided to keep them in the analysis because we do not believe the result will be materially affected (Laerd Statistics 2017).

There was a linear relationship between Adoption and S3 scores in each group, as assessed by scatterplot.

There was no multicollinearity, as assessed by Pearson correlation (r = .644, p < .001).

There was homogeneity of variance–covariance matrices, as assessed by Box’s test of equality of covariance matrices (p = .010).

There was homogeneity of variances for S3 but not for Adoption, as assessed by Levene’s Test of Homogeneity of Variance (p > .05 and p = .021, respectively). Because of this violation, the standard one-way ANOVA could not be interpreted for Adoption. We therefore report the Welch ANOVA for Adoption and ran a Games–Howell post-hoc test, which does not assume equal variances.
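To show what the Welch correction does when group variances differ, the following Python sketch computes the Welch F statistic and its degrees of freedom from raw group scores. The group data and the function name are hypothetical, and the p-value is omitted because it needs an F-distribution routine from a statistics library.

```python
def welch_anova(groups):
    """Welch's one-way ANOVA: returns (F, df1, df2) without assuming equal variances."""
    k = len(groups)
    ns = [len(g) for g in groups]
    means = [sum(g) / len(g) for g in groups]
    variances = [sum((x - m) ** 2 for x in g) / (len(g) - 1)
                 for g, m in zip(groups, means)]
    # Each group is weighted by the precision of its mean, n / s^2.
    weights = [n / v for n, v in zip(ns, variances)]
    w_total = sum(weights)
    grand_mean = sum(w * m for w, m in zip(weights, means)) / w_total
    between = sum(w * (m - grand_mean) ** 2
                  for w, m in zip(weights, means)) / (k - 1)
    lam = sum((1 - w / w_total) ** 2 / (n - 1) for w, n in zip(weights, ns))
    f_stat = between / (1 + 2 * (k - 2) * lam / (k ** 2 - 1))
    df1 = k - 1
    df2 = (k ** 2 - 1) / (3 * lam)
    return f_stat, df1, df2

# Hypothetical Adoption scores for three programme groups.
programme_groups = [
    [4.0, 4.2, 3.8, 4.5, 4.1, 3.9],
    [3.1, 4.8, 2.5, 4.9, 3.0, 4.4],
    [3.6, 3.7, 3.5, 3.9, 3.8, 3.6],
]
f_stat, df1, df2 = welch_anova(programme_groups)
print("Welch F =", round(f_stat, 3), "df1 =", df1, "df2 =", round(df2, 2))
```

With only two groups the statistic reduces to the square of the Welch t statistic, which gives a simple way to check the computation by hand.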

Assumptions checked for the MANOVA (individual KAM scales) reported for RQ2:

Preliminary assumption checking revealed that the data were not normally distributed, as assessed by Shapiro–Wilk test (p < .05). There was one univariate outlier, as assessed by boxplot, which was kept in the analysis because it was considered a genuinely unusual value. There were no multivariate outliers, as assessed by Mahalanobis distance (p > .001). There were linear relationships, as assessed by scatterplot. There was no multicollinearity, as assessed by Pearson correlation for Appropriation–Maturation (r = .612, p < .001), Appropriation–Scaffolding (r = .667, p < .001) and Maturation–Scaffolding (r = .635, p < .001). There was homogeneity of variance–covariance matrices, as assessed by Box’s test of equality of covariance matrices (p = .159), and homogeneity of variances, as assessed by Levene’s Test of Homogeneity of Variance (p > .05).

Assumptions checked for the multiple linear regression model reported for RQ3:

To assess linearity, scatterplots of Adoption against each of the four predictor variables were plotted with a superimposed regression line. Visual inspection of these plots indicated a linear relationship between the variables. There was independence of residuals, as assessed by a Durbin–Watson statistic of 1.899. The assumption of homoscedasticity was not met, as assessed by visual inspection of a plot of studentized residuals versus unstandardised predicted values. There was no evidence of multicollinearity, as assessed by tolerance values greater than 0.1. The data did not present outliers: there were no studentized deleted residuals greater than ±3 standard deviations, no leverage values greater than 0.2, and no values for Cook’s distance above 1. The assumption of normality was met, as assessed by a Q-Q plot.
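Several of the regression diagnostics named above can be sketched in a few lines of Python. The example below is deliberately simplified to a single predictor, whereas the reported model has four; the data and the function name are hypothetical. It computes the Durbin–Watson statistic, the leverage values and Cook's distance from an ordinary least squares fit.

```python
def simple_ols_diagnostics(x, y):
    """Fit y = a + b*x by least squares and return common diagnostics."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sse = sum(e ** 2 for e in residuals)
    n_params = 2  # intercept and slope
    mse = sse / (n - n_params)
    # Durbin-Watson: values near 2 suggest no first-order autocorrelation.
    durbin_watson = sum((residuals[i] - residuals[i - 1]) ** 2
                        for i in range(1, n)) / sse
    # Leverage: diagonal of the hat matrix for a one-predictor model.
    leverage = [1 / n + (xi - mx) ** 2 / sxx for xi in x]
    # Cook's distance combines residual size and leverage for each case.
    cooks = [e ** 2 / (n_params * mse) * h / (1 - h) ** 2
             for e, h in zip(residuals, leverage)]
    return {"residuals": residuals, "durbin_watson": durbin_watson,
            "leverage": leverage, "cooks": cooks}

# Hypothetical predictor and Adoption scores.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 2.9, 4.2, 4.8, 6.1, 6.9, 8.2, 8.8]
diag = simple_ols_diagnostics(x, y)
influential = [i for i, d in enumerate(diag["cooks"]) if d > 1]
print("Durbin-Watson:", round(diag["durbin_watson"], 3))
print("cases with Cook's distance above 1:", influential)
```

The cut-off of 1 for Cook's distance follows the rule of thumb cited in the text. The leverage cut-off of 0.2 is meaningful only for samples much larger than this toy example, since leverage values always sum to the number of estimated parameters.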