ABSTRACT

Interventions to improve mental health can target individuals, working groups, their leaders, or organisations, also known as the Individual, Group, Leader, and Organisational (IGLO) levels of intervention. Evaluating such interventions in organisational settings is complex and requires sophisticated evaluation designs that take the intervention process into account. In the present systematic literature review, we present the state of the art of quantitative measures of process evaluation. We identified 39 papers. We found that measures had been developed to explore the organisational context, the intervention design, and the mental models of the intervention and its activities. Quantitative process measures are often poorly validated, and only around half of the studies linked the process to intervention outcomes. Fifteen studies used mixed methods for process evaluation. Most often, a qualitative process evaluation was used to understand unexpected intervention outcomes. Although theoretical process evaluation frameworks exist, they were seldom employed; even when they were used, they were rarely acknowledged, and only selected elements were included. Based on our synthesis, we propose a new framework for evaluating interventions, the Integrated Process Evaluation Framework (IPEF), together with reflections on how we may optimise the use of quantitative process evaluation in conjunction with qualitative process evaluation.

Ensuring good mental health is a key priority in the workplace. In the EU-28, 10% of the population suffered from either anxiety or depression in 2016 (OECD/EU, 2018). The costs of poor mental health to organisations are immense, exceeding 4% of GDP in the EU-28 in 2015 (OECD/EU, 2018). In the financial year 2016–2017, mental health issues cost UK organisations £34.9 billion, of which reduced productivity accounted for £21.2 billion, sickness absence for £10.6 billion, and workers leaving the organisation for the remaining £3.1 billion (Parsonage & Saini, 2017). There is thus a strong motivation for organisations to implement interventions in the workplace to promote good worker mental health. In the present systematic literature review, we review the existing literature on workplace interventions aimed at promoting good mental health, focusing on the evaluation of the processes of such interventions and how these processes may be captured using quantitative measures. We propose a novel framework for evaluating the processes and outcomes of workplace interventions, the Integrated Process Evaluation Framework (IPEF). The overall aim is to develop our understanding of the current state of the art of quantitative process evaluation, identify gaps and limitations in the current literature, and propose ways forward for conducting high-quality process evaluation studies.

The contributions of our literature review are fourfold. First, reviews and meta-analyses have pitched individual- and organisational-level interventions against each other (Richardson & Rothstein, 2008; Van der Klink et al., 2001). Integrative reviews, however, have concluded that multi-level interventions are more effective than single-level interventions (LaMontagne et al., 2007; LaMontagne et al., 2014). Day and Nielsen (2017) proposed an intervention taxonomy based on four levels of intervention: the Individual, Group, Leader, and Organisational (IGLO) levels. We focus on interventions targeting one or more of these levels.

Second, we focus on the process evaluation of such interventions. Nytrø et al. (2000, p. 214) defined process evaluation as evaluating “individual, collective or management perceptions and actions in implementing any intervention and their influence on the overall result of the intervention”. Process evaluation has many benefits: it enables researchers to understand the outcomes of an intervention and draw conclusions about the generalisability, applicability, and transferability of the intervention (Armstrong et al., 2008), informing the design of future interventions (von Thiele Schwarz et al., 2015). Ongoing process evaluation may help organisations take corrective action if the intervention is not being implemented according to plan, minimising the risk of negative or unwanted side effects (von Thiele Schwarz et al., 2015). Narrative and systematic reviews have explored the impact of the intervention process on intervention outcomes (Egan et al., 2009; Havermans et al., 2016; Montano et al., 2014; Murta et al., 2007; Nielsen & Abildgaard, 2013; Nielsen & Randall, 2013). However, these reviews focused only on organisational interventions and paid limited attention to the methods used to capture the intervention’s processes.

Third, we focus on quantitative process evaluation measures to understand the current state of the art. Intervention processes can be evaluated using both qualitative and quantitative methods, which may complement each other (Abildgaard et al., 2016). In light of this, we also examine the quantitative process evaluation measures adopted in mixed methods studies and how qualitative data have been integrated with the quantitative data.

Fourth and finally, despite calls for ongoing process evaluation (Nielsen & Randall, 2013), only one process evaluation framework has focused on what data need to be collected at which stages of the process (Nielsen & Abildgaard, 2013). This framework was developed for organisational interventions only and did not discuss which methods may best capture different elements of the intervention process. We propose a framework specifying which measures to include in quantitative process evaluation to capture the different levels of intervention and when to measure them during the intervention, supplemented by cost-effective qualitative methods.

Workplace interventions to improve workers’ mental health

In the present review, we focus on interventions to promote mental health in the workplace. We use Keyes’ (2014) definition of mental health, which contains three dimensions: emotional wellbeing (e.g. happiness, interest in life, and (job) satisfaction), psychological wellbeing (i.e. mastering one’s life, having good relationships with others, being satisfied with one’s life), and social wellbeing (i.e. making a contribution and positive functioning). We use the IGLO framework, which suggests that interventions may target the Individual, Group, Leader, or Organisational level (Day & Nielsen, 2017; Nielsen et al., 2017). Interventions at the Individual level (I) focus on building the individual’s resources, improving and maintaining workers’ wellbeing by engaging in recovery activities, adopting positive coping styles and strategies (e.g. stress management training), and developing resilience. Group-level (G) interventions target work groups and teams with the goal of improving group-level processes and the quality of work group interactions and relationships, e.g. civility or team-building training. Leader-level (L) interventions target the immediate manager, i.e. the line manager. L-level interventions help foster worker (and leader) health, either by providing leaders with information or resources about improving their own mental health and the mental health of subordinates (Kelloway & Barling, 2010) or by training leaders to become better leaders more generally, i.e. to improve their leadership styles. Examples of interventions at the L-level include leadership training and mental health awareness training.

Finally, at the Organisational level (O), interventions aim to change the policies, practices, and procedures of the organisation to improve psychosocial working conditions by changing the way work is organised, designed, and managed, e.g. through improving social support, increasing job autonomy, or decreasing hindering job demands (Semmer, 2011). Participatory organisational interventions are one example: employees and managers jointly define the process and the methods used in the intervention, jointly plan which changes to make to the way work is organised, designed, and managed, and collaborate to implement and evaluate the effects of these changes (Nielsen et al., 2010).

Workplace interventions can address multiple levels; participatory interventions, for example, often include a G-level intervention in which work groups and teams develop and implement actions to improve working conditions and mental health in their area (i.e. the O-level). In the present literature review, we focus on workplace interventions to improve worker mental health at all four IGLO levels and explore which quantitative process measures were included in their evaluation. We explore which processes facilitate the translation of intervention components, e.g. implementation and action plans, or the extent to which skills and knowledge acquired during training are generalised and maintained in the workplace setting (Baldwin & Ford, 1988). Understanding which intervention processes may be important at the IGLO levels enables us to inform future studies using quantitative process evaluation and, ultimately, to learn which intervention processes facilitate successful intervention outcomes.

The importance of process evaluation

Process evaluation uses qualitative and quantitative methods (Abildgaard et al., 2016). Quantitative process evaluation collects information about processes in ongoing or follow-up questionnaires, most often distributed to employees and managers (Abildgaard et al., 2016). Qualitative methods capture workers’, managers’, and other key stakeholders’ perceptions of the intervention process (Abildgaard et al., 2016; Hasson et al., 2014), mostly using semi-structured interviews (Havermans et al., 2016). Both methods are used in conjunction with pre- and post-intervention quantitative effect evaluation (Havermans et al., 2016).

Each method has its strengths and weaknesses. The strengths of quantitative methods are threefold. First, collecting and analysing quantitative measures in follow-up questionnaires is less time-consuming than collecting and analysing qualitative data, enabling process data to be collected in large-scale studies. Information about the intervention process can be gathered from all participants, increasing the likelihood of obtaining a representative picture of perceptions of the intervention process (Nielsen et al., 2007). Second, standardised measures enable the comparison of the intervention process across a range of interventions, testing the validity and generalisability of constructs and findings (Hinkin, 1998). Third, statistical analyses can estimate how much of the variation in intervention outcomes can be explained by the intervention process (Nielsen & Randall, 2012). The main limitations of quantitative methods are their predetermined design, which makes it imperative that measures accurately capture the most crucial intervention processes, and the need for a sufficient sample size to avoid Type II errors (Maxwell et al., 2008).
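The sample-size limitation can be made concrete. The sketch below estimates how many participants are needed to detect a correlation of a given size between a process measure and an outcome. It is a minimal illustration, assuming a two-sided test of a simple bivariate correlation, the Fisher z approximation, and conventional defaults (α = .05, power = .80, i.e. a Type II error rate of .20); the function name is hypothetical and nothing here is taken from the reviewed studies.

```python
import math
from statistics import NormalDist


def required_sample_size(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate N needed to detect a correlation r with a two-sided test.

    Fisher z approximation: N = ((z_{1-alpha/2} + z_{power}) / atanh(r))^2 + 3
    """
    if not 0 < abs(r) < 1:
        raise ValueError("r must be non-zero and lie strictly between -1 and 1")
    z = NormalDist()                       # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)     # critical value for a two-sided test
    z_beta = z.inv_cdf(power)              # quantile for power = 1 - Type II error rate
    effect = math.atanh(abs(r))            # Fisher z-transformed effect size
    return math.ceil(((z_alpha + z_beta) / effect) ** 2 + 3)


if __name__ == "__main__":
    # Hypothetical effect sizes for a process-outcome correlation
    for r in (0.1, 0.3, 0.5):
        print(f"r = {r}: N = {required_sample_size(r)}")
```

Under these assumptions, a moderate correlation (r = .3) needs roughly 85 participants, whereas a small one (r = .1) needs several hundred; clustering of employees within work groups would raise these figures further.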

Understanding how an intervention works can inform the design of future interventions in one or more organisational settings (Nielsen & Noblet, 2018). Previous research (Nielsen & Noblet, 2018; Nielsen & Randall, 2013) found that key organisational intervention processes are management support, participation, and integration of the intervention into the organisational context. Similarly, in the training literature covering I-, G-, and L-level interventions, individual and organisational contextual factors, together with the design of the intervention, have been found to predict training transfer, i.e. the extent to which skills and knowledge are transferred into the workplace and maintained over time (Baldwin & Ford, 1988; Blume et al., 2019). Regardless of the intervention level, intervention processes are likely to influence the intervention’s outcomes; it is thus crucial to understand which intervention levels employ quantitative process evaluation and whether such evaluation considers the multi-level nature of interventions (LaMontagne et al., 2007, 2014).

We, therefore, formulated our first research question:

Research Question 1: What levels of IGLO intervention include quantitative process measures in their evaluation?

The Nielsen and Randall (2013) framework was developed for O-level interventions only; however, the intervention process is also likely to influence intervention outcomes at the I, G, and L levels of intervention. For example, training design and satisfaction with the training design predict training transfer (Blume et al., 2010). Based on the Nielsen and Randall framework, Havermans et al. (2016) conducted a systematic literature review of O-level interventions, including qualitative and quantitative process evaluation studies. In this review, we focus explicitly on quantitative process evaluation at the IGLO levels. Drawing on the Nielsen and Randall (2013) framework, we explore which aspects of the context, the intervention, and participants’ mental models are captured using quantitative process evaluation, and we formulated our second research question:

Research Question 2: Which quantitative measures of process evaluation are applied in workplace interventions targeting the IGLO levels?

Abildgaard et al. (2016) found that researchers conducting quantitative process evaluation use both standardised and tailored, self-developed measures. Using standardised measures reduces the workload of the evaluator and allows findings to be compared across studies (Hinkin, 1998). Tailored measures may better capture the specific intervention; however, concerns arise about their validity and reliability (Boateng et al., 2018; Morgeson & Humphrey, 2006). The Intervention Process Measure (IPM) consists of five process measures capturing central elements of the intervention process, which can form the basis for tailoring measures to specific interventions in different settings (Randall et al., 2009). The use of unvalidated measures may affect the conclusions drawn from studies (Randall et al., 2009). A crucial question therefore becomes whether validated measures are used and, where tailored measures are used, to what extent they have been validated.

Quantitative process evaluation enables the integration of process evaluation and effect evaluation to analyse how much of the variation in outcomes can be accounted for by the intervention process (Nielsen & Randall, 2012). The extent to which such integration is standard practice is unknown, so we do not know whether this potential benefit is being reaped. We therefore review the types of analyses used in quantitative process evaluation and formulated our third research question:

Research Question 3: What type of validation and analyses of quantitative process measures are conducted and do studies establish a link between intervention process and outcomes?

Quantitative process evaluation reduces data collection time, allows the use of standardised measures, enables statistical explanations of variance in intervention outcomes, and allows multi-level analyses that integrate the relative importance of process measures at multiple intervention levels (Randall et al., 2009). Mixed methods designs enable researchers to collect, analyse, and mix qualitative and quantitative data to better understand research problems or phenomena (Creswell & Plano Clark, 2007; Nielsen & Randall, 2013). In intervention evaluation research, mixed methods studies have used qualitative methods to capture participants’ acceptance of the intervention, the quality of the implementation process, and how the intervention is integrated into existing practices and procedures, supplementing quantitative effect evaluation (Nastasi et al., 2007). Abildgaard et al. (2016) explored which information about the intervention process was offered by quantitative and qualitative process evaluation and found that qualitative and quantitative data complemented each other in organisational interventions. Four themes were identified in both types of data (information about changes, line managers’ attitudes and actions, improved psychosocial work environment, and need for organisational intervention), but whereas the qualitative methods offered depth and detail, the quantitative measures offered breadth and representativeness. Bryman (2006) suggested that in mixed methods designs, qualitative methods can either be used to expand on quantitative results by providing additional information explaining mechanisms, or be complementary, such that the two methods explore the same phenomena, with qualitative methods providing richer insights into those phenomena. To date, we know little about how mixed methods process evaluation in IGLO-level interventions employs qualitative and quantitative methods.
This knowledge is crucial if we are to develop cost-effective process evaluation that reaps the benefits of both methods. We therefore formulated the following research question:

Research Question 4: In mixed methods studies, which aspects of the intervention process are captured by quantitative methods and which by qualitative methods?

Steckler and Linnan (2002) identified seven dimensions of process evaluation: Context refers to the environmental factors that may influence implementation. Reach refers to the proportion of targeted participants who actually participated. Dose delivered refers to the number of units delivered, and Dose received to the actual number of units received by participants. Fidelity is similar to RE-AIM’s implementation, i.e. protocol adherence, and Implementation is the composite rating of the execution and receipt of the intervention. Finally, Recruitment refers to how many participants were invited.
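Several of these dimensions reduce to simple counts and ratios. The sketch below computes reach (the proportion of targeted participants who actually participated) and expresses dose received relative to dose delivered. The record structure, field names, and the ratio used for dose received are illustrative assumptions, not measures prescribed by Steckler and Linnan (2002).

```python
from dataclasses import dataclass, field


@dataclass
class ProcessRecord:
    """Hypothetical process indicators for one intervention site."""
    targeted: int                 # employees the intervention aimed to reach
    units_delivered: int          # sessions or modules actually delivered (dose delivered)
    units_received: list[int] = field(default_factory=list)  # units attended, one entry per participant

    @property
    def participated(self) -> int:
        """Number of employees who took part (one entry each in units_received)."""
        return len(self.units_received)

    def reach(self) -> float:
        """Proportion of the targeted group that actually participated."""
        return self.participated / self.targeted

    def mean_dose_received(self) -> float:
        """Average number of delivered units received per participant."""
        if not self.units_received:
            return 0.0
        return sum(self.units_received) / self.participated

    def dose_received_ratio(self) -> float:
        """Mean dose received as a share of the dose delivered (assumed summary)."""
        return self.mean_dose_received() / self.units_delivered


if __name__ == "__main__":
    # Hypothetical site: 10 employees targeted, 6 sessions delivered, 4 participants
    site = ProcessRecord(targeted=10, units_delivered=6, units_received=[6, 3, 6, 3])
    print(f"reach = {site.reach():.2f}")
    print(f"dose received = {site.dose_received_ratio():.2f} of dose delivered")
```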

Realist evaluation is a more loosely formulated evaluation framework, which seeks to answer the question: “what works for whom in which circumstances” (Pawson & Tilley, 1997). This question should be answered by testing CMO configurations, which examine the Contexts in which certain Mechanisms are activated and the Outcomes brought about by such activation (Pawson & Tilley, 1997).

In OHP, a few evaluation frameworks have been developed to evaluate O-level interventions. Examples include the IPM, with its five dimensions of the intervention process (Randall et al., 2009), and Nielsen and Randall’s (2013) Framework for Evaluating Organisational-level Interventions mentioned above. Nielsen and Abildgaard (2013) extended this framework and, based on realist evaluation principles, argued that each intervention phase (i.e. initiation, screening, action planning, implementation, and evaluation of effects) should be evaluated to understand how one phase influences what happens in the next. To understand whether formalised process evaluation frameworks are used, allowing for comparison and synthesis across intervention studies, we formulated our final research question:

Research Question 5: Which theoretical process evaluation frameworks are used in studies including quantitative process evaluation? Moreover, what elements of such frameworks are used?

Method

Following the principles of PRISMA-P (Shamseer et al., 2015), we conducted a systematic literature review to provide an overview of the state of the art of empirical papers in OHP using quantitative process evaluation measures at the IGLO levels of intervention and to develop a research framework together with a future research agenda (Suri & Clarke, 2009).

Search strategy

We searched for empirical papers in the major databases (PsycARTICLES, PsycINFO, Social Policy and Practice, and Medline via OvidSP; Scopus; CINAHL via EBSCO; Web of Science/Social Science Citation Index (SSCI); Cochrane Library; ProQuest). Inclusion criteria were: (1) empirical papers including at least one quantitative process evaluation measure; (2) papers published in English in peer-reviewed journals between January 2000 and April 2020 (the date of our search); (3) papers examining a workplace intervention focusing on at least one of the IGLO levels, excluding intervention studies that focused on employees not in the workplace, e.g. return-to-work interventions; and (4) papers that included mental health, as per the definition of Keyes (2014), as the main outcome. We accepted any kind of study design, including Cluster Randomised Controlled Trials (CRCTs) and quasi-experiments. We identified search terms based on previous literature reviews of process evaluation (e.g. Havermans et al., 2016) and discussed these among the study team. The search terms have been published on Figshare: https://figshare.com/articles/figure/Search_terms/19626828
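The four inclusion criteria can be read as a conjunction applied to each candidate record. The sketch below encodes them as a screening predicate; the record fields are hypothetical, and treating 30 April 2020 as the end of the search window is an assumption. In the review itself, screening was carried out by two reviewers, not by code; the sketch only makes the logic of the criteria explicit.

```python
from dataclasses import dataclass, field
from datetime import date

IGLO_LEVELS = {"I", "G", "L", "O"}
SEARCH_START = date(2000, 1, 1)
SEARCH_END = date(2020, 4, 30)  # assumption: last day of the search month


@dataclass
class CandidatePaper:
    """Hypothetical screening record; field names are illustrative only."""
    published: date
    language: str
    peer_reviewed: bool
    n_quant_process_measures: int
    iglo_levels: set[str] = field(default_factory=set)
    workers_in_workplace: bool = True        # False for, e.g., return-to-work studies
    mental_health_main_outcome: bool = False


def meets_inclusion_criteria(p: CandidatePaper) -> bool:
    """Return True only if all four inclusion criteria hold."""
    c1 = p.n_quant_process_measures >= 1                               # (1) quantitative process measure
    c2 = (p.language == "English" and p.peer_reviewed
          and SEARCH_START <= p.published <= SEARCH_END)                # (2) English, peer-reviewed, in window
    c3 = p.workers_in_workplace and bool(p.iglo_levels & IGLO_LEVELS)  # (3) workplace, at least one IGLO level
    c4 = p.mental_health_main_outcome                                   # (4) mental health as main outcome
    return c1 and c2 and c3 and c4


if __name__ == "__main__":
    paper = CandidatePaper(date(2015, 6, 1), "English", True, 2, {"G", "O"}, True, True)
    print(meets_inclusion_criteria(paper))
```

The two papers published between April and July 2020 were added outside the database search and would fall outside this date window.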

Selection of relevant papers

We identified a total of 13,792 papers with titles broadly related to workplace mental health interventions. Once duplicates had been removed, 13,525 papers remained. Two reviewers then screened the titles, omitting papers that were not intervention studies or did not include our outcome search terms, which left 248 papers. Next, the same two reviewers read the abstracts and eliminated a further 160 papers that did not mention process evaluation terms. They then reviewed the remaining 88 papers and eliminated a further 53 because they lacked a focus on quantitative process evaluation. A further six studies were identified through manual searches, and an additional two, published between April 2020 and July 2020, were included, leaving a final total of 39 papers. Due to the diversity of the included papers and the relatively low number of studies at each level, we were unable to conduct a meta-analysis. As the objective was to create a narrative around the “what” and “how” of quantitative process evaluation, we did not apply quality appraisal criteria to the included papers; rather, we focused on the quality of the quantitative process measures included.

Systematic review

We reviewed the final 39 papers in detail and extracted the following information: study design, country of origin, level of intervention at the IGLO levels, sector, quantitative process measures, sample size and response rate, analyses conducted, a summary of quantitative and qualitative results (to enable comparison), and evaluation framework used. This information has been summarised in tables and published on Figshare: https://figshare.com/articles/dataset/Tables/19626813. Table 1 presents an overview of the papers, including the quantitative process evaluation measures used.

Table 1. Overview of papers in the review.

Results

None of the 39 studies were published before 2005, and the majority (23 of 39) were published between 2015 and 2020. One study included participants from 36 Western countries. Twenty-one studies were conducted in the Scandinavian countries. Eleven were from Denmark: four papers were produced from one study (26, 27, 28, 32), three papers from another (1, 2, 38), and two papers from a third (3, 15). Eight were from Sweden (three papers were published from one study: 6, 37, 38), one from Finland, and one from Norway. Eleven papers were from the remainder of Europe (the Netherlands, Germany, Switzerland, and the UK), two from North America, four from Australia, and one from Japan. Two papers from Switzerland used the same study sample (14, 19).

Following the International Standard Classification of Occupations (ILO, 2012), seven studies were conducted in the healthcare sector, seven in mixed settings, three in manufacturing, four in education, two in the financial sector, and two in the government and public sector. The most frequent research design was a quasi-experimental design without a control group (18 studies), followed by the CRCT design (nine studies). Five studies applied a quasi-experimental design with a control group, and four studies (all focused on interventions targeting individuals) employed a CRT trial design. One study used a post-intervention design only. Fifteen papers employed a mixed methods approach.

In response to research question 1, “What levels of IGLO intervention include quantitative process measures in their evaluation?”, we found that 13 papers focused on one level of intervention: eight papers at the I-level (9, 14, 16, 17, 23, 25, 30, 39), none at the G-level, two at the L-level (24, 35), and three at the O-level (4, 18, 34). Nineteen papers focused on two levels of intervention (1/2/38, 5, 7, 10–13, 21, 22, 27/28/32, 31, 33, 36, 6/37/38), primarily a combination of the G and O levels (1/2, 5, 6, 11–13, 27/28/32, 33, 36, 37/38), and five papers focused on three levels (8, 19, 20, 26, 29). Only two papers, taken from the same study, focused on all four IGLO levels (3, 15).

In response to research question 2, “Which quantitative measures of process evaluation are applied in workplace interventions targeting the IGLO levels?”, we found that nine papers included a quantitative measure of context. Eight of these were participant-rated (6, 10, 21, 22, 26, 27, 28, 32) and included measures of existing work practices (6), previous intervention history (32), ongoing changes (10), culture (26), pre-existing working conditions (27, 28), and health (21, 22, 27, 28). Three papers used objective measures: workload (5), financial resources allocated to the intervention (12), and ongoing changes identified in meeting notes (10). The majority of the studies that included a context measure focused on the G, L, and O levels; only one study focused on the I-level, combined with the G-level (21).

Twenty-eight papers included measures of the intervention itself. Fourteen of these papers used objective measures or ratings by others (5–12, 14, 15, 17, 19, 30, 35), including records of reach (7, 8, 10, 14, 19, 30), dose delivered and dose received (8, 9, 10, 15), fidelity (5, 6, 10, 12, 30), and recruitment (8, 10, 12, 30). Using self-report, one paper each asked participants about implementation fidelity (6), integrity (39), and adherence (24), and two asked about compliance (24, 25). One paper explored reach (29) and another dose delivered and dose received (30) using self-report. Two papers explored the degree of implementation of action plans using facilitator ratings (11, 20) and three using self-report measures (2, 13, 18). Six papers explored exposure to the intervention and its active ingredients (8, 28, 31, 32, 34, 38); most asked participants to rate their exposure, and only one used objective measures (8). Three studies included a measure of intervention fit and integration with the organisational context (22, 37, 38).

Nineteen studies focused on the implementation strategy, the majority of them on drivers of change. The role of line managers in the intervention was explored in 12 papers (1–6, 21, 27, 32–34, 36), three papers focused on transformational leadership (3, 21, 22), and one on manager support (8). The second most frequently included measure was employees’ participation in designing and implementing the intervention and its activities, and their influence on which changes should be introduced (6, 11, 28, 29, 32–34, 36). One paper focused on peer support (8) and another on participation in an online support group (9). Another frequently studied aspect of the implementation strategy was participants’ mental models of information and communication (1, 6, 29, 33).

Twenty-five papers in our review studied participants’ mental models using self-report (1–4, 6, 8–11, 16, 18, 19, 21, 23–25, 28, 29, 30, 32–35, 37, 38). Thirteen papers explored the quality of the intervention, including the quality of action plans (2, 4, 6, 18, 29, 34) and satisfaction with the intervention (9, 10, 11, 16, 19, 24, 30, 34). Three papers explored usefulness or usability (9, 25, 37) and three explored participants’ mental models of acceptability (9, 24, 35). Participants’ readiness for change was explored in four studies (1, 6, 32, 34). One study explored participants’ expectations of their ability to benefit from training (9). Changes in mental models were examined in 10 studies (1, 3, 6, 8, 23, 24, 28, 33, 37, 38), including both changes in individual resources (3, 23, 24) and perceptions of changes in procedures, the work environment, and attitudes towards and ability to manage the psychosocial work environment and stress issues (1, 3, 6, 28, 33, 37, 38). One paper explored changes in mental models of supervisor and peer-mentor support (8).

In response to the first part of research question 3, “What type of validation and analyses of quantitative process measures are conducted?”, we found that the vast majority of studies did not validate their scales; many used single-item measures (2, 4, 5, 7, 10, 12, 14, 15, 18, 20, 29, 31, 34, 36) or objective or other ratings (11, 12, 17, 30, 35). Only one study was a validation study (32), testing sensitivity, discriminant validity, predictive validity, and reliability (Cronbach’s alpha). Seven studies used validated scales (8, 21, 23, 26, 27, 28, 34) and 16 tested for reliability (3, 6, 8, 9, 13, 21–23, 26–29, 32, 34, 37, 38); however, only four studies validated their scales using exploratory factor analysis (1, 6, 22, 32). One study used scales that were not validated in any way (19), and five studies included scales but treated them as single items (11, 16, 24, 33, 39).
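Cronbach’s alpha, the reliability index mentioned above, compares the summed item variances with the variance of the total scale score: α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where k is the number of items. A minimal sketch, assuming complete responses (no missing data) and sample variances; the example scale and its responses are hypothetical. Alpha addresses internal consistency only and is no substitute for the factor-analytic validation that few studies reported.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of k item-score lists, each holding one score per respondent.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's scale total
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


if __name__ == "__main__":
    # Hypothetical responses of six employees to a three-item
    # "line manager support for the intervention" scale (1-5).
    scale = [
        [4, 5, 3, 2, 4, 5],
        [4, 4, 3, 2, 5, 5],
        [3, 5, 2, 1, 4, 4],
    ]
    print(f"alpha = {cronbach_alpha(scale):.2f}")
```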

In response to the second part of research question 3, “Do studies establish a link between intervention process and outcomes?”, we found that most studies used descriptive statistics only. Fifteen studies reported frequencies (2, 4, 7–10, 15, 16, 18, 24, 25, 30, 32, 34, 35), six studies reported means (11, 19, 20, 30, 34, 39), and one summed ratings (12). A range of studies divided the intervention group into subgroups according to exposure or implementation degree (11, 17) and examined either contextual factors explaining differences in exposure or implementation (6) or differences in intervention outcomes between subgroups. Higher levels of implementation were related to increased vertical social capital and stable horizontal social capital, as opposed to a decrease in the control and low-implementation groups (13). Only trained employees reported an increase in occupational self-efficacy (14). Employees who felt that changes had been implemented and had led to improved working conditions also reported significant improvements in decision latitude, supervisor support, and rewards (18). In departments where employees had participated in intervention workshops, improvements were reported in peer, supervisor, and worksite support, along with reduced work overload, depression, and health risks (20). Employees who were aware of the intervention content and had access to intervention resources reported less exhaustion (31). Two studies compared context or processes with a control group (8, 37). Three studies used multivariate analyses. One examined context, in the form of leaders’ wellbeing, as a moderator of intervention outcomes (26); another linked communication about the intervention to increased job autonomy and job satisfaction, and increased dialogue about work stress to reduced job demands.
Finally, one study found that readiness for change was related to self-efficacy, line managers’ attitudes and actions were related to self-efficacy, and intervention history was related to job satisfaction (32). Nine studies used structural equation modelling linking intervention context, process mechanisms, and outcomes (3, 21–23, 27–29, 36, 38), primarily examining pre-existing levels of working conditions and wellbeing as contextual factors. Line managers’ attitudes and actions were indirectly related to psychological wellbeing and job satisfaction through working conditions (27). Transformational leadership was related to wellbeing (3), to motivation through fit (21), and to health and work ability through line managers’ attitudes and actions (22). Mindfulness was related to work engagement through psychological capital and affect (23). Participation was related to job satisfaction through social support, and changes in procedures were related to psychological wellbeing and job satisfaction through social support and job autonomy (28). Exploring a complex chain of relationships, one study (29) found that employees who felt they had had the opportunity to influence the intervention content were more likely to participate in intervention activities; those who had voluntarily participated in the intervention activities had a more positive appraisal of the intervention; and, finally, those who had a more positive appraisal reported higher levels of job satisfaction and less stress. Line managers’ support before and during the intervention was related to participation during the intervention, which in turn was related to wellbeing during and post-intervention (36). Furthermore, participation pre-intervention was related to line managers’ support during the intervention, which in turn predicted participation during the intervention, leading to job satisfaction post-intervention (36).
One study linked reach to the impact of the intervention and impact to outcomes in separate analyses (19).

In response to research question 4, “In mixed methods studies, which aspects of the intervention process are captured by quantitative methods and which by qualitative methods?”, we found that quantitative process evaluation was used to compare the effects of different subgroups within the overall intervention group, in terms of either process mechanisms (3; transformational leadership and collective efficacy) or the implementation of actions (4, 6, 7, 31, 34), and wider process issues such as communication, participation, and line manager support for the intervention (34). In these papers, qualitative process evaluation was employed to explore the mechanisms explaining quantitative group differences. These factors included (a) perceptions of the intervention process, such as well-functioning Kaizen processes (6) and perceptions of workshops (7); (b) omnibus contextual factors, such as management support (3, 34), relations between employees and managers (3), change fatigue (3, 6), and cultural barriers to talking about stress (7); and (c) discrete contextual factors, such as concurrent changes (4, 7) and time pressure (6). One paper explored intervention mechanisms such as improved control, workflow, or work–life balance (31).

A second set of mixed-method papers employed quantitative process evaluation to explore the implementation process such as adoption (19), dose delivered and dose response (8, 30), reach (8, 10, 19, 30), recruitment (8, 35), adherence (16, 35), and satisfaction with the intervention (10, 19, 30). In these papers, qualitative process evaluation explored which changes were implemented (8), intervention mechanisms such as line management support (8), shared mental models, resilience building, and project management (19), and the influence of omnibus contextual factors, e.g. time pressure (10), management support (10), readiness for change (10), and perceptions of the programme (30). Qualitative methods were also used to explore maintenance in terms of integration into existing practices and processes (19) and contextual factors such as senior management support, middle manager time pressure, and integration into organisational procedures (35). Two of these mixed-method papers used qualitative data to understand the working mechanisms of the intervention such as learning (16), use of tools (16), improved self-awareness/self-acceptance, a positive state of mind, goal achievement, relationships, and awareness of wellbeing (30).

In two further mixed-method papers, the focus was on the implementation of action plans, with the qualitative evaluation providing a richer account of either the perceptions of action plan acceptability (2) or the details of the intervention process (1; e.g. line manager support and attitudes, and the need for an organisational intervention). Likewise, in another mixed-method paper, quantitative process evaluation was used to explore the fidelity of the project, and qualitative process evaluation focused on explaining the low fidelity (5). In summary, most mixed-methods papers employed an expansion approach, with the two types of methods being used to obtain different types of information about the intervention process.

In answer to our fifth research question, “Which theoretical process evaluation frameworks are used in studies including quantitative process evaluation? What elements are used from these frameworks?”, we found that just under half of the included papers did not explicitly use an evaluation framework (4, 5, 9, 11, 13–18, 20, 22–24, 26, 29, 31, 35–37, 39). The framework most often used was the IPM, which was used in seven papers (1, 21, 27–28, 32, 34), followed by the realist evaluation framework (2, 3, 7, 8, 38) and the Steckler and Linnan (Citation2002) framework (8, 10, 30, 34). The Nielsen and Randall (Citation2013) Framework for Evaluating Organisational-level Interventions was used in three papers (6, 33, 34). Other frameworks were each used in only one paper: RE-AIM (19), the Conceptual Framework for Implementation Fidelity (6; Carroll et al., Citation2007), Evaluation of Treatment Integrity (25; Borrelli et al., Citation2005), the Model for Continuous Improvement Participatory Ergonomic Programs (12; Haims & Carayon, Citation1998), Implementation Outcomes (12; Proctor et al., Citation2011), the Multi-level Framework Predicting Implementation Outcomes (12; Chaudoir et al., Citation2013), and the Multifaceted Approach to Treatment Efficacy (30; Nelson & Steele, Citation2006). Many studies used elements from the frameworks without referring to them directly.

Discussion

In the present systematic literature review, we explored the use of quantitative process evaluation in workplace interventions aimed at improving mental health by targeting individuals, groups, leaders, and/or organisational policies, procedures, and practices, as outlined by the IGLO model (Day & Nielsen, Citation2017; Nielsen et al., Citation2017). Based on Nielsen and Randall’s (Citation2013) framework of process evaluation, we extend the review of Havermans et al. (Citation2016) to IGLO-level interventions. We found that relatively few papers included quantitative measures of the context of interventions, and these mostly concerned omnibus context in the form of pre-existing working practices and procedures. Havermans et al. (Citation2016) did not distinguish between omnibus and discrete context. With regard to quantitative measures of the intervention itself, most papers focused on elements of recruitment, reach, dose delivered, and dose received, together with fidelity, primarily captured using objective measures. Despite the use of concepts from prominent implementation frameworks such as Steckler and Linnan (Citation2002), few papers made direct reference to frameworks, and none of the papers identified included all components of any framework. In terms of the implementation strategy, papers addressed line managers’ attitudes and behaviours, participation, and communication and information. These factors were also identified by Havermans et al. (Citation2016). We additionally identified several studies focusing on specific leadership styles of line managers, which were identified neither in the Nielsen and Randall (Citation2013) framework nor in Havermans et al.’s (Citation2016) review.

Issues around the implementation strategy have been widely explored in O-level interventions, but studies of I-, G-, and L-level interventions have failed to consider whether training is transferred, generalised, and maintained over time (Baldwin & Ford, Citation1988). In the wider training literature, training transfer has been researched extensively (Blume et al., Citation2010). Baldwin and Ford (Citation1988) suggested two types of contextual factors: individual characteristics (e.g. ability, motivation, and personality) affecting learning, and environmental factors (e.g. support from colleagues and managers, and opportunities to practise skills learned during training) influencing learning and transfer. Akin to the documentation of the intervention itself (Nielsen & Abildgaard, Citation2013; Nielsen & Randall, Citation2013), the training transfer model suggests that training characteristics such as content, learning principles, and sequencing may influence learning and training transfer. Although the training transfer model was developed for training, similar components may be relevant to O-level interventions, e.g. the tools and methods used to facilitate the development and implementation of action plans (Nielsen et al., Citation2014). A large number of papers focused on aspects of participants’ mental models and their perceptions of the intervention, at all levels of intervention.

In response to our fourth research question, 15 of the reviewed papers employed a mixed-methods approach. In line with Bryman’s (Citation2006) classification of expansion and complementarity, we found that the majority of papers employed an expansion approach to mixed methods. Whereas quantitative process evaluation was employed to compare effects between groups that varied in their implementation of actions and in the implementation process (e.g. adoption, dose delivered, dose received, and satisfaction with the intervention), qualitative process evaluation primarily explored process mechanisms and the omnibus and discrete context; the two methods provided breadth and expanded the range of enquiry, as they explored different aspects of the intervention process. Only three papers used a complementary approach to process evaluation (Bryman, Citation2006), in which qualitative process evaluation elaborated on and illustrated findings from the quantitative process evaluation.

Although one of the advantages of standardised process evaluation questionnaires is that measures can be validated and generalised (Abildgaard et al., Citation2016), the majority of papers did little to validate scales. Most reviewed studies used single items or treated scales as single items. Although single items help keep questionnaires concise, complex intervention processes may require multi-item scales to capture this complexity (Randall et al., Citation2009). Few validated measures were used, and most often only a reliability coefficient was reported. The IPM (Randall et al., Citation2009) was widely used without further validation. This omission raises serious questions about the construct validity of the measures used. Another limitation is that few studies statistically linked quantitative process measures to outcomes, thus failing to reap one of the key benefits of quantitative process evaluation, namely that we can statistically determine how much of the change in outcomes can be ascribed to the process (Abildgaard et al., Citation2016; Nielsen et al., Citation2007). We cannot know whether the studies that did not link the process to outcomes would have confirmed such a link; future studies should seek to establish such links. The studies that linked the degree of implementation, or process mechanisms, with intervention outcomes did establish such links, confirming that intervention processes influence intervention outcomes. Current models (e.g. Nielsen & Abildgaard, Citation2013) suggest that evaluation should consider the interrelationships and intersections between structures, ranging from the context to the experiences of organisational members and stakeholders, and should include temporality. Overall, a broad consensus seems to be emerging about the importance of observing changes in the organisational context from a time-sensitive perspective that analyses the interplay between different levels of analysis.
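Internal consistency cannot be estimated for a single item, which is one reason single-item process measures are hard to validate. For multi-item scales, a widely used reliability coefficient is Cronbach's alpha: alpha = k / (k - 1) * (1 - sum of item variances / variance of the summed scale score), where k is the number of items. The minimal pure-Python sketch below computes it from a respondents-by-items table. The function name and data layout are our own assumptions, we do not know which coefficient each reviewed paper reported, and a high alpha on its own is not evidence of construct validity.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a multi-item scale.

    responses: one list of item scores per respondent; every respondent
    must answer the same k items (k >= 2).
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*responses))                  # one column of scores per item
    item_var_sum = sum(variance(col) for col in items)
    totals = [sum(r) for r in responses]           # each respondent's scale score
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical 3-item process scale answered by five respondents.
scores = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 4, 5], [5, 5, 4]]
print(round(cronbach_alpha(scores), 2))
```

The same sample-variance definition is used for the items and for the total score, so the denominators cancel consistently; items that always move together give alpha = 1, and mutually uncorrelated items give alpha = 0.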

Our review reveals that quantitative process evaluation is a fast-growing field, with 39 papers published since 2000 and 23 of these published since 2015; however, it also confirms that there is little consensus on what to evaluate and when. Overall, established evaluation frameworks were rarely used and, where they were adopted, not all components of the evaluation framework were included. Employing comprehensive frameworks is crucial to ensure systematic and comparable process evaluation (Steckler & Linnan, Citation2002). In general, the frameworks identified in this review use overlapping concepts, e.g. fidelity and adherence, which are included in Carroll et al. (Citation2007), Steckler and Linnan (Citation2002), RE-AIM (Glasgow et al., Citation1999), and Borrelli et al. (Citation2005). Existing frameworks fail to consider the active role of employees and managers, especially in O-level interventions, where the process and content are rarely pre-planned (Nielsen, Citation2013). The training transfer literature suggests that trainees need to proactively try out acquired skills and knowledge for the training to achieve its intended outcomes (Blume et al., Citation2019). None of the reviewed studies considered this body of literature. Considering training transfer may improve the quality of workplace interventions to improve mental health, as this literature offers insights into how training interventions at the I, G, and L levels achieve their intended outcomes.

Integrated process evaluation framework

Based on our systematic literature review, we synthesise the insights gleaned from our five research questions and propose an Integrated Process Evaluation Framework, the IPEF, built on quantitative process evaluation measures at the IGLO levels and on training transfer components. In the IPEF, we propose which dimensions need to be measured, and at which time point, to capture all three components of intervention processes. It has been argued that comprehensive process evaluation needs to incorporate both qualitative and quantitative process evaluation (Randall et al., Citation2009), and our review gleaned insights into how the two methods have been combined; accordingly, the IPEF adopts an expansion approach to ensure cost-effective process evaluation. Quantitative measures are used to collect data at the IGLO levels, while qualitative methods focus on issues that cannot easily be captured through quantitative measures, such as discrete contextual factors and unintended events during the intervention (Nielsen & Randall, Citation2013).

Insights from our third research question, about the types of analyses used to (potentially) integrate quantitative process evaluation into outcome evaluation, suggest that different elements of the intervention should be measured at different time points and that analyses should explore how these elements influence each other (e.g. Nielsen et al., Citation2007; Tafvelin et al., Citation2019). We therefore propose which elements need to be measured, when, and at which level. Finally, research question 5 gave us insights into the use of existing frameworks. Evaluation frameworks tend to focus primarily either on objective measures of uptake of the intervention (Glasgow et al., Citation1999; Steckler & Linnan, Citation2002) or on people’s perceptions of the intervention process and context (Nielsen & Abildgaard, Citation2013; Nielsen & Randall, Citation2013; Randall et al., Citation2009). Integrating the two perspectives may yield meaningful insights into the factors that influence the intervention’s outcomes and into how perceptions drive uptake; for example, if participants do not feel ready for change, they are unlikely to engage in the intervention’s activities.

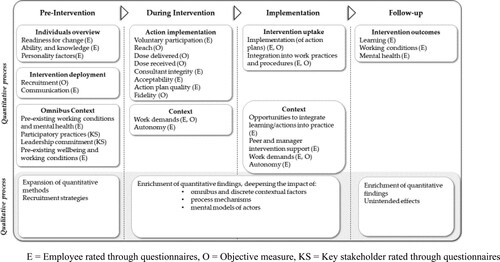

In the following, we present the IPEF (see Figure 1). This five-phased framework offers guidance on what data to collect, and when, in evaluating workplace interventions aimed at improving employee mental health. The framework can be used to evaluate interventions at one or more of the IGLO levels. We propose that data be collected either from employees at all levels of the intervention, including leaders where relevant (E), from key stakeholders (KS), or as objective measures (O).

Figure 1. The integrated process evaluation framework (IPEF). E, employee-rated through questionnaires; O, objective measure; KS, key stakeholder-rated through questionnaires.

Pre-intervention

Although not identified in our literature review, insights from the training transfer model (Baldwin & Ford, Citation1988) suggest that individual characteristics such as ability and personality are crucial to employees’ participation in the intervention. We propose that readiness for change is key at all levels of intervention. Readiness for change refers to the extent to which participants see the need for change, believe that the intervention in question will bring about the necessary change, believe that they can make use of the intervention to improve their conditions, and are ready to accept the changes brought about by the intervention (Weiner, Citation2020). It should be measured prior to the intervention to understand whether employees are likely to buy into the intervention (Randall et al., Citation2009). All these constructs relate to individuals’ mental models and characteristics and can be measured in questionnaires. With regard to the planned intervention, the amount and quality of communication are important to measure (Nielsen & Abildgaard, Citation2013). Communication is an important precursor of participation in intervention activities, as learning about the benefits of the intervention may motivate employees to participate in the intervention and its activities (Roozeboom et al., Citation2020).

Nielsen and Randall (Citation2013) argued that the omnibus context, i.e. the culture and procedures in place to manage health, influences the extent to which interventions are implemented. This includes baseline levels of existing working conditions and mental health (Nielsen & Randall, Citation2012; von Thiele Schwarz et al., Citation2017). In particular, autonomy and work demands are crucial. High work pressure may prevent people from participating in intervention activities (Gupta et al., Citation2018), whereas autonomy may allow them to plan their tasks so that engagement with intervention activities, e.g. participation in training or action planning workshops, is minimally disruptive. These factors should be measured in employee questionnaires; however, objective data on workload can be obtained in some occupations (Arapovic-Johansson et al., Citation2018). Although not identified in any of the reviewed studies, a recent tool has been developed to capture the policies, practices, and programs that may facilitate the implementation of interventions to promote worker safety, health, and wellbeing: the Workplace Integrated Safety and Health Assessment (WISH; Sorensen et al., Citation2018). Two factors from this tool may also be useful for mental health interventions. First, existing participatory practices focused on identifying and managing mental health: for interventions to work, they must address the needs of participants (Nielsen & Noblet, Citation2018), and therefore dialogue processes should be in place that help identify which activities are needed at each level of intervention. Second, leadership commitment, in the form of (1) management assuming responsibility for managing mental health and (2) a willingness to allocate the resources necessary for such management, was found to be important in our review (e.g. Lundmark et al., Citation2017). 
The WISH tool is intended to be completed by Human Resources and Occupational Health and Safety practitioners, and by others with responsibility for managing safety and health in the organisation. Recruitment strategies also need to be documented (Steckler & Linnan, Citation2002), i.e. the strategies for recruiting organisations, and departments within organisations, in order to understand the underlying motives for engaging with the intervention (Nielsen & Randall, Citation2013). Recruitment strategies can be documented through the minutes of steering group meetings.

During the intervention

During the intervention, it is crucial to measure the implementation of intervention activities (Baldwin & Ford, Citation1988; Glasgow et al., Citation1999; Nielsen & Randall, Citation2013; Steckler & Linnan, Citation2002). First, research has found that voluntary participation is important (Blume et al., Citation2010; Jenny et al., Citation2015). To obtain reliable data on this, a single item can be included in a questionnaire administered after workshops and/or training sessions have been completed. Second, the number of participants who had the opportunity to be involved in the intervention should be measured. Reach can be interpreted differently at the different levels of intervention. At the I, G, and L intervention levels, reach refers to the percentage of those who were invited to participate in training (Glasgow et al., Citation1999). For O-level interventions, reach could refer to the number of people who worked in participating departments or work groups (Kobayashi et al., Citation2008). This information can be obtained from organisational records of the size of organisations and/or departments and the demographic composition of employees. Combined with demographic information from the baseline questionnaires or organisational data, the representativeness of respondents can be analysed. Dose delivered and dose received should be captured to understand whether participants engaged with the intervention (Steckler & Linnan, Citation2002). This information can be obtained objectively through registrations at workshops and training sessions, documenting how many participants signed up and how many sessions they completed. Such implementation data are crucial to understanding how employees react to the intervention activities. If employees are not offered the chance to participate in activities, they may be less likely to accept changes to work policies, practices, and procedures (Schelvis et al., Citation2016). 
If employees do not complete all I-, G-, or L-level training sessions, they may be less likely to attempt to transfer skills and knowledge to the workplace.
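The registration-based measures described above reduce to simple ratios. The sketch below computes them from hypothetical attendance records. The record type, the function names, and the exact denominators are our assumptions: reach is taken here as participants divided by the eligible target population, and since the text notes that reach is defined differently across the IGLO levels, the denominator should follow whichever definition a study adopts.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Registration:
    """Hypothetical attendance record for one registered participant."""
    participant_id: str
    sessions_attended: int


def reach(n_participants: int, n_eligible: int) -> float:
    """Share of the eligible (target) population that took part."""
    return n_participants / n_eligible


def dose_delivered(sessions_delivered: int, sessions_planned: int) -> float:
    """Share of the planned sessions that were actually delivered."""
    return sessions_delivered / sessions_planned


def dose_received(registrations: List[Registration], sessions_delivered: int) -> float:
    """Mean share of delivered sessions attended per registered participant."""
    shares = [r.sessions_attended / sessions_delivered for r in registrations]
    return sum(shares) / len(shares)


def completers(registrations: List[Registration], sessions_delivered: int) -> int:
    """Number of participants who attended every delivered session."""
    return sum(1 for r in registrations if r.sessions_attended == sessions_delivered)


# Example: 3 of 12 eligible employees registered; 4 of 5 planned sessions ran.
regs = [Registration("p1", 4), Registration("p2", 2), Registration("p3", 3)]
print(reach(3, 12), dose_delivered(4, 5), dose_received(regs, 4), completers(regs, 4))
```

Keeping dose delivered (what facilitators provided) separate from dose received (what participants took up) makes it possible to relate a gap between the two to satisfaction or workload measures collected in the same phase.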

Satisfaction with the intervention’s activities, e.g. consultants' integrity (competence and confidence), and the acceptability of the intervention were primarily measured in I-level interventions (Coffeng et al., Citation2013); however, satisfaction may be relevant at all levels of intervention. These factors are central for participants to integrate learned skills and behaviours into the workplace (Blume et al., Citation2010). For interventions in which action plans are developed as part of the activities, satisfaction with the developed action plans may be important for participants to support the actual implementation of those plans (Abildgaard et al., Citation2018). Satisfaction with the intervention’s activities may explain differences between dose delivered and dose received; e.g. if participants perceive that facilitators do not understand their needs, they may be more likely to drop out of training or stop attending workshops. These factors rely on employees’ appraisals and should be collected through questionnaires immediately after training sessions and workshops have been completed.

During the intervention phase, contextual factors such as autonomy and work demands may influence participation in activities. For example, if participants lack autonomy, they may not be able to reschedule their work tasks to participate in activities and may therefore be more likely to drop out, or be too stressed to engage fully with the intervention activities. Workload allocations should allow for attendance at intervention activities (Hasson et al., Citation2010). Data on autonomy should be collected through questionnaires, but data on workload can potentially also be collected from company records.

Qualitative methods should be used to explore discrete contextual factors that occur during the intervention. It is nearly impossible to predict which discrete factors may influence the intervention process and its outcomes. Meeting minutes may identify contextual events, e.g. downsizing, mergers, and changes of leadership.

Implementation

After the completion of intervention activities (workshops and training sessions) and the development of action plans, the skills and knowledge learned in I-, G-, and L-level interventions and the changes to work policies, practices, and procedures made in O-level interventions should be translated into daily work practices (Baldwin & Ford, Citation1988; Nielsen & Abildgaard, Citation2013). The RE-AIM (Glasgow et al., Citation1999) and Steckler and Linnan (Citation2002) frameworks term this Maintenance, namely that changes are maintained over time, whereas in the O-level intervention literature this has been termed sustainability, i.e. that action plans are translated into work practices and procedures (Nielsen & Abildgaard, Citation2013). For I- and L-level interventions, maintenance involves changing behaviours or reacting differently to situations encountered at work (Baldwin & Ford, Citation1988). The implementation of action plans can be captured through documentation of visual representations, e.g. improvement boards (e.g. Ipsen et al., Citation2015), or through employees’ appraisals of the degree of implementation (Abildgaard et al., Citation2018). Changes in work practices and procedures, and changes in employees’ reactions, are best measured through questionnaires, as these capture participants’ appraisals of the changes (von Thiele Schwarz et al., Citation2017).

An important contextual factor is the opportunity to integrate learning and action plans (Burke & Hutchins, Citation2007). At the O-level, working with action plans, i.e. discussing them and reviewing them to make adjustments is crucial (Abildgaard et al., Citation2018; Augustsson et al., Citation2015), allowing for ongoing adaptation to ensure full integration into work policies, practices, and procedures. We propose a joint term for this process, regardless of the level of intervention: Opportunities to integrate learning/action into practice. This construct relies on employees’ appraisals. The levels of work demand and autonomy likely influence whether integration and implementation happen and should also be measured at this phase. For example, participating workers need to have the necessary autonomy to change work policies, practices, and procedures and trained leaders need to be able to change their leadership practices.

The training transfer literature (Baldwin & Ford, Citation1988; Burke & Hutchins, Citation2007) emphasises peer and manager support as an important contextual factor; peers and line managers may need to support trainees trying out new behaviours. Similarly, the O-level intervention literature emphasises the importance of a participatory climate, where employees discuss work-related issues and line managers act as role models and proactively support the implementation of actions (Nielsen & Randall, Citation2012). We term this peer and manager support regardless of the level of intervention. These factors are dependent on employees’ appraisals and should be measured in questionnaires.

In this phase of the intervention, qualitative process evaluation should capture concurrent events as they likely influence the extent to which participants attempt to transfer acquired skills and knowledge to the workplace and whether action plans are implemented according to plan. Such information may be collected through meeting minutes.

Follow-up

Once the interventions have been implemented and integrated, process and effect evaluation should be conducted, addressing key questions around whether the intervention achieved its intended outcomes and how intended and unintended outcomes can be explained (Glasgow et al., Citation1999; Nielsen & Abildgaard, Citation2013). Effects can be measured at multiple levels. First, it is important to capture whether learning has taken place, i.e. whether individuals, groups, leaders, and organisations as a whole have developed their abilities to manage mental health issues (Abildgaard et al., Citation2016). This learning may have wider consequences beyond the individual and may reduce issues such as stigma, as workers become more aware of, and accepting of, how mental health issues can be managed at work (Dimoff et al., Citation2016). We should also measure outcomes in terms of working conditions and mental health to understand how the different phases of the intervention have influenced the intervention’s outcomes. These constructs should be measured in employee questionnaires.

Qualitative process evaluation offers an opportunity to understand the (unintended) effects of the intervention and may enrich quantitative results by explaining why the results were brought about (e.g. Abildgaard et al., Citation2019) and should therefore be conducted at follow-up, for example through semi-structured interviews.
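For planning purposes, the phase-by-phase recommendations above can be condensed into a machine-readable data-collection plan. The sketch below is our own hypothetical encoding of the text, not a reproduction of Figure 1: the source codes E, O, and KS follow the figure caption, the extra code "Q" for qualitative methods is our addition, and treating documentation such as steering group minutes and improvement boards as "O" is an assumption.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding of the IPEF phases as described in the text.
# E = employee questionnaire, O = objective measure/documentation,
# KS = key-stakeholder questionnaire, Q = qualitative methods (our addition).
IPEF_PLAN: Dict[str, List[Tuple[str, str]]] = {
    "pre-intervention": [
        ("readiness for change", "E"),
        ("individual characteristics (e.g. ability, personality)", "E"),
        ("amount and quality of communication", "E"),
        ("baseline autonomy and work demands", "E"),
        ("workload (some occupations)", "O"),
        ("baseline mental health", "E"),
        ("participatory practices and leadership commitment", "KS"),
        ("recruitment strategies (steering group minutes)", "O"),
    ],
    "during": [
        ("voluntary participation", "E"),
        ("reach", "O"),
        ("dose delivered and dose received", "O"),
        ("satisfaction with activities", "E"),
        ("autonomy", "E"),
        ("workload", "O"),
        ("discrete contextual factors", "Q"),
    ],
    "implementation": [
        ("degree of implementation / transfer", "E"),
        ("improvement boards", "O"),
        ("opportunities to integrate learning/action into practice", "E"),
        ("work demands and autonomy", "E"),
        ("peer and manager support", "E"),
        ("concurrent events (meeting minutes)", "Q"),
    ],
    "follow-up": [
        ("learning", "E"),
        ("working conditions", "E"),
        ("mental health", "E"),
        ("(unintended) effects, e.g. semi-structured interviews", "Q"),
    ],
}


def constructs_for(phase: str, source: str) -> List[str]:
    """Constructs to collect in a given phase from a given source."""
    return [name for name, src in IPEF_PLAN[phase] if src == source]


# Example: which objective measures should facilitators record during delivery?
print(constructs_for("during", "O"))
```

Querying the plan by phase and source makes it easy to see, for instance, which measures can be recorded by facilitators rather than added to employee questionnaires, which bears on the survey-burden concern discussed in the strengths and limitations.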

Strengths and limitations of the IPEF framework

The key strengths of the IPEF framework are its guidance on what data to collect, when, and how, and its integrated, multi-level approach to interventions, which overcomes the dichotomy of individual vs. organisational interventions. One challenge of the IPEF framework is its reliance on quantitative measures. The length of questionnaires is a common concern in OHP research, and adding the burden of collecting process data to the measures of proximal and distal outcomes at baseline and follow-up may raise concerns about survey fatigue and low response rates. It is important to balance the demands placed on participants against the desire for in-depth process evaluation. Quantitative process evaluation, however, is not just about distributing surveys to participants; many measures, e.g. reach, dose delivered, and dose received, can be collected by the facilitators of the interventions. Data collected throughout the intervention can be fed back to the organisation to enhance implementation, thus increasing the chances of the intervention(s) achieving their intended results. Using the IPEF as a template, evaluators can plan which data are best collected, and how, in a high-quality and cost-effective way. Due to its reliance on quantitative methods, the IPEF is best suited for use in large-scale intervention studies.

Conclusion

In the present systematic literature review, we reviewed the state-of-the-art of intervention studies using quantitative process measures, on their own or combined with qualitative process evaluation. We explored what constructs are used, in which types of interventions, and how they are analysed. On the basis of our review, we call for an integration of the different frameworks and suggest that we need to study what happens before, during, and after the intervention to understand how intervention processes and context influence intervention outcomes. In an attempt to address this call, we developed the Integrated Process Evaluation Framework (IPEF). By integrating different frameworks, the IPEF offers guidance on what data to collect and when. Evaluating and reporting such comprehensive process evaluation, and integrating it into effect evaluation, will inform future interventions. We propose a stronger use of mixed methods to triangulate findings and to ease the burden on employees of responding to lengthy questionnaires. Collecting data at various time points also eases this burden while ensuring recency. We hope that our review and the use of our proposed framework can help improve the evaluation of multi-level interventions, and that such evaluation can improve how we design and implement sustainable and effective interventions that promote mental health in the workplace.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- The full list of references included in the literature review can be found in Figshare: https://figshare.com/articles/dataset/References_docx/19626708

- Abildgaard, J. S., Nielsen, K., & Saksvik, P. O. (2016). How to measure the intervention process? An assessment of qualitative and quantitative approaches to data collection in the process evaluation of organizational interventions. Frontiers in Psychology: Organizational Psychology. https://doi.org/10.3389/fpsyg.2016.01380

- Abildgaard, J. S., Nielsen, K., & Sverke, M. (2018). Can job insecurity be managed? Evaluating an organizational-level intervention addressing the negative effects of restructuring. Work & Stress, 32(2), 105–123. https://doi.org/10.1080/02678373.2017.1367735

- Abildgaard, J. S., Nielsen, K., Wåhlin-Jacobsen, C. D., Maltesen, T., Christensen, K. B., & Holtermann, A. (2019). ‘Same, but different’: A mixed-methods realist evaluation of a cluster-randomized controlled participatory organizational intervention. Human Relations. https://doi.org/10.1177/0018726719866896

- Arapovic-Johansson, B., Wåhlin, C., Hagberg, J., Kwak, L., Björklund, C., & Jensen, I. (2018). Participatory work place intervention for stress prevention in primary health care. A randomized controlled trial. European Journal of Work and Organizational Psychology, 27(2), 219–234. https://doi.org/10.1080/1359432X.2018.1431883

- Armstrong, R., Waters, E., Moore, L., Riggs, E., Cuervo, L. G., Lumbiganon, P., … Hawe, P. (2008). Improving the reporting of public health intervention research: Advancing TREND and CONSORT. Journal of Public Health, 30(1), 103–109. https://doi.org/10.1093/pubmed/fdm082

- Augustsson, H., von Thiele Schwarz, U., Stenfors-Hayes, T., & Hasson, H. (2015). Investigating variations in implementation fidelity of an organizational-level occupational health intervention. International Journal of Behavioral Medicine, 22(3), 345–355. https://doi.org/10.1007/s12529-014-9420-8

- Baldwin, T. T., & Ford, J. K. (1988). Transfer of training: A review and directions for future research. Personnel Psychology, 41(1), 63–105. https://doi.org/10.1111/j.1744-6570.1988.tb00632.x

- Blume, B. D., Ford, J. K., Baldwin, T. T., & Huang, J. L. (2010). Transfer of training: A meta-analytic review. Journal of Management, 36(4), 1065–1105. https://doi.org/10.1177/0149206309352880

- Blume, B. D., Ford, J. K., Surface, E. A., & Olenick, J. (2019). A dynamic model of training transfer. Human Resource Management Review, 29(2), 270–283. https://doi.org/10.1016/j.hrmr.2017.11.004

- Boateng, G. O., Neilands, T. B., Frongillo, E. A., Melgar-Quiñonez, H. R., & Young, S. L. (2018). Best practices for developing and validating scales for health, social, and behavioral research: A primer. Frontiers in Public Health, 6, 149. https://doi.org/10.3389/fpubh.2018.00149

- Borrelli, B., Sepinwall, D., Ernst, D., Bellg, A. J., Czajkowski, S., Breger, R., … Orwig, D. (2005). A new tool to assess treatment fidelity and evaluation of treatment fidelity across 10 years of health behavior research. Journal of Consulting and Clinical Psychology, 73(5), 852–860. https://doi.org/10.1037/0022-006X.73.5.852

- Bryman, A. (2006). Integrating quantitative and qualitative research: How is it done? Qualitative Research, 6(1), 97–113. https://doi.org/10.1177/1468794106058877

- Burke, L. A., & Hutchins, H. M. (2007). Training transfer: An integrative literature review. Human Resource Development Review, 6(3), 263–296. https://doi.org/10.1177/1534484307303035

- Carroll, C., Patterson, M., Wood, S., Booth, A., Rick, J., & Balain, S. (2007). A conceptual framework for implementation fidelity. Implementation Science, 2(1), 40. https://doi.org/10.1186/1748-5908-2-40

- Chaudoir, S. R., Dugan, A. G., & Barr, C. H. (2013). Measuring factors affecting implementation of health innovations: A systematic review of structural, organizational, provider, patient, and innovation level measures. Implementation Science, 8(1), 22. https://doi.org/10.1186/1748-5908-8-22

- Coffeng, J. K., Hendriksen, I. J., van Mechelen, W., & Boot, C. R. (2013). Process evaluation of a worksite social and physical environmental intervention. Journal of Occupational and Environmental Medicine, 55(12), 1409–1420. https://doi.org/10.1097/JOM.0b013e3182a50053

- Creswell, J. W., & Plano Clark, V. L. (2007). Designing and conducting mixed methods research. Sage.

- Day, A., & Nielsen, K. (2017). What does our organization do to help our well-being? Creating healthy workplaces and workers. In N. Chmiel, F. Fraccaroli, & M. Sverke (Eds.), An introduction to work and organizational psychology (pp. 295–314). Wiley Blackwell.

- Dimoff, J. K., Kelloway, E. K., & Burnstein, M. D. (2016). Mental health awareness training (MHAT): The development and evaluation of an intervention for workplace leaders. International Journal of Stress Management, 23(2), 167–189. https://doi.org/10.1037/a0039479

- Egan, M., Bambra, C., Petticrew, M., & Whitehead, M. (2009). Reviewing evidence on complex social interventions: Appraising implementation in systematic reviews of the health effects of organisational-level workplace interventions. Journal of Epidemiology & Community Health, 63(1), 4–11. https://doi.org/10.1136/jech.2007.071233

- Gaglio, B., Shoup, J. A., & Glasgow, R. E. (2013). The RE-AIM framework: A systematic review of use over time. American Journal of Public Health, 103(6), e38–e46. https://doi.org/10.2105/AJPH.2013.301299

- Gjerde, S., & Alvesson, M. (2020). Sandwiched: Exploring role and identity of middle managers in the genuine middle. Human Relations, 73(1), 124–151. https://doi.org/10.1177/0018726718823243

- Glasgow, R. E., Vogt, T. M., & Boles, S. M. (1999). Evaluating the public health impact of health promotion interventions: The RE-AIM framework. American Journal of Public Health, 89(9), 1322–1327. https://doi.org/10.2105/AJPH.89.9.1322

- Gupta, N., Wåhlin-Jacobsen, C. D., Abildgaard, J. S., Henriksen, L. N., Nielsen, K. N., & Holtermann, A. (2018). Effectiveness of a participatory physical and psychosocial intervention for balancing the demands and resources of industrial workers: A cluster-randomized controlled trial. Scandinavian Journal of Work, Environment, and Health, 44(1), 58–68. https://doi.org/10.5271/sjweh.3689

- Haims, M. C., & Carayon, P. (1998). Theory and practice for the implementation of ‘in-house’, continuous improvement participatory ergonomic programs. Applied Ergonomics, 29(6), 461–472. https://doi.org/10.1016/S0003-6870(98)00012-X

- Hasson, H., Brown, C., & Hasson, D. (2010). Factors associated with high use of a workplace web-based stress management program in a randomized controlled intervention study. Health Education Research, 25(4), 596–607. https://doi.org/10.1093/her/cyq005

- Hasson, H., Villaume, K., von Thiele Schwarz, U., & Palm, K. (2014). Managing implementation: Roles of line managers, senior managers, and human resource professionals in an occupational health intervention. Journal of Occupational and Environmental Medicine, 56(1), 58–65. https://doi.org/10.1097/JOM.0000000000000020

- Havermans, B. M., Schelvis, R. M., Boot, C. R., Brouwers, E. P., Anema, J. R., & van der Beek, A. J. (2016). Process variables in organizational stress management intervention evaluation research: A systematic review. Scandinavian Journal of Work, Environment & Health, 42, 371–381. https://doi.org/10.5271/sjweh.3570

- Hinkin, T. R. (1998). A brief tutorial on the development of measures for use in survey questionnaires. Organizational Research Methods, 1(1), 104–121. https://doi.org/10.1177/109442819800100106

- International Labor Office. (2012). International standard classification of occupations: ISCO–08. International Labor Office. Retrieved July 13, 2020, from https://www.ilo.org/wcmsp5/groups/public/---dgreports/---dcomm/---publ/documents/publication/wcms_172572.pdf

- Ipsen, C., Gish, L., & Poulsen, S. (2015). Organizational-level interventions in small and medium-sized enterprises: Enabling and inhibiting factors in the PoWRS program. Safety Science, 71, 264–274. https://doi.org/10.1016/j.ssci.2014.07.017

- Jenny, G. J., Brauchli, R., Inauen, A., Füllemann, D., Fridrich, A., & Bauer, G. F. (2015). Process and outcome evaluation of an organizational-level stress management intervention in Switzerland. Health Promotion International, 30(3), 573–585. https://doi.org/10.1093/heapro/dat091

- Kelloway, E. K., & Barling, J. (2010). Leadership development as an intervention in occupational health psychology. Work & Stress, 24(3), 260–279. https://doi.org/10.1080/02678373.2010.518441

- Keyes, C. L. (2014). Mental health as a complete state: How the salutogenic perspective completes the picture. In G. F. Bauer & O. Hämmig (Eds.), Bridging occupational, organizational and public health (pp. 179–192). Springer.

- Kobayashi, Y., Kaneyoshi, A., Yokota, A., & Kawakami, N. (2008). Effects of a worker participatory program for improving work environments on job stressors and mental health among workers: A controlled trial. Journal of Occupational Health, 50(6), 455–470. https://doi.org/10.1539/joh.L7166

- LaMontagne, A. D., Keegel, T., Louie, A. M., Ostry, A., & Landsbergis, P. A. (2007). A systematic review of the job-stress intervention evaluation literature, 1990–2005. International Journal of Occupational and Environmental Health, 13(3), 268–280. https://doi.org/10.1179/oeh.2007.13.3.268

- LaMontagne, A. D., Martin, A., Page, K. M., Reavley, N. J., Noblet, A. J., Milner, A. J., Keegel, T., & Smith, P. M. (2014). Workplace mental health: Developing an integrated intervention approach. BMC Psychiatry, 14(1), 1–11. https://doi.org/10.1186/1471-244X-14-131

- Lundmark, R., Hasson, H., von Thiele Schwarz, U., Hasson, D., & Tafvelin, S. (2017). Leading for change: Line managers’ influence on the outcomes of an occupational health intervention. Work & Stress, 31(3), 276–296. https://doi.org/10.1080/02678373.2017.1308446

- Maxwell, S. E., Kelley, K., & Rausch, J. R. (2008). Sample size planning for statistical power and accuracy in parameter estimation. Annual Review of Psychology, 59(1), 537–563. https://doi.org/10.1146/annurev.psych.59.103006.093735

- Montano, D., Hoven, H., & Siegrist, J. (2014). Effects of organisational-level interventions at work on employees’ health: A systematic review. BMC Public Health, 14(1), 135. https://doi.org/10.1186/1471-2458-14-135

- Morgeson, F. P., & Humphrey, S. E. (2006). The work design questionnaire (WDQ): Developing and validating a comprehensive measure for assessing job design and the nature of work. Journal of Applied Psychology, 91(6), 1321–1339. https://doi.org/10.1037/0021-9010.91.6.1321

- Murta, S. G., Sanderson, K., & Oldenburg, B. (2007). Process evaluation in occupational stress management programs: A systematic review. American Journal of Health Promotion, 21(4), 248–254. https://doi.org/10.4278/0890-1171-21.4.248

- Nastasi, B. K., Hitchcock, J., Sarkar, S., Burkholder, G., Varjas, K., & Jayasena, A. (2007). Mixed methods in intervention research: Theory to adaptation. Journal of Mixed Methods Research, 1(2), 164–182. https://doi.org/10.1177/1558689806298181

- Nelson, T. D., & Steele, R. G. (2006). Beyond efficacy and effectiveness: A multifaceted approach to treatment evaluation. Professional Psychology: Research and Practice, 37(4), 389–397. https://doi.org/10.1037/0735-7028.37.4.389

- Nielsen, K. (2013). Review article: How can we make organizational interventions work? Employees and line managers as actively crafting interventions. Human Relations, 66(8), 1029–1050. https://doi.org/10.1177/0018726713477164

- Nielsen, K., & Abildgaard, J. S. (2013). Organizational interventions: A research-based framework for the evaluation of both process and effects. Work & Stress, 27(3), 278–297. https://doi.org/10.1080/02678373.2013.812358

- Nielsen, K., Abildgaard, J. S., & Daniels, K. (2014). Putting context into organizational intervention design: Using tailored questionnaires to measure initiatives for worker well-being. Human Relations, 67(12), 1537–1560. https://doi.org/10.1177/0018726714525974

- Nielsen, K., Nielsen, M. B., Ogbonnaya, C., Känsälä, M., Saari, E., & Isaksson, K. (2017). Workplace resources to improve both employee well-being and performance: A systematic review and meta-analysis. Work & Stress, 31(2), 101–120. https://doi.org/10.1080/02678373.2017.1304463

- Nielsen, K., & Noblet, A. (2018). Introduction: Organizational interventions: Where we are, where we go from here? In K. Nielsen & A. Noblet (Eds.), Organizational interventions for health and well-being: A handbook for evidence-based practice (pp. 1–23). Routledge.

- Nielsen, K., & Randall, R. (2012). The importance of employee participation and perceptions of changes in procedures in a teamworking intervention. Work & Stress, 26(2), 91–111. https://doi.org/10.1080/02678373.2012.682721

- Nielsen, K., & Randall, R. (2013). Opening the black box: Presenting a model for evaluating organizational-level interventions. European Journal of Work and Organizational Psychology, 22(5), 601–617. https://doi.org/10.1080/1359432X.2012.690556

- Nielsen, K., Randall, R., & Albertsen, K. (2007). Participants’ appraisals of process issues and the effects of stress management interventions. Journal of Organizational Behavior, 28(6), 793–810. https://doi.org/10.1002/job.450

- Nielsen, K., Randall, R., & Christensen, K. B. (2010). Does training managers enhance the effects of implementing team-working? A longitudinal, mixed methods field study. Human Relations, 63(11), 1719–1741. https://doi.org/10.1177/0018726710365004

- Nytrø, K., Saksvik, P. Ø., Mikkelsen, A., Bohle, P., & Quinlan, M. (2000). An appraisal of key factors in the implementation of occupational stress interventions. Work & Stress, 14(3), 213–225. https://doi.org/10.1080/02678370010024749

- OECD/EU. (2018). Health at a glance: Europe 2018: State of health in the EU cycle. OECD Publishing. https://doi.org/10.1787/health_glance_eur-2018-en

- Parsonage, M., & Saini, G. (2017). Mental health at work. Centre for Mental Health.

- Pawson, R., & Tilley, N. (1997). Realistic evaluation. Sage.

- Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., Bunger, A., Griffey, R., & Hensley, M. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76. https://doi.org/10.1007/s10488-010-0319-7

- Queirós, A., Faria, D., & Almeida, F. (2017). Strengths and limitations of qualitative and quantitative research methods. European Journal of Education Studies, 3(9), 369–387. https://doi.org/10.46827/ejes.v0i0.1017

- Randall, R., Nielsen, K., & Tvedt, S. D. (2009). The development of five scales to measure employees’ appraisals of organizational-level stress management interventions. Work & Stress, 23(1), 1–23. https://doi.org/10.1080/02678370902815277