ABSTRACT

Massive open online courses (MOOCs) are usually six to ten weeks long. Participation tends to decrease as the courses progress, leading to low completion rates. This led to the question: Could shorter MOOCs contribute to learners’ engagement, retention and success? This paper compares two versions of a Study Skills MOOC that shared the same content but were delivered in different length formats: one as a single six-week course and the other as two three-week blocks. In total, 617 people registered for the two versions. Data sources included learning analytics, surveys and the Spanish version of the General Self-Efficacy Scale. Both versions of the Study Skills MOOC increased participants’ self-efficacy. However, learners enrolled in the version composed of two three-week blocks were also more engaged with course content, other students and the facilitators, and their retention and completion rates were higher than those in the longer version of the course. Reasons linked to goal proximity, motivation, interactions and social modelling are discussed.

1. Introduction

Since their appearance in 2008, Massive Open Online Courses (MOOCs) have been on the rise and still feature strongly within the higher education sector (Johnson, Adams Becker, Estrada, & Freeman, 2015; Sharples et al., 2014). MOOCs offer potentially valuable opportunities for delivery at scale. As they are mostly free of charge and normally have no prerequisites in terms of prior knowledge or demographics (Anderson, 2013), they attract a large number of users.

However, whereas traditional university courses can usually retain over 80% of students (Atchley, Wingenbach, & Akers, 2013), the average completion rate for MOOCs is approximately 15% (Jordan, 2015b). As a MOOC progresses, an increasing number of participants commonly stop engaging with the course content, activities and assessments, a phenomenon known as ‘funnel participation’ (Clow, 2013). About half of the people who enrol in a MOOC never show up. The first two weeks seem to be critical for fostering learners’ engagement: those who take an active role at the beginning of the course are more likely to participate in subsequent weeks (Ho et al., 2014; Jordan, 2015a).

Although the length of a MOOC ranges from two to sixteen weeks, it is most frequently between six and ten weeks (Hollands & Tirthali, 2014), with regional variations. A review by the MOOC-Maker Project found that European MOOCs tend to be longer than those produced by institutions in Latin America (nine versus six weeks on average) (Pérez Sanagustín, Maldonado, & Morales, 2016). This research focused on whether the duration of a MOOC has any impact on completion rates. Could shorter MOOCs contribute to learners’ engagement, retention and success? The literature on this topic is scarce and limited to a few studies that provide contradictory evidence.

Perna et al. (2014) analysed 16 Coursera MOOCs and found a steep decline in participants’ access to video lectures as the courses progressed. Yet there was no relationship between learners who received a final grade of 80% or higher and the number of course weeks or modules. At EMOOCs 2017, the European MOOCs Stakeholders Summit, R. de Rosa, C. Ferrari and R. Kerr (2017, May, personal communication) argued that ‘length is not a determinant’ of participation and active contribution in MOOCs on the European Multiple MOOC Aggregator (EMMA) platform.

In her review of public domain information on MOOCs, Jordan (2014) found that longer courses attract a greater number of registrants (n = 87 MOOCs), but completion rates (as a percentage of total enrolment) are negatively correlated with course length (n = 39 MOOCs). Jordan (2015a) makes a case in favour of shorter, modular courses with guidance on how they could be combined, and recommends further research to examine the effects in practice. In their analysis of a Coursera MOOC, Engle, Mankoff, and Carbrey (2015) suggest that reducing course length could have an effect on completion.

These reports are valuable but have an important limitation: they are based on the description of existing MOOCs, with no manipulation of course length. The varying levels of learner participation in MOOCs might be due to factors other than the duration of the course, such as the topic, type of assessment or pedagogical approach. Perna et al. (2014) highlight the need for research that systematically changes particular design characteristics and tests the resulting variations in learners’ behaviours. The present study sought to address this gap in the literature by answering the following research question: How does the duration of a MOOC relate to different measures of learner engagement, retention and success? The objective was to compare the experience of two versions of a Study Skills MOOC, one delivered as a single six-week course and the other as two three-week blocks.

2. Materials and methods

2.1 Study Skills MOOCs

The Study Skills MOOC was the product of an alliance between the Autonomous University of Nuevo Leon (Mexico) and the University of Northampton (United Kingdom). It found its inspiration in the Study Skills for Academic Success (SSAS) MOOC, developed previously by the University of Northampton. While maintaining the spirit of the SSAS MOOC, the Study Skills MOOC was adapted to better suit the needs of a Latin American audience. It was delivered in Spanish on the Open Education Blackboard platform.

The course was designed for a general audience, but it focused on first-year university students. It aimed to help them transition to higher education, improve their study skills and develop their self-efficacy, i.e. their beliefs about their own capabilities to produce expected outcomes (Bandura, 1994). Students with a high level of self-efficacy are confident and self-motivated, regulate their learning, persist in the face of difficulties and tend to achieve their educational goals (Bandura, 2002; Komarraju & Nadler, 2013).

Course topics were based on key academic challenges reported by first-year students at the Autonomous University of Nuevo Leon. These difficulties included the need to develop the following study skills:

Managing time efficiently

Taking effective notes

Searching for reliable information

Understanding academic texts

Using the APA referencing format

Writing academically.

The Study Skills MOOC was discipline-neutral. It provided a structured space where students could practise and develop their academic skills. Each week, participants worked on a lesson with several units. Each lesson had explicit learning outcomes around one study skill, and each unit included multimedia materials and a formative activity. Some activities in the lesson on the APA format were based on multiple-choice questions with automated feedback. Most of the activities, though, relied on student engagement in discussion forums. They followed the e-tivity framework (Salmon, 2013), which promotes active and participative online learning, and included the following key elements:

‘Spark’ – a resource, such as an image or a video, aimed at generating interest in the topic of the activity

Learning objective – contributing to the achievement of the lesson’s overall learning outcome

Task – with specific and clear instructions of what was expected from the learners

Response – requiring participants to reflect and comment on others’ contributions.

Educators around the world have successfully used e-tivities to support and assess learning (Armellini & Aiyegbayo, 2010; Salmon, Pechenkina, Chase, & Ross, 2017). MOOC participants consider them valuable and worthwhile (Salmon, Gregory, Lokuge Dona, & Ross, 2015).

Students were encouraged to reflect on their own experiences, identify their own mistakes, share their stories and define action plans for improvement. Additional (optional) content and exercises were included for participants who wished to explore specific topics in more depth. These design features focused on fostering self-efficacy (see Bartimote-Aufflick, Bridgeman, Walker, Sharma, & Smith, 2015; Hodges, 2016). The final unit of each lesson included an overarching assignment that encouraged learners to practise the study skill of the week. The recommended study time was three hours per week. Non-credit-bearing certificates of participation were available for learners who completed the overarching assignment in all six lessons.

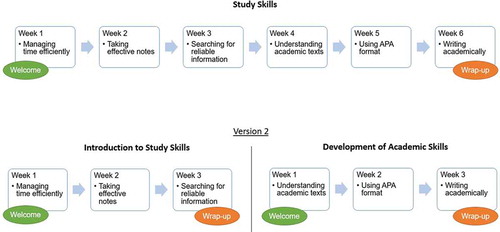

Two versions of this MOOC were developed (see Figure 1). Version 1 matched the original idea for the Study Skills MOOC. It had the standard length of six weeks (Hollands & Tirthali, 2014) and was delivered in May–June 2016. It included the six topics, plus welcome and wrap-up activities at the beginning and end of the course. Version 2 was created by dividing the original course into two shorter MOOCs, each three weeks long, titled ‘Introduction to Study Skills’ and ‘Development of Academic Skills’. Each had its own welcome and wrap-up activities, and both used exactly the same content and learning design as Version 1. At the end of the Introduction to Study Skills MOOC (i.e. the first three weeks), participants were invited to join the Development of Academic Skills MOOC. These MOOCs were delivered sequentially, so learners were engaged for six consecutive weeks in February–March 2017. In both versions, participants had additional time after the six weeks to complete activities. Certificates of participation were only available to those who completed Version 1 or both shorter MOOCs in Version 2. The key design difference between the versions was the length of the MOOCs.

Two staff facilitators and three student moderators provided support throughout the delivery of both versions of the MOOC. In all cases, participants received weekly follow-up emails with summaries of discussions and tips on how to optimise their learning experience. A Twitter hashtag (#hemooc) enabled interactions beyond the boundaries of the MOOC platform. Facilitators tweeted at least three times per week during the delivery of the MOOCs. The same standard emails and tweets were used in both versions of the MOOC, with minimal changes to account for the differences in delivery.

2.2 Participants

Both versions of the MOOC were advertised on social media (Facebook and Twitter), on the Open Education Blackboard platform and through word of mouth. In total, 617 people registered for the two versions of the MOOC: 323 in Version 1 and 294 in Version 2. In Version 2, only 134 of the people who took the first block (Introduction to Study Skills) also enrolled in the second one (Development of Academic Skills).

Only a fraction of enrolled participants answered an initial survey, in which they provided information about their sociodemographic characteristics (see Table 1). Their ages ranged from 17 to 62 years, but most were in their early twenties. The majority were university students from Mexico, in line with the target audience of the MOOC.

Table 1. Participants in versions 1 and 2 of the MOOC

The MOOC participants in the different versions shared roughly the same demographic profile. However, Version 1 included a higher proportion of non-traditional learners (i.e. full-time employees), most of whom were Colombian.

2.3 Sources of information

Learning analytics and survey analysis provided insights into participants’ engagement, retention and success in the MOOCs. Specifically, we considered the following metrics and sources of information for each variable of interest.

2.3.1 Engagement

In this study, engagement refers to learners’ participation in the course, as viewed through their interactions with the content, peers and facilitators. We considered a user an active, engaged participant if they attempted at least one multiple-choice exercise, answered a survey or posted a message during the course.

We focused on the following indicators, which are widely used in MOOC reports (e.g. Coelho, Teixeira, Nicolau, Caeiro, & Rocio, 2015; Padilla Rodriguez, Bird, & Conole, 2015). The analytics function of the Open Education platform provided reports with these data:

Active participants

Content views

Number of messages posted in discussion forums.
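As a rough illustration of how the three indicators above could be derived from an activity log, the following Python sketch assumes a hypothetical event format (`Event`, with made-up `kind` values); it is not the export format of the Open Education Blackboard analytics reports. Following the study's definition, a learner counts as active after any quiz attempt, survey response or forum post; content views alone do not make a learner active.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical log record: who did what (field names are assumptions)."""
    learner_id: str
    kind: str  # assumed values: "view", "quiz_attempt", "survey_response", "forum_post"


# Actions that make a learner "active" under the study's definition.
ACTIVE_KINDS = {"quiz_attempt", "survey_response", "forum_post"}


def engagement_indicators(enrolled: set[str], events: list[Event]) -> dict[str, float]:
    """Active participants, content views per active learner and forum posts."""
    active = {e.learner_id for e in events
              if e.kind in ACTIVE_KINDS and e.learner_id in enrolled}
    # Count only views made by active learners, to get views per active participant.
    views = sum(1 for e in events if e.kind == "view" and e.learner_id in active)
    posts = sum(1 for e in events if e.kind == "forum_post" and e.learner_id in enrolled)
    return {
        "active": len(active),
        "active_pct": round(100 * len(active) / len(enrolled), 2) if enrolled else 0.0,
        "views_per_active": round(views / len(active), 2) if active else 0.0,
        "posts": posts,
    }


log = [Event("a", "view"), Event("a", "forum_post"), Event("b", "view"),
       Event("b", "view"), Event("c", "quiz_attempt")]
print(engagement_indicators({"a", "b", "c", "d"}, log))
```

In this toy log, learner `b` viewed content twice but is not counted as active, which mirrors the definition given in the text; learner `d` enrolled but never appears in the log.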

2.3.2 Retention

Retention in MOOCs is commonly defined as the percentage of participants who successfully finish the course to the specified standards (Koller, Ng, & Chen, 2013); it refers to users ‘returning’ to the MOOC throughout its duration. In this study, we focused on:

learners who were active and engaged in the different lessons, either posting messages, attempting the multiple-choice exercises or answering surveys

learners who earned a certificate of participation.
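The two retention measures can be made concrete with a small Python sketch. It assumes hypothetical per-learner records of the lessons (1 to 6) in which each learner was active and in which they submitted the overarching assignment; for Version 2, lessons 1 to 3 would come from the first block and 4 to 6 from the second. Two choices here are assumptions rather than the study's stated procedure: both rates use learners active at least once as the denominator, and "retained" means still active in the final lesson. The certificate rule (assignment submitted in all six lessons) follows the course design described in Section 2.1.

```python
from __future__ import annotations

N_LESSONS = 6
ALL_LESSONS = frozenset(range(1, N_LESSONS + 1))


def retention_and_completion(
    activity: dict[str, set[int]],
    assignments: dict[str, set[int]],
) -> tuple[float, float]:
    """Return (retention %, completion %) among learners active at least once.

    activity:    learner -> lessons in which the learner posted, attempted a quiz
                 or answered a survey (hypothetical input format)
    assignments: learner -> lessons whose overarching assignment was submitted
    """
    active = [learner for learner, lessons in activity.items() if lessons]
    if not active:
        return 0.0, 0.0
    # Assumption: a learner is "retained" if still active in the final lesson.
    retained = sum(1 for learner in active if N_LESSONS in activity[learner])
    # Certificate rule: overarching assignment submitted in every lesson.
    completed = sum(1 for learner in active
                    if ALL_LESSONS <= assignments.get(learner, set()))
    n = len(active)
    return round(100 * retained / n, 2), round(100 * completed / n, 2)


activity = {"a": {1, 2, 3, 4, 5, 6}, "b": {1, 2}, "c": {1, 6}, "d": set()}
assignments = {"a": {1, 2, 3, 4, 5, 6}, "c": {1, 6}}
print(retention_and_completion(activity, assignments))  # (66.67, 33.33)
```

Learner `c` counts as retained (active in lesson 6) but not as a completer, which is why a retention rate can exceed a completion rate, as it does for both versions in the results.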

2.3.3 Success

Retention and completion rates often fail to capture the diversity of students’ goals and engagement patterns (Ho et al., 2014; Koller et al., 2013). Participants report enrolling for a variety of reasons that seldom include finishing the course (Padilla Rodriguez, Rocha Estrada, & Rodriguez Nieto, 2017; Siemens, 2013). MOOCs can be successful not only if students pass but also if they help learners achieve specific learning outcomes (e.g. Otto, Bollmann, Becker, & Sander, 2018).

In this research, we considered an additional indicator of success, self-efficacy, because it is a strong predictor of academic performance and learning (Bartimote-Aufflick et al., 2015; Komarraju & Nadler, 2013). This indicator matches the aim of the Study Skills MOOC, which focused not on the acquisition of knowledge but on enhancing learners’ general confidence in their own abilities.

We used online surveys with the Spanish version of the General Self-Efficacy Scale (Baessler & Schwarzer, 1996), which measures a person’s global sense of confidence in their own ability to cope with a range of new or stressful situations. This instrument has been widely tested in Spanish-speaking countries, such as Mexico and Spain, and is considered a reliable and valid measure of perceived self-efficacy, with a Cronbach’s alpha of around 0.86 (Padilla, Acosta, Gómez, Guevara, & González, 2006). It consists of ten items, with answer options on a four-point Likert scale.
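For readers less familiar with the reliability figure quoted above, Cronbach’s alpha for k items is α = k / (k − 1) × (1 − Σσᵢ² / σ_X²), where σᵢ² is the variance of item i and σ_X² is the variance of respondents’ total scores. The Python sketch below computes it from a respondents-by-items matrix using only the standard library. It illustrates the formula and is not the procedure behind the 0.86 reported by Padilla et al. (2006); the function name and toy data are hypothetical.

```python
from __future__ import annotations

from statistics import pvariance


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha; rows are respondents, columns are scale items.

    Population variances are used throughout. Because the same divisor appears
    in the numerator and the denominator, sample variances give the same alpha.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = sum(pvariance(item) for item in zip(*responses))
    total_variance = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variances / total_variance)


# Toy answers on a four-point scale (not the study's data).
print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))  # perfectly consistent items
print(cronbach_alpha([[1, 2], [2, 1], [3, 3]]))  # less consistent items
```

Alpha rises towards 1 as items vary together across respondents, which is why a value around 0.86 is read as good internal consistency for a ten-item scale.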

Additional items addressed self-efficacy related to the specific study skills the MOOC covered. Using a five-point Likert scale, participants rated their confidence in managing their time efficiently, taking effective notes, searching for reliable information, understanding academic texts, using the APA format and writing academically. Space for optional comments was also available.
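A minimal Python sketch of the scoring step: each respondent’s general self-efficacy score is taken as the mean of the ten four-point items, and each study-skill item is summarised by its mean across respondents on the five-point scale. The input shapes, skill labels, function names and range checks are illustrative assumptions, not the survey platform’s format.

```python
from __future__ import annotations

from statistics import mean

GSE_ITEMS, GSE_MAX = 10, 4   # General Self-Efficacy Scale: ten items, scored 1-4
SKILL_MAX = 5                # study-skill items: five-point scale
SKILLS = ("managing time", "taking notes", "searching for information",
          "understanding texts", "using APA format", "writing academically")


def gse_score(answers: list[int]) -> float:
    """Mean of one respondent's ten General Self-Efficacy Scale answers."""
    if len(answers) != GSE_ITEMS or not all(1 <= a <= GSE_MAX for a in answers):
        raise ValueError("expected ten answers between 1 and 4")
    return mean(answers)


def skill_means(rows: list[list[int]]) -> dict[str, float]:
    """Mean rating per study skill; each row holds one respondent's six ratings."""
    for row in rows:
        if len(row) != len(SKILLS) or not all(1 <= r <= SKILL_MAX for r in row):
            raise ValueError("expected six ratings between 1 and 5")
    return {skill: mean(col) for skill, col in zip(SKILLS, zip(*rows))}


# Toy answers (not the study's data).
print(gse_score([3, 3, 2, 4, 3, 3, 2, 3, 4, 3]))
print(skill_means([[3, 2, 4, 3, 2, 3], [4, 3, 4, 3, 3, 4]]))
```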

2.4 Procedure

Before the start of both versions of the MOOC, registered participants received an email with information about the research. This message was permanently available in the announcements section of all the courses. At the beginning of every survey, a brief explanation reminded participants of the purpose of the study, assured them that their answers would be anonymous, and referred them to the researchers for questions and comments.

During the first and final lessons of the MOOCs, participants were invited to answer the online surveys. We calculated the mean of the answers to the General Self-Efficacy Scale, as well as the means of the six items that addressed specific study skills. The nonparametric Wilcoxon signed-rank test (Shier, 2004) was used to check whether the differences in self-efficacy before and after the MOOC were statistically significant.
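The paired before/after comparison can be sketched in Python with the standard library. This is a generic two-sided Wilcoxon signed-rank test using the usual large-sample normal approximation with a tie correction; the study does not say whether exact or approximate p-values were computed, so the choice of variant is an assumption. Pairs with zero difference are discarded and tied absolute differences receive average ranks. In practice a statistics package (for example `scipy.stats.wilcoxon`) would normally be used instead.

```python
from __future__ import annotations

import math


def wilcoxon_signed_rank(before: list[float], after: list[float]) -> tuple[float, float, float, float]:
    """Two-sided paired Wilcoxon signed-rank test (normal approximation).

    Returns (W+, W-, z, p). Pairs with zero difference are dropped.
    """
    if len(before) != len(after):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")

    # Rank |d| from smallest to largest, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0  # sum of (t^3 - t) over tie groups, for the variance correction
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j + 2) / 2  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))
    return w_plus, w_minus, z, p


# Toy pre/post scores (not the study's data).
pre = [1, 2, 3, 4, 5, 6]
post = [3, 3, 5, 4, 8, 5]
print(wilcoxon_signed_rank(pre, post))
```

The test works on ranks rather than raw differences, so it does not assume the score changes are normally distributed, which suits bounded Likert-based means like those used here.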

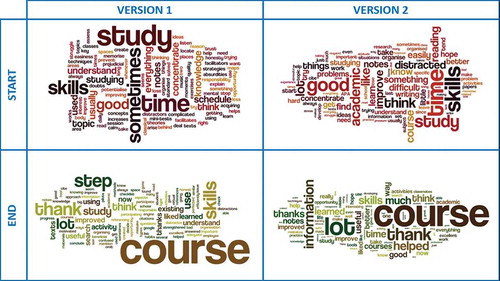

Wordles (word clouds) were created from participants’ optional comments. These are visual representations in which the most frequently used words in a text appear in a larger size, and they provide visually rich ways to conduct preliminary analysis of qualitative data (McNaught & Lam, 2010). A thematic analysis was then conducted to identify salient themes and common patterns.

To maintain anonymity, participants were assigned a generic ID composed of the MOOC version they were part of (V) and an individual number (P). The two blocks of Version 2 (Introduction to Study Skills and Development of Academic Skills) were identified as ‘a’ and ‘b’, respectively. Thus, a participant’s ID followed the structure V#(a/b)P#; for example, V1P1, V2aP1 or V2bP1. To illustrate the findings, sample comments were translated from Spanish to English, focusing on substance over form. A word-by-word translation was used only when it conveyed the same or nearly the same meaning as the original message.
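Two mechanical steps in this procedure lend themselves to a short Python sketch: counting word frequencies, which is what a Wordle scales word size by, and checking that anonymised IDs follow the V#(a/b)P# structure. The stop-word list, regular expressions and function names are hypothetical; a real analysis of the Spanish comments would need a proper Spanish stop-word list, and Wordle itself may tokenise text differently.

```python
from __future__ import annotations

import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list for the example.
STOP_WORDS = {"a", "and", "i", "in", "is", "it", "me", "my", "of", "the", "to"}

ID_PATTERN = re.compile(r"V(?P<version>[12])(?P<block>[ab])?P(?P<number>\d+)")


def word_frequencies(comments: list[str], top: int = 5) -> list[tuple[str, int]]:
    """Most frequent non-stop-words across comments (basis for word-cloud sizing)."""
    words: list[str] = []
    for comment in comments:
        tokens = re.findall(r"[a-záéíóúñü]+", comment.lower())
        words.extend(t for t in tokens if t not in STOP_WORDS)
    return Counter(words).most_common(top)


def parse_participant_id(pid: str) -> tuple[int, str | None, int]:
    """Split an ID such as 'V2aP70' into (version, block, participant number)."""
    m = ID_PATTERN.fullmatch(pid)
    if m is None:
        raise ValueError(f"malformed participant ID: {pid!r}")
    version, block = int(m["version"]), m["block"]
    # Version 2 IDs must name a block; Version 1 IDs must not.
    if (version == 2) != (block is not None):
        raise ValueError(f"block letter does not match version: {pid!r}")
    return version, block, int(m["number"])


print(word_frequencies(["Time management is hard", "This course helped my time"], top=1))
print(parse_participant_id("V2aP70"), parse_participant_id("V1P24"))
```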

After the end of the courses, learning analytics reports were downloaded from the Open Education Blackboard platform. Frequencies, percentages and averages were obtained. The number of students who earned a certificate of participation was checked.

These measures of engagement, retention and success were compared between the two versions of the Study Skills MOOC to identify differences and similarities.

3. Results

Both versions of the Study Skills MOOC increased participants’ self-efficacy. However, learners enrolled in Version 2 (two three-week blocks) were also more engaged with the MOOC experience and had a higher retention rate than those in Version 1 (single six-week course). We report the findings below, organised by the variable they relate to.

3.1 Engagement

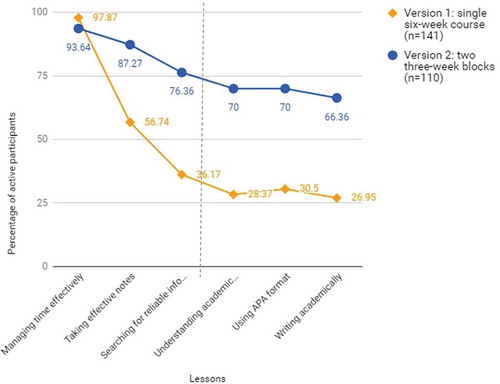

The percentage of active participants was higher in Version 2 than in Version 1, whether considering users enrolled in each individual block or in both. The difference ranged from 2.02 to 38.44 percentage points (see Table 2). Version 2 included 294 participants, of whom 149 (50.68%) were active. Only 134 people enrolled in both blocks (Introduction to Study Skills and Development of Academic Skills), but 110 of them (82.09%) engaged with course content, peers or facilitators through multiple-choice exercises, surveys or discussion forums.

Table 2. Active participants

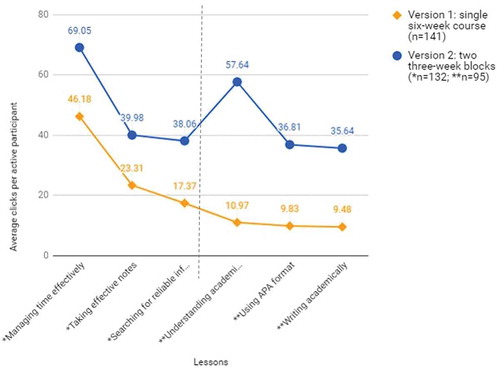

Learners’ engagement with course content decreased throughout delivery (see Figure 2). In Version 1, average views per active participant dropped from 46.18 in the first lesson to 9.48 in the last one. Version 2 showed a similar pattern, but the decline was less dramatic: mean content views dropped from 69.05 to 38.06 in the first block (Introduction to Study Skills), and from 57.64 to 35.64 in the second block (Development of Academic Skills). Overall, active learners in Version 2 had more content views on average than those in Version 1 (277.18 versus 117.14).

Figure 2. Content views
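To make the “less dramatic” decline concrete, the relative drop in mean content views from the first to the last lesson can be computed directly from the figures reported above. The short Python sketch below does only that arithmetic; the function name is illustrative.

```python
def relative_drop(first: float, last: float) -> float:
    """Percentage decline from the first value to the last, to one decimal place."""
    return round(100 * (first - last) / first, 1)


# Mean content views per active participant, first and last lesson (as reported).
reported = {
    "Version 1": (46.18, 9.48),
    "Version 2, Introduction to Study Skills": (69.05, 38.06),
    "Version 2, Development of Academic Skills": (57.64, 35.64),
}
for label, (first, last) in reported.items():
    print(f"{label}: {relative_drop(first, last)}% drop")

# Ratio of overall average content views, Version 2 versus Version 1.
print(round(277.18 / 117.14, 2))
```

On these figures, Version 1 lost roughly four-fifths (79.5%) of its per-learner content views between the first and last lessons, against 44.9% and 38.2% in the two Version 2 blocks, and overall views per active learner were about 2.37 times higher in Version 2.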

A minority of learners in Version 1 (125/323; 38.70%) engaged with peers and facilitators by posting at least one message in the discussion forums. A much higher percentage of participants in Version 2 (107/134; 79.85%) did the same (see Table 3). On average, across the six lessons, each active participant posted 15.13 messages in Version 1 and 48.96 in Version 2. Overall, learners in Version 2 were much more engaged in social interactions.

Table 3. Messages posted

3.2 Retention

Few active participants in Version 1 (22/141; 15.60%) successfully submitted the overarching assignment of every lesson, thereby completing the MOOC to the specified standards and obtaining a certificate of participation. The completion rate was 46.22 percentage points higher in Version 2 (see Table 4), and participation and retention also improved. Most learners in Version 2 who engaged at least once with the course (73/110; 66.36%) remained active throughout the MOOC, either posting messages, attempting the multiple-choice exercises or answering surveys. Those in Version 1 tended to stop participating before the end of the course, with a retention rate of only 26.95% (38/141; see Figure 3).

Table 4. Completion rate

Figure 3. Participation and retention throughout MOOC delivery
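The retention results mix two kinds of comparison: absolute differences in percentage points (the 46.22-point rise in completion) and relative changes (completion roughly quadrupling). The Python sketch below reproduces both from the counts and rates reported in this section; the helper names are illustrative.

```python
def pct(part: int, whole: int) -> float:
    """Share of `whole` represented by `part`, as a percentage to two decimals."""
    return round(100 * part / whole, 2)


def point_difference(rate_a: float, rate_b: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return round(rate_b - rate_a, 2)


def relative_change(rate_a: float, rate_b: float) -> float:
    """How many times larger rate_b is than rate_a."""
    return round(rate_b / rate_a, 2)


completion_v1 = pct(22, 141)   # 15.6, reported as 15.60%
retention_v1 = pct(38, 141)    # 26.95
retention_v2 = pct(73, 110)    # 66.36
completion_v2 = 61.82          # completion rate reported for Version 2

print(point_difference(completion_v1, completion_v2))  # 46.22 percentage points
print(relative_change(completion_v1, completion_v2))   # 3.96, i.e. roughly quadrupled
print(point_difference(retention_v1, retention_v2))    # 39.41 percentage points
```

Keeping the two measures apart avoids a common misreading: a 46.22-point increase is not a 46.22% increase, since relative to the Version 1 baseline the completion rate grew almost fourfold.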

3.3 Success

In this paper, self-efficacy is an indicator of success, as it matches the aim of the Study Skills MOOC. In both versions, participants reported improvements in their general self-efficacy (maximum value = 4), with increases ranging from 0.22 to 0.30. Although the differences are small, Wilcoxon signed-rank tests confirmed that they were all statistically significant (see Table 5). A previous analysis of the results in Version 1 is available in Padilla Rodriguez and Armellini (2017).

Table 5. General self-efficacy before and after the MOOCs

By the end of the MOOCs, participants felt more confident in their own study skills. The differences between their mean self-efficacy before and after the MOOC (maximum value = 5) ranged from 0.37 to 0.87 points. Increases were all statistically significant, except for self-efficacy related to searching for reliable information in Version 1 (see Table 6).

Table 6. Study skills’ self-efficacy before and after the MOOCs

The percentage of participants who provided optional comments at the end of the surveys ranged from 46.88% to 70.42% (see ). A preliminary Wordle analysis showed similar keywords in both versions (see ). Initially, answers seemed to refer to study skills (notes, time, academic), challenges (struggle, distracted, difficult) and course expectations (understand, improve, learn). In the survey conducted during the final lesson, most frequently mentioned words related to the usefulness and appreciation of the MOOCs: helped, study, skills, thanks.

Table 7. Key themes in participants’ comments

Thematic analysis provided further insights into participants’ views (see Table 7). In the initial surveys, respondents referred to the difficulties they faced regarding their study skills:

I don’t trust my skills to use some study strategies. [V1P2]

I am easily distracted, and I leave things for the last minute. [V2aP70]

To a lesser extent, comments at the beginning of both versions of the MOOC focused on participants’ strategies to address their challenges or their academic strengths:

Sometimes I listen to classical music, as it helps me concentrate … [V1P9]

I took a course on research methodology in high school, so I have some experience writing academic papers. [V2bP22]

Some respondents expressed their course expectations and goals:

I want to learn a learning methodology so I can apply it in my studies. [V1P31]

… I hope that once I finish the course I make better use of my time. [V2aP24]

In the second block of Version 2 (Development of Academic Skills), a couple of comments referred to the usefulness of the first block; for example: Thanks to the previous course, now I am better at taking notes. [V2bP13]

Answers in the final surveys showed some differences between versions. Participants in both versions of the MOOC expressed appreciation and gratitude. For example:

Thanks a lot for this online course. I think it is a very good course. It helped me as a student and as a professional. [V1P24]

Congratulations for this course. It was really very useful. Thank you for the opportunity. I learned a lot during this period. [V2aP42]

Many comments focused on learning or general benefits derived from studying the MOOC:

… I have improved in several aspects, such as organising my time and using an adequate space for academic activities. I am also now able to use effective notes to understand texts more efficiently. Many questions I had on how to use citations and references were answered in this course … [V1P18]

This course helped me a lot to know how to write correctly my essays or lab reports. Also, it taught me that I was an unintentional plagiarist when I didn’t cite or reference [sources] adequately. [V2bP65]

In Version 2, some participants went beyond expressions of MOOC benefits. They described specific ways in which their learning had resulted in a behavioural change or been meaningful for them. For example:

I used to take notes of everything the teacher wrote on the blackboard, without realising that it wasn’t necessary. Now I only write the most important points and I do conceptual maps. [V2aP41]

I am really very happy because a few days ago I had to do an assignment related to the last topic I saw in this course. It was very useful for me. Many thanks. (: [V2bP42]

Also, some respondents highlighted the value of social interactions and shared suggestions to improve the course:

My coursemates’ advice was good. I could use some [tips] in my daily life. [V2aP75]

Thanks to the group activities, [I was able to see] that I wasn’t the only one who had this type of problems […], this helped me overcome them … [V2bP48]

Teams should be automatically created, so I can get to know other coursemates better [V2bP35]

4. Discussion

This study addresses a gap in current research into how changing specific MOOC design characteristics, in this case course length, affects the learner experience (Perna et al., 2014). We focused on engagement, retention and success in two versions of a Study Skills MOOC. As reported in the literature, about half of the people enrolled did not show up, and funnel participation was evident (Clow, 2013; Ho et al., 2014; Jordan, 2015a). However, engagement improved considerably when the original six-week MOOC (Version 1) was divided into two three-week blocks (Version 2). Interactions with content, peers and facilitators more than doubled in terms of average content views, number of messages posted and percentage of active participants throughout the six lessons.

Students expressed themselves positively in relation to the Study Skills MOOC. Both versions were successful in that they helped participants fulfil the goal of developing confidence in their own capabilities. Learners who completed the six lessons reported statistically significant increases in their self-efficacy, both in general and in relation to specific study skills. The only exception was in Version 1, where participants’ self-efficacy in searching for reliable information showed no significant difference before and after the course. Respondents to the final surveys are likely to have reviewed the materials and participated in the online activities. As the content was the same in both versions, it is not surprising that the levels of success achieved by both sets of participants were very similar.

Student retention in the Study Skills MOOC was lower than the rate of over 80% typical of traditional university courses (Atchley et al., 2013). Version 1 had a 15.60% completion rate, equivalent to the average reported for other MOOCs (Jordan, 2015b), whereas Version 2 had a 61.82% completion rate. Optional comments provided evidence of how learning was meaningful for participants and how they were able to apply it in their own contexts. We therefore encourage MOOC designers to move away from the standard length of six to ten weeks (Hollands & Tirthali, 2014), and we support recommendations in favour of shorter, modularised courses (Engle et al., 2015; Jordan, 2015a).

Version 2 had higher engagement and retention rates. As it was composed of two blocks, it had two sets of welcome and wrap-up activities (see Figure 1). Completing it therefore required more work than Version 1, and yet learners were more likely to participate and complete it. Why? We consider possible explanations at the individual and group levels, described next.

Individual level – Proximal goals and motivation. Completing Version 1 might have seemed a distal goal for participants, six weeks away. Version 2 divided this target into smaller, more manageable and proximal goals, against which progress was easier to gauge (Schunk, 1990). Each block in Version 2 had a beginning and an end. Finishing the first one might have offered a motivating sense of accomplishment, which led students to want to engage in the second block.

Group level – Social interactions and social modelling. MOOC participants who engage in social interactions are less likely to drop out (Sunar, White, Abdullah, & Davis, 2016). In Version 2, learners posted on average about three times as many messages as in Version 1, and some reported valuing communication with their peers. Interacting participants might have acted as social models for others (Bandura, 2001), who imitated their behaviour and in turn motivated others to engage as well. In contrast, in Version 1, some learners might have dropped out when they saw others disengaging from the course.

These explanations are not mutually exclusive. Both might contribute to understanding why dividing a MOOC into two blocks yielded different results in terms of learner engagement and retention. However, more research is needed before we can generalise these results.

This study faced several limitations. We manipulated the courses’ duration, keeping the content and learning design unmodified, and used a pre-post test approach. However, there was no control group. The samples were not randomly selected and had unequal sizes. A number of unforeseen variables could have influenced learners’ engagement, retention and success. For example, participants’ profiles were slightly different between groups: in Version 1, roughly half the learners were employed, whereas in Version 2 most were undergraduate students. Also, the MOOCs did not run simultaneously. The dates selected for implementation could have matched holidays in some countries or have been particularly (in)convenient for participation.

5. Conclusions

This paper contributes to the debate on how the duration of a MOOC may affect learner engagement, retention and success. It moves beyond describing a set of existing MOOCs and instead reports on two versions of one MOOC that differed in course duration. The first version was six weeks long, whereas in the second the content was divided into two three-week blocks. The study therefore eliminates the influence of some potentially confounding factors, such as the topic, type of assessment and underlying pedagogy. It offers evidence in favour of short, modularised MOOCs. We found that the version delivered as two three-week blocks doubled the amount of learner participation, enhanced the depth and quality of that engagement, and quadrupled completion rates. In both versions of the MOOC, participants who completed the courses reported positive views and increased self-efficacy.

The findings we present derive from a single case. They may inspire designers to consider the relevance of MOOC duration. We encourage other researchers to replicate this study and address its limitations. An interesting approach for a future study would be to condense the original six-week MOOC into a three-week MOOC, as opposed to just dividing it. Additional questions to consider include: How short can a MOOC be before learner engagement and retention stop improving? What other factors can affect student participation and completion? Answers will help MOOC designers and developers create effective and engaging courses.

![Infographic: Why Size Matters in MOOCs](Infograph_-_Why_Size_Matters_in_MOOCs.png)

Acknowledgments

The National Council of Science and Technology (CONACYT) in Mexico supported this work.

Supplementary data

Supplemental data for this article can be accessed online.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Brenda Cecilia Padilla Rodriguez

Brenda Cecilia Padilla Rodriguez (a.k.a. Brenda Padilla) is a lecturer at UANL, a member of the National Researchers’ System in Mexico, and a global consultant at E-Learning Monterrey. Her research focuses on online interactions, course design and educational innovations.

Alejandro Armellini

Alejandro Armellini (MEd, PhD, PFHEA, FRSA) is Dean of Learning and Teaching and Director of the Institute of Learning and Teaching in Higher Education at the University of Northampton. His research focuses on learning innovation, online pedagogy, course design in online environments, institutional capacity building and open practices.

Ma Concepción Rodriguez Nieto

Ma Concepción Rodriguez Nieto is a lecturer at UANL, the coordinator of the Master of Science programme with an orientation in Cognition and Education at UANL’s Faculty of Psychology, and a member of the National Researchers’ System in Mexico. Her research focuses on educational psychology, cognitive processes and online learning.

References

- Anderson, T. (2013). Promise and/or peril: MOOCs and open and distance education. Athabasca University. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.363.4943&rep=rep1&type=pdf

- Armellini, A., & Aiyegbayo, O. (2010). Learning design and assessment with e‐tivities. British Journal of Educational Technology, 41(6), 922–935.

- Atchley, T., Wingenbach, G., & Akers, C. (2013). Comparison of course completion and student performance through online and traditional courses. The International Review of Research in Open and Distributed Learning, 14(4). doi:10.19173/irrodl.v14i4.1461

- Baessler, J., & Schwarzer, R. (1996). Evaluación de la autoeficacia: Adaptación española de la escala de Autoeficacia General [Evaluation of self-efficacy: Spanish adaptation of the General Self-Efficacy Scale]. Ansiedad y Estrés, 2(1), 1–8.

- Bandura, A. (1994). Self-efficacy. In V. S. Ramachandran (Ed.), Encyclopedia of human behavior (Vol. 4, pp. 71–81). New York, NY: Academic Press.

- Bandura, A. (2001). Social cognitive theory: An agentic perspective. Annual Review of Psychology, 52, 1–26.

- Bandura, A. (2002). Growing primacy of human agency in adaptation and change in the electronic era. European Psychologist, 7(1), 2–16.

- Bartimote-Aufflick, K., Bridgeman, A., Walker, R., Sharma, M., & Smith, L. (2015). The study, evaluation, and improvement of university student self-efficacy. Studies in Higher Education, 41(11), 1918–1942.

- Clow, D. (2013, April). MOOCs and the funnel of participation. In LAK ’13: Proceedings of the Third International Conference on Learning Analytics and Knowledge (pp. 185–189). New York, NY: ACM. doi:10.1145/2460296.2460332

- Coelho, J., Teixeira, A., Nicolau, P., Caeiro, S., & Rocio, V. (2015). iMOOC on climate change: Evaluation of a massive open online learning pilot experience. The International Review of Research in Open and Distributed Learning, 16(6). doi:10.19173/irrodl.v16i6.2160

- Engle, D., Mankoff, C., & Carbrey, J. (2015). Coursera’s introductory human physiology course: Factors that characterize successful completion of a MOOC. The International Review of Research in Open and Distributed Learning, 16(2). doi:10.19173/irrodl.v16i2.2010

- Ho, A. D., Reich, J., Nesterko, S. O., Seaton, D. T., Mullaney, T., Waldo, J., & Chuang, I. (2014). HarvardX and MITx: The first year of open online courses (HarvardX and MITx Working Paper No. 1). doi:10.2139/ssrn.2381263

- Hodges, C. (2016). The development of learner self-efficacy in MOOCs. In P. Kirby & G. Marks (Eds.), Proceedings of Global Learn – Global Conference on Learning and Technology (pp. 517–522). Waynesville, NC: Association for the Advancement of Computing in Education (AACE).

- Hollands, F. M., & Tirthali, D. (2014). MOOCs: Expectations and reality. Full report. Center for Benefit-Cost Studies of Education, Teachers College Columbia University. Retrieved from http://files.eric.ed.gov/fulltext/ED547237.pdf

- Johnson, L., Adams Becker, S., Estrada, V., & Freeman, A. (2015). NMC horizon report: 2015 higher education edition. Austin, TX: The New Media Consortium. Retrieved from http://cdn.nmc.org/media/2015-nmc-horizon-report-HE-EN.pdf

- Jordan, K. (2014). Initial trends in enrolment and completion of massive open online courses. The International Review of Research in Open and Distributed Learning, 15(1). doi:10.19173/irrodl.v15i1.1651

- Jordan, K. (2015a). Massive open online course completion rates revisited: Assessment, length and attrition. The International Review of Research in Open and Distributed Learning, 16(3). doi:10.19173/irrodl.v16i3.2112

- Jordan, K. (2015b). MOOC completion rates: The data. Retrieved from http://www.katyjordan.com/MOOCproject.html

- Koller, D., Ng, A., & Chen, Z. (2013). Retention and intention in massive open online courses: In depth. EDUCAUSE Review Online. Retrieved from https://er.educause.edu/articles/2013/6/retention-and-intention-in-massive-open-online-courses-in-depth

- Komarraju, M., & Nadler, D. (2013). Self-efficacy and academic achievement: Why do implicit beliefs, goals, and effort regulation matter? Learning and Individual Differences, 25, 67–72.

- McNaught, C., & Lam, P. (2010). Using Wordle as a supplementary research tool. The Qualitative Report, 15(3), 630–643.

- Otto, D., Bollmann, A., Becker, S., & Sander, K. (2018). It’s the learning, stupid! Discussing the role of learning outcomes in MOOCs. Open Learning: The Journal of Open, Distance and e-Learning, 33(3), 203–220.

- Padilla, J., Acosta, B., Gómez, J., Guevara, M., & González, A. (2006). Propiedades psicométricas de la versión española de la escala de autoeficacia general aplicada en México y España [Psychometric properties of the Spanish version of the general self-efficacy scale applied in Mexico and Spain]. Revista Mexicana de Psicología, 23(2), 245–252.

- Padilla Rodriguez, B. C., & Armellini, A. (2017). Developing self-efficacy through a massive open online course on study skills. Open Praxis, 9(3), 335–343.

- Padilla Rodriguez, B. C., Bird, T., & Conole, G. (2015). Evaluation of massive open online courses (MOOCs): A case study. In T. Bastiaens & G. Marks (Eds.), Proceedings of Global Learn 2015 (pp. 527–535). Berlin, Germany: Association for the Advancement of Computing in Education (AACE).

- Padilla Rodriguez, B. C., Rocha Estrada, F. J., & Rodriguez Nieto, M. C. (2017). Razones para estudiar un curso en línea masivo y abierto (MOOC) de habilidades de estudio [Reasons to study a massive open online course (MOOC) of study skills]. In C. Delgado Kloos, C. Alario-Hoyos, & R. Hernández-Rizzardini (Eds.), Actas de la Jornada de MOOCs en Español en EMOOCs 2017 (EMOOCs-ES) (pp. 54–61). Madrid, Spain: CEUR-WS.org.

- Pérez Sanagustín, M., Maldonado, J., & Morales, N. (2016). Status report on the adoption of MOOCs in higher education in Latin America and Europe. MOOC-Maker Project. Retrieved from http://www.mooc-maker.org/wp-content/files/WPD1.1_INGLES.pdf

- Perna, L. W., Ruby, A., Boruch, R. F., Wang, N., Scull, J., Ahmad, S., & Evans, C. (2014). Moving through MOOCs: Understanding the progression of users in massive open online courses. Educational Researcher, 43(9), 421–432.

- Salmon, G. (2013). E-tivities: The key to active online learning. New York, NY: Routledge.

- Salmon, G., Gregory, J., Lokuge Dona, K., & Ross, B. (2015). Experiential online development for educators: The example of the Carpe Diem MOOC. British Journal of Educational Technology, 46(3), 542–556.

- Salmon, G., Pechenkina, E., Chase, A.-M., & Ross, B. (2017). Designing massive open online courses to take account of participant motivations and expectations. British Journal of Educational Technology, 48(6), 1284–1294.

- Schunk, D. H. (1990). Goal setting and self-efficacy during self-regulated learning. Educational Psychologist, 25, 71–86.

- Sharples, M., Adams, A., Ferguson, R., Gaved, M., McAndrew, P., Rienties, B., … Whitelock, D. (2014). Innovating Pedagogy 2014: Exploring new forms of teaching, learning and assessment, to guide educators and policy makers. United Kingdom: The Open University. Retrieved from http://www.open.ac.uk/iet/main/files/iet-web/file/ecms/web-content/Innovating_Pedagogy_2014.pdf

- Shier, R. (2004). Statistics: 2.2 The Wilcoxon signed rank sum test. Mathematics Learning Support Centre. Retrieved from http://www.statstutor.ac.uk/resources/uploaded/wilcoxonsignedranktest.pdf

- Siemens, G. (2013). Massive open online courses: Innovation in education? In R. McGreal, W. Kinuthia, S. Marshall, & T. McNamara (Eds.), Open educational resources: Innovation, research and practice (pp. 5–16). Vancouver, Canada: Commonwealth of Learning and Athabasca University.

- Sunar, A., White, S., Abdullah, N., & Davis, H. (2016). How learners’ interactions sustain engagement: A MOOC case study. IEEE Transactions on Learning Technologies, 10(4). doi:10.1109/TLT.2016.2633268