ABSTRACT

Active learning in mathematics can lead to deeper understanding than passively listening to a lecture, yet recent studies indicate that didactic teaching dominates online tutorials. This study investigated student participation in three types of activity during online undergraduate mathematics tutorials: solving mathematical problems via polling, on-screen activities on a shared whiteboard, and text-chat. Data were collected from tutorial observations, student and tutor surveys, semi-structured student interviews and a tutor focus group. Results showed high student participation in all types of activity. Students also perceived the activities as enjoyable and as aiding their learning, although there were some differences between the types, with text-chat activities being slightly less well received. Perceived benefits to students’ learning included being able to attempt similar activities, whereas other suggested benefits, such as correcting misconceptions, received a more mixed response. By demonstrating the successful use of mathematical activities in synchronous online tuition, these results provide motivation for tutors to facilitate essential active learning online.

Introduction

Active learning has been encouraged for many years as a route to deepening students’ understanding, with the meta-analysis by Freeman et al. (Citation2014) providing striking evidence of its benefits in STEM subjects. Their analysis of 225 studies found an average increase in examination scores of 6% when active learning was incorporated, and that students taught by traditional methods were 1.5 times more likely to fail than those taught with active learning.

In 2016 the Conference Board of the Mathematical Sciences in Washington (CBMS, Citation2016) issued a statement calling on higher education institutions and others to ‘ensure that effective active learning is incorporated into post-secondary mathematics classrooms’. In this paper, we adopt the CBMS definition of active learning in mathematics as

‘…classroom practices that engage students in activities, such as reading, writing, discussion, or problem solving, that promote higher-order thinking.’

Incorporating active learning applies equally to the online classroom. Biggs and Tang (Citation2007) include the online environment in their many recommendations for incorporating active learning to encourage deeper learning. Rogoza (Citation2008) and Robinson et al. (Citation2015) also argue that active learning is more relevant than ever online.

Some early pilot studies reported positively on introducing synchronous online tuition to various educational settings (McBrien et al., Citation2009; Ng, Citation2007; Pan & Sullivan, Citation2005; Saw et al., Citation2008; Wang & Hsu, Citation2008). Other studies, however, indicated that although online mathematics tutorials were valued by both students and tutors, these tended to be in a lecture style with only limited active participation by students (Beyth-Marom et al., Citation2005; Lowe et al., Citation2016). Students and tutors reported that the level of active participation in online sessions compared poorly with that in face-to-face tutorials.

Since then, the provision of online tuition has greatly increased, in some cases replacing face-to-face tuition. However, more recent research on science and technology courses continues to indicate that tutors tend not to use the available interactive online tools (Butler et al., Citation2018) and that tutors perceive that students expect a didactic online tutorial (Campbell et al., Citation2018). Additional anecdotal evidence from recent monitoring of online mathematics tutorials suggests that whilst some tutors incorporate activities in their sessions, the majority adopt a lecturing style with little active student participation.

Encouraging active learning in synchronous online tuition poses a significant challenge. One issue is that tutors feel hampered in their ability to adapt to student needs by the lack of feedback from students (Campbell et al., Citation2018; Kear et al., Citation2012; Lowe et al., Citation2016). Ng (Citation2007) reports that both students and tutors find interaction easier and clearer face-to-face, and Hampel (Citation2006), Wang and Hsu (Citation2008), McBrien et al. (Citation2009), and Kear et al. (Citation2012) all comment on the difficulties posed by the lack of non-verbal communication in online synchronous environments. Yet Biggs and Tang (Citation2007) emphasise that formative feedback given to students ‘as they learn’ encourages deep learning. Williams (Citation2015) also notes that tutorial activities have the same benefits as students working on problems in their own time, but that within a tutorial feedback can be both quicker and more personalised, and mathematical dialogue is encouraged.

Another difficulty is the high cognitive load on tutors of having to manage numerous tasks simultaneously (Kear et al., Citation2012). In a wider context, Ottenbreit-Leftwich et al. (Citation2010) note the slow uptake of technological tools to promote active learning, and argue that tutors need to believe that technology is valuable for student learning before they will incorporate it into their practice. These barriers may help to explain the prevalence of didactic online tutorials, and may also suggest ways of addressing it.

It is worrying that, despite the significant positive effects of active learning reported by Freeman et al. (Citation2014), moving online appears to encourage the opposite: a return to traditional, didactic teaching. More research is needed into techniques for facilitating active learning and improving communication online, as well as evidence of their pedagogic value to motivate uptake by tutors.

To address this, we have investigated tutors’ current practice in online synchronous tutorials which do include activities to facilitate active learning, to assess how successfully they achieve student participation. As well as measuring student participation, we have collected data on student and tutor perceptions of these activities. Such data will be instrumental in informing a move towards increasing student participation online, since real-time feedback from students is limited in an online environment.

Three types of activity were included in these tutorials, which form one part of a more comprehensive teaching programme: solving a mathematical problem via polling, completing activities on a shared whiteboard (on-screen activities) and using text-chat.

Examples of each type of activity are given in Figure 1.

Figure 1. Examples of the three types of online activity: polling, on-screen and text-chat activities.

Polling questions can be designed to check understanding of a mathematical concept, assess prior understanding and test critical thinking skills. Options can be chosen to catch common misconceptions. On-screen activities are useful for visual concepts such as drawing plots, and allow a greater variety of activities. They can help develop deep understanding of threshold concepts. Text-chat questions are normally asked verbally or displayed on a shared whiteboard, with students answering by typing into the text-chat. They can be used to test understanding and to build on earlier responses, and they encourage students to communicate their ideas and explain their thinking. These activity types were chosen for their wide applicability and ease of use, and because they have been reported elsewhere as being perceived as effective for learning mathematics (Lowe et al., Citation2016).

In addition to the above, we probed the barriers that students perceived to participating in the activities, such as lack of confidence or time. More specifically, we considered the following research questions:

To what extent do students participate in polling, on-screen activities and text-chat whilst solving mathematical problems within synchronous online tutorials?

How are these activities perceived by students?

What do students and tutors perceive as the benefits to learning from these activities?

What technological issues, if any, do tutors and students face?

Educational context

This study was conducted in the School of Mathematics and Statistics at the Open University (OU). The OU is the largest distance-education provider in the United Kingdom and offers both undergraduate and postgraduate degrees studied either part- or full-time. Undergraduate mathematics students study several modules, each one typically over a 30-week period involving between 10 and 20 hours’ study per week. The module and assessment materials are provided online, with the core texts also provided as printed textbooks. All the compulsory material is normally covered in these core texts, which students study independently, and which include numerous worked examples and activities with solutions. Some modules also include compulsory materials on mathematical software. The compulsory material is supplemented with additional, optional resources such as video clips and forum participation. Students are required to complete both written and computer-based continuous assessment and to sit a final module exam. Students involved in this project had to pass both the continuous assessment component and the final exam to gain module credit.

Tutor support is provided on each module, with a tutor typically supporting 20 students in a group. Tutors deliver both face-to-face (when possible) and online tutorials, which are open to several tutor groups. These tutorials, which are the focus of this research, are optional, though attendance is strongly encouraged. The broad content of a tutorial is specified module-wide, but tutors prepare their own materials and are encouraged to adapt to the needs of the students who attend. The tutorials therefore do not cover all the compulsory materials, but provide additional reinforcement and support. They are, however, constructively aligned (Biggs & Tang, Citation2007) with the core texts, focussing on the module learning objectives and preparing for assessment. Face-to-face sessions have traditionally been very interactive, providing an important opportunity for active learning within the full teaching programme of a module. It is therefore vital that this aspect is maintained in online alternatives.

The OU introduced Adobe Connect (AC) as its online conferencing software in October 2016 and provided a compulsory staff development programme on the use of the software. AC includes interactive tools (such as polling, text chat, microphones, whiteboard and breakout rooms) similar to those available in most online conferencing software.

Methods

A mixed methods approach was taken, with both qualitative and quantitative data collected on the online tutorials given by three tutors on three modules spanning all three levels of undergraduate mathematics. Data were collected from the following sources:

eleven online tutorial observations;

student and tutor surveys on these tutorials;

semi-structured interviews with nine students who had participated in the tutorials;

a focus group with the three tutors delivering the online tutorials.

Ethics approval for the research was granted by the OU’s Student Research Project Panel.

As is typical with tutorials in this programme, tutors planned their own tutorial content, when and how to use activities, and which online tools to use. The three participating tutors were highly conversant with the software and experienced in running these types of activity. There was no requirement to include all three types of activity (solving mathematical problems via polling, on-screen activities and text-chat) in every tutorial. Students were encouraged to answer the text-chat activities by either microphone or text-chat, but as only one student used a microphone once during these eleven tutorials, we refer to them as text-chat activities.

Tutorial observations

Recordings of eleven live online tutorials were observed between February and May 2018. Students were familiar with the online conferencing software, having used it as part of their previous studies, and consent was obtained for recording the tutorials. The three authors observed the recordings rather than the live sessions to avoid any effect their presence might have had.

The following data were collected for each activity: the type of activity (one of the three studied), the purpose of each activity, the overall duration of the activity as well as the duration of active student participation, the (minimum) number of students participating in the activity and the number of interactions made (these last two were not possible for on-screen activities due to technological limitations). Notes on the tutors’ teaching style, interaction with students and any technical issues were also made.

An Excel spreadsheet was used to calculate various statistics for each type of activity in each tutorial:

the total number of activities of each type;

mean length of time spent on the activities (overall duration as well as the duration of active student participation);

proportion of tutorial spent on the activities (overall duration as well as the duration of active student participation);

mean number of individual students participating in the activities (this was not possible for the on-screen activities);

mean number of interactions relating to the activities (again not accurate for on-screen activities).

The proportion of students who participated at least once during a tutorial in any activity was also calculated (again excluding on-screen activities). No activities other than the three types studied were included.
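The statistics above were calculated in an Excel spreadsheet. As a hedged illustration of the same calculations, the following Python sketch computes them for a single tutorial. The `Activity` record, its field names and the use of anonymised participant identifiers are our own assumptions, not the layout of the original spreadsheet. Counts that could not be determined (as for on-screen activities) are stored as `None` and excluded from the corresponding means.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Activity:
    """One observed activity (hypothetical record layout)."""
    kind: str                                # "polling", "on-screen" or "text-chat"
    duration_min: float                      # overall duration, incl. introduction and solution
    active_min: float                        # time students spent actively working
    participants: Optional[frozenset] = None  # hypothetical anonymised IDs; None if not identifiable
    interactions: Optional[int] = None        # None if interactions could not be counted


def summarise(activities, tutorial_min):
    """Per-type statistics for one tutorial of length tutorial_min minutes."""
    summary = {}
    for kind in sorted({a.kind for a in activities}):
        group = [a for a in activities if a.kind == kind]
        counted = [a for a in group if a.participants is not None]
        with_counts = [a for a in group if a.interactions is not None]
        summary[kind] = {
            "count": len(group),
            "mean_duration_min": mean(a.duration_min for a in group),
            "mean_active_min": mean(a.active_min for a in group),
            # share of the whole tutorial spent on this activity type
            "prop_of_tutorial": sum(a.duration_min for a in group) / tutorial_min,
            "prop_active_of_tutorial": sum(a.active_min for a in group) / tutorial_min,
            # means are only taken over activities where the count was available
            "mean_participants": (mean(len(a.participants) for a in counted)
                                  if counted else None),
            "mean_interactions": (mean(a.interactions for a in with_counts)
                                  if with_counts else None),
        }
    return summary


def prop_participating_at_least_once(activities, attendees):
    """Share of attendees who took part in at least one identifiable activity."""
    seen = set()
    for a in activities:
        if a.participants is not None:   # on-screen activities are skipped
            seen |= a.participants
    return len(seen & set(attendees)) / len(attendees)
```

For example, `summarise(activities, tutorial_min=60)` returns one dictionary of statistics per activity type; in practice every input value would come from the observation records.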

Student and tutor surveys

Student surveys were included to investigate students’ own perception of their participation, why they did or did not participate in the different activities and what effect (if any) they felt the activities had on their learning. A link to an anonymous online survey was publicised to the students in each of the eleven tutorials, and tutors encouraged students to participate. For each of the three types of activity, the questions concentrated on whether the students had attempted the activities and shared their answers, and whether they perceived that the activities aided or hindered their learning and enjoyment. The survey also explored factors that encouraged or hindered students in engaging with the activities and what effects, if any, the activities had on aspects of their learning. While the student survey provided mainly quantitative data from Likert-scale multiple-choice questions, open comment boxes also yielded qualitative data.

The three tutors filled in a tutor survey after each of their tutorials included in this research. These survey questions concentrated on the tutors’ perception of the level of student participation, and what benefits and disadvantages to student enjoyment and learning they perceived the inclusion of these activities to have.

The quantitative data from the multiple-choice student and tutor survey questions were collated within the survey software Online surveys (Citation2018) and manually exported to Excel for visual display and comparison. The free text comments from the surveys were analysed using NVivo version 11 (Citation2015), alongside the results from the student interviews and the tutor focus group.

Student interviews

Semi-structured interviews were carried out by the three authors with nine students who were selected from the live tutorial participants to cover a balance of undergraduate levels, gender, geography and level of participation observed during the tutorials. The interviews were conducted by telephone (Skype for Business) after obtaining student consent. A script of open questions was used to avoid any ‘leading’ questions. The questions concentrated on the same themes as the student survey questions, allowing these to be explored in more depth and new themes to emerge. The interviews were recorded, professionally transcribed and coded in NVivo.

Tutor focus group

The three tutors took part in a two-hour focus group with the authors. Open questions were used to investigate the tutors’ experiences of using the three different types of activity, their understanding of the purpose and benefits of including activities, how they encouraged participation and how participation could be improved. Though guided by these open questions, the discussion was driven by the tutors to allow any new themes to emerge and be explored. This enabled comparison between the students’ and tutors’ perceived experiences. The discussion was recorded, professionally transcribed and coded in NVivo.

Qualitative data

A thematic analysis approach (Braun & Clarke, Citation2013) was used to analyse the qualitative data (in NVivo) from the student interview transcripts (merged with the open comments from the student surveys) and the tutor focus group transcript (merged with the open comments from the tutor surveys). Thematic analysis of two interview transcripts was carried out independently by each author. A subsequent lengthy discussion of the themes showed good agreement between the authors. Further independent analysis and thorough comparison of one full transcript ensured close agreement between the authors on both the emergent themes and the coding of the transcripts.

Potential sources of bias

The variety of sources enabled triangulation of the findings, but there remains a concern that selection bias could have affected the results, since the study necessarily relied on feedback from students who had chosen to attend these optional tutorials given by these tutors.

Results

The length of tutorials and the proportion of activities in each tutorial were highly variable; tutorials lasted between 1 and 1.5 hours, with 17%–81% of each tutorial spent on activities (the mean across tutorials was 45%). During an activity, between 13% and 84% of the time was devoted to the students working on the activity, with the rest used by the tutor to introduce the problem and explain the solution.

Some screen activities were very long (up to 12.5 min) and tended to include more in-depth problems, sometimes with several parts, to allow for the overhead in set-up time. Text-chat activities were generally quick (1–3 min), with polling activities in-between (2–8.5 min). Students were generally given more thinking time in polling activities than text-chat activities. Some polling activities were also extended activities with hints and partial solutions given part-way through. All tutors created a relaxed environment and verbally encouraged participation in the activities.

There were 51 responses to the student tutorial survey, from 109 attendances. While students who attended more than one of the eleven tutorials could have completed more than one survey, it is unlikely that many did so. This is supported by the higher number of survey responses for the initial tutorials in each module. Tutorial attendance ranged from 5 to 14 students, with response rates as high as 70%–100% for three early tutorials, dropping to 20%–44% in later tutorials (with one outlier at 7%).
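As a simple worked check of the figures above, the overall response rate per attendance is:

```latex
\[
\frac{51 \ \text{responses}}{109 \ \text{attendances}} \approx 0.468 \approx 47\%
\]
```

This is a rate per attendance rather than per distinct student, for the reason given above.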

To address the research questions, the different data sources were analysed separately before combining and triangulating the evidence from all sources, culminating in the following key findings.

Key findings

This section is structured as four key findings, which address each of the four research questions. The relevant data are summarised by source in the following order: tutorial observations, student surveys (quantitative data), student surveys (qualitative data) and interviews, tutor surveys and focus group.

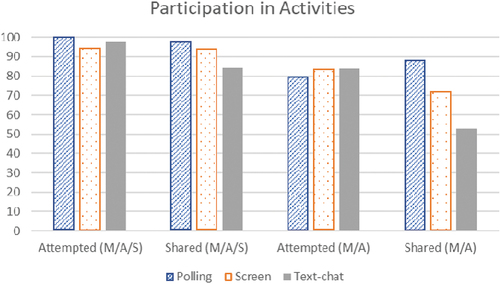

(1) High student participation was evident in all three types of activity in these online tutorials. Answers to text-chat activities seemed to be shared less often, although these activities were still generally attempted.

Tutorial observations

Participation rates as high as 100% were observed in polling activities, with the mean proportion of attendees participating in individual polling activities ranging from 37% to 100% across tutorials. Nine of the eleven tutorials had a mean participation rate above 60%. Furthermore, in five of the eleven tutorials 100% of students participated in at least one polling activity, and this proportion was above 76% in nine of the tutorials.

Observed participation was lower in text-chat activities, with the mean proportion of attendees participating in individual text-chat activities ranging from 11% to 67%. However, between 25% and 80% of students participated in at least one text-chat activity during each full tutorial. Unfortunately, it was not possible to identify individual contributions to the screen activities due to technological constraints, but observations indicated that these activities were actively attempted.

Student surveys (quantitative data)

This high level of participation is supported by the student survey data, shown in Figure 2, where the percentage of responses indicating that students shared their answers most of the time or always (M/A) was 88% for polling activities, 72% for screen activities and 53% for text-chat activities.

Figure 2. Percentage of student responses reporting that they attempted the activities (whether they shared their answer or not), and shared their answer, mostly, always or sometimes (M/A/S) and mostly or always (M/A). These high participation rates are consistent with tutorial observations, including the considerably lower percentage of responses indicating they shared their answer to text-chat activities. Since only those who attempted activities could share an answer, the results for sharing relate to fewer responses (42 as opposed to 50).

Encouragingly, responses were even more positive when students were asked whether they attempted the activities, regardless of whether they shared their answer or not, with over 90% of responses reporting that they attempted all types of activity sometimes, mostly or always (M/A/S).
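The M/A and M/A/S figures are cumulative groupings of the frequency categories on the survey’s Likert scale. A minimal Python sketch of this aggregation follows; the category labels are assumptions standing in for the survey’s actual wording. For the sharing question, the input would contain only the responses from students who attempted the activity, as noted in the Figure 2 caption.

```python
from collections import Counter

# Assumed frequency labels; the survey's exact wording may differ.
MOSTLY_OR_ALWAYS = frozenset({"mostly", "always"})        # M/A
SOMETIMES_OR_MORE = MOSTLY_OR_ALWAYS | {"sometimes"}       # M/A/S


def percent_in(responses, categories):
    """Percentage of responses that fall in any of the given categories."""
    if not responses:
        raise ValueError("no responses to aggregate")
    counts = Counter(responses)
    return 100.0 * sum(counts[c] for c in categories) / len(responses)


def frequency_summary(responses):
    """M/A and M/A/S percentages for one question and one activity type."""
    return {
        "M/A": percent_in(responses, MOSTLY_OR_ALWAYS),
        "M/A/S": percent_in(responses, SOMETIMES_OR_MORE),
    }
```

Because the M/A/S grouping contains the M/A grouping, its percentage is always at least as large.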

Student surveys (qualitative data) and interviews

This high student participation was further supported by every interviewee indicating that they attempted, and shared their answers to, all types of activity at least some of the time, apart from 1–3 cases where this question was not explicitly answered during the interview. The following quote from Student F indicates motivation to participate:

I found that to get the most out of the tutorials, you need to be participating when they ask for participation.

Tutor surveys and focus group

Tutor perceptions of student participation support the finding of high participation in polling activities, falling in text-chat activities. Tutors estimated participation rates of 67%–100% for polling activities, whereas their estimates for screen activities spanned the full range. Estimates for individual text-chat activities were lower again, mostly 0%–33%.

Other findings related to participation

Anonymity emerged spontaneously in three of the student interviews as an important factor in participating in polling activities, and one interviewee highlighted the lack of anonymity as a factor in not participating in text-chat. The tutor survey responses also indicated that the tutors perceived anonymity in polling to be important for student participation. This is illustrated by the following student quotes on polling activities:

I’m always relieved that the polls don’t show who voted for what.

I would share whatever I worked out basically, I mean it is all anonymous anyway, so no one is going to know that I am the only person who responded with the answer B and that was the only one that was wrong.

Student interview data also allowed more in-depth exploration of the reasons behind the lower participation rates in text-chat activities. It emerged that students do not see it as useful to respond after one or two students have already done so. The quickest students respond first, meaning the tutorial moves on before others have had sufficient time. Student B commented as follows regarding text-chat activities:

No, I don’t always share that, sorry. […] A lot of the times, with those sort of things, people are quite fast and you are like ‘I am not going to bother because people have already answered it’ […] I am not very fast, I must admit, I like to think about it but some people are so fast and people have already answered that and there is no point me putting it in sometimes.

The main issues reported in the student survey as preventing participation were a lack of time (36% of responses) and a lack of confidence (30% of responses). In contrast, some students commented negatively on the waiting time during activities, and tutors generally felt that they had allowed sufficient time. Approximately 10% of responses indicated that the activities did not suit the respondents’ learning preferences.

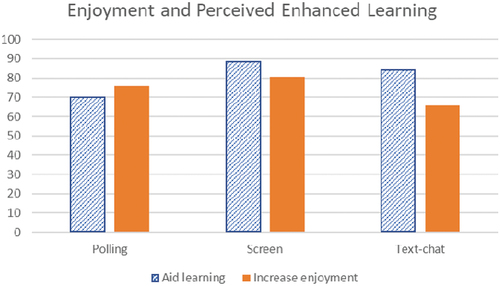

(2) All activities were generally perceived as aiding learning and enjoyable, with some indication that text-chat activities were slightly less well received.

Tutorial observations

The tutorial observation data were not relevant to this research question, as it relates to student perceptions.

Student surveys (quantitative data)

Between 66% and 89% of responses to the student survey indicated that each type of activity was perceived as both aiding learning and enjoyable, as shown in Figure 3. The remainder were almost exclusively neutral (the only negative responses were 2% and 3% reporting that polling and screen activities, respectively, decreased their enjoyment). Not a single response selected ‘Did not think it was useful’ as a reason for not attempting the activities. The lowest figure in the survey data was for text-chat activities, which were reported as enjoyable in 66% of responses. (Although the perception of polling activities as aiding learning is almost as low, this is not supported by the rest of the data. This may reflect polling sometimes being used for easier warm-up activities and for other purposes, such as assessing the students’ knowledge.)

Student surveys (qualitative data) and interviews

Student interview data support these findings, with 7 of the 9 interviewees indicating that they found polling and screen activities aided learning, but only 3 expressing such opinions about text-chat activities, while 4 indicated that they did not think text-chat activities improved their understanding. (The exploratory nature of the interviews meant that not all interviewees expressed an opinion.)

Student E explained why s/he valued the activities as follows:

They are good because otherwise you feel ‘am I the only person who is awake’ or the tutor must feel that as well, and I think it is difficult to actually try and make it like a classroom but if you don’t then it is like talking into space.

Student F enjoyed all three types of activity:

I think for me they were all equally enjoyable. I like the being interactive and being part of something rather than just attending a lecture-type tutorial where there is no participation and you are just sitting there taking notes. It was nice to be a part of it and to show your understanding, even if it is only to yourself.

Student I explained why s/he enjoyed text-chat activities less:

If everybody’s typing, it can slow the session down and also if everybody then posts something at the same time relating to what’s been discussed, then Tutor 2 has to sift through all these responses that have just been dumped on him effectively, to try – to then try to answer everybody in turn. So I think it has its place, but if, like you say, if the polls aren’t available and the actual audio is not available, it can really slow a whole session down and it can become quite fragmented and disjointed because you’re waiting for somebody to type something before you can then proceed.

Tutor surveys and focus group

Tutors also perceived these activities to increase student participation and enjoyment, though the text-chat was again perceived as less enjoyable for students.

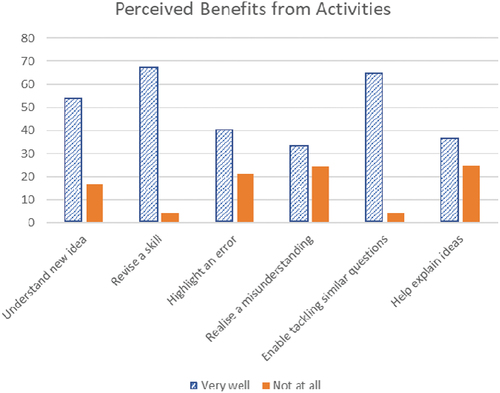

(3) Perceived benefits of participating in activities were dominated by enabling students to attempt similar activities, revision and understanding new ideas.

Tutorial observations

The tutorial observation data were again largely not relevant to this research question, as it relates to student and tutor perceptions. However, it was noted during observations that misconceptions were sometimes evident but went uncorrected.

Student surveys (quantitative data)

The most common ways in which the activities were reported to aid learning in the student survey were ‘understand a new mathematical idea’, ‘revise a skill’ and ‘enable me to tackle similar questions’, with 50%–70% of responses identifying these options as aiding their learning ‘very well’. Less common were ‘highlighted an error I had made’, ‘realised I had misunderstood a problem’ and ‘helped me explain my ideas’, with 30%–40% of responses rating these options as aiding learning ‘very well’. These results are shown in Figure 4, and are confirmed by an approximately inverse pattern in the responses indicating that the activities aided learning in these ways ‘not at all’.

Student surveys (qualitative data) and interviews

The student interview data indicated that students valued the opportunity to work things out for themselves. Students reported that they attend tutorials for revision purposes, to ask questions and to have new materials covered. A small number, 3 out of 9, indicated that tutorials had highlighted an error or area of weakness for them. Student interviews also indicated other perceived benefits such as benchmarking against other students, reassurance that others also make mistakes, testing understanding and helping to maintain concentration.

Tutor surveys and focus group

The tutors all perceived that including activities enhanced learning. Some of their reasons for including activities matched students’ perceived benefits: to discuss different ways of solving a problem and next steps; to highlight main ideas in upcoming assessments; to clarify core topics; to assess students’ understanding; to include extensions to the formal materials. Tutors also hoped to highlight common errors and encourage questions.

Further reasons given by tutors relating to enhancing learning included: getting students to engage with ideas; adding variety to the session; encouraging persistence and providing motivation; and identifying students’ needs so that they can be addressed and the tutorial adapted.

(4) Technical issues remain a significant problem.

Tutorial observations

Technical issues were observed in nine out of the eleven tutorials, including lost sound or poor sound quality, lost connection with the tutorial room, difficulties in displaying mathematical symbols, difficulties in writing on the screen and parts of the displayed content disappearing.

Student surveys (quantitative data)

No quantitative data were collected regarding technical issues.

Student surveys (qualitative data) and interviews

One survey respondent commented:

Sometimes the audio was not in sync with the screen. The audio was fine but the screen lagged, so at times I did not know what the tutor was referring to on the screen as it would take a long time for the screen to catch up.

It also seemed that screen activities were only suitable for relatively small groups of ten students or fewer; otherwise the system slowed to a halt and the screen became messy. Student B noted:

when they do enable things like drawing on the Blackboard, it doesn’t half slow things down.

Student B added:

You go onto a lecture and think ‘I hope Adobe works today. I hope we can hear what the person is saying, I hope we don’t end up having difficulties with sound’ […] it is the most frustrating bit about the whole system in that […] it doesn’t seem to be 100% […].

Tutor surveys and focus group

The issues noted in the tutorial observations were also raised by the tutors. Furthermore, they commented on the time it took to design workable activities, given the difficulty of getting appropriate mathematical content onto the whiteboard. Both students and tutors needed to spend a significant amount of time learning how to use the tools effectively. Activities have to be designed carefully, and clear instructions given, to minimise technical issues.

Technical issues are frustrating for both tutors and students, and interfere significantly with the smooth running of activities. This encourages the use of only the most basic and ‘safe’ features.

Discussion

It is encouraging that, despite the prevalence of didactic content in online tutorials (Butler et al., Citation2018), high student participation in activities in online tutorials is clearly achievable, with students perceiving activities as enjoyable and as aiding their learning. Furthermore, most students seemed to be attempting the activities most of the time, even if there was no indication of this to the tutor. As the students in this study had chosen to attend these tutorials given by these tutors, there may be selection bias in this sample. Nonetheless, this overwhelmingly positive response is notable.

It can be disconcerting for tutors to incorporate activities in their tutorials when the lack of non-verbal feedback from students in an online environment leaves them unable to see whether students are participating in activities or how they are experiencing them. Resorting to a didactic tutorial seems a safer option. These results are therefore valuable in increasing confidence that students do attempt activities and find them enjoyable, even though this is often unseen by the tutor. This should motivate tutors to incorporate activities in online tutorials.

Interestingly, activities answered by text-chat were perceived as less useful and less enjoyable by both students and tutors. This is consistent with the findings of Lowe et al. (Citation2016), where student perception of how well text-chat aids learning decreased slightly over the 5-year period studied, and its perceived effectiveness was less than that of polling. Our findings that fewer students participated in text-chat activities, and that these tended to move at the pace of the fastest attendees, provide a possible explanation for this.

An emergent theme was the importance to students of anonymity in participating, which may explain the high participation rates in polling activities. Anonymity is easier to achieve online than in face-to-face settings, and there may be great potential in investigating ways of exploiting this further online, as well as exploiting the use of anonymous online tools in face-to-face settings.

Lack of time was one of the main reasons given for not participating (in approximately one third of student survey responses), while tutors felt they had allowed sufficient time. This reflects the difficulty of planning activities for a student group with a wide range of mathematical experience, a difficulty that seems amplified in an online environment where the tutor receives less feedback from the students. Allocating a set amount of time to each activity, with an extension activity for faster students, may help address this issue. Lack of confidence was the other dominant reason given for not participating. Achieving a balance between student activities and tutor-led content may improve students' confidence to attempt activities.

It is difficult to measure whether including these types of activity in online tutorials improves understanding of mathematical ideas, especially as it was not possible to isolate the effects of the tutorials from the many other aspects of the full teaching programme, or to compare assessment results with a control group. The results reported in Freeman et al. (Citation2014), however, greatly increase our confidence that including activities is beneficial relative to didactic tutorials. We also found that students perceived that activities enabled them to attempt similar questions, that is, to apply their knowledge, which is a high-level cognitive skill. Attempting mathematical questions similar to those used in these tutorials also draws on several other high-level cognitive skills, such as analysing and generalising. Facilitating the active use of such skills during tutorials should encourage deeper learning (Biggs & Tang, Citation2007).

There is, however, significant scope for improvement in employing techniques to encourage deep learning. Only some students felt able to explain their ideas, ask questions and have their misconceptions corrected, all of which are associated with encouraging deep learning (Biggs & Tang, Citation2007). Other perceived benefits, such as benchmarking and maintaining concentration, were less directly related to encouraging deep learning (though still beneficial!).

Further research

More research is needed into activity design and the use of tools in an online environment, to facilitate active learning and to encourage deep learning. Larger scale studies (with control cohorts) are needed to explore the use of different types of online mathematical activities, tactics (Mason, Citation2002) and questions (Paoletti et al., Citation2018) across the undergraduate mathematics curriculum, and their effect on assessment scores and pass rates. These effects may well differ at different levels of undergraduate study, another aspect for further research. New techniques are required to overcome the lack of non-verbal communication and the difficulties of providing individual attention and correcting misconceptions.

Technological advances are also needed. Teaching in an online environment cannot fully flourish until pedagogic needs drive the development of teaching software. Rogoza (Citation2008) and Koehler et al. (Citation2013) highlight that educational needs should be at the core of the development stage of software for online synchronous tuition; it is more difficult to adapt software designed for business needs. Our findings support this by indicating that some online tools are well suited to incorporating activities in tutorials, but that the fuller educational picture is lacking in the software design. Furthermore, the prevalence of technical issues calls for improved hardware as well as software.

It is also important to note the related requirements for tutor training and time commitment when incorporating more activities online. The full impact on tutors of incorporating new technology in their practice is evident in the TPACK framework (Koehler et al., Citation2013). Considerably more training than a quick course on the relevant software, including subject-specific training, is required before tutors can fully incorporate the pedagogy of active learning online.

Conclusion

Our results indicate that a high level of student participation in activities can be facilitated by polling, on-screen activities on a shared whiteboard and text-chat in online synchronous tuition. Especially encouraging was the finding that most students attempted the activities most of the time, even if this was not evident to the tutor through the software. Students also perceived the activities as both enjoyable and aiding their learning, though text-chat received slightly less favourable reports. Students perceived that activities enabled them to apply their knowledge to similar questions, but there is concern that the current online environment is not conducive to correcting misconceptions and adapting to individuals.

Alongside this success in incorporating activities, there remains significant scope for further improvement, with the aim of further encouraging active learning and thereby deeper learning. Improved technical and pedagogic tools are required, particularly for adapting to individuals and providing essential instant feedback in an online environment. More research is needed into activity design using available online tools, as well as improved software design with pedagogic needs at the core. The impact on tutors in terms of development needs must also not be underestimated, nor must the significant technical issues that were apparent.

The results from this study, in particular the high participation and enjoyment rates, should encourage tutors to include more activities in online tutorials (especially using the polling and shared whiteboard tools), moving away from a didactic approach to one which promotes greater student participation and active learning. The importance of anonymity to participation also emerged strongly, and some techniques may be adaptable to the anonymous use of technology in a face-to-face environment.

Ethics approval

HREC/2744/Rogers. Permission obtained from the OU’s Student Research Project Panel, and individual student consent obtained.

Acknowledgments

The authors thank their eSTEeM mentor, Diane Butler, for invaluable support and discussions, as well as the tutors and students for their contributions.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Katrine Sharp Rogers

Katrine Sharp Rogers is a Lecturer in the School of Mathematics and Statistics at the Open University. She leads a distance-learning final-year module with over 150 students, as well as producing teaching and assessment material for new and existing distance-learning modules in mathematics and physics. Her research interests are in methods for teaching mathematics at a distance, as well as within her field of applied mathematics.

Claudi Thomas

Claudi Thomas is a Senior Lecturer in the School of Mathematics and Statistics at the Open University. She is particularly interested in the most effective ways in which tutors can support students at a distance, whether this be during synchronous online sessions or by asynchronous means.

Hilary Holmes

Hilary Holmes is a Senior Lecturer in the School of Mathematics and Statistics at the Open University. Her main interests are online learning of mathematics, improving support for disabled students and widening participation in mathematics.

References

- Beyth-Marom, R., Saporta, K., & Caspi, A. (2005). Synchronous vs. asynchronous tutorials. Journal of Research on Technology in Education, 37(3), 245–262. https://doi.org/10.1080/15391523.2005.10782436

- Biggs, J., & Tang, C. (2007). Teaching for quality learning at university: What the student does (3rd ed.). Open University Press.

- Braun, V., & Clarke, V. (2013). Successful qualitative research: A practical guide for beginners. Sage Publications Ltd.

- Butler, D., Cook, L., Haley-Mirnar, V., Halliwell, C., & MacBrayne, L. (2018). Achieving student centred facilitation in online synchronous tutorials. In 10th EDEN Research Workshop (pp. 76–82). [online]. Barcelona. http://www.eden-online.org/wp-content/uploads/2018/11/RW10_2018_Barcelona_Proceedings.pdf

- Campbell, A., Gallen, A.-M., Jones, M. H., & Walshe, A. (2018). The perceptions of STEM tutors on the role of tutorials in distance learning. Open Learning: The Journal of Open, Distance and e-Learning, 34(1), 1–14. https://doi.org/10.1080/02680513.2018.1544488

- Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., & Wenderoth, M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences, 111(23), 8410–8415. https://doi.org/10.1073/pnas.1319030111

- Hampel, R. (2006). Rethinking task design for the digital age: A framework for language teaching and learning in a synchronous online environment. ReCALL, 18(1), 105. https://doi.org/10.1017/S0958344006000711

- Kear, K., Chetwynd, F., Williams, J., & Donelan, H. (2012). Web conferencing for synchronous online tutorials: Perspectives of tutors using a new medium. Computers & Education, 58(3), 953–963. https://doi.org/10.1016/j.compedu.2011.10.015

- Koehler, M. J., Mishra, P., & Cain, W. (2013). What is technological pedagogical content knowledge (TPACK)? Journal of Education, 193(3), 13–19. https://doi.org/10.1177/002205741319300303

- Lowe, T., Mestel, B., & Williams, G. (2016). Perceptions of online tutorials for distance learning in mathematics and computing. Research in Learning Technology, 24(1), 30630. https://doi.org/10.3402/rlt.v24.30630

- Mason, J. H. (2002). Mathematics teaching practice: A guide for university and college lecturers. Horwood Publishing Limited.

- McBrien, J. L., Cheng, R., & Jones, P. (2009). Virtual spaces: Employing a synchronous online classroom to facilitate student engagement in online learning. The International Review of Research in Open and Distributed Learning, 10(3), 1–9. https://doi.org/10.19173/irrodl.v10i3.605

- Ng, K. C. (2007). Online technologies: Challenges and pedagogical. International Review of Research in Open and Distance Learning, 8(1), 1–15. https://doaj.org/article/b802d7657a77490690c3f6a2e530408b

- NVivo. (2015). QSR International Pty Ltd.

- Online surveys. (2018). Jisc.

- Ottenbreit-Leftwich, A. T., Glazewski, K. D., Newby, T. J., & Ertmer, P. A. (2010). Teacher value beliefs associated with using technology: Addressing professional and student needs. Computers & Education, 55(3), 1321–1335. https://doi.org/10.1016/j.compedu.2010.06.002

- Pan, C.-C., & Sullivan, M. (2005). Promoting synchronous interaction in an eLearning environment. T.H.E. Journal, 33(2), 27–30. https://thejournal.com/articles/2005/09/01/promoting-synchronous-interaction-in-an--elearning-environment.aspx

- Paoletti, T., Krupnik, V., Papadopoulos, D., Olsen, J., Fukawa-Connelly, T., & Weber, K. (2018). Teacher questioning and invitations to participate in advanced mathematics lectures. Educational Studies in Mathematics, 98(1), 1–17. https://doi.org/10.1007/s10649-018-9807-6

- Robinson, H., Phillips, A. S., Sheffield, A., & Moore, M. (2015). A rich environment for active learning (REAL). In J. Keengwe & J. J. Agamba (Eds.), Models for improving and optimizing online and blended learning in higher education (pp. 34–57). IGI Global. https://doi.org/10.4018/978-1-4666-6280-3.ch003

- Rogoza, C. (2008). The influence of epistemological beliefs on learners’ perceptions of online learning: Perspectives on three levels. In J. Visser & M. Visser-Valfrey (Eds.), Learners in a changing learning landscape (pp. 91–108). Springer. https://doi.org/10.1007/978-1-4020-8299-3_5

- Saw, K. G., Majid, O., Abdul Ghani, N., Atan, H., Idrus, R. M., Rahman, Z. A., & Tan, K. E. (2008). The videoconferencing learning environment: Technology, interaction and learning intersect. British Journal of Educational Technology, 39(3), 475–485. https://doi.org/10.1111/j.1467-8535.2007.00736.x

- Tanner, J. D., Bryant, R., Thomas, C. D., Utts, J., Kohlenbach, U., Hill, R. R., Lauter, K. E., Walker, M., Davis, R. A., Su, F. E., Goins, E., Staley, J., Larson, M., Cook, P., & Kinch, D. (2016). Active learning in post-secondary mathematics education. CBMS. https://www.cbmsweb.org/wp-content/uploads/2016/07/active_learning_statement.pdf

- Wang, S.-K., & Hsu, H.-Y. (2008). Use of the webinar tool (Elluminate) to support training: The effects of webinar-learning implementation from student-trainers’ perspective. Journal of Interactive Online Learning, 7(3), 175–194. http://www.ncolr.org/jiol/issues/pdf/7.3.2.pdf

- Williams, J. (2015). Mathematics education and the transition into higher education. In J. Williams, M. Grove, T. Croft, J. Kyle, & D. Lawson (Eds.), Transitions in undergraduate mathematics education (pp. 25–37). Higher Education Academy.