ABSTRACT

This study examined the influence of higher education teacher training on student physics teachers’ progression in content knowledge (CK). To become experts, student teachers must acquire both declarative and procedural-conditional CK. This progression is often characterized by the prerequisite role of declarative knowledge, meaning that declarative knowledge is assumed to influence procedural-conditional knowledge but not vice versa. Because physics is a complex domain, progression in both declarative and procedural-conditional CK requires special support. Such cognitive support is assumed to enhance student teachers’ CK development by reducing complexity and cognitive demand. To date, however, it has been unclear how cognitive support contributes to student physics teachers’ declarative and procedural-conditional CK development. To address this issue, we analyzed data from student physics teachers (N = 107) across one year of higher education teacher training. We used a cross-lagged model to examine the development of declarative and procedural-conditional knowledge under the influence of cognitive support. Our findings revealed that the development of student teachers’ declarative and procedural-conditional CK is intertwined. Interestingly, cognitive support influenced only the development of procedural-conditional CK. This implies that higher education teacher training should provide cognitive support to promote student teachers’ progression toward CK expertise.

Introduction

Expert knowledge can be characterized as the understanding of facts and relationships and the ability to apply knowledge in specific situations (Bransford, Brown, and Cocking 2000). Therefore, to become experts, teachers must not only know that something is so but also understand why (Shulman 1986). Accordingly, higher education teacher training involves supporting student teachers in developing declarative and procedural-conditional knowledge.

Many studies on learning in higher education teacher training have shown that the quantity of learning opportunities positively affects student teachers’ content knowledge (CK) (e.g. Großschedl et al. 2015; Kleickmann et al. 2013). Beyond quantity, the importance of teaching quality in supporting student teachers is well documented. In particular, coherent, clear, and well-structured presentations of content (i.e. cognitive support; Kleickmann, Steffensky, and Praetorius 2020) have been found to support the development of student teachers’ professional knowledge (Schiering et al. 2021). Although higher education teacher training programs thus support the development of professional knowledge, it is unclear how teacher training in particular supports student teachers’ progression toward declarative and procedural-conditional knowledge, and what role cognitive support plays in this process. The aim of this study was therefore to produce insights into (1) student teachers’ knowledge progression during higher education teacher training and (2) the influence of cognitive support on that progression.

Theoretical background

Experts’ knowledge

Developing students’ expertise in a domain is a main goal of higher education (Zlatkin-Troitschanskaia et al. 2017). Expertise rests on a highly elaborate and structured knowledge base organized around big ideas (e.g. Bransford, Brown, and Cocking 2000; Shavelson, Ruiz-Primo, and Wiley 2005). From this point of view, novice knowledge consists mainly of facts (Ferguson-Hessler and de Jong 1990), whereas expert knowledge also includes schemes, heuristics, and mental models (Shavelson, Ruiz-Primo, and Wiley 2005). Having expert knowledge therefore means to know ‘ideas deeply, know how these ideas are connected and why they are important, and know when, where, and how to use this knowledge to accomplish a task’ (Brownell and Kloser 2015, 530).

The characteristics of expert knowledge presented by Brownell and Kloser (2015) are also reflected in Shavelson, Ruiz-Primo, and Wiley’s (2005) distinction of four kinds of knowledge: declarative, procedural, schematic, and strategic. Declarative knowledge (‘knowing that,’ see also Ryle 1949) comprises knowledge of facts and definitions, such as Newton’s second law of motion. Procedural knowledge (‘knowing how,’ see also Ryle 1949) consists of applicable knowledge, such as methods and how to use them, e.g. knowing how to measure the force acting on an accelerating body. Schematic knowledge (‘knowing why’) involves understanding how knowledge is applied and how to reason in specific situations, e.g. knowing why bodies of all masses accelerate downward at the same rate when air resistance is neglected. Lastly, strategic knowledge (‘knowing when, where, and how knowledge applies’) comprises an understanding of the ways in which knowledge can be applied as well as the ability to check whether these applications are reasonable, for instance, knowing that Newton’s second law of motion in the form F = ma can only be applied to an entire system of constant mass. As these different kinds of knowledge can be located on a continuum that ranges from low (declarative knowledge) to high (strategic knowledge) proficiency (Shavelson, Ruiz-Primo, and Wiley 2005), they can be used to differentiate between novices and experts. Novices, at one end of the continuum, are typically able to recall lists of facts and definitions but are restricted in their conceptual understanding (Bransford, Brown, and Cocking 2000). Experts, at the other end of the continuum, have elaborated knowledge that comprises declarative, procedural, schematic, and strategic knowledge (de Jong and Ferguson-Hessler 1996).

Progression in developing experts’ knowledge

Models of the development of the different kinds of knowledge differ, especially in how they delineate the interrelation between declarative and procedural knowledge in the progression (for an overview, see Sun, Merrill, and Peterson 2001). Most models emphasize the importance of declarative knowledge for the development of procedural knowledge (e.g. Anderson 1982). In this view, declarative knowledge is transformed (i.e. proceduralized) through continuous practice into a procedural form, which results in a gain of procedural knowledge (‘top-down learning,’ see Sun, Merrill, and Peterson 2001). However, Rittle-Johnson, Siegler, and Alibali (2001) outlined the opposite direction. From this point of view, using procedural knowledge to solve problems can help learners become aware of underlying key concepts, leading to a deeper understanding of principles (‘bottom-up learning,’ see Sun, Merrill, and Peterson 2001). For example, Sahdra and Thagard (2003) examined biologists’ knowledge and found that their procedural knowledge can develop without a priori declarative knowledge. In sum, knowledge development can proceed from declarative to procedural knowledge as well as the other way around; the development of declarative and procedural knowledge is therefore assumed to be interdependent.

Teachers as experts of teaching

Teachers are experts in teaching their subjects and must therefore understand the content they teach (see e.g. Shulman 1986). This understanding is commonly known as subject matter knowledge or CK. CK has been found to predict teachers’ actions in classrooms (e.g. Childs and McNicholl 2007) and to serve as a basis for developing pedagogical content knowledge (e.g. Rollnick et al. 2008). CK is therefore widely regarded as a key component of teachers’ professional knowledge (Nixon, Hill, and Luft 2017).

CK is commonly described as knowledge about ‘major facts and concepts within a field and the relationships among them’ (Grossman 1990, 6). To characterize this knowledge in more detail, teachers’ CK can be classified into different kinds of knowledge: declarative, procedural, and conditional (e.g. Jüttner et al. 2013; Kirschner et al. 2016; Sorge et al. 2019). Teachers’ declarative and procedural CK are conceptualized similarly to Shavelson, Ruiz-Primo, and Wiley (2005), whereas conditional CK comprises schematic and strategic knowledge. In line with recent research on competence measurement (Blömeke, Gustafsson, and Shavelson 2015), the assessment of these different kinds of CK can be located on a continuum. While most research has used paper-and-pencil tests to assess the cognitive characteristics of CK (see e.g. Kirschner et al. 2016; Sorge et al. 2019), Kulgemeyer et al. (2020) developed performance assessments to measure procedural knowledge. However, little is known about the extent to which teachers actually hold these different kinds of CK. Jüttner et al. (2013) showed that teachers were more likely to answer declarative items correctly than procedural or conditional items; however, it was not possible to differentiate between procedural and conditional items. Woitkowski (2020) reported that student teachers started their study program with only factual (i.e. declarative) CK and were able to extend it during their first year of study.

Overall, it seems appropriate to differentiate between declarative and procedural-conditional knowledge (i.e. factual and applicable knowledge, respectively) to describe teachers’ CK. While beginning student teachers are comparable to novices, as they rely only on declarative knowledge, they are expected to develop both declarative and procedural-conditional CK throughout their higher education teacher training.

Progression in becoming teaching experts

Student teachers’ CK is mainly developed during higher education teacher training (e.g. Jüttner et al. 2013; Kirschner et al. 2016; Kleickmann et al. 2013). Multiple studies have shown that the quantity of learning opportunities such as lectures and seminars (i.e. the number of courses taken) has a strong influence on student teachers’ CK (e.g. Großschedl et al. 2015; Sorge et al. 2019). However, the number of courses alone is not sufficient to explain teachers’ CK development. Garet et al. (2001) pointed out that various features of teaching affect teachers’ professional development, such as the coherence of the instructional guidance they receive. Indeed, the relevance of teaching quality for improving students’ outcomes has been stressed in many studies and meta-analyses (e.g. Schneider and Preckel 2017). Most recently, Kleickmann, Steffensky, and Praetorius (2020) emphasized cognitive support as a crucial dimension of teaching quality (see also Schiering et al. 2021). The cognitive support dimension comprises the reduction of complexity and cognitive demands ‘by means of structuring content and promoting clarity’ (Kleickmann, Steffensky, and Praetorius 2020, 38).

Because the structure of physics is complex and hierarchically organized (e.g. Koponen and Pehkonen 2010), learning seems to be particularly challenging within this domain (Mulhall and Gunstone 2012). One way to overcome learning barriers in physics is to purposefully provide cognitive support, ranging from support for the entire class to individualized support (Kleickmann, Steffensky, and Praetorius 2020). Support for the entire class addresses the clarity of learning goals, coherence among larger units, well-structured presentations of content, and adequate learning materials (Helaakoski and Viiri 2014; Seidel, Rimmele, and Prenzel 2005). This kind of support can foster self-determined learning motivation because well-structured learning opportunities are assumed to help students integrate teaching goals into their individual learning goals (Seidel, Rimmele, and Prenzel 2005). Individualized support adapts instruction to students’ individual learning, for instance through tailored explanations and analogies or through scaffolding methods and strategies (see e.g. Puntambekar and Hübscher 2005). Individualized support helps students overcome the limitations of working memory and facilitates the transfer of information into long-term memory (Kirschner, Sweller, and Clark 2006). For example, student physics teachers can fail at authentic physics problems if they lack strategies for handling rich information, because the resulting working memory load hampers learning. To cope with such situations, student teachers require adequate scaffolding, such as feedback on their problem-solving strategies (see e.g. Taconis, Ferguson-Hessler, and Broekkamp 2001). Cognitive support thus provides strategies that guide students through these challenging problems.
As solving challenging problems draws predominantly on students’ knowledge of methods and their application in specific situations, cognitive support can be assumed to result especially in a gain of procedural-conditional knowledge.

In sum, cognitive support promotes self-determined learning motivation and enables learners to actively engage in the challenging process of knowledge development. CK learning opportunities in higher education teacher training are therefore also likely to require cognitive support to promote student teachers’ CK development. In fact, research has indicated that cognitive support is a key factor influencing student physics teachers’ CK development during higher education teacher training (Schiering et al. 2021). It can therefore be assumed that cognitive support benefits student teachers’ progression from novice to expert by promoting their procedural-conditional CK development.

Research questions

Student teachers need both declarative and procedural-conditional CK to become expert teachers in their domains. Although cognitive support in learning opportunities is positively related to student teachers’ CK (Schiering et al. 2021), it remains unclear how cognitive support contributes to student teachers’ progression from novice to expert. To fill this gap, this study focused on the following two research questions:

How do student teachers progress in their CK development during higher education teacher training?

How does cognitive support promote student teachers’ development of declarative and procedural-conditional CK?

Methods

Research context

To address our research questions, we used data from a four-year longitudinal study (The Development of Professional Competence in Pre-Service Mathematics and Science Teacher Education, German acronym: KeiLa). Student physics teachers from German higher education teacher training programs were invited by local teacher educators to participate in the study.

Pre-service teacher preparation in Germany takes place at universities and emphasizes the development of teachers’ professional knowledge (Neumann et al. 2017). This stage includes academic studies of two subjects (e.g. physics), the corresponding subject education (e.g. physics education), and general education and psychology. Typically, student teachers complete a three-year bachelor’s program followed by a two-year master’s program. Learning opportunities for CK are mainly located in the bachelor’s program (see Neumann et al. 2017), which is therefore the stage of interest for answering the research questions.

Data basis

This study was conducted at 20 German universities from 2014 to 2017, spanning winter and summer semesters. At the onset of each winter semester, the participants completed a paper-and-pencil CK test, followed by an online survey at the end of the subsequent summer semester. The online survey included items on the cognitive support the participants had experienced in their current year of study. In addition, the participants were asked to specify the number of CK learning opportunities they had attended in their current year of study.

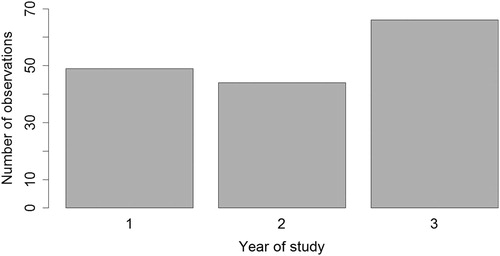

A total of 107 undergraduate student physics teachers (42% female) participated in the study. However, the number of participants varied across years. Longitudinal participation was therefore treated as multiple single pre-post cases: student teachers who participated, for example, at three measurement points (CK 1, CK 2, and CK 3) contributed two pre-post CK observations (CK 1 and CK 2; CK 2 and CK 3). Hence, our data consisted of 159 pretest CK observations, 76 posttest CK observations, and 130 ratings of cognitive support in CK learning opportunities. Figure 1 shows the number of observations per year.
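
The splitting of longitudinal participation into pre-post cases can be sketched as follows. This is a minimal illustration, not the study’s data-preparation code: the dictionary layout, the participant IDs, and the function name `to_pre_post_pairs` are hypothetical, and only adjacent measurement points are paired, as in the three-wave example.

```python
from typing import Dict, List, Tuple

# One pre-post case: (participant ID, pretest wave, pretest score, posttest score)
PrePostCase = Tuple[str, int, float, float]


def to_pre_post_pairs(waves: Dict[str, Dict[int, float]]) -> List[PrePostCase]:
    """Split each participant's CK scores into consecutive pre-post cases.

    `waves` maps a hypothetical participant ID to {measurement point: CK score}.
    A case is formed only when measurement point t + 1 was also observed.
    """
    cases: List[PrePostCase] = []
    for pid, scores in waves.items():
        for t in sorted(scores):
            if t + 1 in scores:
                cases.append((pid, t, scores[t], scores[t + 1]))
    return cases


# Hypothetical example: s1 took part at CK 1, CK 2, and CK 3; s3 only at CK 1
waves = {
    "s1": {1: 0.2, 2: 0.5, 3: 0.9},
    "s2": {2: -0.1, 3: 0.3},
    "s3": {1: 0.0},
}
for case in to_pre_post_pairs(waves):
    print(case)
```

Participant s1 contributes two cases (CK 1 and CK 2; CK 2 and CK 3), as in the example above. In the study, pretest observations without a posttest were retained and the missing posttest was handled by FIML; this sketch simply drops such participants (s3) and does not model that step.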

Instruments

Content knowledge

The participants’ CK was assessed using a paper-and-pencil test focusing on the cognitive characteristics of CK. The CK items were developed based on an instrument development framework that accounts for different kinds of knowledge (declarative and procedural-conditional knowledge). Prior analyses provided evidence of the substantive validity of the instrument (e.g. measurement invariance across teacher education, convergent validity; for details, see Sorge et al. 2019).

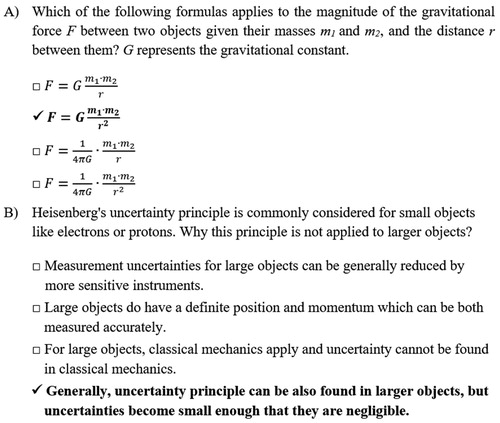

The final CK test consisted of 40 core items plus 20, 20, and 18 items specific to the first, second, and third years of study, respectively. Two independent raters coded all 98 items by kind of knowledge (i.e. declarative vs. procedural-conditional CK). Cohen’s kappa indicated good interrater reliability between the two raters (κ = .66). Both raters reached agreement on the items they had initially coded differently. Table 1 shows the final coding of CK items per year-specific booklet, and Figure 2 shows two sample multiple-choice items coded as declarative and procedural-conditional CK.
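
The interrater reliability coefficient can be illustrated with a minimal Cohen’s kappa computation, κ = (p_o − p_e)/(1 − p_e), where p_o is the observed proportion of agreement and p_e the agreement expected by chance from each rater’s marginal code frequencies. The item codes below are hypothetical and are not the study’s ratings.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters assigning one nominal code per item."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must code the same, non-empty set of items")
    # Observed proportion of agreement
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal code frequencies
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_expected) / (1.0 - p_expected)


# Hypothetical codes for ten items: "D" = declarative, "PC" = procedural-conditional
rater_1 = ["D", "D", "PC", "PC", "D", "PC", "D", "PC", "D", "D"]
rater_2 = ["D", "D", "PC", "D", "D", "PC", "D", "PC", "PC", "D"]
print(round(cohens_kappa(rater_1, rater_2), 3))  # → 0.583
```

Here the raters agree on 8 of 10 items (p_o = .80), but because both assign "D" to six items, chance agreement is already p_e = .52, which is why κ is noticeably lower than raw agreement.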

Figure 2. Sample multiple-choice CK items. A) shows a sample declarative CK item, B) shows a sample procedural-conditional CK item.

Table 1. Number of CK items per year-specific booklet and kind of knowledge.

Cognitive support

Items for cognitive support were adapted from existing instruments for the evaluation of higher education learning opportunities (Gollwitzer, Kranz, and Vogel 2006; Marsh 1982; Rindermann and Amelang 1994). Our survey comprised four Likert-type items with good reliability (Cronbach’s α = .87). Two items covered individualized support (e.g. ‘The lecturers’ explanations were clear.’), and two items focused on support for the entire class (e.g. ‘The learning goals were transparent and clear.’).
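
For readers less familiar with the reliability coefficient reported above, the following minimal sketch computes Cronbach’s α = k/(k − 1) · (1 − Σσᵢ²/σ²_total) for k items, where σᵢ² are the item variances and σ²_total is the variance of the sum score. The ratings are hypothetical, not the study’s data.

```python
import numpy as np


def cronbach_alpha(ratings: np.ndarray) -> float:
    """Cronbach's alpha for a respondents x items matrix of scale ratings."""
    x = np.asarray(ratings, dtype=float)
    n_items = x.shape[1]
    if n_items < 2:
        raise ValueError("alpha requires at least two items")
    sum_item_var = x.var(axis=0, ddof=1).sum()  # sum of the item variances
    total_var = x.sum(axis=1).var(ddof=1)  # variance of the sum score
    return n_items / (n_items - 1) * (1.0 - sum_item_var / total_var)


# Hypothetical four-point ratings of four cognitive support items (rows = respondents)
ratings = np.array([
    [3, 3, 2, 3],
    [2, 2, 2, 1],
    [4, 3, 4, 4],
    [1, 2, 1, 2],
    [3, 4, 3, 3],
])
print(round(cronbach_alpha(ratings), 2))
```

Alpha rises when respondents who rate one item highly also rate the other items highly, because the covariances between items then inflate the variance of the sum score relative to the summed item variances.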

Data analyses

Participants’ declarative and procedural-conditional CK

We used the R package TAM (Robitzsch, Kiefer, and Wu 2020) to calibrate the participants’ CK test scores according to the Rasch model. To place declarative and procedural-conditional items on a common scale, we submitted the year-specific booklets to a concurrent calibration (von Davier and von Davier 2011) with a two-dimensional loading structure of items. After calibration, nine CK items (four declarative and five procedural-conditional items) showed insufficient fit, with infit mean-square values outside the range of 0.8 to 1.2 (Bond and Fox 2007), and were excluded. The remaining declarative and procedural-conditional items showed good EAP reliability (.86 and .85, respectively). Item difficulty did not differ significantly between declarative and procedural-conditional items (F(1, 94) = 1.52, p = .22).
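
The item-fit criterion can be illustrated for the dichotomous Rasch model, where the infit mean-square of item i is Σₙ(xₙᵢ − Pₙᵢ)² / Σₙ Pₙᵢ(1 − Pₙᵢ), with Pₙᵢ the model probability that person n solves item i. The sketch assumes that person abilities θ and item difficulties b have already been estimated (in the study, by the TAM calibration) and treats items from booklets a person did not take as missing. It is a simplified, unidimensional illustration, not TAM’s implementation; the function names are hypothetical.

```python
import numpy as np


def rasch_probability(theta: np.ndarray, b: np.ndarray) -> np.ndarray:
    """P(correct) under the dichotomous Rasch model for every person-item pair."""
    return 1.0 / (1.0 + np.exp(-(theta[:, None] - b[None, :])))


def infit_mnsq(responses: np.ndarray, theta: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Information-weighted (infit) mean-square per item.

    `responses` is persons x items with 1/0, and NaN for items a person never saw
    (e.g. items from another year-specific booklet).
    """
    p = rasch_probability(theta, b)
    observed = ~np.isnan(responses)
    x = np.where(observed, responses, 0.0)
    squared_residuals = np.where(observed, (x - p) ** 2, 0.0)
    information = np.where(observed, p * (1.0 - p), 0.0)  # binomial variance P(1 - P)
    return squared_residuals.sum(axis=0) / information.sum(axis=0)


def misfitting_items(infit: np.ndarray, lower: float = 0.8, upper: float = 1.2) -> np.ndarray:
    """Indices of items whose infit lies outside [lower, upper]."""
    return np.flatnonzero((infit < lower) | (infit > upper))


# Hypothetical estimates for four persons and three items; NaN = item not administered
theta = np.array([-1.0, 0.0, 1.0, 2.0])
b = np.array([-0.5, 0.0, 1.0])
responses = np.array([
    [1.0, 0.0, np.nan],
    [1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0],
    [1.0, np.nan, 1.0],
])
fit = infit_mnsq(responses, theta, b)
print(fit, misfitting_items(fit))
```

Values near 1 indicate that responses vary about as much as the Rasch model predicts; values below 0.8 suggest overly predictable (redundant) items, and values above 1.2 suggest noisy items.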

Longitudinal analyses

We aimed to examine the student teachers’ development of declarative and procedural-conditional CK during one year of study. We therefore applied a bivariate cross-lagged model using the R package lavaan (Rosseel 2012). We added the participants’ ratings of cognitive support and the number of attended CK learning opportunities as covariates to the cross-lagged model. In addition, we adjusted for year of study and gender, as the literature has reported that male student physics teachers on average have higher CK than female student physics teachers (e.g. Woitkowski 2020). Because the model was estimated with maximum likelihood, we assessed the multivariate normality of the outcome variables using univariate skew, univariate kurtosis, and multivariate kurtosis (Finney and DiStefano 2006). The univariate skewness and kurtosis of all four outcome variables were considered acceptable (ranging between 0.01 and 1.89; see Finney and DiStefano 2006). Mardia’s (1970) test of multivariate kurtosis did not indicate a significant deviation from multivariate normality (b2,k = 24.5, p = .72). The outcome variables were therefore within an acceptable range of non-normality.
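
Mardia’s multivariate kurtosis is the mean of the squared Mahalanobis distances raised to the second power, b2,k = (1/n) Σᵢ dᵢ⁴. Under multivariate normality its expected value is k(k + 2), which for the four outcome variables is 4 · 6 = 24, so a value of 24.5 is close to what normal data would produce. The sketch below uses the common asymptotic z test; software packages differ in the exact variant and small-sample corrections, so it is not guaranteed to reproduce the reported p value. The data are simulated and hypothetical.

```python
import math
from typing import Tuple

import numpy as np


def mardia_kurtosis(data: np.ndarray) -> Tuple[float, float, float]:
    """Mardia's multivariate kurtosis b2,k with its asymptotic z test.

    `data` is n cases x k variables without missing values.
    Returns (b2k, z, two-sided p value).
    """
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    centered = x - x.mean(axis=0)
    cov_ml = centered.T @ centered / n  # ML covariance (divisor n)
    # Squared Mahalanobis distance of every case from the centroid
    d2 = np.einsum("ij,jk,ik->i", centered, np.linalg.inv(cov_ml), centered)
    b2k = float(np.mean(d2 ** 2))
    expected = k * (k + 2)  # value of b2,k expected under multivariate normality
    z = (b2k - expected) / math.sqrt(8 * k * (k + 2) / n)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided standard normal p value
    return b2k, z, p_value


rng = np.random.default_rng(seed=1)
simulated = rng.normal(size=(500, 4))  # four hypothetical outcome variables
b2k, z, p = mardia_kurtosis(simulated)
print(f"b2,k = {b2k:.2f}, z = {z:.2f}, p = {p:.2f}")
```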

Missing data

For the cross-lagged model, we used the full information maximum likelihood (FIML) estimation implemented in lavaan to handle missing data in posttest CK observations and ratings of cognitive support. Following Graham (2003), we included auxiliary variables (participants’ self-concept and interest in physics, cognitive ability, last physics school grade, high school GPA, preparation for teaching in the academic track, study satisfaction, and academic self-efficacy) in the FIML procedure as saturated correlates.
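
The principle behind FIML can be sketched in a few lines: given model-implied means and covariances, each case contributes the multivariate normal log-likelihood of only the variables observed for that case, so incomplete cases are retained rather than deleted. An estimator such as lavaan maximizes this quantity over the model parameters; the sketch below only evaluates it for fixed, hypothetical values. It omits the auxiliary variables, which in Graham’s saturated-correlates approach would enter as additional variables correlated with the model variables. The function names are illustrative.

```python
import numpy as np


def mvn_logpdf(x: np.ndarray, mean: np.ndarray, cov: np.ndarray) -> float:
    """Log density of a multivariate normal distribution."""
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    mahalanobis = diff @ np.linalg.solve(cov, diff)
    return float(-0.5 * (x.size * np.log(2 * np.pi) + logdet + mahalanobis))


def fiml_loglik(data: np.ndarray, mean: np.ndarray, cov: np.ndarray) -> float:
    """FIML log-likelihood: each case contributes via its observed variables only."""
    total = 0.0
    for row in np.asarray(data, dtype=float):
        observed = ~np.isnan(row)
        if not observed.any():
            continue  # a case with no observed values carries no information
        # Select the observed entries of the mean vector and covariance matrix
        total += mvn_logpdf(row[observed], mean[observed], cov[np.ix_(observed, observed)])
    return total


# Hypothetical cases: (pretest CK, posttest CK, cognitive support); NaN = missing
data = np.array([
    [0.1, 0.4, 2.5],
    [-0.3, np.nan, 3.0],
    [0.5, 0.9, np.nan],
])
mean = np.array([0.0, 0.5, 2.7])
cov = np.array([
    [1.0, 0.6, 0.1],
    [0.6, 1.0, 0.2],
    [0.1, 0.2, 0.5],
])
print(fiml_loglik(data, mean, cov))
```

Because the second case still contributes its pretest score and its rating of cognitive support, the covariances of these variables with posttest CK inform the estimates even though its posttest is missing; this is also why correlated auxiliary variables can improve FIML estimates.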

Results

Descriptive results

Table 2 presents the descriptive results for the study variables. To examine the relation between cognitive support and CK development, the students’ ratings of cognitive support should not depend on their pretest CK. The participants’ ratings of cognitive support did not correlate significantly with either their pretest declarative CK (r = -.08, p = .37) or their pretest procedural-conditional CK (r = -.06, p = .50). It can therefore be assumed that the student teachers’ ratings of cognitive support were largely unrelated to their initial knowledge. Furthermore, the student teachers rated the cognitive support in learning opportunities as moderately high (M = 2.73 on a four-point Likert-type scale). Interestingly, cognitive support correlated significantly with posttest procedural-conditional CK (r = .27, p = .022), which may reflect an influence of cognitive support on the student teachers’ development of procedural-conditional CK.

Table 2. Descriptive statistics and correlations of study variables.

The development of declarative and procedural-conditional CK

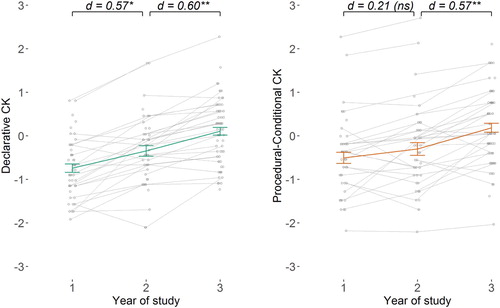

To answer the first research question, we examined the participants’ CK development. Figure 3 shows the progression of participants’ declarative and procedural-conditional CK. For both kinds of knowledge, CK increased overall with year of enrollment (declarative CK: F(2, 156) = 20.20, p < .001, η² = 0.21; procedural-conditional CK: F(2, 156) = 10.03, p < .001, η² = 0.11). However, the two kinds of CK did not increase in the same way. Tukey’s post hoc tests detected a significant increase in declarative CK from the first to the second and from the second to the third year of study, whereas procedural-conditional CK increased only from the second to the third year of study. This pattern suggests that student teachers first develop declarative CK and acquire procedural-conditional CK during the second half of their bachelor’s program.
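
As a consistency check on these ANOVA results, η² for a one-way design can be recovered from the F statistic via η² = F·df₁/(F·df₁ + df₂). Assuming three year-of-study groups (df₁ = 2) and the 159 pretest observations (df₂ = 159 − 3 = 156), the reported effect sizes follow; this reading of the degrees of freedom is our assumption, not a statement from the original analysis.

```python
def eta_squared_from_f(f_value: float, df_between: int, df_within: int) -> float:
    """Eta squared of a one-way ANOVA recovered from its F statistic and dfs."""
    effect = f_value * df_between
    return effect / (effect + df_within)


# Reported F values; df_between = 3 groups - 1 = 2, df_within = 159 - 3 = 156
for label, f_value in [("declarative", 20.20), ("procedural-conditional", 10.03)]:
    print(label, round(eta_squared_from_f(f_value, 2, 156), 2))
```

The formula works because F·df₁ and df₂ are proportional to the between-group and within-group sums of squares, so their ratio reproduces SS_between / SS_total.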

Promoting the development from declarative to procedural-conditional CK

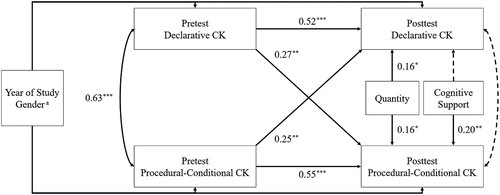

To answer the second research question, we computed a cross-lagged model that incorporated pre- and posttest CK, participants’ year of study, gender, quantity of learning opportunities, and cognitive support in learning opportunities.
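
Written as regression equations, a bivariate cross-lagged model of this kind can be sketched as follows, with DEC and PC denoting declarative and procedural-conditional CK, CS the rating of cognitive support, and LO the number of attended CK learning opportunities. The notation is ours, and the sketch assumes that all covariates predict both posttest scores and that the two residuals may covary; paths to the pretest scores and the auxiliary variables are omitted.

$$
\begin{aligned}
\mathrm{DEC}_{\mathrm{post}} &= \alpha_{1} + \beta_{11}\,\mathrm{DEC}_{\mathrm{pre}} + \beta_{12}\,\mathrm{PC}_{\mathrm{pre}} + \gamma_{11}\,\mathrm{CS} + \gamma_{12}\,\mathrm{LO} + \gamma_{13}\,\mathrm{YEAR} + \gamma_{14}\,\mathrm{GENDER} + \varepsilon_{1}\\
\mathrm{PC}_{\mathrm{post}} &= \alpha_{2} + \beta_{21}\,\mathrm{DEC}_{\mathrm{pre}} + \beta_{22}\,\mathrm{PC}_{\mathrm{pre}} + \gamma_{21}\,\mathrm{CS} + \gamma_{22}\,\mathrm{LO} + \gamma_{23}\,\mathrm{YEAR} + \gamma_{24}\,\mathrm{GENDER} + \varepsilon_{2}\\
\operatorname{Cov}(\varepsilon_{1}, \varepsilon_{2}) &= \psi_{12}
\end{aligned}
$$

Here β₁₁ and β₂₂ are the autoregressive (stability) paths, β₁₂ and β₂₁ the cross-lagged paths, and γ₁₁ and γ₂₁ the effects of cognitive support on declarative and procedural-conditional posttest CK, respectively.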

The model shown in Figure 4 fit the data well (χ²(8) = 8.00, p = .434; CFI = 1.000, RMSEA = .000, SRMR = .034). All standardized regression coefficients are displayed in the electronic appendix. Pretest CK was highly predictive of the corresponding posttest CK. Moreover, both cross-lagged effects, from pretest procedural-conditional CK to posttest declarative CK and vice versa, were significant. Interestingly, the quantity of learning opportunities contributed to the development of both declarative (β = 0.16, p = .014) and procedural-conditional CK (β = 0.16, p = .028), whereas cognitive support had a significant effect only on participants’ procedural-conditional CK development (β = 0.20, p = .001). These results suggest that student teachers’ development of procedural-conditional CK was promoted especially through cognitive support in learning opportunities.
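
The reported CFI of 1.000 and RMSEA of .000 follow directly from χ² equalling its degrees of freedom: both indices are based on the noncentrality estimate max(χ² − df, 0), which is zero here. A minimal sketch of the standard formulas follows; the sample size and the baseline-model χ² passed in are hypothetical, since neither is reported in this section.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation (N - 1 convention).

    Some programs divide by N instead of N - 1; for chi2 <= df both give 0.
    """
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2: float, df: int, chi2_null: float, df_null: int) -> float:
    """Comparative fit index relative to a baseline (null) model."""
    misfit_model = max(chi2 - df, 0.0)
    misfit_null = max(chi2_null - df_null, misfit_model)
    return 1.0 if misfit_null == 0.0 else 1.0 - misfit_model / misfit_null


# chi2(8) = 8.00 as reported; n and the baseline values are hypothetical
print(rmsea(8.00, 8, n=159))  # → 0.0
print(cfi(8.00, 8, chi2_null=150.0, df_null=21))  # → 1.0
```

Because both indices truncate the noncentrality estimate at zero, any model with χ² ≤ df receives perfect values on them; SRMR, which is based on residual correlations instead, is therefore informative alongside them.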

Figure 4. Cross-lagged model used to analyze student teachers’ development of declarative and procedural-conditional CK. All coefficients are standardized. Dashed lines indicate non-significant path coefficients. Auxiliary variables and correlations are not displayed. ᵃGender: 0 = female, 1 = male. ***p < .001; **p < .01; *p < .05.

Discussion

In this study, we investigated student physics teachers’ progression from novice to expert during higher education teacher training. Our data suggest that the development of procedural-conditional CK mainly occurred during the second year of study. During the first year of study, the participants gained only declarative knowledge, although learning opportunities in higher education teacher training do not focus exclusively on factual knowledge (see Kultusministerkonferenz 2019). This result might be related to the essential role of declarative knowledge in student teachers’ CK development: as declarative and procedural-conditional CK can be located on a continuum of proficiency, declarative CK could be considered a prerequisite for the development of procedural-conditional CK. During the second half of the bachelor’s program, however, student teachers additionally developed procedural-conditional knowledge. This result is in line with previous studies stressing the influence of higher education teacher training, which identified years of study as an important predictor of student teachers’ CK (e.g. Großschedl et al. 2015; Sorge et al. 2019).

Regarding student teachers’ progression toward expertise in CK, our cross-lagged model revealed that the development of declarative and procedural-conditional CK was intertwined. Because initial declarative CK predicted procedural-conditional CK and vice versa, student teachers’ CK progression appears to rely on both top-down and bottom-up processes (Sun, Merrill, and Peterson 2001). Declarative CK thus contributed to the development of applicable knowledge. In turn, the student teachers’ procedural-conditional CK might have helped make new principles and concepts accessible (see e.g. Sahdra and Thagard 2003). Taking both top-down and bottom-up processes into account, Rittle-Johnson, Siegler, and Alibali (2001, 347) argued that ‘[i]ncreases in one type of knowledge lead to gains in the other type of knowledge, which in turn lead to further increases in the first.’ However, this iterative process presumably needs to rest on a foundation. During their first year of study, student teachers develop this essential set of rules, examples, and principles (i.e. declarative knowledge) that enables them to develop their CK iteratively (see Sun, Merrill, and Peterson 2001). Overall, our findings indicate that this iterative process drives student physics teachers’ CK progression.

Our study identified cognitive support in learning opportunities as a promising factor for promoting this iterative progression. While the quantity of learning opportunities influenced both declarative and procedural-conditional CK development, cognitive support promoted only the development of procedural-conditional CK. One possible explanation is that higher education learning opportunities as such (i.e. their quantity) already contribute to student teachers’ factual knowledge, whereas developing applicable knowledge requires more than numerous learning opportunities. Our results therefore have important implications for higher education. To provide cognitive support, lecturers need to anticipate difficult topics in advance so that they can offer adequate guidance that builds on student teachers’ existing knowledge (i.e. individualized support). In addition, lecturers must promote clarity of learning goals and structure CK learning opportunities comprehensibly (i.e. support for the entire class). In this way, cognitive support may help prevent students from falling behind; however, future studies should investigate which aspects of cognitive support are relevant for learning in higher education.

Some limitations need to be considered with respect to our findings. First, our study was limited to the cognitive characteristics of procedural-conditional knowledge. Hence, our findings may not generalize to procedural-conditional knowledge understood as performance or skill (see e.g. Blömeke, Gustafsson, and Shavelson 2015). Second, our analyses did not differentiate between procedural and conditional knowledge. Accordingly, we were not able to examine whether higher education teacher training in general and cognitive support in particular affect procedural CK, conditional CK, or both. Further studies that establish a sharp distinction between these two kinds of CK are required to provide more insight into student teachers’ progression. Third, our sample covered different teacher education programs in Germany. While this permitted fairly generalizable conclusions for the national context, our findings need to be replicated in international studies to account for different higher education teacher training programs. Finally, the student teachers’ ratings of cognitive support reflected a global assessment of the CK learning opportunities attended in the current and preceding semester. We therefore lack detailed information on the influence of cognitive support in specific learning opportunities. Hence, our results provide initial insights into cognitive support in higher education teacher training but must be interpreted with caution.

Conclusion

Our study offers a new perspective on cognitive support and its impact on student teachers’ CK development. We found that higher education teacher training did indeed result in the development of student teachers’ declarative and procedural-conditional CK. Moreover, our results indicate that, to promote this progression, learning opportunities need to provide cognitive support by structuring content (e.g. coherent presentation of content in instructional materials) and promoting clarity (e.g. explaining and highlighting main ideas). In this manner, higher education teacher training and its learning opportunities can foster student teachers’ progression from novice to expert, which may ultimately improve teachers’ future actions in classrooms and, finally, student learning in physics.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Anderson, J. R. 1982. “Acquisition of Cognitive Skill.” Psychological Review 89 (4): 369–406.

- Blömeke, S., J.-E. Gustafsson, and R. J. Shavelson. 2015. “Beyond Dichotomies: Competence Viewed as a Continuum.” Zeitschrift für Psychologie 223 (1): 3–13. doi:10.1027/2151-2604/a000194.

- Bond, T. G., and C. M. Fox. 2007. Applying the Rasch Model: Fundamental Measurement in the Human Sciences. 2nd ed. Mahwah, NJ: Lawrence Erlbaum.

- Bransford, J. D., A. L. Brown, and R. R. Cocking. 2000. How People Learn: Brain, Mind, Experience, and School: Expanded Edition. Washington, DC: National Academies Press.

- Brownell, S. E., and M. J. Kloser. 2015. “Toward a Conceptual Framework for Measuring the Effectiveness of Course-Based Undergraduate Research Experiences in Undergraduate Biology.” Studies in Higher Education 40 (3): 525–44. doi:10.1080/03075079.2015.1004234.

- Childs, A., and J. McNicholl. 2007. “Science Teachers Teaching Outside of Subject Specialism: Challenges, Strategies Adopted and Implications for Initial Teacher Education.” Teacher Development 11 (1): 1–20. https://doi.org/10.1080/13664530701194538.

- de Jong, T., and M. G. M. Ferguson-Hessler. 1996. “Types and Qualities of Knowledge.” Educational Psychologist 31 (2): 105–13. https://doi.org/10.1207/s15326985ep3102_2.

- Ferguson-Hessler, M. G. M., and T. de Jong. 1990. “Studying Physics Texts: Differences in Study Processes Between Good and Poor Performers.” Cognition and Instruction 7 (1): 41–54.

- Finney, S. J., and C. DiStefano. 2006. “Non-normal and Categorical Data in Structural Equation Modeling.” In Quantitative Methods in Education and the Behavioral Sciences. Structural Equation Modeling: A Second Course, edited by G. R. Hancock and R. O. Mueller, 269–314. Charlotte, NC: IAP Information Age Publishing.

- Garet, M. S., A. C. Porter, L. Desimone, B. F. Birman, and K. S. Yoon. 2001. “What Makes Professional Development Effective? Results from a National Sample of Teachers.” American Educational Research Journal 38 (4): 915–45.

- Gollwitzer, M., D. Kranz, and E. Vogel. 2006. “Die Validität studentischer Lehrveranstaltungsevaluationen und ihre Nützlichkeit für die Verbesserung der Hochschullehre: Neuere Befunde zu den Gütekriterien des Trierer Inventars zur Lehrevaluation (TRIL).” [The Validity of Students’ Ratings and Their Utility for Enhancing Teaching Quality: Recent Results on the Psychometric Properties of the Trier Inventory for Teaching Evaluation.] In Didaktik und Evaluation in der Psychologie, edited by G. Krampen and H. Zayer, 90–104. Göttingen: Hogrefe.

- Graham, J. W. 2003. “Adding Missing-Data-Relevant Variables to FIML-Based Structural Equation Models.” Structural Equation Modeling: A Multidisciplinary Journal 10 (1): 80–100. https://doi.org/10.1207/S15328007SEM1001_4.

- Großschedl, J., U. Harms, T. Kleickmann, and I. Glowinski. 2015. “Preservice Biology Teachers’ Professional Knowledge: Structure and Learning Opportunities.” Journal of Science Teacher Education 26 (3): 291–318. https://doi.org/10.1007/s10972-015-9423-6.

- Grossman, P. L. 1990. The Making of a Teacher: Teacher Knowledge and Teacher Education. New York: Teachers College Press.

- Helaakoski, J., and J. Viiri. 2014. “Content and Content Structure of Physics Lessons and Students’ Learning Gains: Comparing Finland, Germany and Switzerland.” In Quality of Instruction in Physics: Comparing Finland, Switzerland and Germany, edited by H. E. Fischer, P. Labudde, K. Neumann, and J. Viiri, 93–110. 1st ed. Münster, New York: Waxmann.

- Jüttner, M., W. Boone, S. Park, and B. J. Neuhaus. 2013. “Development and Use of a Test Instrument to Measure Biology Teachers’ Content Knowledge (CK) and Pedagogical Content Knowledge (PCK).” Educational Assessment, Evaluation and Accountability 25 (1): 45–67. https://doi.org/10.1007/s11092-013-9157-y.

- Kirschner, S., A. Borowski, H. E. Fischer, J. Gess-Newsome, and C. von Aufschnaiter. 2016. “Developing and Evaluating a Paper-and-Pencil Test to Assess Components of Physics Teachers’ Pedagogical Content Knowledge.” International Journal of Science Education 38 (8): 1343–72.

- Kirschner, P. A., J. Sweller, and R. E. Clark. 2006. “Why Minimal Guidance During Instruction Does Not Work: An Analysis of the Failure of Constructivist, Discovery, Problem-Based, Experiential, and Inquiry-Based Teaching.” Educational Psychologist 41 (2): 75–86. https://doi.org/10.1207/s15326985ep4102_1.

- Kleickmann, T., D. Richter, M. Kunter, J. Elsner, M. Besser, S. Krauss, and J. Baumert. 2013. “Teachers’ Content Knowledge and Pedagogical Content Knowledge.” Journal of Teacher Education 64 (1): 90–106. https://doi.org/10.1177/0022487112460398.

- Kleickmann, T., M. Steffensky, and A.-K. Praetorius. 2020. “Quality of Teaching in Science Education: More Than Three Basic Dimensions?” In Empirische Forschung zu Unterrichtsqualität: Theoretische Grundfragen und quantitative Modellierungen, edited by A.-K. Praetorius, J. Grünkorn, and E. Klieme, 37–53. Zeitschrift für Pädagogik. Beiheft 66. Weinheim: Beltz Juventa.

- Koponen, I. T., and M. Pehkonen. 2010. “Coherent Knowledge Structures of Physics Represented as Concept Networks in Teacher Education.” Science & Education 19 (3): 259–82. https://doi.org/10.1007/s11191-009-9200-z.

- Kulgemeyer, C., A. Borowski, D. Buschhüter, P. Enkrott, M. Kempin, P. Reinhold, J. Riese, H. Schecker, J. Schröder, and C. Vogelsang. 2020. “Professional Knowledge Affects Action-Related Skills: The Development of Preservice Physics Teachers’ Explaining Skills During a Field Experience.” Journal of Research in Science Teaching 57 (10): 1554–82.

- Kultusministerkonferenz. 2019. Ländergemeinsame Inhaltliche Anforderungen für die Fachwissenschaften und Fachdidaktiken in der Lehrerbildung. (Beschluss der Kultusministerkonferenz vom 16.10.2008 i. d. F. vom 16.05.2019) [Common Content Requirements of the Federal States for Subject Studies and Subject Didactics in Teacher Education (Resolution of the Kultusministerkonferenz of 16 October 2008, as amended on 16 May 2019)]. Bonn: KMK.

- Mardia, K. V. 1970. “Measures of Multivariate Skewness and Kurtosis with Applications.” Biometrika 57 (3): 519–30. https://doi.org/10.2307/2334770.

- Marsh, H. W. 1982. “SEEQ: A Reliable, Valid, and Useful Instrument for Collecting Students’ Evaluations of University Teaching.” British Journal of Educational Psychology 52 (1): 77–95. https://doi.org/10.1111/j.2044-8279.1982.tb02505.x.

- Mulhall, P., and R. Gunstone. 2012. “Views About Learning Physics Held by Physics Teachers with Differing Approaches to Teaching Physics.” Journal of Science Teacher Education 23: 429–49.

- Neumann, K., H. Härtig, U. Harms, and I. Parchmann. 2017. “Science Teacher Preparation in Germany.” In Model Science Teacher Preparation Programs: An International Comparison of What Works, edited by J. E. Pedersen, T. Isozaki, and T. Hirano, 29–52. Charlotte, NC: IAP Information Age Publishing.

- Nixon, R. S., K. M. Hill, and J. A. Luft. 2017. “Secondary Science Teachers’ Subject Matter Knowledge Development Across the First 5 Years.” Journal of Science Teacher Education 28 (7): 574–89. https://doi.org/10.1080/1046560X.2017.1388086.

- Puntambekar, S., and R. Hübscher. 2005. “Tools for Scaffolding Students in a Complex Learning Environment: What Have We Gained and What Have We Missed?” Educational Psychologist 40 (1): 1–12. https://doi.org/10.1207/s15326985ep4001_1.

- Rindermann, H., and M. Amelang. 1994. Das Heidelberger Inventar zur Lehrveranstaltungs-Evaluation [The Heidelberg Inventory of Course Evaluation]. Heidelberg: Asanger.

- Rittle-Johnson, B., R. S. Siegler, and M. W. Alibali. 2001. “Developing Conceptual Understanding and Procedural Skill in Mathematics: An Iterative Process.” Journal of Educational Psychology 93 (2): 346–62. https://doi.org/10.1037/0022-0663.93.2.346.

- Robitzsch, A., T. Kiefer, and M. Wu. 2020. “TAM: Test Analysis Modules.” R package version 3.5-19. Accessed February 24, 2021. https://CRAN.R-project.org/package=TAM.

- Rollnick, M., J. Bennett, M. Rhemtula, N. Dharsey, and T. Ndlovu. 2008. “The Place of Subject Matter Knowledge in Pedagogical Content Knowledge: A Case Study of South African Teachers Teaching the Amount of Substance and Chemical Equilibrium.” International Journal of Science Education 30 (10): 1365–87. https://doi.org/10.1080/09500690802187025.

- Rosseel, Y. 2012. “lavaan: An R Package for Structural Equation Modeling.” Journal of Statistical Software 48 (2): 1–36. http://www.jstatsoft.org/v48/i02/.

- Ryle, G. 1949. The Concept of Mind. London: Hutchinson.

- Sahdra, B., and P. Thagard. 2003. “Procedural Knowledge in Molecular Biology.” Philosophical Psychology 16 (4): 477–98. https://doi.org/10.1080/0951508032000121788.

- Schiering, D., S. Sorge, S. Tröbst, and K. Neumann. 2021. Course Quality in Higher Education Teacher Training: What Matters for Pre-Service Physics Teachers’ Content Knowledge Development? [Manuscript submitted for publication].

- Schneider, M., and F. Preckel. 2017. “Variables Associated With Achievement in Higher Education: A Systematic Review of Meta-Analyses.” Psychological Bulletin 143 (6): 565–600. https://doi.org/10.1037/bul0000098.

- Seidel, T., R. Rimmele, and M. Prenzel. 2005. “Clarity and Coherence of Lesson Goals as a Scaffold for Student Learning.” Learning and Instruction 15 (6): 539–56. https://doi.org/10.1016/j.learninstruc.2005.08.004.

- Shavelson, R. J., M. A. Ruiz-Primo, and E. W. Wiley. 2005. “Windows Into the Mind.” Higher Education 49: 413–30.

- Shulman, L. S. 1986. “Those Who Understand: Knowledge Growth in Teaching.” Educational Researcher 15 (4): 4–14.

- Sorge, S., J. Kröger, S. Petersen, and K. Neumann. 2019. “Structure and Development of Pre-Service Physics Teachers’ Professional Knowledge.” International Journal of Science Education 41 (7): 862–89. https://doi.org/10.1080/09500693.2017.1346326.

- Sun, R., E. Merrill, and T. Peterson. 2001. “From Implicit Skills to Explicit Knowledge: A Bottom-up Model of Skill Learning.” Cognitive Science 25: 204–44.

- Taconis, R., M. G. M. Ferguson-Hessler, and H. Broekkamp. 2001. “Teaching Science Problem Solving: An Overview of Experimental Work.” Journal of Research in Science Teaching 38 (4): 442–68. https://doi.org/10.1002/tea.1013.

- von Davier, M., and A. A. von Davier. 2011. “A General Model for IRT Scale Linking and Scale Transformations.” In Statistical Models for Test Equating, Scaling, and Linking, edited by A. A. von Davier, 225–42. New York, NY: Springer.

- Woitkowski, D. 2020. “Tracing Physics Content Knowledge Gains Using Content Complexity Levels.” International Journal of Science Education 42 (2): 1585–1608.

- Zlatkin-Troitschanskaia, O., H. A. Pant, C. Lautenbach, D. Molerov, M. Toepper, and S. Brückner. 2017. “Competency Orientation in Higher Education.” In Modeling and Measuring Competencies in Higher Education: Approaches to Challenges in Higher Education Policy and Practice, edited by O. Zlatkin-Troitschanskaia, H. A. Pant, C. Lautenbach, D. Molerov, M. Toepper, and S. Brückner, 1–5. Wiesbaden: Springer VS.

Electronic Appendix

Table A1. Regression coefficients of the cross-lagged model.