ABSTRACT

The ongoing implementation of research-based learning (RBL) within the core curriculum of higher education has led to a growing number of students who conduct their own research projects during their studies. Although previous studies have indicated that research-based learning has positive outcomes for students, the causal link remains unclear, given these studies' focus on specific disciplines and their lack of pre-post designs and control groups. To fill this gap, our study examines how research-based learning, specifically students' independent conduct of the research process, affects their self-rated research competences, using a longitudinal research design across a wide range of disciplines. We used panel data from Germany (N = 520) and applied fixed-effects panel regression models, which exploit within-student variance, to estimate the treatment effect of research-based learning. The results yield two main findings. First, engagement in research-based learning significantly increases students’ self-rated research competences relating to reviewing the state of research, methodology, reflection on research findings, communication, and content knowledge. Second, the effectiveness of research-based learning differs between students enrolled in bachelor's and master's degree programs.

Introduction

Research-related teaching is a central pillar of academic education and can take different forms. One of the most sophisticated is research-based learning (RBL), in which students actively participate in a research process. RBL aims not only to promote students’ research competences but also to strengthen their general professional qualifications by teaching them key competences such as communication, presentation, and problem-solving skills (Wessels et al. 2021; Thiem, Preetz, and Haberstroh 2020b; Linn et al. 2015; Taraban and Logue 2012; Stanford et al. 2017). Accordingly, RBL is considered a ‘panacea for addressing a range of demands within higher education’ (Wessels et al. 2021, 2595). In many degree programs, learning through conducting research is described as an ‘undergraduate research experience’ (URE), which is, by definition, limited to undergraduate students. We use the term ‘research-based learning’ (Healey and Jenkins 2009) to describe an instructional format in which bachelor's and master's students conduct a research project covering the full research cycle (Mieg and Deicke 2022; Pedaste 2022; Wessels et al. 2021).

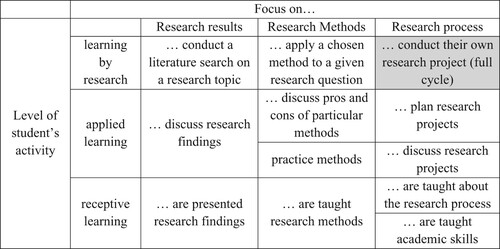

Healey and Jenkins (2009) systematized the possible ways of linking research and teaching along two axes. One axis of their model contrasts research content with research processes, and the other indicates whether students are treated as participants or as an audience. The two dimensions of the model yield the following linkages (Healey and Jenkins 2009):

- Research-led: students are the audience, and the focus is on research content;

- Research-tutored: students are participants, and the focus is on research content;

- Research-oriented: students are the audience, and the focus is on research processes and problems; and

- Research-based: students are participants, and the focus is on research processes and problems.

Despite its popularity in (under-)graduate research, this model neither specifies the exact nature of students’ involvement in research nor provides a clear definition of RBL (Wessels et al. 2021). Building on Healey and Jenkins (2009), Rueß, Gess, and Deicke (2016) proposed a more fine-grained classification of students’ involvement in research-related teaching that differentiates between the students’ activity level (receptive learning, applied learning, learning by research) and the thematic focus (research results, research methods, research process) (see Figure 1). The model was tested in a curriculum analysis across various disciplines, in which modules characterized by research-related teaching were identified and categorized according to these two dimensions (Rueß, Gess, and Deicke 2016; see also Wessels et al. 2021). This classification offers greater conceptual clarity and a theoretically derived, empirically tested re-definition of RBL. In this model, RBL is located in the cell ‘research process/learning by research’ (see Figure 1); it thus requires students to undertake their own research process. By pursuing their own research questions and inquiries, students become active producers of knowledge rather than mere consumers. A central element of RBL is the production of insights that are new not only to the student but also to the community as a whole. Herein lies a fundamental difference between this learning method and other activating learning strategies, such as inquiry-based learning (Lopatto 2009).

RBL has been implemented within many degree programs across various disciplines because students’ independent research projects are regarded as ‘high impact’ educational practices that significantly benefit them (Kilgo, Ezell Sheets, and Pascarella 2015). However, little is known about the actual effects of this approach (Linn et al. 2015). Given the extensive resources that RBL requires (e.g. time and support provided by lecturers), the question of its effectiveness is pertinent. A key question is whether the benefits associated with RBL are causal effects of this teaching method. This study addresses this question by applying a longitudinal, quasi-experimental research design across a multitude of disciplines. Specifically, we investigated whether RBL, in which students conduct an entire research process, affects the development of their self-rated research competences.

What is currently known about the effects of research-based learning?

Several benefits are associated with RBL, most of which can be categorized as research competences. For example, students report a better understanding of the research process as a whole, enhanced skills in interpreting results, a higher tolerance for obstacles, and increased proficiency in analyzing data (Lloyd, Shanks, and Lopatto 2019), as well as greater confidence in their research skills (Russell, Hancock, and McCullough 2007; Seymour et al. 2004). In addition, students show an enhanced ability to assess their own research (Taraban and Logue 2012; Kardash 2000), greater research knowledge (Wessels et al. 2021; Seymour et al. 2004), stronger research interest (Gess, Rueß, and Deicke 2014), and a deeper understanding of their research findings and of conducting research (Bauer and Bennett 2003). Other positive developments include a growing interest in the subject, which can foster a scientific career or interest in RBL in general (Ward, David Clarke, and Horton 2014; Seymour et al. 2004). Furthermore, studies have shown that RBL promotes key competences such as communication, problem-solving, and teamwork skills (Ward, David Clarke, and Horton 2014; Taraban and Logue 2012; Seymour et al. 2004; Bauer and Bennett 2003; Kardash 2000).

However, little is known about the causal effects of RBL on the acquisition of research competences, because the designs of most studies do not permit causal statements; these require experimental or at least quasi-experimental designs with pre-post measurements and a control group. Existing studies are based on qualitative interviews (Seymour et al. 2004), retrospective alumni assessments of undergraduate research experiences (Bauer and Bennett 2003), or self-reports (Lloyd, Shanks, and Lopatto 2019). Wessels et al. (2021) assessed research knowledge, but their study is limited to the social sciences. Most quantitative studies lack a pre-post design or a control group (Wessels et al. 2021), or both (Taraban and Logue 2012). The few existing studies with a quasi-experimental design are limited in scope because they are restricted to specific disciplines (Lloyd, Shanks, and Lopatto 2019; Ward, David Clarke, and Horton 2014). Moreover, some studies (e.g. Kardash 2000) may suffer from (self-)selection effects, as they focus on courses or programs in which students participate voluntarily or to which they even have to apply.

The current study

The aim of our study is to investigate the effects of research-based learning on several self-rated research competences. By developing their knowledge through RBL and integrating it into their individual knowledge structures, students acquire skills that extend beyond mere knowledge acquisition (Lopatto 2009). In light of these considerations, we expect that students who have completed an entire research project will rate their research competences higher than those who have not completed an entire research process.

Our study contributes to the literature in three ways: (1) it uses longitudinal panel data and methods, (2) it is set across a multitude of disciplines, and (3) it investigates differences between undergraduate and graduate students.

First, we used longitudinal panel data covering the students’ study life cycle to analyze their self-rated research competences before and after they experienced RBL. We also considered the research competences of students in a control group who did not experience RBL. Because previous studies are often based on qualitative or retrospective interviews or lack a pre-post design, a control group, or both, the causal effects of RBL remain unclear. The setting of our study, a German university, offers the necessary conditions for investigating the causal effects of RBL. Approximately 16,000 students at this university are enrolled in a wide range of disciplines, from the humanities and cultural studies to economics, law, the social sciences, mathematics, computer science, the natural sciences, and medicine. Educational formats grounded in RBL are a key curricular element in the university's degree programs, not just in its undergraduate programs. At this university, RBL refers to research projects conducted by students within particular courses or modules (i.e. independently of their bachelor's or master's theses). All steps of a research project should therefore be included, from defining a research question and gathering and analyzing data or material to evaluating and presenting the project's findings. This conceptualization of RBL is in line with that of Rueß, Gess, and Deicke (2016) (see also Wessels et al. 2021; Pedaste 2022).

At a general level, this definition adheres to the pragmatic theory of science, according to which the unity of science lies in the unity of academic research practice or of the criteria of scientific rationality (Butts 1991; Akademie der Wissenschaften zu Berlin 1991), as expressed in the theoretically and methodologically grounded research process. Thus, the common aim is to generate new knowledge based on empirical data. This approach allows RBL to be implemented in a domain-specific way, according to the scientific traditions of the particular domains.

Second, most previous studies have focused on students within specific disciplines, whereas our study adopted a cross-disciplinary approach. We operationalized research competences as self-rated competences with reference to the cross-disciplinary RMRC-K model formulated by Thiel and Böttcher (2014) and the R-Comp instrument derived from this model (Böttcher and Thiel 2016, 2018). Both the model and the instrument are based on a pragmatic theory of science (Butts 1991; Dewey 1938), and research competences are operationalized along the steps of the research process.

A cross-disciplinary model of research competences rests on features of modern research that hold independently of the different subject cultures. Such research entails a methodically reflective examination of a research topic, consideration of existing theories and findings on the topic, and documentation of the research process and results in a way that is comprehensible to the relevant research community (Thiel and Böttcher 2014).

From this definition, four basic dimensions of the research process can be identified and operationalized as concrete research competences (Böttcher and Thiel 2018; Thiel and Böttcher 2014):

- skills in reviewing the state of research (R),

- methodological competences (M),

- reflection skills (R), and

- communication skills (C)

In addition to these four dimensions of research competence, the model incorporates the dimension of content knowledge (K). This dimension was included because comprehensive knowledge of the central theoretical constructs, paradigms, methods, and standards of the respective subject is important for acquiring research competence (Thiel and Böttcher 2014, 120; Böttcher and Thiel 2018).

The concept of competence in the RMRC-K model is based on a functional and pragmatic view of competences (Klieme and Hartig 2008) and an understanding of competences as cognitive dispositions (Mayer 2003; Simonton 2003). Accordingly, students’ research competences have the following characteristics: they can be learned at university; they are helpful in the research process; they are cognitive performance dispositions that relate functionally to situations and requirements, for example, during research-oriented teaching or while working on the final thesis; and they pertain to the domain of science/research. Motivational and volitional aspects are explicitly excluded from this concept (Böttcher and Thiel 2016, 2018).

The R-Comp instrument relies on self-reports of research competences, which allows it to be applied to students of all disciplines. Self-assessments may, however, be biased by personality (John and Robins 1994) or social desirability (Ziegler and Buehner 2009). Students also tend to assess their competences relative to their immediate peers (Gess, Geiger, and Ziegler 2019). Self-reports therefore depend, among other things, on the abilities of the other students in the course, and biases may arise from the composition of the course (e.g. the big-fish-little-pond effect; Seaton, Marsh, and Craven 2010). In addition, inexperienced individuals tend to overestimate their competences, which can paradoxically lead to a decrease in self-rated competence over the course of an intervention (Kruger and Dunning 1999; for a discussion, see also Gess, Geiger, and Ziegler 2019). However, because no objective research competence test exists that can be used in the same way across all academic disciplines, we decided to use self-rated competences.

Third, our study extends the existing literature on research-based learning by considering differences between undergraduate and graduate (master's level) students. Previous studies have mainly focused on undergraduate research, so little is known about differences in the effectiveness of RBL between students enrolled in bachelor's and master's degree programs. The effectiveness of RBL could depend on students' level of expertise or competence (Böttcher and Thiel 2018; Simonton 2003; Mayer 2003). At the beginning of their degree programs, students are novices who may benefit most from RBL because they learn and experience the elements of a research process for the first time. As their studies progress, the effect of RBL on their research competences may decrease because, given the experience they have already gained, a further research project adds less to their competences. For example, their communication or presentation skills may not improve further once they have given a certain number of presentations.

Data and methods

Participants

We used longitudinal data from the project ‘Research-Based Learning in Focus Plus’, which aimed to evaluate the ongoing implementation of RBL in the university's degree programs across all disciplines. Starting in April 2018, we administered an online questionnaire-based survey to a cohort of students enrolled in bachelor's and master's degree programs to elicit their experiences with RBL. Over two years, the students were invited to answer follow-up questionnaires after every semester, resulting in five survey waves. The online surveys, which asked students to reflect on their participation in RBL during the previous semester, were conducted at the beginning of each new semester. For example, at the beginning of the summer semester, students were asked whether they had engaged in RBL during the preceding winter semester. We used this strategy to capture all kinds of RBL, especially activities that continued up to the end of the semester, such as writing or presenting final papers.

A total of 749 students participated in at least two waves and provided longitudinal information. To uncover the effects of RBL on students’ self-rated research competences, we applied a quasi-experimental research design that focuses on individuals’ within-variation by following students before and after they experienced RBL. The basic idea behind this approach is to analyze whether the occurrence of a treatment (research-based learning) leads to increasing or decreasing outcomes (self-rated research competences). To obtain a baseline measurement before RBL occurred, we analyzed data only from students who had not experienced RBL in the first panel wave, and we followed these students over time. After this restriction, our final sample comprised 520 students with 1,405 observations. The students came from a wide range of disciplines, including the social and natural sciences, the humanities and cultural studies, economics, engineering science, mathematics, computer science, sport science, and medicine; a total of 53 different study programs are covered in the sample. Most of the students (73.8%) were at the beginning of their studies in the first wave. Female students made up 73.4% of the sample, and nearly two-thirds (62.5%) of all students were enrolled in a bachelor's degree program. A total of 212 students (40.8%; 107 bachelor's and 105 master's students) experienced RBL in subsequent waves.
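The sample restriction described above (participation in at least two waves, no RBL at baseline) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the project's data-processing code: the record layout `(student_id, wave, rbl)` and the helper `build_analysis_sample` are hypothetical, and the baseline is assumed to be each student's first observed wave.

```python
from collections import defaultdict


def build_analysis_sample(records):
    """Return {student_id: [(wave, rbl), ...]} for a hypothetical FE analysis sample.

    Assumed record layout: (student_id, wave, rbl), where rbl = 1 if the student
    completed an entire research process in the previous semester.
    A student is kept if they (a) took part in at least two waves and
    (b) reported no RBL at their first observed wave (the baseline).
    """
    by_student = defaultdict(list)
    for student_id, wave, rbl in records:
        by_student[student_id].append((wave, rbl))

    sample = {}
    for student_id, observations in by_student.items():
        observations.sort()  # chronological order by wave number
        has_panel_info = len(observations) >= 2
        untreated_at_baseline = observations[0][1] == 0
        if has_panel_info and untreated_at_baseline:
            sample[student_id] = observations
    return sample


# Toy records (illustrative only, not study data).
records = [
    ("a", 1, 0), ("a", 2, 1),   # kept: untreated baseline, RBL later
    ("b", 1, 1), ("b", 2, 1),   # dropped: RBL already at baseline
    ("c", 1, 0),                # dropped: only one wave
    ("d", 1, 0), ("d", 3, 0),   # kept: never-treated control student
]
sample = build_analysis_sample(records)
n_students = len(sample)
n_observations = sum(len(obs) for obs in sample.values())
```

Students such as `d`, who never report RBL, stay in the sample as untreated controls; in an FE model they contribute mainly to estimating the general time trend.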

Data analysis

We used fixed-effects (FE) panel regression models to investigate the causal effects of research-based learning on students’ self-rated research competences (Allison 2009). Standard regression models yield biased estimates of causal effects when there are unobserved confounders, whereas FE panel regression yields unbiased estimates as long as these confounders are time-constant, because it uses only within-person variation (Brüderl and Ludwig 2014). FE models do not infer causal effects from comparisons between different persons but from within-person changes induced by a treatment event (Brüderl and Ludwig 2014; Allison 2009). The main advantage of this method is that, by focusing on within-person variation, it controls for individuals’ unobserved time-constant heterogeneity and thus prevents confounding arising from between-person variation. More specifically, instead of comparing students who did and did not experience RBL, we identified the effect of this learning method on students’ self-rated research competences from the differences between each individual's competences before and after they underwent RBL. Stable characteristics or heterogeneity between individuals, caused, for example, by sex or course characteristics, therefore no longer influence the estimated effect of RBL on self-rated research competences, and self-selection on such stable characteristics cannot bias the results. The advantage of using only within-person variation is illustrated by the transformation of a standard between-regression model into an FE panel regression model based on differences over time:

$$
y_{it} = \beta x_{it} + \gamma z_i + \alpha_i + \varepsilon_{it} \qquad (1)
$$

Here, $y_{it}$ is the dependent variable (self-rated research competences), which varies across individuals ($i$) and time points ($t$), and $x_{it}$ is a vector of an independent variable or treatment (research-based learning) that varies across individuals and over time. Conversely, $z_i$ is a vector of time-constant variables, and $\beta$ and $\gamma$ are vectors of coefficients. The two error terms are $\alpha_i$ and $\varepsilon_{it}$: $\alpha_i$ represents the unit-specific effects of all unobserved time-constant variables, and $\varepsilon_{it}$ represents random variation across individuals and time points that is statistically independent of the other variables (Feldhaus and Kreutz 2021; Brüderl and Ludwig 2014). In the less restrictive FE model, arbitrary correlations between $\alpha_i$ and $x_{it}$ and $z_i$ are allowed, which amounts to controlling for all time-invariant observed and unobserved variables. Given that this study focused on changes in the dependent measure $y_{it}$ (self-rated research competences) caused by changes in the time-variant independent measure $x_{it}$ (research-based learning), Equation (1) can be rewritten as Equation (2):

$$
\Delta y_{it} = \beta\,\Delta x_{it} + \Delta\varepsilon_{it}, \qquad \Delta y_{it} = y_{it} - \bar{y}_i,\quad \Delta x_{it} = x_{it} - \bar{x}_i,\quad \Delta\varepsilon_{it} = \varepsilon_{it} - \bar{\varepsilon}_i \qquad (2)
$$

By using the difference scores Δ, that is, deviations from each person's mean over time, all time-constant information ($\gamma z_i$ and $\alpha_i$) is netted out of the equation, and only within-person variation is considered. The FE approach therefore offers unbiased estimates of the effect of a time-varying predictor or treatment on a specific outcome that are not confounded by time-constant interindividual differences (Allison 2009).
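To make the within transformation concrete, the following pure-Python sketch estimates β by subtracting each person's mean from x and y and regressing the deviations on each other. It is a minimal illustration with simulated data and hypothetical function names, not the authors' estimation code: it handles a single regressor and omits the wave dummies and panel-robust standard errors used in the study. The simulated students with a higher time-constant trait α_i are more likely to be treated, so a naive pooled regression is biased while the within estimator recovers the true effect.

```python
from collections import defaultdict


def person_means(rows):
    """Mean of x and y for each person; rows are (person_id, x_it, y_it)."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for pid, x, y in rows:
        s = sums[pid]
        s[0] += x
        s[1] += y
        s[2] += 1
    return {pid: (sx / n, sy / n) for pid, (sx, sy, n) in sums.items()}


def within_slope(rows):
    """FE estimate of beta: regress (y_it - ybar_i) on (x_it - xbar_i)."""
    means = person_means(rows)
    num = den = 0.0
    for pid, x, y in rows:
        x_bar, y_bar = means[pid]
        num += (x - x_bar) * (y - y_bar)
        den += (x - x_bar) ** 2
    return num / den


def pooled_slope(rows):
    """Naive pooled OLS slope that mixes between- and within-person variation."""
    n = len(rows)
    x_bar = sum(x for _, x, _ in rows) / n
    y_bar = sum(y for _, _, y in rows) / n
    num = sum((x - x_bar) * (y - y_bar) for _, x, y in rows)
    den = sum((x - x_bar) ** 2 for _, x, _ in rows)
    return num / den


# Simulated data: y = alpha_i + 0.2 * x, with high-alpha students more likely
# to be treated (self-selection on a time-constant trait).
alpha = {"A": 3.0, "B": 2.0, "C": 3.5}
treatment = {"A": [0, 1], "B": [0, 0], "C": [0, 1, 1]}
rows = [(p, x, alpha[p] + 0.2 * x) for p in alpha for x in treatment[p]]

fe_estimate = within_slope(rows)        # recovers 0.2
pooled_estimate = pooled_slope(rows)    # about 0.91, inflated by alpha_i
```

Student `B` is never treated, so after demeaning their x is constant and they contribute nothing to the within estimate; this mirrors the point that FE identifies the effect only from changes within persons.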

It is essential to control for time in FE models in order to account for possible time trends, including those within the control group of students who do not experience a treatment (Brüderl and Ludwig 2014). In this study, it seems plausible that students’ self-rated research competences increased not only when they underwent RBL: competences such as content knowledge or communication skills may also have increased over the course of their studies as a result of more general experiences acquired in other classes. Without controlling for such time trends, the treatment effect could be overestimated. To uncover the causal effects of RBL, we accounted for these general time trends by including time dummies for every panel wave and by including the control group of untreated students who did not experience RBL. The time trend is thus estimated from all students, including the control group, and is netted out of the before-after comparison within the treatment group.
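Why the wave dummies matter can be shown with a small simulation. The sketch below assumes a *balanced* panel, in which including a dummy for every wave is equivalent to additionally sweeping out the wave means (two-way demeaning); the study's panel is unbalanced, so real models would include the dummies as regressors instead. All values and names are hypothetical: outcomes rise by 0.1 per wave for everyone, and treatment adds 0.2.

```python
def fe_treatment_effect(panel, wave_effects):
    """FE slope of y on a 0/1 treatment D in a *balanced* panel.

    panel: dict mapping student id -> list of (D_it, y_it), one tuple per wave.
    With wave_effects=True, wave means are swept out as well; in a balanced
    panel this is equivalent to including a dummy for every wave.
    """
    rows = list(panel.values())
    n_students, n_waves = len(rows), len(rows[0])

    def deviations(k):  # k = 0 -> treatment D, k = 1 -> outcome y
        person_mean = [sum(obs[k] for obs in r) / n_waves for r in rows]
        wave_mean = [sum(r[t][k] for r in rows) / n_students for t in range(n_waves)]
        grand_mean = sum(person_mean) / n_students
        devs = []
        for i, r in enumerate(rows):
            for t in range(n_waves):
                d = r[t][k] - person_mean[i]
                if wave_effects:
                    d -= wave_mean[t] - grand_mean
                devs.append(d)
        return devs

    d_dev, y_dev = deviations(0), deviations(1)
    return sum(a * b for a, b in zip(d_dev, y_dev)) / sum(a * a for a in d_dev)


TRUE_EFFECT, TREND_PER_WAVE = 0.2, 0.1         # simulated, not study estimates
alpha = {"s1": 3.0, "s2": 2.5, "s3": 2.0, "s4": 3.2}
treated = {"s1": [0, 0, 1], "s2": [0, 1, 1],  # treated in later waves
           "s3": [0, 0, 0], "s4": [0, 0, 0]}  # never-treated controls
panel = {
    s: [(d, alpha[s] + TREND_PER_WAVE * t + TRUE_EFFECT * d)
        for t, d in enumerate(treated[s])]
    for s in alpha
}

without_dummies = fe_treatment_effect(panel, wave_effects=False)  # 0.35: trend leaks in
with_dummies = fe_treatment_effect(panel, wave_effects=True)      # 0.2: true effect
```

Because treatment occurs in later waves, when competences are higher anyway, the one-way FE estimate attributes part of the general trend to RBL. The never-treated students `s3` and `s4` are what allow the wave effects to be separated from the treatment effect.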

We estimated separate FE models with each self-rated research competence as the dependent variable, RBL as the independent variable, and time as a control variable. Although the effects of time-constant variables cannot be estimated in FE models (nor is this necessary), their interactions with a time-varying treatment can be; we therefore used interactions between experiencing RBL and the student's enrollment in a bachelor's or master's degree program to investigate differences between these two stages of the student life cycle. In panel data, observations from the same individual at different time points are not independent of each other. We therefore used panel-robust standard errors in all FE models to correct for heteroscedasticity and for arbitrary serial correlation within individuals (Brüderl and Ludwig 2014; Stock and Watson 2008). Furthermore, we tested the normality assumption with an extension of the widely used Jarque-Bera test. Whereas the standard test detects departures from normality in the form of skewness and excess kurtosis in standard regression models, Alejo et al. (2015) extended it to linear panel data models. For each FE model presented, we tested the null hypothesis that the residuals are normally distributed. For all models, the test did not reject the null hypothesis of normality; the lowest p-value was p = 0.137, for skills in reflecting on research findings.
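Panel-robust (student-clustered) standard errors can be sketched for the single-regressor within estimator as follows. This is a minimal illustration, not the software the study used: the function name and toy data are hypothetical, and no finite-sample correction is applied, whereas statistical packages typically apply one, so their values will differ slightly. The key step is summing each student's score contributions over waves before squaring, which permits arbitrary correlation among a student's residuals.

```python
import math
from collections import defaultdict


def fe_slope_with_cluster_se(rows):
    """Within (FE) slope and student-clustered SE for one regressor.

    rows: list of (student_id, x_it, y_it). No finite-sample correction.
    """
    groups = defaultdict(list)
    for sid, x, y in rows:
        groups[sid].append((x, y))

    # Demean x and y within each student.
    demeaned = {}
    for sid, obs in groups.items():
        mx = sum(x for x, _ in obs) / len(obs)
        my = sum(y for _, y in obs) / len(obs)
        demeaned[sid] = [(x - mx, y - my) for x, y in obs]

    sxx = sum(xd * xd for obs in demeaned.values() for xd, _ in obs)
    sxy = sum(xd * yd for obs in demeaned.values() for xd, yd in obs)
    beta = sxy / sxx

    # Sandwich "meat": square each student's summed score x~_it * e_it, so that
    # heteroscedasticity and serial correlation within a student are allowed.
    meat = 0.0
    for obs in demeaned.values():
        score = sum(xd * (yd - beta * xd) for xd, yd in obs)
        meat += score ** 2
    return beta, math.sqrt(meat) / sxx


# Toy data (illustrative only): three students observed before/after treatment.
rows = [
    ("p1", 0, 1.0), ("p1", 1, 1.5),
    ("p2", 0, 2.0), ("p2", 1, 2.1),
    ("p3", 0, 0.0), ("p3", 1, 0.4),
]
beta, se = fe_slope_with_cluster_se(rows)
```

With only three clusters this toy standard error is not meaningful in itself; cluster-robust inference relies on a reasonably large number of students, as in the study's sample of 520.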

Dependent measures

Our dependent variables were self-ratings of five different research competences based on the RMRC-K model and the R-Comp questionnaire developed by Böttcher and Thiel (2018). The model and questionnaire aim to capture key research competences covering all aspects of an entire research process as experienced during RBL. All items were phrased so that they apply across disciplines, which enables the analysis of a sample of students from a wide spectrum of academic disciplines. Overall, students rated their research competences on 32 items grouped into the five dimensions. An example of an item under skills in reviewing the state of research was ‘I am able to systematically review the state of research regarding a specific topic.’ An example of an item under methodological skills was ‘I can apply different research methods appropriate to my research question.’ An example of an item under skills in reflecting on research findings was ‘I am able to adequately interpret my own research findings by relating them to key theories in the subject area.’ An example of an item under communication skills was ‘I am able to prepare research findings for a presentation at a research colloquium.’ Lastly, an example of an item under content knowledge was ‘I have a good overview of the main (current) research findings in my discipline.’ The response scale for every item ranged from 1 = strongly disagree to 5 = strongly agree. Table S1 in the Supplementary lists all of the items with their means. All research competence scales showed high reliability, with Cronbach's alpha ranging from .84 to .91 (Table 1).
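Cronbach's alpha for a scale of k items is α = k/(k − 1) · (1 − Σ item variances / variance of the sum score). The sketch below computes it with Python's standard library; the four respondents and three items are invented for illustration and are not R-Comp data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha; responses is a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    items = list(zip(*responses))                  # one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(r) for r in responses])  # variance of sum scores
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 1-5 ratings: 4 respondents x 3 items of one competence scale.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
]
alpha = cronbach_alpha(responses)  # 174/179, about 0.97
```

Sample variances (n − 1 denominator) are used for both the items and the sum score; because alpha depends only on their ratio, using population variances consistently would give the same result.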

Table 1. Description of research competences.

Independent measures

To analyze RBL as a treatment, we asked students whether they had performed and completed an entire research process during the previous semester, which entailed developing a research question, applying research methods and analysis, and presenting results. The time trend of students’ research competences independent of conducting RBL was measured by including time dummies for every panel wave.

Results

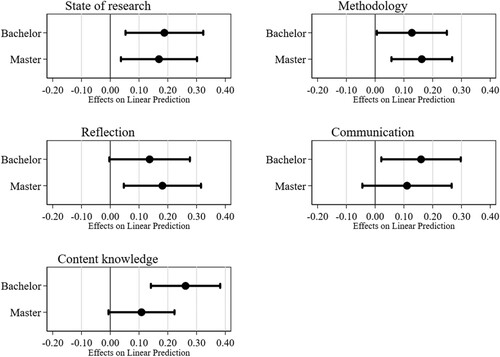

We began with a set of FE panel regression models, shown in Table 2. Separate models were estimated for each research competence, indicating how RBL (i.e. completing an entire research process) changed self-rated research competences. The results shown in models 1–5 indicate that engaging in RBL led to an increase in several self-rated research competences: students’ skills in reviewing the state of research, methodological skills, skills in reflecting on research findings, communication skills, and content knowledge increased significantly after they had experienced an entire research process. For example, students’ self-rated skills in reviewing the state of research increased significantly by .179 scale points when they engaged in RBL. The significant positive time effects for all research competences illustrate the importance of considering time trends in longitudinal models: students’ self-rated research competences increased overall over the course of their degree programs. Beyond this general time trend, the significant treatment effects indicate a further increase in self-rated research competences as a result of experiencing RBL.

Table 2. Fixed-Effects Panel Regression for students’ research competences.

In the next step, we analyzed whether the positive effects of RBL on students’ self-rated research competences varied according to whether students were enrolled in bachelor's or master's degree programs. Undergraduate students may experience RBL for the first time within small projects, whereas master's students may experience more complex research processes. This difference could lead to different trajectories of self-rated research competences over time (Böttcher and Thiel 2018). Table S2 in the Supplementary shows the results of separate FE models for each research competence that include interactions between the treatment (completing an entire research process) and enrollment in a bachelor's or master's degree program.

In the interaction models, the treatment's main effect shows how bachelor's students’ self-rated research competences changed after completing an entire research process, and the interaction effect captures the difference between the RBL effects for undergraduate and graduate students. Figure 2 illustrates the results of the interaction models graphically, showing the effects of RBL separately for these two groups of students. Both groups developed enhanced skills in reviewing the state of research and methodological skills as a result of RBL, with significant improvements after they had completed the research process. Undergraduate students’ self-rated skills in reflecting on research findings did not increase significantly after RBL, and the communication skills and content knowledge of master's students showed no significant changes after they completed the research process.
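With bachelor's students as the reference group, their RBL effect is the main-effect coefficient, while the effect for master's students is the sum of the main effect and the interaction coefficient. Testing whether that sum differs from zero requires the covariance of the two estimates, not just their individual standard errors. The sketch below shows this generic linear-combination (Wald) calculation; the coefficient and (co)variance values are hypothetical, not taken from Table S2, and the text does not state exactly how Figure 2 was computed.

```python
import math


def effect_for_master_students(b_rbl, b_interaction, var_rbl, var_interaction, cov):
    """Linear combination b_rbl + b_interaction with its standard error.

    Bachelor's students are the reference group, so their RBL effect is b_rbl;
    master's students' effect adds the interaction coefficient. The variance of
    the sum is var_rbl + var_interaction + 2 * cov.
    """
    estimate = b_rbl + b_interaction
    se = math.sqrt(var_rbl + var_interaction + 2 * cov)
    return estimate, se, estimate / se  # estimate, SE, Wald z statistic


# Hypothetical coefficients and (co)variances, for illustration only.
estimate, se, z = effect_for_master_students(
    b_rbl=0.25, b_interaction=-0.20,
    var_rbl=0.0036, var_interaction=0.0064, cov=-0.0030,
)
```

Here a sizeable bachelor's effect (0.25) combined with a negative interaction leaves a small master's effect (0.05) whose z statistic is about 0.79, which would not be significant at conventional levels.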

Discussion

The rise of RBL within higher education has prompted a growing number of studies analyzing the outcomes and effectiveness of RBL for students (Wessels et al. 2021; Lloyd, Shanks, and Lopatto 2019; Ward, David Clarke, and Horton 2014; Taraban and Logue 2012; Russell, Hancock, and McCullough 2007; Thiem, Preetz, and Haberstroh 2020a).

The aim of our longitudinal study was to investigate the impact of RBL, and specifically of conducting an entire research process, on various self-rated research skills. Our cross-disciplinary approach, encompassing the students’ life cycles, yielded general insights into the effects of RBL that are not limited to a specific discipline or a specific type of student (undergraduate vs. graduate), and it allowed a comparison between these two types of students. Using a longitudinal data set from a German university, we traced the progress of students’ self-rated research competences throughout their student life cycle, i.e. from the first semester until well into their studies or even until graduation. The availability of panel data allowed us to model the effect of RBL with fixed-effects panel regression models, which support a more causal interpretation than the designs of previous studies. FE models focus on individuals’ within-variation and thus allow us to control for unobserved time-constant heterogeneity between persons. Therefore, the results are not biased by self-selection on stable characteristics or by between-person variance.

Our results revealed two main findings. First, owing to the quasi-experimental research design, the results show that students’ research experience is effective and that previous findings are not merely the result of self-selection effects (Linn et al. 2015). We found a statistically significant increase in the self-rated research competences of students who experienced RBL. Even after we controlled for general time trends over the course of the study program by including a control group of students who did not participate in RBL, the effects on students’ self-rated research competences remained significantly positive. Including a control group allowed us to differentiate between general increases in students’ self-rated competences over their course of studies and the specific benefits of experiencing RBL. Our results showed general rises in self-rated research competences for all students and further increases for those who participated in RBL. Completing an entire research process increased self-rated skills in reviewing the state of research, methodology, reflection on research findings, communication, and content knowledge. Taken together, the implementation of research-based learning pays off.

Second, our results revealed differences in the effectiveness of RBL between students enrolled in bachelor’s and master’s degree programs. Completing an entire research process enhanced skills in reviewing the state of research and in methodology in both groups. However, master’s students’ communication skills and content knowledge did not improve significantly through their engagement in RBL. Students at the master’s level can be expected to have a higher level of expertise than undergraduate students, given their prior experiences with RBL (Böttcher and Thiel 2018; Simonton 2003; Mayer 2003). Consequently, they may already have reached a ceiling in certain research competences, beyond which further repetitions yield no additional gains. By this stage, students should already have practiced communication skills, notably writing up and presenting research findings, in previous courses or at least while writing a bachelor’s thesis. Additionally, RBL in master’s degree programs may be more focused: it may build on prior content knowledge, explore an innovative research question, and involve developing a research design that uses advanced methods, rather than simply becoming familiar with and performing every step of a research process at a more basic level, as undergraduate students do (for examples, see Ruf, Ahrenholtz, and Matthé 2019; Kaufmann 2019). This difference may explain why content knowledge did not increase among master’s students, and it could also account for the lack of improvement in undergraduate students’ skills in reflecting on research findings: for undergraduates, the focus may have been on learning how to conduct a research process and trying it out rather than on reflecting upon it.

Practical and pedagogical implications

Implementing RBL not only requires extensive resources (e.g. time-consuming supervision of students) but also entails a student-centered approach to learning. Because students in RBL are responsible for their own learning process, the lecturer’s role is to support them as a learning facilitator or coach rather than to teach knowledge as an instructor. Lecturer-centered units are nevertheless not uncommon in RBL, but they aim to convey the methodological and content knowledge that facilitates the students’ research process (Reichow 2021). From a pedagogical perspective, this requires lecturers who are able to switch between these different forms of teaching. To prepare lecturers for RBL, many universities have introduced higher education didactics seminars on the topic. These seminars address central aspects of designing and planning RBL courses, such as the degree of students’ independent work, the role of teachers, group work, the development of research questions, and suitable examination formats. Furthermore, participants can jointly identify the difficulties and challenges of RBL and develop approaches for dealing with them.

Limitations and future research

Despite the advantages of our study’s cross-disciplinary, quasi-experimental design, it also had some limitations. First, although it covered 53 study programs, it presents evidence from only one university in Germany. On the one hand, focusing on a single location removed institutional variability; on the other hand, the extent to which the results can be generalized to other institutions remains to be demonstrated (Stanford et al. 2017). However, because the RMRC-K model is cross-disciplinary and RBL is operationalized as the completion of an entire research process, the design can be extended to other institutions without modification.

Second, an approach that covers a wide range of disciplines entails one limitation: it relies on students’ self-reports of their research competences rather than on a test of those competences. It cannot be ruled out that students’ answers to the R-comp questionnaire capture their expectations regarding their research competences rather than their actual competences. In that case, the self-reports would instead reflect research-related self-efficacy expectations. The concept of research-related self-efficacy is ‘understood to describe the individuals’ beliefs in their ability to perform certain tasks such as conducting sound empirical research and disseminating the research findings’ (Lambie et al. 2014, 142). Whereas self-reported competences indicate the current state of capabilities, self-efficacy expectations refer to future behavior. Although the two constructs appear closely related, empirical findings on their relation are still lacking. Thus, it remains an open question how research competence tests (which have yet to be developed for a cross-disciplinary context), self-reported research competences, and self-efficacy expectations are related. So far, the evidence has been inconclusive: whereas several studies have found only a weak correlation or none at all between indirect measures of individuals’ cognitive competences and more objective test scores (Mabe and West 1982; Schladitz, Ophoff, and Wirtz 2018), other studies have demonstrated that self-assessments of competences provide valid measures of students’ cognitive abilities (Caspersen, Smeby, and Aamodt 2017; Douglass, Thomson, and Zhao 2012).

A third limitation is that, like the samples used in previous studies, ours did not allow us to observe multiple research processes per student. Future studies could examine the cumulative effects of repeatedly performed research processes or the effects of carrying out single elements of the research process on research competences (Reichow 2021). Nevertheless, the results suggest that even master’s students with prior research experience benefit from conducting a complete research process. In conclusion, our results, which are based on a longitudinal research design, contribute to a better understanding of the effectiveness of RBL for strengthening students’ research competences.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

Raw data were generated at the University of Oldenburg, Germany. Derived data supporting the findings of this study are available from the author Janina Thiem on request.

Additional information

Funding

Notes

1 When drawing on evidence from studies on URE, we verified that the respective approach accords with our definition of RBL (for a similar approach, see Wessels et al. 2021).

References

- Akademie der Wissenschaften zu Berlin. 1991. Einheit der Wissenschaften: Internationales Kolloquium der Akademie der Wissenschaften zu Berlin, Bonn, 25.–27. Juni 1990. Forschungsbericht / Akademie der Wissenschaften zu Berlin = The Academy of Sciences and Technology in Berlin 4. Berlin; New York: W. de Gruyter.

- Alejo, Javier, Antonio Galvao, Gabriel Montes-Rojas, and Walter Sosa-Escudero. 2015. “Tests for Normality in Linear Panel-Data Models.” The Stata Journal: Promoting Communications on Statistics and Stata 15 (3): 822–32. doi:10.1177/1536867X1501500314.

- Allison, Paul D. 2009. Fixed Effects Regression Models. Thousand Oaks, CA: Sage.

- Bauer, Karen W., and Joan S. Bennett. 2003. “Alumni Perceptions Used to Assess Undergraduate Research Experience.” The Journal of Higher Education 74 (2): 210–30. doi:10.1353/jhe.2003.0011.

- Böttcher, Franziska, and Felicitas Thiel. 2016. “Der Fragebogen zur Erfassung studentischer Forschungskompetenzen – Ein Instrument auf der Grundlage des RMRK-W-Modells zur Evaluation von Formaten forschungsorientierter Lehre.” In Neues Handbuch Hochschullehre, edited by Brigitte Berendt, Andreas Fleischmann, Niclas Schaper, Birgit Szczyrba, and Johannes Wildt, 57–74. Berlin: DUZ Medienhaus.

- Böttcher, Franziska, and Felicitas Thiel. 2018. “Evaluating Research-Oriented Teaching: A New Instrument to Assess University Students’ Research Competences.” Higher Education 75 (1): 91–110. doi:10.1007/s10734-017-0128-y.

- Brüderl, Josef, and Volker Ludwig. 2014. “Fixed-Effects Panel Regression.” In The SAGE Handbook of Regression Analysis and Causal Inference, edited by Henning Best, and Christof Wolf, 327–358. Thousand Oaks, CA: SAGE Publications.

- Butts, Robert E. 1991. “Methodology, Metaphysics and the Pragmatic Unity of Science.” In Einheit der Wissenschaften: Internationales Kolloquium der Akademie der Wissenschaften zu Berlin, Bonn, 25.–27. Juni 1990, edited by Akademie der Wissenschaften zu Berlin, 23–29. Berlin; New York: W. de Gruyter.

- Caspersen, Joakim, Jens-Christian Smeby, and Per Olaf Aamodt. 2017. “Measuring Learning Outcomes.” European Journal of Education 52 (1): 20–30. doi:10.1111/ejed.12205.

- Dewey, John. 1938. Unity of Science as a Social Problem. Chicago: University of Chicago Press.

- Douglass, John Aubrey, Gregg Thomson, and Chun-Mei Zhao. 2012. “The Learning Outcomes Race: The Value of Self-Reported Gains in Large Research Universities.” Higher Education 64 (3): 317–35. doi:10.1007/s10734-011-9496-x.

- Feldhaus, Michael, and Gunter Kreutz. 2021. “Familial Cultural Activities and Child Development – Findings from a Longitudinal Panel Study.” Leisure Studies 40 (3): 291–305. doi:10.1080/02614367.2020.1843690.

- Gess, Christopher, Christoph Geiger, and Matthias Ziegler. 2019. “Social-Scientific Research Competency.” European Journal of Psychological Assessment 35 (5): 737–50. doi:10.1027/1015-5759/a000451.

- Gess, Christopher, Julia Rueß, and Wolfgang Deicke. 2014. “Design-Based Research als Ansatz zur Verbesserung der Lehre an Hochschulen – Einführung und Praxisbeispiel.” Qualität in der Wissenschaft 8 (1): 10–16.

- Healey, Mick, and Alan Jenkins. 2009. Developing Undergraduate Research and Inquiry. York: The Higher Education Academy.

- John, Oliver P., and Richard W. Robins. 1994. “Accuracy and Bias in Self-Perception: Individual Differences in Self-Enhancement and the Role of Narcissism.” Journal of Personality and Social Psychology 66 (1): 206–19. doi:10.1037/0022-3514.66.1.206.

- Kardash, CarolAnne M. 2000. “Evaluation of Undergraduate Research Experience: Perceptions of Undergraduate Interns and Their Faculty Mentors.” Journal of Educational Psychology 92 (1): 191–201. doi:10.1037/0022-0663.92.1.191.

- Kaufmann, Margrit E. 2019. “Inquiry-Based Learning in Cultural Studies.” In Inquiry-Based Learning – Undergraduate Research. The German Multidisciplinary Experience, edited by Harald A. Mieg, 271–80. Cham: Springer Open.

- Kilgo, Cindy A., Jessica K. Ezell Sheets, and Ernest T. Pascarella. 2015. “The Link Between High-Impact Practices and Student Learning: Some Longitudinal Evidence.” Higher Education 69 (4): 509–25. doi:10.1007/s10734-014-9788-z.

- Klieme, Eckhard, and Johannes Hartig. 2008. “Kompetenzkonzepte in den Sozialwissenschaften und im erziehungswissenschaftlichen Diskurs.” In Kompetenzdiagnostik, edited by Manfred Prenzel, Ingrid Gogolin, and Heinz-Hermann Krüger, 11–29. Wiesbaden: VS Verlag für Sozialwissenschaften.

- Kruger, Justin, and David Dunning. 1999. “Unskilled and Unaware of It: How Difficulties in Recognizing One’s Own Incompetence Lead to Inflated Self-Assessments.” Journal of Personality and Social Psychology 77 (6): 1121–34. doi:10.1037//0022-3514.77.6.1121.

- Lambie, Glenn W., B. Grant Hayes, Catherine Griffith, Dodie Limberg, and Patrick R. Mullen. 2014. “An Exploratory Investigation of the Research Self-Efficacy, Interest in Research, and Research Knowledge of Ph.D. in Education Students.” Innovative Higher Education 39 (2): 139–53. doi:10.1007/s10755-013-9264-1.

- Linn, Marcia C., Erin Palmer, Anne Baranger, Elizabeth Gerard, and Elisa Stone. 2015. “Undergraduate Research Experiences: Impacts and Opportunities.” Science 347 (6222): 1261757. doi:10.1126/science.1261757.

- Lloyd, Steven A., Ryan A. Shanks, and David Lopatto. 2019. “Perceived Student Benefits of an Undergraduate Physiological Psychology Laboratory Course.” Teaching of Psychology 46 (3): 215–22. doi:10.1177/0098628319853935.

- Lopatto, David. 2009. Science in Solution: The Impact of Undergraduate Research on Student Learning. Tucson, AZ: Research Corporation for Science Advancement.

- Mabe, Paul A., and Stephen G. West. 1982. “Validity of Self-Evaluation of Ability: A Review and Meta-Analysis.” Journal of Applied Psychology 67 (3): 280–96. doi:10.1037/0021-9010.67.3.280.

- Mayer, Richard E. 2003. “What Causes Individual Differences in Cognitive Performance?” In The Psychology of Abilities, Competencies, and Expertise, edited by Robert J. Sternberg, and Elena L. Grigorenko, 263–73. New York: Cambridge University Press.

- Mieg, Harald A., and Wolfgang Deicke. 2022. “Theory and Research on Undergraduate Research.” In The Cambridge Handbook of Undergraduate Research, edited by Harald A. Mieg, Elizabeth A. Ambos, Angela Brew, Judith Lehmann, and Dominique M. Galli, 23–32. Cambridge: Cambridge University Press.

- Pedaste, Margus. 2022. “Inquiry Approach and Phases of Learning in Undergraduate Research.” In The Cambridge Handbook of Undergraduate Research, edited by Harald A. Mieg, Elizabeth A. Ambos, Angela Brew, Judith Lehmann, and Dominique M. Galli, 149–57. Cambridge: Cambridge University Press.

- Reichow, Insa L. 2021. Students’ Affective-Motivational Research Dispositions. Modelling, Assessment, and Their Development Through Research-Based Learning. Berlin: HU Berlin.

- Rueß, Julia, Christopher Gess, and Wolfgang Deicke. 2016. “Forschendes Lernen und forschungsbezogene Lehre - empirisch gestützte Systematisierung des Forschungsbezugs hochschulischer Lehre.” Zeitschrift für Hochschulentwicklung 15 (2): 23–44.

- Ruf, Andrea, Ingrid Ahrenholtz, and Sabine Matthé. 2019. “Inquiry-Based Learning in the Natural Sciences.” In Inquiry-Based Learning – Undergraduate Research: The German Multidisciplinary Experience, edited by Harald A. Mieg, 191–204. Cham: Springer Open.

- Russell, S. H., M. P. Hancock, and J. McCullough. 2007. “Benefits of Undergraduate Research Experiences.” Science 316 (5824): 548–49. doi:10.1126/science.1140384.

- Schladitz, Sandra, Jana Groß Ophoff, and Markus Wirtz. 2018. “Konstruktvalidierung eines Tests zur Messung bildungswissenschaftlicher Forschungskompetenz.” Zeitschrift für Pädagogik 61 (April): 167–84. doi:10.25656/01:15509.

- Seaton, Marjorie, Herbert W. Marsh, and Rhonda G. Craven. 2010. “Big-Fish-Little-Pond Effect.” American Educational Research Journal 47 (2): 390–433. doi:10.3102/0002831209350493.

- Seymour, Elaine, Anne-Barrie Hunter, Sandra L. Laursen, and Tracee DeAntoni. 2004. “Establishing the Benefits of Research Experiences for Undergraduates in the Sciences: First Findings from a Three-Year Study.” Science Education 88 (4): 493–534. doi:10.1002/sce.10131.

- Simonton, Dean K. 2003. “Expertise, Competence, and Creative Ability.” In The Psychology of Abilities, Competencies, and Expertise, edited by Robert J. Sternberg, and Elena L. Grigorenko, 213–40. New York: Cambridge University Press.

- Stanford, Jennifer S., Suzanne E. Rocheleau, Kevin P.W. Smith, and Jaya Mohan. 2017. “Early Undergraduate Research Experiences Lead to Similar Learning Gains for STEM and Non-STEM Undergraduates.” Studies in Higher Education 42 (1): 115–29. doi:10.1080/03075079.2015.1035248.

- Stock, James H., and Mark W. Watson. 2008. “Heteroskedasticity-Robust Standard Errors for Fixed Effects Panel Data Regression.” Econometrica 76 (1): 155–74. doi:10.1111/j.0012-9682.2008.00821.x.

- Taraban, Roman, and Erin Logue. 2012. “Academic Factors That Affect Undergraduate Research Experiences.” Journal of Educational Psychology 104 (2): 499–514. doi:10.1037/a0026851.

- Thiel, Felicitas, and Franziska Böttcher. 2014. “Modellierung fächerübergreifender Forschungskompetenzen – Das RMRK-W-Modell als Grundlage der Planung und Evaluation von Formaten forschungsorientierter Lehre.” In Neues Handbuch Hochschullehre, edited by Brigitte Berendt, Andreas Fleischmann, Niclas Schaper, Birgit Szczyrba, and Johannes Wildt, 109–24. Berlin: DUZ Medienhaus.

- Thiem, Janina, Richard Preetz, and Susanne Haberstroh. 2020a. “Forschendes Lernen im Student Life Cycle. Eine Panelstudie zur Weiterentwicklung des Lehrprofils der Universität Oldenburg.” In Qualitätssicherung im Student Life Cycle, edited by Philipp Pohlenz, Lukas Mitterauer, and Susan Harris-Huemmert, 81–93. Münster: Waxmann.

- Thiem, Janina, Richard Preetz, and Susanne Haberstroh. 2020b. “‘Warum soll ich forschen?’ – Wirkungen Forschenden Lernens bei Lehramtsstudierenden.” Zeitschrift für Hochschulentwicklung 15 (2): 187–207. doi:10.3217/zfhe-15-02/10.

- Ward, Jennifer Rhode, H. David Clarke, and Jonathan L. Horton. 2014. “Effects of a Research-Infused Botanical Curriculum on Undergraduates’ Content Knowledge, STEM Competencies, and Attitudes toward Plant Sciences.” CBE—Life Sciences Education 13 (3): 387–96. doi:10.1187/cbe.13-12-0231.

- Wessels, Insa, Julia Rueß, Christopher Gess, Wolfgang Deicke, and Matthias Ziegler. 2021. “Is Research-Based Learning Effective? Evidence from a Pre–Post Analysis in the Social Sciences.” Studies in Higher Education (12): 2595–2609. doi:10.1080/03075079.2020.1739014.

- Ziegler, Matthias, and Markus Buehner. 2009. “Modeling Socially Desirable Responding and Its Effects.” Educational and Psychological Measurement 69 (4): 548–65. doi:10.1177/0013164408324469.