ABSTRACT

Research Integrity Advisors are used in Australia to provide impartial guidance to researchers who have questions about any aspect of responsible research practice. Every Australian institution conducting research must provide access to trained advisors. This national policy could be an important part of creating a safe environment for discussing research integrity issues and thus resolving issues. We conducted the first formal study of advisors, using a census of every Australian advisor to discover their workload and attitudes to their role. We estimated there are 739 advisors nationally. We received responses to our questions from 192. Most advisors had a very light workload, with a median of just 0.5 days per month. Thirteen percent of advisors had not received any training, and some advisors only discovered they were an advisor after our approach. Most advisors were positive about their ability to help colleagues deal with integrity issues. The main desired changes were for greater advertising of their role and a desire to promote good practice rather than just supporting potential issues. Advisors might be a useful policy for supporting research integrity, but some advisors need better institutional support in terms of training and raising awareness.

Introduction

Research integrity breaches are an international problem and policies are needed to maintain and improve research integrity (Bouter Citation2023). The Australian Code for the Responsible Conduct of Research (hereafter called “the Code”) states that institutions are required to “Identify and train Research Integrity Advisors who assist in the promotion and fostering of responsible research conduct and provide advice to those with concerns about potential breaches of the Code” (National Health and Medical Research Council, the Australian Research Council, and Universities Australia Citation2018). This national policy was added to the Code in 2007 (National Health and Medical Research Council, Australian Research Council, and Universities Australia Citation2007), possibly because of specific concerns of poor practice (Anderson, Cordner, and Breen Citation2006). Advisors were previously mentioned in a national statement on research practice in 1997, but were limited to dealing with suspected or alleged research misconduct (National Health and Medical Research Council Citation1997).

Research Integrity Advisors are nominated, trained and supported by institutions to promote the responsible conduct of research. Advisors provide impartial guidance to colleagues with questions about any aspect of responsible research practice (National Health and Medical Research Council, Australian Research Council, and Universities Australia Citation2022). Research Integrity Advisors can have conversations with colleagues about integrity issues without starting formal procedures, although they are obliged to report breaches of the Code to their research integrity office. They offer impartial support and may refer people to other sources of support. Research Integrity Advisors do not have the power to adjudicate on cases, and any serious matters have to be passed on to the institution. They are not expected to investigate potential breaches, although they may be contacted if the institute starts a formal investigation.

Australia is not the only country to use advisors to improve research integrity (Mejlgaard et al. Citation2020). For example, there is a national scheme in France of research integrity officers that has similar goals to the Australian scheme (Deniau Citation2023). Aarhus University (Denmark) has advisors on the responsible conduct of research and freedom of research (Aarhus University Citation2022). The Luxembourg Agency for Research Integrity has research integrity coaches who provide guidance, support and encouragement to researchers (The Luxembourg Agency for Research Integrity Citation2022). The Data Champions initiatives at Delft University of Technology (Netherlands) and University of Cambridge (UK) aim to implement good research data management practices (University of Cambridge Citation2022; TU Delft Citation2021, Citation2022). Delft University also has confidential advisors who can be approached by researchers who suspect a breach of integrity (TU Delft Citation2021). Some UK institutions have added Research Integrity Champions, Leads and/or Advisors in response to a national concordat to support research integrity (UKRIO Citation2022). These examples are not an exhaustive list, and other countries and institutions likely also have related policies.

Research Integrity Advisors are by no means the only mechanism for ensuring research integrity in Australia. Other examples of promoting research integrity are good supervision, mentoring and open science practices. Australia also has a national body for research integrity, the Australian Research Integrity Committee, which was established in 2011 (Australian Government Citation2023). The Committee can conduct reviews of how an institution handled a potential breach of the Code.

Despite the many mechanisms in place for promoting research integrity, breaches of the Code still occur. For example, a 2019 survey of Australian researchers found that standards were not always high, with problems including selective reporting, a lack of transparency, and poor supervision (National Health and Medical Research Council Citation2020).

Research Integrity Advisors could be an important part of creating a safe environment for discussing research integrity issues, and strategies to mitigate and resolve disputes (Roje et al. Citation2022). However, there are costs to the policy in terms of staff time and training, and opportunity costs if alternative policies are more effective. Evidence of the value of advisors would help justify the time spent. To our knowledge, no previous study has examined the potential value of Research Integrity Advisors. Our study aimed to gather novel data from the advisors to examine their potential value and areas for improvement.

Methods

Our target population was all current Research Integrity Advisors in Australia. Our study design is a census of the entire population rather than a random sample. To get data on this population we searched for the names and e-mails of Research Integrity Advisors from the web pages of 99 Australian universities and research institutions (hereafter called “institutions”). To identify every eligible institution we used data from the National Health and Medical Research Council (NHMRC), which lists all the institutions that applied for research funding (National Health and Medical Research Council Citation2022a, Citation2022b). It was assumed that every institution that conducted research in humans or animals that required ethical clearance would have at least one NHMRC funding application during the years 2020 to 2022. The list of NHMRC Approved Administering Institutions, which must comply with the Code, was also used (National Health and Medical Research Council, Australian Research Council, and Universities Australia Citation2022). We supplemented the list with one additional institution from our team’s personal knowledge (see supplement S.1 for the list).

The website of each institution was searched for the phrase “Research Integrity Advisor” using Google’s site-specific search function (e.g., site:.sydney.edu.au). Searches were conducted between 31 October 2022 and 7 November 2022. Information on advisors from one institution was extracted on 22 December 2022. One institution required additional paperwork that our team began but could not complete, hence this institution was excluded.

When information on Research Integrity Advisors was not publicly available, an e-mail address for the institution’s research integrity office or ethics office was recorded. When these contacts were not available, a general enquiry contact was recorded. The first author sent these contacts an e-mail that explained the study’s purpose and asked if the Research Integrity Advisors’ names and e-mails could be shared with us for research purposes. If a publicly available list had gaps for Research Integrity Advisors, then we emailed the institution to ask if these positions had been filled and to ask for the contact details. Institutions are not required to make their Research Integrity Advisors’ contact details publicly available, but the supporting guide recommends that “the availability and role of RIAs is readily accessible by staff and students” (National Health and Medical Research Council, Australian Research Council, and Universities Australia Citation2022).

The names, titles and e-mail addresses of Research Integrity Advisors were recorded in an Excel spreadsheet. Once data collection was complete, all identifying information was deleted.

Inclusion and exclusion criteria

The inclusion criterion was any current or recent Research Integrity Advisor working at an Australian institution. We included Research Integrity Advisors who had recently left the role (within 1 year), as we assumed they were representative of current practice. Excluding all advisors who had left the role could have excluded some who left because of bad experiences.

Questionnaire and distribution

A questionnaire was designed by the research team, including consultations with three current Research Integrity Advisors. The questionnaire had 12 closed questions and 6 open questions. All questions could be skipped except an initial consent question. The open questions invited additional comments from participants and were labeled as “optional.” There was no check of questionnaire completeness. The entire questionnaire took 5 to 7 screens, and in pilot testing it took 5 to 10 minutes to complete.

The first page of the questionnaire was a participant information sheet; respondents had to give their consent before they saw the questions. The questionnaire was voluntary with no incentives. The questionnaire was online and used the Qualtrics software that allowed easy viewing on screens and smart phones (Qualtrics Citation2023).

The questionnaire asked about their time spent in the role, their training and workload, the advice they have given, and their thoughts on the role (see supplement S.2 for the full questionnaire). The questions were pilot tested by around 30 researchers and the three current Research Integrity Advisors.

All Research Integrity Advisors on the full list were emailed by the first author on 13 February 2023 (see Supplement S.3 for e-mail text). Advisors were given 4 weeks to respond, with a follow-up reminder after 2 weeks for non-responders. We aimed for a response percentage of at least 50%. We used partially completed questionnaires and did not impute missing data.

Statistical methods

This was a census that attempted to capture all advisors in Australia, so there was no sample size calculation for hypothesis testing. To estimate the likely workload, we estimated the national number by assuming that the number of advisors per institution was one plus a Poisson distribution with mean 2. This gave an estimated 90% probability that there would be between 236 and 279 advisors nationally.
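The pre-study estimate described above can be illustrated with a short simulation. This is a minimal sketch rather than the authors' code (the analysis itself was run in R). It assumes each institution has one plus a Poisson(2) number of advisors, draws many simulated national totals, and reads off a central 90% interval. The value of `N_INSTITUTIONS` is a placeholder taken from the number of institutions mentioned in the Methods; the count behind the published interval is not stated here, so the output should not be expected to reproduce 236 to 279. The function names and the seed are also illustrative.

```python
import math
import random


def poisson_draw(mean, rng):
    """Draw one Poisson variate using Knuth's multiplication method."""
    threshold = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate_national_total(n_institutions, mean_extra, rng):
    """One simulated total: every institution has 1 + Poisson(mean_extra) advisors."""
    return sum(1 + poisson_draw(mean_extra, rng) for _ in range(n_institutions))


def central_interval(values, level=0.90):
    """Empirical central interval taken from the sorted simulated totals."""
    ordered = sorted(values)
    tail = (1 - level) / 2
    lower = ordered[int(tail * (len(ordered) - 1))]
    upper = ordered[int(round((1 - tail) * (len(ordered) - 1)))]
    return lower, upper


rng = random.Random(2022)  # fixed seed so the sketch is repeatable
N_INSTITUTIONS = 99        # assumption for illustration only
totals = [simulate_national_total(N_INSTITUTIONS, 2.0, rng) for _ in range(2000)]
low, high = central_interval(totals)
print(f"90% interval for the national total: {low} to {high}")
```

Changing `N_INSTITUTIONS` shifts the whole interval, which is why the number of institutions assumed at the planning stage matters for this estimate.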

After data collection, we estimated the national number of advisors using:

The total number of Research Integrity Advisors for whom we had contact details,

Minus the advisors whose e-mails bounced, assuming they had left the institution and their role,

Plus an estimate of the number of Research Integrity Advisors who we were unable to contact because their contact details were not shared by their institution.

We estimated the number of advisors per missing institution using the observed distribution of advisors per institution, which assumes that missing and non-missing institutions had similar numbers. We made 1,000 random resamples from this observed distribution for each missing institution. The estimate of the total national number of Research Integrity Advisors is the mean of these bootstrap resamples with a 95% bootstrap interval to capture the uncertainty.
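The three-part estimate and the resampling step can be sketched as follows. This is an illustrative sketch under stated assumptions, not the published analysis code: the function name, the seed, and every input value in the usage lines are hypothetical and chosen only to show the mechanics. Each resample draws one advisor count, with replacement, from the observed per-institution counts for every institution that did not share details.

```python
import random


def bootstrap_national_total(observed_counts, n_contactable, n_bounced,
                             n_missing_institutions, n_resamples=1000, seed=1):
    """Contactable advisors, minus bounced e-mails, plus resampled counts
    for the institutions that did not share contact details."""
    rng = random.Random(seed)
    known = n_contactable - n_bounced
    totals = []
    for _ in range(n_resamples):
        # One draw, with replacement, per missing institution.
        imputed = sum(rng.choice(observed_counts)
                      for _ in range(n_missing_institutions))
        totals.append(known + imputed)
    totals.sort()
    mean_total = sum(totals) / len(totals)
    lower = totals[int(0.025 * (n_resamples - 1))]
    upper = totals[int(round(0.975 * (n_resamples - 1)))]
    return mean_total, (lower, upper)


# Hypothetical inputs for illustration; these are not the study data.
example_counts = [1, 1, 1, 2, 3, 4, 6, 6, 8, 10, 15, 43]
estimate, (lower, upper) = bootstrap_national_total(
    example_counts, n_contactable=400, n_bounced=10, n_missing_institutions=30)
print(f"Estimated total {estimate:.0f}, 95% interval {lower} to {upper}")
```

Because the observed distribution is right-skewed, a single large draw can move a resampled total noticeably, so the interval is typically wider above the mean than below it.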

All analyses were descriptive with no hypothesis tests. For categorical variables we used numbers and percentages in tables, and bar plots as graphs. For continuous variables we used medians and inter-quartile ranges in tables, and histograms or bar-plots as graphs. For key statistics, such as the percentage receiving training, we included bootstrap 95% confidence intervals to show the uncertainty due to non-response. These intervals were based on randomly resampling responses for the missing Research Integrity Advisors to give an estimate of the statistic in the target population. Standard confidence intervals capture sampling error, but we used a census which has no sampling error.
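The interval for non-response can be sketched in the same way. The sketch below is one reading of the description above and assumes that missing advisors answer like a random draw from the observed responses; the response vector, the function name and the seed are hypothetical, and using the estimated national total of 739 as the target population size is our assumption for illustration.

```python
import random


def nonresponse_bootstrap(responses, n_target, n_resamples=1000, seed=1):
    """Percentage answering 'yes' (coded 1) in the target population, with the
    missing answers filled in by resampling the observed ones with replacement."""
    rng = random.Random(seed)
    n_missing = n_target - len(responses)
    observed_yes = sum(responses)
    estimates = []
    for _ in range(n_resamples):
        imputed_yes = sum(rng.choice(responses) for _ in range(n_missing))
        estimates.append(100 * (observed_yes + imputed_yes) / n_target)
    estimates.sort()
    point = sum(estimates) / len(estimates)
    lower = estimates[int(0.025 * (n_resamples - 1))]
    upper = estimates[int(round(0.975 * (n_resamples - 1)))]
    return point, (lower, upper)


# Hypothetical responses: 150 'yes' and 40 'no' from 190 respondents.
example_responses = [1] * 150 + [0] * 40
point, (lower, upper) = nonresponse_bootstrap(example_responses, n_target=739)
print(f"{point:.0f}% (95% bootstrap interval {lower:.0f} to {upper:.0f}%)")
```

The width of the interval depends only on how many answers are missing and how variable the observed answers are, which matches the idea that the uncertainty comes from non-response rather than sampling.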

We did not use a formal qualitative analysis of the optional comments, but instead selected comments that highlighted key points or represented common themes.

We used the STROBE reporting guideline for cross-sectional studies (Elm et al. Citation2007) and the CHERRIES reporting guideline for online questionnaires (Eysenbach Citation2004). Our study design and statistical methods were detailed in a protocol (Barnett and Borg Citation2022).

All data management and analysis were performed using R version 4.2.1 (R Core Team Citation2023). The code and data to run the analysis are publicly available (Barnett Citation2023).

The study was approved by the QUT Human Research Ethics Committee (approval number: 2023–6395–12670).

Results

Finding every advisor in Australia

A flow chart showing which institutions and advisors were contacted is in Figure 1. Publicly available information on advisors was found on 36 of the 101 institutions’ websites. We contacted 62 institutions for information on their advisors and received some or all names and e-mail addresses from 10 (16%). Five institutions did not share the names but offered to distribute the questions on our behalf. Five institutions did not require advisors as they used advisors from another institution, which is permitted in the policy. Three institutions were not contacted, two because we became aware of them after completing data collection, and one because they required additional paperwork.

Figure 1. Flow chart of institutions and Research Integrity Advisors approached to take part in the census.

There was some confusion about the difference between Research Integrity Advisors and other roles related to ethics and integrity. One institution did not believe they needed Research Integrity Advisors as they had Research Integrity Officers, and another institution shared the names of their Research Ethics Officers not Research Integrity Advisors. Because of this confusion, we added a question for respondents to confirm that they were a Research Integrity Advisor, and eight respondents were not advisors.

One respondent also highlighted this confusion, “I would also say that, at least at my institution, the line between issues that should be handled by the ethics administrators and committees and the Research Integrity administrator and advisors was very unclear – clarity here would help.”

Two institutions said they did not have any advisors, but were working on appointing them. These institutions may be in breach of their compliance with the Code, which states that institutions are required to “Identify and train Research Integrity Advisors” (National Health and Medical Research Council, Australian Research Council, and Universities Australia Citation2022).

Number of respondents

The questionnaires were completed between 13 February 2023 and 6 March 2023. The overall response percentage was 39% (192 out of 494 invites). The response percentage from the five institutions that distributed the questions on our behalf was 17%, whereas the response percentage to direct e-mails from the study team was 46%.

Seven e-mail addresses were invalid and 12 respondents were no longer working at that institution or were no longer an advisor, indicating that some institutions had out of date information. Thirty-nine advisors had out-of-office replies, none of which mentioned their role as a Research Integrity Advisor or who could be contacted about integrity issues whilst they were away. The response percentage after excluding dead e-mail addresses (7) and ineligible participants (20) was 41% (192 out of 467).
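The two response percentages can be checked with a few lines of arithmetic. The figures come from the text above; the function name is illustrative.

```python
def response_percentage(responses, invited, excluded=0):
    """Responses as a percentage of invitations, after removing exclusions."""
    return 100 * responses / (invited - excluded)


# Overall: 192 responses from 494 invitations.
overall = response_percentage(192, 494)
# Adjusted: remove 7 dead e-mail addresses and 20 ineligible participants.
adjusted = response_percentage(192, 494, excluded=7 + 20)
print(round(overall), round(adjusted))  # prints "39 41"
```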

Eight advisors replied to say they were not a Research Integrity Advisor, despite being listed on their institution’s website. At least one advisor discovered that they were an advisor after our approach: “I wasn’t aware I’d been nominated as a Research Integrity Advisor.” And a similar comment was, “While I’m listed as a Research Integrity Officer, the university hadn’t notified me of this, nor have I undergone any training.” This quote, which uses “Officer,” further highlights the confusion on the role’s title. A number of advisors said they were given the role as part of another role, for example, “It is part of being the Dean of Research.”

The questions took a median of 7 minutes to complete, with a 1st to 3rd quartile of 5 to 12 minutes. The questions were generally well completed. A summary of item-missing questions is in supplement S.4.

Advisor numbers

The median number of advisors per institution was 6, with a 1st to 3rd quartile of 3 to 10. The smallest number was 1 (which was also the mode) and the largest 43 (see supplement S.5 for a plot of the distribution).

Based on the observed numbers of advisors, we estimate the total number of current Research Integrity Advisors at Australian institutions is 739, with a 95% bootstrap interval of 660 to 839.

Advisors’ workload

The median length of time that advisors had been in the role was 4 years, with a 1st to 3rd quartile of 2 to 5 years. Seventeen respondents (9%) were no longer Research Integrity Advisors, confirming that some institutions had not updated their information.

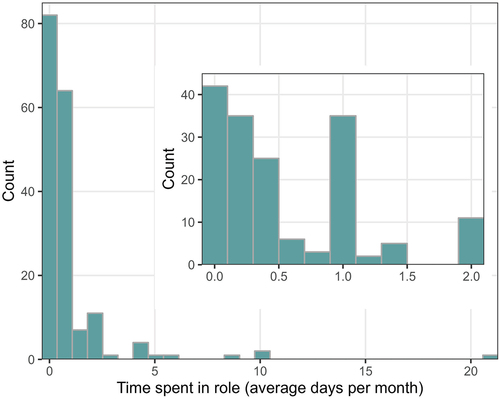

The distribution of time spent in the role was strongly positively skewed, with a few advisors spending most of their time in their advisory role (Figure 2). The median time spent in the role was 0.5 days per month (1st to 3rd quartile: 0.1 to 1.0 days). There were 8 advisors (5%) who reported no time, and this was backed up by quotes including:

Figure 2. Histogram of the time spent in the role of Research Integrity Advisor expressed as average days per month. The inset histogram shows times up to 2 days per month. Estimates from 175 advisors.

“I have never been contacted in my role since my appointment so it has taken very little time.”

“This role is nominal as far as I’m concerned.”

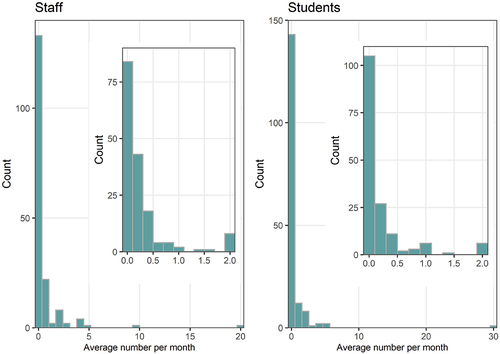

The amount of help provided to staff and students is plotted in Figure 3; we used insets for numbers under 2 because the distribution had a strong positive skew. The median number of staff helped per month was 0.1 (1st to 3rd quartile 0 to 0.3), and the number of students was 0.1 (1st to 3rd quartile 0 to 0.2). There were 41 advisors (22%) who said they had never provided help to staff, and 60 (32%) who said they had never provided help to students.

Figure 3. Histograms of the average number of staff and students helped per month. The insets show the results up to 2 staff or students. Estimates from 178 advisors.

Most of the respondents (87%) had 11 or more years of research experience. Two respondents indicated they were not researchers.

Training

Initial training was received by 79% of advisors (95% bootstrap interval 76 to 82%) and 69% received ongoing training (95% bootstrap interval 66 to 72%). Thirteen percent had no initial or ongoing training. Only 1% thought they received too much training, whilst 29% thought it was too little.

Some key negative comments on their training were:

“Regarding training we have consistently been told that there will be training but that has not materialized.”

“RIA training has been patchy, with no meetings occurring in some years, although there was some (minimal) training in the first year.”

There were also positive comments about the training, including:

“Training provided by the RIO [Research Integrity Office] is necessary and valuable.” (Our addition in square brackets)

“Four times a year I get together with my fellow RIAs and we present cases to each other (anonymized) and that is a great learning experience.”

Advice provided

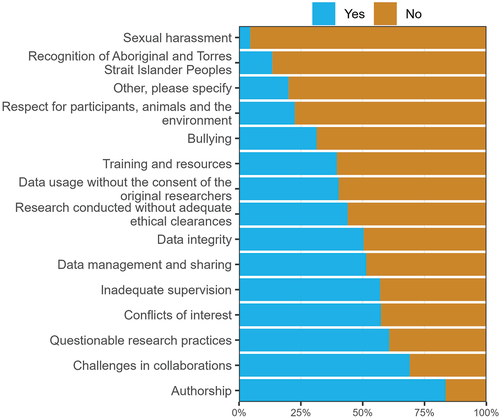

The advice provided is summarized in Figure 4. The most frequent advice was provided about authorship and the least frequent about sexual harassment. The comments on “Other” advice provided included:

Figure 4. Bar chart of research integrity topics where advice has been provided, ordered from least to most common. Estimates from 190 advisors.

“Research fraud”

“Ethics approval application”

When asked to think about all the issues they had provided advice about and if they were able to help, the modal answer was “Most of the time” (58%). Only 2% said they had never been able to help. Fifteen percent said they were “Unsure” and there were comments that this question was difficult to answer because they often did not know how situations were resolved.

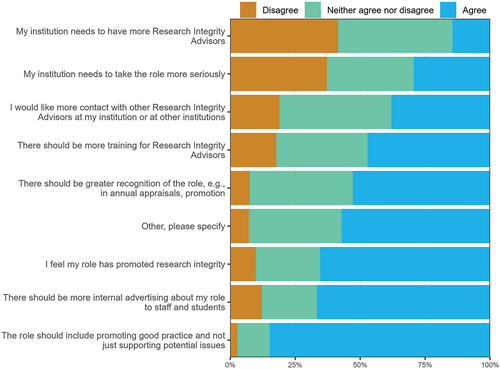

We asked advisors for their thoughts on aspects of their role and what might be improved; their answers are summarized in Figure 5. There was least support for the need for more Research Integrity Advisors, which makes sense given the light workload. There was relatively strong support for greater recognition of the role and more advertising. There was very strong support for greater involvement in promoting good practice. The comments on “Other” thoughts included:

Figure 5. Bar chart of the percentages of advisors who agreed or disagreed with nine statements about their role, ordered by the percentage who agreed. Estimates from 190 advisors.

“Clear pathway for feedback on RI [research integrity] issues to senior management”

“RIAs should be introduced/visible to everyone (staff/students) who starts at a University, and they are not.”

Some comments on what advice they had provided were:

“Advice provided is usually just going over some options. Typically limited action has resulted.”

“The situations are usually extremely complicated involving power imbalances. (The straightforward stuff people can solve themselves.)”

“There is certainly a wide gulf between the rules that operate and people’s understanding of them. In some/many cases, the ‘rules’ (i.e., the Code) are disregarded often actively.”

A number of respondents gave a positive comment at the end of the questions:

“I think RIAs are a useful resource for institutions/departments.”

“I am not consulted often, but when I have, I believe the responses I provide help the person seeking advice and avoid a negative situation from escalating.”

“This is an important role in universities and can be vey helpful to staff to have a safe conversation about research integrity.”[sic]

Other respondents ended with a negative comment:

“Role needs greater recognition. Most staff/students are unaware that the role exists and it is usually not considered in institutional workload models.”

“My experience is that Institutions seem to ‘tick and flick’ research integrity advisors. They are required to have them under The Code, but they often just nominate someone in each school and then forget about them once initial training is provided.”

Discussion

We extract three main insights from this attempted national census of Research Integrity Advisors: 1) advisors do not work many hours in the role, 2) most believe they can help with integrity issues, 3) some institutions are not fully engaging with the policy, as evidenced by not providing training, not updating their advisors’ details, not informing researchers that they are an advisor, and – for some – having no advisors at all.

Non-compliance

The most serious finding is that some Australian institutions may be in breach of their responsibility to have Research Integrity Advisors and support them with training. A previous study examined whether Australian universities were compliant with The Code in terms of authorship policies (Morris Citation2010). Similar to our results, there were universities where no information could be found (7 out of 39). The author suggested that the government funding awarded to these non-compliant institutions could be withdrawn, which would have been over 100 million dollars. Our study also uncovered noncompliance, and indicates that a formal government-led follow-up of compliance is warranted.

Advisors’ workload

Many advisors reported spending very little time in the role (Figure 2). This lack of activity is surprising given the findings of recent national surveys, which reported that 47% of early career researchers were impacted by questionable research practices (Christian, Larkins, and Doran Citation2022) and that 53% to 60% of researchers agreed there is currently a significant crisis of reproducibility (National Health and Medical Research Council Citation2020). The light workload might indicate that researchers who are concerned about integrity issues may be reluctant to speak with advisors or that they are seeking advice through other avenues. Most Research Integrity Advisors were senior researchers with 11 or more years of experience and some were even Deans of research. Some researchers may be reluctant to approach such senior colleagues due to perceived power imbalances and concerns about their careers. A system used by Delft University includes an advisor who is external to the institute (TU Delft Citation2021), and this could be a useful addition to the Australian policy.

Training

Training advisors is an essential step to their success. Although most respondents (87%) reported receiving some training, 13% had no initial or ongoing training, 29% thought the training received was too little, and two institutions had not yet appointed (and therefore not trained) any advisors. This mirrors a study of advisors in France, which found some reported a lack of training (Deniau Citation2023).

A potential reason for the lack of training is that institutions found it difficult to create their own courses (Hooper et al. Citation2018). Being an advisor can be challenging, requiring familiarity with the Code, empathy and good communication skills (National Health and Medical Research Council, Australian Research Council, and Universities Australia Citation2022). Inadequate training could lead to unresolved or exacerbated challenges for those researchers needing advice.

Authorship

Authorship was the most common issue that advisors reported engaging with in this study (83%). This reflects the findings of a previous study which found, “Undeserved authorship was perceived by all groups as the most prevalent detrimental research practice” (Malički et al. Citation2023). A systematic review of authorship issues estimated that 29% of researchers had experienced “misuse of authorship” (Marušić et al. Citation2011). A recent survey of Australian early career researchers found the most commonly reported questionable research practice was claiming of undeserved authorship (Christian, Larkins, and Doran Citation2022). Similarly, a survey of early career physicists in the US found that “Putting nonauthors on a paper” was the most encountered bad practice (Houle, Kirby, and Marder Citation2023). Authorship was also noted by the Australian Research Integrity Committee as one of the most common issues it deals with (Australian Government Citation2023).

These widespread problems could be reduced by greater enforcement by institutions of how authorship is earned (Morris Citation2010). However, authorship issues are possibly just a symptom of the current hyper-competitive research system (Rahal et al. Citation2023), as one advisor stated: “Sometimes although someone comes with one problem (e.g., authorship), when you dig you find that underlying it can be bullying or harassment or other issues.”

Related research

Previous surveys of researchers concerning research integrity may relate to the current findings. A study of US and European researchers found that 26% had no or little confidence in their organization’s ability to ensure research integrity (Allum et al. Citation2022). A survey of early career researchers in Australia reported 33 respondents who said that institutions had failed to act on a research integrity complaint (Christian, Larkins, and Doran Citation2022), and this may be related to a lack of training, visibility and clarity of research advisor roles. A survey of early career physicists in the US found that most respondents (93 out of 190) who reported inappropriate behavior were unsatisfied with the institution’s response (Houle, Kirby, and Marder Citation2023).

A recent review of integrity principles and best practices stated that it is incumbent on institutions to foster a culture of scientific integrity, and have transparent systems to report problems, which could be partly addressed by Research Integrity Advisors (Kretser et al. Citation2019).

An analysis of retractions found that, “the likelihood of retraction was lower in countries that have policies and structures to handle allegations of misconduct, particularly when such policies are legally defined or institutional” (Fanelli et al. Citation2015). Research Integrity Advisors are institutional and are designed to be a first contact for allegations of misconduct.

Recommendations

There were multiple institutions where we found it difficult to find anything about research integrity and other institutions where the contact about research integrity was a generic e-mail or generic online form. One of our e-mails was blocked by a security control and other e-mails were likely ignored or filtered as spam. We believe that all institutions conducting medical research in Australia need to show a clear commitment to research integrity with a named person as a contact, with an institutional phone number and e-mail. This information should be prominently displayed and easily findable by the public. Additionally, the names and contact details of the Research Integrity Advisors could be made publicly available. This would increase transparency and show a commitment to tackling integrity issues. The lack of information meant we could not verify if 62 institutions are compliant with the Code. Two institutions that did not have publicly available Research Integrity Advisors recommended that people outside the institution could contact a Research Integrity Advisor as a first point of contact for integrity issues, despite this being impossible as the contact details were not available.

At some institutions there was confusion between Research Integrity Officers, Research Ethics Officers, and Research Integrity Advisors. Research Integrity Officers and Research Ethics Officers are usually employed by the institution in their integrity/ethics roles and are not current researchers. Given the strong response from Research Integrity Advisors to also be champions for good practice (Agree = 85%, Figure 5), the role could be renamed to create a clearer distinction, possibly including the word “Champion” which is used in other countries (The Luxembourg Agency for Research Integrity Citation2022; University of Cambridge Citation2022; TU Delft Citation2022). A renaming might become necessary if the new independent body of Research Integrity Australia is created (Chubb Citation2023), as having the same initialism will likely cause confusion.

One role for a national integrity body like Research Integrity Australia could be to create an online platform for Research Integrity Advisors to discuss their work and/or provide training. It could also create a national website explaining the role of research integrity advisors, and raise awareness of their purpose. A national research integrity website is being developed in the Netherlands to provide examples of scientific misconduct (Siegerink et al. Citation2023).

A study of advisors in France found that better communication about their role was needed (Deniau 2023), and in our study most researchers (67%) agreed with the statement that there should be more internal advertising about their role. This quote supports the need for awareness raising: “Most people at the university don’t know what a RIA is, or the support we might provide.”

An interesting issue was raised by an advisor by e-mail, who questioned what happens when integrity issues are raised about researchers who have two affiliations, as it would be unclear which institution’s advisors should be contacted. The risk with these cases is that both institutions take no action, as they assume the other institution is taking the lead. Hence specific guidance on this issue would be appropriate, especially as a relatively large number of Australian researchers work across institutions.

Limitations

We explored the advisors’ opinions and did not collect actual data on the advice given or the outcomes of any follow-up investigations. One question that some advisors found difficult to answer was about whether they had generally been helpful to those seeking advice, as there was no follow-up and the people seeking their help were often “very polite” (e-mail from RIA).

Our results could be skewed by non-response bias. We probably heard less from advisors who are infrequently contacted. We received some e-mails from advisors saying they did not feel it was helpful to complete the questions as they had not dealt with any issues. We also received e-mails from advisors who were unaware that they were listed as advisors. Hence it is likely that we heard from a more engaged group, and the true workload for Research Integrity Advisors may be smaller than our estimates. There could also be bias in the other direction, with advisors who were overwhelmed being less willing to answer our questions.

We missed our target response rate of 50%. One Research Integrity Advisor contacted our team about the study and wanted to be involved, as their institution had not passed on our invitation. It is likely that other advisors would have been willing to take part but were unaware of the study because their institution did not respond to our approach or did not pass on the information. The response percentage was much higher when our team approached advisors directly (46%) than when we relied on the institution to pass on our approach (17%).

We focused on institutions conducting health and medical research and so missed two institutions conducting wholly non-medical research that should have been included.

Our target population was advisors. A specific survey would be needed to gather the opinions of researchers who have solicited advice.

Our study does not reveal whether Research Integrity Advisors are effective in reducing the number of integrity breaches.

This study could serve as a useful baseline to ascertain if any changes occur over time and/or after changes in national policy.

Acknowledgments

Thanks to the Research Integrity Advisors who gave initial feedback on the questions and our colleagues who pilot-tested the online questionnaire. Thanks to Dr Glenn Begley for their ideas in designing the questions and comments on the paper. Thanks to Mark Hooper for their insightful comments on the first draft.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data that support the findings of this study are openly available in Zenodo at https://doi.org/10.5281/zenodo.7839212, reference number v1.0.

Supplemental data

Supplemental data for this article can be accessed online at https://doi.org/10.1080/08989621.2023.2239532

References

- Aarhus University. 2022. Advisers on the Responsible Conduct of Research and Freedom of Research. https://tinyurl.com/2p8zzrt6.

- Allum, N., A. Reid, M. Bidoglia, G. Gaskell, N. Aubert Bonn, I. Buljan, S. Fuglsang, S. Horbach, P. Kavouras, A. Marušić, and N. Mejlgaard. 2022. Researchers on Research Integrity: A Survey of European and American Researchers. https://doi.org/10.31222/osf.io/fgy7c.

- Anderson, W. P., C. D. Cordner, and K. J. Breen. 2006. “Strengthening Australia’s Framework for Research Oversight.” Medical Journal of Australia 184 (6): 261–263. https://doi.org/10.5694/j.1326-5377.2006.tb00232.x.

- Australian Government. 2023. Australian Research Integrity Committee Annual Report to the Sector, 2021–22. https://tinyurl.com/4bm6zjxp.

- Barnett, A. 2023. “R Code and Data for Integrity Advisors Study.” https://doi.org/10.5281/zenodo.8000902.

- Barnett, A., and D. Borg. 2022. A Census of Research Integrity Advisors in Australia. https://doi.org/10.17605/OSF.IO/SPTCG.

- Bouter, L. 2023. “Why Research Integrity Matters and How It Can Be Improved.” Accountability in Research: 1–10. https://doi.org/10.1080/08989621.2023.2189010.

- Christian, K., J.-A. Larkins, and M. R. Doran. 2022. The Australian Academic STEMM Workplace Post-COVID: A Picture of Disarray. https://doi.org/10.1101/2022.12.06.519378.

- Chubb, I. 2023. Understanding the Research Integrity Landscape. WEHI. https://tinyurl.com/4rhhscju.

- Deniau, N. 2023. “Perceptions on the Role of Research Integrity Officers in French Medical Schools.” Accountability in Research: 1–21. https://doi.org/10.1080/08989621.2023.2173070.

- Elm, E. von, D. G. Altman, M. Egger, S. J. Pocock, P. C. Gøtzsche, and J. P. Vandenbroucke. 2007. “The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) Statement: Guidelines for Reporting Observational Studies.” PLoS Medicine 4 (10): e296. https://doi.org/10.1371/journal.pmed.0040296.

- Eysenbach, G. 2004. “Improving the Quality of Web Surveys: The Checklist for Reporting Results of Internet E-Surveys (CHERRIES).” Journal of Medical Internet Research 6 (3): e34. https://doi.org/10.2196/jmir.6.3.e34.

- Fanelli, D., R. Costas, V. Larivière, and K. B. Wray. 2015. “Misconduct Policies, Academic Culture and Career Stage, Not Gender or Pressures to Publish, Affect Scientific Integrity.” PLoS ONE 10 (6): 1–18. https://doi.org/10.1371/journal.pone.0127556.

- Hooper, M., V. Barbour, A. Walsh, S. Bradbury, and J. Jacobs. 2018. “Designing Integrated Research Integrity Training: Authorship, Publication, and Peer Review.” Research Integrity and Peer Review 3 (1). https://doi.org/10.1186/s41073-018-0046-2.

- Houle, F. A., K. P. Kirby, and M. P. Marder. 2023. “Ethics in Physics: The Need for Culture Change.” Physics Today 76 (1): 28–35. https://doi.org/10.1063/pt.3.5156.

- Kretser, A., D. Murphy, S. Bertuzzi, T. Abraham, D. B. Allison, K. J. Boor, J. Dwyer, A. Grantham, L. J. Harris, R. Hollander, et al. 2019. “Scientific Integrity Principles and Best Practices: Recommendations from a Scientific Integrity Consortium.” Science and Engineering Ethics 25 (2): 327–355. https://doi.org/10.1007/s11948-019-00094-3.

- The Luxembourg Agency for Research Integrity. 2022. LARI Peer Coaching. https://lari.lu/lari-services/lari-peer-coaching/.

- Malički, M., I. Jan Aalbersberg, L. Bouter, A. Mulligan, G. Ter Riet, and F. Naudet. 2023. “Transparency in Conducting and Reporting Research: A Survey of Authors, Reviewers, and Editors Across Scholarly Disciplines.” PLoS ONE 18 (3): 1–13. https://doi.org/10.1371/journal.pone.0270054.

- Marušić, A., L. Bošnjak, A. Jerončić, and T. Jefferson. 2011. “A Systematic Review of Research on the Meaning, Ethics and Practices of Authorship Across Scholarly Disciplines.” PLoS ONE 6 (9): 1–1. https://doi.org/10.1371/journal.pone.0023477.

- Mejlgaard, N., L. M. Bouter, G. Gaskell, P. Kavouras, N. Allum, A.-K. Bendtsen, C. A. Charitidis, N. Claesen, K. Dierickx, A. Domaradzka, et al. 2020. “Research Integrity: Nine Ways to Move from Talk to Walk.” Nature 586 (7829): 358–360. https://doi.org/10.1038/d41586-020-02847-8.

- Morris, S. E. 2010. “Cracking the Code: Assessing Institutional Compliance with the Australian Code for the Responsible Conduct of Research.” Australian Universities’ Review 52 (2): 18–26.

- National Health and Medical Research Council. 1997. Joint NHMRC/AVCC Statement and Guidelines on Research Practice. Canberra, ACT, Australia. https://tinyurl.com/58wamh27.

- National Health and Medical Research Council. 2020. 2019 Survey of Research Culture in Australian NHMRC-Funded Institutions. Canberra. https://tinyurl.com/4zx7kwhv.

- National Health and Medical Research Council. 2022a. NHMRC Approved Administering Institutions. Canberra. https://tinyurl.com/y7h673w4.

- National Health and Medical Research Council. 2022b. Outcomes of Funding Rounds. Canberra. https://www.nhmrc.gov.au/funding/data-research/outcomes.

- National Health and Medical Research Council, Australian Research Council, and Universities Australia. 2007. Australian Code for the Responsible Conduct of Research, R39, Canberra. https://tinyurl.com/2p899esv.

- National Health and Medical Research Council, Australian Research Council, and Universities Australia. 2022. Research Integrity Advisors: A Guide Supporting the Australian Code for the Responsible Conduct of Research, NH197, Canberra. https://www.nhmrc.gov.au/file/18189/download?token=ICGN4pDn.

- National Health and Medical Research Council, the Australian Research Council, and Universities Australia. 2018. Australian Code for the Responsible Conduct of Research, R41, Canberra. https://tinyurl.com/3de23r82.

- Qualtrics. 2023. Qualtrics. Provo, Utah: Qualtrics. https://www.qualtrics.com.

- Rahal, R.-M., S. Fiedler, A. Adetula, R. P.-A. Berntsson, U. Dirnagl, G. B. Feld, C. J. Fiebach, S. A. Himi, A. J. Horner, T. B. Lonsdorf, et al. 2023. “Quality Research Needs Good Working Conditions.” Nature Human Behaviour 7 (2): 164–167. https://doi.org/10.1038/s41562-022-01508-2.

- R Core Team. 2023. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing. https://www.R-project.org/.

- Roje, R., A. Reyes Elizondo, W. Kaltenbrunner, I. Buljan, and A. Marušić. 2022. “Factors Influencing the Promotion and Implementation of Research Integrity in Research Performing and Research Funding Organizations: A Scoping Review.” Accountability in Research: 1–39. https://doi.org/10.1080/08989621.2022.2073819.

- Siegerink, B., L. A. Pet, F. R. Rosendaal, and M. Y. H. G. Erkens. 2023. “The Argument for Adopting a Jurisprudence Platform for Scientific Misconduct.” Accountability in Research: 1–12. https://doi.org/10.1080/08989621.2023.2172678.

- TU Delft. 2021. Data Champions. https://tinyurl.com/2p92mhdw.

- TU Delft. 2022. Data Champions. https://tinyurl.com/2p95stt8.

- UKRIO. 2022. Good Practice in Research: Research Integrity Champions, Leads & Advisers. Technical report. https://doi.org/10.37672/ukrio.2022.01.champions.

- University of Cambridge. 2022. Data Champions. https://www.data.cam.ac.uk/intro-data-champions.