ABSTRACT

The development of metaknowledge about models (MKM) and metaknowledge about the modelling process (MKP) is important in pre-service science teachers’ (PSTs’) education. MKM refers to knowledge about the model’s entities and its purposes, while MKP refers to knowledge about the components and structure of the modelling process. Assessing MKM and MKP is crucial to foster this knowledge in PSTs’ education. However, assessment instruments focus on MKM rather than MKP, and the connection between MKM and MKP has not been examined in depth. This study validates and applies a diagram task for assessing PSTs’ MKP. For validation, ten experts were surveyed and think-aloud interviews were conducted with ten PSTs. The findings support valid interpretations of PSTs’ MKP based on the diagram task. In the main study, the diagram task was administered to 63 PSTs, along with an MKM questionnaire. PSTs’ MKP ranged from simple conceptions of modelling as a linear process including few components to sophisticated conceptions of modelling as an iterative process including multiple components. Furthermore, it was found that knowledge about using models to predict a phenomenon is an aspect connecting sophisticated MKM and MKP, indicating that this aspect is crucial to foster both PSTs’ MKM and MKP.

Introduction

Modelling is the process of constructing and applying models (e.g. Giere, Citation2010; Gouvea & Passmore, Citation2017). As it is a signature practice of sciences (Lehrer & Schauble, Citation2015), modelling is addressed in science standards and curricula guidelines in many countries (e.g. KMK, Citation2019; NRC, Citation2012). In science education, modelling has been conceptualised as a competence (e.g. Chiu & Lin, Citation2019; Nielsen & Nielsen, Citation2021). Modelling competence comprises the abilities to generate new knowledge by constructing and applying models, to reflect on models with reference to their purpose, and to reflect on the modelling process (Nicolaou & Constantinou, Citation2014; Upmeier zu Belzen et al., Citation2021). Therefore, modelling competence refers to reflected engagement in modelling practices such as ‘constructing models’ or ‘using models to predict phenomena’ (Nielsen & Nielsen, Citation2021; Schwarz et al., Citation2009; Upmeier zu Belzen et al., 2021). It is assumed that these practices are guided by domain-general metaknowledge about models and metaknowledge about the modelling process (Chiu & Lin, Citation2019; Justi & van Driel, Citation2005; Nielsen & Nielsen, Citation2021). Based on their theoretical definitions, metaknowledge about models (MKM) and metaknowledge about the modelling process (MKP) focus on different facets of metaknowledge: MKM is defined as knowledge about a model’s entities and purposes as well as its stability over time (Justi & van Driel, Citation2005; Nicolaou & Constantinou, Citation2014; Schwarz & White, Citation2005). Thus, MKM refers to metaknowledge about the model object as a tool for sensemaking (Gouvea & Passmore, Citation2017; Rost & Knuuttila, Citation2022). In contrast, MKP is defined as knowledge about the modelling process, its components and its structure (Justi & van Driel, Citation2005; Nicolaou & Constantinou, Citation2014).

Pre-service science teachers (PSTs) need to develop their modelling competence to teach their future students appropriately (Krell & Krüger, Citation2016). They should also develop their MKM and MKP, as this knowledge impacts their model-related teaching in the classroom (Harlow et al., Citation2013; Krell & Krüger, Citation2016; Vo et al., Citation2015). Therefore, supporting MKM and MKP is an important aspect of PSTs’ education (KMK, Citation2019).

The development of assessments is an integral part of science education research because it helps to operationalise and communicate constructs (Opitz et al., Citation2017; Osborne, Citation2014). To assess PSTs’ MKM and MKP, instruments have been developed, mostly based on rating scales, multiple choice questions, and open-ended questionnaires (e.g. Justi & van Driel, Citation2005; Krell & Krüger, Citation2016; van Driel & Verloop, Citation2002). However, in recent reviews, scholars report that most diagnostic instruments focus on MKM rather than MKP (Mathesius & Krell, Citation2019; Nicolaou & Constantinou, Citation2014). Only a few studies focus on investigating students’ or teachers’ MKP explicitly, usually using open-ended questions and examining knowledge concerning certain aspects of the modelling process (Lazenby et al., Citation2020; Sins et al., Citation2009). To the best of our knowledge, there is no instrument focusing on the modelling process as a whole when assessing MKP. To address this gap, we developed a task that could expose PSTs’ MKP by requiring them to draw a process diagram of their conceptions about the scientific modelling process. Since assessing MKP with the diagram task is not an established method, we explored the extent to which the diagram task’s results enabled valid inferences of PSTs’ MKP. Furthermore, we investigated the extent to which the PSTs’ MKP was related to their MKM. Hence, in this study, we provide an instrument for explicitly assessing MKP as well as first insights about how PSTs’ MKM and MKP are related. This will contribute to our understanding of PSTs’ metaknowledge about models and modelling and to the development of instructional strategies fostering it.

Frameworks for modelling in science education

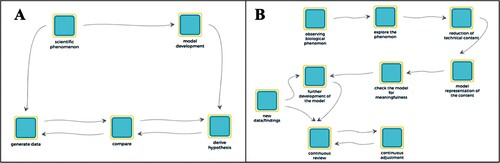

In the process of modelling, models are constructed and applied by an agent for a specific purpose (Giere, Citation2010; Mahr, Citation2009). Modelling in science education has two purposes: it can be used to communicate content knowledge or to investigate phenomena in inquiry (Giere, Citation2010; Gouvea & Passmore, Citation2017). In this study, we refer to modelling for inquiry, where modelling is used for generating knowledge about an unknown phenomenon by proposing explanations or predictions (Gouvea & Passmore, Citation2017; Passmore et al., Citation2014; Schwarz et al., Citation2022). Several frameworks have been developed to structure the scientific modelling process in science education. Gilbert and Justi (Citation2016) propose the modelling diagram framework that structures the modelling process into ‘creation’, ‘expression’, and ‘test and evaluation of models’. The learning progression from Schwarz et al. (Citation2009) describes four practices in the modelling process: ‘constructing models’, ‘using models to explain and predict’, ‘evaluating models’ and ‘revising models’. As a theoretical basis to assess MKP in this study, we applied the framework for modelling in scientific inquiry (Göhner et al., Citation2022; Upmeier zu Belzen et al., 2021), as illustrated in Figure 1. This framework structures the modelling process into the stages of model construction and model application. It provides four components belonging either to the experiential world, in which the modeller directly interacts with the phenomenon, or to the model world, in which data from the experiential world is integrated into the model (Giere, Bickle, & Mauldin, Citation2006). In explore the phenomenon, the modeller engages with the phenomenon and collects data through observation without focusing on explicit hypotheses. In develop the model, the modeller links the collected data to prior knowledge and integrates it into a novel or existing model. In predict with the model, the modeller derives hypotheses. In test the model with data, the modeller tests the model-derived hypothesis with data generated by scientific inquiry methods (Figure 1).

Figure 1. Framework for modelling in scientific inquiry (adapted from Göhner et al., Citation2022 and Upmeier zu Belzen et al., 2021)

The described frameworks (Justi & Gilbert, Citation2002; Schwarz et al., Citation2009; Upmeier zu Belzen et al., Citation2021) propose similar components for modelling and share the view that modelling is a cyclic and iterative process. The differences between the frameworks are due to the different perspectives on modelling that they focus on. Furthermore, the frameworks were constructed with different aims to structure the modelling process for science education. Gilbert and Justi’s (Citation2016) and Schwarz et al.’s (2009) frameworks refer to both perspectives of modelling (i.e. inquiry and representation). They aim to provide guidance for modelling-based teaching. Our framework focuses on modelling for inquiry. It was constructed to examine what learners know about and how they engage in modelling for inquiry (Göhner et al., Citation2022; Upmeier zu Belzen et al., 2021). Since it explicitly focuses on assessing knowledge and practices involved in modelling for inquiry (Göhner et al., Citation2022; Upmeier zu Belzen et al., Citation2021), we decided to apply our framework as the theoretical basis for assessing PSTs’ MKP in this study. By referring to the framework of modelling for scientific inquiry (Figure 1), we conceptualise modelling as an iterative process consisting of the components explore the phenomenon, develop the model, predict with the model, and test with data.

Metaknowledge about models (MKM) and metaknowledge about the modelling process (MKP)

Metaknowledge about models and metaknowledge about the modelling process have been established as domain-general knowledge (Justi & van Driel, Citation2005; Schwarz & White, Citation2005; White & Frederiksen, Citation1998). Schwarz and White (Citation2005) proposed five types of knowledge for what they described as metamodelling knowledge: ‘Nature of Models’, ‘Evaluation of Models’, ‘Purpose or Utility of Models’, ‘Utility of Multiple Models’, and ‘Nature or Process of Modelling’.

While the first four types focus on knowledge about the model object as a tool for sensemaking, ‘Nature or Process of Modelling’ refers to knowledge about the modelling process. By referring to these seminal studies (Justi & van Driel, Citation2005; Schwarz & White, Citation2005; White & Frederiksen, Citation1998), recent frameworks for modelling competence argue that engagement in the modelling practice is guided by metaknowledge about models (MKM) and metaknowledge about the modelling process (MKP; Chiu & Lin, Citation2019; Nicolaou & Constantinou, Citation2014; Nielsen & Nielsen, Citation2021).

MKM was defined as knowledge about ‘what a model is, the use to which it can be put, the entities of which it consists, its stability over time’ (Justi & van Driel, Citation2005, p. 555). This connects to the definition of MKM as ‘the epistemological awareness about the nature and the purpose of models’ (Nicolaou & Constantinou, Citation2014, p. 53).

MKP was defined as knowledge about the ‘steps to be followed in the modelling process and factors on which the modelling process depends’ (Justi & van Driel, Citation2005, p. 555). Thus, MKP includes not only the knowledge about the modelling process components (Footnote 1) but also the knowledge about how and under which circumstances these components are being followed. The latter we describe as knowledge about the structure of the modelling process. Based on this, and in reference to our framework of modelling for scientific inquiry, we conceptualise MKP as knowledge about the components of explore the phenomenon, develop the model, predict with the model, and test with data, and knowledge about the structure of engaging with them in modelling processes.

Theoretical definitions of MKM and MKP suggest that these aspects share some common basis. For example, the stability of models over time belongs to MKM, since it refers to knowledge about the model object itself as a changeable entity used for sensemaking. But it also refers to MKP, since it refers to knowledge about the factors on which the modelling process depends, such as that models are revised if model-derived hypotheses have been falsified.

Although there is some overlap between the constructs of MKM and MKP, we argue that they differ in the central aspects of metaknowledge that are addressed: While MKM addresses aspects of the model object as a tool for sensemaking, MKP addresses which components are involved in modelling and how the modelling process is structured. We argue that assessing MKM requires different assessment instruments with other instructional prompts than assessing MKP: While assessment instruments for MKM should prompt the participants to describe different aspects of the model object as a tool for sensemaking, instruments for MKP should prompt participants to describe the modelling process as a whole in order to identify whether they know about the components and structure of modelling processes.

Preservice science teachers’ MKM and MKP

The importance of developing and diagnosing metaknowledge has been supported by the assumption that it ‘guides the practice’ (Schwarz et al., Citation2009, p. 635). Several studies found that in- and pre-service science teachers’ MKM and MKP are limited (e.g. Cheng et al., Citation2021; Göhner et al., Citation2022; van Driel & Verloop, Citation2002). Recent studies did not find correlations between in- and pre-service science teachers’ MKM and their engagement in modelling practices (e.g. Cheng et al., Citation2021; Göhner et al., Citation2022), concluding that the role of metaknowledge for engaging in modelling practices and, thus, its role for modelling competence is questionable. Not finding a relationship between teachers’ metaknowledge and their engagement in modelling practices might be explained by a lack of focus on MKP in related studies. For example, Cheng et al. (Citation2021) and Cheng and Lin (Citation2015) operationalised metaknowledge by implementing the instrument of understanding models in science (Treagust et al., Citation2002), and Göhner et al. (2022) used an open-ended questionnaire assessing knowledge of models in five aspects (Krell & Krüger, Citation2016). Both instruments focus on models rather than on the modelling process and, thus, on MKM rather than on MKP. Engagement in modelling practices in these related studies was conceptualised as constructing and applying models to explain and predict a phenomenon (Cheng et al., Citation2021; Cheng & Lin, Citation2015; Göhner et al., Citation2022). We argue that MKP, as knowledge about the components and the structure of modelling processes, might be more closely related to engagement in the modelling practice than MKM. This assumption can be tested in studies with an instrument that focuses on the assessment of MKP. Some studies (e.g. Lazenby et al., Citation2020; Sins et al., Citation2009; van Driel & Verloop, Citation2002) use instruments that assess knowledge about certain aspects of the modelling process, but not the process as a whole. For instance, Lazenby et al. (Citation2020) investigated chemistry undergraduate students’ MKP, focusing on model construction by asking the question ‘How do you think models are developed?’ (Lazenby et al., Citation2020, p. 806). Based on this literature, we recognise a research gap in assessing PSTs’ MKP with an instrument that assesses the knowledge about the process holistically.

Research questions

The goal of this study is to characterise PSTs’ MKP and to examine its relation to MKM by applying a diagram task. Since this task has not been established yet, we set out to explore the validity of interpreting the diagram task’s results (the produced diagrams) regarding PSTs’ MKP.

Our research questions (RQ) are:

RQ1: To what extent do the diagram task results provide a valid interpretation of PSTs’ MKP?

RQ2: What characterises PSTs’ MKP, as reflected in the diagram task?

RQ3: To what extent is PSTs’ MKM related to their MKP?

Methods

To answer our research questions, we (a) developed a diagram task for assessing MKP and conducted studies examining whether the diagram task’s results allow valid interpretations regarding PSTs’ MKP (RQ1), and (b) implemented the task in a study with PSTs while also examining their MKM with a questionnaire that was used for operationalising MKM in previous studies (e.g. Krell & Krüger, Citation2016; RQ2 and RQ3).

The MKP diagram task

Visual representations, such as diagrams, are effective learning and evaluation tools in science education (Bobek & Tversky, Citation2016). Therefore, we designed a diagram task, rather than a textual task, to examine PSTs’ MKP. We aimed to develop a task that participants could perform remotely on an online platform.

Instruction

The task instruction is to create a diagram representing the modelling process in scientific inquiry. In our studies, these diagrams were drawn on a blank digital canvas using the online open-access platform SageModeler (Footnote 2; Bielik, Damelin, & Krajcik, Citation2019), which allows PSTs to draw and label nodes and connect them with arrows. Before drawing the diagram, participants were encouraged to watch a short video (1:42 min.) that explained how to draw a process diagram in SageModeler. This video is embedded in SageModeler, and participants were able to watch it again while drawing their diagram.

Coding scheme

A coding scheme for evaluating PSTs’ MKP was developed in a discursive process by referring to the framework of modelling for scientific inquiry (Figure 1) and the definition of MKP (adapted from Justi & van Driel, Citation2005). According to this definition, not only the knowledge about the modelling process components needed to be considered but also the knowledge about the process structure, that is, the extent to which cycles of model construction and revision are considered part of the modelling process. Therefore, the analysis of the drawn diagrams included a component score and a structure score. The component score indicates to what extent PSTs addressed the four major components provided by the framework of modelling for scientific inquiry (Figure 1) when reflecting on the modelling process. The structure score indicates to what extent PSTs conceptualise modelling as an iterative process of constructing and revising models.

The four components suggested by our framework of modelling for scientific inquiry included sub-elements that are crucial for modelling (Table 1). The component score was determined by counting how many components were addressed in the diagram, ranging from 0 (none of the components was addressed) to 4 (all components were addressed). A component was counted as addressed if at least one of its related sub-elements was found in the diagram.
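The counting rule for the component score can be sketched as follows. This is a minimal illustration only: the sub-element labels are hypothetical placeholders standing in for the sub-elements listed in Table 1, and the function name `component_score` is our own.

```python
# Hypothetical sub-element labels per component (placeholders, not the
# actual sub-elements of Table 1).
COMPONENT_SUB_ELEMENTS = {
    "explore the phenomenon": {"observe phenomenon", "collect data"},
    "develop the model": {"construct model", "revise model"},
    "predict with the model": {"derive hypothesis"},
    "test with data": {"compare hypothesis with data"},
}


def component_score(coded_sub_elements):
    """Count the components for which at least one sub-element was coded.

    Returns an integer from 0 (no component addressed) to 4 (all addressed).
    """
    found = set(coded_sub_elements)
    return sum(1 for subs in COMPONENT_SUB_ELEMENTS.values() if subs & found)


# A diagram in which two components are addressed
print(component_score(["construct model", "derive hypothesis"]))
```

Because a single sub-element is sufficient, a diagram that names several sub-elements of the same component still contributes only one point for that component.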

Table 1. Descriptions of the components and referring sub-elements used for coding.

To identify PSTs’ knowledge about the structure of modelling processes, each diagram was given a structure score. Since there is no universal structure for the modelling process (Göhner et al., Citation2022; Passmore et al., Citation2014; Schwarz et al., Citation2022), we decided not to refer to an idealised sequence. Instead, we focused on iterative cycles of data collection linked to model revision for scoring the diagram structures. Studies have shown that model revision is a crucial part of engaging in the modelling process (Justi & van Driel, Citation2005; Lucas et al., Citation2022). Thus, diagrams displaying several activities of data collection that are linked to cycles of model revision indicate more sophisticated knowledge about the structure of modelling processes. This was considered in the coding scheme as the structure score, ranging from 1 to 3 (Table 2).

Table 2. Coding of the modelling process structure.

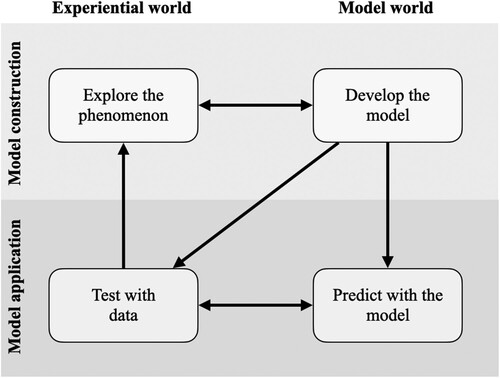

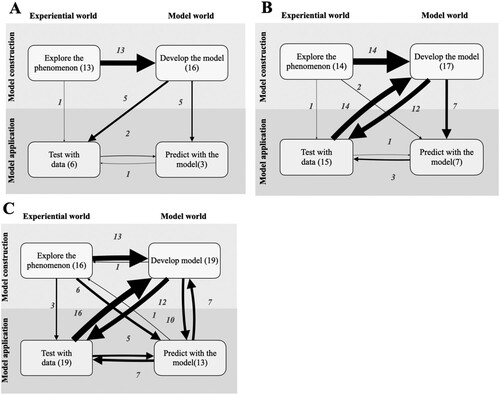

For example, Figure 2(A) presents the diagram drawn by Participant A. This diagram was given a component score of 4 since it addressed all modelling components: explore the phenomenon (‘scientific phenomenon’ node), develop the model (‘model development’ node), predict with the model (‘derive hypothesis’ node) and test with data (‘generate data’ node connected to the ‘derive hypothesis’ node via the ‘compare’ node). Although Participant A indicated that a model-derived hypothesis needs to be compared with data (test with data), the diagram did not display information on how to continue if the data contradict the hypothesis. Thus, this diagram did not include any model revision and received the lowest structure score of 1. In another example, the diagram drawn by Participant B (Figure 2(B)) was given a component score of 3. It did not include the component predict with the model, but it included the components explore the phenomenon (‘observing biological phenomenon’ and ‘explore the phenomenon’ nodes), develop the model (‘model representation of the content’ node), and test with data (‘check the model for meaningfulness’, ‘continuous review’, and ‘continuous adjustment’ nodes). The diagram received the highest structure score of 3 since it included an iterative cycle of model revision and data collection.

Validation of the MKP diagram task (RQ1)

Assessing MKP using diagrams is a novel method. Therefore, we conducted two validation studies referring to established sources of validity evidence, which are test content and response processes (AERA et al., Citation2014; Cook et al., Citation2014).

Validation study referring to test content

According to AERA et al. (Citation2014), test content as a source of validity evidence refers to ‘the themes, wording, and format of the items, tasks or questions on a test’ (AERA et al., Citation2014, p. 14), and evidence can come from ‘expert judgments between parts of the test and the construct’ (AERA et al., Citation2014, p. 14). In this study, 10 experts were asked in a written online survey to judge the appropriateness of the diagram task. The experts were science education researchers (3 PhD students, 5 postdocs and 2 professors) at different German and Austrian universities with extensive experience in research and teaching about models and modelling.

Following the completion of the diagram task, the experts reflected on the fit between the theoretical construct of MKP and the diagram task by responding to two statements on a five-point rating scale (1: fully disagree, 2: mostly disagree, 3: partly agree, 4: mostly agree, 5: fully agree). The first statement was: The diagram task assesses PSTs’ knowledge about modelling process components. The second statement was: The diagram task assesses PSTs’ knowledge about modelling process’s structure. In addition, the experts were asked to explain their decisions and to provide additional feedback, both in written form.

Responses to the rating scale were analysed quantitatively by calculating the means and standard deviations of the responses to both statements. Explanations of agreement decisions and additional feedback were analysed qualitatively by summarising similar comments.

Validation study referring to response processes

To obtain validity evidence based on response processes, ‘the detailed nature of the performance or response actually engaged in by the test takers’ (AERA et al., Citation2014, p. 13) needs to be analysed. Therefore, we conducted think-aloud interviews (Ericsson & Simon, Citation1980) with 10 pre-service biology teachers enrolled as M.Ed. students at a German university. Due to the pandemic situation in Germany during the data collection period (October and November 2022), interviews were conducted online with a video conference system. Since think-aloud is a method to verbalise cognitive processes (Ericsson & Simon, Citation1980), it is a source to examine participants’ response processes to tasks (Cook et al., Citation2014; Hubley & Zumbo, Citation2017; Leighton, Citation2017).

At first, the participating PSTs were asked to write down how they conceptualise the modelling process in the scientific discipline of biology. Afterwards, participants were engaged in a warm-up think-aloud task before responding to the diagram task, as suggested in the literature (Ericsson & Simon, Citation1980; Leighton, Citation2007). Interviews were recorded and transcribed verbatim. Written descriptions of the modelling process and links to generated diagrams were collected with an online tool for questionnaires (SoSci Survey, Leiner, Citation2019).

All three descriptions of the modelling process (the written response, the diagrams, and the think-aloud transcripts) were co-analysed by two coders using the developed coding schemes (Tables 1 and 2). In this process, each of the three artefacts was scored separately for each participant. As a result, every participant received three scores for their MKP. The comparison of these scores provides evidence to discuss the appropriateness of assessing MKP with diagrams. To test the usability of SageModeler for the diagram task, participants responded to a usability scale (Bangor et al., Citation2009; Brooke, Citation1996).

Investigation of PSTs’ MKP and its relation to MKM (RQ2 and RQ3)

Sample

Our main study addressing research questions 2 and 3 included a separate sample of 63 pre-service biology teachers from two German universities. All PSTs participated voluntarily and answered the MKP diagram task and an MKM questionnaire at the beginning of the second lesson of a master course dealing with inquiry in biology classrooms. Similar to the think-aloud interviews, the data collection was conducted online in a synchronous format. Eleven of the 63 participants were excluded from the analysis since they did not answer the open-ended questionnaire (n = 2), did not draw a diagram describing their idea of the modelling process (n = 5), or did not share a link to their diagram (n = 4). Therefore, a sample of 52 participants who responded to both instruments was analysed.

Assessment and analysis of MKP

The diagram task was used to assess PSTs’ MKP in this study. Links to the created diagrams were collected by using SoSci Survey. Diagrams were analysed with the developed coding scheme, and this analysis was evaluated for its objectivity and reliability. Regarding objectivity, all 52 diagrams were analysed by two independent coders (inter-coder agreement: K = .76 for the component score and K = .80 for the structure score; both ‘substantial’ according to Landis & Koch, Citation1977; K was calculated in reference to Brennan & Prediger, Citation1981). Diagrams that were not coded consistently were further discussed by the two coders until a full consensus was reached. To evaluate reliability, a random subsample of 13 diagrams was coded again by one of the coders. Three months passed between the initial and second coding. A ‘substantial’ reliability score (intra-coder agreement) was reached (K = .90 for the component score and K = .77 for the structure score).
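For readers unfamiliar with the agreement coefficient of Brennan and Prediger (1981), the following minimal sketch shows how it can be computed. It corrects the observed agreement for a fixed chance level of 1/q, where q is the number of available categories. The example codes are invented for illustration and are not data from this study; the function name is our own.

```python
def brennan_prediger_kappa(codes_a, codes_b, n_categories):
    """Chance-corrected agreement with a fixed chance level of 1 / n_categories."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must rate the same non-empty set of diagrams.")
    # Proportion of diagrams that both coders scored identically
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / len(codes_a)
    chance = 1 / n_categories
    return (observed - chance) / (1 - chance)


# Invented structure scores (three categories: 1, 2, 3) from two coders
coder_1 = [1, 2, 3, 3]
coder_2 = [1, 2, 3, 1]
print(brennan_prediger_kappa(coder_1, coder_2, n_categories=3))  # 0.625
```

Under this sketch, the structure score would be treated as having three categories and the component score as having five (0 to 4); this category count is our assumption about how the coefficient was applied.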

To further explore how PSTs sequenced the modelling process components, we analysed how nodes that represented the components were connected through arrows. Thus, each of the 12 possible connections between the four components was counted for each diagram collaboratively by the two coders in a discursive process, after inter- and intra-coder measures were performed. As an example, in Figure 2(A), connections were found from explore the phenomenon to develop the model (arrow from ‘scientific phenomenon’ to ‘model development’), from develop the model to predict with the model (arrow from ‘model development’ to ‘derive hypothesis’), as well as between predict with the model and test with data in both directions (arrows going back and forth between ‘derive hypothesis’, ‘compare’ and ‘generating data’).
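The number of 12 possible connections follows from counting every ordered pair of distinct components (4 × 3). A minimal sketch of this tally is given below; it assumes that each diagram has already been reduced by the coders to a list of directed component-to-component connections, and the short component labels and function name are our own.

```python
from collections import Counter
from itertools import permutations

COMPONENTS = ("explore", "develop", "predict", "test")
# Every ordered pair of distinct components: 4 * 3 = 12 directed connections
POSSIBLE_CONNECTIONS = list(permutations(COMPONENTS, 2))


def count_connections(diagrams):
    """Count, per directed connection, the number of diagrams containing it."""
    counts = Counter({pair: 0 for pair in POSSIBLE_CONNECTIONS})
    for connections in diagrams:
        # set() ensures a connection is counted at most once per diagram
        for pair in set(connections):
            if pair in counts:
                counts[pair] += 1
    return counts


# Connections coded for the diagram of Participant A as described above
participant_a = [
    ("explore", "develop"),
    ("develop", "predict"),
    ("predict", "test"),
    ("test", "predict"),
]
tally = count_connections([participant_a])
print(len(POSSIBLE_CONNECTIONS), tally[("predict", "test")], tally[("test", "develop")])
```

Intermediate nodes such as ‘compare’ do not appear in the tally because they are resolved to the component they belong to during coding.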

Assessment and analysis of MKM

MKM as knowledge about ‘what a model is, the use to which it can be put, the entities of which it consists, its stability over time’ (Justi & van Driel, Citation2005, p. 555) was operationalised with an open-ended questionnaire (Krell & Krüger, Citation2016), that assesses five aspects of MKM: Nature of Models, Alternative Models, Purpose of Models, Testing Models and Changing Models. MKM of these five aspects was assessed in three levels (Krell & Krüger, Citation2016; Upmeier zu Belzen et al., 2021): Whereas models are viewed as objects to describe the phenomenon in level I, models are understood as idealised representations to communicate about a phenomenon in level II. Level III perspectives are characterised by considering models as research tools conveying theoretical ideas and plausible explanations for so far unexplained phenomena as well as predicting phenomena.

The questionnaire was also implemented in SoSci Survey, requiring the participants to answer the questionnaire after completing the diagram task. The questionnaire consisted of five open-ended questions, each directly addressing one of the five aspects Nature of Models, Alternative Models, Purpose of Models, Testing Models and Changing Models. The written responses to the questions were analysed with a coding scheme (Krell & Krüger, Citation2016) by two independent coders. Thus, the coders evaluated the PSTs’ achieved level for each of the five aspects. A substantial inter-coder agreement was found (ranging from K = .62 for Purpose of Models to K = .86 for Changing Models; interpretation based on Landis & Koch, Citation1977). Differences between the two coders were discussed to reach a consensus coding.

Relation between MKM and MKP

Correlations between PSTs’ diagram scores and achieved levels in the open-ended questionnaire were calculated to examine the relation between these instruments and to gain insights into the relation between PSTs’ MKM and MKP. Since not all data were normally distributed (see results), the Spearman correlation coefficient was calculated (Field, Citation2013). Furthermore, a Welch t-test (Field, Citation2013) was used to test whether PSTs who addressed specific components in their diagrams achieved higher levels in the open-ended questionnaire than participants who did not address these components.
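Both statistics can be sketched with the Python standard library alone, as shown below. This is an illustrative sketch rather than the analysis script used in the study: the function names are our own, the example values are invented, and p-values are omitted because they require the t distribution (available, for instance, in SciPy via `scipy.stats.spearmanr` and `scipy.stats.ttest_ind` with `equal_var=False`).

```python
from math import sqrt
from statistics import mean, variance


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the average ranks.

    Undefined (division by zero) if one variable is constant.
    """
    return pearson(average_ranks(x), average_ranks(y))


def welch_t(group_a, group_b):
    """Welch t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(group_a) / len(group_a)
    vb = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(group_a) - 1) + vb ** 2 / (len(group_b) - 1))
    return t, df


# Invented MKM levels for PSTs who did / did not address a given component
addressed = [3, 3, 2, 3]
not_addressed = [1, 2, 2, 1]
print(welch_t(addressed, not_addressed))
```

The Welch variant does not assume equal variances in the two groups, which is why the degrees of freedom are generally not an integer.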

Results

RQ1: validation of the MKP diagram task

Validation of the test content

Referring to the five-point rating scale, experts moderately agreed that the diagram task assesses knowledge about the modelling process components and structure (components: M = 3.6, SD = 0.52; structure: M = 3.5, SD = 0.85). None of the experts disagreed with the statement that the diagram task assesses knowledge about the modelling process components. However, none of the experts fully agreed with this statement (n = 6 mostly agree; n = 4 partly agree). As a justification for not fully agreeing with this statement, experts referred to the openness of the task: varying levels of detail in the diagrams make it difficult to evaluate knowledge, and PSTs might just guess words that sound scientific to them, such as ‘phenomenon’ or ‘hypothesis’, which may not indicate higher knowledge.

One expert partially disagreed with the statement that the diagram task assesses knowledge about the structure of the modelling process, stating that connections indicated through the diagram arrows can be randomly guessed. Other experts who partly agreed (n = 4), mostly agreed (n = 4) or fully agreed (n = 1) with this statement also mentioned that, to better assess PSTs’ knowledge about the structure of modelling processes, PSTs should elaborate more on their diagrams, i.e. in a reflective text about the diagram or by being asked to label the arrows. As general feedback, three experts raised the question of whether a contextualisation of the task would be necessary.
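The reported means and standard deviations can be reproduced from the response frequencies given above. The sketch below assumes that ‘partially disagreed’ corresponds to scale point 2 and that the sample standard deviation (n − 1 in the denominator) was reported; both are our assumptions, and the helper name `expand` is our own.

```python
from statistics import mean, stdev


def expand(frequencies):
    """Turn {rating: number of experts} into a flat list of ratings."""
    return [rating for rating, n in frequencies.items() for _ in range(n)]


# Frequencies as reported in the text (rating scale from 1 to 5)
components = expand({4: 6, 3: 4})
structure = expand({2: 1, 3: 4, 4: 4, 5: 1})

print(round(mean(components), 2), round(stdev(components), 2))  # 3.6 0.52
print(round(mean(structure), 2), round(stdev(structure), 2))    # 3.5 0.85
```

Under these assumptions, the recomputed values agree with the statistics reported for both statements.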

Validation of the response processes

In the think-aloud interviews, all 10 PSTs reflected on the modelling process on a meta-level by considering the components of modelling processes and how they were connected. Component and structure scores of PSTs’ think-aloud protocols matched the scores of their diagrams in all cases (see supplementary file).

However, the diagram scores differed from the scores of the written descriptions of the modelling process: two of the 10 participants achieved higher component scores in the written descriptions than in the diagrams because they mentioned either model development or model testing in the written description but did not address it in the diagram. Two other participants received higher scores on the diagrams than on the written descriptions. Both addressed the component explore the phenomenon in their diagrams but not in their written descriptions. Regarding the structure score, three participants received lower scores in the written descriptions than in the diagrams. None of the PSTs achieved a higher structure score in the written descriptions compared to their diagrams (see supplementary data).

Issues with SageModeler were raised at least once in every interview. These mostly referred to difficulties in labelling the nodes and arrows as well as the question of how to connect the nodes with arrows. However, eight participants were able to solve these issues quickly. Two other participants needed assistance from the interviewer. All participants reported that they had no (n = 7) or only very little (n = 3) experience with the SageModeler software. However, the usability of the software for the task was rated as ‘good’ (M = 78.8, SD = 16.30; maximum 100 possible points in the usability scale, Bangor et al., Citation2009).
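To illustrate how a score on a 100-point usability scale can arise, the following minimal sketch assumes that the usability scale is the ten-item System Usability Scale (Brooke, Citation1996) with its standard scoring rule. The function name and the example responses are hypothetical and are not taken from the study data.

```python
def sus_score(responses):
    """Return a usability score (0-100) from ten responses on a 1-5 scale.

    Assumption: standard System Usability Scale scoring, in which
    odd-numbered items are positively worded and even-numbered items
    are negatively worded.
    """
    if len(responses) != 10:
        raise ValueError("the scale requires exactly ten item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must lie between 1 and 5")

    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9: contribution is the response minus 1
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10: contribution is 5 minus the response
            total += 5 - response
    # The summed contributions (0-40) are rescaled to 0-100
    return total * 2.5


# Hypothetical participant, not study data
example_responses = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example_responses))  # 75.0
```

The reported mean of 78.8 would then be the average of such individual scores across the ten interviewed PSTs.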

RQ2: PSTs’ MKP

PSTs averaged a component score of 3.04 (SD = 0.87) out of a possible 4, and most of the participants (38 of 52) addressed at least three out of the four major modelling process components in their diagrams. Eighteen participants addressed all four components. The component develop the model was addressed by all 52 participants, and most participants also displayed explore the phenomenon (43 of 52) and test with data (40 of 52). The diagrams differed most with regard to the component predict with the model, which was addressed in only 23 of the 52 diagrams.
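The reported mean component score can be cross-checked against the component frequencies. The following minimal sketch assumes that each addressed component contributes exactly one point to the component score; the variable names are illustrative.

```python
# Number of diagrams in the main study
N = 52

# How many diagrams addressed each component (values from the text)
component_counts = {
    "explore the phenomenon": 43,
    "develop the model": 52,
    "predict with the model": 23,
    "test with data": 40,
}

# Assumption: one point per addressed component, so the total number of
# points in the sample equals the sum of the component frequencies.
mean_component_score = sum(component_counts.values()) / N

# Share of diagrams addressing each component, in percent
share_percent = {
    name: round(100 * count / N, 1) for name, count in component_counts.items()
}

print(round(mean_component_score, 2))           # 3.04
print(share_percent["predict with the model"])  # 44.2
```

Under this assumption, the frequencies reproduce the reported mean of 3.04.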

The mean structure score of the sample was 2.06 (SD = 0.83) with a possible highest score of 3. The structure scores were almost equally distributed: 16 participants achieved a score of 1, 17 scored 2, and 19 scored 3.
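As a plausibility check, the mean and standard deviation can be recomputed from the reported score distribution. This is a minimal sketch that assumes the reported SD is the sample standard deviation (denominator n − 1); the variable names are illustrative.

```python
from statistics import mean, stdev

# Number of participants per structure score (values from the text)
score_counts = {1: 16, 2: 17, 3: 19}

# Expand the frequency table into one score per participant
scores = [score for score, n in score_counts.items() for _ in range(n)]

n_participants = len(scores)
mean_score = round(mean(scores), 2)
# stdev() computes the sample standard deviation (n - 1 in the denominator)
sd_score = round(stdev(scores), 2)

print(n_participants, mean_score, sd_score)  # 52 2.06 0.83
```

The recomputed values agree with the reported M = 2.06 and SD = 0.83.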

A strong positive correlation between structure score and component score was found in the sample (Cohen, Citation1988; r = .53, p < .001), indicating that PSTs with higher structure scores are likely to address more components in their diagrams – or vice versa. This is further illustrated by how frequently each component was mentioned at each structure score (Figure 3). This figure shows the sum of each component and the connections between components for each structure score. PSTs that did not include any model revision in their diagrams (structure score 1, Figure 3(a)) mentioned mostly components of model construction (explore the phenomenon and develop the model), and only rarely components of model application (predict with the model and test with data). In these diagrams, only a few connections between the examined components were found, mostly linking explore the phenomenon to develop the model. With higher structure scores, the frequency of model application components (predict with the model and test with data) as well as the number of connections between components increased. In structure scores 2 and 3 (Figure 3(b) and 3(c), respectively), bi-directional connections between develop the model and test with data were found. In structure score 2 diagrams, connections from develop the model to predict with the model were frequently found, while in structure score 3, bi-directional connections between these components were found. The component predict with the model was addressed most frequently in structure score 3 diagrams.

Figure 3. Frequency of modelling process components and connections between components grouped by the structure scores PSTs achieved in their diagrams (A. PSTs that achieved structure score 1, B. PSTs that achieved structure score 2, C. PSTs that achieved structure score 3). Numbers in brackets indicate the number of participants addressing this component in their diagrams; the thickness of the component box frames corresponds to these numbers. The numbers on the arrows between the component boxes indicate how many participants connected one component with another component in their diagrams. The thickness of the arrows corresponds to these numbers.

RQ2: relation of PSTs’ MKP with their MKM

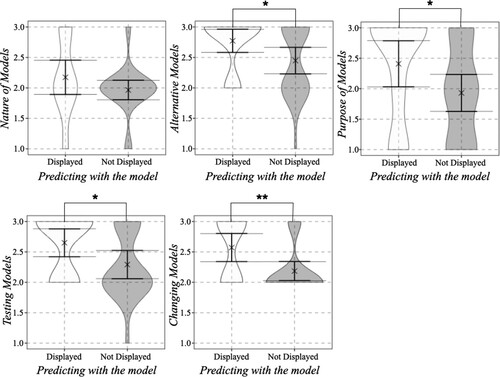

To address the second research question regarding the relation between PSTs’ MKM and MKP, participants’ answers to the open-ended questionnaire were analysed qualitatively. Overall, 38% of the answers were assigned to the highest level III. Level III answers were most frequent for the aspect alternative models and least frequent for the aspect nature of models (Table 3).

Table 3. Distribution of levels I, II and III in the five aspects of the MKM open-ended questionnaire (N = 52).

Moderate correlations (r = .37, p < .01; Cohen, Citation1988) were found between overall scores of the diagram task (mean of component and structure score) and overall achieved levels of the open-ended questions (mean of achieved levels over all five aspects). In more detail, moderate correlations were found between the questionnaire aspect purpose of models and the diagram component score (r = .30, p < .05; Cohen, Citation1988) and between the questionnaire aspect testing models and the diagram structure score (r = .33, p < .05; Cohen, Citation1988), but not for any other relationship between MKP diagram scores and MKM questionnaire aspects (Table 4).
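The verbal labels attached to the correlation coefficients follow Cohen’s (Citation1988) conventional benchmarks for r (.10 small, .30 medium, .50 large). The following minimal sketch applies these benchmarks to the coefficients reported in this section; the function name and the label ‘negligible’ for values below .10 are illustrative assumptions.

```python
def cohen_label(r):
    """Classify a correlation coefficient by Cohen's (1988) benchmarks."""
    size = abs(r)  # the benchmarks refer to the magnitude, not the sign
    if size >= 0.50:
        return "large"
    if size >= 0.30:
        return "medium"
    if size >= 0.10:
        return "small"
    return "negligible"  # hypothetical label; Cohen gives no name here


# Coefficients reported in this section
reported = {
    "structure score ~ component score": 0.53,
    "MKP overall ~ MKM overall": 0.37,
    "purpose of models ~ component score": 0.30,
    "testing models ~ structure score": 0.33,
}

labels = {pair: cohen_label(r) for pair, r in reported.items()}
for pair, label in labels.items():
    print(f"{pair}: {label}")
```

Under these benchmarks, the correlation between the two diagram scores counts as large, and the three correlations between MKP and MKM measures count as medium.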

Table 4. Correlations between MKP diagram task (component score and structure score) and aspects of the MKM questionnaire (nature of models, alternative models, purpose of models, testing models, and changing models).

As mentioned above, predict with the model was the only component that was found in less than half of the diagrams (23 out of 52 diagrams, 44.2%). Therefore, the relation between displaying this component in the diagrams and the answers to the open-ended questionnaire was examined in more detail. PSTs addressing the predict with the model component in their diagrams achieved significantly higher levels in 4 out of the 5 aspects of the MKM questionnaire compared to PSTs not addressing this component (Figure 4).

Figure 4. Scoring of the aspects of open-ended questionnaire depending on whether the component predict with the model was displayed in the diagram or not (N = 52 diagrams, 23 displayed predicting with the model). Significant differences between groups in cases where mean value of one group (![]()

Discussion

Validation of the MKP diagram task (RQ1)

The overall agreement of science education experts that the diagram task appropriately assesses knowledge about components and structures of the modelling process can be interpreted as supporting validity evidence in reference to ‘test content’ (AERA et al., Citation2014; Cook et al., Citation2014). However, experts addressed several important limitations. Some of these limitations, like the problem of scoring different levels of detail, are addressed in our coding scheme (i.e. through scoring a component if at least one of its related sub-elements was found in the diagrams). Other limitations, such as that PSTs might guess words that sound scientific to them or that they connect components randomly, are general limitations of diagnosing knowledge. Such guessing is even more likely to happen in closed-ended task formats like multiple choice items (e.g. Birenbaum & Tatsuoka, Citation1987) and could be reduced by having participants elaborate on their diagrams, for example by asking them to label the arrows.

The think-aloud interviews were conducted to generate validity evidence regarding the response processes of the task (AERA et al., Citation2014; Cook et al., Citation2014). The findings indicate that all ten participants reflected on the modelling process on a meta-level when answering the task, which can be interpreted as an argument to support valid interpretations of PSTs’ MKP using the diagram task. Another supporting argument can be derived from the equal scores of the think-aloud protocols and the diagrams, which indicates that the diagrams represent the MKP that the PSTs had in mind when drawing their diagrams.

Three out of ten participants achieved higher structure scores in the diagrams than in the written descriptions. This suggests that the diagram format is more appropriate for describing cycles of model revision than a written format, which would favour the diagram task over a written format. On the other hand, two out of ten PSTs received higher component scores in the written description of the modelling process than in their diagram. This means that these participants were not able to represent the modelling process components they knew about in the diagram task, limiting the valid interpretation of PSTs’ MKP based on diagram task scores.

Taken together, these differences add to findings from other scholars indicating that the format of assessment influences learners’ responses (e.g. Bobek & Tversky, Citation2016) and the interpretation of their knowledge (e.g. Ropohl et al., Citation2015; Walpuski & Ropohl, Citation2011).

Additionally, it is possible that participants’ responses were influenced by a potential learning effect from completing the writing task before the diagram task. Participants’ knowledge may have been activated by first elaborating on the modelling process in writing. This could have allowed them to process their ideas more thoroughly in the diagram task, resulting in more sophisticated knowledge being presented in their diagrams. This may also explain why some participants achieved higher structure scores (n = 3) or component scores (n = 2) in the diagrams than in the written task.

Although all participants in the think-aloud study encountered some difficulties when using SageModeler to draw diagrams, they were able to solve most issues quickly. The fact that these technical problems were resolved quickly is an argument supporting the usability of SageModeler for this task. This is consistent with the finding that the usability of SageModeler for the diagram task was rated as ‘good’ by the participants (interpretation of usability based on Bangor et al., Citation2009 and Brooke, Citation1996).

We conclude that the overall expert agreement and PSTs’ explicit reflections on MKP in the think-aloud interviews support using the diagram task to investigate PSTs’ decontextualised MKP. However, some of the limitations found can be addressed in future studies. For example, further elaboration within the diagrams can be achieved by asking the participants to label the arrows. On the other hand, the think-aloud interviews showed that participants had problems in labelling the arrows in SageModeler. Tools originally built for concept mapping, such as CoMapEd (Mühling, Citation2014), could be used alternatively in future studies since they require participants to label the arrows.

Pre-service science teachers’ MKP (RQ2)

In this study, we examined PSTs’ MKP, which has been rarely investigated (Lazenby et al., Citation2020; Mathesius & Krell, 2019; Nicolaou & Constantinou, Citation2014). In our study, PSTs’ MKP is operationalised as their knowledge about the modelling process components and the extent to which they understand modelling as an iterative process (adapted from Justi & van Driel, Citation2005; Nielsen & Nielsen, Citation2021). PSTs describing modelling as a linear process without model revision in their diagram (structure score 1) were more likely to include the components that belong to model construction (explore the phenomenon and develop the model) and rarely the components belonging to model application (predict with the model and test with data). All PSTs that described modelling as an iterative process including several cycles of model revision (structure score 3) at least addressed the component test with data that belongs to the stage of model application (Giere et al., Citation2006; Halloun, Citation2007; Upmeier zu Belzen et al., Citation2021). In addition, considering the high correlation between the component and structure scores, these results suggest that knowledge about the model application components, that is, predict with the model and test with data, relates to PSTs’ ability to think about modelling as an iterative process for scientific inquiry. Thus, we assume that knowledge about model application components enables PSTs to conceptualise modelling as an iterative process that involves cycles of model revision. Such a conception reflects sophisticated knowledge of the modelling process as an authentic scientific endeavour (Van Der Valk et al., Citation2007).

In our study, all PSTs addressed the component of develop the model. This matches the results of Vo et al. (Citation2015) reporting in their multiple case study with six in-service elementary school teachers that all of them referred to the idea that models are constructed when describing their conception of the modelling process. Furthermore, Vo et al. (Citation2015) stated that only half of the participating teachers (three out of six) reflected on deeper epistemic conceptions of modelling, such as the role of data for testing models in the modelling process. Similar findings were also reported by Lazenby et al. (Citation2020), who found that undergraduate chemistry students gave less consideration to the role of data when reflecting on modelling processes. In our study, the role of data was considered more often as 40 out of 52 PSTs addressed the component test with data in their MKP diagrams. An explanation for this difference can be found in the different sample of this study compared to Lazenby’s: PSTs in their master's studies at a German university in this study versus undergraduate chemistry students in Lazenby’s study. In Germany, developing MKM and MKP is an explicit goal in teacher education (KMK, Citation2019). Thus, it is likely that this knowledge has been addressed in the previous courses of our study's participants.

In contrast to the other components, predict with the model was considered in less than 50% of the diagrams. Thus, knowledge about this component can be identified as an area with learning potential for the PSTs in our sample. This connects to results of other studies indicating that pre- as well as in-service teachers frequently do not elaborate on models as tools for predicting phenomena (Cheng et al., Citation2021; Göhner et al., Citation2022; Justi & Gilbert, Citation2002; Justi & van Driel, Citation2005; Krell & Krüger, Citation2016).

Relation between pre-service science teachers’ MKM and MKP

Since most studies examining modelling knowledge focused on MKM rather than MKP (Mathesius & Krell, Citation2019; Nicolaou & Constantinou, Citation2014), the relation between MKM and MKP is unclear. In our study, we found moderate correlations between MKM operationalised by the questionnaire and MKP operationalised by the diagram task, indicating that there may be a positive relation between MKM and MKP. Lazenby et al. (Citation2020) argued that the MKM aspects ‘nature and purpose of models [are] as deeply intertwined and inseparable from other constructs’ (Lazenby et al., Citation2020, p. 805). Our results support this assumption for the aspect purpose of models, as a moderate positive correlation between the purpose of models and the knowledge about the modelling process components (component score) was found. Explaining this correlation may suggest how MKM-related knowledge regarding purpose of models is connected to MKP-related knowledge about the modelling process components: In our study, diagrams that received high component scores were the ones addressing the component predict with the model. Level III for purpose of models was coded when participants reflected on using a model to find a novel explanation for a phenomenon or using models to derive a hypothesis. The latter connects to the component predict with the model in the diagram task. Based on this, we conclude that the level III score in the MKM open-ended questionnaire regarding the aspect purpose of models and the scoring for the MKP component predict with the model both demonstrate a shared understanding of using models as predictive tools.
This is further illustrated by the results in Figure 4: PSTs addressing the component predict with the model in their diagrams achieved significantly higher levels for four out of five aspects of the open-ended questionnaire (except for nature of models), which indicates that the knowledge about using models to predict phenomena may be related not only to sophisticated MKP but also to sophisticated MKM. Scholars have argued that it is important in scientific inquiry to use models and the modelling process for generating predictions about phenomena (e.g. Giere et al., Citation2006). Therefore, and in line with this study's results, knowing that models and the modelling process can be used to predict phenomena could play a central role in fostering MKM and MKP. Further studies can provide in-depth details regarding the extent to which this metaknowledge can guide learners in using models for predicting, as this is one practice in the framework of learning progressions for modelling proposed by Schwarz et al. (Citation2009).

The MKP structure score and the MKM aspect of testing models were also moderately correlated. Higher structure scores were coded when diagrams displayed iterative cycles of model revision. As suggested in the literature, model revision results from tests with data (Giere, Citation2010; Lucas et al., Citation2022). This can explain our finding of correlations between achieved levels for the aspect testing models in the open-ended questionnaire and the diagrams’ structure score. In this regard, other studies also emphasise the importance of testing models as a key aspect of developing metaknowledge about models and modelling (Krell & Krüger, Citation2016; Lee & Kim, Citation2014).

No correlation was found between the diagram structure score and the aspect changing models of the open-ended questionnaire. The aspect changing models is about reflecting on model revision. This correlation was expected since referring to model revision in the diagram was the explicit criterion to achieve high structure scores. Not finding any correlation might be explained by the different prompts of the instruments:

The diagram task, which the PSTs performed first, provided them with a blank canvas without explicit prompting for model revision. However, the following question referring to changing models explicitly prompted PSTs to explain why models are changed (models’ stability over time, Justi & van Driel, Citation2005). We interpreted this as a potential advantage of the diagram task in being open and not explicitly prompting PSTs to pre-consider model revision or any other aspects of the modelling process.

Limitations and outlook

One limitation of this study is that our results did not show the entire spectrum of MKM and MKP, especially for low levels of knowledge. Thirty-eight out of the 52 participants addressed at least three out of four components in their diagrams, and level I reflections of the aspects alternative models, testing models and changing models were rarely found in the data from the MKM questionnaire. This can be explained by the fact that the sample included PSTs in master courses, since modelling knowledge was shown to increase over the course of university studies (Upmeier zu Belzen et al., Citation2021). A larger and more heterogeneous sample is required to further elaborate on different levels of MKP and to examine connections between learners’ MKM and MKP. Further studies addressing other sources of validity evidence, such as internal structure or relations to other variables (AERA et al., Citation2014; Cook et al., Citation2014), are needed to examine the validity of interpretations of learners’ MKP based on diagram task results. For instance, future studies might implement the diagram task with PSTs from other disciplines, in-service teachers, and school students.

As raised by three of our surveyed experts, the lack of a concrete biological context can be seen as another limitation of our diagram task. Since studies on middle school, high school and undergraduate students indicate that their MKM and MKP are context-dependent (Al-Balushi, Citation2011; Lazenby et al., Citation2019; Treagust et al., Citation2002), assessing MKM and MKP with decontextualised instruments is criticised (Schwarz et al., Citation2022). Studies that assessed decontextualised MKM frequently mention decontextualisation as a limitation (Cheng et al., Citation2021; Göhner et al., Citation2022; Lazenby et al., Citation2019). In support of the usefulness of the diagram task as a tool for examining PSTs’ decontextualised MKP, studies with in-service science teachers indicate that domain-general metaknowledge about models and modelling, assessed with decontextualised instruments, is connected to teachers’ modelling activities in the classroom (e.g. Harlow et al., Citation2013; Vo et al., Citation2015). Although these studies focussed mostly on MKM rather than MKP, the development of metaknowledge about models and modelling, that is, of both MKM and MKP, is an explicit goal in teacher education (KMK, Citation2019). To foster and evaluate this knowledge, decontextualised tests are needed, because contextualised instruments risk measuring only context-related content knowledge and thus might fail to indicate metaknowledge. In addition, decontextualised instruments for assessing modelling knowledge are needed for basic research in modelling competence, for example, to investigate how metaknowledge ‘guides the practice’ (Schwarz et al., Citation2009, p. 635). Recent studies investigating this assumption did not find a correlation between MKM and engagement in modelling practices (Cheng et al., Citation2021; Cheng & Lin, Citation2015; Göhner et al., Citation2022).
As a next step, we plan to use the diagram task to examine the relationship between MKP and the engagement of students in the modelling practice.

Conclusions

In this paper, we investigated to what extent the results of a diagram task allow valid interpretations of PSTs’ MKP, how MKP can be characterised and how it is connected to MKM.

Referring to test content as a source of validity evidence (e.g. AERA et al., Citation2014; Cook et al., Citation2014), experts’ evaluation that the diagram task addresses MKP aspects supports the idea of using the diagram task to evaluate PSTs’ MKP. This idea is also supported by our examination of PSTs’ response processes as another source of validity (e.g. Hubley & Zumbo, Citation2017; Leighton, Citation2017) as they explicitly reflected on the modelling process when answering the diagram task in think-aloud interviews.

PSTs’ MKP ranged from conceptions that describe modelling as a linear process of model construction to conceptions that describe modelling as an iterative process of constructing and applying models. The latter represents sophisticated knowledge about the modelling process as an authentic scientific endeavour (Van Der Valk et al., Citation2007).

By operationalising MKP with the diagram task and MKM with an established open-ended questionnaire, we identified that knowledge about using models to predict phenomena bridged PSTs’ sophisticated MKM and MKP. Matching results from other studies (e.g. Cheng et al., Citation2021; Justi & Gilbert, Citation2002), this knowledge was also found to be weakly developed in our study’s PSTs.

Scholars have argued that MKM and MKP can be developed by allowing learners to engage in modelling practices and reflecting on them (e.g. Schwarz et al., Citation2022; Vo et al., Citation2015). Thus, we propose that engaging in and reflecting on the practice of using models to predict phenomena can contribute to fostering the adoption of sophisticated metaknowledge about models and the modelling process among PSTs.

Ethics statement

In accordance with local legislation and institutional requirements, our study did not require the approval of an ethics committee because the research did not pose any threats or risks to the participants, and it was not associated with high physical or emotional stress. Nevertheless, we strictly followed ethical guidelines as well as the Declaration of Helsinki. Before taking part in our study, all participants were informed about its objectives, absolute voluntariness of participation, the possibility of dropping out of participation at any time, guaranteed protection of data privacy (collection of only anonymised data), no-risk character of study participation, and contact information in case of any questions or problems.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 We use the term ‘components’ instead of ‘steps’, since ‘steps’ indicate a linear step by step conception of modelling which does not represent our presented theoretical approach of an iterative modelling process.

2 Link to the instructions for the diagram task in SageModeler is available here.

References

- Al-Balushi, S. M. (2011). Students’ evaluation of the credibility of scientific models that represent natural entities and phenomena. International Journal of Science and Mathematics Education, 9(3), 571–601. https://doi.org/10.1007/s10763-010-9209-4

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (Eds.). (2014). Standards for educational and psychological testing. American Educational Research Association.

- Bangor, A., Kortum, P., & Miller, J. (2009). Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of Usability Studies, 4(3), 114–123.

- Bielik, T., Damelin, D., & Krajcik, J. S. (2019). Shifting the balance: Engaging students in using a modeling tool to learn about ocean acidification. EURASIA Journal of Mathematics, Science and Technology Education, 15(1). http://doi.org/10.29333/ejmste/99512

- Birenbaum, M., & Tatsuoka, K. K. (1987). Open-Ended versus multiple-choice response formats—It does make a difference for diagnostic purposes. Applied Psychological Measurement, 11(4), 385–395. https://doi.org/10.1177/014662168701100404

- Bobek, E., & Tversky, B. (2016). Creating visual explanations improves learning. Cognitive Research: Principles and Implications, 1(1), 1–14. https://doi.org/10.1186/s41235-016-0031-6

- Brennan, R. L., & Prediger, D. J. (1981). Coefficient kappa: Some uses, misuses, and alternatives. Educational and Psychological Measurement, 41(3), 687–699. https://doi.org/10.1177/001316448104100307

- Brooke, J. (1996). SUS: A “quick and dirty” usability scale. In Usability evaluation in industry (pp. 207–212). CRC Press.

- Cheng, M.-F., & Lin, J.-L. (2015). Investigating the relationship between students’ views of scientific models and their development of models. International Journal of Science Education, 37(15), 2453–2475. https://doi.org/10.1080/09500693.2015.1082671

- Cheng, M.-F., Wu, T.-Y., & Lin, S.-F. (2021). Investigating the relationship between views of scientific models and modeling practice. Research in Science Education, 51(1), 307–323. https://doi.org/10.1007/s11165-019-09880-2

- Chiu, M.-H., & Lin, J.-W. (2019). Modeling competence in science education. Disciplinary and Interdisciplinary Science Education Research, 1(1), 12. https://doi.org/10.1186/s43031-019-0012-y

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). L. Erlbaum.

- Cook, D. A., Zendejas, B., Hamstra, S. J., Hatala, R., & Brydges, R. (2014). What counts as validity evidence? Examples and prevalence in a systematic review of simulation-based assessment. Advances in Health Sciences Education, 19(2), 233–250. https://doi.org/10.1007/s10459-013-9458-4

- Ericsson, K. A., & Simon, H. A. (1980). Verbal reports as data. Psychological Review, 87(3), 215–251. https://doi.org/10.1037/0033-295X.87.3.215

- Field, A. (2013). Discovering statistics using IBM SPSS statistics (4th ed.). SAGE Publications. https://books.google.de/books?id=srb0a9fmMEoC

- Giere, R. (2010). An agent-based conception of models and scientific representation. Synthese, 172(2), 269. https://doi.org/10.1007/s11229-009-9506-z

- Giere, R., Bickle, J., & Mauldin, R. (2006). Understanding scientific reasoning. Thomson/Wadsworth.

- Gilbert, J. K., & Justi, R. S. (2016). Approaches to modelling-based teaching. In J. K. Gilbert (Ed.), Modelling-based teaching in science education. Springer International Publishing.

- Göhner, M. F., Bielik, T., & Krell, M. (2022). Investigating the dimensions of modeling competence among preservice science teachers: Meta‐modeling knowledge, modeling practice, and modeling product. Journal of Research in Science Teaching, 59(8), 1354–1387. http://doi.org/10.1002/tea.v59.8

- Gouvea, J., & Passmore, C. (2017). ‘Models of’ versus ‘models for’. Science & Education, 26, 49–63. https://doi.org/10.1007/s11191-017-9884-4

- Greve, W., & Wentura, D. (1997). Wissenschaftliche Beobachtung. Eine Einführung [Scientific observation. An introduction]. Beltz.

- Halloun, I. A. (2007). Mediated modeling in science education. Science & Education, 16(7-8), 653–697. http://doi.org/10.1007/s11191-006-9004-3

- Harlow, D. B., Bianchini, J. A., Swanson, L. H., & Dwyer, H. A. (2013). Potential teachers’ appropriate and inappropriate application of pedagogical resources in a model-based physics course: A “knowledge in pieces” perspective on teacher learning. Journal of Research in Science Teaching, 50(9), 1098–1126. https://doi.org/10.1002/tea.21108

- Hubley, A. M., & Zumbo, B. D. (2017). Response processes in the context of validity: Setting the stage. In B. D. Zumbo, & A. M. Hubley (Eds.), Understanding and investigating response processes in validation research (pp. 1–12). Springer International Publishing. https://doi.org/10.1007/978-3-319-56129-5_1

- Justi, R. S., & Gilbert, J. K. (2002). Modelling, teachers’ views on the nature of modelling, and implications for the education of modellers. International Journal of Science Education, 24(4), 369–387. https://doi.org/10.1080/09500690110110142

- Justi, R. S., & van Driel, J. (2005). The development of science teachers’ knowledge on models and modelling: Promoting, characterizing, and understanding the process. International Journal of Science Education, 27(5), 549–573. https://doi.org/10.1080/0950069042000323773

- KMK. (2019). Standards für die Lehrerbildung [Standards for teacher education]. Luchterhand.

- Krell, M., & Krüger, D. (2016). Testing models: A key aspect to promote teaching activities related to models and modelling in biology lessons? Journal of Biological Education, 50(2), 160–173. http://doi.org/10.1080/00219266.2015.1028570

- Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174. https://doi.org/10.2307/2529310

- Lazenby, K., Rupp, C. A., Brandriet, A., Mauger-Sonnek, K., & Becker, N. M. (2019). Undergraduate chemistry students’ conceptualization of models in general chemistry. Journal of Chemical Education, 96(3), 455–468. https://doi.org/10.1021/acs.jchemed.8b00813

- Lazenby, K., Stricker, A., Brandriet, A., Rupp, C. A., Mauger-Sonnek, K., & Becker, N. M. (2020). Mapping undergraduate chemistry students’ epistemic ideas about models and modeling. Journal of Research in Science Teaching, 57(5), 794–824. https://doi.org/10.1002/tea.21614

- Lee, S., & Kim, H.-B. (2014). Exploring secondary students’ epistemological features depending on the evaluation levels of the group model on blood circulation. Science & Education, 23(5), 1075–1099. https://doi.org/10.1007/s11191-013-9639-9

- Lehrer, R., & Schauble, L. (2015). The development of scientific thinking. In R. M. Lerner, L. S. Liben, & U. Mueller (Eds.), Handbook of child psychology and developmental science: Cognitive processes (7th ed., Vol. 2, pp. 671–714). John Wiley.

- Leighton, J. P. (2017). Collecting and analyzing verbal response process data in the service of interpretive and validity argument. In K. Ercikan, & J. W. Pellegrino (Eds.), Validation of score meaning for the next generation of assessments (pp. 25–38). Routledge.

- Leiner, D. J. (2019). SoSci Survey (Version 3.1.06). www.soscisurvey.de

- Loftus, G. R. (1993). A picture is worth a thousand p values: On the irrelevance of hypothesis testing in the microcomputer age. Behavior Research Methods, Instruments, & Computers, 25(2), 250–256. https://doi.org/10.3758/BF03204506

- Lucas, L., Helikar, T., & Dauer, J. (2022). Revision as an essential step in modeling to support predicting, observing, and explaining cellular respiration system dynamics. International Journal of Science Education, 44(13), 2152–2179. https://doi.org/10.1080/09500693.2022.2114815

- Mahr, B. (2009). Information science and the logic of models. Software & Systems Modeling, 8(3), 365–383. https://doi.org/10.1007/s10270-009-0119-2

- Mathesius, S., & Krell, M. (2019). Assessing modeling competence with questionnaires. In A. Upmeier zu Belzen, D. Krüger, & J. van Driel (Eds.), Towards a competence-based view on models and modeling in science education (pp. 117–129). Springer International Publishing.

- Mühling, A. (2014). CoMapEd. https://www.comaped.de/

- Nicolaou, C. T., & Constantinou, C. P. (2014). Assessment of the modeling competence: A systematic review and synthesis of empirical research. Educational Research Review, 13, 52–73. https://doi.org/10.1016/j.edurev.2014.10.001

- Nielsen, S. S., & Nielsen, J. A. (2021). A competence-oriented approach to models and modelling in lower secondary science education: Practices and rationales among Danish teachers. Research in Science Education, 51(2), 565–593. https://doi.org/10.1007/s11165-019-09900-1

- NRC. (2012). A framework for K-12 science education. The National Academies Press.

- Oh, P. S. (2019). Features of modeling-based abductive reasoning as a disciplinary practice of inquiry in earth science. Science & Education, 28(6), 731–757. https://doi.org/10.1007/s11191-019-00058-w

- Opitz, A., Heene, M., & Fischer, F. (2017). Measuring scientific reasoning – a review of test instruments. Educational Research and Evaluation, 23(3–4), 78–101. https://doi.org/10.1080/13803611.2017.1338586

- Osborne, J. (2014). Teaching scientific practices: Meeting the challenge of change. Journal of Science Teacher Education, 25(2), 177–196. https://doi.org/10.1007/s10972-014-9384-1

- Passmore, C., Gouvea, J., & Giere, R. (2014). Models in science and in learning science. In M. Matthews (Ed.), International handbook of research in history, philosophy and science teaching (pp. 1171–1202). Springer.

- Ropohl, M., Walpuski, M., & Sumfleth, E. (2015). Welches Aufgabenformat ist das richtige? – Empirischer Vergleich zweier Aufgabenformate zur standardbasierten Kompetenzmessung [Which task format is the right one? An empirical comparison of two task formats for standards-based competence measurement]. Zeitschrift für Didaktik der Naturwissenschaften, 21(1), 1–15. https://doi.org/10.1007/s40573-014-0020-6

- Rost, M., & Knuuttila, T. (2022). Models as epistemic artifacts for scientific reasoning in science education research. Education Sciences, 12(4), 276. https://doi.org/10.3390/educsci12040276

- Schwarz, C. V., Ke, L., Salgado, M., & Manz, E. (2022). Beyond assessing knowledge about models and modeling: Moving toward expansive, meaningful, and equitable modeling practice. Journal of Research in Science Teaching, 59(6), 1086–1096. https://doi.org/10.1002/tea.21770

- Schwarz, C. V., Reiser, B. J., Davis, E. A., Kenyon, L., Achér, A., Fortus, D., Shwartz, Y., Hug, B., & Krajcik, J. (2009). Developing a learning progression for scientific modeling: Making scientific modeling accessible and meaningful for learners. Journal of Research in Science Teaching, 46(6), 632–654. https://doi.org/10.1002/tea.20311

- Schwarz, C. V., & White, B. Y. (2005). Metamodeling knowledge: Developing students’ understanding of scientific modeling. Cognition and Instruction, 23(2), 165–205. https://doi.org/10.1207/s1532690xci2302_1

- Sins, P. H. M., Savelsbergh, E. R., van Joolingen, W. R., & van Hout-Wolters, B. H. A. M. (2009). The relation between students’ epistemological understanding of computer models and their cognitive processing on a modelling task. International Journal of Science Education, 31(9), 1205–1229. https://doi.org/10.1080/09500690802192181

- Treagust, D., Chittleborough, G., & Mamiala, T. (2002). Students’ understanding of the role of scientific models in learning science. International Journal of Science Education, 24(4), 357–368. https://doi.org/10.1080/09500690110066485

- Upmeier zu Belzen, A., Engelschalt, P., & Krüger, D. (2021). Modeling as scientific reasoning—the role of abductive reasoning for modeling competence. Education Sciences, 11(9), 495. https://doi.org/10.3390/educsci11090495

- Van Der Valk, T., Van Driel, J. H., & De Vos, W. (2007). Common characteristics of models in present-day scientific practice. Research in Science Education, 37(4), 469–488. https://doi.org/10.1007/s11165-006-9036-3

- van Driel, J. H., & Verloop, N. (2002). Experienced teachers’ knowledge of teaching and learning of models and modelling in science education. International Journal of Science Education, 24(12), 1255–1272. https://doi.org/10.1080/09500690210126711

- Vo, T., Forbes, C. T., Zangori, L., & Schwarz, C. V. (2015). Fostering third-grade students’ use of scientific models with the water cycle: Elementary teachers’ conceptions and practices. International Journal of Science Education, 37(15), 2411–2432. https://doi.org/10.1080/09500693.2015.1080880

- Walpuski, M., & Ropohl, M. (2011). Einfluss des Testaufgabendesigns auf Schülerleistungen in Kompetenztests [Influence of test item design on student performance in competency tests]. Naturwissenschaften im Unterricht Chemie, 22, 82–86.

- White, B. Y., & Frederiksen, J. R. (1998). Inquiry, modeling, and metacognition: Making science accessible to all students. Cognition and Instruction, 16(1), 3–118. https://doi.org/10.1207/s1532690xci1601_2