ABSTRACT

A primary justification for teaching science to all young people is to enable students to become “critical consumers” of science. This worthy goal, however, is hampered by a flawed premise that school science is sufficient to develop intellectual independence. In contrast, we start from the premise that we are all epistemically dependent on the expertise of others. Hence, any science education for all must develop the capabilities to become a “competent outsider” capable of making judgements not of the science itself, but of whether the source is credible. Essential to developing such informed epistemic trust are: (1) a basic understanding of the social practices that enable the production of reliable knowledge; and (2) a familiarity with the major explanatory theories and styles of reasoning that guide the work of scientists. These elements provide the non-expert with the framework necessary to interpret and understand the work of scientists and the claims they make. We show how such an education would address three of the four primary aims of science education outlined by Rudolph (2022). To achieve this goal, a substantial reduction of existing standards to an essential, but fundamentally different, core is required, while returning significant autonomy to classroom professionals.

Introduction

‘What kinds of education, for example, could substantially improve the ability of novices to appraise expertise, and what kinds of communication intermediaries might help make the novice-expert relationship more one of justified credence than blind trust?’ (Goldman, 2001, p. 109)

Indeed, why teach science? Rudolph offers a succinct and historically grounded answer to the question, identifying four broad reasons (Rudolph, 2022). One is the need to imbue young people with scientific methods of thinking; a second is to enable them to become informed citizens and consumers of scientific knowledge for personal and public decision-making (Osborne et al., 2023). A third is the liberal argument that science represents one of the great intellectual achievements of humanity, and that students are ‘heirs to an inheritance of human achievements’ and ‘the thoughts, beliefs, ideas, understandings, procedures and tools’ which only an education in science can provide (Kind & Osborne, 2017; Oakeshott, 1965). The fourth is the utilitarian argument that scientific knowledge provides the foundation for scientific careers and ensures an enduring supply of scientists necessary to sustain a growing economy. A version of all these arguments can be found in A Framework for K-12 Science Education (National Research Council, 2012).

The case that all students should be educated in science is reliant on a mix of the first three arguments. Yet, in enacted curricula, it is the fourth goal, the career-oriented one, that has always predominated while the other goals have languished in the shadows (Banilower et al., 2013; Banilower et al., 2018; Lindsay & Otero, 2023; Mehta & Fine, 2019). In pursuit of this fourth goal, it is the practices of expert scientists that have served as a model to which science education should attend. ‘Knowing science’ – even as a citizen or consumer – has been equated with the capability to know and think like an expert scientist (Roberts, 2011).

Effectively, it is the economic imperative that school science should produce the next generation of scientific and technical personnel that has eclipsed Rudolph’s first three goals. Indeed, the economic argument has been invoked so many times over the past hundred years that it has become an unchallenged mantra of faith. Table 1 summarises a sample of the influential reports that have propagated this message.

Table 1. Sample of reports from 1918–2014 putting the case for the fourth goal of science education.

In contrast, Beyond 2000: Science Education for the Future (Millar & Osborne, 1998) developed a fuller, more coherent, and articulate vision of what an education for the non-scientist might be – one that was more closely aligned with Rudolph’s other three goals. This report was exceptional in that it led to the development of a high school curriculum (Millar, 2006) that was supported by a commercial publisher, and then fully implemented in a large minority of UK schools. As such, this curriculum provided a foundational model focused on Rudolph’s first three goals, which was compulsory for all students, and an additional module for those who wished to pursue further study of academic science. This program, however, was resisted by the political forces who saw it as a threat to the traditional form of science education, and this reform was ultimately abandoned when new standards were introduced with a change of government (Millar, this issue).

This singular focus on Rudolph’s fourth goal has been perpetuated in part by the rhetoric of a scientific community that has sought to prioritise the future supply of professional scientists, and sustain their own funding (Charette, 2013; Palmer et al., 2018; Teitelbaum, 2007). Yet only 5.5% of the current workforce in the U.S. specifically requires such technical knowledge (National Science Board, 2022). Even considering the additional 8.4% who work in Science and Engineering related professions, there remains the 86.1% (roughly 7 out of every 8) whose legitimate needs are ignored by the primary focus of standard science curricula. Thus, while the needs of the majority are acknowledged in principle, the reality is that they have been continually and unjustly neglected for the interests of a minority.

Our view is that the needs of the majority, the 86%, are even stronger and more visible today than they ever were. As Polman et al. (2014) argue:

Young people weigh the health risks in getting a tattoo, playing football after a concussion, or going to a tanning salon. On a daily basis, they decide whether to drink soda and the latest boutique sports drinks, eat fast food, or buy a bracelet that claims to boost strength. They worry about their dad who has diabetes and their grandmother fighting breast cancer. In the future, they and their family members will decide whether to try alternative medicines, new drugs, or undergo an invasive procedure for health conditions they face.

Moreover, to make informed choices when answering these and other questions we are increasingly dependent on the expertise of others – something which philosophers have called epistemic dependence (Hardwig, 1985). Being epistemically dependent requires students to recognise the limits of their own knowledge – in short, epistemic humility. A primary challenge of any education for citizenship, then, becomes reducing the gap between the expert and the non-expert, by helping to build the basis for informed trust. Students have to be educated in the capabilities necessary to evaluate specialised expertise and recognise the bounded nature of their own knowledge (Nichols, 2017; Simon, 1955) – a situation whose challenge is captured by Goldman (2001, p. 109) in our initial quote. How, then, can we help students learn who speaks for science and why they should trust the consensus of the relevant scientific experts (Oreskes, 2019)? Essentially, why should we give pre-eminence to Rudolph’s first three goals, and how should they be realised?

The neglect of the first three goals of science education persists – in part because there has been little emergent consensus around how to articulate an alternate conception of scientific literacy. Indeed, the field has seen multiple attempts, over many decades, to conceptualise ‘scientific literacy’ (DeBoer, 2000; Norris & Phillips, 2009; Roberts, 2007). No consensus has emerged, however. The consequence is that ‘scientific literacy’ has been reduced to little more than an ambiguous programmatic term – one which all can endorse, while holding conflicting conceptions of what exactly it means (Norris et al., 2014). Moreover, this multiplicity of divergent meanings has led to a failure to operationalise any of these definitions as concrete competencies or assessable outcomes in the K-12 curriculum. For example, fewer than five of the 200 performance expectations in the NGSS can even remotely be characterised as measuring the abilities required to distinguish trustworthy scientific information from misinformed or bogus claims.

In this paper we seek to salvage scientific literacy from such vagueness and lack of clarity by describing some specific outcomes we believe any set of standards and their associated curricula for all must, at a minimum, address. Core to our argument is that any science education of value, whatever else it may do, must prepare students to become competent outsiders – that is, non-experts with sufficient knowledge of, and about, science to make evaluative judgements of credibility that can warrant their trust in science-related information (Feinstein, 2011).

We begin with a critique of what exists, arguing that current standards are based on two unjustified assumptions. We then discuss the nature of the challenge: how best to educate students (even future scientists) who, as non-experts, will be epistemically dependent on experts. This leads us to define two essential curriculum components: (1) an understanding of how the social structure of science supports the production of reliable knowledge and how this knowledge is then shared; and (2) an appreciation of the overall structure of scientific knowledge and its disciplines, its foundational theories and concepts, and how scientists reason from empirical evidence. We conclude with a vision of ‘the way forward’ and how it might be enacted.

Two persistent problems with science education

Developing any vision of what should be done is dependent on identifying what is missing or neglected, and yet important. Existing curricula draw consistently on two notions that we regard as deeply problematic. Both arise from the overemphasis on educating future scientists. The first is a failure to recognise the inevitable and inescapable reliance of non-scientists (including scientists themselves when issues fall outside their own specialism) on scientific experts, and what the consequences are. The second is a belief that a school-based knowledge of science is sufficient for most to achieve intellectual independence.

Our modern culture divides its intellectual labor. Specialised knowledge is distributed across a diverse array of experts (Nichols, 2017). Our ability to know everything, and even the time to learn it, is limited. In short, our individual knowledge is bounded (Simon, 1955). We rely on others who know more than we do for a whole range of specific topics (Goldman, 2001; Hardwig, 1985). This condition of epistemic dependence is especially true for science. The non-expert simply does not have the expertise to spot experimental flaws, to recognise cherry-picked data, to detect flawed statistical analyses, to consider unstated alternative hypotheses, and more. Evaluating complex scientific evidence and explanations can only be done competently by scientific experts and not the lay public. As we cannot all be science insiders, we must learn to be competent outsiders.

Accordingly, the primary challenge for the non-expert is how to find expert sources and assess their credibility. Whose expertise can we trust (Goldman, 2001)? In the case of lawyers, doctors or plumbers, such judgements are often made on the basis of professional reputation or personal recommendation (Origgi, 2019). The case of science, however, is different. We are generally not concerned with the judgments or advice of individual scientists. Rather, scientific knowledge is established by a consensus of relevant experts. We trust the critical dialogue among them to have exposed any biases and filtered out errors.

Being a competent outsider involves trust – but not blind trust, as we have good grounds for trusting others. For example, most readers of this journal probably accept the anthropogenic basis of climate change. Few, if any (we suspect), have reviewed the vast body of evidence or the arguments and assessed it all for themselves. Even the authors of the authoritative report of the Intergovernmental Panel on Climate Change (IPCC, 2021) have not done that. Rather, the IPCC members trust each other’s conclusions. They (and we) trust their expertise. They (and we) trust that the claims have been peer reviewed – namely, that each claim has been vetted by fellow experts. As a consequence, we are justified in believing that the findings represent the consensus of relevant experts. In addition, we trust the specific news media (as responsible gatekeepers) to report that consensus faithfully. So, while we rely on trust, it is, nevertheless, informed trust.

An internet that provides instant access to a universe of information leads many to wonder, ‘Why do I need expertise when I have access to the evidence myself?’ (Lynch, 2016). Indeed, many a website invites us to ‘do your own research’ (DYOR) (Ballantyne et al., 2022). While seductive, this appeal invites us to use capabilities we mostly do not have. In other words, we all too readily succumb to epistemic hubris: a belief that we know more than we actually do. Much better would be to adopt a posture of epistemic humility: recognising the limits of our knowledge and competence (Norris, 1997).

The faith in our epistemic capabilities is sustained by the second assumption of most science education – the implicit myth of intellectual independence. This is the belief that a school-based knowledge of science is sufficient to evaluate the evidence for scientific arguments on our own. It is argued, for instance, that the knowledge developed by school science will enable students to ‘generate and evaluate scientific evidence and explanations’; ‘understand the nature and development of scientific knowledge’; and ‘participate productively in scientific practices and discourse’ (National Research Council, 2007) and thus become a ‘critical consumer of scientific information’ (National Research Council, 2012, p. 9). Essentially, the NGSS, and so many others like them, take for granted an assumption that a commonly shared, career-oriented curriculum can also achieve the other three goals of science education. As a consequence, students are presented with a landscape of science populated with such features as Ohm’s Law, the Krebs cycle, redox reactions, benzene rings, diffraction and refraction, molar equivalents, mitosis, meiosis, gas laws, frictionless motion and many more landmarks. For most students, this is an experience of a ‘tortuous technical highway to specialization’ (French, 1952) which results in little more than a ‘miscellany of facts’ (Cohen, 1952) whose value is never appreciated, and which most abandon at the first available opportunity (Osborne et al., 2003; Potvin & Hasni, 2014). Moreover, even with this grounding, intellectual independence is simply an enduring chimera.

For example, those who believe in the sufficiency of their own intellectual capabilities can easily be deceived by plausible (but incomplete) arguments. For instance: are N-95 masks useless for controlling infections by COVID-19 because the virus particles (which are commonly 0.1 µm) are much smaller than the holes (which are commonly 5 µm or more)? Such an argument would suggest that they are a very poor sieve and not effective against infection. What reason would the school-trained student have to doubt it? The reason that masks are effective, however, is clear to the specialist. They know that the viruses are transmitted attached to much larger respiratory droplets. In addition, the particles are in constant random motion, technically known as Brownian motion, and thus likely to hit the mesh fibers as they pass through the mask. The better analogy for a mask is not one of a flat sieve but of multiple layers of a spider’s web (Caulfield & Wineburg, 2023).

Students are now awash in a sea of such (mis)information and plausible but flawed arguments propounded by conspiracy theorists, snake-oil salesmen, and those with ideological or political agendas (Höttecke & Allchin, 2020; Kozyreva et al., 2020; McIntyre, 2018; Nichols, 2017). And while voices of misinformation have always existed, the internet and social media have provided a much louder megaphone and, more importantly, the means to avoid traditional media gatekeepers such as science journalists (Höttecke & Allchin, 2020). The widespread acceptance of unfounded claims (such as the idea that vaccines cause autism, that the Earth is flat, or that climate change is a hoax) is of grave concern. For, while true knowledge is a collective good, erroneous or fake knowledge can be a danger – both individually and collectively. For instance, the idea that vaccines are unsafe endangers not only the lives of those who hold this idea, but the whole community that depends on a high level of vaccination to ensure its health. Moreover, research indicates that people of color and marginal populations are relatively more susceptible to misinformation. Reforming science education in this way thus also addresses issues of social justice.

Without some foundations of critical competency, future citizens, as non-experts, remain vulnerable to the claims made by those who would falsely claim to be experts. And without those foundations, citizens are then forced to choose between unquestioning belief, or alternatively, outright rejection. Developing a middle ground of informed trust and reducing the gulf between these two dichotomous choices must, therefore, be a central focus of any science education for all, especially if its avowed goal is to prepare ‘scientifically literate’ citizens and consumers.

Educating students for informed epistemic trust – envisioning science literacy for the twenty-first century

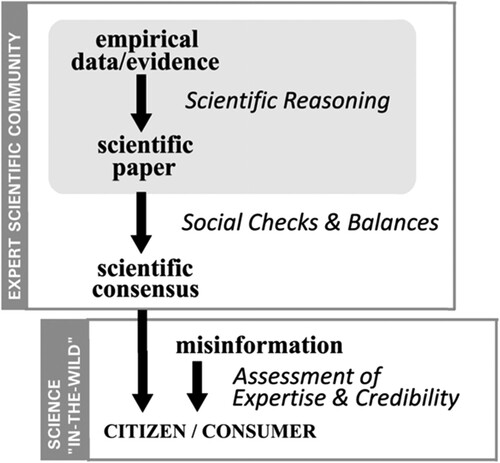

To develop the competencies to exercise informed epistemic trust in science, an organising framework is provided in Figure 1 (Allchin et al., 2024). This maps the construction and trajectory of scientific knowledge on its long pathway from test tubes to YouTube. We argue that reducing the gap between the expert and the non-expert, and developing the capabilities to construct informed trust, requires four elements represented in Figure 1.

(a) An understanding of the social practices of science and the institutional structures that enable scientists to produce reliable knowledge (the Social Checks and Balances in the box labeled ‘Expert Scientific Community’).

(b) An appreciation of the nature and potential pitfalls of mediated communication (the box labeled ‘Science-in-the-Wild’).

(c) A familiarity with the major explanatory theories developed by science of the natural world and the basic entities that exist (represented by the ‘scientific consensus’ in Figure 1).

(d) Some appreciation of the empirical basis of science and of the diversity of modes of reasoning used to warrant the claims advanced by science (shaded box labeled ‘Scientific Reasoning’).

Figure 1. The pathway of scientific information ‘from test tubes to YouTube’. Reproduced from Allchin et al. (2024).

In what follows, each of these elements (a)-(d) is addressed separately.

(A) The social practices of science

The ultimate goal of science is the production of reliable knowledge. Non-experts first need to know just how that knowledge is generated and validated. Unfortunately, the image presented in much current instruction is a caricature of a singular, mythical ‘scientific method’: Scientists develop hypotheses. Then they devise tests. And finally, they make simple logical conclusions based on the results. They then publish their conclusions in a scientific journal. There is, of course, some truth to this (shaded box in Figure 1). But in this view, any published paper or statement by a scientific expert will count as authoritative science. Alas, this is not true. Any single paper may still contain errors. It may be biased by theoretical preferences, by ideology, or by gender, race, culture, or conflicts of interest. Or other scientists may have found conflicting results. To be considered reliable, claims have to be thoroughly reviewed and properly vetted. That is, not only must claims be empirically sound, but they must also survive the slings and arrows of expert critique. Or as Metropolis et al. (1984) expressed it – ‘science is the business of learning not to make the same mistake again’ (p. 177). Sociologist Robert Merton (1973) called it ‘organized skepticism’. Fundamentally then, science is a social process undertaken by a community of experts (Alberts, 2022; Holden Thorp et al., 2023; Ziman, 1968). What knowledge of the social processes of science, then, would be useful to judge scientific claims that lie beyond our knowledge and expertise and are advanced by unknown individuals?

Checks and balances

Clearly, one element of informed trust is an understanding of the role of peer review. Viewed narrowly, ‘peer review’ refers to the process by which fellow experts evaluate written reports to determine their suitability for publication in academic journals, conference proceedings, or books. But peer review is not perfect. The process is not designed to catch every logical or methodological error in a scientific study, let alone to detect deliberate fraud. For example, peer reviewers do not attempt to replicate the experiments or even the statistical analyses described. Rather, the authors’ work is taken as having been conducted in good faith, by individuals of suitable skill who have correctly carried out the procedures described. And while peer review is an important filter for detecting error and low-quality (or non-expert) work, it cannot be a guarantor of correctness. It merely situates the work in the context of other research and adds it to the pool of published work that is considered competent and a potential contribution to knowledge, however small.

What the competent outsider needs to appreciate and understand is that the scientific community also relies on peer review in a much broader sense. Scientists begin by vetting one another’s work long before a manuscript is submitted to a journal, and continue to do so long after publication. Most research funding is allocated through peer review of competitive grant proposals. Later, peers critique each other’s work in the early stages of development: at scientific meetings; by sharing draft papers through informal communication networks; and in response to working papers or preprints posted to such online repositories as arXiv, bioRxiv, and medRxiv. Scientists also review and discuss each other’s work after reports have been published, either in person, through correspondence, in meta-analyses of the research on a given topic, or on social and online platforms, such as PubPeer, that have been designed specifically for this purpose. Such review enables a more thorough evaluation through peer criticism at all stages of the process – all of which helps expose errors of execution and biases in interpretation (Longino, 1990; Ziman, 2000). In short, science has developed a set of social practices that enable and support epistemic checks and balances. Such knowledge is key to the basis of informed trust in claims that lie beyond our sphere of expertise.

The significance of consensus

No scientific claim is formally settled until the relevant experts agree and the community arrives at a consensus. Consensus is the result of sustained discourse and negotiation through successive rounds of research. Results and models must demonstrably guide further investigation. Exceptions and anomalies must be examined; limits of generalisation, tested. Contradictory experimental results must be resolved; conflicting theoretical interpretations, reconciled (Allchin, 2012; Ziman, 2000). Confronted with any scientific claim, the basic question the competent outsider must ask is whether there exists any consensus amongst scientific experts.

Yet, there are many scientific topics in ongoing research on which experts disagree – sometimes vehemently. Outsiders may portray this as a weakness: namely, if scientists cannot agree, then surely the evidence and arguments are worthless? The reality is exactly the opposite. Debate is a normal part of science-in-the-making. More fundamentally, it is a sign of a healthy, active scientific community, still engaged in research. Ideas which survive attain the apotheosis of scientific work – a consensus amongst the scientific community. So widely held and agreed are they that they then populate the pages of standard scientific textbooks (Latour & Woolgar, 1986).

Any consensus is the product of a body of work, sometimes taking decades, which has undergone critical examination. Institutions such as the AAAS, CDC, FDA, EPA, IPCC, WHO, and various national academies are the formal bodies of experts that help to debate and document any established consensus (see Figure 1, top box). They support the structure of critical scientific discourse by publishing journals and sponsoring conferences. Sometimes, they also convene panels to help articulate the scientific consensus and to assist in communicating that knowledge in public statements or outreach. As such, scientific institutions are another important part of the social system of science.

Identifying whether an expert consensus exists is, therefore, what the competent outsider should be seeking to do. It is an essential element of establishing warranted trust. And such trust is integral to accepting the scientific conclusions on the safety of vaccines, GMO foods, the efficacy of drugs, the dangers of vaping, and more (Oreskes, 2019).

Yet virtually no science education standards or institutionalised curricula address these elements and their importance, even, ironically, those curricula that aim to teach the nature of science, ‘how science works’ or what it means to be ‘working scientifically’. Remediating this deficiency must be an urgent priority.

(B) The challenges of science communication

Establishing that a consensus of the relevant scientific experts is a trustworthy benchmark for the competent outsider is an important foundation. One might imagine that this should solve the dilemma of who, or what, to trust. But consider a notable contemporary case. Should we vaccinate our children? Experts from the Centers for Disease Control (CDC) and other medical institutions recommend it strongly. But many contrarian voices exist – among them, political leaders, celebrities, and even some doctors. They, too, often appeal to science. Some contend that the scientists at the CDC or at pharmaceutical companies are poisoned by ideological corruption and conflict of interest. Whose view of ‘science’ should one trust?

The problem for the non-expert is one of distinguishing genuine science from faux science in the untamed ‘wilds’ of the internet and social media. This is the science that lies outside the boundaries of the expert scientific community: what we choose to call ‘science-in-the-wild’ (e.g. Gieryn, 1999; see lower box in Figure 1). While attempts to present bogus claims as legitimate science have always existed, social media and the internet provide an environment which has enabled an invasive species of misinformation disguised as science to flourish. How is the competent outsider – a non-expert – to decide what counts as science or who speaks for science (Allchin, 2012, 2022)?

The purveyors of disinformation know that science occupies the epistemic high ground. Hence, rather than reject the science outright, they claim that their work is legitimate science. To this end, they often endeavor to imitate the hallmarks of science, and to make their claims look like credible science (Allchin, 2022). For example, they may claim expertise, but use false credentials. They may report results from a journal, but one that is not peer-reviewed. To discount the consensus, they may offer cherry-picked evidence, or present plausible but ultimately misleading arguments. Or they may even tout a bogus ‘alternative’ consensus such as the long lists of signatures against climate change assembled in the 1990s – for example, the Leipzig Declaration and the Oregon Petition (Goldberg et al., 2022). They may also create bogus institutions, with impressive names, pretending to represent an authentic scientific community. In short, this is a problem that has emerged in recent decades because of the way science is used and abused.

The first judgement to be made by the competent outsider of any science-related claim should not be ‘what is their argument?’ nor ‘what is their evidence?’ but: who is making this claim, and for what purpose? Do they exhibit a conflict of interest: a context in which they may gain something for themselves by misleading us into believing certain things? For example, fossil fuel companies profit from denying climate change, or alternatively, enlisting others to do so on their behalf (Union of Concerned Scientists, 2007). Similarly, manufacturers of sugared beverages benefit from misleading scientific messages about the role of fats vs. sugars in obesity, or the role of exercise vs. caloric intake in dieting (Nestle, 2018; O’Connor, 2015).

The second judgement is whether the source reporting the claims has either legitimate authority or legitimate expertise to represent the scientific consensus. Science is a highly specialised activity. Normally the minimum requirement for expertise is a PhD within a well-defined area. Further markers of expertise are credentials such as a body of relevant publications in well-respected scientific journals, a program of funded research, or an academic position within a university or research organisation with standing within the scientific community. Other markers of note are awards or distinctions, such as election as a Fellow of a scientific institution, a Nobel Prize, or other prestigious positions within scientific institutions. Alternatively, an indicator of credibility is a track record of reliable and informed reporting and the authority to speak for science.

All of these checks establish whether the source can vouch for the scientific consensus. For example, scientific institutions and leading experts may generally be considered reliable sources. Experienced science journalists, too, may be knowledgeable and (even if they are not scientists themselves) have the resources to consult with the appropriate experts in the field (Osborne et al., 2022; Zucker et al., 2020). In addition, students should be alerted to various persuasive tactics. For example, industries may wish to generate an aura of scientific uncertainty in order to postpone public policy actions adverse to their business, even when the relevant scientists have reached a firm consensus (Michaels, 2020; Oreskes & Conway, 2010). An ambitious dissenter may style himself as another Galileo – a heroic lone voice who defends truth against an intellectually corrupted establishment (Johnson, 2018). Efforts at persuasion may come in the form of flooding the media with repeated messages, or appeals to one’s social group or identity, along with many other techniques (Allchin, 2022). Others may encourage the naive consumer to ‘Do Your Own Research’ and then provide biased information. Indeed, there are many strategies in the ‘disinformation playbook’ (Union of Concerned Scientists, 2019). Foremost is that the purveyors of misinformation try to distract the consumer from ever probing their credibility.

Making these judgements of credibility requires a suite of competencies developed by those working in media literacy, for example: ‘click restraint’ – looking not at the first source that emerges in a Google Search, but for the one that might be the most trustworthy; or ‘lateral reading’ – opening another tab and exploring what can be learnt about the source from other reputable sources, using dependable sites like Wikipedia, Snopes.com and websites of respected institutions (Breakstone et al., Citation2021b; Caulfield & Wineburg, Citation2023).

Such competencies must be developed in science classrooms because the warrants so commonly used are ostensibly scientific. Only science teachers can illuminate what distinguishes good science from the bad – essentially the significance of consensus, the role of peer review, and what the markers of scientific expertise are. In short, who can legitimately speak for science? Hence, as argued in the report Science Education in an Age of Misinformation (Osborne et al., Citation2022), media literacy has now become an essential component of scientific literacy in the (Mis)Information Age of the twenty-first century.

(C) An understanding of the major explanatory ideas of science

The third enduring goal of science education in Rudolph’s analysis embodies the humanist view that science is a great intellectual achievement. Students are heirs to the major explanatory ideas that have helped us to understand the material and living world (Kind & Osborne, Citation2017; Oakeshott, Citation1965; Rutherford & Ahlgren, Citation1989). We could not agree more. Understanding a set of basic ‘big ideas’ and their intellectual achievement has to be a major goal articulated in any vision of science education (Harlen, Citation2010). Moreover, it is essential knowledge for the competent outsider, as the trust between the expert and the non-expert is increased when the concepts and ideas that permeate the discourse of the expert are at least recognisable and familiar to the non-expert.

Moreover, incorporating this goal more overtly would also encourage the exploration of the scientific contributions from diverse cultures and by scientists of all backgrounds. With less emphasis on the narrow utilitarian apprenticeship model there would be more opportunity to celebrate the cultural contexts and human dimensions in which scientific knowledge is produced and valued. Teachers are ready to describe such achievements as the Big Horn Medicine Wheel (of Native American astronomers), the metallurgy of ancient India (the production of wootz steel, or the first smelting of zinc), the compass of China (or other innovations such as the water clock, paper, printing, earthquake vane, and gunpowder), the agronomy of equatorial Africans, Oaxacan campesinos or farmers in the Amazonian Varzea, to name just a few (e.g. Allchin, Citation2019; Allchin & DeKosky, Citation2008; Ronan, Citation1982; Teresi, Citation2010) as well as the scientific contributions of diverse people: women, minorities, and those whose social status obscured recognition of their intellect, creativity and labor (e.g. Allchin, Citation1997; Matyas & Haley-Oliphant, Citation1997; Shapin, Citation1989; Teresi, Citation2010).

As one of us wrote 25 years ago, a fundamental concern is that:

We have lost sight of the major ideas that science has to tell. To borrow an architectural metaphor, it is impossible to see the whole building if we focus too closely on the individual bricks. Yet, without a change of focus, it is impossible to see whether you are looking at St Paul’s Cathedral or a pile of bricks, or to appreciate what it is that makes St Paul’s one of the world’s great churches. In the same way, an over-concentration on the detailed content of science may prevent students appreciating why Dalton’s ideas about atoms, or Darwin’s ideas about natural selection, are among the most powerful and significant pieces of knowledge we possess. Consequently, it is perhaps unsurprising that many pupils emerge from their formal science education with the feeling that the knowledge they acquired had as much value as a pile of bricks and that the task of constructing any edifice of note was simply too daunting – the preserve of the boffins of the scientific elite. (Millar & Osborne, Citation1998) (p. 13)

Here, however, we wish to highlight another important reason for learning the major explanatory concepts of science, one necessary to achieving the goal of developing competent outsiders. The trust between the expert and the non-expert is enhanced when the concepts and ideas that permeate the discourse of the expert are at least recognisable and familiar to the non-expert. And while competent outsiders may not be experts, they are nonetheless entitled to an education that enables them to understand the underlying ideas in experts’ conclusions, such as atoms, cells, DNA, energy, forces, photosynthesis, nutrients, geological history and ecosystems. For instance, a conversation with a doctor or a veterinarian will be facilitated by an understanding of the basic structure and function of the mammalian body, and the nature of common diseases and their prevention. Jury members need to understand in overview claims about liability in bridge collapses or oil spills, or forensic evidence in criminal cases, even though they are not experts themselves. Such knowledge and understanding also contribute critically to ethical discussions and public policy about genetic engineering, gene therapy, artificial intelligence, high-tech weaponry, or sharing agricultural or energy technologies with developing nations. Thus, learning a foundational core of the major (rather than minor) explanatory concepts that guide the work of scientists and engineers is an essential element of educating the competent outsider, as well as addressing Rudolph’s third goal of illuminating the achievements of science and its cultural value. Clearly, what specific ideas and concepts should be included is a matter of further debate.

The failure of the standard model of science education to achieve this goal – by focusing on the bricks and not the edifice – leaves students bereft of what would help them to make better sense of science and of an understanding of the social practices that see contention and disagreement as a positive feature. Rather, in singularly pursuing the needs of the future scientists, often answering questions they never asked, the evidence shows young people’s enthusiasm for science has been sacrificed ‘on the altar of academic science education for all’ (Aikenhead, Citation2023; Osborne et al., Citation2003; Potvin & Hasni, Citation2014). Nurturing interest in pursuing science as a career requires students first to be fascinated, intrigued and inspired by the prospect. In short, teaching the cultural value of science, its social relevance and the diversity of contributors may, ironically, not only be an essential element of the education of the competent outsider, but also a prerequisite to the subsidiary economic goal of sustaining a healthy research workforce. For, above all, enthusiasm for the academic study of science requires interest and fascination.

(D) Scientific reasoning

Rudolph’s first goal – imbuing young people with scientific modes of reasoning – is also an important element of educating the competent outsider. Arguments for its value have been made ever since its inception (Dewey, Citation1910; Layton, Citation1973). Science uses a diversity of styles of reasoning (Crombie, Citation1994; Kind & Osborne, Citation2017) and illustrating these forms of reasoning should be a significant feature of any curriculum. Why is it, then, that science education commonly restricts itself to the hypothetico-deductive method? Despite decades of criticism, the widespread myth still exists that the production of reliable knowledge simply requires the application of a singular scientific method. Essentially, that all that reliable knowledge requires is the application of a hypothetico-deductive argument using experiments that have controlled all the relevant variablesFootnote4 (Rudolph, Citation2019).

Yet many arguments in science are abductive – developing explanatory models that seek the simplest and most likely conclusion from evidence. And they are not hard to exemplify. Consider the following example, drawn from the experience of one of us with the early Nuffield Schemes in Britain.

As a class of 11-year-olds, we began by growing crystals on pieces of string in saturated copper sulphate solution. This required several attempts, but after 2–3 weeks we all had a selection of crystals. We removed them from the solution and compared them – most of us childishly obsessed with who had the largest. However, the teacher asked us to look at the crystals and tell him what we noticed. They were blue. They had a strange shape (rhomboidal). None of us had grasped the feature to which he next drew our attention – that they were all the same shape regardless of size. ‘How could that be?’ he asked. The obvious observation was profoundly dumbfounding. Here, the teacher helped us interpret the puzzle. Had we seen grocery displays of stacked oranges? ‘Yes, of course’, we replied. But had we noted the clean lines like our crystals? ‘Is the shape of the stack not the same whether it is a small stack or a large stack?’ Here, we had a model for understanding the atomic structure of the copper sulphate, built out of particles too small to see.

And, as well as abductive reasoning, inductive reasoning is essential to establishing patterns in the world. Examples are the generalisations that all mammals are warm-blooded and respire; that all metal oxides are alkaline when dissolved in water; or that forces always come in pairs. Essentially, a major endeavor of science is the search for patterns. This often requires probabilistic reasoning, where scientists use statistical tests to evaluate the likelihood that any pattern might exist by chance. But, once identified, the work of developing an explanatory hypothesis begins.

Science is a human endeavor, however. So it should surprise no one that scientific reasoning may carry traces of its sociocultural contexts or that individual scientists may exhibit lapses in reasoning (Allchin, Citation2001), or even cultural biases based on gender, race, class, religion, nationality, and so on (e.g. Allchin, Citation2008a). Exploring cases of these offers an important window into the nature of science-in-the-making (Allchin, Citation2012, Citation2020).

Students will hardly be able to learn about errors and biases through the familiar vehicle of student-led inquiry. Classroom inquiries are typically contrived and highly artificial in scope and methods. Rather, students will need to engage with case studies of science, whether contemporary or historical (Allchin et al., Citation2014). That is, the most effective way to appreciate the meandering (sometimes wayward) paths of reasoning is to follow the narratives of real scientists (e.g. Allchin, Citation2012) – even those of great renown (Allchin, Citation2008b, Citation2009). Case studies show how scientists manage these errors. Here, the social practices of science – described above – are critical. Students need to see how such problems are resolved within the expert community, and how consensus typically marks the successful resolution of such episodes. Individual scientific reasoning is important, yes. But it is also regulated through the social dimension of science.

Exemplifying the core commitment of science to evidence and explaining the forms of reasoning it uses are essential components of a science education whose goal is informed epistemic trust. Understanding how science justifies its claims to know illuminates the epistemic foundations on which scientific beliefs rest.

The way forward

By failing to offer a window into the social practices that make science an epistemological achievement (Harré, Citation1986; Ziman, Citation1968), current science education fails to show the important reasons for why (and precisely when) science should be trusted. By sustaining the utilitarian approach that serves the needs of only a small minority – future scientists – its salience and relevance for most students is questionable, if not alienating. Change is needed urgently.

But can change be achieved? Science education since the 1960s has seen a cavalcade of repeated attempts at thoughtful and careful reforms that have all been and gone (in the US, Harvard Project Physics, ChemComm, BioComm, Klopfer’s case histories, or in the UK, SATIS, Science in Process, Science at Work). Veteran educators may justifiably be cynical of any attempt at genuine, long-lasting change at the institutional level, exposed as it is to the winds of politics and ideological and academic fads. For instance, even Twenty First Century Science (Scott et al., Citation2007), the product of the report Beyond 2000: Science Education for the Future, fell prey to the traditionalists who, drawing on the work of E.D. Hirsch (Citation1987) and Daniel Willingham (Citation2008), argued for a set of top-down standards emphasising foundational knowledge.Footnote5

For an educational community interested in transformation and innovation, these experiences are salutary. They force us to recognise that the forms and structures of science education have become institutionalised and that the neo-liberal project has transformed schools from sites of learning and an exploration of culture, meaning and identity into institutions whose role is to produce the next generation of workers and sustain the research-based competitiveness of the economy (Apple, Citation1990; Ball, Citation1998). Schools are monitored through national and state tests and teachers are under pressure to ensure that their students do well. The overwhelming consequence is an impoverished form of education marked by a combination of ‘contracting curricular content, fragmentation of the structure of knowledge, and increasing teacher-centered pedagogy in response to high stakes testing’ (Au, Citation2007) (p. 263).

The lesson seems to be clear. Attempting to change existing institutional structures exogenously is likely to be futile – an exercise with many thousands of hours of effort spent on arguing for different standards and producing curricula which, like their predecessors, will wither on the vine. Instead, we argue that the site of innovation must consist of small piecemeal local steps undertaken endogenously in the classroom. If this is to happen, there must be space for teachers to follow both their own and their students’ interests. For, if any curriculum is to succeed, teachers must take ownership and transform it into something which they perceive to be a meaningful and valued education. To do that they must have a degree of flexibility, authority and trust to make it their own. As Ogborn argues:

One of the strongest conclusions to come out of decades of studies of the success and failure of a wide variety of curriculum innovations is that innovations succeed when teachers feel a sense of ownership of the innovation: that it belongs to them and is not simply imposed on them. (Ogborn, Citation2002, p. 143)

Thus, the first step in the road to facilitating any vision such as the one we have presented here is to argue for fewer standards. Fewer detailed checklists. Fewer tests. Instead, we need more respect for teachers who must be recognised as professionals. Professionals trained and capable of developing and implementing their own local curriculum, guided by a broad framework outlining the overall scope and major themes. What Dewey wrote over a hundred years ago is as true today as it was then:

‘The dictation, in theory at least, of the subject matter to be taught, to the teacher who is to engage in the natural work of instruction, and frequently, under the name of close supervision, … mean nothing more or less than the deliberate restriction of intelligence, the imprisoning of the spirit.’ (Dewey, Citation1916, p. 196)

One natural consequence of this shift in the locus of control would be the adaptation of curricula to particular local contexts, and to the make-up and character of the local student population and of the surrounding community. ‘Science for all’ will, as a matter of course, have to acknowledge and accommodate diversity – in culture, ethnicity, socioeconomic class – in common with other visions of educational change promoted in recent years (Bencze et al., Citation2020; Roth & Lee, Citation2004; Sjöström & Eilks, Citation2018). Local adaptation will also no doubt promote contextualising the science in the social issues and personal concerns that are especially relevant in those local contexts (but which vary widely from place to place). We see these as both inevitable and desirable outcomes but leave it to others to elaborate on how those approaches might unfold.

Summary and conclusion

We have argued that the current curricular structures of school science are failing the needs of most students. Hopes that the latest incarnation of standards – notably the Next Generation Science Standards in the US – might offer a transformative curriculum are misplaced. The rise of misinformation in the media (along with those who would exploit the epistemic authority of science with plausible sounding scientific arguments and cherry-picked evidence), coupled with research which shows how ill-prepared young people are to deal with this challenge (Breakstone et al., Citation2021a), suggests that there is an urgent challenge in need of addressing. Preparing students to become competent outsiders, able to deal with ‘science in the wild,’ requires new standards. These must place less emphasis on mastering the foundational content and the ‘scientific practices’ required for future careers. Rather, any new standards must offer an understanding of what the foundations of epistemic trust in science are, the reasoning that has yielded such outcomes, and the social practices that ensure the production of reliable knowledge, as well as its faithful communication – all of which are elements of the ‘nature of science’. Fortunately, a rising tide of scholars and authors are now pointing to how that challenge can be addressed effectively (Caulfield & Wineburg, Citation2023; Kozyreva et al., Citation2022).

Current standards also fail to represent the intellectual achievement of science and its diverse origins; they fail to offer students a justification for the value of the time spent studying science; and they fail to provide teachers the professional autonomy they rightfully deserve. If we are to restore trust in science among students, we must first restore trust in science teachers themselves. We must give them the space to craft a vision of education informed by (and balancing) both general social goals and local needs. We believe that if we provide standards and curricula informed by arguments such as those we have made here, teachers can transform their classrooms, albeit in many different, locally adapted forms, and provide a more meaningful and relevant science education for all their students.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 The NRC list matches Rudolph’s almost exactly, merely separating the first purpose into two: (a) developing the ability ‘to engage in public discussions’; and (b) to become ‘careful consumers of scientific and technological information related to their everyday lives’ (Rudolph, Citation2022, p. 1).

3 A good example of this is the attempt to undermine any need to do anything about climate change by casting doubt on the efficacy of green technologies. See https://counterhate.com/research/new-climate-denial/

4 See Understanding Science (2022) https://undsci.berkeley.edu/the-understanding-science-flowchart-text-description/ or OpenSciEd (2024) https://www.openscied.org/why-openscied/instructional-model/

5 A similar outcome can be seen in the innovative attempt to replace Algebra II by a Data Science course in California – a course offering a mix of math, statistics and computer science with widely agreed upon standards. Developed by those who saw Algebra II as a gatekeeper to college admission with many inherent inequities for under-served communities, this course was made an alternative to Algebra II for admission to public universities in the state in 2019. In 2023, after faculty protests that this course lowered standards, the State Board withdrew its endorsement of this alternative on the argument that it was insufficiently rigorous (Harmon, Citation2023). Effectively, the demands of higher education yet again determine what counts as valued education, and attempts to address the inequities that surround the role of Algebra II will be thwarted.

References

- Aikenhead, G. S. (2023). Humanistic school science: Research, policy, politics and classrooms. Science Education, 107(2), 237–260. https://doi.org/10.1002/sce.21774

- Alberts, B. (2022). Why trust science. In B. Alberts, R. Heald, K. Hopkin, A. Johnson, D. Morgan, K. Roberts, & P. Walter (Eds.), Essential cell biology (Sixth Edition). New York: W.W. Norton & Co. Available from https://digital.wwnorton.com/ecb6

- Allchin, D. (1997). In the shadow of giants: Boyle’s law?, Bunsen’s burner, Petri’s dish and the politics of scientific renown. SHiPS Network News. http://shipseducation.net/updates/shadows.htm

- Allchin, D. (2001). Error types. Perspectives on Science, 9(1), 38–58. https://doi.org/10.1162/10636140152947786

- Allchin, D. (2008a). Naturalizing as an error-type in biology. Filosofia e História da Biologia, 3, 95–117.

- Allchin, D. (2008b). From the president. The American Biology Teacher, 70(7), 389–389. https://doi.org/10.1662/0002-7685(2008)70[389:ftp]2.0.CO;2

- Allchin, D. (2009). American Biology Teacher, 71, 116–119.

- Allchin, D. (2012). Teaching the nature of science through scientific errors. Science Education, 96(5), 904–926. https://doi.org/10.1002/sce.21019

- Allchin, D. (2019). Science without shiny labs. The American Biology Teacher, 81(1), 61–64. https://doi.org/10.1525/abt.2019.81.1.61

- Allchin, D. (2020). From science as “special” to understanding its errors and justifying trust. Science Education, 104(3), 605–613. https://doi.org/10.1002/sce.21571

- Allchin, D. (2022). Who speaks for science? Science & Education, 31(6), 1475–1492. https://doi.org/10.1007/s11191-021-00257-4

- Allchin, D., & DeKosky, R. (2008). An introduction to the history of science in Non-western tradition. History of Science Society. https://hssonline.org/page/teaching_nonwestern

- Allchin, D., Andersen, H. M., & Nielsen, K. (2014). Complementary approaches to teaching nature of science: Integrating student inquiry, historical cases, and contemporary cases in classroom practice. Science Education, 98(3), 461–486.

- Allchin, D., Bergstrom, C. T., & Osborne, D. (2024). Transforming science education in an age of misinformation. Journal of College Science Teaching, 53, 40–43. https://doi.org/10.1080/0047231X.2023.2292409

- Apple, M. (1990). Ideology and the curriculum (2nd ed.). Routledge.

- Au, W. (2007). High-Stakes testing and curricular control: A qualitative metasynthesis. Educational Researcher, 36(5), 258–267. https://doi.org/10.3102/0013189X07306523

- Avraamidou, L., & Osborne, J. F. (2009). The role of narrative in communicating science. International Journal of Science Education, 31(12), 1683–1707. http://search.ebscohost.com/login.aspx?direct=true&db=aph&AN=42746798&site=ehost-live

- Ball, S. (1998). Introduction: International perspectives on education policy. Comparative Education, 34(2), 117–117. https://doi.org/10.1080/03050069828216

- Ballantyne, N., Celniker, J. B., & Dunning, D. (2022). Do your own research. Social Epistemology, 1–16. https://doi.org/10.1080/02691728.2022.2146469

- Banilower, E. R., Sean Smith, P., Weiss, I. R., Malzahn, K. M., Campbell, K. M., & Weis, A. M. (2013). Report of the 2012 national survey of science and mathematics education.

- Banilower, E. R., Smith, P. S., Malzahn, K. A., Plumley, C. L., Gordon, E. M., & Hayes, M. L. (2018). Report of the 2018 NSSME+. Horizon Research, Inc.

- Bencze, L., Pouliot, C., Pedretti, E., Simonneaux, L., Simonneaux, J., & Zeidler, D. (2020). SAQ, SSI and STSE education: Defending and extending “science-in-context”. Cultural Studies of Science Education, 15(3), 825–851. https://doi.org/10.1007/s11422-019-09962-7

- Breakstone, J., Smith, M., Connors, P., Ortega, T., Kerr, D., & Wineburg, S. (2021a). Lateral Reading: College students learn to critically evaluate internet sources in an online course. Harvard Kennedy School (HKS) Misinformation Review, 2.

- Breakstone, J., Smith, M., Wineburg, S., Rapaport, A., Carle, J., Garland, M., & Saavedra, A. (2021b). Students’ civic online reasoning: A national portrait. Educational Researcher, 50(8), 505–515. https://doi.org/10.3102/0013189X211017495

- Caulfield, M., & Wineburg, S. (2023). Verified: How to think straight, get duped less, and make wise decisions about what to believe online. Harvard University Press.

- Charette, R. N. (2013). The STEM crisis is a myth. IEEE Spectrum (Institute of Electrical and Electronics Engineers). http://spectrum.ieee.org/at-work/education/the-stem-crisis-is-a-myth

- Cohen, I. B. (1952). The education of the public in science. Impact of Science on Society, 3, 67–101.

- Crombie, A. C. (1994). Styles of scientific thinking in the European tradition: The history of argument and explanation especially in the mathematical and biomedical sciences and arts. Duckworth.

- DeBoer, G. (2000). Scientific literacy: Another look at Its historical and contemporary meanings and Its relationship to science education reform. Journal of Research in Science Teaching, 37(6), 582–601. https://doi.org/10.1002/1098-2736(200008)37:6<582::AID-TEA5>3.0.CO;2-L

- Dewey, J. (1910). How we think. D.C.Heath and Company.

- Dewey, J. (1916). Democracy and education. The MacMillan Company.

- Feinstein, N. (2011). Salvaging science literacy. Science Education, 95(1), 168–185. https://doi.org/10.1002/sce.20414

- French, S. J. (1952). General education and special education in the sciences. In I. B. Cohen, & F. G. Watson (Eds.), General education in science (pp. 16–33). Harvard University Press.

- Gieryn, T. (1999). The cultural boundaries of science: Credibility on the line. University of Chicago Press.

- Goldberg, M. H., Gustafson, A., van der Linden, S., Rosenthal, S. A., & Leiserowitz, A. (2022). Communicating the scientific consensus on climate change: Diverse audiences and effects over time. Environment and Behavior, 54. https://doi.org/10.1177/00139165221129539

- Goldman, A. I. (2001). Experts: Which ones should You trust? Philosophy and Phenomenological Research, 63(1), 85–110. https://doi.org/10.1111/j.1933-1592.2001.tb00093.x

- Hamilton, A., Hattie, J., & Wiliam, D. (2023). Making room for impact. Corwin.

- Hardwig, J. (1985). Epistemic dependence. The Journal of Philosophy, 82(7), 335–349. https://doi.org/10.2307/2026523

- Harlen, W. E. (Ed.) (2010). Principles and big ideas of science education. Hatfield, Herts: Association for Science Education.

- Harmon, A. (2023, July 13). In California, a math problem: Does data science = algebra II? New York Times. https://www.nytimes.com/2023/07/13/us/california-math-data-science-algebra.html?searchResultPosition=1

- Harré, R. (1986). Varieties of realism: A rationale for the natural sciences. Basil Blackwell.

- Hirsch, E. D. (1987). Cultural literacy: What every American needs to know. Houghton Mifflin.

- Holden Thorp, H., Vinson, V., & Yeston, J. (2023). Strengthening the scientific record. Science, 380(6640), 13. https://doi.org/10.1126/science.adi0333

- Hopmann, S. (2007). Restrained teaching: The common core of didaktik. European Educational Research Journal, 6(2), 109–124. https://doi.org/10.2304/eerj.2007.6.2.109

- Höttecke, D., & Allchin, D. (2020). Reconceptualizing nature-of-science education in the age of social media. Science Education, 104(4), 641–666. https://doi.org/10.1002/sce.21575

- IPCC. (2021). The physical science basis. Contribution of working group I to the sixth assessment report of the intergovernmental panel on climate change. Cambridge University Press.

- Johnson, D. K. (2018). Bad arguments. In Bad Arguments, 152–156. https://doi.org/10.1002/9781119165811.ch27

- Kind, P. M., & Osborne, J. F. (2017). Styles of scientific reasoning: A cultural rationale for science education? Science Education, 101(1), 8–31. https://doi.org/10.1002/sce.21251

- Kozyreva, A., Lewandowsky, S., & Hertwig, R. (2020). Citizens versus the internet: Confronting digital challenges With cognitive tools. Psychological Science in the Public Interest, 21(3), 103–156. https://doi.org/10.1177/1529100620946707

- Kozyreva, A., Lorenz-Spreen, P., Herzog, S. M., Ecker, U. K. H., Lewandowsky, S., Hertwig, R., Ali, A. B.-C., Barzilai, S., Basol, M., Berinsky, A., Betsch, C., Cook, J., Fazio, L. K., Geers, M., Guess, A. M., Huang, H., Larreguy, H., Maertens, R., Panizza, F., … Wineburg, S. (2022). Toolbox of interventions against online misinformation.

- Latour, B., & Woolgar, S. (1986). Laboratory life: The construction of scientific facts (2nd ed.). Princeton University Press.

- Layton, D. (1973). Science for the people: The origins of the school science curriculum in England. Allen and Unwin.

- Lindsay, W. E., & Otero, V. K. (2023). Leveraging purposes and values to motivate and negotiate reform. Science Education, 107, 1531–1560. https://doi.org/10.1002/sce.21815

- Longino, H. E. (1990). Science as social knowledge. Princeton University Press.

- Lynch, M. P. (2016). The internet of US: Knowing more and understanding less in the age of big data. WW Norton & Company.

- Matyas, M. L., & Haley-Oliphant, A. E. (1997). Women life scientists: Past, present, and future: Connecting role models to the classroom curriculum. American Physiological Society.

- McIntyre, L. (2018). Post-Truth. MIT Press.

- Mehta, J., & Fine, S. (2019). In search of deeper learning: The quest to remake the American high school. Harvard University Press.

- Merton, R. K. (1973). The sociology of science: Theoretical and empirical investigations. University of Chicago Press.

- Metropolis, N., Rota, G.-C., & Sharp, D. (Eds.). (1984). Oppenheimer, J.R: Uncommon sense. Birkhäuser.

- Michaels, D. (2020). The triumph of doubt: Dark money and the science of deception. Oxford University Press.

- Millar, R. (2006). Twenty first century science: Insights from the design and implementation of a scientific literacy approach in school science. International Journal of Science Education, 28(13), 1499–1521. https://doi.org/10.1080/09500690600718344

- Millar, R., & Osborne, J. F. (1998). Beyond 2000: Science education for the future. King's College.

- National Research Council. (2007). Taking science to school: Learning and teaching in grades K-8. Washington DC: National Research Council.

- National Research Council. (2012). A framework for K-12 science education: Practices, crosscutting concepts, and core ideas. National Academies Press.

- National Science Board. (2022). Science and Engineering Indicators. http://www.nsf.gov/statistics/seind08/front/letter.htm

- Nestle, M. (2018). Unsavory truth. Basic Books.

- Nichols, T. (2017). The death of expertise: The campaign against established knowledge and Why it matters. Oxford University Press.

- Norris, S. P. (1997). Intellectual independence for nonscientists and other content-transcendent goals of science education. Science Education, 81(2), 239–258. https://doi.org/10.1002/(SICI)1098-237X(199704)81:2<239::AID-SCE7>3.0.CO;2-G

- Norris, S. P., & Phillips, L. (2009). Scientific literacy. In D. R. Olson, & N. Torrance (Eds.), Handbook of literacy (pp. 271–285). Cambridge University Press.

- Norris, S. P., Phillips, L., & Burns, D. (2014). Conceptions of scientific literacy: Identifying and evaluating their programmatic elements. In M. Matthews (Ed.), International handbook of research in history, philosophy and science teaching (pp. 1317–1344). Springer.

- Oakeshott, M. (1965). Learning and teaching. In T. Fuller (Ed.), The voice of liberal learning: Michael Oakeshott on education (pp. 43–62). Yale University Press.

- O’Connor, A. (2015, Aug. 12). Coca-Cola funds scientists who shift blame for obesity away from bad diets. New York Times. https://archive.nytimes.com/well.blogs.nytimes.com/2015/08/09/coca-cola-funds-scientists-who-shift-blame-for-obesity-away-from-bad-diets/

- Ogborn, J. (2002). Ownership and transformation: Teachers using curriculum innovations. Physics Education, 37(2), 142–146. https://doi.org/10.1088/0031-9120/37/2/307

- Oreskes, N. (2019). Why trust science? Princeton University Press.

- Oreskes, N., & Conway, E. M. (2010). Merchants of doubt. Bloomsbury Press.

- Origgi, G. (2019). Reputation: What it is and why it matters. Princeton University Press.

- Osborne, J., Zucker, A., & Pimentel, D. (2023). Tackling scientific misinformation in science education.

- Osborne, J. F., Pimentel, D., Alberts, B., Allchin, D., Barzilai, S., Bergstrom, C., Coffey, J., Donovan, B., Kivinen, K., K, A., & Wineburg, S. (2022). Science Education in an Age of Misinformation. https://sciedandmisinfo.stanford.edu/

- Osborne, J. F., Simon, S., & Collins, S. (2003). Attitudes towards science: A review of the literature and its implications. International Journal of Science Education, 25(9), 1049–1079. https://doi.org/10.1080/0950069032000032199

- Palmer, S., Campbell, M., Johnson, E., & West, J. (2018). Occupational outcomes for bachelor of science graduates in Australia and implications for undergraduate science curricula. Research in Science Education, 48(5), 989–1006. https://doi.org/10.1007/s11165-016-9595-x

- Pink, D. H. (2011). Drive: The surprising truth about what motivates us. Penguin.

- Polman, J. L., Newman, A., Saul, E. W., & Farrar, C. (2014). Adapting practices of science journalism to foster science literacy. Science Education, 98(5), 766–791. https://doi.org/10.1002/sce.21114

- Potvin, P., & Hasni, A. (2014). Interest, motivation and attitude towards science and technology at K-12 levels: A systematic review of 12 years of educational research. Studies in Science Education, 50(1), 85–129. https://doi.org/10.1080/03057267.2014.881626

- Roberts, D. A. (2007). Scientific literacy/science literacy. In S. Abell, & N. G. Lederman (Eds.), Handbook of research on science education (pp. 729–780). Mahwah, NJ: Lawrence Erlbaum.

- Roberts, D. A. (2011). Competing visions of scientific literacy: Influence of a science curriculum policy image. In C. J. Linder, L. Ostman, D. A. Roberts, P. O. Wickman, G. Erickson, & A. Mackinnon (Eds.), Exploring the landscape of scientific literacy (pp. 11–27). Routledge/Taylor and Francis Group.

- Ronan, C. A. (1982). Science: Its history and development among the world's cultures. Facts on File.

- Roth, W.-M., & Lee, S. (2004). Science education as/for participation in the community. Science Education, 88(2), 263–291. https://doi.org/10.1002/sce.10113

- Rudolph, J. (2022). Why we teach science (and why we should). Oxford University Press.

- Rudolph, J. L. (2019). How we teach science: What's changed, and why it matters. Harvard University Press.

- Rutherford, F. J., & Ahlgren, A. (1989). Science for All Americans: A project 2061 report. AAAS.

- Scott, P., Ametller, J., Hall, K., Leach, J., Lewis, J., & Ryder, J. (2007). Twenty First Century Science evaluation report: Study 1 knowledge and understanding. http://www.21stcenturyscience.org/rationale/pilot-evaluation,1493,NA.html

- Shapin, S. (1989). The invisible technician. American Scientist, 77, 554–563.

- Simon, H. A. (1955). A behavioral model of rational choice. The Quarterly Journal of Economics, 69(1), 99–118. https://doi.org/10.2307/1884852

- Sjöström, J., & Eilks, I. (2018). Reconsidering different visions of scientific literacy and science education based on the concept of Bildung. In Y. J. Dori, Z. R. Mevarech, & D. R. Baker (Eds.), Cognition, metacognition, and culture in STEM education (pp. 65–88). Springer.

- Teitelbaum, M. (2007, Sept 17-18). Do we need more scientists and engineers? The National Value of Science Education, University of York.

- Teresi, D. (2010). Lost discoveries: The ancient roots of modern science–from the baby. Simon and Schuster.

- Union of Concerned Scientists. (2007). Smoke, mirrors & hot air: How ExxonMobil uses big tobacco's tactics to manufacture uncertainty on climate science. https://www.ucsusa.org/resources/smoke-mirrors-hot-air

- Union of Concerned Scientists. (2019). The disinformation playbook. https://www.ucsusa.org/resources/disinformation-playbook

- van Lier, L. (1996). Interaction in the language curriculum. New York: Longman.

- Willingham, D. T. (2008). Critical thinking: Why is it so hard to teach? Arts Education Policy Review, 109(4), 21–32. https://doi.org/10.3200/AEPR.109.4.21-32

- Ziman, J. (1968). Public knowledge: The social dimension of science. Cambridge University Press.

- Ziman, J. (2000). Real science: What it is and what it means. Cambridge University Press.

- Zucker, A., Noyce, P., & McCullough, A. (2020). Just say no! The Science Teacher, 87(5), 24–29. https://doi.org/10.2505/4/tst20_087_05_24