Abstract

In order to find conditions for biologically plausible, cognitive self-organisation, an adequate representation of the final stage of this process is crucial. The implications of this assumption are analysed for the area of visual-word processing, in particular for position-specific top-down processes from a word representation to a letter representation. These processes pose a problem for the reviewed models of word reading and for computational models in general. A solution in the form of a conceptual network is proposed. In this general model for cognitive brain processes, neural binding of identity and location and of identity and position plays a fundamental role: temporary connections emerge during word recognition and are reactivated later, when a letter at a given position has to be identified. It is shown how modules active in word recognition are “re-used” in letter identification. Simulations show the role of the critical threshold of cell-assemblies and the selective propagation of activation loops at task-dependent time scales. Requirements for prospective studies on cognitive self-organisation and relations with new empirical work on visual-word processing are discussed.

1. Introduction

What does the reading of words tell us about our brain? How do the more than sixty-five billion neurons in the brain cooperate to perform this task? How did this cooperation come into existence? These classical questions of cognitive self-organisation (for example, see Dalenoort, 1989; Grossberg, 1978; Kelso, 1995; van Leeuwen, 2008; Van Orden, Holden, & Turvey, 2003; von der Malsburg, 1999) form the central questions of this paper. In contributing to an answer to these questions, we will focus on two levels of description.

At the structural level, we will use a causal description of processes, whereas, at the functional level, we will describe them in terms of goals and information. Insight emerges if correspondences between levels are established, either bottom-up, i.e. from the structural to the functional level, when we identify a goal for a causally described process, or top-down, when we find the mechanism underlying a process described as being goal-oriented. In the chosen approach (Dalenoort, 1990; Dalenoort & de Vries, 1998), neither of the two levels is epistemologically superior to the other.

Both levels are necessary for the main goal of the paper: to explain why the proposed, constructed network is consistent with a biologically plausible account of the self-organisation underlying our cognition. The structure and processes of the network provide important constraints for computational studies in which a simulated, autonomous system develops in, and learns from, a changing environment. This aspect of the chosen approach has been referred to as “downward emergence” (de Vries, 1995) because arguments from the functional level guide the search for underlying mechanisms at the structural level. This is different from the bottom-up approach in the area of deep learning (cf. LeCun, Bengio, & Hinton, 2015; Silver et al., 2016). There, extensions of backpropagation and unsupervised forms of learning, made possible by the computing power of current information technology, are used. The design of a self-organising network, as proposed in the current paper, may be relevant to this approach as we will focus on the representation of different tasks in different stages of development of the network. Accordingly, the study of the proposed network may be part of an answer to the central questions on deep learning, e.g. those implied by the studies of Hinton (2007) and Kirkpatrick et al. (2017): what are the fundamental structures in a large-scale, distributed, parallel system, what is learned or acquired by this system, and what are the implied developmental dependencies, i.e. for a structure to come into existence, which structures must already be present?

In order to analyse cognition from the perspective of self-organisation, we will focus on the area of visual-word processing (Grainger, 2008, 2018). Although words may lead to dispute, the area is a good test field since it involves many aspects of the human cognitive system, as we will see.

2. Theoretical arguments

Our exploration of mechanisms of self-organisation will be guided by the far-reaching implications of theoretical arguments. In this paper, six arguments will play an essential role.

2.1. Coincidence of excitations is the basic mechanism underlying the self-organisation of cognitive processes

At the structural level, changes in the behaviour, state, or connectivity of a network have to be explained by means of coincidences of neural excitations. An example is the Tanzi-Hebb learning rule: the increase of the efficiency of a synapse due to the simultaneous firing of the pair of neurons involved corresponds to learning at the functional level. On theoretical grounds, the learning rule was put forward by Hebb (1949) but surmised earlier by others, notably Tanzi (D’Anguilli & Dalenoort, 1996; Tanzi, 1893); empirical evidence supporting the rule followed later, cf. Kandel and his co-workers (Carew, Pinsker, & Kandel, 1972; Kandel, Dudai, & Mayford, 2014) and Bi and Poo (1998). For the emergence of inhibitory synapses, the role of long- and short-term synaptic changes in the logistics of cognitive self-organisation still needs further specification. Answering this open question is central to the goal of this paper.
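As a minimal sketch of the coincidence principle, the fragment below increases the efficiency of a single synapse only in time steps in which both neurons fire. The function name `hebb_update`, the learning rate, the upper bound `w_max`, and the saturating form of the increment are illustrative assumptions; they are not part of the rule as formulated by Tanzi or Hebb.

```python
def hebb_update(weight, pre, post, rate=0.1, w_max=1.0):
    """Return the synaptic efficiency after one time step.

    pre and post are firing indicators (0 or 1). The weight grows only
    when both neurons fire in the same step and saturates at w_max
    (the saturation is an assumption made for this sketch).
    """
    if pre and post:
        weight += rate * (w_max - weight)
    return weight


# Only the first and last steps are coincidences, so only they change w.
w = 0.0
for pre, post in [(1, 1), (1, 0), (0, 1), (1, 1)]:
    w = hebb_update(w, pre, post)
```

Firing of only one of the two neurons leaves the weight untouched, which is the sense in which coincidence, rather than activity as such, drives the change.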

2.2. The Tanzi-Hebb learning rule leads to the formation of cell-assemblies.

According to Hebb (1949), the learning rule leads to clusters of neurons more strongly connected to each other than to neurons in other clusters. Each cluster, or cell-assembly as he called it (for a recent review see Huyck & Passmore, 2013), corresponds to a memory trace. Already in the 1940s, it had to be assumed that the neurons of a memory trace must be widely distributed since lesions do not have specific effects on memory traces (Hebb, 1949; Lashley, 1951). The neurons carrying a memory trace do not belong exclusively to a single cluster. Rather, a memory trace should be considered as an excitation pattern in a large network of neurons. In different contexts, the same memory trace is carried by different, overlapping excitation patterns. Accordingly, a memory trace can contribute to various context-specific meanings (Dalenoort, 1982, p. 176). Although the neurons participating in the excitation pattern underlying a memory trace do not form a fixed set, i.e. a “grand-mother cell” (see Bowers, 2009), a nucleus of neurons firing in each occurrence of the pattern must exist, cf. Braitenberg's kernel-halo distinction (1978). The memory trace obtains its identity from the specific connections of this nucleus with the sensory and motor parts of the nervous system, cf. Dalenoort (1996).

2.3. A cell-assembly must have a critical threshold.

Analogous to the notion of critical phenomena in physics, e.g. the critical temperature of a gas, a critical excitation threshold exists for each realistic network of threshold elements, such as neurons. If the excitation level in the network (the sum of the firing rates of the elements) reaches this threshold, it will rise to its maximum value due to the self-strengthening excitation process in the network. If the excitation level does not pass the threshold, the neurons in the network will still fire but not strongly enough to produce the autonomous growth of excitation. The notion of a critical threshold (Dalenoort, 1985) is a necessary consequence of the neural clustering implied by the Tanzi-Hebb learning rule. It points to another central correspondence between the structural and the functional level. At the structural level, the notion implies that the supra-threshold excitation (autonomous growth of excitation) of a cell-assembly is one of the conditions necessary to produce a conscious experience about the meaning or content of the corresponding memory trace, or, if we take a behaviourist view on consciousness, to produce appropriate action. At the functional level, this state corresponds to something being held in short-term or working memory. The former refers to our ability to temporarily retain recent experiences, whereas the latter structures our experiences for application in a wide variety of tasks. In contrast, the sub-threshold excitation of a cell-assembly corresponds to a memory trace which does not produce a conscious experience but is in a state of priming (Schacter & Tulving, 1994). According to Hebb’s (1949) original notion, the active cell-assemblies in the network represent working memory, whereas the network itself corresponds to long-term memory.
In addition, our discussion of word recognition later in this paper will show that the distinction between conscious and unconscious forms of memory can be represented in the same network, too. In these types of memory, excitations at a level above the critical threshold play different roles.
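The qualitative behaviour of a critical threshold can be illustrated with a toy model. The sketch below assumes a single excitation level between 0 and 1 and a cubic growth term, a common textbook form of bistable dynamics that is swapped in here purely for illustration; it is not the function used in the simulations of this paper, and the names `settle`, `theta`, and `rate` as well as their values are hypothetical.

```python
def settle(x0, theta=0.3, rate=0.5, steps=400):
    """Iterate a toy excitation level x (0 <= x <= 1) and return its end state.

    theta is the assumed critical threshold. Below theta the growth term
    is negative and the excitation dies out; above theta the growth term
    is positive and the excitation rises autonomously to its maximum of 1.
    """
    x = x0
    for _ in range(steps):
        x += rate * x * (x - theta) * (1.0 - x)
    return x


below = settle(0.25)  # starts just under the threshold and decays
above = settle(0.35)  # starts just over the threshold and saturates
```

Two starting levels that differ only slightly thus end in opposite states, which is the defining property of a critical threshold; a decaying sub-threshold level is the toy counterpart of priming.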

In studies of cognitive brain functioning, the notion of “critical threshold” has been around for a while. In one approach, it has been described as the “ignition of a cell-assembly”, cf. the studies by Braitenberg (1978), Pulvermüller (1999), Huyck (2007), and Palm, Knoblauch, Hauser, and Schuez (2014). In the approach of physics and complex systems theory (cf. Plenz & Niebur, 2014), the concept of criticality has been formalised and applied to brain processes, including aspects of cognition. Both approaches focus on the structural level. The role of the functional level in the search for constraints on the self-organisation of cognition is not as strongly emphasised as in this study. MacKay (1987), by contrast, did reason from the functional to the structural level. In his node structure theory, “conscious processing” and priming were explained by different strengths of excitations of network nodes. In this approach, however, the involved network was not described from a perspective of self-organisation, i.e. as a necessary consequence of mechanisms at the structural level, such as the Tanzi-Hebb rule.

2.4. The necessity of excitation loops and re-entrant excitation.

If a cognitive system behaves in a systematic way, conditions must exist under which something “becomes known” in the system. Considered from a perspective of self-organisation, this cognitive event must correspond to a dynamically stable network state, i.e. dynamic equilibrium. The simplest mechanism by means of which an equilibrium can come into existence at the neural level is that of an excitation loop: neural excitation returning to its origin. The notion of an excitation loop is one form of adaptive resonance, as proposed by Grossberg (1980), as well as a form of re-entrant excitation, the significance of which has been emphasised by Edelman (1987).

2.5. The necessity of binding

The role of neural binding in perception and cognition has long been recognised. Several authors have argued its theoretical necessity: von der Malsburg (1981), Dalenoort (1985), Smolensky (1990), Singer (1999), Treisman (1996), van der Velde and de Kamps (2006), and Huyck (2009), whereas various versions of the associated binding problem were analysed by Feldman (2013).

The necessity of binding has largely been shown in studies of perception, where separate representations for the identity and the location of an object are necessary. If this were not the case, a brain would need an inefficiently large number of specific representations, i.e. one for each object-location combination, as argued by von der Malsburg (1999).

Another theoretical argument showing the necessity of binding is our ability to directly associate two arbitrary words presented to us and, when asked, report one when given the other as a cue. At the functional level, a temporary association between the involved memory traces can be used to explain this ability. At the structural level, a binding problem occurs (von der Malsburg, 1995): how is it possible that only the two excitation patterns corresponding to the presented words start to resonate? This is only possible by means of a temporary connection: an existing neural pathway between these two excitation patterns that becomes temporarily available (Dalenoort, 1985; de Vries, 2004). In this way, re-entrant excitation is ensured. If one of the two temporarily connected patterns is re-excited, this event will cause the excitation of the other because of their common pathway. Following Feldman (2013), this temporary connection is a form of variable binding. The given example shows that it has to work in two directions; only then does it explain why we can recall either word of a presented word pair when given the other as a cue.
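The functional requirement on such a temporary connection, namely that it is short-lived and works in both directions, can be sketched as a small data structure. The class `TemporaryBindings` and its methods are hypothetical names introduced for this illustration; the sketch says nothing about how a pathway becomes available at the neural level.

```python
class TemporaryBindings:
    """Toy store of temporary, bidirectional connections between patterns."""

    def __init__(self):
        self._links = {}

    def bind(self, a, b):
        # Make the pathway available in both directions.
        self._links.setdefault(a, set()).add(b)
        self._links.setdefault(b, set()).add(a)

    def recall(self, cue):
        # Re-exciting one pattern excites the patterns bound to it.
        return sorted(self._links.get(cue, set()))

    def release(self):
        # Temporary connections do not persist.
        self._links.clear()


bindings = TemporaryBindings()
bindings.bind("lamp", "river")            # an arbitrary presented word pair
forward = bindings.recall("lamp")
backward = bindings.recall("river")
```

Either word of the pair serves as a cue for the other, and after `release()` neither does.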

2.6. Generalisation

In order to survive, our cognition has to be modular and is therefore built up of chunks (Simon, 1962). A well-known, classical finding is that a chunk facilitates the processing of its elements (Chase & Simon, 1973; de Groot, 1965). One of the tasks demonstrating this facilitation effect is the recognition of a word. An experienced reader recognises a word as a whole, and a letter in a word is recognised better than a letter in a non-word or even an isolated letter, a phenomenon long known as the word-superiority effect (Cattell, 1948, reprinted from 1886; Reicher, 1969; Baron & Thurston, 1973; Johnston & McClelland, 1974). In this paper, we will examine the downward effect of a chunk on its elements by means of the identification of a letter at a certain position in a presented word, the so-called letter-N task. The discussion of the selected word recognition models will focus on their generalisation. In particular, we will examine whether they can accommodate the letter-N task next to word recognition.

3. Models of letter- and word-processing

Our faculty for reading words has inspired many scientific writings. Based on distinctions made in the previous section, a selection of seminal models of letter- and word recognition (cf. Table 1) will be discussed: IAM, the interactive activation model (Hofmann & Jacobs, 2014; McClelland & Rumelhart, 1981; Rumelhart & McClelland, 1982), SERIOL, the sequential encoding regulated by inputs to oscillations within letter units model (Whitney, 2001a, 2001b), POBM, the parallel open bigram model (Grainger & Van Heuven, 2004; van Assche & Grainger, 2006), OM, the overlap model (Gomez, Ratcliff, & Perea, 2008), and SCM, the spatial coding model (Davis, 2010).

Table 1. Overview of models of word recognition: IAM = interactive activation model, SERIOL = sequential encoding regulated by inputs to oscillations within letter units, POBM = parallel open bigram model, OM = overlap model, and SCM = spatial coding model.

Our discussion of the models will focus on three criteria: self-organisation, binding, and generalisation, represented by the rows in Table 1. Symbols indicate whether (plus-sign) or not (minus-sign) the criterion of a cell's row is satisfied by the model of its column. If a model partly satisfies a criterion, a plus-minus is used.

3.1. Self-organisation

Within the specific domain of word recognition, all models in Table 1, except the OM, can be considered as being based on self-organisation. In these models, word recognition is the product of the local interactions between the nodes in a network. The word-superiority effect, for example, is explained by means of a bottom-up convergence of excitations, i.e. from letter- to word representations. In addition, the IAM posits a non-specific top-down process supporting the letter representations of a presented word (Footnote 1). The criterion of self-organisation does not hold for the OM because its statistical procedures for string comparison are not related to underlying mechanisms.

In view of the formation of nodes and connections, the criterion of self-organisation does not hold for the models in Table 1: they are constructed without reference to autonomous learning or development. Accordingly, the corresponding row of Table 1 contains plus-minus-signs. Related to the parallel open bigram model, the supervised learning of distributed word representations is described by Dandurand, Hannagan, and Grainger (2013). Davis's SOLAR model (1999), the predecessor of the SCM, cf. Table 1, explains the tuning of the connections between letter- and word nodes as a self-organising process.

3.2. Binding

Table 1 points to a major drawback of the IAM, noticed by many, e.g. Grainger (2008) and Davis (2010): slot-based coding, the introduction of specific nodes for letter-position combinations. As a general solution to the binding problem, this representation is inadequate because the mentioned inefficiency of combined object-location representations, cf. von der Malsburg (1999), also applies to slot-based coding.

Contrary to the IAM, letter representations are position-independent in Whitney's SERIOL model (cf. Table 1). Based on a neural model proposed by Grossberg (1978), the binding between a letter and its position takes place by means of the strengths of the excitation levels of the letter nodes in the network. The letter nodes excited by a presented word (or non-word) thus form a so-called positional gradient in which the node of the first letter has the highest excitation level and where the excitation levels of the following letters decrease with position. Accordingly, the appropriate bigrams, i.e. ordered letter pairs characterising a word, become active, which in turn leads to the activation of the correct word node.
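The idea that letter order can be read off from excitation levels alone can be sketched as follows. The linear gradient, the values of `top` and `step`, and the function names are assumptions made for this illustration; the sketch also forms every ordered letter pair, whereas the published SERIOL model constrains bigram activation in ways not described here.

```python
from itertools import combinations


def positional_gradient(word, top=1.0, step=0.1):
    """Assign each letter an excitation level that decreases with position."""
    return [(letter, top - step * i) for i, letter in enumerate(word)]


def ordered_bigrams(gradient):
    """Derive ordered letter pairs from excitation levels only.

    The letters are ranked by excitation, so the result does not depend
    on the order in which the (letter, level) pairs are supplied.
    """
    ranked = sorted(gradient, key=lambda pair: pair[1], reverse=True)
    return [a + b for (a, _), (b, _) in combinations(ranked, 2)]


gradient = positional_gradient("work")
print(ordered_bigrams(gradient))  # ['wo', 'wr', 'wk', 'or', 'ok', 'rk']
```

Because the pairs are derived from the excitation levels and not from list positions, supplying the same gradient in reversed order yields the same bigrams, which is the sense in which the letter nodes themselves remain position-independent.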

In the other bigram model in Table 1, the POBM, binding is only represented at the functional level by means of a system converting the absolute letter positions, produced by so-called letter detectors, into relative letter positions, necessary for the selection of the bigrams of the presented word. Likewise, there is no binding in the OM (cf. Table 1). The binding between a letter and its position is implicitly represented in the procedures giving the statistical distributions of the perceived location of a letter.

In the SCM (cf. Table 1), binding is ensured by means of “spatial patterns” dynamically assigned to letter nodes. Similar to the SERIOL model, the magnitudes of the excitation levels of these patterns represent letter order. Unlike the SERIOL model, the relative position of letters is represented by means of phase-dependent excitation levels instead of bigrams: only the bottom-up excitations corresponding to the letters of a presented word have the appropriate phase-differences necessary to activate the correct word representation. Because they accommodate binding, the SERIOL model and the SCM are the only ones qualified with a plus-sign in the corresponding row of Table 1.

3.3. Generalisation

Within the task of word recognition, the models in Table 1 differ in generalisation because of their explanation of various forms of position priming (for a review see Grainger & Whitney, 2004; and also Davis, 2010; van Assche & Grainger, 2006). As demonstrated by Evett and Humphreys (1981), this phenomenon can be induced by the brief presentation of a masked prime, usually a pseudo-word, followed by a target sequence of letters, of which the subject has to decide whether or not it is a word. If a word is presented, a priming effect occurs even when the prime contains letters of the target at different positions but in the same relative order. The explanation of this so-called relative-position priming is problematic for the IAM because of its letter-position nodes, which are restricted to the absolute positions of the letters.

Since the selected models are all tuned to the task of word recognition, they do not generalise to other tasks, cf. the minus signs in the last row of Table 1. For the letter-N task, all models lack the required, specific top-down excitation, as well as representations for sequence and re-entrant binding. In the next sections, we will discuss a general-purpose network based on self-organisation and neural binding, capable of explaining the various priming effects as well as the letter-N task.

4. Conceptual networks

The tasks of word recognition and reporting a letter at a given position of a word will show the generality of the cognitive brain model presented in this paper. As we will see, the representation of these tasks will require inhomogeneous and non-uniform networks, which cannot, in principle, be fully analysed in a statistical manner: the implementation of binding, for example, seems to be impossible in an analytical model. For these networks, to which we will refer as conceptual networks, only the process itself can be simulated, as argued by Dalenoort in de Vries and van Slochteren (2008, p. 189).

Although the presented, simulated networks are constructed, their design is a necessary step in the search for the conditions necessary for biologically plausible forms of self-organisation. Without the constraints imposed by a functioning system, this search is likely to fail since there are simply too many possible trajectories along which autonomous learning and development can take place.

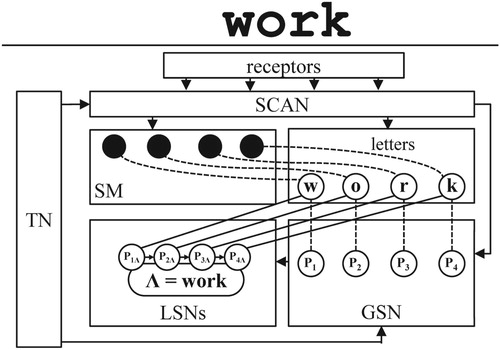

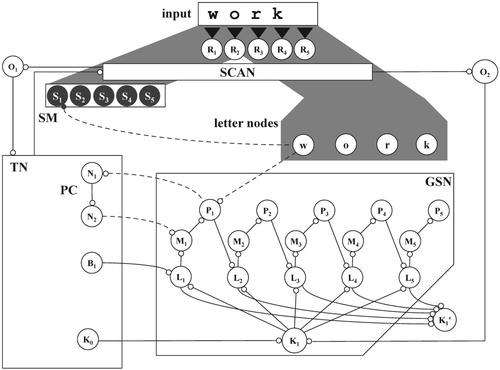

At a functional level of description, Figure 1 provides a schematic overview of the components of a conceptual network applied to letter- and word processing (labels in the figure do not have a function in the network but only serve to explain its parts to the reader):

Memory traces for letters: i.e. the large circles denoting letter nodes “w”, “o”, “r” and “k”.

A spatial map (SM), representing the locations of presented objects, such as letters.

A global sequence network (GSN), a subnetwork with nodes representing memory traces for sequence, cf. memory traces for first, second, etc., indicated by global position nodes P1, P2, etc.

Local sequence networks (LSNs): each word representation, or word node, has an LSN with local position nodes for the involved letters.

A task network (TN) containing memory traces for procedural knowledge about tasks of word processing.

A scanning mechanism (SCAN), transforming parallel, external excitations into series of excitation pairs.

Figure 1. A conceptual network applied to tasks of visual-word processing; example input is the word “work”; rectangles denote the network's components: receptors, task network (TN), scanning mechanism (SCAN), spatial map (SM), letter nodes, global sequence network (GSN) with global position nodes P1 .. P4, and local sequence networks (LSNs), one for each word, exemplified by word node “work” (also denoted by the symbol Λ) and its LSN with local, word-specific position nodes P1Λ .. P4Λ; the solid arrows and lines indicate permanent connections; the dashed lines stand for temporary connections (binding).

In order to refer to word node “work” in Figure 1, representing the author's favourite word, the symbol Λ will be used throughout the paper. For a local position node representing letter position N in the LSN of word M, we will use the notation PNM. Accordingly, the LSN of word node Λ comprises local position nodes P1Λ .. P4Λ. Contrary to the slot-based coding in the IAM (cf. Table 1), a local position node is only specific to a word and a letter position within that word.

In our discussion of the network, we will distinguish between the notions of excitation, activation, and priming. If we do not need to describe a node's excitation level as being below or above the critical threshold, we will use the general term excitation. In contradistinction, the terms activation, active, and activated refer to a node's excitation level if it is above the critical threshold. Alternatively, the terms priming or primed are appropriate for an excitation level below the critical threshold. Likewise, the description “node A activates node B” implies that node B's excitation level exceeds the critical threshold and the description “node A primes node B” implies that the excitation level of node B will be less than the critical threshold. Below we discuss the model's behaviour in self-organisation, binding and generalisation.

4.1. Self-organisation

Each node of a conceptual network represents the characteristics of a cell-assembly implied by the Tanzi-Hebb rule, notably a critical threshold (cf. the arguments of Sections 2.1, 2.2, and 2.3). In the network and its simulation, the neurons constituting a cell-assembly are not represented explicitly. All connections of the network consist of similar combinations of excitatory and inhibitory links, parts of which are implemented as specific functions and procedures (cf. Appendix A).

4.1.1. SPAL: selective propagation of an activation loop

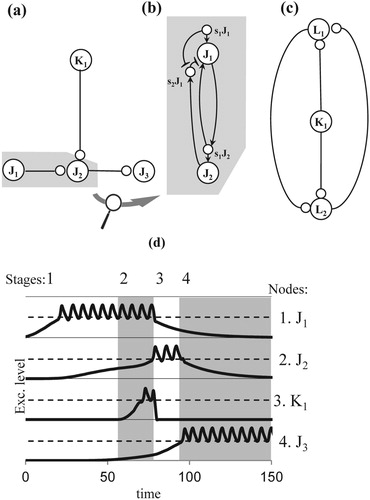

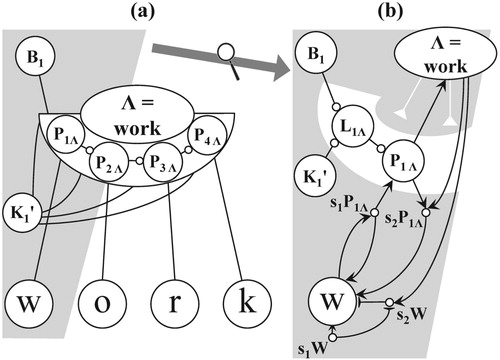

Human thought has many forms, such as a suddenly appearing, new insight or the recognition of a well-known, printed word. In a conceptual network, both forms correspond to the excitation level of a cell-assembly passing the critical threshold as the result of several incoming excitations. Whereas an insight may be produced by free-floating excitations, for an experienced reader, the recognition of a visual word requires a more disciplined excitation regime since it takes place so frequently and efficiently. In this regime, the notion of excitation loop, cf. Argument 2.4, plays a crucial role. We explain this notion by means of an intermediate node structure, cf. Figure 2a and b, and a cycle, cf. Figure 2c.

Figure 2. Networks for the propagation of an activation loop: (a) nodes (large labelled circles), sub nodes (small circles) and connections of an intermediate node structure; (b) detailed representation of a connection comprising excitatory and inhibitory links, i.e. arrows and lines with T-endings; (c) cycle; (d) simulation of the propagation of an activation loop in an intermediate node structure; dashed lines indicate the level of the critical threshold; vertically aligned, indexed areas, alternately coloured white and grey, represent stages in the evolution of excitation levels, of which the graphs are aligned horizontally.

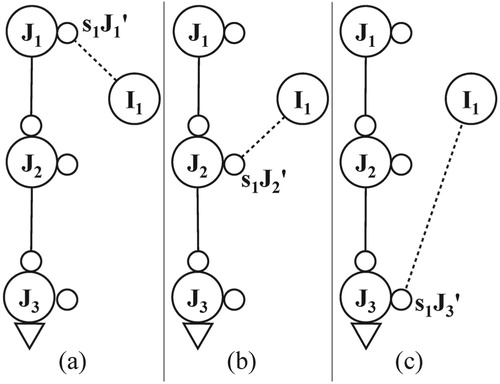

In Figure 2a, nodes are shown as large circles and sub nodes as small ones. At the neuronal level, a sub node is formed by some of the neurons constituting a cell-assembly. In a conceptual network, a sub node therefore belongs to a node, as is shown in the detailed representation of Figure 2b: a connection from node J1 to node J2 implies sub nodes s1J1 and s2J1, belonging to node J1, and sub node s1J2, belonging to node J2. In addition, the figure shows the links underlying an excitation loop: one from node J1 to sub node s1J2 and vice versa, and one from sub node s1J2 to node J2. So, if a node excites another node, it also excites itself by means of a sub node of the latter. We will refer to this process as recurrent excitation.

Consistent with the earlier distinction between excitation, activation, and priming, a node is then said to cause an activation loop if its excitation level surpasses the critical threshold. Recurrent excitation then ensures that its excitation level remains above the critical threshold and starts to oscillate. For node J1 in Figure 2a, the corresponding excitation curve is shown by the simulation in Stage 1 of Graph 1 in Figure 2d. Due to the critical threshold, a period of supra-threshold excitation is maintained, even when the external input has ended. Several causes for the oscillatory behaviour of a node exist. Inhibitory processes internal to a cell-assembly may decrease the excitation to the level of the critical threshold, every time the autonomous growth has reached its maximum value. Alternatively, it may be due to the refractory periods of the many neurons of a cell-assembly and the time required for their activation accumulation. It is possible that both inhibition and refractory periods play a role.

Selective propagation of an activation loop, SPAL, occurs if two conditions hold:

A node in an activation loop primes another node (e.g. node J1 primes node J2, cf. Figure 2a, Stage 1 of Graphs 1 and 2 in Figure 2d).

Simultaneously, a third node excites the primed node (node K1 excites node J2, cf. Figure 2a, Stage 2 of Graph 3 in Figure 2d), which causes the latter's excitation level to pass the critical threshold (Stage 3 of Graph 2 in Figure 2d).
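The two SPAL conditions can be sketched as a simple check on summed inputs. The additive combination of excitations, the numerical values, the function name `activated_nodes`, and the extra node `JX` are assumptions made for this illustration; the connection functions actually used in the simulations are those of Appendix A and are not reproduced here.

```python
def activated_nodes(primed_by_loop, excited_by_third,
                    priming=0.6, extra=0.6, threshold=1.0):
    """Return the nodes whose summed excitation passes the threshold.

    primed_by_loop   -- nodes primed by a node that is in an activation loop
    excited_by_third -- nodes that simultaneously receive input from a third node
    Both input strengths are sub-threshold on their own (assumed values).
    """
    levels = {}
    for node in primed_by_loop:
        levels[node] = levels.get(node, 0.0) + priming
    for node in excited_by_third:
        levels[node] = levels.get(node, 0.0) + extra
    return sorted(node for node, level in levels.items() if level > threshold)


# J1 primes J2 and a hypothetical neighbour JX; K1 excites only J2,
# so the activation loop propagates selectively to J2.
print(activated_nodes({"J2", "JX"}, {"J2"}))  # ['J2']
```

Priming alone, or input from the third node alone, leaves a node below the threshold in this sketch; only the coincidence of both selects the node to which the loop propagates.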

Although, in the example of Figure 2d, the excitation level of the third node is also above the critical threshold, a sub-threshold excitation can be sufficient for SPAL conditions to apply. In addition, not all propagations are selective: an activation loop can propagate without SPAL conditions. As shown in Stages 3 and 4 in Graphs 3 and 4 of Figure 2d, for example, an activation loop propagates from node J2 to node J3, without additional input to the latter.

When a node becomes active, it will inhibit the nodes that caused its activation. Figure 2b shows the required links for the connection from node J1 to node J2: an excitatory link from node J2 to sub node s2J1 and an inhibitory link from this sub node to its node J1. We will refer to the involved process as back-inhibition. For example, after nodes J1 and K1 have activated node J2 (Figure 2a), they both become inhibited, cf. Stage 3 in Graphs 1 and 3 of Figure 2d. The simulation in Figure 2d also shows the different strengths of back-inhibitions: the suppression of node J1 by node J2 leads to a state of priming in the former, whereas the excitation level of node K1 is reduced completely by node J2.

The back-inhibition of one node by another involves a sub node of the former, in the example of Figure 2b: sub node s2J1. By means of this sub node, the inhibition of a node's back-inhibition is possible if the node is excited anew. As shown in Figure 2b, sub node s1J1 will then inhibit sub node s2J1 and suppress inhibition from node J2. Inhibition of back-inhibition forms an important mechanism for a flexible control regime of the network.
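Back-inhibition and its suppression can be sketched with a single function. The subtractive form of inhibition, the numerical levels and strengths, and the name `back_inhibit` are assumptions chosen for this illustration so that the two outcomes described for nodes J1 and K1 are reproduced qualitatively.

```python
THRESHOLD = 1.0  # assumed critical threshold for this sketch


def back_inhibit(level, strength, re_excited=False):
    """Excitation level of a source node after its target has become active.

    level      -- excitation of the source node before back-inhibition
    strength   -- assumed strength of the inhibitory link via sub node s2
    re_excited -- True if the source node is excited anew; sub node s1 then
                  inhibits sub node s2 and the back-inhibition is suppressed
    """
    if re_excited:
        return level
    return max(0.0, level - strength)


j1 = back_inhibit(1.2, 0.8)                         # weak inhibition: J1 stays primed
k1 = back_inhibit(1.2, 1.5)                         # strong inhibition: K1 is silenced
j1_again = back_inhibit(1.2, 0.8, re_excited=True)  # back-inhibition is suppressed
```

With these values, J1 ends below the threshold but above zero, K1 ends at zero, and a newly excited J1 keeps its supra-threshold level.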

Table 2a illustrates part of the general scheme for our further discussions of loop propagation, consisting of begin- and end conditions as well as intermediate events. The table relates the loop propagation to the results of computer simulations by means of stage-graph pairs referring to certain periods of excitation. For the simulations in Figure 2d, for example, number pair (1, 1) of node J1 in Table 2a refers to Stage 1 of Graph 1 in Figure 2d, i.e. the start of this node's period of supra-threshold oscillation. Similarly, number pair (3, 2) of node J2 points to Stage 3 of Graph 2, which marks the beginning of the supra-threshold oscillation of node J2. In the previous stages, the node was already in a state of priming; the table thus shows that loop propagation from nodes J1 and K1 to node J2 in the network of Figure 2a requires that nodes J1 and K1 are both excited at a supra-threshold level. We will use stage-graph pairs for the description of all simulations of loop propagation in this paper, in combination with the notation of Table 2b discussed in the next section.

Table 2. Tables for loop propagation in (a) the intermediate node structure of Figure a and (b) the cycle of Figure c; columns for the begin- and end conditions indicate the nodes excited at the start and end of a table's loop propagation; (a) each number pair below a node name indicates a stage and graph in Figure d and refers to a period of the node's excitation that is characteristic of the simulated loop propagation in the intermediate node structure in Figure a; (b) node K1 and actual nodes L1 and L2, listed within brackets below generic nodes Li and Lj, indicate successive excitations of the loop propagation in the cycle of Figure c.

So far, the discussed examples of loop propagation are convergent in nature, i.e. two (or more) excitations produce a single loop. In addition, divergent excitation is possible: an activated node M then produces multiple excitation loops, a so-called fan out. Each of these loops will prime a node Ni and, depending on the fulfilment of the SPAL conditions, loop propagation will continue. Timing plays an important role: as soon as a node Ni becomes active, its back-inhibition to node M will stop any further loop propagation from the latter. Given appropriate timing, i.e. if the activations of two or more nodes Ni take place simultaneously, a bifurcation of activation loops occurs. Loop propagation then continues along several pathways in parallel.

At any moment, only a relatively small number of activation loops occur in the network. In contradistinction, many excitation loops will be present simultaneously, i.e. loops caused by nodes with an excitation level below the critical threshold. These loops do not propagate selectively. Accordingly, the involved nodes will prime all nodes they are connected with.

At the neuronal level, the successive propagations of an activation loop can be seen as an instance of the synfire chains studied by Abeles, Hayon, and Lehmann (Citation2004). In addition, neural mechanisms similar to those underlying the described propagation process, including back-inhibition, have been used in Fukushima's neocognitron (Citation1980) and MacKay's node structure theory (Citation1987). Neither study, however, takes into account the role of the critical threshold.

4.1.2. Cycles and sequences

In order to represent the notion of control at the structural level, intermediate node structures are incorporated in a cycle, cf. Figure c. To explain its functioning in general terms, we will distinguish the following nodes:

The centre node of a cycle, labelled K1 in Figure c, which has a function similar to that of node K1 in the network of Figure a: it produces the second input to an already primed node, causing the SPAL conditions to be fulfilled.

Two or more peripheral nodes, to some of which the centre node is connected, i.e. nodes L1 and L2 in Figure c.

A cycle can maintain one of several states. In Figure c this number is two, as shown in Table b. Simulations of loop propagation in cycles will be discussed later in Sections 6 and 7. The example cycle of Figure c serves to introduce the notion of a generic node name, central to our discussion of these simulations. Table b shows generic nodes Li and Lj, each of which can be substituted by actual nodes L1 and L2. Accordingly, the table shows that excitation loops will propagate from nodes K1 and L1 to node L2, if the nodes K1 and L1 become excited and that propagation will take place from nodes K1 and L2 to node L1, if the nodes K1 and L2 are excited. In our discussions, the required strengths of excitation will be indicated by stage-graph pairs referring to a simulated excitation level of an actual node, cf. Table a. Given the appropriate conditions, the cycle of Figure c will behave like a flip-flop. Loop propagation in cycles plays a crucial role in cognitive processes in general and in word recognition and letter identification in particular.
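The flip-flop behaviour described by Table b can be written down as a small transition table. The following is a sketch under simplifying assumptions: excitation is reduced to discrete states, and the names `FLIP_FLOP` and `step` are illustrative, not part of the model.

```python
# Transitions of the two-state cycle: (centre node, excited peripheral node)
# -> peripheral node that becomes excited next.
FLIP_FLOP = {
    ("K1", "L1"): "L2",
    ("K1", "L2"): "L1",
}


def step(state: str, centre_excited: bool) -> str:
    """Return the excited peripheral node after one potential propagation."""
    if not centre_excited:
        # Without excitation of centre node K1 the cycle keeps its state.
        return state
    return FLIP_FLOP[("K1", state)]
```

Substituting the generic nodes Li and Lj by actual nodes corresponds to looking up one row of the table: two successive excitations of the centre node return the cycle to its initial state.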

The activations of the centre node of a cycle determine when and at what frequency the peripheral nodes will become active. The centre node, however, is not to be conceived as a “control-node”. The moment and extent of its activation are dependent on the excitation levels of other nodes: the network has a collective control structure. Next to cycles, a conceptual network contains sequence networks. These are similar to cycles since they also have a centre node and several peripheral nodes. Sequence networks are crucial to visual-word processing because of their role in the representation of rank order. Cycles and sequence networks can interact because loop propagation starting from the centre node of a cycle or sequence network may be triggered by excitation from a peripheral node of another cycle or sequence network.

4.2. Binding

Various solutions to the binding problem discussed in Section 2.5 have been proposed, emphasising different aspects, like the role of attention, cf. (Reynolds & Desimone, Citation1999), or sparse coding of combinations of features (O’Reilly & Munakata, Citation2000; updated in O'Reilly, Munakata, Frank, Hazy, & Contributors, Citation2012). In another approach, solutions to the binding problem are based on the specific, temporal structure of neural spike patterns: binding by synchrony, cf. Hummel and Biederman (Citation1992), Shastri and Ajjanagadde (Citation1993), Hummel and Holyoak (Citation1997), von der Malsburg (Citation1999), Raffone and Wolters (Citation2001), and Sougné (Citation2001). Moreover, at the structural level, an attention-based solution to the binding problem also involves synchronous, neuronal firing, as argued by Fries (Citation2009) and Burwick (Citation2014).

A problem with binding by synchrony is its robustness. Can several, simultaneously occurring neural representations become mutually specific by means of their temporal spike patterns alone, without creating interference? Although this is theoretically possible, we will pursue the implications of an alternative answer to this question in this paper.

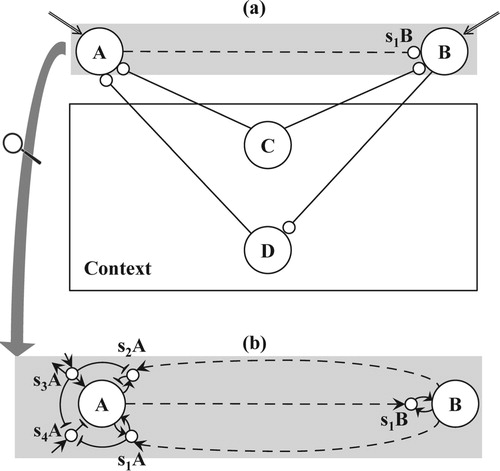

4.2.1. SEC: simultaneous excitation in context

For the explanation of a robust binding mechanism, we return to the exemplary task of Argument 2.5: the human capacity to directly associate two arbitrary words. How is it possible that only the two corresponding cell-assemblies in the brain start to resonate? It is not sufficient that their excitations occur simultaneously since this holds for many cell-assemblies. For example, when asked to associate the two presented words, the person involved may overhear a word spoken in the room next door. The cell-assembly excited by this word should not become bound to either of the cell-assemblies of the two presented words. As argued by Dalenoort (Citation1985) and de Vries (Citation2004), a pair of cell-assemblies, i.e. two nodes of a conceptual network (cf. Figure a), will only become temporarily connected if:

Both nodes are simultaneously excited (not necessarily at a level above the critical threshold),

Both nodes have compatible roles in the current context.

Figure 3. (a) Binding based on context: the temporary connection from node A to special sub node S1B of node B results from the current, simultaneous excitation of nodes A and B (indicated by double arrows) and the previous excitations from the sub network of their context, formed by nodes C and D, (b) detailed representation of a temporary connection containing nodes and their (special) sub nodes, next to permanent, excitatory links (solid arrows), temporary, excitatory links (dashed arrows), and permanent inhibitory links (solid lines with T-endings).

We will refer to these two conditions as simultaneous excitation in context or SEC. In the given example, the conditions only apply to the nodes of the two words presented to the person and prevent erroneous binding of either of them to the node of the third word in the example. Context corresponds to a subnetwork priming the two nodes prior to their simultaneous excitation. In our example, this sub network would include knowledge about the current situation, such as the concepts of “word” and “association”, next to knowledge about rules of communication. Later, we will discuss contexts based on visual cognition. In Figure a, context is depicted by node C, connected to both nodes involved in binding. At the neuronal level, SEC conditions cause the spike patterns of the involved pair of cell-assemblies to be in phase. As a consequence, an existing pathway becomes available by means of which both cell-assemblies can enter a state of resonance.
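As a rough illustration, the two SEC conditions can be expressed as a predicate over pairs of nodes. This is a sketch only: the `Node` class, the reduction of "compatible roles" to "primed by the same context", and the context label are assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    excitation: float      # current excitation level (may be sub-threshold)
    primed_by: frozenset   # names of the contexts that primed this node


def sec_holds(a: Node, b: Node, context: str) -> bool:
    """True if both nodes are excited and were primed by the given context."""
    simultaneously_excited = a.excitation > 0.0 and b.excitation > 0.0
    same_context = context in a.primed_by and context in b.primed_by
    return simultaneously_excited and same_context


task = "word association"  # hypothetical context label
word_1 = Node("word 1", 0.4, frozenset({task}))
word_2 = Node("word 2", 0.5, frozenset({task}))
overheard = Node("overheard word", 0.6, frozenset())
```

In this toy setting, `sec_holds(word_1, word_2, task)` is true, whereas the overheard word, although excited at the same time, does not satisfy the second condition with either presented word.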

4.2.2. Temporary links, loop propagation, and re-entrant excitation

Since loop propagation is fundamental to the functioning of the network, the underlying mechanisms for temporary and permanent connections should be compatible. Accordingly, a temporary connection, like a permanent one, is directed from one node to another and has a similar decomposition into sub nodes and links (Figure a and b, resp. a and b), and contains so-called special sub nodes. The general scheme is illustrated in Figure b: A temporary connection from node A to node B requires two special sub nodes for node A, sub nodes s1A and s2A, and one for node B, sub node s1B.

Figure shows the distinctive feature of a special sub node. Whereas a non-special sub node only has a single outgoing connection to its node, either excitatory (e.g. sub node s3A of node A) or inhibitory (e.g. sub node s4A of node A), a special sub node has an excitatory connection coming from its node (e.g. the links to special sub nodes s1A and s2A from node A and to special sub node s1B from node B) and, in addition, either an excitatory connection in the opposite direction (e.g. the links from special sub node s1A to node A and from special sub node s1B to node B) or an inhibitory connection (e.g. the link from special sub node s2A to node A). The mutual connections of a node with one of its special sub nodes do not imply the self-excitation or self-inhibition of that node. These processes, essential to loop propagation, only become effective if the special sub node receives sufficient input by means of its temporary, excitatory link (the dashed arrows in Figure b).

In order to understand why a directed, temporary link comes into existence, neural spike patterns play a central role. According to the model, the neurons of a node produce a richer, more varied spike pattern than the neurons of a special sub node excited by that node. By assumption, the pattern of the special sub node is always a part of the pattern of its node. Let us now consider the situation when SEC conditions hold for two nodes A and B. Since the spike patterns of both nodes are in phase, a special sub node of node B must have a spike pattern that – besides being part of the pattern of node B itself – is also part of the pattern of node A. Likewise, a special sub node of node A must have a pattern that is part of the pattern of node B.

For a temporary connection, the spike patterns of the involved nodes and special sub nodes determine the directions of the underlying links. If the spike pattern of a special sub node of a node is part of the spike pattern of another node, a temporary, excitatory link emerges from the last one to the special sub node. Due to these properties of spike patterns, the following three excitatory, temporary links emerge in the example of Figure b:

node A to special sub node s1B, ensuring forward excitation,

node B to special sub node s1A, ensuring recurrent excitation,

node B to special sub node s2A, ensuring backward inhibition.

As a consequence of the word association argument in Section 2.5, a temporary connection from node A to node B must allow re-entrant excitation from node B to node A, thus ensuring bidirectional variable binding. A permanent connection from one node to another does not allow bidirectional excitation, as excitation cannot return from the latter to the former. This is illustrated by the permanent connection from node J1 to J2 in Figure a and b, where the excitatory link from node J2 back to node J1 affects the latter's sub node s2J1 and can only produce back-inhibition. By contrast, the temporary connection from node A to node B in Figure a and b does produce re-entrant excitation because of the temporary, excitatory link from node B to special sub node s1A of node A. This special sub node will excite its node A when it receives excitation from node B. So, new input by means of a temporary connection can cancel any back-inhibition of a permanent connection. In Figure b, for example, node A may be affected by back-inhibition from a permanent connection involving sub node s4A of node A. Excitation of special sub node s1A of node A will then cancel this back-inhibition by means of the inhibitory link from special sub node s1A to sub node s4A.

4.2.3. Limits of a conceptual network

At the neural level, the instantaneous formation of the links underlying a temporary connection is not possible on the basis of Tanzi-Hebb learning, since this process is far too slow. Various substrates for a temporary connection are possible. A temporary connection may correspond to a pathway in a specific brain structure by means of which two memory traces can enter a state of temporary resonance. Alternatively, a distributed organisation is possible. The state of temporary resonance may then be carried by neurons not involved in the actual task. Also, fast changes in the strength of synapses, distinct from the slowly developing synapses in Tanzi-Hebb learning, may play a role (Huyck, Citation2009).

Since binding can be accomplished by several mechanisms at the neuronal level, it will be defined functionally in the simulation of a conceptual network. Moreover, an excitation pattern in the network is not represented at the level of the spike trains produced by individual neurons. For the current tasks, this would make the network too complex. Instead, binding is based on two lists for each context. In the network, context Γi is characterised by an ordered pair of node lists (A1 .. An) (B1 .. Bm) with nodes A1 to An belonging to the same context as special sub nodes B1 to Bm (the two lists need not have the same length). The two lists are an ordered pair since the direction of a temporary connection is implied by a special sub node, cf. sub node s1B in Figure . For a context, the two nodes that have the highest increase in excitation level within their list become temporarily connected (cf. the section on binding in Appendix A). The specific mechanism that accomplishes the binding is not crucial to the logistics of excitation processes at the level of a conceptual network.
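The list-based selection rule can be sketched as follows. The details of the actual rule are given in Appendix A and are not reproduced here; the function name, the dictionary representation of excitation levels, and the example node names are assumptions for illustration.

```python
def select_binding(nodes, special_sub_nodes, previous, current):
    """Pick the (node, special sub node) pair to be temporarily connected.

    nodes, special_sub_nodes: the two ordered lists of one context.
    previous, current: dicts mapping a name to its excitation level at the
    previous and the current time step (hypothetical representation).
    """
    def increase(name):
        return current[name] - previous[name]

    # In each list, take the element whose excitation rose the most.
    # Ties are resolved in favour of the first element (an assumption).
    source = max(nodes, key=increase)
    target = max(special_sub_nodes, key=increase)
    return source, target  # temporary connection: source -> target


previous = {"w": 0.1, "o": 0.1, "s1S1": 0.0, "s1S2": 0.0}
current = {"w": 0.6, "o": 0.2, "s1S1": 0.5, "s1S2": 0.1}
pair = select_binding(["w", "o"], ["s1S1", "s1S2"], previous, current)
```

Here `pair` is `("w", "s1S1")`: the direction of the temporary connection follows from the order of the two lists, as in the ordered pair that characterises a context.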

4.3. Binding, context loops, and consolidation

In a conceptual network, binding based on SEC conditions is consistent with the notion of a dynamic equilibrium since it implies a loop, cf. the argument of Section 2.4. If a temporary connection emerges from node A to node B, it turns an existing pathway from the latter to the former into a so-called context loop, exemplified by the pathway formed by nodes B, D, and A in Figure . If excitation is maintained in a context loop, consolidation of the involved temporary connection will start.

Binding thus precedes consolidation, which in turn comes before learning. The three processes are related by means of changes in connection strengths in a conceptual network. Like a permanent connection produced by the Tanzi-Hebb learning rule, a temporary connection resulting from binding has a strength. Processes of consolidation and learning affect the strengths of both temporary and permanent connections and will therefore play a role in the self-organisation of cognitive processes, in particular of letter- and word recognition.

The strength of a newly formed, temporary connection is dependent on the evolution of the excitation levels of both memory traces involved. In the model, the initial strength of a temporary connection is proportional to the sum of the excitation levels of these memory traces at the moment of their binding. If both excitation levels are high enough, i.e. if they exceed a so-called binding threshold, the strength of the temporary connection increases, i.e. at the functional level, consolidation starts. The degree of consolidation depends on the persistence of the excitations in both temporarily connected nodes, i.e. on the duration of the reverberation in the implied context loop and the levels of excitation produced.
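The two rules in this paragraph can be stated compactly. The sketch below assumes a proportionality constant `k` and a scalar `binding_threshold`; neither value is taken from the model, and the function names are illustrative.

```python
def initial_strength(excitation_a: float, excitation_b: float, k: float = 0.5) -> float:
    """Initial strength of a temporary connection at the moment of binding.

    Proportional to the sum of both excitation levels; k is an assumed constant.
    """
    return k * (excitation_a + excitation_b)


def consolidation_starts(excitation_a: float, excitation_b: float,
                         binding_threshold: float) -> bool:
    """Consolidation starts only if both levels exceed the binding threshold."""
    return excitation_a > binding_threshold and excitation_b > binding_threshold
```

Note that a high sum alone is not sufficient for consolidation in this reading: one strongly and one weakly excited node yield a connection with a sizeable initial strength that nevertheless does not consolidate.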

As we will discuss later, a temporary connection may persist within the sub network of its context, dependent on the context's nature and the excitation levels of the implied nodes. At a functional level, several nodes temporarily connected within their context network then represent an episode, cf. Treisman (Citation1988), and Schacter and Tulving (Citation1994). As we will show in this paper, the letters of a presented word may also cause the formation of an episode. The role of context in the formation and consolidation of temporary connections is consistent with findings of Howard, Viskontas, Shankar, and Fried (Citation2012): excitation patterns underlying the context in which something is learned or remembered, re-occur during recall.

4.4. Spatial map

In a conceptual network, the separate representations of an object's location and identity imply different types of representation. Whereas object identities are represented by means of the network nodes discussed in the previous sections, locations are represented in a spatial map (SM in Figure ), cf. Dalenoort (Citation1985, Citation1987) and de Vries (Citation2004, Citation2016). The SM is a neural structure in which excitation patterns can occur temporarily as a result of external input of the network. The relationships of the spatial map's excitation patterns are isomorphic to those of the external objects causing these excitation patterns. Accordingly, the spatial map represents pure form.

Although the spatial map is a continuous structure, its excitation patterns do have the same characteristics as those of network nodes. In particular, the excitation level of a pattern in the spatial map is also subject to autonomous growth. According to the model, an active pattern, i.e. one in which the level of excitation exceeds the critical threshold, will then contribute to the neural conditions necessary to produce a conscious experience of a certain location. Alternatively, a pattern with an excitation level below the critical threshold, corresponds to a primed location at the functional level. In addition, an excitation pattern in the spatial map may become temporarily connected to a network node. The involved temporary connection is again a form of variable binding, cf. Feldman (Citation2013), like the example of the two arbitrary words. Therefore, binding has to work in both directions here, too. We can indicate the location of a presented object when given its identity or report its identity when given its location. Here, the context of the actual SEC condition is based on acquired, visual knowledge. By means of its temporary connection, an excitation pattern in the spatial map can take part in loop propagation and has the equivalent of a sub node of a node, cf. Figure b and 3b. In the simulations of a conceptual network discussed in this paper, a simplified map is used: a two-dimensional structure with nodes representing possible locations.

4.5. The hypothesis of serial binding

The proposed conditions for binding, SEC, are limited to a single pair of elements: either two nodes or a node and an excitation pattern in the spatial map. The temporary links underlying a single temporary connection can come into existence in parallel: the spike patterns of the involved nodes and sub nodes ensure that the right links will emerge. With binding of location and identity, however, a fundamental problem in relation to the SEC conditions appears: how do the correct temporary connections come into existence for several simultaneously presented stimuli? The mere presentation of two letters, for example, already produces four excitation patterns, all present in the same visual context: two excitation patterns in the spatial map, representing letter locations, and two excitations in letter nodes, representing their identity. Now, the hypothesised SEC conditions do not produce the required temporary connections because all four excitations occur simultaneously in the same context. To solve this problem, a scanning mechanism, SCAN, was introduced (see Figure ; see also de Vries, Citation2004). This mechanism reacts to simultaneously presented stimuli affecting the network's receptors, like the letters of a word, all presented at the same moment. When these stimuli occur, each of them leads to an excitation loop in the scanning mechanism, representing a combination of a stimulus’ location and identity. These excitation loops are released into the network in rapid succession. Once released, each of these excitation loops splits: one part excites the spatial map, thus producing an excitation pattern corresponding to the location of the stimulus, another excites the network node of its identity (Footnote 2). In fact, the excitation of this node involves many of the aspects of feature binding mentioned by Feldman (Citation2013), a process that does not belong to the core of this paper. In agreement with Feldman's arguments, this process is understood relatively well, in contradistinction to the aforementioned variable binding.
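The serialising role of the scanning mechanism can be sketched with a queue that releases one excitation loop at a time. This is an illustration only: the function name `scan`, the tuple representation of a loop, and the release order by increasing location are assumptions made here.

```python
from collections import deque


def scan(stimuli):
    """Release simultaneously presented stimuli one by one.

    stimuli: iterable of (location, identity) pairs, all present at once.
    Assumption: loops are released in order of increasing location.
    """
    pending = deque(sorted(stimuli))
    while pending:
        # Each released loop splits: one part excites the spatial map
        # (location), the other the node of the identity.
        location, identity = pending.popleft()
        yield location, identity


# Because only one pair is excited per release, SEC conditions single out
# exactly one location-identity pair at a time.
bindings = [(identity, location)
            for location, identity in scan([(3, "r"), (1, "w"), (2, "o"), (4, "k")])]
```

For the four letters above, `bindings` pairs each letter with its own location, which a fully parallel presentation in a single context could not guarantee.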

4.6. Different types of context in binding

An important issue in binding, consolidation, and learning is an adequate balance between robustness and plasticity, cf. (Huyck, Citation2009). To ensure stable, yet flexible binding, we will distinguish between outward and inward contexts. In an outward context, at least one of the two potential elements for binding, i.e. a node of the network or pattern in the spatial map, is excited externally, i.e. by a receptor of the network. A temporary connection formed in an outward context possibly consolidates, dependent on the excitation levels of the involved nodes. Within the subnetwork of a context, several temporary connections may therefore co-exist if the pairs of involved nodes or spatial map patterns received external excitation successively, for example, as a result of the functioning of the scanning mechanism.

Contrary to an outward context, a network node or spatial map pattern in an inward context receives excitation from an internal source, i.e. from non-receptor nodes. In the network, a temporary connection in an inward context does not consolidate. At any moment, therefore, only the most recent temporary connection exists in the sub network of this context type. In the discussion of letter- and word processing, we will discuss further examples involving both context types and point out their necessity. The proposed hypothesis on serial binding is restricted to a single context. Therefore, binding processes involving nodes belonging to different contexts can take place in parallel. For example, different contexts may imply different modalities such as the visual and the auditory domain.
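The difference between the two context types can be summarised in a small bookkeeping sketch. The class and method names are hypothetical, and the sketch ignores that consolidation in an outward context additionally depends on the excitation levels of the involved nodes.

```python
class Context:
    """Keeps track of the temporary connections within one context."""

    def __init__(self, name: str, kind: str):
        if kind not in ("outward", "inward"):
            raise ValueError("kind must be 'outward' or 'inward'")
        self.name = name
        self.kind = kind
        self.connections = []

    def add_connection(self, source: str, target: str) -> None:
        if self.kind == "inward":
            # No consolidation: only the most recent connection exists.
            self.connections = [(source, target)]
        else:
            # Successively formed connections may co-exist.
            self.connections.append((source, target))


outward = Context("outward example", "outward")
inward = Context("inward example", "inward")
for ctx in (outward, inward):
    ctx.add_connection("w", "S1")
    ctx.add_connection("o", "S2")
```

After the two bindings, the outward context holds both connections, whereas the inward context retains only the second.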

4.7. Implications of serial binding: development of a representation for sequence

In a primordial stage of the network, the scanning mechanism could be a necessary condition for the autonomous development of a neural representation of sequence, i.e. a global sequence network, the GSN, Figure . A network with parallel binding would therefore be less plausible, not only because of its aforementioned lack of robustness, but also on developmental grounds.

Figure 4. Components of a conceptual network: receptors R1 .. R5, scanning mechanism SCAN, spatial map SM with five locations S1 .. S5, orienting nodes O1 and O2, letter nodes w, o, r, k, global sequence network GSN, task network TN and position cycle PC; solid lines indicate permanent connections, dashed lines depict temporary connections.

Compared to the flip-flop cycle in Figure , the GSN is more general: instead of one out of two stable excitation patterns, it can produce one out of n, with n = 5 in Figure . In addition, the GSN must be able to support a variety of tasks. For this purpose, the number of peripheral nodes in the GSN is increased and the nodes are divided into sets, referred to as L-, M-, and P-nodes, forming an interleaved pattern. Figure shows this pattern for five elements of each set: the nodes L1 .. L5, M1 .. M5, and P1 .. P5. The latter are referred to as global position nodes, representing the notions of first to fifth. The temporary connections involved in the GSN are another example of the aforementioned, bidirectional variable binding and – for the process of word recognition – they are ensured by the contexts Γ1 .. Γ4 defined in Table .

Table 3. Contexts necessary for binding in the networks of Figures and ; each context is defined by: a symbol (for reference only), a list of nodes, a list of special sub nodes, and a type (inward or outward).

4.8. Generalisation

A conceptual network is an architecture for “computation” with the following properties:

An intermediate node structure enables a test of something,

A cycle or sequence network enables iteration,

Binding ensures the application of procedural knowledge, i.e. by means of temporary connections, a part of the network can function as a procedure and can interact with different other parts, representing data,

The propagation of excitation and activation loops enables sequential computation and – due to the bifurcation of a loop – parallel processing as well.

These four properties give the conceptual network the status of a general computational system, based on self-organisation.

5. Structures for word recognition

What more is needed for word recognition besides a representation of sequence? Figure already shows a crucial element in the representation of words in a conceptual network: centre node K1′. For the numerous local sequence networks (LSNs, cf. Figure and Figure a) in the network, one for each represented word, node K1′ is their centre node. Within the GSN, it has incoming excitatory connections from all L-nodes. Accordingly, one sequence network, the GSN, can trigger a multitude of other sequence networks, the LSNs of represented words.

Figure 5. (a) Word node “work” (=Λ) and its LSN with local position nodes P1Λ to P4Λ, (b) sub network underlying the bi-directional connection of a local position node and its letter node.

5.1. Task network

In a conceptual network, the knowledge necessary for a task is represented in a so-called task network (TN), cf. Figures and . It is connected to the scanning mechanism. In turn, the task network has incoming connections from two, so-called, orienting nodes: nodes O1 and O2. At the functional level, the excitation of these nodes corresponds to an orienting reflex, cf. Sokolov (Citation1963): “something has happened outside”, the start of a new episode. Both orienting nodes are activated by the scanning mechanism. Node O1 becomes active when the scanning starts: i.e. when the first of a series of excitation loops from external origin is released into the network. Orienting node O2 becomes active each time an excitation loop of a series is released.

In addition, Figure shows several nodes necessary for a correct start of the word recognition process: two nodes of the so-called position cycle (PC), task network nodes N1 and N2, node K0, connected to the centre node of the GSN, and node B1, not only connected to the first of the so-called L-nodes in the GSN (Figure ) but also to all of its counterparts in the LSNs (Figure b).

5.2. Local sequence networks

As argued by Grainger (Citation2008), explanations based on a “word image” no longer hold and the recognition of a word depends on a representation of the involved letter-identities and -positions. For each word node in a conceptual network, both representations are combined in a local sequence network (LSN). Figure a shows the LSN of the word node for the word “work” (referred to by the symbol Λ). Consistent with the chosen framework of self-organisation, the structure and functioning of each LSN is analogous to that of the GSN. Both are sequence networks based on a centre node and enable SPAL but an LSN has a simpler structure of peripheral nodes. As displayed in Figure b, distinct L- and P-nodes are now interleaved and M-nodes are absent. In addition, P-nodes, referred to as local position nodes, are word-specific: For the example in Figure , nodes P1Λ .. P4Λ represent the positions of the letters of the word “work” (=Λ).

In the description of an LSN, three types of pathways are essential, each with a structure similar to that of the permanent and temporary connections shown in Figures b and b. For each letter node, a fan of excitatory bottom-up pathways exists, linking the letter node to its local position nodes of the various words containing the involved letter at the corresponding position. For example, in Figure b, the pathway comprises letter node “w”, sub node s1P1Λ, local position node P1Λ, and word node “work”.

In addition, an LSN contains excitatory top-down pathways. Each of them contains links leading from a word node to a letter node. Figure b shows the top-down pathway from word node “work” to sub node s2P1Λ, and then to letter node “w”. The number of these excitatory, top-down pathways per word node equals the length of the represented word.

Finally, an inhibitory top-down pathway exists in an LSN as well. The example in Figure b shows the pathway from word node “work” to sub node s2W, and then to letter node “w”. By means of these pathways, back-inhibition (cf. Section 4.1.3) occurs if a word node becomes active: the excitations in its letter nodes are then suppressed to a level below the critical threshold. Next to the letter nodes, the back-inhibition also affects the (sub) nodes in the LSN, as indicated in Figure b by the inhibitory links from word node to LSN (white lines on a grey background). Similar to permanent and temporary connections, inhibition of the back-inhibition of a letter node by a word node occurs if the former receives new input, cf. the roles of sub nodes s1W and s2W in Figure b.
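The fan of bottom-up pathways of a letter node can be pictured as an index from letter and position to the words whose LSN contains a matching local position node. The sketch below is a deliberately flat data structure: it omits sub nodes, top-down pathways, and inhibition, and the function name and example lexicon are assumptions.

```python
from collections import defaultdict


def build_bottom_up_fan(words):
    """Map (letter, position) to the words having that letter at that position.

    Each entry stands for one local position node, e.g. ("w", 1) for
    position 1 in the LSN of "work". Positions are counted from 1.
    """
    fan = defaultdict(list)
    for word in words:
        for position, letter in enumerate(word, start=1):
            fan[(letter, position)].append(word)
    return dict(fan)


fan = build_bottom_up_fan(["work", "word", "cork"])
```

In this example, `fan[("w", 1)]` lists "work" and "word", while `fan[("k", 4)]` lists "work" and "cork". The number of entries that mention a given word equals its length, in line with the number of excitatory top-down pathways per word node.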

6. Word recognition

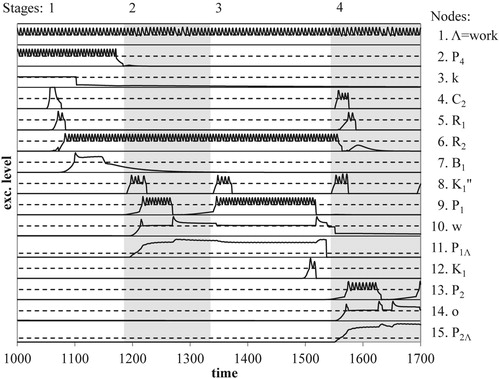

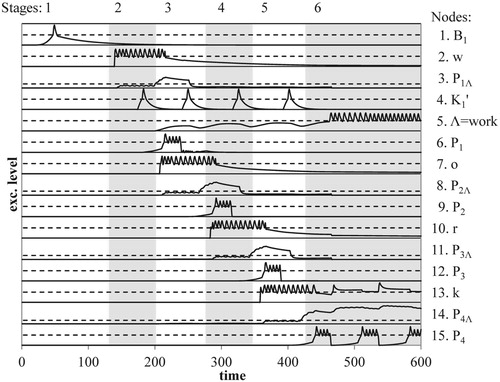

How do the discussed structures interact to produce the recognition of a word? This will be explained by means of the computer simulation illustrated in Figure , which is based on the same format as Figure d.

Figure 6. Evolution of excitation levels for the nodes of the conceptual network for word recognition: each numbered graph gives the excitation curve of a (sub) node of the network (cf. Figures and ); stages and graphs indicated as in Figure d.

6.1. Task set and word presentation

In order to recognise a word, the network must have a task set, i.e. appropriate expectations about the upcoming stimuli and required responses. This means that all sequence networks, the GSN as well as all LSNs, and the position cycle (PC) are ready to start functioning. Accordingly, the nodes necessary for loop propagation in these sub networks are primed, i.e. nodes B1, K0, and N1 of the task network (cf. Figures and ). In addition, the task set includes the expectation of a left to right order of the presented letters. At the structural level, this corresponds to a specific priming of the scanning mechanism by the task network, cf. Figure .

The actual presentation of example word “work” causes the simultaneous excitation of four receptors, which in turn excite the scanning mechanism in parallel. This leads to the activation of orienting nodes O1 and O2 (see Figure ). Consistent with SPAL conditions, node O1's activation then leads to the activation of the already primed nodes B1, K0, and N1 of the task network. The active node B1 (Stage 1 of Graph 1 in Figure ) primes node L1, thus ensuring a correct start of the SPAL in the GSN (cf. Figure ). In addition, node B1 causes a fan of excitations, each of which primes a node L1M of all word nodes M, necessary for a potential SPAL in the corresponding LSNs (Figure ).

6.2. Synchronisation of loop propagation in global and local sequence networks

After the presentation of our example word, the scanning mechanism (SCAN) successively releases the excitation loops produced by four, parallel, external excitations of the network's receptors. For each receptor Ri, the corresponding excitation loop Ei released by SCAN leads to a bifurcation, causing the simultaneous excitation of node S in the spatial map and letter node A (cf. Figure , not shown in Figure ). According to SEC conditions (cf. outward context Γ1 in Table ), a temporary connection emerges from letter node A to a sub node of node S of the spatial map.

Consistent with the properties of orienting nodes (Section 5.1), each release of excitation by SCAN causes an activation of orienting node O2. This affects the already primed nodes in GSN and LSNs and produces the loop propagation shown in Table . For each excitation release Ei, the table describes how the excitation of letter node A (not necessarily at a level above the critical threshold) produces the SPAL conditions necessary for the synchronous loop propagations in some of the LSNs and the GSN. Each excitation of a local position node primes the involved word node whereas the excitation of a global position node leads to position-identity binding: SEC conditions hold for global position node Pi and letter node A, according to outward context Γ2, cf. Table . The emergence of the temporary connection from a letter node to a global position node does not interfere with a letter node's already existing temporary connection with the spatial map since both temporary connections come into existence in different contexts. In the model, a position-identity binding also causes the scanning mechanism to release another excitation. A next transition in the loop propagations in GSN and some of the LSNs will then occur.

Table 4. Word recognition: Loop propagation in the global and local sequence networks (GSN and LSN) of Figures and ; the columns for the begin and end conditions indicate the nodes excited at the start and end of the loop propagation necessary for the transition from position i to position i + 1 by means of permanent and temporary connections (solid and dashed arrows, respectively); for generic nodes, their respective actual nodes are listed below them; a number pair below a node name gives a stage and graph in the node's excitation curve of Figure .

As excitations for the letters of the presented word are successively released by the scanning mechanism, the number of word nodes with newly activated local position nodes in the LSNs decreases. This is similar to a knock-out competition: After the first excitation release of the presented word “work”, for example, all word nodes of words starting with the letter “w” will be primed by the first local position node in their LSN, after the second release, only those starting with the letter pair “wo” will be primed by their second local position node, etc. Word nodes representing (some of) the presented letters, but not at the right position, will remain in a state of priming due to relatively weak coincidences of excitations in the position nodes of their LSN. Accordingly, mutual inhibition of word nodes, a winner-takes-all competition, cf. McClelland and Rumelhart (Citation1981) and O’Reilly and Munakata (Citation2000), does not play a central role in the recognition process.
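The knock-out character of this priming process can be illustrated with a minimal symbolic sketch. It is not the simulation reported in this paper: excitation curves, thresholds, and sub nodes are left out, and the function name `knockout_priming` and the toy lexicon are hypothetical. The sketch only assumes that, after each serial release, a word node is newly primed at a position if its letter at that position matches the released letter.

```python
def knockout_priming(lexicon, presented):
    """For each serially released letter, list the words whose local
    position node at that position is newly primed.

    Symbolic stand-in for coinciding excitations in an LSN; no mutual
    inhibition between word nodes is used.
    """
    still_primed = list(lexicon)
    history = []
    for position, letter in enumerate(presented):
        # A word stays in the competition only if it has the released
        # letter at the current position.
        still_primed = [
            word for word in still_primed
            if position < len(word) and word[position] == letter
        ]
        history.append(list(still_primed))
    return history


# Hypothetical toy lexicon, not taken from the paper's simulation.
LEXICON = ["work", "word", "walk", "wore", "cork", "bark"]
HISTORY = knockout_priming(LEXICON, "work")
for step, words in enumerate(HISTORY, start=1):
    print(step, words)
```

In this toy run, the set of newly primed words shrinks from four candidates after the first release to the single word “work” after the fourth, without any competition between the word nodes themselves.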

After a series of position-identity bindings, the scanning mechanism will not produce an excitation loop any more. Accordingly, centre node K1 of the GSN will not become active and loop propagation in GSN and LSNs will halt. Contrary to the previously activated global position nodes, SPAL conditions now lead the loop propagation of the GSN toward node N1 of the task network, into the position cycle PC, i.e. nodes N1, N2, Mi, and Pi. The loop propagation in the PC keeps activating the global position node temporarily connected to the node of the last letter of a presented word. Accordingly, the continued activation of this position node will increase the excitation of the involved letter node. In turn, this reverberation causes the corresponding word node to become active, cf. the last jump in excitation in Stage 6 of the excitation curve of word node “work” in Graph 5 of Figure . The assumption of a position cycle thus assigns an essential role to the reverberation caused by the last letter in a string of letters. If the string forms a word, the reverberation ensures that word nodes corresponding to all but the last of the presented letters, e.g. the word “bar” in the word “bark”, do not become active but remain primed.
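The role of the last letter can be made concrete with a small logical sketch. It replaces the graded excitations and the critical threshold of the model by three discrete states; the function name `classify_word_nodes`, the state label "unprimed", and the toy lexicon are hypothetical. The sketch assumes that a word node becomes active only if all of its local position nodes were excited and the reverberation of the position cycle reaches it through its own last position.

```python
def classify_word_nodes(lexicon, presented):
    """Classify each (non-empty) word as 'active', 'primed' or 'unprimed'
    after the serial presentation of a letter string."""
    last = len(presented) - 1  # position kept active by the position cycle
    states = {}
    for word in lexicon:
        # Local position i is excited when the letter released at
        # position i matches the word's letter at that position.
        excited = [
            i < len(presented) and presented[i] == letter
            for i, letter in enumerate(word)
        ]
        # The reverberation reaches a word only via its own last position.
        reverberates = (len(word) - 1 == last) and excited[-1]
        if all(excited) and reverberates:
            states[word] = "active"
        elif any(excited):
            states[word] = "primed"
        else:
            states[word] = "unprimed"
    return states


# Hypothetical toy lexicon, not taken from the paper's simulation.
STATES = classify_word_nodes(["bar", "bark", "barn", "dark", "sit"], "bark")
print(STATES)
```

Under these assumptions, “bar” remains primed when “bark” is presented, because the reverberating position is the fourth one, which “bar” does not have.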

At the functional level, the word node's persistent activation corresponds to the recognition of the presented word. Within the sub network of the implied context, the temporary connections from letter nodes to the GSN's global position nodes represent the episode of the presentation of a letter sequence.

7. From word recognition to letter identification

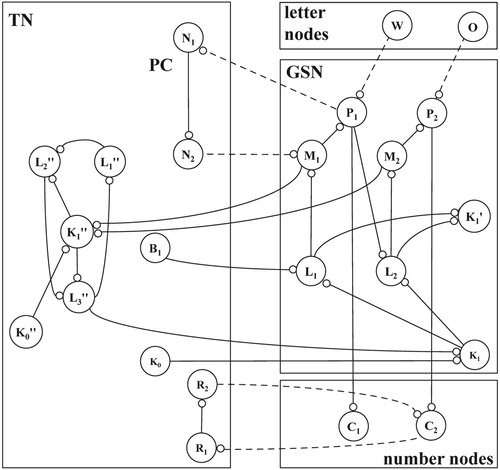

The general nature of the proposed model appears when it is applied to a different task, the identification of a letter at a certain position in a word, the letter-N task. As shown in Figure , the network then is a representation of ordinal numbers. Their emergence is an important step in the development of a GSN. In Figure , node Cn represents ordinal number n, connected to global position node Pn in the GSN. Accordingly, the network is capable of an elementary form of counting, corresponding – at the functional level – to the ability to go from one object to the next. In cognitive psychology (Shiffrin & Schneider, Citation1977), this type of process is well-known as an example of a so-called “controlled” task. By implication, it must be possible to interrupt the process. At the structural level, controlled processing implies constraints on loop propagation in the GSN. For this purpose, the TN contains a cycle: centre node K1″ with peripheral nodes L1″, L2″, and L3″, cf. Figure .

Figure 7. Parts of a conceptual network necessary for letter identification: global sequence network GSN, task network TN with position cycle PC, letter nodes and number nodes.

In addition to ordinal number nodes, a representation of the letter-N task requires the generic notion of a target position. For this purpose, nodes R1 and R2 are part of the task network, see Figure . In line with the aforementioned, bidirectional variable binding, each of them is comparable to the formal argument of a procedure, which becomes temporarily bound to an actual value, i.e. a number indicating a letter position.

As an example of the letter-N task, we will use the identification of the second letter of the already presented and recognised word “work”. The discussion will show how structures and processes of word recognition are “re-used” in the letter identification process. Before the letter-N task can start, the network must first settle on a new task set.

7.1. The task set for letter identification

The task set of the letter-N task requires retention of the given target position and therefore a loop propagation in yet another cycle. For our example, the task set requires the activation of the number node C2 representing the actual target position (cf. Stage 1 of Graph 4 in Figure ) and the activation of node R1 (cf. Stage 1 in Graph 5 of Figure ) of the task network. Due to SEC conditions (cf. outward context Γ5 in Table ), a temporary connection is formed from node C2 to node R1, and then from node R2 to node C2 (cf. outward context Γ6 in Table ). The loop propagation from node R2 to number node C2, however, does not yet take place. This will happen when the number node receives the second excitation required for SPAL, i.e. when the appropriate global position node, node P2 in Figure , becomes activated. Meanwhile, node R2 will oscillate at a supra-threshold level (Stages 1, 2, and 3 in Graph 6 of Figure ).

7.2. An elementary counting process

In order to complete the letter-N task, an elementary counting process has to take place. At the structural level, this process is similar to word recognition because letter identification also requires a synchronous loop propagation in the GSN and LSNs. The process is initialised by the activation of node B1, cf. Stage 1 of Graph 7 in Figure , causing the position cycle PC to move from position node P4 to position node P1, due to changed SEC conditions, cf. Contexts Γ3 and Γ4 in Table . Unlike word recognition, however, the loop propagations in the GSN and the LSNs are now triggered by TN's cycle and not by the scanning mechanism, as shown in Table and Figures and . The components of the network now subserve a different goal and accordingly the general coordination of a distributed system changes. As Feldman (Citation2013) points out, this flexibility of control of behaviour is an important aspect of binding.

Table 5. Letter identification: Loop propagation in the global and local sequence networks (GSN and LSN) of Figures and ; items are indicated as in Table ; numbers in stage and graph pairs refer to the simulation in Figure .

As an example of the network's flexibility, we mention the interruption of the letter-N task. This will take place if the SPAL conditions for TN's loop propagation are absent, i.e. if node K0″ of the TN cycle no longer excites the cycle's centre node K1″ (cf. Figure ). As can be inferred from the figure, loop propagation in the GSN then halts. When centre node K1″ becomes active again, the GSN's loop propagation can continue correctly because loop propagation in position cycle PC remained active at the interrupted position. Accordingly, the network accommodates controlled processing necessary for task performance.
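The interruption behaviour described here can be sketched as a gated counter. This is an abstraction under stated assumptions, not the network itself: the class name `CountingProcess` and its attribute `gate_open` are hypothetical, with `gate_open` standing in for node K0″ exciting centre node K1″, and the retained `position` standing in for the loop propagation that stays active in the position cycle.

```python
class CountingProcess:
    """Steps through global positions while a gate is open.

    The gate is a stand-in for centre node K1'' being excited; the
    stored position is a stand-in for the position cycle PC.
    """

    def __init__(self, n_positions):
        self.n_positions = n_positions
        self.position = 1      # counting starts at the first position
        self.gate_open = True  # SPAL conditions for the TN cycle hold

    def step(self):
        """Advance one position if the gate is open; otherwise hold."""
        if self.gate_open and self.position < self.n_positions:
            self.position += 1
        return self.position


counter = CountingProcess(n_positions=4)
trace = [counter.step()]            # gate open: position 1 -> 2
counter.gate_open = False           # interruption of the task
trace += [counter.step(), counter.step()]
counter.gate_open = True            # task resumes
trace.append(counter.step())
print(trace)  # prints [2, 2, 2, 3]
```

Because the position is held rather than reset during the interruption, counting resumes at the interrupted position, which is the property the text attributes to the position cycle.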

7.3. Position-specific downward excitation and re-entrant excitation

During the letter-N task, the active word node continuously suppresses its letter nodes as a consequence of back-inhibition. As shown in Table , SPAL conditions occurring in the LSN of the active word node lead to position-specific downward excitation from a local position node to a letter node. How does this excitation break the inhibition of that letter node by its active word node? This shows the role of the inhibition of back-inhibition due to the new excitation implied by a temporary connection, cf. our discussion of Figure b. Simultaneous with the excitation of the local position node, the corresponding global position node becomes active, as indicated in Table , and generates new excitation for the letter node that it is temporarily connected with. During letter identification (Table ), the direction of excitation flow between position node and letter node is now opposite to that during word recognition (Table ). Since a temporary connection works in both directions, a position node can excite its letter node. The new excitation causes the suppression of the back-inhibition of a letter node by its word node and thus effectuates the re-entry of specific downward excitation from word node to letter node.

The response to the letter-N task is produced because the propagation of the activation loop in the GSN reaches the target position, global position node P2 (cf. Stage 4 of Graph 13 in Figure ). Because of its temporary connection with letter node “o”, this position node produces the new excitation necessary for the re-entrant top-down excitation of that letter node. For the given example, only the activation of this position node then induces the SPAL conditions necessary for the required propagation of the activation loop from primed number node C2 to node R1 of the task network (Figure , see Stage 4 in Graphs 4 and 5 of Figure ). The re-activation of node R1 then triggers a response, i.e. in our example, the report of the letter “o” as the second letter in the word “work” (cf. the discussion of in Section 11.5 of Appendix A).
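The two phases of the example, binding during word recognition and counting during letter identification, can be summarised in a short symbolic sketch. It assumes that a temporary connection can be represented as a (letter, position) pair that is traversable in both directions; the function names `bind_letters_to_positions` and `letter_at` are hypothetical, and back-inhibition and excitation levels are not modelled.

```python
def bind_letters_to_positions(presented):
    """Word recognition phase: one temporary (letter, position) pair
    per serial release by the scanning mechanism."""
    return [
        (letter, position)
        for position, letter in enumerate(presented, start=1)
    ]


def letter_at(bindings, target):
    """Letter-N phase: count from the first position and respond when
    the target position is reached."""
    visited = []
    for position in range(1, len(bindings) + 1):
        visited.append(position)
        if position == target:
            # Traverse the temporary connection in the direction
            # opposite to word recognition: from position to letter.
            for letter, bound_position in bindings:
                if bound_position == position:
                    return letter, visited
    return None, visited


BINDINGS = bind_letters_to_positions("work")
LETTER, VISITED = letter_at(BINDINGS, target=2)
print(LETTER, VISITED)  # prints o [1, 2]
```

The same bindings serve both tasks: they are written from letter to position during recognition and read from position to letter during identification, which mirrors the “re-use” of structures described above.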

8. Discussion

Biologically plausible, autonomous, cognitive development and learning pose a problem for neuro-computational studies because we only partially understand the mechanisms of self-organisation involved. These processes largely take place without supervision, and the underlying key mechanism, the Tanzi-Hebb learning rule, is incompletely understood. However, the learning rule – in its present form – still has important, as yet unrevealed implications. In this paper, we pursued one of these implications: the construction – based on biologically plausible principles of self-organisation – of a conceptual network for word-processing tasks. Because of the way it is constructed, it should be possible to find the conditions for an artificial, neuro-computational process of self-organisation producing the network. In the search for these conditions, the structure of the constructed network and the logistics of its excitation processes provide important constraints for the further specification of the Tanzi-Hebb learning rule, as well as for possible other learning mechanisms.

8.1. Top-down constraints on self-organisation

The main conclusions on self-organisation, following from the construction of a conceptual network for visual-word processing, can be summarised as follows:

8.1.1. A cell-assembly must have a specific structure

The networks necessary for word-processing can be represented by means of intermediate node structures, sequence networks, and cycles. The cell-assembly underlying a network node must include sub-assemblies specific for permanent and temporary connections. The structure of a cell-assembly provides constraints for future simulation studies with networks of individual neurons, following on de Vries and van Slochteren (Citation2008). The aim of these studies is to show how an adapted Tanzi-Hebb learning rule can produce the required structure of a cell-assembly.

8.1.2. Binding requires a scanning mechanism

In the proposed network, interference in the binding process due to several external excitations occurring simultaneously in the same context is kept to a minimum. In order to ensure a robust binding process, a mechanism for serial, pairwise binding of location and identity was proposed.

8.1.3. Binding must be compatible with loop propagation

Given the necessity of temporary connections in a network, the propagation of an activation loop must be possible by means of permanent and temporary connections. In addition, the propagation must function in a way that ensures the bi-directional excitation flow inherent to binding. The proposed model satisfies both requirements.

8.1.4. Binding is consistent with psychophysiological evidence