Abstract

Online second language instruction has boomed in recent years, aided by technological affordances and the forced changes in instructional modality resulting from the COVID-19 pandemic. This transformation has underscored the critical role of interaction in online pedagogy. Research suggests that increasing opportunities for interaction between students and instructors is essential for fostering second language acquisition (SLA). However, little research exists quantifying the production of diverse types of interaction in online language instruction, particularly among experienced instructors. The present study utilizes an interactionist framework to perform a quantitative analysis of interaction in online Spanish language coursework, categorized according to interaction initiation type: Instructor-Prompted Participation (IPP), Unprompted Oral Participation (UOP), Unprompted Text Participation (UTP), i.e., chat usage, and interaction length (as exhibited by both the number of student turns in an interaction and the average length of those turns). Data includes 18 h of video recordings of synchronous L2 Spanish language instruction across proficiency levels and lesson types at a distance-learning university in the UK. Lesson types included grammar workshops and exam preparation. Results show that the interactional patterns in online language courses are influenced by proficiency level and lesson type. Lower proficiency students engaged in interaction routines more frequently, while the ability to engage in extended discourse was contingent upon the specific activity/lesson type. The study helps address the dearth of research on interaction and language teaching in languages other than English (LOTE).

1. Introduction

Recent years have seen a surge in online second language (L2) instruction, attributable to both technological advancements (Tsai and Talley, 2014) and the necessity for instructional changes brought about by the COVID-19 pandemic. The pandemic has had a substantial impact on education, including the ‘onlinification’ process that led to the closure of schools, affecting over 90% of the world’s student population (Reuge et al., 2021). This shift had a widespread effect on teaching across all levels, emphasizing the centrality of interaction in online pedagogical approaches. Apart from having to adapt to the latest technological advancements and tools (Muñoz-Basols, 2019), instructors have had to face the prospect that the nature of interaction – whether instructor-student, student-student, or student-content – may be qualitatively different in the online modality.

Many educational settings have adopted the use of virtual platforms without adequately exploring how mediated interaction – the use of technology to both support and enhance interaction – can be optimized in language learning. While research has repeatedly highlighted the need to provide more opportunities for student-student and student-instructor interaction (Bikowski et al., 2022), there is a limited understanding of how interaction patterns change (or are maintained) in the online language classroom; how language educators manage and influence these interactional practices; or if and when students receive corrective feedback in this modality (Arroyo & Yilmaz, 2018; Granena & Yilmaz, 2019; Hampel & Stickler, 2012; Henderson, 2021). In order to maximize the potential of the online environment for language learning, these questions must be explored via the direct observation of language classrooms. As claimed by Moorhouse et al. (2023, pp. 126–127), teachers’ actual practices can facilitate a more in-depth understanding of the competences and skills that teachers need to develop to promote interaction in the language e-classroom.

When delivering synchronous online lessons, language teachers face a variety of challenges, such as the struggle to elicit student responses and the perception that lessons may become overly teacher-centered (Kaymakamoglu, 2018). These challenges can be exacerbated in online settings by technical constraints, as well as by social norms that instill apprehension about interrupting the teacher’s discourse (Sert, 2015). Likewise, Moorhouse et al. (2023, pp. 121–122) point out a growing concern about the rise in teacher talking time in online learning settings, which potentially leaves less room for interaction. The quantification of interaction in online language learning, as performed in the present study, can complement the findings of research that measures teacher talk (such as Azhar et al., 2019; Blanchette, 2009; Malik et al., 2023). Additionally, it can offer fresh empirical insights into the prevalence of different patterns of interaction within the language classroom.

While the aforementioned pitfalls of online language instruction have been theorized and qualitatively described, few studies have provided any sort of quantitative account of the type and relative frequencies of various interaction practices observable in the online language classroom (Moorhouse et al., 2023). None, to the best of our knowledge, has focused on Spanish Language Teaching (SLT). However, such quantitative accounting is necessary in order to more confidently report on the nature and extent of interaction practices in the online environment. This information, in turn, will allow practitioners to implement empirically-driven curricular and pedagogical decisions (Gironzetti and Muñoz-Basols, 2022) to improve online language education. This is also a clear gap in LOTE (Languages Other Than English) research, as most of the research in the realm of interaction has been carried out in English as an additional language.

The present study utilizes an interactionist framework (Gass & Mackey, 2006) to perform a quantitative analysis of interactional patterns in online Spanish language coursework. Building on previous assessments of online language instruction (e.g. Arellano-Soto & Parks, 2021; Strawbridge, 2021; Cheung, 2021), the initiation and extension of interaction – including via the presence of corrective feedback – is analyzed according to interaction initiation type and interaction length. Initiation type comprises three categories: (a) ‘Instructor-Prompted Participation’ (IPP) (i.e., calling on a specific student without indication from the student to participate); (b) ‘Unprompted Oral Participation’ (UOP) (i.e., a student giving explicit indication of a desire to participate); and (c) ‘Unprompted Text Participation’ (UTP) (i.e., student spontaneous participation via the text-based chat function). Interaction length is measured by both the number of student turns in an interaction and the average length of those turns. Through the quantitative analysis of online interaction in a diverse sample of authentic online language classrooms, and by tracing the sequential organization of interaction, including which agents are initiating and extending interactions, we aim to observe the effect of instructional practice and provide insight into the optimization of interactional practices in language teaching and learning.

We also analyze how certain explanatory (independent) variables may condition the amount of interaction and corrective feedback: student proficiency level (A1–A2, B1, B2, C1 in the Common European Framework of Reference), as well as lesson type (grammar workshop vs. exam preparation sessions). Different types of classroom tasks offer unique chances for engagement (Ellis et al., 2019). Our analysis illustrates that grammar workshops and exam preparation classes likewise give rise to different forms of interaction. Finally, we comment on how online-specific teaching devices, such as breakout rooms and classroom chats (Zhang et al., 2022), contribute to student engagement and classroom management.

2. Interaction in online language learning and teaching

There is general consensus that interaction plays a key role in promoting language learning (Gass, 1997; Gass & Mackey, 2006; Walsh, 2006, 2013; Walsh & Sert, 2019), including online language learning (Ko, 2022; Lim & Aryadoust, 2022; Zhang et al., 2022). Similarly, the importance of interaction has been recognized in other nontraditional settings, such as its function in social networks in SLA during study abroad (Strawbridge, 2023), robot-assisted language learning (Engwall & Lopes, 2022), and Artificial Intelligence (AI) mediated communication (Muñoz-Basols et al., 2023). In connection with L2 instruction, Classroom Interactional Competence (CIC), i.e., the ability of teachers and learners to use interaction as a tool for mediating and assisting learning (Walsh, 2013, p. 65), is of special relevance. CIC involves all the participants in the learning setting and can serve to analyze how interactional resources are used in completing tasks (Balaman & Olcay, 2017). CIC facilitates dialogic, engaged, and safe classrooms that encourage students to take risks and increase learning (Moorhouse et al., 2023, p. 116). Therefore, by improving this competence, educators and students enhance learning opportunities and outcomes.

2.1. Types of interaction in online language learning

Interaction in online language learning can be categorized using several criteria. First, the nature of the interaction can be either asymmetrical (e.g. instructor-led lecture) or symmetrical (i.e., bidirectional communication involving student participation) (Holden & Westfall, 2006). Second, the mode of interaction can be either synchronous or asynchronous. Finally, the aim of the interaction can be instructional or social (Gilbert & Moore, 1989). Looking at the agents involved in interaction, Moore’s work on interaction in distance learning differentiates between three types of interaction that are widely followed (Roach & Attardi, 2022; Xiao, 2017): student-instructor interaction, student-student interaction, and student-content interaction.

According to Russell and Murphy-Judy (2021), promoting student-content interaction, student-instructor interaction, and student-student interaction is crucial in the virtual language classroom. Bernard et al. (2009), in a meta-analysis of interaction treatments in education, indicate that student-instructor interaction, on its own, is the least influential for students’ achievement; however, the combination of student-instructor and student-content interactions produces similar achievement outcomes to student-student and student-content interactions combined, and better results than student-student plus student-instructor interaction. Both student-student and student-instructor interaction play an integral role in the creation of communities of practice (Hooper, 2020), and can serve to boost students’ motivation and satisfaction (Miao et al., 2022). Regarding the quality of interaction, there is some indication that interaction in the online environment exhibits the same features as in-person interaction, such as the production of corrective feedback (Strawbridge, 2021).

2.2. The role of the instructor and the student

It is often the instructor’s responsibility to design tasks that promote both student-instructor and student-student interaction, such as collaborative technology-mediated tasks (Belda-Medina, 2021; González-Lloret, 2020). While research suggests that online language learning does not necessarily result in less interaction between students and instructors (Ji et al., 2022; Ng et al., 2006), some studies have shown a decrease in student-student interaction compared to face-to-face classroom settings (Baralt, 2013; Harsch et al., 2021). Assessing the success of a particular interactive task can be challenging in online language learning settings, as the instructor lacks certain cues present in face-to-face instruction that indicate student engagement (Bikowski et al., 2022), although some studies suggest the lack of these cues is compensated by the multimodal nature of online language teaching (Meskill & Anthony, 2013).

To address the role of students in promoting interaction in online language learning, Bikowski et al. (2022) assert that students must take an active role in interaction, and that meaningful student-student interaction can lead to student engagement. However, some students may not participate in interactive tasks due to factors such as perceived competence (Ng et al., 2006), language anxiety (O’Reilly & García-Castro, 2022; Russell, 2020), or boredom (Shimray & Wangdi, 2023; Wang & Li, 2022). Nonetheless, interactive practices can provide students with affective benefits (Yacci, 2000). One approach to promoting interaction among less confident students is to scaffold student-instructor and student-student interactions (Lin, Zhang, et al., 2017). Yet these investigations tend to overlook the quantitative aspect of interaction, a critical factor in understanding the dynamics of interaction initiation and duration during language classes. This is particularly essential for educators who need to be mindful of the time allocated to interaction and effectively manage the language learning environment. Such considerations gain even greater significance, as we will explore in the following sections, when dealing with diverse levels of proficiency in different language learning lesson types.

2.3. Quantitative accounting of online classroom interaction practices

Interaction in online language learning can be quantified through various measures, including the frequency and duration of interactions, the number of participants involved, and the quality of the interactions (Chen et al., 2012; Herring, 2004; Rovai, 2002). This involves analyzing both the frequency and quality of student engagement in discussions, activities, and assignments (Borup et al., 2012); quantifying the number of interactions, such as messages, posts, or comments, within online language learning platforms (Hrastinski, 2008); and considering the depth and pertinence of their contributions. This makes it possible to assess the extent of learner engagement and participation (Mercer & Dörnyei, 2020).

Despite ongoing calls to further investigate the nature of interaction in online language learning contexts, few studies have quantitatively documented the frequency of different interaction types in the online language classroom (but, for one-on-one eTandem exchanges, see Arellano-Soto & Parks, 2021; Strawbridge, 2021; Cirit-Isikligil et al., 2023). Documenting interaction routines and their salient characteristics – e.g. frequency of student opportunities for interaction, average interaction length, and relative use of various multimodal communication tools – is critical to optimizing online language instruction. While the online language classroom is touted for its ‘enormous potential’ (Kohnke & Moorhouse, 2020), the extent to which particular interaction characteristics are present in the online classroom remains relatively unclear. It is possible, however, that interaction opportunities may be altered or limited by the online environment, as indicated by Moorhouse et al. (2022) in a qualitative analysis of synchronous online English language coursework. The authors of that study report that teachers were limited in their ability to observe students’ nonverbal cues, and were consequently forced to initiate interaction themselves through direct nomination of students for participation throughout the class.

In another study, Cheung (2021) performed a quantitative analysis of interaction practices in a primary education (ages 11–12) online EFL classroom, highlighting the need to analyze multimodal communication practices (i.e., combined use of voice, chat, and video communication). To account for interaction length and quality, Cheung proposes differentiating between ‘Expanded’ and ‘Restricted’ student responses in interaction, with ‘Expanded’ responses defined as those responses of at least one sentence in length, and which contain a ‘judgement or evaluation’ (‘Restricted’ responses were found to be more common). Cheung also quantified the frequency of verbal and nonverbal (i.e., text-based) interaction routines, reporting that text-based responses occurred over five times more frequently than verbal responses.

The potential changes to interactional patterns brought on by the online environment may have consequences for SLA outcomes. This is due not only to the possibility of a general decrease in L2 use, or a heavier burden being placed on instructors to initiate interaction routines, but also to the potential for qualitative changes to the nature of interaction itself. One important area of alteration to interaction is in the provision of corrective feedback, long posited as being central to the power of interaction to fuel SLA, serving as a catalyst for learners to notice gaps between their interlocutor’s use of linguistic forms and their own (Gass & Mackey, 2006; Fernández, 2022). If the online environment provides less time-in-interaction for learners, or if the ways in which interaction is initiated in the online environment prove to be fundamentally different when compared to traditional in-person environments, then corrective feedback patterns may suffer in such a way that reduces learners’ opportunities to adjust their linguistic forms and conversational structure to be more target-like.

While research in these areas is growing, the studies cited earlier do not address the examination of interactional patterns in experienced online teachers or explore languages beyond the realm of EFL instruction. In contrast, our study not only introduces a fresh perspective by investigating interactional patterns in Spanish language classrooms but also contributes to the body of knowledge in applied linguistics. This is particularly significant as it centers on a language that has traditionally received less attention in such studies, even though its teaching and learning have seen consistent growth in recent decades (Muñoz-Basols et al., 2014; Muñoz-Basols et al., 2017; Muñoz-Basols & Hernández Muñoz, 2019).

3. The study

There is a clear need to better understand interaction in online language instruction, and to explore the question of whether quantitative measurement substantiates previous (largely qualitative) findings regarding the nature of interaction in this environment (Blaine, 2019; Lee, 2001; Moorhouse et al., 2022). However, few studies have accounted for this aspect of learning quantitatively. Grounded in theoretical work on online language learning, as well as quantitative work on interaction in one-on-one eTandem exchanges (e.g. Arellano-Soto & Parks, 2021; Strawbridge, 2021) and in the online primary classroom (e.g. Cheung, 2021), the present study developed three indices to analyze the types of interaction present in online Spanish language coursework:

‘Instructor-Prompted Participation’ (IPP), where the instructor called on a student to participate;

‘Unprompted Oral Participation’ (UOP), where a student explicitly indicated a desire to participate (e.g. by turning on their microphone and waiting to be called on);

‘Unprompted Text Participation’ (UTP), where students spontaneously participated via the text-based chat function.

In addition to accounting for the frequency of each interaction type, it was of interest to determine the length of student-instructor and student-student interactions within the discursive context; interaction ‘length’ was analyzed by the number of student turns in interaction, as well as the length of each student’s turn. Finally, the study set out to examine the occurrences of interaction for the presence and quality of corrective feedback, an integral aspect of interaction for language learning.

The following research questions guided this study:

RQ1: How frequently do university students engage in IPP, UOP, and UTP while completing online Spanish language coursework?

RQ2: How do interaction practices differ among students at different Spanish language proficiency levels?

RQ3: How frequently do observed student-student and student-instructor interactions exhibit instances of corrective feedback?

3.1. Context and participants

Data consist of recordings of online Spanish language classes at a large, distance-learning university located in the UK with over 25 years of experience in administering online coursework. These lessons were not originally recorded for this study, but rather as part of the institution’s policy, which requires the recording of several learning events so that students can rewatch at their leisure.

Ethical considerations played a significant role throughout the study. Students were informed that recorded learning events might be used for research purposes, as well as for vicarious learners, and were given the option to participate in nonrecorded sessions if they preferred. Demographic information was intentionally omitted to uphold ethical standards during the study. Additionally, as highlighted in the research, the actual names of students were anonymized to protect their privacy.

The sample included 18 h of classroom recordings from 14 different class sessions (average duration = 78 min; average number of students = 10; see Table 1). All class sessions were part of a series of voluntary language classes that were supplemental to students’ primary language coursework. Three instructors who had taught Spanish online at the same institution for an extended period of time – Instructor A (18 years), Instructor B (20 years), and Instructor C (21 years) – were responsible for teaching the lessons in the sample. All were native Spanish speakers.

Table 1. Summary of online classroom recordings.

Recordings were collected from four language proficiency levels (Common European Framework of Reference, see Council of Europe, 2020): four sessions were at the A1–A2 level; three were at level B1; three were at B2; and four were at C1. All class sessions at the A1–A2 and B1 levels (n = 7) were grammar workshops, designed to give students the opportunity to explicitly review grammar material with their instructor. At the upper levels (B2 and C1), lessons were divided between grammar workshops (n = 2) and exam preparation sessions (n = 5). In contrast to the grammar workshops, which emphasized language practice, the exam preparation sessions prioritized addressing questions related to the class exam, often including discussion-based analyses of course content. The different aims of each session type enabled us to identify potential changes in interaction patterns related to activity-type variation. At the lowest level (A1–A2), classes were predominantly conducted in English, while at the higher levels (B1, B2, and C1) they were conducted in Spanish.

3.2. Instrument for data collection

An observation grid was created to examine the nature of interaction in an online environment (Table 2). In-class participation was divided into three categories, according to both the initiator of the communication exchange (‘student’ or ‘instructor’) and the mode of communication (‘text’ or ‘oral’ interaction). Student-instructor oral interaction that was initiated by the instructor (e.g. by calling on a student directly, without any prior indication from that student of an intention to participate) was labeled ‘instructor-prompted participation’ (IPP); student-instructor oral interaction initiated by the student (e.g. by turning on their microphone and waiting to be called on by the instructor) was labeled ‘unprompted oral participation’ (UOP); and student-instructor participation initiated by the student via the text-based chat function was labeled ‘unprompted text participation’ (UTP).
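The three-way categorization described above can be sketched as a simple lookup on initiator and mode. This is an illustrative sketch only, not the authors' instrument: the function name and the string values are hypothetical, and combinations the grid does not define (such as instructor-initiated text) are assumed here to return no label.

```python
from typing import Optional


def classify_participation(initiator: str, mode: str) -> Optional[str]:
    """Map an (initiator, mode) pair to a participation label.

    initiator: "instructor" or "student" (hypothetical string values).
    mode: "oral" or "text" (hypothetical string values).
    Returns None for combinations the grid does not define.
    """
    labels = {
        ("instructor", "oral"): "IPP",  # instructor calls on a student
        ("student", "oral"): "UOP",     # student signals a wish to speak
        ("student", "text"): "UTP",     # student writes in the chat
    }
    return labels.get((initiator, mode))


print(classify_participation("student", "text"))
```

In practice, deciding who initiated an exchange required the researchers' consensus judgment on the transcripts; the lookup only encodes the outcome of that decision.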

Data collection and analysis consisted of a series of steps:

First, a representative selection of video recordings, including a variety of student proficiency levels (A1–A2, B1, B2, C1), was obtained to ensure relevance to the study;

These video recordings were then transcribed with the transcription software Sonix, which automatically identifies and timestamps individual speaker turns;

The four researchers read the 18 hours of transcriptions and reached a consensus on identifying and categorizing three types of interaction deemed valuable for study based on the data’s nature, namely: a) IPP (Instructor-Prompted Participation), b) UOP (Unprompted Oral Participation), and c) UTP (Unprompted Text Participation, i.e., chat usage);

Subsequently, two researchers revised the transcriptions generated by Sonix. They also independently coded the various types of interaction;

These two researchers then compared and reached an agreement on how they had coded instances of interaction, categorizing them into the three main types designated for this study;

Then, the coding was individually reviewed by the other two researchers involved in the project;

These latter two researchers then arrived at a consensus regarding the coding proposed by the initial two coders;

Subsequently, the team collectively analyzed the data subsample, leading to the definition and finalization of the Online Interaction Classification Scheme. All four researchers convened to review each instance of interaction across the 18 hours of instruction;

Following this, a researcher conducted the data analysis using the defined classification scheme;

Finally, the entire research team reviewed the data and engaged in discussions to clarify any ambiguous examples or interpretations that arose during the analysis process.

The process was meticulously designed and executed in multiple stages, involving the input of four researchers to ensure rigorous quality control. Every interaction instance underwent consensus agreement among the four researchers. Table 2 presents the classification scheme used for coding.

Table 2. Online interaction classification scheme.

The observation grid examines two aspects of interaction: the initiator (instructor or student) and the mode of communication (written or oral). The second part of the grid, ‘Interaction extension’, describes the length of the interaction according to the number of interactive turns exhibited by the student(s) involved in each interaction. The approach of this grid is intentionally wide-reaching, such that further descriptions can be made pertaining to various qualities of interaction routines (e.g. average length of student turns, presence of corrective feedback, etc.). Given the low number of class recordings in each group, it was not appropriate to carry out inferential statistical analyses in the present study.

3.3. Data analysis

3.3.1. Number of student turns, turn length

Classroom interaction routines (IPP, UOP, UTP) were analyzed according to whether a student contributed either a single-turn response (STR), or a response constituting more than one turn (‘multi-turn response’, from here ‘MTR’), in a given interactive exchange with the instructor. For oral exchanges, a ‘turn’ was defined as an uninterrupted utterance given by a student in the class; following Brock (1986), the student’s turn was considered to have ended once the instructor spoke again. Only student turns are counted here, as the interest of the analysis is to account for the frequency and degree of student participation in online coursework. Below is an example of an STR (IPP), in which the student responds to the instructor’s participation prompt. The student’s turn is then followed by an additional turn by the instructor, who closes out the exchange and moves on to direct their attention to a different student. This exchange (Example 1) comes from a sentence-completion grammar activity (all names are pseudonyms).

Example 1. STR (level: B1/class type: grammar workshop/instructor C).

Interactions in which a student utters more than a single response were labeled MTR (Example 2).

Example 2. MTR (level: B1/class type: grammar workshop/instructor C).

IPP interactions labeled as MTR were also analyzed according to the number of turns produced by students (in Example 2 there are two turns produced by the student). Finally, STR and MTR interactions were analyzed for turn length, by measuring the number of words uttered by the student in each exchange.
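The turn-based measures defined in this section can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' procedure: the `(role, text)` segment format and both function names are hypothetical, word counts use simple whitespace splitting (the tokenization used in the study is not specified), and the sample utterances are invented rather than drawn from the corpus.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical segment format: (role, text), role is "instructor" or "student".
Segment = Tuple[str, str]


def student_turns(exchange: List[Segment]) -> List[str]:
    """Return student turns; a turn ends once the instructor speaks again."""
    turns: List[str] = []
    previous_role: Optional[str] = None
    for role, text in exchange:
        if role == "student":
            if previous_role == "student":
                # Still the same uninterrupted turn: merge the segments.
                turns[-1] += " " + text
            else:
                turns.append(text)
        previous_role = role
    return turns


def describe_exchange(exchange: List[Segment]) -> Dict[str, object]:
    """Label an exchange STR or MTR and count words per student turn."""
    turns = student_turns(exchange)
    words = [len(turn.split()) for turn in turns]  # whitespace tokens
    if not turns:
        label = None  # no student contribution at all
    elif len(turns) == 1:
        label = "STR"
    else:
        label = "MTR"
    return {"label": label, "n_turns": len(turns), "words_per_turn": words}


# Invented utterances for illustration only; not taken from the recordings.
example = [
    ("instructor", "Ana, ¿puedes completar la frase?"),
    ("student", "Ayer fui al cine."),
    ("instructor", "Muy bien. ¿Con quién?"),
    ("student", "Con mi"),
    ("student", "hermana."),
]
summary = describe_exchange(example)
print(summary)
```

Merging consecutive student segments reflects the definition that a turn ends only when the instructor speaks again; automatic transcription may split one utterance into several timestamped segments.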

3.3.2. Corrective feedback

All IPPs were analyzed for the presence of corrective feedback (CF), defined as any indication – explicit or implicit – from an interlocutor (in this case, the instructor) that a speaker’s utterance is not target-like (Fernández-García & Martínez-Arbelaiz, 2014). Instances of CF were further classified as constituting either ‘prompt’, ‘recast’, or ‘explicit correction’. A prompt was defined as any utterance made by the instructor in order to encourage the student to produce a correct target language form, after having produced a nontarget-like form, while at the same time withholding the correct form (Lyster, 2004). In contrast, a recast was defined as any ‘well-formed reformulation of a learner’s nontarget utterance with the original meaning intact’ (Lyster, 2004, p. 403). Finally, explicit corrections were defined as utterances in which the instructor corrected a student’s nontarget utterance by explicitly indicating the source of the error (Bueno-Alastuey, 2013). This selection of corrective feedback strategies represents a range of corrective feedback types that are considered implicit (i.e., recasts), explicit (i.e., explicit correction), and somewhere between implicit and explicit (i.e., prompt). Implicit forms of negative feedback have the advantage of causing the least amount of disruption to the content of conversational development, while explicit forms of feedback have the advantage of assuring – to a higher degree of confidence – that the learner will notice the gap between their own language and the language that their interlocutor is modeling for them. Below, an example of each type of CF is given (Examples 3, 4, and 5). Note the use of English for some of the interaction in the example of explicit correction, common in lessons imparted at lower proficiency levels.

Example 3. Prompt (level: B1/class type: grammar workshop/instructor C).

Example 4. Recast (level: B1/class type: grammar workshop/instructor C).

Example 5. Explicit correction (level: B1/class type: grammar workshop/instructor C).

4. Results

The first research question (RQ1) inquired after the interactional patterns displayed by students. To address RQ1, an analysis was carried out that would determine to what extent participation in this online coursework was divided between ‘student-student’ and ‘student-instructor’ interaction types.

First, this analysis revealed that class sessions from all course levels allowed – in theory – for ample opportunity for student-student and student-instructor interaction (). However, while instances of IPP were frequent among all 14 online classes in the sample, occurrences of both UOP and UTP were extremely rare (see Table 3). For this reason, the remainder of the analysis focuses solely on occurrences of IPPs. Table 4 displays the breakdown of IPP interactions observed across all class recordings in the sample, by course level. Average values per 60 min of class time are given in parentheses.

Table 3. Total student participation, by type.

Table 4. Total average (per 60 min) and [standard deviation], instances of IPP, STR, and MTR, by course level.
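Because class sessions differed in duration, counts are reported per 60 min of class time. A minimal sketch of that normalization, with a per-level mean and standard deviation, is given below. The field names and session values are invented for illustration, and the use of the sample standard deviation (`statistics.stdev`) is an assumption; the text does not state which estimator was used.

```python
from collections import defaultdict
from statistics import mean, stdev
from typing import Dict, List, Tuple


def rate_per_60_min(count: int, duration_min: float) -> float:
    """Normalize a raw count to a rate per 60 minutes of class time."""
    return count * 60 / duration_min


def summarize_by_level(sessions: List[dict]) -> Dict[str, Tuple[float, float]]:
    """Return {level: (mean rate, standard deviation)} across sessions."""
    rates = defaultdict(list)
    for session in sessions:
        rate = rate_per_60_min(session["ipp"], session["duration_min"])
        rates[session["level"]].append(rate)
    return {
        # Assumption: sample SD; a single session is reported with SD 0.0.
        level: (mean(values), stdev(values) if len(values) > 1 else 0.0)
        for level, values in rates.items()
    }


# Invented session data for illustration only; not the study's results.
sessions = [
    {"level": "B1", "ipp": 45, "duration_min": 90},
    {"level": "B1", "ipp": 20, "duration_min": 60},
    {"level": "C1", "ipp": 12, "duration_min": 72},
]
summary_by_level = summarize_by_level(sessions)
print(summary_by_level)
```

Normalizing each session before averaging keeps a long session from dominating the level mean simply because it offered more time for interaction.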

As for interaction practices according to proficiency level (RQ2), overall, a greater number of IPPs is observed at the lower proficiency levels, with a marked difference between average IPP values for levels A1–A2 and B1, on one hand, and B2 and C1, on the other. This pattern is equally reflected in the average occurrences for both STRs and MTRs. However, there is no clear pattern, by course level, with regard to the proportion of IPPs that are MTR as opposed to STR (Table 5).

Table 5. Average presence of MTR as proportion of IPP, by course level.

Since lessons were also divided according to the type of content being taught, ‘grammar workshop’ (n = 9) or ‘exam preparation class’ (n = 5), it was of interest to gauge the influence of lesson focus on the occurrence of IPPs (Table 6). Note that this table only displays the occurrence of IPPs for levels B2 and C1, as only these levels included ‘exam preparation’ lessons.

Table 6. Average occurrence (per 60 min) and [standard deviation] of IPPs, by lesson type (levels B2 and C1 only).

As observed in Table 6, there is a contrast in the frequency of IPPs for grammar vs. exam preparation lessons (though the reduced sample size should be noted), with grammar-centric lessons exhibiting a greater average frequency of IPPs overall.

Next, IPP interactions were analyzed for their average ‘length’, measured as the average number of words per STR, the average number of words per MTR, and the average number of student turns per MTR (Table 7).

Table 7. Average words per turn (STR, MTR) and average turns per MTR, by course level.
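The three ‘length’ measures can be sketched as follows. The sketch assumes that each IPP interaction is stored as a list of transcribed student turns, that an STR is an interaction with exactly one student turn and an MTR one with two or more, and that words are counted by splitting on whitespace; the study's actual tokenization and coding procedure are not specified here, so these choices are illustrative. All names and the sample turns are hypothetical.

```python
from statistics import mean


def word_count(turn: str) -> int:
    """Count whitespace-separated words in one student turn (an assumption)."""
    return len(turn.split())


def length_measures(interactions):
    """Compute words per STR turn, words per MTR turn, and turns per MTR.

    `interactions` is a list of interactions; each interaction is a list of
    student turns (strings). Measures with no data are returned as None.
    """
    strs = [i for i in interactions if len(i) == 1]   # single-turn responses
    mtrs = [i for i in interactions if len(i) > 1]    # multi-turn responses
    str_words = [word_count(t) for i in strs for t in i]
    mtr_words = [word_count(t) for i in mtrs for t in i]
    return {
        "words_per_str_turn": mean(str_words) if str_words else None,
        "words_per_mtr_turn": mean(mtr_words) if mtr_words else None,
        "turns_per_mtr": mean(len(i) for i in mtrs) if mtrs else None,
    }


# Hypothetical student turns, not taken from the recordings
example_interactions = [
    ["Vivo en Madrid"],                  # STR, 3 words
    ["Sí"],                              # STR, 1 word
    ["Yo ha comido", "Yo he comido"],    # MTR, 2 turns of 3 words each
]
measures = length_measures(example_interactions)
print(measures)
```

Keeping words per turn separate from turns per MTR matters because the two can diverge, as they do in the results reported here.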

There is a general increase in IPP words per turn that accompanies the increase in course level; as students become more advanced, they tend to engage in more extended discourse as measured by words per turn. Similar to the trend for average frequency of IPPs (where greater frequencies were observed for lower proficiency levels; Table 4), there is a general division between A1–A2 and B1 groups, on one hand, and B2 and C1 groups, on the other. Here, however, it is the higher proficiency groups that exhibit greater average values. Nevertheless, it should be noted that no such trend is observed for the variable ‘turns/MTR’.

Average ‘length’ of IPP interactions was also measured according to lesson type (‘grammar’ vs. ‘exam preparation’ workshops; Table 8).

Table 8. Average words per turn (STR, MTR) and average turns per MTR, by lesson type (levels B2 and C1).

The length of STR and MTR interactions is notably greater during lessons that are designed for preparation of the exam. In contrast, no such difference is observed between lesson types for the average number of turns produced in MTR interactions.

Finally, to respond to RQ3, IPP interactions were analyzed for the presence and type of corrective feedback (CF) (Table 9).

Table 9. Average instances (per 60 min) and [standard deviation] of CF, by course level and CF type.

In line with results for overall IPP production (see Table 4), it is the lower two course levels (A1–A2, B1) in which the greatest average number of CF instances is observed. Regarding the particular types of CF given, explicit correction is most common across all course levels, followed by recasts.

Several trends are observed. Overall, students in lower-level language classes have the opportunity to engage in a greater number of student-instructor interactions, prompted by the instructor (‘IPP’ or ‘instructor-prompted participation’). The dividing line appears to lie between students at A1–A2 and B1 levels, on one hand, and students at the B2 and C1 levels, on the other. This trend is equally true for both STRs (‘single-turn responses’) and MTRs (‘multi-turn responses’), though it does not appear that students at any particular course level engage in a greater or lesser number of MTRs as a proportion of all IPPs. The results also make clear that the average ‘length’ of interactions is much greater for students in more advanced coursework. Students at levels B2 and C1 produced roughly twice the number of words per turn, compared to students at the A1–A2 and B1 levels (this difference is sometimes much greater; see Table 7).

5. Discussion

The present results reveal several insights into the nature of interaction in online language coursework. First, in line with previous research (Moorhouse et al., 2022), opportunities for student participation and interaction were overwhelmingly initiated by the instructors present, and were not the result of voluntary student initiative. ‘IPP’ interactions occurred over ten times as frequently as those categorized as ‘UTP’ (‘unprompted text participation’), and 44 times as frequently as those categorized as ‘UOP’ (‘unprompted oral participation’) (Table 3). In contrast with previous studies (Cheung, 2021), the use of nonverbal features, such as icons, was not common in our data.

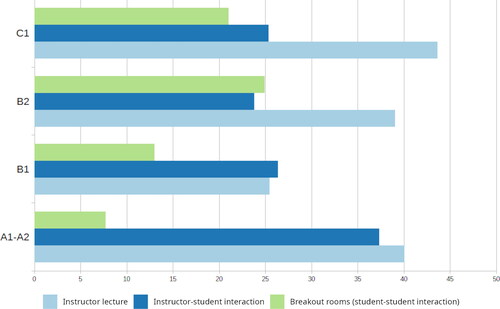

In line with previous research (Moorhouse et al., 2023), results demonstrate that, across proficiency levels, the majority of classroom time is dedicated to instructor lecture. However, the data also make clear that all course levels provide frequent opportunities for instructor-student interaction. This type of interaction has been shown to have a positive impact on online learners’ learning satisfaction (Lin et al., 2017), learning engagement and psychological atmosphere (Sun et al., 2022). Our data reveal minimal time devoted to student-student interaction in the lower levels, with large increases in student-student interaction in the higher levels. This trend may account for the sharp decrease in the provision of corrective feedback at higher proficiency levels; students at the A1–A2 level were exposed to more than double the amount of corrective feedback observed at any other course level. While corrective feedback has been observed to be common in student-student eTandem exchanges (Strawbridge, 2021), it is possible that the virtual classroom environment – where an instructor and additional students are present – minimizes students’ willingness to provide corrective feedback to their classmates.

For IPPs, results indicate two trends, each displaying an important relation to activity type. First, students at lower proficiency levels (A1–A2, B1) engage in IPP interactions much more frequently than students at higher proficiency levels (B2, C1). Here, the dependence on the type of activity is apparent when considering the lesson type of the lower proficiency classes included in this sample, which were uniformly grammar workshops. In these workshops, instructors most frequently led students in activities of the ‘initiation-response-evaluation’ (IRE) variety, in which students were asked closed-ended questions in relation to grammar activities. This allowed for a much greater number of questions – and, therefore, interactions – to be initiated by instructors. This dynamic – in addition to the lesson focus on grammar – may have had the knock-on effect of granting instructors a greater number of opportunities to implement corrective feedback measures, which were most frequent at the A1–A2 and B1 levels.

While the IRE activities characteristic of grammar workshops may have allowed for a greater number of IPP interactions at the lower levels, they also seem to have limited students’ opportunities to engage in extended discourse. This is evident from the average turn length of STRs and MTRs for students at the B2 and C1 levels, where the average length of STR and MTR turns in exam preparation workshops is more than three times that exhibited in grammar workshops (Table 8). Importantly, these average values for grammar workshop lessons at the B2 and C1 levels are nearly identical to those of the A1–A2 and B1 levels, indicating an exclusive effect of the activity as opposed to a dual effect of activity and proficiency level. The following fragments, both taken from advanced lessons, exemplify this pattern of contrast according to lesson type. In the first example (C1 level grammar workshop), the instructor leads the class in an activity which requires students to alter pre-written sentences in order to convert the verbs in those sentences to the subjunctive mood, putting a clear limit on the length of the student’s response (total 13 words). In contrast, in the second example (C1 exam preparation), the student is asked to offer an original analysis of the linguistic landscape of Peru, and is therefore not limited in their response (total 52 words) (Examples 6 & 7).

Example 6. IPP (level: C1/class type: grammar workshop/instructor C).

Example 7. IPP (level: C1/class type: exam preparation class/instructor A).

It should be noted that while this contrast in lesson types (grammar workshop vs. exam preparation class) is stark, it is not uniform, nor is it bound by proficiency level. Occasionally, instructors teaching lower-level grammar workshops were observed to implement language activities that allowed students the opportunity for open-ended discourse. In Example 8, students are asked to prepare a short description of their hometown. Such time to ‘rehearse’ or ‘prepare’ has been shown to improve the interactional environment of the classroom (Moorhouse et al., 2023). Aided by the instructor’s scaffolding, the student produced notably longer oral discourse. In this way, the length of student participation is largely controlled by the activity in question, not proficiency level.

Example 8. IPP (level: A1–A2/class type: grammar workshop/instructor C).

An additional similarity between lower- and higher-proficiency groups is the pattern of MTRs, in which students are allowed multiple turns in an interaction. The proportion of interactions that were labelled as MTR was comparable across course levels, and two contexts appear to prompt them. First, it would appear that MTRs are prompted by instructors’ perceived need to provide CF. In most grammar-oriented and closed-ended activities, students only produce an MTR when the instructor signals an error, which leads to an opportunity for the student to monitor their production and participate again with a correct response.

The second context that appears to prompt the production of MTR interactions is grammar-oriented activities in which the instructor encourages students to ask each other questions, resulting in longer student participation. In Example 9, students have to read a fact about the past, and their classmates have to react by saying whether it is true or false. Here, the students participate in MTR until the instructor steps in to close the interaction.

Example 9. MTR (level: B1/class type: grammar workshop/instructor C).

Several implications emerge for student-instructor interaction in online settings. Results indicate that students produce longer discourse and participate in more sustained dialogues when they take part in open-ended language activities, which open up a space to participate in the discourse and to contribute to class conversations. These are key features of e-Classroom Interactional Competence (e-CIC) (Moorhouse et al., 2022). By prompting extended student turns, instructors create a space for students to formulate their contributions and create co-constructed discourses. At beginner levels, this can be achieved by allowing preparation time and by scaffolding the students’ contributions with examples, the use of the L1, or clarification requests. In other words, students must be engaged in dialogues that go beyond the IRE pattern, with activities that promote the negotiation of meaning. However, our data indicate a scarcity of other effective practices for promoting classroom interaction, such as utilizing chat as a means of initial responses and incorporating nonverbal icons. This scarcity implies that teachers require additional professional development to improve their Online Environment Management Competence (Moorhouse et al., 2022). With such development, they can create a wider range of opportunities for interaction in synchronous online environments.

6. Conclusions, limitations, and future lines of research

This study has drawn on recordings to investigate language instruction practices in online Spanish classes, including those targeted at both grammar development and exam preparation. Analysis of 18 h of class recordings revealed that online instructors of Spanish incorporate both instructor-student and student-student interactions in their instruction. However, the opportunities for interaction were heavily dependent on lesson type and, therefore, were often limited to short, mechanical interactions due to the nature of the activity at hand. Furthermore, the students were only exposed to regular corrective feedback at the lowest proficiency level, A1–A2.

Results underscore a need for increased opportunities for extended interaction in language classes at lower levels; there is a need to focus teaching on encouraging spontaneous language production, in order to reinforce oral language skills and to elevate the role of interaction at lower levels. For A1–A2 and B1 students, the barrier to participation in extended discourse is not their own lack of language proficiency, but rather the language learning activity in which they are being led by the instructor. This should be viewed optimistically by language educators and those in a position to design language curricula, indicating as it does an opportunity to design lower-level coursework that engages students in extended, multi-turn interaction routines. An analogous lesson emerges with respect to advanced proficiency coursework, given that grammar workshop lessons taught at the B2 and C1 levels in this study did not exhibit any greater degree of extended discourse or engagement in MTR interactions compared to A1–A2 and B1 grammar workshops. Lesson focus and activity type appear to be highly determinative. These areas could likewise be addressed in order to increase opportunities to expose learners to corrective feedback in the online environment, which was most common at lower proficiency levels and appeared to depend on instructor initiative.

The study is not exempt from limitations, including the inability to examine interaction at a micro-discursive level. This would have involved analyzing in more detail the linguistic mechanisms used to encourage and manage turn-taking during the class. Another limitation is that the role of breakout rooms could not be studied (the software used to facilitate the sessions does not record breakout rooms). Finally, although the data for this study comprised a substantial amount of material, the relatively low number of recordings for each language level and class type precluded further inferential statistical analysis.

Future research should contrast instructors’ perceptions of the role of interaction in language classes. Such research could shed light on how to enhance the effectiveness of online language teaching by improving the quality and quantity of interaction between instructors and students. Moreover, studies on interaction in online language teaching should incorporate interviews with students to gather their perspectives. Finally, given the recent advancements in AI-based tools, particularly chatbots, there is a compelling opportunity to explore their potential in supporting interaction during autonomous language learning.

This study has built on previous work in order to offer a more comprehensive, quantitatively-driven analysis of how and when interaction occurs in the online L2 language classroom. Results reveal that students are, in some ways, limited in their ability to engage in interaction online; more class time consists of instructor-led lecture than instructor-student interaction, on average. However, the results also offer an optimistic outlook on how interaction can be more effectively fostered in the online language classroom environment, both through the careful selection of language learning activity and class focus, as well as through the use of effective prompting and scaffolding strategies implemented by the instructors in order to extend instructor-student L2 interaction routines. These findings emphasize the importance of implementing targeted teacher training programs that specifically address Classroom Interactional Competence to enhance online language interaction practices. By acknowledging the potential of interaction, language professionals may be empowered to foster increased opportunities for students to engage in meaningful exchanges.

Acknowledgements

Javier Muñoz-Basols acknowledges funding from the following research projects: “Hacia una diacronía de la oralidad/escrituralidad: variación concepcional, traducción y tradicionalidad discursiva en el español y otras lenguas románicas (DiacOralEs)/Towards a Diachrony of Orality/Scripturality: Conceptual Variation, Translation and Discourse Traditionality in Spanish and other Romance Languages” (PID2021-123763NA-I00), funded by MCIN/AEI/10.13039/501100011033 and by FEDER Una manera de hacer Europa; “DEFINERS: Digital Language Learning of Junior Language Teachers” (TED2021-129984A-I00), funded by MCIN/AEI/10.13039/501100011033 and the European Union NextGenerationEU/PRTR; and “OralGrab. Grabar videos y audios para enseñar y aprender” (PID2022-141511NB-100), funded by Agencia Estatal de Investigación and by Ministerio de Ciencia e Innovación of Spain. Mara Fuertes Gutiérrez acknowledges funding from the project “Language Acts and Worldmaking” (AH/N004655/1) of the Arts and Humanities Research Council of England.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 Here are two examples of UOP and UTP respectively, both from a C1 level Spanish class: UOP [Docente: A ver, Peter tiene una pregunta, Peter, dime, Peter. Estudiante: Lo siento, Juana, tengo una pregunta. / Teacher: Let’s see, Peter has a question, Peter, tell me, Peter. Student: I’m sorry, Juana, I have a question]; UTP [Docente: Muy bien. Estupendo. […] Otras personas… Estudiante en el chat: ¿Hay que incluir los inventos? Docente: Ah, no, Maggie. Es simplemente porque para el examen se pide describir, explicar y comentar… / Teacher: Very good. Great. […] Other people…. Student in chat: Are there inventions to include? Teacher: Ah, no, Maggie. It is simply because for the exam you are asked to describe, explain and comment…]

References

- Arellano-Soto, G., & Parks, S. (2021). A video-conferencing English-Spanish eTandem exchange: Negotiated interaction and acquisition. CALICO Journal, 38(2), 222–244. https://doi.org/10.1558/cj.38927

- Arroyo, D.C., & Yilmaz, Y. (2018). An open for replication study: The role of feedback timing in synchronous computer-mediated communication. Language Learning, 68, 942–972. https://doi.org/10.1111/lang.12300

- Azhar, K.A., Iqubal, N., & Khan, M.S. (2019). Do I talk too much in class? A quantitative analysis of ESL classroom interaction. OKARA, 13(2), 193–202. https://doi.org/10.19105/ojbs.v13i2.2491

- Balaman, U., & Olcay, S. (2017). Development of L2 interactional resources for online collaborative task accomplishment. Computer Assisted Language Learning, 30(7), 601–630. https://doi.org/10.1080/09588221.2017.1334667

- Baralt, M. (2013). The impact of cognitive complexity on feedback efficacy during online versus face-to-face interactive tasks. Studies in Second Language Acquisition, 35(4), 689–725. https://doi.org/10.1017/S0272263113000429

- Belda-Medina, J. (2021). Enhancing multimodal interaction and communicative competence through Task-Based Language Teaching (TBLT) in Synchronous Computer-Mediated Communication (SCMC). Education Sciences, 11(11), 723. https://doi.org/10.3390/educsci11110723

- Bernard, R.M., Abrami, P.C., Borokhovski, E., Wade, C.A., Tamim, R.M., Surkes, M.A., & Bethel, E.C. (2009). A meta-analysis of three types of interaction treatments in distance education. Review of Educational Research, 79(3), 1243–1289. https://doi.org/10.3102/0034654309333844

- Bikowski, D., Keira Park, H., & Tytko, T. (2022). Teaching large-enrollment online language courses: Faculty perspectives and an emerging curricular model. System, 105, 11–14. https://doi.org/10.1016/j.system.2021.102711

- Blaine, A. (2019). Interaction and presence in the virtual classroom: An analysis of the perceptions of students and teachers in online and blended advanced placement courses. Computers & Education, 132, 31–43. https://doi.org/10.1016/j.compedu.2019.01.004

- Blanchette, J. (2009). Characteristics of teacher talk and learner talk in the online learning environment. Language and Education, 23(5), 391–407. https://doi.org/10.1080/09500780802691736

- Borup, J., West, R.E., & Graham, C.R. (2012). Improving online social presence through asynchronous video. The Internet and Higher Education, 15(3), 195–203. https://doi.org/10.1016/j.iheduc.2011.11.001

- Brock, C.A. (1986). The effects of referential questions on ESL classroom discourse. TESOL Quarterly, 20(1), 47–59. https://doi.org/10.2307/3586388

- Bueno-Alastuey, M.C. (2013). Interactional feedback in synchronous voice-based computer mediated communication: Effect of dyad. System, 41(5), 543–559. https://doi.org/10.1016/j.system.2013.05.005

- Chen, H., Chiang, R.H., & Storey, V.C. (2012). Business intelligence and analytics: From big data to big impact. MIS Quarterly, 36(4), 1165–1188. https://doi.org/10.2307/41703503

- Cheung, A. (2021). Synchronous online teaching, a blessing or a curse? Insights from EFL primary students’ interaction during online English lessons. System, 100, 1–13. https://doi.org/10.1016/j.system.2021.102566

- Cirit-Isikligil, N.C., Sadler, R.W., & Arica-Akkök, E. (2023). Communication strategy use of EFL learners in videoconferencing, virtual world and face-to-face environments. ReCALL, 35(1), 122–138. https://doi.org/10.1017/S0958344022000210

- Council of Europe. (2020). Common European framework of reference for languages. Learning, teaching, assessment. Council of Europe Publishing.

- Ellis, R., Skehan, P., Li, S., Shintani, N., & Lambert, C. (2019). Task-based language teaching: Theory and practice. Cambridge University Press.

- Engwall, O., & Lopes, J. (2022). Interaction and collaboration in robot assisted language learning for adults. Computer Assisted Language Learning, 35(5–6), 1273–1309. https://doi.org/10.1080/09588221.2020.1799821

- Fernández, S. S. (2022). Feedback interactivo y producción escrita en la enseñanza del español LE/L2. Journal of Spanish Language Teaching, 9(1), 36–51. https://doi.org/10.1080/23247797.2022.2055302

- Fernández-García, M., & Martínez-Arbelaiz, A. (2014). Native speaker–non-native speaker study abroad conversations: Do they provide feedback and opportunities for pushed output? System, 42, 93–104. https://doi.org/10.1016/j.system.2013.10.020

- Gass, S.M. (1997). Input, interaction, and the second language learner. Lawrence Erlbaum.

- Gass, S.M., & Mackey, A. (2006). Input, interaction and output: An overview. AILA Review, 19, 3–17. https://doi.org/10.1075/aila.19.03gas

- Gilbert, L., & Moore, D.R. (1989). Building interactivity in Web-courses: Tools for social and instructional interaction. Educational Technology, 38(3), 29–35.

- Gironzetti, E., & Muñoz-Basols, J. (2022). Research engagement and research culture in Spanish language teaching (SLT): Empowering the profession. Applied Linguistics, 43(5), 978–1005. https://doi.org/10.1093/applin/amac016

- González-Lloret, M. (2020). Collaborative tasks for online language teaching. Foreign Language Annals, 53(2), 260–269. https://doi.org/10.1111/flan.12466

- Granena, G., & Yilmaz, Y. (2019). Corrective feedback and the role of implicit sequence-learning ability in L2 online performance. Language Learning, 69, 127–156. https://doi.org/10.1111/lang.12319

- Hampel, R., & Stickler, U. (2012). The use of videoconferencing to support multimodal interaction in an online language classroom. ReCALL, 24(2), 116–137. https://doi.org/10.1017/S095834401200002X

- Harsch, C., Müller-Karabil, A., & Buchminskaia, E. (2021). Addressing the challenges of interaction in online language courses. System, 103, 1–15. https://doi.org/10.1016/j.system.2021.102673

- Henderson, C. (2021). The effect of feedback timing on L2 Spanish vocabulary acquisition in synchronous computer-mediated communication. Language Teaching Research, 25(2), 185–208. https://doi.org/10.1177/1362168819832907

- Herring, S.C. (2004). Computer-mediated discourse analysis: An approach to researching online behavior. In S.A. Barab, R. Kling, & J.H. Gray (Eds.), Designing for virtual communities in the service of learning (pp. 338–376). Cambridge University Press.

- Holden, J.T., & Westfall, P.J.-L. (2006). An instructional media selection guide for distance learning. United States Distance Learning Association.

- Hooper, D. (2020). Understanding communities of practice in a social language learning space. In J. Mynard, M. Burke, & D. Hooper (Eds.), Dynamics of a social language learning community. Beliefs, membership and identity (pp. 108–124). Multilingual Matters. https://doi.org/10.21832/9781788928915-015

- Hrastinski, S. (2008). Asynchronous and synchronous e-learning. Educause Quarterly, 31(4), 51–55.

- Kaymakamoglu, S.E. (2018). Teachers’ beliefs, perceived practice and actual classroom practice in relation to traditional (teacher-centered) and constructivist (learner-centered) teaching. Journal of Education and Learning, 7(1), 29–37.

- Ko, C.-J. (2022). Online individualized corrective feedback on EFL learners’ grammatical error correction. Computer Assisted Language Learning. Advance online publication. https://doi.org/10.1080/09588221.2022.2118783

- Kohnke, L., & Moorhouse, B.L. (2020). Facilitating synchronous online language learning through Zoom. RELC Journal, 53(1), 296–301. https://doi.org/10.1177/0033688220937235

- Lee, L. (2001). Online interaction: Negotiation of meaning and strategies used among learners of Spanish. ReCALL, 13(2), 232–244. https://doi.org/10.1017/S0958344001000829a

- Lim, M.H., & Aryadoust, V. (2022). A scientometric review of research trends in computer-assisted language learning (1977–2020). Computer Assisted Language Learning, 35(9), 2675–2700. https://doi.org/10.1080/09588221.2021.1892768

- Lin, C.-H., Zhang, Y., & Zheng, B. (2017). The roles of learning strategies and motivation in online language learning: A structural equation modeling analysis. Computers & Education, 113, 75–85. https://doi.org/10.1016/j.compedu.2017.05.014

- Lin, C.H., Zheng, B., & Zhang, Y. (2017). Interactions and learning outcomes in online language courses. British Journal of Educational Technology, 48(3), 730–748. https://doi.org/10.1111/bjet.12457

- Lyster, R. (2004). Differential effects of prompts and recasts in form-focused instruction. Studies in Second Language Acquisition, 26(3), 399–432. https://doi.org/10.1017/S0272263104263021

- Malik, A.H., Jalall, M.A., RazaAbbasi, S.A., & Rahid, A. (2023). Exploring the impact of excessive teacher talk time on participation and learning of English language learners. VFAST Transactions on Education and Social Sciences, 11(2), 43–55.

- Mercer, S., & Dörnyei, Z. (2020). Engaging language learners in contemporary classrooms. Cambridge University Press.

- Meskill, C., & Anthony, N. (2013). Managing synchronous polyfocality in new media/new learning: Online language educators’ instructional strategies. System, 42, 177–188. https://doi.org/10.1016/j.system.2013.11.005

- Miao, J.M., Chang, J.M., & Ma, L. (2022). Teacher-student interaction, student-student interaction and social presence: Their impacts on learning engagement in online learning environments. Journal of Genetic Psychology, 183(6), 514–526. https://doi.org/10.1080/00221325.2022.2094211

- Moorhouse, B.L., Li, Y., & Walsh, S. (2023). E-classroom interactional competencies: Mediating and assisting language learning during synchronous online lessons. RELC Journal, 54(1), 114–128. https://doi.org/10.1177/0033688220985274

- Moorhouse, B.L., Walsh, S., Li, Y., & Wong, L.L.C. (2022). Assisting and mediating interaction during synchronous online language lessons: Teachers’ professional practices. TESOL Quarterly, 56(3), 934–960. https://doi.org/10.1002/tesq.3144

- Muñoz-Basols, J., Rodríguez-Lifante, A., & Cruz-Moya, O. (2017). Perfil laboral, formativo e investigador del profesional de español como lengua extranjera o segunda (ELE/EL2): datos cuantitativos y cualitativos. Journal of Spanish Language Teaching, 4(1), 1–34. https://doi.org/10.1080/23247797.2017.1325115

- Muñoz-Basols, J., Muñoz-Calvo, M., & Suárez García, J. (2014). Hacia una internacionalización del discurso sobre la enseñanza del español como lengua extranjera. Journal of Spanish Language Teaching, 1(1), 1–14. https://doi.org/10.1080/23247797.2014.918402

- Muñoz-Basols, J., & Hernández Muñoz, N. (2019). El español en la era global: agentes y voces de la polifonía panhispánica. Journal of Spanish Language Teaching, 6(2), 79–95. https://doi.org/10.1080/23247797.2020.1752019

- Muñoz-Basols, J., Neville, C., Lafford, B. A., & Godev, C. (2023). Potentialities of applied translation for language learning in the era of artificial intelligence. Hispania, 106(2), 171–194. https://doi.org/10.1353/hpn.2023.a899427

- Muñoz-Basols, J. (2019). Going beyond the comfort zone: Multilingualism, translation and mediation to foster plurilingual competence. Language, Culture and Curriculum, 32(3), 299–321. https://doi.org/10.1080/07908318.2019.1661687

- Ng, C., Yeung, A.S., & Hon, R.Y.H. (2006). Does online language learning diminish interaction between student and teacher? Educational Media International, 43(3), 219–232. https://doi.org/10.1080/09523980600641429

- O’Reilly, J., & García-Castro, V. (2022). Exploring the relationship between foreign language anxiety and students’ online engagement at UK universities during the Covid-19 pandemic. Language Learning in Higher Education, 12(2), 409–427. https://doi.org/10.1515/cercles-2022-2054

- Reuge, N., Jenkins, R., Brossard, M., Soobrayan, B., Mizunoya, S., Ackers, J., Jones, L., & Taulo, W. G. (2021). Education response to COVID 19 pandemic, a special issue proposed by UNICEF: Editorial review. International Journal of Educational Development, 87, 102485. https://doi.org/10.1016/j.ijedudev.2021.102485

- Roach, V.A., & Attardi, S.M. (2022). Twelve tips for applying Moore’s Theory of Transactional Distance to optimize online teaching. Medical Teacher, 44(8), 859–865. https://doi.org/10.1080/0142159X.2021.1913279

- Rovai, A.P. (2002). Building sense of community at a distance. International Review of Research in Open and Distance Learning, 3(1), 1–16.

- Russell, V., & Murphy-Judy, K. (2021). Teaching language online: A guide for designing, developing, and delivering online, blended, and flipped language courses. Routledge.

- Russell, V. (2020). Language anxiety and the online learner. Foreign Language Annals, 53(2), 338–352. https://doi.org/10.1111/flan.12461

- Sert, O. (2015). Social interaction and L2 classroom discourse. Edinburgh University Press.

- Shimray, R., & Wangdi, T. (2023). Boredom in online foreign language classrooms: Antecedents and solutions from students’ perspective. Journal of Multilingual and Multicultural Development. Advance online publication. https://doi.org/10.1080/01434632.2023.2178442

- Strawbridge, T. (2021). Modern language: Interaction in conversational NS-NNS video SCMC eTandem exchanges. Language Learning & Technology, 25(2), 94–110. http://hdl.handle.net/10125/73435

- Strawbridge, T. (2023). The relationship between social network typology, L2 proficiency growth, and curriculum design in university study abroad. Studies in Second Language Acquisition, 45(5), 1131–1161. https://doi.org/10.1017/S0272263123000049

- Sun, H.-L., Sun, T., Sha, F.-Y., Gu, X.-Y., Hou, X.-R., Zhu, F.-Y., & Fang, P.-T. (2022). The influence of teacher–student interaction on the effects of online learning: Based on a serial mediating model. Frontiers in Psychology, 13. https://doi.org/10.3389/fpsyg.2022.779217

- Tsai, Y.-R., & Talley, P. C. (2014). The effect of a course management system (CMS)-supported strategy instruction on EFL reading comprehension and strategy use. Computer Assisted Language Learning, 27(5), 422–438. https://doi.org/10.1080/09588221.2012.757754

- Walsh, S. (2006). Investigating classroom discourse. Routledge.

- Walsh, S. (2013). Classroom discourse and teacher development. Edinburgh University Press.

- Walsh, S., & Sert, O. (2019). Mediating L2 learning through classroom interaction. In X. Gao (Ed.), Second handbook of English language teaching (pp. 737–755). Springer.

- Wang, X.P., & Li, Y. (2022). The predictive effects of foreign language enjoyment, anxiety, and boredom on general and domain-specific English achievement in online English classrooms. Frontiers in Psychology, 13, 1–12. https://doi.org/10.3389/fpsyg.2022.1050226

- Xiao, J. (2017). Learner-content interaction in distance education: The weakest link in interaction research. Distance Education, 38(1), 123–135. https://doi.org/10.1080/01587919.2017.1298982

- Yacci, M. (2000). Interactivity demystified: A structural definition for online learning and intelligent CBT. Educational Technology, 40(4), 5–16.

- Zhang, Y., Zheng, B., & Tian, Y. (2022). An exploratory study of English-language learners’ text chat interaction in synchronous computer-mediated communication: Functions and change over time. Computer Assisted Language Learning. Advance online publication. https://doi.org/10.1080/09588221.2022.2136202