ABSTRACT

Reliable and valid cognitive screening tools are essential in the assessment of those with traumatic brain injury (TBI). Yet, there is no consensus about which tool should be used in clinical practice. This systematic review assessed psychometric properties of cognitive screening tools for detecting cognitive impairment in TBI. Inclusion criteria were: peer-reviewed validation studies of a cognitive screening tool(s); with a sample of adults aged 18–80 diagnosed with TBI (mild-severe); and with psychometrics consistent with COSMIN guidelines. Published literature was retrieved from MEDLINE, Web of Science Core Collection, EMBASE, CINAHL, and PsycINFO on 27 January 2022. A narrative synthesis was performed. Thirty-four studies evaluated the psychometric properties of a total of 22 cognitive screening tools, in a variety of languages. Properties assessed included structural validity, internal consistency, reliability, criterion validity (or diagnostic test accuracy), convergent/divergent validity, and discriminant validity. The Montreal Cognitive Assessment (MoCA) and the Mini Mental State Examination (MMSE) were the most widely validated cognitive screening tools for use in TBI. The MoCA had the most promising evidence of its psychometric properties, which has implications for clinical practice. Future research should aim to follow standard criteria for psychometric studies to allow meaningful comparisons across the literature.

Introduction

Traumatic brain injuries (TBI) are a prevalent cause of hospital admission, with 155,919 cases of head injury presenting to UK hospitals annually (Headway, Citation2017). The Global Burden of Disease study estimated that there were 27 million new cases of TBI in 2016 (James et al., Citation2019). Cognitive impairments, particularly in attention, memory and executive function domains, are common in those with moderate-severe TBI, and in the acute phase of mild TBI (Barman et al., Citation2016). In addition, cognitive impairment is associated with real-life functional impairment in TBI populations (Wilson et al., Citation2021).

A complete neuropsychological assessment is the gold-standard method of identifying cognitive impairment in TBI, allowing the detection and characterization of difficulties post-TBI in multiple domains, and synthesizing the findings with consideration of the wider biopsychosocial framework. However, this approach is costly and resource intensive, and if all patients were to access neuropsychological assessment, lengthy waits may result. Given the prevalence of TBI and the demands on services, there is a need for brief, valid, and reliable screening tools. Cognitive screening tools are designed or used to screen for cognitive impairment and typically include a brief set of cognitive test items. They can be multidimensional, with different sub-scales each aiming to measure different cognitive functions separately, or unidimensional, having an overall score representing overall cognitive function. A standard brief cognitive test, often assessing one domain, may be trialled as a screening tool. Screening tools have the potential to identify cognitive impairment in an efficient and cost-effective way at an early stage, allowing the targeting of gold-standard neuropsychological assessment, as well as rehabilitation (NICE, Citation2014; Scottish Acquired Brain Injury Network, Citation2017; Teager et al., Citation2020). While they have the benefit of detecting possible cognitive impairment at an early stage, they do not allow for the same level of detail and understanding of a person’s cognitive function as a neuropsychological assessment.

A variety of cognitive screening tools exist which may be helpful for detecting cognitive impairment in TBI (Abd Razak et al., Citation2019; Cullen et al., Citation2007). However, the heterogeneity of cognitive impairments in TBI has presented a challenge to researchers to identify valid and reliable tools (Teager et al., Citation2020). A number of individual studies were identified which reported on the validity of certain cognitive screening tools, such as the Mini-Mental State Examination (MMSE) and the Montreal Cognitive Assessment (MoCA), in TBI populations (Gaber, Citation2008; Zhang et al., Citation2016). These tools were initially developed for and are validated for use in dementia populations. Major trauma centres often use widely available tools to assess TBI patients, such as the Addenbrooke’s Cognitive Examination-III (Hsieh et al., Citation2013) and the MoCA (Nasreddine et al., Citation2005; Teager et al., Citation2020), although the psychometric properties of these tools are either unknown or are yet to be summarized in a meaningful way.

A rigorous review of the psychometric properties of cognitive screening measures for use in TBI has never been completed. One previous review was identified; however, it focused only on diagnostic test accuracy designs and collated the findings of included studies, without evidence-based critical review (Canadian Agency for Drugs and Technologies in Health (CADTH), Citation2014). Other systematic reviews have been published on cognitive screening tool use in stroke populations, and have found that multi-domain tools such as the MoCA and Oxford Cognitive Screen (OCS) demonstrated acceptable psychometric qualities (Kosgallana et al., Citation2019; Stolwyk et al., Citation2014). However, important differences exist between stroke and TBI populations in pathology and the most prevalent profiles of cognitive impairment. Zhang et al. (Citation2016) compared the validity of two different cognitive screening tools in TBI and stroke populations. The sensitivity of these tools to identify cognitive impairment differed between TBI and stroke groups, suggesting the psychometric properties of cognitive screening tools should not be assumed to be consistent across brain injury groups. No systematic review or meta-analysis could be identified on the psychometric properties of cognitive screening tools, specifically in TBI populations.

Therefore, there is a need to review the evidence for the psychometric properties of cognitive screening tools in TBI. This will support the refinement of clinical guidelines which, in turn, would contribute to evidence-based practice.

Objectives

This systematic review aimed to determine the validity and reliability of cognitive screening tools for detecting cognitive impairment in TBI populations. Research questions were:

How reliable are the screening tools used in TBI populations?

How valid are the screening tools used in TBI populations?

A secondary objective was to determine the comparative validity and reliability of identified screening tools between different severities (mild and moderate/severe) of TBI.

Materials and methods

Protocol and registration

This systematic review was written in accordance with PRISMA (Page et al., Citation2021; Appendix 1 in the Supplement). The protocol was registered on PROSPERO on 11/01/22, and amended on 27/07/22 with additional exclusion criteria (Registration number CRD42022297346 [available from: https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=297346]).

Eligibility criteria

All eligible articles with an English language version, up to the search date (27/01/22) were included. During screening and extraction, eligibility criteria were clarified to account for unanticipated nuances in the literature.

Patient population

Studies were required to include human adults (aged 18–80) diagnosed with TBI of any severity or stage (mild, moderate, or severe; acute or post-acute). The TBI may have been sustained when the individual was <18 years old. TBI was defined as an injury to the brain caused by an external force. Mixed samples which included conditions other than TBI (including other acquired brain injuries [ABI] such as stroke) in the same analysis with people with TBI, were excluded. If the study included a mixed sample of children and adults, a separate analysis with adults 18–80 only was required. Studies with adult participants which did not specify an upper or lower age limit were included.

Index test

Studies were required to include a cognitive screening test or tool, using the definition outlined in Cullen et al. (Citation2007); the test must have been designed to screen for cognitive impairment or be used for that purpose, have an administration time of less than 20 min and be available in English. Screens were required to be administered to patients and directly measure their cognitive performance. The screen could assess multiple domains or a single domain of cognitive function. Screening tests were excluded which were measures of, or were being used to measure: functional ability (including driving); malingering, effort, or performance validity; or consciousness, lower-order, subcortical or sensory functions (including basic visuo-ocular, vestibular, or auditory function). Where relevant, it was recorded whether a “Modified” (i.e., an adapted or non-standard version of the original tool) or non-English language version was utilized.

Comparator test

Studies which included a comparator test (i.e., any other screening test that may have been used in the study for comparison) were included, but this was not a requirement.

Outcomes of interest

A variety of psychometric outcomes were accepted depending on the study design. Accepted psychometric qualities and their measurement properties were consistent with those outlined in the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) guidelines (Mokkink et al., Citation2018). Examples of permitted psychometric qualities, designs, and statistics are found in Table 1; for full details of permitted outcomes please see the COSMIN Manual (pp. 28–29; Mokkink et al., Citation2018).

Table 1. Examples of permitted psychometric qualities, designs, and statistics.

Test-retest reliability was only recorded where the researchers deliberately administered the measure twice in close succession, within a baseline phase during which natural change is unlikely to have occurred (e.g., consecutive days within the first week in a rehabilitation ward, or during the baseline phase of an intervention study before the intervention was introduced). In addition to the above outcomes, we aimed to extract information on measurement error, cross-cultural validity, and responsiveness (only where a standard cognitive test was used as a comparator) in accordance with COSMIN (Mokkink et al., Citation2018). Outcomes related to clinical utility, feasibility or acceptability were recorded where available. These outcomes were all of equal interest and none were prioritized.

Study type

Included reports were full-length articles (in a peer-reviewed journal) reporting primary research (not systematic reviews or conference abstracts etc.). Eligible studies assessed the psychometric properties of a cognitive screening tool, in accordance with the outcomes of interest, and had a group design (i.e., an observational or experimental design, but not a single case study or case series design). Examples of excluded designs were:

the screening tool is compared to a self-report measure or brain injury measure or across different groups; but is being used as an outcome measure, there is no clear psychometric relevance or it is not being validated as a screen

a cognitive screening tool is used to track improvement in an intervention or recovery study (where no additional tool is used to compare the screen to a gold-standard or any additional relevant psychometrics are calculated)

the tool is evaluated in conditions not ecologically valid for clinical use; for example outside of a clinic setting

psychometrics are present but are focused on an aspect of the tool other than cognitive function (e.g., performance validity).

In addition to the above criteria, after full-text screening, additional exclusion criteria were applied to account for unanticipated nuances in the literature:

Sports-related concussion (SRC) criteria: studies with a SRC sample were excluded. These studies often used measures designed for use in SRC settings (i.e., at pitch-side, in the acute phase, integrating neurological exam elements). Results were often compared to “baseline” screening, conducted pre-morbidly. Given these factors, it was felt that this area of literature was distinct and less applicable to clinical settings, and that its inclusion would increase the heterogeneity of included studies.

COSMIN criteria: COSMIN outlines that studies should be excluded if they “only use the PROM as an outcome measurement instrument … ” or “ … studies in which the PROM is used in a validation study of another instrument”, as screening would be “extremely time consuming” without this criterion (Mokkink et al., Citation2018, p. 20). Therefore, studies were excluded where the purpose of the study was not to validate the screen and/or where the screen is used as an outcome measure or to validate another tool. Studies which did not have a design consistent with COSMIN (e.g., using multiple regression) were excluded.

Information sources, search strategy, and study selection

Scoping searches were conducted to refine search terms. Key papers identified in scoping were noted. The sensitivity of the search strategy was evaluated by its ability to detect the key papers. Search terms included variants of keywords related to population (e.g., “Traumatic head injury”, “head injury”, “brain injury” or “concussion”), construct (e.g., “cognition”, “neuro psychological”, “executive function” or “memory”), type of instrument (e.g., “screening tool”, “screening assessment”, “screening test” or “screening instrument”) and measurement properties (e.g., “psychometric”, “validity”, “reliability”, “sensitivity”). The full search strategy is outlined in Appendix 2 in the Supplement. No additional limits were used in search filters. Published literature was retrieved from MEDLINE, Web of Science Core Collection, EMBASE, CINAHL, and PsycINFO, from inception up until the date of extraction (27/01/22). Search results were managed in the first author’s RefWorks library (www.refworks.com/refworks2/). Duplicates were removed during database extraction. Information of interest was extracted into an Excel spreadsheet and decision making was recorded.

Titles and/or abstracts were screened by J.M. for eligibility. The second reviewer (A.F.) independently repeated this process for 100 records to check for consistency. For the second screening phase, the full text was read by J.M. for all records identified as “maybe eligible” at the title/abstract stage and a decision was made about its eligibility. A.F. independently repeated this process for 50 records to check for consistency.

The reference lists of eligible papers and relevant systematic reviews were then searched by J.M. by hand (backward citation). Subsequent papers which have cited eligible papers, identified electronically using the “cited by” function in Google Scholar, were searched (forward citation) by J.M. on 10/07/22. No additional studies or data were sought by contacting authors.

Data collection and data items

Three data extraction templates were created to extract relevant data from eligible studies. Data extracted included: patient characteristics (sample size, age, sex, TBI severity, time since injury; for both TBI and relevant control groups); cognitive screens (tool name, original reference, domains assessed, items, range of scores, time to administer etc.) and psychometric properties (including psychometric quality assessed, details of the study design and relevant measurement property; as defined in COSMIN). Additional information was gathered (including inclusion/exclusion criteria, TBI diagnosis criteria, and study setting) but was not reported for brevity. J.M. completed all data extraction and A.F. checked extraction for five of the papers. Only minor formatting and spelling errors were identified that would have been recognized by the primary author at the synthesizing stage.

Risk of bias

The COSMIN Risk of Bias (RoB) checklist was used by J.M. to assess the quality of all included studies (Mokkink et al., Citation2021). It is a consensus-based checklist for evaluating the methodological quality of psychometric studies. Risk of bias was rated as: “very good” (V), “adequate” (A), “doubtful” (D), “inadequate” (I) or “not applicable” (N) according to COSMIN criteria. A “worst score counts” approach was used for the overall rating. Additional details are given in Appendix 3 in the Supplement. It should be noted that the COSMIN checklist (Mokkink et al., Citation2018) was developed for evaluation of patient-reported outcome measures (PROMs); however, it can be utilized for performance-based measures (for example, De Martino et al., Citation2020). Risk of bias (for any relevant measurement properties) was assessed independently for five studies by A.F.
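The “worst score counts” rule described above can be illustrated with a minimal Python sketch. The function name, the rating order list, and the example item ratings are hypothetical and introduced only for illustration; the sketch assumes that “not applicable” items are ignored and that the overall rating is simply the lowest rating among the remaining items.

```python
# Ratings ordered from best to worst; "N" (not applicable) is handled separately.
RATING_ORDER = ["V", "A", "D", "I"]


def worst_score_counts(item_ratings):
    """Return the overall risk-of-bias rating for one checklist box.

    Assumption: items rated "N" are ignored, and the overall rating is the
    worst (lowest) rating given to any remaining item.
    """
    applicable = [r for r in item_ratings if r != "N"]
    if not applicable:
        return "N"
    # The rating with the highest index in RATING_ORDER is the worst one.
    return max(applicable, key=RATING_ORDER.index)


# Hypothetical item ratings for a single study and measurement property.
print(worst_score_counts(["V", "A", "N", "D"]))  # prints "D"
```

Under this rule a single “doubtful” item is enough to lower the overall rating, however many items were rated “very good”.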

The Quality Assessment of Diagnostic Accuracy Studies (QUADAS 2) tool was used to assess the quality, only for studies which specifically claimed to have a “diagnostic accuracy” design (or similar) (Whiting et al., Citation2011). It includes items which assess both the risk of bias and applicability of results. J.M. and A.F. independently rated the quality of three such studies.

Summary measures and synthesis of results

The evidence was graded using the COSMIN checklist for good measurement properties by J.M. Measurement properties were rated as (+) sufficient; (-) insufficient; or (?) indeterminate, using criteria defined in COSMIN, which varied for each type of design (for example, criterion validity designs should report an AUC ≥0.7 for a sufficient (+) rating). A narrative synthesis was performed, with data presented in text, tables, and figures. Each study was summarized including a description of the sample characteristics. Details of cognitive screening tool(s) used were reported. The type(s) of validity and reliability, relevant details of the study design, and the resulting psychometric statistic(s) were reported. Measurement properties of the tools in TBI populations were discussed, considering the quality of the studies. The review employed the COSMIN-recommended Grading of Recommendations, Assessment, Development, and Evaluation (GRADE) approach to synthesize the evidence across studies. GRADE ratings were determined by A.F. and ambiguities were resolved by discussion with the other authors. Please see Appendix 3 in the Supplement for more detail on how this review adapted the COSMIN process.
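As an illustration of the criterion validity rule mentioned above (AUC ≥0.7 for a sufficient rating), the following Python sketch estimates an AUC from two groups of scores and converts it to a rating. The function names and the scores are hypothetical, lower scores are assumed to indicate impairment, and treating an unreported AUC as indeterminate is an assumption made for this sketch rather than a statement of the procedure used in this review.

```python
def auc_lower_is_impaired(impaired_scores, unimpaired_scores):
    """Estimate the AUC as the proportion of (impaired, unimpaired) pairs in
    which the impaired participant has the lower score (ties count as 0.5)."""
    wins = 0.0
    for i in impaired_scores:
        for u in unimpaired_scores:
            if i < u:
                wins += 1.0
            elif i == u:
                wins += 0.5
    return wins / (len(impaired_scores) * len(unimpaired_scores))


def rate_criterion_validity(auc=None):
    """Map an AUC to a rating: "+" sufficient, "-" insufficient, "?" indeterminate."""
    if auc is None:  # assumption: no AUC reported means the rating is indeterminate
        return "?"
    return "+" if auc >= 0.70 else "-"


# Hypothetical screening scores (not taken from any included study).
impaired = [18, 21, 22, 25]
unimpaired = [24, 26, 27, 29]
auc = auc_lower_is_impaired(impaired, unimpaired)
print(auc, rate_criterion_validity(auc))  # prints 0.9375 +
```

The pairwise estimate is equivalent to the area under the empirical ROC curve, so no cut-off score has to be chosen in advance.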

Results

The initial title-abstract calibration between J.M. and A.F. resulted in “fair” agreement (81.2% agreement: Cohen’s Kappa = 0.29). After ambiguities within the criteria were clarified, 100% agreement was reached. Independent full-text screening resulted in 92.0% agreement between reviewers (Cohen’s Kappa = 0.73, “substantial agreement”). Remaining disagreements were resolved by consensus between all three authors.
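High percentage agreement alongside a low kappa, as in the initial calibration above, commonly arises when one decision (here, exclusion) dominates, because much of the raw agreement is then expected by chance. The Python sketch below shows the calculation for two raters and a binary decision. The function name and the cell counts are hypothetical and chosen only to show the pattern; they are not the screening counts from this review.

```python
def cohens_kappa(both_yes, r1_only, r2_only, both_no):
    """Return (observed agreement, Cohen's kappa) for two raters and a
    yes/no decision, from the four cells of the agreement table.

    Note: kappa is undefined when expected agreement equals 1.
    """
    n = both_yes + r1_only + r2_only + both_no
    p_observed = (both_yes + both_no) / n
    r1_yes = (both_yes + r1_only) / n  # proportion rated "yes" by rater 1
    r2_yes = (both_yes + r2_only) / n  # proportion rated "yes" by rater 2
    # Agreement expected by chance from each rater's marginal proportions.
    p_expected = r1_yes * r2_yes + (1 - r1_yes) * (1 - r2_yes)
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return p_observed, kappa


# Hypothetical counts for 100 screened records, most of which both raters exclude.
p_obs, kappa = cohens_kappa(both_yes=5, r1_only=9, r2_only=10, both_no=76)
print(round(p_obs, 2), round(kappa, 2))  # prints 0.81 0.23
```

In this example 81% raw agreement corresponds to a kappa of about 0.23, because roughly 75% agreement would be expected by chance alone given how rarely either rater chose inclusion.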

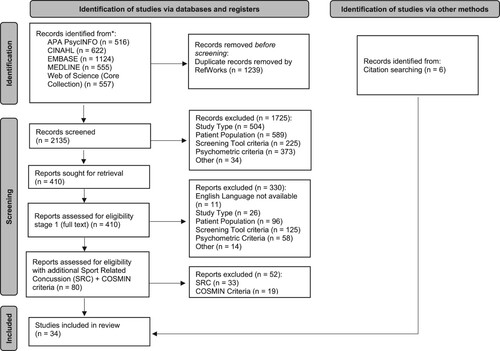

Eighty studies were deemed eligible for inclusion via the above screening process (see PRISMA flow chart in Figure 1). Several studies which might have been expected to be included were found to be ineligible, including Gaber (Citation2008) and Hazan et al. (Citation2017), whose participant inclusion criteria did not meet the age criteria of this review.

Figure 1. PRISMA flowchart displaying excluded and included studies at each stage of the review process.

After applying the additional COSMIN and SRC criteria, and with the addition of six papers from citation searching, 34 publications were eligible for inclusion, in which 22 cognitive screening tools were evaluated. Within these 34 publications there were a greater number of individual studies (i.e., studies of each individual psychometric property per each tool): 4 structural validity, 7 internal consistency, 3 inter-rater reliability, 3 test-retest reliability, 32 criterion validity, 14 convergent or divergent validity, and 36 discriminant validity studies. Sample characteristics and cognitive screens are outlined in Tables 2 and 3, respectively. Demographic, clinical, and screening tool metrics sought by this review were not consistently reported, and missing data indicate that this information was not available. Information not reported was sought from referenced sources within the paper where available. The studies were heterogeneous in terms of TBI severity (13 mild or “concussion”; 6 moderate-severe; 11 mixed; and 4 unclear) and time since injury (range of mean durations: 5.6 h to 60.33 months).

Table 2. Sample characteristics.

Table 3. Screening tools.

Of the 22 tools, administration time ranged from 2 to 20 min (where reported). Some measures were designed as screening tools e.g., MoCA; others were designed as brief tests and were being evaluated as a cognitive screen e.g., Controlled Oral Word Association Test (COWAT). Some evaluated multiple cognitive domains e.g., MMSE; others focused on one area of cognitive function e.g., Bethesda Eye and Attention Measure (BEAM).

Risk of bias

COSMIN RoB ratings are reported within the detailed psychometric results tables which can be found in the Supplement (Tables S1-S3). Initial agreement in RoB ratings between independent reviewers was low (<50%). Key areas of ambiguity were clarified, and after independent rating for an additional three papers, 100% agreement was reached.

While RoB relating to structural validity, criterion validity, and internal consistency was generally rated as “very good”, issues were raised within reliability and discriminant validity designs. Of note, many studies did not report an effect size or correlation statistic for their discriminant validity analyses, giving an inadequate understanding of the magnitude of difference between groups. Other issues included: limited information of diagnosis procedures; the time delay between reliability measurements; and poor reporting of statistical information.

Independent rating using the QUADAS 2 tool resulted in 76% agreement between the reviewers, and differences in opinion were discussed until consensus was reached. The results (Table 4) revealed that all diagnostic accuracy studies had a source of potential bias (diagnostic accuracy for either TBI or cognitive impairment). In most studies, examiners were not blinded to patient status, participants were recruited into pre-determined groups based on diagnosis status, and exploratory cut-offs were used in ROC curve analyses; all of these are potential sources of bias, as outlined in QUADAS 2.

Table 4. QUADAS 2 Risk of Bias and Applicability Ratings (Diagnostic Test Accuracy Studies only).

Measurement properties

Detailed psychometric properties of the tools are reported in Tables S1–S3 in the Supplement.

No studies measured cross-cultural validity, measurement invariance, measurement error, or responsiveness (in a way that was suggested in the COSMIN guidelines and which could be evaluated using their criteria). Consequently, the psychometric properties that could be assessed for the tools reviewed were structural validity, internal consistency, inter-rater reliability, test-retest reliability, criterion validity, and convergent or discriminant hypothesis testing. There was significant heterogeneity across studies in the types of psychometric qualities assessed and the resulting outcome statistics. Discriminant validity was the most common type of validity assessed (36 results, counted per study, per tool), followed by criterion validity (32 results), convergent/divergent validity (14 results), internal consistency (7 results), structural validity (4 results) and reliability (3 results). Ten studies also specified that they had a “diagnostic accuracy” design (or similar) (Table 4).

After consideration of risk of bias, attention can be paid to the measurement property ratings of sufficient (+), insufficient (-) or indeterminate (?). It should be noted that tools varied in language version, which may affect confidence in the results. Given the heterogeneity of the literature and the poor quality of reporting (e.g., in discriminant validity studies), it was not possible to evaluate the psychometrics of tools across severities. As per the recommended COSMIN process, overall ratings were produced for each assessed property for each instrument. These ratings, together with GRADE ratings of the quality of the evidence used to form these ratings, can be found in Tables 5 and 6. GRADE ratings were not produced when the overall rating was indeterminate or inconsistent, or in cases where a firm rating was given but sample size information was lacking (as this prevents evaluation of the GRADE imprecision criterion).

Table 5. Overall property rating and GRADE rating of quality of evidence for structural validity, internal consistency, and reliability.

Table 6. Overall property rating and GRADE rating of quality of evidence for criterion validity and hypothesis testing.

Structural validity

The MMSE and MoCA (Swahili versions) both demonstrated sufficient structural validity in a TBI sample with high and moderate quality of evidence respectively. Cognistat structural validity was rated as indeterminate due to insufficient outcomes reporting from the Rasch analyses.

Internal consistency

Sufficient internal consistency properties were established for the MoCA (based upon high-quality evidence), RUDAS (Rowland Universal Dementia Assessment Scale, based on moderate quality evidence), and SLUMS (Saint Louis University Mental Status Examination, based on low-quality evidence). Insufficient internal consistency in particular subtests led to insufficient property ratings for the Cognistat (based on low-quality evidence) and RQCST (Revised Quick Cognitive Screening Test, with high-quality evidence). One high-quality study was extracted, which found internal consistency of the MMSE to be insufficient.
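Internal consistency is most often summarized with Cronbach's alpha, and COSMIN treats values of at least 0.70 as sufficient for a unidimensional scale or subscale. The Python sketch below shows how alpha is computed from item-level scores. The function name and the data are hypothetical, and the included studies may have used other statistics or software.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a participants-by-items table of scores.

    rows: one list of item scores per participant (all of equal length).
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score)
    """
    k = len(rows[0])
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores of five participants on a three-item subtest.
scores = [[2, 3, 3], [1, 1, 2], [3, 3, 2], [0, 1, 1], [2, 2, 3]]
alpha = cronbach_alpha(scores)
print(round(alpha, 2), "sufficient" if alpha >= 0.70 else "insufficient")
```

Alpha rises as items covary more strongly relative to their individual variances, which is why a subtest made up of loosely related items can receive an insufficient rating even when the total score performs well.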

Reliability

The inter-rater reliability property was found sufficient for three measures, the Cognistat (based on low-quality evidence), RUDAS (moderate quality evidence), and SLUMS (based on low-quality evidence). The Cognistat received an insufficient rating for test-retest reliability (based on very low-quality evidence), the RUDAS a sufficient rating (based on moderate quality evidence) and the SLUMS an insufficient rating (based on very low-quality evidence).

Criterion validity

Four studies found the MoCA had sufficient criterion validity to distinguish between TBI patients and controls. An additional study found the MoCA had sufficient criterion validity for those who were impaired/not impaired on the RBANS (Repeatable Battery for the Assessment of Neuropsychological Status). Similarly, four studies found the MMSE had sufficient criterion validity to distinguish between TBI patients and controls. However, one study found varying sensitivity and specificity values for MMSE domains to predict impairment on corresponding standard neuropsychological tests; many of these fell below the 80% standard typically suggested. A number of measures had promising initial evidence of criterion validity, but each was supported by only 1–2 studies. These were the COWAT (high-quality evidence), IFS (INECO Frontal Screen, moderate quality evidence), RQCST (high-quality evidence), RUDAS (high-quality evidence), SLUMS (high-quality evidence), SCWT (Stroop Colour-Word Test, high-quality evidence), and TMT (Trail Making Test, high-quality evidence). Insufficient overall ratings were awarded to the ImPACT (Immediate Post-Concussion Assessment and Cognitive Testing) based on moderate quality evidence, as was the case for the CogState (moderate quality evidence), together with the BEAM and King-Devick tests (which were not given GRADE quality ratings due to lacking sample size information). The SAC overall rating was inconsistent and the overall rating was indeterminate for the 2&7, Cognistat, CPTA, Digit Span, and PASAT.
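The sensitivity and specificity values referred to above are derived from a two-by-two table of screen results against the reference standard. A minimal Python sketch follows, in which the function name and the counts are hypothetical and the 80% benchmark is the one mentioned in the text.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts.

    tp: impaired on the reference standard and flagged by the screen
    fn: impaired on the reference standard but missed by the screen
    tn: unimpaired on the reference standard and cleared by the screen
    fp: unimpaired on the reference standard but flagged by the screen
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical counts at a single cut-off score.
sens, spec = sensitivity_specificity(tp=42, fn=8, tn=35, fp=15)
print(f"sensitivity={sens:.2f} (meets 80%: {sens >= 0.80})")  # 0.84, True
print(f"specificity={spec:.2f} (meets 80%: {spec >= 0.80})")  # 0.70, False
```

A cut-off can meet the benchmark on one index and fail it on the other, as in this example, so both values need to be reported for each cut-off examined.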

Hypothesis testing: convergent validity

Given the heterogeneity of the measures used in convergent validity analyses it is difficult to draw conclusions across the studies. Cognitive screening tools, many of which have limited evidence of established validity in TBI samples, are mainly used as comparators. The two studies involving the MMSE showed different property ratings, producing an overall rating of inconsistent. Cognistat also received an overall rating of inconsistent because the one study which evaluated convergent validity found inconsistent results across different sub-test comparisons. Insufficient property ratings were awarded to the SCWT (no GRADE rating due to non-reporting of sample size) and the TMT and COWAT (based on moderate quality evidence), as well as the SAC (based on very low-quality evidence). However, the MoCA, RQCST, RUDAS, and SLUMS were each awarded a sufficient property rating, based on high-quality evidence. The MoCA was found to be moderately positively correlated with functional ability measures and the RQCST was found to be correlated with activities of daily living and quality of life measures; the RUDAS and SLUMS were each positively correlated with other cognitive screen scores (MMSE and MoCA).

Hypothesis testing: discriminant validity

There was a lack of clear hypotheses and poor effect size reporting across the discriminant validity studies; however, estimating effect sizes and applying hypotheses (outlined in the method section) allowed estimation of measurement properties across a wider range of studies. In general, most tools evaluated this way were awarded ratings of sufficient discriminant validity between TBI and control groups, although the GRADE ratings of evidence were variable. These tools were: BNIS, Cognistat, DCAT, IFS, and SNST (very low-quality evidence); SLUMS (low-quality evidence); MMSE, MoCA and RUDAS (moderate quality evidence); and SAC, SCWT and TMT (high-quality evidence). Three tools were awarded insufficient ratings: CogState (low-quality evidence), K-D (moderate quality evidence) and RTLT (very low-quality evidence). The BNIS orientation subscale was assessed in a separate study but the rating was indeterminate (very low-quality evidence).
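Where a study reports only group means, standard deviations, and sample sizes, a standardized effect size can still be estimated. The Python sketch below uses Cohen's d with a pooled standard deviation, which is one common choice; the estimator actually used in this review is not stated in this section, so the formula, the function name, and the example values are all assumptions made for illustration.

```python
import math


def cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_variance = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / math.sqrt(pooled_variance)


# Hypothetical summary statistics: controls versus a TBI group on a 30-point screen.
d = cohens_d(mean_1=27.0, sd_1=2.0, n_1=30, mean_2=24.0, sd_2=3.0, n_2=30)
print(round(d, 2))  # prints 1.18
```

A positive value here means that the control group scored higher than the TBI group, expressed in pooled standard deviation units.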

Discussion

This systematic review found a substantial literature on the psychometric properties of cognitive screening tools in TBI populations. The MoCA, in particular, demonstrated sufficient internal consistency and discriminant, convergent, structural, and criterion validity. The MMSE demonstrated sufficient structural, discriminant, and criterion validity. In contrast, convergent validity for the MMSE varied depending on the standard neuropsychological test comparator and there was evidence to suggest the internal consistency of the measure was insufficient. Tools such as RUDAS, SLUMS, SCWT, and RQCST demonstrated some promising indications of validity. The properties of other tools were generally inconsistent, insufficient, or indeterminate; and limited to a small number of studies. These findings have implications for the refinement of clinical guidelines and should inform clinical practice. With the current evidence base, the MoCA can be tentatively considered to be the most well-validated tool for TBI populations based on sufficient ratings for all assessed properties with quality of evidence being moderate or high for all ratings. Notable limitations are that there were no studies, identified through this review, which investigated its reliability or responsiveness. These gaps are particularly important, given the implications for detecting change over time in clinical and research contexts. The MMSE, though widely used and evaluated in moderate to high-quality studies, warrants some caution, given that it demonstrated sufficient validity in only some respects and there was high-quality evidence that its internal consistency was insufficient.

These findings should be considered in light of the heterogeneity across studies and the poor reporting of key sample characteristics (TBI severity and time since injury). Many studies assessed psychometric properties in acute or mild TBI settings, and these findings are unlikely to be generalizable to other clinical contexts, such as post-acute rehabilitation settings (Barman et al., Citation2016). Poor statistical reporting, poor reporting of TBI diagnosis methods, and time delays between reliability measurements were among the risk-of-bias issues identified.

These findings are broadly consistent with those in ABI (including stroke) populations, where tools whose items reflect a variety of cognitive domains, particularly those that assess executive function, such as the MoCA, are typically the most well-validated (Kosgallana et al., Citation2019; Stolwyk et al., Citation2014). TBI often results in multi-domain cognitive difficulties, including in attention, memory, and executive function, which may explain this finding (Barman et al., Citation2016). It is not apparent why the MMSE did not demonstrate adequate internal consistency. As it is more heavily weighted towards language, attention, and memory, its items may comparatively neglect domains more relevant to TBI than to dementia samples. It is notable that other multi-domain tools, such as the ACE-III (which is referenced in the SABIN guidelines) or the Oxford Cognitive Screen (OCS) (which has been found to have good criterion validity in stroke samples), have not been validated in TBI (Demeyere et al., Citation2015; Hsieh et al., Citation2013; Kosgallana et al., Citation2019; Scottish Acquired Brain Injury Network, Citation2017). Notably, many of the screening tools investigated, including the MoCA and MMSE, were originally designed for detecting cognitive impairment associated with dementia and hence tend to feature fewer executive function items (Folstein et al., Citation1975; Nasreddine et al., Citation2005). Many cognitive screens evaluated in this review assessed only one domain (for example, the BEAM, which measures visual attention) or did not measure key domains impacted in TBI (for example, the COWAT does not measure key aspects of memory function). Notably, the MMSE does not have an executive function subscale, but did show promising psychometric properties for use as a cognitive screen in this population in this review. It would be interesting to evaluate whether its psychometric properties could be improved with a supplementary executive function measure. An alternative approach may be to design a new, bespoke measure for use in TBI populations, tailored to capture the executive difficulties which are common in these patients (Barman et al., Citation2016).

A possible limitation is the exclusion of SRC samples, as those with SRC do present to clinical settings. However, the SRC literature had key distinctive features (noted in the methods section) which may have increased the heterogeneity of the studies further. It may therefore be beneficial to conduct a separate review of the SRC literature. Using COSMIN criteria may have excluded studies which contained relevant psychometric information. However, it would have been impractical to screen every study which inadvertently measured a tool’s validity. It also would not have been feasible or meaningful to attempt to rate the measurement properties of studies which used unusual designs or statistics that did not allow for comparison with COSMIN. Applying age criteria resulted in several studies being excluded. However, this decision was made because there are differences in the nature and assessment of TBI in adolescents or older adults, due to the interaction of developmental factors (Christensen et al., Citation2021; Peters & Gardner, Citation2018). As COSMIN was not designed for use with performance-based measures, its criteria for rating test–retest reliability designs do not consider the possible risk of practice effects, for example if a cognitive screen is re-administered in quick succession without an alternate version. On balance, this review chose to include test–retest reliability data as this is an important indicator of reliability; however, we acknowledge that practice effects may be a significant potential source of bias in the test–retest reliability designs. A further limitation is that, in the absence of structural validity data, tools were assumed to be unidimensional. However, for tools which may in fact be multi-dimensional, internal consistency across domains may not be relevant to consider. Moreover, some tools can be considered to assess multiple cognitive domains (e.g., MoCA), yet statistically be considered unidimensional.

A strength of this review is the use of two rigorous quality assessment tools, the COSMIN and the QUADAS-2, which contain detailed considerations on the assessment of psychometric methodology and which have been specifically designed for use in these types of studies. However, we acknowledge the methodological limitation that we did not use independent double-rating at all stages of the review, from screening to data extraction and quality rating. A second reviewer independently evaluated a pre-defined number of records at each stage according to the pre-registered protocol, and agreement between raters was carefully monitored and ratings were revised to achieve concordance, but our results may not be fully robust given the absence of gold-standard full double-rating. We also recognize that full double-rating is not always practical and that its absence does not preclude the production of a high-quality systematic review (Siddaway et al., Citation2019).

In conclusion, this review found that the MoCA had the most evidence for good psychometric qualities in TBI populations. However, its validity across key TBI characteristics requires further clarification, and additional psychometric qualities, including reliability and measurement error, need to be evaluated. Tools such as RUDAS, SLUMS, and RQCST show promise but require additional investigation. The MMSE was found to have insufficient internal consistency. However, if future structural validity studies find that the MMSE has multi-dimensional properties, then the finding of poor internal consistency may be less notable. Future research should clarify the validity of the tools identified in this review, and of additional multi-domain tools which have been validated in similar populations or which are used in clinical practice, particularly the ACE-III and OCS. Psychometric studies should take account of COSMIN and QUADAS-2 guidelines in their design, to allow for meaningful comparison across tools.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abd Razak, M. A., Ahmad, N. A., Chan, Y. Y., Mohamad Kasim, N., Yusof, M., Abdul Ghani, M. K. A., Omar, M., Abd Aziz, F. A., & Jamaluddin, R. (2019). Validity of screening tools for dementia and mild cognitive impairment among the elderly in primary health care: A systematic review. Public Health, 169, 84–92. https://doi.org/10.1016/j.puhe.2019.01.001

- Adjorlolo, S. (2016). Ecological validity of executive function tests in moderate traumatic brain injury in Ghana. The Clinical Neuropsychologist, 30(Suppl 1), 1517–1537. https://doi.org/10.1080/13854046.2016.1172667

- Adjorlolo, S. (2018). Diagnostic accuracy, sensitivity, and specificity of executive function tests in moderate traumatic brain injury in Ghana. Assessment, 25(4), 498–512. https://doi.org/10.1177/1073191116646445

- Barman, A., Chatterjee, A., & Bhide, R. (2016). Cognitive impairment and rehabilitation strategies after traumatic brain injury. Indian Journal of Psychological Medicine, 38(3), 172–181. https://doi.org/10.4103/0253-7176.183086

- Bin Zahid, A., Hubbard, M., Dammavalam, V., Balser, D., Pierre, G., Kim, A., Kolecki, R., Mehmood, T., Wall, S. P., Frangos, S. G., Huang, P. P., Tupper, D. E., Barr, W., & Samadani, U. (2018). Assessment of acute head injury in an emergency department population using sport concussion assessment tool - 3rd edition. Applied Neuropsychology: Adult, 25, 110–119. https://doi.org/10.1080/23279095.2016.1248765

- Borgaro, S. R., Kwasnica, C., Cutter, N., & Alcott, S. (2003). The use of the BNI screen for higher cerebral functions in assessing disorientation after traumatic brain injury. Journal of Head Trauma Rehabilitation, 18(3), 284–291. https://doi.org/10.1097/00001199-200305000-00006

- Borgaro, S. R., & Prigatano, G. P. (2002). Early cognitive and affective sequelae of traumatic brain injury: A study using the BNI screen for higher cerebral functions. Journal of Head Trauma Rehabilitation, 17(6), 526–534. https://doi.org/10.1097/00001199-200212000-00004

- Canadian Agency for Drugs and Technologies in Health. (2014). Screening tools to identify adults with cognitive impairment associated with a cerebrovascular accident or traumatic brain injury: Diagnostic accuracy. https://www.cadth.ca/sites/default/files/pdf/htis/nov-2014/RB0751%20Cognitive%20Assessments%20CVA%20and%20TBI%20Final.pdf

- Cheng, Y., Wang, Y.-Z., Zhang, Y., Wang, Y., Xie, F., Zhang, Y., Wu, Y.-H., Guo, J., & Fei, X. (2021). Comparative analysis of rowland universal dementia assessment scale and mini-mental state examination in cognitive assessment of traumatic brain injury patients. NeuroRehabilitation, 49(1), 39–46. https://doi.org/10.3233/NRE-210044

- Cheng, Y., Zhang, Y., Zhang, Y., Wu, Y.-H., & Zhang, S. (2022). Reliability and validity of the rowland universal dementia assessment scale for patients with traumatic brain injury. Applied Neuropsychology: Adult, 29(5), 1160–1166. https://doi.org/10.1080/23279095.2020.1856850

- Christensen, J., Eyolfson, E., Salberg, S., & Mychasiuk, R. (2021). Traumatic brain injury in adolescence: A review of the neurobiological and behavioural underpinnings and outcomes. Developmental Review, 59, Article 100943. https://doi.org/10.1016/j.dr.2020.100943

- Cicerone, K. D., & Azulay, J. (2002). Diagnostic utility of attention measures in postconcussion syndrome. The Clinical Neuropsychologist, 16(3), 280–289. https://doi.org/10.1076/clin.16.3.280.13849

- Coldren, R. L., Kelly, M. P., Parish, R. V., Dretsch, M., & Russell, M. L. (2010). Evaluation of the military acute concussion evaluation for use in combat operations more than 12 h after injury. Military Medicine, 175(7), 477–481. https://doi.org/10.7205/MILMED-D-09-00258

- Cole, W. R., Arrieux, J. P., Ivins, B. J., Schwab, K. A., & Qashu, F. M. (2018). A comparison of four computerized neurocognitive assessment tools to a traditional neuropsychological test battery in service members with and without mild traumatic brain injury. Archives of Clinical Neuropsychology, 33(1), 102–119. https://doi.org/10.1093/arclin/acx036

- Cullen, B., O’Neill, B., Evans, J. J., Coen, R. F., & Lawlor, B. A. (2007). A review of screening tests for cognitive impairment. Journal of Neurology, Neurosurgery & Psychiatry, 78(8), 790–799. https://doi.org/10.1136/jnnp.2006.095414

- De Martino, M., Santini, B., Cappelletti, G., Mazzotta, A., Rasi, M., Bulgarelli, G., Annicchiarico, L., Marcocci, A., & Talacchi, A. (2020). The quality of measurement properties of neurocognitive assessment in brain tumor clinical trials over the last 30 years: A COSMIN checklist-based approach. Neurological Sciences, 41(11), 3105–3121. https://doi.org/10.1007/s10072-020-04477-4

- Demeyere, N., Riddoch, M. J., Slavkova, E. D., Bickerton, W.-L., & Humphreys, G. W. (2015). The Oxford Cognitive Screen (OCS): Validation of a stroke-specific short cognitive screening tool. Psychological Assessment, 27(3), 883–894. https://doi.org/10.1037/pas0000082

- Doninger, N. A., Bode, R. K., Heinemann, A. W., & Ambrose, C. (2000). Rating scale analysis of the neurobehavioral cognitive status examination. Journal of Head Trauma Rehabilitation, 15(1), 683–695. https://doi.org/10.1097/00001199-200002000-00007

- Doninger, N. A., Ehde, D. M., Bode, R. K., Knight, K., Bombardier, C. H., & Heinemann, A. W. (2006). Measurement properties of the neurobehavioral cognitive status examination (cognistat) in traumatic brain injury rehabilitation. Rehabilitation Psychology, 51(4), 281–288. https://doi.org/10.1037/0090-5550.51.4.281

- Ettenhofer, M. L., Hungerford, L. D., & Agtarap, S. (2021). Multimodal neurocognitive screening of military personnel with a history of mild traumatic brain injury using the Bethesda eye & attention measure. Journal of Head Trauma Rehabilitation, 36(6), 447–455. https://doi.org/10.1097/HTR.0000000000000683

- Fischer, T. D., Red, S. D., Chuang, A. Z., Jones, E. B., McCarthy, J. J., Patel, S. S., & Sereno, A. B. (2016). Detection of subtle cognitive changes after mTBI using a novel tablet-based task. Journal of Neurotrauma, 33(13), 1237–1246. https://doi.org/10.1089/neu.2015.3990

- Folstein, M. F., Folstein, S. E., & McHugh, P. R. (1975). “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research, 12(3), 189–198. https://doi.org/10.1016/0022-3956(75)90026-6

- French, L., McCrea, M., & Baggett, M. (2008). The military acute concussion evaluation (MACE). Journal of Special Operations Medicine, 8, 68–77.

- Frenette, L. C., Tinawi, S., Correa, J. A., Alturki, A. Y., LeBlanc, J., Feyz, M., & de Guise, E. (2019). Early detection of cognitive impairments with the Montreal cognitive assessment in patients with uncomplicated and complicated mild traumatic brain injury. Brain Injury, 33(2), 189–197. https://doi.org/10.1080/02699052.2018.1542506

- Gaber, T. A.-Z. K. (2008). Evaluation of the Addenbrooke’s Cognitive Examination’s validity in a brain injury rehabilitation setting. Brain Injury, 22(7-8), 589–593. https://doi.org/10.1080/02699050802132461

- Gupta, A., & Kumar, N. K. (2009). Indian adaptation of the cognistat: Psychometric properties of a cognitive screening tool for patients of traumatic brain injury. The Indian Journal of Neurotrauma, 6(2), 123–132. https://doi.org/10.1016/S0973-0508(09)80006-3

- Guskiewicz, K. M., Register-Mihalik, J., McCrory, P., McCrea, M., Johnston, K., Makdissi, M., Dvořák, J., Davis, G., & Meeuwisse, W. (2013). Evidence-based approach to revising the SCAT2: Introducing the SCAT3. British Journal of Sports Medicine, 47(5), 289–293. https://doi.org/10.1136/bjsports-2013-092225

- Hatta, T., Yoshizaki, K., Ito, Y., Mase, M., & Kabasawa, H. (2012). Reliability and validity of the digit cancellation test, a brief screen of attention. Psychologia: An International Journal of Psychological Sciences, 55(4), 246–256. https://doi.org/10.2117/psysoc.2012.246

- Hazan, E., Zhang, J., Brenkel, M., Shulman, K., & Feinstein, A. (2017). Getting clocked: Screening for TBI-related cognitive impairment with the clock drawing test. Brain Injury, 31(11), 1501–1506. https://doi.org/10.1080/02699052.2017.1376763

- Headway. (2017). ABI 2016-2017 Statistics based on UK admissions. Retrieved May 2, 2022, from https://www.headway.org.uk/about-brain-injury/further-information/statistics/

- Hsieh, S., Schubert, S., Hoon, C., Mioshi, E., & Hodges, J. R. (2013). Validation of the Addenbrooke’s Cognitive Examination III in frontotemporal dementia and Alzheimer’s disease. Dementia and Geriatric Cognitive Disorders, 36, 242–250. https://doi.org/10.1159/000351671

- James, S. L., Theadom, A., Ellenbogen, R. G., Bannick, M. S., Montjoy-Venning, W., Lucchesi, L. R., Abbasi, N., Abdulkader, R., Abraha, H. N., Adsuar, J. C., Afarideh, M., Agrawal, S., Ahmadi, A., Ahmed, M. B., Aichour, A. N., Aichour, I., Aichour, M. T. E., Akinyemi, R. O., Akseer, N., … Murray, C. J. L. (2019). Global, regional, and national burden of traumatic brain injury and spinal cord injury, 1990–2016: A systematic analysis for the global burden of disease study 2016. The Lancet Neurology, 18(1), 56–87. https://doi.org/10.1016/S1474-4422(18)30415-0

- Joseph, A.-L. C., Peterson, H. A., Garcia, K. M., McNally, S. M., Mburu, T. K., Lippa, S. M., Dsurney, J., & Chan, L. (2019). Rey’s tangled line test: A measure of processing speed in TBI. Rehabilitation Psychology, 64(4), 445–452. https://doi.org/10.1037/rep0000284

- Kosgallana, A., Cordato, D., Chan, D. K. Y., & Yong, J. (2019). Use of cognitive screening tools to detect cognitive impairment after an ischaemic stroke: A systematic review. SN Comprehensive Clinical Medicine, 1(4), 255–262. https://doi.org/10.1007/s42399-018-0035-2

- Luoto, T. M., Silverberg, N. D., Kataja, A., Brander, A., Tenovuo, O., Öhman, J., & Iverson, G. L. (2014). Sport concussion assessment tool 2 in a civilian trauma sample with mild traumatic brain injury. Journal of Neurotrauma, 31(8), 728–738. https://doi.org/10.1089/neu.2013.3174

- Maruff, P., Thomas, E., Cysique, L., Brew, B., Collie, A., Snyder, P., & Pietrzak, R. H. (2009). Validity of the CogState brief battery: Relationship to standardized tests and sensitivity to cognitive impairment in mild traumatic brain injury, schizophrenia, and AIDS dementia complex. Archives of Clinical Neuropsychology, 24(2), 165–178. https://doi.org/10.1093/arclin/acp010

- Mokkink, L. B., Boers, M., van der Vleuten, C., Patrick, D. L., Alonso, J., Bouter, L. M., & Terwee, C. B. (2021). COSMIN risk of bias tool to assess the quality of studies on reliability and measurement error of outcome measurement instrument. User Manual Version, 1. https://www.cosmin.nl/wp-content/uploads/user-manual-COSMIN-Risk-of-Bias-tool_v4_JAN_final.pdf

- Mokkink, L. B., Prinsen, C. A. C., Patrick, D. L., Alonso, J., Bouter, L. M., De Vet, H. C. W., & Terwee, C. B. (2018). COSMIN methodology for systematic reviews of patient-reported outcome measures (PROMs). User manual. https://www.cosmin.nl/wp-content/uploads/COSMIN-syst-review-for-PROMs-manual_version-1_feb-2018-1.pdf

- Nabors, N. A., Millis, S. R., & Rosenthal, M. (1997). Use of the neurobehavioral cognitive status examination (cognistat) in traumatic brain injury. Journal of Head Trauma Rehabilitation, 12(3), 79–84. https://doi.org/10.1097/00001199-199706000-00008

- Nasreddine, Z. S., Phillips, N. A., Bédirian, V., Charbonneau, S., Whitehead, V., Collin, I., Cummings, J. L., & Chertkow, H. (2005). The Montreal cognitive assessment, MoCA: A brief screening tool for mild cognitive impairment. Journal of the American Geriatrics Society, 53(4), 695–699. https://doi.org/10.1111/j.1532-5415.2005.53221.x

- NICE. (2014). Head injury: Assessment and early management. Retrieved May 5, 2022, from https://www.nice.org.uk/guidance/cg176/chapter/introduction

- Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Systematic Reviews, 10(1), 89. https://doi.org/10.1186/s13643-021-01626-4

- Peters, M. E., & Gardner, R. C. (2018). Traumatic brain injury in older adults: Do we need a different approach? Concussion, 3(3), CNC56. https://doi.org/10.2217/cnc-2018-0001

- Pinasco, C., Oviedo, M., Goldfeder, M., Bruno, D., Lischinsky, A., Torralva, T., & Roca, M. (2023). Sensitivity and specificity of the INECO frontal screening (IFS) in the detection of patients with traumatic brain injury presenting executive deficits. Applied Neuropsychology: Adult, 30(3), 289–296. https://doi.org/10.1080/23279095.2021.1937170

- Rojas, D. C., & Bennett, T. L. (1995). Single versus composite score discriminative validity with the Halstead–Reitan battery and the Stroop test in mild brain injury. Archives of Clinical Neuropsychology, 10, 101–110. https://doi.org/10.1093/arclin/10.2.101

- Scottish Acquired Brain Injury Network. (2017). Assessment of cognition – brain injury e-learning resource. Retrieved December 13, 2020, from https://www.acquiredbraininjury-education.scot.nhs.uk/impact-of-abi/cognitive-problems/assessment-of-cognition/

- Siddaway, A. P., Wood, A. M., & Hedges, L. V. (2019). How to do a systematic review: A best practice guide for conducting and reporting narrative reviews, meta-analyses, and meta-syntheses. Annual Review of Psychology, 70(1), 747–770. https://doi.org/10.1146/annurev-psych-010418-102803

- Silverberg, N. D., Luoto, T. M., Öhman, J., & Iverson, G. L. (2014). Assessment of mild traumatic brain injury with the King-Devick test in an emergency department sample. Brain Injury, 28(12), 1590–1593. https://doi.org/10.3109/02699052.2014.943287

- Srivastava, A., Rapoport, M. J., Leach, L., Phillips, A., Shammi, P., & Feinstein, A. (2006). The utility of the mini-mental status exam in older adults with traumatic brain injury. Brain Injury, 20(13-14), 1377–1382. https://doi.org/10.1080/02699050601111385

- Stolwyk, R. J., O’Neill, M. H., McKay, A. J. D., & Wong, D. K. (2014). Are cognitive screening tools sensitive and specific enough for use after stroke? Stroke, 45(10), 3129–3134. https://doi.org/10.1161/STROKEAHA.114.004232

- Stone, M. E. J., Safadjou, S., Farber, B., Velazco, N., Man, J., Reddy, S. H., Todor, R., & Teperman, S. (2015). Utility of the military acute concussion evaluation as a screening tool for mild traumatic brain injury in a civilian trauma population. Journal of Trauma and Acute Care Surgery, 79(1), 147–151. https://doi.org/10.1097/TA.0000000000000679

- Tay, M. R. J., Soh, Y. M., Plunkett, T. K., Ong, P. L., Huang, W., & Kong, K. H. (2019). The validity of the Montreal cognitive assessment for moderate to severe traumatic brain injury patients: A pilot study. American Journal of Physical Medicine & Rehabilitation, 98(11), 971–975. https://doi.org/10.1097/PHM.0000000000001227

- Teager, A., Methley, A., Dawson, B., & Wilson, H. (2020). The use of cognitive screens within major trauma centres in England: A survey of current practice. Trauma, 22(3), 201–207. https://doi.org/10.1177/1460408619871801

- Vissoci, J. R. N., de Oliveira, L. P., Gafaar, T., Haglund, M. M., Mvungi, M., Mmbaga, B. T., & Staton, C. A. (2019). Cross-cultural adaptation and psychometric properties of the MMSE and MoCA questionnaires in Tanzanian Swahili for a traumatic brain injury population. BMC Neurology, 19(1), 57. https://doi.org/10.1186/s12883-019-1283-9

- Waldron-Perrine, B., Gabel, N. M., Seagly, K., Kraal, A. Z., Pangilinan, P., Spencer, R. J., & Bieliauskas, L. (2019). Montreal Cognitive Assessment as a screening tool: Influence of performance and symptom validity. Neurology Clinical Practice, 9(2), 101–108. https://doi.org/10.1212/CPJ.0000000000000604

- Walsh, D. V., Capó-Aponte, J. E., Beltran, T., Cole, W. R., Ballard, A., & Dumayas, J. Y. (2016). Assessment of the King-Devick® (KD) test for screening acute mTBI/concussion in warfighters. Journal of the Neurological Sciences, 370, 305–309. https://doi.org/10.1016/j.jns.2016.09.014

- Whiting, P. F., Rutjes, A. W., Westwood, M. E., Mallett, S., Deeks, J. J., Reitsma, J. B., Leeflang, M. M., Sterne, J. A., Bossuyt, P. M., & QUADAS-2 Group. (2011). QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Annals of Internal Medicine, 155(8), 529–536. https://doi.org/10.7326/0003-4819-155-8-201110180-00009

- Wilson, L., Horton, L., Kunzmann, K., Sahakian, B. J., Newcombe, V. F., Stamatakis, E. A., von Steinbuechel, N., Cunitz, K., Covic, A., Maas, A., Van Praag, D., & Menon, D. (2021). Understanding the relationship between cognitive performance and function in daily life after traumatic brain injury. Journal of Neurology, Neurosurgery & Psychiatry, 92(4), 407–417. https://doi.org/10.1136/jnnp-2020-324492

- Wong, G. K. C., Ngai, K., Lam, S. W., Wong, A., Mok, V., & Poon, W. S. (2013). Validity of the Montreal Cognitive Assessment for traumatic brain injury patients with intracranial haemorrhage. Brain Injury, 27(4), 394–398. https://doi.org/10.3109/02699052.2012.750746

- Wu, Y., Wang, Y., Zhang, Y., Yuan, X., & Gao, X. (2023). A preliminary study of the saint louis university mental status examination (SLUMS) for the assessment of cognition in moderate to severe traumatic brain injury patients. Applied Neuropsychology: Adult, 30(4), 409–413. https://doi.org/10.1080/23279095.2021.1952414

- Zhang, H., Zhang, X.-N., Zhang, H.-L., Huang, L., Chi, Q.-Q., Zhang, X., & Yun, X.-P. (2016). Differences in cognitive profiles between traumatic brain injury and stroke: A comparison of the Montreal cognitive assessment and mini-mental state examination. Chinese Journal of Traumatology, 19(5), 271–274. https://doi.org/10.1016/j.cjtee.2015.03.007

- Zhang, S., Wu, Y.-H., Zhang, Y., Zhang, Y., & Cheng, Y. (2021). Preliminary study of the validity and reliability of the Chinese version of the Saint Louis University Mental Status Examination (SLUMS) in detecting cognitive impairment in patients with traumatic brain injury. Applied Neuropsychology: Adult, 28(6), 633–640. https://doi.org/10.1080/23279095.2019.1680986