ABSTRACT

In response to the replication crisis in psychology, the scientific community has advocated open science practices to promote transparency and reproducibility. Although existing reviews indicate inconsistent and generally low adoption of open science in psychology, an up-to-date, detailed analysis is lacking. Recognising the significant impact of false memory research in legal contexts, we conducted a preregistered systematic review to assess the integration of open science practices within this field, analysing 388 publications from 2015 to 2023 (including 15 replications and 3 meta-analyses). Our findings indicated substantial yet varied adoption of open science practices. Most publications (86.86%) adhered to at least one practice, with publication accessibility being the most consistently adopted practice at 73.97%. While data sharing showed the most substantial growth, reaching about 75% by 2023, preregistration and analysis script sharing lagged, with only about 17–25% adoption in 2023. This review highlights a promising trend towards enhanced research quality, transparency, and reproducibility in false memory research. However, the inconsistent implementation of open science practices may still hinder the verification, replication, and interpretation of research findings. Our study underscores the need for comprehensive adoption of open science to substantially improve research reliability and validity, fostering trust and credibility in psychology.

In response to the replication crisis in psychology, the scientific community has emphasized transparency and reproducibility, which are crucial for enhancing research validity, reliability and efficiency (Chartier et al., 2018; McNutt, 2014). Ultimately, the open science movement is fundamental to bolstering trust in psychological knowledge (Fecher & Friesike, 2013). Empirical legal psychology, specifically false memory research, holds significant practical importance for legal proceedings and policy decisions (Brainerd & Reyna, 2005). Despite this, the integration of open science into this field has not yet been evaluated. Our article addresses this gap by systematically reviewing open science practices in false memory research from 2015 to the present (2023).

False positive psychology

In 2011, a provocative study by Simmons et al. demonstrated how then-standard research methods could be (un)intentionally exploited to produce a favoured result, absurdly suggesting, for example, that listening to the Beatles’ song “When I’m Sixty-Four” could make someone younger. The study highlighted questionable research practices, including p-hacking (i.e., adjusting analysis parameters until a statistically significant p-value is obtained) and exploiting researcher degrees of freedom (i.e., flexibility in designing, conducting and analysing a study). Importantly, this was not an isolated case; related research (e.g., Wagenmakers et al., 2011) further illustrated the detrimental effects of such data-driven practices on the validity of study findings. This growing concern prompted a wave of large-scale replication projects estimating the reproducibility of psychological science (e.g., Camerer et al., 2018; Klein et al., 2014, 2018; Open Science Collaboration, 2015). These efforts revealed discouraging results, with replication success rates as low as 36% in some cases (i.e., statistically significant effects in the same direction as the original; Open Science Collaboration, 2015), marking the beginning of what is now known as the replication crisis.

The open science revolution

Reproducibility is a core principle of scientific advancement (McNutt, 2014; Open Science Collaboration, 2015). Therefore, following the replication crisis, the scientific community emphasized transparency and reproducibility in the literature to restore trust in the scientific process and the knowledge it produces. Alongside large-scale replication studies, these efforts included formulating best research practices and establishing organisations dedicated to promoting responsible research, such as the Open Science Framework (infrastructure for researchers to preregister studies and share materials, data and analysis scripts), the Psychological Science Accelerator (a globally distributed network of researchers who pool intellectual and material resources), and the Society for the Improvement of Psychological Science (a collaboration of scholars working to improve methods and practices in psychological science) (Nosek & Lakens, 2014).

Hence, the open science revolution began (Chartier et al., 2018). Collectively termed open science practices, these methods aim to make the entire research cycle open and accessible, thereby increasing transparency, accessibility of knowledge, and rigour (Fecher & Friesike, 2013). They include publishing open access (i.e., making the publication freely accessible to the public; Fecher & Friesike, 2013), sharing resources (i.e., making materials, data and analysis scripts freely available; Finkel et al., 2015), and preregistering projects (i.e., fixing the research plans, including hypotheses, design and analysis plans, before data collection begins; Finkel et al., 2015). The first two practices aim to increase transparency, whereas preregistration in particular targets questionable data-driven research practices such as p-hacking and the exploitation of researcher degrees of freedom. There are two types of preregistration: unreviewed and reviewed (i.e., registered reports; Lindsay et al., 2016). Reviewed preregistration involves an additional step in which a journal agrees to publish the project based on the strength of its research plans before any data are collected.

Evaluating the progress

Since these open science methods and initiatives were launched, evidence of their implementation and progression in the research literature has remained limited. The few recent investigations into open science practices in the psychology literature reveal a continued need for more transparency and reproducibility (Hardwicke et al., 2020, 2021, 2022; Louderback et al., 2023). These studies, which examined random samples from general psychology or investigated specific subfields (e.g., gambling research; Louderback et al., 2023), found that about half of the sampled publications were open access. Only a small proportion (2–8%) involved preregistration or openly shared materials, data, and analysis scripts. Additionally, an observational study by Chin et al. (2024) specifically assessed transparency in legal research, reporting high article accessibility (86%) but lower availability of data (19%), analysis scripts (6%), and preregistration (3%).

While these previous systematic reviews offer valuable insights into the state of research practices within psychology, they are constrained by several factors. First, they do not cover recent publications (i.e., post-2020), potentially overlooking recent developments and failing to reflect the field’s ongoing progress (i.e., newer initiatives and their potential effects). Moreover, their narrow timeframes limit their ability to observe long-term trends. Second, by relying on random samples from broad disciplines, these reviews may not adequately represent the specific practices and norms of subfields in psychology. Our study addresses these shortcomings by including more recent publications, covering a longer timeframe, and focusing on one ecologically relevant subfield. This approach thus provides a more up-to-date, deeper and more nuanced understanding of open science adoption within psychological research.

False memory research

Legal psychological research, particularly on false memory, holds critical practical relevance for legal contexts (e.g., see Brainerd & Reyna, 2005; Kassin & Gudjonsson, 2004; Lacy & Stark, 2013; Redding, 1998; Schacter & Loftus, 2013). It plays a crucial role in developing interrogation and identification protocols that adequately estimate or enhance the reliability of witness testimony, ultimately influencing court rulings and trial fairness (Brainerd & Reyna, 2005). Such research therefore informs policy-making and educational programmes for legal professionals and is essential in preventing miscarriages of justice (Bornstein & Meissner, 2023). However, the credibility of legal research has also been questioned during the replication crisis (Chin et al., 2024). Although there is growing recognition of the importance of open science practices for ensuring the reliability and validity of memory research (e.g., Chin et al., 2024; Otgaar & Howe, 2024; Wessel et al., 2020), data on the adoption and effectiveness of these practices remain sparse. Therefore, our study aims to provide a comprehensive overview of the current state and progression of open science practices specifically within false memory research.

Current study

In the current study, we systematically reviewed the application of open science practices within the false memory field. Given the field’s extensive research scope, we employed a targeted approach that enabled detailed paper-by-paper analysis and scoring, providing contextual and in-depth insights. Specifically, we focused on well-established false memory paradigms to offer a systematic and comprehensive overview, maintain feasibility, and apply standardised evaluation criteria across studies, ultimately allowing reliable comparisons and trend analyses within this field. To this end, we included the Deese-Roediger-McDermott (DRM) paradigm (Roediger & McDermott, 1995), the misinformation paradigm (Loftus et al., 1978; see Ayers & Reder, 1998, and Pickrell et al., 2016, for an overview) and memory implantation techniques (involving strategies such as guided imagery, false feedback, imagination inflation or suggestive questioning; see Brewin & Andrews, 2017; Muschalla & Schönborn, 2021; Scoboria et al., 2017, for more information).

We evaluated the adoption of open access reports (gold open access, and general accessibility of the report including gold and green open access), preregistration, and sharing of materials, data, and analysis scripts, both overall and over time. First, drawing on prior research (Hardwicke et al., 2020, 2021, 2022; Louderback et al., 2023), we hypothesised that only a small proportion of publications in our sample would report using open science practices. Second, we expected more recent publications to adopt open science practices more often than older publications. Third, we assessed whether adopting open science practices was associated with higher citation counts. Based on Louderback et al. (2023), we anticipated that publications using such practices (vs those that do not) would be cited more frequently. Lastly, we examined the distribution of study designs, i.e., primary studies, replication studies and meta-analyses. Given the importance of replications and meta-analyses in the hierarchy of scientific evidence for assessing replicability and generalizability (Borgerson, 2009; Corker, 2018; Eden, 2002), an increase in these designs (vs primary studies) would indicate a shift towards more re-evaluation and reproducibility in the field. Based on considerations such as feasibility, resource constraints, and the nature of the research questions, we expected primary studies to dominate. We also explored whether replications and meta-analyses increased over time.

Notably, we reviewed publications from January 2015 onwards. Considering the typical research cycle of 1–2 years from inception to publication, and the introduction and rising prominence of open science measures and initiatives around 2013 (e.g., the Center for Open Science), we determined that publications from 2015 onwards would most adequately reflect the adoption of these practices. This starting point aligns with similar studies (e.g., Hardwicke et al., 2020; Louderback et al., 2023), facilitating future comparisons across domains.

Method

Transparency and openness

All data, analysis code, and research materials (including our coding scheme) are available at https://osf.io/f58kj/. Data were analysed using RStudio (Version 1.2.5019) and JASP (Version 0.17.1). This project was preregistered after a literature search and screening (including a pilot coding procedure) to verify the planned procedures, but crucially, before the data extraction and analysis phase. The preregistration can be found at https://osf.io/9ymn5. We followed the PRISMA-P checklist and PRISMA reporting guidelines for the manuscript.

Exclusion criteria

We used five criteria to determine study eligibility. First, we excluded papers that did not adopt an experimental design or statistical analysis of participant data (i.e., excluding theoretical or qualitative work and literature reviews; tagged as “design”). Notably, we included meta-analyses, as they conduct statistical analyses on sample data and thus require many data-related decisions. Second, we excluded papers that were not published in a scientific journal (i.e., excluding book chapters, conference papers, reports, theses, and preprints posted on a website or academic platform; tagged as “publication”). Third, we excluded papers published before January 2015 (i.e., because open science practices only became a new norm in publications from 2015 onwards; tagged as “time”). Fourth, we excluded papers written in a language other than English (i.e., to avoid difficulties and inconsistencies in the extraction phase; tagged as “language”). Lastly, we excluded papers that did not use one of the preregistered paradigms to study false memories: the DRM paradigm (Roediger & McDermott, 1995), the misinformation paradigm (Loftus et al., 1978), or memory implantation methods (e.g., Scoboria et al., 2017; tagged as “topic”). For more details on the exclusion criteria and their rationale, please refer to Exclusion Criteria and Search Introduction at https://osf.io/f58kj/.

Literature search and screening

We conducted our literature search in two databases (PsycInfo and Web of Science). As we were solely interested in work published in scientific journals, we did not conduct other searches (e.g., through Google Scholar, the Open Science Framework (OSF), or ProQuest Dissertation Abstracts for unpublished theses), nor did we contact authors directly.

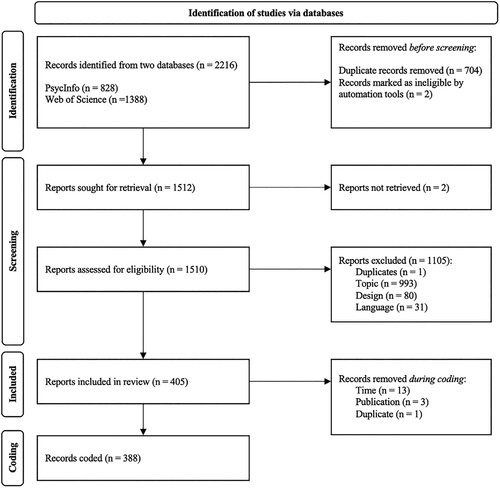

The literature search, limited to articles published since January 2015, was conducted in October 2023 and initially retrieved 2216 publications (n PsycInfo = 828; n Web of Science = 1388). The search terms can be found in the document Search Phase at https://osf.io/f58kj/. We used the DedupEndNote platform (Version 1.0.0) to de-duplicate the retrieved literature (n after de-duplication = 1512). We then screened reports using the systematic review web app Rayyan (Ouzzani et al., 2016); the reviewer (PL) was not blind to authors, institutions, journals, or results. An overview of the screening procedure and exclusions is displayed in Figure 1.

Figure 1. PRISMA flowchart.

Note. As soon as the reviewer found one violation of an inclusion criterion, screening for other violations stopped.

The screening procedure consisted of a pilot and a main screening phase. The pilot sample included 20 papers published before 2015 to avoid overlap with the main sample; two screeners (PL and EG) independently assessed the same 20 papers while blinded to each other’s decisions. The pilot aimed to ensure a consistent understanding of the in- and exclusion criteria; the screeners initially agreed on 85% of the publications. Conflicts were resolved through discussion under the supervision of a third investigator (SW). In the main screening, based on titles and abstracts (and the full text if the decision was uncertain), the same two researchers (PL and EG) each screened half of the papers. No blinding was applied in this phase, as each screener reviewed their own set of papers. If a screener remained unsure after carefully reviewing the full text, the supervising investigator (SW) was consulted. In total, 1510 publications were screened (two reports could not be retrieved), and 1105 were excluded. Thus, 405 reports entered the coding phase, during which an additional 17 reports were excluded post hoc, resulting in a final sample of 388 publications.
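The stage counts above, together with the retrieval figures from the literature search paragraph, chain together arithmetically. The Python sketch below is illustrative only: the function `prisma_flow` and its parameter names are hypothetical and not part of the authors’ OSF materials, and it assumes a strictly linear flow in which every record passes through each stage exactly once.

```python
def prisma_flow(psycinfo, wos, after_dedup, not_retrieved,
                excluded_screening, excluded_post_hoc):
    """Derive each stage of a simple linear PRISMA flow from raw counts."""
    retrieved = psycinfo + wos                        # records from both databases
    screened = after_dedup - not_retrieved            # records that could be screened
    entered_coding = screened - excluded_screening    # passed title/abstract/full-text screening
    final = entered_coding - excluded_post_hoc        # survived post-hoc exclusions
    return {
        "retrieved": retrieved,
        "duplicates_removed": retrieved - after_dedup,
        "screened": screened,
        "entered_coding": entered_coding,
        "final_sample": final,
    }


# Counts as reported in the text.
flow = prisma_flow(psycinfo=828, wos=1388, after_dedup=1512,
                   not_retrieved=2, excluded_screening=1105,
                   excluded_post_hoc=17)
for stage, n in flow.items():
    print(f"{stage:>20}: {n}")
```

Writing the flow this way makes any inconsistency between the flowchart and the text immediately visible: if one stage count were mistyped, the final sample would no longer come out at 388.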

Coding system and extraction

We used a standard coding system for each study’s general information (i.e., DOI, month and year of publication, Google Scholar citation count, journal name and impact), design (i.e., study design, replication type), and open science characteristics (i.e., gold open access status and general accessibility of the report, registration status and timing, and material, data and analysis script sharing and information). When assessing registration status and material, data and analysis script sharing, we used a dual coding strategy to evaluate both reported and actual adoption. We extracted what publications claimed and checked these claims by trying to access the described open science feature during data extraction. For example, if a study claimed that its data were available in an online repository but we could not access them at the time of coding, we coded this as reported but unverified data sharing. In this way, we aimed to highlight discrepancies between open science claims and their actual availability at the time of coding, which directly affect research reproducibility, and to encourage standards of transparency and accountability in reporting.
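A minimal sketch of how the dual coding could be represented, assuming one record per practice per publication. The class `PracticeCode`, the helper `tally`, and the rule that a practice can only be verified if it was claimed are hypothetical illustrations, not the authors’ coding form (which is available on OSF).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PracticeCode:
    """Dual code for one open science practice in one publication (hypothetical schema)."""
    claimed: bool   # the publication reports using the practice
    verified: bool  # the coder could access it at the time of extraction

    def __post_init__(self):
        # Assumption: only claimed practices are checked, so verified implies claimed.
        if self.verified and not self.claimed:
            raise ValueError("a practice cannot be verified without being claimed")

    @property
    def status(self) -> str:
        if not self.claimed:
            return "not reported"
        return "reported and verified" if self.verified else "reported but unverified"


def tally(codes):
    """Return (claimed, verified) counts across a list of PracticeCode records."""
    return sum(c.claimed for c in codes), sum(c.verified for c in codes)


# Example from the text: data said to be in a repository that could not be accessed.
print(PracticeCode(claimed=True, verified=False).status)
print(tally([PracticeCode(True, True), PracticeCode(True, False), PracticeCode(False, False)]))
```

Keeping the two flags separate, rather than a single three-level code, lets claimed and verified adoption rates be computed from the same records.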

Before the main data extraction, researcher PL extracted the data from five articles, which the supervising investigator (SW) verified to confirm a proper understanding of the procedure. PL then extracted the data from the remaining articles. For details on the coding system and form, please see the Coder Instruction and Coding Form at https://osf.io/f58kj/.

Statistical analyses

We used frequentist and Bayesian statistical approaches. For the frequentist analyses, we used the standard p < .05 criterion to determine statistical significance.¹ For the Bayesian analyses, we calculated Bayes factors (BF01), which indicate how likely the data are under the null hypothesis compared to the alternative hypothesis (Jarosz & Wiley, 2014). The inverse ratio (BF10 = 1/BF01) indicates how likely the data are under the alternative hypothesis compared to the null (Jarosz & Wiley, 2014). We classified BF10 > 5 as convincing evidence for the alternative over the null hypothesis and BF01 > 5 as convincing evidence for the null over the alternative hypothesis. If both BF10 < 5 and BF01 < 5, we did not draw definite conclusions about the directionality of the effect. One researcher analysed the data, and another confirmed the accuracy of the analyses.
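The decision rule above can be written as a small function. This is a sketch, assuming BF10 is supplied as a positive number and using the threshold of 5 from the text; the function name `classify_bayes_factor` is hypothetical, and the Bayes factors themselves were computed in JASP, not with this code.

```python
def classify_bayes_factor(bf10: float, threshold: float = 5.0) -> str:
    """Label the evidence expressed by BF10 using a symmetric threshold.

    BF01 is the reciprocal of BF10, so BF01 > 5 is equivalent to BF10 < 1/5.
    """
    if bf10 <= 0:
        raise ValueError("Bayes factors must be positive")
    bf01 = 1.0 / bf10
    if bf10 > threshold:
        return "evidence for H1"
    if bf01 > threshold:
        return "evidence for H0"
    return "inconclusive"


for bf10 in (6.24, 1.0, 0.1):
    print(bf10, classify_bayes_factor(bf10))
```

Because BF01 = 1/BF10, the rule needs only one input: values between 0.2 and 5 on the BF10 scale fall into the inconclusive band.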

First, we examined our sample’s overall adoption of open science practices. Each open science variable was coded as either employed or not, and we reported the adoption rate for each measure individually. Second, we assessed whether open science practices increased from 2015 to 2023. For this, we visualised the proportion of practices employed for each open science variable by publication year. Additionally, we conducted frequentist and Bayesian logistic regressions for each variable to test the null hypothesis (i.e., no change in open science adoption over time) against a one-sided alternative (i.e., increase of open science adoption over time). Third, we examined whether publications that implemented open science practices, evaluated for each variable individually, were cited more frequently. We used frequentist and Bayesian independent samples t-tests to test the null hypothesis (i.e., no difference in citation count depending on open science application) against a one-sided alternative (i.e., open science application is associated with higher citation counts). Lastly, we documented the distribution of study designs within the sample, including primary studies, replications, and meta-analyses.
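To illustrate the frequentist part of the trend analysis, the sketch below fits a logistic regression of a binary adoption code on publication year by Newton-Raphson and converts the slope’s Wald z statistic into a one-sided p-value (H1: slope > 0). It is a stand-in under stated assumptions, not the authors’ RStudio/JASP analysis: the data are hypothetical toy values (four publications per year), the function `fit_logistic` is invented for illustration, and the Bayesian counterpart is not reproduced.

```python
import math


def fit_logistic(x, y, iterations=25):
    """Maximum-likelihood fit of logit P(y = 1) = b0 + b1 * x via Newton-Raphson.

    Returns (b0, b1, se_b1); se_b1 comes from the inverse information matrix
    of the final iteration. Assumes the data are not perfectly separated.
    """
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p          # score for the intercept
            g1 += (yi - p) * xi   # score for the slope
            h00 += w              # information matrix entries
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step: beta <- beta + I^{-1} g, with the 2x2 inverse written out.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    se_b1 = math.sqrt(h00 / det)
    return b0, b1, se_b1


# Hypothetical toy data: 4 coded publications per year, 2015-2023.
adopters_per_year = {2015: 0, 2016: 1, 2017: 1, 2018: 1, 2019: 2,
                     2020: 2, 2021: 3, 2022: 3, 2023: 4}
years, adopted = [], []
for year, k in adopters_per_year.items():
    for i in range(4):
        years.append(year - 2015)          # centre on the first year
        adopted.append(1 if i < k else 0)  # 1 = practice adopted

b0, b1, se = fit_logistic(years, adopted)
z = b1 / se
p_one_sided = 0.5 * math.erfc(z / math.sqrt(2))  # P(Z > z) under H0
print(f"slope = {b1:.3f}, z = {z:.2f}, one-sided p = {p_one_sided:.4f}")
```

Centring the year on 2015 leaves the slope unchanged and only shifts the intercept; it simply keeps the linear predictor small during fitting.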

Deviations from preregistration

In the preregistration, we stated that we would use chi-square tests to examine open science progress over time. However, we realised that logistic regression analyses might be more appropriate given the ordinal nature of the publication year variable (2015–2023). As both approaches yielded comparable conclusions, only the logistic regression results are presented. Moreover, the preregistration specified assessing journal open access publishing. During extraction, we realised that, in addition to journal open access status (i.e., gold open access), general publication accessibility (i.e., openly accessible publications, including gold and green open access) would provide additional value. Therefore, we assessed publication accessibility alongside journal open access status.

Results

Sample

Twelve of the 388 reports described multiple experiments with varying adherence to open science practices. For these, our guiding principle was to code each report based on the strongest evidence or endorsement reported. For instance, seven reports included both preregistered and non-preregistered experiments, which we categorised as preregistered research. Three reports featured both primary and replication experiments and were thus classified as replications. One report combined a replication and a meta-analysis and was categorised as a meta-analysis, given the scarcity of meta-analyses in our dataset. Finally, one report showed inconsistent data sharing across experiments and was coded as openly sharing data.
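The “strongest evidence” rule can be sketched as follows, assuming each experiment within a report is coded separately before aggregation. Field names and the function `code_report` are hypothetical; the design ranking mirrors the cases described above (replication over primary, meta-analysis over replication).

```python
# Design ranking used when one report mixes designs: a higher rank wins.
DESIGN_RANK = {"primary": 0, "replication": 1, "meta-analysis": 2}


def code_report(experiments):
    """Collapse per-experiment codes into one report-level code.

    Each experiment is a dict with a 'design' string plus boolean practice
    flags (hypothetical names, e.g. 'preregistered', 'data_shared').
    A practice counts for the report if any experiment used it.
    """
    flags = [key for key in experiments[0] if key != "design"]
    report = {key: any(exp[key] for exp in experiments) for key in flags}
    report["design"] = max(
        (exp["design"] for exp in experiments), key=DESIGN_RANK.__getitem__
    )
    return report


# Hypothetical report: a non-preregistered primary experiment followed by a
# preregistered replication with open data.
report = code_report([
    {"design": "primary", "preregistered": False, "data_shared": False},
    {"design": "replication", "preregistered": True, "data_shared": True},
])
print(report)
```

Using `any` for practice flags and `max` over a fixed ranking for design makes the rule order-independent: the report-level code does not depend on which experiment is listed first.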

During the coding process, we also encountered challenges in assessing the status of study materials. Of the 388 reports, 53 included their stimulus sets within the manuscript or appendices, which made it unclear whether all essential materials were fully shared. We initially coded these instances as complying with material sharing. However, since these cases represent 14% of our sample, we performed sensitivity analyses (Appendix A) in which these 53 cases were coded as not sharing their materials.

Overall, the 388 publications appeared in 132 unique journals. The corresponding authors were affiliated with universities in 34 countries,² with the US (n = 130), UK (n = 35), and the Netherlands (n = 21) most represented.

Open science endorsement

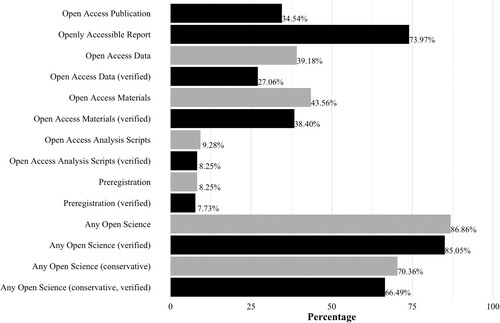

General open science endorsement

Figure 2 shows the endorsement of each open science practice within the false memory literature in legal psychology. Contrary to our hypothesis that only a small proportion of our sample would adopt open science practices, a substantial majority of publications, 86.86% (n = 337), adopted at least one open science practice, and 85.05% (n = 330) of the full sample did so verifiably. The most prevalent practice was publication accessibility (n = 287; 73.97%). Since making a publication openly accessible is the simplest and quickest open science measure to adopt post hoc, we also applied a more conservative criterion: adopting at least one open science practice other than general publication accessibility. Using this conservative measure, about two-thirds of the sample (n = 273; 70.36%) still reported adopting at least one open science practice, with 66.49% (n = 258) verifiable.

Figure 2. Open science endorsement graph.

Note. The proportions per graph are presented. The preregistration variable includes registered reports. Open access publication concerns only gold open access status whereas open accessibility includes general accessibility of the report (including gold and green open access status). The latter, thus, is more highly endorsed compared to the former. Claimed practices are portrayed in grey and verified practices are presented in black.

Overall, over a third of the reports in our sample were published with gold open access (n = 134; 34.54%),³ claimed to openly share their data (n = 152; 39.18%; 105 of these cases verifiable), and claimed to share their materials (n = 169; 43.56%; 149 of these cases verifiable). For a stricter assessment of material sharing, see Appendix A, where the endorsement rate is slightly lower. Finally, the least adopted open science practices were preregistration (n = 32; 8.25%; verified in 30 cases) and the sharing of analysis scripts (n = 36; 9.28%; verified in 32 cases).
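All percentages in this section, including the verified ones, are expressed relative to the full sample of 388 publications rather than relative to the subset that claimed a practice. The following Python sketch (illustrative; the helper `pct` and the labels are hypothetical) reproduces the reported percentages from the counts:

```python
N_TOTAL = 388  # final sample size reported in the text


def pct(n, total=N_TOTAL):
    """Percentage of the full sample, rounded to two decimals."""
    return round(100 * n / total, 2)


# Counts reported in this section (claimed, unless labelled verified).
counts = {
    "at least one practice": 337,
    "at least one practice, verified": 330,
    "publication accessibility": 287,
    "at least one practice excl. accessibility": 273,
    "at least one practice excl. accessibility, verified": 258,
    "gold open access": 134,
    "data sharing": 152,
    "material sharing": 169,
    "preregistration": 32,
    "analysis script sharing": 36,
}
for label, n in counts.items():
    print(f"{label:<52} n = {n:>3}  {pct(n):.2f}%")
```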

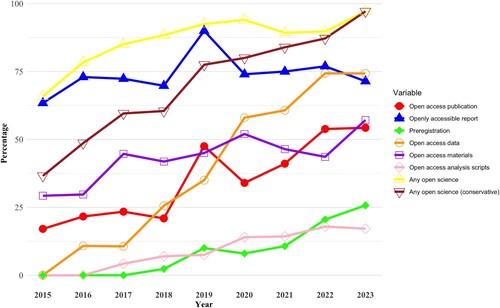

Open science endorsement over the years

We further analysed the endorsement of open science practices in the false memory field by publication year (visualised in Figure 3; statistical details in Tables 1 and 2). Across years, the rate of openly accessible publications was consistently high, with a notable peak in 2019. This high publication accessibility contributed heavily to the overall high rate of adopting at least one open science practice. Notably, when publication accessibility was excluded, the stricter criterion revealed marked growth in open science adoption, from about one-third in 2015 to nearly 100% in 2023.

Figure 3. Open science endorsement graph across the publication years (2015–2023).

Note. For clarity in our visual presentation, we included only the variables indicating claimed open science practices but not the verified implementation of these practices. The preregistration variable includes registered reports. Open access publication concerns only gold open access status whereas open accessibility includes general accessibility of the report (including gold and green open access status). The latter, thus, is more highly endorsed compared to the former.

Table 1. Frequentist and Bayesian logistic regression results predicting open science endorsement from year.

Table 2. Frequentist and Bayesian logistic regression results predicting verified open science endorsement from year.

A detailed analysis of individual practices showed a general increase in open science adoption over the eight-year period. Notable increases were seen in gold open access status and material sharing, both approaching around 50% in 2023. In contrast, analysis script sharing and preregistration increased more gradually, reaching only about 17% and 25% in 2023, respectively. The most substantial increase was observed in data sharing, which grew from 0% in 2015 to about 75% in 2023, although only 18 of the 26 reports from 2023 were verifiable.

Our statistical analyses (Tables 1 and 2) support these visually observed trends. The logistic regressions yielded significant and conclusive evidence for a time-related increase in gold open access status, preregistration, and the sharing of data and analysis scripts. Material sharing, both reported and verified, did not show significant or conclusive evidence of an upward trend. However, when applying stricter criteria to material sharing (see Table A1), which count only comprehensive sharing via online repositories or supplementary materials, we observed evidence for an upward trend. This distinction indicates a shift towards more thorough material sharing in recent years, beyond the basic in-text sharing of stimuli (e.g., list items in the DRM paradigm). Lastly, general open accessibility of publications (i.e., including gold and green open access) did not increase over time, suggesting that publication accessibility may already have been common practice in 2015 and therefore did not grow over the years.

Open science endorsement and citations

We investigated whether adopting open science practices, viewed as a contemporary indicator of study quality, was associated with higher citation counts. We initially preregistered two metrics for this analysis: total and annually averaged citations. However, total citation counts might be confounded by the wide range of publication years in our study (eight years vs three in Louderback et al., 2023), because older studies typically adopted fewer open science practices (Figure 3) and have had more time to accumulate citations. Therefore, we present the total citation analysis on OSF (see Overall Citation Count Analysis at https://osf.io/f58kj/) and report only the analysis of annually averaged citation counts in this manuscript (see Tables 3 and 4). Our findings did not support the hypothesis that open science practices are associated with more citations, except for general open publication accessibility: openly accessible publications garnered more annual citations on average (M = 2.69, SD = 2.80) than those that were not openly accessible (M = 1.89, SD = 2.18; t(386) = −2.60, p = .005, BF10 = 6.24; Cohen’s d = 0.30, 95% CI [0.07; 0.53]).⁴

Table 3. Independent samples t-tests comparing citation count per year across various open science research practices.

Table 4. Independent samples t-tests comparing citation count per year across various verified open science research practices.
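As a rough check on the accessibility result reported above, Cohen’s d and the t statistic can be recovered from the published group summaries. This sketch assumes a Student’s (pooled-variance) t-test, which matches the reported df = 386, and group sizes of 287 (openly accessible) and 101 (not openly accessible, i.e., 388 − 287). The function `summary_t_test` is hypothetical, and the one-sided p-value uses a normal approximation rather than the exact t distribution.

```python
import math


def summary_t_test(m1, sd1, n1, m2, sd2, n2):
    """Student's t (pooled variance) and Cohen's d from group summary statistics."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    d = (m1 - m2) / pooled_sd
    t = d / math.sqrt(1 / n1 + 1 / n2)
    # Normal approximation to the one-sided p-value (reasonable for df in the hundreds).
    p_one_sided = 0.5 * math.erfc(t / math.sqrt(2))
    return t, df, d, p_one_sided


# Accessible group first, so t is positive here.
t, df, d, p = summary_t_test(2.69, 2.80, 287, 1.89, 2.18, 101)
print(f"t({df}) = {t:.2f}, d = {d:.2f}, one-sided p ~ {p:.3f}")
```

The sign of t depends only on which group is subtracted from which; the reported negative value corresponds to subtracting the accessible group’s mean. Any small difference from the reported t is presumably due to rounding of the published means and SDs.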

Study design

The majority of our sample consisted of primary studies (n = 370, 95.36% of the total sample), followed by replication studies (n = 15, 3.87%) and meta-analyses (n = 3, 0.77%; one from 2016 and two from 2023). Although the small number of replication studies limits the ability to draw meaningful conclusions, our data suggest an increase in replication reports over time: only four replication studies were published in the first half of the examined period, whereas 11 of the 15 (73.33%) appeared in or after 2019.

Given the small sample of replications and meta-analyses, we also present descriptive information on open science adoption within these designs. Five of the 15 replication reports were published with gold open access status (33.33%), and 13 were openly accessible (86.67%). Three replications were preregistered (20%, verified in all cases), ten openly shared their data (66.67%, verified in seven cases), eight their materials (53.33%, verified in seven cases), and one shared their analysis scripts (6.67%, verified). Two of the three meta-analyses (66.67%) were published with gold open access status, while all three reports were openly accessible. One report was preregistered and shared its data and materials (33.33%, verified), but none shared their analysis scripts. Hence, for most practices, replication and meta-analysis metrics are comparable to or slightly higher than the overall sample results.

Discussion

The adoption and efficacy of open science practices are crucial for enhancing reliability in memory research, as acknowledged by recent studies (e.g., Chin et al., 2024; Otgaar & Howe, 2024; Wessel et al., 2020). Yet, a comprehensive evaluation of the implementation of these practices has been lacking. Therefore, our systematic review assessed the application of open science practices in false memory research from 2015 to 2023 – a period marked by the rapid development and dissemination of open science initiatives (Nosek et al., 2015). Unlike previous work that randomly sampled across various psychology domains (e.g., Hardwicke et al., 2020, 2022) or focused on narrower timeframes in specific areas (e.g., Chin et al., 2024; Louderback et al., 2023), our review examined all relevant literature on established paradigms in the false memory field over an extended period. This detailed paper-by-paper analysis provides a summary of the current state and evolution of open science practices in this specialised area up to the present day.

Notably, our sample consisted mainly of primary studies; replications and meta-analyses were scarce. This scarcity is revealing, because replications and meta-analyses are vital for validating and extending existing research and thus for driving scientific progress. Their underrepresentation is especially concerning amid the replication crisis, as it suggests that the field has been slow to evaluate and enhance its credibility through these approaches. Replication studies independently verify the reliability and validity of original findings, reduce the likelihood that results reflect chance, artifacts, or specific conditions, and test whether findings generalize across samples, settings, and methodologies (Schmidt, 2009). They also help identify and mitigate potential biases in the original research and promote rigorous, transparent research practices (Schmidt, 2009).

Contrary to our preregistered hypothesis, a substantial majority of publications (86.86%) employed at least one open science practice, a rate exceeding those reported in previous reviews (e.g., 55% in Louderback et al., 2023). Compared with earlier reviews covering publications up to 2020 at the latest (Chin et al., 2024), this marked increase signals a clear trend towards broader adoption of open science practices over time. Despite these gains, both the extent of adoption and its progression over time varied greatly among practices. General open accessibility of publications was both the most common and the most consistently adopted practice, remaining at about 75% across years (vs 40–86% in Chin et al., 2024; Hardwicke et al., 2020, 2022). This stability may reflect a long-established norm within the field (among both journals and individual researchers), further facilitated by the relative ease of sharing manuscripts openly via platforms such as OSF Preprints and ResearchGate, and by institutional guidelines (e.g., all publications becoming openly accessible after a specified period). The high rate of open accessibility is thus a positive indicator of inclusive scientific communication. Still, this steady rate may be biased by the possibility of making a publication accessible (long) after it appears, which complicates assessing initial adoption based solely on publication year.

Data sharing showed the most substantial growth among open science practices, rising from none in 2015 to about 75% by 2023. In contrast, preregistration and analysis script sharing showed the lowest adoption and the slowest increase, with only about 20–25% of publications incorporating them by 2023. Several factors may explain this variation. One is resource demands: straightforward practices such as making publications openly accessible or sharing data are more widely adopted, whereas more demanding practices such as preregistration lag behind. The disparity may also reflect the evolving policies and incentives of academic journals and funding agencies, which increasingly mandate data sharing (or require explicit justification for not sharing) as a condition for publication or funding (Barbui et al., 2016; Lindsay, 2017; Martone et al., 2018; Woods & Pinfield, 2022). Sharing analysis scripts and preregistering, by contrast, may lack similarly clear and standardised incentives, hindering their broader adoption.
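To make the notion of yearly adoption rates concrete, the sketch below shows one way they could be computed from coded records, including the share of publications using at least one practice. It is a minimal illustration under stated assumptions: the record format, the practice labels, and the toy entries are hypothetical and are not the review's data or analysis code (the review's materials are at https://osf.io/f58kj/).

```python
from collections import defaultdict

# Hypothetical practice labels and toy records: (publication year, practices adopted).
# These entries are invented for illustration and are NOT the review's data.
PRACTICES = ("open_access", "data", "materials", "scripts", "preregistration")

records = [
    (2015, {"open_access"}),
    (2015, set()),
    (2023, {"open_access", "data", "materials"}),
    (2023, {"data", "preregistration", "scripts"}),
    (2023, {"open_access", "data"}),
    (2023, {"open_access"}),
]


def yearly_adoption(records):
    """Return {year: {practice: % of that year's publications}}, plus an 'any' key."""
    totals = defaultdict(int)
    counts = defaultdict(lambda: defaultdict(int))
    for year, practices in records:
        totals[year] += 1
        for practice in practices:
            counts[year][practice] += 1
        if practices:  # publication used at least one practice
            counts[year]["any"] += 1
    return {
        year: {
            p: round(100 * counts[year][p] / totals[year], 2)
            for p in PRACTICES + ("any",)
        }
        for year in sorted(totals)
    }


rates = yearly_adoption(records)
for year, by_practice in rates.items():
    print(year, by_practice)
```

Keeping each publication as a set of practices makes the "at least one practice" indicator a simple non-empty check, and a practice that a publication did not use contributes zero to its year's count.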

Importantly, the disparity in adoption rates raises concerns about the effectiveness of partial implementation, particularly for reproducibility (Stodden et al., 2018). For example, analysis scripts document data processing in detail, which helps resolve the ambiguity often present in verbal descriptions of analysis procedures (Hardwicke et al., 2022). Without these scripts, shared data may not fully support the verification, replication, or correct interpretation of findings.

Similarly, although the value of preregistration is increasingly recognised (Nosek et al., 2018, 2019), persistently low preregistration rates indicate that it has not yet become routine. Without preregistration, it is difficult to distinguish confirmatory from exploratory analyses or to understand data processing and exclusion decisions, which again complicates the verification of research plans and increases the risk of questionable research practices. Combined with the low replication success rates observed in large-scale projects (e.g., Open Science Collaboration, 2015) and the small number of replication studies in our sample, this leaves the false memory literature at risk of accumulating non-replicated, unverified exploratory findings. Thus, while each open science practice is beneficial on its own, partial adoption may not sufficiently enhance research quality, reproducibility, and transparency. A comprehensive approach that encompasses all essential components of open science is crucial for validating scientific knowledge and ensuring robust research evaluation (Ioannidis, 2012; Vazire, 2017).

Despite concerns over partial adoption, the overall rise in open science practices is promising and indicates a growing commitment to research credibility. This commitment, however, may be only modestly rewarded with academic recognition. In line with Louderback et al. (2023), we found no association between the adoption of most open science practices and citation counts. The exception was publication accessibility: openly accessible reports received more citations per year on average than reports that were not accessible. The strength of the evidence for this effect, however, varied with the time window analysed. This may point to variability in the phenomenon itself, but it may also reflect reduced statistical power within smaller time windows. Importantly, the finding aligns with the open access citation advantage, whereby greater visibility and accessibility increase citation rates (Gargouri et al., 2010; Langham-Putrow et al., 2021; Lawrence, 2001), and underscores that restricted access can prevent the research community and other stakeholders from engaging with scientific work (Hardwicke et al., 2022).
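The logic of the time-window sensitivity analyses (detailed in Note 4) can be sketched as follows. This is a hypothetical illustration, not the analysis code deposited at https://osf.io/f58kj/. It assumes that annual citations are total citations divided by the years elapsed up to an assumed retrieval date, compares non-accessible with accessible reports using Student's independent-samples t-test and a pooled-SD Cohen's d, and uses invented records. The one-sided p-value relies on a normal approximation, which is close to the t distribution only at large degrees of freedom such as the roughly 350 reported in Note 4. Bayes factors are not computed.

```python
from datetime import date
from math import sqrt
from statistics import NormalDist, mean, variance

# Hypothetical records: (publication date, total citations, openly accessible?).
# Invented for illustration only; these are NOT the review's data.
records = [
    (date(2016, 3, 1), 40, True),
    (date(2017, 6, 1), 22, False),
    (date(2018, 9, 1), 35, True),
    (date(2019, 1, 1), 12, False),
    (date(2020, 5, 1), 18, True),
    (date(2021, 2, 1), 6, False),
    (date(2022, 8, 1), 9, True),
    (date(2023, 3, 1), 2, False),
    (date(2023, 10, 1), 3, True),
]
REFERENCE_DATE = date(2024, 1, 1)  # assumed date on which citations were retrieved


def annual_citations(pub_date, citations, reference=REFERENCE_DATE):
    """Citations per year since publication (an assumed operationalisation)."""
    years = (reference - pub_date).days / 365.25
    return citations / years


def compare_groups(records, cutoff):
    """Student's t, df, and Cohen's d (non-accessible minus accessible)
    for publications dated before `cutoff`."""
    kept = [r for r in records if r[0] < cutoff]
    closed = [annual_citations(d, c) for d, c, is_open in kept if not is_open]
    opened = [annual_citations(d, c) for d, c, is_open in kept if is_open]
    n1, n2 = len(closed), len(opened)
    df = n1 + n2 - 2
    pooled_sd = sqrt(((n1 - 1) * variance(closed) + (n2 - 1) * variance(opened)) / df)
    cohens_d = (mean(closed) - mean(opened)) / pooled_sd
    t = cohens_d / sqrt(1 / n1 + 1 / n2)  # equals mean difference / standard error
    return t, df, cohens_d


def approx_one_sided_p(t):
    """Normal approximation to P(T <= t); adequate only for large df."""
    return NormalDist().cdf(t)


# Full sample, then two exclusion windows (the cutoff dates are assumptions).
for label, cutoff in [
    ("all publications", REFERENCE_DATE),
    ("excluding last six months", date(2023, 7, 1)),
    ("excluding 2023", date(2023, 1, 1)),
]:
    t, df, d = compare_groups(records, cutoff)
    print(f"{label}: t({df}) = {t:.2f}, one-sided p ~ {approx_one_sided_p(t):.3f}, d = {d:.2f}")
```

With the toy sample's handful of records, the normal approximation is not appropriate, and an exact p-value would require the t distribution (e.g., via SciPy's `scipy.stats.t.cdf`). The sketch only shows how excluding recent publications shrinks the sample and, with it, the degrees of freedom.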

Nevertheless, the lack of an association between the other open science practices and citation frequency suggests that open science is not a primary driver of academic recognition. Factors such as a study’s field, scope, and design, or the prestige of the institution and journal, may exert a greater influence on citation frequency (Abramo et al., 2019; Hanel & Haase, 2017; see Note 5). If so, traditional metrics such as journal prestige may continue to overshadow open science-related indicators of research quality.

Several limitations of this review should be acknowledged. First, while our focus on the false memory field allowed for a detailed summary, it may limit the generalizability of our findings to other areas of psychology. Second, although we aimed to cover all levels of false memories, from intrusions to rich false memories, we may not have captured all false memory research in the literature. To avoid subjective screening criteria for what constitutes a false memory paradigm or phenomenon, we based the literature search and screening on guidelines representing the major false memory paradigms. Therefore, although some instances of other false memory phenomena may have been included (e.g., crashing memories, which we classified under the misinformation effect), we cannot guarantee comprehensive coverage of all false memory paradigms. Third, adopting open science practices, which are intended to enhance transparency and reproducibility, does not by itself ensure their effectiveness. For example, inadequate data documentation can compromise reproducibility (Hardwicke et al., 2018), and vague preregistrations may fail to curb researcher bias (Bakker et al., 2020; Claesen et al., 2021). While we verified the claimed practices, we did not assess the completeness or appropriateness of the shared resources, as this would have required specialised expertise or direct author queries beyond the scope of our review. Lastly, the low prevalence of certain open science practices may have limited our ability to detect associations with citation counts.

Our systematic review revealed a significant shift toward open science within false memory research. Yet adoption rates varied greatly across practices, highlighting the need for further progress. Change takes time, and fully embracing open science requires ongoing advocacy, support, and incentives. A multi-faceted strategy may therefore be crucial (Hardwicke et al., 2022). Such a strategy should combine education, encouragement, and incentivization at various levels, ensuring that researchers are informed about the benefits of open science and that peer reviewers, journals, funding agencies, and career advancement structures enforce stringent guidelines consistently. Open science practices should become the default, with exceptions only under specific, justifiable circumstances (Morey et al., 2016). Initiatives such as the Transparency and Openness Promotion (TOP) Guidelines (Nosek et al., 2015), badge systems for material and data sharing or preregistration (e.g., Kidwell et al., 2016), and the Peer Reviewers’ Openness Initiative (Morey et al., 2016) exemplify such efforts. Moreover, special issues (e.g., the April 2023 replication special issue in Current Research in Behavioral Sciences) and editorials (e.g., Asarnow et al., 2018; Otgaar & Howe, 2024) dedicated to replication and open science may raise awareness among scholars and promote engagement with these practices.

Note, however, that not all researchers acknowledge the benefits of open science, and some continue to resist adopting proposed norms (e.g., Washburn et al., 2018). Common concerns include the limited utility or feasibility of these norms (Washburn et al., 2018) and their potential to restrict creativity and scientific discovery (Verschuere et al., 2021). To enhance psychological science effectively through the education, encouragement, and incentivization described above, it is crucial to explicitly address the skepticism of researchers who question the necessity of open science. This involves engaging in open dialogue, providing evidence of the benefits of open science, and offering practical solutions to perceived obstacles. A more transparent and reliable scientific process can only be fostered if skeptics are heard, their concerns are addressed, and acceptance is built.

Finally, by continuously and carefully evaluating the effectiveness of these guidelines and initiatives through reviews such as the current one, we can monitor temporal developments and identify ongoing challenges. These insights are vital for researchers, journals, peer reviewers, and funding bodies as they work together to enhance research quality, transparency, and reproducibility.

Open Scholarship

This article has earned the Center for Open Science badges for Open Data, Open Materials, and Preregistered. The data and materials are openly accessible at https://osf.io/f58kj/, and the preregistration is openly accessible at https://osf.io/9ymn5.

Acknowledgments

We thank Elise de Groot for her help in the screening and piloting process.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes

1 To control for multiple testing within our research questions, we applied Bonferroni corrections to adjust the significance threshold.

2 When the corresponding author reported multiple affiliations, the first presented one was extracted.

3 Given that gold open access publishing can depend heavily on the resources available to researchers and is handled differently across institutions and countries, we explored gold open access status in the three most represented countries of affiliation. Notably, gold open access publishing was present in 21.54% of US-based publications (28 of 130 publications). In contrast, the rates in the UK and the Netherlands were higher, exceeding the average across the entire sample (UK: 42.86%, 15 of 35 publications; Netherlands: 61.90%, 13 of 21 publications).

4 To ensure the robustness of our findings, we conducted sensitivity analyses that excluded the most recent publications from the citation analysis, because these may not yet have had time to accumulate citations. The general pattern of results remained consistent, but the strength of the evidence for the relationship between publication accessibility and annual citation counts varied with the exclusion criterion applied. When publications from the last six months were excluded, the evidence for the observed effect remained conclusive (t(370) = −2.52, p = .006, BF10 = 5.27; Cohen’s d = 0.30, 95% CI [0.10; inf]), whereas when all 2023 publications were excluded, the effect did not reach the threshold for conclusive evidence (t(351) = −2.35, p = .010, BF10 = 3.61; Cohen’s d = 0.29, 95% CI [0.09; inf]). Although the weaker evidence may be explained by reduced statistical power, this result should be interpreted with caution.

5 To test this explanation, we also explored the influence of journal impact on citation rates, a factor consistently associated with academic recognition in previous research (Hanel & Haase, 2017). Our findings confirmed journal impact as a strong predictor of citation rates (see https://osf.io/f58kj/ for this exploratory analysis).
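Note 1 mentions Bonferroni corrections. For readers unfamiliar with the procedure, a Bonferroni correction divides the nominal significance level α by the number of tests m and treats a test as significant only if p < α/m. The Python sketch below illustrates this; the number of tests and the p-values are hypothetical, since the notes do not state how many tests each research question involved.

```python
def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold alpha / m under a Bonferroni correction."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


def bonferroni_significant(p_values, alpha=0.05):
    """Flag each p-value as significant if it falls below alpha / m."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p < threshold for p in p_values]


# Hypothetical p-values from five tests within one research question.
p_values = [0.004, 0.012, 0.030, 0.200, 0.009]
print(bonferroni_threshold(0.05, len(p_values)))
print(bonferroni_significant(p_values))
```

Equivalently, each p-value can be multiplied by m (capped at 1) and compared with the unadjusted α. Both formulations flag the same tests.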

References

- Abramo, G., D’Angelo, C. A., & Felici, G. (2019). Predicting publication long-term impact through a combination of early citations and journal impact factor. Journal of Informetrics, 13(1), 32–49. https://doi.org/10.1016/j.joi.2018.11.003

- Asarnow, J., Bloch, M. H., Brandeis, D., Alexandra Burt, S., Fearon, P., Fombonne, E., Green, J., Gregory, A., Gunnar, M., Halperin, J. M., Hollis, C., Jaffee, S., Klump, K., Landau, S., Lesch, K., Oldehinkel, A. J., Peterson, B., Ramchandani, P., Sonuga-Barke, E., … Zeanah, C. H. (2018). Special editorial: Open science and the Journal of Child Psychology & Psychiatry–next steps? Journal of Child Psychology and Psychiatry, 59(7), 826–827. https://doi.org/10.1111/jcpp.12929

- Ayers, M. S., & Reder, L. M. (1998). A theoretical review of the misinformation effect: Predictions from an activation-based memory model. Psychonomic Bulletin & Review, 5(1), 1–21. https://doi.org/10.3758/BF03209454

- Bakker, M., Veldkamp, C. L., van Assen, M. A., Crompvoets, E. A., Ong, H. H., Nosek, B. A., Soderberg, C. K., Mellor, D., & Wicherts, J. M. (2020). Ensuring the quality and specificity of preregistrations. PLoS Biology, 18(12), e3000937. https://doi.org/10.1371/journal.pbio.3000937

- Barbui, C., Gureje, O., Puschner, B., Patten, S., & Thornicroft, G. (2016). Implementing a data sharing culture. Epidemiology and Psychiatric Sciences, 25(4), 289–290. https://doi.org/10.1017/S2045796016000330

- Borgerson, K. (2009). Valuing evidence: Bias and the evidence hierarchy of evidence-based medicine. Perspectives in Biology and Medicine, 52(2), 218–233. https://doi.org/10.1353/pbm.0.0086

- Bornstein, B. H., & Meissner, C. A. (2023). Influencing policy and procedure with law-psychology research: Why, when, where, how, and what. In D. DeMatteo & K. C. Scherr (Eds.), The Oxford handbook of psychology and law (pp. 18–35). Oxford Academic.

- Brainerd, C. J., & Reyna, V. F. (2005). The science of false memory. Oxford University Press.

- Brewin, C. R., & Andrews, B. (2017). Creating memories for false autobiographical events in childhood: A systematic review. Applied Cognitive Psychology, 31(1), 2–23. https://doi.org/10.1002/acp.3220

- Camerer, C. F., Dreber, A., Holzmeister, F., Ho, T. H., Huber, J., Johannesson, M., Kirchler, M., Nave, G., Nosek, B. A., Pfeiffer, T., Altmejd, A., Buttrick, N., Chan, T., Chen, Y., Forsell, E., Gampa, A., Heikensten, E., Hummer, L., Imai, T., … Wu, H. (2018). Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015. Nature Human Behaviour, 2(9), 637–644. https://doi.org/10.1038/s41562-018-0399-z

- Chartier, C., Kline, M., McCarthy, R., Nuijten, M., Dunleavy, D. J., & Ledgerwood, A. (2018). The cooperative revolution is making psychological science better. APS Observer, 31(10). https://www.psychologicalscience.org/observer/the-cooperative-revolution-is-making-psychological-science-better

- Chin, J., Zeiler, K., Dilevski, N., Holcombe, A., Gatfield-Jeffries, R., Bishop, R., Vazire, S., & Schiavone, S. (2023). The transparency of quantitative empirical legal research published in highly ranked law journals (2018-2020): An observational study. F1000Research, 12, 144. https://doi.org/10.12688/f1000research.127563.1

- Claesen, A., Gomes, S., Tuerlinckx, F., & Vanpaemel, W. (2021). Comparing dream to reality: An assessment of adherence of the first generation of preregistered studies. Royal Society Open Science, 8(10), 211037. https://doi.org/10.1098/rsos.211037

- Corker, K. S. (2018). Strengths and weaknesses of meta-analyses. In L. Jussim, S. Stevens, & J. Krosnick (Eds.), Research integrity: Best practices for the social and behavioral sciences (pp. 150–174). Oxford University Press.

- Eden, D. (2002). From the editors: Replication, meta-analysis, scientific progress, and AMJ's publication policy. Academy of Management Journal, 45(5), 841–846. https://doi.org/10.5465/amj.2002.7718946

- Fecher, B., & Friesike, S. (2013). Open science: One term, five schools of thought. In S. Bartling & S. Friesike (Eds.), Opening science (pp. 17–47). Springer.

- Finkel, E. J., Eastwick, P. W., & Reis, H. T. (2015). Best research practices in psychology: Illustrating epistemological and pragmatic considerations with the case of relationship science. Journal of Personality and Social Psychology, 108(2), 275–297. https://doi.org/10.1037/pspi0000007

- Gargouri, Y., Hajjem, C., Larivière, V., Gingras, Y., Carr, L., Brody, T., & Harnad, S. (2010). Self-selected or mandated, open access increases citation impact for higher quality research. PLoS One, 5(10), e13636. https://doi.org/10.1371/journal.pone.0013636

- Hanel, P. H., & Haase, J. (2017). Predictors of citation rate in psychology: Inconclusive influence of effect and sample size. Frontiers in Psychology, 8, 1160. https://doi.org/10.3389/fpsyg.2017.01160

- Hardwicke, T. E., Bohn, M., MacDonald, K., Hembacher, E., Nuijten, M. B., Peloquin, B. N., deMayo, B. E., Long, B., Yoon, E. J., & Frank, M. C. (2021). Analytic reproducibility in articles receiving open data badges at the journal Psychological Science: An observational study. Royal Society Open Science, 8(1), 201494. https://doi.org/10.1098/rsos.201494

- Hardwicke, T. E., Mathur, M. B., MacDonald, K., Nilsonne, G., Banks, G. C., Kidwell, M. C., … Frank, M. C. (2018). Data availability, reusability, and analytic reproducibility: Evaluating the impact of a mandatory open data policy at the journal Cognition. Royal Society Open Science, 5(8), 180448. https://doi.org/10.1098/rsos.180448

- Hardwicke, T. E., Thibault, R. T., Kosie, J. E., Wallach, J. D., Kidwell, M. C., & Ioannidis, J. P. (2022). Estimating the prevalence of transparency and reproducibility-related research practices in psychology (2014–2017). Perspectives on Psychological Science, 17(1), 239–251. https://doi.org/10.1177/1745691620979806

- Hardwicke, T. E., Wallach, J. D., Kidwell, M. C., Bendixen, T., Crüwell, S., & Ioannidis, J. P. (2020). An empirical assessment of transparency and reproducibility-related research practices in the social sciences (2014–2017). Royal Society Open Science, 7(2), 190806. https://doi.org/10.1098/rsos.190806

- Ioannidis, J. P. (2012). Why science is not necessarily self-correcting. Perspectives on Psychological Science, 7(6), 645–654. https://doi.org/10.1177/1745691612464056

- Jarosz, A. F., & Wiley, J. (2014). What are the odds? A practical guide to computing and reporting Bayes factors. The Journal of Problem Solving, 7(1), 2–9. https://doi.org/10.7771/1932-6246.1167

- Kassin, S. M., & Gudjonsson, G. H. (2004). The psychology of confessions: A review of the literature and issues. Psychological Science in the Public Interest, 5(2), 33–67. https://doi.org/10.1111/j.1529-1006.2004.00016.x

- Kidwell, M. C., Lazarević, L. B., Baranski, E., Hardwicke, T. E., Piechowski, S., Falkenberg, L. S., Kennett, C., Slowik, A., Sonnleitner, C., Hess-Holden, C., Errington, T. M., Fiedler, S., & Nosek, B. A. (2016). Badges to acknowledge open practices: A simple, low-cost, effective method for increasing transparency. PLoS Biology, 14(5), e1002456. https://doi.org/10.1371/journal.pbio.1002456

- Klein, R. A., Ratliff, K. A., Vianello, M., Adams, Jr., R. B., Bahník, Š., Bernstein, M. J., Bocian, K., Brandt, M. J., Brooks, B., Brumbaugh, C. C., Cemalcilar, Z., Chandler, J., Cheong, W., Davis, W. E., Devos, T., Eisner, M., Frankowska, N., Furrow, D., Galliani, E. M., … Nosek, B. A. (2014). Investigating variation in replicability. Social Psychology, 45(3), 142–152. https://doi.org/10.1027/1864-9335/a000178

- Klein, R. A., Vianello, M., Hasselman, F., Adams, B. G., Adams, Jr., R. B., Alper, S., Aveyard, M., Axt, J. R., Babalola, M. T., Bahník, Š., Batra, R., Berkics, M., Bernstein, M. J., Berry, D. R., Bialobrzeska, O., Binan, E. D., Bocian, K., Brandt, M. J., Busching, R., … Sowden, W. (2018). Many Labs 2: Investigating variation in replicability across samples and settings. Advances in Methods and Practices in Psychological Science, 1(4), 443–490. https://doi.org/10.1177/2515245918810225

- Lacy, J. W., & Stark, C. E. (2013). The neuroscience of memory: Implications for the courtroom. Nature Reviews Neuroscience, 14(9), 649–658. https://doi.org/10.1038/nrn3563

- Langham-Putrow, A., Bakker, C., & Riegelman, A. (2021). Is the open access citation advantage real? A systematic review of the citation of open access and subscription-based articles. PLoS One, 16(6), e0253129. https://doi.org/10.1371/journal.pone.0253129

- Lawrence, S. (2001). Free online availability substantially increases a paper's impact. Nature, 411(6837), 521–521. https://doi.org/10.1038/35079151

- Lindsay, D. S. (2017). Sharing data and materials in psychological science. Psychological Science, 28(6), 699–702. https://doi.org/10.1177/0956797617704015

- Lindsay, D. S., Simons, D. J., & Lilienfeld, S. O. (2016). Research preregistration 101. APS Observer, 29(10), 14–16.

- Loftus, E. F., Miller, D. G., & Burns, H. J. (1978). Semantic integration of verbal information into a visual memory. Journal of Experimental Psychology: Human Learning and Memory, 4(1), 19–31. https://doi.org/10.1037/0278-7393.4.1.19

- Louderback, E. R., Gainsbury, S. M., Heirene, R. M., Amichia, K., Grossman, A., Bernhard, B. J., & LaPlante, D. A. (2023). Open science practices in gambling research publications (2016–2019): A scoping review. Journal of Gambling Studies, 39(2), 987–1011. https://doi.org/10.1007/s10899-022-10120-y

- Martone, M. E., Garcia-Castro, A., & VandenBos, G. R. (2018). Data sharing in psychology. American Psychologist, 73(2), 111–125. https://doi.org/10.1037/amp0000242

- McNutt, M. (2014). Reproducibility. Science, 343(6168), 229. https://doi.org/10.1126/science.1250475

- Morey, R. D., Chambers, C. D., Etchells, P. J., Harris, C. R., Hoekstra, R., Lakens, D., Lewandowsky, S., Morey, C. C., Newman, D. P., Schönbrodt, F. D., Vanpaemel, W., Wagenmakers, E.-J., & Zwaan, R. A. (2016). The Peer Reviewers’ Openness Initiative: Incentivizing open research practices through peer review. Royal Society Open Science, 3(1), 150547. https://doi.org/10.1098/rsos.150547

- Muschalla, B., & Schönborn, F. (2021). Induction of false beliefs and false memories in laboratory studies—A systematic review. Clinical Psychology & Psychotherapy, 28(5), 1194–1209. https://doi.org/10.1002/cpp.2567

- Nosek, B. A., Alter, G., Banks, G. C., Borsboom, D., Bowman, S. D., Breckler, S. J., Buck, S., Chambers, C. D., Chin, G., Christensen, G., Contestabile, M., Dafoe, A., Eich, E., Freese, J., Glennerster, R., Goroff, D., Green, D. P., Hesse, B., Humphreys, M., … Yarkoni, T. (2015). Promoting an open research culture. Science, 348(6242), 1422–1425. https://doi.org/10.1126/science.aab2374

- Nosek, B. A., Beck, E. D., Campbell, L., Flake, J. K., Hardwicke, T. E., Mellor, D. T., van ’t Veer, A. E., & Vazire, S. (2019). Preregistration is hard, and worthwhile. Trends in Cognitive Sciences, 23(10), 815–818. https://doi.org/10.1016/j.tics.2019.07.009

- Nosek, B. A., Ebersole, C. R., DeHaven, A. C., & Mellor, D. T. (2018). The preregistration revolution. Proceedings of the National Academy of Sciences, 115(11), 2600–2606. https://doi.org/10.1073/pnas.1708274114

- Nosek, B. A., & Lakens, D. (2014). Registered reports. Social Psychology, 45(3), 137–141. https://doi.org/10.1027/1864-9335/a000192

- Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716. https://doi.org/10.1126/science.aac4716

- Otgaar, H., & Howe, M. (2024). Testing memory. Memory (Hove, England), 32(3), 293–295. https://doi.org/10.1080/09658211.2024.2325293

- Ouzzani, M., Hammady, H., Fedorowicz, Z., & Elmagarmid, A. (2016). Rayyan—A web and mobile app for systematic reviews. Systematic Reviews, 5(1), 1–10. https://doi.org/10.1186/s13643-016-0384-4

- Pickrell, J. E., McDonald, D. L., Bernstein, D. M., & Loftus, E. F. (2016). Misinformation effect. In R. F. Pohl (Ed.), Cognitive illusions (pp. 406–423). Psychology Press.

- Redding, R. E. (1998). How common-sense psychology can inform law and psycholegal research. U. Chi. L. Sch. Roundtable, 5(1), 6.

- Roediger, H. L., & McDermott, K. B. (1995). Creating false memories: Remembering words not presented in lists. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21(4), 803–814. https://doi.org/10.1037/0278-7393.21.4.803

- Schacter, D. L., & Loftus, E. F. (2013). Memory and law: What can cognitive neuroscience contribute? Nature Neuroscience, 16(2), 119–123. https://doi.org/10.1038/nn.3294

- Schmidt, S. (2009). Shall we really do it again? The powerful concept of replication is neglected in the social sciences. Review of General Psychology, 13(2), 90–100. https://doi.org/10.1037/a0015108

- Scoboria, A., Wade, K. A., Lindsay, D. S., Azad, T., Strange, D., Ost, J., & Hyman, I. E. (2017). A mega-analysis of memory reports from eight peer-reviewed false memory implantation studies. Memory (Hove, England), 25(2), 146–163. https://doi.org/10.1080/09658211.2016.1260747

- Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22(11), 1359–1366. https://doi.org/10.1177/0956797611417632

- Stodden, V., Seiler, J., & Ma, Z. (2018). An empirical analysis of journal policy effectiveness for computational reproducibility. Proceedings of the National Academy of Sciences, 115(11), 2584–2589. https://doi.org/10.1073/pnas.1708290115

- Vazire, S. (2017). Quality uncertainty erodes trust in science. Collabra: Psychology, 3(1), 1. https://doi.org/10.1525/collabra.74

- Verschuere, B., Yasrebi-de Kom, F. M., van Zelm, I., & Lilienfeld, S. O. (2021). A plea for preregistration in personality disorders research: The case of psychopathy. Journal of Personality Disorders, 35(2), 161–176. https://doi.org/10.1521/pedi_2019_33_426

- Wagenmakers, E.-J., Wetzels, R., Borsboom, D., & van der Maas, H. L. J. (2011). Why psychologists must change the way they analyze their data: The case of psi: Comment on Bem (2011). Journal of Personality and Social Psychology, 100(3), 426–432. https://doi.org/10.1037/a0022790

- Washburn, A. N., Hanson, B. E., Motyl, M., Skitka, L. J., Yantis, C., Wong, K. M., Sun, J., Prims, J. P., Mueller, A. B., Melton, Z. J., & Carsel, T. S. (2018). Why do some psychology researchers resist adopting proposed reforms to research practices? A description of researchers’ rationales. Advances in Methods and Practices in Psychological Science, 1(2), 166–173. https://doi.org/10.1177/2515245918757427

- Wessel, I., Albers, C. J., Zandstra, A. R. E., & Heininga, V. E. (2020). A multiverse analysis of early attempts to replicate memory suppression with the Think/No-think Task. Memory (Hove, England), 28(7), 870–887. https://doi.org/10.1080/09658211.2020.1797095

- Woods, H. B., & Pinfield, S. (2022). Incentivising research data sharing: A scoping review. Wellcome Open Research, 6, 355. https://doi.org/10.12688/wellcomeopenres.17286.2