Abstract

Deep Learning (DL) has a wide variety of applications in various thematic domains, including spatial information. Although with limitations, it is also starting to be considered in operations related to Digital Elevation Models (DEMs). This study aims to review the methods of DL applied in the field of altimetric spatial information in general, and DEMs in particular. Void Filling (VF), Super-Resolution (SR), landform classification and hydrography extraction are just some of the operations where traditional methods are being replaced by DL methods. Our review concludes that although these methods have great potential, there are aspects that need to be improved. More appropriate terrain information or algorithm parameterisation are some of the challenges that this methodology still needs to face.

1. Introduction

From a geomatic point of view, DEMs represent altimetric spatial information using raster and vector models. Raster DEMs are based on grids, composed of a regular array of cells, including heights as attribute values (this model is commonly referred to as 2.5D data because it only supports a single z-value for each planimetric location in a specific column and row) (Ureña-Cámara and Mozas-Calvache Citation2023). On the other hand, vector DEMs are based on 3D surfaces composed of meshes derived from a set of 3D points. In any case, both raster and vector DEMs share the same characteristic: the discretisation in the representation of spatial elevation (Lin et al. Citation2022; Zhang et al. Citation2022). This discretisation necessarily implies a loss of information that affects, as will be addressed below, the quality of the representation (Mesa-Mingorance and Ariza-López Citation2020).

A wide range of sciences and disciplines use DEMs as essential tools for the development of applications such as modelling for the prevention of natural disasters (flood risk and fire risk studies), soil erosion mapping, weather forecasting, climate change, etc. Therefore, DEMs must possess quality levels that satisfy the needs of the different applications in which they are involved. These quality levels are directly related to the completeness of the model and its resolution. Two of the main operations traditionally performed on DEMs to ensure and improve these parameters are VF and SR. The first, VF, aims to ensure the completeness of the DEM, as problems may arise during the acquisition phase that result in void areas where topographical information is lacking (Hall et al. Citation2005). The second, SR, aims to improve the resolution of the DEMs without increasing the cost associated with the acquisition processes (Han et al. Citation2023). There are also other important operations in which DEMs play a key role in the development of applications, without directly affecting or altering the DEM itself. These operations are related to landform classification and information extraction processes. Some of the disciplines that make extensive use of these types of operations are Geology, Hydrology, and Cartography, and the main applications they involve include terrain feature classification (Li et al. Citation2020), extraction of basins and hydrographic networks (Shin and Paik Citation2017) and relief shading (Li et al. Citation2022a). Finally, another group of operations in which DEMs are involved are those aimed at segmenting and extracting features from the DEM itself. These operations, without altering the model, fragment it and are involved in applications such as the semantic segmentation of point clouds and extraction of elements from images.

Since its beginning, Artificial Intelligence (AI) has played a valuable role in some of the sciences and disciplines mentioned above. With an increasing amount of ‘big data’ from earth observation and rapid advances in AI, increasing opportunities for novel methods have emerged to aid in earth monitoring. Most current approaches to developing AI are based primarily on Machine Learning (ML). The most widely used and successful form of ML to date is DL (Goodfellow et al. Citation2020). DL has a wide range of applications in different thematic areas including Natural Language Processing (NLP), pattern recognition and image processing. Although still relatively recent due to their specificity, DL techniques are also starting to be considered in applications related to DEMs, emerging as alternative methods to those traditionally used in operations that are performed on DEMs for various purposes. Examples of this include interpolation methods in VF and SR operations, expert knowledge-based methods in terrain feature classification, extraction of hydrographic basins and relief shading, and manual semantic segmentation methods in 3D point cloud operations. In any case DEMs have their own characteristics inherent to the spatial nature of the information they contain, which introduces even greater complexity to the DL processes involved in all of the aforementioned operations. Therefore, DL operations on DEMs usually require a larger amount of resources for processing due to this richness of content.

In general it could be said that, although the number of documents reviewed for the development of this work is high, the application of DL methods and techniques to DEM-related applications is still in its early stages. Nonetheless, conducting a thorough analysis of the existing literature is essential. In recent years, various analyses have been conducted on the progress in the field of geomorphometry and earth sciences (Minár et al. Citation2020; Sofia Citation2020; Xiong et al. Citation2022; Maxwell and Shobe Citation2022). However, none of these studies specifically focus on the application of DL. For this reason, and considering the impact that DL technology is having across all scientific domains, in this paper, we conduct a detailed analysis of the existing literature related to the application of DL to DEMs.

The rest of the paper is organised as follows: Section 2 presents the criteria followed for the selection of the reviewed documents and their organisation for analysis. In Section 3 the main operations involving DEM and DL methods are addressed. Section 4 conducts a discussion highlighting the insights and a critical analysis. The paper finishes with a series of concluding remarks drawn in Section 5.

2. Method

Before describing the methodology followed, it is important to clarify some aspects that help to understand the structure and content of this document, as well as the criteria followed for the selection of the documents reviewed. First, as previously mentioned, the use of DL methods in applications related to both raster and vector DEMs is relatively recent. However, their use in procedures involving other types of spatial data, such as remote sensing images, is widespread and has a longer history. In this sense, it must be noted that any type of raster model can be managed using images. In the specific case of raster DEMs, they can be managed using images that represent height as digital levels of pixels determined by a given bit-depth capacity (e.g. ArcGIS Pro uses 32-bit depth in grids) (Ureña-Cámara and Mozas-Calvache Citation2023). This is why, although DEMs do not directly take part in some of the processes and operations analysed here, it is important to make reference to the DL techniques initially applied to remote sensing images, since there are significant analogies between them and raster DEMs. A second issue that needs to be clarified is that a review of the different DL methods used in the operations addressed will not be conducted, as it goes beyond the scope of this study. After addressing these two aspects, we must note that the methodology followed for developing our review is based on the guidelines proposed by Kitchenham and Charters (Citation2007). This technical report provides comprehensive guidelines for systematic literature reviews appropriate for software engineering researchers. Derived from other existing guidelines used by researchers from different areas of study, they have been adapted to our topic providing information about the effects of certain phenomena across a wide range of settings and empirical methods. After identifying the need for a review and developing a review protocol, the key stage of the procedure is the study selection criteria. According to these authors, ‘study selection criteria are intended to identify those primary studies that provide direct evidence about the research question’.

Based on this, the following criteria have been taken into account for selecting the documents analysed in this study:

All types of available materials will be considered. However, as previously mentioned, the relative novelty of DL techniques in their application to DEMs influences the types of materials available and their temporal distribution.

A set of keywords has been established for conducting the search and selection, such as ‘Deep Learning’, ‘Digital Elevation Models’, ‘Void Filling’, ‘Super-resolution’, ‘Landform Classification’, ‘Shaded Relief’, ‘Hydrography Extraction’, ‘Semantic Segmentation’, ‘Element Extraction’.

These keywords were used to perform searches on the most relevant search engines: Scopus, ScienceDirect, and Web of Science.

All papers that were not written in English were discarded.

In order to ensure scientific rigour in the content, efforts have been made to include a majority of papers that are indexed in the JCR (Journal Citation Reports) and communications of internationally renowned conferences.

Taking into account these premises, the selected documents have been organised as follows:

Studies on DL methods for direct operations on DEMs. These are operations that modify the model, aiming to improve its quality in terms of completion and resolution:

○ Studies related to VF in DEMs.

○ Studies related to SR of DEMs.

Studies on DL methods for the classification and extraction of elements from the model:

○ Studies related to landform classification, including studies related to crater detection and classification.

○ Studies related to hydrography extraction.

○ Studies related to relief shading.

Studies on DL methods for generating new products:

○ Studies related to the semantic segmentation of 3D point clouds.

○ Studies related to image-based element extraction.

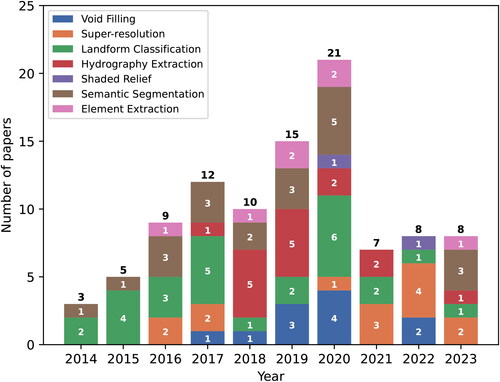

With all of this, the total number of papers selected is 98, covering the timeframe from 2014 to 2023. Figure 1 depicts the distribution of papers published annually and also shows the number of papers published within each category. Table 1 displays the references of the selected papers, classified into the following categories: VF, SR, hydrography extraction, shaded relief, semantic segmentation, and element extraction.

Table 1. Selected papers organised by categories.

Based on the selected articles focusing on the application of DL to DEMs, different types of analyses were conducted to explore:

(i) co-occurring keywords in these papers,

(ii) the countries of the institutions to which the authors belong,

(iii) the journals with the most publications on this topic, and

(iv) the interrelationships between the journals that publish papers related to this subject.

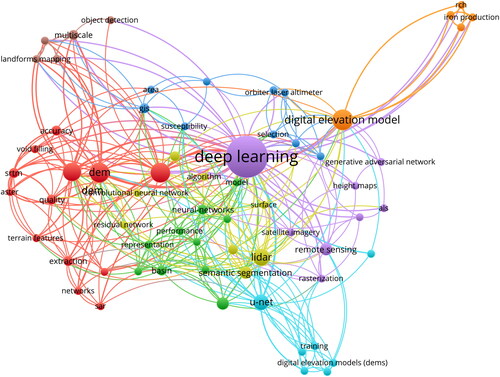

Regarding (i), Figure 2 depicts a network with the keywords that appear in the selected papers. The colour coding groups related keywords together, making it easy to identify the most common trends in DL applied to DEMs. For example, ‘semantic segmentation’ is a major area of research in DL applied to DEMs and is linked to other keywords like ‘performance’.

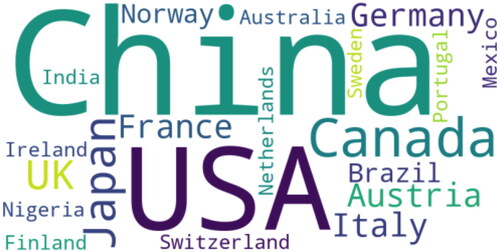

Concerning (ii), Figure 3 depicts a word cloud representing the countries with publications on DL applied to DEMs. The size of each country’s name is proportional to the number of papers published. Notably, China, the United States, Canada, Japan, and the United Kingdom stand out as the countries with the most publications on this topic.

Figure 3. Word cloud with the countries of the institutions participating in the authorship of the selected papers.

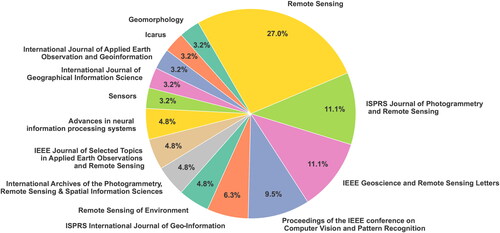

As for (iii), Figure 4 presents a pie chart illustrating the distribution of articles on DL applied to DEMs among the top 15 journals with the highest number of publications on this subject. The journal ‘Remote Sensing’ stands out, accounting for more than a quarter of the publications, followed by ‘ISPRS Journal of Photogrammetry and Remote Sensing’ and ‘IEEE Geoscience and Remote Sensing Letters’. These three journals collectively represent almost half of all papers published on this topic.

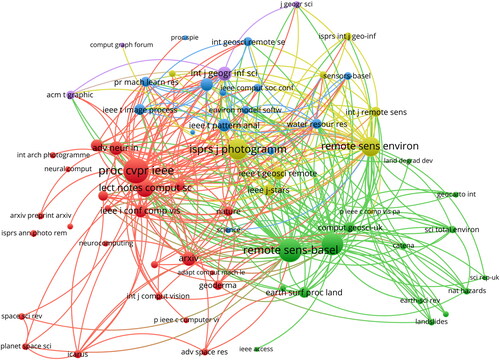

Finally, regarding (iv), Figure 5 represents the network of scientific journals in which articles related to DEMs and DL have been published. In this network, each work is represented by its source and the links are the citations between papers: the thicker a link is, the more citations there are between the papers it connects. In this case, the majority of the citations come from the journals ‘Remote Sensing’, ‘ISPRS Journal of Photogrammetry and Remote Sensing’, ‘Remote Sensing of Environment’, and the ‘Proceedings of the IEEE conference on Computer Vision and Pattern Recognition’.

3. Deep learning operations over DEM

Below we analyse the diverse DL methods that take part in the different operations typically performed on altimetric spatial information (mainly DEMs). As previously stated, these methods are presented as a solid alternative to traditional ones because they address some of the limitations of the latter.

3.1. DEM reconstruction

3.1.1. Void filling in DEM

The main factor responsible for the presence of data voids in DEMs is the influence exerted by rough terrain surfaces on signals emitted by remote sensors (Hall et al. Citation2005; Dong et al. Citation2020). For instance, in mountainous areas the large differences in elevation and slope interfere with the signals, resulting in a high number of voids in crest and valley areas (Hall et al. Citation2005; Boulton and Stokes Citation2018; Dong et al. Citation2020). These data voids lead to a significant loss of topographic information and, consequently, reduce the quality of the DEMs derived in terms of completeness.

There are various methods for addressing issues related to data voids in DEMs. These methods include manual model reconstruction (Dong et al. Citation2020; Li et al. Citation2020), integration, fusion or conflation with auxiliary DEMs from other data sources (Ling et al. Citation2007; Karkee et al. Citation2008; Milan et al. Citation2011), and interpolation methods (Reuter et al. Citation2007; Gavriil et al. Citation2019; Dong et al. Citation2018, Citation2020). However, each of them has drawbacks: manual reconstruction is costly in time and labour, integrating DEMs from different sources yields inconsistent quality in overlapping areas, and interpolation struggles in areas with complex topography. These drawbacks have led DL techniques, with their powerful learning capabilities, to dominate in solving data void-related problems in DEMs (LeCun et al. Citation2015; Dong et al. Citation2020; Li and Hsu Citation2020). DL methods are capable of learning not only local features but also global information and contextual characteristics (Li et al. Citation2020; Li and Hsu Citation2020; Yuan et al. Citation2020), which aid in the extraction of latent topographic information and subsequent reconstruction of DEMs from consistent topographic features (Dong et al. Citation2020; Zhu et al. Citation2020). An example of such methods is the development and application of Conditional Generative Adversarial Networks (CGANs) (Mirza and Osindero Citation2014; Iizuka et al. Citation2017; Goodfellow et al. Citation2020), which in these studies are built on Convolutional Neural Network (CNN) architectures and are employed in the vast majority of the studies reviewed (Gavriil et al. Citation2019; Qiu et al. Citation2019; Dong et al. Citation2018, Citation2020; Li and Hsu Citation2020; Zhang et al. Citation2020; Zhou et al. Citation2022; Li et al. Citation2022a).
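For contrast with the DL approaches, the interpolation family of void-filling methods mentioned above can be illustrated with a minimal sketch. It uses inverse-distance weighting (IDW) as one representative interpolator; the function name `fill_voids_idw`, the use of `None` to mark void cells, and the `power` and `radius` parameters are assumptions made for illustration and are not taken from any of the reviewed papers.

```python
import math


def fill_voids_idw(dem, power=2.0, radius=2):
    """Fill void cells (None) with an inverse-distance-weighted mean of the
    valid cells found inside a (2 * radius + 1)-cell square window."""
    rows, cols = len(dem), len(dem[0])
    filled = [row[:] for row in dem]  # copy, so the input grid is untouched
    for r in range(rows):
        for c in range(cols):
            if dem[r][c] is not None:
                continue  # only void cells are estimated
            num = den = 0.0
            for rr in range(max(0, r - radius), min(rows, r + radius + 1)):
                for cc in range(max(0, c - radius), min(cols, c + radius + 1)):
                    z = dem[rr][cc]
                    if z is None:
                        continue  # neighbouring voids carry no information
                    weight = 1.0 / math.hypot(rr - r, cc - c) ** power
                    num += weight * z
                    den += weight
            if den > 0:  # leave the cell void if no valid neighbour exists
                filled[r][c] = num / den
    return filled


# A 3 x 3 tilted plane z = row + col with a void at its centre.
plane = [[0, 1, 2], [1, None, 3], [2, 3, 4]]
restored = fill_voids_idw(plane, radius=1)
```

On a smooth plane the estimate is close to the true height, but the window only sees nearby cells, which is consistent with the difficulty of interpolating large voids in complex terrain noted above.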

As mentioned above, data voids in a given DEM often occur in areas with complex terrain features that interfere with signals from remote sensors (Dong et al. Citation2019; Farr et al. Citation2007). Therefore, most of the papers reviewed attempt to integrate topographic information into DL algorithms for void filling, aiding in the reconstruction of DEMs with additional terrain information (Qiu et al. Citation2019; Zhu et al. Citation2020). Generally, this topographic information includes elements such as valley lines (Ling et al. Citation2007), hill shading (Dong et al. Citation2019) and textures (Qiu et al. Citation2019).

With the common feature of using CNNs and their variants to predict complete DEMs from incomplete ones, the main differences among the various methods proposed in the existing literature are established based on the type of constraints and information provided to the DL algorithm during the training process. In the previously-mentioned case of Dong et al. (Citation2019), automatically extracted projected shadow maps and known sun directions are used to calculate shadow-based supervision signals, in addition to direct supervision from the DEM. On the other hand, in the case of Qiu et al. (Citation2019), the algorithm is trained with patches of 1 arc-second Shuttle Radar Topography Mission (SRTM) data from mountainous regions worldwide. Elevation data, terrain slope, and relief degree are part of the training samples. In both cases, the networks successfully predict restored DEMs from incomplete ones through the inference of information from neighbouring areas of data voids. Furthermore, building upon CGANs, Zhu et al. (Citation2020) design a novel DL architecture combining an encoder-decoder structure with adversarial learning in order to capture representations of sampled spatial data and their interactions with local structural patterns.

Although in most of the previously-cited studies terrain-related characteristics have been taken into account in one way or another, there are aspects that have not been addressed in detail, such as what type of terrain information can optimise the improvement of the quality of the reconstructed DEM or how to efficiently integrate this information into advanced models to generate DEMs that are consistent with the surrounding terrain of the original data voids. In order to address these issues Zhou et al. (Citation2022) and Li et al. (Citation2022a) propose new methods which, although also based on the use of CGAN, represent an evolution of previous approaches. Li et al. (Citation2022a) propose a method based on restricted topographic knowledge CGAN, called TKCGAN, to improve the quality of reconstructed DEMs and recover key terrain features. The knowledge of topographic characteristics is summarised and practically transferred in order to restrict the training processes of the DL models. On the other hand, Zhou et al. (Citation2022) propose a multi-scale feature fusion CGAN that performs an initial VF followed by a multi-attention inpainting network that recovers detailed terrain features surrounding the void area. Similar to the previous authors, Zhou et al. (Citation2022) propose a channel-spatial cropping mechanism as an enhancement of the network.

3.1.2. DEM super-resolution

The cost, time, and computing capabilities required to produce a DEM increase exponentially with its resolution, making it difficult to meet the practical production needs of DEMs (Chen et al. Citation2016; Zhu et al. Citation2020). Therefore, it is necessary to implement methods that help complement high-resolution DEM data. One of the existing methods for complementing DEM data is the SR method. Initially developed for implementation on digital images (Tsai and Huang Citation1984), the application of SR methods to a given DEM aims to improve its resolution without increasing the cost associated with the acquisition or capture processes (Han et al. Citation2023).

Traditionally, these resolution enhancement methods have been performed using interpolation techniques (Li and Heap Citation2011; Han et al. Citation2023; Ma et al. Citation2023; Xu et al. Citation2015). Interpolation-based methods rely on the degree of concordance between the interpolation kernel and the global distribution of the DEM in the surrounding region, achieving good results only when this degree of concordance is high (Li and Heap Citation2011; Zhang et al. Citation2022). However, due to the complex nature of real-world terrains it is difficult for interpolation-based methods to provide interpolation values that adapt to local distributions of the DEM, resulting in limitations in the results obtained.
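As a minimal sketch of an interpolation-based resolution enhancement, the following code upsamples a small DEM grid with bilinear interpolation. Bilinear interpolation is used here only as a simple stand-in for the interpolation kernels discussed above; the function name `bilinear_upsample`, the node-aligned output grid and the integer `factor` are assumptions made for this illustration.

```python
def bilinear_upsample(dem, factor):
    """Upsample a DEM grid (list of rows) by an integer factor using
    bilinear interpolation between the four surrounding cells."""
    rows, cols = len(dem), len(dem[0])
    if rows < 2 or cols < 2 or factor < 1:
        raise ValueError("need at least a 2 x 2 grid and factor >= 1")
    out_rows = (rows - 1) * factor + 1
    out_cols = (cols - 1) * factor + 1
    out = []
    for i in range(out_rows):
        y = i / factor                 # position in source-grid coordinates
        r0 = min(int(y), rows - 2)     # upper row of the enclosing cell
        ty = y - r0                    # vertical interpolation weight
        new_row = []
        for j in range(out_cols):
            x = j / factor
            c0 = min(int(x), cols - 2)
            tx = x - c0
            top = dem[r0][c0] * (1 - tx) + dem[r0][c0 + 1] * tx
            bottom = dem[r0 + 1][c0] * (1 - tx) + dem[r0 + 1][c0 + 1] * tx
            new_row.append(top * (1 - ty) + bottom * ty)
        out.append(new_row)
    return out


coarse = [[0, 2], [4, 6]]
fine = bilinear_upsample(coarse, 2)
```

Every new height is a fixed weighted average of its four neighbours, so the result cannot contain terrain detail (ridges, breaks of slope) that is absent from the coarse grid. This is the limitation that motivates learned SR methods.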

To address this issue, DL-based methods utilise a large number of parameters that help capture local trends (high-level features) of terrain distribution during the training process, and therefore have higher potential in DEM SR tasks (LeCun et al. Citation2015; Chen et al. Citation2016; Zhou et al. Citation2021; Zhang and Yu Citation2022). In terms of the performance achieved in this task by DL methods, Zhang and Yu (Citation2022) investigate the efficiency and suitability of DL-based SR methods for DEMs compared to interpolation methods. Specifically, they compare bicubic interpolation with three SR methods based on CGANs: SRGAN (Ledig et al. Citation2017), ESRGAN (Wang et al. Citation2018b), and CEDGAN (Zhu et al. Citation2020), using indices related to terrain characteristics, including elevations and terrain derivatives.

However, excessive focus on local (high-level) features may lead to ignoring the global information contained in the terrain (low-level features), paying little attention to spatial correlation (a very common source of global information in the terrain) in the DEM (Chen et al. Citation2016; Zhu et al. Citation2020), which limits the performance of DL-based SR methods. This is demonstrated by Han et al. (Citation2023), who compare spatial autocorrelation values obtained from distribution patterns among elevation points with global information obtained from feature extraction modules (CNN module (Kattenborn et al. Citation2021), ResNet module (Targ et al. Citation2016), Pixel Shuffle module (Shi et al. Citation2016), and deformable convolution module (Dai et al. Citation2017)). These authors conclude that, due to the limited size of the convolution kernel, the probability of capturing and aggregating low-level features (global information) into high-level information is very low (Vaswani et al. Citation2017; Han et al. Citation2021), which explains the loss of global information in traditional networks such as CNNs. Several works address this limitation. Zhou et al. (Citation2021) proposed an improved deep residual CNN method with double filtering (EDEM-SR) that captures more DEM features by introducing residual structures to deepen the network. Zhang et al. (Citation2021) also employ a deep CNN with a ResNet structure that improves the performance of the SR process. Zhang et al. (Citation2022) take into account the morphological characteristics of the DEM and introduce a deformable convolution module, which represents a significant advancement over the limitation imposed by the morphology of the convolution kernel and improves the ability to capture irregular features of DEMs. Finally, Ma et al. (Citation2023) proposed a novel feature-enhanced DL network (FEN) that considers both global and local features for SR.
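Of the feature extraction modules listed above, the Pixel Shuffle module is the simplest to illustrate, because it has no learned parameters: it only rearranges r × r low-resolution feature maps into one map that is r times larger in each direction. The sketch below is a plain-Python illustration; the function name `pixel_shuffle` and the nested-list layout (channels, rows, columns) are assumptions, and the channel ordering follows the convention commonly used in DL frameworks rather than any specific reviewed implementation.

```python
def pixel_shuffle(feature_maps, r):
    """Rearrange C * r * r feature maps of size H x W into C maps of size
    (H * r) x (W * r), as done by a sub-pixel (pixel shuffle) layer."""
    n = len(feature_maps)
    if n % (r * r) != 0:
        raise ValueError("number of channels must be a multiple of r * r")
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    out_channels = n // (r * r)
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(out_channels)]
    for c in range(out_channels):
        for i in range(r):          # vertical sub-pixel offset
            for j in range(r):      # horizontal sub-pixel offset
                src = feature_maps[c * r * r + i * r + j]
                for y in range(h):
                    for x in range(w):
                        out[c][y * r + i][x * r + j] = src[y][x]
    return out


# Four 1 x 1 feature maps become one 2 x 2 map.
maps = [[[1]], [[2]], [[3]], [[4]]]
upscaled = pixel_shuffle(maps, 2)
```

Because each output cell is copied from a single low-resolution position, the module itself aggregates no information beyond what the preceding convolutions have already captured.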

In recent years, some novel DL methods for SR of DEMs have also emerged. For example, Demiray et al. (Citation2021) created a DEM with 16 times higher spatial resolution by improving MobileNetV3; Lin et al. (Citation2022) introduced the internally learned zero-shot SR (ZSSR) method to solve the SR task of DEMs; Zhang et al. (Citation2021) proposed the recursive sub-pixel convolutional neural networks (RSPCN), which showed significant improvements in both accuracy and robustness; and He et al. (Citation2022) introduced a Fourier transform as an encoder and achieved good performance, enriching the existing SR encoder. Finally, Han et al. (Citation2023) proposed a DL network with global information constraints that can optimise the SR process to generate global terrain features (low-level) and achieve advanced results. Specifically, compared to traditional bicubic interpolation and some existing CNN-based methods (TfaSR (Zhang et al. Citation2022), SRResNet (Ledig et al. Citation2017) and SRCNN (Dong et al. Citation2016)), the Mean Squared Error obtained by these authors improved by 20% to 200%, whereas the Mean Absolute Error improved by 20% to 300%.
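The two error measures named above can be sketched as follows for a super-resolved DEM and a reference DEM of the same shape. The function names and the nested-list grid representation are assumptions made for illustration; the sketch does not reproduce the evaluation protocol of any of the cited studies.

```python
def _differences(predicted, reference):
    """Cell-by-cell height differences between two grids of equal shape."""
    return [p - r
            for pred_row, ref_row in zip(predicted, reference)
            for p, r in zip(pred_row, ref_row)]


def mean_squared_error(predicted, reference):
    diffs = _differences(predicted, reference)
    return sum(d * d for d in diffs) / len(diffs)


def mean_absolute_error(predicted, reference):
    diffs = _differences(predicted, reference)
    return sum(abs(d) for d in diffs) / len(diffs)


reference_dem = [[1.0, 2.0], [3.0, 6.0]]
predicted_dem = [[1.0, 2.0], [3.0, 4.0]]
mse = mean_squared_error(predicted_dem, reference_dem)
mae = mean_absolute_error(predicted_dem, reference_dem)
```

Squaring the differences means that the Mean Squared Error penalises a few large height errors more heavily than the Mean Absolute Error does, so the two measures can rank methods differently.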

3.2. Fundamental analysis about DEM

3.2.1. Landform classification

The classification of landforms is one of the most important procedures in Geomorphology for understanding processes on the Earth’s surface (Wang et al. Citation2010; Li et al. Citation2020), playing a significant role in analysing the evolution of landforms (Drăguţ and Blaschke Citation2006; Hiller and Smith Citation2008; Wang et al. Citation2010). Apart from the use of classical techniques such as the visual interpretation of topographic maps and aerial photographs, landform classification has traditionally been carried out using two types of automated techniques: pixel-based and object-based (Xiong et al. Citation2014). Specifically, the Object-Based Image Analysis (OBIA) method (Na et al. Citation2021) has traditionally been used to identify and classify forms and features of the terrain using satellite images (Benz et al. Citation2004; Blaschke Citation2010), aerial images (Hay et al. Citation2005), or LiDAR data (Li et al. Citation2015). All these authors introduce the OBIA-based approach for terrain shape classification and address related topics such as image segmentation, feature extraction, and the use of classification algorithms. However, in transition zones (where geographical features change gradually from one type to another and their characteristics change continuously), it is difficult for these methods to define appropriate criteria for distinguishing different landforms (Drăguţ and Blaschke Citation2006). Therefore, it is necessary to develop methods that possess high learning capacity and are effective in classification tasks (Verhagen and Drăguţ Citation2012).

In this regard, DL algorithms have become powerful tools for identifying terrain features, i.e. recognising landforms, due to their powerful capability of recognition and information extraction (Li and Hsu Citation2020). These processes of shape recognition are used for classifying landforms into different types (Heung et al. Citation2016). Currently, most applications of DL methods in landform classification research employ models based on CNNs and focus on the analysis of macro-landforms at a wide range of scales (Ehsani and Quiel Citation2008; Li et al. Citation2017; Palafox et al. Citation2017; Wu et al. Citation2023).

As in other types of applications, such as those already addressed in Remote Sensing (RS), DL techniques for terrain recognition and classification were initially applied to images (Deng et al. Citation2009), with deep CNNs being the most commonly used DL models (Krizhevsky et al. Citation2017). Since the initial models, the evolution of these types of networks has occurred through the addition of layers (Zeiler and Fergus Citation2014; Simonyan and Zisserman Citation2014) and the improvement of connectivity between them (Huang et al. Citation2017), which has improved the average accuracy in tasks related to element classification in images (Cheng et al. Citation2017; Chen et al. Citation2020). In this context, the work of Li et al. (Citation2017) stands out: it combines a Region-based CNN (RCNN) architecture (Girshick Citation2015) with a classic CNN architecture (Zeiler and Fergus Citation2014) to automatically detect terrain features from aerial and RS images. Similarly, the work of Li and Hsu (Citation2020) extends the RCNN architecture with deep CNNs and adopts ensemble learning for the detection of nine different types of terrain features from RS images. More recently, Ganerød et al. (Citation2023) proposed an automatic approach that uses a Fully Convolutional Neural Network (FCNN) and U-Net to optimise the regional bedrock identification and mapping processes, obtaining promising results with an F1 score of around 80% for DEM terrain derivatives compared to a manually-mapped ground truth.

However, image-based models face challenges in recognising certain cases where multiple geographical features are mixed. This issue is partly addressed by combining images with data from DEMs. For example, Li et al. (Citation2020) employ a deep CNN with a U-Net structure to improve the classification accuracy of terrain forms by more than 87% with a combination of DEM and image data, compared to using only images. Furthermore, the method proposed by these authors achieves higher accuracy in classifying landforms with better-defined boundaries compared to other methods.

Among other classification applications involving DEMs, ML methods for elevation data analysis are prominent. For instance, Marmanis et al. (Citation2015) tackle object classification on the ground in urban environments using a Multilayer Perceptron model. Hu and Yuan (Citation2016) proposed a DL method for extracting DEMs from airborne laser scanning point cloud data. Their approach maps the relative height difference of each point with respect to its neighbours in a square window to an image. Thus, the classification of a point is treated as image classification, resulting in low error rates in the detection of ground and non-ground points, even in mountainous areas. Finally, Torres et al. (Citation2020) present preliminary results of using a simple LeNet (LeCun et al. Citation2015) for mountain peak detection from DEM data.
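The idea of turning the neighbourhood of a point into an image, as described above for ground filtering, can be sketched as follows. This is not the implementation of Hu and Yuan (Citation2016): the function name `point_to_height_image`, the window size, the number of cells, the use of the lowest neighbour per cell, the clipping range and the grey-level mapping (with 0 for empty cells) are all assumptions chosen to keep the example small.

```python
def point_to_height_image(points, index, window=4.0, size=4, clip=2.0):
    """Encode the neighbourhood of points[index] as a size x size image.

    Each cell stores the height difference between the lowest neighbour
    falling in that cell and the point itself, clipped to [-clip, clip]
    and scaled to grey levels 0..255. Empty cells are set to 0 (assumption).
    """
    px, py, pz = points[index]
    cell = window / size
    half = window / 2.0
    lowest = [[None] * size for _ in range(size)]
    for x, y, z in points:
        col = int((x - px + half) // cell)
        row = int((y - py + half) // cell)
        if 0 <= row < size and 0 <= col < size:
            if lowest[row][col] is None or z < lowest[row][col]:
                lowest[row][col] = z
    image = []
    for cells in lowest:
        grey_row = []
        for z in cells:
            if z is None:
                grey_row.append(0)
            else:
                diff = max(-clip, min(clip, z - pz))
                grey_row.append(round((diff + clip) / (2 * clip) * 255))
        image.append(grey_row)
    return image


# (x, y, z) points; the first one is the point to be classified.
cloud = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 1.0, 12.0), (-1.0, -1.0, 9.0)]
image = point_to_height_image(cloud, 0)
```

The resulting image could then be passed to any image classifier, which is what allows the classification of a point to be treated as image classification.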

A field that should be addressed separately in the context of terrain feature detection and classification using ML techniques (Di et al. Citation2014; Vaz et al. Citation2015) is Planetary Astronomy. From the use of Genetic Algorithms for automatic crater detection (Cohen et al. Citation2011; Cohen and Ding Citation2014), the approach has evolved towards a model based on the application of CNNs on remotely sensed images. In the initial studies in this field (Emami et al. Citation2015; Cohen et al. Citation2016; Palafox et al. Citation2017), CNNs are applied as binary classifiers for crater classification in images such as those obtained from the High Resolution Imaging Science Experiment (HiRISE) and the High Resolution Stereo Camera (HRSC) for Mars or the Lunar Reconnaissance Orbiter Camera (LROC) for the Moon. These studies confirm the high accuracy of CNNs in identification compared to other ML methods, such as Support Vector Machine (SVM). Additionally, Wang et al. (Citation2018a) developed a novel fully convolutional network for crater classification and positional regression at the cell level using HRSC images, and Hashimoto and Mori (Citation2019) adopted the U-Net structure (Ronneberger et al. Citation2015) and CGANs for crater classification based on grids in LROC images.

Recently, some studies have combined the identification and classification capabilities of CNNs with the robustness of DEMs. Silburt et al. (Citation2019) propose a DEM-based crater classification algorithm using DL, which is supported by a U-Net structure. Ali-Dib et al. (Citation2020) apply the Mask R-CNN model (He et al. Citation2017) to detect and classify craters. This model generates bounding boxes and segmentation masks for each instance of an object (crater) in the image. Jia et al. (Citation2021) combined an improved CNN and a transfer learning method for crater detection using multiple data sources. Other studies (Wang et al. Citation2020b; Jia et al. Citation2021; Wu et al. Citation2021) have attempted to improve the method of Silburt et al. (Citation2019) by designing a new CNN with a similar U-Net-like structure. Most of these studies involve a two-phase processing approach: first, an image patch is input into a CNN model for edge segmentation, and then crater regions are extracted using a Pattern Matching Algorithm (PMA). However, the latter is computationally expensive, as it needs to calculate the matching probability for each segmentation object by iteratively sliding a group of patterns with a discrete size distribution over an image patch. On the other hand, many other studies (Ren et al. Citation2015; Liu et al. Citation2016; Lin et al. Citation2017; Tian et al. Citation2019) propose object detection and classification by directly predicting the position and size of a visual target. Lin et al. (Citation2022) aim to bridge the gap between existing DEM-based crater classification algorithms and advanced CNN methods for object detection, and they propose an end-to-end DL model for lunar crater detection. They evaluate nine representative CNN models, including the three most common architectures for detection. Their proposal improves performance in terms of accuracy (82.97%) and recall (79.39%). Furthermore, they develop a crater verification tool to manually validate the detection results, and the visualisation results show that the detected craters are reasonable and can be used as a complement to existing manually-labelled datasets.
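To illustrate why the pattern-matching phase described above is costly, the following is a minimal brute-force sketch, not the implementation used in any of the cited studies. It assumes that the CNN output is a binary edge mask and that the patterns are one-pixel-wide rings; the function names, the scoring rule and the threshold are hypothetical choices made only for this example.

```python
import numpy as np


def ring_template(radius, thickness=1.0):
    """Binary ring of the given radius (in cells) on a (2r+1) x (2r+1) grid."""
    size = 2 * radius + 1
    rows, cols = np.indices((size, size))
    dist = np.hypot(rows - radius, cols - radius)
    return (np.abs(dist - radius) <= thickness / 2.0).astype(float)


def match_rings(edge_mask, radii, threshold=0.8):
    """Slide every ring over the mask and keep positions with a high score.

    The score is the fraction of ring cells that coincide with edge cells
    (an assumed, simplified stand-in for a matching probability).
    """
    edge_mask = np.asarray(edge_mask, dtype=float)
    detections = []
    for radius in radii:                      # one pass per pattern size
        template = ring_template(radius)
        size = template.shape[0]
        n_ring = template.sum()
        for r0 in range(edge_mask.shape[0] - size + 1):
            for c0 in range(edge_mask.shape[1] - size + 1):
                window = edge_mask[r0:r0 + size, c0:c0 + size]
                score = (window * template).sum() / n_ring
                if score >= threshold:
                    # Store the ring centre, its radius and the score.
                    detections.append((r0 + radius, c0 + radius, radius, score))
    return detections
```

The three nested loops make the cost grow with the number of pattern sizes multiplied by the number of window positions, which is the overhead that direct position-and-size prediction avoids.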

3.2.2. Hydrography extraction

Currently, the widespread availability of accurate terrain information in the form of DEMs has improved hydrological modelling and methods for extracting basins and hydrographic networks (Clubb et al. Citation2014; Woodrow et al. Citation2016; Shin and Paik Citation2017), mainly water bodies. This last type of hydrological structure refers to water lying on the surface or flowing over the earth, including but not limited to streams, rivers, lakes, ponds, potholes, wetlands, etc. Hydrographic information extraction using high-resolution DEMs involves procedures that require expert knowledge and techniques such as parameterising extraction thresholds, identifying headwater locations, etc. (Stanislawski et al. Citation2021). Performing these tasks using traditional methods is costly and involves human intervention, which inevitably leads to inaccuracies.

The application of DL techniques has become a powerful alternative to traditional methods as it provides more accurate and reliable results through consistently applied workflows in time and space (Xu et al. Citation2018). This consistency is supported by training processes that allow for identifying patterns of more complex features. Similar to other applications, DL techniques for hydrography extraction have typically been applied to images, with CNNs being the most commonly used models. For example, Chen et al. (Citation2018) employed the adaptive clustering CNN model for extracting surface water bodies through superpixel segmentation, and Feng et al. (Citation2018) exploited a combined application of U-Net and Conditional Random Fields (CRF) for extracting surface water bodies through superpixels obtained by the Simple Linear Iterative Cluster (SLIC) method. However, the aforementioned CNN models have certain drawbacks: (i) their performance is heavily dependent on the accuracy of the superpixel segmentation process; (ii) when CNNs are applied directly to water body extraction, their inherent convolutional operations cause the boundaries to be blurred (Cheng et al. Citation2020); (iii) they fail to simultaneously extract the spatial-spectral correlation feature, which is critical for water body extraction (Miao et al. Citation2018; Gebrehiwot et al. Citation2019); and (iv) they use multiscale features point-wise, which is not suitable for the segmentation of water bodies as they tend to ignore narrow rivers and small ponds (Duan and Hu Citation2019).

Therefore, in order to achieve good results in water body extraction, 2D CNN models based on limited spectral information should consider other types of information, such as the multiscale semantic information provided by DEMs, among other sources. Recent studies have shown promising results in hydrographic information extraction using mainly 3D CNN models, whether they are water bodies (Chen et al. Citation2020), watersheds (Xu et al. Citation2018; Lin et al. Citation2021; Wang et al. Citation2020b), or other associated features (Shaker et al. Citation2019; Stanislawski et al. Citation2018, Citation2019), from LiDAR point clouds and other RS data. For instance, Chen et al. (Citation2020) propose a refined water body extraction neural network (WBE-NN) that does not rely on superpixel-based segmentation accuracy. The proposed method is based on three modules: (i) a global spatial-spectral convolution module, which extracts spatial and spectral features simultaneously; (ii) a multiscale learning module; and (iii) a boundary refinement module. Using a 3D CNN, Xu et al. (Citation2018) develop an effective data fusion model that utilises 3D features from LiDAR and multitemporal images for high-precision classification and extraction processes. Lin et al. (Citation2021) develop a global hydrography database using the most recent DEMs and CNN-based methods to estimate the spatial variability of drainage density at a global level. Specifically, they use a high-resolution and high-accuracy DEM called MERIT (Multi-Error-Removed Improved Terrain) DEM (Yamazaki et al. Citation2017), along with raster information on flow direction and accumulation in MERIT Hydro (Yamazaki et al. Citation2019), as underlying data layers for global river network extraction. The CNN is trained with basin-scale climatic and worldwide physiographical data. Stanislawski et al. (Citation2021) test the capability of a U-Net CNN model to extract water bodies and drainage basins in Alaska using elevation-derived layers such as curvature or topographic position index, which have been shown to reflect geomorphic conditions (Passalacqua et al. Citation2010; Newman et al. Citation2018), derived from IfSAR. After testing different parameters to estimate the suitability of each layer in identifying water bodies and streams (Stanislawski et al. Citation2018, Citation2019; Xu et al. Citation2021), the layers considered suitable are used as input data for U-Net CNN models. The U-Net structure is optimised by testing sample size, window size, and sample augmentation. The probability values predicted by the U-Net model are then used as weights to inform the flow accumulation models for extracting a complete vector drainage network. Recently, Wu et al. (Citation2023) presented the development of various DL models, utilising CNNs to classify images containing flow barrier locations. Their results demonstrate accuracy over 90%. Additionally, Sun et al. (Citation2023) introduced a check dam detection framework using DL and geospatial analysis for broad areas from high-resolution remote sensing images. As a result, they improved check dam identification accuracy from 78.6% to 87.6% by eliminating false detection boxes.
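The idea of using predicted probabilities as weights in flow accumulation, mentioned above for Stanislawski et al. (Citation2021), can be sketched as follows. This is a simplified illustration under stated assumptions rather than the workflow of the cited study: it assumes a depression-free DEM, a single-direction (D8) steepest-descent routing rule, and a hypothetical `weights` raster standing in for the U-Net probability map.

```python
import numpy as np


def weighted_flow_accumulation(dem, weights):
    """D8 flow accumulation in which each cell contributes its own weight.

    dem     : 2D array of elevations (assumed free of pits and flats).
    weights : 2D array of the same shape, e.g. per-cell probabilities
              (hypothetical input for this sketch).
    """
    dem = np.asarray(dem, dtype=float)
    weights = np.asarray(weights, dtype=float)
    n_rows, n_cols = dem.shape
    acc = weights.copy()

    # Visit cells from highest to lowest so that a cell has received all
    # upstream contributions before it passes its total downstream.
    for flat in np.argsort(dem, axis=None)[::-1]:
        r, c = divmod(int(flat), n_cols)
        best_drop, target = 0.0, None
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if dr == 0 and dc == 0:
                    continue
                rr, cc = r + dr, c + dc
                if 0 <= rr < n_rows and 0 <= cc < n_cols:
                    # Slope towards the neighbour (diagonals are longer).
                    drop = (dem[r, c] - dem[rr, cc]) / np.hypot(dr, dc)
                    if drop > best_drop:
                        best_drop, target = drop, (rr, cc)
        if target is not None:
            acc[target] += acc[r, c]
    return acc
```

With all weights equal to one this reduces to ordinary flow accumulation; with probabilities as weights, cells that the network considers likely to be channels contribute more to the accumulated value, so a threshold on the result favours those paths.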

3.2.3. Shaded relief

The technique of relief shading is based on the use of light and shadow distribution to represent the terrain, providing a strong sense of three-dimensionality (Jenny Citation2001). Currently, this technique can be applied using various software packages such as ArcGIS, QGIS, or GlobalMapper, and the shading it provides is referred to as analytical shading (Li et al. Citation2022a). Despite the computational efficiency, analytical relief shading has significant limitations, especially when compared to manually created shading, as the grayscale levels provided by the software are strictly calculated based on the amount of light each pixel receives within the corresponding DEM. This results in a lack of expressiveness that leads to issues with the perception of relief. However, manual production of high-quality relief shading requires expert drawing skills and a significant amount of time, making it impractical nowadays.
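The way analytical shading derives a grey level from the amount of light each cell receives can be sketched with a simple Lambertian model. The code below is a minimal illustration, not the algorithm of any of the software packages mentioned. It assumes a north-up raster (row index increasing southwards), a single light source, and the commonly used default of illumination from the north-west (azimuth 315°, altitude 45°); the function and parameter names are chosen for this example.

```python
import numpy as np


def analytical_hillshade(dem, cell_size=1.0, azimuth_deg=315.0, altitude_deg=45.0):
    """Return grey levels in [0, 1] for a 2D elevation array.

    Each value is the cosine of the angle between the surface normal and
    the direction towards the light source, clipped at zero.
    """
    dem = np.asarray(dem, dtype=float)
    d_row, d_col = np.gradient(dem, cell_size)
    dz_dx = d_col      # change of elevation towards the east
    dz_dy = -d_row     # change towards the north (rows grow southwards)

    azimuth = np.radians(azimuth_deg)    # clockwise from north
    altitude = np.radians(altitude_deg)  # above the horizon
    light_x = np.sin(azimuth) * np.cos(altitude)
    light_y = np.cos(azimuth) * np.cos(altitude)
    light_z = np.sin(altitude)

    # Unnormalised surface normal is (-dz_dx, -dz_dy, 1).
    norm = np.sqrt(dz_dx ** 2 + dz_dy ** 2 + 1.0)
    shade = (-dz_dx * light_x - dz_dy * light_y + light_z) / norm
    return np.clip(shade, 0.0, 1.0)
```

Because every cell is shaded with the same fixed light direction, slopes facing away from the light are uniformly dark regardless of their importance to the landform, which is the lack of expressiveness noted above.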

DL methods provide a viable alternative to analytical and manual relief shading procedures. These methods can be used to learn the local lighting adjustments from manual relief shading, combining the advantages of analytical and manual shading. Building on CGAN (Mirza and Osindero Citation2014), relief shading using DEMs can be interpreted as the conversion from an input image to an output image, and as demonstrated throughout this paper, CGAN is well-suited to solving this type of problem (Creswell et al. Citation2018).

Examples of this can be found in various studies. Li et al. (Citation2022a) introduced the idea of transferring DL to terrain shading in order to improve its expressiveness and artistic sense. Jenny et al. (Citation2021) applied a U-Net-based conditional CGAN to generate terrain shading, achieving positive results. The U-Net structure (Ronneberger et al. Citation2015) was improved and trained using information from manual terrain shading techniques. By following the design principles of manual terrain shading, the expressiveness of the shaded relief was significantly improved. However, these authors also pointed out some negative effects of the process, such as: (i) blurred flat areas and sharp ridges generated in the shaded relief, and (ii) the CGAN does not perform well when the cell size of the DEM differs significantly from the one used for training the network.

These issues are partly addressed by Li et al. (Citation2022a), who propose a new method for generating shaded relief. Based on CGANs and manual shading of DEMs, the method preprocesses the information and cuts the DEM into a series of tiles, each paired with its corresponding manual shading. This information is then used to train the CGAN. The trained network is finally used to convert the DEM of any area into shaded relief. The results of the tests indicate that the proposed method retains the advantages of manual terrain shading and can quickly generate shaded relief with a similar quality and artistic style as manual shading. Furthermore, the shaded relief generated by the proposed method not only clearly represents the terrain, but also achieves good generalisation effects. Moreover, by using a CGAN the network demonstrates a greater generation capacity at different scales.

3.3. Other applications of DEM

3.3.1. Semantic segmentation of point clouds

A point cloud is essentially a discrete data set that contains no semantic information. In contrast to classification processes, which aim to categorise an entire set or cloud of points, semantic segmentation seeks to classify each point into a specific part within the cloud based on a semantic understanding (Zhang et al. Citation2019). Traditionally, point cloud segmentation has been carried out manually by experts in diverse fields such as 3D architectural modelling (Llamas et al. Citation2017), object detection in robotics (Maturana and Scherer Citation2015), autonomous navigation (Zhou and Tuzel Citation2018; Shi et al. Citation2019), and urban analysis (Zhang et al. Citation2018; Li et al. Citation2019; Che et al. Citation2019). However, this manual approach has two major drawbacks (Pierdicca et al. Citation2020): (i) it is time-consuming, and (ii) it wastes a significant amount of data, as a 3D scan (either from terrestrial laser scanning or close-range photogrammetry) contains much more information than strictly necessary to describe an object. Despite these limitations, most studies (Murtiyoso and Grussenmeyer Citation2019a, Citation2019b; Grilli et al. Citation2019; Spina et al. Citation2011) show that traditional segmentation methods still rely on manual operations to capture objects from point clouds.

Over the past decade, in order to address the aforementioned limitations, new approaches to semantic segmentation of point clouds have been developed within the framework of DL, such as PointNet/PointNet++ (Qi et al. Citation2017a, Citation2017b), which have achieved much more efficient models (with less information waste) for handling 3D data (Wang et al. Citation2018a). Although the existing literature for 3D object segmentation is limited, especially compared to 2D segmentation (mainly due to the high computational and memory costs required by CNNs for handling large point clouds (Song and Xiao Citation2014, Citation2016; Ma et al. Citation2019)), this new DL framework facilitates both object recognition and segmentation tasks with an appropriate level of detail, as well as the process of geometry reconstruction in the BIM environment or object-oriented software (Adam et al. Citation2023; Jhaldiyal and Chaudhary Citation2023; Macher et al. Citation2017; Tamke et al. Citation2016; Tang et al. Citation2010; Thomson and Boehm Citation2015).

A clear example of the above can be found in the recent paper by Pierdicca et al. (Citation2020). These authors used data corresponding to 3D point clouds to carry out tasks of segmentation on places of interest for cultural heritage. However, although these tasks proved to be very useful for the 3D documentation of monuments of historical interest, they were not efficient due to their excessively complex geometry and the high level of detail required for their proper representation. In this regard, Grilli et al. (Citation2019) studied the potential offered by DL-based approaches for the supervised classification of 3D heritage and concluded that although promising results were obtained, there is a lot of uncertainty regarding the inefficiency caused by the irregular nature of the data. Other approaches for the semantic segmentation of point clouds based on DL have demonstrated the viability of transfer learning using synthetic data (Kemker et al. Citation2018) or manually-labelled secondary data; as well as the effectiveness of reducing the dimensionality of 3D point clouds in order to reduce computational load without compromising accuracy (López et al. Citation2020). Yan et al. (Citation2020) focused on data preprocessing and the development of an auxiliary network to simplify and improve the segmentation of 3D point clouds corresponding to mining areas.

The most frequently used DL architectures for 3D point cloud segmentation tasks are: (i) the CNN for regular grids, and (ii) the point-based CNN for 3D point clouds (Mullissa et al. Citation2019). Most of the segmentations of regular grids employ architectures based on FCNNs, such as SegNets, ResNets, U-Nets, and DeepLabV3+. For example, Yang et al. (Citation2023) employed a semantic segmentation model from computer vision to classify elementary landform types in DEMs. For this purpose, they developed a semantic segmentation model that uses a ResNet to extract features and achieves pixel-level segmentation of the DEM through a FCNN.

On the other hand, segmentation operations on point clouds are performed directly on 3D points, rather than on projected surfaces or voxels. Bachhofner et al. (Citation2020) used Generalized Sparse CNNs (GSCNNs) based on the U-Net structure and constructed with sparse convolution blocks to segment 3D points generated from satellite images. The segmentation of archaeological sites and cultural heritage developed by Pierdicca et al. (Citation2020) was performed with a Dynamic Graph CNN (DGCNN) constructed with multiple blocks of edge convolutional layers. Finally, as mentioned before, Yan et al. (Citation2020) developed an auxiliary network called the Rotation Density Network that efficiently extracts structural features based on the density of the point cloud, and used it along with point-based networks such as PointNet or PointCNN to improve the segmentation of the point cloud in the mining area.

The problem of insufficient training samples was addressed through transfer learning and through data augmentation techniques such as flipping, rotation, Gaussian transformation, Gaussian blur, random scaling, and random translation (Mi and Chen Citation2020; Bachhofner et al. Citation2020). Some authors used CRF as a post-processing technique to refine the segmentation results (Yao et al. Citation2016; Miyoshi et al. Citation2020).
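Two of the augmentation operations listed above, flipping and rotation, can be sketched for gridded training samples as follows. This is a minimal example under assumptions, not the procedure of the cited studies: it assumes square raster tiles with a per-cell label mask, and the function name and argument layout are hypothetical.

```python
import numpy as np


def augment_tile(dem_tile, labels, rng):
    """Apply the same random rotation and flip to a tile and its labels.

    dem_tile : 2D array of elevations (assumed square).
    labels   : 2D array of per-cell class labels with the same shape.
    rng      : numpy random Generator, e.g. np.random.default_rng(seed).
    """
    k = int(rng.integers(0, 4))        # number of 90-degree rotations
    flip = bool(rng.integers(0, 2))    # whether to mirror left-right

    # The geometric transform must be identical for data and labels,
    # otherwise the per-cell correspondence is lost.
    dem_out = np.rot90(dem_tile, k)
    labels_out = np.rot90(labels, k)
    if flip:
        dem_out = np.fliplr(dem_out)
        labels_out = np.fliplr(labels_out)
    return dem_out.copy(), labels_out.copy()
```

Rotations by multiples of 90° and mirror flips leave elevation values untouched, so they add geometric variety without resampling. Inputs that depend on direction (for example aspect or a fixed illumination) would need to be recomputed after the transform.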

3.3.2. Extraction of elements (buildings) from satellite images

Another common example of the use of DL methods for DEM is the extraction of specific types of elements from satellite images. In this case, the majority of the studies and works analysed focus on very specific applications, including tree detection for forest management (Miyoshi et al. Citation2020; Braga et al. Citation2020), building detection (Hui et al. Citation2019), and urban area detection for urban planning tasks.

Regarding the data source, the majority of the studies reviewed use satellite images as the primary data source, while images obtained from Unmanned Aerial Vehicles (UAVs) and Synthetic Aperture Radar (SAR) are used to a lesser extent. In any case, the data sources are very diverse in terms of spectral, spatial, and temporal resolution. Additionally, most of the papers reviewed use a variation of CNNs for element extraction, specifically Mask R-CNN for tree detection and extraction, and U-Net CNN for building extraction. In this regard, it should be noted that each modified version of the CNN consists of several types of layers: (i) the convolutional layer, (ii) the pooling layer, and (iii) the fully connected layer. In the case of Mask R-CNN (Braga et al. Citation2020), the first layers extract the feature map, which is then used in subsequent layers for object detection. The U-Net architecture is employed by Chen et al. (Citation2016) using an encoder-decoder block, where the encoder block has three convolutional layers and a max pooling layer, while the decoder block has a normalisation and activation convolutional layer followed by another set of layers.

In Hui et al. (Citation2019), the U-Net structure is modified with the Xception module in order to extract effective features from RS images. In addition, multitasking is implemented to incorporate building structure information. The convolutional block of the U-Net encoder is replaced by the Xception module. The U-Net structure consists of a preceding convolutional layer followed by five successive Xception modules on the encoder side, while the convolutional block of the decoder is similar to that of the original U-Net.

Liu et al. (Citation2018) used a region proposal network based on CNN (called BRPN) to generate candidate building areas, instead of the sliding windows used in the Faster R-CNN model. Unlike the latter network, BRPN constructs the network by combining multi-level spatial hierarchies of the image. However, few studies explore other possibilities for feature extraction beyond the scope of CNNs. Chen et al. (Citation2016) implemented an Attention Balanced Feature Pyramid (ABFP) network to generate feature maps using the concepts of balanced feature pyramid and attention mechanism. Since the ABFP network can better aggregate low-level and high-level features, while the attention mechanism passes useful information to the next level and ignores useless information, the integration of these two techniques improves the overall accuracy of object recognition in SAR images. Similarly, a fully convolutional CNN was proposed for pixel-wise image segmentation with a symmetric encoder-decoder module in order to extract multi-scale features and residual connections, and effectively train the network (Li et al. Citation2019).

4. Discussion

This section includes an overview of the conducted research and a critical analysis.

4.1. Overview

In this study, a series of papers that utilise DL methods in the field of altimetric spatial information, specifically DEMs, have been reviewed. These methods are applied in operations such as VF, SR, landform classification, hydrography extraction, relief shading, semantic point cloud segmentation, and building extraction.

In the past decade, AI and DL methods have had a transformative impact on various fields of geospatial information science, such as segmentation, image fusion, and object detection, among others. These DL methods have already demonstrated their great potential in Geomatics. However, there are areas where further advancement is needed. This is the case for their application to altimetric spatial information, particularly DEMs. Thus, for some of the aforementioned operations it is necessary to continue improving the applied DL methods, as they have not yet achieved the performance levels attained by traditional methods. Additionally, their application requires a high level of expertise, making access difficult for end-users.

In general, this is due to the characteristics of the models used, which are CNNs with their various structures and architectures. CNNs are very popular due to their ability to extract features from large datasets without the need for manual intervention (supervision) or intensive learning, and their efficiency in utilising multiple data sources to solve a problem. However, it is difficult to evaluate these characteristics, as visualisation in DL rarely goes beyond the second layer, where only very basic features can be represented. In this sense, few attempts have been made to visualise automatically extracted features in different applications. All of this negatively affects some of the operations that are directly performed on DEMs. For example, in the case of VF there are aspects that have not been treated rigorously, such as what type of terrain information can optimise the quality improvement of the reconstructed DEM, or how to efficiently integrate that information into advanced models to generate DEMs that are consistent with the terrain surrounding the original void. Regarding DEM SR, limitations related to the morphology of the convolutional kernel still pose a significant constraint, especially in terms of size, as it affects the probability of capturing and aggregating low-level features (global information). Finally, high computational and implementation costs are common drawbacks in most of these operations. However, the performance achieved in other operations, primarily associated with the main applications of DL in DEMs, is considerably higher. These applications include landform classification and extraction of basins and hydrographic networks, among others. Even so, there are neither functional quality metrics for the results nor criteria for comparability between solutions.

This good performance justifies continued work in search of a DL model that generates confidence for the rest of the applications: a model that helps us to understand how the input data is processed, explains the prediction, and gives access to the entire process instead of just the result. In many sectors, there is already intensive work being done on ‘clear box’ DL models, but no studies have been found in the field of altimetric spatial information regarding this matter.

4.2. Critical analysis

The critical analysis of the application of DL to DEMs entails evaluating both the advantages and limitations of this approach. On the one hand, the main advantages are:

Improved classification accuracy. DL has demonstrated its ability to enhance the accuracy of terrain shape classification in DEMs. By utilising DL algorithms, more complex and detailed terrain features can be identified and classified, enabling a higher precision in environmental characterisation.

Automation and efficiency. The application of DL to DEMs can automate the classification process, saving time and effort compared to manual or traditional methods. By training DL models with large volumes of data, efficient and rapid classification of terrain shapes can be achieved across a broad geographic range.

Capability to handle heterogeneous data. DL can effectively handle DEM data from various sources such as satellite imagery, aerial images, and LiDAR data. This allows for more effective integration of multiple data sources to obtain a comprehensive and accurate representation of the terrain.

On the other hand, we have identified the following limitations:

Data and resource requirements. The application of DL to DEMs requires large amounts of labelled training data and adequate computing power. Acquiring and labelling high-quality datasets can be costly and time-consuming. Additionally, training and executing DL models may require specialised hardware and considerable processing time.

Interpretability. One of the challenges of DL is its lack of interpretability. While DL models can achieve high levels of accuracy, understanding and explaining how they make decisions is often difficult. This can be problematic when a clear justification or explanation of results is required.

Dependence on representative training data. The performance of DL models in terrain shape classification is strongly influenced by the quality and representativeness of the training data. If the training data is not representative of the variability in landscapes or is biassed towards certain types of terrain, the models may struggle to generalise and obtain accurate results in new locations.

In conclusion, the application of DL to DEMs has the potential to improve accuracy and efficiency in terrain shape classification. However, it also presents challenges related to data requirements, computational resources, interpretability, and representativeness of training data. Addressing these limitations is crucial to fully leverage the potential of DL in the characterisation and analysis of DEMs.

5. Conclusions

In this review, the methods and application areas of DL approaches using altimetric spatial information datasets have been analysed. DL methods represent both an opportunity and a significant challenge in the field of geospatial information in general, and DEMs in particular. Despite their promising results, there is a need to continue exploring new DL approaches. The source of information, the method, and the potential applications are the three aspects used to outline the implications of DL in different operations related to altimetric information. Therefore, research in these three areas should provide us with significant advancements. Firstly, the possibility of using multi-model datasets should be explored, considering semi-supervised or unsupervised approaches to reduce dependence on dataset manipulation. Additionally, sufficient attention should be given to the transparency of models by developing white-box models. Finally, new applications where DL can reach its full potential should be sought. It should be noted that the true potential of DL in Geomatics has not yet been fully exploited, and it may well dominate research for decades to come.

| Acronym | Definition |
| --- | --- |
| ABFP | Attention Balanced Feature Pyramid |
| AI | Artificial Intelligence |
| CGAN | Convolutional Generative Adversarial Network |
| CNN | Convolutional Neural Network |
| CRF | Conditional Random Fields |
| DEM | Digital Elevation Model |
| DGCNN | Dynamic Graph CNN |
| DL | Deep Learning |
| FCNN | Fully Convolutional CNN |
| GSCNN | Generalized Sparse CNN |
| HiRISE | High Resolution Imaging Science Experiment |
| HRSC | High Resolution Stereo Camera |
| LROC | Lunar Reconnaissance Orbiter Camera |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| PMA | Pattern Matching Algorithm |
| RCNN | Region-based CNN |
| RS | Remote Sensing |
| SAR | Synthetic Aperture Radar |
| SLIC | Simple Linear Iterative Cluster |
| SR | Super-Resolution |
| SRTM | Shuttle Radar Topography Mission |
| SVM | Support Vector Machine |
| UAV | Unmanned Aerial Vehicle |
| VF | Void Filling |
| ZSSR | Zero-Shot SR |

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Adam JM, Liu W, Zang Y, Afzal MK, Bello SA, Muhammad AU, Wang C, Li J. 2023. Deep learning-based semantic segmentation of urban-scale 3d meshes in remote sensing: a survey. Int J Appl Earth Obs Geoinf. 121:103365. doi: 10.1016/j.jag.2023.103365.

- Ali-Dib M, Menou K, Jackson AP, Zhu C, Hammond N. 2020. Automated crater shape retrieval using weakly-supervised deep learning. Icarus. 345:113749. doi: 10.1016/j.icarus.2020.113749.

- Bachhofner S, Loghin A, Otepka J, Pfeifer N, Hornacek M, Siposova A, Schmidinger N, Hornik K, Schiller N, Kähler O. 2020. Generalized sparse convolutional neural networks for semantic segmentation of point clouds derived from tri-stereo satellite imagery. Remote Sens. 12(8):1289. doi: 10.3390/rs12081289.

- Benz UC, Hofmann P, Willhauck G, Lingenfelder I, Heynen M. 2004. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for gis-ready information. ISPRS J Photogramm Remote Sens. 58(3-4):239–258. doi: 10.1016/j.isprsjprs.2003.10.002.

- Blaschke T. 2010. Object based image analysis for remote sensing. ISPRS J Photogramm Remote Sens. 65(1):2–16. doi: 10.1016/j.isprsjprs.2009.06.004.

- Boulton SJ, Stokes M. 2018. Which DEM is best for analyzing fluvial landscape development in mountainous terrains? Geomorphology. 310:168–187. doi: 10.1016/j.geomorph.2018.03.002.

- Braga JR, Peripato V, Dalagnol R, Ferreira MP, Tarabalka Y, Aragão LE, de Campos Velho HF, Shiguemori EH, Wagner FH. 2020. Tree crown delineation algorithm based on a convolutional neural network. Remote Sens. 12(8):1288. doi: 10.3390/rs12081288.

- Che E, Jung J, Olsen MJ. 2019. Object recognition, segmentation, and classification of mobile laser scanning point clouds: a state of the art review. Sensors. 19(4):810. doi: 10.3390/s19040810.

- Chen Y, Fan R, Yang X, Wang J, Latif A. 2018. Extraction of urban water bodies from high-resolution remote-sensing imagery using deep learning. Water. 10(5):585. doi: 10.3390/w10050585.

- Chen Y, Tang L, Kan Z, Bilal M, Li Q. 2020. A novel water body extraction neural network (wbe-nn) for optical high-resolution multispectral imagery. J Hydrol. 588:125092. doi: 10.1016/j.jhydrol.2020.125092.

- Chen Z, Wang X, Xu Z. 2016. Convolutional neural network based DEM super resolution. Int Arch Photogramm Remote Sens Spat Inf Sci. 41:247–250.

- Cheng G, Han J, Lu X. 2017. Remote sensing image scene classification: benchmark and state of the art. Proc IEEE. 105(10):1865–1883. doi: 10.1109/JPROC.2017.2675998.

- Cheng G, Xie X, Han J, Guo L, Xia G. 2020. Remote sensing image scene classification meets deep learning: challenges, methods, benchmarks, and opportunities. IEEE J Sel Top Appl Earth Obs Remote Sens. 13:3735–3756. doi: 10.1109/JSTARS.2020.3005403.

- Clubb FJ, Mudd SM, Milodowski DT, Hurst MD, Slater LJ. 2014. Objective extraction of channel heads from high-resolution topographic data. Water Resour Res. 50(5):4283–4304. doi: 10.1002/2013WR015167.

- Cohen JP, Ding W. 2014. Crater detection via genetic search methods to reduce image features. Adv Space Res. 53(12):1768–1782. doi: 10.1016/j.asr.2013.05.010.

- Cohen JP, Liu S, Ding W. 2011. Genetically enhanced feature selection of discriminative planetary crater image features. AI 2011: Advances in Artificial Intelligence: 24th Australasian Joint Conference, Perth, Australia, December 5–8, 2011. Proceedings 24. Springer. p. 61–71.

- Cohen JP, Lo HZ, Lu T, Ding W. 2016. Crater detection via convolutional neural networks. arXiv Preprint arXiv:1601.00978.

- Creswell A, White T, Dumoulin V, Arulkumaran K, Sengupta B, Bharath AA. 2018. Generative adversarial networks: an overview. IEEE Signal Process Mag. 35(1):53–65. doi: 10.1109/MSP.2017.2765202.

- Dai J, Qi H, Xiong Y, Li Y, Zhang G, Hu H, Wei Y. 2017. Deformable convolutional networks. Proceedings of the IEEE International Conference on Computer Vision. p. 764–773.

- Demiray BZ, Sit M, Demir I. 2021. D-srgan: dem super-resolution with generative adversarial networks. SN Comput Sci. 2:1–11. doi: 10.1007/s42979-020-00442-2.

- Deng J, Dong W, Socher R, Li L, Li K, Fei-Fei L. 2009. Imagenet: a large-scale hierarchical image database. 2009 IEEE conference on computer vision and pattern recognition. IEEE. p. 248–255.

- Di K, Li W, Yue Z, Sun Y, Liu Y. 2014. A machine learning approach to crater detection from topographic data. Adv Space Res. 54(11):2419–2429. doi: 10.1016/j.asr.2014.08.018.

- Dong C, Loy CC, Tang X. 2016. Accelerating the super-resolution convolutional neural network. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part II 14. Springer. p. 391–407.

- Dong G, Chen F, Ren P. 2018. Filling srtm void data via conditional adversarial networks. IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium. IEEE. p. 7441–7443.

- Dong G, Huang W, Smith WA, Ren P. 2019. Filling voids in elevation models using a shadow-constrained convolutional neural network. IEEE Geosci Remote Sens Lett. 17(4):592–596. doi: 10.1109/LGRS.2019.2926530.

- Dong G, Huang W, Smith WA, Ren P. 2020. A shadow constrained conditional generative adversarial net for SRTM data restoration. Remote Sens Environ. 237:111602. doi: 10.1016/j.rse.2019.111602.

- Drăguţ L, Blaschke T. 2006. Automated classification of landform elements using object-based image analysis. Geomorphology. 81(3–4):330–344. doi: 10.1016/j.geomorph.2006.04.013.

- Duan L, Hu X. 2019. Multiscale refinement network for water-body segmentation in high-resolution satellite imagery. IEEE Geosci Remote Sens Lett. 17(4):686–690. doi: 10.1109/LGRS.2019.2926412.

- Ehsani AH, Quiel F. 2008. Geomorphometric feature analysis using morphometric parameterization and artificial neural networks. Geomorphology. 99(1–4):1–12. doi: 10.1016/j.geomorph.2007.10.002.

- Emami E, Bebis G, Nefian A, Fong T. 2015. Automatic crater detection using convex grouping and convolutional neural networks. Advances in Visual Computing: 11th International Symposium, ISVC 2015, Las Vegas, NV, USA, December 14–16, 2015, Proceedings, Part II 11. Springer. p. 213–224.

- Farr TG, Rosen PA, Caro E, Crippen R, Duren R, Hensley S, Kobrick M, Paller M, Rodriguez E, Roth L. 2007. The shuttle radar topography mission. Rev Geophys. 45(2):1–33. doi: 10.1029/2005RG000183.

- Feng W, Sui H, Huang W, Xu C, An K. 2018. Water body extraction from very high-resolution remote sensing imagery using deep U-Net and a superpixel-based conditional random field model. IEEE Geosci Remote Sens Lett. 16(4):618–622. doi: 10.1109/LGRS.2018.2879492.

- Ganerød AJ, Bakkestuen V, Calovi M, Fredin O, Rød JK. 2023. Where are the outcrops? Automatic delineation of bedrock from sediments using deep-learning techniques. Appl Comput Geosci. 18:100119. doi: 10.1016/j.acags.2023.100119.

- Gavriil K, Muntingh G, Barrowclough OJ. 2019. Void filling of digital elevation models with deep generative models. IEEE Geosci Remote Sens Lett. 16(10):1645–1649. doi: 10.1109/LGRS.2019.2902222.

- Gebrehiwot A, Hashemi-Beni L, Thompson G, Kordjamshidi P, Langan TE. 2019. Deep convolutional neural network for flood extent mapping using unmanned aerial vehicles data. Sensors. 19(7):1486. doi: 10.3390/s19071486.

- Girshick R. 2015. Fast R-CNN. Proceedings of the IEEE International Conference on Computer Vision. p. 1440–1448.

- Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. 2020. Generative adversarial networks. Commun ACM. 63(11):139–144. doi: 10.1145/3422622.

- Grilli E, Özdemir E, Remondino F. 2019. Application of machine and deep learning strategies for the classification of heritage point clouds. Int Arch Photogramm Remote Sens Spat Inf Sci. 42:447–454.

- Hall O, Falorni G, Bras RL. 2005. Characterization and quantification of data voids in the shuttle radar topography mission data. IEEE Geosci Remote Sens Lett. 2(2):177–181. doi: 10.1109/LGRS.2004.842447.

- Han K, Xiao A, Wu E, Guo J, Xu C, Wang Y. 2021. Transformer in transformer. Adv Neural Inf Process Syst. 34:15908–15919.

- Han X, Ma X, Li H, Chen Z. 2023. A global-information-constrained deep learning network for digital elevation model super-resolution. Remote Sens. 15(2):305. doi: 10.3390/rs15020305.

- Hashimoto S, Mori K. 2019. Lunar crater detection based on grid partition using deep learning. 2019 IEEE 13th International Symposium on Applied Computational Intelligence and Informatics (SACI). IEEE. p. 75–80. doi: 10.1109/SACI46893.2019.9111474.

- Hay GJ, Castilla G, Wulder MA, Ruiz JR. 2005. An automated object-based approach for the multiscale image segmentation of forest scenes. Int J Appl Earth Obs Geoinf. 7(4):339–359. doi: 10.1016/j.jag.2005.06.005.

- He K, Gkioxari G, Dollár P, Girshick R. 2017. Mask R-CNN. Proceedings of the IEEE International Conference on Computer Vision. p. 2961–2969.

- He P, Cheng Y, Qi M, Cao Z, Zhang H, Ma S, Yao S, Wang Q. 2022. Super-resolution of digital elevation model with local implicit function representation. 2022 International Conference on Machine Learning and Intelligent Systems Engineering (MLISE). IEEE. p. 111–116. doi: 10.1109/MLISE57402.2022.00030.

- Heung B, Ho HC, Zhang J, Knudby A, Bulmer CE, Schmidt MG. 2016. An overview and comparison of machine-learning techniques for classification purposes in digital soil mapping. Geoderma. 265:62–77. doi: 10.1016/j.geoderma.2015.11.014.

- Hillier JK, Smith M. 2008. Residual relief separation: digital elevation model enhancement for geomorphological mapping. Earth Surf Processes Landforms. 33(14):2266–2276. doi: 10.1002/esp.1659.

- Hu X, Yuan Y. 2016. Deep-learning-based classification for DTM extraction from ALS point cloud. Remote Sens. 8(9):730. doi: 10.3390/rs8090730.

- Huang J, Rathod V, Sun C, Zhu M, Korattikara A, Fathi A, Fischer I, Wojna Z, Song Y, Guadarrama S. 2017. Speed/accuracy trade-offs for modern convolutional object detectors. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. p. 7310–7311.

- Hui J, Du M, Ye X, Qin Q, Sui J. 2019. Effective building extraction from high-resolution remote sensing images with multitask driven deep neural network. IEEE Geosci Remote Sens Lett. 16(5):786–790. doi: 10.1109/LGRS.2018.2880986.

- Iizuka S, Simo-Serra E, Ishikawa H. 2017. Globally and locally consistent image completion. ACM Trans Graph. 36(4):1–14. doi: 10.1145/3072959.3073659.

- Jenny B. 2001. An interactive approach to analytical relief shading. Cartogr: Int J Geogr Inf Geovisualization. 38(1–2):67–75. doi: 10.3138/F722-0825-3142-HW05.

- Jenny B, Heitzler M, Singh D, Farmakis-Serebryakova M, Liu JC, Hurni L. 2021. Cartographic relief shading with neural networks. IEEE Trans Vis Comput Graph. 27(2):1225–1235. doi: 10.1109/TVCG.2020.3030456.

- Jhaldiyal A, Chaudhary N. 2023. Semantic segmentation of 3D LiDAR data using deep learning: a review of projection-based methods. Appl Intell. 53(6):6844–6855. doi: 10.1007/s10489-022-03930-5.

- Jia Y, Wan G, Liu L, Wang J, Wu Y, Xue N, Wang Y, Yang R. 2021. Split-attention networks with self-calibrated convolution for moon impact crater detection from multi-source data. Remote Sens. 13(16):3193. doi: 10.3390/rs13163193.

- Karkee M, Steward BL, Aziz SA. 2008. Improving quality of public domain digital elevation models through data fusion. Biosyst Eng. 101(3):293–305. doi: 10.1016/j.biosystemseng.2008.09.010.

- Kattenborn T, Leitloff J, Schiefer F, Hinz S. 2021. Review on convolutional neural networks (CNN) in vegetation remote sensing. ISPRS J Photogramm Remote Sens. 173:24–49. doi: 10.1016/j.isprsjprs.2020.12.010.

- Kemker R, Salvaggio C, Kanan C. 2018. Algorithms for semantic segmentation of multispectral remote sensing imagery using deep learning. ISPRS J Photogramm Remote Sens. 145:60–77. doi: 10.1016/j.isprsjprs.2018.04.014.

- Kitchenham BA, Charters S. 2007. Guidelines for performing systematic literature reviews in software engineering.

- Krizhevsky A, Sutskever I, Hinton GE. 2017. ImageNet classification with deep convolutional neural networks. Commun ACM. 60(6):84–90. doi: 10.1145/3065386.

- LeCun Y, Bengio Y, Hinton G. 2015. Deep learning. Nature. 521(7553):436–444. doi: 10.1038/nature14539.

- Ledig C, Theis L, Huszár F, Caballero J, Cunningham A, Acosta A, Aitken A, Tejani A, Totz J, Wang Z. 2017. Photo-realistic single image super-resolution using a generative adversarial network. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. p. 4681–4690.

- Li J, Heap AD. 2011. A review of comparative studies of spatial interpolation methods in environmental sciences: performance and impact factors. Ecol Inf. 6(3–4):228–241. doi: 10.1016/j.ecoinf.2010.12.003.

- Li S, Xiong L, Tang G, Strobl J. 2020. Deep learning-based approach for landform classification from integrated data sources of digital elevation model and imagery. Geomorphology. 354:107045. doi: 10.1016/j.geomorph.2020.107045.

- Li S, Hu G, Cheng X, Xiong L, Tang G, Strobl J. 2022a. Integrating topographic knowledge into deep learning for the void-filling of digital elevation models. Remote Sens Environ. 269:112818. doi: 10.1016/j.rse.2021.112818.

- Li S, Yin G, Ma J, Wen B, Zhou Z. 2022b. Generation method for shaded relief based on conditional generative adversarial nets. ISPRS Int J Geo-Inf. 11(7):374. doi: 10.3390/ijgi11070374.

- Li W, Hsu C. 2020. Automated terrain feature identification from remote sensing imagery: a deep learning approach. Int J Geogr Inf Sci. 34(4):637–660. doi: 10.1080/13658816.2018.1542697.

- Li W, Zhou B, Hsu C, Li Y, Ren F. 2017. Recognizing terrain features on terrestrial surface using a deep learning model: an example with crater detection. Proceedings of the 1st Workshop on Artificial Intelligence and Deep Learning for Geographic Knowledge Discovery. p. 33–36.

- Li X, Cheng X, Chen W, Chen G, Liu S. 2015. Identification of forested landslides using lidar data, object-based image analysis, and machine learning algorithms. Remote Sens. 7(8):9705–9726. doi: 10.3390/rs70809705.

- Li Y, Wu B, Ge X. 2019. Structural segmentation and classification of mobile laser scanning point clouds with large variations in point density. ISPRS J Photogramm Remote Sens. 153:151–165. doi: 10.1016/j.isprsjprs.2019.05.007.

- Lin P, Pan M, Wood EF, Yamazaki D, Allen GH. 2021. A new vector-based global river network dataset accounting for variable drainage density. Sci Data. 8(1):28. doi: 10.1038/s41597-021-00819-9.

- Lin T, Dollár P, Girshick R, He K, Hariharan B, Belongie S. 2017. Feature pyramid networks for object detection. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. p. 2117–2125.

- Lin X, Zhu Z, Yu X, Ji X, Luo T, Xi X, Zhu M, Liang Y. 2022. Lunar crater detection on digital elevation model: a complete workflow using deep learning and its application. Remote Sens. 14(3):621. doi: 10.3390/rs14030621.

- Ling F, Zhang QW, Wang C. 2007. Filling voids of SRTM with Landsat sensor imagery in rugged terrain. Int J Remote Sens. 28(2):465–471. doi: 10.1080/01431160601075509.

- Liu W, Anguelov D, Erhan D, Szegedy C, Reed S, Fu C, Berg AC. 2016. SSD: single shot multibox detector. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14. Springer. p. 21–37.

- Liu Y, Zhang Z, Zhong R, Chen D, Ke Y, Peethambaran J, Chen C, Sun L. 2018. Multilevel building detection framework in remote sensing images based on convolutional neural networks. IEEE J Sel Top Appl Earth Obs Remote Sens. 11(10):3688–3700. doi: 10.1109/JSTARS.2018.2866284.

- Llamas J, Lerones PM, Medina R, Zalama E, Gómez-García-Bermejo J. 2017. Classification of architectural heritage images using deep learning techniques. Appl Sci. 7(10):992. doi: 10.3390/app7100992.

- López J, Torres D, Santos S, Atzberger C. 2020. Spectral imagery tensor decomposition for semantic segmentation of remote sensing data through fully convolutional networks. Remote Sens. 12(3):517. doi: 10.3390/rs12030517.

- Ma L, Liu Y, Zhang X, Ye Y, Yin G, Johnson BA. 2019. Deep learning in remote sensing applications: a meta-analysis and review. ISPRS J Photogramm Remote Sens. 152:166–177. doi: 10.1016/j.isprsjprs.2019.04.015.

- Ma X, Li H, Chen Z. 2023. Feature enhanced deep learning network for digital elevation model super-resolution. IEEE J Sel Top Appl Earth Obs Remote Sens. 16:5670–5685. doi: 10.1109/JSTARS.2023.3288296.

- Macher H, Landes T, Grussenmeyer P. 2017. From point clouds to building information models: 3D semi-automatic reconstruction of indoors of existing buildings. Appl Sci. 7(10):1030. doi: 10.3390/app7101030.

- Marmanis D, Datcu M, Esch T, Stilla U. 2015. Deep learning earth observation classification using ImageNet pretrained networks. IEEE Geosci Remote Sens Lett. 13(1):105–109. doi: 10.1109/LGRS.2015.2499239.

- Maturana D, Scherer S. 2015. VoxNet: a 3D convolutional neural network for real-time object recognition. 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE. p. 922–928. doi: 10.1109/IROS.2015.7353481.

- Maxwell AE, Shobe CM. 2022. Land-surface parameters for spatial predictive mapping and modeling. Earth Sci Rev. 226:103944. doi: 10.1016/j.earscirev.2022.103944.

- Mesa-Mingorance JL, Ariza-López FJ. 2020. Accuracy assessment of digital elevation models (DEMs): a critical review of practices of the past three decades. Remote Sens. 12(16):2630. doi: 10.3390/rs12162630.

- Mi L, Chen Z. 2020. Superpixel-enhanced deep neural forest for remote sensing image semantic segmentation. ISPRS J Photogramm Remote Sens. 159:140–152. doi: 10.1016/j.isprsjprs.2019.11.006.

- Miao Z, Fu K, Sun H, Sun X, Yan M. 2018. Automatic water-body segmentation from high-resolution satellite images via deep networks. IEEE Geosci Remote Sens Lett. 15(4):602–606. doi: 10.1109/LGRS.2018.2794545.

- Milan DJ, Heritage GL, Large AR, Fuller IC. 2011. Filtering spatial error from DEMs: implications for morphological change estimation. Geomorphology. 125(1):160–171. doi: 10.1016/j.geomorph.2010.09.012.

- Minár J, Evans IS, Jenčo M. 2020. A comprehensive system of definitions of land surface (topographic) curvatures, with implications for their application in geoscience modelling and prediction. Earth Sci Rev. 211:103414. doi: 10.1016/j.earscirev.2020.103414.

- Mirza M, Osindero S. 2014. Conditional generative adversarial nets. arXiv Preprint arXiv:1411.1784.