Abstract

Although virtual reality (VR) wayfinding systems are available for people with visual impairment, few studies have examined how the choice of VR locomotion environment affects their acquisition of spatial information. To address this gap, we designed two VR-based wayfinding systems for people with visual impairment. We compared the efficacy of walk-in-place, in which the user moves through the virtual environment by walking on a treadmill in the real world, and overground walking, in which the user moves through the virtual environment by walking in a bounded real space free of walls and obstacles. Through user experiments with these two systems, we evaluated how people with visual impairment acquired spatial information about paths and obstacles. Our results showed that people with visual impairment memorized paths more accurately after wayfinding with walk-in-place than with overground walking. Conversely, they memorized the obstacles along the path more accurately after wayfinding with overground walking than with walk-in-place. Lastly, we discussed possible reasons for these differences between walk-in-place and overground walking.

1. Introduction

People with visual impairment tend to walk only familiar routes, for example always using the same subway exit (Alwi & Noh, 2013). Because walking on unfamiliar roads can be dangerous for them, it is desirable to train people with visual impairment in advance on spatial information such as the route and the locations of obstacles (Riazi et al., 2016). A common way for them to become familiar with a new route is to walk it outdoors with a wayfinding assistant or a guide dog, but this is neither always feasible nor completely safe. To overcome these limitations, many researchers have developed virtual reality (VR) wayfinding training systems that help people with visual impairment learn the spatial information of a new road through virtual simulation (Ricci, Boldini, Beheshti, et al., 2023; Ricci, Boldini, Ma, et al., 2023; Siu et al., 2020; Zhang et al., 2020; Zhao et al., 2018). Extending these studies, we developed two VR wayfinding systems and compared how effectively each supports the acquisition of spatial information by people with visual impairment.

Researchers have developed a variety of map systems that give people with visual impairment information about routes. For example, a Braille map system lets people with visual impairment learn directions tactilely through a unique Braille pattern (Ivanchev et al., 2014). Augmented reality map systems with a touchpad recognize the user's touch and provide spoken information about the touched location on the map (Albouys-Perrois et al., 2018; Brock et al., 2015; Wang et al., 2009). Another map system plays ambient sounds at the pointed-to location (Papadopoulos et al., 2017). However, these systems require people with visual impairment to transform the spatial information obtained from a 2D map and apply it to the 3D real world when they actually walk the road. Additionally, a 2D map does not convey details that become apparent only when actually walking the road, such as the locations of various obstacles.

In VR, the sense of space acquired during training can be applied intuitively when walking on a real road, and obstacles, which are difficult to show on a map, can be presented. There are several ways to move in VR, such as using a controller (Cirio et al., 2013) or trackball (Bozgeyikli et al., 2016), moving the arms (Usoh et al., 1999), or walking in place (Bozgeyikli et al., 2019). As the locomotion methods of our system, we implemented walk-in-place, which lets people move through an unbounded virtual space by sliding their feet within a small physical area, at the cost of a gait that differs from natural walking, and overground walking, which preserves the natural gait but confines movement to the size of the real physical space. Previous studies reported user opinions on these two locomotion methods (Kreimeier, Karg, et al., 2020; Kreimeier, Ullmann, et al., 2020), stating that walk-in-place was less intuitive and required more physical effort than overground walking, whereas overground walking was more realistic (Kreimeier, Karg, et al., 2020). However, it remains unclear whether walk-in-place and overground walking differ in how well people with visual impairment memorize spatial information. In this study, we investigated how effectively people with visual impairment acquired spatial information about routes and obstacles after performing a wayfinding task with walk-in-place and with overground walking.

The research questions for this study are as follows.

Research Question 1: When people with visual impairment memorize routes after a wayfinding task, does the accuracy of their memory differ between walk-in-place and overground walking?

Research Question 2: When people with visual impairment memorize the obstacles encountered on a route after a wayfinding task, does the accuracy of their memory differ between walk-in-place and overground walking?

To answer the first research question, we conducted a first experiment in which people with visual impairment performed wayfinding on five routes, either by walking in place on the VR treadmill or by overground walking with VR trackers. After walking each route, they identified it by touching four 3D-printed route blocks and choosing the one that matched. To answer the second research question, we conducted a second experiment in which people with visual impairment performed wayfinding on a route with five obstacles, again either by walking in place on the VR treadmill or by overground walking with VR trackers. Afterward, they marked the locations of the five obstacles they had encountered by placing bricks on a Lego™ block representing the route they had walked.

In this study, we conducted experiments with two gait methods in a virtual environment, walk-in-place and overground walking, and studied how the gait method affected the ability of people with visual impairment to memorize routes and the obstacles on them. The results showed that people with visual impairment memorized route information more accurately with walk-in-place than with overground walking. Conversely, they remembered the locations of obstacles more accurately with overground walking than with walk-in-place. Based on these results, we discussed how the characteristics of each locomotion method affect the acquisition of spatial information by people with visual impairment.

2. Related studies

We reviewed related studies of VR wayfinding for people with visual impairment.

2.1. Wayfinding for people with visual impairment

A lack of spatial information forces people with visual impairment to move only along familiar paths during wayfinding (Andrade et al., 2021). It is therefore necessary to update their cognitive maps, that is, the mental images formed by gathering spatial information about the structure of the environment, the locations of objects, and the spatial relationships between those objects (May et al., 2020). A cognitive map also represents the distances and directions among landmarks, paths, and objects (Fazzi & Petersmeyer, 2001). To walk independently and confidently within an environment, people with visual impairment need a cognitive map of that particular environment (May et al., 2020). Therefore, it is necessary to make effective use of the visual, auditory, tactile, and kinesthetic senses to acquire spatial information.

Among these senses, hearing has been used in VR wayfinding systems for people with visual impairment (Siu et al., 2020; Zhao et al., 2018). Zhao et al. developed the Canetroller, which adds an auditory interface to the cane that people with visual impairment commonly use, for virtual wayfinding (Zhao et al., 2018). The Canetroller was designed to let users detect and interact with objects through spatial sound in VR and thereby understand their environment. Several studies also show that people with visual impairment can form a cognitive map from tactile information. In one study, people with visual impairment were able to recognize braille blocks on the road through the tactile sense transmitted by a white cane in VR, confirming that information learned in VR can be useful in actual wayfinding (Kim, 2020). Zhang et al. developed an MR cane that simultaneously provides auditory and tactile feedback whenever the virtual cane contacts a virtual object (Zhang et al., 2020).

In terms of the kinesthetic sense, wayfinding by people with visual impairment is closely related to the body's rotational ability (Ferrell, 2011). In efficient walking, the pelvis rotates as each leg steps forward so that the body continues to face forward. People with visual impairment often have poor body rotational ability (Ferrell, 2011) because vision cannot contribute to balancing the body (Gipsman, 1981). They therefore try to stabilize their bodies, consciously or unconsciously, in various ways (Gipsman, 1981). For example, some take shorter steps to reduce the time needed to rebalance the body between steps (Gipsman, 1981), and others drag their feet or lengthen their stride to increase the time their soles are in contact with the ground. These variations indicate that the walk-in-place approach with a VR treadmill and the overground walking approach with VR trackers should be compared from the perspective of spatial information acquisition. Kreimeier et al. compared walk-in-place and overground walking through the subjective evaluations of people with visual impairment (Kreimeier, Karg, et al., 2020). However, it is difficult to find a study that compares walk-in-place with overground walking in terms of memorizing the path and obstacles, which are important elements of a wayfinding task.

2.2. Gait of people with visual impairment

Because users walk alone in our proposed system, their walking differs in several ways from walking with a walking assistant or a guide dog. First, people with visual impairment walking alone often do not walk straight because of veering, that is, drifting away from the intended path when vision is restricted (Cornell & Heth, 2004; Guth & LaDuke, 1994, 1995). Veering is caused by imperceptible movement noise in an individual's gait (Kallie et al., 2007). Veering may distort memory of the route and obstacles because of the discrepancy between perceived and actual movement. One of the main ways to prevent veering is to walk with a guide dog or a walking assistant (Ahmetovic et al., 2019); when walking alone, veering can be overcome only when people detect their own heading from objects in the surrounding environment (Cornell & Heth, 2004). Second, people with visual impairment also need mobility monitoring, that is, attending to the gait itself in order to walk safely and efficiently. In a previous study, people with simulated visual impairment remembered fewer obstacles than people with normal vision because of mobility monitoring (Rand et al., 2015). One way to reduce this distraction is to navigate with a walking assistant.

These kinesthetic characteristics of gait are influenced by the VR locomotion method. For example, overground walking evokes less mobility monitoring than walk-in-place because it uses the same gait that people with visual impairment are used to (Kreimeier, Karg, et al., 2020). However, there is a lack of studies on how VR locomotion affects the acquisition of spatial information through these characteristics. This study therefore examines how walk-in-place and overground walking affect the acquisition of spatial information by people with visual impairment.

2.3. Spatial sound in VR wayfinding

Existing VR systems have mainly developed visually oriented display functions, making it difficult for people with visual impairment to access them (Creed et al., 2023; Sylaiou & Fidas, 2022). Researchers have therefore helped people with visual impairment recognize their spatial location in VR using the head-related transfer function (HRTF) (Kolarik et al., 2016; Zhang et al., 2023), a 3D audio technique used to deliver various kinds of spatial information in VR wayfinding systems for people with visual impairment. One such use of HRTF is an obstacle collision sound produced by a virtual white cane at the location where it collides with an obstacle (Siu et al., 2020; Zhao et al., 2018). Some studies used HRTF to implement echolocation, in which sounds are reflected differently depending on the surrounding virtual environment (Andrade et al., 2018; Wu et al., 2017). Echolocation helps people with visual impairment understand their surroundings by recognizing how sound reflections (echoes) vary with the physical characteristics of the environment (Kolarik et al., 2014). Others used HRTF to generate a sound at the destination that people with visual impairment should reach (Kreimeier & Götzelmann, 2019), or applied HRTF to the sound of cars passing along virtual streets so that people with visual impairment could recognize passing vehicles in a simulated crosswalk (Thevin et al., 2020).

Similar to previous studies (Andrade et al., 2018; Kreimeier & Götzelmann, 2019; Kreimeier, Karg, et al., 2020; Wu et al., 2017), we used HRTF to implement spatial obstacle sounds in our VR wayfinding system for people with visual impairment. However, unlike previous studies, which used HRTF for collision sounds (Siu et al., 2020; Zhao et al., 2018) or for a sound that only indicated the location of the destination (Kreimeier & Götzelmann, 2019; Kreimeier, Karg, et al., 2020), this study used HRTF to generate the sound of a moving virtual guide dog. The guide dog indicates the direction of the next step so that people with visual impairment can change direction in real time.

2.4. VR locomotion for people with visual impairment

Among the various locomotion methods in virtual environments, controller-based methods let people with visual impairment move in a specific direction and at a specific speed using devices such as keyboards (Connors et al., 2014; Maidenbaum et al., 2013), joysticks (Picinali et al., 2014; Sánchez et al., 2012), or a Nintendo Nunchuk (Lahav et al., 2018) instead of their legs. Teleport-based methods instead move the user to a location that the user points to (i.e., point & teleport). However, teleport-based locomotion relies heavily on visual information, which makes it difficult for people with visual impairment to use (Thevin et al., 2020). Instead, some VR systems apply motion-based locomotion methods, which let users move through virtual environments with their legs (Siu et al., 2020; Thevin et al., 2020; Zhao et al., 2018). Motion-based methods (e.g., walk-in-place (Lee & Hwang, 2019), redirected walking (Nescher et al., 2016), and overground walking (Kreimeier, Karg, et al., 2020)) detect the movement of the user's head or legs so that users can move in a virtual environment with the gait they are used to. To support these methods, the HTC Vive can calculate the user's steps from head movement (Lee & Hwang, 2019) or from VR trackers attached directly to the legs (Siu et al., 2020; Zhao et al., 2018). Alternatively, head movement can be tracked while walking in VR using a smartphone's camera and inertial sensors with Google's ARCore (Thevin et al., 2020). Recent studies also confirm that people with visual impairment can walk in place on a VR treadmill (Kreimeier & Götzelmann, 2019; Kreimeier, Karg, et al., 2020). Kreimeier et al. tested four VR locomotion devices (Virtuix Omni, Virtualizer, Vive tracker, and joystick) with people with visual impairment (Kreimeier, Karg, et al., 2020). The Vive tracker was attached to the leg so that participants could move in VR as they would walk in the real world, whereas Virtuix Omni and Virtualizer are VR treadmills for walk-in-place. Participants reported that Virtuix Omni, which supported walk-in-place, felt faster and safer than the Vive tracker, which supported overground walking. However, that study only reported what participants felt when using each locomotion method, and it remains unknown how each method influenced their memory of the route. In our study, we used two locomotion methods (walk-in-place and overground walking) with two locomotion devices (Virtuix Omni and Vive tracker) for a wayfinding task in virtual environments. This allowed us to obtain an objective evaluation of wayfinding as well as the participants' subjective evaluations of each locomotion method.

3. Implementation

We designed a VR wayfinding system for people with visual impairment using VR locomotion and HRTF. We built two VR systems: one using a VR treadmill for walk-in-place (Virtuix Omni) and one using VR trackers for overground walking (Vive tracker). In both locomotion environments, all sounds were spatialized with HRTF so that the sound of a virtual guide dog could lead people with visual impairment in the right direction.

3.1. Apparatus

In the walk-in-place environment, participants walk in place on the Virtuix Omni treadmill (Virtuix, 2022). In the overground walking environment, participants walk on the floor while wearing Vive trackers (Vive, 2022). In both environments, a Vive Pro™ head-mounted display (HMD) tracked the participants' head movements. Although people with visual impairment have difficulty seeing the HMD display, they still wore the HMD so that HRTF rendering could use the position and orientation of their head, and hence their ears. A VR controller served as a virtual white cane for detecting virtual obstacles, and it vibrated when the virtual white cane hit a virtual object such as a wall or an obstacle.

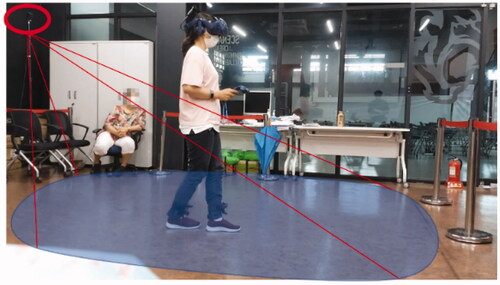

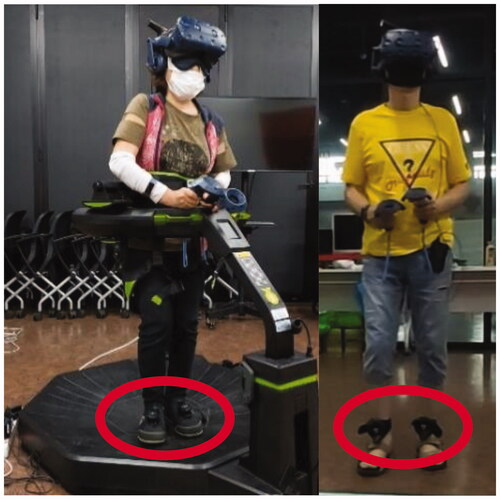

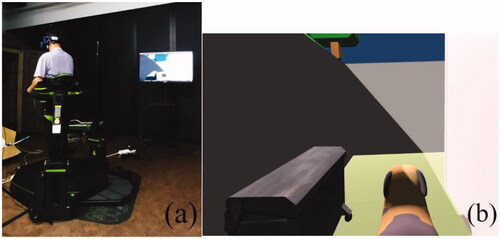

The left side of Figure 1 shows the completed walk-in-place environment. For this environment, we used the Virtuix Omni as the VR treadmill. Various VR treadmills exist, such as the Kat Walk Mini S (KATVR, 2021), Infinadeck (INFINADECK, 2020), Cyberith Virtualizer (Cyberith, 2021), and SpaceWalkerVR (Keles, 2015). We chose the Virtuix Omni because it holds the body with a soft belt around the waist and a height-adjustable ring, which prevents users from falling or stepping off the treadmill. The Virtuix Omni combines low-friction Omni shoes with a smooth-surfaced Omni body to make walking in place easy (i.e., stepping without actual forward movement). Tracking pods, which are inertial measurement unit (IMU) devices comprising a gyroscope, an accelerometer, and a geomagnetic sensor, were attached to the Omni shoes to track foot movements. These sensors estimate the speed and direction of the participants' feet from the measured acceleration and rotation.

Figure 1. The Virtuix Omni and HTC Vive tracker used in the experiments. The red circles on the left and right indicate the IMU sensor used for Virtuix Omni and the HTC Vive tracker.

The right side of Figure 1, together with the accompanying figure, shows the completed overground walking environment. The overground walking environment was built with Vive trackers as the VR trackers. Two Vive trackers were strapped to the user's ankles to track the movements of both feet, and Vive base stations installed at both ends of the floor space tracked the trackers. With this setup, the overground walking system could track the participant's gait in real space. To minimize the risk of falls, a 5 m × 5 m physical safety zone was set up, within which participants moved in a 3 m × 4 m walking area.

3.2. 3D spatial audio system

To implement the 3D spatial audio system, we used Google Resonance Audio. This software development kit (SDK) simulates how sound propagates in the real world, giving the user the impression of hearing a sound from a specific location (Google, 2022). We applied Resonance Audio to the sounds of all virtual objects in our wayfinding system so that participants heard spatial sounds. The system included the sound of the guide dog, the sounds of the white cane interacting with walls, the floor, and obstacles, and the sounds of the avatar colliding with walls and obstacles. During wayfinding, the guide dog sound plays continuously until the participant arrives at the destination. Following Shi et al. (2019), the guide dog sound was implemented in C major in the third octave so that people with visual impairment would find it comfortable. The sound is a monotone with a constant pitch, but HRTF varies its volume and its arrival time at each ear depending on the position of the guide dog. From the guide dog sound, participants could tell which direction to go. The sound of the white cane hitting the floor was recorded by tapping a short, simple wooden stick, and hitting a wall was signaled by three consecutive taps of the stick. The sound of the stick hitting an obstacle depended on the obstacle's material; we used virtual object sounds for seven materials: bush, cloth, glass, plastic, stone, iron, and wood. A collision between the participant's avatar and an object produced a cartoon-style bumping sound.
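The paragraph above notes that HRTF changes the level and the arrival time of the guide dog sound at each ear. Resonance Audio renders this with its own HRTF pipeline, which we do not reproduce here. As a rough illustration of the timing cue alone, the following minimal sketch computes the interaural time difference (ITD) with the classic Woodworth spherical-head approximation, ITD(θ) ≈ (a/c)(θ + sin θ). The head radius, speed of sound, Unity-like coordinate convention, and function names are our assumptions, not values from the study.

```python
import math

# Assumed constants for a spherical-head model (not values from the study).
HEAD_RADIUS_M = 0.0875    # typical adult head radius in metres
SPEED_OF_SOUND = 343.0    # m/s in air at about 20 °C


def azimuth(listener_xz, listener_yaw, source_xz):
    """Azimuth (radians) of a source relative to where the listener faces.

    Assumed Unity-like floor plane: +z is forward at yaw 0, +x is to the
    right, and yaw increases clockwise. Positive result = source on the
    right. The result is wrapped to [-pi, pi).
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    angle = math.atan2(dx, dz) - listener_yaw
    return (angle + math.pi) % (2 * math.pi) - math.pi


def woodworth_itd(az):
    """Interaural time difference in seconds (Woodworth approximation).

    Sources behind the head are folded onto the frontal hemisphere, since
    ITD alone cannot separate front from back. Positive = the sound
    reaches the right ear first.
    """
    lateral = abs(az)
    if lateral > math.pi / 2:
        lateral = math.pi - lateral
    itd = HEAD_RADIUS_M / SPEED_OF_SOUND * (lateral + math.sin(lateral))
    return math.copysign(itd, az)


# Hypothetical guide dog 1 m ahead and 1 m to the right of a participant facing +z.
az = azimuth((0.0, 0.0), 0.0, (1.0, 1.0))
print(f"azimuth={math.degrees(az):.1f} deg, ITD={woodworth_itd(az) * 1e6:.0f} us")
```

For a dog directly to one side the ITD reaches about 0.66 ms under these assumptions, and it shrinks to zero as the dog moves in front of the participant. A full HRTF additionally shapes the spectrum and the level difference between the ears, which this sketch ignores.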

3.3. Software

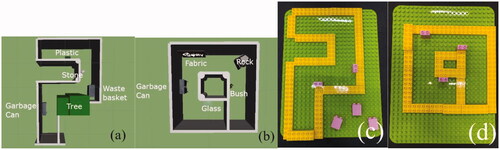

For the wayfinding application, we created various virtual routes that fit within a 3 m × 4 m space in VR. We used the Unity Omni SDK for the treadmill and SteamVR for the trackers. We attached the “Audio Listener” object to the virtual avatar so that spatial sound was rendered according to the location and direction of the HMD. To detect collisions between the virtual avatar and the virtual walls, we attached a collider component to the participant's virtual body. The guide dog moved toward the destination while keeping a distance of 0.5 m from the participant to avoid colliding with the avatar, and we restricted its movable area to a narrow strip so that it moved only along the center of the route. A total of 10 routes, each with two to three turns, were created as wayfinding tasks for Experiment 1. For Experiment 2, we chose two routes and added five obstacles. We added Unity collider components to all environment objects, such as floors, walls, and obstacles (e.g., bushes, stones, trees, glass, iron, plastic, and cloth), so that each produced its collision sound when tapped with the virtual white cane.
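The guide dog behavior described above (keeping 0.5 m from the participant while moving only along the center of the route) was implemented in Unity, and its code is not given. The following is a minimal, engine-agnostic sketch under our own assumptions: the route is a polyline of centerline waypoints on the floor plane, and the 0.5 m lead is measured along the route rather than in a straight line. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

LEAD_DISTANCE_M = 0.5  # distance kept between participant and guide dog (from the text)


def _project(p: Point, a: Point, b: Point) -> Tuple[float, Point]:
    """Project p onto segment a-b; return (t in [0, 1], closest point)."""
    vx, vy = b[0] - a[0], b[1] - a[1]
    seg_len2 = vx * vx + vy * vy
    if seg_len2 == 0.0:
        return 0.0, a
    t = ((p[0] - a[0]) * vx + (p[1] - a[1]) * vy) / seg_len2
    t = max(0.0, min(1.0, t))
    return t, (a[0] + t * vx, a[1] + t * vy)


def arc_length_of(p: Point, route: List[Point]) -> float:
    """Distance along the centerline to the route point closest to p."""
    best_dist, best_s, s = math.inf, 0.0, 0.0
    for a, b in zip(route, route[1:]):
        seg = math.dist(a, b)
        t, q = _project(p, a, b)
        d = math.dist(p, q)
        if d < best_dist:
            best_dist, best_s = d, s + t * seg
        s += seg
    return best_s


def point_at(s: float, route: List[Point]) -> Point:
    """Point on the centerline at arc length s, clamped to the destination."""
    for a, b in zip(route, route[1:]):
        seg = math.dist(a, b)
        if seg > 0 and s <= seg:
            f = s / seg
            return (a[0] + f * (b[0] - a[0]), a[1] + f * (b[1] - a[1]))
        s -= seg
    return route[-1]


def guide_dog_position(participant: Point, route: List[Point]) -> Point:
    """Place the dog LEAD_DISTANCE_M ahead of the participant along the route."""
    return point_at(arc_length_of(participant, route) + LEAD_DISTANCE_M, route)


# Hypothetical L-shaped route: 2 m forward, then 2 m to the right.
route = [(0.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
print(guide_dog_position((0.1, 1.8), route))  # dog has already turned the corner
```

Measuring the lead along the route keeps the dog on the centerline and makes it turn corners ahead of the participant, which is when its sound most needs to signal a change of direction. Near a corner, the straight-line distance can therefore be slightly less than 0.5 m.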

4. Experiments

We conducted two experiments to study how each locomotion method affected the acquisition of different kinds of spatial information. In the first, people with visual impairment performed a virtual wayfinding task and memorized the paths, using two locomotion methods, walk-in-place and overground walking. In the second, they performed a virtual wayfinding task and memorized the obstacles, using the same two locomotion methods.

4.1. Participants with visual impairment

A total of sixteen people with visual impairment were recruited. Eleven (V1 to V11) participated in both Experiments 1 and 2, and participants V12 to V16 participated only in Experiment 2. Each experiment was conducted after a tutorial sufficient to familiarize participants with the locomotion method. Participants were aged 41 to 79 years (M = 53.43). Four participants had previously experienced VR, and only one (V5) had used a VR treadmill before.

We also surveyed the navigation habits of the participants. Seven participants navigated routes with a wayfinding assistant. Three relied on their residual vision during navigation. One received remote assistance from family members through a smartphone camera that showed the surroundings. Seven used a white cane for navigation. One used the tactile sense of their feet to check their current position on a memorized route.

Regarding rehabilitation history, seven participants had been trained to use white canes. Seven participants had received blindness rehabilitation related to mobility, such as how to walk from one place to another, how to walk around a schoolyard, how to move during a fire emergency, and how to get help when lost.

All participants wore blindfolds during the experiments to control for differences in residual vision. Detailed information about all participants is shown in the accompanying table. The experiments were approved by the Institutional Review Board (IRB No. HYU-2019-08-001-1).

4.2. Experiment 1: Comparing walk-in-place and overground walking regarding the memory of the route

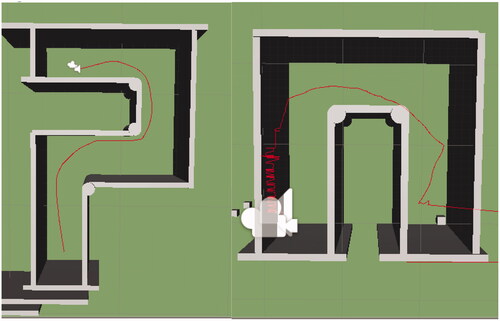

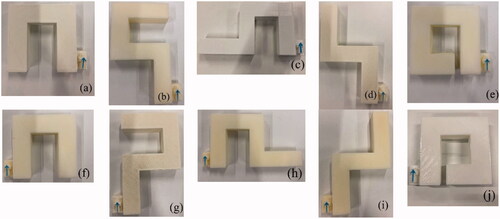

The goal of the first experiment was to determine which wayfinding environment, overground walking or walk-in-place, better helps people with visual impairment memorize the path taken. In this experiment, the virtual guide dog continuously indicated the direction along the path with spatial sound. We used this continuous updating of direction because it is difficult to navigate a route with sounds that indicate only the final destination (Kreimeier & Götzelmann, 2019). In Experiment 1, participants walked five paths in VR. After walking each path, participants selected, from four 3D-printed route blocks, the one that represented the path they had walked (see Figure 3). The research question for Experiment 1 was as follows.

Figure 3. The ten routes used in Experiment 1. Participants with visual impairment were presented with four 3D-printed route blocks and, by touching them, chose the one block that showed the route they had walked during the experiment. Because the same path can be read differently depending on the direction the participant initially faces, an arrow at the starting point indicates the initial direction.

RQ 1: When people with visual impairment memorize routes after a wayfinding task, does the accuracy of their memory differ between walk-in-place and overground walking?

4.2.1. Experiment 1 setting

The basic experimental setting was the same as described in Section 3. We used an additional monitor to continuously check the participants' location in the VR environment (see Figure 4a). The monitor showed the same view as the HMD, but participants could not see it because they were blindfolded (see Figure 4b). To report their memory of the route they had walked, each path was 3D-printed so that participants could identify the path they had taken by touch (Figure 3). Each of the ten routes used in Experiment 1 comprised two to three left or right turns.

Figure 4. (a) The experimental apparatus in the walk-in-place environment. The monitor shows the surroundings in the direction the participant's head is facing so that the researcher can check the current status. (b) The scene on the monitor. Participants cannot see this scene because they are blindfolded.

4.2.2. Experimental procedure and the collected data

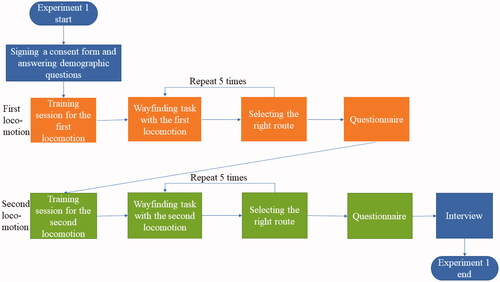

Each participant signed a consent form and answered demographic questions, such as age and gender. All participants wore eye patches on both eyes to control for errors and variation caused by different levels of visual impairment. Before the main experiment, participants practiced for 20 min to become accustomed to each locomotion method. Participants then performed the wayfinding task on five paths, walking each given path with the guide dog. After the wayfinding task on each path, participants were presented with four 3D-printed blocks showing different paths and chose the one that represented the route they had walked. To minimize learning effects, the order of the paths was determined by a Latin square, and the order of the two locomotion methods, walk-in-place and overground walking, was counterbalanced. After each wayfinding task, participants answered the questionnaire. After all tasks were finished, researchers interviewed participants about what they felt during the experiment. Figure 5 shows a flowchart of Experiment 1.
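The procedure states that path order followed a Latin square and that locomotion order was counterbalanced, but the exact construction is not reported. A minimal sketch, assuming a cyclic Latin square of order 5 for the paths and simple alternation of which locomotion method comes first; the function names and the participant-to-row mapping are hypothetical.

```python
from typing import List, Sequence, Tuple

METHODS = ("walk-in-place", "overground walking")


def cyclic_latin_square(n: int) -> List[List[int]]:
    """n x n Latin square: row r is 0..n-1 rotated left by r.

    Every index appears once in each row and once in each column, so across
    n participants each path occupies each serial position exactly once.
    """
    return [[(r + c) % n for c in range(n)] for r in range(n)]


def path_order(participant: int, paths: Sequence[str]) -> List[str]:
    """Hypothetical assignment: participant k uses row k mod n of the square."""
    square = cyclic_latin_square(len(paths))
    return [paths[i] for i in square[participant % len(paths)]]


def method_order(participant: int) -> Tuple[str, str]:
    """Counterbalance method order by alternating it between participants."""
    return METHODS if participant % 2 == 0 else METHODS[::-1]


for k in range(3):
    print(k, method_order(k), path_order(k, ["P1", "P2", "P3", "P4", "P5"]))
```

A cyclic square balances serial position but not which path immediately follows which. If carryover between consecutive paths were a concern, a Williams design would balance first-order carryover; for an odd number of paths such as five, it needs 2n = 10 rows.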

Figure 5. Experiment Flow of Experiment 1 (orange: first locomotion device experiment, green: second locomotion device experiment).

The correct answer rate, calculated from the five route-selection questions answered after walking the routes with each locomotion method, was used to determine how well participants remembered the routes they had walked in VR. Completion time was the time elapsed while walking a route from start to end. We also recorded the number of collisions with virtual walls. Lastly, the number of errors was the number of times participants faced the wrong direction, and the error time was the time spent facing the wrong direction.
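The paper does not say how "facing the wrong direction" was decided from the logged data. The following minimal sketch shows one way to derive the number of errors and the error time, assuming a time-sorted log of heading errors (the absolute angle between where the participant faces and the direction of the route) and a hypothetical 90° threshold. A new error is counted each time the participant enters a wrong-direction episode. All names and the threshold are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

# Hypothetical threshold; the study does not report how a wrong direction was defined.
WRONG_DIRECTION_DEG = 90.0


def error_metrics(samples: Sequence[Tuple[float, float]],
                  threshold_deg: float = WRONG_DIRECTION_DEG) -> Tuple[int, float]:
    """Return (number of wrong-direction episodes, total time in them, seconds).

    samples: (timestamp_s, heading_error_deg) pairs sorted by time. Each
    sample's state is assumed to hold until the next sample arrives.
    """
    errors, error_time = 0, 0.0
    wrong_prev = False
    for (t0, err0), (t1, _) in zip(samples, samples[1:]):
        wrong = abs(err0) > threshold_deg
        if wrong and not wrong_prev:
            errors += 1                  # a new wrong-direction episode begins
        if wrong:
            error_time += t1 - t0
        wrong_prev = wrong
    # The final sample has no following interval, but it may still start an episode.
    if samples and abs(samples[-1][1]) > threshold_deg and not wrong_prev:
        errors += 1
    return errors, error_time


def correct_answer_rate(answers: List[bool]) -> float:
    """Share of route-selection questions answered correctly."""
    return sum(answers) / len(answers)


log = [(0.0, 5.0), (1.0, 120.0), (2.0, 130.0), (3.0, 10.0), (4.0, 100.0), (5.0, 0.0)]
print(error_metrics(log), correct_answer_rate([True, True, False, True, False]))
```

Counting episodes rather than samples means that one sustained wrong turn counts as a single error regardless of how often the headset reports it, while the error time still reflects its duration.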

We also administered a questionnaire of 30 questions in 9 categories. Wayfinding (W) questions asked whether the wayfinding system was generally useful (Darken & Sibert, 1996; Kalawsky, 1999; Wickens & Baker, 1995; Woodrow & Thomas, 1995). Mobility (M) questions asked whether the locomotion method was easy to use (Kalawsky, 1999; Slater et al., 1995). White cane (C) questions asked whether it was easy to find obstacles with the controller (Kalawsky, 1999; Kontarinis & Howe, 1995; Shneiderman & Plaisant, 2010). Audio (A) questions asked whether the collision sounds and the spatialized guide dog sound were spatially informative (Gabbard, 1997; Richard et al., 1996; Woodrow & Thomas, 1995). Presence (P) (Kalawsky, 1999; Witmer & Singer, 1998), ease (E) (Gabbard, 1997; Kennedy et al., 1993; Zhai et al., 1996), distress (D) (Kennedy et al., 1993), and chain reaction (R) (Kennedy et al., 1993) questions asked about the overall usefulness of the VR system from various perspectives. Finally, satisfaction (S) questions asked whether the wayfinding task was successful. All items were answered on a 5-point Likert scale, and each item is listed in the accompanying table. Additionally, trajectory data showing how participants walked in the virtual environment were recorded.

4.2.3. Experiment 1 results

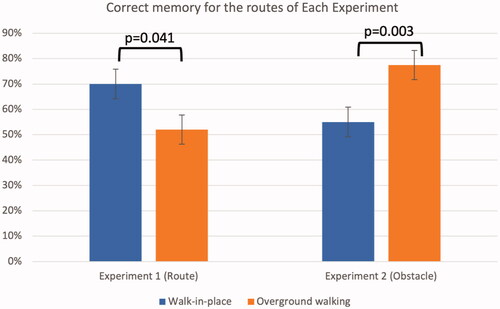

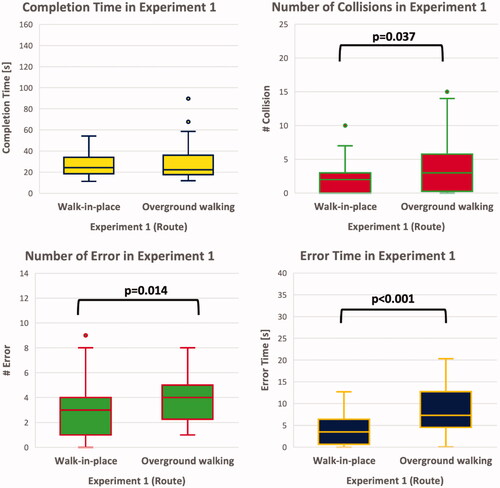

We collected data for Experiment 1 from eleven participants. A within-subject design was used in which each participant experienced both locomotion methods, so participants were measured repeatedly over five paths with both methods. Because the variables were discrete, the Wilcoxon signed-rank test was used to examine differences between walk-in-place and overground walking. The correct memory rate for the route is shown on the left side of Figure 6, whereas Figure 7 shows the remaining results from Experiment 1.

Figure 6. Correct memory in Experiments 1 and 2 for the two locomotion methods. Walk-in-place yielded significantly better route memorization than overground walking. Conversely, overground walking yielded significantly better obstacle memorization than walk-in-place.

Figure 7. Results of Experiment 1 for the two locomotion methods. (b, c) The numbers of collisions and errors differ significantly (p < 0.05). (d) The error times differ significantly (p < 0.001).

The rate of correct memory differed significantly between overground walking and walk-in-place (Z = −2.041, p = 0.041), with higher memorization accuracy for walk-in-place (M = 71%) than for overground walking (M = 53%). Among the other measures, the number of collisions was significantly lower for walk-in-place (M = 2.18) than for overground walking (M = 3.48; Z = −2.097, p = 0.036). The number of errors was also significantly lower for walk-in-place (M = 2.80) than for overground walking (M = 3.74; Z = −2.469, p = 0.014), and the error time was significantly shorter for walk-in-place (M = 3.83) than for overground walking (M = 8.64; Z = −4.790, p < 0.001). The questionnaire results showed no significant differences between the two walking methods.
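The paired comparisons above rely on the Wilcoxon signed-rank test. The Python sketch below shows the computation with the usual normal approximation and tie correction, assuming one paired value per participant and locomotion method. It is an illustration, not the statistics software used in the study, and the sign convention for Z differs between packages: here Z is computed from the smaller of the two rank sums, so it is never positive, matching the negative Z values reported above.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(a: Sequence[float],
                         b: Sequence[float]) -> Tuple[float, float, float, float]:
    """Paired Wilcoxon signed-rank test with a normal approximation.

    Returns (W+, W-, Z, two-sided p). Zero differences are dropped and the
    variance includes the usual correction for tied |differences|.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24
    # Tie correction: subtract (t^3 - t) / 48 for each group of t tied ranks.
    counts = {}
    for r in ranks:
        counts[r] = counts.get(r, 0) + 1
    var -= sum(t ** 3 - t for t in counts.values()) / 48
    z = (min(w_plus, w_minus) - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return w_plus, w_minus, z, p
```

With small samples such as the eleven participants here, an exact null distribution is often preferred over the normal approximation; library routines such as SciPy's `scipy.stats.wilcoxon` offer both.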

Additionally, to check for a trade-off between speed and accuracy, we computed the Spearman correlation between the average speed and the correct answer rate for each walking method. Each participant's average speed was the mean of their speeds across the five paths, where the speed on each path was the path length divided by the completion time. There was no significant negative correlation between average speed and correct answer rate in Experiment 1 for either walk-in-place (rs(9) = −0.269, p = 0.212) or overground walking (rs(9) = −0.229, p = 0.249).
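The speed and correlation computations described above can be sketched as follows. This is a minimal illustration assuming path lengths and completion times are available per path (units are the reader's choice, e.g., metres and seconds); the function names are hypothetical. Spearman's rho is computed as the Pearson correlation of tie-averaged ranks, and the degrees of freedom reported as rs(n − 2) correspond to n participants. P-values are omitted here; a library routine such as `scipy.stats.spearmanr` returns them directly.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mean_speed(path_lengths: Sequence[float], completion_times: Sequence[float]) -> float:
    """Average of per-path speeds, each being path length / completion time."""
    speeds = [length / t for length, t in zip(path_lengths, completion_times)]
    return sum(speeds) / len(speeds)


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least two values")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)
```

In the study's setting, `x` would hold one average speed per participant and `y` the matching correct answer rates; a negative rho would indicate that faster walkers remembered less.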

One interesting finding from the line data recorded during Experiment 1 was that V6 repeatedly attempted to pass through walls during overground walking, indicating that people with visual impairment intended to walk in a straight line but actually walked along a curved path. This phenomenon is referred to as veering, a gap between perceived and actual walking direction caused by imperceptible movement noise (Kallie et al., 2007). Conversely, V6 did not attempt to pass through a wall during walk-in-place. This can be interpreted as an absence of veering in walk-in-place, because the participant's orientation was fixed by a soft belt around the waist and the height-adjustable ring of the Virtuix Omni. shows how V6 walked with walk-in-place and overground walking in Experiment 1.

4.3. Experiment 2: Comparing walk-in-place and overground walking regarding the memory of the obstacles

The goal of the second experiment was to determine whether overground walking or walk-in-place better supports memorizing obstacles on the route during a wayfinding task for people with visual impairment. Unexpected obstacles are a constant risk for people with visual impairment, and collisions can lead to injury. Therefore, many people with visual impairment use a white cane to check for obstacles in front of them, and many researchers have developed virtual white canes (Siu et al., 2020; Zhang et al., 2020; Zhao et al., 2018). When people with visual impairment detect an obstacle with a white cane, they learn not only that the obstacle is present but also its material and location. In our system, a VR white cane conveys the position and material of an obstacle through vibration and spatial sound. In Experiment 2, we examined how well participants could remember the detected obstacles after wayfinding with each of the two locomotion methods. The research question for Experiment 2 was as follows.

RQ 2: When people with visual impairment memorize the obstacles encountered on the route during wayfinding, does the accuracy of their memory differ between walk-in-place and overground walking?

4.3.1. Experiment 2 setting

Experiment 2 used the same systems as Experiment 1, but only two of the Experiment 1 routes were used, and five virtual obstacles were added to each of them. The placement and types of the added obstacles are shown in the two images on the left in . After the wayfinding task, participants indicated the obstacles they had perceived by placing Lego™ blocks, as shown in the two images on the right in .

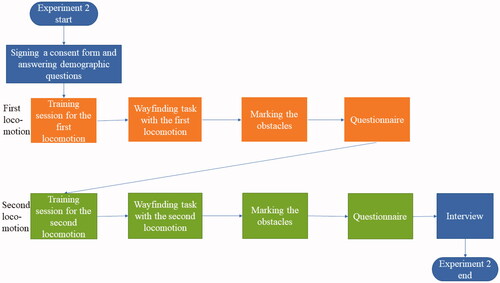

4.3.2. Experimental procedures and collected data

Each participant signed the consent form and wore eye patches over both eyes to control for differences in residual vision among the participants. Before the main experiment, participants completed a 20-min practice session to familiarize themselves with the environment and the obstacles in VR wayfinding. During the experiment, each participant walked one path with walk-in-place and one path with overground walking. The order of the locomotion methods and the path assigned to each method were counterbalanced using a Latin square. The main task was to memorize the positions of the five obstacles. After wayfinding, participants placed blocks on a replica of the route to indicate the locations of the obstacles they had identified. We collected data on how accurately participants positioned the blocks on both routes. Participants answered the questionnaire after all blocks were placed, and the researchers then interviewed them about their experience during the experiment. Figure 10 shows the flow of Experiment 2.

Figure 10. Experiment Flow of Experiment 2 (orange: first locomotion device experiment, green: second locomotion device experiment).

Data collection was the same as in Experiment 1, except that correct memory was assessed by checking whether the position of each detected obstacle was correct. An answer was considered correct only if the participant detected the obstacle and placed a block at its correct location, or did not detect the obstacle and did not place a block for it. Conversely, an answer was considered incorrect when a block was placed at an obstacle's location although the obstacle had not been detected, or when the obstacle was detected but its block was placed at the wrong location.
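Because the scoring rule above has several cases, it can help to state it as a predicate. The sketch below encodes one reading of that rule (a detected obstacle must be marked at the correct location; an undetected obstacle must not be marked at all); the function names are hypothetical. The rate helper reproduces the arithmetic reported in the Experiment 2 results, where each locomotion method had 16 participants × 5 obstacles = 80 judged answers.

```python
from typing import Iterable


def score_obstacle(detected: bool, placed: bool, location_correct: bool) -> bool:
    """Return True if the answer for one obstacle counts as correct.

    Assumed reading of the rule: a detected obstacle must be marked at the
    correct location; an undetected obstacle must not be marked at all.
    """
    if detected:
        return placed and location_correct
    return not placed


def correct_rate(scores: Iterable[bool]) -> float:
    """Share of answers judged correct."""
    scores = list(scores)
    return sum(scores) / len(scores)


# 16 participants x 5 obstacles = 80 judged answers per locomotion method
print(correct_rate([True] * 62 + [False] * 18))  # 0.775
print(correct_rate([True] * 44 + [False] * 36))  # 0.55
```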

4.3.3. Experiment 2 results

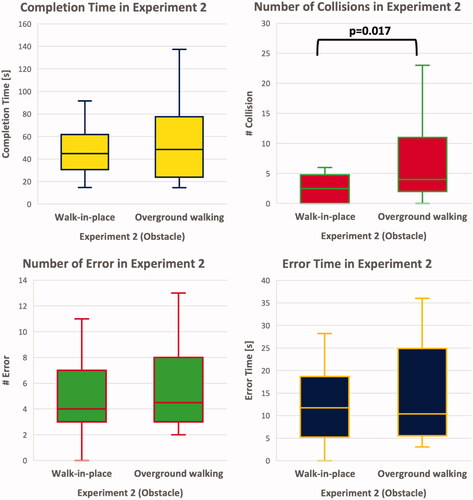

We conducted the within-subject study with sixteen participants (V1–V16). Because the variables were discrete, the Wilcoxon signed-rank test was used to examine differences between walk-in-place and overground walking. To assess obstacle memorization, five researchers independently judged, for all 16 participants, whether the blocks had been placed at the correct obstacle locations (See ). Fleiss' kappa indicated strong agreement among the five raters (κ = .83, p < 0.001). Participants memorized the positions of obstacles on the route more accurately with overground walking than with walk-in-place. The results for correct memory of the obstacles are shown on the right side of Figure 6, and the remaining results of Experiment 2 are shown in Figure 11.
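Fleiss' kappa, used above to check agreement among the five raters, compares the observed share of agreeing rater pairs with the agreement expected from the overall category proportions. The sketch below assumes each row of the table is one rated item (for instance, one participant's answer for one obstacle) and each column a category (for instance, correct and incorrect); this layout is an assumption for illustration, not the study's actual data file. It returns only kappa, not the p-value reported above.

```python
from typing import Sequence


def fleiss_kappa(table: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for N items, each rated by the same number of raters.

    table[i][j] = number of raters who assigned item i to category j.
    """
    n_items = len(table)
    n_raters = sum(table[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in table):
        raise ValueError("every item needs the same number (>= 2) of raters")
    n_cats = len(table[0])
    # Overall proportion of assignments falling in each category.
    p = [sum(row[j] for row in table) / (n_items * n_raters) for j in range(n_cats)]
    # Per-item agreement: share of rater pairs that agree on item i.
    p_i = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
           for row in table]
    p_bar = sum(p_i) / n_items
    p_e = sum(pj * pj for pj in p)  # agreement expected by chance
    if p_e == 1:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical ratings: 4 items, 5 raters, columns = [correct, incorrect]
print(fleiss_kappa([[5, 0], [4, 1], [0, 5], [5, 0]]))
```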

Figure 11. Results of Experiment 2 for the two locomotion methods. (b) The number of collisions differs significantly (p < 0.05).

All sixteen participants walked two different paths, each with five obstacles, one using walk-in-place and the other using overground walking. Therefore, each environment had a maximum of 80 correctly recognized obstacles (16 participants × 5 obstacles). Participants correctly recognized 44 obstacles with walk-in-place and 62 obstacles with overground walking. A Wilcoxon signed-rank test indicated a significant difference in the number of correctly recognized obstacles between walk-in-place and overground walking (Z = −3, p = 0.003). The rate of correctly recognized obstacles was higher for overground walking (62/80 = 77.50%) than for walk-in-place (44/80 = 55%). Although the number of collisions was significantly lower for walk-in-place (M = 2.63) than for overground walking (M = 6.81; Z = −2.219, p = 0.026), the other measurements showed no significant differences between the two environments. Thus, overground walking allowed people with visual impairment to remember obstacles better than walk-in-place. The questionnaire results showed a significant difference only for question E2 (“Fatigue did not increase with time while I was using the device”): walk-in-place (M = 4.25, SE = 0.250) caused more fatigue than overground walking (M = 4.69, SE = 0.120) (Z = −2.070, p = 0.038). There were no significant differences for the remaining questions.

Moreover, to check for a trade-off between speed and accuracy, we computed the Spearman correlation between average speed and correct answer rate for each walking method. Each participant's average speed was calculated as the path length divided by the completion time. Although there was no significant negative correlation between average speed and correct answer rate for overground walking (rs(14) = −0.132, p = 0.313), a significant negative correlation was observed for walk-in-place in Experiment 2 (rs(14) = −0.453, p = 0.039).

Because participants V1–V11 took part in both Experiments 1 and 2, whereas V12–V16 took part only in Experiment 2, a possible learning effect from participating in both experiments must be considered when interpreting the results of Experiment 2. To analyze this learning effect, we compared the answer rates of V1–V11 and V12–V16 in Experiment 2 using the Mann-Whitney U test. The answer rates did not differ significantly between the two groups for walk-in-place (mean answer rate: V1–V11, 0.51; V12–V16, 0.64; U = 597.5, p = 0.278) or for overground walking (mean answer rate: V1–V11, 0.73; V12–V16, 0.88; U = 582.5, p = 0.132).
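Unlike the paired Wilcoxon test, the Mann-Whitney U test above compares two independent groups. The sketch below computes both U statistics from the rank sum of the first group, using tie-averaged ranks over the pooled sample. It is a minimal illustration with hypothetical names; packages differ in whether they report U1 or min(U1, U2), and p-values (omitted here) are available from routines such as `scipy.stats.mannwhitneyu`.

```python
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U1, U2) for independent samples x and y; U1 + U2 = len(x) * len(y)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))  # rank the pooled sample
    r1 = sum(ranks[:n1])                      # rank sum of the first group
    u1 = r1 - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    return u1, u2
```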

In the interviews, participants mentioned advantages and disadvantages of both wayfinding methods. V7 commented that walk-in-place was fun and gave him a sense of stability. However, the gait in walk-in-place was unnatural, and it was difficult to predict the number of steps and the distance covered. V9 reported that in walk-in-place it was difficult to predict how far he had moved. Participants reported that they could walk more comfortably with overground walking, without the additional physical burden of walk-in-place. V2 said that overground walking was easier than walk-in-place because he could rely on his familiar sense of walking. However, it was difficult to walk in a straight line because of veering; V8 reported that walking in a straight line was somewhat daunting.

5. Discussion

In this study, we examined how well people with visual impairment memorized the path they walked and the obstacles on it when using walk-in-place and overground walking, two locomotion methods that let them move through virtual reality with their own legs. The major difference between the two methods lies in gait variability, which refers to the extent to which movements deviate from step to step during walking (Hollman et al., 2016). Previous studies have shown that walk-in-place has lower gait variability than overground walking: the fluctuations in the time taken for one step and in the maximum trunk tilt in the sagittal plane are smaller for walk-in-place than for overground walking (Dingwell et al., 2001; Hollman et al., 2016; Terrier & Dériaz, 2011). In Experiment 1, walk-in-place allowed people with visual impairment to memorize the path more accurately than overground walking, suggesting that lower gait variability, as in walk-in-place, imposes a lower cognitive load and thereby supports route memorization. Consistent with this, in an experiment in which participants performed a cognitive task (repeatedly subtracting seven, starting from a given random number) during walk-in-place and overground walking, cognitive performance was significantly better in the walk-in-place condition (Penati et al., 2020).
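Step-time variability of the kind compared in the cited treadmill studies is commonly summarized as a coefficient of variation (CV), the standard deviation of step times divided by their mean. The sketch below assumes heel-strike timestamps in seconds are already available; heel-strike detection is not shown, and this study did not report such measurements, so the example timestamps are invented purely for illustration.

```python
import statistics
from typing import Sequence


def step_time_cv(heel_strikes: Sequence[float]) -> float:
    """Coefficient of variation (%) of step times derived from heel-strike times (s)."""
    if len(heel_strikes) < 3:
        raise ValueError("need at least three heel strikes (two step times)")
    step_times = [t1 - t0 for t0, t1 in zip(heel_strikes, heel_strikes[1:])]
    return 100.0 * statistics.stdev(step_times) / statistics.mean(step_times)


# Hypothetical heel-strike timestamps for a steadier and a more irregular walker
steady = [0.00, 0.55, 1.10, 1.65, 2.20, 2.75]
irregular = [0.00, 0.50, 1.12, 1.60, 2.25, 2.78]
print(f"steady CV:    {step_time_cv(steady):.1f}%")
print(f"irregular CV: {step_time_cv(irregular):.1f}%")
```

Using the sample standard deviation (`statistics.stdev`) is a common choice for short gait recordings; a lower CV corresponds to the lower gait variability attributed to walk-in-place above.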

For people with visual impairment, who need to concentrate more on walking than people with normal vision, the result of Experiment 1 can be interpreted as indicating that they, too, experienced less cognitive load with walk-in-place than with overground walking. The cognitive load of monitoring one's own gait is called mobility monitoring, and people with visual impairment must bear this load because of their reduced vision (Rand et al., 2015). This load can be alleviated by a walking assistant: Rand et al. found that among participants whose vision was degraded with low-vision glasses, those who memorized obstacles without a walking assistant remembered the obstacles less accurately than those who had a walking assistant (Rand et al., 2015). In our experiment, in which people with visual impairment walked alone, walk-in-place appears to have helped them memorize the route by lowering the cognitive load of gait. In the interview, V6 mentioned being able to memorize the route better with walk-in-place because this gait method was more stable than overground walking. Additionally, drifting away from the intended path when vision is restricted is called veering, which was observed during overground walking in Experiment 1 (Kallie et al., 2007). Veering is difficult to study in much of the existing research on the gait variability of overground walking, because it does not occur in people with normal vision in a controlled environment (Ekvall Hansson et al., 2021; Kaipust et al., 2012; Nohelova et al., 2021). Moreover, existing studies of walk-in-place mainly use treadmills that move in a straight line, which makes the directional variability of walking difficult to study (Houdijk et al., 2012; Penati et al., 2020; Smulders et al., 2009). However, Kallie et al. reported that people with visual impairment had about 1.3 degrees of variability in walking direction (Kallie et al., 2007). In Experiment 1, we observed veering caused by this variability in walking direction during overground walking, which led participants to collide with walls or lose track of the virtual guide dog. For example, V1 veered to the right of a straight line and wandered off after hitting the virtual wall in the overground walking environment. Because veering causes the direction that people with visual impairment perceive to differ from their actual direction of movement (Kallie et al., 2007), participants gave incorrect path answers more often after overground walking than after walk-in-place. In the walk-in-place environment, by contrast, participants did not veer in the virtual environment and memorized the path more accurately than in the overground walking environment. Walk-in-place in this study was implemented with the Virtuix Omni, which allows the user to change direction. Moreover, because the device's safety components held the participant's torso, participants moved in a straight line without veering. V1 walked straight ahead on the Virtuix Omni and then turned at once to follow the turn of the virtual guide dog. This pattern is also visible in , which shows the line data recording the walking direction of V6.
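To illustrate how small, unnoticed heading errors can accumulate into veering, the sketch below models heading as a random walk while the walker intends to go straight. This is a toy model under stated assumptions, not the model of Kallie et al. (2007): using 1.3° as a per-step Gaussian standard deviation is an illustrative choice, the step length is hypothetical, and setting the deviation to 0 only roughly mimics a harness that keeps the torso aligned, as on the Virtuix Omni.

```python
import math
import random
from typing import List, Tuple


def simulate_veering(n_steps: int, step_length: float, heading_sd_deg: float,
                     seed: int = 0) -> List[Tuple[float, float]]:
    """Toy veering model: heading drifts as a random walk during straight walking.

    The intended direction is +x; y is the lateral deviation (same unit as
    step_length). Returns the (x, y) position after each step.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    x, y, heading = 0.0, 0.0, 0.0  # heading in radians; 0 = intended direction
    path = [(x, y)]
    for _ in range(n_steps):
        # Small, unperceived heading error added at every step (assumed Gaussian).
        heading += math.radians(rng.gauss(0.0, heading_sd_deg))
        x += step_length * math.cos(heading)
        y += step_length * math.sin(heading)
        path.append((x, y))
    return path


free = simulate_veering(n_steps=40, step_length=0.7, heading_sd_deg=1.3, seed=1)
fixed = simulate_veering(n_steps=40, step_length=0.7, heading_sd_deg=0.0)
print(f"lateral drift, free heading:  {free[-1][1]:+.2f} m")
print(f"lateral drift, fixed heading: {fixed[-1][1]:+.2f} m")
```

Because heading errors accumulate rather than cancel, the lateral drift tends to grow faster than linearly with distance, which is one way a perceived straight line can end at a virtual wall.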

In Experiment 2, obstacle memory accuracy was higher with overground walking than with walk-in-place, possibly because obstacle negotiation is easier in overground walking. Obstacle negotiation, or obstacle avoidance, refers to the process of identifying an obstacle and then stepping over or avoiding it (Hill et al., 1997, 1999; Hofstad et al., 2006, 2009; Vrieling et al., 2007). Obstacle avoidance is an important consideration in wayfinding because it prevents falls and injuries caused by obstacles. To avoid an obstacle while walking, one must reduce walking speed and step size (Galna et al., 2009; McFadyen & Prince, 2002). In overground walking, it is easy to slow down and shorten one's steps, whereas walk-in-place offers only limited control over gait speed and stride length (Nevisipour et al., 2023). In this study, this locomotion difference between walk-in-place and overground walking appears to have affected the perception of obstacles. Video analysis of the wayfinding sessions showed that V12 reacted differently to obstacles depending on the locomotion method. With overground walking, V12 identified an obstacle's location by striking it a few more times where the cane had been heard hitting it. With walk-in-place, however, V12 kept moving forward despite hearing the cane hit an obstacle.

The results of Experiment 2 can also be explained by differences in the vestibular feedback that each walking method provides. Overground walking provides more accurate vestibular feedback for estimating position than walk-in-place, in which the feet move without the body actually translating, making it difficult to estimate the distance walked. V9 likewise stated that walk-in-place made it difficult to predict how far he had moved. A related study reported that people with visual impairment must convert motion-based perception into position estimates within their own spatial representation (Seemungal et al., 2007). In other words, if people with visual impairment do not receive correct or sufficient vestibular feedback to estimate distance, their ability to infer distance is impaired. Experiment 2 required rich vestibular information to calculate the relative positions of the obstacles, so the lack of translational vestibular information in walk-in-place likely made memorization of obstacle positions less accurate than in overground walking.

These results indicate that the characteristics of the locomotion method affected the memorization of routes and obstacles. First, because walk-in-place demands less mobility monitoring than overground walking, participants paid less attention to walking and memorized the route better. Conversely, for obstacles, overground walking makes it easier to control walking speed and step length in order to negotiate obstacles, which helps participants act to perceive an obstacle's position.

These results suggest that walk-in-place is a better option than overground walking for acquiring route information. Because walk-in-place is not constrained by the real space, people with visual impairment can walk through unlimited virtual environments, and it requires less physical space than overground walking. However, overground walking is a better choice for acquiring detailed spatial information such as obstacle positions. Overground walking is closer to real-world walking than walk-in-place, and in this study participants remembered obstacle positions more accurately with overground walking than with walk-in-place.

These results can be related to the construction of cognitive maps and cognitive graphs (Peer et al., 2021). In the framework of Peer et al., a cognitive map represents spatial information in Euclidean coordinates, such as latitude and longitude, whereas a cognitive graph represents locations through distinct links between local cues. The task in Experiment 1 required building a cognitive map that relied heavily on a Euclidean grid, because participants memorized the sequence of turn directions. In contrast, the task in Experiment 2 required building a cognitive graph, because participants memorized the positions of route landmarks (the obstacles) by linking pieces of information together. This suggests that walk-in-place is better than overground walking for building cognitive maps, for example of grid-like cities, whereas overground walking is better than walk-in-place for building cognitive graphs, for example of cities with complex building layouts.
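The distinction between the two representations can be made concrete as two data structures. In the sketch below, which is only an illustration with hypothetical landmark names and coordinates, a cognitive map stores each landmark with Euclidean coordinates, so a metric query such as the straight-line distance between two landmarks is direct. A cognitive graph stores only links between local cues, so it answers "which sequence of cues leads there" (here via breadth-first search) but carries no metric information.

```python
import math
from collections import deque
from typing import Dict, List, Optional, Tuple

# Cognitive map: landmarks with Euclidean coordinates (hypothetical values, metres).
cmap: Dict[str, Tuple[float, float]] = {
    "start": (0.0, 0.0), "bench": (3.0, 0.0), "pole": (3.0, 4.0),
}

# Cognitive graph: landmarks known only through links to neighbouring cues.
graph: Dict[str, List[str]] = {
    "start": ["bench"], "bench": ["start", "pole"], "pole": ["bench"],
}


def map_distance(m: Dict[str, Tuple[float, float]], a: str, b: str) -> float:
    """Straight-line distance, available only because coordinates are stored."""
    return math.dist(m[a], m[b])


def graph_route(g: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for the route with the fewest links, or None."""
    previous: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            route: List[str] = []
            current: Optional[str] = node
            while current is not None:  # walk back along recorded predecessors
                route.append(current)
                current = previous[current]
            return route[::-1]
        for nxt in g.get(node, []):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None


print(map_distance(cmap, "start", "pole"))   # 5.0
print(graph_route(graph, "start", "pole"))   # ['start', 'bench', 'pole']
```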

6. Limitations and future work

A limitation of our virtual guide dog is that its functionality differs from that of a real guide dog. Our virtual guide dog continuously produces spatial sounds to help people with visual impairment determine their direction, whereas a real guide dog makes sounds only to alert its owner to immediate danger (e.g., approaching cars or people) or when it is separated from its owner. In addition, a real guide dog provides force feedback through its harness, which our virtual guide dog cannot. Future studies could therefore develop a virtual guide dog that provides force feedback to assist people with visual impairment in navigation. Another limitation is that all participants were blindfolded during wayfinding, unlike in real-life scenarios. Blindfolding was used to create a consistent visual condition for comparing the two walking methods. This study could therefore be extended to compare people with various visual conditions without blindfolds, including those who have been blind for a few years and those who have been blind for a long time. Finally, the validity and unidimensionality of the questionnaire used in this study were not established. The questionnaire was newly created by adapting questions from previous studies to analyze participants' perceptions of each walking method. However, because of the limited number of participants (16), it was not feasible to conduct exploratory or confirmatory factor analysis or Rasch analysis, which estimates the reliability of items and participants. We therefore suggest conducting the experiment with more participants in future studies. With a sufficient sample, Rasch analysis based on item response theory (IRT) could be used to verify the psychological characteristics of respondents, the appropriateness and difficulty of the questions, and the suitability of the response categories.
We also suggest a study comparing walking methods that restrict different components of vestibular feedback during spatial information acquisition. A previous study demonstrated the utility of various walking methods in virtual reality, such as stepper machines and redirected walking, but did not compare their effectiveness for memorizing spatial information (Bozgeyikli et al., 2019).

7. Conclusion

This study compared two locomotion methods (walk-in-place and overground walking) in VR wayfinding systems that people with visual impairment use to acquire spatial information (routes and obstacles). In these systems, people with visual impairment acquire spatial information about the virtual environment through wayfinding by moving their legs. The results showed that people with visual impairment memorized routes better with walk-in-place than with overground walking, owing to the lower cognitive load of mobility monitoring and less veering in walk-in-place. In contrast, they memorized the locations of obstacles better with overground walking than with walk-in-place, owing to better obstacle negotiation and avoidance in overground walking. Veering in overground walking distorts the cognitive map because perceived and actual movements differ. In walk-in-place, the difficulty of controlling gait speed and step length on the Virtuix Omni, which produces an unnatural gait, makes obstacles harder to perceive. Based on these results, we suggest that walk-in-place is effective for memorizing routes, whereas overground walking is effective for memorizing obstacles. We believe our findings will help those designing VR wayfinding systems for people with visual impairment.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Sangsun Han

Sangsun Han completed the ME-PhD program in Human-Computer Interaction at Hanyang University. He is currently a postdoctoral researcher at the Bionics Research Center of the Korea Institute of Science and Technology. He is interested in digital therapies based on XR (eXtended Reality).

Pilhyoun Yoon

Pilhyoun Yoon graduated from the HCI PhD program at Hanyang University and is currently a professor of Digital Marketing Engineering at Inha Technical College. His research interests include authentic learning, virtual learning, and distance education using VR and AR.

Xiangshi Ren

Xiangshi Ren is a lifetime tenured professor in the School of Information and founding director of the Center for Human-Engaged Computing (CHEC) at Kochi University of Technology, Japan. His research interests include all aspects of Human-Computer Interaction and Human-Engaged Computing (HEC).

Kibum Kim

Kibum Kim is a full professor in the Department of Human-Computer Interaction at Hanyang University in South Korea. His research areas include HCI, CSCW (Computer-Supported Cooperative Work), CSCL (Computer-Supported Collaborative Learning), VR, and computer education.

References

- Ahmetovic, D., Guerreiro, J., Ohn-Bar, E., Kitani, K. M., & Asakawa, C. (2019). Impact of Expertise on Interaction Preferences for Navigation Assistance of Visually Impaired Individuals [Paper presentation]. Proceedings of the 16th International Web for All Conference. https://doi.org/10.1145/3315002.3317561

- Albouys-Perrois, J., Laviole, J., Briant, C., & Brock, A. M. (2018). Towards a Multisensory Augmented Reality Map for Blind and Low Vision People: A Participatory Design Approach [Paper presentation]. Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3173574.3174203

- Alwi, S. R. A. W., & Noh, M. (2013). Survey on outdoor navigation system needs for blind people [Paper presentation]. 2013 IEEE Student Conference on Research and Developement, Putrajaya, Malaysia. https://doi.org/10.1109/SCOReD.2013.7002560

- Andrade, R., Baker, S., Waycott, J., & Vetere, F. (2018). Echo-house: Exploring a virtual environment by using echolocation [Paper presentation]. Proceedings of the 30th Australian Conference on Computer-Human Interaction. https://doi.org/10.1145/3292147.3292163

- Andrade, R., Waycott, J., Baker, S., & Vetere, F. (2021). Echolocation as a Means for People with Visual Impairment (PVI) to Acquire Spatial Knowledge of Virtual Space. ACM Transactions on Accessible Computing, 14(1), 1–25. https://doi.org/10.1145/3448273

- Bozgeyikli, E., Raij, A., Katkoori, S., & Dubey, R. (2016). Locomotion in Virtual Reality for Individuals with Autism Spectrum Disorder [Paper presentation]. Proceedings of the 2016 Symposium on Spatial User Interaction. https://doi.org/10.1145/2983310.2985763

- Bozgeyikli, E., Raij, A., Katkoori, S., & Dubey, R. (2019). Locomotion in virtual reality for room scale tracked areas. International Journal of Human-Computer Studies, 122, 38–49. https://doi.org/10.1016/j.ijhcs.2018.08.002

- Brock, A. M., Truillet, P., Oriola, B., Picard, D., & Jouffrais, C. (2015). Interactivity improves usability of geographic maps for visually impaired people. Human–Computer Interaction, 30(2), 156–194. https://doi.org/10.1080/07370024.2014.924412

- Cirio, G., Olivier, A. H., Marchal, M., & Pettré, J. (2013). Kinematic Evaluation of Virtual Walking Trajectories. IEEE Transactions on Visualization and Computer Graphics, 19(4), 671–680. https://doi.org/10.1109/TVCG.2013.34

- Connors, E. C., Chrastil, E. R., Sánchez, J., & Merabet, L. B. (2014). Virtual environments for the transfer of navigation skills in the blind: A comparison of directed instruction vs. video game based learning approaches. Frontiers in Human Neuroscience, 8, 223. https://doi.org/10.3389/fnhum.2014.00223

- Cornell, E. H., & Heth, C. D. (2004). Memories of travel: Dead reckoning within the cognitive map. In Human spatial memory (pp. 211–236). Psychology Press. https://doi.org/10.4324/9781410609984-18

- Creed, C., Al-Kalbani, M., Theil, A., Sarcar, S., & Williams, I. (2023). Inclusive augmented and virtual reality: A research agenda. International Journal of Human–Computer Interaction, 1–20. https://doi.org/10.1080/10447318.2023.2247614

- Cyberith (2021). CYBERITH. https://www.cyberith.com/

- Darken, R. P., & Sibert, J. L. (1996). Wayfinding strategies and behaviors in large virtual worlds [Paper presentation]. Proceedings of the SIGCHI conference on human factors in computing systems. https://doi.org/10.1145/238386.238459

- Dingwell, J. B., Cusumano, J. P., Cavanagh, P. R., & Sternad, D. (2001). Local dynamic stability versus kinematic variability of continuous overground and treadmill walking. Journal of Biomechanical Engineering, 123(1), 27–32. https://doi.org/10.1115/1.1336798

- Ekvall Hansson, E., Valkonen, E., Olsson Möller, U., Chen Lin, Y., Magnusson, M., & Fransson, P.-A. (2021). Gait flexibility among older persons significantly more impaired in fallers than non-fallers—a longitudinal study. International Journal of Environmental Research and Public Health, 18(13), 7074. https://doi.org/10.3390/ijerph18137074

- Fazzi, D. L., & Petersmeyer, B. A. (2001). Imagining the possibilities: Creative approaches to orientation and mobility instruction for persons who are visually impaired. American Foundation for the Blind.

- Ferrell, K. A. (2011). Reach out and teach: Helping your child who is visually impaired learn and grow. American Foundation for the Blind.

- Gabbard, J. L. (1997). A taxonomy of usability characteristics in virtual environments. Virginia Tech.

- Galna, B., Peters, A., Murphy, A. T., & Morris, M. E. (2009). Obstacle crossing deficits in older adults: A systematic review. Gait & Posture, 30(3), 270–275. https://doi.org/10.1016/j.gaitpost.2009.05.022

- Gipsman, S. C. (1981). Effect of visual condition on use of proprioceptive cues in performing a balance task. Journal of Visual Impairment & Blindness, 75(2), 50–54. https://doi.org/10.1177/0145482X8107500203

- Google (2022). Google Resonance Audio. https://resonance-audio.github.io/resonance-audio/

- Guth, D., & LaDuke, R. (1994). The veering tendency of blind pedestrians: An analysis of the problem and literature review. Journal of Visual Impairment & Blindness, 88(5), 391–400.

- Guth, D., & LaDuke, R. (1995). Veering by blind pedestrians: Individual differences and their implications for instruction. Journal of Visual Impairment & Blindness, 89(1), 28–37. https://doi.org/10.1177/0145482X9508900107

- Hill, S. W., Patla, A. E., Ishac, M. G., Adkin, A. L., Supan, T. J., & Barth, D. G. (1997). Kinematic patterns of participants with a below-knee prosthesis stepping over obstacles of various heights during locomotion. Gait & Posture, 6(3), 186–192. https://doi.org/10.1016/S0966-6362(97)01120-X

- Hill, S. W., Patla, A. E., Ishac, M. G., Adkin, A. L., Supan, T. J., & Barth, D. G. (1999). Altered kinetic strategy for the control of swing limb elevation over obstacles in unilateral below-knee amputee gait. Journal of Biomechanics, 32(5), 545–549. https://doi.org/10.1016/S0021-9290(98)00168-7

- Hofstad, C. J., van der Linde, H., Nienhuis, B., Weerdesteyn, V., Duysens, J., & Geurts, A. C. (2006). High failure rates when avoiding obstacles during treadmill walking in patients with a transtibial amputation. Archives of Physical Medicine and Rehabilitation, 87(8), 1115–1122. https://doi.org/10.1016/j.apmr.2006.04.009

- Hofstad, C. J., Weerdesteyn, V., van der Linde, H., Nienhuis, B., Geurts, A. C., & Duysens, J. (2009). Evidence for bilaterally delayed and decreased obstacle avoidance responses while walking with a lower limb prosthesis. Clinical Neurophysiology, 120(5), 1009–1015. https://doi.org/10.1016/j.clinph.2009.03.003

- Hollman, J. H., Watkins, M. K., Imhoff, A. C., Braun, C. E., Akervik, K. A., & Ness, D. K. (2016). A comparison of variability in spatiotemporal gait parameters between treadmill and overground walking conditions. Gait & Posture, 43, 204–209. https://doi.org/10.1016/j.gaitpost.2015.09.024

- Houdijk, H., van Ooijen, M. W., Kraal, J. J., Wiggerts, H. O., Polomski, W., Janssen, T. W. J., & Roerdink, M. (2012). Assessing gait adaptability in people with a unilateral amputation on an instrumented treadmill with a projected visual context. Physical Therapy, 92(11), 1452–1460. https://doi.org/10.2522/ptj.20110362

- INFINADECK (2020). Infinadeck. https://www.infinadeck.com/

- Ivanchev, M., Zinke, F., & Lucke, U. (2014). Pre-journey visualization of travel routes for the blind on refreshable interactive tactile displays [Paper presentation]. Computers Helping People with Special Needs. https://doi.org/10.1007/978-3-319-08599-9_13

- Kaipust, J. P., Huisinga, J. M., Filipi, M., & Stergiou, N. (2012). Gait variability measures reveal differences between multiple sclerosis patients and healthy controls. Motor Control, 16(2), 229–244. https://doi.org/10.1123/mcj.16.2.229

- Kalawsky, R. S. (1999). VRUSE—a computerised diagnostic tool: For usability evaluation of virtual/synthetic environment systems. Applied Ergonomics, 30(1), 11–25. https://doi.org/10.1016/S0003-6870(98)00047-7

- Kallie, C. S., Schrater, P. R., & Legge, G. E. (2007). Variability in stepping direction explains the veering behavior of blind walkers. Journal of Experimental Psychology: Human Perception and Performance, 33(1), 183–200. https://doi.org/10.1037/0096-1523.33.1.183

- KATVR (2021). KAT Walk Mini S. https://www.kat-vr.com/products/kat-walk-mini-s

- Keles, H. (2015). SpaceWalkerVR first test video VIRTUAL REALITY WALKING SIMULATOR. https://www.youtube.com/watch?v=6ySx4L0Ic74

- Kennedy, R. S., Lane, N. E., Berbaum, K. S., & Lilienthal, M. G. (1993). Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. The International Journal of Aviation Psychology, 3(3), 203–220. https://doi.org/10.1207/s15327108ijap0303_3

- Kim, J. (2020). VIVR: Presence of immersive interaction for visual impairment virtual reality. IEEE Access, 8, 196151–196159. https://doi.org/10.1109/ACCESS.2020.3034363

- Kolarik, A. J., Cirstea, S., Pardhan, S., & Moore, B. C. J. (2014). A summary of research investigating echolocation abilities of blind and sighted humans. Hearing Research, 310, 60–68. https://doi.org/10.1016/j.heares.2014.01.010

- Kolarik, A. J., Moore, B. C. J., Zahorik, P., Cirstea, S., & Pardhan, S. (2016). Auditory distance perception in humans: A review of cues, development, neuronal bases, and effects of sensory loss. Attention, Perception & Psychophysics, 78(2), 373–395. https://doi.org/10.3758/s13414-015-1015-1

- Kontarinis, D. A., & Howe, R. D. (1995). Tactile display of vibratory information in teleoperation and virtual environments. Presence: Teleoperators and Virtual Environments, 4(4), 387–402. https://doi.org/10.1162/pres.1995.4.4.387

- Kreimeier, J., & Götzelmann, T. (2019). First steps towards walk-in-place locomotion and haptic feedback in virtual reality for visually impaired [Paper presentation]. Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3290607.3312944

- Kreimeier, J., Karg, P., & Götzelmann, T. (2020). BlindWalkVR: Formative insights into blind and visually impaired people’s VR locomotion using commercially available approaches [Paper presentation]. Proceedings of the 13th ACM International Conference on Pervasive Technologies Related to Assistive Environments. https://doi.org/10.1145/3389189.3389193

- Kreimeier, J., Ullmann, D., Kipke, H., & Götzelmann, T. (2020). Initial evaluation of different types of virtual reality locomotion towards a pedestrian simulator for urban and transportation planning [Paper presentation]. Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3334480.3382958

- Lahav, O., Gedalevitz, H., Battersby, S., Brown, D., Evett, L., & Merritt, P. (2018). Virtual environment navigation with look-around mode to explore new real spaces by people who are blind. Disability and Rehabilitation, 40(9), 1072–1084. https://doi.org/10.1080/09638288.2017.1286391

- Lee, J., & Hwang, J.-I. (2019). Walk-in-place navigation in VR [Paper presentation]. Proceedings of the 2019 ACM International Conference on Interactive Surfaces and Spaces, Daejeon, Republic of Korea. https://doi.org/10.1145/3343055.3361926

- Maidenbaum, S., Levy-Tzedek, S., Chebat, D.-R., & Amedi, A. (2013). Increasing accessibility to the blind of virtual environments, using a virtual mobility aid based on the "EyeCane": Feasibility study. PLoS ONE, 8(8), e72555. https://doi.org/10.1371/journal.pone.0072555

- May, K. R., Tomlinson, B. J., Ma, X., Roberts, P., & Walker, B. N. (2020). Spotlights and soundscapes: On the design of mixed reality auditory environments for persons with visual impairment. ACM Transactions on Accessible Computing, 13(2), 1–47. https://doi.org/10.1145/3378576

- McFadyen, B. J., & Prince, F. (2002). Avoidance and accommodation of surface height changes by healthy, community-dwelling, young, and elderly men. The Journals of Gerontology: Series A, Biological Sciences and Medical Sciences, 57(4), B166–B174. https://doi.org/10.1093/gerona/57.4.B166

- Nescher, T., Zank, M., & Kunz, A. (2016). Simultaneous mapping and redirected walking for ad hoc free walking in virtual environments [Paper presentation]. 2016 IEEE Virtual Reality (VR). https://doi.org/10.1109/VR.2016.7504742

- Nevisipour, M., Sugar, T., & Lee, H. (2023). Multi-tasking deteriorates trunk movement control during and after obstacle avoidance. Human Movement Science, 87, 103053. https://doi.org/10.1016/j.humov.2022.103053

- Nohelova, D., Bizovska, L., Vuillerme, N., & Svoboda, Z. (2021). Gait variability and complexity during single and dual-task walking on different surfaces in outdoor environment. Sensors, 21(14), 4792. https://doi.org/10.3390/s21144792

- Papadopoulos, K., Koustriava, E., & Barouti, M. (2017). Cognitive maps of individuals with blindness for familiar and unfamiliar spaces: Construction through audio-tactile maps and walked experience. Computers in Human Behavior, 75, 376–384. https://doi.org/10.1016/j.chb.2017.04.057

- Peer, M., Brunec, I. K., Newcombe, N. S., & Epstein, R. A. (2021). Structuring knowledge with cognitive maps and cognitive graphs. Trends in Cognitive Sciences, 25(1), 37–54. https://doi.org/10.1016/j.tics.2020.10.004

- Penati, R., Schieppati, M., & Nardone, A. (2020). Cognitive performance during gait is worsened by overground but enhanced by treadmill walking. Gait & Posture, 76, 182–187. https://doi.org/10.1016/j.gaitpost.2019.12.006

- Picinali, L., Afonso, A., Denis, M., & Katz, B. F. G. (2014). Exploration of architectural spaces by blind people using auditory virtual reality for the construction of spatial knowledge. International Journal of Human-Computer Studies, 72(4), 393–407. https://doi.org/10.1016/j.ijhcs.2013.12.008

- Rand, K. M., Creem-Regehr, S. H., & Thompson, W. B. (2015). Spatial learning while navigating with severely degraded viewing: The role of attention and mobility monitoring. Journal of Experimental Psychology: Human Perception and Performance, 41(3), 649–664. https://doi.org/10.1037/xhp0000040

- Riazi, A., Riazi, F., Yoosfi, R., & Bahmeei, F. (2016). Outdoor difficulties experienced by a group of visually impaired Iranian people. Journal of Current Ophthalmology, 28(2), 85–90. https://doi.org/10.1016/j.joco.2016.04.002

- Ricci, F. S., Boldini, A., Beheshti, M., Rizzo, J.-R., & Porfiri, M. (2023). A virtual reality platform to simulate orientation and mobility training for the visually impaired. Virtual Reality, 27(2), 797–814. https://doi.org/10.1007/s10055-022-00691-x

- Ricci, F. S., Boldini, A., Ma, X., Beheshti, M., Geruschat, D. R., Seiple, W. H., Rizzo, J.-R., & Porfiri, M. (2023). Virtual reality as a means to explore assistive technologies for the visually impaired. PLOS Digital Health, 2(6), e0000275. https://doi.org/10.1371/journal.pdig.0000275

- Richard, P., Birebent, G., Coiffet, P., Burdea, G., Gomez, D., & Langrana, N. (1996). Effect of frame rate and force feedback on virtual object manipulation. Presence: Teleoperators and Virtual Environments, 5(1), 95–108. https://doi.org/10.1162/pres.1996.5.1.95

- Sánchez, J., Espinoza, M., & Garrido, J. (2012). Videogaming for wayfinding skills in children who are blind [Paper presentation]. Proceedings of the 9th International Conference on Disability, Virtual Reality and Associated Technologies.

- Seemungal, B. M., Glasauer, S., Gresty, M. A., & Bronstein, A. M. (2007). Vestibular perception and navigation in the congenitally blind. Journal of Neurophysiology, 97(6), 4341–4356. https://doi.org/10.1152/jn.01321.2006

- Shi, L., Tomlinson, B. J., Tang, J., Cutrell, E., McDuff, D., Venolia, G., Johns, P., & Rowan, K. (2019). Accessible video calling: Enabling nonvisual perception of visual conversation cues. Proceedings of the ACM on Human-Computer Interaction, 3(CSCW), 1–22. https://doi.org/10.1145/3359233

- Shneiderman, B., & Plaisant, C. (2010). Designing the user interface: Strategies for effective human-computer interaction. Pearson Education India.

- Siu, A. F., Sinclair, M., Kovacs, R., Ofek, E., Holz, C., & Cutrell, E. (2020). Virtual reality without vision: A haptic and auditory white cane to navigate complex virtual worlds [Paper presentation]. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3313831.3376353

- Slater, M., Usoh, M., & Steed, A. (1995). Taking steps: The influence of a walking technique on presence in virtual reality. ACM Transactions on Computer-Human Interaction, 2(3), 201–219. https://doi.org/10.1145/210079.210084

- Smulders, E., Schreven, C., Van Lankveld, W., Duysens, J., & Weerdesteyn, V. (2009). Obstacle avoidance in persons with rheumatoid arthritis walking on a treadmill. Clinical and Experimental Rheumatology, 27(5), 779–785.

- Sylaiou, S., & Fidas, C. (2022). Supporting people with visual impairments in cultural heritage: Survey and future research directions. International Journal of Human–Computer Interaction, 1–16. https://doi.org/10.1080/10447318.2022.2098930

- Terrier, P., & Dériaz, O. (2011). Kinematic variability, fractal dynamics and local dynamic stability of treadmill walking. Journal of NeuroEngineering and Rehabilitation, 8(1), 12. https://doi.org/10.1186/1743-0003-8-12

- Thevin, L., Briant, C., & Brock, A. M. (2020). X-road: Virtual reality glasses for orientation and mobility training of people with visual impairments. ACM Transactions on Accessible Computing, 13(2), 1–47. https://doi.org/10.1145/3377879

- Usoh, M., Arthur, K., Whitton, M. C., Bastos, R., Steed, A., Slater, M., & Brooks Jr, F. P. (1999). Walking > walking-in-place > flying, in virtual environments [Paper presentation]. Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques. https://doi.org/10.1145/311535.311589

- Virtuix (2022). Omni by Virtuix - The leading and most popular VR motion platform. https://www.virtuix.com/

- Vive (2022). VIVE United States | Next-level VR Headsets and Apps. https://www.vive.com/

- Vrieling, A. H., van Keeken, H. G., Schoppen, T., Otten, E., Halbertsma, J. P. K., Hof, A. L., & Postema, K. (2007). Obstacle crossing in lower limb amputees. Gait & Posture, 26(4), 587–594. https://doi.org/10.1016/j.gaitpost.2006.12.007

- Wang, Z., Li, B., Hedgpeth, T., & Haven, T. (2009). Instant tactile-audio map: Enabling access to digital maps for people with visual impairment [Paper presentation]. The 11th International ACM SIGACCESS Conference on Computers and Accessibility. https://doi.org/10.1145/1639642.1639652

- Wickens, C. D., & Baker, P. (1995). Cognitive issues in virtual reality. In W. Barfield & T. A. Furness III (Eds.), Virtual environments and advanced interface design. Oxford University Press. https://doi.org/10.1093/oso/9780195075557.003.0024

- Witmer, B. G., & Singer, M. J. (1998). Measuring presence in virtual environments: A presence questionnaire. Presence: Teleoperators and Virtual Environments, 7(3), 225–240. https://doi.org/10.1162/105474698565686

- Woodrow, B., & Thomas, A. F. (1995). Virtual environments and advanced interface design. Oxford University Press, Inc.

- Wu, W., Morina, R., Schenker, A., Gotsis, A., Chivukula, H., Gardner, M., Liu, F., Barton, S., Woyach, S., & Sinopoli, B. (2017). EchoExplorer: A game app for understanding echolocation and learning to navigate using echo cues [Paper presentation]. ICAD 2017, Pennsylvania State University.

- Zhai, S., Milgram, P., & Buxton, W. (1996). The influence of muscle groups on performance of multiple degree-of-freedom input [Paper presentation]. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/238386.238534

- Zhang, L., Wu, K., Yang, B., Tang, H., & Zhu, Z. (2020). Exploring virtual environments by visually impaired using a mixed reality cane without visual feedback [Paper presentation]. 2020 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct). https://doi.org/10.1109/ISMAR-Adjunct51615.2020.00028

- Zhang, S., Liu, Y., Song, F., Yu, D., Bo, Z., & Zhang, Z. (2023). The effect of audiovisual spatial design on user experience of bare-hand interaction in VR. International Journal of Human–Computer Interaction, 1–12. https://doi.org/10.1080/10447318.2023.2171761

- Zhao, Y., Bennett, C. L., Benko, H., Cutrell, E., Holz, C., Morris, M. R., & Sinclair, M. (2018). Enabling people with visual impairments to navigate virtual reality with a haptic and auditory cane simulation [Paper presentation]. Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. https://doi.org/10.1145/3173574.3173690

Appendices