Abstract

This study employs an innovative mixed-reality game to investigate trust, self-efficacy, and collaboration willingness with a mobile robot in relation to information and mental models. Previous research has demonstrated the significant impact of introductory information on technology use and trust, which further evolves over the course of interactions. Expanding on this, we explore new facets of information content through comprehensive measurements in two empirical studies: In study 1, 68 participants watched one of two video tutorials (high or low information richness) before collaborating with a physical robot in a shared virtual 3D environment. Repeated measures served to investigate temporal dynamics. In study 2, 37 participants additionally engaged in qualitative interviews to better understand how their mental models changed with increasing robot experience. Both tutorials were found to influence user trust and self-efficacy over time, with no significant differences between the two variants. Users valued information on how to communicate with the robot, but still relied on trial-and-error approaches. We argue for a moderating effect of information content and richness and highlight the need for carefully designed tutorials to enhance human-robot interaction.

1. Introduction: About getting to know team partners

Due to technological advances and their increasing affordability, mobile collaborative robots are becoming popular in industrial work environments (Berg et al., 2019; Graetz & Michaels, 2018; Mara et al., 2021). However, workers have different social and task-related concerns about novel robots at their workplaces (Leichtmann et al., 2023). A survey of more than 500 factory workers conducted by Leichtmann et al. (2023) revealed worries about how jobs might change due to novel robots, or fears that robots will disrupt the workflow. Thus, working with robots requires some familiarization. Individual work approaches and movement patterns must be identified, and the human-robot team must learn to coordinate actions jointly (Klein et al., 2004). In such interactions, human users have mental models of the robot with certain expectations of how the robot will behave or how they, as users, should communicate with the new technology (Klein et al., 2004; Leichtmann et al., 2023; Phillips et al., 2011; Sciutti et al., 2018).

These mental models act as “frameworks that individuals construct in order to support their predictions and understanding of the world around them” (Phillips et al., 2011, p. 1491), which includes technical devices such as robots.

Based on these internal representations of robots, expectations regarding their behavior can be formed and shape variables such as trust (Beggiato & Krems, 2013; Kraus et al., 2020) or work-related concerns (Leichtmann et al., 2023). Additionally, these variables are dynamically adapted (Hoff & Bashir, 2015).

As such, mental models ultimately influence behavioral tendencies toward the robot such as safety distance (Leichtmann, Lottermoser, et al., 2022; Leichtmann & Nitsch, 2020). This might then affect the performance of the human-robot team (St. Clair & Mataric, 2015; Wang et al., 2016). Thus, ensuring realistic mental models regarding robots is important (Endsley, 2000; Kieras & Bovair, 1984; Phillips et al., 2011). How different characteristics such as information richness – defined as the degree to which representations contain well-integrated information according to Gill et al. (1998) – or information content influence the dynamics of mental model development is still a topic of research in the field.

Previous research explored, for example, how new information affects user performance and psychological variables with technologies including autonomous driving (Körber, 2019) or video gaming (Morin et al., 2016), but has focused only on some specific aspects of such information (Körber, 2019, information on system limitations) or on interaction effects with specific characteristics (Morin et al., 2016, user expertise). While there already exists a good basis of knowledge on the effects of new information on mental model building, research has been sparse (i) within the domain of human-robot interaction, (ii) exploring other information contents, and (iii) using multiple measures across the interaction – opening up possible research gaps that need further exploration.

In this article we thus focus on dynamic changes of mental models and associated psychological variables such as trust in a robot, utilizing a cooperative mixed-reality game. In this game, research participants played three cooperative mini-games with a real mobile manufacturing robot in a highly innovative virtual environment projected onto the wall and floor of a museum space to create immersive 3D experiences (Kuka et al., 2009). This game was used as the research basis for two empirical studies aimed at exploring the development of user mental models of a collaborative robot with mixed methods:

A quantitative user experiment tested the effects of prior information in two groups (two different tutorials varying in information richness) and effects over time with repeated measurements during the game. This aimed to test the change of psychological variables as indicators for mental model change with variance in information richness as information richness a) differs between groups and b) grows over the duration of a given interaction.

A qualitative semi-structured interview study was then conducted to get a deeper understanding of participants’ mental models and their adjustments.

The triangulation of the results from these two studies with different approaches yields a more comprehensive understanding of dynamic adjustments of mental models. The contributions of this work are three-fold:

Deeper knowledge on dynamic adjustments of expectations: We tested the effects of information richness on psychological variables (e.g., trust) in human-robot interactions to draw conclusions about the development of mental models.

More comprehensive understanding through mixed-methods approach: To exploit advantages and balance disadvantages of different research methods we used quantitative experimental exploration and qualitative in-depth interviews.

Introduction of a novel research environment: To further increase the diversity of research methods, we used a newly developed, innovative, and unique study setting of a mixed-reality game that enables human-robot collaboration in a highly immersive 3D environment.

The results will help to expand the theoretical basis on the effectiveness of tutorials and the formation of expectations throughout interaction with a robot. Furthermore, the insights gained will allow for valuable practical conclusions. To address workers’ concerns regarding novel robots, training programs can be instrumental (see Leichtmann et al., 2023). Our work will therefore be helpful in the design of tutorials and training for users prior to the implementation of new robots in multiple practical domains such as industrial manufacturing, logistics, or agriculture.

In the next section, we lay the theoretical foundation on which this work is based and explore the current state of knowledge in the literature. Building on this, we derive our questions and show how the work can be integrated into this literature. We then provide a general methodological overview of the game and the two studies that build on it. We then describe the methods and results for each study separately, before integrating the findings into the discussion.

2. Theoretical basis and related works

Building on our research goals to explore the effect of information richness of prior information on mental model formation and subsequent dynamic adjustments over time, we first discuss what is known about dynamic adjustments of mental models and how such changes affect psychological variables such as trust in a system. Afterwards we discuss recent literature from other domains of human-machine interaction on the effect of prior information on human behavior and experience. Based on this knowledge we then derive our research questions.

2.1. Dynamics in psychological models

Dynamic changes in mental models of technical systems are accounted for in various psychological models. For example, Durso et al. (2007) compared the process of how users achieve understanding of a technical system with text comprehension processes and concluded that a situation model is the integration of mental models based on prior information stored in long-term memory and new information acquired bottom-up from the actual situation. Thus, the situation model is updated with dynamically changing information.

Similarly, dynamic models are also discussed in research on trust in automation (Hoff & Bashir, 2015; Kraus et al., 2020; Lee & See, 2004). For example, Hoff and Bashir (2015) differentiate between “initial learned trust”, which is based on preexisting knowledge prior to an interaction, and “dynamic learned trust”, which is updated during an interaction based on system performance.

A model might be built on limited information at first (Gill et al., 1998; Phillips et al., 2011); for example, on visual characteristics such as anthropomorphic appearance (Epley et al., 2007), or on prior information from instructions and training (Kieras & Bovair, 1984; Körber et al., 2018; Krampell et al., 2020). This model is then adapted during actual interaction with the system (Kraus et al., 2020), as found in studies where users adapt their safety distance toward robots with growing experience (Leichtmann, Lottermoser, et al., 2022; Leichtmann & Nitsch, 2020).

These different theoretical models indicate how mental models are based on prior information and are dynamically adapted based on new information acquired during an interaction. It is thus important to understand how mental models change dynamically depending on the type and richness of information available.

2.2. Effects of prior information and experience with the system

Before an actual interaction with a system, mental models are based on prior information. In user studies, this is often manipulated by tutorials in which users receive a certain amount or type of information, or by training with the system (Beggiato & Krems, 2013; Edelmann et al., 2020; Kieras & Bovair, 1984; Körber et al., 2018; Krampell et al., 2020; Robinson et al., 2020). Due to such variance in prior information, the mental representations of technical systems vary in richness (Gill et al., 1998). For example, Körber et al. (2018) manipulated introductory information before a driving task with an automated driving system in two groups. Both a “trust promoted” and a “trust lowered” group saw a perfectly performing automated driving system in an introductory video, while the “trust lowered” group saw an additional scene reminding them of system limitations. The group with this additional information had greater information richness, as they received more and also relevant information, which should have led to better integration in the mental model. This prior information had a significant effect on user trust ratings and user driving behavior (e.g., looking at the road).

Other work has focused on changes as a function of interaction experience with a specific system as a source of information. This is often operationalized through repeated measurements (Edelmann et al., 2020; Kraus et al., 2020; Leichtmann, Lottermoser, et al., 2022; Rueben et al., 2021). For example, Kraus et al. (2020) show in a longitudinal plot of multiple measurements how a specific system malfunction during a driving task changed the course of the trust levels. In a human-robot interaction laboratory experiment, Leichtmann, Lottermoser, et al. (2022) show that the comfort distance toward an industrial robot (that is, the minimal distance at which participants felt comfortable) significantly decreased over the course of the study with multiple encounters with the system (without malfunctions), which could also be interpreted as higher trust. These studies indicate how trust in a system changes with growing interaction experience. It could be interpreted that information richness increases during interaction as new information comes in and is integrated into the system representation. Subsequently, participants’ mental models of the system, as the basis of factors such as trust, are adapted.

Valuable insights into the workings of tutorials are also provided by literature on video games (Andersen et al., 2012; Kao et al., 2021; Morin et al., 2016). For instance, a study on tutorials in video games by Morin et al. (2016) confirms that tutorials were particularly helpful for individuals with little prior experience, but less so for experienced players. One could assume that experienced players already possess rich mental models that are scarcely enriched by a tutorial, whereas inexperienced players with less developed mental models benefit from a tutorial in enhancing their understanding. Similarly, the complexity of the interaction also plays a central role: another video gaming study shows that tutorials have a greater impact on more complex games compared to simpler ones (Andersen et al., 2012). Kao et al. (2021) also show how effects of different tutorials varying in content or user experience are moderated by task complexity. These results can also be applied to human-robot interaction: interacting with a system that only has a limited range of operations demands a simpler mental model, which may be quickly acquired through trial and error. In contrast, machines with more complex behaviors require a more complex model, wherein a tutorial can yield more pronounced effects.

3. Research questions

Based on findings that the richness of prior information influences mental model building and subsequently variables such as trust, our goal was to further explore this information richness effect on interaction behavior and psychological variables. While Körber et al. (2018) manipulated prior information by reminding users of system limitations in one group, we aimed to test a different aspect of information content to expand current knowledge. Instead of giving further information on system limitations, which resulted in lower trust (Körber et al., 2018), we aimed to test the effect of additional information regarding how to interact with the system and system signals.

Based on findings that interaction experience with a system changes trust (Kraus et al., 2020; Leichtmann, Lottermoser, et al., 2022), a second goal was to explore changes of trust, willingness to work with the robot, and self-efficacy in working with the robot over the course of the study to test for changes with an increase in information richness over time.

Finally, while quantitative measures enable conclusions on mental model formation based on certain pre-defined indices, qualitative approaches have the advantage of allowing for a more in-depth investigation as “qualitative data can play an important role by interpreting, clarifying, describing, and validating quantitative results, as well as through grounding and modifying” (Johnson et al., 2007, p. 115). Thus, a third aim was the triangulation of both approaches.

As a result, we conducted two studies to follow such a mixed-methods approach. To explore dynamic adjustments during human-robot interaction, we used a newly developed cooperative mixed-reality game with a real industrial robot and a virtual underwater environment in which users had to play three cooperative mini-games with the robot. This game-based interaction was the basis for both studies. In the quantitative study, self-reports and objective data collected during the game were used, and for the qualitative study, semi-structured interviews were conducted after participants played the game.

4. General methods

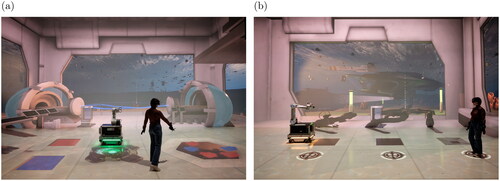

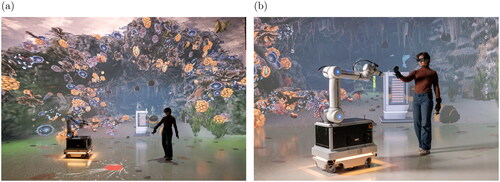

As both studies are based on the same mixed-reality game, we first describe the materials and the human-robot interaction setting used for the game, the game flow, and give a general overview of the two studies. In brief, in the cooperative mixed-reality game “CoBot Studio Clean-Up” players are tasked with three mini-games that they play in cooperation with a real mobile manufacturing robot in a projected 3D virtual environment (Reiterer et al., 2023). The narrative is to clear an underwater landscape of floating trash by solving the mini-games.

4.1. Materials and human-robot interaction setting

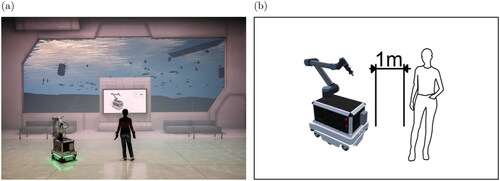

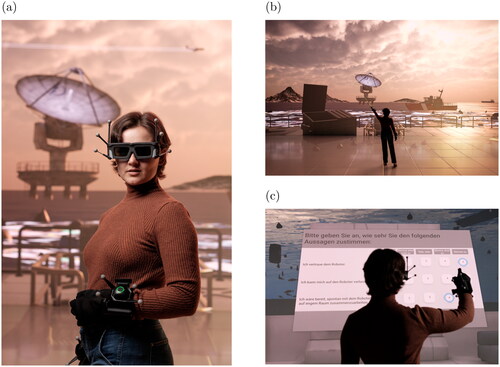

The game took place in the Deep Space 8K of the Ars Electronica Center Linz, a unique large-scale projection-based VR/MR environment with two projection screens of 16 by 9 meters on the floor and a wall serving as an interactive immersive media infrastructure (Kuka et al., 2009). The robot in the game was the prototype mobile manipulator CHIMERA (JOANNEUM RESEARCH Forschungsgesellschaft mbH, 2022; Mara et al., 2021), which combines a MiR100 mobile platform (Mobile Industrial Robots, 2022) with a UR10 collaborative serial manipulator (Universal Robots, 2022). To ensure safety standards, emergency stop buttons and constant monitoring of the robot by a human operator allowed a shutdown in case of technical problems.

4.2. Game flow

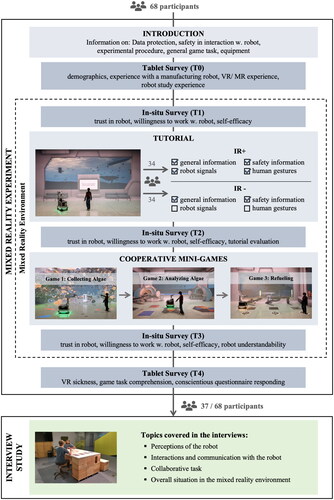

Before starting the game, participants were first informed about the course of the study, data protection regulations, the equipment and MR setup, and safety information, and then gave their informed consent. Additionally, they completed a demographic questionnaire. In the game room (Deep Space 8K), participants were familiarized with the CHIMERA robot, the equipment (see Figure 3a), as well as with the MR game environment (see Figure 3b). Participants then answered an in-game questionnaire (see Figure 3c) on trust in the robot (trust), willingness to work with the robot (WTW), and self-efficacy in working with the robot (self-efficacy) as a baseline measure (time point T1).

Figure 3. Pre-game mixed reality introduction. (a) Game equipment on a person (b) welcome scenario (c) in-game questionnaire example.

Next, participants received information on the robot and ways of interacting with the robot in a tutorial. The game tutorial featured two different versions, serving as an independent variable to manipulate information richness in the quantitative study. In both groups, participants were placed within the environment in front of a virtual screen near the robot to watch the tutorial. The information was given by an artificial voice and corresponding illustrations were shown on the screen. Both groups received basic information about the robot including safety information and technical details (robot speed, the range of the robot arm, and the minimal distance that the robot maintains with human users). To manipulate information richness, half of the participants received additional elaborated information (IR+) about how to communicate with the robot, including robot light signals and robot gestures (e.g., the robot calling for attention with an arm gesture as shown in Figure 4a), as well as human gestures that can be used to get the robot’s attention or to command the robot to come (e.g., the “come”-gesture shown in Figure 4b). The tutorial with basic information (IR−) lasted 55 s and the tutorial with additional elaborated information (IR+) 3 min 30 s. Videos of the tutorials can be found online at https://osf.io/z9nx7/.

Figure 4. Tutorial examples for the group with high information richness. (a) robot attention gesture, (b) human “come”-gesture.

After the tutorial but before the game start (T2), participants answered another in-game questionnaire on trust, WTW, self-efficacy, and an evaluation of the tutorial. Finally, the three mini-games started.

For the first mini-game “Collecting Algae” (see Figure 5), the environment depicts a patch of sea floor. The task is to jointly classify and collect 10 pieces of algae with the mobile robot. They appear as 3D objects initially colored to provide only little contrast against the floor, upon which they are distributed in an irregular, non-random pattern. Only the player can reveal the actual color of an algae object (blue or red). The blue ones can be picked up by the player, and the robot can collect the red ones. This asymmetric task design leads to occasions in which either team member is required to get the other’s attention and convey that they should perform a desired action. Accordingly, communication between human and robot includes mutual raised-hand and beckoning gestures. In addition, the robot points at an algae object the human should scan or take once they are close enough, and it can perform a shooing gesture if the human is in its path when it needs to collect an object.

Figure 5. Impressions of the mini-game “Collecting Algae” showing a human interacting with the industrial robot in the mixed-reality underwater environment.

The second mini-game, “Analyzing Algae”, takes place in a different room of the compound. The robot is stationary near the center. Three red and two blue algae objects are lying on the right side. They are to be analyzed by the robot, and the player should assist by putting an object in front of the robot and, when the analysis is done, throwing it into the floor hatch marked with the corresponding color on the left.

For the third mini-game, “Refueling”, three hatches are arranged in a straight line from about the center to the right of the floor. On the far left along the same line a dispenser is located, where in each of five iterations one capsule appears. The robot takes one capsule at a time and moves it across the floor toward its pre-assigned destination. While traversing the path between the dispenser and the target hatch, the robot points at the destination by perpetually adjusting the angle of its elbow joint. At a position before the first hatch, the player must guess into which hatch the robot wants to push the capsule and choose one via its floor button. The robot then drops the capsule into the correct hatch, which is followed by the next iteration.

After these three mini-games, a third in-game questionnaire was displayed (T3) on trust, WTW, self-efficacy and on user understanding of robot signals and intentions.

After they had taken off the equipment and left the projection room, participants completed a final questionnaire (T4) on a tablet computer about player guidance (e.g., about VR sickness). Some participants were invited to take part in an interview study about their game experience.

4.3. Overview of studies

As noted earlier, we used this mixed-reality game for two different empirical studies to understand human mental model formation.

The first study was a quantitative experiment manipulating information richness in two different tutorials before the interaction with the robot game partner and further explored developments over time using repeated measures during the game with 68 participants.

In a second, qualitative study, 37 participants were invited to interviews after the game to get an in-depth understanding of mental model formation.

An overview of the studies and data collection is depicted in the corresponding overview figure.

In the following we will describe the quantitative experiment and the qualitative interviews separately for better clarity and readability, before results are integrated in a general discussion section.

4.4. Quality control and research ethics

The studies comply with ethical standards including the Declaration of Helsinki, the APA code of conduct, as well as national and institutional requirements. For both studies, every participant gave informed consent before participation and could have terminated participation at any point without negative consequences.

To ensure high scientific standards, we employed comprehensive strategies to minimize research biases. These include randomizing participant assignment, implementing structured protocols to ensure consistency, providing extensive training for study personnel to standardize instructions, and maintaining meticulous control over external influences through the enforcement of identical settings and procedures. To minimize research biases in interviews, we ensured consistency and reliability in data collection by employing trained interviewers and by using structured interview guidelines with predefined questions. To further minimize researchers’ degrees of freedom, we preregistered our studies (Leichtmann, Meyer, et al., 2022).

5. Study 1: Quantitative experimentation

First, we describe the methods and results of the quantitative study and short interpretations.

5.1. Methods for quantitative analysis

5.1.1. Study design

The analysis is based on a between-subjects design manipulating information richness on two levels: one group received only basic information about the mobile cooperative robot (IR−; i.e., technical and safety information), and one group received additional detailed information on robot signals and human gestures for communication with the robot (IR+). Additionally, the study included different repeated measurements to test how robot-related attitudes (e.g., trust) changed throughout the experiment.

5.1.2. Sample description

A total of N = 68 participants played the game. After exclusion of participants due to simulation sickness, technical errors, or inattentive questionnaire responding, data of N = 61 participants were used for analysis, of which 30 received the more detailed tutorial and 31 the tutorial with basic information only. The sample size was justified by a preregistered power analysis (Leichtmann, Meyer, et al., 2022) using G*Power (Faul et al., 2007). Of the N = 61 participants, 38 identified as female, 23 as male, and none as non-binary. The mean age was … years (…). About 82% of participants held a university degree. Most participants had never interacted with a manufacturing robot (90%) or participated in an HRI study before (92%). 52% of participants had experienced VR and 63% the Ars Electronica Deep Space 8K or a comparable environment more than once before.

5.1.3. Dependent variables

Participants’ subjective ratings were collected through in-situ questionnaires in the virtual environment (time points T1-T3) or on a tablet computer after the game (time point T4). In-situ questionnaires were presented either before the tutorial (T1), right after the tutorial (T2), or at the end of the game (T3). All items in these questionnaires were answered on a 5-point Likert scale ranging from 1 = “disagree” to 5 = “agree”, unless stated otherwise.

Trust in the robot, willingness to work with the robot, and self-efficacy in working with the robot were measured three times (T1-T3). Trust in the robot was measured using the two-item subscale “Trust in Automation” of the “Trust in Automation Questionnaire” by Körber (2019). To assess the willingness to work with the robot, a self-developed one-item scale was used. Self-efficacy in working with the robot was measured with four items based on Neyer et al. (2012) (α = .65 to .85). Additionally, after the tutorial (T2), participants evaluated the tutorial on five bipolar items (e.g., “useless (1) - useful (5)”) (α = .84). After the game (T3), participants’ perceived understanding of the robot’s signals and its intentions was measured using the four-item “Understandability” subscale of the “Trust in Automation Questionnaire” by Körber (2019) (α = .64).

To measure participants’ performance in the interaction with the robot the time needed to complete each mini-game (in minutes) was automatically tracked by the VR system.

5.2. Results and discussion of quantitative study

Null-hypothesis significance testing (NHST) with a global α level of .05 was used. For group comparisons, non-parametric Brunner-Munzel tests (Brunner & Munzel, 2000) were performed and local p-values were adjusted using the Bonferroni-Holm step-down procedure to control the family-wise error rate (FWER) (Holm, 1979). Additionally, Bayes Factors (BF) were calculated using the statistics program JASP (JASP Team, 2019; van Doorn et al., 2021). For the Bayesian t-tests, a Cauchy prior distribution with … was used. The null hypothesis assumes no difference between groups and thus an effect size of zero.
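The Bonferroni-Holm step-down adjustment mentioned above can be illustrated with a short sketch. It is not the authors' analysis script: the function name `holm_adjust` and the p-values are hypothetical, and the code only demonstrates the general procedure of multiplying the k-th smallest of m p-values by (m − k + 1) and enforcing monotonicity.

```python
def holm_adjust(p_values):
    """Return Holm step-down adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (rank = k - 1) is multiplied by (m - k + 1).
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Adjusted p-values must not decrease along the sorted order.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical local p-values for three group comparisons (not study results).
local_p = [0.01, 0.04, 0.03]
adjusted_p = holm_adjust(local_p)
significant = [p <= 0.05 for p in adjusted_p]
print(adjusted_p, significant)
```

For the test itself, SciPy provides `scipy.stats.brunnermunzel`, whose p-values could be passed to such an adjustment; whether the original analysis used that implementation is not stated in the text.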

5.2.1. Prior information: Difference between tutorial groups

Based on the research question, we first explored the effect of prior information in which information richness was manipulated in two different tutorials. Building on the work of Körber et al. (2018), we tested whether there was a difference between the two tutorial groups.

However, unlike Körber et al. (2018), we did not find an effect of prior information on user trust in the system (see Table 1). Similarly, the groups did not differ in their willingness to work with the robot at any time of measurement (see Table 2), and with one exception they also did not differ in their self-efficacy in working with the robot (see Table 3). Surprisingly, before the tutorial (T1) one group reported significantly higher self-efficacy than the other group. As this variable was measured even before the actual intervention, this difference might be caused by sampling error. However, while there was an imbalance in which the group with the basic tutorial (IR−) reported higher self-efficacy in the beginning, this difference vanished over time: according to the Bayes factor, it is about three times more likely that there is no difference between the groups after the game (T3) than that there is one. Hence, the self-efficacy of the group with additional tutorial information on human-robot communication (IR+) increased over the course of the collaborative game in such a way that the two tutorial groups converged in their self-efficacy ratings toward the end of the game (T3). So while the IR+ group may have had a biased self-assessment at the beginning, perhaps their perceived competence increased with the more elaborate tutorial as they learned information relevant for interaction (gestures and robot signals). The values of the group that only received basic information (IR−), however, may have remained largely the same on a descriptive level, since the tutorial may have contained little useful information for direct interaction with the system, which ultimately led to the alignment at the end of the game (T3).
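The statement that the absence of a group difference is "about three times more likely" can be read in terms of the Bayes factor in favor of the null hypothesis. As a sketch of the standard definition, with $D$ denoting the observed data and $H_0$, $H_1$ the null and alternative hypotheses (notation introduced here for illustration; the exact values reported by the authors are not reproduced):

```latex
\mathrm{BF}_{01}
  = \frac{p(D \mid H_0)}{p(D \mid H_1)}
  = \frac{1}{\mathrm{BF}_{10}},
\qquad
\mathrm{BF}_{01} \approx 3
  \;\Longleftrightarrow\;
  \mathrm{BF}_{10} \approx \tfrac{1}{3}.
```

Strictly speaking, a Bayes factor of about 3 means the data are about three times more likely under $H_0$ than under $H_1$; it equals the posterior odds of the hypotheses only if both are considered equally plausible beforehand.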

Table 1. Trust.

Table 2. Willingness to work with robot.

Table 3. Self-efficacy in working with robot.

The effects on tutorial evaluation were stronger (see Table 4). Participants with the more elaborate tutorial (IR+) evaluated their tutorial significantly more positively (e.g., as more helpful) than the group with only basic information (IR−), with a large effect size. However, the data did not show evidence for a difference in understandability of the robot and its intentions (see Table 4). While one might expect that individuals with a more refined mental model of the counterpart would complete tasks more efficiently, thus requiring less time, the tutorial did not show a significant effect on the time needed for task completion (see Table 5). This observation could imply that the tutorial had little to no influence on shaping the mental model.

Table 4. One-time measured constructs.

Table 5. Time needed to complete each mini game.

While the more elaborate tutorial was rated as more helpful, the results do not allow much inference about the mental models participants had of the robot, as most results do not provide evidence for group differences. Thus, it is questionable whether the tutorial with interaction-relevant information in addition to the basic information really resulted in higher information richness for users or whether it influenced the mental models in such a way that they caused direct changes in trust or willingness to work. Only in the case of self-efficacy could this have led to a compensation of an initial sampling bias.

5.3. Changes over time: Trust, self-efficacy, and willingness to work

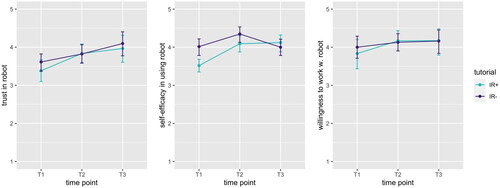

Additionally, we tested changes in variables over multiple measurements throughout the study, theoretically based on changes in mental models due to growth in information richness over time. The mixed ANOVAs, with tutorial as the between-subject factor and measurement time as the within-subject factor, revealed a significant change over time for trust in the robot (see Table 6) and for self-efficacy (see Table 7).

Table 6. Mixed ANOVA for trust.

Table 7. Mixed ANOVA for self-efficacy in working with the robot.
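To illustrate how a mixed ANOVA with one between-subject factor (tutorial) and one within-subject factor (measurement time) partitions variance, the following Python sketch computes the three F ratios from raw scores. It is not the analysis code used in this study. It assumes equally sized groups and complete data, applies no sphericity correction, and uses invented toy scores with only two time points so that the result can be checked by hand (the study itself used three time points, which the function handles in the same way). The function name and data layout are hypothetical.

```python
from statistics import fmean


def mixed_anova(data):
    """F ratios for one between-subject factor (group) and one within-subject
    factor (time). `data` maps each group label to a list of participants;
    each participant is a list with one score per time point.
    Assumes equally sized groups and complete data (balanced design)."""
    groups = list(data)
    a = len(groups)              # number of groups
    n = len(data[groups[0]])     # participants per group
    b = len(data[groups[0]][0])  # number of time points
    if n < 2 or any(len(data[g]) != n for g in groups):
        raise ValueError("this sketch needs equally sized groups with n >= 2")
    if any(len(row) != b for g in groups for row in data[g]):
        raise ValueError("every participant needs one score per time point")

    scores = [x for g in groups for row in data[g] for x in row]
    grand = fmean(scores)
    g_mean = {g: fmean([x for row in data[g] for x in row]) for g in groups}
    t_mean = [fmean([row[t] for g in groups for row in data[g]]) for t in range(b)]
    cell = {g: [fmean([row[t] for row in data[g]]) for t in range(b)] for g in groups}

    # Sums of squares for each source of variance
    ss = {
        "group": b * n * sum((g_mean[g] - grand) ** 2 for g in groups),
        "subjects": b * sum((fmean(row) - g_mean[g]) ** 2
                            for g in groups for row in data[g]),
        "time": a * n * sum((m - grand) ** 2 for m in t_mean),
        "interaction": n * sum((cell[g][t] - g_mean[g] - t_mean[t] + grand) ** 2
                               for g in groups for t in range(b)),
        "error": sum((row[t] - fmean(row) - cell[g][t] + g_mean[g]) ** 2
                     for g in groups for row in data[g] for t in range(b)),
    }
    df = {
        "group": a - 1,
        "subjects": a * (n - 1),
        "time": b - 1,
        "interaction": (a - 1) * (b - 1),
        "error": a * (n - 1) * (b - 1),
    }
    ms = {source: ss[source] / df[source] for source in ss}
    return {
        "ss": ss,
        "df": df,
        "ss_total": sum((x - grand) ** 2 for x in scores),
        # The group effect is tested against between-participant variability;
        # time and group-by-time are tested against within-participant error.
        "F_group": ms["group"] / ms["subjects"],
        "F_time": ms["time"] / ms["error"],
        "F_interaction": ms["interaction"] / ms["error"],
    }


# Invented toy scores (assumption): two participants per group, two time points.
toy = {"IR+": [[1, 3], [2, 4]], "IR-": [[2, 2], [3, 5]]}
result = mixed_anova(toy)
print(result["F_group"], result["F_time"], result["F_interaction"])
```

Obtaining p-values additionally requires the F distribution with the corresponding degrees of freedom, which is not part of the Python standard library. With three or more time points, a check of the sphericity assumption would also be needed in a real analysis.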

Post-hoc analyses revealed that people expressed significantly more trust in the robot after the tutorial (T2) and at the end of the game (T3) than before the tutorial (T1). Since the trust ratings at T2 and T3 did not differ significantly, these findings suggest that receiving the tutorials led to an increase in participants’ trust, which may have remained relatively stable throughout the interaction with the robot.

The results are similar for self-efficacy. This could mean that while the increase in information richness due to the tutorial affected participants’ mental models in such a way that trust and self-efficacy increased, additional information obtained through interaction experience did not significantly affect mental models in this regard. As assumed in the previous section, the significant interaction of tutorial and time for self-efficacy seems to confirm that the initially different levels converge over time (see Table 7). These results are also shown graphically in Figure 7. No significant effect was found for the willingness to work with the robot (see Table 8).

Figure 7. Mean ratings per time point for self-reported trust, self-efficacy, and willingness to work with the robot for each group with high (IR+) and low (IR−) information richness.

Table 8. Mixed ANOVA for willingness to work with robot.

Thus, while the information content, manipulated via the different tutorial groups, showed little effect, it can be argued that receiving a tutorial in general (independent of content) did have an effect. Information richness seemed to increase (no matter which tutorial was used) and thus seemed to have significantly influenced trust and self-efficacy. However, the interaction experience does not seem to have affected these levels further. This may be due to several reasons. First, the tutorial may have generated adequate expectations that were confirmed in the interaction, so that the level of trust or self-efficacy did not change further. Second, the interaction may have dynamically changed the values through multiple increases and decreases throughout the game so that, by chance, they ended up at the same level again. Third, there may be a difference that is too small to be detected with the statistical power of this study. However, a lack of change in values does not necessarily imply that no change in mental models occurred. Mental models might have changed, but these changes may not have been sufficient to shift trust and self-efficacy levels. To gain more clarity in this regard, further qualitative analyses are needed.

6. Study 2: Qualitative interviews

In this section, we describe the qualitative study that took place after the participants had taken part in the quantitative experiment.

6.1. Methods for qualitative analysis

6.1.1. Data collection

To complement the quantitative experiment, open-structured qualitative interviews were conducted with 37 people directly after their activities in the Deep Space 8K. The interviews were set up following a narrative approach with open-ended interview questions and the aim of creating an organic conversation (Flick, Citation2018; Flick & Gaskell, Citation2000). The goal was to explore participants’ experiences and subjective impressions regarding the robot. Specifically, the interviews focused on the participants’ perceptions and reflections regarding (a) the interaction and communication with the robot, (b) the collaborative tasks (i.e., the three mini-games), and (c) the general situation in the Deep Space 8K. The deployed interview technique allows the interviewees to outline issues and aspects regarding the aforementioned topics based on their subjective relevance (Flick & Gaskell, Citation2000). The interviews were conducted by three interviewers, one experienced with qualitative interviewing, two familiar with the setting in the Deep Space 8K.

An interview guideline was used in a flexible way: it contained relevant topics and questions, but the questions were not asked in a strict order; rather, they were asked whenever useful during the conversation. The interview started with the open question: “How was it for you in the Deep Space 8K? How did it go for you? – Please tell us briefly about it”. The further course of the conversation was largely determined by the initial accounts of the participants. This means the interviewer picked up on topics and issues mentioned by the interviewees that were central to the study goals (e.g., perceived problems concerning the interaction with the robot) and encouraged the participants to elaborate on them. At the end of the conversation, any remaining open questions that the participants had not addressed earlier were asked, e.g., how the participants had experienced the tutorial.

6.1.2. Data analysis

The interviews were conducted in German, audio recorded, and later transcribed. The analysis of the qualitative data followed the approach of qualitative content analysis (Schreier, Citation2014). In addition to a broad initial analysis, and in order to complement the quantitative study results, the qualitative data were specifically screened and structured to select relevant material containing participants’ reflections on the formation of mental models (i.e., their subjective understanding of how the interaction with the robot works). This happened in a back-and-forth process between the second and the first author, who discussed initial results from the qualitative content analysis. Based on that, the following analytical categories were defined: dynamics of subjective impressions, reports on what changed during the interaction with the robot, and reflections on the tutorial. Further coding of the selected material therefore focused on participants’ reflections on how their subjective understanding of the interaction and communication with the robot changed during the experiment, as well as on statements concerning the tutorial. This analysis aimed at reconstructing how mental models are formed.

6.2. Results and discussion of qualitative inquiry

In this section we describe the results of the qualitative content analysis complementing the quantitative study results. This comprises insights on the participants’ interpretation of the overall experience, and then specifically their formation of mental models, and reflections on the tutorial.

6.2.1. Participants’ interpretation of the overall experience

The setup in the Deep Space 8K created a complex setting involving a physical environment, a mixed-reality environment, a human-robot interaction, mini-games, equipment (e.g., shutter glasses, gloves), a research experiment, etc., with plenty of room for subjective interpretations. Accordingly, the interviewees expressed different subjective perceptions of the robot, for example, rather instrumental perspectives (e.g., the robot as a tool) or social attributions (e.g., praising the robot), and some emphasized the human-robot collaboration.

Concerning the experienced cooperation with the robot, the role of the human was described as giving assistance to the robot (e.g., participants described themselves as “unskilled workers”), as receiving support from the robot (e.g., participants described themselves as “the boss” and the robot as “the assistant”), or as equal to the robot. Related to that, participants described their role as either active (taking the initiative) or rather passive (observing the robot). For example, some participants described the collaboration as having to tell the robot what to do, while others stated that they observed the robot because it might already know what to do. Additionally, depending on the task (mini-game), participants also described having to switch between an active and a rather passive role. Concerning mental models, the participants thus conceptualized the robot as more or less active and autonomous.

6.2.2. Formation of mental models

Focusing on the process, the analysis shows that the initial phase of interaction was characterized by a lack of knowledge and by uncertainty. This was more of an issue for the participants who received the brief tutorial with basic information. However, participants who received the more detailed tutorial also perceived some uncertainty (e.g., how exactly is it communicated to the robot that it should come over?). Specifically, the participants reported confusion and uncertainty due to a lack of feedback from the robot, for example, whether the robot had perceived their signals (hand gestures), whether the robot was still processing, whether their input (gestures) had not been detected (e.g., because they were too far away or at the wrong height), or whether the robot needed additional input. Further, participants reported that it was hard to detect signals from the robot (e.g., where exactly is the robot arm pointing?). Beyond that, some participants reported uncertainty regarding the robot’s level of autonomy. They questioned what the robot might have done automatically or autonomously (e.g., whether the robot would pick up the algae on its own). Further, some participants were unsure whether their input (a certain gesture) had an effect or whether the robot would have done the desired activity anyway. Some participants even wondered whether the robot acted randomly.

Additionally, participants reported uncertainties regarding the capability of the robot to recognize people. Participants mentioned that they got out of the way when the robot was approaching. Participants also referred to uncertainties concerning how to control the robot (e.g., which gestures exactly worked) or how to get the robot to do something specific (e.g., to pick up an alga or to come over). Some participants also reported talking to the robot, despite being aware that this would not work: “Please understand me”. All these open questions regarding the functionality of the robot and the human-robot interaction, as reported by the participants, point to mental models that are dynamic, open, and under development.

Based on these uncertainties regarding the human-robot interaction, the analysis reveals different trial-and-error strategies and tactics that the participants deployed to develop a mental model useful for successfully interacting with the robot. One basic strategy (as reported by the participants) was to constantly try things out. Participants reported trying again and again until the robot responded or until they had the feeling it worked. For example, participants tried different hand movements (gestures) and, when convinced that a certain gesture worked, kept using it. Related to that, another strategy to get a better understanding of the robot was to use a process of elimination and to test assumptions about how the robot works. Participants described discarding certain attempts if they believed that an attempt would not work (e.g., walking next to the robot as a signal to pick up an alga, which was ineffective). As another example, some participants reported wondering what the light signals at the bottom of the robot might mean. During the course of the interaction, however, it became obvious that the light signal was not feedback concerning the human-robot interaction (e.g., whether an input gesture was detected); rather, it merely indicated the status of the robot as moving or standing. Further, participants reported observing what the robot was doing as a relevant strategy to get a better understanding of the robot.

Some specific tactics the participants described for trying things out and for testing or eliminating certain assumptions were, for example, luring gestures to get the robot to come over, or placing themselves at a certain position in the room to get the robot to move to their position or to get its attention. Another approach was guessing (e.g., which specific hatch the robot arm was pointing at). Some participants tried to identify a certain sequence or logic behind an activity (e.g., the sequence of hatches in mini-game 3), indicating that these participants prioritized the logic of the mini-games over the human-robot collaboration. Further, some participants reported having repeatedly pressed the button of the controller to trigger a certain reaction from the robot.

These attempts to interact with the robot are presented in this analysis regardless of whether they worked out. They nevertheless show the participants’ subjective interpretations of how the human-robot interaction might work. The overall assessment of the participants was that the communication with the robot was more difficult and unclear at the beginning and became better and easier over time. The qualitative data indicate that participants constantly interpret aspects related to the robot (robot behavior, signals, movements, etc.) as well as aspects related to the tasks of the mini-games and the overall setting. The subjective understanding of how the robot and the interaction with the robot work is constantly shaped and reshaped by these interpretations (regardless of whether they correspond to how the robot actually works).

6.2.3. Tutorial

Overall, there was a rather positive assessment of the tutorial. For example, participants stated that the tutorial was useful and helpful or, more specifically, that without the tutorial it would have been very difficult or even impossible to interact with the robot. At the same time, there was a widely held opinion among the participants that a more detailed and expanded tutorial would be even more useful. This was especially the case for the participants who received only basic information in the brief version of the tutorial. These participants often described the tutorial as very general and unspecific.

7. General discussion

Based on an immersive, interactive mixed-reality game with a physical mobile robot and a virtual environment, we explored the mental model formation of human game partners by triangulating two consecutive studies: a quantitative experiment and a qualitative interview study. In the following section, we offer a synthesis of the quantitative and qualitative study results and discuss them in comparison with related work.

7.1. Summary and integration

In the quantitative study, we observed that the tutorial had a significant impact on the participants’ trust and self-efficacy expectations when comparing levels before and after the tutorial. Participants seemed to have received information in the tutorial that was integrated into a mental model and eventually led to an adjustment of these psychological variables. This effect illustrates a process of dynamic adaptation as observed in other studies (Kraus et al., Citation2020; Leichtmann, Lottermoser, et al., Citation2022). However, since we did not find a difference between the tutorial groups, which differed in the richness of information, one could assume that the higher level of information richness did not lead to a better mental model. The additional information may have been too little to make a difference, or it could not be properly integrated into a mental model in the given time. Nevertheless, participants evaluated the tutorial with higher information richness more positively, showing that participants actually have a demand for higher amounts of prior information to form their expectations and that such a higher amount was not distracting or hindering in this study. This is in line with the feedback from the qualitative interviews, in which participants described the tutorial as basically helpful but stated that more detailed information would have been better.

The quantitative null results of information richness on trust or self-efficacy differ from the findings of Körber (Citation2019), who found a difference between tutorial groups for different variables. However, it must be emphasized that the tutorials presented by Körber (Citation2019) showed different content. While our tutorial group with more information received additional information on how to communicate with the robot and explanations of robot signals, Körber (Citation2019) showed an additional reminder of the system’s limitations in one group. This supports an intuitively reasonable conclusion: Mental models are adapted to different degrees depending on the content of new information, which in turn has different effects on psychological variables such as trust or self-efficacy. This means that complex mental models, which contain very different aspects of content with different valence, can contain both trust-decreasing and trust-enhancing aspects at the same time. Such structures would therefore lead to a continuous adjustment of trust levels depending on the weighting of the different aspects in the model. The dynamics of such complex mental models are also discussed in the literature, in line with our findings. For example, Leichtmann et al. (Citation2023) demonstrate that the expectations of workers toward robots are complex in nature: they can be negative in some aspects, such as social impact, while other aspects, such as task-related changes, can be more positive, and these aspects can influence the overall evaluation differently depending on the company.

The results from our qualitative inquiry showed that even participants who received the more detailed tutorial deployed trial-and-error strategies to figure out not only which gestures worked but also precisely how they worked. The trial-and-error strategies described in the qualitative analysis indicate that the mental models of the robot were too rudimentary in our study, so the participants tried out different gestures or other ways to communicate with the robot, or even resorted to other tactics (e.g., trying to understand the game logic). The results of the qualitative inquiry thus show a dynamic formation of mental models. By constantly interpreting and questioning the different elements of the robot and its environment, such as the robot’s (arm) movements and (light) signals, and by subjectively interpreting the rationale behind the robot’s behavior (e.g., its autonomy), participants form a practically useful mental model for interacting with the robot.

Therefore, not every increase in information automatically leads to an equally strong adjustment that affects actual behavior or psychological variables such as trust. For example, a trust-lowering effect of reminders of system limits may be larger than the trust-increasing effect of communication information, because the effects of communication information may only become visible after a period of trial and error. Information richness as discussed by Gill et al. (Citation1998) may therefore (1) need to exceed a critical amount to trigger an adaptation process and (2) have an effect that is moderated by its content.

In addition to the content of the tutorial, many other variables could serve as potential moderators, and their effects warrant further investigation in future studies. Research in video game studies (see Andersen et al., Citation2012; Kao et al., Citation2021; Morin et al., Citation2016), for example, suggests that factors such as task complexity (Andersen et al., Citation2012) or players’ prior experience (Morin et al., Citation2016) can also influence the effectiveness of tutorials. In the case of our study, it can be speculated that participants had little prior experience with the robot or the complex game environment and hence possessed only rudimentary mental models, which made a tutorial effective. At the same time, the tasks in the study (collecting algae) may not have been complex enough for the tutorial to have a significant impact on game outcomes (e.g., time to completion). Future studies could therefore use more complex tasks that require more intense cooperation to dive deeper into the effectiveness of tutorials.

7.2. Theoretical implications

The tutorial in our study differs in content from that in other work (Körber, Citation2019) and consequently also differs in how it affects human cognition and behavior, as discussed in the previous section. This suggests that the content of tutorials may function as a moderator of effects. Different content affects different aspects of the mental model, and this in turn has different effects on the results. In line with previous work (Leichtmann et al., Citation2023), mental models could therefore be considered complex networks of connected information and beliefs. Such complex networks could affect outcomes differently depending on their structure. The idea of mental models as networks of connected information about a robot and its behavior makes it possible to integrate different aspects that vary in valence (e.g., limitations, communication pathways, data protection, and more). For a better theoretical understanding, more in-depth analysis is necessary of which aspects of the mental model affect which outcome and to what degree.

As a second theoretical conclusion, it can be stated that while new information might be integrated into the existing mental model, not all such changes might become visible in behavior. There might be a certain threshold for how much a mental model has to change in order to effect changes in other psychological variables such as trust. For example, a small amount of information might affect the mental model only to a limited degree that does not change other aspects connected to certain expectations. Future studies could systematically vary the amount and impact of certain types of information and test at which point such information causes differences in connected psychological constructs such as trust.

While the experiment tested the effects of incoming information on psychological outcomes, information that is not explained also affects such outcomes because users question different elements of the robot (e.g., users trying to make sense of light signals that have not been explained). Future studies could test interaction effects on how user behavior changes when a certain type of information is lacking while a different type is given, using 2 (information A given or lacking) × 2 (information B given or lacking) designs.
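As a minimal sketch of such a 2 × 2 between-subject design, the following Python snippet enumerates the four conditions and computes the interaction contrast from cell means, that is, the extent to which the effect of giving information A depends on whether information B is given. The factor names, level labels, and cell means are hypothetical placeholders and not data from this study.

```python
from itertools import product

# Hypothetical factors (assumption): two types of tutorial information,
# each either given or lacking.
FACTORS = {
    "information_a": ("given", "lacking"),
    "information_b": ("given", "lacking"),
}


def conditions(factors):
    """Enumerate every combination of factor levels (the design cells)."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


def interaction_contrast(cell_means):
    """Difference between the effect of A when B is given and the effect of A
    when B is lacking. `cell_means` is keyed by (level_of_a, level_of_b).
    A value of 0 means the two effects are purely additive."""
    effect_a_b_given = cell_means[("given", "given")] - cell_means[("lacking", "given")]
    effect_a_b_lacking = cell_means[("given", "lacking")] - cell_means[("lacking", "lacking")]
    return effect_a_b_given - effect_a_b_lacking


design = conditions(FACTORS)

# Invented cell means on an arbitrary rating scale (assumption): information A
# only helps when information B is also given.
example_means = {
    ("given", "given"): 5.0,
    ("given", "lacking"): 3.5,
    ("lacking", "given"): 4.0,
    ("lacking", "lacking"): 3.5,
}
contrast = interaction_contrast(example_means)
print(len(design), contrast)
```

In an actual study, the contrast would be tested inferentially (e.g., as the interaction term of a two-way ANOVA) rather than read off the cell means.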

Finally, we would like to emphasize our methodological approach: While we manipulated the tutorial, the games themselves were deliberately left open to allow for different interaction and play experiences. Thus, different phenomena that emerged in a playful interaction can be observed. This deliberately exploratory-playful approach makes it possible to combine quantitative and qualitative approaches. Although effects at the quantitative level may be ambiguous and difficult to interpret, interpretations can be supplemented by qualitative observations and interviews to allow for more stable conclusions. This has the advantage that phenomena can be observed and studied bottom-up without artificially creating a previously known phenomenon in the laboratory. This playful approach thus places itself at the point in the research process that contributes to a refinement of theories and hypotheses even before concrete confirmatory tests, for which very precise predictions usually have to exist but are often missing in the literature, as Scheel et al. (Citation2021) point out. Research disciplines such as HRI (Leichtmann, Nitsch, & Mara, Citation2022) may at times not be ready for hypothetico-deductivism because tests are weak, and researchers should thus engage more in steps prior to confirmatory tests, such as descriptive and naturalistic observation as well as exploratory experimentation (see Scheel et al., Citation2021). Artistic-playful approaches as presented here (mixed-reality, museum-based cooperative games) can make an important contribution in this respect, especially in HRI (Leichtmann, Nitsch, & Mara, Citation2022).

7.3. Limitations

Some limitations of the current study need to be pointed out and require further investigation in future studies. First, it must be emphasized that while the implementation of the study as a mixed-reality experience with a real robot within a playful virtual underwater environment is innovative, the novelty of the approach and its playfulness can distract from the actual task and the interaction with the robot. For instance, the experimenters anecdotally reported that individuals paused throughout the game to consciously observe the underwater environment, and it also emerged from the interviews that individuals were trying to understand the game logic itself rather than the robot alone. In future studies, participants could be given more time to explore the VR environment before starting the actual task. This could prevent individuals from being too distracted by exploring the environment during the task.

In addition, the complex study design requiring the presence of several people during the study (experimenter, etc.) leads to a certain artificiality of the situation potentially influencing participant behaviors. A direct replication in VR where experimenters are not visible could shed some light on such experimenter influence.

A third limitation of the specific study setting is the museum location. This could influence which individuals are interested in participating and therefore may not be representative of the population. Although we did not specifically recruit museum visitors, it can be assumed that people who are interested in visiting an art museum have a higher level of education or a more open personality (DiMaggio, Citation1996; Kirchberg, Citation1996; Mastandrea et al., Citation2007, Citation2009). In this case, it might be speculated that individuals with a strong interest in innovation and technology are more likely to participate. Future studies with more diverse samples are needed.

Finally, it has to be mentioned specifically for the quantitative study that the true effect sizes of, for example, the manipulation of the tutorial appear to be smaller than initially expected, and therefore the present sample size might be too small and the statistical power too low, a problem that can be observed in the HRI literature in general (Leichtmann, Nitsch, & Mara, Citation2022). However, the sample size in this study was already at the maximum possible level in the given time period for this implementation effort. Nevertheless, future studies will have to use other study designs to achieve larger effect sizes, or reach larger sample sizes through multi-lab projects. For example, stronger manipulations of the tutorials could lead to stronger effects. Differences in information richness could be enlarged by providing even more information on the robot’s functioning or by including short exercises in which the users can try out the communication with the robot as part of the tutorial (i.e., “learning by doing”) to arrive at a more integrated mental model. This freedom to explore the interaction might also minimize trial-and-error strategies within the tasks themselves.

Another possible strategy to observe stronger effects is the use of tasks with higher complexity that make a tutorial even more necessary as indicated by Andersen et al. (Citation2012). With such complex tasks the strongest effects could be expected especially for participants with only limited experience compared to more experienced users of robotic technology (Andersen et al., Citation2012; Morin et al., Citation2016).
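The concern about statistical power in the quantitative study can be made concrete with a rough calculation. The following Python sketch uses a normal approximation to the two-sided two-sample test to estimate the power for a given standardized effect size and the group size needed to reach a target power. The effect size of d = 0.5 and the even split of 68 participants into two groups of 34 are assumptions made for illustration only; neither is reported here as a property of the study, and an exact t-test calculation would yield slightly different numbers.

```python
from math import sqrt
from statistics import NormalDist


def two_group_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample comparison of means
    (normal approximation) for standardized effect size d."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = d * sqrt(n_per_group / 2)  # expected value of the test statistic
    # Probability that the statistic falls in either rejection region
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


def n_per_group_for_power(d, target=0.80, alpha=0.05):
    """Smallest group size whose approximate power reaches the target."""
    if d == 0:
        raise ValueError("no sample size reaches the target power when d = 0")
    n = 2
    while two_group_power(d, n, alpha) < target:
        n += 1
    return n


# Assumptions for illustration: medium effect (d = 0.5), 34 people per group.
power_now = two_group_power(d=0.5, n_per_group=34)
needed = n_per_group_for_power(d=0.5, target=0.80)
print(f"approximate power: {power_now:.2f}; needed per group for 80%: {needed}")
```

Because the required sample size grows with the inverse square of the effect size, halving the expected effect roughly quadruples the number of participants needed, which is one reason why stronger manipulations or multi-lab projects are attractive options.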

7.4. Practical implications

Although we could not find a significant effect on variables such as trust or self-efficacy, it is clear that in principle people would like more information about how to interact with the robot and how to interpret different signals. Tutorials appear to satisfy a certain need among users for clearer expectations about what to anticipate, thus potentially creating more accurate expectations regarding task-related concerns (see for example Leichtmann et al., Citation2023). This can be seen in the quantitative differences of the tutorial evaluation as well as in the statements made in the qualitative interviews. Here, however, even the detailed tutorial seems to offer too little information, or at least it was not prepared in such a way that it could be meaningfully integrated into a mental model. The fact that the participants could not form a sufficiently reliable mental model based on the rather simplistically presented prior information (in the form of frontal presentation of information and pictures) was also reflected in their strategies of trial-and-error in the interaction or by the fact that they sought other strategies of problem solving such as understanding a game logic instead of understanding the interaction partner.

There are two main takeaways here. First, in addition to the amount of information, the type of content also moderates the effect of a tutorial. Second, other forms of instruction might be more useful. For example, the new information could be presented directly in an exemplary situation, or gestures could be tried out in a tutorial to promote integration into a mental model. Thus, not only is factual knowledge about the system in the sense of declarative knowledge required, but also knowledge from direct experience with the system, corresponding to procedural knowledge.

The qualitative results demonstrate that not only the content of the tutorial has an influence on the interaction, but also what is not shown or not shown enough. For example, the interviews revealed that people formed their own interpretations of the light signals and attached their own meaning to them, as these may not have been explained properly in the tutorials. Therefore, tutorials should also include the information necessary to prevent frequent misinterpretations later on. Alternatively, the design itself should ensure that less central information is not presented so saliently that it is overinterpreted, so that no special clarification is required in tutorials.

8. Conclusion and outlook

This mixed-reality study with a mobile cooperative robot showed that individuals find information on how to communicate with their robotic interaction partner helpful and also desire correspondingly elaborate tutorials. However, more information on how to interact with the robot did not lead to a change in trust or self-efficacy in this case, and people still chose trial-and-error strategies in the interaction. It can be concluded that tutorials are effective, but that people also like to first test for themselves how a robot reacts. From the comparison of our results with the existing literature, a moderating effect of the tutorial content can be suspected. For example, the effect size and direction differ depending on whether a tutorial shows how to interact with the system or points out limitations of the system. Future studies should therefore examine different content, amounts, and depth of information for size and direction of effects, considering not just what is shown in the tutorial but also what is not shown. In addition, it would be valuable for future research to conduct moderator analyses, particularly in relation to task complexity and users’ prior experience, to enrich our understanding of effective instructional design in human-robot interaction.

Acknowledgments

We would like to thank the following persons who contributed to the success of this research project, whether by supporting the conceptualization or technical implementation of the mixed-reality game, by assisting data collection, or by providing valuable input during the writing of this paper: Lara Bauer, Stephanie Gross, Michael Heiml, Tobias Hoffmann, Brigitte Krenn, Thomas Layer-Wagner, Anna Katharina Paschmanns, Clarissa Veitch, Alfio Ventura, and Julian Zauner.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data that support the findings of this study are available from the corresponding author, M.M., upon reasonable request.

Additional information

Notes on contributors

Benedikt Leichtmann

Benedikt Leichtmann is Senior Researcher at the Department of Psychology of the Ludwig-Maximilians-Universität München in Germany after leaving the LIT Robopsychology Lab at Johannes Kepler University Linz in Austria in early 2023. He received his PhD in Psychology from RWTH Aachen University in Germany in 2021.

Thomas Meneweger

Thomas Meneweger is a research fellow at the LIT Robopsychology Lab at Johannes Kepler University Linz in Austria. He received a Master’s Degree in Sociology from the University of Salzburg in Austria in 2011.

Christine Busch

Christine Busch is a researcher at the Institute of Human Factors and Technology Management at the University of Stuttgart in Germany. She received a Master’s Degree in Psychology from Johannes Kepler University Linz in Austria in 2022.

Bernhard Reiterer

Bernhard Reiterer is a Senior Researcher at the Robotics Institute at Joanneum Research Forschungsgesellschaft mbH in Klagenfurt. He received a Master’s Degree in Computer Science from the University of Klagenfurt in Austria in 2007.

Kathrin Meyer

Kathrin Meyer is a research fellow at the LIT Robopsychology Lab at Johannes Kepler University Linz in Austria. She received a Diploma in Engineering for Computer-based Learning in 2005 and a Master’s Degree in Human-Centered Computing in 2017 from the University of Applied Sciences Upper Austria.

Daniel Rammer

Daniel Rammer is a Senior Researcher at the Ars Electronica Futurelab in Linz, Austria. He received a Master’s Degree in Media Technology and Design from the University of Applied Sciences Upper Austria in 2017.

Roland Haring

Roland Haring is Technical Director of the Ars Electronica Futurelab in Linz, Austria. He received a Master’s Degree in Media Technology and Design from the University of Applied Sciences Upper Austria in 2004.

Martina Mara

Martina Mara is Professor of Robopsychology and head of the LIT Robopsychology Lab at Johannes Kepler University Linz in Austria. She received her PhD in Psychology from the University of Koblenz-Landau in Germany in 2014 and her habilitation in Psychology from the University of Erlangen–Nuremberg in Germany in 2022.

References

- Andersen, E., O’Rourke, E., Liu, Y.-E., Snider, R., Lowdermilk, J., Truong, D., Cooper, S., & Popovic, Z. (2012). The impact of tutorials on games of varying complexity [Paper presentation]. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 59–68. https://doi.org/10.1145/2207676.2207687

- Beggiato, M., & Krems, J. F. (2013). The evolution of mental model, trust and acceptance of adaptive cruise control in relation to initial information. Transportation Research Part F: Traffic Psychology and Behaviour, 18, 47–57. https://doi.org/10.1016/j.trf.2012.12.006

- Berg, J., Lottermoser, A., Richter, C., & Reinhart, G. (2019). Human-Robot-Interaction for mobile industrial robot teams. Procedia CIRP, 79, 614–619. https://doi.org/10.1016/j.procir.2019.02.080

- Brunner, E., & Munzel, U. (2000). The nonparametric Behrens-Fisher problem: Asymptotic theory and a small-sample approximation. Biometrical Journal, 42(1), 17–25. https://doi.org/10.1002/(SICI)1521-4036(200001)42:1<17::AID-BIMJ17>3.0.CO;2-U

- DiMaggio, P. (1996). Are art-museum visitors different from other people? The relationship between attendance and social and political attitudes in the United States. Poetics, 24(2–4), 161–180. https://doi.org/10.1016/S0304-422X(96)00008-3

- Durso, F. T., Rawson, K. A., & Girotto, S. (2007). Comprehension and situation awareness. In F. T. Durso, R. S. Nickerson, S. T. Dumais, S. Lewandowsky, & T. J. Perfect (Eds.), Handbook of applied cognition (pp. 163–193). John Wiley & Sons Ltd.

- Edelmann, A., Stumper, S., Kronstorfer, R., & Petzoldt, T. (2020). Effects of user instruction on acceptance and trust in automated driving [Paper presentation]. 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), September 2020, Rhodes, Greece, 1–6. https://doi.org/10.1109/ITSC45102.2020.9294511

- Endsley, M. R. (2000). Situation models: An avenue to the modeling of mental models. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 44(1), 61–64. https://doi.org/10.1177/154193120004400117

- Epley, N., Waytz, A., & Cacioppo, J. T. (2007). On seeing human: A three-factor theory of anthropomorphism. Psychological Review, 114(4), 864–886. https://doi.org/10.1037/0033-295X.114.4.864

- Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/BF03193146

- Flick, U. (2018). An introduction to qualitative research (6th ed.). Sage Publications Limited.

- Flick, U., & Gaskell, G. (2000). Episodic interviewing. In M. W. Bauer & G. Gaskell (Eds.), Qualitative researching with text, image and sound (pp. 75–92). Sage. https://doi.org/10.4135/9781849209731.n5

- Gill, M. J., Swann, W. B., & Silvera, D. H. (1998). On the genesis of confidence. Journal of Personality and Social Psychology, 75(5), 1101–1114. https://doi.org/10.1037/0022-3514.75.5.1101

- Graetz, G., & Michaels, G. (2018). Robots at work. The Review of Economics and Statistics, 100(5), 753–768. https://doi.org/10.1162/rest_a_00754

- Hoff, K. A., & Bashir, M. (2015). Trust in automation: Integrating empirical evidence on factors that influence trust. Human Factors, 57(3), 407–434. https://doi.org/10.1177/0018720814547570

- Holm, S. (1979). A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics, 6(2), 65–70.

- JASP Team. (2019). JASP. https://jasp-stats.org/

- JOANNEUM RESEARCH Forschungsgesellschaft mbH. (2022). Mobile manipulation. Retrieved November 28, 2022, from https://www.joanneum.at/en/robotics/infrastructure/mobile-manipulation

- Johnson, R. B., Onwuegbuzie, A. J., & Turner, L. A. (2007). Toward a definition of mixed methods research. Journal of Mixed Methods Research, 1(2), 112–133. https://doi.org/10.1177/1558689806298224

- Kao, D., Magana, A. J., & Mousas, C. (2021). Evaluating tutorial-based instructions for controllers in virtual reality games. Proceedings of the ACM on Human-Computer Interaction, 5(CHI PLAY), 1–28. https://doi.org/10.1145/3474661

- Kieras, D. E., & Bovair, S. (1984). The role of a mental model in learning to operate a device. Cognitive Science, 8(3), 255–273. https://doi.org/10.1207/s15516709cog0803_3

- Kirchberg, V. (1996). Museum visitors and non-visitors in Germany: A representative survey. Poetics, 24(2–4), 239–258. https://doi.org/10.1016/S0304-422X(96)00007-1

- Klein, G., Woods, D., Bradshaw, J., Hoffman, R., & Feltovich, P. (2004). Ten challenges for making automation a "team player" in joint human-agent activity. IEEE Intelligent Systems, 19(6), 91–95. https://doi.org/10.1109/MIS.2004.74

- Körber, M. (2019). Theoretical considerations and development of a questionnaire to measure trust in automation. In S. Bagnara, R. Tartaglia, S. Albolino, T. Alexander, & Y. Fujita (Eds.), Proceedings of the 20th Congress of the International Ergonomics Association (IEA 2018) (Advances in intelligent systems and computing, Vol. 823, pp. 13–30). Springer International Publishing. https://doi.org/10.1007/978-3-319-96074-6_2

- Körber, M., Baseler, E., & Bengler, K. (2018). Introduction matters: Manipulating trust in automation and reliance in automated driving. Applied Ergonomics, 66, 18–31. https://doi.org/10.1016/j.apergo.2017.07.006

- Krampell, M., Solís-Marcos, I., & Hjälmdahl, M. (2020). Driving automation state-of-mind: Using training to instigate rapid mental model development. Applied Ergonomics, 83, 102986. https://doi.org/10.1016/j.apergo.2019.102986

- Kraus, J., Scholz, D., Stiegemeier, D., & Baumann, M. (2020). The more you know: Trust dynamics and calibration in highly automated driving and the effects of take-overs, system malfunction, and system transparency. Human Factors, 62(5), 718–736. https://doi.org/10.1177/0018720819853686

- Kuka, D., Elias, O., Martins, R., Lindinger, C., Pramböck, A., Jalsovec, A., Maresch, P., Hörtner, H., & Brandl, P. (2009). DEEP SPACE: High resolution VR platform for multi-user interactive narratives. In I. A. Iurgel, N. Zagalo, & P. Petta (Eds.), Interactive storytelling (pp. 185–196). Springer.

- Lee, J. D., & See, K. A. (2004). Trust in automation: Designing for appropriate reliance. Human Factors, 46(1), 50–80. https://doi.org/10.1518/hfes.46.1.50_30392

- Leichtmann, B., Hartung, J., Wilhelm, O., & Nitsch, V. (2023). New short scale to measure workers’ attitudes toward the implementation of cooperative robots in industrial work settings: Instrument development and exploration of attitude structure. International Journal of Social Robotics, 15(6), 909–930. https://doi.org/10.1007/s12369-023-00996-0

- Leichtmann, B., Lottermoser, A., Berger, J., & Nitsch, V. (2022). Personal space in human-robot interaction at work: Effect of room size and working memory load. ACM Transactions on Human-Robot Interaction, 11(4), 1–19. https://doi.org/10.1145/3536167

- Leichtmann, B., Meyer, K., & Mara, M. (2022). CoBot studio clean-up – Deep space edition: Effect of introductory information on evaluation and collaborative human-robot team performance in a mixed reality game. Open Science Framework. https://doi.org/10.17605/OSF.IO/7RKDB

- Leichtmann, B., & Nitsch, V. (2020). How much distance do humans keep toward robots? Literature review, meta-analysis, and theoretical considerations on personal space in human-robot interaction. Journal of Environmental Psychology, 68, 101386. https://doi.org/10.1016/j.jenvp.2019.101386

- Leichtmann, B., Nitsch, V., & Mara, M. (2022). Crisis ahead? Why human-robot interaction user studies may have replicability problems and directions for improvement. Frontiers in Robotics and AI, 9, 838116. https://doi.org/10.3389/frobt.2022.838116

- Mara, M., Meyer, K., Heiml, M., Pichler, H., Haring, R., Krenn, B., Gross, S., Reiterer, B., & Layer-Wagner, T. (2021). CoBot Studio VR: A virtual reality game environment for transdisciplinary research on interpretability and trust in human-robot collaboration. 4th International Workshop on Virtual, Augmented, and Mixed Reality for HRI (VAM-HRI 2021), March 2021, Boulder, Colorado, USA. https://openreview.net/forum?id=ZlAhl-wrYqH

- Mastandrea, S., Bartoli, G., & Bove, G. (2007). Learning through ancient art and experiencing emotions with contemporary art: Comparing visits in two different museums. Empirical Studies of the Arts, 25(2), 173–191. https://doi.org/10.2190/R784-4504-37M3-2370

- Mastandrea, S., Bartoli, G., & Bove, G. (2009). Preferences for ancient and modern art museums: Visitor experiences and personality characteristics. Psychology of Aesthetics, Creativity, and the Arts, 3(3), 164–173. https://doi.org/10.1037/a0013142

- Mobile Industrial Robots. (2022). Mobile robot from Mobile Industrial Robots - MiR100. Retrieved November 28, 2022, from https://www.mobile-industrial-robots.com/solutions/robots/mir100/

- Morin, R., Léger, P.-M., Senecal, S., Bastarache-Roberge, M.-C., Lefèbrve, M., & Fredette, M. (2016). The effect of game tutorial: A comparison between casual and hardcore gamers. Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts (CHI PLAY Companion '16), October 2016, New York, NY, USA, 229–237. https://doi.org/10.1145/2968120.2987730

- Neyer, F. J., Felber, J., & Gebhardt, C. (2012). Entwicklung und Validierung einer Kurzskala zur Erfassung von Technikbereitschaft. Diagnostica, 58(2), 87–99. https://doi.org/10.1026/0012-1924/a000067

- Phillips, E., Ososky, S., Grove, J., & Jentsch, F. (2011). From tools to teammates: Toward the development of appropriate mental models for intelligent robots. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 55(1), 1491–1495. https://doi.org/10.1177/1071181311551310

- Reiterer, B., Brandstötter, M., Mara, M., Rammer, D., Haring, R., Heiml, M., Meyer, K., Hoffmann, T., Weyrer, M., & Hofbaur, M. (2023). An immersive game projection setup for studies on collaboration with a real robot. Proceedings of the Austrian Robotics Workshop (ARW’23), April 2023, Linz, Austria.

- Robinson, N. L., Hicks, T.-N., Suddrey, G., & Kavanagh, D. J. (2020). The robot self-efficacy scale: Robot self-efficacy, likability and willingness to interact increases after a robot-delivered tutorial [Paper presentation]. 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), August 2020, Naples, Italy, 272–277. https://doi.org/10.1109/RO-MAN47096.2020.9223535

- Rueben, M., Klow, J., Duer, M., Zimmerman, E., Piacentini, J., Browning, M., Bernieri, F. J., Grimm, C. M., & Smart, W. D. (2021). Mental models of a mobile shoe rack: Exploratory findings from a long-term in-the-wild study. ACM Transactions on Human-Robot Interaction, 10(2), 1–36. https://doi.org/10.1145/3442620