Abstract

Autonomous vehicles (AVs) are rapidly evolving as a novel mode of transportation. Nevertheless, there is a consensus that AVs cannot handle all traffic scenarios independently, which creates a need for remote human intervention. To pave the way for large-scale deployment of AVs on public roadways, innovative models of remote operation must evolve. One such paradigm is Tele-assistance, in which low-level control remains with the AV while a remote operator guides it through high-level commands. Our work explores how such a command language should be constructed as a first step in designing a Tele-assistance user interface. Through interviews with 17 experienced teleoperators, we elicit a set of discrete commands that a remote operator can use to resolve various road scenarios. Subsequently, we create a scenario-command mapping and a thematic classification of the defined commands. Finally, we present an initial Tele-assistance interface design based on these commands.

1. Introduction

Recent advances in domains such as computer vision, sensor fusion, and artificial intelligence have facilitated the rapid development of autonomous vehicles (AVs) as an innovative and transformative mode of transportation (Fagnant & Kockelman, 2015). Prominent automotive manufacturers, alongside emerging startups, are actively developing diverse cutting-edge technologies to enable AVs to operate independently. Nevertheless, the current state of AV development reveals their inability to handle every conceivable road scenario autonomously. Situations such as road construction, malfunctioning traffic lights, or a busy intersection might prevent an AV from moving autonomously (Dixit et al., 2016). For example, in 2021, industry leaders Waymo¹ and Zoox² reported 7000–8000 miles per disengagement³ (Herger, 2022). Consequently, a consensus prevails across both academic and industrial circles that, in the foreseeable future, AVs will encounter traffic situations with inherent ambiguity that they cannot handle on their own, thus underscoring the need for remote human intervention (Bogdoll et al., 2021; Colley & Rukzio, 2020; Georg et al., 2018; Murphy et al., 2020; Politis et al., 2015).

A promising strategy for effectively addressing such scenarios and enabling widespread deployment of fully autonomous vehicles is teleoperation. Teleoperation involves a remote human operator (RO) who can oversee and govern the vehicle’s actions from a distance. When a vehicle encounters challenges within specific contexts, the RO can be summoned to evaluate the circumstances and guide the vehicle until the issue is resolved. The RO can be located in a remote operation center and may assist many AVs during a single teleoperation shift. Several teleoperation systems for AVs are presently operational and are undergoing further refinement by diverse automotive enterprises (GreyB, 2021; Herger, 2022). These solutions mainly focus on the Tele-driving paradigm, which entails the RO’s ongoing control of autonomous vehicles using a steering wheel and pedals (Figure 1, left). However, the manual remote control of a vehicle was found to be extremely difficult (Tener & Lanir, 2023). Notably, because the RO is physically detached from the operated AV, the inability to perceive the forces exerted on the teleoperated vehicle or the ambient sound in its surroundings poses a significant challenge. Furthermore, latency emerges as an issue, as an extensive amount of information needs to be transmitted from the AV to the RO across the network (Georg & Diermeyer, 2019; Zhang, 2020).

Figure 1. Left – schematic drawing of teleoperation via a steering wheel and pedals (tele-driving). Right – teleoperation via high-level commands (tele-assistance).

A different paradigm, Tele-assistance, posits that humans can provide input to the automated system at a guidance level. Here, the RO entrusts the execution of low-level maneuvers to the AV, conveying high-level directives through a specialized interface (Flemisch et al., 2014). In Tele-assistance, the operator still receives and views the video feed and information from the remote vehicle; however, remote vehicle control is done through interface commands rather than directly driving the vehicle (Figure 1, right).

Tele-assistance offers numerous benefits compared to manual vehicle operation. Firstly, employing remote directives promises to considerably reduce the duration of teleoperation sessions, as issuing concise commands like “continue” or “bypass” is much faster than manually steering the vehicle. Secondly, by disconnecting the RO from the low-level controls of the AV, such as the steering wheel and pedals, it becomes possible for ROs to manage heterogeneous vehicles (varying in size, width, etc.) and fleets (including private cars, shuttles, trucks, etc.) through universal commands. This facilitates smoother adaptation and learning, as ROs are not compelled to formulate new mental models when transitioning between different teleoperated vehicles (Tener & Lanir, 2023). Thirdly, tele-assistance delivers a crucial safety advantage. According to data from the U.S. Department of Transportation, 94 percent of accidents in the United States stem from human errors (Hussain & Zeadally, 2019). According to recent research by Waymo (Kusano et al., 2023), the AV crash rate (0.41 incidents per million miles) is significantly lower than the crash rate for human drivers (2.78 incidents per million miles). Consequently, entrusting the execution of low-level maneuvers to the autonomous agent holds significant potential for enhancing the safety of AVs. Lastly, tele-assistance can potentially alleviate the cognitive load that ROs experience using tele-driving interfaces, which demand an exceedingly high level of attention (Tener & Lanir, 2023).

Our research work focuses on the teleoperation of fully autonomous vehicles, with the primary objective being to establish an initial set of high-level commands, serving as a foundational framework for tele-assistance and developing a general communication language between AVs and ROs. Such a command language can be used as a basis for designing tele-assistance user interfaces. The current research focuses on a touch-based approach in which the remote operator provides high-level guidance to the AV using a tablet surface.

To derive these commands, we created simulations of ten carefully chosen scenarios derived from prior investigations (Dixit et al., 2016; Favarò et al., 2018; Lv et al., 2018; Tener & Lanir, 2023). Subsequently, these simulations were presented to seventeen experienced teleoperators who were prompted to suggest high-level commands to resolve each scenario, following a methodology akin to an elicitation study (Wobbrock et al., 2009). Then, to exemplify how these commands can be employed, we designed an initial touch-based user interface (UI) for tele-assistance. Finally, we evaluated the command language by recalling several participants and showing them a design example of three use cases. The evaluation aimed to validate the completeness of the elicited commands and assess the overall paradigm of command-based AV teleoperation.

The principal contributions of this paper can be summarized as follows:

A scenario-command mapping that describes the high-level commands that are most appropriate in resolving ten representative scenarios in which AVs would need remote assistance.

A general list and description of high-level commands for tele-assistance divided into six major categories.

An initial design of a first-of-its-kind tele-assistance user interface.

2. Related work

We first describe earlier works on the teleoperation of autonomous vehicles and robots and then review works on tele-assistance interfaces.

2.1. Teleoperation of autonomous robots and vehicles

Unmanned ground vehicles (UGVs), operating without human presence on board, have a rich history dating back to the 1960s (Sheridan, 1989; Sheridan & Ferrell, 1963). Initially used in military and space applications (Fong & Thorpe, 2001; Hill & Bodt, 2007), UGVs today have diverse applications in agriculture, manufacturing, mining, last-mile delivery (Macioszek, 2018), and healthcare (Murphy et al., 2020). While UGVs can operate autonomously to varying degrees, a remote human operator with the ability to perceive the vehicle’s distant environment and issue control instructions using a teleoperation interface remains essential. Designing effective teleoperation interfaces for these vehicles is a significant challenge (Fong & Thorpe, 2001), with cognitive factors crucial in ensuring safe and reliable control. These factors include situation awareness (SA) (Adams, 2007), spatial orientation, RO’s workload and performance, limited field of view, attention switching, size and distance estimation, degraded motion perception, and more (Durantin et al., 2014; Van Erp & Padmos, 2003). Previous research has focused on enhancing SA while minimizing cognitive and sensorimotor burdens (Fong & Thorpe, 2001). Subsequent studies delved into human performance concerns and user interface designs for teleoperation interfaces. These included strategies for effective decision-making and command issuance, as well as exploration of the influence of video image reliability, field of view, orientation, viewpoint, and depth perception on human performance (Colley & Rukzio, 2020; Dixit et al., 2016). In addition, novel user interfaces have been investigated, including gestural, haptic (Fong et al., 2001), head-mounted displays (Grabowski et al., 2021), and virtual reality (Hedayati et al., 2018; Kot & Novák, 2018). However, the primary challenges in UGV teleoperation, especially in semi-autonomous functions, revolve around human perception, trust in automation, and defining the roles of humans and robots.

The field of AV research is relatively new, and it is rapidly advancing, driven by innovations in perception and decision-making technologies (Badue et al., 2021). Despite technological advancements, it is widely recognized that, similar to UGVs, AVs will encounter limitations in dealing with certain road scenarios (Hampshire et al., 2020). These challenges include scenarios where AV sensors and algorithms are insufficient, such as when the AV cannot accurately assess a situation (e.g., determining whether an object is a flying plastic bag or a sliding rock), when it encounters unfamiliar situations (e.g., an unrecognized animal on the road), or when an AV faces perception issues (e.g., sensor problems in a heavy sandstorm). While humans might also face difficulties in these edge cases, they can excel in interpreting complex or novel situations. Therefore, an RO can often easily handle what may pose a complex challenge for an AV. Moreover, some aspects of vehicle operation may require human intervention to comply with regulations (e.g., deciding to enter a junction when the traffic light is malfunctioning or crossing a separation lane when the road is blocked). Consequently, having an RO is essential to address these edge-case scenarios effectively.

While both UGVs and AVs require ROs for safe and reliable operation, the teleoperation of AVs presents a unique and complex challenge mainly because AVs are responsible for passenger transportation, necessitating exceptional service quality in terms of reduced travel times, strict safety measures, and cost-effectiveness (Litman, 2020). Furthermore, the intricacies and uncertainties of urban roads surpass those of environments like agricultural fields, lunar terrains, logistics warehouses, and even aerial and maritime pathways. Consequently, the remote operation of a vehicle on urban roads is an exceptionally demanding task, requiring significant cognitive resources from the RO. This involves simultaneous management of lane keeping, speed, acceleration control, and fast response to unforeseen factors such as pedestrians, other vehicles, and unexpected incidents (Goodall, 2020).

To tackle the challenges above, several investigations (Bout et al., 2017) have been conducted to explore the potential of head-mounted displays (HMDs) in enhancing SA and spatial awareness among remote operators of AVs. However, these studies suggest that although cutting-edge HMDs offer a heightened sense of immersion, they may not improve driving performance. Recent research endeavors have begun to delve into the prerequisites for interfaces centered around teleoperation (Graf & Hussmann, 2020) and delineate the design space for AV teleoperation interfaces (Graf et al., 2020). Graf and Hussmann (Hedayati et al., 2018; Hill & Bodt, 2007) concentrated on compiling an exhaustive set of user requirements for AV teleoperation. This encompasses comprehensive information about the vehicle, its sensory apparatus, and the surrounding environment (such as vehicle positioning, status, weather conditions, etc.).

2.2. Tele-assistance

Bogdoll et al. (2021) surveyed recent teleoperation terminologies (as used by different companies and researchers) and proposed a taxonomy and a classification of the different teleoperation approaches. Two teleoperation approaches stand out in this comparison: Direct Control and Indirect Control. Direct Control, also called Tele-driving, is used in Level-1 (“Driver Assistance”) and Level-2 (“Partial Driving Automation”) of automation, as defined by the SAE⁴ (SAE, 2018). It incorporates components such as a steering wheel, pedals, and multiple screens displaying real-time video feeds from various onboard cameras, including front, rear, right, and left perspectives. These tools allow ROs to take full remote control of the AV and manipulate its low-level movements. However, tele-driving presents considerable challenges, such as the inability to feel speed and acceleration, latency, impaired visibility, and others (Tener & Lanir, 2023).

Indirect Control, also often called Tele-assistance, is prevalent in Level-4 (“High Driving Automation”) and Level-5 (“Full Driving Automation”) and does not require the RO to take full remote control over the AV. In tele-assistance, the RO delegates the low-level maneuvers to the AV itself through a specialized interface. Because the RO does not directly drive the remote vehicle, this approach can potentially mitigate the challenges of tele-driving.

Several studies have explored different components of tele-assistance. For instance, Mutzenich et al. (2021) proposed that the RO’s UI should afford tasks like communicating with service providers, passengers, and fleet management centers. Fennel et al. (2021) suggest a method to outline an AV’s desired path using a large-scale haptic interface that a user walks on. Schitz et al. (2020) tackle a similar challenge by defining a collision-free corridor where the AV can compute a safe path. Kettwich et al. (2021) designed and evaluated a tele-assistance user interface tailored to the teleoperation of automated public transport shuttles following predetermined routes. This interface enables the teleoperator to select a specific shuttle, offer assistance by interacting with third parties or passengers, and adjust the shuttle’s trajectory using a touch screen. Flemisch et al. (2014) present an approach in which the RO communicates with the AV through maneuver-based commands executed by automation.

Despite exploring various indirect control techniques, existing research has examined only fragments of the problem. With current technological advancements, particularly the evolution of AV systems that operate the vehicle fully autonomously using various sensors (LIDAR, radar, sonar, cameras, etc.), indirect control can be expanded to a communication language between humans and vehicles. Our research aims to lay the foundations for such a communication language by devising a concrete and generic set of high-level commands that can be used to guide an AV.

In summary, previous research (Fennel et al., 2021; Graf et al., 2020; Kettwich et al., 2021; Mutzenich et al., 2021; Schitz et al., 2020) examined various aspects of high-level guidance of AVs; however, to our knowledge, no prior research has devised a comprehensive set of discrete high-level commands that would handle most use cases and that an RO can use to guide AVs in edge-case scenarios.

3. Methodology

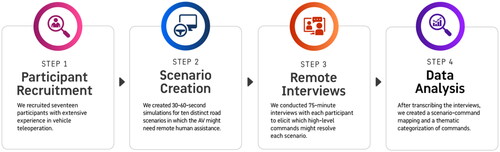

We simulated ten selected scenarios and used these scenarios to elicit commands. The study was conducted with 17 participants with extensive experience in the teleoperation of ground vehicles. Its primary purpose was to define a comprehensive and generic set of high-level commands that ROs can use to resolve most scenarios without taking manual control of the AV. The study methodology was inspired by elicitation studies (Wobbrock et al., 2009) in which referents (in this case, a video scenario) are shown to the participant, who is then asked to show or name the most appropriate input action for each prompt. However, unlike elicitation studies in which there is usually one “winning” command for each referent (i.e., a single gesture is chosen for each interface command (Felberbaum & Lanir, 2018; Ruiz et al., 2011; Wobbrock et al., 2009)), here we allowed and analyzed multiple options for each scenario. The study was executed under ethical approval from our institution’s ethical review board (approval number 063/21). Figure 2 describes the major steps of the experiment.

3.1. Participants

Seventeen participants (7 identifying as female and 10 identifying as male) aged 23 to 45 (M = 33.35, SD = 7.55) took part in the study. Participants were recruited from among the members of a large consortium, which was established to address the challenges of using autonomous fleets and comprised seven labs from five different universities and six industrial companies. We also used snowball sampling to reach more qualified remote operators through the acquaintances we had established among the consortium members. Eleven participants had bachelor’s degrees or higher. Thirteen interviewees had extensive teleoperation experience with UGVs of various types, one participant had more than three years of experience operating a UGV using a joystick while having a direct visual connection with it, and three participants were involved in the development of sophisticated teleoperation systems while closely collaborating with teleoperators. Seven teleoperators gained most of their teleoperation experience during army service while operating military vehicles, six participants are employed by civil and industrial companies that primarily focus on the teleoperation of autonomous shuttles and buses, and the rest are employed in large companies operating a wide variety of UGVs. All participants agreed to participate in the study, signed an informed consent form, and received a digital voucher for coffee and a pastry as symbolic compensation for their contribution. Specific details on the participants’ AV-related experience are provided in Table 1.

Table 1. Participants’ AV-related experience.

3.2. Scenario videos

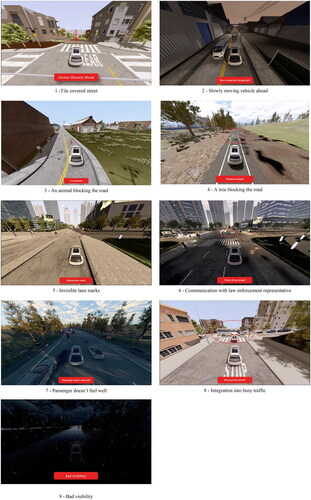

We selected ten distinct scenarios across various categories (Table 2) based on previous research works (Bogdoll et al., 2021; Dixit et al., 2016; Favarò et al., 2018; Feng et al., 2020; Lv et al., 2018) that analyzed and categorized disengagement causes based on reports collected by California’s Department of Motor Vehicles (DMV). These specific scenarios were chosen according to three main criteria: (1) coverage of a maximal number of intervention categories, (2) high potential to generate a discussion and elicit distinct, high-level commands, and (3) the technical limitations and complexities of the simulation software (for instance, it was not possible to generate scenarios with deep puddles). We used a specialized simulation platform⁶ to create 30–60-second-long simulations depicting the chosen road situations. Table 2 lists the simulated scenarios, and Figure 3 shows nine video images from these simulations. The videos of the simulated scenarios are provided in the supplementary material.

Table 2. Simulated scenarios and their categories.

3.3. Procedure

The study was conducted remotely via the Zoom video-conferencing tool. All sessions were recorded and lasted around 75 minutes. A consent form was obtained at the beginning of each interview session, after which participants were asked to fill in a demographic questionnaire. Then, we explained the study’s purpose and the difference between tele-driving and tele-assistance. Finally, in the main part of the study, we showed nine⁷ simulated scenarios to each participant, one scenario at a time. For each simulated scenario, we asked participants to answer the following questions:

Which high-level commands would you use to solve this scenario?

What controls, screens, or information details would you like to see in the teleoperation interface to complete the task successfully?

What other actions could you take to solve the situation?

3.4. Data analysis

Initially, we manually transcribed the participants’ responses from recorded videos. Next, for each scenario, we gathered the responses for the first question (high-level commands used to solve the scenario), combined similar responses to eliminate redundancies (e.g., “continue” and “go ahead”), and excluded commands occurring fewer than three times. This enabled us to create a scenario-command mapping that lists the most popular commands that can be used to solve each scenario. In the second phase, we grouped the elicited commands, removing redundancies across scenarios and creating categories based on thematic similarities. We used an inductive analysis approach for the categorization. The lead researcher performed the analysis, with review meetings and discussions held with another researcher to reach a collaborative decision whenever there were unclear categories or decisions to make.
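The first analysis phase described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure actually used (the analysis was done manually): the synonym table, the function names, and the `(participant, scenario, phrase)` tuple layout are all assumptions made for the example.

```python
from collections import Counter, defaultdict

# Hypothetical synonym table: maps a raw phrase to a canonical command name.
SYNONYMS = {
    "go ahead": "continue",
    "go": "continue",
    "bypass": "overtake",
}

MIN_MENTIONS = 3  # commands below this threshold are excluded


def canonical(phrase):
    """Normalize a transcribed phrase and merge known synonyms."""
    key = phrase.strip().lower()
    return SYNONYMS.get(key, key)


def scenario_command_mapping(responses, min_mentions=MIN_MENTIONS):
    """Build {scenario: [(command, participant_count), ...]}.

    `responses` is an iterable of (participant, scenario, phrase) tuples.
    Each participant is counted at most once per scenario and command.
    """
    seen = defaultdict(set)  # (scenario, command) -> participants
    for participant, scenario, phrase in responses:
        seen[(scenario, canonical(phrase))].add(participant)

    counts = defaultdict(Counter)
    for (scenario, command), participants in seen.items():
        counts[scenario][command] = len(participants)

    mapping = {}
    for scenario, counter in counts.items():
        kept = [(c, n) for c, n in counter.items() if n >= min_mentions]
        # Most frequently mentioned first; ties broken alphabetically.
        kept.sort(key=lambda item: (-item[1], item[0]))
        mapping[scenario] = kept
    return mapping
```

Counting distinct participants rather than raw utterances is a design choice of this sketch; it prevents one talkative participant from pushing a command over the threshold.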

4. Results

In presenting the results, we first present the main commands given to each scenario. Then, we look at the entire command pool, grouping the commands into categories and providing more details on each category and command.

4.1. Mapping of commands to scenarios

Our first analysis, presented in Table 3, lists the commands for each scenario, ordered by the number of participants who mentioned each command. We removed commands mentioned by fewer than three participants and included actionable commands only (for example, commands that change the camera zoom were not included in this analysis).

Table 3. Mapping of tele-assistance commands to road scenarios.

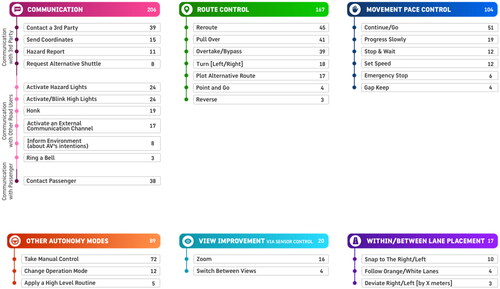

4.2. Categorization of high-level commands

The above high-level commands can be used as building blocks of a communication language between the ROs and AVs to be used in a possible interface. In addition, this communication language can be expanded based on the command type. Therefore, in addition to the scenario-command mapping, we analyzed the number of appearances of each command across scenarios and categorized the commands into six major clusters. Figure 4 provides an overview of all high-level commands according to the categories. The following sub-sections provide details on each category and the specific commands in each category. The commands within each sub-category are sorted by the number of times the participants mentioned each command, from most to least frequent.
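One way to picture such a command language is as a small typed catalog. The following Python sketch is an assumption-laden illustration rather than the authors' implementation: the `Category` and `Command` types and the `by_category` helper are hypothetical, only three of the six categories are listed, and only a few commands are included. The mention counts are those reported in the sub-sections that follow.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    # Only three of the six categories are listed in this sketch;
    # the remaining ones would be added the same way.
    COMMUNICATION = "Communication"
    ROUTE_CONTROL = "Route control"
    PACE_CONTROL = "Movement pace control"


@dataclass(frozen=True)
class Command:
    name: str
    category: Category
    mentions: int  # number of times participants mentioned the command


# A subset of the elicited commands, for illustration only.
COMMANDS = [
    Command("Contact a 3rd party", Category.COMMUNICATION, 39),
    Command("Contact passenger", Category.COMMUNICATION, 38),
    Command("Reroute", Category.ROUTE_CONTROL, 45),
    Command("Pullover", Category.ROUTE_CONTROL, 41),
    Command("Overtake/Bypass", Category.ROUTE_CONTROL, 39),
    Command("Stop/Emergency Stop", Category.PACE_CONTROL, 6),
    Command("Continue/Go", Category.PACE_CONTROL, 51),
    Command("Progress Slowly", Category.PACE_CONTROL, 19),
]


def by_category(commands):
    """Group commands by category, most-mentioned first within each group."""
    grouped = {}
    for cmd in sorted(commands, key=lambda c: -c.mentions):
        grouped.setdefault(cmd.category, []).append(cmd)
    return grouped
```

Keeping the category on each command, instead of nesting commands inside categories, would let an interface regroup or filter the same catalog without restructuring it.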

4.2.1. Communication

This category includes all the commands that might be needed to communicate with 3rd party representatives, other road users (drivers, pedestrians, gate guards, etc.), fleet management centers,⁸ and passengers.

4.2.1.1. Communication with 3rd parties

Contact a 3rd party (mentioned 39 times in the data) – a command that allows the RO to contact and request assistance from an ambulance, the police, a tow truck, a representative of the municipality, etc. For example, RO2 shared that she “…would call 911 through the teleoperation interface and deliver them [the vehicle’s] coordinates …” to assist a passenger who doesn’t feel well in scenario 7.

Send Coordinates (15) – a command that allows the RO to send an AV's coordinates to a third party, the fleet management center, or a teleoperation center. This command can be a secondary step in other interaction routines, such as contacting a 3rd party (see above), reporting a hazard on the road, or requesting an alternative shuttle (see below).

Hazard report (11) – a command allowing the RO to report road issues. This command is necessary both to help a specific AV continue its progress and to propagate the report to all the other AVs in the area to optimize the traffic. RO2 gave a very detailed description of the desired interaction: “… I would want to see a map and mark the particular location of the obstacle on it … Tag the relevant road segment of the road and report the authorities to clear it and tell other AVs not to ride on it …”

Request an alternative vehicle (8) – a command that allows the RO to request an alternative vehicle to be sent to the scene. This command was primarily mentioned when discussing the 10th scenario (Flat tyre) and scenario 9 (Bad visibility), in which a human driver-controlled vehicle was requested.

4.2.1.2. Communication with other road users

The communication between an AV and its surroundings is an essential aspect of AV deployment (e.g., Colley & Rukzio, 2020; Eisma et al., 2019; Mahadevan et al., 2018). In our study, the following methods were suggested:

Activate hazard lights (24) – a command that allows the RO to activate the AV’s hazard lights to notify the environment about a problem it experiences. In several cases, the interviewees suggested that the hazard lights should be activated automatically as part of an emergency routine performed by the AV; at the same time, the RO should be able to access this low-level command when needed.

Activate/Blink high lights (24) – a command that allows the RO to activate the high lights. As in current road behavior, activating the high lights may increase visibility and serve as a communication tool between vehicles and other road users. For instance, RO1 suggested activating high lights to “… show the tractor that it disturbs me [by blinking high lights] …” in scenario 2.

Honk (19) – The “Honk” command allows the RO to access the AV’s horn and sound it to warn other road users (vehicles, humans, animals, etc.). It is worth mentioning that some interviewees considered the “Honk” command aggressive, and RO12 even explicitly stated that she “… doesn’t want to honk …” Therefore, a gentler option, such as “Ring a Bell” (see below), might be applicable in some cases.

Activate an external communication channel (microphone and speaker) (17) – The interviewees mentioned activating an external communication channel to talk with other road users and hear what’s happening in the remote scene. For example, RO1 suggested to “… ask other humans to help to move the dog [from the road] …” (3rd scenario), and RO4 mentioned that “… the RO should be able not only to talk with the police officer, but also hear the environment …” in scenario 6.

Inform Environment [about AV’s intentions] (8) – Several interviewees mentioned the need to communicate the AV’s intentions to other road users in the remote environment. RO15 shared that “… in [his] company there is a screen [located on the AV’s body] that explains to the AV’s environment what the AV is doing …” and RO1 said that he “… would like to communicate with the police officer via a screen like in public transport today … This screen [should] show what the vehicle is doing: stopping, recognized an object, doors opening, etc.…” These comments suggest that the RO should have a way to inform other road users about the AV’s intentions.

Ring a Bell (3) – a gentler alternative to honking that can also be added to the teleoperation UI. Several interviewees stated that such a command is already part of their system’s interface.

4.2.1.3. Communication with passengers

Contact passenger (38) – Fifteen of the 17 interviewees defined audio communication with the passenger as essential, and 14 interviewees also required an appropriate video feed. The most relevant scenario for this command was scenario 7 (Passenger doesn’t feel well), which required the RO to assess the passenger’s well-being before acting. For example, RO2 suggested to “… talk with the passenger and see if she just wants to wait it out or get another vehicle for her [which will take her home] …”

4.2.2. Route control

Reroute (45) – “Reroute” enables the RO to change an AV’s route between its current location and final destination or alter its final destination. For example, if a police officer redirects traffic, the AV’s destination might not change, but the route to it will. In a different example, if a passenger does not feel well, the RO might decide to reroute the AV to the nearest hospital. Most interviewees described a “Map-based” interaction when rerouting, which means that after pressing this option, a map with several routes is expected to appear and allow the RO to select a course.

Pullover (41) – a command that guides the AV to stop safely at the side of the road. This command was mainly raised to resolve use cases where the AV cannot continue its mission, whether because of bad visibility, technical problems, or an emergency health situation with the passenger. RO16 described it vividly: “… If the passenger needs to throw up, the AV should automatically stop at the side of the road and activate hazard lights …”

Overtake/Bypass (39) – a command that guides the AV to overtake an obstacle on the left or the right side, depending on the use case. Interviewees also expressed a wish to see the AI’s suggestions for a possible overtaking route so that they only have to select the desired path.

Turn [left/right] (18) – A turning command was mostly mentioned for scenario 6, where a police officer instructs the AV to change its path. Several interviewees suggested the officer would guide the AV via a dedicated application. In other words, if the officer wants to guide the AV to turn right, they will press the corresponding button on a tablet, and the command will be propagated to the AV or the RO (via the remote assistance interface).

Plot alternative route (17) – a command that should allow the RO to activate a drawing tool in which the RO would be able to plot a path for the AV to follow. Different interviewees mentioned various surfaces on top of which the route should be plotted. For example, RO8 suggested planning the alternative route on top of a satellite image, while RO2 suggested HD maps.⁹ In addition, various micro-interaction techniques were proposed: RO2 said that the path should “… not [be] a continuous drawing of a path but clicking on points on the road …” and RO11 suggested that “… the AV should suggest a route that is divided into several sections and that I can change one of these sections …”

Point and Go (4) – a command in which the RO selects a point on the scene and directs the AV to drive there. “Point and Go” differs from the “Plot Alternative Route” command because in “Point and Go,” the RO selects one point at a time and waits for the command’s execution, whereas when plotting an alternative route, the RO plots several points at once and only then directs the AV to perform the desired maneuver.

Reverse (3) – a command that allows the AV to move backward without performing a “U-turn.” This command was mentioned in scenarios 1 (Tile-covered street) and 2 (Animal blocks the road), but it can also be helpful in various maneuvers on the road.
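
The distinction between “Point and Go” and “Plot Alternative Route” described above can be illustrated with a minimal sketch. All names in it (`Waypoint`, `FakeAV`, `drive_to`, `point_and_go`, `plot_alternative_route`) are hypothetical and were not part of the study; the sketch merely assumes a vehicle object that accepts one target position at a time.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Waypoint = Tuple[float, float]  # hypothetical (x, y) position in the scene, in meters


@dataclass
class FakeAV:
    """Stand-in for a real vehicle: it only records the targets it is sent to."""
    visited: List[Waypoint] = field(default_factory=list)

    def drive_to(self, target: Waypoint) -> None:
        # A real AV would plan and execute a maneuver; here we only log the target.
        self.visited.append(target)


def point_and_go(av: FakeAV, target: Waypoint) -> None:
    """Point and Go: a single point is selected and executed immediately."""
    av.drive_to(target)


def plot_alternative_route(av: FakeAV, route: List[Waypoint]) -> None:
    """Plot Alternative Route: the whole path is defined first, then executed."""
    if not route:
        raise ValueError("route must contain at least one waypoint")
    for target in route:
        av.drive_to(target)


av = FakeAV()
point_and_go(av, (5.0, 0.0))    # the RO waits for completion ...
point_and_go(av, (10.0, 2.0))   # ... before choosing the next point
plot_alternative_route(av, [(15.0, 2.0), (20.0, 0.0)])  # issued as one command
print(av.visited)
```

In this sketch, the two commands differ only in how many points the RO commits to before the AV starts moving, which mirrors the interviewees' description.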

4.2.3. Movement pace control

Continue/Go (51) – As it sounds, “Continue” is a command that prompts the AV to continue its movement after stopping. For instance, “Continue” was the most mentioned command in the 1st scenario (Tile-covered street) because no real obstacle prevented the AV’s movement. We expect this command to be handy when sensors wrongly recognize imaginary obstacles. Additionally, the “Continue” command can be helpful after resolving road issues, such as removing barriers from the road (3rd and 4th scenarios).

Progress Slowly (19) – a command that guides the AV to continue very slowly and allows immediate stoppage in case of need. RO14 suggested “… progress slowly until the situation becomes clearer …” in the 1st scenario (Tile-covered street), and RO15 said to “… crawl to see if I can bypass the obstacle without moving from the asphalt …” in the 4th scenario (A tree blocking the road).

Stop & Wait/Wait (12) – This command entails that the AV should stop and suspend its progress for some time. The amount of time an AV should pause its movement can be predefined or controlled manually by the RO. For instance, RO4, RO10, and RO15 suggested stopping and waiting during the resolution of the 4th scenario (A tree blocking the road). Such an action is essential if the AV should wait for assistance from a 3rd party or for an enthusiastic passenger who volunteered to move the tree branch from the road.

Set speed (12) – The participants wanted to control the AV’s movement speed in several scenarios. RO1 suggested reducing speed when entering a tile-covered street, and RO12 said to “… Snap to the right, go at a constant speed, and don’t perform overtakes …” when the lanes are not visible (5th scenario).

Stop/Emergency Stop (6) – The “Stop” command should immediately stop an AV in case of need. RO7 shared with us that “… the stop button should be visible. We had an emergency stop button connected to the AV via a separate networking channel …”

Gap Keep (4) – a command that enables the RO to request that the AV keep a constant distance (of her choice) from a selected vehicle. RO13 gave an excellent description of the desired interaction as a mitigation for the 8th scenario (Integration into heavy traffic): “… You press on a ‘Gap Keep’ button, select which vehicle to follow, and set the distance to X cm …”
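
Several of the pace-control commands above carry parameters (a waiting time, a speed, a followed vehicle and a gap). A minimal sketch of how such parameterized commands might be represented is given below. The class names, field names, units, and validation rules are assumptions made for illustration and do not come from the interviews.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class StopAndWait:
    # None means the RO ends the wait manually; otherwise a predefined duration.
    wait_seconds: Optional[float] = None


@dataclass(frozen=True)
class SetSpeed:
    speed_kmh: float


@dataclass(frozen=True)
class GapKeep:
    target_vehicle_id: str  # hypothetical identifier of the vehicle the RO selected
    gap_meters: float


PaceCommand = Union[StopAndWait, SetSpeed, GapKeep]


def validate(command: PaceCommand) -> None:
    """Reject parameter values that make no physical sense (assumed rules)."""
    if isinstance(command, StopAndWait):
        if command.wait_seconds is not None and command.wait_seconds < 0:
            raise ValueError("wait time cannot be negative")
    elif isinstance(command, SetSpeed):
        if command.speed_kmh < 0:
            raise ValueError("speed cannot be negative")
    elif isinstance(command, GapKeep):
        if command.gap_meters <= 0:
            raise ValueError("gap must be positive")


validate(GapKeep(target_vehicle_id="vehicle-3", gap_meters=8.0))
validate(StopAndWait())           # wait until the RO issues "Continue"
validate(SetSpeed(speed_kmh=20))
```

Keeping each command as a small immutable record makes it possible to check the RO's input before it is sent to the vehicle, which seems desirable given the safety concerns raised by the interviewees.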

4.2.4. Other autonomy modes

In this sub-section, we discuss commands that aggregate several commands or change the AV’s general behavior. Such commands are essential because they transfer responsibilities from the human RO to the AV and shorten the length of a single intervention session.

Take Manual Control (72) – Based on our study, at this point of technological advancement in the field of AVs, continuous manual control (i.e., tele-driving) will be necessary as a fallback in many road situations. Therefore, an easy transition between higher-level commands and manual control is essential.

Changing operation modes (12) – Nine interviewees mentioned a transition to a different automatic driving mode as a possible mitigation technique for various road scenarios that require remote human intervention: RO3 and RO9 suggested using “Cautious Driving Mode” when entering a tile-covered street (1st scenario), RO17 mentioned an “Off-road Driving Mode” when bypassing a tree on a non-asphalt road (4th scenario), and RO6 and RO8 mentioned an “Aggressive Driving Mode” to integrate into heavy traffic (8th scenario). Additional driving modes can and should be defined in the future, but the idea of a higher level of guidance and relaying more responsibilities to autonomy seems valuable.

Applying high-level routines (5) – An additional technique aggregates several high- and low-level commands into a single higher-level command. An excellent example of such a routine was mentioned by RO15 for scenario 10 (Flat tyre): “… when you have a Flat tyre, there should be a routine for this case: activate hazard light, notify the environment [about the problem], and pull over …” As with driving modes, many more routines can be defined.
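
The routine described by RO15 can be read as an ordered list of simpler commands executed under a single name. The sketch below illustrates this idea only; the function names and the `ROUTINES` registry are hypothetical, and each step returns a log line instead of acting on a real vehicle.

```python
from typing import Callable, Dict, List


# Hypothetical primitive commands; each returns a log line instead of acting on a vehicle.
def activate_hazard_lights() -> str:
    return "hazard lights on"


def notify_environment() -> str:
    return "environment notified"


def pull_over() -> str:
    return "pulled over"


# A routine is an ordered list of primitive commands stored under a single name.
ROUTINES: Dict[str, List[Callable[[], str]]] = {
    "flat_tyre": [activate_hazard_lights, notify_environment, pull_over],
}


def run_routine(name: str) -> List[str]:
    """Execute every step of the named routine in order and return the log."""
    return [step() for step in ROUTINES[name]]


log = run_routine("flat_tyre")
print(log)
```

Under this reading, defining a new routine amounts to adding another entry to the registry, which is consistent with the observation that many more routines can be defined.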

4.2.5. RO’s view improvement via sensor control

Zoom/Switch between cameras (16) – In many cases, interviewees wanted to improve their view of the scene. For example, in scenario 4 (A tree blocking the road), RO2 shared that she needs “… to explore the type of the surface before [she does] a maneuver …” and in scenario 6, RO1 said that he “… want[s] to have an option to zoom into the police officer to understand his gestures …”

Switch between views (4) – Several interviewees expressed willingness to change between different views and camera types to increase their remote scene comprehension. Some wanted to see video feeds from left, right, and rear cameras on the big screen. Others wanted to view the same scene via different sensor types. RO9 shared that she “… should be able to switch between day, night, thermal, and infra-red cameras …” when resolving the bad visibility conditions in scenario 9. While such sensors might be expensive and unavailable in large fleets of robotic taxis, we believe that having the affordance of seeing the same scene through different sensor types is valuable and has the potential to increase the RO’s SA.

4.2.6. Within/between lane placement control

Snap to the right/left (10) – This command directs an AV to move to the rightmost or leftmost lane in various road situations. The command was primarily mentioned when discussing the 5th scenario, in which lane marks disappear, and the AV needs to find alternative landmarks on which to base its movements. For instance, RO12 suggested to “… snap to the right, go at a constant speed, [and] don’t perform overtakes …”

Follow orange/white lanes (4) – To address two separate sets of lanes in the 5th scenario, the participants suggested having a command to distinguish which lane to follow. RO7 described the desired interaction in detail: “… If the AV can distinguish colors, it should tell me that it discovered two sets of lanes (orange and white) and show me two corresponding buttons. I would click on the orange button …. If the AV can’t distinguish colors, the AV should show an AR overlay of lanes. Then I would pick ‘this and this’ lanes as the right ones and press ‘Go’ …”

Deviate right/left [by X meters] (3) – a command that addresses the AV’s placement within its lane. When discussing the 2nd scenario (Slowly moving vehicle ahead), RO15 noticed that “… the AV is [too] close to the separation lane …” and suggested deviating to the right a bit. Additionally, RO1 mentioned the same command for the 3rd scenario (An animal blocking the road).

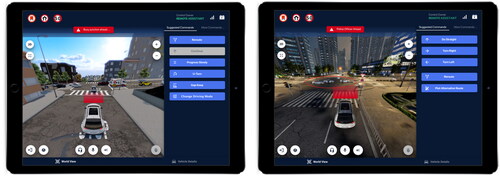

5. Design of a tele-assistance user interface

Based on the high-level commands, their classification into thematic clusters, and the scenario-command mapping, we designed an initial user interface to exemplify how these commands can be utilized in an actual tele-assistance station. In Figure 5, we present a scenario in which a static obstacle (a fallen tree) blocks a one-lane road surrounded by non-asphalt roadsides. In this case, the AV cannot break its operational design domain (ODD)Footnote10 (driving on a non-asphalt road) without explicit permission from a human operator and will require teleoperation assistance from the teleoperation center. In such a scenario, the AV will request assistance. The raised request will be assessed by the teleoperation system to match it with an available teleoperator. The selected RO will receive an alert and see the presented screen (alongside the vehicle’s frontal view, see ). After receiving the alert and assessing the remote situation, the RO will choose a desired command (e.g., “Bypass from Left”) according to her or his judgment and monitor the maneuver until completion.

Figure 5. Initial tele-assistance user interface design. Suggested high-level commands (on the right side) are according to the elicited results for the “a tree blocking the road” use case, as shown in .

A tablet-based user interface was chosen because touch interfaces are ubiquitous, intuitive, and quickly adopted as a tool for command issuance. Moreover, other researchers (Kusano et al., Citation2023) also used a tablet-based input method to design teleoperation stations (Kettwich et al., Citation2021). We assume that the tablet-based interface will be part of a larger teleoperation station ecosystem (see , right), which will also include a large screen (or multiple screens) that presents a live video feed from several (left, front, right, and rear) cameras, a headset, a steering wheel (and pedals) that the RO can use when tele-driving is necessary, and possibly other components such as a map-based screen.

The above UI design incorporates several vital insights and design decisions: (1) The commands (seen as selection buttons on the right side of ) should be contextual and adaptive, showing only the relevant commands according to the detected intervention reason; (2) The commands are arranged according to the frequency of the commands in the data. For example, the command “Bypass from Right” was mentioned more than any other command when discussing the “Tree blocking the road” scenario; (3) Only the relevant subset of high-level commands is presented to the RO on the main screen. Other commands are still available to employ but are placed in a different UI subsection that is accessible to the RO on demand (in the “More Commands …” tab in the upper right corner of ); (4) To avoid unnecessary visual distractions, the most commonly used commands, and ones which may apply to all scenarios, are constantly visible in the interface using the white round buttons at the bottom of the screen; (5) As mentioned by the participants, it is imperative to emphasize the intervention reason for the RO (shown here in red on the top part of the screen). This information may be provided by the AV’s perception systems or the fleet management system that the AV is part of; (6) Finally, we chose a “third person perspective” view (Gorisse et al., Citation2017), which includes a top view of the AV and its surroundings, as this was deemed preferable by most teleoperators in the interviews. The frontal camera view can be seen in the auxiliary screens (see , right).
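
Design decisions (1) to (4) above can be summarized as a small ranking procedure: commands relevant to the detected scenario are ordered by how often they were mentioned, the top few appear as main buttons, the rest move to the “More Commands …” tab, and commands with fixed buttons are excluded from the ranking. The sketch below is one possible reading of these decisions. The function name, the `max_main` limit, the choice of always-visible commands, and all mention counts are assumptions made for illustration and are not taken from the study data.

```python
from typing import Dict, List, Set, Tuple


def build_command_panel(
    mentions: Dict[str, int],
    always_visible: Set[str],
    max_main: int = 3,
) -> Tuple[List[str], List[str]]:
    """Split scenario commands into main buttons and a "More Commands" list.

    Commands are ranked by mention count (ties broken alphabetically);
    always-visible commands are excluded because they have fixed buttons.
    """
    ranked = sorted(
        (name for name in mentions if name not in always_visible),
        key=lambda name: (-mentions[name], name),
    )
    return ranked[:max_main], ranked[max_main:]


# Illustrative counts only; these numbers are NOT taken from the study.
tree_scenario_mentions = {
    "Bypass from Right": 9,
    "Bypass from Left": 7,
    "Plot Alternative Route": 5,
    "Progress Slowly": 4,
    "Reverse": 2,
    "Stop": 6,
}
always_visible = {"Stop", "Take Manual Control"}  # assumed fixed buttons

main, more = build_command_panel(tree_scenario_mentions, always_visible)
print(main)
print(more)
```

With these made-up counts, “Bypass from Right” is placed first among the main buttons, while “Stop” is left out of the ranking because it is assumed to be permanently visible.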

6. Initial evaluation

The results of the elicitation study yielded scenario-command mapping based on inputs from seventeen experienced ROs. Some commands for a scenario were raised by most of the participants, while others were raised only by a few. This evaluation aimed to receive further input regarding the completeness and practicality of the elicited commands and to assess the overall paradigm of command-based AV teleoperation.

6.1. Methodology

We selected three scenarios to evaluate the elicited commands and designed their respective user interface screens (Figures 5 and 6). These scenarios were selected since they are representative of common possible problems and encompass most elicited commands. Each screen included a screenshot from a simulated scenario and the high-level commands that can address the scenario according to our findings (see ). Seven participants from the elicitation part (RO1, RO2, RO6, RO7, RO12, RO13, and RO15) agreed to participate in the evaluation. We note that the purpose of the study was not to evaluate this specific interface design but to take another look at the list of elicited commands and assess the tele-assistance paradigm. The evaluation was conducted remotely via Zoom. For each participant, we first showed each of the designed screens, asking participants to comment on the scenario, given the suggested commands. Next, we asked the following questions:

Figure 6. Initial tele-assistance user interface design for “integration into heavy traffic” (left) and “communication with a law enforcement representative” (right) scenarios.

Is guiding a vehicle using discrete high-level commands via a specialized interface, such as this, a realistic approach?

Can the following commands be used to resolve the presented remote scenario?

Are there any unnecessary commands?

Are there any missing commands?

Following the abovementioned interviews, we transcribed the conversations and created a command-feedback mapping for each command included in the design. We then aggregated the documented data into several themes, as described next.

6.2. Results

Most participants believed that operating a vehicle using high-level commands can be very helpful and practical, and that the elicited set of commands is sufficient to operate an AV in the presented representative road scenarios. A few participants commented that tele-assistance is desired but context-dependent because (1) some scenarios cannot be handled using discrete commands, and (2) guiding AVs using commands gives less feeling of control over the vehicle in comparison to direct driving. Next, we present the most significant themes that emerged from the interviews.

6.2.1. Existing commands related comments

During the evaluation, some of the commands were found not to be well suited to resolving specific road scenarios:

Continue – Five participants were reluctant to use the “Continue” command in the “A tree blocking the road” scenario. One participant said, “…I don’t think going over the obstacle is necessarily a good default option. It’s not that the option shouldn’t exist; it’s just that this shouldn’t be the default option …” Additionally, most participants were reluctant to use this command in the “Integration into heavy traffic” scenario because it was too risky.

Progress Slowly – Much discussion revolved around the “Progress Slowly” command. RO13 implied that “Progress Slowly” is not a clear enough command: “… When we say, ‘Progress slowly’, is the AV going to slide and stop or is it going to move a fixed distance and stop? … You are not trying to drive in real-time with these buttons, so you can always define this command to be ‘move 0.5 meters and stop’ …” RO1 suggested having two separate commands: “Slide” (can be without stopping) and “Inch Forward.” Another suggestion was to slide as long as the command is pressed (“click & hold” interaction). Finally, RO2 suggested merging the “Stop” and “Continue” commands into a single control that toggles between them, as is done with “pause” and “play” commands in video systems.

U-Turn – Three participants noted that performing a U-turn in the “A tree blocking the road” scenario does not make sense because it is a one-way road and because the ground surface (mud) might not allow it. Additionally, four participants were reluctant to use a “U-turn” to resolve the “Integration into heavy traffic” scenario because it didn’t allow them to pass through the junction. Thus, we note that the suggested high-level command language should enable the resolution of complex traffic situations without changing the AV’s original route. If this is not possible, the AV should be capable of rerouting autonomously and in advance.

Gap Keep – Two participants raised concerns about using this command in the “Integration into heavy traffic” scenario. One participant said that in that exact situation, the “Gap Keep” command did not have any meaning because the vehicle ahead was already inside the junction. However, the command would be useful if several vehicles were ahead of the ego car. Another participant noted that “… It might be a problem to do [Gap Keep] in real-time since [while I was giving the command] the vehicle ahead of me already drove away …” RO2 also mentioned that the leading and the following vehicles might go in different directions “… but in whatever case the AV will just continue on its [own] path …” In other words, while “Gap Keep” was found to be a valid command, it might not be appropriate for resolving the above-mentioned scenario.
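
Two of the suggestions made for “Progress Slowly” above, RO13's fixed-distance definition (“move 0.5 meters and stop”) and RO2's merged Stop/Continue control, can be expressed as a very small state model. The sketch below is an illustration under our own assumptions: the class name `PaceController`, its fields, and the default step size are hypothetical, and no real vehicle dynamics are modeled.

```python
from dataclasses import dataclass


@dataclass
class PaceController:
    moving: bool = False
    traveled_m: float = 0.0

    def inch_forward(self, step_m: float = 0.5) -> None:
        """Move a fixed distance and stop again ("move 0.5 meters and stop")."""
        if step_m <= 0:
            raise ValueError("step must be positive")
        self.traveled_m += step_m
        self.moving = False

    def toggle(self) -> str:
        """Single Stop/Continue control, like pause/play; returns the command issued."""
        self.moving = not self.moving
        return "Continue" if self.moving else "Stop"


pace = PaceController()
pace.inch_forward()
pace.inch_forward()
first = pace.toggle()
second = pace.toggle()
print(pace.traveled_m, first, second)
```

Defining “Inch Forward” as a bounded step makes the outcome of each button press predictable, which addresses the ambiguity RO13 pointed out, while the toggle keeps a single control for the two opposite commands.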

6.2.2. Newly suggested commands

During the evaluation, several new commands were suggested. RO13 suggested adding a “Report” command, enabling the RO to note something about a specific intervention: “… [For example] If you run into an incident, there should be a way [not only] to report it to the authorities, but also a way to collect and view the logs … For example, 3-minute footage before and after the incident …” RO2 wanted an option to pass the problem to somebody else in case she cannot resolve the situation: “… [I want to be able to] Raise a ticket … I know I can’t solve this, so I need help …” Another suggestion was to enable textual input in the interface to allow its projection on the AV’s body, such as “… I am stopping. You can safely cross the intersection …” which may improve the communication between the AV and the pedestrians around it.

6.2.3. Other significant insights

6.2.3.1. Command design as part of a larger teleoperation ecosystem

When designing the tele-assistance UI, designers should consider this UI part of a larger teleoperation station (see ). For example, RO6 noted that transitioning to a map view to “Plot Alternative Route” or “Reroute” is problematic because the map hides the video feed in the tele-assistance UI. At the same time, RO12 emphasized the need always to see a map view. One possible solution is to use external screens to show both the map and a continuous video feed from front cameras while dedicating the tele-assistance UI to high-level commands and other perspectives. In addition, several participants mentioned the need for a physical “Emergency Stop” button that should be separate from the tele-assistance UI. RO7 said, “… I'm missing a clear STOP button that stops the AV no matter what …” Another participant emphasized that such a button should allow the RO to react on a visceral level.

6.2.3.2. Discrete commands vs. continuous remote driving

During the evaluation, it became clear that continuous remote driving can be advantageous in some scenarios. For example, five participants claimed that taking manual control in the “Integration into heavy traffic” scenario was better than assisting the vehicle using discrete commands. One participant said that “… In this situation, I don’t want to resolve the situation using semi-autonomous commands. I want to get to a state when I do an action, and the vehicle immediately executes it. I [also] want to be able to change this action. This might not be only a stop because maybe a motorcycle enters the junction, and [then] I want to do an evading maneuver …” At the same time, RO13 said that “… [while] not all scenarios can be handled by discrete commands, [remote driving] should be the last resort …” Therefore, we believe that future teleoperation stations should support both tele-assistance and tele-driving.

6.2.3.3. Improve the effectiveness of the communication commands

Communication between the RO, other system stakeholders (e.g., passengers), and road users should be as efficient as possible. For instance, RO12 noted, “… If I had to call someone, I wouldn’t do it … I came to drive not to talk over the phone …” and suggested an automatic way to report a hazard [instead of contacting a 3rd party]. In other words, she suggested speeding up the communication process using “Send Coordinates” and “Hazard Report” commands, which were initially elicited but were not included in the design.

6.2.3.4. Commands for view improvement and sensor control

ROs liked the suggested camera perspective and the options to zoom in/out, see a map screen, and switch between 2D and 3D views. At the same time, participants wanted more freedom and control over the presented scene. One participant articulated it vividly: “… [I don’t have] enough control over the view … One of the most important things is to control what I see … I need specific angles such as 'In Vehicle', 'On the side', 'Rear View', etc. in the fastest possible way. I [also] need all the PTZ (= Pan, Tilt, Zoom) at hand …” Several interesting scene investigation modes were suggested. RO1 suggested having an action called “Point and Look,” which enables zooming into the region that was pointed at. RO7 suggested drawing a rectangle and zooming into the desired section (a magnifying glass interaction). Finally, RO13 suggested utilizing the tablet’s affordances, such as the “pinch” gesture, to explore the remote environment further.

7. Discussion

In this study, we explored a communication language between human operators and autonomous vehicles to enable command-based control of a remote AV. We built upon previous research works that explored disengagement use cases and simulated scenarios to elicit a generic set of high-level commands that an RO can use to resolve various edge-case traffic situations. Our results present a concrete set of commands for ten representative use cases and their general categorization.

When examining the command categorization (), we see that the most frequently mentioned commands were related to the communication between the RO and other people. We further divided these into three categories – communication with 3rd parties (e.g., police and local municipalities), communication with other road users, and communication with passengers. Communication with 3rd parties is essential for requesting assistance and reporting various hazards. This emphasizes that teleoperation goes beyond a single teleoperator and requires a more extensive infrastructure that includes a fully connected teleoperation center. The teleoperation interface should include appropriate connections with these parties. Communication of AVs with other road users has been investigated mainly in the AutomotiveUI and HCI communities (Lanzer et al., Citation2020; Nguyen et al., Citation2019; Reig et al., Citation2018). Most works have focused on conveying the intentions of the AV to pedestrians and other road users (e.g., when stopping at a pedestrian crossing). The teleoperation interface can leverage these ideas. However, it might be essential to convey to other road users that a remote person is currently controlling and communicating through the AV. Finally, communication with passengers of the AV is also essential. Passengers should be informed of the AV’s decisions to feel more confident and trust the AV (Reig et al., Citation2018). Furthermore, passengers who have a real-world perception of the problem can help with the intervention by providing information to the AV or the RO through an in-vehicle interface. How to design such an interface can be examined in future studies.

The other two most frequently mentioned categories were “Route Control” and “Movement Pace Control.” These categories include various commands to control the movement of the vehicle. One can claim that in some cases, some of these commands, e.g., “Bypass,” can be autonomously performed by Level-4 and Level-5 vehicles. However, it is essential to note that AVs have an operational design domain (ODD) system limiting their driving options. Therefore, situations where the AV must break the ODD must be carefully addressed. For example, in the Tree Blocking the Road scenario (, Image 4), the AV will have to overtake it and cross a continuous separation lane. By doing so, the AV breaks the ODD rules. Such an action might have both safety and legal implications, and therefore, an explicit command should be given by the RO. Thus, to enable flexibility of control, the tele-assistance UI should afford such commands in various use cases, as demonstrated by the commands in the above two categories.

In this study, we assumed a one-way communication direction from human to vehicle, primarily involving the issuance of commands through button clicks by the RO. However, collaboration between the RO and AV systems can extend beyond this basic interaction, encompassing more intricate data exchange and a broader spectrum of available interactions. There are various ways in which the communication form between RO and AV can be expanded. First, the AV can provide the RO with critical information from its perceptual engine. For example, the AV can classify objects in the scene (e.g., an animal blocking the road), which might improve the RO’s situation awareness and performance if adequately emphasized by the teleoperation interface. In addition, the system can add information gained from external sources to the remote scene. For example, it can alert the RO about an existing vehicle positioned right around a turn (similar to what navigation systems do today). Second, an assisting agent, built as a computational engine that calculates various options according to the capability of the AV, the task at hand, and knowledge of previous ROs’ actions, can provide recommendations to the RO on various tasks. Such an agent can play a pivotal role in assisting the RO by presenting a range of scenario-specific choices for addressing a given situation. As suggested by Trabelsi et al. (Citation2023), these options could manifest as on-screen overlays for remote drivers, offering visual guidance and suggestions. Another practical example involves proposing multiple alternative routes for circumventing an obstacle obstructing the roadway, as depicted by the green and orange overlays in . Third, the semantics of the scene can be analyzed and used to expand current interactions (Majstorovic et al., Citation2022). For instance, the AV could prompt the human operator to identify or confirm the semantics associated with an obstructing object on the roadway, such as distinguishing between a flying plastic bag filled with air and a sliding rock. This “semantic tagging” process could benefit immediate decision-making and enhance the knowledge base of other vehicles when they encounter the same location.

Moreover, the selection of objects within the remote environment carries utility beyond human tagging, as it can also serve as a mechanism for issuing commands related to the chosen objects. For example, an RO might select a specific vehicle in the scene and request the AV to follow that vehicle using the “Gap Keep” command. The tele-assistance UI can also offer diverse means for specifying the desired movement trajectory. For instance, the RO could designate a destination to which the AV should navigate using a “Point and Go” command or sketch out a continuous path for the vehicle using the “Plot alternative route” command. These and various other interactions between the RO and the AV warrant further exploration and investigation.

Finally, while we believe tele-assistance has multiple benefits over direct driving, we must acknowledge that discrete commands will not fully substitute direct driving. For example, manually driving the car would still be needed in harsh weather conditions (e.g., heavy rain, as presented in , image 9). Thus, hybrid teleoperation stations that support both direct driving and indirect guidance might be the best option for the future.

7.1. Limitations and future work

The current work has some limitations. First, we chose ten representative use cases shown to participants to elicit commands. The scenarios were selected from across the use-case categories to be comprehensive according to previous studies. Still, while we noticed that the suggested commands started to repeat themselves, other use cases might elicit other commands. Furthermore, in simulating the use cases, we were limited by the technical possibilities of the simulation software. For example, we could not simulate a deep puddle in the middle of the road or a policeperson who redirects traffic using her hands. Thus, the current list of commands may not be exhaustive.

In some cases, the interviewees focused on the specifics of the video image instead of looking at the presented scenario more holistically. For example, RO16 pointed out that the AV was too close to the yellow separation line in the 2nd scenario (Slowly moving vehicle) and suggested commands that could address this situation. Finally, the fact that the tele-assistance paradigm might be advantageous over tele-driving in certain road scenarios is yet to be proven. Future research requires an objective evaluation of tele-assistance that measures the RO’s performance, cognitive load, safety, and situation awareness and compares them to tele-driving.

While the presented commands are deeply rooted in real-world scenarios and in the accumulated knowledge of experienced teleoperators, there is still much to be done to translate these commands into a working user interface prototype. In Figures 5 and 6, we presented a suggested design to show how such commands can be used in an interface. Future work would extend and evaluate this interface with simulated scenarios. Additionally, the command language can be further expanded based on the presented categories and beyond. Therefore, the above results can be used both as a starting point for future design explorations and as an extensible framework for expanding a communication language between humans and AVs.

8. Conclusion

In recent years, the teleoperation of AVs has emerged as a practical and effective strategy to expedite the integration of AVs onto public roadways. Tele-driving and tele-assistance represent pivotal paradigms for remote AV operation, with the former presenting notable challenges and complexities. This work focuses on the latter approach, in which a remote human operator guides the AV through high-level commands rather than direct manual control. This approach offers potential benefits such as shorter teleoperation sessions, adaptability to diverse vehicles, improved safety, and reduced cognitive load for operators. This research proposes an initial set of high-level commands derived from simulated scenarios presented to experienced remote operators. These commands can serve as a foundation for establishing a generic communication language between AVs and ROs, as demonstrated by the initial design of a tele-assistance user interface. We believe that the current work is only a first step in the investigation of tele-assistance interfaces and may be helpful for designers, engineers, lawmakers, automakers, and other professionals from industry and academia who wish to design, develop, and test novel methods to create better and safer ways of transportation.

Acknowledgments

This work was supported by the Israeli Innovation Authority, IDIT PhD fellowship, The Israeli Smart Transportation Research Center, and The Israeli Ministry of Aliyah and Integration. We also thank DriveU and Cognata for their collaboration and, specifically, Eli Shapira’s help throughout this work. Finally, we thank Mrs. Lior Kamitchi Rimon and Mr. Ariel Ratner for their assistance.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Felix Tener

Felix Tener is a PhD researcher and a lecturer in the Information Systems department at the University of Haifa. Felix’s research focuses on designing novel interfaces for the teleoperation of autonomous vehicles. Felix received his MS from Georgia Institute of Technology and his BS from the Technion.

Joel Lanir

Joel Lanir is an associate professor at the Information Systems Department at the University of Haifa where he directs the Human-Computer Interaction lab. His research interests lie in the general area of human-computer interaction, and specifically, mobile and context-aware computing, technology for cultural heritage, and information visualization.

Notes

1 https://waymo.com/ - Waymo was formerly known as the Google self-driving car project.

2 https://zoox.com/ - Zoox is a subsidiary of Amazon developing autonomous vehicles that provide Mobility-as-a-Service.

3 Disengagement - a situation in which the vehicle returns to manual control or the driver feels the need to take back the wheel from the AV decision system.

4 SAE – Society of Automotive Engineers (https://www.sae.org/).

5 Tomcar - a type of commercial off-road utility vehicle.

7 The “Flat tire” scenario was described verbally because it was very challenging to simulate.

8 A fleet management center is a center that belongs to the fleet operating company (e.g., Uber) and is responsible for fleet maintenance and service provision.

10 SAE J3016 defines ODD as “Operating conditions under which a given driving automation system or feature thereof is specifically designed to function, including, but not limited to, environmental, geographical, and time-of-day restrictions, and/or the requisite presence or absence of certain traffic or roadway characteristics.”

References

- Adams, J. A. (2007). Unmanned Vehicle Situation Awareness: A Path Forward. Proceedings of the 2007 Human Systems Integration Symposium, p. 615.

- Badue, C., Guidolini, R., Carneiro, R. V., Azevedo, P., Cardoso, V. B., Forechi, A., Jesus, L., Berriel, R., Paixão, T. M., Mutz, F., de Paula Veronese, L., Oliveira-Santos, T., & De Souza, A. F. (2021). Self-driving cars: A survey. Expert Systems with Applications, 165. https://doi.org/10.1016/j.eswa.2020.113816

- Bogdoll, D., Breitenstein, J., Heidecker, F., Bieshaar, M., Sick, B., Fingscheidt, T., & Zollner, J. M. (2021, October). Description of Corner Cases in Automated Driving: Goals and Challenges [Paper presentation]. IEEE International Conference on Computer Vision, 1023–1028. https://doi.org/10.1109/ICCVW54120.2021.00119

- Bogdoll, D., Orf, S., Töttel, L., & Zöllner, J. M. (2021). Taxonomy and survey on remote human input systems for driving automation systems. http://arxiv.org/abs/2109.08599

- Bout, M., Brenden, A. P., Klingeagrd, M., Habibovic, A., & Böckle, M. P. (2017). A head-mounted display to support teleoperations of shared automated vehicles [Paper presentation]. AutomotiveUI 2017 - 9th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications, Adjunct Proceedings, pp. 62–66. https://doi.org/10.1145/3131726.3131758

- Colley, M., & Rukzio, E. (2020). A design space for external communication of autonomous vehicles [Paper presentation]. Proceedings - 12th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2020, pp. 212–222. https://doi.org/10.1145/3409120.3410646

- Dixit, V. V., Chand, S., & Nair, D. J. (2016). Autonomous vehicles: Disengagements, accidents and reaction times. PLoS One, 11(12), e0168054. https://doi.org/10.1371/journal.pone.0168054

- Durantin, G., Gagnon, J. F., Tremblay, S., & Dehais, F. (2014). Using near infrared spectroscopy and heart rate variability to detect mental overload. Behavioural Brain Research, 259, 16–23. https://doi.org/10.1016/j.bbr.2013.10.042

- Eisma, Y. B., van Bergen, S., ter Brake, S. M., Hensen, M. T. T., Tempelaar, W. J., & de Winter, J. C. F. (2019). External human-machine interfaces: The effect of display location on crossing intentions and eye movements. Information (Switzerland), 11(1), 13. https://doi.org/10.3390/info11010013

- Fagnant, D. J., & Kockelman, K. (2015). Preparing a nation for autonomous vehicles: Opportunities, barriers and policy recommendations. Transportation Research Part A: Policy and Practice, 77, 167–181. https://doi.org/10.1016/j.tra.2015.04.003

- Favarò, F., Eurich, S., & Nader, N. (2018). Autonomous vehicles’ disengagements: Trends, triggers, and regulatory limitations. Accident; Analysis and Prevention, 110, 136–148. https://doi.org/10.1016/j.aap.2017.11.001

- Felberbaum, Y., & Lanir, J. (2018). Better understanding of foot gestures: An elicitation study [Paper presentation]. Conference on Human Factors in Computing Systems - Proceedings. https://doi.org/10.1145/3173574.3173908

- Feng, J., Yu, S., Chen, G., Gong, W., Li, Q., Wang, J., & Zhan, H. (2020). Disengagement causes analysis of automated driving system [Paper presentation]. Proceedings - 2020 3rd World Conference on Mechanical Engineering and Intelligent Manufacturing, WCMEIM 2020, pp. 36–39. https://doi.org/10.1109/WCMEIM52463.2020.00014

- Fennel, M., Zea, A., & Hanebeck, U. D. (2021). Haptic-guided path generation for remote car-like vehicles. IEEE Robotics and Automation Letters, 6(2), 4087–4094. https://doi.org/10.1109/LRA.2021.3067846

- Flemisch, F. O., Bengler, K., Bubb, H., Winner, H., & Bruder, R. (2014). Towards cooperative guidance and control of highly automated vehicles: H-Mode and Conduct-by-Wire. Ergonomics, 57(3), 343–360. https://doi.org/10.1080/00140139.2013.869355

- Fong, T. W., Conti, F., Grange, S., & Baur, C. (2001). Novel interfaces for remote driving: Gesture, haptic, and PDA. Mobile Robots XV and Telemanipulator and Telepresence Technologies VII, 4195, 300–311. https://doi.org/10.1117/12.417314

- Fong, T., & Thorpe, C. (2001). Vehicle teleoperation interfaces. Autonomous Robots, 11(1), 9–18. https://doi.org/10.1023/A:1011295826834

- Georg, J. M., & DIermeyer, F. (2019, October). An adaptable and immersive real time interface for resolving system limitations of automated vehicles with teleoperation [Paper presentation]. Conference Proceedings - IEEE International Conference on Systems, Man and Cybernetics, pp. 2659–2664. https://doi.org/10.1109/SMC.2019.8914306

- Georg, J. M., Feiler, J., DIermeyer, F., & Lienkamp, M. (2018, November). Teleoperated driving, a key technology for automated driving? Comparison of actual test drives with a head mounted display and conventional monitors∗ [Paper presentation]. IEEE Conference on Intelligent Transportation Systems, Proceedings, ITSC, pp. 3403–3408. https://doi.org/10.1109/ITSC.2018.8569408

- Goodall, N. (2020). Non-technological challenges for the remote operation of automated vehicles. Transportation Research Part A: Policy and Practice, 142, 14–26. https://doi.org/10.1016/j.tra.2020.09.024

- Gorisse, G., Christmann, O., Amato, E. A., & Richir, S. (2017). First- and third-person perspectives in immersive virtual environments: Presence and performance analysis of embodied users. Frontiers in Robotics and AI, 4. https://doi.org/10.3389/frobt.2017.00033

- Grabowski, A., Jankowski, J., & Wodzyński, M. (2021). Teleoperated mobile robot with two arms: The influence of a human-machine interface, VR training and operator age. International Journal of Human Computer Studies, 156. https://doi.org/10.1016/j.ijhcs.2021.102707

- Graf, G., & Hussmann, H. (2020). User requirements for remote teleoperation-based interfaces [Paper presentation]. Adjunct Proceedings - 12th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2020, pp. 85–88. https://doi.org/10.1145/3409251.3411730

- Graf, G., Palleis, H., & Hussmann, H. (2020). A design space for advanced visual interfaces for teleoperated autonomous vehicles [Paper presentation]. ACM International Conference Proceeding Series. https://doi.org/10.1145/3399715.3399942

- GreyB. (2021). Top 30 self driving technology and car companies. https://www.greyb.com/autonomous-vehicle-companies/#

- Hampshire, R. C., Bao, S., Lasecki, W. S., Daw, A., & Pender, J. (2020). Beyond safety drivers: Applying air traffic control principles to support the deployment of driverless vehicles. PLoS One, 15(5), e0232837. https://doi.org/10.1371/journal.pone.0232837

- Hedayati, H., Walker, M., & Szafir, D. (2018). Improving collocated robot teleoperation with augmented reality [Paper presentation]. ACM/IEEE International Conference on Human-Robot Interaction, pp. 78–86. https://doi.org/10.1145/3171221.3171251

- Herger, M. (2022). 2021 disengagement report from California. https://thelastdriverlicenseholder.com/2022/02/09/2021-disengagement-report-from-california/

- Hill, S. G., & Bodt, B. (2007). A field experiment of autonomous mobility: Operator workload for one and two robots [Paper presentation]. HRI 2007 - Proceedings of the 2007 ACM/IEEE Conference on Human-Robot Interaction - Robot as Team Member, pp. 169–176. https://doi.org/10.1145/1228716.1228739

- Hussain, R., & Zeadally, S. (2019). Autonomous cars: Research results, issues, and future challenges. IEEE Communications Surveys & Tutorials, 21(2), 1275–1313. https://doi.org/10.1109/COMST.2018.2869360

- Kettwich, C., Schrank, A., & Oehl, M. (2021). Teleoperation of highly automated vehicles in public transport: User-centered design of a human-machine interface for remote-operation and its expert usability evaluation. Multimodal Technologies and Interaction, 5(5), 26. https://doi.org/10.3390/mti5050026

- Kot, T., & Novák, P. (2018). Application of virtual reality in teleoperation of the military mobile robotic system TAROS. International Journal of Advanced Robotic Systems, 15(1), 172988141775154. https://doi.org/10.1177/1729881417751545

- Kusano, K. D., Scanlon, J. M., Chen, Y.-H., Mcmurry, T. L., Chen, R., Gode, T., & Victor, T. (2023). Comparison of Waymo Rider-only crash data to human benchmarks at 7.1 million miles. arXiv preprint arXiv:2312.12675.

- Lanzer, M., Babel, F., Yan, F., Zhang, B., You, F., Wang, J., & Baumann, M. (2020). Designing communication strategies of autonomous vehicles with pedestrians: An intercultural study [Paper presentation]. Proceedings - 12th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2020, pp. 122–131. https://doi.org/10.1145/3409120.3410653

- Litman, T. (2020). Autonomous vehicle implementation predictions: Implications for transport planning. Transportation Research Board Annual Meeting, 42, 1–39. https://trid.trb.org/View/1678741

- Lv, C., Cao, D., Zhao, Y., Auger, D. J., Sullman, M., Wang, H., Dutka, L. M., Skrypchuk, L., & Mouzakitis, A. (2018). Analysis of autopilot disengagements occurring during autonomous vehicle testing. IEEE/CAA Journal of Automatica Sinica, 5(1), 58–68. https://doi.org/10.1109/JAS.2017.7510745

- Macioszek, E. (2018). First and last mile delivery - problems and issues. In Advanced Solutions of Transport Systems for Growing Mobility: 14th Scientific and Technical Conference “Transport Systems. Theory & Practice 2017” Selected Papers (pp. 147–154). Springer International Publishing. https://doi.org/10.1007/978-3-319-62316-0_12

- Mahadevan, K., Somanath, S., & Sharlin, E. (2018). Communicating awareness and intent in autonomous vehicle-pedestrian interaction [Paper presentation]. Conference on Human Factors in Computing Systems - Proceedings 2018-April, pp. 1–12. https://doi.org/10.1145/3173574.3174003

- Majstorovic, D., Hoffmann, S., Pfab, F., Schimpe, A., Wolf, M.-M., & Diermeyer, F. (2022). Survey on teleoperation concepts for automated vehicles [Paper presentation]. https://doi.org/10.1109/SMC53654.2022.9945267

- Murphy, R. R., Gandudi, V. B. M., & Adams, J. (2020). Applications of robots for COVID-19 response. http://arxiv.org/abs/2008.06976

- Mutzenich, C., Durant, S., Helman, S., & Dalton, P. (2021). Updating our understanding of situation awareness in relation to remote operators of autonomous vehicles. Cognitive Research: principles and Implications, 6(1), 9. https://doi.org/10.1186/s41235-021-00271-8

- Nguyen, T. T., Holländer, K., Hoggenmueller, M., Parker, C., & Tomitsch, M. (2019). Designing for projection-based communication between autonomous vehicles and pedestrians [Paper presentation]. Proceedings - 11th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2019, pp. 284–294. https://doi.org/10.1145/3342197.3344543

- Politis, I., Brewster, S., & Pollick, F. (2015). Language-based multimodal displays for the handover of control in autonomous cars [Paper presentation], pp. 3–10. https://doi.org/10.1145/2799250.2799262

- Reig, S., Norman, S., Morales, C. G., Das, S., Steinfeld, A., & Forlizzi, J. (2018). A field study of pedestrians and autonomous vehicles [Paper presentation]. Proceedings - 10th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2018, pp. 198–209. https://doi.org/10.1145/3239060.3239064

- Ruiz, J., Li, Y., & Lank, E. (2011). User-defined motion gestures for mobile interaction [Paper presentation], pp. 197–206. Retrieved December 25, 2023, from https://dl-acm-org.ezproxy.haifa.ac.il/doi/10.1145/1978942.1978971 https://doi.org/10.1145/1978942.1978971

- SAE. (2018). Taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles.

- Schitz, D., Graf, G., Rieth, D., & Aschemann, H. (2020). Corridor-based shared autonomy for teleoperated driving. IFAC-PapersOnLine, 53(2), 15368–15373. https://doi.org/10.1016/j.ifacol.2020.12.2351

- Sheridan, T. B. (1989). Telerobotics. Automatica, 25(4), 487–507. https://doi.org/10.1016/0005-1098(89)90093-9

- Sheridan, T. B., & Ferrell, W. R. (1963). Remote manipulative control with transmission delay. IEEE Transactions on Human Factors in Electronics, HFE-4(1), 25–29. https://doi.org/10.1109/THFE.1963.231283

- Tener, F., & Lanir, J. (2023). Investigating intervention road scenarios for teleoperation of autonomous vehicles. Multimedia Tools and Applications, 1–17. https://doi.org/10.1007/s11042-023-17851-z

- Trabelsi, Y., Shabat, O., Lanir, J., Maksimov, O., & Kraus, S. (2023). Advice provision in teleoperation of autonomous vehicles [Paper presentation]. International Conference on Intelligent User Interfaces, Proceedings IUI, pp. 750–761. https://doi.org/10.1145/3581641.3584068

- Van Erp, J. B. F., & Padmos, P. (2003). Image parameters for driving with indirect viewing systems. Ergonomics, 46(15), 1471–1499. https://doi.org/10.1080/0014013032000121624

- Wobbrock, J. O., Morris, M. R., & Wilson, A. D. (2009). User-defined gestures for surface computing [Paper presentation]. Conference on Human Factors in Computing Systems - Proceedings, pp. 1083–1092. https://doi.org/10.1145/1518701.1518866

- Zhang, T. (2020). Toward automated vehicle teleoperation: Vision, opportunities, and challenges. IEEE Internet of Things Journal, 7(12), 11347–11354. https://doi.org/10.1109/JIOT.2020.3028766